Abstract

K-nearest neighbor based structural twin support vector machine (KNN-STSVM) performs better than structural twin support vector machine (S-TSVM). It applies the intra-class KNN method, and different weights are given to the samples in one class to strengthen the structural information. For the other class, the redundant constraints are deleted by the inter-class KNN method to speed up the training process. However, the empirical risk minimization principle is implemented in the KNN-STSVM, so it easily leads to over-fitting and reduces the prediction accuracy of the classifier. To enhance the generalization ability of the classifier, we propose an efficient regularized K-nearest neighbor structural twin support vector machine, called RKNN-STSVM, by introducing a regularization term into the objective function. So there are two parts in the objective function, one of which is to maximize the margin between the two parallel hyper-planes, and the other one is to minimize the training errors of two classes of samples. Therefore the structural risk minimization principle is implemented in our RKNN-STSVM. Besides, a fast DCDM algorithm is introduced to handle relatively large-scale problems more efficiently. Comprehensive experimental results on twenty-seven benchmark datasets and two popular image datasets demonstrate the efficiency of our proposed RKNN-STSVM.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

The support vector machine (SVM) approach, introduced by Vapnik [1], is an extremely powerful kernel-based machine learning technique for data classifications. At present SVM has been successfully applied in various aspects, such as pattern recognition [2], text classification [3], and imbalance classification [4]. For SVM, the target is to find a hyper-plane that can separate different classes well. Many hyper-planes can meet this requirement, and SVM finds the one which follows the maximum margin principle in statistical learning theory [1]. SVM solves a quadratic programming problem (QPP), assuring that once an optimal solution is obtained, it is the unique (global) solution. By introducing kernel trick into the dual QPP, SVM can solve nonlinear classification problems successfully and can also effectively overcome the “curse of dimension”. It is well known that the training cost of SVM is O(l3), where l is the total size of the training data. As the increasing of the number of training samples, SVM costs much time.

To improve the computational speed of SVM. Recently, Jayadeva et al. [5] proposed a twin support vector machine (TSVM) for the binary classification data, which is based on the idea of the GEPSVM [6]. TSVM generates two nonparallel hyper-planes such that each hyper-plane is closer to one class and as far as possible from the other. It is implemented by solving two smaller-sized QPPs, which makes the learning speed of TSVM approximately four times faster than that of the classical SVM. Now, TSVM has been widely used because of its low computational complexity. Many variants of TSVM have been proposed, such as smooth TSVM [7], twin support vector regression [8,9,10], non-parallel Universum support vector machine [11]. However, each of them minimizes the empirical risk, which easily leads to the over-fitting problem. Twin bounded support vector machine (TBSVM) [12, 13] minimizes the structural risk by adding a regularization term. Wang et al. [14] proposed an improved ν-twin bounded support vector machine (Iν-TBSVM). Various algorithms [15,16,17,18,19,20] also implement the structural risk minimization principle, and have better generalization ability. But these algorithms do not sufficiently apply the prior distribution information of samples within classes.

In fact, different classes may possess diverse structural information when dealing with real world data. This structural information may be important for classification and has a great impact on the decision function. One obvious disadvantage of TSVM is that TSVM usually pays more attention to separating two classes well but ignores the underlying structural information within classes. Based on the studies of structural SVM, such as SLMM [21], SRSVM [22]. In 2013, Qi et al. [23] proposed a novel structural twin support vector machine (S-TSVM). This S-TSVM extracts the structural information by using a hierarchical clustering method and minimizes the tightness of each cluster by using the form of the covariance matrix. To improve the computational speed of S-TSVM, Xu et al. [24] proposed the structural least square twin support vector machine (S-LSTSVM). Since S-LSTSVM only needs to solve a pair of linear equations, it greatly accelerates the calculation speed.

Although experimental results reveal that S-TSVM owns excellent generalization ability, the S-TSVM has an obvious disadvantage. It ignores that samples within different clusters have different importance, and we can improve the classification accuracy by studying the different information of samples. Ye et al. [25] proposed a weighted TSVM with local information (WLTSVM), and it introduces a novel KNN method which gives different treatments to different classes in objective function or constraints of the model. Inspired by S-TSVM and WLTSVM, Pan et al. [26] proposed a K-nearest neighbor based structural twin support vector machine (KNN-STSVM). Recently, Mir et al. [27] proposed KNN-based least squares twin support vector machine (KNN-LSTSVM), which applies the similarity information of samples into LSTSVM. And Tanveer et al. [28] proposed an efficient regularized K-nearest neighbor based weighted twin support vector regression (RKNNWTSVR). By introducing regularization terms into KNNWTSVR [10] and replacing 1-norm of slack variables with 2-norm, RKNNWTSVR not only saves computational cost, but also improves the generalization performance.

However, KNN-STSVM involves the empirical risk minimization principle, which easily leads to the over-fitting problem if outliers exist [29,30,31] and reduces the prediction accuracy of the classifier. RKNNWTSVR solves the above problems by introducing extra regularization terms in each objective function to implement the structural risk minimization principle. But RKNNWTSVR ignores the helpful underly structural information of the data, which may contain useful prior domain knowledge for training a model. Motivated by the studies above, we improve the primal problem of KNN-STSVM by adding regularization terms into the objective function. By doing this, our new formulation, called RKNN-STSVM, is not only singularity free but also theoretically supported by statistical learning theory [32,33,34]. Similar to KNN-STSVM, RKNN-STSVM constructs two nonparallel hyper-planes by solving two smaller QPPs. We also incorporate the structural information of the corresponding class into the model. In addition, we not only strengthen the structural information by giving different weights to the samples, but also delete the redundant constraints to speed up the computation process. The contributions of our paper are as follows: (1) By introducing a regularization term, the matrix is guaranteed to be reversible when solving the dual problems. However, this extra prerequisite cannot always be satisfied without a regularization term. The dual problem of our algorithm can be derived without any extra assumption and need not be modified anymore; (2) In the primal problem of KNN-STSVM, the empirical risk is minimized, whereas in our RKNN-STSVM the structural risk is minimized by adding a regularization term with the idea of maximizing the margin; (3) In order to shorten training time, an effective method (the dual coordinate descent method, DCDM) is applied to our RKNN-STSVM.

Nevertheless, like the SVM, the long training time is still one of the main challenges of our RKNN-STSVM. So far, many fast optimization algorithms have been presented to accelerate the training speed, including the decomposition method [35], sequential minimal optimization (SMO) [36], the geometric algorithms [37], the dual coordinate descent method (DCDM) [38], and so on. The recently proposed DCDM algorithm can directly solve the dual QPP of SVM by updating one variable sub-problem during each iteration. The updated variable will derive the largest decrease on the objective value. This DCDM method shows a fast learning speed and great efficiency in experiments. In this paper, we introduce it into our RKNN-STSVM. Comparisons of the RKNN-STSVM with some other algorithms in terms of classification accuracy and learning time have been made on several UCI datasets and Caltech image datasets, indicating the superiority of our RKNN-STSVM.

The rest of the paper is organized as follows. Section 2 outlines the TSVM, S-TSVM, and KNN-STSVM. Details of RKNN-STSVM are given in Section 3, both the linear and nonlinear cases are included. Section 4 discusses the experimental results on twenty-seven benchmark datasets and two popular image recognition datasets to investigate the effectiveness of our proposed RKNN-STSVM. In Section 5, the DCDM algorithm is introduced into RKNN-STSVM to accelerate the learning speed. The last section contains conclusions.

2 Related work

In this section, we give a brief description of TSVM, S-TSVM and KNN-STSVM. The training samples are denoted by a set T = {(x1,y1),…,(xl,yl)}, where xi ∈ Rn,yi ∈{1,− 1},i = 1,2,…,l. For convenience, matrix \(A \in R^{l_{1}\times n}\) represents the positive samples and matrix \(B \in R^{l_{2}\times n}\) represents the negative samples.

2.1 Twin support vector machine

TSVM generates two nonparallel hyper-planes instead of a single one as in the conventional SVMs. The two nonparallel hyper-planes are obtained by solving two smaller sized QPPs as opposed to a single large one in the standard SVMs. The linear TSVM aims to find two nonparallel hyper-planes in n-dimensional input space,

such that each hyper-plane is closer to one class and as far as possible from the other. A new sample is assigned to class + 1 or − 1 depending upon its proximity to the two nonparallel hyper-planes.

The formulations of linear TSVM can be written as follows:

and

where \(c_{1}, c_{2} \geqslant 0\) are pre-specified penalty factors, e1 and e2 are vectors of ones of appropriate dimensions. By introducing the lagrangian multipliers, the Wolfe dual of QPPs (2) and (3) can be represented as follows:

where G = [Be] and H = [Ae], and

where P = [Ae] and Q = [Be].

After resolving the QPPs (4) and (5), we can obtain

and

A new testing sample x ∈ Rn is assigned to a class i = (+ 1,− 1) by comparing the following perpendicular distan ce which measures the distance of the sample from the two hyper-planes (1), i.e.,

In TSVM, if the number of samples in two classes is approximately equal to l/2, the computational complexity of TSVM is O(2 × (l/2)3). Thus the ratio of run-times between SVM and TSVM is l3/(2 × (l/2)3) = 4, which implies that TSVM works approximately four times faster than SVM [5].

2.2 Structural twin support vector machine

S-TSVM extracts the structural information of each class by some clustering methods. Then it applies the data distributions of the clusters in different classes into the objective functions of TBSVM, which makes S-TSVM fully exploit the structural information of samples into the model. Suppose there are CP clusters in the positive class P and CN clusters in the negative class N, respectively, ie., \(P=P_{1} \cup {\ldots } P_{i} \cup {\ldots } P_{C_{P}}, N=N_{1} \cup {\ldots } N_{j} \cup {\ldots } N_{C_{N}}\). Then the subsequent optimization problem in the S-TSVM model can be formulated as follows [23]:

and

where \(c_{i} \geqslant 0 (i = 1,\ldots ,6)\) are the pre-specified penalty factors, e1 and e2 are vectors of ones of appropriate dimensions, both ξ and η are slack variables. \({\Sigma }_{+}={\Sigma }_{P_{1}} +\ldots +{\Sigma }_{P_{C_{P}}}\), \({\Sigma }_{-}={\Sigma }_{N_{1}}+\ldots +{\Sigma }_{N_{C_{N}}}\), \({\Sigma }_{P_{i}}\) and \({\Sigma }_{N_{j}}\) are respectively the covariance matrices of clusters in the two classes. The corresponding dual problems of S-TSVM are given as follows:

and

where

A new data point x ∈ Rn is classified as the positive class or negative class depending on which of the two hyper-planes it lies closer to. The decision function of S-TSVM is

2.3 K-nearest neighbor structural twin support vector machine

To further improve the computational precision and speed of a classifier, KNN-STSVM is proposed in the spirit of S-TSVM. The formulation of KNN-STSVM is similar to TSVM, as it also tries to obtain two nonparallel hyper-planes for two classes by solving a pair of small-sized QPPs as follows [26]:

and

where the parameters \(c_{1}, c_{2}, c_{3}, c_{4}\geqslant 0\) determine the penalty weights, and ξ, η are slack variables. Both e+ and e− are vectors of ones with appropriate dimensions, Ws,ij is the weight matrix and fj is the vector with only 0 or 1. \({\Sigma }_{+}={\Sigma }_{P_{1}}+\ldots +{\Sigma }_{P_{C_{P}}}\), \({\Sigma }_{-}={\Sigma }_{N_{1}}+\ldots +{\Sigma }_{N_{C_{N}}}\), \({\Sigma }_{P_{i}}\) and \({\Sigma }_{N_{j}}\) are respectively the covariance matrixes corresponding to the i th and the j th clusters in two classes. The corresponding dual problems of KNN-STSVM are given as follows:

and

A new testing sample x ∈ Rn is assigned to a class i = (+ 1,− 1) by comparing the following perpendicular distance which measures the distance of the sample from the two hyper-planes, i.e.,

3 An efficient regularized K-nearest neighbor structural twin support vector machine

Motivated by [23, 25, 26, 28], we propose a reasonable and efficient variant of KNN-STSVM called regularized KNN-based weighted structural twin support vector machine, RKNN-STSVM for short. RKNN-STSVM has three steps: clustering, applying KNN tricks, and learning. RKNN-STSVM adopts some clustering techniques to capture the data distribution within classes, and KNN tricks are also applied to our model to improve the generalization performance.

3.1 Clustering

Structural support vector machine usually considers the data distribution through clustering methods. Here, we use the same clustering methods (the Ward’s linkage clustering, which is one of the hierarchical clustering method) as S-TSVM to study the clusters in each individual class. The main advantage of Ward’s linkage clustering (WIL) [39] is that clusters derived from this clustering method are compact and spherical, which provides a reliable basis to calculate the covariance matrices. Concretely, if S and T are two clusters, μS and μT are means of the clusters, the Ward’s linkage W(S,T) between clusters S and T can be computed as [21]

Initially, each individual sample is considered as a cluster. The Ward’s linkage of two samples xi and xj is W(xi,xj) = ∥xi − xj∥2/2. When two clusters are being merged to a new cluster A′, the linkage W(A′,C) can be represented by W(A,C),W(B,C) and W(A,B) as [21]

The Ward’s linkage between clusters to be merged increases as the number of clusters decreases during the hierarchical clustering. A relation curve between the merge distance and the number of clusters can be determined by finding the knee point [40]. Furthermore, the WIL can be also applicable to the nonlinear case.

3.2 K-nearest neighbor method

Weighted TSVM [25] mines as much underlying similarity information within samples as possible. Weighted TSVM firstly constructs two neighbor graphs in the input space, i.e. intra-class graph Gs and inter-class graph Gd, to search the weights of samples from one class and to extract the possible SVs residing in the other class. For each sample xi in class + 1, it defines two nearest sets: Neas(xi) and Nead(xi). Neas(xi) contains its neighbors in class + 1, while Nead(xi) contains its neighbors in class -1. Specifically,

and

Clearly, Neas denotes a set of its k1-nearest neighbors in class + 1, and Nead stands for a set of its k2-nearest neighbors in class − 1. Two adjacent (i.e. similarity) matrices of the plane of class + 1 corresponding to Gs and Gd can be defined [41], respectively, as follows:

and

When Ws,ij = 1 or Wd,ij = 1, an undirected edge between xi and xj is added to the corresponding graph. To find the possible SVs from samples in class -1, here we redefine the weight matrix Wd,ij of Gd as follows:

3.3 Linear case

By introducing the extra regularization terms \((\|w_{+}\|_{2}^{2}+b_{+}^{2})\) and \((\|w_{-}\|_{2}^{2}+b_{-}^{2})\) into primal problems (14) and (15), respectively, our RKNN-STSVM can be expressed as follows:

and

where \(c_{1}, c_{2},\ldots , c_{6}\geqslant 0\) are positive parameters given in advance, which are used to denote the tradeoff among each term in the objective function, respectively. ξ and η are slack variables, both e+ and e− are vectors of ones of appropriate dimensions, Ws,ij andfj are defined as \({\Sigma }_{+}={\Sigma }_{P_{1}}+\ldots +{\Sigma }_{P_{C_{P}}} \), \({\Sigma }_{-}={\Sigma }_{N_{1}} +\ldots +{\Sigma }_{N_{C_{N}}} \), \({\Sigma }_{P_{i}}\) and \({\Sigma }_{N_{j}}\) are respectively the covariance matrixes corresponding to the i th and the j th clusters in the two classes.

To solve (26), the lagrangian function is defined as:

where \(d_{j}^{(1)}={{\sum }_{i=1}^{l_{1}}}W_{s,ij}^{(1)}\), α,β are lagrangian multipliers. Differentiating the lagrangian function L1 with respect to variables w+,b+ and ξ yields the following Karush-Kuhn-Tucker (KKT) conditions:

Rewrite (29) and (30) in their matrix forms, we get the following equations:

where \(D= diag(d_{1}^{(1)}, d_{2}^{(1)}, \ldots , d_{l_{1}}^{(1)})\) and \(F=diag(f_{1}^{(2)}, f_{2}^{(2)}, \ldots , f_{l_{2}}^{(2)})\) are diagonal matrices, and \({f_{j}^{(2)}}(j = 1, 2, \ldots , l_{2})\) is either 0 or 1. The following equation is obtained by combining (32) and (33).

i.e.,

where

Finally, we can derive the dual formulation of (26) as follows:

Similarly, the lagrangian function for (27) is defined as

where \(d_{j}^{(2)}={{\sum }_{i=1}^{l_{2}}}W_{s,ij}^{(2)}, \gamma , \nu \) are lagrangian multipliers. After differentiating the lagrangian function (37) with respect to variables w−,b− and η, we can obtain the simplified dual QPP of (27), which is,

where \(Q=diag(d_{1}^{(2)}, d_{2}^{(2)}, \cdots , d_{l_{2}}^{(2)})\) and HCode \(P=diag (f_{1}^{(1)},f_{2}^{(1)}, \cdots , f_{l_{1}}^{(1)})\) are diagonal matrices, \(f_{j}^{(1)}\) is either 0 or 1, \(J_{-}=\left [\begin {array}{ll} {\Sigma }_{-} & 0\\ 0 & 0 \end {array}\right ]\).

Once the solution γ is calculated, we will get the following augmented vector,

3.4 Nonlinear case

For the nonlinear case, the two nonparallel hyper-planes are modified as the following kernel-generated expressions:

where

and K(⋅) stands for a chosen kernel function. The primal QPPs of nonlinear RKNN-STSVM corresponding to the hyper-planes (40) are respectively given as follows:

and

where \(c_{1}, c_{2}, c_{3}, c_{4} \geqslant 0\) are predefined parameters, and e is the vector of appropriate dimensions, Ws,ij and fj are defined as in the linear case. \({\Sigma }_{+}^{\phi }\) and \({\Sigma }_{-}^{\phi }\) are respectively the covariance matrices in the two classes by the kernel Ward’s linkage clustering.

The Wolfe dual of problem (42) is formulated as follows:

where Hϕ = [K(A) e+], Gϕ = [K(B) e−], \(J_{+}^{\phi }=\left [\begin {array}{ll}{\Sigma }_{+}^{\phi } & 0 \\0 & 0 \end {array}\right ]\), then we can further get

In a similar manner, the dual formulation of (43) is

Once the solution γ is found, we get the following result,

Here, specifications of the matrices D and Q are analogous to the linear case.

A new point x ∈ Rn is classified as the positive class or negative class depending on which of the two hyper-planes given by (45) and (47) it lies closer to. The decision function of RKNN-STSVM is

3.5 Analysis of algorithm

We first discuss the differences between TSVM, S-TSVM, KNN-STSVM, and our RKNN-STSVM.

- (1)

Optimization of traditional TSVM costs around O(l3/4) if we assume that the patterns in two classes are approximately equal. As for S-TSVM, the computational complexity of the clustering step is O\((({l^{2}_{1}}+{l^{2}_{2}})n)\). So the computational complexity of S-TSVM is O\((({l^{2}_{1}}+{l^{2}_{2}})n+l^{3}/4)\). Thus, the overall computational complexity of KNN-STSVM and RKNN-STSVM is around O\((l^{2}log(l)+({l^{2}_{1}}+{l^{2}_{2}})n+l^{3}/4)\). Certainly, this conclusion is under the assumption of no constraints deleted. In fact, some components of f are set to be 0, which implies that the constraints are redundant, so the computational complexity of KNN-STSVM and RKNN-STSVM is less than O\((({l^{2}_{1}}+{l^{2}_{2}})n+l^{3}/4)\).

- (2)

Unlike TSVM and KNN-STSVM, our RKNN-STSVM implements the structural risk minimization principle by introducing extra regularization terms in each objective function so that the problem becomes well-posed. It can not only help to alleviate over-fitting issue and improve the generation performance as in conventional SVM but also introduce invertibility in the dual formulation.

- (3)

Two parameters c3 and c6 introduced in our RKNN-STSVM are the weights between the regularization term and the empirical risk, so that they can be chosen to improve the RKNN-STSVM performance.

4 Numerical experiments

To validate the superiority of our algorithm, in this section, we compare our proposed RKNN-STSVM with TSVM, Iν-TBSVM, S-TSVM, KNN-STSVM, KNN-LSTSVM on twenty-seven benchmark datasets from UCI machine learning repositoryFootnote 1 or the LIBSVM Database [42]. They are Australian (A), BCI-1a (B), Breast Cancer1 (B1), Breast Cancer2 (B2), Breast Cancer3 (B3), Balance Scale (BS), BUPA (BU), DBworld e-mail (D), Echocardiogram (E), Fertility (F), Hepatitis (H), Haberman (HA), Heart (HE), Ionosphere (I), IRIS (IR), LSVT (L), Liver Disorder (LD), Monks (M), Pima (P), Parkinson (PA), Planning Relax (PR), Sonar (S), Spect Heart (SH), Spambase (SP), Vertebral (V), WPBC (W), Waveform (WA). For convenience, we will use the abbreviations of them in the following paper. Table 1 lists the characteristics of these datasets. To further evaluate our algorithm, we also conduct experiments and make comparisons on popular Caltech image datasets.

To make the results more convincing, we use the 5-fold cross validation method to get the classification accuracy. More specifically, we spilt each dataset into five subsets randomly, and one of those sets is reserved as a test set whereas remaining sets are considered for training. This process is repeated five times until all subsets have been a test one once. All experiments are carried out in Matlab R2017a on Windows 10.

4.1 Parameters selection

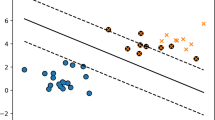

The performance of six algorithms depends heavily on the choice of kernel functions and their parameters. In this paper, we only consider Gaussian kernel function k(xi,xj) = exp(−∥xi − xj∥2/γ2) for these datasets. We choose optimal values of the parameters by the grid search method. The Gaussian kernel parameter γ is selected from the set {2i|i = − 1,0,…,8}. For brevity’s sake, we let c1 = c4,c2 = c5,c3 = c6 in S-TSVM, RKNN-STSVM, c1 = c3,c2 = c4 in KNN-STSVM, r1 = r2, ν1 = ν2 in Iν-TBSVM, and c1 = c2 in TSVM, KNN-LSTSVM. The parameters c1, c2 are selected from the set {2i|i = − 1,0,…,8} and r1, r2 are selected from the set {10i|i = − 6,− 5,…,0}. In order to save calculation time and maintain calculation accuracy, we calculate the accuracy of the Breast Cancer 1 and Echocardiogram datasets along with the curve of parameter c3 in Fig. 1, we found that the optimal parameters are chosen from 10− 6 to 1, so c3 is selected from the set {10i|i = − 6,− 5,…,0}. We also calculate the accuracy of the Australian and the Breast Cancer 1 datasets along with the curve of parameter k in Fig. 2, the optimal value for k in KNN-STSVM, KNN-LSTSVM and RKNN-STSVM is chosen from the set {3,4,5,6,10,20}. The parameter ν1 is searched from the set {0.1,0.2,…,0.7} in Iν-TBSVM. For large-scale datasets, the range of parameters will be narrowed uniformly due to the long running time.

4.2 Result comparison and discussion

The experimental results on twenty-seven datasets are summarized in Table 2, where the “Accuracy” denotes the mean value of five times testing results, and plus or minus the standard deviation. “Time” denotes the mean value of the time taken by six methods, and each consists of training time and testing time. And the optimal parameters of six algorithms are shown in Table 3.

From the perspective of prediction accuracy in Table 2, we can learn that our proposed RKNN-STSVM outperforms other five algorithms, i.e., TSVM, S-TSVM, Iν-TBSVM, KNN-STSVM and KNN-LSTSVM, for most datasets. RKNN-STSVM follows, it produces better testing accuracy than TSVM, Iν-TBSVM, S-TSVM, and KNN-LSTSVM for most cases. The TSVM yields the lowest testing accuracy and KNN-LSTSVM yields the lowest computational cost. Two main reasons lead RKNN-STSVM to produce better accuracy. One is that different weights are proposed to the samples according to their numbers of KNNs, and the point is important if it has more KNNs. The other is that our RKNN-STSVM implements the structural risk minimization principle by introducing extra regularization terms in each objective function.

Compared with other five algorithms, our RKNN-STSVM yields the highest testing accuracy on high-dimensional datasets including both BCI-1a and Breast Cancer3. This implies that our RKNN-STSVM is also suitable for the high-dimensional dataset. However, it yields the second lowest testing accuracy on high dimensional dataset Dbworld e-mails. The main reason lies in that the number of samples in this dataset is too small, it only has 64 samples, but has 4702 features. In large datasets, we can see RKNNS-TSVM yields the highest testing accuracy and its computational time is also lower than S-TSVM and KNN-STSVM in Spambase (SP) and Waveform (WA) datasets. But too many parameters leading to much training time is the major drawback of our algorithm. In Section 5, we add the DCDM fast algorithm to accelerate the calculation speed of our RKNN-STSVM.

4.3 Friedman tests

From Table 2, one can easily observe that our proposed RKNN-STSVM does not outperform other five algorithms for all the datasets in terms of testing accuracy. To analyze the performance of six algorithms on multiple datasets statistically, as it was suggested in Dems̆ar [43] and García and Fernández [44], we use Friedman test with the corresponding post hoc test which considered to be a simple, nonparametric yet safe test. For this, the average ranks of six algorithms on accuracy for all datasets are calculated and listed in Table 4. Under the null-hypothesis that all the algorithms are equivalent, one can compute the Friedman statistic [43] according to (49):

where \(R_{j}=\frac {1}{N}{\sum }_{i}{r^{j}_{i}}\), and \({r^{j}_{i}}\) denotes the j th of k algorithms on the i th of N datasets. Friedman’s \({\chi ^{2}_{F}}\) is undesirably conservative and derives a better statistic

which is distributed according to the F-distribution with k − 1 and (k − 1)(N − 1) degrees of freedom.

We can obtain \({\chi ^{2}_{F}}=59.7356\) and FF = 20.6356 according to (49) and (50). Where FF is distributed according to F-distribution with (5,130) degrees of freedom. The critical value of F(5,130) is 2.2839 for the level of significance α = 0.05, and similarly it is 2.6656 for α = 0.025 and 3.1612 for α = 0.01. Since the value of FF is much larger than the critical value, there is a significant difference between the six algorithms. Note that, the average rank of RKNN-STSVM is far lower than the remaining algorithms. It means that our RKNN-STSVM is more valid than the other five algorithms.

4.4 Influence of parameter k

To investigate the influence of parameter k, we conduct experiments on twenty datasets from former twenty-seven datasets. The value of parameter k is chosen from the set {3,4,5,6,10,20}.

Table 5 shows that the accuracy of our proposed RKNN-STSVM is closely related to the parameter of k, this phenomenon is more pronounced in dataset DBworld e-mail. It can be noticed that when k is gradually increased, the accuracy of DBworld e-mail is also increased. And a too large or too small value of k will both reduce the prediction accuracy. Therefore, an appropriate parameter k is very important for our model.

4.5 Image recognition datasets

We conduct experiments on Caltech image datasets, including the Caltech 101 [45] and the Caltech 256 datasets [46]. Caltech 101 dataset contains 102 categories, about 40 to 800 images per category. The size of each image is about 300 × 200 pixels. And the Caltech 256 dataset has 256 categories of images and at least 80 images per category. In addition, the Caltech 256 dataset has a cluttered class and the clutter category can be seen as noises or backgrounds. In our experiments, we choose Emu, Pigeon in Caltech 101 and Bonsai-101, Cactus in Caltech 256. We show some image samples in Fig. 3. Every row of image samples come from the same class.

Due to the lack of samples in the majority class in the Caltech 101 dataset, we used no more than 50 samples from each category. The Caltech 256 dataset provides more categories and images and we used no more than 80 samples from each category. As for the image feature extraction, the popular dense-sift algorithm is used to extract features form those images. Then, we quantize those features into 1000 visual-words with bag-of-words models. We also reduce the feature vectors to appropriate dimensions with PCA, to retain 97% of the variance. Thus, we can use the kernel trick to improve classification accuracy.

The experimental results on the Caltech datasets are shown in Table 6. The experimental process and parameter selection are the same as the previous benchmark experiment. From the perspesctive of prediction accuracy in Table 6, compared with other five algorithms, we can learn that our RKNN-STSVM yields the highest testing accuracy of most datasets. In addition, our proposed RKNN-STSVM improves the classification accuracy of KNN-STSVM and ensures that the computational time is not slower than KNN-STSVM. It can be noticed that the TSVM yields the lowest testing accuracy and KNN-LSTSVM yields the lowest computational cost. At last, our proposed RKNN-STSVM performs better than TSVM, Iν-TBSVM, KNN-LSTSVM on four image datasets. The optimal parameters of six algorithms used in the experiments are shown in Table 7.

5 The DCDM for accelerating RKNN-STSVM

5.1 The DCDM algorithm

Although Matlab’s built-in algorithm “Quadprog” can give a generally precise solution, the consumption of time is expensive for large-scale problems. In this section, to further improve the solving efficiency, a novel fast algorithm dual coordinate descent method (DCDM) [38] is embedded into the learning process of our RKNN-STSVM to accelerate the learning speed. DCDM is a kind of coordinate descent method since it aims to decompose an optimization problem into a series of single-variable sub-problems. That is, in each iteration, DCDM only updates one variable. By solving these sub-problems efficiently, we can realize the optimization process in less time. The procedure to embed DCDM into our RKNN-STSVM is shown below.

One of the dual QPP of the RKNN-STSVM is as follows [38]:

The optimization process begins with an initial point α0 ∈ Rn. The process from αk to αk+ 1 is referred as an outer iteration. In each outer iteration, we have n inner iterations, so that sequentially α1,α2,…,αn are updated. Each outer iteration generates vectors αk,i ∈ Rn,i = 1,2,…,n + 1, such that αk,1 = αk,αk,n+ 1 = αk+ 1, and

For updating αk,i to αk,i+ 1, we solve the following one-variable sub-problem:

where ei = [0,…,0,1,0,…,0]T. The objective function of (51) is a simple quadratic function of d:

where \(\triangledown _{i}f\) is the i th component of the gradient \(\triangledown f\). So (51) has an optimum at d = 0 (i.e., no need to update αi) if and only if

where \(\triangledown ^{P}f(\alpha )\) means the projected gradient

If (54) holds, we move to the index i + 1 without updating \(\alpha ^{k,i}_{i}\). Otherwise, we must find the optimal solution of (51). If Qii > 0, the solution is:

The stopping criterion we set for DCDM is ∥αk+ 1 − αk∥ < 𝜖 (𝜖 is a sufficient small real number) or maximum iterations reaching 1000. A flowchart of DCDM is given in Algorithm 1.

5.2 Experiments of the DCDM algorithm for RKNN-STSVM

Now we introduce this DCDM algorithm into the dual QPPs of our RKNN-STSVM, which have been given by (44) and (46). Similarly, compare models (44) and (51), the matrix Q in (51) is substituted by \(G_{\phi }(H_{\phi }^{T}DH_{\phi }+c_{2}J_{+}^{\phi }+c_{3}I)^{-1}G_{\phi }^{T}\). Notably, we have known that the \(F=diag(f^{(2)}_{1}, f^{(2)}_{2}, \ldots , f^{(2)}_{l_{2}})\) in our QPP is a diagonal matrix and \(f^{(2)}_{j}\) is either 0 or 1. That is to say, the component of α corresponding to 0 in F has no contribution to the optimization problem (44), so it can be ignored during the iteration of DCDM, which will further improve the computing speed.

In this experiment, twenty-five datasets are selected to validate the significance of applying this DCDM algorithm to speed up the operation. This initial value of α is a random vector between 0 and c1, and the stopping condition in the DCDM algorithm is chosen as [38]

We set 𝜖 = 10− 2 in the experiments. Averages and standard deviations of test accuracies (in %) and computation time derived by RKNN-STSVM and RKNN-STSVM solved with DCDM are shown in Table 8. The last column shows the speedup of DCDM algorithm. These results indicate that DCDM algorithm gives similar performance on testing accuracy with the Matlab built-in algorithm, while it makes our RKNN-STSVM obtain a faster learning speed, the largest speedup almost reaches to 5.76 times.

6 Conclusion

In this paper, we present an improved version of KNN-STSVM. We reformulate the primal problems of RKNN-STSVM by adding regularization terms in the objective functions to utilize the structural risk minimization principle and to remove singularity in the solution. Therefore, our RKNN-STSVM avoids the over-fitting problem to a certain degree and yields higher testing accuracy. Numerical experiments on twenty-seven benchmark datasets and image recognition datasets demonstrate the feasibility and validity of our RKNN-STSVM. The DCDM fast algorithm is further employed to speed up our RKNN-STSVM. The selection of an appropriate parameter k can greatly improve the testing accuracy of our algorithms. It should be pointed out that there are several parameters in our RKNN-STSVM, so it is meaningful to address the parameter selection in future work.

References

Vapnik V (1995) The nature of statistical learning theory. Springer, New York

Xu Y, Wang L (2005) Fault diagnosis system based on rough set theory and support vector machine. Lect Notes Comput Sci 3614:980–988

Zhang W, Yoshida T, Tang X (2008) Text classification based on multi-word with support vector machine. Knowl-Based Syst 21(8):879–886

Zhang C, Bi J, Xu S, Ramentol E, Fan G, Qiao B, Fujita H (2019) Multi-imbalance: an open-source software for multi-class imbalance learning. Knowl-Based Syst 174:137–143

Jayadeva Khemchandani R, Chandra S (2007) Twin support vector machines for pattern classification. IEEE Trans Pattern Anal Mach Intell 29(5):905–910

Mangasarian O, Wild E (2005) Multisurface proximal support vector machine classification via generalized eigenvalues. IEEE Trans Pattern Anal Mach Intell 28(1):69–74

Kumar M, Gopal M (2008) Application of smoothing technique on twin support vector machines. Pattern Recognit Lett 29(13):1842–1848

Peng X (2010) TSVR: an efficient twin support vector machine for regression. Neural Netw: the Official Journal of the International Neural Network Society 23(3):365–372

Xu Y, Wang L (2012) A weighted twin support vector regression. Knowl-Based Syst 33:92–101

Xu Y, Wang L (2014) K-nearest neighbor-based weighted twin support vector regression. Appl Intell 41 (1):299–309

Zhao J, Xu Y, Fujita H (2019) An improved non-parallel Universum support vector machine and its safe sample screening rule. Knowl-Based Syst 170:79–88

Shao Y, Zhang C, Wang X, Deng N (2011) Improvements on twin support vector machines. IEEE Trans Neural Netw 22(6):962–968

Tian Y, Ju X, Qi Z, Shi Y (2014) Improved twin support vector machine. Sci China Mater 57 (2):417–432

Wang H, Zhou Z, Xu Y (2018) An improved ν-twin bounded support vector machine. Appl Intell 48(4):1041–1053

Yang Z, Wu H, Li C, Shao Y (2016) Least squares recursive projection twin support vector machine for multi-class classification. Int J Mach Learn Cybern 7(3):411–426

Tanveer M, Khan M, Ho S (2016) Robust energy-based least squares twin support vector machines. Appl Intell 45(1):174– 186

Khemchandani R, Saigal P, Chandra S (2016) Improvements on ν-twin support vector machine. Neural Netw 79:97–107

Tanveer M (2015) Application of smoothing techniques for linear programming twin support vector machines. Knowl Inf Syst 45(1):191–214

Shao Y, Wang Z, Chen W, Deng N (2013) Least squares twin parametric-margin support vector machine for classification. Appl Intell 39(3):451–464

Shao Y, Deng N, Yang Z (2012) Least squares recursive projection twin support vector machine for classification. Pattern Recognit 45(6):2299–2307

Yeung D, Wang D, Ng W, Tsang E, Wang X (2007) Structured large margin machines: sensitive to data distributions. Mach Learn 68(2):171–200

Xue H, Chen S, Yang Q (2011) Structural regularized support vector machine: a framework for structural large margin classifier. IEEE Trans Neural Netw 22(4):573–587

Qi Z, Tian Y, Shi Y (2013) Structural twin support vector machine for classification. Knowl-Based Syst 43:74–81

Xu Y, Pan X, Zhou Z, Yang Z, Zhang Y (2015) Structural least square twin support vector machine for classification. Appl Intell 42(3):527–536

Ye Q, Zhao C, Gao S, Zheng H (2012) Weighted twin support vector machines with local information and its application. Neural Netw 35:31–39

Pan X, Luo Y, Xu Y (2015) K-nearest neighbor based structural twin support vector machine. Knowl-Based Syst 88:34–44

Mir A, Nasiri J (2018) KNN-based least squares twin support vector machine for pattern classification. Appl Intell 48(12):4551–4564

Tanveer M, Shubham K, Aldhaifallah M, Ho S (2016) An efficient regularized k-nearest neighbor-based weighted twin support vector regression. Knowl-Based Syst 94:70–87

Thongkam J, Xu G, Zhang Y, Huang F (2008) Support vector machine for outlier detection in breast cancer survivability prediction. Adv Web Network Technol Appl 4977:99–109

Cui W, Yan X (2009) Adaptive weighted least square support vector machine regression integrated with outlier detection and its application in QSAR. Chemom Intell Lab Syst 98(2):130– 135

Xu Y, Liu C (2013) A rough margin-based one class support vector machine. Neural Comput Appl 22 (6):1077–1084

Shao Y, Wang Z, Chen W, Deng N (2013) A regularization for the projection twin support vector machine. Knowl-Based Syst 37:203–210

Shao Y, Zhang C, Yang Z, Jing L, Deng N (2013) An 𝜖-twin support vector machine for regression. Neural Comput Appl 23(1):175–185

Tanveer M (2015) Robust and sparse linear programming twin support vector machines. Cogn Comput 7 (1):137–149

Osuna E, Freund R, Girosi F (1997) An improved training algorithm for support vector machines. Neural Netw Signal Process VII(17):276–285

Platt J (1999) Fast training of support vector machines using sequential minimal optimization. Advances in kernel methods. MIT Press, Cambridge

Mavroforakis M, Theodoridis S (2006) A geometric approach to support vector machine (svm) classification. IEEE Trans Neural Netw 17(3):671–682

Hsieh C, Chang K, Lin C, Keerthi S, Sundararajan S (2008) A dual coordinate descent method for large-scale linear SVM. In: Proceedings of the international conference on machine learning, vol 9. ACM, pp 408–415

Ward J (1963) Hierarchical grouping to optimize an objective function. Publ Am Stat Assoc 58(301):236–244

Salvador S, Chan P (2004) Determining the number of clusters/segments in hierarchical clustering/segmentation algorithms. In: 16th IEEE international conference on tools with artificial intelligence. Proceedings, pp 576–584

Xue H, Chen S, Yang Q (2009) Discriminatively regularized least-squares classification. Pattern Recognit 42(1):93–104

Chang C, Lin C (2011) LIBSVM: a library for support vector machines. ACM

Ar J (2006) Statistical comparisons of classifiers over multiple data sets. J Mach Learn Res 7:1–30

Salvador G, Alberto F, Julián L, Herrera F (2010) Advanced nonparametric tests for multiple comparisons in the design of experiments in computational intelligence and data mining: experimental analysis of power. Inf Sci 180(10):2044–2064

Feifei L, Fergus R, Perona P (2006) One-shot learning of object categories. IEEE Trans Pattern Anal Mach Intell 28(4):594–611

Griffin G, Holub A, Perona P (2006) The Caltech 256, Caltech Technical Report

Acknowledgments

The authors gratefully acknowledge the helpful comments of the reviewers, which have improved the presentation. This work was supported in part by National Natural Science Foundation of China (No.11671010) and Beijing Natural Science Foundation (No. 4172035).

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Xie, F., Xu, Y. An efficient regularized K-nearest neighbor structural twin support vector machine. Appl Intell 49, 4258–4275 (2019). https://doi.org/10.1007/s10489-019-01505-5

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10489-019-01505-5