Abstract

In this paper, we propose a new classifier, termed the improved ν-twin bounded support vector machine (Iν-TBSVM), which is motivated by the ν-twin support vector machine (ν-TSVM). Like ν-TSVM, Iν-TBSVM determines two nonparallel hyperplanes such that each is close to its own class and at least \(\rho_{+}\) or \(\rho_{-}\) away from the other class. The main advantage of Iν-TBSVM over ν-TSVM is that it avoids the expensive matrix inverse operation when solving the dual problems. The proposed classifier is therefore more efficient on large-scale problems while retaining comparable generalization ability. Iν-TBSVM also implements the structural risk minimization principle by introducing a regularization term into the objective function. More importantly, the kernel trick can be applied directly to Iν-TBSVM in the nonlinear case, so the nonlinear Iν-TBSVM is theoretically superior to the nonlinear ν-TSVM. In addition, we prove that ν-SVM is a special case of Iν-TBSVM. The properties of the parameters in Iν-TBSVM are discussed and verified by two artificial experiments. Numerical experiments on twenty-two benchmarking datasets are performed to investigate the validity of the proposed algorithm in both the linear and nonlinear cases. The experimental results show the effectiveness of the proposed algorithm.

1 Introduction

The support vector machine (SVM) [1], motivated by Vapnik’s statistical learning theory, is a state-of-the-art algorithm for nonlinear pattern classification, together with multilayered neural network models. Compared with other machine learning methods such as artificial neural networks [2], SVM has several advantages. SVM solves a quadratic programming problem (QPP), which ensures that once an optimal solution is obtained, it is the unique global solution. SVM implements the structural risk minimization principle rather than the empirical risk minimization principle, and thereby minimizes an upper bound on the generalization error. The kernel function [3] allows SVM to map training vectors into a high-dimensional space directly. Building on these techniques, SVM has been successfully applied in many fields [4–8], and various improvements have been suggested [9–17].

Solving the entire QPP in SVM is time consuming, which remains a challenge. To improve the computational speed, Jayadeva et al. proposed the twin support vector machine (TSVM) [18] for binary classification. TSVM seeks two nonparallel proximal hyperplanes for the two classes of samples, such that each hyperplane is close to one class and as far as possible from the other. The greatest advantage of TSVM over SVM is that it solves two smaller-sized QPPs rather than a single large one, which makes TSVM faster than SVM. Many variants of TSVM have since been proposed [19], such as smooth TSVM [20], least squares TSVM [21], twin support vector regression [22], twin bounded SVM (TBSVM) [23], and structure information based TSVM [24]. Tian et al. proposed an improved TSVM [25] that avoids the matrix inversions required by most existing TSVMs.

In TSVM, the patterns of one class are at least a unit distance away from the hyperplane of the other class, which may increase the number of support vectors and thus lead to poor generalization ability. Peng proposed ν-TSVM [26], in which the unit distance of TSVM is replaced by a variable ρ that is optimized in the primal problem. The parameter ν in ν-TSVM controls the bounds on the fractions of support vectors and margin errors. In addition, ν-TSVM can be interpreted as a pair of minimum generalized Mahalanobis-norm problems on two reduced convex hulls. Building on ν-TSVM, many algorithms have been studied, such as ν-twin bounded SVM (ν-TBSVM) [27], rough margin based ν-TSVM [28, 29] and a novel improved ν-TSVM [30]. Nevertheless, all of these ν-TSVMs involve an expensive matrix inverse operation, which makes them time consuming even when the Sherman-Morrison-Woodbury formula [31–33] for matrix inversion is used. Moreover, with the linear kernel, the nonlinear ν-TSVMs do not reduce to the linear case, whereas our algorithm does.

In this paper, we present an improvement on ν-TSVM, called the improved ν-twin bounded support vector machine (Iν-TBSVM). Our Iν-TBSVM possesses the following attractive advantages:

1. Iν-TBSVM requires less computation time because it avoids the matrix inverse operation when solving the dual QPPs. It is therefore better suited to large-scale problems.

2. Unlike ν-TSVM, the nonlinear Iν-TBSVM reduces to the linear case when the linear kernel is used.

3. As in ν-TBSVM, the structural risk is minimized by introducing a regularization term into the objective function, which helps the algorithm achieve high testing accuracy.

The remainder of the paper is organized as follows. Section 2 gives a brief overview of ν-TSVM and ν-TBSVM. Section 3 introduces Iν-TBSVM in detail. Section 4 compares Iν-TBSVM with ν-TBSVM and TBSVM. Section 5 presents two artificial experiments that verify the property of the parameters in Iν-TBSVM and twenty-two benchmarking experiments that investigate the effectiveness of the proposed algorithm. Section 6 concludes the paper.

2 Related works

In this section, we review the basics of ν-TSVM and ν-TBSVM and summarize their drawbacks.

2.1 ν-twin support vector machine

Consider a binary classification problem with p samples belonging to class +1 and q samples belonging to class −1 in the n-dimensional real space \({{\mathbb {R}}^{n}}\). Let the matrices \(\mathbf{A}\in\mathbb{R}^{p\times n}\) and \(\mathbf{B}\in\mathbb{R}^{q\times n}\) denote the positive and negative samples, respectively.

The ν-TSVM [26] generates two nonparallel hyperplanes instead of the single one in the standard SVM. They are obtained by solving two smaller-sized QPPs rather than a single large one. The ν-TSVM seeks the following pair of nonparallel hyperplanes:

$$\mathbf{w}_{+}^{T}\mathbf{x}+b_{+}=0 \quad \text{and} \quad \mathbf{w}_{-}^{T}\mathbf{x}+b_{-}=0 $$(1)

for linear case, and it seeks the following kernel surfaces:

for nonlinear case, where \(\mathbf {C}=[\mathbf {A}^{T} \,\, \mathbf {B}^{T}] \in \mathbb {R}^{n\times (p+q)}\), such that each hyperplane is closer to one class and is as far as possible from the other.

The dual problems of ν-TSVM are as follows:

and

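where \(\mathbf{G}=[\mathbf{B}\ \ \mathbf{e}_{-}]\) and \(\mathbf{H}=[\mathbf{A}\ \ \mathbf{e}_{+}]\) for linear case, and \(\mathbf{G}=[K(\mathbf{B},\mathbf{C}^{T})\ \ \mathbf{e}_{-}]\) and \(\mathbf{H}=[K(\mathbf{A},\mathbf{C}^{T})\ \ \mathbf{e}_{+}]\) for nonlinear case.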

A new sample x is assigned to a class i (i = +1,−1) by

where |⋅| is the perpendicular distance of the new sample x from the two hyperplanes (1).
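The assignment rule can be sketched in a few lines of Python. This is a minimal illustration that assumes the perpendicular distance takes the usual form \(|\mathbf{w}^{T}\mathbf{x}+b|/\|\mathbf{w}\|\); the function names and the hyperplane coefficients are hypothetical and chosen only for the example.

```python
import math


def hyperplane_distance(w, b, x):
    """Perpendicular distance from point x to the hyperplane <w, x> + b = 0."""
    norm = math.sqrt(sum(wi * wi for wi in w))
    return abs(sum(wi * xi for wi, xi in zip(w, x)) + b) / norm


def assign_class(x, w_pos, b_pos, w_neg, b_neg):
    """Return +1 if x is nearer the positive-class hyperplane, otherwise -1."""
    d_pos = hyperplane_distance(w_pos, b_pos, x)
    d_neg = hyperplane_distance(w_neg, b_neg, x)
    return 1 if d_pos <= d_neg else -1


# Hypothetical nonparallel hyperplanes (not taken from the paper).
w_pos, b_pos = [1.0, 0.2], 2.0    # passes through (-2, 0)
w_neg, b_neg = [1.0, -0.3], -3.0  # passes through (3, 0)

print(assign_class([-2.0, 0.0], w_pos, b_pos, w_neg, b_neg))
print(assign_class([3.0, 0.0], w_pos, b_pos, w_neg, b_neg))
```

Ties are resolved in favor of the positive class here, which is an arbitrary choice of this sketch.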

2.2 ν-twin bounded support vector machine

Xu and Guo [27] introduced a regularization term into the objective function in the ν-TBSVM. The linear QPPs of ν-TBSVM are denoted as follows,

and

By introducing the Lagrange multipliers α and γ, their dual problems are derived as,

and

where G = [B e −] and H = [A e +].

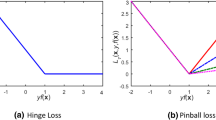

From the dual QPPs (3), (4), (8) and (9), we can see that ν-TSVM and ν-TBSVM must compute the inverses of \(\mathbf{H}^{T}\mathbf{H}\) and \(\mathbf{G}^{T}\mathbf{G}\), and of \(\mathbf{H}^{T}\mathbf{H}+c_{1}\mathbf{I}\) and \(\mathbf{G}^{T}\mathbf{G}+c_{2}\mathbf{I}\), respectively, which is time consuming. In the linear case these matrices are of size (n + 1) × (n + 1), so the linear ν-TSVM and ν-TBSVM are practical only for small n and have difficulty with high-dimensional datasets. In the nonlinear case the size is (l + 1) × (l + 1), where l is the number of training samples, so they are practical only for small-scale problems. Moreover, the nonlinear ν-TSVM and ν-TBSVM with the linear kernel are not equivalent to the linear ν-TSVM and ν-TBSVM, respectively. We illustrate this with a toy example in Fig. 1.

Fig. 1 Nonlinear ν-TSVM with the linear kernel is not equivalent to the linear ν-TSVM. The points “*” and “o” are generated from two normal distributions. Here \(\nu_{1}=\nu_{2}=0.5\). (a) Two nonparallel hyperplanes obtained from the linear ν-TSVM; (b) two nonparallel hyperplanes obtained from the nonlinear ν-TSVM with the linear kernel
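For context, the Sherman-Morrison-Woodbury identity cited in Section 1 can be written for the regularized matrix of the linear ν-TBSVM as below. This is the standard identity applied to \(\mathbf{H}\in\mathbb{R}^{p\times(n+1)}\) with \(c_{1}>0\); it is given as an illustration and is not a step taken from [27].

$$(\mathbf{H}^{T}\mathbf{H}+c_{1}\mathbf{I}_{n+1})^{-1}=\frac{1}{c_{1}}\left[\mathbf{I}_{n+1}-\mathbf{H}^{T}(c_{1}\mathbf{I}_{p}+\mathbf{H}\mathbf{H}^{T})^{-1}\mathbf{H}\right]$$

The identity replaces an (n + 1) × (n + 1) inverse with a p × p one, which helps when p is much smaller than n + 1, but a matrix inverse is still required.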

3 An improved ν-twin bounded support vector machine

In this section, we propose a new algorithm based on ν-TSVM, termed the improved ν-twin bounded support vector machine (Iν-TBSVM), which inherits the essence of the traditional ν-SVM and overcomes the aforementioned drawbacks of ν-TSVM and ν-TBSVM. Specifically, Iν-TBSVM constructs two nonparallel hyperplanes by implementing the structural risk minimization principle. Owing to the introduction of two new variables, Iν-TBSVM does not require the expensive matrix inverse operation, and the nonlinear case reduces directly to the linear case when the linear kernel is applied.

3.1 Linear case

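For the linear case, the Iν-TBSVM solves the following pair of QPPs,

$$\begin{array}{@{}rcl@{}} && {\mathop{\min} }\quad \frac{c_{1}}{2}(\|\mathbf{w}_{+}\|^{2}+b_{+}^{2})+\frac{1}{2}\boldsymbol{\eta}_{+}^{T}\boldsymbol{\eta}_{+}-\nu_{1}\rho_{+}+\frac{1}{q}\mathbf{e}_{-}^{T}\boldsymbol{\xi}_{-} \\ && \, s.t.\quad \mathbf{A}\mathbf{w}_{+}+\mathbf{e}_{+}b_{+}=\boldsymbol{\eta}_{+}, \\ && \qquad -(\mathbf{B}\mathbf{w}_{+}+\mathbf{e}_{-}b_{+})\ge \rho_{+}\mathbf{e}_{-}-\boldsymbol{\xi}_{-}, \\ && \qquad \boldsymbol{\xi}_{-}\ge \mathbf{0}, \, \rho_{+}\ge 0, \end{array} $$(10)

and

$$\begin{array}{@{}rcl@{}} && {\mathop{\min} }\quad \frac{c_{2}}{2}(\|\mathbf{w}_{-}\|^{2}+b_{-}^{2})+\frac{1}{2}\boldsymbol{\eta}_{-}^{T}\boldsymbol{\eta}_{-}-\nu_{2}\rho_{-}+\frac{1}{p}\mathbf{e}_{+}^{T}\boldsymbol{\xi}_{+} \\ && \, s.t.\quad \mathbf{B}\mathbf{w}_{-}+\mathbf{e}_{-}b_{-}=\boldsymbol{\eta}_{-}, \\ && \qquad \mathbf{A}\mathbf{w}_{-}+\mathbf{e}_{+}b_{-}\ge \rho_{-}\mathbf{e}_{+}-\boldsymbol{\xi}_{+}, \\ && \qquad \boldsymbol{\xi}_{+}\ge \mathbf{0}, \, \rho_{-}\ge 0, \end{array} $$(11)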

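where \(c_{1}, c_{2}\) are the penalty parameters; \(\boldsymbol{\xi}_{+}, \boldsymbol{\xi}_{-}\) are slack vectors; \(\mathbf{e}_{+}, \mathbf{e}_{-}\) are vectors of ones of appropriate dimensions; \(\boldsymbol{\eta}_{+}, \boldsymbol{\eta}_{-}\) are vectors of appropriate dimensions; \(\nu_{1}, \nu_{2}\) are chosen a priori; \(\rho_{+}, \rho_{-}\) are additional variables.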

Compared with QPPs (6) and (7), we introduce only two new variables, \(\boldsymbol{\eta}_{+}\) and \(\boldsymbol{\eta}_{-}\), in (10) and (11). For conciseness, we derive only the dual problem of (10).

The first term in the objective function is the regularization term, so Iν-TBSVM takes the structural risk minimization principle into account to improve generalization ability. The distance between the hyperplane \(\langle\mathbf{w}_{+},\mathbf{x}\rangle+b_{+}=0\) and the boundary hyperplane \(\langle\mathbf{w}_{+},\mathbf{x}\rangle+b_{+}=-\rho_{+}\) can be measured by \(\rho_{+}/\sqrt{\|\mathbf{w}_{+}\|^{2}+b_{+}^{2}}\), which is maximized by minimizing \(\frac{c_{1}}{2}(\|\mathbf{w}_{+}\|^{2}+b_{+}^{2})-\nu_{1}\rho_{+}\). The distance between these two parallel hyperplanes is therefore made as large as possible.

The Lagrangian function corresponding to Iν-TBSVM (10) is as follows,

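where \(\boldsymbol{\alpha}=(\alpha_{1},\ldots,\alpha_{q})^{T}\), \(\boldsymbol{\beta}=(\beta_{1},\ldots,\beta_{q})^{T}\), \(\boldsymbol{\lambda}=(\lambda_{1},\ldots,\lambda_{p})^{T}\) and s are Lagrange multipliers. Differentiating the Lagrangian function L with respect to the variables \(\mathbf{w}_{+}\), \(b_{+}\), \(\boldsymbol{\eta}_{+}\), \(\rho_{+}\), \(\boldsymbol{\xi}_{-}\) yields the following Karush-Kuhn-Tucker (KKT) conditions: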

Since β ≥0, from (17) we have

Since s ≥ 0, from (16) we also get

Equations (13) and (14) imply that

Using (13), (14) and (15), we derive the dual formulation of the QPP (10) as follows,

where

and I is the p × p identity matrix, E is the (p + q) × (p + q) matrix with all entries equal to 1.

Similarly, the dual formulation of (11) is derived as

where

and I is the q × q identity matrix, E is the (p + q) × (p + q) matrix with all entries equal to 1. Once the optimal solutions (𝜃,γ) are obtained, we can derive

To compute \(\rho_{\pm}\), we choose the samples \(\mathbf{x}_{i}\) (or \(\mathbf{x}_{j}\)) with \(0<\alpha_{i}<\frac{1}{p}\) (or \(0<\alpha_{j}<\frac{1}{q}\)), which means \(\xi_{i}=0\) (or \(\xi_{j}=0\)) and \(\mathbf{w}_{-}^{T}\mathbf{x}_{i}+b_{-}=\rho_{-}\) (or \(\mathbf{w}_{+}^{T}\mathbf{x}_{j}+b_{+}=-\rho_{+}\)) according to the KKT conditions. Then \(\rho_{\pm}\) can be calculated by

The QPPs (27) and (29) admit analogous conclusions, so we consider only QPP (27). For convenience, we first give an equivalent formulation of QPP (27). The optimal parameter \(\rho_{+}\) in QPP (10) is in fact larger than zero. Under this condition, we give the following Proposition 1.

Proposition 1

The QPP (27) can be transformed into the following QPP.

The QPPs (27) and (34) differ only in the second constraint: the inequality constraint \(\mathbf{e}_{-}^{T}\boldsymbol{\alpha}\geq\nu_{1}\) in (27) becomes the equality constraint \(\mathbf{e}_{-}^{T}\boldsymbol{\alpha}=\nu_{1}\) in (34).

Proof

By the assumption \(\rho_{+}>0\) and the KKT condition \(s\rho_{+}=0\), the Lagrange multiplier satisfies \(s=0\). Substituting this into (16) gives the equality constraint \(\mathbf{e}_{-}^{T}\boldsymbol{\alpha}=\nu_{1}\). □

As in ν-SVM, ν-TSVM and ν-TBSVM, the parameter ν in Iν-TBSVM has a theoretical interpretation, which we discuss in the following propositions.

Proposition 2

Denote by \(q_{2}\) the number of support vectors in the negative class. Then \(\nu_{1}\leq\frac{q_{2}}{q}\), which implies that \(\nu_{1}\) is a lower bound on the fraction of support vectors in the negative class.

Proposition 3

Denote by \(p_{2}\) the number of boundary errors in the negative class. Then \(\nu_{1}\geq\frac{p_{2}}{q}\), which implies that \(\nu_{1}\) is an upper bound on the fraction of boundary errors in the negative class.

Proof

The proofs of Propositions 2 and 3 are similar to those of Propositions 2 and 3 in [27]. These results extend to the nonlinear case by introducing the kernel function. □
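The two bounds can be checked numerically on any feasible dual vector. The sketch below assumes the dual constraints \(0\le\alpha_{i}\le\frac{1}{q}\) and \(\mathbf{e}_{-}^{T}\boldsymbol{\alpha}=\nu_{1}\) (the latter from Proposition 1), treats samples with \(\alpha_{i}>0\) as support vectors, and uses the fact that a boundary error requires \(\alpha_{i}=\frac{1}{q}\). The function name and the sample vector are hypothetical.

```python
def nu_fractions(alpha, tol=1e-9):
    """Return (fraction of alphas at the upper bound, fraction of support vectors)."""
    q = len(alpha)
    upper = 1.0 / q  # assumed box constraint: 0 <= alpha_i <= 1/q
    n_support = sum(1 for a in alpha if a > tol)
    # Every boundary error has alpha_i = 1/q, so this count bounds p_2 from above.
    n_at_bound = sum(1 for a in alpha if a >= upper - tol)
    return n_at_bound / q, n_support / q


# Hypothetical dual solution for q = 5 negative samples.
alpha = [0.2, 0.2, 0.1, 0.0, 0.0]
nu_1 = sum(alpha)  # equality constraint e^T alpha = nu_1
error_bound, sv_fraction = nu_fractions(alpha)
print(error_bound, nu_1, sv_fraction)
```

Because each \(\alpha_{i}\) is at most \(\frac{1}{q}\) and the entries sum to \(\nu_{1}\), at least \(\nu_{1}q\) entries must be nonzero and at most \(\nu_{1}q\) can sit at the upper bound, which is the content of the two propositions.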

It is worth mentioning that, given the expressions of Q and \(\boldsymbol {\tilde {Q}}\), the dual problems (27) and (29) do not involve the matrix inverse operation. More importantly, they can be easily extended to the nonlinear case.

3.2 Nonlinear case

By introducing the kernel function \(K(\mathbf{x}_{i},\mathbf{x}_{j})=(\varphi(\mathbf{x}_{i})\cdot\varphi(\mathbf{x}_{j}))\) and the corresponding transformation \(\mathbf{x}\mapsto\varphi(\mathbf{x})\in\mathcal{H}\), where \(\mathcal{H}\) is a Hilbert space, we obtain the nonlinear Iν-TBSVM as follows,

and

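where \(c_{1}, c_{2}, \nu_{1}, \nu_{2}\) are chosen a priori; \(\boldsymbol{\xi}_{+}, \boldsymbol{\xi}_{-}\) are slack vectors; \(\mathbf{e}_{+}, \mathbf{e}_{-}\) are vectors of ones of appropriate dimensions; \(\boldsymbol{\eta}_{+}, \boldsymbol{\eta}_{-}\) are vectors of appropriate dimensions.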

Exactly as in the linear case, we derive the dual formulation of (35) as follows,

where

Similarly, the dual of the QPP (36) is derived as

where

Once the optimal solutions (λ,α) and (𝜃,γ) are obtained, the pair of nonparallel hyperplanes in the Hilbert space can be obtained as follows,

where \({{b}_{+}}=\mathbf {e}_{+}^{T}\boldsymbol {\lambda } +\mathbf {e}_{-}^{T}\boldsymbol {\alpha }\), and

where \({{b}_{-}}=\mathbf {e}_{-}^{T}\boldsymbol {\theta } +\mathbf {e}_{+}^{T}\boldsymbol {\gamma } \).

Clearly, the dual QPPs (37) and (39) do not involve the matrix inverse operation, and they reduce to problems (27) and (29) of the linear Iν-TBSVM when the linear kernel is applied.

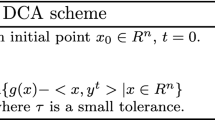

The flowchart of the nonlinear Iν-TBSVM is described as follows.

3.3 Analysis of algorithm

In this section, we summarize the advantages of the proposed Iν-TBSVM.

1) By introducing a new pair of variables into ν-TBSVM, Iν-TBSVM avoids the matrix inverse operation, which is unavoidable in the other ν-TSVMs.

2) When the linear kernel is used, Iν-TBSVM reduces to the linear case directly, which is not true of the other ν-TSVMs.

3) Iν-TBSVM gives a natural and reasonable interpretation of the regularization terms in both the linear and nonlinear cases.

4) ν-SVM is a special case of Iν-TBSVM. Combining QPPs (10) and (11) gives the following problem,

$$\begin{array}{@{}rcl@{}} && {\mathop{\min} }\quad \frac{{{c}_{1}}}{2}(||{{\mathbf{w}}_{+}}|{{|}^{2}}+b_{+}^{2})+\frac{{{c}_{2}}}{2}(||{{\mathbf{w}}_{-}}|{{|}^{2}}+b_{-}^{2}) \\ & &+\frac{1}{2}(\boldsymbol{\eta}_{+}^{T}{{\boldsymbol{\eta} }_{+}}+\boldsymbol{\eta}_{-}^{T}{{\boldsymbol{\eta} }_{-}})-{{\nu}_{1}}{{\rho}_{+}}-{{\nu}_{2}}{{\rho}_{-}}+\frac{1}{q}\mathbf{e}_{-}^{T}{{\boldsymbol{\xi} }_{-}}+\frac{1}{p}\mathbf{e}_{+}^{T}{{\boldsymbol{\xi} }_{+}} \\ && \, s.t.\quad \mathbf{A}{{\mathbf{w}}_{+}}+{{\mathbf{e}}_{+}}{{b}_{+}}={{\boldsymbol{\eta} }_{+}}, \\ && \qquad \, \mathbf{B}{{\mathbf{w}}_{-}}+{{\mathbf{e}}_{-}}{{b}_{-}}={{\boldsymbol{\eta} }_{-}}, \\ && \qquad -(\mathbf{B}{{\mathbf{w}}_{+}}+{{\mathbf{e}}_{-}}{{b}_{+}})\ge {{\rho}_{+}}{{\mathbf{e}}_{-}}-{{\boldsymbol{\xi} }_{-}}, \\ && \qquad \mathbf{A}{{\mathbf{w}}_{-}}+{{\mathbf{e}}_{+}}{{b}_{-}}\ge {{\rho}_{-}}{{\mathbf{e}}_{+}}-{{\boldsymbol{\xi} }_{+}}, \\ && \qquad {{\boldsymbol{\xi} }_{-}}\ge \mathbf{0}, \, {{\rho}_{+}}\ge 0, \\ && \qquad {{\boldsymbol{\xi} }_{+}}\ge \mathbf{0},\,{{\rho}_{-}}\ge 0. \end{array} $$(43)

It is easy to prove that the solutions of QPP (43) are the solutions of QPPs (10) and (11). If we delete the term \(\frac {1}{2}(\boldsymbol {\eta }_{+}^{T}{{\boldsymbol {\eta } }_{+}}+\boldsymbol {\eta } _{-}^{T}{{\boldsymbol {\eta } }_{-}})\) from the objective function of QPP (43), then QPP (43) reduces to

$$\begin{array}{@{}rcl@{}} && {\mathop{\min} }\quad \frac{{{c}_{1}}}{2}(||{{\mathbf{w}}_{+}}|{{|}^{2}}+b_{+}^{2})+\frac{{{c}_{2}}}{2}(||{{\mathbf{w}}_{-}}|{{|}^{2}}+b_{-}^{2}) \\ && \qquad -{{\nu}_{1}}{{\rho}_{+}}-{{\nu}_{2}}{{\rho}_{-}}+\frac{1}{q}\mathbf{e}_{-}^{T}{{\boldsymbol{\xi} }_{-}}+\frac{1}{p}\mathbf{e}_{+}^{T}{{\boldsymbol{\xi} }_{+}} \\ && \, s.t.\quad -(\mathbf{B}{{\mathbf{w}}_{+}}+{{\mathbf{e}}_{-}}{{b}_{+}})\ge {{\rho}_{+}}{{\mathbf{e}}_{-}}-{{\boldsymbol{\xi} }_{-}}, \\ && \qquad \mathbf{A}{{\mathbf{w}}_{-}}+{{\mathbf{e}}_{+}}{{b}_{-}}\ge {{\rho}_{-}}{{\mathbf{e}}_{+}}-{{\boldsymbol{\xi} }_{+}}, \\ && \qquad {{\boldsymbol{\xi} }_{-}}\ge \mathbf{0}, \, {{\rho}_{+}}\ge 0, \\ && \qquad {{\boldsymbol{\xi} }_{+}}\ge \mathbf{0},\,{{\rho}_{-}}\ge 0. \end{array} $$(44)

Furthermore, if we require solutions satisfying \(\mathbf{w}_{+}=\mathbf{w}_{-}\), \(b_{+}=b_{-}\), \(\rho_{+}=\rho_{-}\), we only need to solve the following special case of QPP (44),

$$\begin{array}{@{}rcl@{}} && {\mathop{\min} }\quad \frac{1}{2}(||{\mathbf{w}}|{{|}^{2}}+b^{2})-{\nu} {\rho} +\frac{1}{l}(\mathbf{e}_{-}^{T}{{\boldsymbol{\xi} }_{-}}+\mathbf{e}_{+}^{T}{{\boldsymbol{\xi} }_{+}}) \\ && \, s.t.\quad -(\mathbf{B}{\mathbf{w}}+{{\mathbf{e}}_{-}}{b})\ge {\rho} {{\mathbf{e}}_{-}}-{{\boldsymbol{\xi} }_{-}}, \\ && \qquad \mathbf{A}{\mathbf{w}}+{{\mathbf{e}}_{+}}{b}\ge \rho {{\mathbf{e}}_{+}}-{{\boldsymbol{\xi} }_{+}}, \\ && \qquad {{\boldsymbol{\xi} }_{-}}\ge \mathbf{0}, \, {{\boldsymbol{\xi} }_{+}}\ge \mathbf{0}, \, \rho \ge 0. \end{array} $$(45)

It is obvious that (45) is the ν-SVM for the binary classification problem. In other words, the ν-SVM with parallel hyperplanes is a special case of Iν-TBSVM with nonparallel hyperplanes. Iν-TBSVM is more flexible than ν-SVM and has better generalization ability.

4 Comparison with other algorithms

4.1 Iν-TBSVM vs. ν-TBSVM

The matrix inverse operation is unavoidable in ν-TBSVM. In the linear case, ν-TBSVM must compute two matrix inverses of order (n + 1), where n denotes the dimension of the training samples. In the nonlinear case, it must compute two matrix inverses of order (l + 1), where l denotes the number of training samples. Therefore, ν-TBSVM is not suitable for large-scale problems. In contrast, Iν-TBSVM avoids the matrix inverse operation entirely.

In addition, ν-TBSVM with the linear kernel is not equivalent to the linear case. That is, the nonlinear formulation of ν-TBSVM does not yield the exact solution of the linear problem even when the linear kernel is used. In contrast, Iν-TBSVM reduces to the linear case directly when the linear kernel is used. Therefore, the nonlinear Iν-TBSVM is theoretically superior to the nonlinear ν-TBSVM.

Their common ground is that they both implement the structural risk minimization principle.

4.2 Iν-TBSVM vs. TBSVM

Both Iν-TBSVM and TBSVM [23] find two nonparallel hyperplanes to classify the two classes of training samples.

In TBSVM, the patterns of one class are at least a unit distance away from the hyperplane of the other class, which may increase the number of support vectors and thus lead to poor generalization ability. In Iν-TBSVM, the unit distance is replaced by a variable ρ that is optimized in the primal problem, and the parameters ν control the bounds on the fractions of support vectors and margin errors. The parameters in Iν-TBSVM therefore have a better theoretical interpretation than those in TBSVM.

5 Numerical experiments

To validate the advantages of our algorithm, we conduct experiments on two artificial datasets and twenty-two benchmarking datasets in this section. On the artificial datasets we verify the property of the parameter ν in Iν-TBSVM. On the twenty-two benchmarking datasets, we compare Iν-TBSVM with three other algorithms, i.e., TSVM, ν-TSVM and ν-TBSVM, in terms of both accuracy and running time. All experiments are carried out in Matlab R2014a on Windows 7, running on a PC with an Intel Core i3-4160 Duo CPU (3.60 GHz) and 4.00 GB of RAM.

5.1 Experiments on two artificial datasets

In this subsection, two artificial datasets are used to show the properties of the proposed Iν-TBSVM. First, we randomly generate two classes of points, with 50 points in each class. Both follow two-dimensional normal distributions, with positive samples \(X_{1}\sim N(-2,2)\) and negative samples \(X_{2}\sim N(3.5,2)\). Their distributions are shown in Fig. 2. With Iν-TBSVM we obtain a pair of nonparallel hyperplanes for the two classes of samples, also shown in Fig. 2. The green and pink dashed lines represent the positive hyperplane (\(f_{+}=0.002x_{1}+0.0018x_{2}-0.0188\)) and the negative hyperplane (\(f_{-}=-0.0022x_{1}-0.0022x_{2}+0.0044\)), respectively, and the red solid line is the final classification hyperplane.
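A dataset of this kind can be generated with the Python standard library, as in the sketch below. It assumes that both coordinates are drawn independently and that the second argument of N(·,·) is a standard deviation; the text does not say whether it is a variance or a standard deviation, and the seed and function name are hypothetical.

```python
import random


def make_gaussian_class(n_points, mean, spread, rng):
    """Draw n_points two-dimensional points with independent N(mean, spread) coordinates."""
    return [(rng.gauss(mean, spread), rng.gauss(mean, spread)) for _ in range(n_points)]


rng = random.Random(0)  # fixed seed so the sketch is reproducible
positive = make_gaussian_class(50, -2.0, 2.0, rng)  # class +1
negative = make_gaussian_class(50, 3.5, 2.0, rng)   # class -1
print(len(positive), len(negative))
```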

To further investigate the property of the parameter ν in Iν-TBSVM, we present Fig. 3, where the x-axis denotes the value of the parameter ν. The blue curve shows how the fraction of support vectors in the negative class changes with \(\nu_{1}\), and the green curve shows how \(\frac{p_{2}}{q}\) changes with \(\nu_{1}\). This artificial experiment confirms the property of ν described in Propositions 2 and 3: \(\nu_{1}\) is a lower bound on the fraction of support vectors in the negative class and an upper bound on the fraction of boundary errors in the negative class. This helps in understanding the algorithm and in choosing the parameter.

Second, we conduct the experiment on the crossplane (XOR) dataset, which has 101 points in each class. As before, we draw the pair of nonparallel hyperplanes, shown in Fig. 4. Fig. 5 confirms Propositions 2 and 3 on the XOR dataset as well.

5.2 Experiments on benchmarking datasets

We also test the effectiveness of the proposed algorithm on a collection of twenty-two benchmarking datasets from the UCI machine learning repository. These datasets are constructed for binary classification problems. To make the results more convincing, we use 10-fold cross-validation for each experiment. More specifically, each dataset is split randomly into ten subsets; one subset is reserved as the test set and the remaining data are used for training. This process is repeated ten times, once for each subset.
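The splitting procedure can be sketched as follows. This is a generic 10-fold split written with the standard library only; the paper's experiments were run in Matlab, so the function name, the seed and the way uneven folds are handled are assumptions of this sketch.

```python
import random


def k_fold_indices(n_samples, k=10, seed=0):
    """Yield (train_indices, test_indices) pairs for k-fold cross-validation."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Fold i takes every k-th shuffled index starting at position i.
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        test = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, test


for train, test in k_fold_indices(25):
    print(len(train), len(test))
```

Each sample appears in exactly one test fold, so every sample is used once for testing and nine times for training.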

5.2.1 Parameters selection

Choosing the optimal parameters is an important problem for SVMs. In our experiments, we adopt the grid search method [34] to obtain the optimal parameters. The Gaussian kernel function \(K(\mathbf{x}_{i},\mathbf{x}_{j})=\exp(-\|\mathbf{x}_{i}-\mathbf{x}_{j}\|^{2}/r^{2})\) is used because it is widely employed and yields good generalization performance. In all four algorithms, the Gaussian kernel parameter r is selected from the set \(\{2^{i}\mid i=-5,-4,\ldots,10\}\). For brevity, we let \(c_{1}=c_{2}\) in TSVM, \(\nu_{1}=\nu_{2}\) in ν-TSVM, and \(c_{1}=c_{2}\), \(\nu_{1}=\nu_{2}\) in ν-TBSVM and Iν-TBSVM. The parameter \(c_{1}\) is selected from the set \(\{2^{i}\mid i=-10,-9,\ldots,10\}\), and the parameter \(\nu_{1}\) from the set \(\{0.1,0.2,\ldots,0.9\}\).
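The kernel and the search grids described above can be written down directly. The sketch below enumerates the grid for the nonlinear Iν-TBSVM under the stated simplification \(c_{1}=c_{2}\), \(\nu_{1}=\nu_{2}\); the names `gaussian_kernel`, `R_GRID`, `C_GRID`, `NU_GRID` and `parameter_grid` are hypothetical, and the training and scoring of each candidate are left out.

```python
import math
from itertools import product


def gaussian_kernel(x, y, r):
    """Gaussian kernel exp(-||x - y||^2 / r^2)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq_dist / r ** 2)


R_GRID = [2.0 ** i for i in range(-5, 11)]   # kernel parameter r: 2^-5, ..., 2^10
C_GRID = [2.0 ** i for i in range(-10, 11)]  # penalty c_1 = c_2: 2^-10, ..., 2^10
NU_GRID = [i / 10 for i in range(1, 10)]     # nu_1 = nu_2: 0.1, ..., 0.9


def parameter_grid():
    """Iterate over every (c, nu, r) combination of the three grids."""
    return product(C_GRID, NU_GRID, R_GRID)


print(len(list(parameter_grid())))
```

The full grid has 21 × 9 × 16 combinations, each of which would be evaluated by cross-validation in a complete search.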

5.2.2 Results comparisons and discussion

We report testing accuracy to evaluate the performance of the classifiers. ‘Accuracy’ denotes the mean of the ten testing results, plus or minus the standard deviation. ‘Time’ denotes the mean time, in seconds, taken by the ten experiments, where the time of each experiment consists of training time and testing time. We also record the optimal parameters of the four algorithms. For a more comprehensive comparison, we run the experiments in both the linear and nonlinear cases on the twenty-two benchmarking datasets and report the results in Tables 1 and 2, respectively. Bold values indicate the best mean accuracy (in %).
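The 'Accuracy' entry can be computed from the ten fold accuracies as sketched below. The text does not state whether the sample or the population standard deviation is reported; this sketch assumes the sample standard deviation, and the function name and the input values are hypothetical.

```python
import statistics


def summarize_accuracy(fold_accuracies):
    """Return (mean, sample standard deviation) of per-fold accuracies in percent."""
    mean = statistics.mean(fold_accuracies)
    std = statistics.stdev(fold_accuracies)  # assumption: sample (n - 1) deviation
    return mean, std


mean, std = summarize_accuracy([80.0, 90.0])
print(f"{mean:.2f} +/- {std:.2f}")
```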

By analyzing our experimental results on the twenty-two benchmarking datasets, we can easily draw the following conclusions.

- In both the linear and nonlinear cases, Iν-TBSVM outperforms the other three algorithms on most datasets in terms of classification accuracy. ν-TBSVM follows, producing better testing accuracy than ν-TSVM and TSVM in most cases. The main reason is that both Iν-TBSVM and ν-TBSVM implement the structural risk minimization principle.

- Iν-TBSVM takes the least computational time on all twenty-two benchmarking datasets in the nonlinear case. Its mean running time over the twenty-two datasets is 2.47 seconds, compared with 3.08, 4.53 and 4.96 seconds for ν-TBSVM, ν-TSVM and TSVM, respectively. The main reason is that Iν-TBSVM does not involve the matrix inverse operation when solving the dual QPPs, which greatly reduces the running time.

- Compared with the other three algorithms, Iν-TBSVM performs better on the Dbworld dataset, which suggests that it is suitable for high-dimensional datasets. When the number of training samples is large, as in the Abalone dataset, Iν-TBSVM still performs best in both testing accuracy and running time. As analyzed above, the other three algorithms spend considerable time on the matrix inverse operation when solving the dual QPPs.

5.3 Friedman test

To further analyze the performance of the four algorithms on the twenty-two datasets statistically, we use the Friedman test [35] with the corresponding post hoc tests, as suggested by Demšar [36] and García et al. [37]. The Friedman test is a simple, nonparametric and safe test for comparing three or more related samples, and it makes no assumptions about the underlying distribution of the data. The average ranks on accuracy of the four linear and nonlinear algorithms over the twenty-two datasets are calculated and listed in Tables 3 and 4, respectively. Under the null hypothesis that all the algorithms are equivalent, one can compute the Friedman statistic [36] according to the following equation,

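$${\chi^{2}_{F}}=\frac{12N}{k(k+1)}\left[\sum\limits_{j}{R_{j}^{2}}-\frac{k(k+1)^{2}}{4}\right], $$(46)

where \(R_{j}=\frac {1}{N}{\sum }_{i}{{r_{i}^{j}}}\), and \({r_{i}^{j}}\) denotes the rank of the j-th of k algorithms on the i-th of N datasets. Because Friedman’s \({\chi ^{2}_{F}}\) is undesirably conservative, Iman and Davenport derived a better statistic

$$F_{F}=\frac{(N-1){\chi^{2}_{F}}}{N(k-1)-{\chi^{2}_{F}}}, $$(47)

which is distributed according to the F-distribution with k − 1 and (k − 1)(N − 1) degrees of freedom.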

We obtain \({\chi ^{2}_{F}}=24.9606\) and \(F_{F}=12.7724\) according to (46) and (47) for the linear case, and \({\chi ^{2}_{F}}=17.0320\) and \(F_{F}=7.3042\) for the nonlinear case, where \(F_{F}\) is distributed according to the F-distribution with (3, 63) degrees of freedom. The critical value of F(3,63) is 2.17 at the significance level α = 0.1, 2.75 at α = 0.05 and 3.33 at α = 0.025. Since both values of \(F_{F}\) are much larger than these critical values, there is a significant difference among the four algorithms. Moreover, Iν-TBSVM attains the lowest average rank in both Tables 3 and 4, which indicates that it outperforms the other three algorithms in both the linear and nonlinear cases.
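The two statistics are easy to reproduce. The sketch below implements the standard Friedman statistic and the corrected F statistic described by Demšar [36], and recomputes \(F_{F}\) from the \({\chi ^{2}_{F}}\) values reported above with N = 22 datasets and k = 4 algorithms. The function names are hypothetical, and the average ranks of Tables 3 and 4 are not reproduced here.

```python
def friedman_chi2(avg_ranks, n_datasets):
    """Friedman statistic computed from the average rank of each algorithm."""
    k = len(avg_ranks)
    sum_sq = sum(r * r for r in avg_ranks)
    return 12.0 * n_datasets / (k * (k + 1)) * (sum_sq - k * (k + 1) ** 2 / 4.0)


def corrected_f(chi2, n_datasets, k):
    """Less conservative F statistic with (k - 1, (k - 1)(N - 1)) degrees of freedom."""
    return (n_datasets - 1) * chi2 / (n_datasets * (k - 1) - chi2)


print(round(corrected_f(24.9606, 22, 4), 4))  # linear case
print(round(corrected_f(17.0320, 22, 4), 4))  # nonlinear case
```

When all algorithms have the same average rank, `friedman_chi2` returns zero, which corresponds to the null hypothesis of equivalent performance.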

6 Conclusion

In this paper, we present a novel algorithm, the improved ν-twin bounded support vector machine. Iν-TBSVM not only maximizes the margin between two parallel hyperplanes by introducing a regularization term into the objective function, but also inherits the merits of the standard SVM. First, the matrix inverse operation is avoided in Iν-TBSVM, whereas it is unavoidable in most existing ν-TSVMs. Second, the kernel trick can be applied directly to Iν-TBSVM in the nonlinear case, which is essential for obtaining a model with higher testing accuracy. Third, we prove that ν-SVM is a special case of Iν-TBSVM; Iν-TBSVM is more flexible than ν-SVM and has better generalization ability. The experimental results indicate that our algorithm performs better than the others. Furthermore, this method can be extended to other settings, such as multi-class classification and semi-supervised learning.

References

Vapnik V (1995) The nature of statistical learning theory. Springer, New York

Ripley BD (1996) Pattern recognition and neural networks. Cambridge University Press, Cambridge

Shawe-Taylor J, Cristianini N (2004) Kernel methods for pattern analysis. Cambridge University Press, Cambridge

Brown MPS, Grundy WN, Lin D, Cristianini N, Sugnet C, Furey TS, Ares JM, Haussler D (2000) Knowledge-based analysis of microarray gene expression data by using support vector machine. Proc Natl Acad Sci USA 97:262–267

Cao XB, Xu YW, Chen D, Qiao H (2009) Associated evolution of a support vector machine-based classifier for pedestrian detection. Inf Sci 179:1070–1077

Ghosh S, Mondal S, Ghosh B (2014) A comparative study of breast cancer detection based on SVM and MLP BPN classifier. In: First international conference on automation, control, energy & systems (ACES-14), pp 87–90

Cortes C, Vapnik V (1995) Support vector networks. Mach Learn 20:273–297

Osuna E, Freund R, Girosi F (1997) Support vector machines: training and applications. Technical Report, MIT Artificial Intelligence Laboratory, Cambridge, MA

Platt J (1998) Sequential minimal optimization: a fast algorithm for training support vector machines. In: Schölkopf et al. (eds.), Technical report MSR-TR-98-14, Microsoft Research, pp 185–208

Schölkopf B, Burges CJC, Smola AJ (eds.) (1999) Advances in kernel methods: support vector learning. MIT Press, Cambridge

Keerthi SS, Shevade SK, Bhattacharyya C, Murthy K (2001) Improvements to Platt's SMO algorithm for SVM classifier design. Neural Comput 13(3):637–649

Schölkopf B, Smola AJ, Bartlett P, Williamson R C (2000) New support vector algorithms. Neural Comput 12(5):1207–1245

Lee Y, Mangasarian OL (2001) SSVM: a smooth support vector machine for classification. Comput Optim Appl 20(1):5–22

Schölkopf B, Bartlett P L, Smola A J, Williamson R (1999) Shrinking the tube: a new support vector regression algorithm. Advances in neural information processing systems, pp 330–336

Suykens JA, Vandewalle J (1999) Least squares support vector machine classifiers. Neural Process Lett 9(3):293–300

Mangasarian OL, Wild EW (2001) Proximal support vector machine classifiers. In: Proceedings KDD-2001: knowledge discovery and data mining. Citeseer

Mangasarian OL, Wild EW (2006) Multisurface proximal support vector machine classification via generalized eigenvalues. IEEE Trans Pattern Anal Mach Intell 28(1):69–74

Jayadeva, Khemchandani R, Chandra S (2007) Twin support vector machines for pattern classification. IEEE Trans Pattern Anal Mach Intell 29(5):905–910

Tian Y, Qi Z (2014) Review on: twin support vector machines. Annals Data Sci 1(2):253–277

Kumar MA, Gopal M (2008) Application of smoothing technique on twin support vector machines. Pattern Recogn Lett 29:1842–1848

Kumar MA, Gopal M (2009) Least squares twin support vector machines for pattern classification. Expert Syst Appl 36:7535–7543

Peng XJ (2010) TSVR: an efficient twin support vector machine for regression. Neural Netw 23(3):365–372

Shao YH, Zhang CH, Wang XB, Deng NY (2011) Improvements on twin support vector machines. IEEE Trans Neural Netw 22:962–968

Peng X, Wang Y, Xu D (2013) Structural twin parametric-margin support vector machine for binary classification. Knowl-Based Syst 49:63–72

Tian Y, Ju X, Qi Z, Shi Y (2014) Improved twin support vector machine. Sci China (Mathematics) 57:417–432

Peng XJ (2010) A ν-twin support vector machine (ν-TSVM) classifier and its geometric algorithms. Inf Sci 180(20):3863–3875

Xu Y, Guo R (2014) An improved ν-twin support vector machine. Appl Intell 41:42–54

Xu Y, Wang L, Zhong P (2012) A rough margin-based ν-twin support vector machine. Neural Comput Appl 21:1307–1317

Xu Y, Yu J, Zhang Y (2014) KNN-based weighted rough ν-twin support vector machine. Knowl-Based Syst 71:303–313

Khemchandani R, Saigal P, Chandra S (2016) Improvements on ν-twin support vector machine. Neural Netw 79:97–107

Duncan WJ (1944) Some devices for the solution of large sets of simultaneous linear equations. The London, Edinburgh and Dublin Philosophical Magazine and Journal of Science, Seventh Series 35(249):660–670

Sherman J, Morrison WJ (1949) Adjustment of an inverse matrix corresponding to changes in the elements of a given column or a given row of the original matrix. Ann Math Stat 20:621

Woodbury M (1950) Inverting modified matrices. Memorandum Report 42. Statistical Research Group Princeton University, Princeton

Lin CJ, Hsu CW, Chang CC (2003) A practical guide to support vector classification. National Taiwan U., www.csie.ntu.edu.tw/cjlin/papers/guide/guide.pdf

Holm S (1979) A simple sequentially rejective multiple test procedure. Scand J Stat 6(2):65–70

Demšar J (2006) Statistical comparisons of classifiers over multiple data sets. J Mach Learn Res 7:1–30

García S, Fernández A, Luengo J, Herrera F (2010) Advanced nonparametric tests for multiple comparisons in the design of experiments in computational intelligence and data mining: experimental analysis of power. Inform Sci 180:2044–2064

Acknowledgements

The authors gratefully acknowledge the helpful comments and suggestions of the reviewers, which have improved the presentation. This work was supported in part by National Natural Science Foundation of China (No.11671010) and Beijing Natural Science Foundation (No.4172035).

Cite this article

Wang, H., Zhou, Z. & Xu, Y. An improved ν-twin bounded support vector machine. Appl Intell 48, 1041–1053 (2018). https://doi.org/10.1007/s10489-017-0984-2

DOI: https://doi.org/10.1007/s10489-017-0984-2