Abstract

Large-scale structural topology optimization has always suffered from prohibitively high computational costs that have till date hindered its widespread use in industrial design. The first and major contributor to this problem is the cost of solving the Finite Element equations during each iteration of the optimization loop. This is compounded by the frequently very fine 3D models needed to accurately simulate mechanical or multi-physical performance. The second issue stems from the requirement to embed the high-fidelity simulation within the iterative design procedure in order to obtain the optimal design. The prohibitive number of calculations needed as a result of both these issues, is often beyond the capacities of existing industrial computers and software. To alleviate these issues, the last decade has opened promising pathways into accelerating the topology optimization procedure for large-scale industrial sized problems, using a variety of techniques, including re-analysis, multi-grid solvers, model reduction, machine learning and high-performance computing, and their combinations. This paper attempts to give a comprehensive review of the research activities in all of these areas, so as to give the engineer both an understanding as well as a critical appreciation for each of these developments.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Structural and material design for enhanced performance at a lighter overall weight has been the quintessential industrial challenge of the 21\(^{st}\) century. This involves a combination of: material selection, volume fraction depending on weight limits, and the “architecture”. This third stage of design, which is essentially designing the topology or material distribution using mathematical optimization, can allow for drastic improvements in performance. The paradigm for this, termed as topology optimization i.e. finding the optimal relative density distribution over a voxel grid for a chosen volume fraction under a prescribed set of external loads and boundary conditions, was first presented in 1989 by Bendsoe [24] and has today greatly matured with wide spread application in structural optimization [216, 218] among other fields. The technique is widely used in industry as well as in academia for myriad applications, such as aerospace, mechanical, and biomedical engineering [44].

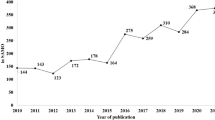

A review of the papers published since 1989 sheds light on its extensive application to structural mechanics, as seen from both books and journals [27, 28, 132, 166,167,168, 178]. [25] first applied it to the optimization of continuum structures, while [187] considered topology optimization with the homogenization method. [60] was the first to consider local stress constraints, [23] studied linearly elastic continuous structures for topology optimization. Ananthasuresh et al, [146] and others worked on compliant mechanism design using topology optimization. The optimal stiffener design of shell/plate structures with the small deformation was studied by [41, 43, 129, 155] carried out studies on linearly elastic structures to maximize eigen frequencies for topology design. [81] worked on topology optimization with pressure loading. [37] and others studied topology optimization with geometric nonlinearities. [26, 28, 154] and it has been successfully applied to find the optimum topology of a linearly elastic structure for its global stiffness and applied it to dynamic problems. [243] used sequential convex programming (SCP) method. [130] considered a frequency response optimization problem for both the optimal layout and the reinforcement of an elastic structure. Some researchers [154] studied the drawbacks in Optimality criteria method. Generally SCP (Sequential convex programming) method is used for frequency problems. [244] studied very large scale optimization by Sequential convex programming. Extensive work on optimum topology of discrete structures such as trusses and grid-type structures was done by [168]. The performance-based optimization (PBO) method was developed by [117] for topology design of continuum structures with stress, displacement and mean compliance constraints. Topology optimization with genetic algorithms was studied with morphological geometric representation scheme by [193, 194, 204, 205].

[47] used topology optimization for minimum-weight structural design under local stress-based constraints for fatigue resistance and a global constraint on compliance. [48] used topology optimization for designing periodic microstructures with stress constraints to prevent high stress concentrations at the microscale. [64] recently presented a strategy using aggregation functions for maximum size-constrained topology optimization.

Significant research was conducted on the topology optimization of multi-scale nonlinear problems by Xia and Breitkopf [66, 215, 215, 218]. The literature shows that it has also been applied to multi-physics problems [139], fluid dynamics [33, 56, 171], heat transfer [221], acoustics [54, 232], electromagnetism [206], and optics [110].

For structural problems, density-based methods are today the most widely used by engineers along with level-set methods [242] (that provide an unambiguous boundary description), topological derivative procedures [6, 147], phase field techniques [219], etc [65]. A good review of key developments in structural topology optimization post 2000 was presented by [50].

For years, topology optimized solutions were seen as impractical and requiring interpretation before fabrication (obviously until additive manufacturing). To directly address the issue of manufacturability, significant research has been carried out, under both the density-based [27] and level set [6, 202] frameworks. Related literature surveys can be found in [112, 125]. Targeting conventional machining and injection molding, the length scale issue [5, 73, 75, 77, 158, 238] no-undercut restriction [220] and feature-driven design [126].

The main impetus for its sudden increase in popularity is very likely the recent advancements in additive manufacturing [95, 240], a rapidly evolving technology that allows for quick and direct fabrication of a complicated optimized topology, which was not the case with conventional manufacturing. With the modern-day mastery of these manufacturing techniques, the topology optimization technique is increasingly being applied in the design of engineered materials for aerospace applications [141].

During the last three decades, several typical topology optimization methods have been developed, such as the homogenization-based approach [26], the solid isotropic material with penalization (SIMP) approach [169, 241], the evolutionary structural optimization approach [226], the level set-based approach [6, 202] and the recently developed moving morphable components/voids (MMC/MMV) approach [76]. The most-commonly used Solid Isotropic Material with Penalization (SIMP) or power-law approach is developed to ensure void(0)-solid(1) solutions for \(\rho _e\) by penalizing intermediate densities [241]. A similar approach can be applied for stress-constrained topology optimization problems to address the singularity problem [113]. [164] studied the effects of using the artificial power law exponent on the solutions obtained in the SIMP approach. The vast majority of commercial TO softwares today such as OptiStruct and Genesis are based on SIMP. While the SIMP is theoretically well-established, issues such as an ill-conditioned stiffness matrix due to low density elements can cause computational problems as well as singularity issues [185]. Alternatives to the SIMP method are the RAMP (Rational Approximation of Material Properties) method [182] and the explicit penalization method. Popular schemes for solving this problem are gradient-based schemes like the Optimality Conditions (OC) method [27], Sequential Convex Programming (SCP) as mentioned previously and the Method of Moving Asymptotes (MMA) [188, 189]. The Evolutionary Structural Optimization (ESO) [160] and Bi-directional Evolutionary Structural Optimization (BESO) [91, 92] are also very successful and must be mentioned in this context.

Topology optimization is surprisingly far from attaining mainstream popularity among structural engineers, despite nearly two decades of research that have been devoted to the subject. Besides obvious challenges such as interpreting topology optimized solutions using CAD/CAE software, this is mainly due to the frequently prohibitive computational cost associated with these procedures.

There are two main problems hindering large-scale topology optimization:

-

1.

It involves repeated expensive high-fidelity solutions of a physical model (e.g. the FE equilibrium equation in structural mechanics), whose size is directly related to the grid resolution.

-

2.

Prohibitively high memory requirement, which is indirectly related to the grid resolution.

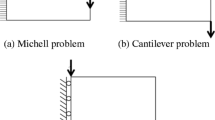

Consider the simplest structural design problem where the reference state equation is modeled using linear elasticity, and information about the material distribution is incorporated through an auxiliary function: the classical (self-adjoint) compliance minimization problem:

where \(\varvec{\rho }\) is a vector containing the values of all element design variables (that is, the element densities \(\rho _e\)), \(\varvec{u}\) is the displacement vector, \(\varvec{F}\) is the force load vector, c is the objective function, i.e. compliance in this case, \(\varvec{K(\rho )}\) (i.e. \(\varvec{K}\) hereafter) is the global stiffness matrix, \(v_e\) is the element volume and N is the number of FE elements, \(v_{frac}\) and V are the volume fraction and volume of design domain respectively.

In the simplest case, SIMP is used to relate the elemental relative density variable \(\rho _e\) with the interpolated/penalized (elemental) material property \(E_e\) by:

where \(p \in (1,\infty )\) is the penalization parameter, \(E_{max} \in {\mathcal {R}}\) denotes the material property (for e.g. Young’s modulus) of solid material, \(E_e \in {\mathcal {R}}\) denotes the penalized property, \(E_{min}\) is a very small positive number to avoid problems related to vanishing stiffness, usually taken as 0.001.

Regardless of the optimization algorithm, the computational bottleneck is generally the solution of the large scale linear system (i.e. the equilibrium equation) during each optimization iteration [11, 44, 70, 98, 223]

despite having a sparse symmetric positive definite stiffness matrix \(\varvec{K}\) [1, 9, 50]. Since \(\varvec{K}\) is positive definite, the FE problem may be solved using a direct solver, such as Cholesky factorization.

The performance and therefore feasibility of a topology optimization procedure could thus be affected by the number of degrees of freedom, complexity/non-linearity of the material model, the performance of the FE solver (for structural problems), type of sensitivity analysis depending on self-adjointness, and whether or not uncertainties need to be taken into account, etc. In the field of structural design, the key challenge has always been dealing with high-dimensional problems [1] such as those encountered in additive manufacturing, i.e. the number of degrees of freedom(n) required by 3D printing resolution (for example). Grid resolution is of critical importance as [2, 177] explained: using coarse grid resolution could cause an artificial length-scale limitation and limit the solution space of the optimization problem. By using a sufficiently high-resolution FE mesh, we may obtain finer structures with additional details and improved performance [2]. Therefore, the ability to handle high-resolution topology optimization formulation is (in many cases) non-negotiable, despite the computational cost entailed.

In the classical nested approach, the Finite Element (FE) equilibrium equation (3) needs to be solved during each iteration of the optimization routine. Even assuming the simplest linear elastic (Hookean) material under small displacements, a single linear system has to be solved at each iteration to determine the nodal displacements U to evaluate the objective function (equation (1)). For the density-based approach using a classical optimality criteria design update, this cost can already be around 90\(\%\) of the total computational cost for a benchmark problem with 100,000 design variables using a direct solver [44], and this further scales up with the size of the problem, not to mention as additional physics gets integrated into the structural performance. Iterative solvers, mostly preconditioned Krylov subspace solvers, such as Conjugate Gradients (CG) [11, 13], MINRES or GMRES, have a major computational advantage since they do not need high accuracy (i.e. low tolerances) during the early/intermediate stages of the optimization routine. However, in the later stages of the optimization routine, linear solvers tend to become ill-conditioned and an ordinary iterative method can quickly run into difficulties [185].

Another way to reduce FEA costs is the adaptive p-refinement [18], where the mesh topology stays the same but higher order shape functions are used. p-refinement confers robustness against locking and high aspect ratios, gives an exponential rate of convergence and is generally superior to h-refinement for the same computational cost. However, due to the fact that the conventional TO approaches typically assume a constant relative density distribution within the element, the efficacy of these methods is questionable [78].

To speed up the convergence of the procedure, second-order techniques have been used including variants of the MMA [36, 189], sequential quadratic programming (SQP) [15, 165], meshless methods [53] and interior point algorithms [44, 88, 131]. However, these techniques are usually difficult to implement and don’t scale up well with problem size, compared with the classical first-order methods. To resolve the scalability issue, [116] presented a fixed-point iteration with periodic Anderson extrapolations.

[185] proposed a PareTO approach based on topological sensitivity [61, 148], with several reported advantages over SIMP including faster convergence of solvers due to better conditioned matrices (by not relying on relative densities), fewer optimization iterations and multiple Pareto optimal topologies for a given volume fraction. The last few decades have seen different approaches that have been tested for alleviating the computational cost, using one of the following strategies:

-

1.

Allowing approximate linear solutions

-

2.

Reducing the number of iterations in Krylov subspace methods.

-

3.

Increasing computational efficiency by leveraging existing capacity

which may be broadly classified under: multi-grid (MG) methods, reanalysis (using inexact solutions computed using classical methods), reanalysis with state-of-the-art reduced order models based on machine learning (ROM), high-performance computing (HPC) approaches [2, 133] and much more recently Iso-Geometric Analysis (IGA) [52, 67, 123, 200]. It goes without saying, a combination of two or more of these approaches tends to yield superior improvements compared to using each one on by itself. The goal of this paper is to comprehensively and critically discuss the latest developments in each of these branches of research, as well as shed some light on emerging topics (such as Neural networks and Deep Learning [170]) highlighting advantages and potential limitations, in a bid to help the structural engineer make appropriate choices when attempting to use topology optimization in industrial-sized problems.

This paper is organized in the following manner: Sect. 2 deals with high-performance computing (HPC) approaches, Sect. 3 with approximate reanalysis using ROM, Sect. 4 with multi-grid (MG) methods, Sect. 5 with IGA and Sect. 6 with emerging approaches like Deep Learning and Neural Networks. The paper ends with concluding comments including recommendations and proposed areas for future work.

2 High Performance Computing

Obtaining high resolution solutions to accurately predict the physical shape and simulate properties is a challenging task using personal desktop machines, since the memory and computational capacity needed would be above and beyond what is generally available [214]. From the point of view of applications such as 3D printing, manufacturing-specific constraints are required to ensure printability of the final solutions [141]. Iterative computing is always used to find the optimized design by topology optimization, which will lead to expensive computational cost as has already been seen, particularly for 3D large-scale problems or problems requiring high-resolution. Using parallel computing [186] to circumvent this problem is the second oldest strategy for accelerating the optimization procedure, and probably has the maximum potential both by itself, as well as in conjunction with the other strategies described in this paper.

Despite the vast increase in computational power of microprocessors today, the capacity to sustain the dramatic increase in processing speed (from Moore’s law) shifts the focus from increasing the speed of a single processing unit to increasing parallelism (width) by switching to multiple cores or parallel architectures [172]. This is because there is a gap between the processing power and the ability of the memory to transmit data at the same rate.

Amdahl’s law:

states that increasing the number of processors for a problem of fixed size will increase the algorithmic speed up (SPA) asymptotically up to a theoretical limit \(1/(1-R)\), where S is the time spent in the sequential portion of the code, P is the time spent in the parallel portion, N is the number of processors and R is the ratio of the time spent in the parallel portion to the total execution time. Therefore rather than powerful parallel (often heterogenous) systems alone, the need is for numerical algorithms which are able to achieve adequate scalability with such systems [172]. Distributed computing power has today reached an almost unimaginable number of 2.3 exaFLOPS (floating point operations per second) with Peta-FLOP (10\(^{15}\) FLOP) computers being more commonly used in very large research organizations. With the decrease in cost of computer hardware, almost every research group today has access to parallel computing systems.

The key element to successfully use HPC in FE-based topology optimization is a combination of physical partitioning of the design domain among processors or nodes, or domain decomposition [29], an iterative solver such as PCG or MG, and parallel algorithms for the matrix operations using Message Passing Interface (MPI) [149], Open Multi-Processing (OpenMP) [150], etc. Domain decomposition is a classical technique for solving PDEs by decomposing the spatial domain of the problem into multiple subdomains, in order to allow solvers that can be parallelized on coarse-grain computing systems [40]. It could be either implicit or explicit. [63] also demonstrated a material-based decomposition by decomposing the solid and void portions of the domain separately and then merged the two FE meshes together. Next, parallel algorithms attempt to manage the communication between different processors, through shared memory (e.g. OpenMP) or message passing (e.g. MPI). Shared memory processing entails an overhead of additional processor and bus cycles, while message passing adds transfer overhead on the bus, and additional memory need for queues, message boxes and message latency. Multiple instruction multiple data (MIMD) is the most important approach where parallelism is achieved using a number of processors functioning asynchronously and independently of each other. A popular subset of this, called the Single Program Multiple Data (SPMD) paradigm, allows all processors involved in the program to co-operatively execute the same main program, but at any given moment different processors may be running different instruction-streams or different sections of the same program and/or act on different data sets. These processors self-schedule in dynamic fashion according to the program and through synchronization built in the program [49].

In what is likely the earliest work about parallel topology optimization, [32] used a Cray T3E supercomputer and presented a CPU parallel strategy for 3D large-scale topology optimization based on the regularized intermediate density control method along with domain decomposition, and the MMA algorithm, to get a high-quality resolution of realistic designs in 3D. The equilibrium equations were solved by a preconditioned conjugate gradient algorithm. However, their convergence rate was on the lower side and the iterative optimization procedure was not very stable. Subsequently, [101] used parallel topology optimization for large-scale eigenvalue-related structural design. [199] presented a parallel algorithm based the SIMP and the OC criterion for 2D structural topology optimization. [133] suggested the combination of parallel computing environment and domain decomposition aiming to reduce the computational cost of the optimality criteria method, adopting the master-slave programming paradigm in combination with multiple instruction multiple data (MIMD) shared memory architecture, and the Jacobi preconditioned Conjugate Gradient to solve the equilibrium equation (Algorithm 1).

Here \(\varvec{P}\) is the search direction vector and \(r_2\) is the residual, for the PCG algorithm. In typical Master-Slave fashion, every slave processor handled every single element within its own (p) subdomain separately and assembly of global matrices was avoided. However, they applied it only to 2D problems with a maximum size of only 2.56 \(\times\) 10\(^4\) dofs. An OpenMP implementation would look similar to Algorithm 2.

[62] considered efficient parallel computing assisted topology optimization for fluid mechanics problems. [45] used parallel programming with the IBM Cluster 1350 in a two-scale optimization problem in order to compute simultaneously the optimal material and structure. [63] used Finite Element Tearing and Interconnect (FETI) with a primal dual solver.

A key point was discussed in detail by [63], and that is the important distinction between numerical scalability and parallel scalability. Parallel scalability is the ability of the parallel implementation of an algorithm, i.e. on a given parallel system to demonstrate a speedup that increases with the number of working processors, for a fixed problem size, and hides the true amount of inter-processor communication overhead. Given that the objective of using parallel computing for topology optimization is to also enable the solution of larger-scale (3D) problems rather than simply solving problems of a fixed size faster, numerical scalability of the algorithm is equally important, i.e. the computational complexity of an algorithm needs to grow (ideally linearly) with the size of the problem. This is intimately related to the increase in iterations (for convergence) with the problem size for the same error criteria. Therefore, both parallel as well as numerical stability need to be addressed by any developments in this sub-field.

Owing to their high computing capacity, which in turn is due to their high bandwidth (that hides the latency under thread parallelism), an overwhelmingly popular approach for accelerating topology optimization is using Graphics Processing Units or General Processing Units (GPU) computing. GPUs are a class of multi-core processors with a faster and smaller set of instructions, and capable of handling a large number of concurrent threads [236]. These are specially designed for rapidly manipulating computer memory, and are today widespread in embedded systems, cellphones, PCs, and workstations. Their highly parallel structure makes them significantly more efficient than ordinary CPUs for processing large blocks of data in parallel. The first use of GPUs in topology optimization was by [201] in 2009. This work used a Preconditioned Conjugate Gradient (PCG) solver on a GPU for heat conduction based topology optimization. However, convergence of the solver was affected by round off errors arising from the early GPUs not supporting double-precision. [172] then wrote a nodal-wise assembly-free implementation of SIMP topology optimization using a commodity graphics card showing equivalent performance to that obtained with a 48 core shared memory system. The CG algorithm was modified to avoid accumulating numerical errors due to the limitations of single precision at the time. Speed ups of 10 to 60 were reported on a GeForce GT X280 with 1GB memory. [184] also used a node-wise strategy implemented on both a CPU as well as GPU. The 15 million degrees of freedom tip-cantilever problem required 2 hrs on a 1.5 GB memory GPU, while a 92 million degrees of freedom version of the same problem required close to 12 days on a 6 GB memory CPU, both implementations being limited by available memory. [39] presented a graphics processing unit (GPU) implementation of the level set method and demonstrated the efficiency of this implementation by solving the inverse homogenization problem for designing isotropic materials with maximized bulk modulus.

[236] was the first to combine unstructured meshes and GPU computing. To prevent a race condition, a fast greedy coloring algorithm had to be used before the topology optimization procedure. As expected, the performance, while good, was not comparable to that obtainable using a structured mesh. However, unstructured meshes allow the definition of complex design domains, loadings and/or constraints. [185] executed his non-SIMP PareTO algorithm on both quad-core CPUs with 6 GB memory (parallelism through OpenMP) as well as 480-core GPU, with the GPU implementation for 15 million dof problem being over 19 times faster than the CPU implementation. [66] extended the current concepts of topology optimization to the design of structures made of nonlinear micro-heterogeneous materials considering a two scale approach; in order to regain the computational feasibility of the computational scale transition, a modern model reduction technique was employed: the potential-based reduced basis model order reduction with GPU acceleration. [162] addressed two key difficulties when solving discrete structural topology optimization problems using evolutionary algorithms, i.e. to generate geometrically feasible structures and handling a high computation time. These difficulties were addressed by adopting triangular representation for two-dimensional continuum structures, correlated crossover and mutation operators, and by performing computations in parallel on GPU. In [136, 137] the authors alleviated the computational constraints of the robust topology optimization of continuum structures and those of evolutionary topology optimization problems and obtained good speed-ups using a GPU computing based strategy based on the SIMP and the optimality criterion (OC) updating scheme [138] (Algorithm 3).

The literature reveals many computational strategies to address the problem of scalability. [2, 3] proposed a fully Message-Passing-Interface (MPI) based parallel topology optimization framework by using the SIMP approach and the method of moving asymptotes (MMA) [188]. [1] then designed a full-scale aircraft wing with more than one billion 3D elements by using parallel computation on a cluster with 8000 processors based on the SIMP approach. An OpenMP based parallel technique was employed by [153] for structural topology optimization of minimum weight formulation with stress constraints. [57] presented a framework for CPU and GPU parallel structural topology optimization using the SIMP approach based on polygonal elements, considering 12 million 3D elements.

[222] proposed a parallel computation formulation based on GPUs by using the parameterized level-set method and IGA applied to 2D problems. Most recently, [122] presented a fully parallel parameterized level set method to realize large-scale or high-resolution structural topology optimization design. In their work, the entire optimization process is parallelized, consisting of mesh generation, sensitivity analysis, assembly of the element stiffness matrices, solving of equilibrium equations, parameterization and updating of the level set function, as well as the output of the computational results.

Most recently [180] presented their Topology Optimization module, based on SIMP and OC, of their High-Performance Optimization Computing Platform (HP-OCP). This module considered a variety of performance objectives including compliance both with and without structural constraints, with all libraries programmed in C#. The module was coupled with the commercial structural software SAP2000. However, there was little discussion on the parallelization and message passing schemes involved.

While the rush to incorporate parallel computing, especially with GPUs in this field is understandable, we must note that proper implementation of topology optimization requires adequate technology as well as proper formulation to take full advantage of its potential at accelerating computing, which is far from straightforward. Every strategy has its limitations as well as advantages, and the cost–benefit ratio must be weighed carefully before attempting a one-size-fits-all approach.

GPUs have certain shortcomings: although GPUs are faster than CPUs, the time needed for transferring large amounts of data from the CPU to the GPU could lead to a significantly higher overhead. Also, while they have several cores, GPU cores tend to run slower than CPU cores. From the point of view of managing inter-processor communication, the OpenMP strategy is used in shared memory architecture computers such as multi-core processors, which will limit the scale of calculation due to the limitation of computer memory. When the number of threads increases shared memory architecture performs worse than than distributed memory clusters, and also have lower scalability than MPI clusters. MPI has the obvious disadvantage of greater communication due to the message passing needed (since nothing is shared). OpenMP allows seamless transition between sequential and parallel operations following the fork-join model [153]. CUDA (Compute Unified Device Architecture)-aware MPI [17] is a potential solution for combining the advantages of MPI and GPUs, when the data size (or single node computation) is too large to fit into the memory/capability of a single GPU.

From the point of view of scalability, besides using an iterative solver for the equilibrium equations, it is better to use a separable convex approximation of the objective function, like in the MMA algorithm [188, 189], for large-scale optimization on a cluster. This is due to both its lower memory requirements and ease of parallelization [63].

3 Approximate Re-analysis and Reduced-order Modeling

As we have discussed in the main introduction, the goal of accelerating large-scale topology optimization is alleviating overall computational cost without sacrificing problem resolution [2, 177]. In this context, approximate reanalysis is the oldest approach in design optimization, where we efficiently and rapidly analyze the structure after any change in its design, i.e. topology (in the context of this paper). The holy grail of reanalysis in mechanics and design is to (approximately) determine the structural response (displacements, forces, stresses, strains, etc) after any such change, using the initial response of the structure i.e. without solving the high-fidelity model equations. In reality, the high-fidelity model need to be occasionally resolved over the course of the optimization, but only over a few iterations. The first major paper on reanalysis in the context of topology optimization was inarguably the seminal work of Kirsch and Papalambros [109] in 2001, using the Combined Approximations (CA) method [103, 105, 106, 108, 174]. This paper has spawned several different contributions by various authors over the last two decades, using a variety of strategies.

Approximate reanalysis hinges on reusing the Cholesky factorization for a series of consecutive iterations (for a direct solver) usually based on a preset error criterion; and Krylov subspace methods [9, 203] for Iterative solvers. Some key references in this regard are [10, 12, 90, 124, 245]. Krylov subspace solvers have low memory requirement and have good scalability for parallel computing [13], but they need a large number of iterations to converge. Their performance (when using the popular PCG) depends on the quality of the pre-conditioner used, such as Jacobi, incomplete Cholesky factorization, multi-grid etc. Krylov subspace recycling is another very useful numerical technique used to improve convergence in iterative solvers by reusing the data generated during previously-performed exact solves. To our best knowledge, the most influential work in this context is that of [203] who used an iterative MINRES (minimum residual) method for efficient Krylov subspace recycling and applied it to compliance minimization. [7] used multi-grid pre-conditioners generated at selected iterations and subsequently reused, along with Krylov subspace recycling. [38] proposed a three-stage hybrid Proper Orthogonal Decomposition (POD)-based procedure to speed up the solution: direct solution over the first few POD basis vectors (obtained from the Krylov vectors), iterative solution over the full augmenting space using the augmented CG algorithm, and iterative solution over the full space using the augmented PCG.

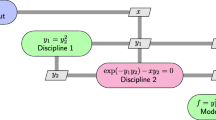

Reanalysis can thus predict the current topology using the solutions of previous iterations. The central theme in successfully using inexact solutions within the optimization procedure, is the paradigm of construction and updating/enrichment of a reduced basis \(\varvec{\varPhi }\) during the course of the procedure, e.g. Galerkin projection [19]. This basis \(\varvec{\varPhi } = [\phi _1 \cdots \phi _M]\) is obtained using an effective set of M solution vectors (displacement field vectors) \([U_1 \cdots U_M]\). \(\varvec{\varPhi }\) is then used to approximate U as follows:

The equilibrium equation (3) is projected onto \(\varvec{\varPhi }\) (which is usually of much lower dimension) yielding a reduced system:

where

The approximate solution of the full-scale system may then be recovered by a linear combination of the basis vectors.

A good ROM (i.e. basis \(\varvec{\varPhi }\)) can approximate the exact solution of (3), at a drastically reduced computational cost. Previous and ongoing developments in statistical and physics-based reduced-order modeling ensure that this branch of research gets special attention. These have gained significant interest in recent years in various domains, be it in analysis [14, 99, 111] or optimization [46, 58, 59, 74, 161] with significant contributions to nonlinear structural topology optimization [66, 217] and material characterization [140, 218].

There are several ways of obtaining \(\varvec{\varPhi }\) within the subspace spanned by M previously computed solutions [44], for example:

-

1.

a simple collection of M solution vectors \([U_1 \cdots U_M]\)

-

2.

an ortho-normalized basis, e.g., QR decomposition

-

3.

Principal Components Analysis (PCA) or POD

Several approaches of this kind have been proposed. The Combined Approximations (CA) method has been a fixture in the early literature, right since [109] where the reduced basis vectors were obtained from the binomial series expansion, with higher order terms incorporated depending on accuracy needed. The key advantage of CA is combining both local approximations as well as global approximations [109], and it has been applied to linear reanalysis as well as eigenvalue problems [107]. [9] also used reanalysis with the CA method, presented the notion of consistent sensitivities (for reanalysis) and applied his approach to 2D and 3D compliance minimization problems, reporting a speed up of 3 to 5 for 3D problems. [31] applied the CA to topology optimization for repeated eigenvalue problem analysis. For eigenvalue problems, CA is reportedly useful only for obtaining lower mode shapes accurately, therefore [239] applied reanalysis using a modified version of CA for eigenvalue problems, the Block Combined Approximations with Shifting (BCAS) method for repeated solutions of the eigenvalue problem in the mode acceleration method. [183] also used the BCAS and proposed an indicator for controling the reanalysis. The CA was also used for reanalysis by [12] for robust topology optimization (i.e. manufacturing uncertainty tolerant designs) and applied to compliant mechanism design. [83] used reanalysis in topology optimization for dynamic problems using multiple dynamic condensation model by combining mass orthogonalization and Rayleigh–Ritz analysis. [230, 231] used a variety of techniques, such as mode superposition, Ritz and quasi-static Ritz vectors for topology optimization problems involving frequency response.

[230] used eigenmodes and Ritz vectors for a reduced basis to approximate the vibration response in topology optimization.

In 1999, [104] had proposed Gram–Schmidt orthonormalization to generate \(\varvec{\varPhi }\). [70, 71] extended the approach of [109] and used Gram–Schmidt orthonormalization for the on the fly construction of \(\varvec{\varPhi }\) based on the violation of an error residual \(\varepsilon _{rb}\), against a user-specified tolerance (\(\varepsilon _{Tol}\)).

He also added a term to “correct” the sensitivities when using the approximate solution, similar to [9]. Despite their accuracy and efficiency, which was demonstrated for a variety of 2D and 3D compliance minimization problems problems, two drawbacks appeared to remain:

-

1.

The limiting effect of the numerical instability of successive Gram–Schmidt orthogonalizations in high-dimensional spaces.

-

2.

The prohibitive high cost of the “updating” phase of the reduced basis, requiring a large number of full-field solutions of the linear system.

Surprisingly, none of the previously mentioned ROMs considered a reduced basis obtained by Principal Components Analysis (PCA), which is known to provide a mathematically optimal basis for a given set of data.

Xia, Breitkopf and others [216, 218] were the first to bring PCA into topology optimization. They used a non-intrusive ROM to alleviate the cost of repeated RVE (Representative Volume Element) computations in the FE\(^2\) analysis during multi-scale level-set topological optimization for minimum compliance. \(\varvec{\varPhi }\) extracted using PCA and the ROM was built using Diffuse Approximation [34, 142], in an on-line manner: built during the first iteration and updated in the following iterations.

[44] performed reanalysis by combining a Singular Value Decomposition (SVD)-based ROM (at the start of the topology optimization procedure) and Krylov subspace methods with ROM-recycling (towards the end). [8] resolved the second limitation by exploiting specific characteristics of a multi-grid preconditioned conjugate gradients (MGCG) solver. However, they obtained the sequential solution by solving the linear system. [174] combined approximate reanalysis technique (based on CA) with Sequential Piecewise Linear Programming (SPLP) [72], due to the method’s demonstrated superiority over Sequential Linear Programming (SLP) as well as Convex Separable Approximations (CSA) [189], for use in problems involving geometric nonlinearities.

[223] extended the approach of [70, 109] to topology optimization (Algorithm 4) and with basis construction using Principal Components Analysis and mono-fidelity data. [224] then combined their previous work with multi-grid methods, using successive resolutions of variable-fidelity solutions of successive approximations to the equilibrium equation (thus at a lower cost), all within the optimization algorithm. The main challenge in the PCA-based approach is the relatively substantial number of high-fidelity solutions needed leading to a potentially high computational cost, with the addition of the multi-grid (MG) solver a moderate enhancement to the previous work. For this, criteria and transition schemes between the low and high-fidelity models were established. However, memory-efficient schemes are still needed to perform the SVD incrementally. Also using an adaptive stopping criterion would have conceivably improved the performance further.

A key area of future work here would be using either incremental SVD (or even randomized SVD [20]) in place of simply adding or subtracting snapshots while generating the basis \(\varvec{\varPhi }\) (Algorithm 5 above [156]). Incremental SVD goes further than Algorithm 4 by using adaptive snapshot selection, on-the-fly snapshot selection and on-the-fly truncation. Adaptive snapshot selection can identify the optimization iterations at which the equilibrium equation must be solved. On-the-fly snapshot selection can pre-evaluate the potential contribution of a displacement vector snapshot before modifying \(\varvec{\varPhi }\), while on-the-fly truncation can limit the size of \(\varvec{\varPhi }\) during adaptations. In Algorithm 2, \(\epsilon _{trunc}\) is the threshold on singular value truncation error, \(\epsilon _{orth}\) is a threshold on \(\varvec{\varPhi (:,1)^T \varPhi (:,end)}\), \(\epsilon _{in}\) and \(\epsilon _{out}\) are variables used to track the loss of information due to truncation during on-the-fly basis updation.

4 Multi-grid Methods

The challenge of reducing computational effort in topology optimization has been approached from different angles, such as Approximate Reanalysis (previous section). Multi-grid methods (MG) where use multiple computational scales i.e. resolutions, and bypass the computational cost associated with performing all solutions of the equilibrium equation (3) (i.e. all iterations) on a high resolution mesh. These are state-of-the-art recursive and iterative numerical methods for the large-scale linear systems arising in partial differential equations stemming from various physical problems [35, 79, 134, 198], that have proved to be the most effective techniques today from the points of view of accuracy and efficiency [8].

There are two main types of MG methods [176], geometric multigrid (GMG) and algebraic multigrid (AMG) methods, for solving linear systems. AMG methods are in turn of two different types: CF (Coarse-Fine) AMGs and SA (Smooth Aggregation) AMGs. MG methods use Jacobi, Gauss-Seidel, or successive over/under relaxation methods to smooth out high-frequency errors, and accelerate convergence by distributing the residual and correction vectors across different resolutions/levels through prolongation and restriction operators [128]. As a result, The MG solver’s computational workload increases linearly with the number of DOF, and the speed of convergence is independent of the grid resolution as a result of three important steps: pre-smoothing, coarse-grid correction and post-smoothing.

In order to better reflect on the applications of MG methods in topology optimization, i.e. gaining rapidly a large number of high-fidelity solutions at a relative low computational cost for updating the basis using a MG solver, several crucial steps of the MG method must be pre-defined:

-

1.

Discretization scheme This step requires that the discrete form of the differential equation must be numerically stable so that the relaxation method can effectively smooth out the high-frequency error, in other words, the FE equilibrium equation (3) must provide a numerical-stable iterative solution. Fortunately, equation (3) is itself a stable discrete form of the corresponding differential equations.

-

2.

Relaxation method The general principle is that the residual is supposed to become fully smooth before it is projected (restricted) from a fine grid on to a coarse grid. The relaxation method can use either Gauss–Seidel iterations, weighted Jacobi iterations, CG etc, with a small number of iterations (2–3 are usually enough) on each mesh level. This can rapidly eliminate the high-frequency error and then the rest of the low-frequency error will dominate and couple with the high-frequency error, meaning that too many relaxations are unnecessary. It is also important to note that the choice of the particular relaxation method needs to be sufficiently robust in order to avoid high-frequency oscillations during the iteration.

-

3.

Restriction and Prolongation The smoothed residual is transferred from the finest grid to the coarsest grid step-by-step using a series of restriction operators corresponding to each individual mesh level. Once we reach the coarsest mesh, the residual equations can be directly solved, and then (like during the restriction phase), the corresponding correction is transferred from the coarsest grid to the finest grid step-by-step through a series of prolongation operators corresponding to each level. Usually, restriction operator and prolongation operator on same grid are transposed with each other and their constructions could refer to literature [224]. In short, restriction transfers the finer-grid residual to a coarser-gird and prolongation transfers each coarser-grid correction to the finer-grid.

-

4.

Mesh coarsening Proceeding from the modularity and portability of the program design, standard mesh coarsening strategy is generally used (step size is doubled along all directions). Especially for some FEM-based topology optimization problems, standard rectangular elements are naturally convenient for mesh coarsening, which in some way can be viewed as GMG, based on a true geometric grid background. With the coarsening of the mesh, each level of restriction and prolongation matrices are constructed and stored in advance. For the coefficient matrices at each layer of coarse grid, a typical symmetric positive definite operator is the Galerkin projection approximation. The global stiffness matrix in equation (3) is exactly symmetric positive definite, and thus the Galerkin approximation maintain the property of symmetric positive definiteness at each grid level.

-

5.

Nested iteration Generally, “V” or “W” cycles are used. “W” cycles are more robust but relatively expensive. When the number of grid layer is small, “V” cycles can replace “W” cycle, with small computation and the same stability. In addition, there are “S cycles” [42], “F cycles” [135], etc., whose performances lie in between.

These are the basic steps of the MG method, among them, fine-grid relaxation, coarse-grid correction and nested iteration form the backbone of MG. Fine-grid relaxation takes care of eliminating high-frequency errors, coarse-grid correction eliminates low-frequency smoothed errors, while nested iterations connecting all levels by using restriction operators and prolongation operators to solve the same problem. The basic idea of the MG method can be interpreted as simply redistributing the same problem on a series of grids of different sizes (multiple-resolutions).

Assume total m levels of grid \(\varOmega _1 \supset \varOmega _2 \supset \cdots \supset \varOmega _m\): A typical “V” cycle MG iteration of recursive form is given in Algorithm 6:

In ROM-based topology optimization, criteria and transition schemes between the low and high-fidelity POD snapshots information has then been established by using multiple grid resolutions of to break down the size of the problem.

The literature reveals the popularity of MG methods in topology optimization. [102] was the first to officially propose multi-resolution multi-scale topology optimization (MTOP) using wavelets to parameterize \(\varvec{\rho }\) (similar to the regularization technique in the MOLE method [157]) and progressive refinement of the grid during the optimization. [55] firstly presented a partially reduced SQP approach which uses multigrid methods for the solution of the linearized model equation, which is applied well in the shape optimization of turbine blades. Secondly, [55] and [131] both gave a new nonlinear interior point strategy for the treatment of the arising inequality conditions profitably coupled with a so-called simultaneous multigrid strategy for the solution of the quadratic subproblems in a SQP-algorithm, which makes for the equilibrium equation in elastic structure topology optimization is simultaneously solved only once together with the overall optimization problem leading to a significant reduction of computational complexity. [100] then extended their previous work to adaptive wavelet-Galerkin analysis for multi-scale topology optimization. [181] proposed a multi-resolution approach where the optimization was first performed on a coarse (low resolution) grid, followed by adaptive refinement along the solid-void interface. The goal was to obtain a high-resolution solution at relatively low computational cost. The issue with this was that the solution space for the design problem was probably artificially limited by using the coarse grid at the beginning. [213] describes topology optimization of electromagnetic systems using the multigrid method which works effectively as a fast linear solver. [235] proposed a methodology based on multigrid scheme used to accelerate the cellular automata design algorithm by coupling the iterations on the finest grid with the iterations of the correction solution on the coarse grids, which is demonstrated to be a powerful tool for solving topology optimization problems compared to other algorithms based on finite element analysis. [143, 144] proposed another multi-resolution topology optimization scheme using different length scales for the density distribution \(\varvec{\rho }\) the displacements U.

Very recently [118] demonstrated a triple acceleration method using a combination of a multilevel mesh, an initial-value-based PCG, and local-updating. Most recently [209] proposed a new high-efficiency iso-geometric topology optimization (HITO), where the powerful multigrid conjugate gradient method (MGCG) [8] was used for accelerating solving the large-scale linear system (i.e. equilibrium equation), resulting in a significant improvement in computational efficiency.

While MG clearly accelerates the solution of the large-scale linear system arising in structural topology optimization and significantly reduce computational costs, very few researchers appear to have investigated the effect of coupling a ROM with MG, which should conceivably improve the performance further. This was studied by [224] who coupled their previous “on-the-fly” POD based AR [224] with MG and demonstrated the performance improvements on compliance minimization as well as compliant mechanism design. The improvements were admittedly modest in comparison to a vanilla MG, but this could have been significantly better if they had used an adaptive stopping criterion as well.

5 Iso-geometric Topology Optimization

In most engineering simulations, pre-processing or geometric modeling takes up significant computational effort and time, especially for complex shapes. To this end, most of the commercial numerical simulation engineering softwares perform a sequential procedure of computer aided design (CAD) followed by computer aided engineering (CAE). In general, the creation of CAD models takes up the majority of the time and effort in the overall simulation. To meet the need of today’s complex engineering designs, an integrated CAD–CAE can help cope with the complex and simulation- specific geometry creation and automated mesh generation so as to improve the scalability of products in industry. Iso-Geometric analysis (IGA) was introduced by Hughes and his research group in early 2000 [93, 200] in order to overcome the shortcomings of the popular CAE methods, in particular FEA, and to integrate CAD–CAE for better numerical precision.

The following problems typically encountered by FEA were addressed by IGA:

-

1.

The finite element mesh is unable to capture the exact structural geometry, which will considerably lower the numerical precision

-

2.

Lower-order continuity between the neighboring finite elements, even with the higher-order elements.

The main idea behind IGA is creating basis functions that can concurrently form the complex structural geometry (boundaries and edges), and also obtain the problem solution of primary and secondary unknowns in a finite dimensional discretized space. IGA uses several basis functions, for example, B-splines [51], Non-Uniform Rational B-Splines (NURBS) [195], and recently developed functions like T-splines [22].

In IGA, we consider the whole domain to be sub-divided into multiple patches, on which basis functions like NURBS are defined. For an analogy with standard FEA, a patch may be considered as an element where the shape functions for the primary unknowns are defined. The global characteristic matrix, for e.g. the stiffness matrix for elastic deformable bodies may be evaluated by adopting a similar assembly strategy as in FEA:

Let us consider knot vectors \(\varvec{\xi } =\{\xi _0, \xi _1,...\xi _i,...\xi _{n+p+1}\)} and \(\varvec{\eta } =\{\eta _0, \eta _1,...\eta _j,...\eta _{m+q+1}\)}, a sequence of non-decreasing real numbers used to define the parametric coordinate space in 2D [200]. Here, \(\xi _i\) and \(\eta _j\) represent the ith and jth knot; p and q are the polynomial order, n and m are the number of basis functions used to construct a patch in isoparametric coordinates. The knot vectors are used to define the basis functions for creating geometry and evaluating unknowns.

Formulating the NURBS function for a typical 2D patch, the approximated primary unknown, i.e. the displacement vector \({{\mathbf{u }}}^p\) of the patch can be written as [51, 200]:

where \(\mathbf{u }_{i,j}\) are the displacement functions defined at (\(n+1) \times (m+1)\) control points for the patch. \(B_{i,j}(\xi ,\eta )\) are the 2D NURBS basis function defined to both create the geometry as well as evaluate the displacement field in parametric coordinates \((\xi ,\eta )\) as:

where \({N}_{i}^p(\xi )\) and \(N_{j}^q(\eta )\) are the normalized B-spline basis functions of polynomial degree p and q, respectively, and \(w_{i,j}\) are the weight factors associated with the normalized functions. NURBS basis are derived from the standard B-splines by using these weights for more numerical precision. The ith B-spline basis function of polynomial degree p, is defined for \(p = 0\) as

and for \(p \ge 1\) as

Similar to the displacement field, the geometries can be created using NURBS basis functions [84]. For representing the patch geometry in isoparametric coordinates \({\mathbf {x}}(\xi ,\eta )\) using 2D NURBS, the following equation is used:

Classical variational principles can be used to derive the characteristic matrix of a patch. For example, by formulating the energy principle on an elastic deformable body acted upon by a volumetric body force, \(\varvec{b}\) and a surface traction force, \(\varvec{T}\) we can write:

where \(V^P\) is the volume and \(\varGamma ^P\) is the surface boundary of a patch P.

By substituting \(\delta \varvec{\varepsilon } = {\varvec{B}}\delta {{\mathbf{u }}}\) and using the material constitutive matrix \(\varvec{C}\) in Eq. 15, the stiffness matrix of a patch P may be obtained as:

where \(\varvec{B}\) is the strain-displacement matrix of the differential operator of the basis functions. Once the stiffness matrices of individual patches are obtained, assembly is performed to obtain the global stiffness matrix of the domain.

Recently, researchers have tried to exploit the features of IGA to create optimal structural designs [82, 121, 173]. [175] was the first contribution to IGA-based topology optimization (ITO) using trimmed spline basis functions. The control point based density allocation with the popular solid isotropic material penalization (SIMP) method was used by [159, 229]. A typical implementation of the minimum compliance problem using IGA-based SIMP method is shown in Algorithm 7.

In IGA, as discussed above, NURBS are applied to construct the complex structural geometry and boundaries and also conform to higher order continuities of the shape functions at the finite element nodes. The initial works on ITO used splines to present the structural boundaries [159, 175]. Researchers have reported the advantages of IGA coupled with LSM for getting 0/1 designs for complex materials and structures [210, 228]. An isogeometric structural optimization method for the topology optimization of structures using bi-directional evolutionary structural optimization (BESO) method was adopted in [210]. Also, the parameterized level set method (LSM) was integrated with IGA to get topologically optimized materials and structures in [207, 208].

IGA-based level-set topology optimization has been applied to material and structure level designs, including the optimization of shell structures [85,86,87, 97, 237], flexo-electric materials [80] and material microstructures [94]. Multi-objective topology optimization has been successfully implemented for plane elasticity problems by IGA-based LSM topology optimization framework [94]. [52] developed a phase field model with the IGA to solve the compliance minimization problem.

IGA-based moving morphable component (MMC) topology optimization methods were developed for compliance or volume minimization problems in [89, 225]. [67] recently presented a SIMP-based ITO method using density distribution function adopted from the concept of parameterized level set functions.

In the aforementioned discussion about the development of ITO methods, the popular topology optimization methods and IGA have been discussed, from the micro-structural or material description to the macro-structural models. Next, we provide a brief description about the applications of ITO methods to complex set of problems. The dynamic classes of problems in topology optimization have been considered for designing functionally graded materials with optimal eigenfrequencies [190], to characterize free vibration [191], and for fast explicit dynamic solver development [69].

Compliant mechanism design has also been attempted using ITO by [120], however, the framework needs to be tested on complex designs. Multi-resolution topology optimization has also been studied using IGA and various classes of problems, including plane stress, compliant mechanism and heat conduction [119, 211]. Multi-scale isogeometric topology optimization using NURBS basis has been implemented for the design of lattice structures [233] and layered beam designs [192].

[212] applied ITO to the multi-material distribution and functional graded materials. Since most of the ITO works are done at the structural level, the optimization of meta-materials has recently started picking up. Very recently, IGA-based optimization has been applied to the design of smoothed petal auxetic material structures [68], and a number of works are devoted to the optimization of 2D and 3D auxetic metamaterials using ITO [68], and architected materials [145, 227].

An obvious extension is using AR with a PCA-based ROM for ITO, given that the computational cost can scale up severely when using ITO. This, in our opinion, should be the subject of future research by interested groups.

6 Emerging Methods

Over the last few decades, it is clear that the science of computational mechanics has moved from insisting on classical mechanical models with rigorous mathematical development to accepting Soft Computing (SC) heuristic approaches and finding statistically significant patterns in material behavioral data. This is for good reason, these methods are usually very scalable as well as reliable. Some important examples are Genetic Algorithms/GAs (meta-heuristics), Machine Learning (ML), Artificial Neural networks (ANNs), Fuzzy Logic (FL) [96]. Note that some of the methods described in the section on AR could conceivably be included in this section, especially the ones involving PCA since these are classified under ML techniques. Deep learning is a subset of ML stemming from bio-inspired artificial neural networks (ANNs). In this section, we mostly focus on the applications of NNs, Deep Learning (DL) and Data-Driven methods.

Shallow NNs were the precursors to Deep Learning (DL) for training/testing data before computing power increased to its current level. Applications of shallow NNs in problems of structural optimization may be found in the available literature. [4] and [152] were the first authors to successfully integrate shallow NNs into structural optimization. [151] also used a shallow NN model in reliability based structural optimization.

In the context of ML, [127] used K-means clustering to reduce the dimensionality of the design domain in meta-modeling based topology optimization.

Open-source deep learning frameworks like Theano, Tensorflow and Keras promoted the application of DL in various fields.The use of NNs and DL for topology optimization is relatively new but it has gained traction in the past few years. [16] first proposed a heuristic approach for topology optimization where analytical sensitivities are difficult to obtain, by substituting approximate sensitivities using a trained ANN. An older (but slowly gaining popularity) branch of research appears to follow the so-called data-driven paradigm instead of replying on computationally demanding physics-based topology optimization using constitutive models. The theoretical ideal of this approach is that by using a sufficiently broad dataset that spans variations in loads, boundary conditions, material models, objective functions and design domains, one can train a regressor (given enough degrees of freedom) to construct a mapping from the input conditions of a given problem to its corresponding optimal topology [21]. [30] discussed the challenges associated with the data-driven techniques like inaccurate and sub-optimal structural predictions. [197] proposed a data-driven technique using PCA and a fully connected NN to learn the mapping between loading configurations and optimal topologies. [196] applied a critical instant analysis method to solve the optimization problem under force uncertainty.

Typical modifications of the ANN include convolutional neural networks (CNNs), recurrent neural networks (RNNs), auto encoders (AEs) and variational auto encoders (VAEs). Convolution operation in ANNs is a cross-correlation between input data and a convolutional filter, represented in terms of a weighting matrix. The convolution filter strides on the input image to produce an output feature map as output. [179] was likely the first to apply a deep convolutional encoder–decoder architecture to 2D topology optimization by framing it as an image segmentation problem, and training a meta-model to pixel-wise predict the final image (\(\varvec{\rho }\)). The proposed model had an hourglass shape with three layers: encoder network (6 layers), decoder network (mirror of the encoder network), and a final pixel-wise classification network. The model’s input was two grayscale images; the density distribution \(\varvec{\rho }^i\) obtained after the last topology optimization iteration, and the last performed update (gradient) of the densities \(\varvec{\rho }^i-\varvec{\rho }^{i-1}\). Classical SIMP was used for the initial iterations yielding a non-binary distribution; the NN was then used for segmentation of the resulting image to obtain a 0/1 solution.The model’s output (final predicted structure) was a grayscale image with the same grid resolution. A major issue was that their approach only considered the density distribution (\(\varvec{\rho }\) and its gradient as model training inputs, and ignored boundary conditions and key optimization parameters. Also the CNN could not predict a new structure that did not already exist in the training datasets.

Among various deep learning methods, generative models such as the Generative Adversarial Network (GAN), approximate a probability density function of the given data to try and learn the true input data distribution; and are widely used in computational physics. [234] used supervised learning to learn a target function for \(\varvec{\rho }\) from a training dataset, including boundary conditions and other parameters paired with the corresponding optimal topologies. A two-stage refinement was performed using GANs with variational auto encoders (VAE) to non-iteratively predict a near-optimal structure. The inputs in the form of loading and displacement boundary conditions were provided to their generative networks. The modified VAE could efficiently predict the optimized topology but it was difficult to train the neural network to predict a very detailed structure.

[114] proposed a Moving Morphable Components (MMC) explicit framework for generating training sets and used support vector regression (SVR) along with K-nearest-neighbors (KNN) ML models for mapping the optimal layout/topology and the external loading, in a bid to obtain “real time” design topology optimization. [115] also presented a DL-based non-iterative topology optimizer for conductive heat transfer structures, trained on black-and-white density distributions and generate near-optimal topologies. They used a two-stage hierarchical prediction-refinement pipeline consisting of a GAN for low resolution topology, coupled with a super resolution generative adversarial network (SRGAN) for a high resolution topology solution. CWGANs have the advantage of requiring limited training datasets that pre-satisfy the optimization conditions.

[163] used conditional Wasserstein generative adversarial networks (CWGAN) consisting of two deep CNNs: a generator and a discriminator, to replace conventional topology optimization while drastically reducing computationally cost. [96] integrated Deep Belief Networks (DBNs) into SIMP topology optimization and was able to drastically reduce the number of optimization iterations needed for the final solution. The striking feature of their methodology was that their model was only trained once using the topologies obtained for a simple 2D test minimum compliance test case of fixed size and with a fixed set of boundary and loading conditions, but the trained DBN was able to predict topologies for any 2D or 3D test case, different boundary/loading conditions and problem size. This performance gives us a glimpse into the full potential of AI if properly applied in this field.

ML and NN approaches probably give the best computational performance when sufficient computing power is available. The advantage of being able to side step or reduce the physics behind the system being optimized and work on data can allow for additional developments in soft computing to permeate into field of topology optimization, potentially allowing for real time structural design.

7 Conclusions

The challenges inherent in the admittedly computation-heavy large-scale topology optimization continue to hinder its widespread industrial use in this day and age, despite the availability of both computing power and resources, numerical methods, soft computing algorithms as well as manufacturing methods that can take full advantage of the methodology. The eventual goal of accelerating large-scale topology optimization would be real-time design obtaining directly manufacturable solutions, without sacrificing grid resolution. With that in mind, this paper has attempted to provide an honest and critical appraisal of developments in various areas related to attenuating these issues, such as High Performance Computing, AR, Multi-Grid methods, multi-level Reduced Order Models, Isogeometric Topology Optimization (ITO) and Deep Learning. Each approach comes with its own set of advantages and disadvantages, as we have seen. A judicious combination of the mentioned approaches, as is the case with some of the newest contributions in the literature, has been shown to yield superior acceleration compared with using them in standalone fashion. High-Performance Computing is an evolving field, and using both GPUs as well as CPUs with CUDA-aware MPI should be investigated for memory-intensive topology optimization problems. The combination of Multi-Grid methods and Reanalysis needs more investigation as we believe that this will reap the highest dividends out of all of the other approaches. Also with the rising popularity of Iso-Geometric Analysis, a potential area of research could be the use of Approximate Reanalysis and Machine/Deep Learning methods in ITO.

References

Aage N, Andreassen E, Lazarov B, Sigmund O (2017) Giga-voxel computational morphogenesis for structural design. Nature 550(7674):84–86. https://doi.org/10.1038/nature23911

Aage N, Andreassen E, Lazarov BS (2015) Topology optimization using petsc: an easy-to-use, fully parallel, open source topology optimization framework. Struct Multidiscip Optim 51(3):565–572. https://doi.org/10.1007/s00158-014-1157-0

Aage N, Lazarov BS (2013) Parallel framework for topology optimization using the method of moving asymptotes. Struct Multidiscip Optim 47(4):493–505. https://doi.org/10.1007/s00158-012-0869-2

Adeli H, Park HS (1995) A neural dynamics model for structural optimization—theory. Comput Struct 57(3):383–390. https://doi.org/10.1016/0045-7949(95)00048-l

Allaire G, Jouve F, Michailidis G (2016) Thickness control in structural optimization via a level set method. Struct Multidiscip Optim 53(6):1349–1382. https://doi.org/10.1007/s00158-016-1453-y

Allaire G, Jouve F, Toader AM (2004) Structural optimization using sensitivity analysis and a level-set method. J Comput Phys 194(1):363–393. https://doi.org/10.1016/j.jcp.2003.09.032

Amir O (2014) Revisiting approximate reanalysis in topology optimization: on the advantages of recycled preconditioning in a minimum weight procedure. Struct Multidiscip Optim 51(1):41–57. https://doi.org/10.1007/s00158-014-1098-7

Amir O, Aage N, Lazarov BS (2013) On multigrid-CG for efficient topology optimization. Struct Multidiscip Optim 49(5):815–829. https://doi.org/10.1007/s00158-013-1015-5

Amir O, Bendsoe MP, Sigmund O (2009) Approximate reanalysis in topology optimization. Int J Numer Methods Eng 78(12):1474–1491. https://doi.org/10.1002/nme.2536

Amir O, Kirsch U, Sheinman I (2008) Efficient non-linear reanalysis of skeletal structures using combined approximations. Int J Numer Methods Eng 73(9):1328–1346. https://doi.org/10.1002/nme.2128

Amir O, Sigmund O (2010) On reducing computational effort in topology optimization: how far can we go? Struct Multidiscip Optim 44(1):25–29. https://doi.org/10.1007/s00158-010-0586-7

Amir O, Sigmund O, Lazarov BS, Schevenels M (2012) Efficient reanalysis techniques for robust topology optimization. Comput Methods Appl Mech Eng 245–246:217–231. https://doi.org/10.1016/j.cma.2012.07.008

Amir O, Stolpe M, Sigmund O (2010) Efficient use of iterative solvers in nested topology optimization. Struct Multidiscip Optim 42(1):55–72. https://doi.org/10.1007/s00158-009-0463-4

Amsallem D, Zahr MJ, Farhat C (2012) Nonlinear model order reduction based on local reduced-order bases. Int J Numer Methods Eng 92(10):891–916. https://doi.org/10.1002/nme.4371

Andrei N (2017) A SQP algorithm for large-scale constrained optimization: SNOPT. In: Continuous nonlinear optimization for engineering applications in GAMS technology, pp 317–330. Springer. https://doi.org/10.1007/978-3-319-58356-3_15

Aulig N, Olhofer M (2014) Topology optimization by predicting sensitivities based on local state features

Awan AA, Hamidouche K, Venkatesh A, Panda DK (2016) Efficient large message broadcast using NCCL and CUDA-aware MPI for deep learning. In: Proceedings of the 23rd European MPI users group meeting on EuroMPI 2016. ACM Press. https://doi.org/10.1145/2966884.2966912

Babuška I, Guo B (1992) The h, p and h-p version of the finite element method; basis theory and applications. Adv Eng Softw 15(3–4):159–174. https://doi.org/10.1016/0965-9978(92)90097-y

Babuska I, Tempone R, Zouraris GE (2004) Galerkin finite element approximations of stochastic elliptic partial differential equations. SIAM J Numer Anal 42(2):800–825. https://doi.org/10.1137/s0036142902418680

Bach C, Ceglia D, Song L, Duddeck F (2019) Randomized low-rank approximation methods for projection-based model order reduction of large nonlinear dynamical problems. Int J Numer Methods Eng 118(4):209–241. https://doi.org/10.1002/nme.6009

Banga S, Gehani H, Bhilare S, Patel S, Kara L (2018) 3d topology optimization using convolutional neural networks. arXiv:1808.07440

Bazilevs Y, Calo V, Cottrell J, Evans J, Hughes T, Lipton S, Scott M, Sederberg T (2010) Isogeometric analysis using t-splines. Comput Methods Appl Mech Eng 199(5–8):229–263. https://doi.org/10.1016/j.cma.2009.02.036

Beckers M (1999) Topology optimization using a dual method with discrete variables. Struct Optim 17(1):14–24. https://doi.org/10.1007/bf01197709

Bendsoe M (1989) Optimal shape design as a material distribution problem. Struct Optim. https://doi.org/10.1007/BF01650949

Bendsøe MP, Díaz A, Kikuchi N (1993) Topology and generalized layout optimization of elastic structures. In: Topology design of structures, pp 159–205. Springer Netherlands

Bendsøe MP, Kikuchi N (1988) Generating optimal topologies in structural design using a homogenization method. Comput Methods Appl Mech Eng 71(2):197–224. https://doi.org/10.1016/0045-7825(88)90086-2

Bendsoe MP, Sigmund O (2004) Topology optimization: theory, methods and applications. Springer, Berlin

Bendsøe MP (1995) Optimization of structural topology, shape, and material. Springer, Berlin. https://doi.org/10.1007/978-3-662-03115-5

Bitzarakis S, Papadrakakis M, Kotsopulos A (1997) Parallel solution techniques in computational structural mechanics. Comput Methods Appl Mech Eng 148(1–2):75–104. https://doi.org/10.1016/s0045-7825(97)00028-5

Bobby S, Spence SMJ, Kareem A (2016) Data-driven performance-based topology optimization of uncertain wind-excited tall buildings. Struct Multidiscip Optim 54(6):1379–1402. https://doi.org/10.1007/s00158-016-1474-6

Bogomolny M (2010) Topology optimization for free vibrations using combined approximations. Int J Numer Methods Eng 83:617–636. https://doi.org/10.1002/nme.2778

Borrvall T, Petersson J (2001) Large-scale topology optimization in 3d using parallel computing. Comput Methods Appl Mech Eng 190(46–47):6201–6229. https://doi.org/10.1016/s0045-7825(01)00216-x

Borrvall T, Petersson J (2003) Topology optimization of fluids in stokes flow. Int J Numer Methods Fluids 41(1):77–107. https://doi.org/10.1002/fld.426

Breitkopf P, Touzot G, Villon P (1998) Consistency approach and diffuse derivation in element free methods based on moving least squares approximation. Comput Assist Mech Eng Sci 5(4):479–501

Brezina M, Doostan A, Manteuffel T, McCormick S, Ruge J (2014) Smoothed aggregation algebraic multigrid for stochastic PDE problems with layered materials. Numer Linear Algebra Appl 21(2):239–255. https://doi.org/10.1002/nla.1924

Bruyneel M, Fleury C. Composite structures optimization using sequential convex programming. In: Computational techniques for materials, composites and composite structures. Civil-Comp Press. https://doi.org/10.4203/ccp.67.6.1

Buhl T (2002) Simultaneous topology optimization of structure and supports. Struct Multidiscip Optim 23(5):336–346. https://doi.org/10.1007/s00158-002-0194-2

Carlberg K, Forstall V, Tuminaro R (2016) Krylov-subspace recycling via the POD-augmented conjugate-gradient method. SIAM J Matrix Anal Appl 37(3):1304–1336. https://doi.org/10.1137/16m1057693

Challis VJ, Roberts AP, Grotowski JF (2013) High resolution topology optimization using graphics processing units (GPUs). Struct Multidiscip Optim 49(2):315–325. https://doi.org/10.1007/s00158-013-0980-z

Chan TF, Mathew TP (1994) Domain decomposition algorithms. Acta Numerica 3:61–143. https://doi.org/10.1017/s0962492900002427

Chang GH, Yong F (1997) Optimal 3d stiffener design with frequency considerations. Adv Eng Softw 28(8):525–531. https://doi.org/10.1016/s0965-9978(97)00033-1

Chen M, Qi W, Wang Y (2019) Uniform convergence of v-cycle multigrid finite element method for one-dimensional time-dependent fractional problem. Appl Math Lett 98:49–56. https://doi.org/10.1016/j.aml.2019.05.039

Chen TY, Wu SC (1998) Multiobjective optimal topology design of structures. Comput Mech 21(6):483–492. https://doi.org/10.1007/s004660050327

Choi Y, Oxberry G, White D, Kirchdoerfer T (2019) Accelerating design optimization using reduced order models

Coelho PG, Cardoso JB, Fernandes PR, Rodrigues HC (2011) Parallel computing techniques applied to the simultaneous design of structure and material. Adv Eng Softw 42(5):219–227. https://doi.org/10.1016/j.advengsoft.2010.10.003

Coelho RF, Breitkopf P, Knopf-Lenoir C (2008) Model reduction for multidisciplinary optimization—application to a 2d wing. Struct Multidiscip Optim 37(1):29–48. https://doi.org/10.1007/s00158-007-0212-5

Collet M, Bruggi M, Duysinx P (2016) Topology optimization for minimum weight with compliance and simplified nominal stress constraints for fatigue resistance. Struct Multidiscip Optim 55(3):839–855. https://doi.org/10.1007/s00158-016-1510-6

Collet M, Noël L, Bruggi M, Duysinx P (2018) Topology optimization for microstructural design under stress constraints. Struct Multidiscip Optim 58(6):2677–2695. https://doi.org/10.1007/s00158-018-2045-9

Darema F (2011) SPMD computational model. Springer, Boston, pp 1933–1943. https://doi.org/10.1007/978-0-387-09766-4_26

Deaton JD, Grandhi RV (2014) A survey of structural and multidisciplinary continuum topology optimization: post 2000. Struct Multidiscip Optim 49(1):1–38. https://doi.org/10.1007/s00158-013-0956-z

de Boor C (1978) A practical guide to splines. Springer, New York. https://doi.org/10.1007/978-1-4612-6333-3

Dedè L, Borden MJ, Hughes TJR (2012) Isogeometric analysis for topology optimization with a phase field model. Arch Comput Methods Eng 19(3):427–465. https://doi.org/10.1007/s11831-012-9075-z

DeRose GCA Jr, Díaz AR (2000) Solving three-dimensional layout optimization problems using fixed scale wavelets. Comput Mech 25(2–3):274–285. https://doi.org/10.1007/s004660050476