Abstract

The maximum size constraint restricts the amount of material within a test region at each point of the design domain, leading to a highly constrained problem. In this work, the local constraints are gathered into a single one using aggregation functions. The challenge of this task is presented in detail, as well as the proposed strategy to address it. The latter is validated on different test problems, namely compliance minimization, minimum thermal compliance, and compliant mechanism design. These are implemented in MATLAB for 2D design domains. As a final validation, a 3D compliance minimization problem is also shown. The study includes two well-known aggregation functions, p-mean and p-norm. The comparison of these functions allows a deeper understanding of their behavior. For example, it is shown that they are strongly dependent on the distribution and amount of data. In addition, a new test region is proposed for the maximum size constraint which, in 2D, is a ring instead of a circle around the element under analysis. This slight change reduces the introduction of holes in the optimized designs, which can contribute to improving the manufacturability of maximum-size-constrained components.

1 Introduction

Since the seminal work of Bendsøe and Kikuchi (1988), a large number of contributions have established topology optimization as a practical design tool. The method looks for the optimal material distribution within a design space by minimizing an objective function subject to a set of design constraints. The concept is developed in many approaches (see, e.g., Sigmund and Maute 2013; Deaton and Grandhi 2014), among which the density-based approach stands out due to its easy adaptation to finite element codes. The process is iterative and illustrates the metamorphosis of the design space, a gradual change in each iteration that converts the design space into an innovative, complex, and high-performance structure. However, mainly due to technological limitations, the optimized structure is sometimes only a conceptual design idea since the solution cannot be manufactured. For this reason, manufacturing constraints have become one of the important topics in topology optimization, since they enforce manufacturable designs and reduce the need for postprocessing. Perhaps the most important manufacturing constraint is the minimum size, because it is related to the resolution allowed by the fabrication process. The reader is referred to the work of Lazarov et al. (2016) for a deeper insight into the subject.

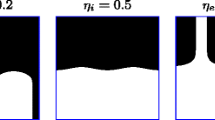

Length scale control may be motivated not only by manufacturing limitations, but also by the wish to introduce desired properties into the design indirectly. For example, the maximum size of the solid phase could be a tool for designers to deal with overheating-related problems in additive manufacturing by restricting the size of bulky parts (Thompson et al. 2016), or to improve the performance of structures under local damage by diversifying the load path through the appearance of redundant members (Jansen et al. 2014). In addition, the maximum size could be required for aesthetic reasons due to its ability to produce surprising changes in the material distribution, as exemplified in Fig. 1. Applications of the maximum size in fluid flows are mentioned in the pioneering work of Guest (2009a), to which the reader is referred for a more complete discussion. In his work, the maximum size control is enforced by local constraints which restrict the amount of material in the neighborhood of each point in the design domain. The method proves to be robust in the context of structural and fluid topology optimization, but it incurs a high computational cost due to the large number of constraints that are introduced in the optimization problem. Zhang et al. (2014) then reduce the number of constraints by collecting only those that belong to the structural skeleton. The main drawback is the neglected sensitivity of the skeleton, which makes the solution more likely to fall into a local optimum. Later, Lazarov and Wang (2017) present two methods to impose maximum size. The first one applies band-pass filters in the frequency domain after a Fourier transform. The method produces manufacturable black and white designs but the maximum size is loosely controlled. The second method is based on morphological operators and provides more control over the geometry. Their method shows a satisfactory performance for compliance minimization and compliant mechanism design. However, Lazarov and Wang (2017) encounter some geometrical difficulties in the optimized design: a large number of hollow circles in the structure and a wavy pattern in the vicinity of the connections between solid structural elements, which can lead to a loss of manufacturability and a reduction of performance. More recently, Carstensen and Guest (2018) propose a projection-based method to control the maximum length scale. The method is based on the multiple phase projection strategy (Guest 2009b), but they reduce the number of design variables using weighting functions. They report multiple 2D benchmark problems satisfying the desired length scale. The disadvantage of the multiple phase projection method is that it uses a series of weighting and projection functions that make the method highly non-linear.

Aggregation functions are well known in the field of stress constraints in topology optimization. They were initially introduced by Yang and Chen (1996) to reduce the size of highly constrained problems. Among the popular functions in topology optimization are the KS (Kreisselmeier-Steinhauser) function, p-norm, and p-mean (see for instance, Yang and Chen 1996; Duysinx and Sigmund 1998). These are smooth and differentiable approximations of the max(⋅) function, which makes them suitable for gradient-based optimizers. Unfortunately, the smooth approximation brings numerical issues, e.g., the non-linearity of the method increases as the function approaches the maximum value, which is essential to capture the critical constraints. In the last decade, a large number of contributions have addressed this issue. Among others, we can cite the work of París et al. (2010), who proposed to aggregate the constraints by groups; this increases the number of global constraints but reduces the non-linearity of the problem. Despite the known drawbacks, aggregation is still applied in the field since it is an efficient way to solve large and highly constrained problems in a reasonable amount of time.

However, to the best of our knowledge, aggregation functions have not been successfully applied in the maximum size field. Among the few works that can be found in the literature, we can cite Guest (2009a) and Wu et al. (2017, 2018). In the pioneering work, Guest (2009a) highlights the need for aggregation functions to reduce the computation time. Motivated by this fact, Guest mentions that barrier functions were tested but results were not encouraging enough to be reported. More recently, Wu et al. (2017, 2018) use the p-mean function to aggregate a local volume constraint and produce sparse structures that mimic bone-like porous structures. Their method is related to the length scale problem presented by Guest (2009a) but differs from an exact formulation of the maximum size. This will be discussed in more detail later.

In this work, we adopt the maximum size formulation proposed by Guest (2009a) to perform an efficient aggregation of the local constraints. The challenge of this task is presented in detail, as well as the proposed strategy to address it. In addition, we introduce another test region to limit the amount of material, which reduces the hollow circles introduced by the constraint. The developments are validated on 2D domains in classical linear problems such as compliance minimization, heat transfer, and compliant mechanism design.

The paper is organized as follows. In Section 2, we describe the formulation of the topology optimization problems addressed in this work. In Section 3, we recall Guest’s formulation to impose the maximum size. Then we introduce a new test region to count the amount of material. Section 4 presents the aggregation functions used in this work, the difficulties of aggregating the maximum size constraints, and the proposed strategy. Section 5 gathers the results and discussions, Section 6 presents the final conclusions of this work, and Section 7 provides some indications to facilitate the replication of results.

2 Problem formulation and definition of test cases

The proposed method to introduce maximum size control in topology optimization is tested on standard problems from the literature, i.e., compliance minimization, minimum thermal compliance, and compliant mechanism design. For each problem, we solve a well-known test case, i.e., the MBB beam, the square heat sink, and the compliant force inverter, which are depicted in Fig. 2. These domains are discretized using four-node quadrilateral elements and they are extended on the boundaries to facilitate the treatment of the filter (Sigmund 2007). The extension is represented with white squares outside the design domain in Fig. 2. Here, we define Ψ as the set of indexes that contains all the element numbers in the design domain including those on the extended domain, while Φ contains only the indexes of the elements that belong to the optimizable design space, thus Φ ⊂ Ψ as shown in Fig. 2.

The methods presented in this work were implemented in the free PolyTop MATLAB code (Talischi et al. 2012), which is slightly modified to solve the heat transfer problem and the compliant mechanism design, as well as to include the Method of Moving Asymptotes as solver (Svanberg 1987). The MATLAB environment is well known in the topology optimization community because of the freely available codes, see, e.g., Sigmund (2001), Andreassen et al. (2011), and Talischi et al. (2012). It is also known that the best performance is obtained with loop vectorization and memory preallocation. Thus, throughout the article, the equations are presented in a way that allows an efficient use of the software. Together with this paper, a sample MATLAB implementation of the maximum size constraint is provided for use with PolyTop (Talischi et al. 2012), but it can be adapted to other topology optimization scripts.

Since the aforementioned problems share a common basis, we present a generic formulation of the optimization problem given as:

The objective is the linear function \(\boldsymbol {t}^{\intercal }\boldsymbol {u}\) defined in (1a). For compliance minimization and heat conduction problems, t represents the input force/heat flux over the domain, i.e., it is equal to the array f. For the compliant mechanism, t is an array that contains the value 1 at the output degree of freedom and 0 otherwise. The array u contains the nodal displacements/temperatures, which are obtained by solving the state equation (1b).

The design variables on the extended design domain are set to 0 in (1f). For other design variables, we use a three-field scheme (see Sigmund and Maute 2013) which utilizes the design field ρ, a filtered field \(\tilde {\boldsymbol {\rho }}\), and a physical field \(\bar {\boldsymbol {\rho }}\). The filtered field \(\tilde {\boldsymbol {\rho }}\), used to ensure mesh independency and checkerboard-free solutions, is computed as:

where DI is the matrix that contains the linear weights of the filter. Its components are defined as:

Here, \(w_{i,j}\) represents the linear weight of the variable j in the filtering of variable i, dfil is the diameter of the filtering region, and xi and xj are the centroid locations of the elements i and j, respectively, where i, j ∈ Ψ. The condition applied in (3) avoids the computation of the filter on the passive elements. Without this treatment, the DI matrix would be identical to the P matrix presented by Talischi et al. (2012).
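The filtering step can be illustrated with a minimal Python sketch. It assumes the common cone-shaped weight \(w_{i,j} = \max(0, 1 - \lVert x_i - x_j\rVert / (d_{fil}/2))\) with row normalization, and it assumes that passive elements are simply skipped and left at 0. The exact entries of DI are those of the paper's own definitions and may differ; the function name `linear_filter` and the 1D toy layout are illustrative only.

```python
import math

def linear_filter(rho, centroids, d_fil, active):
    """Cone-weight density filter (assumed form).

    Elements not listed in `active` are treated as passive and keep the value 0.
    """
    radius = d_fil / 2.0
    filtered = [0.0] * len(rho)
    for i in active:
        num = 0.0
        den = 0.0
        for j, xj in enumerate(centroids):
            # Linear weight that decays to zero at the filter radius
            w = max(0.0, 1.0 - math.dist(centroids[i], xj) / radius)
            num += w * rho[j]
            den += w
        filtered[i] = num / den
    return filtered

# Five elements on a line; the first and last play the role of the extended domain
centroids = [(float(k), 0.0) for k in range(5)]
rho = [0.0, 1.0, 1.0, 1.0, 0.0]
rho_f = linear_filter(rho, centroids, d_fil=4.0, active=[1, 2, 3])
```

In this toy layout the element next to the extended domain is filtered towards a lower density, since the void extension enters its weighted average.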

The physical field \(\bar {\boldsymbol {\rho }}\) is obtained from the filtered field \(\tilde {\boldsymbol {\rho }}\) using a smoothed Heaviside projection defined as:

where β and μ control the steepness and the threshold of the projection, respectively. The projection (5) is suited for the robust design approach (Wang et al. 2011). However, here, it is used because it offers smoother convergence than the classic projection proposed by Guest et al. (2004).
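As an illustration, the sketch below evaluates a smoothed Heaviside projection in the tanh form commonly attributed to Wang et al. (2011), \(\bar{\rho} = [\tanh(\beta\mu) + \tanh(\beta(\tilde{\rho}-\mu))] / [\tanh(\beta\mu) + \tanh(\beta(1-\mu))]\). That this is exactly expression (5) is an assumption, and the function name is hypothetical.

```python
import math

def heaviside_projection(rho_tilde, beta, mu):
    """Smoothed Heaviside projection (assumed tanh form): beta sets the steepness, mu the threshold."""
    num = math.tanh(beta * mu) + math.tanh(beta * (rho_tilde - mu))
    den = math.tanh(beta * mu) + math.tanh(beta * (1.0 - mu))
    return num / den

# Projection of void, threshold, and solid filtered densities with a steep beta
values = [heaviside_projection(x, beta=38.0, mu=0.25) for x in (0.0, 0.25, 1.0)]
```

The projection maps 0 to 0 and 1 to 1 for any β, while for a steep β a filtered density equal to the threshold μ is mapped close to 0.5.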

The aim of the projection (5) in this work is to reduce the amount of gray elements. This in turn promotes the minimum size of the solid phase. However, as shown by Wang et al. (2011), simple projection strategies do not ensure minimum length scale control since they do not prevent small local features such as the well-known one-node connected hinges. Therefore, results in this work are not exempt from small structural details that do not fulfil the minimum size. Knowing this, in this work we call dmin the theoretical minimum size obtained by the smoothed Heaviside projection. As shown by Wang et al. (2011), dmin depends on the filter diameter dfil and on the threshold parameter μ. In this work, we use μ = 0.25 and therefore dmin = 0.5dfil (for further details, see Wang et al. 2011; Qian and Sigmund 2013).

The global stiffness/conductivity matrix K is assembled from the local stiffness matrices defined as \(\boldsymbol{k}_i = E_i \boldsymbol{k}_0\). Here, \(\boldsymbol{k}_0\) denotes the element stiffness matrix of the base material and \(E_i\) is the material stiffness/conductivity obtained by using the modified SIMP (Sigmund 2007) given as:

where η is the penalization of the material interpolation law. For the three problems, the parameters η and β are defined by a continuation method. This is a common practice in topology optimization as it is known to help avoid convergence to undesirable local minima (see for instance, Sigmund and Maute 2013; Rojas-Labanda and Stolpe 2015). E0 is the stiffness/conductivity of the solid phase, equal to 1 in all three problems. Emin is a small number used to avoid numerical singularity in the finite element analysis. For the compliance minimization and force inverter problems, Emin = \(10^{-6}\), while for the heat sink Emin = \(10^{-3}\).
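A minimal sketch of the interpolation, assuming the usual modified SIMP form \(E_i = E_{min} + \bar{\rho}_i^{\eta}(E_0 - E_{min})\), is given below. The function name is hypothetical, and the default values are those quoted in the text for the compliance minimization and force inverter problems.

```python
def modified_simp(rho_bar, eta, e0=1.0, e_min=1e-6):
    """Modified SIMP interpolation (assumed form): stiffness of one element from its physical density."""
    return e_min + rho_bar ** eta * (e0 - e_min)

# Void, intermediate, and solid elements with the final penalization eta = 3
stiffness = [modified_simp(r, eta=3.0) for r in (0.0, 0.5, 1.0)]
```

With η = 3, an intermediate density of 0.5 yields roughly one eighth of the solid stiffness, which is what makes gray elements uneconomical.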

The volume constraint is in (1c), where V∗ is the upper bound of the constraint and v the array that gathers the volume of all the elements. The maximum size is imposed through the global constraint Gms in (1d).

3 Local maximum size constraint

The method to restrict the amount of material and impose a maximum size was originally proposed by Guest (2009a). As mentioned before, the method proved to be robust in the context of structural and fluid topology optimization and, therefore, it is selected as the fundamental formulation in this work. The local constraint is defined as:

where Ωe is the maximum size region of the element e and ε is a small positive number used for relaxing the problem. In this work, we use ε = 0.05. The most usual approach in literature is to define the test region Ωe as a circle around the element e. This is shown in Fig. 3a and is defined as:

where dmax represents the maximum size defined by the user.

The constraint (7) restricts the amount of material around the element e by imposing, at least, the amount ε of void in Ωe. Since gray elements contribute to the void counting, they must be penalized. To this end, we use the same SIMP exponent η as suggested by Guest (2009a). Equation (7) must be evaluated at every design point; therefore, it is convenient to vectorize the computation of the local constraints as follows:

where DII is the matrix that stores the maximum size neighborhoods and δ the array containing the measure of void. These entities are defined in (10) and (12), respectively. In the Appendix, the DII matrix is assembled in lines 36–44 and δ is computed in line 26.
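The evaluation of the local constraints can be sketched as follows. The sketch assumes the volume-weighted form \(g_e = \varepsilon - \sum_{i\in\Omega_e} v_i\,\delta_i / \sum_{i\in\Omega_e} v_i\) with \(\delta_i = (1-\bar{\rho}_i)^{\eta}\), which is consistent with the range [ε-1, ε] stated later in the text but is not guaranteed to match (7) term by term. Plain loops stand in for the product with the DII matrix, and all names are illustrative.

```python
def local_max_size_constraints(rho_bar, neighborhoods, volumes, eta, eps=0.05):
    """Local maximum size constraints (assumed form); g_e <= 0 means enough void in region e."""
    delta = [(1.0 - r) ** eta for r in rho_bar]  # penalized measure of void
    g = []
    for region in neighborhoods:
        total = sum(volumes[i] for i in region)
        void = sum(volumes[i] * delta[i] for i in region)
        g.append(eps - void / total)
    return g

rho_bar = [1.0, 1.0, 1.0, 0.0, 0.0]
neighborhoods = [[0, 1, 2], [2, 3, 4]]  # toy test regions
g = local_max_size_constraints(rho_bar, neighborhoods, [1.0] * 5, eta=3.0)
```

The first region is fully solid and violates its constraint by exactly ε, whereas the second one contains enough void to satisfy it.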

3.1 New test region for maximum size

The maximum size constraint splits the bulky material during the optimization process by introducing void in each test region Ωi. In 2D, void introduced by the constraint can form circular or channel-shaped cavities. Designers may prioritize a cavity type depending on the manufacturing process. For instance, if the purpose of the constraint is to produce bar-like structures, then channel-shaped cavities must be prioritized over circular ones.

We have noticed in 2D that most circular cavities tend to be placed in the connection of bars. To illustrate this, consider Fig. 4a which shows the connection of two bars during the optimization process. The red circle shows the neighborhood of a potentially violated constraint. There, to satisfy the constraint and keep the connection at the same time, two possibilities are presented: either introduce a hole as in Fig. 4b or remove the edges as in Fig. 4c. Thus, aiming to reduce the introduction of small circular holes, we propose to take the internal zone out of the analysis, as Fig. 4d shows. We consider as inner zone the minimum size region imposed through the Heaviside projection (see Fig. 3b). Therefore, the proposed test region is defined as:

Fig. 4 Representation of a connection with maximum size constraints during the optimization process. a The circular test region. b, c Two possibilities to satisfy the maximum size constraint while keeping the material connectivity. d The inner zone is removed from the circular test region to avoid the introduction of a hole

Even if a circular hole is introduced in the middle of the connection when using the annular region, the cavity is expected to be of size dmin, so that void elements can pass into the annular zone through the inner diameter of the ring.

It is important to note that the annular test region does not affect the maximum size imposed by the user. The only difference with the circular region is that void elements are forced to be placed in the outer ring.

4 Aggregation of local constraints

4.1 Aggregation functions

Two aggregation functions are tested in the context of maximum size, p-mean and p-norm. These are defined as follows:

where Pm and Pn are the p-mean and p-norm functions, respectively. N is the total number of design variables in Φ, p is the exponent that controls the accuracy of the aggregation, and se is the quantity of interest (QoI) that is being aggregated.

For positive values of se and large p exponents, it is known that both aggregation functions converge to the maximum value of the data set (see for instance, Duysinx and Sigmund 1998). It is also widely known that the main difference between these functions is that p-norm overestimates the maximum value while p-mean underestimates it. However, within the context of topology optimization and to the best of our knowledge, it has not been mentioned that these functions are also highly dependent on the data distribution. This can be clearly seen by comparing the aggregation curves of two sets of data, such as those shown in Fig. 5a. The aggregation of those sets using p-mean and p-norm is shown in Fig. 5b. For this particular case, it is interesting to note that p-norm, with p > 12, provides a better approximation of the maximum when the data are located mostly at low values (set 1). The opposite occurs when the data are located at higher values. In this configuration (set 2), p-mean provides a better approximation of the maximum, even for low p exponents. This also shows that p-mean is less sensitive to the exponent p when the data are placed at higher values, since the approximation of p-mean improves only slightly as p increases.

Fig. 5 a Histograms of two randomly produced sets of data with values between 0 and 1. b Aggregation curves using p-mean and p-norm. c Aggregation of set 2 with different numbers of variables. d Sensitivity information using p-norm for data set 2, with sensitivities sorted in increasing order and normalized

Another interesting observation is their dependence on the amount of data. As Fig. 5c shows, the greater the amount of data, the lower the performance of the p-norm function. This does not apply to p-mean, since it has the scaling factor \(N^{-1}\) that places the function around the arithmetic mean, regardless of the quantity of data. The same situation arises when comparing the KS and the lower bound KS functions (see for instance Verbart et al. 2017, for definitions). The latter has the scaling factor \(N^{-1}\) and is therefore less sensitive to the amount of aggregated data.
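The bounding behavior and the dependence on the amount of data can be reproduced with a few lines of Python, using the standard definitions \(P_m = (N^{-1}\sum_e s_e^p)^{1/p}\) and \(P_n = (\sum_e s_e^p)^{1/p}\). The data values below are arbitrary illustrative numbers, not those of Fig. 5.

```python
def p_mean(s, p):
    """p-mean aggregation: underestimates max(s) for positive data."""
    return (sum(x ** p for x in s) / len(s)) ** (1.0 / p)

def p_norm(s, p):
    """p-norm aggregation: overestimates max(s) for positive data."""
    return sum(x ** p for x in s) ** (1.0 / p)

data = [0.2, 0.5, 0.9]
low, high = p_mean(data, 20), p_norm(data, 20)

# Same value repeated many times: only the amount of data changes
repeated = [0.9] * 1000
mean_repeated = p_mean(repeated, 20)
norm_repeated = p_norm(repeated, 20)
```

For the repeated data, p-mean returns the common value regardless of N, whereas p-norm grows with the factor \(N^{1/p}\), which illustrates why p-norm degrades as the number of aggregated values increases.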

The main appeal of these aggregation functions is that they provide sensitivity information, which is essential for gradient-based optimizers. Unfortunately, as shown in Fig. 5d, as p increases the sensitivities of the smooth aggregation function resemble those of the max(s) function, for which only one value of the sensitivities is different from zero. This issue affects the linearization performed by gradient-based optimizers since sensitivity information tends to be lost for closer approximations of the max(s) function. In this situation, only a few local constraints are considered by the optimizer and, when the design variables are updated, a new set of local constraints will stand out as critical. A common practice to reduce oscillations, which is also adopted in this work, is to limit the maximum change of the design variables (see for instance, Yang and Chen 1996). With ml denoting the move limit, the maximum change of the design variables is controlled as follows:
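A move limit of this kind is typically enforced through the bounds passed to the optimizer at each iteration. The sketch below assumes the usual box form, \(\max(0, \rho_i - ml) \le \rho_i^{new} \le \min(1, \rho_i + ml)\); the function name is hypothetical.

```python
def move_limit_bounds(rho, ml):
    """Lower and upper bounds for the next design update (assumed box form)."""
    lower = [max(0.0, r - ml) for r in rho]
    upper = [min(1.0, r + ml) for r in rho]
    return lower, upper

lower, upper = move_limit_bounds([0.0, 0.5, 0.95], ml=0.1)
```

Variables close to 0 or 1 are clipped by the physical bounds, so their admissible change is smaller than ml on one side.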

4.2 Adopted aggregation strategy

Given that the constraint gi ∈ [ε-1, ε], the QoI is chosen such that si ∈ [0, 1]. Therefore, the QoI is defined as follows:

Considering Pm/n as either p-mean or p-norm, the global constraint Gms is recovered as follows:
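The shift and the recovery of the global constraint can be sketched as follows, assuming the simplest mapping compatible with the stated intervals, \(s_i = g_i + 1 - \varepsilon\) and \(G_{ms} = P_{m/n}(\boldsymbol{s}) - 1 + \varepsilon \le 0\). These two expressions are inferred from the ranges given in the text rather than copied from (17) and the expression for Gms, and the function name is illustrative.

```python
def global_constraint(g, p, eps=0.05, use_mean=True):
    """Aggregate local constraints g (each in [eps-1, eps]) into one global value."""
    s = [gi + 1.0 - eps for gi in g]      # shifted QoI, now in [0, 1]
    total = sum(si ** p for si in s)
    if use_mean:
        total /= len(s)                   # p-mean; skip the division for p-norm
    return total ** (1.0 / p) - 1.0 + eps  # shift back so that G <= 0 is feasible

g = [-0.6, -0.2, 0.03]                    # one violated local constraint
G_mean = global_constraint(g, p=100, use_mean=True)
G_norm = global_constraint(g, p=100, use_mean=False)
```

With p = 100 both aggregated values are positive, so the violation is detected; the p-mean value lies below the true maximum of 0.03 and the p-norm value at or slightly above it.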

The purpose of the aggregation is to catch the most critical constraints from the set (9), i.e., the maximum size constraints with positive values. The main difficulty of this task is that few constraints are violated at every iteration. These are located in the narrow band [0, ε] shown in the histogram of Fig. 6a. This small band is a difficult target for the aggregation functions. As shown in Fig. 6b, large p values are required to catch the band [0, ε].

The band [0, ε] is narrow because ε must be small. The ε parameter acts as a buffer of voids in the maximum size constraint. It should be big enough to split the bulky material but small enough to avoid affecting the length scale. A large value of ε reduces the allowable amount of solid material within the test region and therefore reduces the maximum size defined by the user. In addition, in the connection of bars, the Heaviside projection and the maximum size constraint act in opposition. As shown in Fig. 7a, the maximum size constraint tries to introduce an amount ε of void, but the Heaviside projection tries to introduce material. This contradiction compromises the convergence of the problem.

The appeal of using large ε values, for example greater than 0.2, is that the aggregation process becomes easier since the band [0, ε] is no longer narrow. But this approach works only if the minimum size of the solid phase is sufficiently small not to contradict the maximum size constraint, as shown in Fig. 7b. The previous condition forms part of the method proposed by Wu et al. (2018) to produce infill structures. The difference between the latter method and the method that controls the maximum size can be seen in Fig. 8. There, both solutions have the same maximum size diameter dmax, but ε in Fig. 8a is 10 times bigger than in Fig. 8b. The attached code computes the maximum size constraint with the method proposed in the following section, but it can be easily adapted to obtain the method that produces infill structures, for which the reader is referred to Section 7.

The strategy adopted in this paper uses (17) as QoI and a large p value (greater than 100) to ensure that the band [0, ε] is captured. The non-linearity of the constraint is addressed in the optimizer by restricting the maximum change of the design variables. However, the slow evolution of the design variables demands a large number of iterations, which is the main drawback of the method. Each example reported in this article is solved using 450 iterations, with a continuation method defined as follows. In the three problems, the SIMP exponent η is initialized at 1.0 and is increased in increments of 0.25 to a maximum of 3.0. The Heaviside parameter β is initialized at 1.5 and is increased by a factor of 1.5 to a maximum of 38. The continuation procedure includes 50 iterations between parameter increments.
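The continuation schedule described above can be written compactly. The sketch assumes that the first increment takes place at iteration 50 and that both parameters are simply capped at their maxima; the function name and the zero-based iteration counter are illustrative choices.

```python
def continuation(iteration, period=50):
    """SIMP exponent eta and Heaviside parameter beta at a given (zero-based) iteration."""
    stage = iteration // period
    eta = min(3.0, 1.0 + 0.25 * stage)     # 1.0, 1.25, ..., 3.0
    beta = min(38.0, 1.5 * 1.5 ** stage)   # 1.5, 2.25, ..., capped at 38
    return eta, beta

schedule = [continuation(it) for it in range(450)]
```

Under these assumptions both parameters reach their final values in the ninth stage, which is consistent with the 450 iterations used for each example.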

We have noticed that at the beginning of the optimization process, when η is still smaller than 3, a large ml can be used without compromising the convergence of the objective function. This is probably due to the fact that a significantly larger number of sensitivities are different from 0 at earlier stages of the optimization problem, as shown in Fig. 6c where p = 100. As the optimization progresses and the values of η and β rise, a smaller ml should be used to avoid the divergence of the optimization process. In practice, we start the optimization with ml = 0.3 and finish with ml = 0.05. During the optimization process, a linear interpolation sets ml as follows:
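One possible form of this interpolation is sketched below, assuming that ml varies linearly with the iteration counter between the two end values quoted in the text. The interpolation variable and the function name are assumptions; the paper's own expression may be parameterized differently.

```python
def move_limit(iteration, n_iter=450, ml_start=0.3, ml_end=0.05):
    """Linearly interpolated move limit for a zero-based iteration counter (assumed form)."""
    t = iteration / (n_iter - 1)
    return ml_start + (ml_end - ml_start) * t

ml_values = [move_limit(it) for it in range(450)]
```

The resulting sequence decreases monotonically, so the largest design changes are allowed while many sensitivities are still non-zero.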

This scheme is preferable to introducing a small ml from the beginning of the optimization problem, because the slow evolution of the design variables can demand an even larger number of iterations or cause the design topology to get locked in the early stages of the optimization. In the latter situation, we have observed designs with disconnected material, or designs that do not use all the allowed material. A similar observation is described by Lazarov and Wang (2017).

Figure 9 shows the evolution of the objective function \(\boldsymbol {t}^{\intercal } \boldsymbol {u}\) and of the constraint Gms. The problem corresponds to the solution shown in Table 2, using p = 100 and N = 30000. The peaks in the objective and in the constraint come from the abrupt increase of the parameters η and β. It is worth mentioning that the problems shown in this article start from a uniform initial guess satisfying both the volume and the maximum size constraints.

Fig. 9 Convergence plot of the MBB beam design shown in Table 2, obtained with p = 100 and N = 30000

4.3 Sensitivity analysis

Taking into account that we use column arrays, the sensitivity expression of the global constraint is obtained by applying the chain rule as follows:

The sensitivities in (21) consider p-mean as the aggregation function. The expression using p-norm is identical to (21) but without the term \(N^{-1}\). Here, \(J_{\bar {\rho }}\), \(J_{\delta }\), and \(J_{s}\) respectively represent the Jacobian matrix of the smoothed Heaviside projection, the measure of void, and the QoI to aggregate. These matrices are defined as:

with \(\bar {\rho }^{\prime }\), δ′, and s′ being the derivatives of (5), (12), and (17), respectively, and NΨ being the total number of elements in Ψ.
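Only the outermost factor of the chain rule, the derivative of the aggregation function with respect to the QoI, is illustrated here. For p-mean it reads \(\partial P_m / \partial s_i = N^{-1}(s_i/P_m)^{p-1}\), and the p-norm counterpart drops the factor \(N^{-1}\). The sketch checks this expression against central finite differences; the data, the exponent, and the step size are arbitrary illustrative choices, and the remaining Jacobians of (21) are not reproduced.

```python
def p_mean(s, p):
    return (sum(x ** p for x in s) / len(s)) ** (1.0 / p)

def p_mean_gradient(s, p):
    """Analytical derivative of the p-mean with respect to each entry of s."""
    pm = p_mean(s, p)
    return [(x / pm) ** (p - 1) / len(s) for x in s]

s, p, h = [0.3, 0.6, 0.9], 8, 1e-6
grad = p_mean_gradient(s, p)

# Central finite differences as an independent check
fd = []
for i in range(len(s)):
    s_plus = list(s)
    s_minus = list(s)
    s_plus[i] += h
    s_minus[i] -= h
    fd.append((p_mean(s_plus, p) - p_mean(s_minus, p)) / (2.0 * h))
```

Even for this moderate exponent, the derivative with respect to the largest entry dominates the others, which anticipates the loss of sensitivity information discussed in Section 4.1.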

5 Numerical examples and discussion

The problems addressed here were introduced in Section 2. They are implemented in the PolyTop code (Talischi et al. 2012), together with the proposed developments related to the maximum size constraint. A sample MATLAB code that includes the formulation of the constraint and its sensitivities is attached in the Appendix. This code is intended for integration with PolyTop and as supporting material for the theoretical details discussed in this paper.

In this section, the reported objective values Obj are normalized with respect to the solution that does not consider the maximum size constraint. In addition, a measure of discreteness Mnd (Sigmund 2007) is also provided for each result. The measure is defined as follows:
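For reference, the measure of non-discreteness introduced by Sigmund (2007) is usually written as \(M_{nd} = \frac{100\%}{N}\sum_{e} 4\bar{\rho}_e(1-\bar{\rho}_e)\). The sketch below assumes this form and averages over all the densities supplied; which set of elements enters the sum in this paper is an assumption, and the function name is hypothetical.

```python
def measure_of_discreteness(rho_bar):
    """Non-discreteness in percent (assumed form): 0 for a black and white design, 100 for uniform gray."""
    return 100.0 * sum(4.0 * r * (1.0 - r) for r in rho_bar) / len(rho_bar)

mnd_discrete = measure_of_discreteness([0.0, 1.0, 1.0, 0.0])
mnd_gray = measure_of_discreteness([0.5, 0.5, 0.5, 0.5])
```

A fully discrete density field gives 0%, and a field of uniform intermediate density 0.5 gives 100%.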

When two topologies are compared, a topology equality index is provided which is defined in (24), where VTot is the total volume of the design domain. \(\bar {\boldsymbol {\rho }}^{(a)}\) and \(\bar {\boldsymbol {\rho }}^{(b)}\) are the two density maps being compared. For two identical maps Teq = 100, and for two totally different maps, Teq = 0.

5.1 Comparison of test regions

The three optimization problems introduced in Section 2 are solved using the circular and annular test regions. For the MBB beam, V∗ = 40%, dfil = 0.08L, and dmax = 0.06L. For the force inverter, V∗ = 30%, dfil = 0.03L, and dmax = 0.025L. For the heat sink, V∗ = 30%, dfil = 0.025L, and dmax = 0.031L. The maximum size constraint is formulated using the p-mean function with p = 200. Table 1 gathers the results.

Teq, reported in parentheses, shows that designs obtained with different test regions share more than 76% of the topology. The main difference between them is that the circular region introduces more hollow circles into the topology. These are highlighted with red lines in Table 1. The annular region introduces more channel-shaped cavities than circular ones. In addition, circular cavities in the designs obtained with the annular region are larger in diameter. These slight changes in the topology reduce the complexity of the component, which improves manufacturability. We observed a similar but less noticeable effect of the annular region with the inverse projection proposed by Almeida et al. (2009), i.e., when the weights \(l_{e,i}\) decrease as they approach the center of the circular test region.

The annular test region improved structural performance in the MBB beam, but in the other test cases it was detrimental to the mechanical performance. However, the objective functions were affected by less than 0.7%, which can be considered negligible compared to the topological change. Therefore, changing the test region from Ω(c) to Ω(r) reduces the number of cavities in the design, having a greater impact on the topology than on the structural performance.

Another advantage of the annular region is that it alleviates the memory load when dealing with large-scale topology optimization. There, maximum size neighborhoods easily come to contain thousands of elements, so removing the internal zone has a significant impact on the memory required to store the weights \(l_{e,i}\) in the DII matrix.

5.2 Mesh dependency

In this example, the compliance minimization and the force inverter problems are solved using different discretizations. For the MBB beam, V∗ = 40%, dmax = 0.06L, dmin = 0.04L, and dfil = 0.08L. For the force inverter, V∗ = 30%, dmax = 0.04L, dmin = 0.02L, and dfil = 0.04L. For both problems, the annular test region Ω(r) is used to compute the local maximum size constraint. Solutions using p-mean and p-norm are summarized in Tables 2 and 3, respectively. Designs for two different p values are shown in each Table. The number of elements N is found on top of each solution, next to the indicator Teq that compares solutions obtained with different p values but same aggregation function. Comments about the essential differences between the chosen aggregation functions are listed below.

p-mean promotes mesh independence: When comparing topologies obtained with different discretizations but same p, it can be seen that the position and length of the larger bars are practically the same when using p-mean. For instance, taking into account the bar labelling shown in the MBB beam design with p-mean, p = 100 and N = 7500, it can be seen that bars 1 to 8 are easily recognizable in all discretizations. In the force inverter, the number of bars that compose the right diagonal structure is 3 in all discretizations. The main difference is in the central bar which tends to shrink as the number of elements in the discretization increases. However, in the designs obtained with p-norm, it is difficult to recognize a common topology. The internal number of bars in the MBB beam is different in all topologies, and in the force inverter, the right diagonal structure does not have the same number of bars in all discretizations.

Figures 10 and 11 were obtained from the MBB beam designs shown in Table 2, using p-mean with p = 100. These figures display the constraint information at two different iterations, an early one and the last one. It can be seen that the local formulation of the maximum size constraint yields nearly the same data distribution, regardless of the discretization. It is precisely this property of the maximum size constraint that allows p-mean to promote mesh independence because, as discussed in Section 4.1, for the same data distribution, the amount of data does not affect the p-mean function. Since the histograms of different discretizations are not exactly the same, small differences between aggregation curves are expected. This probably explains the subtle differences between the topologies obtained with p-mean, for instance, the absence of bar 7 in the MBB beam design obtained with p-mean, p = 300 and N = 120000.

p-mean underestimates: Because p-mean underestimates the most critical restriction, it introduces a lower penalization in the design. This is why the designs obtained with p-mean have a better structural performance than those obtained with p-norm. Increasing p when using p-mean increases the penalization and reduces structural performance, as can be seen in the objective values in Table 2 for designs obtained with different p. Although p-mean with p = 100 underestimates a significant fraction of critical restrictions, as can be seen in Fig. 11, it also divides the bulky material. There, the violated restrictions are mainly found in the union of bars, as can be seen in Fig. 11a. This is interesting since the restriction limits the maximum size of the bars but allows a rigid bond between them.

p-norm overestimates: Overestimating the local constraint (7) is equivalent to increasing ε. For this reason, p-norm places more voids in the test region Ω, resulting in designs with larger cavities and a greater number of thinner structural elements. More partitioned material increases the perimeter of the design, which is why most of the results using p-norm have a greater Mnd.

p-mean is less parameter dependent: The disadvantage of the aggregation strategy is that it introduces additional parameters into the optimization problem, which must be tuned by the user. In the context of maximum size constraints, we have observed that when p-mean is used, the parameters that most influence the final topology are the move limits ml and the chosen continuation procedure. Compared to these, the value of p has a smaller effect. As can be seen in Table 2, the results obtained with different p are very similar and share about 80% of the topology. This is consistent with Section 4.1, which shows that the p-mean function is less sensitive to p than the p-norm.

Minimum length scale: As mentioned in Section 2, the adopted projection strategy does not guarantee complete control of the minimum size, i.e., local mesh convergence is not reached, especially in the areas where bulky material is divided by a thin layer composed of void and gray elements. As can be seen in Tables 2 and 3, small hinges are introduced as the number of elements in the discretization increases. This undesirable feature could be avoided by adding the geometric constraints proposed by Zhou et al. (2015) to impose the minimum size of the solid and void phases, or, as shown in the following example, by using the robust design approach (Wang et al. 2011).
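The under- and overestimation described in the comments above can be checked numerically. The following is a minimal Python sketch, assuming the standard definitions of the p-norm, (Σ g_i^p)^(1/p), and of the p-mean, ((1/N) Σ g_i^p)^(1/p), for positive values g_i. The function names and the sample values are hypothetical and are not taken from the paper or its appendix.

```python
def p_norm(values, p):
    """p-norm of positive values: (sum_i g_i^p)^(1/p)."""
    m = max(values)
    # Factor out the maximum so that a large p cannot overflow.
    return m * sum((g / m) ** p for g in values) ** (1.0 / p)

def p_mean(values, p):
    """p-mean of positive values: ((1/N) * sum_i g_i^p)^(1/p)."""
    m = max(values)
    return m * (sum((g / m) ** p for g in values) / len(values)) ** (1.0 / p)

# Hypothetical constraint values (not data from the paper).
p = 100
coarse = [0.20, 0.50, 0.90, 1.00]
fine = coarse * 4  # same distribution, four times as many values

pm_coarse, pm_fine = p_mean(coarse, p), p_mean(fine, p)
pn_coarse, pn_fine = p_norm(coarse, p), p_norm(fine, p)
```

Repeating the same values four times mimics a finer discretization with the same data distribution: the p-mean is unchanged, whereas the p-norm grows by the factor 4^(1/p). In both cases the p-mean stays below the maximum value and the p-norm stays above it.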

5.3 Maximum and minimum length scale controls

This example analyzes the performance of the maximum size constraint on a problem with minimum size control. The three problems are solved using the robust design approach. To this end, three designs are obtained in the projection stage, named eroded \(\bar {\boldsymbol {\rho }}_{\text {ero}}\), intermediate \(\bar {\boldsymbol {\rho }}_{\text {int}}\), and dilated \(\bar {\boldsymbol {\rho }}_{\text {dil}}\). The objective function considers the design that offers the worst performance (for further details see Wang et al. 2011). The volume constraint is evaluated on the dilated design only, since the projection strategy adds more material in \(\bar {\boldsymbol {\rho }}_{\text {dil}}\) than in the other two fields. Given that \(\bar {\boldsymbol {\rho }}_{\text {int}}\) is the design intended for manufacturing, the upper bound of the volume constraint \(V^{*}_{\text {dil}}\) is updated according to the desired volume constraint \(V^{*}_{\text {int}}\) of the intermediate design. For the latter, we use the strategy adopted by Amir and Lazarov (2018). The maximum size is imposed on the dilated design only, i.e., \(G_{\text {ms}}(\bar {\boldsymbol {\rho }}_{\text {dil}})\). For the sake of brevity, the effect on the length scale of restricting the dilated field is omitted.

In this example, we use μero = 0.75, μint = 0.50 and μdil = 0.25 to obtain \(\bar {\boldsymbol {\rho }}_{\text {ero}}\), \(\bar {\boldsymbol {\rho }}_{\text {int}}\), and \(\bar {\boldsymbol {\rho }}_{\text {dil}}\), respectively. Here, unlike in the previous examples, the length scale varies linearly within the design domain. This highlights the fact that it is not necessary to introduce extra constraints to impose, simultaneously, different maximum and minimum sizes. This task just requires assembling the \(\mathbb {\mathrm {D_{I}}}\) and \(\mathbb {\mathrm {D_{II}}}\) matrices with the desired neighborhoods. The minimum and maximum sizes, dmin and dmax, are given next to each solution in Fig. 12. Since the thresholds μero and μdil are symmetric with respect to μint, the theoretical minimum sizes of the solid and void phases are equal. The p-mean function with p = 300 is used to aggregate the constraints, and the remaining optimization parameters are kept as in the previous examples.
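The role of the three thresholds can be sketched with the smoothed Heaviside projection commonly used in the robust design approach (Wang et al. 2011). The following minimal Python sketch assumes that this is the projection referred to here; the function names, the steepness value `beta = 8` and the sample densities are hypothetical.

```python
import math

def heaviside_projection(rho_filtered, mu, beta):
    """Smoothed Heaviside projection with threshold mu and steepness beta."""
    num = math.tanh(beta * mu) + math.tanh(beta * (rho_filtered - mu))
    den = math.tanh(beta * mu) + math.tanh(beta * (1.0 - mu))
    return num / den

def robust_fields(rho_filtered, beta, mu_ero=0.75, mu_int=0.50, mu_dil=0.25):
    """Return the (eroded, intermediate, dilated) projections of one density."""
    return tuple(heaviside_projection(rho_filtered, mu, beta)
                 for mu in (mu_ero, mu_int, mu_dil))

beta = 8.0  # hypothetical steepness, not a value from the paper
ero, inter, dil = robust_fields(0.5, beta)
```

For a filtered density strictly between 0 and 1, the eroded value is the smallest and the dilated value the largest, which is why the dilated field carries the most material. With thresholds placed symmetrically about 0.5, the projection of ρ with threshold μ and the projection of 1 − ρ with threshold 1 − μ add up to one, which reflects the equal treatment of the solid and void phases.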

It can be seen in the MBB beam that two portions of material are disconnected from the structure. These are indicated by two arrows in Fig. 12a. We have observed that this issue can be avoided by increasing the total number of iterations, or by making the move limit ml less conservative.

As expected, imposing the minimum size makes it possible to avoid the small hinges. For this reason, compared to the previous examples, designs obtained with the robust design approach have approximately 1.5% fewer gray elements, which helps improve the manufacturability of the optimized design.

Unlike the examples in previous sections, the designs in Fig. 12 have a considerably lower performance than the reference solution. This is associated with the additional penalization introduced by the minimum size control, which avoids gray elements and increases the separation distance between the bars.

It is worth mentioning that, since the work of Yan et al. (2018), it is known that needle-like structures in thermal compliance offer a better performance than tree-like structures. This was also observed in the context of maximum size by Carstensen and Guest (2018). However, in the thermal compliance designs shown in this work, the maximum size restriction did not improve the performance of the component despite promoting needle-like structures, as shown in Fig. 12b. The reason is that a large portion of the material is removed from the area near the heat sink, interrupting the heat conduction towards the cold zone.

5.4 3D cantilever beam

The MATLAB code in the Appendix is transcribed into the free access code TopOpt (Aage et al. 2015), to solve the 3D cantilever beam for compliance minimization with maximum size constraints. The design domain is shown in Fig. 13a and the parameters are in the notes of Table 4. Most of these are defined by default in the TopOpt code. The maximum size constraint is formulated with the annular test region, which in 3D takes the form of a spherical shell of thickness (dmax − dmin)/2. The p-mean function is used to aggregate the local constraints with p = 300.

Unlike the 2D case, the treatment of the filter is performed numerically, since an extension of the design domain is computationally expensive in large-scale topology optimization. With a regular mesh, it is possible to redefine the filter and the maximum size matrices as:

where w and l are matrices containing the weights. These are defined in (4) and (11), respectively. Then, the local maximum size constraint considering a numerical extension of the design domain is computed as:

where 1 is an all-ones array and \(c_{g}\) contains the fraction of each test region that remains outside the design domain. For instance, the test region of an element located at the edge of the design domain (see Fig. 13a) has \(c_{g,i} = 0.75\), which represents the portion shown in Fig. 13c.
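The fraction of a test region lying outside the design domain can be estimated by direct counting on a regular mesh. The following is a minimal Python sketch, assuming unit cubic elements, uniform weights and a spherical (or spherical-shell) test region; the function name `outside_fraction`, the mesh size and the radius are hypothetical and do not reproduce the weights of (11).

```python
def outside_fraction(center, shape, r_out, r_in=0.0):
    """Fraction of the test region of element `center` that falls outside
    a box-shaped mesh with `shape` = (nx, ny, nz) unit cubic elements."""
    cx, cy, cz = center
    nx, ny, nz = shape
    reach = int(r_out)
    total = 0
    outside = 0
    for dx in range(-reach, reach + 1):
        for dy in range(-reach, reach + 1):
            for dz in range(-reach, reach + 1):
                d2 = dx * dx + dy * dy + dz * dz
                if d2 > r_out * r_out or d2 < r_in * r_in:
                    continue  # not part of the test region
                total += 1
                x, y, z = cx + dx, cy + dy, cz + dz
                if not (0 <= x < nx and 0 <= y < ny and 0 <= z < nz):
                    outside += 1
    return outside / total

# Hypothetical mesh and radius (in element lengths).
mesh = (60, 60, 100)
frac_edge = outside_fraction((0, 0, 50), mesh, 20.0)        # element on an edge
frac_interior = outside_fraction((30, 30, 50), mesh, 20.0)  # element far from the boundary
```

For an element on an edge of the box, roughly three quarters of the test region lies outside the mesh. The counted value is slightly below 0.75 because the element itself and its in-plane neighbors belong to the domain, and it approaches 0.75 as the test region grows relative to the element size.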

Table 4 summarizes the results. The first column shows the reference solution and the second column shows the solution with maximum size control. It can be observed that in this example, unlike the 2D case, the constraint promotes plate-like structures rather than bar-like structures. Where plates bond, a long, narrow, closed circular cavity is present, which hinders manufacturing if, for example, additive manufacturing technologies are considered. This suggests that the maximum size constraint may demand other methods to reduce the geometric complexity of the design.

In the 3D example, the computation time is increased by 7%, which can be considered negligible. However, the additional computation time and the memory required to store the DII matrix could be considerable if larger test regions Ω are used.

6 Conclusion

This article presents the aggregation of maximum size constraints. In particular, the formulation used is the one that limits the amount of material within a test region of each element in the design domain. A detailed analysis of this constraint and a comparison between the studied aggregation functions lead to the following conclusions:

For large p-exponents, the sensitivity information of the aggregation function resembles that of the max(⋅) function, for which only one value of the sensitivities is different from zero. In this situation, oscillations are expected since few local constraints are being considered by the optimizer. Here, more conservative updating strategies are necessary to guarantee the convergence of the problem.

The aggregation of the maximum size constraints can be eased by increasing the amount of void within the test regions, since smaller p-exponents can be used to catch the critical constraints. However, this strategy limits the minimum size of the solid phase because of a contradiction between the smoothed Heaviside projection and the maximum size constraint.

Some aggregation functions, such as p-norm, are sensitive to the amount of aggregated data. Therefore, they are sensitive to different discretizations of the same optimization problem.

The aggregation functions are sensitive to the data distribution. This suggests that it is advisable to check the distribution of the constraints for a proper selection of the aggregation function.
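The first conclusion, on sensitivities for large p-exponents, can be illustrated with a generic p-mean of positive values g_i, whose derivative is dPM/dg_i = (1/N)(g_i/PM)^(p−1). The following is a minimal Python sketch under that assumption; it does not reproduce expressions (17) and (21) of the paper, and the function names and sample values are hypothetical.

```python
def p_mean(values, p):
    """p-mean of positive values: ((1/N) * sum_i g_i^p)^(1/p)."""
    m = max(values)
    return m * (sum((g / m) ** p for g in values) / len(values)) ** (1.0 / p)

def p_mean_gradient(values, p):
    """Derivative of the p-mean with respect to each value g_i."""
    n = len(values)
    m = max(values)
    scaled = [g / m for g in values]
    s = sum(x ** p for x in scaled) / n  # equals (PM / m)^p
    # (g_i / PM)^(p-1) = scaled_i^(p-1) * s^((1-p)/p)
    return [x ** (p - 1) * s ** ((1.0 - p) / p) / n for x in scaled]

def share_of_largest(values, p):
    """Fraction of the total sensitivity carried by the largest value."""
    grad = p_mean_gradient(values, p)
    return max(grad) / sum(grad)

# Hypothetical constraint values (not data from the paper).
g = [0.20, 0.50, 0.90, 1.00]
share_small_p = share_of_largest(g, 4)
share_large_p = share_of_largest(g, 300)
```

With a small exponent the sensitivity is spread over several values, whereas with p = 300 almost all of it is carried by the largest one, which is the max-like behavior that calls for conservative move limits.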

In addition, it is observed that by removing the inner zone of the circular test region, fewer holes are introduced in the topology. This can help improve the manufacturability of maximum size–constrained components.

7 Replication of results

The attached code can be easily integrated into the PolyTop MATLAB code (Talischi et al. 2012). To facilitate its implementation and make it easy to read, some strategies have been simplified. For example, the PolyTop code by default does not include any treatment for the filter with respect to the boundaries, and the smoothed Heaviside projection is not threshold commanded. By default, the compliance minimization problem subject to volume and maximum size constraints is solved, and the design is discretized using polygonal elements.

Some indications are given below to ease the replication of results.

The proposed strategy: The attached code includes by default the proposed aggregation strategy that uses (17) as QoI and (21) to obtain sensitivities. A large p exponent is set in line 21; therefore, a small move limit ml must be used.

Infill structures: As explained in Section 4.1, the maximum size constraint is related to the method that produces infill structures. To obtain a result similar to Fig. 8a, the attached code must be changed as described in lines 20, 21, 22, and 39.

Comparison of test regions: By default, the code in the Appendix assembles the DII matrix using the annular test region, but it is possible to recover the circular one by commenting out line 40.

p-mean and p-norm: By default the attached code uses p-mean as aggregation function, but it is possible to get p-norm simply by defining N = 1 in line 23.

References

Aage N, Andreassen E, Lazarov BS (2015) Topology optimization using petsc: an easy-to-use, fully parallel, open source topology optimization framework. Struct Multidiscip Optim 51(3):565–572

Almeida SRMd, Paulino GH, Silva ECN (2009) A simple and effective inverse projection scheme for void distribution control in topology optimization. Struct Multidiscip Optim 39(4):359–371

Amir O, Lazarov BS (2018) Achieving stress-constrained topological design via length scale control. Struct Multidiscip Optim 58(5):2053–2071

Andreassen E, Clausen A, Schevenels M, Lazarov BS, Sigmund O (2011) Efficient topology optimization in matlab using 88 lines of code. Struct Multidiscip Optim 43(1):1–16

Bendsøe MP, Kikuchi N (1988) Generating optimal topologies in structural design using a homogenization method. Comput Methods Appl Mech Eng 71(2):197–224

Carstensen JV, Guest JK (2018) Projection-based two-phase minimum and maximum length scale control in topology optimization. Struct Multidiscip Optim 58(5):1845–1860

Deaton JD, Grandhi RV (2014) A survey of structural and multidisciplinary continuum topology optimization: post 2000. Struct Multidiscip Optim 49(1):1–38

Duysinx P, Sigmund O (1998) New developments in handling stress constraints in optimal material distribution. In: 7th AIAA/USAF/NASA/ISSMO symposium on multidisciplinary analysis and optimization, p 4906

Guest J (2009a) Imposing maximum length scale in topology optimization. Struct Multidiscip Optim 37:463–473

Guest JK (2009b) Topology optimization with multiple phase projection. Comput Methods Appl Mech Eng 199(1–4):123–135

Guest J, Prévost J, Belytschko T (2004) Achieving minimum length scale in topology optimization using nodal design variables and projection functions. Int J Numer Methods Eng 61:238–254

Jansen M, Lombaert G, Schevenels M, Sigmund O (2014) Topology optimization of fail-safe structures using a simplified local damage model. Struct Multidiscip Optim 49:657–666

Lazarov BS, Wang F (2017) Maximum length scale in density based topology optimization. Comput Methods Appl Mech Eng 318:826–844

Lazarov B, Wang F, Sigmund O (2016) Length scale and manufacturability in density-based topology optimization. Arch Appl Mech 86:189–218

París J, Navarrina F, Colominas I, Casteleiro M (2010) Block aggregation of stress constraints in topology optimization of structures. Adv Eng Softw 41(3):433–441

Qian X, Sigmund O (2013) Topological design of electromechanical actuators with robustness toward over-and under-etching. Comput Methods Appl Mech Eng 253:237–251

Rojas-Labanda S, Stolpe M (2015) Automatic penalty continuation in structural topology optimization. Struct Multidiscip Optim 52(6):1205–1221

Sigmund O (2001) A 99 line topology optimization code written in matlab. Struct Multidiscip Optim 21(2):120–127

Sigmund O (2007) Morphology-based black and white filters for topology optimization. Struct Multidiscip Optim 33(4):401–424

Sigmund O, Maute K (2013) Topology optimization approaches. Struct Multidiscip Optim 48(6):1031–1055

Svanberg K (1987) The method of moving asymptotes—a new method for structural optimization. Int J Numer Methods Eng 24(2):359–373

Talischi C, Paulino GH, Pereira A, Menezes IF (2012) Polytop: a matlab implementation of a general topology optimization framework using unstructured polygonal finite element meshes. Struct Multidiscip Optim 45(3):329–357

Thompson MK, Moroni G, Vaneker T, Fadel G, Campbell RI, Gibson I, Bernard A, Schulz J, Graf P, Ahuja B et al (2016) Design for additive manufacturing: Trends, opportunities, considerations, and constraints. CIRP Ann-Manuf Technol 65(2):737–760

Verbart A, Langelaar M, Van Keulen F (2017) A unified aggregation and relaxation approach for stress-constrained topology optimization. Struct Multidiscip Optim 55(2):663–679

Wang F, Lazarov BS, Sigmund O (2011) On projection methods, convergence and robust formulations in topology optimization. Struct Multidiscip Optim 43(6):767–784

Wu J, Clausen A, Sigmund O (2017) Minimum compliance topology optimization of shell–infill composites for additive manufacturing. Comput Methods Appl Mech Eng 326:358–375

Wu J, Aage N, Westermann R, Sigmund O (2018) Infill optimization for additive manufacturing—approaching bone-like porous structures. IEEE Trans Vis Comput Graph 24(2):1127–1140

Yan S, Wang F, Sigmund O (2018) On the non-optimality of tree structures for heat conduction. Int J Heat Mass Transf 122:660–680

Yang R, Chen C (1996) Stress-based topology optimization. Struct Optim 12(2–3):98–105

Zhang W, Zhong W, Guo X (2014) An explicit length scale control approach in SIMP-based topology optimization. Comput Methods Appl Mech Eng 282:71–86

Zhou M, Lazarov BS, Wang F, Sigmund O (2015) Minimum length scale in topology optimization by geometric constraints. Comput Methods Appl Mech Eng 293:266–282

Funding

The work was supported by the project AERO+, funded by the Plan Marshall 4.0 and the Walloon Region of Belgium. Computational resources have been provided by the Consortium des Équipements de Calcul Intensif (CÉCI), funded by the Scientific Research Fund of Belgium (F.R.S.-FNRS).

Ethics declarations

Conflict of Interest

The authors declare that they have no conflict of interest.

Additional information

Responsible Editor: James K Guest

Appendix: MATLAB code of the maximum size constraint

Cite this article

Fernández, E., Collet, M., Alarcón, P. et al. An aggregation strategy of maximum size constraints in density-based topology optimization. Struct Multidisc Optim 60, 2113–2130 (2019). https://doi.org/10.1007/s00158-019-02313-8

DOI: https://doi.org/10.1007/s00158-019-02313-8