Abstract

We propose a new scenario penalization matheuristic for a stochastic scheduling problem based on both mathematical programming models and local search methods. The application considered is an NP-hard problem expressed as a risk minimization model involving quantiles related to value at risk, which is formulated as a non-linear binary optimization problem with linear constraints. The proposed matheuristic involves a parameterization of the objective function that is progressively modified to generate feasible solutions, which are improved by a local search procedure. This matheuristic is related to the ghost image process approach by Glover (Comput Oper Res 21(8):801–822, 1994), which is a highly general framework for heuristic search optimization. This approach won the first prize in the senior category of the EURO/ROADEF 2020 challenge. Experimental results are presented which demonstrate the effectiveness of our approach on large instances provided by the French electricity transmission network RTE.


1 Introduction

The practice of decision making under uncertainty frequently resorts to two-criteria mean-risk models (cf. Markowitz and Todd 2000), where the mean represents the expected outcome and the risk corresponds to a scalar measure of the variability of outcomes; the mean is maximized and the risk is minimized. The standard mean-variance model, called the Markowitz model, uses the variance as the risk measure in the mean-risk analysis. The mean-variance model under a set of linear constraints can be formulated as a quadratic programming problem (Markowitz 1952). Sharpe (1971) proposed a linear programming (LP) approximation to the mean-variance model for the general portfolio analysis problem. Several authors (cf. Ogryczak and Ruszczynski 2002; Ogryczak and Ruszczyński 2002) have pointed out that in general the mean-variance model is not consistent with stochastic dominance rules (Whitmore and Findlay 1978). To overcome this flaw of the mean-variance model, we consider a mean-risk model involving q-quantiles in the risk measure. Note that the value at risk (VAR) is defined as the maximum loss at a level q; consequently, VAR is a widely used quantile risk measure (cf. Jorion 1997, and references therein).

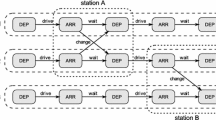

We consider the stochastic scheduling problem (SSP) proposed by Réseau de Transport d’Electricité, usually known as RTE. RTE is the electricity transmission system operator of France, responsible for the operation, maintenance and development of the French high-voltage transmission system, which, with approximately 100,000 km, is the largest in Europe. Maintenance policies have a major economic impact in many areas of industry such as the electricity sector (Froger et al. 2016), the manufacturing industry and civil engineering. The main goal is to build an optimal maintenance schedule to ensure the delivery and supply of electricity. This problem was the subject of the ROADEF/EURO challenge 2020 (ROADEF 2020).

The stochastic scheduling problem consists in determining the start time of maintenance activities (called interventions) in a high-voltage transmission network over a given time horizon. Each intervention needs a certain number of time units to be achieved without interruption that depends on its starting time. All interventions must be planned and finished before the end of the time horizon. A feasible plan must be consistent with all activity related restrictions such as resource constraints (e.g. each intervention consumes resources and the total amount of resources used at each time step is bounded from below and above) and exclusions between interventions (e.g. some interventions cannot take place at the same time). Given a set of scenarios, where the risk value of each intervention is known at each time step and for each scenario, the goal is to minimize a convex combination of the expectation and the quantile of the risk.

The stochastic scheduling problem can be formulated as a non-linear Mixed Integer Programming (MIP) model with a non-linear (generally non-convex) objective function and linear constraints. Note that the SSP optimization problem is similar to the mean-VAR portfolio problem. Benati and Rizzi (2007) showed that the mean-VAR portfolio problem is NP-hard and proposed an MIP formulation, solved with Cplex, for medium size instances. It is well known that in general the computational effort required by exact methods grows exponentially for large scale instances. These practical limitations have occasioned a considerable research effort focusing on approximation approaches such as heuristics, metaheuristics and matheuristics (cf. Hashimoto et al. 2011; Gavranović and Buljubašić 2016; Buljubašić et al. 2018; Hanafi and Todosijević 2017). Gouvine (2021) proposed a hybrid approach combining a branch and cut algorithm (Padberg and Rinaldi 1991) with a constraint generation method based on Benders decomposition (Rahmaniani et al. 2017). Cattaruzza et al. (2022) presented a finitely convergent adaptive scenario clustering algorithm that guarantees an optimal solution but is only useful for problems of small size. They also developed an overlapping alternating direction method (Glowinski and Marroco 1975; Gabay and Mercier 1976) that serves as a primal heuristic for quickly computing feasible solutions of good quality for problems in the size range examined. Zholobova et al. (2021) developed a hybrid approach combining the Covariance Matrix Adaptation Evolution Strategy (Hansen et al. 2003) and Variable Neighborhood Search (Hansen et al. 2017) metaheuristics.

In this paper, we introduce a new matheuristic for the SSP optimization problem, called Scenario Penalization Matheuristic (SPM). The proposed matheuristic involves a parameterization of the objective function that is progressively modified to generate feasible solutions, which are then improved by a local search procedure. The method is applicable to instances of practical size.

The SPM is based on the ghost image process (GIP) approach by Glover (1994), which is a highly general framework for heuristic search optimization. Note that GIP is a generalization of many relaxation methods (e.g. Lagrangian, surrogate and composite relaxations) and of the self-organizing neural networks of Kohonen (1988) as applied to optimization problems. GIP has been applied to some interesting optimization problems. For example, Woodruff (1995) developed a GIP application to the problem of computing the minimum covariance determinant estimators. With regard to the parameterized objective function, the GIP approach is related to the concept of slope scaling applied within the context of fixed-charge networks (see e.g. Kim and Pardalos 1999; Gendron et al. 2003; Crainic et al. 2004; Glover 2005; Gendron et al. 2018). The SPM approach won the first prize in the senior category of the EURO/ROADEF 2020 challenge. Experimental results are presented which demonstrate the effectiveness of our approach on large instances provided by RTE.

The remainder of this paper is organized as follows. A description of the stochastic scheduling problem is provided in Sect. 2, and the scenario penalization matheuristic developed for the stochastic scheduling problem is presented in Sect. 3. Computational results obtained on the available set of instances provided by RTE are reported in Sect. 4. Finally, Sect. 5 summarizes our conclusions.

2 Stochastic scheduling problem

In this section, we first describe the input parameters of the stochastic scheduling problem (SSP). We refer the reader to the challenge subject in ROADEF (2020) for a more thorough description of the maintenance of electricity transmission lines and the implications of the problem from business and environmental perspectives. Additional details of the global RTE strategy and risk management can be found in Crognier et al. (2021). Next we propose a mathematical programming formulation of this problem.

2.1 Input parameters of the problem

We consider a finite set of maintenance activities \({\mathcal {I}}\) (called \({\textit{interventions}}\) in the challenge application) to be scheduled over a discrete time horizon \({\mathcal {T}}\). Interventions are not equal in terms of duration or resource requirements. Because of days off (weekends...), the duration of a given intervention is not fixed in time and depends on when it starts. Therefore, \(\delta _{i,t} \in {\mathbb {N}}\) denotes the actual duration of intervention \(i \in {\mathcal {I}}\) if it starts at time \(t \in {\mathcal {T}}\).

To carry out the different interventions, a workforce is necessary, split into teams (or resources) of various sizes and specific skills. Each team has different specific skills and can potentially be required for any task. The available resources are always limited and vary over the time horizon. Let \({\mathcal {J}}\) denote the set of resources. The amount of resource \(j \in {\mathcal {J}}\) used at time \(t \in {\mathcal {T}}\) by intervention \(i \in {\mathcal {I}}\) starting at time \(t'\) is given by \(a_{i,t'}^{j,t}\). So for every resource \(j \in {\mathcal {J}}\) and every time step \(t \in {\mathcal {T}}\), the amount of resource consumed must be between the lower bound \(l_t^j\) and the upper bound \(u_t^j\).

When an intervention is being performed, the power lines involved must be disconnected, causing the electricity network to be weakened at this time. This implies a certain risk for RTE, which is highly linked to the grid operation: if another nearby site were to break down (due to extreme weather for example), the network may not be able to handle the electricity demand correctly. Even if such events have an extremely low probability of occurring, they must be taken into account in the schedule. In order to financially quantify these risks, RTE previously conducted simulations for various scenarios with different time steps. Let \({\mathcal {S}}_t\) be the set of scenarios at time \(t \in {\mathcal {T}}\) and \({\mathcal {S}} = \cup _{t \in {\mathcal {T}}}{\mathcal {S}}_t\) be the set of all scenarios. The risk value depends on the intervention concerned and on the time period, as it is often much less risky to perform interventions in summer (when there is less demand on the electricity network) rather than in winter. So the risk value (expressed in Euros in the challenge application) is denoted by \(risk_{i,t'}^{s,t} \in {\mathbb {R}}\) for time period \(t \in {\mathcal {T}}\), scenario \(s \in {\mathcal {S}}\) and intervention \(i \in {\mathcal {I}}\) when i starts at time step \(t' \in {\mathcal {T}}\). Further, some maintenance activities cannot be performed at the same time. This exclusion between interventions is given by a set \({\mathcal {E}}\) of triplets \((i, i', t)\) such that interventions i and \(i'\) cannot be both in process at time t.

Intervention preemption is not allowed, and each intervention must be completed by time \(|{\mathcal {T}}|\). To more formally derive the optimization models and their associated solutions, we require some additional notation. Let \({\mathcal {T}}(i) = \{t \in {\mathcal {T}}: t + \delta _{i,t} \le |{\mathcal {T}}|+ 1\}\) denote the set of feasible starting times of intervention \(i \in {\mathcal {I}}\), and let \({\mathcal {T}}^+(i,t) = \{t' \in {\mathcal {T}}: t \le t' < t + \delta _{i,t}\}\) denote the set of times for which the intervention i is in process if it starts at time t. We further let \({\mathcal {T}}^-(i,t) = \{t' \in {\mathcal {T}}: t' \le t < t' + \delta _{i,t'}\} = \{t' \in {\mathcal {T}}: t \in {\mathcal {T}}^+(i,t') \}\) denote the set of starting times of intervention i for which the intervention is in process at time t, and let \({\mathcal {I}}(t) \subseteq {\mathcal {I}}\) denote the set of interventions in process at time \(t \in {\mathcal {T}}\). For any real value \(v \in {\mathbb {R}}\), let \(\lceil v\rceil = \text {min}\{v'\in {\mathbb {N}}:v' \ge v\}\).
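The three time sets above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: time steps are numbered \(1,\ldots ,|{\mathcal {T}}|\), durations are stored in a dictionary `delta` keyed by `(i, t)`, and the function names are hypothetical rather than taken from the challenge code.

```python
from typing import Dict, List, Tuple

Durations = Dict[Tuple[str, int], int]  # assumed layout: (intervention, start) -> duration


def feasible_starts(delta: Durations, i: str, horizon: int) -> List[int]:
    """T(i): start times t with t + delta[i, t] <= |T| + 1."""
    return [t for t in range(1, horizon + 1) if t + delta[i, t] <= horizon + 1]


def in_process_times(delta: Durations, i: str, t: int, horizon: int) -> List[int]:
    """T^+(i, t): time steps at which i is in process if it starts at t."""
    return list(range(t, min(t + delta[i, t], horizon + 1)))


def covering_starts(delta: Durations, i: str, t: int) -> List[int]:
    """T^-(i, t): start times t' <= t for which i is still in process at t."""
    return [tp for tp in range(1, t + 1) if t < tp + delta[i, tp]]
```

For an intervention of duration 2 on a three-step horizon, `feasible_starts` returns `[1, 2]`, since a start at time 3 would finish after the horizon.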

2.2 Mathematical programming formulation

A schedule (or solution) consists of a list of starting times of interventions. Hence a solution will be represented by an integer vector \(\sigma \in {\mathbb {N}}^{|{\mathcal {I}}|}\) where each component \(\sigma _i\) represents the starting time of intervention \(i \in {\mathcal {I}}\). An alternative is to define a solution by a binary matrix \(x \in \{0,1\}^{|{\mathcal {I}}|\times |{\mathcal {T}}|}\) such that

\[ x_{i,t} = \begin{cases} 1 & \text {if intervention } i \text { starts at time } t \text { (i.e. } \sigma _i = t\text {)}, \\ 0 & \text {otherwise,} \end{cases} \]

for \(i \in {\mathcal {I}}\) and \(t \in {\mathcal {T}}\). The compact integer representation \(\sigma \) of a feasible schedule will be used in heuristic procedures and the binary representation x will be exploited in the mathematical programming formulation.

2.2.1 Non linear objective function

The evaluation score of a feasible schedule depends only on the distribution of risks. Two criteria are taken into account: the average and the excess of the risk values. The excess is defined from the quantile value of the risk distribution.

More formally, given a feasible schedule represented by the integer vector \(\sigma \) or the binary matrix x, the mean risk \({\textit{Mean}}(x)={\textit{Mean}}(\sigma )\) and the expected excess risk \({\textit{Excess}}(x)={\textit{Excess}}(\sigma )\) are evaluated as follows.

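Mean cost The cumulative planning risk at \(t \in {\mathcal {T}}\) for a scenario \(s \in {\mathcal {S}}_t\), denoted by \({\textit{risk}}^{s,t}(x)\) or \({\textit{risk}}^{s,t}(\sigma )\), is the sum of risks in scenario s over the in-process interventions at t:

\[ {\textit{risk}}^{s,t}(x) = \sum _{i \in {\mathcal {I}}} \sum _{t' \in {\mathcal {T}}^-(i,t)} {\textit{risk}}_{i,t'}^{s,t} \, x_{i,t'}. \]

Then the overall mean planning risk is

\[ {\textit{Mean}}(x) = \frac{1}{|{\mathcal {T}} |} \sum _{t \in {\mathcal {T}}} \frac{1}{|{\mathcal {S}}_t |} \sum _{s \in {\mathcal {S}}_t} {\textit{risk}}^{s,t}(x). \]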

Excess cost The planning quality also takes into account the cost variability. Computing the mean risk over all scenarios induces a loss of information, and critical scenarios inducing extremely high costs may not be adequately captured by the mean. To guard against such outcomes, a second metric quantifies the variability across scenarios. The expected excess indicator relies on the \(\tau \) quantile values where \(\tau \in ]0,1]\). For every time period t, we define the quantile value \(Q_{\tau }^t\) as follows:

\[ Q_{\tau }^t(x) = \text {min}\left\{ q \in {\mathbb {R}}: \left| \{ s \in {\mathcal {S}}_t: {\textit{risk}}^{s,t}(x) \le q \} \right| \ge \tau \times |{\mathcal {S}}_t |\right\} . \]

Note that if we sort the risk values \({\textit{risk}}^{s,t}(x)\), \(s \in {\mathcal {S}}_t\), in increasing order, then \(Q_{\tau }^t(x)\) is equal to the value at position \(\lceil \tau \times |{\mathcal {S}}_t |\rceil \) in this sorted list. The expected excess of a planning is:

\[ {\textit{Excess}}(x) = \frac{1}{|{\mathcal {T}} |} \sum _{t \in {\mathcal {T}}} \text {max}\left( 0, \; Q_{\tau }^t(x) - \frac{1}{|{\mathcal {S}}_t |} \sum _{s \in {\mathcal {S}}_t} {\textit{risk}}^{s,t}(x) \right) . \]

The two metrics \({\textit{Mean}}(x)\) and \({\textit{Excess}}(x)\) described above cannot necessarily be compared directly, as they depend on risk aversion (or risk policies). That is why a scaling factor \(\alpha \in [0, 1]\) is needed. Then the final score of a planning is:

\[ {\textit{Objective}}(x) = \alpha \times {\textit{Mean}}(x) + (1 - \alpha ) \times {\textit{Excess}}(x). \]

In general, the quantile function \(Q_{\tau }^t(x)\) is non-convex as shown by Gouvine (2021). Hence, the objective function \({\textit{Objective}}(x)\) is non-convex. Moreover, the objective function \({\textit{Obj}}(r)\) of the vector of decision variables risk r associated with a solution x (i.e. \(r=({\textit{risk}}^{s,t}(x))_{t \in {\mathcal {T}}, s \in {\mathcal {S}}_t}\)) is a non-convex function as can be observed from Table 1.
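The score defined in this subsection can be evaluated with a short Python sketch. It assumes that the cumulative risks \({\textit{risk}}^{s,t}(x)\) have already been computed and stored per time step in a dictionary (an illustrative layout, not the challenge file format), and that the excess at each time step is \(\text {max}(0, Q_{\tau }^t(x) - \text {mean})\), as used in Sect. 3. The small tolerance in the ceiling is an implementation choice to guard against floating-point noise.

```python
import math
from typing import Dict, List


def quantile(values: List[float], tau: float) -> float:
    """Value at 1-based position ceil(tau * n) of the sorted list."""
    ordered = sorted(values)
    # The small tolerance avoids ceil(7.000000000000001) == 8 style errors.
    position = math.ceil(tau * len(ordered) - 1e-9)
    return ordered[position - 1]


def objective(risk_by_time: Dict[int, List[float]], tau: float, alpha: float) -> float:
    """alpha * Mean + (1 - alpha) * Excess for precomputed scenario risks."""
    n_steps = len(risk_by_time)
    mean_total = 0.0
    excess_total = 0.0
    for risks in risk_by_time.values():
        mean_t = sum(risks) / len(risks)
        mean_total += mean_t
        excess_total += max(0.0, quantile(risks, tau) - mean_t)
    return alpha * mean_total / n_steps + (1 - alpha) * excess_total / n_steps
```

With seven scenarios and \(\tau = 0.7\), `quantile` picks the fifth smallest value, in agreement with the position rule \(\lceil \tau \times |{\mathcal {S}}_t |\rceil \).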

The goal is to find a feasible schedule which minimizes the objective function \({\textit{Objective}}(x)\). A schedule x is feasible if it satisfies all the linear constraints presented below.

2.2.2 Linear constraints

Schedule Interventions have to start at the beginning of a period. Moreover, as interventions require shutting down some lines of the electricity network, once an intervention starts, it cannot be interrupted. More precisely, if intervention \(i \in {\mathcal {I}}\) starts at time \(t \in {\mathcal {T}}\), then it must end at \(t + \delta _{i,t}\). All interventions must be executed and completed no later than the end of the horizon. If intervention \(i \in {\mathcal {I}}\) starts at time \(t \in {\mathcal {T}}\), then \(t + \delta _{i,t} \le |{\mathcal {T}} |+ 1\). Hence, the following multiple choice constraints must be satisfied:

\[ \sum _{t \in {\mathcal {T}}} x_{i,t} = 1, \quad \forall i \in {\mathcal {I}}. \qquad (1) \]

From the definition of this schedule problem, we have the reduction

\[ x_{i,t} = 0, \quad \forall i \in {\mathcal {I}}, \; t \in {\mathcal {T}} \setminus {\mathcal {T}}(i), \]

which can be incorporated in the preprocessing step.

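Resource limitation The resources needed cannot exceed the resource capacity but have to be at least equal to the minimum workload, and hence the resource constraints are:

\[ l_t^j \le \sum _{i \in {\mathcal {I}}} \sum _{t' \in {\mathcal {T}}^-(i,t)} a_{i,t'}^{j,t} \, x_{i,t'} \le u_t^j, \quad \forall j \in {\mathcal {J}}, \; t \in {\mathcal {T}}. \qquad (2) \]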

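Exclusion between interventions The exclusion constraints can formally be written as:

\[ i \in {\mathcal {I}}(t) \Rightarrow i' \notin {\mathcal {I}}(t), \quad \forall (i, i', t) \in {\mathcal {E}}. \]

This implication can be formulated using the binary variables \(x_{i,t}\) as follows:

\[ \sum _{t' \in {\mathcal {T}}^-(i,t)} x_{i,t'} + \sum _{t' \in {\mathcal {T}}^-(i',t)} x_{i',t'} \le 1, \quad \forall (i, i', t) \in {\mathcal {E}}. \qquad (3) \]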

2.3 MIP_full formulation

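Finally, the stochastic scheduling problem SSP can be expressed as a non-linear binary MIP model, denoted MIP_full, given by

\[ \texttt {MIP\_full}: \quad \text {min}\left\{ {\textit{Objective}}(x): x \text { satisfies } (1), (2), (3), \; x \in \{0,1\}^{|{\mathcal {I}}|\times |{\mathcal {T}}|} \right\} . \]

The quantile value \(Q_{\tau }^t(x)\) for each scenario s in time step t can be expressed by introducing a binary variable \(y_{s,t}\) which indicates whether \(Q_{\tau }^t(x)\) is greater than or equal to the total risk for scenario s at time step t, i.e.

\[ y_{s,t} = 1 \iff Q_{\tau }^t(x) \ge {\textit{risk}}^{s,t}(x), \quad \forall t \in {\mathcal {T}}, \; s \in {\mathcal {S}}_t, \qquad (4) \]

with additional linear constraints requiring that the number of \(y_{s,t}\) binary variables that take the value 1 must be greater than or equal to \(\lceil \tau \times |{\mathcal {S}}_t |\rceil \), i.e.

\[ \sum _{s \in {\mathcal {S}}_t} y_{s,t} \ge \lceil \tau \times |{\mathcal {S}}_t |\rceil , \quad \forall t \in {\mathcal {T}}. \qquad (5) \]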

The implicit definition (4) of the auxiliary binary variables y for the quantile can be formulated as quadratic constraints

Linear MIP formulations are proposed by Gouvine (2021) and Cattaruzza et al. (2022). To strengthen the linear relaxation of the quantile model, they generate a set of valid inequalities derived from the expression of the quantile function and linear programming duality theory. The presence of the binary variables x and y together with the \({\textit{max}}(0,\cdot )\) function, and the non-convexity of the quantile function \(Q_{\tau }^t(x)\), make the MIP_full model very difficult to solve optimally with state-of-the-art MIP solvers like Cplex and Gurobi, except for small size instances.

3 Scenario penalization matheuristic

Our new scenario penalization matheuristic (SPM) for solving the SSP optimization problem is based on four main steps. Each solution obtained throughout Step 1 and Step 2 of the SPM (Algorithm 1) is evaluated as a candidate for the best solution \(x^*\) currently found.

In the following, we give details of these steps as adapted to the present context.

3.1 Step 0: initial MIP_mean \((c^0)\) approximation generating initial solution \(x^0\)

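As noted above, the MIP_full model is too hard to solve for real world instances. Hence, the initial approximation we consider corresponds to the mean model ignoring the excess. Formally, let \(c^0_{i,t}\) denote the initial resulting mean risk if intervention \(i \in {\mathcal {I}}\) starts at time step \(t \in {\mathcal {T}}\), defined as follows

\[ c^0_{i,t} = \frac{1}{|{\mathcal {T}} |} \sum _{t' \in {\mathcal {T}}^+(i,t)} \frac{1}{|{\mathcal {S}}_{t'} |} \sum _{s \in {\mathcal {S}}_{t'}} {\textit{risk}}_{i,t}^{s,t'}. \qquad (6) \]

Then the initial linear approximation of the \(\texttt {MIP\_full}\) model without excess cost is given below.

\[ \texttt {MIP\_mean}(c^0): \quad \text {min}\left\{ \sum _{i \in {\mathcal {I}}} \sum _{t \in {\mathcal {T}}} c^0_{i,t} \, x_{i,t}: x \text { satisfies } (1), (2), (3), \; x \in \{0,1\}^{|{\mathcal {I}}|\times |{\mathcal {T}}|} \right\} . \]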

The computational time required to solve MIP_mean\((c^0)\) varies across the data sets: it is usually quite fast but can take up to a few minutes for the largest instances. Since MIP_mean\((c^0)\) and MIP_full share the same constraints, solving MIP_mean\((c^0)\) optimally or approximately with an MIP solver generates an initial feasible solution \(x^0\) for the MIP_full model (i.e. \(x^0\) corresponds to the first solution generated by the SPM algorithm).
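As a rough illustration of how one initial coefficient \(c^0_{i,t}\) is obtained, the following Python sketch averages the scenario risks of a single intervention and start time over each in-process time step and divides by the horizon length. The input layout (a dictionary from in-process time step to the list of scenario risks) and the function name are assumptions made for this example.

```python
from typing import Dict, List


def initial_coefficient(risk_by_time: Dict[int, List[float]], horizon: int) -> float:
    """Mean-risk coefficient of one (intervention, start time) pair.

    risk_by_time maps each in-process time step t' to the scenario risk
    values of the intervention at t' (assumed layout, for illustration).
    """
    per_step_means = (sum(values) / len(values) for values in risk_by_time.values())
    return sum(per_step_means) / horizon
```

For an intervention of duration 1 with scenario risks 10, 12, 8, 5, 12, 7 and 10 on a three-step horizon, the sketch returns \(64/21 \approx 3.048\), the value computed in the small example of Sect. 3.5.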

3.2 Step 3: scenario penalization heuristic updating the current MIP_mean(c) approximation

Our goal in this step is to modify the current objective coefficient matrix c of the MIP_mean(c) model, starting with \(c=c^0\), to make the resulting model closer to the original MIP_full model. To achieve this, let x be an optimal or best solution of the current MIP_mean(c) model and define the excess risk cost at time step \(t\in {\mathcal {T}}\) by:

\[ {\textit{Excess}}^t(x) = \text {max}\left( 0, \; Q_{\tau }^t(x) - \frac{1}{|{\mathcal {S}}_t |} \sum _{s \in {\mathcal {S}}_t} {\textit{risk}}^{s,t}(x) \right) . \]

Hence, \({\textit{Excess}}(x) = \frac{1}{|{\mathcal {T}} |} \sum _{t \in {\mathcal {T}}} {\textit{Excess}}^t(x)\). We first provide observations that describe the behavior of the function \({\textit{Excess}}^t(x)\). For any time step \(t \in {\mathcal {T}}\), we define the set of scenarios \(S^+_t(x) = \{ s \in {\mathcal {S}}_t: {\textit{risk}}^{s,t}(x) > Q_{\tau }^t(x)\}\).

It is obvious that this modification increases the mean value \(\frac{1}{|{\mathcal {S}}_t |} \sum _{s \in {\mathcal {S}}_t} {\textit{risk}}^{s,t}(x)\). Moreover, this modification involves only binary variables \(y_{s,t} = 0\) since \(S^+_t(x) = \{ s \in {\mathcal {S}}_t: y_{s,t} = 0\}\), and the constraints (5) imposed on the quantile remain satisfied. Consequently, the quantile value \(Q_{\tau }^t(x)\) is unchanged and the value \({\textit{Excess}}^t(x)\) will decrease after this change. Hence for any time step \(t\in {\mathcal {T}}\), increasing the risk value \({\textit{risk}}^{s,t}(x)\) for any scenario \(s \in S^+_t(x)\) decreases the value \({\textit{Excess}}^t(x)\).

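Let \({\textit{Risk}}_{{\textit{mean}}}^t(x)\) (resp. \({\textit{Risk}}_{{\textit{max}}}^t(x)\)) denote the mean (resp. max) risk at time step \(t\in {\mathcal {T}}\):

\[ {\textit{Risk}}_{{\textit{mean}}}^t(x) = \frac{1}{|{\mathcal {S}}_t |} \sum _{s \in {\mathcal {S}}_t} {\textit{risk}}^{s,t}(x), \qquad {\textit{Risk}}_{{\textit{max}}}^t(x) = \text {max}\{ {\textit{risk}}^{s,t}(x): s \in {\mathcal {S}}_t \}. \]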

Then we can state

Proposition 1

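For any \(t\in {\mathcal {T}}\) such that \(|{\mathcal {S}}_t |= \lceil \tau \times |{\mathcal {S}}_t |\rceil \), we have

\[ {\textit{Excess}}^t(x) = {\textit{Risk}}_{{\textit{max}}}^t(x) - {\textit{Risk}}_{{\textit{mean}}}^t(x). \qquad (10) \]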

Proof

If \(|{\mathcal {S}}_t |= \lceil \tau \times |{\mathcal {S}}_t |\rceil \) then from the \(\tau \) quantile constraint (5) we deduce that the indicator binary variables \(y_{s,t}\) for all \(s \in {\mathcal {S}}_t\) are set to 1. Consequently, from (4) we have \(Q_{\tau }^t(x) \ge {\textit{risk}}^{s,t}(x)\) for all \(s \in {\mathcal {S}}_t\), so \(Q_{\tau }^t(x)\) is at least the mean risk and the operator \(\text {max}(0,.)\) can be dropped from the expression to compute \({\textit{Excess}}^t(x)\), i.e. now we have

\[ {\textit{Excess}}^t(x) = Q_{\tau }^t(x) - {\textit{Risk}}_{{\textit{mean}}}^t(x). \]

Moreover, from the definition of the \(\tau \) quantile \(Q_{\tau }^t(x)\) in Sect. 2.2.1, in this case we have

\[ Q_{\tau }^t(x) = \text {max}\{ {\textit{risk}}^{s,t}(x): s \in {\mathcal {S}}_t \}. \]

Or equivalently

\[ Q_{\tau }^t(x) = {\textit{Risk}}_{{\textit{max}}}^t(x). \]

This completes the validation of Eq. (10). \(\square \)

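Next, observe that the objective coefficients \(c_{i, t}\) can be written:

\[ c_{i,t} = \frac{1}{|{\mathcal {T}} |} \sum _{t' \in {\mathcal {T}}^+(i,t)} \sum _{s \in {\mathcal {S}}_{t'}} \frac{1}{|{\mathcal {S}}_{t'} |} \, {\textit{risk}}_{i,t}^{s,t'}. \qquad (11) \]

We replace \(\frac{1}{|{\mathcal {S}}_{t'} |}\) values in formula (11) by non-constant values \(\mu _{s,t'}\), to yield the following formula for calculating \(c_{i,t}\) values:

\[ c_{i,t} = \frac{1}{|{\mathcal {T}} |} \sum _{t' \in {\mathcal {T}}^+(i,t)} \sum _{s \in {\mathcal {S}}_{t'}} \mu _{s,t'} \, {\textit{risk}}_{i,t}^{s,t'}. \qquad (12) \]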

The \(\mu _{s,t}\) values, for \(s \in {\mathcal {S}}\) and \(t \in {\mathcal {T}}\), are set to \(\frac{1}{|{\mathcal {S}}_{t} |}\) initially and penalizing scenario s at time step t will be done by increasing \(\mu _{s,t}\) value. Note that the sum of these values over all scenarios in the time step equals 1 initially (\(\sum _{s \in {\mathcal {S}}_{t}} \frac{1}{|{\mathcal {S}}_{t} |} = 1\)) and we will keep the sum fixed at 1 when doing the modifications. This condition is imposed because we want the sum of values/coefficients to be the same in each time step in order to not prioritize some time steps over others—only the importance of scenarios inside the time step will change.

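Formally, we calculate \(\mu _{s,t}\) values in the following way. Let \({\textit{Penalty}}_{s, t}\) be a current penalty for scenario s at time step t and let \({\textit{Freq}}_{s, t}\) denote how many times scenario s at time step t is penalized. Penalty and frequency values are initialized to 0 and they are updated in each iteration, i.e. after each call of MIP_mean(c), as follows. For each time step \(t \in {\mathcal {T}}\) with excess, i.e. for which \(Q_{\tau }^t(x) > {\textit{Risk}}_{{\textit{mean}}}^t(x)\), we update the penalties and frequencies for the \(|{\mathcal {S}}_t |- \lceil \tau \times |{\mathcal {S}}_t |\rceil \) scenarios with the highest \({\textit{risk}}^{s,t}(x)\) values (those are scenarios with risk values at least \(Q_{\tau }^t(x)\)):

\[ {\textit{Penalty}}_{s,t} \leftarrow {\textit{Penalty}}_{s,t} + \Delta _{{\textit{penalty}}}, \qquad {\textit{Freq}}_{s,t} \leftarrow {\textit{Freq}}_{s,t} + 1, \qquad (13) \]

with \(\Delta _{{\textit{penalty}}} = \frac{(Q_{\tau }^t(x) - {\textit{Risk}}_{{\textit{mean}}}^t(x))\times |{\mathcal {S}}_t |}{|{\mathcal {S}}_t |- \lceil \tau \times |{\mathcal {S}}_t |\rceil }\), as used in the example of Sect. 3.5.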

Then the \(\mu _{s,t'}\) values are calculated by setting:

where

We increase the penalties (in formula (13)) so that no excess appears in the solution obtained by MIP_Mean(c) (i.e. \(Q_{\tau }^t(x) = {\textit{Risk}}_{{\textit{mean}}}^t(x)\)). Note that if \(|S^+_t(x) |= 0\), no penalties are given to the scenario coefficients of this time step.
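The penalty and frequency update for a single time step can be sketched as follows. The increment \(\Delta _{{\textit{penalty}}} = \frac{(Q_{\tau }^t(x) - {\textit{Risk}}_{{\textit{mean}}}^t(x))\times |{\mathcal {S}}_t |}{|{\mathcal {S}}_t |- \lceil \tau \times |{\mathcal {S}}_t |\rceil }\) is the one stated in the example of Sect. 3.5. The function name and the list-based layout are hypothetical, and the sketch deliberately stops before the conversion of penalties into \(\mu _{s,t}\) values, which is not reproduced here.

```python
import math
from typing import List


def penalize_time_step(risks: List[float], tau: float,
                       penalty: List[float], freq: List[int]) -> float:
    """Update penalty/freq in place for one time step; return the increment.

    risks[s] is risk^{s,t}(x) for the current solution (assumed layout).
    """
    n = len(risks)
    position = math.ceil(tau * n - 1e-9)      # 1-based quantile position
    q = sorted(risks)[position - 1]           # Q_tau^t(x)
    mean = sum(risks) / n                     # Risk_mean^t(x)
    n_penalized = n - position
    if n_penalized == 0 or q <= mean:         # no excess: nothing to penalize
        return 0.0
    delta = (q - mean) * n / n_penalized
    # Indices of the n_penalized scenarios with the highest risk values.
    worst = sorted(range(n), key=lambda s: risks[s], reverse=True)[:n_penalized]
    for s in worst:
        penalty[s] += delta
        freq[s] += 1
    return delta
```

With seven scenarios and \(\tau = 0.7\), exactly \(7 - 5 = 2\) scenarios are penalized per time step with excess, as in the small example.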

3.3 Step 2: local search (LS)

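Step 2 constitutes an improvement method that transforms a feasible solution into one or more improved solutions. The efficacy of Local Search (LS) (also called neighborhood search) for solving a wide variety of optimization problems (cf. Kirkpatrick et al. 1983; Glover and Laguna 1997; Hoos and Stützle 2004; Hansen et al. 2017) depends strongly on the characteristics of the neighborhood structures involved and on their combination. We are interested in two well-known moves, namely Shift and Swap moves. The shift neighborhood is the set of solutions that can be obtained by changing the start time of one intervention, while the swap neighborhood is the set of solutions that can be obtained by interchanging the start times of two interventions. Formally, given a feasible schedule \(\sigma \), for any intervention \(i \in {\mathcal {I}}\) and any period \(t \in {\mathcal {T}}\), the shift neighbor solution \(\sigma '= \texttt {Shift}(\sigma , i, t)\) is defined by

\[ \sigma '_k = \begin{cases} t & \text {if } k = i, \\ \sigma _k & \text {otherwise,} \end{cases} \quad \forall k \in {\mathcal {I}}, \]

and for two interventions \( i_1, i_2 \in {\mathcal {I}}\) the swap neighbor solution \(\sigma ' = \texttt {Swap}(\sigma , i_1, i_2)\) is defined by

\[ \sigma '_k = \begin{cases} \sigma _{i_2} & \text {if } k = i_1, \\ \sigma _{i_1} & \text {if } k = i_2, \\ \sigma _k & \text {otherwise,} \end{cases} \quad \forall k \in {\mathcal {I}}. \]

Consequently, the Shift and Swap neighborhood sets are defined by

\[ N_{\texttt {Shift}}(\sigma ) = \{ \sigma ' = \texttt {Shift}(\sigma , i, t): i \in {\mathcal {I}}, \; t \in {\mathcal {T}}, \; \sigma ' \text { is feasible} \}, \qquad N_{\texttt {Swap}}(\sigma ) = \{ \sigma ' = \texttt {Swap}(\sigma , i_1, i_2): i_1, i_2 \in {\mathcal {I}}, \; \sigma ' \text { is feasible} \}, \]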

where the statement that \(\sigma '\) is feasible means that the solution \(\sigma '\) satisfies the linear constraints (1), (2) and (3).
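The two moves and the feasibility test can be sketched in Python on the compact representation \(\sigma \). The data layout is an assumption made for this illustration: `sigma` maps interventions to start times, `delta[i, t]` is the duration, `usage[j, t, i, t_start]` is the resource consumption, `lower[j, t]` and `upper[j, t]` are the resource bounds, and `exclusions` is the set of triplets \((i, i', t)\).

```python
from typing import Dict, Set, Tuple

Schedule = Dict[str, int]


def shift(sigma: Schedule, i: str, t: int) -> Schedule:
    """Shift move: intervention i now starts at t; sigma is left unchanged."""
    new = dict(sigma)
    new[i] = t
    return new


def swap(sigma: Schedule, i1: str, i2: str) -> Schedule:
    """Swap move: interchange the start times of i1 and i2."""
    new = dict(sigma)
    new[i1], new[i2] = sigma[i2], sigma[i1]
    return new


def is_feasible(sigma: Schedule,
                delta: Dict[Tuple[str, int], int],
                horizon: int,
                usage: Dict[Tuple[str, int, str, int], float],
                lower: Dict[Tuple[str, int], float],
                upper: Dict[Tuple[str, int], float],
                exclusions: Set[Tuple[str, str, int]]) -> bool:
    # Schedule constraints: each intervention starts in the horizon and ends in time.
    for i, t in sigma.items():
        if not (1 <= t <= horizon and t + delta[i, t] <= horizon + 1):
            return False

    def active(i: str, t: int) -> bool:
        return sigma[i] <= t < sigma[i] + delta[i, sigma[i]]

    # Resource constraints: consumption within [lower, upper] at every (j, t).
    for (j, t), low in lower.items():
        used = sum(usage.get((j, t, i, sigma[i]), 0.0) for i in sigma if active(i, t))
        if used < low or used > upper[j, t]:
            return False

    # Exclusion constraints: the two interventions are never both in process at t.
    return not any(active(i, t) and active(i2, t) for (i, i2, t) in exclusions)
```

Both moves return a new schedule, so a candidate neighbor can be tested with `is_feasible` and discarded without touching the current solution.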

There are several ways to combine two or more neighborhoods and computational results show that certain combinations are superior to others (cf. Di Gaspero and Schaerf 2006; Lü et al. 2011; Mjirda et al. 2017; Hansen et al. 2017). The three basic combinations of two neighborhood structures are strong neighborhood union, selective neighborhood union and token-ring search. For strong neighborhood union, the LS algorithm picks each move (according to the algorithm’s selection criteria) from all the Shift and Swap moves. For selective neighborhood union, the LS algorithm selects one of the two neighborhoods to be used at each iteration, choosing the neighborhood Shift with a predefined probability p and choosing Swap with probability \(1-p\). Note that an LS algorithm using only Shift or Swap is a special case of an algorithm using selective neighborhood union where p is set to be 1 and 0 respectively. In token-ring search, the neighborhoods Shift and Swap are alternated, applying the currently selected neighborhood without interruption, starting from the local optimum of the previous neighborhood, until no improvement is possible.

For the SSP challenge problem, the token-ring search implemented in the LS\((\sigma , {\textit{TL}}, {\textit{TL}}_1, {\textit{TL}}_2)\) procedure is described in Algorithm 2. Specifically, the LS procedure starts from a given feasible solution \(\sigma \), and uses one neighborhood until a best solution is determined, subject to time limits imposed on the search (\({\textit{TL}}_1\) for the Shift neighborhood exploration and \({\textit{TL}}_2\) for the Swap neighborhood exploration). Then the method switches to the other neighborhood, starting from this best solution, and continues the search in the same fashion. The search comes back to the first neighborhood at the end of the second neighborhood exploration, repeating this process until the time limit \({\textit{TL}}\) is reached. Neighborhoods Shift and Swap are explored by randomly selecting a candidate feasible move, which is performed if it improves the cost. However, performing only the moves that improve the objective function can quickly lead to a local optimum. Escaping a local optimum is therefore achieved by occasionally allowing non-improving moves. This is done in a simple way: from time to time (for example once in 1000 iterations) a ‘bad’ move is accepted.
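The token-ring scheme described above might be sketched as follows. This is a simplified illustration, not Algorithm 2 itself: each phase simply runs until its time limit, the cost and feasibility tests are passed in as callables, at least two interventions are assumed, and the parameter `bad_every` (hypothetical name) controls how often a non-improving move is accepted.

```python
import random
import time
from typing import Callable, Dict, List, Optional, Tuple

Schedule = Dict[str, int]


def local_search(sigma: Schedule,
                 cost: Callable[[Schedule], float],
                 is_feasible: Callable[[Schedule], bool],
                 interventions: List[str],
                 times: List[int],
                 tl: float, tl1: float, tl2: float,
                 bad_every: int = 1000,
                 rng: Optional[random.Random] = None) -> Tuple[Schedule, float]:
    rng = rng or random.Random(0)
    current, current_cost = dict(sigma), cost(sigma)
    best, best_cost = dict(current), current_cost
    deadline = time.monotonic() + tl
    use_shift = True
    iteration = 0
    while time.monotonic() < deadline:
        # One phase explores a single neighborhood until its own time limit.
        phase_end = min(deadline, time.monotonic() + (tl1 if use_shift else tl2))
        while time.monotonic() < phase_end:
            iteration += 1
            candidate = dict(current)
            if use_shift:
                candidate[rng.choice(interventions)] = rng.choice(times)
            else:
                i1, i2 = rng.sample(interventions, 2)
                candidate[i1], candidate[i2] = current[i2], current[i1]
            if not is_feasible(candidate):
                continue
            candidate_cost = cost(candidate)
            # Accept improving moves, plus an occasional non-improving one.
            if candidate_cost < current_cost or iteration % bad_every == 0:
                current, current_cost = candidate, candidate_cost
                if current_cost < best_cost:
                    best, best_cost = dict(current), current_cost
        use_shift = not use_shift  # pass the token to the other neighborhood
    return best, best_cost
```

The best solution is tracked separately from the current one, so an accepted ‘bad’ move never degrades the returned schedule.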

3.4 Full SPM algorithm

In this section, we specify the full description of the SPM algorithm. The preliminary initialization (Step 0) constructs the coefficient matrix \(c=c^0\) of the initial MIP_Mean(c) approximation ignoring the excess cost and sets the penalties and frequencies to 0 (i.e. \({\textit{Penalty}}_{s,t} = Freq_{s,t} = 0, \forall t \in {\mathcal {T}}, s \in {\mathcal {S}}_t\)). At each iteration, the current MIP_Mean(c) approximation is solved to generate a current feasible solution x (i.e. Step 1). The LS heuristic procedure tries to generate an improved solution \(x'\) (i.e. Step 2). Step 3 updates the coefficient matrix c of the current MIP_Mean(c) approximation. The process is repeated until the allocated time limit is exceeded. The full algorithm pseudo-code is given in Algorithm 3.
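The overall loop can be summarized by the following Python skeleton. It is a sketch under stated assumptions rather than Algorithm 3: the MIP solve, the local search, the coefficient update and the objective evaluation are injected as callables with hypothetical names, and the optional `max_iterations` argument is added only to make the sketch easy to run deterministically.

```python
import time
from typing import Any, Callable, Optional, Tuple


def spm(solve_mip_mean: Callable[[Any], Any],
        local_search: Callable[[Any], Any],
        update_coefficients: Callable[[Any, Any], Any],
        objective: Callable[[Any], float],
        c0: Any,
        time_limit: float,
        max_iterations: Optional[int] = None) -> Tuple[Any, float]:
    c = c0                                    # Step 0: initial coefficients
    best, best_value = None, float("inf")
    deadline = time.monotonic() + time_limit
    iteration = 0
    while time.monotonic() < deadline:
        if max_iterations is not None and iteration >= max_iterations:
            break
        iteration += 1
        x = solve_mip_mean(c)                 # Step 1: solve the current approximation
        x_improved = local_search(x)          # Step 2: try to improve the solution
        for candidate in (x, x_improved):     # both are candidates for the best solution
            value = objective(candidate)
            if value < best_value:
                best, best_value = candidate, value
        c = update_coefficients(c, x)         # Step 3: scenario penalization
    return best, best_value
```

Keeping the four components as parameters mirrors the separation of steps in the description above and lets the MIP solver be replaced without changing the loop.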

To clarify the procedure, we consider the small example provided in the next section.

3.5 A small example

We illustrate Algorithm 3 for an instance with four interventions \({\mathcal {I}}=\{i_1,i_2,i_3,i_4\}\), three time steps \({\mathcal {T}}=\{1,2,3\}\), seven scenarios in each time step \({\mathcal {S}}=\{s_1,\ldots ,s_7\}\), \(\tau = 0.7\) and \(\alpha = 0.5\). The duration of the first three interventions is 1 (i.e. \(\delta _{i_1,t} = \delta _{i_2,t} = \delta _{i_3,t} = 1\)) and the duration of the last intervention is 2 (\(\delta _{i_4,t} = 2\)). All risk values are given in Table 2.

For example, starting at time step 1, the \({\textit{risk}}^{s_4,2}_{i_4,1}\) value of intervention \(i_4\) for scenario \(s_4\) at time step 2 is equal to 8 (bold cell). The initial objective function coefficients \(c_{i,t}\) are computed using formula (6): we obtain the following value for intervention \(i=1\) starting at time step \(t=1\): \(c_{1,1} = \frac{1}{3} \times \frac{1}{7} \times (10 + 12 + 8 + 5 + 12 + 7 + 10) = \frac{64}{21} \approx 3.048\). The evolution of these coefficients during 8 iterations of the SPM procedure is given in Table 3.

Solving MIP_Mean(c) with these \(c_{i,t}\) values produces the solution with optimal mean risk. Since we do not have any resource or exclusion constraints, the optimal solution can be obtained by simply selecting the time step with the smallest \(c_{i,t}\) value for each intervention i. Selected time steps are in bold at iteration 1, giving the solution \(x_{1,1}=x_{2,3}=x_{3,3}=x_{4,1}=1\), and in italic at iteration 2, giving the solution \(x_{1,2}=x_{2,3}=x_{3,1}=x_{4,2}=1\). New scenario coefficients are then calculated, updating penalties and frequencies only for the two scenarios with greatest risk (\(|{\mathcal {S}}_t |- \lceil \tau \times |{\mathcal {S}}_t |\rceil = 7 - 5 = 2\)). This is achieved in Algorithm 3 by adding 1 to the previous frequency values and adding \(\Delta _{{\textit{penalty}}} = \frac{(Q_{\tau }^t(x) - {\textit{Risk}}_{{\textit{mean}}}^t(x))\times |{\mathcal {S}}_t |}{|{\mathcal {S}}_t |- \lceil \tau \times |{\mathcal {S}}_t |\rceil }\) to the previous penalty values, giving us the values shown in line 2 of Table 3. In the last line of Table 3, the bold italic values correspond to the solution \(x_{1,3}=x_{2,3}=x_{3,1}=x_{4,1}=1\), which is optimal.

4 Experimental results in the context of ROADEF-EURO challenge

Our SPM matheuristic obtained the best results in the final phase of the ROADEF/EURO 2020 challenge. In this phase, 13 teams (i.e. algorithms), denoted by \({\mathcal {A}}=\{J3, J24, J43, J49, J73, S14, S19, S28, S34, S56, S58, S66, S68\}\), qualified as finalists out of 74 registered junior and senior teams. The SPM matheuristic corresponds to the algorithm proposed by team S34, composed of the first two authors of this paper.

Platform The computer used to evaluate the programs of the teams was a Linux machine with 2 CPUs and 16 GB of RAM, and the allowed solvers were CPLEX, Gurobi and LocalSolver. Our winning SPM algorithm was implemented in C++ and used Gurobi as the MIP solver to solve the MIP_Mean(c) approximations of MIP_full.

Dataset To evaluate the 13 finalist algorithms, a set of 30 industrial problem instances was provided by RTE, divided into two data sets (Data set C and Data set X) with 15 instances for each one. Those data sets are available at the website of the challenge (ROADEF 2020). The characteristics of these 30 instances are given in Table 4.

Time limit To differentiate the 13 algorithms, each algorithm is run on each instance under two time limits, 15 and 90 min.

Objective evaluation Let \({\textit{TL}}\) be a time limit and \(K \in \{C, X\}\) a dataset. For each instance \(k \in K\) and each algorithm \(a \in {\mathcal {A}}\), the solution x returned by algorithm a is evaluated by \({\textit{obj}}^{{\textit{TL}}}_{k,a}={\textit{Objective}}(x)\) if x is feasible, and by \({\textit{obj}}^{{\textit{TL}}}_{k,a}= \infty \) otherwise. The final score attributed to an algorithm \(a \in {\mathcal {A}}\) on an instance \(k \in K\) uses the convex weighting

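Finally, given a dataset K, for each algorithm \(a \in {\mathcal {A}}\), the number of times algorithm a fails to find a feasible solution or crashes within the time limit \({\textit{TL}}\) is given by \({\textit{Crash}}^{{\textit{TL}}}_{K,a} = |\{k \in K : {\textit{obj}}^{{\textit{TL}}}_{k,a} = \infty \}|\).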
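The feasibility-aware evaluation and the failure count described above can be sketched as follows. This is a minimal illustration, assuming that a crash is recorded as an infeasible result (value \(\infty \)); the function names and the dictionary layout are hypothetical and are not part of the challenge tooling.

```python
import math

def evaluate(objective_value, feasible):
    """obj^{TL}_{k,a}: the objective of the returned solution if it is
    feasible, and infinity otherwise."""
    return objective_value if feasible else math.inf

def crash_count(obj_by_instance):
    """Number of instances of a dataset on which an algorithm returned no
    feasible solution within the time limit (value recorded as infinity).

    obj_by_instance maps an instance name k to obj^{TL}_{k,a}.
    """
    return sum(1 for value in obj_by_instance.values() if math.isinf(value))
```

For instance, an algorithm with one feasible result and two infinite results on a three-instance dataset has a failure count of 2.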

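Ranking method Let \({\textit{Better}}_{k,a}\) be the number of algorithms with a result strictly better than the result of algorithm a on instance k, i.e. \({\textit{Better}}_{k,a} = |\{a' \in {\mathcal {A}} : {\textit{obj}}_{k,a'} < {\textit{obj}}_{k,a}\}|\).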

For each dataset K, we compute the sum of \({\textit{Better}}_{k,a}\) over \(k \in K\), i.e. \({\textit{Better}}_{K,a} = \sum _{k \in K} {\textit{Better}}_{k,a}\),

and the number of instances with \({\textit{Better}}_{k,a} = 0\) over \(k \in K\), i.e.

The score of an algorithm a for the instance k is defined by

where \({\textit{Better}}^*\) is the maximal score that an algorithm can earn from one instance. During both the qualification and final phases, \({\textit{Better}}^*\) is equal to 10. The global score of an algorithm a on a given dataset K, denoted as \({\textit{score}}(a)\), is defined by

The score of an algorithm a for the dataset K is given by

Given a time limit \({\textit{TL}}\) and a dataset K, for each algorithm \(a \in {\mathcal {A}}\), the number of times algorithm a is the best in terms of the relative gap value is defined as follows

where

and where \({\textit{obj}}^*_{k}\) is the best value over the algorithms \(a \in {\mathcal {A}}\), i.e. \({\textit{obj}}^*_{k} = \min \{{\textit{obj}}_{k,a}:a \in {\mathcal {A}}\}\).
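The counting statistics of the ranking method can be illustrated with a short Python sketch. It is not the official evaluation script: the nested dictionary `obj[k][a]`, the function names and the toy values in the usage note are hypothetical. The sketch also assumes that an algorithm is "best" on instance k exactly when its finite result equals \({\textit{obj}}^*_{k}\), which corresponds to a zero gap for any gap definition that vanishes only at \({\textit{obj}}^*_{k}\); ties are therefore all counted as best.

```python
import math

def better_counts(obj):
    """Better_{k,a}: for each instance k and algorithm a, the number of
    algorithms whose result on k is strictly smaller (minimization)."""
    return {
        k: {a: sum(1 for b in row if row[b] < row[a]) for a in row}
        for k, row in obj.items()
    }

def better_total(better, a):
    """Sum of Better_{k,a} over the instances k of the dataset."""
    return sum(better[k][a] for k in better)

def zero_better(better, a):
    """Number of instances on which no algorithm is strictly better than a."""
    return sum(1 for k in better if better[k][a] == 0)

def best_count(obj, a):
    """Number of instances on which a attains the best value obj*_k
    (infeasible results, stored as infinity, are never counted)."""
    return sum(
        1
        for row in obj.values()
        if math.isfinite(row[a]) and row[a] == min(row.values())
    )
```

As a usage example on made-up data, take `obj = {"k1": {"A": 1.0, "B": 2.0, "C": 2.0}, "k2": {"A": 5.0, "B": 4.0, "C": math.inf}}`. Algorithm C then has one strictly better competitor on k1 and two on k2, so its sum of Better values is 3, and it is never best.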

Table 5 presents the values of the 6 parameters (\({\textit{Score}}_{K,a}\), \({\textit{Score}}^*_{K,a}\), \({\textit{Better}}_{K,a}\), \({\textit{Best}}^{{\textit{TL}}}_{K,a}\), \({\textit{Crash}}^{{\textit{TL}}}_{K,a}\)) used to evaluate an algorithm \(a \in {\mathcal {A}}\) over a dataset \(K \in \{C, X, C+X\}\). The values in this table are obtained from the disaggregated information given in the 4 matrices \(({\textit{Score}}_{k,a}, {\textit{Better}}_{k,a}, \Delta ^{15}_{k,a}, \Delta ^{90}_{k,a})\) presented in the Appendix.

The final ranking of the ROADEF/EURO challenge is based on the \({\textit{Score}}^*_{C+X,a}\) values for the submitted algorithms \(a \in {\mathcal {A}}\). The winning algorithm \(a^*\) is the one that receives the highest score, i.e. \(a^* = \arg \max \{{\textit{Score}}^*_{C+X,a} : a \in {\mathcal {A}}\}\).

Our SPM algorithm (i.e. \(a^*=S34\)) strictly dominated all the other algorithms \(a \in {\mathcal {A}} \setminus \{a^*\}\) on the 18 comparison criteria \(({\textit{Score}}_{K,a }, {\textit{Score}}^*_{K,a}, {\textit{Better}}_{K,a}, {\textit{Best}}^{{\textit{TL}}}_{K,a})\) for \(K \in \{C, X, C+X\}\) and \({\textit{TL}} \in \{15, 90\}\) (which justifies the word “efficient” in the title of this paper!). However, there is no dominance among the remaining 4 best algorithms, i.e. \({\mathcal {A}}^* = \{a \in {\mathcal {A}}: {\textit{Score}}^*_{C+X,a} \ge 150\}\), with or without the 6 criteria \({\textit{Crash}}^{{\textit{TL}}}_{K,a}\) for \(K \in \{C, X, C+X\}\) and \({\textit{TL}} \in \{15, 90\}\). For example, the second algorithm \(a^2=S66\) is dominated by the third algorithm \(a^3=S56\) on the two criteria \({\textit{Best}}^{15}_{K,a}\) and \({\textit{Crash}}^{15 }_{K,a}\). The fourth algorithm \(a^4=S19\) dominates both the second algorithm \(a^2\) and the third algorithm \(a^3\) on the 4 criteria \(({\textit{Score}}^*_{C,a}, {\textit{Better}}^*_{C,a}, {\textit{Crash}}^{90}_{X,a}, {\textit{Crash}}^{15}_{C+X,a})\).

Basic lower and upper bounds: Since for any feasible solution x the value \({\textit{Excess}}(x)\) is non-negative and \(\alpha \in [0,1]\), we have \({\textit{Objective}}(x) \ge {\textit{Mean}}(x)\). Consequently, a lower bound on the optimal value of MIP_full can be obtained by solving MIP_mean\((c^0)\), i.e. \({\textit{LB}} = v(\texttt {MIP\_mean}(c^0))\), where v(P) is the optimal value of a given optimization problem P.

From Proposition 2, for any \(t\in {\mathcal {T}}\) such that \(|{\mathcal {S}}_t |= \lceil \tau \times |{\mathcal {S}}_t |\rceil \), we have \({\textit{Excess}}^t(x) = {\textit{Risk}}_{{\textit{max}}}^t(x) - {\textit{Risk}}_{{\textit{mean}}}^t(x)\). This condition holds in particular for \(\tau = 1\), in which case the objective function can be expressed as follows:

In this case (i.e. \(\tau = 1\)), the non-linear optimization \(\texttt {MIP\_full}\) can be stated as the linear mixed integer programming problem:

where \(z_t\) represents \({\textit{Risk}}_{{\textit{max}}}^t(x)\) for \(t \in {\mathcal {T}}\). Since \(Q_{\tau }^t(x) \le {\textit{Risk}}_{{\textit{max}}}^t(x)\) for any \(\tau \in [0,1]\), a basic upper bound

Table 6 gives the lower bound \(({\textit{LB}})\) and the upper bound \(({\textit{UB}})\) for the C and X datasets. A MIP solver was used to compute the bounds, with the gap parameter set to 0.0005 and the time limit set to 3600 s. All solutions produced by the \(\texttt {SPM}\) algorithm have a better objective value than \({\textit{UB}}\), although non-exact approaches can in general produce solutions worse than \({\textit{UB}}\).

Behavior of SPM algorithm The results were obtained by running the \({\textit{SPM}}\) algorithm with 10 seeds. Table 6 summarizes the results obtained by our approach; its last column gives the best values obtained by the challenge competitors.

5 Conclusion

Our new scenario penalization matheuristic (SPM) for the mean-risk model involving quantiles related to the value at risk (VAR) measure is an instance of the highly general framework of ghost image processing (GIP) proposed by Glover (1994), and is based on both mixed integer programming (MIP) models and local search (LS) methods. In this paper, we considered the application of the mean-risk model arising in the stochastic scheduling problem (SSP), formulated as a MIP model (MIP_full) with binary decision variables in which the objective function is non-linear (generally non-convex) and the constraints are linear. The SPM matheuristic involves a parameterization of the objective function that is progressively modified to generate feasible solutions, which are then improved by local search. The initial parameterization provides an approximation MIP_mean(c) that corresponds to the MIP_full model without the excess cost (the non-linear part of the original objective function). At each iteration, starting from an optimal or best solution of the current approximation MIP_mean(c), the parameter cost matrix c is modified in order to improve the next approximation by penalizing the scenario risks. The current feasible solution is improved by a local search procedure based on the combination of shift and swap neighborhoods. This approach won the first prize in the senior category of the EURO/ROADEF 2020 challenge. Experimental results demonstrate the effectiveness of our approach on large instances provided by the French electricity transmission network RTE.

References

Benati, S., Rizzi, R.: A mixed integer linear programming formulation of the optimal mean/value-at-risk portfolio problem. Eur. J. Oper. Res. 176(1), 423–434 (2007)

Buljubašić, M., Vasquez, M., Gavranović, H.: Two-phase heuristic for SNCF rolling stock problem. Ann. Oper. Res. 271(2), 1107–1129 (2018)

Cattaruzza, D., Labbé, M., Petris, M., Roland, M., Schmidt, M.: Exact and heuristic solution techniques for mixed-integer quantile minimization problems (2022)

Crainic, T.G., Gendron, B., Hernu, G.: A slope scaling/Lagrangean perturbation heuristic with long-term memory for multicommodity capacitated fixed-charge network design. J. Heuristics 10(5), 525–545 (2004)

Crognier, G., Tournebise, P., Ruiz, M., Panciatici, P.: Grid operation-based outage maintenance planning. Electric Power Syst. Res. 190 (2021)

Di Gaspero, L., Schaerf, A.: Neighborhood portfolio approach for local search applied to timetabling problems. J. Math. Model. Algorithms 5(1), 65–89 (2006)

Froger, A., Gendreau, M., Mendoza, J.E., Pinson, E., Rousseau, L.-M.: Maintenance scheduling in the electricity industry: a literature review. Eur. J. Oper. Res. 251(3), 695–706 (2016)

Gabay, D., Mercier, B.: A dual algorithm for the solution of nonlinear variational problems via finite element approximation. Comput. Math. Appl. 2(1), 17–40 (1976)

Gavranović, H., Buljubašić, M.: An efficient local search with noising strategy for Google machine reassignment problem. Ann. Oper. Res. 242(1), 19–31 (2016)

Gendron, B., Potvin, J.-Y., Soriano, P.: A Tabu search with slope scaling for the multicommodity capacitated location problem with balancing requirements. Ann. Oper. Res. 122(1), 193–217 (2003)

Gendron, B., Hanafi, S., Todosijević, R.: Matheuristics based on iterative linear programming and slope scaling for multicommodity capacitated fixed charge network design. Eur. J. Oper. Res. 268(1), 70–81 (2018)

Glover, F.: Optimization by ghost image processes in neural networks. Comput. Oper. Res. 21(8), 801–822 (1994)

Glover, F.: Parametric ghost image processes for fixed-charge problems: a study of transportation networks. J. Heuristics 11(4), 307–336 (2005)

Glover, F., Laguna, M.: Tabu Search. Kluwer Academic Publishers, Boston (1997)

Glowinski, R., Marroco, A.: Sur l’approximation, par éléments finis d’ordre un, et la résolution, par pénalisation-dualité d’une classe de problèmes de Dirichlet non linéaires. Revue française d’automatique, informatique, recherche opérationnelle. Analyse numérique 9(R2), 41–76 (1975)

Gouvine, G.: Mixed-integer programming for the ROADEF/EURO 2020 challenge. arXiv preprint arXiv:2111.01047 (2021)

Hanafi, S., Todosijević, R.: Mathematical programming based heuristics for the 0–1 MIP: a survey. J. Heuristics 23(4), 165–206 (2017)

Hansen, N., Müller, S.D., Koumoutsakos, P.: Reducing the time complexity of the derandomized evolution strategy with covariance matrix adaptation (CMA-ES). Evol. Comput. 11(1), 1–18 (2003)

Hansen, P., Mladenović, N., Todosijević, R., Hanafi, S.: Variable neighborhood search: basics and variants. EURO J. Comput. Optim. 5(3), 423–454 (2017)

Hashimoto, H., Boussier, S., Vasquez, M., Wilbaut, C.: A GRASP-based approach for technicians and interventions scheduling for telecommunications. Ann. Oper. Res. 183(1), 143–161 (2011)

Hoos, H.H., Stützle, T.: Stochastic Local Search: Foundations and Applications. Elsevier, Amsterdam (2004)

Jorion, P.: Value at Risk: The New Benchmark for Controlling Market Risk, vol. 2. McGraw-Hill, New York (1997)

Kim, D., Pardalos, P.M.: A solution approach to the fixed charge network flow problem using a dynamic slope scaling procedure. Oper. Res. Lett. 24(4), 195–203 (1999)

Kirkpatrick, S., Gelatt, C.D., Vecchi, M.P.: Optimization by simulated annealing. Science 220(4598), 671–680 (1983)

Kohonen, T.: Self Organization and Associative Memory. Springer, Berlin (1988)

Lü, Z., Hao, J.-K., Glover, F.: Neighborhood analysis: a case study on curriculum-based course timetabling. J. Heuristics 17(2), 97–118 (2011)

Markowitz, H.: Portfolio selection. J. Finance 7(1) (1952)

Markowitz, H.M., Todd, G.P.: Mean-variance Analysis in Portfolio Choice and Capital Markets, vol. 66. John Wiley & Sons, London (2000)

Mjirda, A., Todosijević, R., Hanafi, S., Hansen, P., Mladenović, N.: Sequential variable neighborhood descent variants: an empirical study on the traveling salesman problem. Int. Trans. Oper. Res. 24(3), 615–633 (2017)

Ogryczak, W., Ruszczyński, A.: Dual stochastic dominance and related mean-risk models. SIAM J. Optim. 13(1), 60–78 (2002)

Ogryczak, W., Ruszczyński, A.: Dual stochastic dominance and quantile risk measures. Int. Trans. Oper. Res. 9(5), 661–680 (2002)

Padberg, M., Rinaldi, G.: A branch-and-cut algorithm for the resolution of large-scale symmetric traveling salesman problems. SIAM Rev. 33(1), 60–100 (1991)

Rahmaniani, R., Crainic, T.G., Gendreau, M., Rei, W.: The Benders decomposition algorithm: a literature review. Eur. J. Oper. Res. 259(3), 801–817 (2017)

ROADEF/EURO challenge (2020). https://www.euro-online.org/media_site/reports/Challenge_Subject

Sharpe, W.F.: A linear programming approximation for the general portfolio analysis problem. J. Financial Quant. Anal. 6(5), 1263–1275 (1971)

Whitmore, G.A., Findlay, M.C.: Stochastic Dominance: An Approach to Decision-making Under Risk. Lexington Books, Lexington (1978)

Woodruff, D.L.: Ghost image processing for minimum covariance determinants. ORSA J. Comput. 7(4), 468–473 (1995)

Zholobova, A., Zholobov, Y., Polyakov, I., Petrosian, O., Vlasova, T.: An industry maintenance planning optimization problem using CMA-VNS and its variations. In: International Conference on Mathematical Optimization Theory and Operations Research, pp. 429–443. Springer, Berlin (2021)

Acknowledgements

The authors are grateful to two referees for valuable suggestions to improve the exposition of this paper. Many thanks also to Fred Glover for his constructive comments.

About this article

Cite this article

Vasquez, M., Buljubasic, M. & Hanafi, S. An efficient scenario penalization matheuristic for a stochastic scheduling problem. J Heuristics 29, 383–408 (2023). https://doi.org/10.1007/s10732-023-09513-y
