Abstract

As a state-of-the-art computational method for simulating rock fracturing and fragmentation, the combined finite-discrete element method (FDEM) has become widely accepted since Munjiza (2004) published his comprehensive book on the FDEM. This study developed a general-purpose graphic-processing-unit (GPGPU)-parallelized FDEM using compute unified device architecture (CUDA) C/C++, based on the authors’ former sequential two-dimensional (2D) and three-dimensional (3D) Y-HFDEM IDE (integrated development environment) code. The theory and algorithm of the GPGPU-parallelized 3D Y-HFDEM IDE code are first introduced, focusing on the implementation of contact detection (which differs from that in the sequential code), contact damping and contact friction. 3D modelling of the failure process of limestone under quasi-static loading conditions in uniaxial compressive strength (UCS) tests and Brazilian tensile strength (BTS) tests is then conducted using the GPGPU-parallelized 3D Y-HFDEM IDE code. The 3D FDEM modelling results show that, with unstructured meshes, mixed-mode I–II failures are the dominant failure mechanisms along the shear failure planes in the UCS models and along the splitting failure planes in the BTS models. Pure mode I splitting failure planes and pure mode II shear failure planes are only possible in the UCS and BTS models, respectively, with structured meshes. Subsequently, 3D modelling of the dynamic fracturing of marble in dynamic Brazilian tests with a split Hopkinson pressure bar (SHPB) apparatus is conducted using the GPGPU-parallelized 3D Y-HFDEM IDE code, considering the entire SHPB testing system. The modelled failure process, final fracture pattern and time histories of the dynamic compressive wave, reflected tensile wave and transmitted compressive wave are compared quantitatively and qualitatively with those from experiments, and good agreement is achieved.
The computing performance analysis shows that the GPGPU-parallelized 3D Y-HFDEM IDE code is 284 times faster than its sequential version and achieves a computational complexity of O(N). The results demonstrate that the GPGPU-parallelized 3D Y-HFDEM IDE code is a valuable and powerful numerical tool for investigating rock fracturing under quasi-static and dynamic loading conditions in rock engineering applications, although very fine elements, with a maximum element size no larger than the length of the fracture process zone, must be used in the area where the fracturing process is modelled.

1 Introduction

Understanding the mechanism of the fracturing process in rocks is important in civil and mining engineering and in several other fields, such as geothermal, hydraulic, and oil and gas engineering, in which rock fractures play an important role. Numerical methods have been increasingly applied to analyze the fracturing process of rocks (e.g., Mohammadnejad et al. 2018). Due to limitations in computing power and environments and the difficulty of extending some numerical techniques to three dimensions, many previous studies of rock fracture modelling have been limited to two-dimensional (2D) analyses. However, successful simulation of the three-dimensional (3D) fracturing process is essential for better understanding and solving many practical rock engineering problems because rock fracturing is inherently a complex 3D process. Moreover, meaningful development and application of 3D numerical methods for rock fracture modelling have in the past been limited to researchers with full access to the most advanced high-performance-computing (HPC) environments, such as supercomputers. This situation has been dramatically improved by recent advances in computer technology, such as general-purpose graphic-processing-unit (GPGPU) accelerators and the Many Integrated Core architecture, which can be installed even in a personal computer (PC) or a workstation and bring HPC capability to ordinary computing environments at relatively low cost. These improvements have allowed scientists and engineers to develop and apply 3D rock fracture computational methods that were previously intractable.

Recent advances in computational mechanics have made it possible to model complex rock fracturing processes using various numerical approaches. Generally, these approaches can be classified into continuum-based and discontinuum-based methods. In the framework of rock fracture process analysis, the continuum-based methods include the finite element method (FEM), finite difference method (FDM), boundary element method (BEM), scaled boundary finite element method (SBFEM), extended finite element method (XFEM), several mesh-less/mesh-free methods, such as smoothed particle hydrodynamics (SPH), and methods based on peridynamics and the phase-field approach. The discontinuum-based methods include the distinct element method (DEM), discontinuous deformation analysis (DDA), lattice models and molecular dynamics. Comprehensive reviews of recent advances in the computational fracture mechanics of rocks can be found in Lisjak and Grasselli (2014) and Mohammadnejad et al. (2018). For a realistic simulation of the rock fracturing process, numerical techniques must be capable of capturing the transition of rock from a continuum to a discontinuum through crack initiation, growth and coalescence. Therefore, increasing attention has been paid in recent years to hybrid techniques that combine the advantages of the aforementioned continuum-based and discontinuum-based methods while overcoming the disadvantages of each (Lisjak and Grasselli 2014; Mohammadnejad et al. 2018).

The combined finite-discrete element method (FDEM) proposed by Munjiza et al. (1995) has recently attracted the attention of many engineers and researchers in rock engineering, a field that deals with rock fracturing and fragmentation. The method incorporates the advantages of both continuum and discontinuum methods and is able to simulate the transition from a continuum to a discontinuum caused by rock fracturing. The two main implementations of the FDEM are the open-source research code, Y code (Munjiza 2004), and the commercial code, ELFEN (Rockfield 2005). Owing to its open-source nature, the Y code has been actively extended, e.g., in Y-Geo (Mahabadi et al. 2014), Y-Flow (Yan and Jiao 2018; Yan and Zheng 2017), Irazu (Lisjak et al. 2018; Mahabadi et al. 2016), Solidity (Guo 2014; Solidity 2017) and HOSS with MUNROU (Rougier et al. 2011, 2014). The authors have also developed Y-HFDEM IDE (An et al. 2017; Liu et al. 2015, 2016; Mohammadnejad et al. 2017). In addition, using user-defined subroutines in the explicit module of the commercial software ABAQUS, Ma et al. (2018) recently implemented the FDEM to investigate the effects of different fracture mechanisms on the impact fragmentation of brittle rock-like materials. All FDEM codes are based on continuum mechanics, the cohesive zone model (CZM) and contact mechanics, the combination of which makes the FDEM extremely computationally expensive. Thus, few practical rock engineering problems can be solved with the 3D FDEM using sequential central-processing-unit (CPU)-based implementations. It is therefore imperative to develop robust parallel computation schemes to handle large-scale 3D FDEM simulations with massive numbers of nodes, elements and contact interactions.

To date, several successful parallel implementations of FDEM codes have been reported using the message-passing interface (MPI) (Elmo and Stead 2010; Hamdi et al. 2014; Lei et al. 2014; Lukas et al. 2014; Rockfield 2005; Rogers et al. 2015; Rougier et al. 2014) and shared-memory programming, such as OpenMP (Xiang et al. 2016). Among these, Lukas et al. (2014) proposed a novel approach for parallelizing the 2D FDEM using MPI and dynamic domain decomposition-based parallel solvers, and they successfully applied the parallelized Y code to a large-scale 2D problem on a PC cluster; the approach should be extendable to practical 3D problems, although further development is needed. In addition, Lei et al. (2014) successfully developed the concept of a virtual parallel machine for the FDEM using MPI, which can be adapted to computer architectures ranging from several to thousands of CPU cores. Rougier et al. (2014) introduced the HOSS with MUNROU code, in which they used 208 processors for parallel computation controlled by MPI and developed novel contact detection and contact force calculation algorithms (Munjiza et al. 2011). The MUNROU code was then successfully applied to perform 3D simulations of a dynamic Brazilian tensile test of rock with a split Hopkinson pressure bar (SHPB) apparatus. ELFEN (Elmo and Stead 2010; Hamdi et al. 2014; Rockfield 2005; Rogers et al. 2015) uses MPI in its parallelization scheme and has been employed successfully in 2D and 3D simulations of the rock fracturing process; for example, analyses of the 3D fracturing process in conventional laboratory tests using up to 3 million elements have been reported (Hamdi et al. 2014). Xiang et al. (2016) optimized the contact detection algorithm in their Solidity code and parallelized the code using OpenMP. Although they modelled a packing system with 288 rock-like boulders and achieved a speedup of 9 times on 12 CPU threads, the details of the applied algorithm and its implementation were not given.
In general, MPI requires large and expensive CPU clusters to achieve the best performance. Shared-memory programming such as OpenMP, on the other hand, is limited by the number of processors that can reside in a single computer; thus, large-scale problems still require a hybrid MPI/OpenMP scheme, in which OpenMP is used within each computer and MPI transfers data between computers. In all of these approaches, more than 100 CPUs are necessary to achieve a speedup of more than 100 times relative to sequential CPU-based FDEM simulations, which requires considerable space or expensive HPC environments.

In addition to CPU-based parallelization schemes, a GPGPU accelerator controlled by either the Open Computing Language (OpenCL) (Munshi et al. 2011) or the Compute Unified Device Architecture (CUDA) (NVIDIA 2018) is another promising option for parallelizing FDEM codes. Hundreds to thousands of GPU cores can reside and work concurrently in a small GPGPU accelerator within an ordinary laptop/desktop PC or workstation, which also consumes less energy than CPU-based clusters. Zhang et al. (2013) developed a CUDA-based GPGPU parallel version of the 2D Y code without considering the fracturing process and contact friction. Batinić et al. (2018) used CUDA to implement a GPGPU-based parallel FEM/DEM, based on the Y code, for analyzing cable structures. However, none of these implementations has been employed to simulate rock fracturing. In contrast, the commercial GPGPU-based FDEM code Irazu (Lisjak et al. 2017, 2018) has recently been developed with OpenCL and used successfully in rock fracture simulations; it is the only available commercial GPGPU-based FDEM code with OpenCL that is currently capable of modelling the rock fracturing process (Lisjak et al. 2017, 2018). The authors have also developed a free FDEM research code, Y-HFDEM IDE (An et al. 2017; Liu et al. 2015, 2016; Mohammadnejad et al. 2017), and parallelized its 2D implementation on the GPGPU with CUDA C/C++ (Fukuda et al. 2019). This paper focuses on parallelizing the 3D implementation of the Y-HFDEM IDE code on the GPGPU with CUDA C/C++, an approach completely different from Irazu’s OpenCL-based GPGPU parallelization. Additional studies are required to verify and validate GPGPU-based 3D FDEM codes. Furthermore, for any newly implemented GPGPU-based code, it is desirable to describe the implementation in full detail because GPGPU-based implementations generally differ from CPU-based sequential codes.
Most importantly, no other GPGPU-based FDEM code is freely available, whereas the GPGPU-based Y-HFDEM IDE is free to use; the freely available GPGPU-parallelized 2D/3D Y-HFDEM IDE software may therefore contribute significantly to research in rock engineering.

To validate and calibrate newly developed codes in rock mechanics, two standard laboratory tests, the uniaxial compressive strength (UCS) test and the Brazilian tensile strength (BTS) test, have often been modelled to simulate the fracturing process and associated failure mechanisms of rock under quasi-static loading conditions. Although the UCS and BTS tests have been actively modelled using the 2D FDEM, their modelling with the 3D FDEM has been very limited and less well explained. For example, UCS and BTS tests were simulated in 3D using Y-Geo (Mahabadi et al. 2014) with a relatively large element size (2 mm for both tests) and a very high loading velocity (1 m/s), resulting in dynamic effects in the model, such as multi-fracture propagation around the center of the BTS models, which is also shown in the dynamic BTS simulation discussed in Sect. 3.3. Moreover, although Mahabadi et al. (2014) observed splitting fractures in their BTS modelling, the post-peak behavior of the stress–strain curve was not well demonstrated. Later, Lisjak et al. (2018) introduced Irazu based on GPGPU parallelization and simulated UCS and BTS tests in 3D using a finer element size (1.5 mm for both tests) and a slower loading rate (0.1 m/s). Compared with the aforementioned simulations by Mahabadi et al. (2014), more reasonable results were obtained by Lisjak et al. (2018). Mahabadi et al. (2014) may have chosen the relatively high loading rate because 3D FDEM modelling using Y-Geo is computationally demanding, which may have required them to reduce the running time and, accordingly, the time required for calibration, although any such remedy should not significantly affect the results. Moreover, Lisjak et al. (2018) claimed that the effect of their high loading rates was compensated for by the critical damping scheme.
However, it should be noted that the critical damping scheme implemented in almost all FDEM simulations is intended to model quasi-static loading conditions using the dynamic relaxation method and, contrary to the claim of Lisjak et al. (2018), cannot reduce the effect of the loading rate. Guo (2014) investigated 3D FDEM modelling of BTS tests at different loading rates using the critical damping scheme and showed that both the fracture pattern and the peak load were affected by the loading rate when the velocity of the loading platens exceeded 0.01 m/s (i.e., a loading rate of 0.02 m/s). These findings of Guo (2014) support the statement that “the critical damping scheme used in almost all FDEM simulations cannot reduce the effect of the loading rate”. Therefore, for an accurate simulation of quasi-static loading conditions with the FDEM, the loading rate should be chosen to avoid dynamic effects before any input parameters are selected, which is another strong motivation for this study to conduct 3D simulations of UCS and BTS tests using the GPGPU-parallelized 3D Y-HFDEM IDE.

Furthermore, 3D FDEM simulations of the fracturing of rock under dynamic loads, such as in SHPB tests (e.g., Zhang and Zhao 2014), have been much more limited; in particular, 3D FDEM simulations of the full SHPB test system are rare. However, accurate modelling and reasonable calibration of the SHPB test are paramount for any meaningful qualitative or quantitative numerical study of dynamic rock fracturing, such as in blasting. In fact, only four peer-reviewed international journal papers on modelling SHPB tests within the 2D/3D FDEM framework have been published to date. Using the 2D FDEM, Mahabadi et al. (2010) modelled the dynamic fracturing of Barre granite in a dynamic BTS test with an SHPB apparatus and found good agreement between the numerical simulations and experiments. In their modelling, each SHPB bar was represented by a single large triangular element that was assigned mechanical properties, while velocities were also prescribed at all of its nodes. As a result, the assigned mechanical properties, except for the contact penalty and contact friction, may have had no effect, and the elements modelling the SHPB bars effectively became rigid. Moreover, the mode I and mode II fracture energies could not be distinguished, although distinguishing them is now generally recognized by the FDEM community as important for reasonable simulations of rock fracturing. Using the 3D FDEM (HOSS), Rougier et al. (2014) modelled the dynamic BTS tests of weathered granite documented in Broome et al. (2012) with explicitly modelled, elastically deformable SHPB bars, and the results showed remarkably good agreement with those from the experiment. Subsequently, Osthus et al. (2018), albeit using 2D HOSS, proposed a novel and detailed calibration procedure based on a general, probabilistic approach for numerical simulations of the dynamic BTS tests of the weathered granite modelled by Rougier et al. (2014) with the SHPB apparatus. Furthermore, targeting the same SHPB-based dynamic BTS tests of the same weathered granite, Godinez et al. (2018) conducted several sensitivity analyses with different combinations of input parameters using 2D HOSS. They showed that the simulation results are most sensitive to the parameters related to the tensile and shear strengths and the fracture energies, which is valuable information. In this way, knowledge for the realistic modelling of dynamic BTS tests with the SHPB apparatus has gradually accumulated in the FDEM community. However, although the target rock in previous studies (Mahabadi et al. 2010; Osthus et al. 2018; Rougier et al. 2014) was granite, in which heterogeneity and anisotropy generally play important roles, none of these studies considered or discussed these characteristics. In light of these results, further investigation of FDEM modelling is needed, especially 3D modelling of the dynamic fracturing of various rock types, because only one case of 3D dynamic fracturing of granite in a dynamic BTS test has been modelled using the FDEM to date.

Based on this background, this paper first explains the theory and algorithm of the recently developed GPGPU-parallelized FDEM implemented in the 3D Y-HFDEM IDE code. The capability of the 3D Y-HFDEM IDE code in rock engineering applications is then demonstrated by modelling the fracturing process of rocks and the movements of the resultant rock fragments under a series of quasi-static and dynamic loading conditions. This paper may thus provide a basis for further improvement and development of GPGPU-parallelized FDEM codes for modelling rock fracturing, especially the dynamic fracturing of rock. Many previous publications have focused on the advantages of the FDEM. However, although the FDEM is very useful, there is currently no universal or perfect method for simulating rock fracturing and fragmentation, so the disadvantages of the FDEM other than its high computational burden must also be carefully addressed. In particular, the calibration of the FDEM, which is the most fundamental procedure for any meaningful numerical simulation, tends to be more complex than that of non-combined methods. Although calibration methods for the 2D FDEM have been reported in the literature (e.g., Tatone and Grasselli 2015), it was found during the development of the 3D FDEM code that the calibration of 3D simulations requires more careful treatment and is more sensitive to the input parameters than its 2D counterpart. Especially in 3D simulations of dynamically loaded hard rock involving significant fragmentation, special care must be taken to avoid spurious fracture modes, which have sometimes been misunderstood or omitted from the literature.

This paper is organized as follows. The theory of the 3D FDEM code Y-HFDEM IDE is first introduced, and its implementation in the framework of GPGPU parallel computation is then explained in detail. Subsequently, the accuracy and capability of the developed code are investigated by modelling several common examples in rock mechanics, including the 3D fracturing process of rocks in UCS and BTS tests, which have been used to benchmark new computational methods for rock fracturing. The entire SHPB testing system of 3D dynamic BTS tests is then simulated using the newly developed GPGPU-parallelized hybrid FDEM, and the numerical results are compared with those from SHPB experiments, focusing on the dynamic fracturing process of rock in the 3D dynamic Brazilian tests. Finally, conclusions are drawn.

2 GPGPU-Parallelized 3D FDEM

The FDEM code “Y-HFDEM 2D/3D IDE” was originally developed using object-oriented programming with Visual C++ (Liu et al. 2015) based on the CPU-based sequential open-source Y 2D/3D libraries (Munjiza 2004; Munjiza et al. 2010) and OpenGL. The Y-HFDEM 2D/3D IDE code significantly simplifies building and manipulating input models, greatly reduces the possibility of erroneous model setup, and displays the calculated results graphically in real time with OpenGL. The code has been successfully employed in simulations of rock fracturing in various geotechnical engineering problems (An et al. 2017; Liu et al. 2015, 2016; Mohammadnejad et al. 2017). Because of its sequential nature, however, it has mainly been applied to small-scale 2D problems with relatively coarse meshes. To overcome this limitation, a parallel programming scheme using the GPGPU controlled by CUDA C/C++ was implemented in the code, in a recent study by the authors (Fukuda et al. 2019) for 2D modelling and in this study for 3D modelling. Because the various FDEM-based codes reviewed in Sect. 1 were independently developed by different research institutes and organizations and have different features, the fundamental features of the 3D Y-HFDEM IDE code and its GPGPU-based parallelization scheme are explained in detail in the following subsections.

2.1 Fundamental Theory of 3D Y-HFDEM IDE

The principles of the FDEM are based on continuum mechanics, nonlinear fracture mechanics based on the CZM, and contact mechanics, all of which are formulated in the framework of the explicit FEM (Munjiza 2004). This section therefore focuses on the features of 3D Y-HFDEM IDE that are unavailable in other FDEM codes, such as the hyperelastic model, the irreversible damage during unloading, and the extrinsic cohesive zone model. Because these features must be introduced in context, some of the fundamental FDEM theory is also reviewed here, a choice further motivated by the poor, unclear and even incorrect descriptions found in some of the FDEM literature.

The continuum behavior of materials, including rocks, is modelled in 3D by an assembly of 4-node tetrahedral continuum finite elements (TET4s) (Fig. 1a). Two types of elastic constitutive models have been implemented. In the first type, which was implemented in the original Y code and has been widely used, the isotropic elastic solid obeys the neo-Hookean elastic model of Eq. (1):

where σij denotes the Cauchy stress tensor, Bij is the left Cauchy–Green deformation tensor, λ and µ are the Lamé constants, J is the determinant of the deformation gradient, η is the viscous damping coefficient, δij is the Kronecker delta, and Dij is the rate of deformation tensor. However, Eq. (1) cannot model anisotropic elasticity, which is important in rock engineering. Thus, in the second type, a hyperelastic solid obeying Eqs. (2) and (3) is also implemented:

where SKL denotes the second Piola–Kirchhoff stress tensor, CKLMN is the effective elastic stiffness tensor, EMN is the Green–Lagrange strain tensor, and FiK is the deformation gradient. Einstein’s summation convention applies to Eqs. (2) and (3). By properly setting CKLMN in Eq. (2), both isotropic and anisotropic elastic behaviors can be simulated, although only isotropic behavior is considered in this study because the rocks studied here are better modelled as isotropic materials. Because the small strain tensor is not used in Eqs. (1) and (2), large displacements and large rotations can be simulated. Equation (1) is used for the 3D UCS and BTS modelling presented in Sect. 3.2, whereas both Eq. (1) and Eqs. (2) and (3) are used for the 3D dynamic BTS modelling presented in Sect. 3.3; no noticeable differences between the two are observed because the rock deformation is small. To simulate the deformation of materials under quasi-static loading, η = ηcrit = 2h√(ρE) is used to achieve critical damping (Munjiza 2004), where h, ρ and E are the element length, density and Young’s modulus, respectively, of the target material. The value of σij within each TET4 is converted to the equivalent nodal force fint (e.g., Munjiza et al. 2015).
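The constitutive relations above can be illustrated with the following C++ sketch for a single TET4 whose deformation gradient F is known. Because Eqs. (1) to (3) are not reproduced in this text, the sketch assumes the standard compressible neo-Hookean form σ = (µ/J)(B − I) + (λ/J) ln(J) I + ηD with B = FFᵀ, and, for the hyperelastic solid, an isotropic stiffness tensor giving S = λ tr(E) I + 2µE with E = ½(FᵀF − I) and σ = J⁻¹FSFᵀ (the Saint Venant–Kirchhoff model). It also evaluates the critical damping coefficient ηcrit = 2h√(ρE) given in the text. The type Mat3 and all function names are hypothetical and are not taken from the Y-HFDEM IDE source.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Row-major 3x3 matrix: a hypothetical helper type for this sketch only.
using Mat3 = std::array<std::array<double, 3>, 3>;

Mat3 identity() {
    Mat3 I{};
    for (int i = 0; i < 3; ++i) I[i][i] = 1.0;
    return I;
}

Mat3 transpose(const Mat3& A) {
    Mat3 T{};
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j) T[i][j] = A[j][i];
    return T;
}

Mat3 mul(const Mat3& A, const Mat3& B) {
    Mat3 C{};
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j)
            for (int k = 0; k < 3; ++k) C[i][j] += A[i][k] * B[k][j];
    return C;
}

double det(const Mat3& A) {
    return A[0][0] * (A[1][1] * A[2][2] - A[1][2] * A[2][1])
         - A[0][1] * (A[1][0] * A[2][2] - A[1][2] * A[2][0])
         + A[0][2] * (A[1][0] * A[2][1] - A[1][1] * A[2][0]);
}

// Assumed neo-Hookean form: sigma = (mu/J)(B - I) + (lambda/J) ln(J) I + eta * D,
// with B = F F^T (left Cauchy-Green tensor) and D the rate of deformation tensor.
Mat3 neoHookeanCauchy(const Mat3& F, const Mat3& D, double lambda, double mu, double eta) {
    const double J = det(F);
    assert(J > 0.0);  // an inverted element has no physical meaning
    const Mat3 B = mul(F, transpose(F));
    Mat3 sigma{};
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j) {
            const double delta = (i == j) ? 1.0 : 0.0;
            sigma[i][j] = mu / J * (B[i][j] - delta)
                        + lambda / J * std::log(J) * delta
                        + eta * D[i][j];
        }
    return sigma;
}

// Assumed Saint Venant-Kirchhoff form with an isotropic stiffness tensor:
// E = (F^T F - I)/2, S = lambda tr(E) I + 2 mu E, sigma = F S F^T / J.
Mat3 stVenantKirchhoffCauchy(const Mat3& F, double lambda, double mu) {
    const Mat3 C = mul(transpose(F), F);
    Mat3 E{};
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j) E[i][j] = 0.5 * (C[i][j] - (i == j ? 1.0 : 0.0));
    const double trE = E[0][0] + E[1][1] + E[2][2];
    Mat3 S{};
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j) S[i][j] = 2.0 * mu * E[i][j] + (i == j ? lambda * trE : 0.0);
    const double J = det(F);
    assert(J > 0.0);
    Mat3 sigma = mul(mul(F, S), transpose(F));
    for (auto& row : sigma)
        for (double& v : row) v /= J;  // push-forward to the Cauchy stress
    return sigma;
}

// Critical damping coefficient eta_crit = 2 h sqrt(rho E) for quasi-static runs.
double criticalDamping(double h, double rho, double youngs) {
    return 2.0 * h * std::sqrt(rho * youngs);
}
```

Both stress functions reduce to linear isotropic elasticity for small strains (e.g., a uniaxial stretch of 10⁻⁶ with λ = µ = 1 gives σ11 ≈ 3 × 10⁻⁶ and σ22 ≈ 10⁻⁶), which is consistent with the observation above that Eq. (1) and Eqs. (2) and (3) give practically identical results when rock deformation is small. In a TET4, F would be assembled from the nodal coordinates (and D from the nodal velocities) before these functions are called; that assembly is outside this sketch.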

Fracturing of rock under mode I and mode II loading conditions (i.e., opening and sliding cracks, respectively) is modelled using the CZM with the concept of a smeared crack (Munjiza et al. 1999). To model the behavior of the fracture process zone (FPZ) ahead of the crack tips, tensile and shear softening is applied using an assembly of 6-node, initially zero-thickness cohesive elements (CE6s) (Fig. 1a) as a function of the crack opening and sliding displacements, o and s, respectively (Fig. 1b). Two methods can be used to insert the CE6s. The first is to insert CE6s into all of the boundaries between TET4s at the beginning of the analysis, which is known as the intrinsic cohesive zone model (ICZM); the second is to adaptively insert CE6s, with the help of adaptive remeshing techniques, into particular TET4 boundaries where a given failure criterion is met, which is referred to as the extrinsic cohesive zone model (ECZM) (Zhang et al. 2007; Fukuda et al. 2017). Many existing FDEM codes, such as the family of Y codes that includes 3D Y-HFDEM IDE, employ the ICZM, whereas some codes, such as ELFEN, use the ECZM. One advantage of the ICZM is that implementing and applying parallel computing algorithms are straightforward, but an “artificial” intact, elastic behavior of the CE6s before the onset of fracturing must be specified, which requires the introduction and correct estimation of penalty terms for the CE6s and the careful selection of the time step increment, Δt, to avoid numerical instability. In the GPGPU-based 3D Y-HFDEM IDE, the normal and shear cohesive tractions (σcoh and τcoh, respectively) acting on each face of the CE6s are computed using Eqs. (4) and (5), assuming tensile and shear softening behaviors, respectively:

where op and sp are the “artificial” elastic limits of o and s, respectively, ooverlap is the representative overlap when o is negative, Ts is the tensile strength of a CE6, c is the cohesion of a CE6, and ϕ is the internal friction angle of a CE6. Positive values of o and σcoh indicate crack opening and a tensile cohesive traction, respectively. Equation (5) corresponds to the Mohr–Coulomb shear strength model with a tension cutoff. The cohesive tractions σcoh and τcoh are applied in the directions opposite to the relative opening and sliding in a CE6, respectively. The artificial elastic behavior of each CE6, characterized by op and sp along with ooverlap, is necessary when the ICZM is used to connect the TET4s to represent the intact deformation process; these parameters are given as follows and have been used in most FDEM codes (Munjiza et al. 1999):

where Popen, Ptan and Poverlap are the artificial penalty terms of the CE6 for opening in the normal direction, sliding in the tangential direction and overlapping in the normal direction, respectively, and h is the element length. In this paper, the term “fracture penalties” used for Popen, Ptan and Poverlap in previous publications is intentionally avoided because these terms should not be considered as penalties for the fracturing behavior but rather as penalties controlling the artificial elastic (intact) regime of the CE6. The values of Popen, Ptan and Poverlap can be considered as the artificial stiffnesses of the CE6 for opening, sliding and overlapping, respectively. Ideally, they would be infinite so that the intact behavior of rock follows Eq. (1) or (2), which would in turn require an infinitesimal Δt. Because infinite values cannot be used in actual numerical simulations, artificial penalty terms that are reasonably large compared with the Young’s modulus or Lamé constants are required. Otherwise, the bulk rock behaves significantly differently from (i.e., more softly than) the behavior specified by Eq. (1) or (2), and the elastic constants in these constitutive equations completely lose their meaning. In addition, during the development of the 3D Y-HFDEM IDE code, the authors found that the penalty values recommended as sufficiently large in many previous FDEM studies (10 times the Young’s modulus of rock, Erock, in most cases) are not sufficient to reproduce the continuum elastic behavior of Eq. (1) or (2); this topic is investigated and discussed in Sect. 3 for both quasi-static and dynamic loading problems. Some studies argue that the artificial penalty terms are mechanical properties.
However, in that case, the CE6s must be considered as joint elements, and their artificial penalty terms should be called joint stiffnesses, which describe discontinuous media such as preexisting joints. The function f(D) in Eqs. (4) and (5) is the characteristic function for the tensile and shear softening curves (Fig. 1b) and depends on the damage value D of the CE6. A CE6 with 0 < D < 1 can be considered as a microscopic crack, and one with D = 1 as a macroscopic crack. The following definitions of D and f(D) are used to consider not only mode I and mode II fracturing but also mixed-mode I–II fracturing (Mahabadi et al. 2012; Munjiza et al. 1999):

where A, B and C are intrinsic rock properties that determine the shapes of the softening curves, and ot and st are the critical values of o and s, respectively, at which a CE6 breaks and becomes a macroscopic fracture. To avoid unrealistic damage recovery (i.e., an increase of f), the following treatment has been implemented in the code: if the trial f computed from Eq. (10) at the current time step is larger than its value at the previous time step, fpre, then f = fpre is assigned. The values of ot and st in Eq. (9) satisfy the mode I and II fracture energies, GfI and GfII (Fig. 1b), specified in Eqs. (11) and (12), respectively:

where Wres is the work per unit area of a CE6 done by the residual stress term in the Mohr–Coulomb shear strength model. Note that the current formulation does not distinguish between mode II and mode III fracturing; the in-plane (mode II) and out-of-plane (mode III) responses of the microcracks (i.e., CE6s) are both described simply by the parameter GfII, because clearly defining crack tips and conducting reproducible and reliable mode II and mode III fracture toughness tests are challenging. Because of the lack of experimental data, this paper uses the same f(D), with A, B and C equal to 0.63, 1.8 and 6.0, respectively (Munjiza et al. 1999), for both mode I and mode II fracture processes. However, it is worth mentioning that recent studies by Osthus et al. (2018) and Godinez et al. (2018) using the FDEM code “2D HOSS” showed that the shape of the softening curve had only a minor influence on the results and that the tensile and shear strengths and fracture energies were the main influencing factors. Unloading (i.e., a decrease of o or |s|) can also occur in the softening regime (i.e., o > op or |s| > sp) (see Fig. 1b) and is modelled based on Eqs. (13) and (14) (Camacho and Ortiz 1996):

In each CE6, the computed σcoh and τcoh are converted to the equivalent nodal force fcoh using a 3-point or 7-point Gaussian integration scheme, depending on the precision required by the simulation. When either ot or st is reached in a CE6, the CE6 is deactivated, and its surfaces are treated as new macroscopic fracture surfaces that are subject to contact processes.
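The exact forms of Eqs. (9)–(12) are not given in this section, so the C++ sketch below only illustrates, under stated assumptions, how the per-CE6 quantities described above could be evaluated. It assumes (i) a commonly used mixed-mode definition of D, combining the normalized opening and sliding beyond their peak values op and sp; (ii) the softening function in the form usually attributed to Munjiza et al. (1999), with the A, B and C values quoted above; (iii) for pure mode I, a softening branch σcoh = f(D)·Ts with D = (o − op)/(ot − op), so that Eq. (11) reduces to GfI = Ts (ot − op) ∫ f(D) dD over [0, 1] and can be solved for ot; and (iv) the 3-point Gauss rule on one linear triangular CE6 face, with points at the area coordinates (2/3, 1/6, 1/6) and its permutations, each weighted by one third of the face area. All function names are hypothetical, and this is not the code's actual implementation.

```cpp
#include <algorithm>
#include <array>
#include <cmath>

// Softening shape parameters A, B, C quoted in this section (Munjiza et al. 1999).
constexpr double kA = 0.63, kB = 1.8, kC = 6.0;

// ASSUMED mixed-mode damage: Euclidean combination of the normalized opening and
// sliding beyond their peak values op and sp, floored at 0 and capped at 1.
double damage(double o, double s, double op, double ot, double sp, double st) {
    const double dn = std::max(0.0, (o - op) / (ot - op));
    const double ds = std::max(0.0, (std::fabs(s) - sp) / (st - sp));
    return std::min(1.0, std::sqrt(dn * dn + ds * ds));
}

// ASSUMED softening function (form attributed to Munjiza et al. 1999); f(0) = 1, f(1) = 0.
double softening(double D) {
    const double ab = kA + kB;
    const double decay = std::exp(D * (kA + kC * kB) / (ab * (1.0 - ab)));
    return (1.0 - (ab - 1.0) / ab * decay) * (kA * (1.0 - D) + kB * std::pow(1.0 - D, kC));
}

// Damage irreversibility: a trial f above the previous value f_pre is reset to f_pre.
double irreversible_f(double f_trial, double f_pre) { return std::min(f_trial, f_pre); }

// Area under f(D) on [0, 1] by the composite Simpson rule (n is rounded up to even).
double softening_area(int n = 1000) {
    if (n % 2 != 0) ++n;
    const double h = 1.0 / n;
    double sum = softening(0.0) + softening(1.0);
    for (int i = 1; i < n; ++i) sum += (i % 2 == 1 ? 4.0 : 2.0) * softening(i * h);
    return sum * h / 3.0;
}

// ASSUMED pure mode I softening branch sigma = f(D) * Ts, D = (o - op)/(ot - op):
// GfI = Ts * (ot - op) * area, solved for ot (hardening branch below op excluded).
double critical_opening(double GfI, double Ts, double op) {
    return op + GfI / (Ts * softening_area());
}

using Vec3 = std::array<double, 3>;

// Equivalent nodal forces F_a = integral of N_a * t over one linear triangular CE6
// face, by the 3-point Gauss rule (exact to degree 2; weight = area / 3 per point).
template <class Traction>
std::array<Vec3, 3> nodal_forces_3pt(double area, Traction traction) {
    // Gauss points in area coordinates (L1, L2, L3); N_a = L_a for a linear triangle.
    const double gp[3][3] = {{2.0 / 3, 1.0 / 6, 1.0 / 6},
                             {1.0 / 6, 2.0 / 3, 1.0 / 6},
                             {1.0 / 6, 1.0 / 6, 2.0 / 3}};
    std::array<Vec3, 3> f{};  // zero-initialized nodal forces
    for (const auto& L : gp) {
        const Vec3 t = traction(L[0], L[1], L[2]);
        for (int a = 0; a < 3; ++a)
            for (int k = 0; k < 3; ++k) f[a][k] += (area / 3.0) * L[a] * t[k];
    }
    return f;
}
```

By construction f(0) = 1 and f(1) = 0, so a CE6 carries its full strength when softening starts and none once it becomes a macroscopic crack; the mode II counterpart of critical_opening would additionally involve Wres and is not shown.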

The contact processes between the material surfaces, including the new macroscopic fractures created by the separation of CE6s, are modelled by the penalty method; a complete explanation of the method is given by Munjiza (2004). Briefly, when any two TET4s subjected to contact detection (see Sect. 2.2 for the implementation of contact detection in the GPGPU framework) are found to overlap, the contact potential due to the overlap of the two TET4s (i.e., the contacting couple) is computed exactly. The normal contact force, fcon_n, is then computed for each contacting couple; it acts normal to the contact surface and is proportional to the contact potential, and the proportionality factor is called the normal “contact penalty”, Pn_con. Once fcon_n and its acting point are obtained, the nominal normal overlap, on, and the relative displacement vector, Δuslide, at the acting point of fcon_n are readily computed. The contact damping model proposed by An and Tannant (2007) (Fig. 2) can also be applied when contact damping plays an important role. In this scheme, the normal contact force fcon_n described above is regarded as a trial contact force, (fcon_n)try, and a trial contact stress, (σcon_n)try, is computed by dividing (fcon_n)try by the contact area, Acon. Equation (15) is then used to determine the contact stress σcon_n:

where T is the transition force, b is the exponent, and on_max is the maximum value of on experienced during the loading process at the contact. T limits σcon_n and defines the transition between a linearly elastic stress–displacement relationship and a ‘recoverable’ displacement at a constant contact stress. T may be related to physical properties of rocks, such as the uniaxial compressive strength. The exponent b adjusts the power of the damping function applied to the rebound (extension) phase of the contact and therefore controls the energy loss during an impact event. A similar contact damping model, in which only b is considered, is implemented in the 2D FDEM code Y-Geo (Mahabadi et al. 2012). After σcon_n is computed using Eq. (15), it is converted to fcon_n (= Acon × σcon_n). The verification of the implemented contact damping is discussed in Sect. 3.1. After fcon_n is determined, the magnitude of the tangential contact force vector, |fcon_tan|, is computed according to the classical Coulomb friction law, Eq. (16):

where μfric is the friction coefficient between the contact surfaces. The tangential contact force, fcon_tan, is applied parallel to the contact surface in the opposite direction to Δuslide. The verification of the implementation of the contact friction, which is important in any simulation of fracturing due to quasi-static loading, is discussed in Sect. 3.1. In each contacting couple, the contact force is converted to the equivalent nodal force fcon (Munjiza 2004).
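The normal damping and tangential friction steps described above can be sketched as follows. Because Eq. (15) is not reproduced in this section, the damping class is only a hypothetical reading of the verbal description: on loading, the contact stress follows the trial stress (σcon_n)try but is capped at T; on rebound (on < on_max), it decays from the stress reached at on_max as (on/on_max)^b. Resetting the history on separation is also an assumption. For friction, the sketch assumes the sliding direction is Δuslide projected onto the contact plane (unit normal n), so the force is exactly parallel to the surface; it covers only the sliding state of Eq. (16) and has no stick branch. All names are hypothetical.

```cpp
#include <algorithm>
#include <array>
#include <cmath>

using Vec3 = std::array<double, 3>;

// HYPOTHETICAL reading of the damped normal contact law (Eq. (15) not reproduced):
// loading follows the trial stress capped at T; rebound decays as (on/on_max)^b.
class PowerFunctionContact {
public:
    PowerFunctionContact(double T, double b) : T_(T), b_(b) {}

    // sigma_try: trial stress (fcon_n)try / Acon; on: current nominal normal overlap.
    double stress(double sigma_try, double on) {
        if (on <= 0.0) {  // separation; resetting the history here is an assumption
            on_max_ = 0.0;
            sigma_max_ = 0.0;
            return 0.0;
        }
        if (on >= on_max_) {  // loading branch
            on_max_ = on;
            sigma_max_ = std::min(sigma_try, T_);
            return sigma_max_;
        }
        return sigma_max_ * std::pow(on / on_max_, b_);  // rebound branch
    }

private:
    double T_, b_;
    double on_max_ = 0.0, sigma_max_ = 0.0;
};

double dot(const Vec3& a, const Vec3& b) { return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]; }

// Coulomb friction in the sliding state: magnitude mu * |fn| (Eq. (16)), directed
// opposite to the sliding displacement projected onto the contact plane (unit normal n).
Vec3 friction_force(double fn, double mu, const Vec3& du_slide, const Vec3& n) {
    const double un = dot(du_slide, n);
    const Vec3 ut{du_slide[0] - un * n[0], du_slide[1] - un * n[1], du_slide[2] - un * n[2]};
    const double len = std::sqrt(dot(ut, ut));
    if (len == 0.0) return Vec3{0.0, 0.0, 0.0};  // no tangential slip, no defined direction
    const double scale = -mu * std::fabs(fn) / len;
    return Vec3{scale * ut[0], scale * ut[1], scale * ut[2]};
}
```

Under this reading, b = 1 with T = ∞ makes the rebound branch retrace the loading line, so no energy is lost (the elastic case of Sect. 3.1), while a larger b makes the rebound stress drop faster and dissipates more energy.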

Elastic–inelastic power function model for contact damping [modified after An and Tannant (2007)]

By computing the nodal forces described above, the following equation of motion, Eq. (17), is obtained and solved in the framework of the explicit FEM (Munjiza 2004):

where M is the lumped nodal mass computed from the initial TET4 volume and the element mass density ρ, u is the nodal displacement, and fext is the nodal force corresponding to the external load. The central difference scheme is employed for the explicit time integration of Eq. (17). The time step, Δt, must be selected carefully to avoid numerical instability and spurious fracture modes; a detailed explanation of how to select a suitable Δt in conventional FDEM can be found in Guo (2014).
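The lumped mass and the central difference update can be sketched as follows. For a linear TET4, distributing ρV equally over its four nodes is the usual lumping, and the leapfrog form below, with velocities stored at half steps, is one standard way of writing the central difference scheme. The function names are hypothetical, and the sketch advances a single degree of freedom rather than the full nodal force vector of Eq. (17).

```cpp
#include <array>
#include <cmath>

using Vec3 = std::array<double, 3>;

// Volume of a 4-node tetrahedron (TET4) from its nodal coordinates.
double tet_volume(const Vec3& a, const Vec3& b, const Vec3& c, const Vec3& d) {
    const Vec3 u{b[0] - a[0], b[1] - a[1], b[2] - a[2]};
    const Vec3 v{c[0] - a[0], c[1] - a[1], c[2] - a[2]};
    const Vec3 w{d[0] - a[0], d[1] - a[1], d[2] - a[2]};
    const double det = u[0] * (v[1] * w[2] - v[2] * w[1])
                     - u[1] * (v[0] * w[2] - v[2] * w[0])
                     + u[2] * (v[0] * w[1] - v[1] * w[0]);
    return std::fabs(det) / 6.0;
}

// Lumped mass contributed by one TET4 to each of its four nodes.
double lumped_nodal_mass(double rho, double volume) { return rho * volume / 4.0; }

// One central-difference (leapfrog) step for a nodal degree of freedom:
//   v_{n+1/2} = v_{n-1/2} + dt * f_n / m,   u_{n+1} = u_n + dt * v_{n+1/2}
void central_difference_step(double& u, double& v_half, double f, double m, double dt) {
    v_half += dt * f / m;
    u += dt * v_half;
}
```

For a linear spring (f = −ku), this update is stable only for Δt < 2√(m/k), which is one way to see why Δt must be chosen with care.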

2.2 GPGPU-Based Parallelization of 3D Y-HFDEM IDE by CUDA C/C++

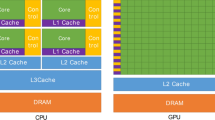

To speed up the simulation process of the 3D Y-HFDEM IDE code, a parallel computation scheme based on the NVIDIA® GPGPU accelerator is incorporated. In our case, the computation on the GPGPU device is controlled through NVIDIA’s CUDA C/C++ (NVIDIA 2018), which is essentially the ordinary C/C++ programming language with several extensions that make it possible to leverage the power of the GPGPU. The CUDA programming model uses the abstractions of “threads”, “blocks” and “grids” (Fig. 3), and the parallel computation itself takes place within the GPGPU device. Functions, known as “kernels”, are launched on the GPGPU device and executed by many “threads” in parallel. A “thread” is simply one execution of a “kernel” with a given “thread index” within a particular “block”. As shown in Fig. 3, a “block” is a group of threads, and each “block” is given a unique “block index”. Together, the “block index” and “thread index” give each thread a unique global “index” with which it accesses elements of the GPGPU data arrays, so that the collection of all threads processes the entire data set in a massively parallel manner. A “grid” is simply a group of “blocks”; only a single “grid” is used in this study. A GPGPU cluster with a massive number of GPGPU accelerators is also possible, although it is beyond the scope of this paper. The “blocks” can execute concurrently or serially depending on the number of streaming multiprocessors available in a GPGPU accelerator. “Threads” within the same “block” can be synchronized, but “blocks” cannot be synchronized with one another during the execution of a kernel. At the “thread” level, the code that each “thread” executes is very similar to the CPU-based sequential code (see Fukuda et al. 2019), which is one of the advantages of using CUDA C/C++.
For example, the Quadro GP100 accelerator (Pascal generation) used in this paper contains 56 streaming multiprocessors and 3584 CUDA cores (NVIDIA 2018). Running on such an accelerator, the GPGPU-parallelized code can achieve higher computational performance than ordinary CPU-based sequential codes. The number of “blocks” per “grid” (NBpG) and the number of “threads” per “block” (NTpB) can be tuned to optimize the GPGPU performance (Fig. 3). The current version of 3D Y-HFDEM IDE normally sets NTpB to either 256 or 512, and NBpG is automatically computed by dividing the total number of threads (Nthread) in each “kernel” by NTpB, with one additional block added when Nthread is not a multiple of NTpB. Nthread is set equal to the total number of TET4s, CE6s, contact couples or nodes, depending on the purpose of each “kernel”.
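The launch arithmetic described above can be checked on the CPU with the sketch below: NBpG is the ceiling of Nthread/NTpB, and each thread derives its global index from its block and thread indices, with a guard for the surplus threads of the last block. In an actual CUDA kernel the index would be computed as blockIdx.x * blockDim.x + threadIdx.x and the kernel launched as kernel<<<NBpG, NTpB>>>(...); the emulation loop and the function names here are illustrative only.

```cpp
#include <cstddef>
#include <vector>

// Number of blocks per grid (NBpG) for Nthread work items and NTpB threads per
// block: ceiling division, i.e. one extra block when Nthread % NTpB != 0.
std::size_t blocks_per_grid(std::size_t n_thread, std::size_t ntpb) {
    return (n_thread + ntpb - 1) / ntpb;
}

// CPU emulation of a 1D kernel launch: each (block, thread) pair computes the same
// global index as blockIdx.x * blockDim.x + threadIdx.x, and threads whose index
// falls beyond the data range do nothing.
template <class Body>
void emulate_launch(std::size_t n_thread, std::size_t ntpb, Body body) {
    const std::size_t nbpg = blocks_per_grid(n_thread, ntpb);
    for (std::size_t block = 0; block < nbpg; ++block)
        for (std::size_t thread = 0; thread < ntpb; ++thread) {
            const std::size_t gid = block * ntpb + thread;
            if (gid < n_thread) body(gid);  // guard for the partially filled last block
        }
}
```

With NTpB = 256, for example, 1000 TET4s need four blocks, and the last 24 threads of the fourth block are idle.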

In the GPGPU implementation of 3D Y-HFDEM IDE, the computations for each TET4 (fint and M), CE6 (fcoh), contact couple (fcon) or nodal equation of motion (Eq. (17)) are assigned to GPGPU “kernels” as shown in Fig. 4 and processed in a massively parallel manner. The CUDA code in each “kernel” is similar to the corresponding function/subroutine in CPU-based sequential codes, so most parts of the original sequential CPU-based code can be reused with minimal modifications. For the computation of the contact force, fcon, “TET4 to TET4 (TtoT)” contact interaction kinematics were used in the earliest versions of the Y3D code (Munjiza 2004). The TtoT approach exactly considers the geometries of both the contactor and target TET4s, and the contact force distributed along the surfaces of the TET4s is integrated analytically. Because the contact forces are integrated exactly, this approach is precise, although quite time consuming. As pointed out by Lei et al. (2014), the contact interaction in 3D can be simplified by “TET4 to point (TtoP)” contact interaction kinematics, which make the implementation simpler and more time efficient. However, the contact force computed using the TtoP approach is less accurate unless a sufficient number of target points per TET4 is used. Thus, the TtoT approach is intentionally applied instead of the TtoP approach in all of the numerical simulations in this paper to ensure the precision of the computed contact force.

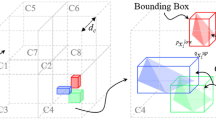

A flowchart of the GPGPU-based 3D Y-HFDEM IDE is shown in Fig. 5. One of the challenging tasks in Fig. 5 is the implementation of contact detection, which must identify each contacting couple entirely on the GPGPU without any sequential computational procedure. For sequential CPU implementations, powerful and efficient contact detection algorithms, such as the No Binary Search and Munjiza–Rougier algorithms, have been proposed (Munjiza 2004; Munjiza et al. 2011); these achieve the fastest (i.e., linear) neighbor searches with a computational complexity of O(N), where N is the number of TET4 candidates subjected to contact detection, so the required computation is proportional to N. However, these algorithms are not straightforward to implement in GPGPU-based code. Because FDEM modelling requires a fine mesh that often consists of TET4s of similar size, the following contact detection algorithm is implemented in the GPGPU-based 3D Y-HFDEM IDE code. The analysis domain, comprising a massive number of TET4s, is subdivided into nx × ny × nz equal-sized cubic sub-cells (Fig. 6), whose size is chosen so that the largest TET4 in the analysis domain fits completely within a single sub-cell. In this way, the center point of every TET4 always belongs to a unique sub-cell. Using integer coordinates (ix, iy, iz) (ix = 0,…, nx−1, iy = 0,…, ny−1, iz = 0,…, nz−1) for the location of each sub-cell (Fig. 6), a unique hash value, h = iz × nx × ny + iy × nx + ix, is assigned to each sub-cell. The subsequent contact detection procedure is explained using the simplified example in Fig. 7, where ten TET4 candidates of similar size are subjected to contact detection.
First, all of the TET4s are mapped onto integer coordinates (ix, iy, iz = 0, 1 and 2, with nx = ny = nz = 3), and the hash value of the sub-cell containing each TET4 is computed. In this way, the list L-1 is readily constructed by massively parallel computation based on the concept shown in Fig. 4, with the computation for each TET4 assigned to one CUDA “thread”. The IDs of the TET4s in the list L-1 in Fig. 7 are then sorted in ascending order using the hash values h as keys, which generates the list L-2 in Fig. 7. The radix sort algorithm optimized for CUDA (Satish et al. 2009), as implemented in the open-source “thrust” library, is used for this key sorting, so this step is also processed in a massively parallel manner. Using the list L-2 and the GPGPU device’s shared memory (NVIDIA 2018), the list L-3 in Fig. 7 is then constructed in a GPGPU “kernel”; it identifies the first and last indices of each hash value in the list L-2. Therefore, with the lists L-2 and L-3, the IDs of all the TET4s in a sub-cell with a given hash value are readily available. Finally, for a particular TET4, it is sufficient to search only the 27 sub-cells formed by its own sub-cell and the 26 adjacent ones [or 14 sub-cells using the concept of a contact mask (Munjiza 2004)], which makes efficient contact detection possible using only the GPGPU device, without a sequential CPU procedure. When the sizes of the TET4s differ greatly, the cubic sub-cells must be very large to contain the largest TET4, resulting in very inefficient contact detection; in that case, other parallel neighbor search schemes, such as the Barnes–Hut tree algorithm (Burtscher and Pingali 2011), should be used. The contact detection algorithm used in the GPGPU-based 3D Y-HFDEM IDE is similar to the hash-based contact detection algorithm applied by Lisjak et al. (2018). However, Lisjak et al. (2018) further incorporated the hyperplane separation theorem as a second step of contact detection. Unfortunately, Lisjak et al. (2018) discussed neither the algorithm used to generate the hash table in the framework of OpenCL, which is a very important aspect of the performance gain, nor the effect of introducing the second step. Furthermore, the speed-up of their code, Irazu, for 3D simulations was not reported. In contrast, the computing performance of our GPGPU-parallelized 3D Y-HFDEM IDE is discussed in Sect. 3.4 of this study, which shows that the code is very efficient in terms of contact detection and achieves a computational complexity of O(N).
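The L-1/L-2/L-3 procedure can be sketched sequentially in C++ as follows. This is a CPU illustration under stated assumptions, not the CUDA implementation: std::sort stands in for the thrust radix sort, L-3 is built with a plain loop instead of a shared-memory kernel, only TET4 centers are binned, and all 27 neighboring sub-cells are searched (no contact mask). The returned pairs are only candidates; the exact TtoT overlap computation would follow. The names CellGrid and candidate_pairs are hypothetical.

```cpp
#include <algorithm>
#include <array>
#include <cmath>
#include <utility>
#include <vector>

using Vec3 = std::array<double, 3>;

// Uniform grid of nx*ny*nz cubic sub-cells; `cell` must be at least the size of
// the largest TET4 so that only neighbouring sub-cells can hold contacting TET4s.
struct CellGrid {
    Vec3 origin;
    double cell;
    int nx, ny, nz;

    // h = iz*nx*ny + iy*nx + ix (unique per sub-cell)
    int hash(int ix, int iy, int iz) const { return (iz * ny + iy) * nx + ix; }

    // Integer sub-cell coordinate along one axis, clamped to the grid.
    int coord(double x, double x0, int n) const {
        const int i = static_cast<int>(std::floor((x - x0) / cell));
        return std::min(std::max(i, 0), n - 1);
    }
};

// Candidate TET4 pairs (i < j) whose centers lie in the same or adjacent sub-cells.
std::vector<std::pair<int, int>> candidate_pairs(const std::vector<Vec3>& centers,
                                                 const CellGrid& g) {
    const int n = static_cast<int>(centers.size());

    // L-1: (hash, TET4 ID); one CUDA thread per TET4 in a GPU version.
    std::vector<std::pair<int, int>> list(n);
    for (int e = 0; e < n; ++e) {
        const Vec3& c = centers[e];
        list[e] = {g.hash(g.coord(c[0], g.origin[0], g.nx), g.coord(c[1], g.origin[1], g.ny),
                          g.coord(c[2], g.origin[2], g.nz)),
                   e};
    }

    // L-2: sort by hash (a GPU version would use a radix sort, e.g. in thrust).
    std::sort(list.begin(), list.end());

    // L-3: first and one-past-last position of each hash value in L-2 (-1: empty).
    const int ncell = g.nx * g.ny * g.nz;
    std::vector<int> first(ncell, -1), last(ncell, -1);
    for (int k = 0; k < n; ++k) {
        const int h = list[k].first;
        if (first[h] < 0) first[h] = k;
        last[h] = k + 1;
    }

    // Search the own sub-cell and its 26 neighbours (27 sub-cells, no contact mask).
    std::vector<std::pair<int, int>> pairs;
    for (int k = 0; k < n; ++k) {
        const int h = list[k].first, id = list[k].second;
        const int ix = h % g.nx, iy = (h / g.nx) % g.ny, iz = h / (g.nx * g.ny);
        for (int dz = -1; dz <= 1; ++dz)
            for (int dy = -1; dy <= 1; ++dy)
                for (int dx = -1; dx <= 1; ++dx) {
                    const int jx = ix + dx, jy = iy + dy, jz = iz + dz;
                    if (jx < 0 || jy < 0 || jz < 0 || jx >= g.nx || jy >= g.ny || jz >= g.nz)
                        continue;
                    const int hn = g.hash(jx, jy, jz);
                    for (int m = first[hn]; m >= 0 && m < last[hn]; ++m)
                        if (list[m].second > id) pairs.emplace_back(id, list[m].second);
                }
    }
    return pairs;
}
```

Each unordered pair is reported once, from the TET4 with the smaller ID, because the neighbor relation between sub-cells is symmetric.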

Therefore, the GPGPU-parallelized 3D Y-HFDEM IDE code runs completely in parallel on the GPGPU device, and no sequential processing is necessary except for the input and output procedures. Data must be transferred from the GPGPU device to the host computer to output the analysis results, but the transfer time is often negligible compared with the entire simulation time for most Y-HFDEM IDE simulations. The results can be visualized either with the OpenGL-based visualization implemented in the 3D Y-HFDEM IDE code (Liu et al. 2015) or with the open-source visualization software ParaView (Ayachit 2015).

Finally, it should be noted that an efficient contact calculation activation approach has been applied in some publications on the FDEM in the framework of the ICZM [e.g., Section 2.3.3.2 and Fig. 2.14 in Guo (2014)]. In this approach, only the TET4s in the vicinity of newly broken/failed CE6s become contact candidates and are added to the contact detection list. Its advantage is that, until broken/failed CE6s are generated, contact detection and contact force calculations are needed only for the initial material surfaces, so dramatic savings in contact detection time are possible. This approach is hereafter called the efficient contact detection activation (ECDA) approach. However, in ICZM-based FDEM simulations of hard rocks under compressive loading, most of the TET4s can overlap as compression progresses, even before any broken/failed CE6s are generated. If this overlap is not negligible when broken CE6s are generated, the ECDA approach generally applies the contact force suddenly, like a step function, which can easily cause numerical instability and unrealistic/spurious fragmentation. Avoiding this instability and the spurious fracturing modes would require an infinitesimally small Δt, which makes the simulation intractable. One way to avoid the instability is to monitor the overlap of the CE6s: if a significant overlap is detected in a CE6, the TET4s in its vicinity are immediately added as new contact detection candidates, although the threshold for this “significant overlap” is problem-dependent. Another simple remedy is to add all of the TET4s as contact candidates; this is called the brute-force contact detection activation approach.
Although it is very time consuming, the brute-force contact detection activation approach works as a remedy for a wide range of rock conditions, including very hard rock, and it is used for the 3D dynamic BTS modelling of marble with the split Hopkinson pressure bar (SHPB) testing system. Although this problem has not been reported in the literature, all FDEM simulations must deal with it carefully to avoid inaccurate results; otherwise, the obtained fracture patterns may be spurious.
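A minimal sketch of the overlap-monitoring remedy, with hypothetical names: a TET4 becomes a contact candidate once a neighboring CE6 is broken or its overlap exceeds a problem-dependent threshold. For simplicity, "vicinity" is assumed here to mean only the two TET4s joined by each CE6, and flags are never cleared once set. The brute-force approach corresponds to setting every flag to true from the start.

```cpp
#include <vector>

// Hypothetical per-CE6 state used for contact activation.
struct CE6State {
    int tet_a, tet_b;  // IDs of the two TET4s joined by this CE6
    double overlap;    // current normal overlap (interpenetration) measure of the CE6
    bool broken;       // true once ot or st has been reached
};

// Marks as contact candidates the TET4s adjacent to every CE6 that is broken
// (ECDA) or whose overlap exceeds the problem-dependent threshold (monitoring).
void activate_contact_candidates(const std::vector<CE6State>& ce6s, double overlap_threshold,
                                 std::vector<bool>& is_candidate) {
    for (const CE6State& c : ce6s) {
        if (c.broken || c.overlap > overlap_threshold) {
            is_candidate[c.tet_a] = true;
            is_candidate[c.tet_b] = true;
        }
    }
}
```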

3 Numerical Tests and Code Validation

This section aims to verify and validate the GPGPU-parallelized 3D Y-HFDEM IDE code by conducting several numerical simulations. All of the numerical simulations in this section are conducted using the GPGPU-based code.

3.1 Verifications of Contact Damping and Contact Friction

To assess the accuracy of the contact damping model implemented in Sect. 2.1, a simple impact test is modelled (Fig. 8a) using the GPGPU-parallelized 3D Y-HFDEM IDE. The model extends to 3D the 2D model reported by Mahabadi et al. (2012). It consists of a spherical elastic body with a radius of 0.1 m impacting a fixed rigid surface vertically, and the elastic body is not allowed to fracture. Following Mahabadi et al. (2012), gravitational acceleration is neglected, the density of the elastic body is 2700 kg/m³, and the initial total kinetic energy of the elastic body before the impact, \(E_{\text{kin}}^{0}\), is 565.5 J. Because the Lamé constants λ and µ of the elastic body are not given in that study, it is simply assumed that λ = µ = 5.0 GPa and that the viscous damping coefficient η = 0 (no internal viscous damping). Contact friction is also neglected. Thus, energy is dissipated only by contact damping, which simplifies the discussion of its effect. Parametric analyses are conducted by varying the exponent b, the transition force T in Eq. (15) and the normal contact penalty Pn_con between the elastic body and the rigid surface. The total kinetic energy of the elastic body normalized by \(E_{\text{kin}}^{0}\) is monitored as a function of time during the parametric analyses.

Contact damping verification: a 3D model configuration [modified after Mahabadi et al. (2012)], b comparison between numerical and theoretical results

Figure 8b compares five cases with b = 1, 2, 5, 20 and 30 when T = ∞ (in practice a very large value, 1.0e+30 Pa) and Pn_con = 0.1 GPa. The case with b = 1 corresponds to an elastic contact, so no energy is dissipated by the contact; the very small decrease in the kinetic energy after the impact occurs because a small amount of kinetic energy is converted into strain energy of the elastic body. As b increases, the amount of kinetic energy dissipated from the system increases. This behavior is similar to that reported by Mahabadi et al. (2012) using a sequential 2D FDEM. The cases with different values of Pn_con (0.1 GPa and 10 GPa) but constant b = 2 and T = ∞ show that the same b does not result in the same energy dissipation when Pn_con differs. This is reasonable because the maximum nominal normal overlap on_max in Eq. (15) during the impact event changes with Pn_con (Munjiza 2004). However, this important fact was not reported by Mahabadi et al. (2012). Likewise, the two cases with different values of T (∞ and 1 MPa) but constant b = 2 and Pn_con = 0.1 GPa show different amounts of energy dissipation, which can also be explained by the change in on_max. These expected results verify that the contact detection and the computation of fcon are properly processed in the GPGPU-based code. Nevertheless, contact damping is not considered in the following numerical simulations, because calibrating its parameters against rock fall experiments is beyond the scope of this paper.

To assess the accuracy of the contact friction model implemented in Sect. 2.1, a simple sliding test, originally proposed by Xiang et al. (2009) as a 2D problem, is modelled as a 3D problem, and the results are compared with those of theoretical analyses. The model consists of a cube sliding along a fixed plane with a friction coefficient μfric = 0.5. The cube is assigned an initial velocity ranging from 1 m/s to 6 m/s in different runs, and in each run it slows down and stops due to friction between the sliding cube and the rigid base. Theoretically, the sliding distance can be expressed as a function of the initial velocity (vi), the gravitational acceleration (g) and the friction coefficient (μfric) through Eq. (18).
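For a cube sliding on a horizontal plane, the theoretical distance follows from the constant deceleration μfric·g: vi² = 2 μfric g d, so d = vi²/(2 μfric g), which is assumed here to be the content of Eq. (18). The short sketch below evaluates it; the function name and g = 9.81 m/s² are assumptions.

```cpp
#include <cassert>

// Sliding distance of a block decelerated by Coulomb friction on a horizontal
// plane: constant deceleration mu * g, so d = v_i^2 / (2 * mu * g).
double sliding_distance(double v_i, double mu, double g = 9.81) {
    assert(mu > 0.0 && g > 0.0);
    return v_i * v_i / (2.0 * mu * g);
}
```

With μfric = 0.5, the distance grows quadratically with vi, from about 0.10 m at 1 m/s to about 3.7 m at 6 m/s.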

Figure 9 shows an excellent agreement between the numerical simulation and the theoretical solution from Eq. (18), which validates the accuracy of the implemented contact friction model.

Contact friction verification: a 3D model configuration [modified after Xiang et al. (2009)], b comparison between numerical and theoretical results

3.2 3D FDEM Modelling of the Failure Process of Rock Under Quasi-Static Loading Conditions

In this subsection, two standard rock mechanics laboratory tests, the UCS test and BTS test, of a relatively homogeneous limestone are modelled to investigate the capabilities of the GPGPU-parallelized 3D Y-HFDEM IDE for simulating the fracturing process and associated failure mechanism of the rock under quasi-static loading conditions.

The numerical models for the 3D FDEM simulations of the UCS and BTS tests are shown in Fig. 10. Both specimens have a diameter of 51.7 mm; the height of the UCS specimen is 129.5 mm, and the thickness of the BTS disk is 25.95 mm. The rock specimens are placed between two moving rigid loading platens: flat platens in the UCS model and curved platens in the BTS model, whose curvature is 1.5 times the diameter of the BTS disk, as suggested by the International Society for Rock Mechanics (ISRM). It is important to note that in FDEM simulations with the CZM, an unstructured mesh is essential to obtain reasonable rock fracture patterns, because the FDEM only allows fractures to initiate and propagate along the boundaries of the solid elements. Moreover, a very fine mesh is key to reducing the mesh dependency of the crack propagation paths, which is why most 2D FDEM simulations in previous studies used very fine meshes. However, if very fine meshes are used in 3D models, the number of TET4s and CE6s can easily exceed several million, which makes 3D modelling intractable in terms of memory limitations and the simulation time required for calibration with FDEM codes parallelized on a single GPGPU accelerator. Thus, fine meshes comparable to those investigated by Lisjak et al. (2018) are used in the 3D FDEM models. Accordingly, the average edge length of the TET4s in both models is set to 1.5 mm; the UCS model contains 695,428 TET4s and 1,298,343 CE6s, and the BTS model contains 187,852 TET4s and 348,152 CE6s. According to a recent 2D FDEM study by Liu and Deng (2019), the effect of the element size can be negligible if there are at least 27–28 elements across the specimen diameter and the maximum element size is no longer than the length of the fracture process zone of the CZM.
The UCS and BTS models used in this study satisfy these requirements. Based on the review of the effects of the loading rate presented above, a constant velocity of 0.01 m/s is applied to the loading platens to satisfy the quasi-static loading conditions, as suggested by Guo (2014). Moreover, our preliminary sensitivity study of 3D UCS and BTS modelling under various loading rates confirms that quasi-static loading conditions are achieved with a platen velocity of 0.01 m/s. It further shows that the 3D UCS results change little even when the platen velocity is increased to 0.05 m/s, whereas slightly higher peak loads and spurious fragmentation around the loading areas appear in the 3D BTS models as the platen velocity increases.

The physical/mechanical properties of the limestone obtained from laboratory measurements are used to determine the input parameters for the numerical modelling (Table 1). As introduced in Sect. 2, the intact behavior of the numerical model follows Eq. (1), and the elastic parameters are determined so that the elastic region of the stress–strain curve from the 3D FDEM simulation of the UCS test agrees well with that of the laboratory experiment. Our preliminary investigation showed that the experimentally obtained elastic parameters can be used directly as input parameters if sufficiently large artificial penalty terms are used for the CE6s. The penalty terms for the contacts and CE6s are set as multiples of the elastic modulus of the rock (i.e., Erock). The contact penalty (Pn_con) is set to 10 Erock. However, higher values are required for the artificial penalty terms of the CE6s (i.e., 100 Erock for Popen and Ptan and 1000 Erock for Poverlap) so that the artificial increase in the compliance of the bulk rock is negligible. Because the artificial elastic regime of the CE6s in Eqs. (6)–(8) is also influenced by the strength parameters, the artificial increase in the bulk compliance of the rock becomes non-negligible if the artificial penalty terms of the CE6s are not sufficiently large. In other words, smaller artificial penalty terms can cause non-negligible changes in the artificial elastic response of the CE6s during the calibration process, in which the strength parameters are also varied. This fact has not been pointed out in previous studies on the development and/or application of the FDEM. After the penalty terms of the CE6s are set, the strength parameters (i.e., tensile strength Ts_rock, cohesion crock and internal friction angle ϕrock) and the fracture energies, GfI_rock and GfII_rock, of the numerical model are calibrated by trial and error.
To do this, a series of FDEM simulations was conducted until the peak loads and fracture patterns from the numerical simulations agreed reasonably well with those from the experiments. The same input parameters shown in Table 1 are also used for the numerical modelling of the BTS test. The friction coefficients, μfric, for the contact between the platens and the rock and for the contact between rock surfaces generated by broken CE6s are assumed to be 0.1 and 0.5, respectively, as in Mahabadi (2012). The ECDA approach introduced in Sect. 2.2 is used in these simulations because the rock can be considered relatively soft, and no spurious modes are observed in this case. Mass scaling (Heinze et al. 2016) with a mass-scaling factor of 5 is applied to increase Δt while still satisfying the quasi-static loading condition; thus, Δt = 4.5 ns and 9 ns are used for the simulations of the BTS and UCS tests, respectively. Further details of the mass-scaling concept can be found in Heinze et al. (2016). The critical damping scheme [η = ηcrit = 2h√(ρE) in Eq. (1)] is also used in all of the FDEM simulations in this section. Hereafter, compressive stresses are negative (cold colors), and tensile stresses are positive (warm colors).
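The two relations used above can be written out as a short sketch. The critical damping coefficient follows the expression quoted for Eq. (1). For the time step, the stable step of the explicit central difference scheme scales with √(m/k), so, assuming the mass-scaling factor multiplies the density, a factor of 5 enlarges the admissible Δt by √5 ≈ 2.24. The function names are hypothetical, and the sketch does not attempt to reproduce the Δt values used in the simulations.

```cpp
#include <cassert>
#include <cmath>

// Critical damping coefficient quoted for Eq. (1): eta_crit = 2 h sqrt(rho E),
// with element size h, density rho and Young's modulus E.
double critical_damping(double h, double rho, double E) {
    return 2.0 * h * std::sqrt(rho * E);
}

// The stable explicit step scales with sqrt(m / k); scaling the mass (density) by
// `factor` (assumed meaning of the mass-scaling factor) scales dt by sqrt(factor).
double mass_scaled_time_step(double dt, double factor) {
    assert(factor >= 1.0);
    return dt * std::sqrt(factor);
}
```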

Figure 11a–c shows the modelled 3D progressive rock failure process in terms of the distributions of the minor principal (mostly compressive) stress (upper row) and the damage variable [i.e., D in Eq. (9); lower row] at different loading stages (points A, B and C in Fig. 11d) in the FDEM simulation of the UCS test, and Fig. 11d shows the obtained axial stress versus axial strain curve. Figure 11a shows the stress and damage distributions in the sample before the onset of nonlinearity in the axial stress versus axial strain curve (point A in Fig. 11d). As loading continues, unstable microscopic cracks begin to grow and continue to do so until the peak stress is reached (point B in Fig. 11d). Subsequently, the microscopic cracks coalesce to form macroscopic cracks, the bulk rock loses bearing capacity, and the axial stress decreases with increasing strain. Finally, the macroscopic cracks propagate further, resulting in the complete loss of the bearing capacity of the rock (point C in Fig. 11d). Figure 12a compares the final fracture patterns obtained from the FDEM simulation and the laboratory experiment. The fracture patterns (Fig. 12a) and the peak loads (Fig. 11d) from the numerical simulation and the laboratory experiment are in good agreement. Although the formation of shear planes is evident in Fig. 12b, mixed-mode I–II fractures are the dominant mechanism of rock fracturing, as shown in Fig. 12c, which is explained in detail later. Therefore, the obtained results demonstrate that the developed GPGPU-parallelized 3D Y-HFDEM IDE can reasonably model the fracturing process of rock in UCS tests.

Figure 13a–c illustrates the modelled 3D progressive rock failure process in terms of the distributions of the horizontal stress, σxx, (upper row) and the damage variable D in Eq. (9) (lower row) at different stages (points A, B and C in Fig. 13d) in the FDEM simulation of the BTS test, and Fig. 13d shows the obtained indirect tensile stress versus axial strain curve. As the loading displacement increases, a uniform horizontal (tensile) stress (σxx) field gradually builds up around the central line of the rock disk. Figure 13a shows that although some microscopic damage (D ≪ 1) appears in the rock disk near the loading platens due to stress concentrations, there is no macroscopic crack (i.e., no CE6 with D = 1). Once the peak indirect tensile strength of the rock is reached (point B in Fig. 13d), macroscopic cracks form around the central diametrical line of the rock disk through the coalescence and propagation of microscopic cracks. As shown in Fig. 13b, the macroscopic crack that causes the splitting failure of the rock disk nucleates slightly away from the exact center of the disk. This vertically off-center nucleation occurs because the curved loading platens provide a relatively narrow contact strip, so an off-center horizontal stress concentration first develops within the rock disk, consistent with the location of crack nucleation in Fig. 13b. This phenomenon was also addressed by Fairhurst (1964) and Erarslan et al. (2012), who further pointed out that a wider contact strip was required to ensure near-center crack initiation in a BTS test with curved loading platens. Moreover, Li and Wong (2013) conducted stress–strain analyses of a 50-mm-diameter rock disk in BTS tests using FLAC3D and pointed out that the maximum indirect tensile stress and strain were located approximately 5 mm away from the two loading points along the central loading diametrical line of the rock disk.
Nearly the same results are observed in Fig. 13b; most importantly, the vertically off-center macroscopic cracks are captured explicitly. Moreover, following Lisjak et al. (2018), Fig. 13e compares the simulated stress distributions along the diameter of the rock disk in the middle plane and on the surface (i.e., lines AB and CD, respectively) with the analytical solution of Hondros (1959), where y and r represent the vertical distance from the center and the radius of the rock disk, respectively. Figure 13e shows that the stress distribution on the surface of the rock disk differs from that in the middle plane; the latter is consistent with Hondros’ solution, which is based on the plane-strain assumption. In other words, Hondros’ solution does not hold for the stress distributions on the surface of the rock disk, especially for the tensile stress concentrations near the loading platens, which are clearly depicted in Fig. 13e. These local tensile stress concentrations near the loading platens on the disk surface explain the nucleation of the off-center macroscopic cracks modelled in Fig. 13b, which cannot be captured by 2D plane-strain modelling. As the loading platens continue to move toward each other, the macroscopic cracks propagate and coalesce to split the rock disk into two halves (Fig. 13c), and the stress–strain curve decreases toward zero in the post-peak stage (i.e., line BC in Fig. 13d). These results show that mixed-mode I–II failure is the dominant mechanism during the nucleation, propagation and coalescence of the splitting macroscopic cracks (Fig. 14a), which is due to the unstructured mesh used in the FDEM modelling. To clarify this point, Fig. 14b shows the failure pattern of the rock disk modelled with a structured mesh, in which the loading diametrical line is aligned with the boundaries of the TET4 elements.
The splitting fracture forms exactly along the loading diametrical line, and mode I failure is the only failure mechanism. Figure 14c and d show the topological relationships between the horizontal indirect tensile stress (blue arrows in the x direction) and the TET4s for the unstructured and structured meshes used in Fig. 14a and b, respectively. In Fig. 14d, the normal directions of planes A and A′ of the CE6 located between the two TET4s align exactly with the direction of the indirect tensile stress (i.e., the x direction). Therefore, pure mode I cracks preferentially develop along the CE6s on the loading diametrical line, because this provides the most efficient release of energy by fracturing. On the other hand, when the unstructured mesh in Fig. 14a is used, few CE6s have planes exactly on the loading diametrical line, as illustrated in Fig. 14c. That panel depicts two CE6s (i.e., planes A–A′ and B–B′) contributing to the fracturing process, and none of their normal directions is aligned with that of the indirect tensile stress (blue arrows in the x direction). In this case, a pure mode I crack cannot form because of this topological restriction; instead, a combination of mode I (opening) and mode II (sliding) cracking produces the macroscopic fracture. This is why mixed-mode I–II fracturing is the main failure mechanism in the FDEM simulations of the BTS tests with unstructured meshes. Tijssens et al. (2000) conducted a comprehensive mesh sensitivity analysis for the CZM and concluded that fractures tended to propagate along the dominant directions of local mesh alignment. Guo (2014) further commented that unstructured meshes should be used in numerical simulations with the CZM to reduce mesh dependency, but that fracture paths were still dependent on the local mesh orientation in unstructured meshes. 
Accordingly, when the CZM is applied to model material failure, it is unrealistic to expect a pure mode I splitting fracture in the simulation of the BTS test with an unstructured mesh, regardless of the numerical approach and of whether 2D or 3D modelling is used. In this sense, any intentional reduction of the mode I fracture energy GfI_rock and tensile strength Ts_rock aimed at reproducing a pure mode I fracture pattern should be considered a manipulation of the input parameters. Such a pattern is unrealistic with an unstructured mesh, yet it is prevalent in FDEM studies. The same explanation applies to the dominant mixed-mode I–II failures along the macroscopic shear fracture plane modelled in the UCS test. The boundaries of the TET4s do not align exactly with the macroscopic shear stress direction at every location; thus, mixed-mode I–II failure along the macroscopic shear fracture plane, rather than pure mode II failure, is the natural consequence.
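The topological argument above can be illustrated with a minimal sketch. It assumes an idealized, uniform uniaxial tensile stress σxx acting on a single planar CE6 face with normal n. It then splits the Cauchy traction t = σn into a normal (opening, mode I) component and a tangential (sliding, mode II) component. The function name `face_traction_modes` is hypothetical, and the sketch is not the cohesive constitutive law of the Y-HFDEM IDE code. It only shows why a face whose normal is not parallel to x cannot be loaded in pure mode I.

```python
import math


def face_traction_modes(sigma_xx, normal):
    """Split the traction on a face into normal and shear parts.

    Assumes the stress tensor is diag(sigma_xx, 0, 0), i.e. a uniform
    uniaxial (indirect tensile) stress acting in the x direction.
    Returns (normal_traction, shear_traction_magnitude).
    """
    nx, ny, nz = normal
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    nx = nx / length  # only the x component of the unit normal matters here
    # Cauchy traction t = sigma . n = (sigma_xx * nx, 0, 0)
    tx = sigma_xx * nx
    t_normal = tx * nx  # t . n, the opening (mode I) driver
    # |t|^2 = t_n^2 + t_s^2, so the remainder is the sliding (mode II) driver
    t_shear = math.sqrt(max(tx * tx - t_normal * t_normal, 0.0))
    return t_normal, t_shear


if __name__ == "__main__":
    # Face normal aligned with x (structured-mesh case, Fig. 14b)
    print(face_traction_modes(1.0, (1.0, 0.0, 0.0)))
    # Face normal tilted 30 degrees from x in the x-y plane (unstructured case)
    angle = math.radians(30.0)
    print(face_traction_modes(1.0, (math.cos(angle), math.sin(angle), 0.0)))
```

For the aligned face, the shear part is exactly zero. For a face tilted by 30°, roughly 0.75σxx acts normal to the face and about 0.43σxx acts tangentially, so opening and sliding are driven together.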

3D simulation of the fracturing process of rock in the BTS test under quasi-static loading: a distributions of the horizontal stress and microscopic damage before the peak stress, b distributions of the horizontal stress, microscopic damage and macroscopic cracks at the peak stress, c distributions of the horizontal stress and macroscopic fracture pattern in the post-failure stage, d Brazilian indirect tensile stress versus axial strain curve and e comparison between the simulated stress distributions and those from Hondros’ solution along the loading diametrical line in the middle plane and on the surface of the disk
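For reference, the Hondros (1959) curve compared in Fig. 13e can be evaluated with the short sketch below. It uses the form of Hondros' solution commonly quoted for the horizontal stress along the loaded diameter of a disk of radius R and thickness t. The disk is loaded by a total force P, applied as a uniform radial pressure over an arc of half-angle α at each platen, and tensile stress is taken as positive. The function name, the argument names and the example values (50-mm disk, 25 mm thick, 10 kN load, α = 5°) are hypothetical illustrations, not the inputs of the simulation.

```python
import math


def hondros_sigma_xx(y, radius, thickness, load, alpha):
    """Horizontal stress (tensile positive) at distance y from the centre
    along the loaded vertical diameter, after Hondros (1959).

    load  : total applied force P [N]
    alpha : half-angle of the loaded arc at each platen [rad]
    """
    rho2 = (y / radius) ** 2  # (y/R)^2
    diameter = 2.0 * radius
    prefactor = 2.0 * load / (math.pi * diameter * thickness * alpha)
    term1 = (1.0 - rho2) * math.sin(2.0 * alpha) / (
        1.0 - 2.0 * rho2 * math.cos(2.0 * alpha) + rho2 ** 2
    )
    # atan2 keeps the arctangent term finite at the loading point (y = +/-R)
    term2 = math.atan2((1.0 + rho2) * math.tan(alpha), 1.0 - rho2)
    return prefactor * (term1 - term2)


if __name__ == "__main__":
    R, t, P, a = 0.025, 0.025, 10.0e3, math.radians(5.0)  # hypothetical values
    sigma_nominal = 2.0 * P / (math.pi * 2.0 * R * t)  # classic BTS formula
    for y_over_r in (0.0, 0.25, 0.5, 0.75, 0.9, 1.0):
        s = hondros_sigma_xx(y_over_r * R, R, t, P, a)
        print(f"y/r = {y_over_r:4.2f}  sigma_xx / sigma_t = {s / sigma_nominal:+.3f}")
```

For a narrow loaded arc, the value at y = 0 approaches the classic 2P/(πDt) result. At y = ±R, the horizontal stress equals the compressive contact pressure P/(αDt). Because this is a 2D solution, it cannot reproduce the surface tensile concentrations near the platens that the 3D model shows.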

Modelled fracture patterns of rock in the BTS tests with unstructured and structured meshes. a Dominant mixed-mode I–II fractures in the BTS test with an unstructured mesh, b dominant pure mode I fracture in the BTS test with a structured mesh, c schematic sketch of the tensile fracturing mechanism in an unstructured mesh, d schematic sketch of the tensile fracturing mechanism in a structured mesh

3.3 Full 3D Modelling of the Fracturing Process of Rock Under Dynamic Loads in SHPB Tests

In this subsection, the GPGPU-parallelized 3D Y-HFDEM IDE code is applied to model the dynamic fracturing of Fangshan marble, which is much more isotropic and homogeneous than granite, in dynamic BTS tests while considering the entire SHPB testing system.

The 4th and 5th authors of this paper conducted dynamic BTS tests of Fangshan marble with an SHPB apparatus (Zhang and Zhao 2013). The marble consists of dolomite (98%) and quartz (2%), and the mineral grain size ranges from 10 to 200 μm, with an average dolomite size of 100 μm and an average quartz size of 200 μm (Zhang and Zhao 2013). The marble can be considered a homogeneous and isotropic rock, which makes it ideal for avoiding the complexity intrinsic to highly anisotropic rocks such as granite. The detailed procedure of the dynamic BTS test can be found in Zhang and Zhao (2013) and is briefly summarized here. As illustrated in Fig. 15a, a metal projectile called a striker is first accelerated by a gas gun and impacts one end of a long cylindrical metal bar called the incident bar (IB). The impact induces a dynamic compressive strain wave (εinci) in the IB, which propagates toward the other end of the IB, where the target marble disk is placed. When εinci arrives at the interface between the IB and the marble disk, part of it is reflected as a tensile strain wave (εrefl), and the remaining portion is transmitted into the marble disk as a compressive strain wave (εtrans_rock). The εtrans_rock wave then propagates toward the interface between the marble disk and one end of another long cylindrical metal bar called the transmission bar (TB). When εtrans_rock arrives at this interface, the marble disk is subjected to dynamic loading (i.e., compressed by the IB and the TB). At the same time, a compressive strain wave (εtrans) is generated in the TB and propagates toward the far end of the TB. The diameter and thickness of the marble disk used in the experiment were 50 mm and 20 mm, respectively. The lengths of the IB and the TB were 2 m and 1.5 m, respectively, and the diameter of both bars was 50 mm. 
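The 1D wave bookkeeping behind this description can be sketched as follows, assuming linear elastic bars with the bar-wave speed c = sqrt(E/ρ). With the strain gauges placed 1 m from the disk–bar interfaces, the incident pulse and its reflection pass the IB gauge 2 × 1 m / c apart. A pulse shorter than that delay is therefore recorded without overlap. The bar properties (E = 200 GPa, ρ = 7800 kg/m³) and the 0.4-m striker length in the example are hypothetical, since they are not stated in this section. The rule that the pulse lasts about 2L/c for a striker of length L holds only for a striker of the same material and diameter as the IB. The function names are also hypothetical.

```python
import math


def bar_wave_speed(youngs_modulus, density):
    """1D longitudinal (bar) wave speed c = sqrt(E / rho)."""
    return math.sqrt(youngs_modulus / density)


def reflected_delay_at_gauge(gauge_to_interface, c):
    """Time between the incident pulse and its reflection passing the IB gauge."""
    return 2.0 * gauge_to_interface / c


def pulse_duration(striker_length, c):
    """Incident pulse duration, assuming striker and IB share material and diameter."""
    return 2.0 * striker_length / c


if __name__ == "__main__":
    c = bar_wave_speed(200.0e9, 7800.0)        # hypothetical steel bars
    delay = reflected_delay_at_gauge(1.0, c)   # gauges 1 m from the interfaces
    duration = pulse_duration(0.4, c)          # hypothetical 0.4-m striker
    print(f"c = {c:.0f} m/s, delay = {delay * 1e6:.0f} us, pulse = {duration * 1e6:.0f} us")
    print("incident and reflected pulses separated:", duration <= delay)
```

Under these assumptions, the non-overlap condition reduces to requiring the striker to be no longer than the gauge-to-interface distance.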
Strain gauges are attached to the surfaces of the IB and the TB at 1 m from the interfaces between the marble disk and each bar to measure the time history of the axial strain in the IB (εinci and εrefl) and in the TB (εtrans). Assuming one-dimensional (1D) stress wave propagation in each bar without wave attenuation, the axial stresses in the metal bars (i.e., σinci, σrefl and σtrans) are calculated by multiplying the measured axial strains (εinci, εrefl and εtrans) by the Young's modulus of each bar. In practice, the axial compressive force fIB in the IB is calculated from the superposition of the wave shapes corresponding to σinci and σrefl, whereas the axial compressive force fTB in the TB is calculated directly from σtrans (Fig. 15b). Thus, fIB and fTB are obtained by multiplying (σinci–σrefl) and σtrans, respectively, by the cross-sectional area of each bar. When the time histories of fIB and fTB are nearly equal up to the peak, the dynamic indirect tensile stress at the center of the marble disk can be evaluated with the same theory as that used for the BTS test under quasi-static loading conditions. Satisfying this condition is equivalent to achieving dynamic stress equilibrium in the marble disk. The experimental results in Fig. 15b satisfy the dynamic stress equilibrium condition, and the corresponding loading rate is approximately 830 GPa/s (Zhang and Zhao 2013). The peak value of the dynamic indirect tensile stress is called the dynamic indirect tensile strength. Under this dynamic indirect tensile stress, the marble disk splits into two halves. Blocky fragments form near the diametrical center line, and numerous shear fractures form near the regions loaded by the IB and the TB. 
The dynamic indirect tensile strength calculated under these conditions was 32 MPa, which was significantly higher than the quasi-static indirect tensile strength of 9.5 MPa (Zhang and Zhao 2013). The fracture pattern obtained after the test is shown in Fig. 15c. The present study models this test.
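The data reduction described above can be sketched as follows, under several assumptions. The three strain signals are assumed to be already time-shifted to the disk–bar interfaces and sampled on a common time base. Strains are given as positive magnitudes, so the tensile reflected wave is subtracted, as in fIB = A(σinci – σrefl). The dynamic indirect tensile stress is assumed to follow the standard quasi-static BTS formula σt = 2F/(πDt), with the disk dimensions stated above (D = 50 mm, t = 20 mm). The function names, the relative-mismatch measure used to check equilibrium, the use of the mean of fIB and fTB, and the strain histories in the example are all hypothetical choices. They are not the procedure or data of Zhang and Zhao (2013).

```python
import math


def bar_forces(eps_inci, eps_refl, eps_trans, youngs_modulus, bar_diameter):
    """Axial forces in the IB and TB from gauge strains (1D wave theory).

    Strains are positive magnitudes; the reflected (tensile) wave is
    therefore subtracted from the incident wave to obtain f_IB.
    """
    area = math.pi * bar_diameter ** 2 / 4.0
    f_ib = [area * youngs_modulus * (ei - er) for ei, er in zip(eps_inci, eps_refl)]
    f_tb = [area * youngs_modulus * et for et in eps_trans]
    return f_ib, f_tb


def equilibrium_mismatch(f_ib, f_tb):
    """Largest |f_IB - f_TB| up to the peak of f_TB, relative to that peak."""
    peak = max(f_tb)
    i_peak = f_tb.index(peak)
    pairs = zip(f_ib[: i_peak + 1], f_tb[: i_peak + 1])
    return max(abs(a - b) for a, b in pairs) / peak


def indirect_tensile_stress(force, disk_diameter, disk_thickness):
    """Brazilian indirect tensile stress at the disk centre, 2F / (pi D t)."""
    return 2.0 * force / (math.pi * disk_diameter * disk_thickness)


if __name__ == "__main__":
    # Hypothetical, already time-aligned strain histories (not measured data)
    eps_i = [0.0, 1.0e-4, 2.0e-4, 2.6e-4, 2.0e-4]
    eps_r = [0.0, 0.5e-4, 1.0e-4, 1.3e-4, 1.3e-4]
    eps_t = [0.0, 0.5e-4, 1.0e-4, 1.3e-4, 0.8e-4]
    f_ib, f_tb = bar_forces(eps_i, eps_r, eps_t, 200.0e9, 0.05)
    print("relative mismatch up to peak:", equilibrium_mismatch(f_ib, f_tb))
    f_mean = [(a + b) / 2.0 for a, b in zip(f_ib, f_tb)]
    sigma_t = [indirect_tensile_stress(f, 0.05, 0.02) / 1e6 for f in f_mean]
    print("peak indirect tensile stress [MPa]:", max(sigma_t))
```

A small mismatch up to the peak corresponds to the near-coincident fIB and fTB curves in Fig. 15b. Only then is the quasi-static formula used for the disk-center stress.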

Overview of the SHPB-based dynamic BTS test simulated by the GPGPU-parallelized 3D Y-HFDEM IDE code. a Configuration of the SHPB system [after Fig. 3 in Zhang and Zhao (2013) with minor additions], b achievement of dynamic stress equilibrium between the axial forces in the IB and the TB [after Fig. 11d in Zhang and Zhao (2013) with minor additions] and c failure patterns of the marble specimen at a dynamic loading rate of 830 GPa/s [after Fig. 12b in Zhang and Zhao (2013)]
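Two simple numbers follow from the values reported above. For this estimate only, assume that the pre-peak indirect tensile stress rises linearly at the reported loading rate of about 830 GPa/s. Under that assumption, the approximate time to reach the 32-MPa peak and the ratio of dynamic to quasi-static indirect tensile strength (often called the dynamic increase factor, DIF) are:

```latex
t_{\mathrm{peak}} \approx \frac{\sigma_{t,\mathrm{d}}}{\dot{\sigma}}
  = \frac{32\ \mathrm{MPa}}{830\ \mathrm{GPa/s}}
  \approx 3.9 \times 10^{-5}\ \mathrm{s} \approx 39\ \mu\mathrm{s},
\qquad
\mathrm{DIF} = \frac{\sigma_{t,\mathrm{d}}}{\sigma_{t,\mathrm{s}}}
  = \frac{32\ \mathrm{MPa}}{9.5\ \mathrm{MPa}} \approx 3.4
```

Here σt,d and σt,s denote the dynamic and quasi-static indirect tensile strengths, respectively. The rise-time value is only indicative, because the measured pre-peak curve in Fig. 15b need not be perfectly linear.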