Abstract

Fuzzy phenomena exist widely in real life, and with the rapid development of big data technology we may gather information from multiple sources. So it is extremely meaningful to study fuzzy concepts in the context of multiple information sources. In this study, six novel kinds of double-quantitative multigranulation rough fuzzy set models are proposed. Both absolute and relative information are taken into account by utilizing the logical conjunction and disjunction operators to define the lower and upper approximations. Four decision regions can be computed based on the results of approximations, and the corresponding four decision rules are established. Some basic propositions of these models are discussed. The relationships among the six double-quantitative multigranulation rough fuzzy set models are analysed. The corresponding algorithms of obtaining four decision regions are given and the time complexity of them are analysed. Later a weather example is employed to illustrate that our models can divide data sets to the positive region, the negative region, the lower boundary region, and the upper boundary region, where the samples in the positive region completely support the concept set, the samples in the negative region completely oppose the concept set, and the samples in the lower and upper boundary may support or oppose the concept set. Finally, an experiment is conducted to demonstrate that our models perform better than the mean fusion method in terms of decision-making.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

In traditional machine learning, the decision attributes are categorical data, but in some scenarios, we may be required to deal with fuzzy decision issues. For example, in sentiment analysis, the decision attributes are the degree of human emotions such as a little angry, moderately happy, or pretty sad. However, the traditional machine learning methods can not deal with this kind of issue very well. Thus, it is necessary and meaningful to research this kind of fuzzy decision issues. Fuzzy Set theory [54, 55] can be utilized to deal with fuzzy decision issues. Fuzzy Set theory(FST) is distinct from probability theory, which utilizes the membership degree of samples to depict the uncertainty, which can represent how much a sample is in a set. The classical set theory considers that one element either belongs to a set or not, but the fuzzy sets take into account membership function to describe the membership degree of an object to a fuzzy set. Oceans of researches concerning the fuzzy set theory have been conducted, such as classification [12, 34, 39], regression [13, 33, 37], cluster [1, 5, 7], and concept-cognitive learning [47]. It should be noted that one motivation of this study is to research the fuzzy phenomena existing in decision-making.

There is another method which is also commonly regarded as a useful uncertainty dealing tool. The rough set theory(RST) was firstly proposed by Pawlak [25], which can be commonly used in data analyses [26]. Oceans of researches concerning RST have been studied, such as attribute reduction [14, 57], information fusion [45, 46], and knowledge acquisition [35, 48]. With the development of RST, many useful extended models are established to handle different issues. Uncertainty measurement in RST, especially its models, has two fundamental styles: the absolute and relative quantization. On the one hand, the graded rough set (GRS) [51] considers the absolute quantitative information, which is an extended rough set model. On the other hand, in the classical rough theory, the low approximation operator is defined on the basis that equivalence classes of samples are completely contained in the concept set. The condition is too strict at times. In a real application, we can consider to put a sample into the low approximation set when the proportion of equivalence class of the sample in the concept set is greater than a given threshold. Based on the above thought, the decision-theoretic rough set(DTRS) model [52] reckons a way to make a decision under a minimum risk, which can reflect relative quantitative information. There is no denying that the two kinds of quantitative information are very suitable to describe approximation space effectively. In recent years, many double-quantitative models that fuse two quantitative information have been constructed [4, 15,16,17, 36, 48, 53, 56]. Furthermore, with the development of FST and RST, many studies have been conducted by combining the two theories to propose fuzzy rough set models [8, 11, 24, 40] and rough fuzzy set models [3, 4, 15, 16, 38]. The rough fuzzy set models can effectively address fuzzy phenomena. So in this work, six novel kinds of rough fuzzy set models are established considering the relative and absolute quantitative information.

The another motivation of this study is that multi-source data are common in real application, which can effectively characterize the uncertainty all over the human life. A lot of researches on multi-source data have been investigated, such as concept approximation [32] and chemical structure recognition [22]. In this paper, we concentrate on the multi-source data collected from multiple information sources, called multi-source information system(MsIS). Nevertheless, most studies have been conducted just based on a single information system [2, 3, 6, 27, 41, 58]. There is no denying that the above methods can not be directly utilized to cope with the uncertainty phenomena of multi-source data. So it is meaningful to study the uncertainty phenomena of MsIS. Multigranulation rough set(MGRS) can be regarded as a useful tool in dealing with uncertainty issues in a MsIS. The MGRS was first proposed by Qian et al. [28, 29], which is suitable to handle multiple information sources by regarding each information system as a granular structure. With the development of multigranulation rough set theory, many multigranulation issues [15, 18,19,20,21, 23, 30, 31, 36, 42,43,44, 49, 50] have been studied based on this model. For example, MGDTRS model, using probability as a measurement index, was proposed by Qian et al. [30]. Lin et al. [23] proposed a model that combines multiple thresholds and multiple granulations to cope with interval-valued decision information systems. Xu et al. [42, 43]studied multigranulation rough set with respect to a fuzzy background. However, the above studies do not consider the relative and absolute information in terms of describing granulation space. So in this study, we combine two types of quantitative information and the MGRS theory to propose six novel kinds of multigranulation rough fuzzy set models(MGRFS) in a MsIS.

All in all, in this study, we propose six kinds of novel MGRFS models by combining the double-quantitative information and the MGRS theory. We use the logical operators to utilize both the relative and absolute information in approximation operators, which can make the use of information more comprehensive compared with many existing double-quantitative models [15, 17, 36, 48, 53]. These models all have the disadvantage that only one kind of quantitative information is considered in the two approximation operators. For example, in [17], the upper approximation is defined as \({{\overline{R}} _{(\alpha ,\beta )}}\left( X \right) = \left\{ {x \in U\left| {P\left( {X{{\left| {\left[ x \right] } \right. }_R}} \right) > \beta } \right. } \right\}\) or \({{\overline{R}} _k}\left( X \right) = \left\{ {x \in U\left| {\left| {{{[x]}_R} \cap X} \right| > k} \right. } \right\}\), where \(\alpha ,\beta \in \left[ {0,1} \right]\), k is a nonnegative integer, and \({{{[x]}_R}}\) denotes the equivalence class of x under the equivalence relation R and \(P\left( {X{{\left| {\left[ x \right] } \right. }_R}} \right) = \frac{{\left| {{{\left[ x \right] }_R} \cap X} \right| }}{{\left| {{{\left[ x \right] }_R}} \right| }}\). The above two kinds of definition only utilize relative information(i.e. \({P\left( {X{{\left| {\left[ x \right] } \right. }_R}} \right) }\)) or absolute information(i.e. \({\left| {{{[x]}_R} \cap X} \right| }\)), not making full use of all the information. We can employ the logical operators to utilize both relative and absolute information at the same time, i.e. \({{\overline{R}} _{(\alpha ,\beta )}}\left( X \right) \cap {{\overline{R}} _k}\left( X \right) = \left\{ {x \in U\left| {P\left( {X{{\left| {\left[ x \right] } \right. }_R}} \right)> \beta \wedge \left| {{{[x]}_R} \cap X} \right| > k} \right. } \right\}\) and \({{\overline{R}} _{(\alpha ,\beta )}}\left( X \right) \cup {{\overline{R}} _k}\left( X \right) = \left\{ {x \in U\left| {P\left( {X{{\left| {\left[ x \right] } \right. }_R}} \right)> \beta \vee \left| {{{[x]}_R} \cap X} \right| > k} \right. } \right\}\). This improvement will use more information to make the approximation more reasonable, which is also considered in [4, 16]. In this study, we use the logical conjunction operator to define the optimistic, pessimistic, and mean models. And similarly, the logical disjunction operator is used to define the optimistic, pessimistic, and mean models. The six models utilize two types of quantitative information in the meantime to define the lower approximation operator and the upper approximation operator. It means that our models utilize more quantitative information in describing the approximation space.

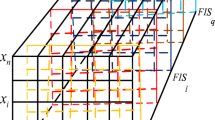

The configuration of this paper is constructed as following. In Sect. 2, some previous research work is reviewed, such as RST, the extended rough set models, FST, and MsDS. In Sect. 3, we propose three kinds of logical conjunction multigranulation rough fuzzy set models, and some propositions of the approximation operators are discussed. Furthermore, four rules are established for decision-making. In Sect. 4, we similarly propose three kinds of logical disjunction multigranulation rough fuzzy set models. The propositions of the approximation operators are discussed. And four rules are presented for decision-making. In Sect. 5, we discuss the relationships among these multigranulation rough fuzzy set models and a weather example is employed to illustrate that our models can divide data sets into four decision regions. In Sect. 6, we give the corresponding algorithms of these models, and the time complexity of them are analysed. Later, an experiment is conducted to demonstrate that our models perform better than the mean method in terms of decision-making. Finally, Sect. 7 comes up with the conclusion. We give an intuitive diagram of this study, which is shown in Fig 1.

2 Preliminaries

In this section, some basic concepts are reviewed, such as the fuzzy set theory, multi-source information system, the rough set theory, and the extended rough set theory.

2.1 Fuzzy set theory and multi-source information systems

In this section, we firstly introduce the basic concept of the fuzzy set theory [54, 55]. In fuzzy sets theory, since classical sets have no abilities to recognize the membership degree of a sample x belonging to one set, so the membership function was proposed by Zadeh. A fuzzy set \({\tilde{X}}\) on U is defined as a set of order pairs which is shown as following:

where \({\tilde{X}}\left( x \right)\) is the membership function, which satisfies \({\tilde{X}}\left( x \right) \in [0,1]\), \(\forall x \in U\). Let F(U) denote the set of all fuzzy subsets. And for any fuzzy sets \({\tilde{X}}\), \({\tilde{Y}}\in F(U)\), if \({\tilde{X}}\left( x \right) \le {\tilde{Y}}\left( x \right)\) , for all \(x \in U\), then we denote it as \({\tilde{X}} \subseteq {\tilde{Y}}\). And we reckon that \({\tilde{X}} = {\tilde{Y}}\), when \({\tilde{X}}(x) = {\tilde{Y}}(x)\), for all \(x \in U\). Furthermore, the basic computation rules are defined as

Now, let us introduce the basic concepts of MsIS, which is shown as following.

An information system can be denoted as \(IS = \left( U, AT, V_{AT}, F_{AT} \right)\), where U is a nonempty finite set of samples; AT is the nonempty finite set of condition attributes; \({V_{AT}}\) is the domain of attribute set; \(F_{AT}:\, \, \, U \times AT\, \rightarrow V_{AT}\) is an information function.

A multi-source information system can be denoted as \(MsIS = \left\{ I{S_i}=\left( U,A{T_i},V_{A{T_i}},F_{A{T_i}} \right) ,i = 1,2,\ldots ,n \right\}\), where \(I{S_i}\) denotes the i-th subsystem of the MsIS. It should be noted that in this study, we suppose that all subsystem have the same structure, which means that \(A{T_1} = A{T_2} = \cdots = A{T_n}\). So the multi-source information system can be denoted as

An intuitive illustration is shown in Fig 2.

Specially, a multi-source decision system can be denoted as

where \(MsIS = \left\{ {I{S_i}\left| {I{S_i}} \right. = \left( {U,AT,{V_{AT}},{F_i}} \right) ,i = 1,2,\ldots ,n} \right\}\), and D is the nonempty finite set of decision attributes, \({V_D}\) is the domain of decision attributes, and \({F_D}:\, \, \, U \times D\, \rightarrow {V_D}\) is an information function.

2.2 Rough set theory and extended rough set theory

In this subsection, we introduce the basic rough set theory [25] and its extended rough set theories.

The traditional rough set theory is established based on the equivalence relation. Let \(IS = \left( {U,AT,V_{AT},F_{AT}} \right)\) be an information system. For any \(B \subseteq AT\) ,an equivalence relation \({R_B}\) is defined by

Based on \({R_B}\), a partition of U can be denoted as \(U/{R_B} = \{ {[x]_{{R_B}}}\left| {x \in U} \right. \}\) , where \({[x]_{R_B}}\) is called the equivalence class of x under \({R_B}.\) For any \(X \subseteq U\), the lower and upper approximations of X are defined by

Now, let us introduce the concept of the Graded Rough Set(GRS). The GRS [51] model was proposed by Yao and Lin [51], which utilizes the absolute information. Assume k is a nonnegative integer. For any \(A \subseteq AT\) and \(X \subseteq U\), the lower and upper approximations of X are defined as

Moreover, if \(X \in U\), then lower and upper approximations are defined as

There is another important extended rough set model utilizing the relative information. Being different from the traditional RST theory, the decision-theoretic rough set (DTRS) [52]model make decision with minimum risk based on Bayesian decision procedure. In the Bayesian decision procedure, \(\mathrm{A} = \left\{ {{a_1},{a_2},...,{a_m}} \right\}\) is a finite set states, \(\Omega = \left\{ {{\omega _1},{\omega _2},...,{\omega _s}} \right\}\) is a finite set of possible actions, and \(P\left( {{\omega _j}\left| Y \right. } \right)\) is the conditional probability of an object x belongs to state \({\omega _j}\) when the object is described by Y. When state is \(w_j\), let \(\lambda \left( {{a_i}\left| {{\omega _j}} \right. } \right)\) denote the loss for taking action \(a_i\). For an object with description Y, the expected loss when taking action \(a_i\) is denoted as \(R\left( {{a_i}\left| Y \right. } \right) = \sum \limits _{j = 1}^s {\lambda \left( {{a_i}\left| {{\omega _j}} \right. } \right) } P\left( {{\omega _j}\left| Y \right. } \right)\). Furthermore, for the perspective of probabilistic rough set approximation operators, an equivalence class \([x]_R\) is regarded as the description of x [52]. And let \(\Omega = \left\{ {X,{X^C}} \right\}\) be the state set denoting a sample is in X and not in X. So the conditional probability can be denoted as \(P\left( {X\left| {[x]_R} \right. } \right)\) or \(P\left( {{X^C}\left| {[x]_R} \right. } \right)\). And the action set is given by \(\mathrm{A} = \left\{ {{a_1},{a_2},{a_3}} \right\}\) , where \(a_1\), \(a_2\), and \(a_3\) respectively denote \(x \in Pos\left( X \right)\), \(x \in Neg\left( X \right)\), and \(x \in Bnd\left( X \right)\). And \(\lambda \left( {{a_i}\left| X \right. } \right)\) denote the loss incurred for taking action \(a_i\) when an object is in X. Similarly, \(\lambda \left( {{a_i}\left| {{X^C}} \right. } \right)\) denote the loss incurred for taking action \(a_i\) when an object is not in X. Based on the above theory, the excepted loss can be denoted as

where \({\lambda _{i1}} = \lambda \left( {{a_i}\left| X \right. } \right) , {\lambda _{i2}} = \lambda \left( {{a_i}\left| {{X^C}} \right. } \right) , i = 1, 2, 3\) .

And the Bayesian decision rules are listed as

When \({\lambda _{11}} \le {\lambda _{31}}< {\lambda _{21}}\, \, and \, \, \, {\lambda _{22}} \le {\lambda _{32}} < {\lambda _{12}}\), the minimum-risk decision rules can be denoted as following:

where

Furthermore, when \(\alpha> \gamma > \beta\), the minimum-risk decision rules can be denoted as

The lower and upper approximations of the DTRS model are defined as

where \(P\left( {X\left| {{{[x]}_R}} \right. } \right) = \frac{{\left| {{{[x]}_R} \cap X} \right| }}{{\left| {{{[x]}_R}} \right| }}\). And furthermore, Sun et al.[38]defined the fuzzy conditional probabilistic operator, which is defined as

Similarly, the lower and upper approximations of fuzzy set \({\tilde{X}}\) are defined as

The above rough set theories are structured on the basis of only one indiscernibility relation, but in a real application, we may need to utilize multiple indiscernibility relations to approximate the sample set. So in order to deal with the issue, the multigranulation rough set(MGRS)[28] model was proposed, where a granularity represents an aspect of the sample set approximating. Let \(IS = \left( {U,AT,V_{AT},F_{AT}} \right)\) be an information system. Given \({B_1},{B_2},...,{B_n} \subseteq AT\), for any \(Z \subseteq U\), the optimistic lower and upper approximations are defined as

And the pessimistic lower and upper approximations are defined as

3 Logical conjunction multigranulation rough fuzzy set model in multi-source decision systems

In this paper, when considering \(I{S_i}\), \(\forall {}x \in U\), we define the type-I lower support function which is denoted as \(I - LSF_{{\tilde{Z}}}^{I{S_i}}\left( x \right)\), the type-I upper support function which is denoted as \(I - USF_{{\tilde{Z}}}^{I{S_i}}\left( x \right)\), the type-II lower support function which is denoted as \(II - LSF_{{\tilde{Z}}}^{I{S_i}}\left( x \right)\) and the type-II upper support function which is denoted as \(II - USF_{{\tilde{Z}}}^{I{S_i}}\left( x \right)\). We utilize the four support function operators to define the lower and upper approximations of optimistic and pessimistic models.

Given a MsDS \(MsDS = MsIS \cup \left\{ {D,{V_D},{F_D}} \right\}\), where \(MsIS = \left\{ {I{S_i}\left| {I{S_i}} \right. = \left( {U,AT,{V_{AT}},{F_i}} \right) ,i = 1,2,...,n} \right\}\). Assume \(\beta \le \alpha\) and \(\beta ,\alpha \in [0,1]\), and k is a nonpositive integer. For any fuzzy set \({\tilde{Z}} \in F(U)\), these support functions are defined as follow:

where \({[x]}_{I{S_i}}\) denotes the equivalence class of the sample x under \(I{S_i}\).

3.1 Logical conjunction optimistic multigranulation rough fuzzy set model(LCO-MRFSM)

Definition 1

Given a MsDS \(MsDS = MsIS \cup \left\{ {D,{V_D},{F_D}} \right\}\), where \(MsIS = \left\{ {I{S_i}\left| {I{S_i}} \right. = \left( {U,AT,{V_{AT}},{F_i}} \right) ,i = 1,2,...,n} \right\}\). Assume \(\beta \le \alpha\) and \(\beta ,\alpha \in [0,1]\), and k is a nonpositive integer. For any fuzzy set \({\tilde{Z}} \in F(U)\), let \(L_{_{\left( {\alpha ,\beta } \right) \wedge k}}^O\left( {{\tilde{Z}}} \right)\) denote the lower approximation and \(U_{_{\left( {\alpha ,\beta } \right) \wedge k}}^M\left( {{\tilde{Z}}} \right)\) denote the upper approximation, which are defined as

We can easily find that \(L_{_{\left( {\alpha ,\beta } \right) \wedge k}}^O\left( {{\tilde{Z}}} \right)\) is not included in \(U_{_{\left( {\alpha ,\beta } \right) \wedge k}}^O\left( {{\tilde{Z}}} \right)\). So just like the method used in many existing double-quantitative model [4, 15,16,17, 36, 48, 53], we put samples that that belong to both \(L_{_{\left( {\alpha ,\beta } \right) \wedge k}}^O\left( {{\tilde{Z}}} \right)\) and \(U_{_{\left( {\alpha ,\beta } \right) \wedge k}}^O\left( {{\tilde{Z}}} \right)\) into the positive region, samples that do not belong to either \(L_{_{\left( {\alpha ,\beta } \right) \wedge k}}^O\left( {{\tilde{Z}}} \right)\) or \(U_{_{\left( {\alpha ,\beta } \right) \wedge k}}^O\left( {{\tilde{Z}}} \right)\) into the negative region, samples that belong to \(L_{_{\left( {\alpha ,\beta } \right) \wedge k}}^O\left( {{\tilde{Z}}} \right)\) but do not belong to \(U_{_{\left( {\alpha ,\beta } \right) \wedge k}}^O\left( {{\tilde{Z}}} \right)\) into the lower boundary region, samples that belong to \(U_{_{\left( {\alpha ,\beta } \right) \wedge k}}^O\left( {{\tilde{Z}}} \right)\) but do not belong to \(L_{_{\left( {\alpha ,\beta } \right) \wedge k}}^O\left( {{\tilde{Z}}} \right)\) into the upper boundary region.

The four decision regions can be computed by

The four regions can divide sample set U into four parts, where the samples in the positive region completely support the concept set, the samples in the negative region completely oppose the concept set, and the samples in the lower and upper boundary may support or oppose the concept set. Moreover, based on Definition 1, we can get some propositions of LCO-MRFSM.

Proposition 1

For any fuzzy sets \({\tilde{Z}}\), \({\tilde{M}} \in F(U)\), the following propositions are true.

-

(1).

\(When\, \,{\tilde{Z}} \subseteq {\tilde{M}},L_{_{\left( {\alpha ,\beta } \right) \wedge k}}^O\left( {{\tilde{Z}}} \right) \subseteq L_{_{\left( {\alpha ,\beta } \right) \wedge k}}^O\left( {{\tilde{M}}} \right) .\)

-

(2).

\(When\,{\tilde{Z}} \subseteq {\tilde{M}}, U_{_{\left( {\alpha ,\beta } \right) \wedge k}}^O\left( {{\tilde{Z}}} \right) \subseteq U_{_{\left( {\alpha ,\beta } \right) \wedge k}}^O\left( {{\tilde{M}}} \right) .\)

-

(3).

\(If\, \, \, \, {k_{1\, \, }} \le {k_2},\, \,then\,L_{_{\left( {\alpha ,\beta } \right) \wedge {k_1}}}^O\left( {{\tilde{Z}}} \right) \subseteq L_{_{\left( {\alpha ,\beta } \right) \wedge {k_2}}}^O\left( {{\tilde{Z}}} \right) .\)

-

(4).

\(If\, \, \, \, {\beta _1} \le {\beta _2},\, \,then \, U_{_{\left( {\alpha ,{\beta _2} } \right) \wedge k}}^O\left( {{\tilde{Z}}} \right) \subseteq U_{_{\left( {\alpha ,{\beta _1} } \right) \wedge k}}^O\left( {{\tilde{Z}}} \right) .\)

-

(5).

\(U_{_{\left( {\alpha ,\beta } \right) \wedge k}}^O\left( {{\tilde{Z}}} \right) \cup U_{_{\left( {\alpha ,\beta } \right) \wedge k}}^O\left( {{\tilde{M}}} \right) \subseteq U_{_{\left( {\alpha ,\beta } \right) \wedge k}}^O\left( {{\tilde{Z}} \cup {\tilde{M}}} \right) .\)

-

(6).

\(L_{_{\left( {\alpha ,\beta } \right) \wedge k}}^O\left( {{\tilde{Z}}} \right) \cup L_{_{\left( {\alpha ,\beta } \right) \wedge k}}^O\left( {{\tilde{M}}} \right) \subseteq L_{_{\left( {\alpha ,\beta } \right) \wedge k}}^O\left( {{\tilde{Z}} \cup {\tilde{M}}} \right) .\)

-

(7).

\(U_{_{\left( {\alpha ,\beta } \right) \wedge k}}^O\left( {{\tilde{Z}} \cap {\tilde{M}}} \right) \subseteq U_{_{\left( {\alpha ,\beta } \right) \wedge k}}^O\left( {{\tilde{Z}}} \right) \cap U_{_{\left( {\alpha ,\beta } \right) \wedge k}}^O\left( {{\widetilde{M}}} \right) .\)

-

(8).

\(L_{_{\left( {\alpha ,\beta } \right) \wedge k}}^O\left( {{\tilde{Z}} \cap {\tilde{M}}} \right) \subseteq L_{_{\left( {\alpha ,\beta } \right) \wedge k}}^O\left( {{\tilde{Z}}} \right) \cap L_{_{\left( {\alpha ,\beta } \right) \wedge k}}^O\left( {{\tilde{M}}} \right) .\)

Proof

-

(1)

\(\forall \,x \in L_{\left( {\alpha ,\beta } \right) \wedge k}^O\left( {{\tilde{Z}}} \right)\), we have that \(\exists {} i\), \(\sum \limits _{y \in {{[x]}_{I{S_i}}}} {\left( {1 - {\tilde{Z}}\left( y \right) } \right) } {}\le {}k\) and \(\exists {} j\), \(P\left( {{\tilde{Z}}\left| {{{[x]}_{I{S_j}}}} \right. } \right) \ge {} \alpha\). And we have that \({\tilde{Z}} \subseteq {\tilde{M}}\), so \(\sum \limits _{y \in {{[x]}_{I{S_i}}}} \left( {1 - {\tilde{M}}\left( y \right) } \right) \le \sum \limits _{y \in {{[x]}_{I{S_i}}}} \left( {1 - {\tilde{Z}}\left( y \right) } \right) \le k\) and \(P\left( {{\tilde{M}}\left| {{{[x]}_{I{S_i}}}} \right. } \right) \ge P\left( {{\tilde{Z}}\left| {{{[x]}_{I{S_i}}}} \right. } \right) \ge \alpha\). Thus, we can get that \(x \in L_{\left( {\alpha ,\beta } \right) \wedge k}^O\left( {{\tilde{M}}} \right)\).

-

(2)

The process of proof is similar to (1).

-

(3)

\(\forall \,x \in L_{\left( {\alpha ,\beta } \right) \wedge {k_1}}^O\left( {{\tilde{Z}}} \right)\), we have that \(\exists {}i\), \(\sum \limits _{y \in {{[x]}_{I{S_i}}}} \left( {1 - {\tilde{Z}}\left( y \right) } \right) \le {k_1}\) and \(\exists {}j\), \(P\left( {{\tilde{Z}}\left| {{{[x]}_{I{S_j}}}} \right. } \right) \ge \alpha\). When \({k_1}{} \le {} {k_2}\), we have that \(\sum \limits _{y \in {{[x]}_{I{S_i}}}} \left( {1 - {\tilde{Z}}\left( y \right) } \right) \le {k_1} \le {k_2}\). So \(x \in L_{\left( {\alpha ,\beta } \right) \wedge {k_2}}^O\left( {{\tilde{Z}}} \right)\).

-

(4)

The process of proof is similar to (3).

-

(5)

\(\forall \,x \in U_{_{\left( {\alpha ,\beta } \right) \wedge k}}^O\left( {{\tilde{Z}}} \right) \cup U_{_{\left( {\alpha ,\beta } \right) \wedge k}}^O\left( {{\widetilde{M}}} \right)\), we have that \(\forall {}i\), \(P\left( {{\tilde{Z}}\left| {{{[x]}_{I{S_i}}}} \right. } \right) > \beta\) and \(\sum \limits _{y \in {{[x]}_{I{S_i}}}} {\tilde{Z}}\left( y \right) > k\) or \(\forall {}j\), \(P\left( {{\tilde{M}}\left| {{{[x]}_{I{S_j}}}} \right. } \right) > \beta\) and \(\sum \limits _{y \in {{[x]}_{I{S_j}}}} {\tilde{M}}\left( y \right) > k\). Then we can get \(\forall {}i\), \(P\left( {{{\tilde{Z}} \cup {\tilde{M}}}\left| {{{[x]}_{I{S_i}}}} \right. } \right) \ge max\left\{ {P\left( {{\tilde{Z}}\left| {{{[x]}_{I{S_i}}}} \right. } \right) ,P\left( {{\tilde{M}}\left| {{{[x]}_{I{S_i}}}} \right. } \right) } \right\}\) \(> \beta\) and \(\sum \limits _{y \in {{[x]}_{I{S_i}}}}\left( {{\tilde{Z}} \cup {\tilde{M}}} \right) \left( y \right) \ge max\left\{ \sum \limits _{y \in {{[x]}_{I{S_i}}}} {{\tilde{Z}}\left( y \right) },\sum \limits _{y \in {{[x]}_{I{S_i}}}} {{\tilde{M}}\left( y \right) }\right\} > k\). Thus, \(x \in U_{_{\left( {\alpha ,\beta } \right) \wedge k}}^O\left( {{\tilde{Z}} \cup {\tilde{M}}} \right)\).

-

(6)

The process of proof is similar to (5).

-

(7)

\(\forall \, x \in U_{\left( {\alpha ,\beta } \right) \wedge k}^O\left( {{\tilde{Z}} \cap {\tilde{M}}} \right)\), we have that \(\forall {}i\), \(P\left( {\left( {{\tilde{Z}} \cap {\tilde{M}}} \right) \left| {{{[x]}_{I{S_i}}}} \right. } \right) > \beta\) and \(\sum \limits _{y \in {{[x]}_{I{S_i}}}} {\left( {{\tilde{Z}} \cap {\tilde{M}}} \right) \left( y \right) } > k\). Then we can get that \(\forall i\), \(P\left( {{{\tilde{Z}}} \left| {{{[x]}_{I{S_i}}}} \right. }\right) \ge P\left( {\left( {{\tilde{Z}} \cap {\tilde{M}}} \right) \left| {{{[x]}_{I{S_i}}}} \right. } \right) > \beta\) and \(\sum \limits _{y \in {{[x]}_{I{S_i}}}} {{\tilde{Z}}} \left( y \right) \ge \sum \limits _{y \in {{[x]}_{I{S_i}}}} {\left( {{\tilde{Z}} \cap {\tilde{M}}} \right) \left( y \right) } > k\). So \(x \in U_{\left( {\alpha ,\beta } \right) \wedge k}^O\left( {{\tilde{Z}}} \right)\). We can also get \(x \in U_{\left( {\alpha ,\beta } \right) \wedge k}^O\left( {{\tilde{M}}} \right)\). Thus, we have \(x \in U_{\left( {\alpha ,\beta } \right) \wedge k}^O\left( {{\tilde{Z}}} \right) \cap U_{\left( {\alpha ,\beta } \right) \wedge k}^O\left( {{\tilde{M}}} \right)\).

-

(8)

The process of proof is similar to (7).

In this paper, the concept set \({\tilde{Z}}\) is a fuzzy set on U. Now, let us consider the situation that \({\tilde{Z}}\) degenerates into a classical set. The following Propositions 2 and 3 show that our model and optimistic multigranulation rough set model [28] have the similar form when each information system is regarded as a granularity.

Proposition 2

When \({\tilde{Z}} \subseteq U\), then we can get if \(k=0\) or \(\alpha\)=1,

Proof

When \({\tilde{Z}} \subseteq U\), which means \({\tilde{Z}}\) is a crisp set of the universe, then the membership function of it is degenerated as

If k=0, we can get that \(\sum \limits _{y \in {{[x]}_{I{S_i}}}} {\left( {1 - {\tilde{Z}}\left( y \right) } \right) } \le k\) is equivalent to \(\left| {{{\left[ x \right] }_{I{S_i}}}} \right|\)=\(\sum \limits _{y \in {{[x]}_{I{S_i}}}} {{\tilde{Z}}\left( y \right) }\). Thus, \(\forall y \in {{[x]}_{I{S_i}}}\), \(y \in {\tilde{Z}}\), which means that \({\left[ x \right] _{I{S_i}}} \subseteq {\tilde{Z}}\). Thus, the support function \(I - LSF_{{\tilde{Z}}}^{I{S_i}}\left( x \right) = 1\) is equivalent to \({\left[ x \right] _{I{S_i}}} \subseteq {\tilde{Z}}\). And in the meantime, we can get \(P\left( {{\tilde{Z}}\left| {{{\left[ x \right] }_{I{S_i}}}} \right. } \right)\) = 1 \(\ge \alpha\), which means \(II - LSF_{{\tilde{Z}}}^{I{S_i}}\left( x \right) = 1\). Similarly, if \(\alpha\)=1, we have that \(P\left( {{\tilde{Z}}\left| {{{[x]}_{I{S_i}}}} \right. } \right)\)=1 is equivalent to \(\frac{{\sum \limits _{y \in {{[x]}_{I{S_i}}}} {{\tilde{Z}}\left( y \right) } }}{{\left| {{{\left[ x \right] }_{I{S_i}}}} \right| }} = \mathrm{{1}}\), which also means that \(\left| {{{\left[ x \right] }_{I{S_i}}}} \right|\)=\(\sum \limits _{y \in {{[x]}_{I{S_i}}}} {{\tilde{Z}}\left( y \right) }\). Thus, the support function \(II - LSF_{{\tilde{Z}}}^{I{S_i}}\left( x \right) =1\) is equivalent to \({\left[ x \right] _{I{S_i}}} \subseteq {\tilde{Z}}\). And in the meantime, we can get \(\sum \limits _{y \in {{\left[ x \right] }_{I{S_i}}}} {\left( {1 - {\tilde{Z}}\left( y \right) } \right) }\) = 0 \(< k\), which means \(I - LSF_{{\tilde{Z}}}^{I{S_i}}\left( x \right) =1\). So we can get that \(L_{_{\left( {\alpha ,\beta } \right) \wedge k}}^O\left( {{\tilde{Z}}} \right) = \left\{ {x \in U \left| {\mathop \vee \limits _{i = 1}^s \left( {{{[x]}_{I{S_i}}} \subseteq {\tilde{Z}}} \right) } \right. } \right\}\) if \(k=0\) or \(\alpha\)=1. \(\square\)

Proposition 3

When \({\tilde{Z}} \subseteq U\), then we can get if \(k=0\) and \(\beta =0\),

Proof

The process is similar to Proposition 2. \(\square\)

Through the above discussions, we all know that four decision regions can be employed to do decision-making. Thus, we can induce four decision rules based on the definition of four decision regions. For convenient, let \(P_{{\tilde{Z}},\,i}(x)\) denote \(P\left( {\tilde{Z}}|[x]_{I{S_i}}\right)\) and \(W_{{\tilde{Z}},\,i}(x)\) denote \(\sum \limits _{y \in {{[x]}_{I{S_i}}}} {{\tilde{Z}}\left( y \right) }\). Take \(\left( {{P^O}} \right)\) as an example. If \(\forall {}i\), \({P_{{\tilde{Z}},\,i}(x)> \beta } \wedge {W_{{\tilde{Z}},\,i}(x) > k}\), then we can get x is in the upper approximation. In the same way, when \(\exists {}i\), \(P_{{\tilde{Z}},\,i}(x) \ge \alpha \wedge \exists {}j, {W_{{\tilde{Z}},\,j}(x) \ge \left| [x]_{IS_{j}}\right| -k}\), we have x is in lower approximation. So if both of these conditions are met, we can decide that x is in the positive region. The remaining three decision rules can be generated similarly. The four rules are presented as following:

We propose LCO-MRFSM by considering the concept of optimistic multigranulation rough set model. Furthermore, we can define the pessimistic model by utilizing the concept of pessimistic multigranulation rough set model.

3.2 Logical conjunction pessimistic multigranulation rough fuzzy set model (LCP-MRFSM)

Definition 2

Given a MsDS \(MsDS = MsIS \cup \left\{ {D,{V_D},{F_D}} \right\}\), where \(MsIS = \left\{ {I{S_i}\left| {I{S_i}} \right. = \left( {U,AT,{V_{AT}},{F_i}} \right) ,i = 1,2,...,n} \right\}\). Assume \(\beta \le \alpha\) and \(\beta ,\alpha \in [0,1]\), and k is a nonpositive integer. For any fuzzy set \({\tilde{Z}} \in F(U)\), let \(L_{_{\left( {\alpha ,\beta } \right) \wedge k}}^P\left( {{\tilde{Z}}} \right)\) denote the lower approximation and \(U_{_{\left( {\alpha ,\beta } \right) \wedge k}}^P\left( {{\tilde{Z}}} \right)\) denote the upper approximation, which are defined as

The four decision regions, similar to LCO-MRFSM, can be computed as

The sample set U can be divided into four parts by the four decision regions. Furthermore, based on Definition 2, we can get some propositions of LCP-MRFSM, which are similar to the propositions of LCO-MRFSM.

Proposition 4

For any fuzzy sets \({\tilde{Z}}\), \({\tilde{M}} \in F(U)\), the following propositions are true.

-

(1).

\(When\, \,{\tilde{Z}} \subseteq {\tilde{M}},L_{_{\left( {\alpha ,\beta } \right) \wedge k}}^P\left( {{\tilde{Z}}} \right) \subseteq L_{_{\left( {\alpha ,\beta } \right) \wedge k}}^P\left( {{\tilde{M}}} \right) .\)

-

(2).

\(When{}{\tilde{Z}} \subseteq {\tilde{M}}, U_{_{\left( {\alpha ,\beta } \right) \wedge k}}^P\left( {{\tilde{Z}}} \right) \subseteq U_{_{\left( {\alpha ,\beta } \right) \wedge k}}^P\left( {{\tilde{M}}} \right) .\)

-

(3).

\(If\, \, \, \, {k_{1\, \, }} \le {k_2},\, \,then{}L_{_{\left( {\alpha ,\beta } \right) \wedge {k_1}}}^P\left( {{\tilde{Z}}} \right) \subseteq L_{_{\left( {\alpha ,\beta } \right) \wedge {k_2}}}^P\left( {{\tilde{Z}}} \right) .\)

-

(4).

\(If\, \, {\beta _1} \le {\beta _2},\, \,then {}U_{_{\left( {\alpha ,{\beta _2} } \right) \wedge k}}^P\left( {{\tilde{Z}}} \right) \subseteq U_{_{\left( {\alpha ,{\beta _1} } \right) \wedge k}}^P\left( {{\tilde{Z}}} \right) .\)

-

(5).

\(U_{_{\left( {\alpha ,\beta } \right) \wedge k}}^P\left( {{\tilde{Z}}} \right) \cup U_{_{\left( {\alpha ,\beta } \right) \wedge k}}^P\left( {{\tilde{M}}} \right) \subseteq U_{_{\left( {\alpha ,\beta } \right) \wedge k}}^P\left( {{\tilde{Z}} \cup {\tilde{M}}} \right) .\)

-

(6).

\(L_{_{\left( {\alpha ,\beta } \right) \wedge k}}^P\left( {{\tilde{Z}}} \right) \cup L_{_{\left( {\alpha ,\beta } \right) \wedge k}}^P\left( {{\tilde{M}}} \right) \subseteq L_{_{\left( {\alpha ,\beta } \right) \wedge k}}^P\left( {{\tilde{Z}} \cup {\tilde{M}}} \right) .\)

-

(7).

\(U_{_{\left( {\alpha ,\beta } \right) \wedge k}}^P\left( {{\tilde{Z}} \cap {\tilde{M}}} \right) \subseteq U_{_{\left( {\alpha ,\beta } \right) \wedge k}}^P\left( {{\tilde{Z}}} \right) \cap U_{_{\left( {\alpha ,\beta } \right) \wedge k}}^P\left( {{\tilde{M}}} \right) .\)

-

(8).

\(L_{_{\left( {\alpha ,\beta } \right) \wedge k}}^P\left( {{\tilde{Z}} \cap {\tilde{M}}} \right) \subseteq L_{_{\left( {\alpha ,\beta } \right) \wedge k}}^P\left( {{\tilde{Z}}} \right) \cap L_{_{\left( {\alpha ,\beta } \right) \wedge k}}^P\left( {{\tilde{M}}} \right) .\)

Proof

The proof process is similar to Proposition 4. \(\square\)

Now, let us consider the situation that \({\tilde{Z}}\) degenerates into a classical set. The following Propositions 5 and 6 show that our model and the pessimistic multigranulation rough set model [28] have the similar form when each information system is regarded as a granularity.

Proposition 5

When \({\tilde{Z}} \subseteq U\), then we can get if \(k=0\) or \(\alpha\)=1,

Proof

The proof process is similar to Proposition 2. \(\square\)

Proposition 6

When \({\tilde{Z}} \subseteq U\), then we can get if \(k=0\) and \(\beta =0\),

Proof

The proof process is similar to Proposition 3. \(\square\)

Four decision rules can be established based on the corresponding four decision regions, which are presented as following:

where \(P_{{\tilde{Z}},\,i}(x)\) denotes \(P\left( {\tilde{Z}}|[x]_{I{S_i}}\right)\) and \(W_{{\tilde{Z}},\,i}(x)\) denotes \(\sum \limits _{y \in {{[x]}_{I{S_i}}}} {{\tilde{Z}}\left( y \right) }\).

3.3 Logical conjunction mean multigranulation rough fuzzy set model(LCM-MRFSM)

The above \(O^\wedge\) and \(P^\wedge\) models utilize the support function operators to define the approximation operators. For any fuzzy set \({\tilde{Z}} \in F(U)\), the \(O^\wedge\) model considers one sample x to belong to the lower approximation when it satisfies \(\exists \, \, \, i\), \(\sum \limits _{y \in {{[x]}_{I{S_i}}}} \left( {1 - {\tilde{Z}}\left( y \right) } \right) \le k\) and \(\exists \, \, \, j\), \(P\left( {{\tilde{Z}}\left| {{{[x]}_{I{S_j}}}} \right. } \right) \ge \alpha\). The condition seems too lenient. While the \(P^\wedge\) model considers one sample x to belong to the lower approximation when it satisfies \(\forall \, \, \, i\), \(\sum \limits _{y \in {{[x]}_{I{S_i}}}} \left( {1 - {\tilde{Z}}\left( y \right) } \right) \le k\) and \(P\left( {{\tilde{Z}}\left| {{{[x]}_{I{S_i}}}} \right. } \right) \ge \alpha\). The condition seems too strict. So in 3.3 and 4.3, we propose a kind of moderate condition by considering the mean number of \(P\left( {\tilde{Z}}|[x]_{I{S_i}}\right)\) and \(\sum \limits _{y \in {{[x]}_{I{S_i}}}} {{\tilde{Z}}\left( y \right) }\).

Definition 3

Given a MsDS \(MsDS = MsIS \cup \left\{ {D,{V_D},{F_D}} \right\}\), where \(MsIS = \left\{ {I{S_i}\left| {I{S_i}} \right. = \left( {U,AT,{V_{AT}},{F_i}} \right) ,i = 1,2,...,n} \right\}\). Assume \(\beta \le \alpha\) and \(\beta ,\alpha \in [0,1]\), and k is a nonpositive integer. For any fuzzy set \({\tilde{Z}} \in F(U)\), let \(L_{_{\left( {\alpha ,\beta } \right) \wedge k}}^M\left( {{\tilde{Z}}} \right)\) denote the lower approximation and \(U_{_{\left( {\alpha ,\beta } \right) \wedge k}}^M\left( {{\tilde{Z}}} \right)\) denote the upper approximation, which are defined as

where \(P_{{\tilde{Z}},\,i}(x)\) denotes \(P\left( {\tilde{Z}}|[x]_{I{S_i}}\right)\) and \(W_{{\tilde{Z}},\,i}(x)\) denotes \(\sum \limits _{y \in {{[x]}_{I{S_i}}}} {{\tilde{Z}}\left( y \right) }\).

The four decision regions can be computed as

The four regions can divide sample set U into four parts. Moreover, we also can get some propositions of LCM-MRFSM based on Definition 3.

Proposition 7

For any fuzzy sets \({\tilde{Z}}\), \({\tilde{M}} \in F(U)\), the following propositions are true.

-

(1).

\(When\, \,{\tilde{Z}} \subseteq {\tilde{M}},L_{_{\left( {\alpha ,\beta } \right) \wedge k}}^M\left( {{\tilde{Z}}} \right) \subseteq L_{_{\left( {\alpha ,\beta } \right) \wedge k}}^M\left( {{\tilde{M}}} \right) .\)

-

(2).

\(When\,{\tilde{Z}} \subseteq {\tilde{M}}, U_{_{\left( {\alpha ,\beta } \right) \wedge k}}^M\left( {{\tilde{Z}}} \right) \subseteq U_{_{\left( {\alpha ,\beta } \right) \wedge k}}^M\left( {{\tilde{M}}} \right) .\)

-

(3).

\(If\, \, \, \, {k_{1\, \, }} \le {k_2},\, \,then{}L_{_{\left( {\alpha ,\beta } \right) \wedge {k_1}}}^M\left( {{\tilde{Z}}} \right) \subseteq L_{_{\left( {\alpha ,\beta } \right) \wedge {k_2}}}^M\left( {{\tilde{Z}}} \right) .\)

-

(4).

\(If\, \, \, \, {\beta _1} \le {\beta _2},\, \,then {}U_{_{\left( {\alpha ,{\beta _2} } \right) \wedge k}}^M\left( {{\tilde{Z}}} \right) \subseteq U_{_{\left( {\alpha ,{\beta _1} } \right) \wedge k}}^M\left( {{\tilde{Z}}} \right) .\)

-

(5).

\(U_{_{\left( {\alpha ,\beta } \right) \wedge k}}^M\left( {{\tilde{Z}}} \right) \cup U_{_{\left( {\alpha ,\beta } \right) \wedge k}}^M\left( {{\tilde{M}}} \right) \subseteq U_{_{\left( {\alpha ,\beta } \right) \wedge k}}^M\left( {{\tilde{Z}} \cup {\tilde{M}}} \right) .\)

-

(6).

\(L_{_{\left( {\alpha ,\beta } \right) \wedge k}}^M\left( {{\tilde{Z}}} \right) \cup L_{_{\left( {\alpha ,\beta } \right) \wedge k}}^M\left( {{\tilde{M}}} \right) \subseteq L_{_{\left( {\alpha ,\beta } \right) \wedge k}}^M\left( {{\tilde{Z}} \cup {\tilde{M}}} \right) .\)

-

(7).

\(U_{_{\left( {\alpha ,\beta } \right) \wedge k}}^M\left( {{\tilde{Z}} \cap {\tilde{M}}} \right) \subseteq U_{_{\left( {\alpha ,\beta } \right) \wedge k}}^M\left( {{\tilde{Z}}} \right) \cap U_{_{\left( {\alpha ,\beta } \right) \wedge k}}^M\left( {{\tilde{M}}} \right) .\)

-

(8).

\(L_{_{\left( {\alpha ,\beta } \right) \wedge k}}^M\left( {{\tilde{Z}} \cap {\tilde{M}}} \right) \subseteq L_{_{\left( {\alpha ,\beta } \right) \wedge k}}^M\left( {{\tilde{Z}}} \right) \cap L_{_{\left( {\alpha ,\beta } \right) \wedge k}}^M\left( {{\tilde{M}}} \right) .\)

Proof

The proof process is similar to Proposition 3. \(\square\)

Now, let us consider the situation that \({\tilde{Z}}\) degenerates into a classical set. The following Propositions 8 and 9 show that our model and the pessimistic multigranulation rough set model [28] have the similar form when each information system is regarded as a granularity.

Proposition 8

When \({\tilde{Z}} \subseteq U\), then we can get if \(k=0\) or \(\alpha\)=1,

Proof

The proof process is similar to Proposition 2. \(\square\)

Proposition 9

When \({\tilde{Z}} \subseteq U\), then we can get if \(k=0\) and \(\beta =0\),

Proof

The proof process is similar to Proposition 3. \(\square\)

The decision rules can be established based on the four decision regions, which are presented as following:

where \(P_{{\tilde{Z}},\,i}(x)\) denotes \(P\left( {\tilde{Z}}|[x]_{D{S_i}}\right)\) and \(W_{{\tilde{Z}},\,i}(x)\) denotes \(\sum \limits _{y \in {{[x]}_{D{S_i}}}} {{\tilde{Z}}\left( y \right) }\).

4 Logical disjunction multigranulation rough fuzzy set model in multi-source decision systems

In this section, similar to LCO-MRFSM, LCP-MRFSM, and LCM-MRFSM, we define three other multigranulation rough fuzzy set models by using the logical disjunction operator. And some propositions of them are discussed.

4.1 Logical disjunction optimistic multigranulation rough fuzzy set model(LDO-MRFSM)

Definition 4

Given a MsDS \(MsDS = MsIS \cup \left\{ {D,{V_D},{F_D}} \right\}\), where \(MsIS = \left\{ {I{S_i}\left| {I{S_i}} \right. = \left( {U,AT,{V_{AT}},{F_i}} \right) ,i = 1,2,...,n} \right\}\). Assume \(\beta \le \alpha\) and \(\beta ,\alpha \in [0,1]\), and k is a nonpositive integer. For any fuzzy set \({\tilde{Z}} \in U\), let \(L_{_{\left( {\alpha ,\beta } \right) \vee k}}^O\left( {{\tilde{Z}}} \right)\) denote the lower approximation and \(U_{_{\left( {\alpha ,\beta } \right) \vee k}}^M\left( {{\tilde{Z}}} \right)\) denote the upper approximation, which are defined as

We also can easily find that \(L_{_{\left( {\alpha ,\beta } \right) \vee k}}^O\left( {{\tilde{Z}}} \right)\) is not included in \(U_{_{\left( {\alpha ,\beta } \right) \vee k}}^O\left( {{\tilde{Z}}} \right)\). So just like the above method, we put samples that that belong to both \(L_{_{\left( {\alpha ,\beta } \right) \vee k}}^O\left( {{\tilde{Z}}} \right)\) and \(U_{_{\left( {\alpha ,\beta } \right) \vee k}}^O\left( {{\tilde{Z}}} \right)\) into the positive region, samples that do not belong to either \(L_{_{\left( {\alpha ,\beta } \right) \vee k}}^O\left( {{\tilde{Z}}} \right)\) or \(U_{_{\left( {\alpha ,\beta } \right) \vee k}}^O\left( {{\tilde{Z}}} \right)\) into the negative region, samples that belong to \(L_{_{\left( {\alpha ,\beta } \right) \vee k}}^O\left( {{\tilde{Z}}} \right)\) but do not belong to \(U_{_{\left( {\alpha ,\beta } \right) \vee k}}^O\left( {{\tilde{Z}}} \right)\) into the lower boundary region, samples that belong to \(U_{_{\left( {\alpha ,\beta } \right) \vee k}}^O\left( {{\tilde{Z}}} \right)\) but do not belong to \(L_{_{\left( {\alpha ,\beta } \right) \vee k}}^O\left( {{\tilde{Z}}} \right)\) into the upper boundary region.

The four decision regions can be computed as

The four regions can divide sample set U into four parts, where the samples in the positive region completely support the concept set, the samples in the negative region completely oppose the concept set, and the samples in the lower and upper boundary may support or oppose the concept set. Furthermore, based on Definition 4, we can get some propositions of LDO-MRFSM.

Proposition 10

For any fuzzy sets \({\tilde{Z}}\), \({\tilde{M}} \in F(U)\), the following propositions are true.

-

(1).

\(When\, \,{\tilde{Z}} \subseteq {\tilde{M}},L_{_{\left( {\alpha ,\beta } \right) \vee k}}^O\left( {{\tilde{Z}}} \right) \subseteq L_{_{\left( {\alpha ,\beta } \right) \vee k}}^O\left( {{\tilde{M}}} \right) .\)

-

(2).

\(When{}{\tilde{Z}} \subseteq {\tilde{M}}, U_{_{\left( {\alpha ,\beta } \right) \vee k}}^O\left( {{\tilde{Z}}} \right) \subseteq U_{_{\left( {\alpha ,\beta } \right) \vee k}}^O\left( {{\tilde{M}}} \right) .\)

-

(3).

\(If\, \, \, \, {k_{1\, \, }} \le {k_2},\, \,then{}L_{_{\left( {\alpha ,\beta } \right) \vee {k_1}}}^O\left( {{\tilde{Z}}} \right) \subseteq L_{_{\left( {\alpha ,\beta } \right) \vee {k_2}}}^O\left( {{\tilde{Z}}} \right) .\)

-

(4).

\(If\, \, \, \, {\beta _1} \le {\beta _2},\, \,then {}U_{_{\left( {\alpha ,{\beta _2} } \right) \vee k}}^O\left( {{\tilde{Z}}} \right) \subseteq U_{_{\left( {\alpha ,{\beta _1} } \right) \vee k}}^O\left( {{\tilde{Z}}} \right) .\)

-

(5).

\(U_{_{\left( {\alpha ,\beta } \right) \vee k}}^O\left( {{\tilde{Z}}} \right) \cup U_{_{\left( {\alpha ,\beta } \right) \vee k}}^O\left( {{\tilde{M}}} \right) \subseteq U_{_{\left( {\alpha ,\beta } \right) \vee k}}^O\left( {{\tilde{Z}} \cup {\tilde{M}}} \right) .\)

-

(6).

\(L_{_{\left( {\alpha ,\beta } \right) \vee k}}^O\left( {{\tilde{Z}}} \right) \cup L_{_{\left( {\alpha ,\beta } \right) \vee k}}^O\left( {{\tilde{M}}} \right) \subseteq L_{_{\left( {\alpha ,\beta } \right) \vee k}}^O\left( {{\tilde{Z}} \cup {\tilde{M}}} \right) .\)

-

(7).

\(U_{_{\left( {\alpha ,\beta } \right) \vee k}}^O\left( {{\tilde{Z}} \cap {\tilde{M}}} \right) \subseteq U_{_{\left( {\alpha ,\beta } \right) \vee k}}^O\left( {{\tilde{Z}}} \right) \cap U_{_{\left( {\alpha ,\beta } \right) \vee k}}^O\left( {{\tilde{M}}} \right) .\)

-

(8).

\(L_{_{\left( {\alpha ,\beta } \right) \vee k}}^O\left( {{\tilde{Z}} \cap {\tilde{M}}} \right) \subseteq L_{_{\left( {\alpha ,\beta } \right) \vee k}}^O\left( {{\tilde{Z}}} \right) \cap L_{_{\left( {\alpha ,\beta } \right) \vee k}}^O\left( {{\tilde{M}}} \right) .\)

Proof

The demonstration process is similar to Proposition 1. \(\square\)

Now, let us consider the situation that \({\tilde{Z}}\) degenerates into a classical set. The following Propositions 11 and 12 demonstrate that our model and optimistic multigranulation rough set model [28] have the similar form when each information system is regarded as a granularity.

Proposition 11

When \({\tilde{Z}} \subseteq U\), then we can get if \(k=0\) or \(\alpha\)=1,

Proof

The proof process is similar to Proposition 2. \(\square\)

Proposition 12

When \({\tilde{Z}} \subseteq U\), then we can get if \(k=0\) and \(\beta =0\),

Proof

The proof process is similar to Proposition 3. \(\square\)

The decision rules can be established based on the four decision regions. For convenient, let \(P_{{\tilde{Z}},\,i}(x)\) denote \(P\left( {\tilde{Z}}|[x]_{I{S_i}}\right)\) and \(W_{{\tilde{Z}},\,i}(x)\) denote \(\sum \limits _{y \in {{[x]}_{I{S_i}}}} {{\tilde{Z}}\left( y \right) }\). Then these rules are presented as following:

4.2 Logical disjunction pessimistic multigranulation rough fuzzy set model(LDP-MRFSM)

Definition 5

Given a MsDS \(MsDS = MsIS \cup \left\{ {D,{V_D},{F_D}} \right\}\), where \(MsIS = \left\{ {I{S_i}\left| {I{S_i}} \right. = \left( {U,AT,{V_{AT}},{F_i}} \right) ,i = 1,2,...,n} \right\}\). Assume \(\beta \le \alpha\) and \(\beta ,\alpha \in [0,1]\), and k is a nonpositive integer. For any fuzzy set \({\tilde{Z}} \in F(U)\), let \(L_{_{\left( {\alpha ,\beta } \right) \vee k}}^P\left( {{\tilde{Z}}} \right)\) denote the lower approximation and \(U_{_{\left( {\alpha ,\beta } \right) \vee k}}^P\left( {{\tilde{Z}}} \right)\) denote the upper approximation, which are defined as

The four decision regions, similar to LDO-MRFSM, can be computed as

The four regions can divide sample set U into four parts. Furthermore, based on Definition 5, we can get some propositions of LDP-MRFSM.

Proposition 13

For any fuzzy sets \({\tilde{Z}}\), \({\tilde{M}} \in F(U)\), the following propositions are true.

-

(1).

\(When\, \,{\tilde{Z}} \subseteq {\tilde{M}},L_{_{\left( {\alpha ,\beta } \right) \vee k}}^P\left( {{\tilde{Z}}} \right) \subseteq L_{_{\left( {\alpha ,\beta } \right) \vee k}}^P\left( {{\tilde{M}}} \right) .\)

-

(2).

\(When{}{\tilde{Z}} \subseteq {\tilde{M}}, U_{_{\left( {\alpha ,\beta } \right) \vee k}}^P\left( {{\tilde{Z}}} \right) \subseteq U_{_{\left( {\alpha ,\beta } \right) \vee k}}^P\left( {{\tilde{M}}} \right) .\)

-

(3).

\(If\, \, {k_{1\, \, }} \le {k_2},\, \,then{}L_{_{\left( {\alpha ,\beta } \right) \vee {k_1}}}^P\left( {{\tilde{Z}}} \right) \subseteq L_{_{\left( {\alpha ,\beta } \right) \vee {k_2}}}^P\left( {{\tilde{Z}}} \right) .\)

-

(4).

\(If\, \, {\beta _1} \le {\beta _2},\, \,then{} U_{_{\left( {\alpha ,{\beta _2} } \right) \vee k}}^P\left( {{\tilde{Z}}} \right) \subseteq U_{_{\left( {\alpha ,{\beta _1} } \right) \vee k}}^P\left( {{\tilde{Z}}} \right) .\)

-

(5).

\(U_{_{\left( {\alpha ,\beta } \right) \vee k}}^P\left( {{\tilde{Z}}} \right) \cup U_{_{\left( {\alpha ,\beta } \right) \vee k}}^P\left( {{\tilde{M}}} \right) \subseteq U_{_{\left( {\alpha ,\beta } \right) \vee k}}^P\left( {{\tilde{Z}} \cup {\tilde{M}}} \right) .\)

-

(6).

\(L_{_{\left( {\alpha ,\beta } \right) \vee k}}^P\left( {{\tilde{Z}}} \right) \cup L_{_{\left( {\alpha ,\beta } \right) \vee k}}^P\left( {{\tilde{M}}} \right) \subseteq L_{_{\left( {\alpha ,\beta } \right) \vee k}}^P\left( {{\tilde{Z}} \cup {\tilde{M}}} \right) .\)

-

(7).

\(U_{_{\left( {\alpha ,\beta } \right) \vee k}}^P\left( {{\tilde{Z}} \cap {\tilde{M}}} \right) \subseteq U_{_{\left( {\alpha ,\beta } \right) \vee k}}^P\left( {{\tilde{Z}}} \right) \cap U_{_{\left( {\alpha ,\beta } \right) \vee k}}^P\left( {{\tilde{M}}} \right) .\)

-

(8).

\(L_{_{\left( {\alpha ,\beta } \right) \vee k}}^P\left( {{\tilde{Z}} \cap {\tilde{M}}} \right) \subseteq L_{_{\left( {\alpha ,\beta } \right) \vee k}}^P\left( {{\tilde{Z}}} \right) \cap L_{_{\left( {\alpha ,\beta } \right) \vee k}}^P\left( {{\tilde{M}}} \right) .\)

Proof

The proof process is similar to Proposition 1. \(\square\)

Now, let us consider the situation that \({\tilde{Z}}\) degenerates into a classical set. The following Propositions 14 and 15 demonstrate that our model and optimistic multigranulation rough set model [28] have the similar form when each information system is regarded as a granularity.

Proposition 14

When \({\tilde{Z}} \subseteq U\), then we can get if \(k=0\) or \(\alpha\)=1,

Proof

The proof process is similar to Proposition 2. \(\square\)

Proposition 15

When \({\tilde{Z}} \subseteq U\), then we can get if \(k=0\) and \(\beta =0\),

Proof

The proof process is similar to Proposition 3. \(\square\)

The decision rules can be established based on the four decision regions, which are presented as follow:

where \(P_{{\tilde{Z}},\,i}(x)\) denotes \(P\left( {\tilde{Z}}|[x]_{I{S_i}}\right)\) and \(W_{{\tilde{Z}},\,i}(x)\) denotes \(\sum \limits _{y \in {{[x]}_{I{S_i}}}} {{\tilde{Z}}\left( y \right) }\).

4.3 Logical disjunction mean multigranulation rough fuzzy set model(LDM-MRFSM)

Definition 6

Given a MsDS \(MsDS = MsIS \cup \left\{ {D,{V_D},{F_D}} \right\}\), where \(MsIS = \left\{ {I{S_i}\left| {I{S_i}} \right. = \left( {U,AT,{V_{AT}},{F_i}} \right) ,i = 1,2,...,n} \right\}\). Assume \(\beta \le \alpha\) and \(\beta ,\alpha \in [0,1]\), and k is a nonpositive integer. For any fuzzy set \({\tilde{Z}} \in F(U)\), let \(L_{_{\left( {\alpha ,\beta } \right) \vee k}}^M\left( {{\tilde{Z}}} \right)\) denote the lower approximation and \(U_{_{\left( {\alpha ,\beta } \right) \vee k}}^M\left( {{\tilde{Z}}} \right)\) represent the upper approximation, which are defined as

where \(P_{{\tilde{Z}},\,i}(x)\) denotes \(P\left( {\tilde{Z}}|[x]_{I{S_i}}\right)\) and \(W_{{\tilde{Z}},\,i}(x)\) denotes \(\sum \limits _{y \in {{[x]}_{I{S_i}}}} {{\tilde{Z}}\left( y \right) }\).

The four decision regions can be computed as

The sample set U can be divided into four parts by the corresponding decision regions. Furthermore, based on Definition 6, we can get some propositions of LDM-MRFSM.

Proposition 16

For any fuzzy sets \({\tilde{Z}}\), \({\tilde{M}} \in F(U)\), the following propositions are true.

-

(1).

\(When\, \,{\tilde{Z}} \subseteq {\tilde{M}},L_{_{\left( {\alpha ,\beta } \right) \vee k}}^M\left( {{\tilde{Z}}} \right) \subseteq L_{_{\left( {\alpha ,\beta } \right) \vee k}}^M\left( {{\tilde{M}}} \right) .\)

-

(2).

\(When{}{\tilde{Z}} \subseteq {\tilde{M}}, U_{_{\left( {\alpha ,\beta } \right) \vee k}}^M\left( {{\tilde{Z}}} \right) \subseteq U_{_{\left( {\alpha ,\beta } \right) \vee k}}^M\left( {{\tilde{M}}} \right) .\)

-

(3).

\(If\, \, {k_{1\, \, }} \le {k_2},\, \,then{}L_{_{\left( {\alpha ,\beta } \right) \vee {k_1}}}^M\left( {{\tilde{Z}}} \right) \subseteq L_{_{\left( {\alpha ,\beta } \right) \vee {k_2}}}^M\left( {{\tilde{Z}}} \right) .\)

-

(4).

\(If\, \, {\beta _1} \le {\beta _2},\, {}then {}U_{_{\left( {\alpha ,{\beta _2} } \right) \vee k}}^M\left( {{\tilde{Z}}} \right) \subseteq U_{_{\left( {\alpha ,{\beta _1} } \right) \vee k}}^M\left( {{\tilde{Z}}} \right) .\)

-

(5).

\(U_{_{\left( {\alpha ,\beta } \right) \vee k}}^M\left( {{\tilde{Z}}} \right) \cup U_{_{\left( {\alpha ,\beta } \right) \vee k}}^M\left( {{\tilde{M}}} \right) \subseteq U_{_{\left( {\alpha ,\beta } \right) \vee k}}^M\left( {{\tilde{Z}} \cup {\tilde{M}}} \right) .\)

-

(6).

\(L_{_{\left( {\alpha ,\beta } \right) \vee k}}^M\left( {{\tilde{Z}}} \right) \cup L_{_{\left( {\alpha ,\beta } \right) \vee k}}^M\left( {{\tilde{M}}} \right) \subseteq L_{_{\left( {\alpha ,\beta } \right) \vee k}}^M\left( {{\tilde{Z}} \cup {\tilde{M}}} \right) .\)

-

(7).

\(U_{_{\left( {\alpha ,\beta } \right) \wedge k}}^M\left( {{\tilde{Z}} \cap {\tilde{M}}} \right) \subseteq U_{_{\left( {\alpha ,\beta } \right) \vee k}}^M\left( {{\tilde{Z}}} \right) \cap U_{_{\left( {\alpha ,\beta } \right) \vee k}}^M\left( {{\tilde{M}}} \right) .\)

-

(8).

\(L_{_{\left( {\alpha ,\beta } \right) \vee k}}^M\left( {{\tilde{Z}} \cap {\tilde{M}}} \right) \subseteq L_{_{\left( {\alpha ,\beta } \right) \vee k}}^M\left( {{\tilde{Z}}} \right) \cap L_{_{\left( {\alpha ,\beta } \right) \vee k}}^M\left( {{\tilde{M}}} \right) .\)

Proof

The proof process is similar to Proposition 1. \(\square\)

Now, let us consider the situation that \({\tilde{Z}}\) degenerates into a classical set. The following Propositions 17 and 18 demonstrate that our model and pessimistic multigranulation rough set model [28] have the similar form when each information system is regarded as a granularity.

Proposition 17

When \({\tilde{Z}} \subseteq U\), then we can get if \(k=0\) or \(\alpha\)=1,

Proof

The proof process is similar to Proposition 2. \(\square\)

Proposition 18

When \({\tilde{Z}} \subseteq U\), then we can get if \(k=0\) and \(\beta =0\),

Proof

The proof process is similar to Proposition 3. \(\square\)

The decision rules can be established based on the four decision regions, which are presented as follow:

where \(P_{{\tilde{Z}},\,i}(x)\) denotes \(P\left( {\tilde{Z}}|[x]_{I{S_i}}\right)\) and \(W_{{\tilde{Z}},\,i}(x)\) denotes \(\sum \limits _{y \in {{[x]}_{I{S_i}}}} {{\tilde{Z}}\left( y \right) }\).

5 Relationship between logical conjunction and disjunction multigranulation rough fuzzy set models

In Sects. 3 and 4, we respectively define three logical conjunction models and three logical disjunction models. In the following, we will discuss the relationship among these models. The connections between these models are intuitively presented in Fig3.

For the lower approximation of the proposed six models, we can find that the conditions of LCO-MRFSM and LDO-MRFSM are the loosest among them, while LCP-MRFSM and LDP-MRFSM are the most stringent. On the contrary, for the upper approximation, LCO-MRFSM and LDO-MRFSM are the most stringent, while LCP-MRFSM and LDP-MRFSM are the most loosest. We all know that the looser the conditions, the bigger the approximation set and the more stringent the conditions, the smaller the approximation set. Proposition 19 shows the concrete form.

Proposition 19

Given a MsDS \(MsDS = MsIS \cup \left\{ {D,{V_D},{F_D}} \right\}\), where \(MsIS = \left\{ {I{S_i}\left| {I{S_i}} \right. = \left( {U,AT,{V_{AT}},{F_i}} \right) ,i = 1,2,...,n} \right\}\). Assume \(\beta \le \alpha\) and \(\beta ,\alpha \in [0,1]\), and k is a nonpositive integer, for any \({\tilde{Z}} \in F(U)\), we have that

-

(1).

\(L_{\left( {\alpha ,\beta } \right) \vee k}^P\left( {{\tilde{Z}}} \right) \subseteq L_{\left( {\alpha ,\beta } \right) \vee k}^M\left( {{\tilde{Z}}} \right) \subseteq L_{\left( {\alpha ,\beta } \right) \vee k}^O\left( {{\tilde{Z}}} \right) ,\)

-

(2).

\(U_{\left( {\alpha ,\beta } \right) \vee k}^O\left( {{\tilde{Z}}} \right) \subseteq U_{\left( {\alpha ,\beta } \right) \vee k}^M\left( {{\tilde{Z}}} \right) \subseteq U_{\left( {\alpha ,\beta } \right) \vee k}^P\left( {{\tilde{Z}}} \right) ,\)

-

(3).

\(L_{\left( {\alpha ,\beta } \right) \wedge k}^P\left( {{\tilde{Z}}} \right) \subseteq L_{\left( {\alpha ,\beta } \right) \wedge k}^M\left( {{\tilde{Z}}} \right) \subseteq L_{\left( {\alpha ,\beta } \right) \wedge k}^O\left( {{\tilde{Z}}} \right) ,\)

-

(4).

\(U_{\left( {\alpha ,\beta } \right) \wedge k}^O\left( {{\tilde{Z}}} \right) \subseteq U_{\left( {\alpha ,\beta } \right) \wedge k}^M\left( {{\tilde{Z}}} \right) \subseteq U_{\left( {\alpha ,\beta } \right) \wedge k}^P\left( {{\tilde{Z}}} \right) .\)

Proof

(1) \(\forall {}x \in L_{\left( {\alpha ,\beta } \right) \vee k}^P\left( {{\tilde{Z}}} \right)\), we have \(\forall {} i\), \(\sum \limits _{y \in {{[x]}_{I{S_i}}}} \left( {1 - {\tilde{Z}}\left( y \right) } \right) \le k\) or \(P\left( {{\tilde{Z}}\left| {{{[x]}_{I{S_i}}}} \right. } \right) \ge \alpha\). So we can get \(\frac{1}{s}\sum \limits _{i=1}^s P_{{\tilde{Z}},\,i}(x) \ge \alpha\) or \(\frac{1}{s}\sum \limits _{i=1}^s W_{{{\tilde{Z}}}^c,\,i}(x) < k\). Thus, \(L_{\left( {\alpha ,\beta } \right) \vee k}^P\left( {{\tilde{Z}}} \right) \subseteq L_{\left( {\alpha ,\beta } \right) \vee k}^M\left( {{\tilde{Z}}} \right)\). And \(\forall x \in L_{\left( {\alpha ,\beta } \right) \vee k}^M\left( {{\tilde{Z}}} \right)\), we have \(\frac{1}{s}\sum \limits _{i=1}^s P_{{\tilde{Z}},\,i}(x) \ge \alpha\) or \(\frac{1}{s}\sum \limits _{i=1}^s W_{{{\tilde{Z}}}^c,\,i}(x) \le k\). So we can get \(\exists {}i\), \(\sum \limits _{i=1}^s P_{{\tilde{Z}},\,i}(x) \ge \alpha\) or \(\sum \limits _{i=1}^s W_{{{\tilde{Z}}}^c,\,i}(x) \le k\). Thus, \(L_{\left( {\alpha ,\beta } \right) \vee k}^M\left( {{\tilde{Z}}} \right) \subseteq L_{\left( {\alpha ,\beta } \right) \vee k}^O\left( {{\tilde{Z}}} \right)\).

(2)-(4) The process is similar to (1).

Proposition 19 discusses the relationship of approximation operators of the logical conjunction and disjunction model respectively. Next, we will discuss the relationship between the logical conjunction and disjunction models.

Proposition 20

Given a MsDS \(MsDS = MsIS \cup \left\{ {D,{V_D},{F_D}} \right\}\), where \(MsIS = \left\{ {I{S_i}\left| {I{S_i}} \right. = \left( {U,AT,{V_{AT}},{F_i}} \right) ,i = 1,2,...,n} \right\}\). Assume \(\beta \le \alpha\) and \(\beta ,\alpha \in [0,1]\), and k is a nonpositive integer, if \(\alpha +\beta =1\),then we can induce that, for any \({\tilde{Z}} \in F(U)\),

-

(1).

\(U_{\left( {\alpha ,\beta } \right) \wedge k}^*\left( {{{{\tilde{Z}}}^c}} \right) = {\left( {L_{\left( {\alpha ,\beta } \right) \vee k}^*\left( {{\tilde{Z}}} \right) } \right) ^c},\)

-

(2).

\(L_{\left( {\alpha ,\beta } \right) \wedge k}^*\left( {{{{\tilde{Z}}}^c}} \right) = {\left( {U_{\left( {\alpha ,\beta } \right) \vee k}^*\left( {{\tilde{Z}}} \right) } \right) ^c},\)

where * denotes one of the optimistic, pessimistic, and mean models.

Proof

(1) We just prove the optimistic model. \(\forall {}x \in U_{\left( {\alpha ,\beta } \right) \wedge k}^O\left( {{{{\tilde{Z}}}^c}} \right)\), we have \(\forall {}i\), \(P\left( {{\tilde{Z}}^c\left| {{{[x]}_{I{S_i}}}} \right. } \right) > \beta\) and \(\sum \limits _{y \in {{[x]}_{I{S_i}}}} \left( {1 - {\tilde{Z}}\left( y \right) } \right) > k\). And we can find that \(P\left( {{\tilde{Z}}^c\left| {{{[x]}_{I{S_i}}}} \right. } \right) > \beta\) is equivalent to \(P\left( {{\tilde{Z}}\left| {{{[x]}_{I{S_i}}}} \right. } \right) < 1-\beta\) = \(\alpha\). So

which is equivalent to the definition of \({L_{\left( {\alpha ,\beta } \right) \vee k}^O\left( {{\tilde{Z}}} \right) }\).

(2) The process is familiar to (1).

Proposition 20 shows the relationship of approximation operators between logical conjunction and disjunction models, which means that the two kinds of models can be translated into each other when \(\alpha +\beta =1\). For example, under the case \(\alpha +\beta =1\), we can compute the lower approximation of the logical disjunction models, and then the upper approximation of the logical conjunction models can be obtained based on (1) of Proposition 20. Now, let us discuss the containment relationship of approximations and decision regions of the proposed six models.

Proposition 21

Given a MsDS \(MsDS = MsIS \cup \left\{ {D,{V_D},{F_D}} \right\}\), where \(MsIS = \left\{ {I{S_i}\left| {I{S_i}} \right. = \left( {U,AT,{V_{AT}},{F_i}} \right) ,i = 1,2,...,n} \right\}\). Assume \(\beta \le \alpha\) and \(\beta ,\alpha \in [0,1]\), and k is a nonpositive integer, then for any \({\tilde{Z}} \in F(U)\), we can get that

-

(1)

\(L_{\left( {\alpha ,\beta } \right) \wedge k}^*\left( {{\tilde{Z}}} \right) \subseteq L_{\left( {\alpha ,\beta } \right) \vee k}^*\left( {{\tilde{Z}}} \right) ,\)

-

(2)

\(U_{\left( {\alpha ,\beta } \right) \wedge k}^*\left( {{\tilde{Z}}} \right) \subseteq U_{\left( {\alpha ,\beta } \right) \vee k}^*\left( {{\tilde{Z}}} \right) ,\)

where * denotes one of the optimistic, pessimistic, and mean models.

Proof

It is easy to prove (1) and (2). \(\square\)

Proposition 21 manifests the containment relationship of approximations. The approximations of the logical disjunction models are larger than the approximations of the logical conjunction models. Based on it, we can induce the following Proposition 22.

Proposition 22

Given a MsDS \(MsDS = \left\{ {\left\{ {I{S_i} = \left( {U,AT,{V_{AT}},F_i } \right) ,i = 1,2,...,s} \right\} \cup MD} \right\}\), \(\beta\), \(\alpha\), and a positive integer k, where \(\beta \le \alpha\) and \(\beta ,\alpha \in [0,1]\), then for any \({\tilde{Z}} \in F(U)\), we can get that

-

(1).

\(Po{s_*}^ \wedge \left( {{\tilde{Z}}} \right) \subseteq Po{s_*}^ \vee \left( {{\tilde{Z}}} \right) ,\)

-

(2).

\(Ne{g_*}^ \vee \left( {{\tilde{Z}}} \right) \subseteq Ne{g_*}^ \wedge \left( {{\tilde{Z}}} \right) ,\)

where * denotes one of the optimistic, pessimistic, and mean models.

Proof

It is easy to prove based on Proposition 21. \(\square\)

Proposition 22 indicates the containment relationship of positive and negative regions. The positive regions of the logical disjunction models are larger than the logical conjunction models, while the negative regions of them are smaller than the logical conjunction models.

Next, we apply our models in a weather example to evaluate the effectiveness of them for decision-making. Given a MsDS MsDS which contains four information sources, which is shown in Table 1. And \({a_1}\), \({a_2}\), \({a_3}\), and \({a_4}\) respectively represents outlook, temperature, humidity, and windy. The decision attribute \({\tilde{d}}\) is a fuzzy set representing good weather and the memberships of samples are denoted as \({\tilde{d}} = \left\{ {\frac{1}{3},\frac{1}{2},\frac{1}{6},\frac{1}{6},\frac{1}{2},\frac{2}{3},\frac{5}{6},\frac{1}{2},\frac{5}{6},\frac{1}{3},\frac{5}{6},\frac{1}{2},\frac{1}{3},\frac{1}{3}} \right\} .\) There is no denying that the thresholds \(\alpha\), \(\beta\), and k will affect the performance of models. So in this study, we consider three cases to evaluate the effectiveness of our models for decision-making. And the corresponding loss functions are given. It should be noted that the large value of k will make the conditions of the support functions become too strict so that the samples in the approximation sets will become very small. Thus, in most situations the value of k should not be too large. Without loss of generality, in this study the value of k is set to 1.

Case 1. When \(\alpha +\beta\)=1, we consider the following loss function:

Then we can get \(\alpha =0.5\) and \(\beta =0.5\). Then the results of approximation are shown in Table 2.

From Table 2, we can easily find that the lower approximation of LDP-MRFSM is contained in LDM-MRFSM, and the lower approximation of LDM-MRFSM is contained in LDO-MRFSM, which satisfies (1) of Proposition 19. And (2)–(4) of Proposition 19 can also be verified to be true under case 1. Furthermore, when we consider the decision attribute \({{{\tilde{d}}}^c} = \left\{ {\frac{2}{3},\frac{1}{2},\frac{5}{6},\frac{5}{6},\frac{1}{2},\frac{1}{3},\frac{1}{6},\frac{1}{2},\frac{1}{6},\frac{2}{3},\frac{1}{6},\frac{1}{2},\frac{2}{3},\frac{2}{3}} \right\}\), we can obtain the approximations of the six models, which are presented in Table 3. Proposition 20 can be demonstrated to be true based on Tables 2 and 3.

The decision regions, according to Table 2, can be computed via definition. The results are shown in Table 4. Samples in the positive region completely support the concept set, and samples in the negative region completely oppose the concept set, while samples in the upper and lower boundary regions may support or oppose the concept set. For example, by using the LDO-MRFSM, \(x_6\), \(x_7\), \(x_9\), and \(x_{11}\) are judged to have good weather while \(x_{13}\) and \(x_{14}\) are judged to not have good weather. The rest of the days are uncertain whether they have good weather or bad weather. Furthermore, we can find that LCP-MRFSM and LCM-MRFSM can divide the data set to four decision regions, LCO-MRFSM and LDP-MRFSM can divide the data set to two decision regions, and LDO-MRFSM and LDM-MRFSM can divide the data set to three decision regions.

Case 2. When \(\alpha +\beta<\)1, we consider the following loss function:

Then we can get \(\alpha =0.5\), \(\beta =0.4\). The results of approximations are shown in Table 5.

The decision regions, according to Table 5, can be computed via definition. The results are shown in Table 6. Take the decision results of LCO-MRFSM as an example. \(x_1\), \(x_3\), \(x_4\), \(x_{10}\), \(x_{13}\), and \(x_{14}\) are judged to not have good weather. The rest of the days are uncertain whether they have good weather or bad weather. Furthermore, we can find that in Case 2, LCP-MRFSM and LCM-MRFSM can also successfully divide the data set to four decision regions, the LCO-MRFSM and LDP-MRFSM can divide the data set to two decision regions, and LDO-MRFSM and LDM-MRFSM can divide a data set to three decision regions.

Case 3. When \(\alpha +\beta>\)1, we consider the following loss function:

Then we can get \(\alpha =0.6\), \(\beta =0.5\). The results of approximations are shown in Table 7.

The decision regions, according to Table 7, can be computed via definition. The results are shown in Table 8. Take the LCM-MRFSM as an example. \(x_9\) and \(x_7\) are judged to have good weather. \(x_1\), \(x_2\), \(x_3\), \(x_4\), \(x_8\), \(x_{10}\), \(x_{12}\), \(x_{13}\), and \(x_{14}\) are judged to not have good weather. The rest of the days are uncertain whether they have good weather or bad weather. Furthermore, we can find that LCP-MRFSM, LCM-MRFSM and LDM-MRFSM can divide the data set to four decision regions, LCO-MRFSM can divide it to two decision regions, and LDO-MRFSM, LDP-MRFSM models can divide it to three decision regions.

All in all, we can find that the proposed six models can successfully divide a data set into two, three or four decision regions, and Proposition 19-22 can be verified based on the results of the case study. Furthermore, we can find that the positive regions of the logical conjunction models are smaller than the logical disjunction models, and the negative regions of them are larger than the logical disjunction models. It stems from Propositions 21 and 22, which can be shown in Fig 4.

6 The algorithm of the proposed six methods and the experimental analysis

In this section, we firstly give the corresponding six algorithms of obtaining the four regions based on the proposed six methods, and the time complexity of these algorithms is analysed. Later, we apply our models in ten data sets to demonstrate that our models perform better than the mean method.

When we obtain a MsDS \(MsDS = MsIS \cup \left\{ {D,{V_D},{F_D}} \right\}\), where \(MsIS = \left\{ {I{S_i}\left| {I{S_i}} \right. = \left( {U,AT,{V_{AT}},{F_i}} \right) ,i = 1,2,...,n} \right\}\). Take LCO-MRFSM as an example. The value of k, \(\alpha\), and \(\beta\) are set in the beginning. For each sample x, the equivalence class of it under \(I{S_i}\) is calculated. After that, the value of the support functions is computed, and then the lower and upper approximations can be computed based on Definition 1. Finally, we can obtain the four decision regions employing the results of approximations. The processes of the other five models are similar to the process of LCO-MRFSM. The corresponding six algorithms about how to calculate the four decision regions are given in Algorithm 1-6. For the algorithm 1, the time complexity of step 5 can be regarded as O(1) when we use the package in python named the Numpy 1.16.5 because the package can avoid doing loops when computing the equivalence classes. That is the reason why we use python as the programming language. And the time complexity of the step 6, 9, 12, and 15 is O(|U|) in the worst case. So the total complexity is \(O\left( {\left| U \right| \times \left( {s \times \left| U \right| } \right) } \right)\) = \(O\left( {s \times {{\left| U \right| }^2}} \right)\), where s denotes the source number of MsDS. The time complexity of the algorithm 2-4 is the same as the algorithm 1. For the algorithm 5 and 6 , the time complexity of step 6 and 7 is O(|U|) in the worst case. And the complexity of the step 9 is O(s). So the total complexity is \(O\left( {\left| U \right| \times \left( {s \times \left| U \right| + s} \right) } \right)\) = \(O\left( {s \times {{\left| U \right| }^2} + s \times \left| U \right| } \right)\). We can easily find that the time complexity of LCM-MRFSM and LDM-MRFSM methods is larger than LCO-MRFSM, LDO-MRFSM, LCP-MRFSM, and LDP-MRFSM in the worst case.

Next, we do the following experiment to show that our models can be applied in real data sets. We download eight data sets on UCI[9] and two data sets on KEEL[10]. The information of the data sets is given in Table 9. All programs of experiments were designed using Python on a personal computer. The details of the environment are shown in Table 10. To apply the models we proposed, the decision attribute values of data sets should be in the range [0, 1], so we can normalize the decision attribute values to fuzzy data before experiments. The normalization formula is

where \({\widetilde{v}}\left( {{e_s}} \right)\) denotes the normalization result of \({v\left( {{e_s}} \right) }\).

We all know that multi-source data sets are not easily obtainable directly from machine learning data sets. In this study, we employ a method similar to [36] to generate a multi-source information system by adding noise. First, two random number sets \(\left\{ {{\mathrm{{n}}_i}\left| {{\mathrm{{n}}_i} \sim N\left( {0,0.01} \right) ,i = 1,2,...,s} \right. } \right\}\) and \(\left\{ {{\mathrm{{r}}_i}\left| {{\mathrm{{r}}_i} \sim U\left( {0,0.1} \right) ,i = 1,2,...,s} \right. } \right\}\) are produced randomly. Next, we randomly select 40% of the original data to add \(n_i\), and 20% of the remaining original data to add \(r_i\), and the rest keep uncharged. By repeating the above process, we can obtain s information systems. The generation process is presented in Fig5. In the following experiment, we construct a multi-source information system which consists of ten information sources.

In the experiment, we compare our models with the mean fusion method which is commonly used. Before experiment, we introduce the mean fusion method. Given a \(MsIS = \, \, \{{I{S_1},I{S_2},...,I{S_q}} \}\). The mean method integrates these subsystems to a single information system by using

where \(NewIS\left( {{x_j},{a_k}} \right)\) represents the fused information and \({I{S_i}\left( {{x_j},{a_k}} \right) }\) represents the information of i-th source. The mean fusion method is suitable to deal with data that is little fluctuant. When data is high fluctuant, the method will have high errors.

It should be noted that in the following experiments, after we obtain a new information system by the mean fusion method, we can compute the lower and upper approximations and the four decision regions of it by the methods presented in [4]. So we can acquire two kinds of decision results, which correspond to the logical conjunction and disjunction models, denoted as \(MeanF^\wedge\) and \(MeanF^\vee\) respectively. Then, the comparison can be conducted on the results of the four decision regions. By utilizing the six proposed models and the mean fusion models, each data set will be divided into four decision regions, which correspond to the positive, negative, upper boundary, and lower boundary region. As previously stated, samples in the positive region completely support the concept set, and samples in the negative region completely oppose the concept set, while samples in the upper and lower boundary regions may support or oppose the concept set. As the previous weather example, let the value of k be 1, and we compare our models with mean method under three cases: \(\alpha\) = \(\beta\) = 0.5; \(\alpha\) = 0.6, \(\beta\) = 0.5; \(\alpha\) = 0.5, \(\beta\) = 0.4. The corresponding loss functions are the same as the previous example. The results are presented in Tables 11, 12, 13, 14, 15, 16, 17, 18, 19 and20. From Table11, we can find that, for the three cases, LDO-MRFSM, LDP-MRFSM and LDM-MRFSM can divide the data set to three decision regions, while the mean fusion models can only divide it to two decision regions. For the data sets CCS, CMC, and WQW, LDP-MRFSM, LCP-MRFSM, LCM-MRFSM, and LDM-MRFSM can divide datasets into three or four regions, while \(MeanF^\vee\) and \(MeanF^\wedge\) models can only divide datasets to two regions under three cases. It shows that the four proposed models perform better than the mean fusion models for the decision-making in three situations. However, LDO-MRFSM and LCO-MRFSM can only divide these data sets into two regions just like \(MeanF^\vee\) and \(MeanF^\wedge\) models for the three datasets. For the data set WP, the six proposed models can divide the data set to four decision regions or at least three decision regions, while the two kinds of mean fusion models can only divide the data set to the negative region and the lower boundary region or the positive region and the lower boundary region. For the data set WQR, the eight methods can all divide the data set to three decision regions. In the data set ANA, all proposed models perform better than the mean fusion methods for decision-making in three situations. For the data set ASN, the \(MeanF^\vee\) model performs better than the proposed six models, dividing the data set to three regions, while the \(MeanF^\wedge\) model has the same effect as the proposed six models, only dividing the data set to two regions. Similarly, for the data sets Shill and Ele, our proposed models and the mean fusion methods can only divide the data sets to two regions.

We can find that in the data sets ASN, Shill, and Ele, our models can only divide the data sets to two decision regions: the negative and the lower boundary region or the positive and the lower boundary region. It stems from the equivalence classes of all samples are equivalent to themselves, called over granulation, which means \({\left[ x \right] _{I{S_i}}} =\left\{ x \right\}\), \(\forall {}x \in U\). When the over granulation happens, for any fuzzy set \({\tilde{Z}} \in F(U)\), we have \(I - LSF_{{\tilde{Z}}}^{I{S_i}}\left( x \right) =\) 1, \(\forall {}x \in U\). So the lower approximation set of the logical disjunction models will be equivalent to U. Thus, none of samples will be put in the negative region or the upper boundary region. For the logical conjunction models, when the over granulation happens, we can have \(II - USF_{{\tilde{Z}}}^{I{S_i}}\left( x \right) = 0\), \(\forall {}x \in U\). So the upper approximation set will be equivalent to an empty set. Thus, none of samples will be put in the upper boundary region or the positive region. Now, take the data set ASN as an illustrative example. We compare the results of decision-making between our models and the mean fusion method \(MeanF^\wedge\) by setting the number of attributes from 1 to 5. Without loss of generality, set the value of k be 1 and \(\alpha\) be 0.5 and \(\beta\) be 0.5. The results are shown in Fig 6 and Fig 7. From the results, we can find that the data set can be divided to four or three decision regions when the number of attributes is 1, 2, 3 or 4 by using the proposed LCO-MRFSM, LDO-MRFSM, LCP-MRFSM, and LCM-MRFSM. However, by using these models, the data set can only be divided to two decision regions when the number of attributes is 5. Thus, we can get that the over granulation is caused by the 5-th attribute. And we can find that the data set ASN can only be divided to the lower boundary and the negative regions by using the mean fusion method \(MeanF^\wedge\) when the number of attributes is 1, 2, 3 or 4. This indicates that LCO-MRFSM, LDO-MRFSM, LCP-MRFSM, and LCM-MRFSM are superior to the mean fusion method \(MeanF^\wedge\) in terms of decision-making when over granulation has been resolved.

7 Conclusion