Abstract

Rough set theory is an effective mathematical tool for dealing with uncertain information. With the arrival of the information age, we need to handle not only single-source data sets but also multi-source data sets. In real life, most of the data we face are fuzzy multi-source data sets. However, no rough set model has yet been proposed for multi-source fuzzy information systems. This paper studies how to use the rough set model in a multi-source fuzzy environment. Firstly, we define a distance formula between two objects in an information table and use it to propose a tolerance relation. Secondly, the support characteristic function is defined through the inclusion relation between tolerance classes and a concept set X. Then, from the perspective of multi-granulation, each information source is regarded as a granularity, and the optimistic, pessimistic and generalized multi-granulation rough set models, together with some of their important properties, are discussed in multi-source fuzzy information systems. Uncertainty measures are also considered for the different models. Finally, some experiments are carried out to interpret and evaluate the validity and significance of the approach.


1 Introduction

With the rapid development of computer science and information technology, people’s ability to collect data has been greatly enhanced, and the volume of information and data in various fields has increased dramatically. However, this information is often neither accurate nor complete; most of the data we collect are fuzzy and imprecise. How to deal with such data is a problem we need to overcome. In 1982, Pawlak, a Polish mathematician, proposed rough set theory [1]. Rough set theory is an effective tool for dealing with imperfect information, such as imprecise, inconsistent and incomplete data. Its mathematical basis is mature, and it does not require prior knowledge. In recent years, many scholars have extended and applied rough set theory [2,3,4,5]. Work combining fuzzy set theory with rough set theory mainly includes the fuzzy rough set model, the rough fuzzy set model [6, 7] and the vaguely quantified rough set (VQRS) model [8]. The rough set model is an important tool for granular computing, in which each partition corresponds to a basic granule, so extending the concept of partition yields granular-based extended rough set models. Zakowski [9] extended partitions to coverings and gave a covering-based rough set model. At present, granular-based extensions of the rough set model mainly combine coverings with formal concept analysis, and Zhu [10] studied covering-based rough set extension models from the perspective of topology. In the setting of fuzzy data sets, Xu et al. [11] proposed a granular computing approach to two-way learning based on formal concept analysis. Bonikowski [12] combined formal concept analysis to study the covering rough set model. In addition, Wang and Feng et al. [13] gave a granular computing method for incomplete information systems.

In Refs. [14, 15], the Pawlak rough set model is regarded as a single-granulation model and is extended to the multi-granulation rough set model. Since then, many scholars have begun to study multi-granulation rough sets [16,17,18]. Xu et al. [19] proposed two kinds of generalized multi-granulation double-quantitative decision-theoretic rough set models. She et al. [20] introduced the idea of three-way decisions into the multi-granulation rough set model and proposed the five-valued semantics of the multi-granulation rough set model. Li et al. [21] proposed a multi-granulation decision-theoretic rough set (DTRS) method for fc-decision information systems.

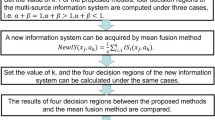

In real life, we now face multi-source information systems rather than a single-source information system, and most of the data we need to deal with come from fuzzy multi-source information systems. At present, the main way to deal with a multi-source information system is information fusion [22,23,24]. In particular, for multi-source decision systems, Sang et al. [25] proposed three kinds of multi-source decision methods that take the uncertainty of the decision-making process into account. For fuzzy multi-source incomplete information systems, Xu et al. [26] proposed an information fusion approach based on information entropy. From the perspective of granular computing, Xu and Yu [27] selected sources by internal and external confidence and then fused multi-source information by triangular fuzzy granules. Li and Zhang [28] proposed an information fusion method based on conditional entropy, which realized the information fusion of incomplete multi-source information systems. For multi-source interval-valued data sets, Huang and Li [29] proposed an information fusion method based on trapezoidal fuzzy granules and realized dynamic fusion when the data sources change dynamically. In applications, Ref. [30] combined multiple sensors with information fusion to predict the residual life of machine tools, and Ref. [31] proposed two new fusion methods within a new framework for human behavior recognition. However, to our knowledge, no rough set model has yet been proposed for the multi-source fuzzy information system (MsFIS). How to give a rough set model for MsFIS directly is our motivation for studying this topic.

Uncertainty is a prominent feature of rough sets, so many uncertainty measurement methods [32,33,34,35,36] have been proposed for extensions of rough sets. Teng and Fan et al. [37] proposed a reasonable uncertainty measurement method based on attribute recognition ability. Chen and Yu et al. [38] proposed uncertainty measurement methods for real data sets, including neighborhood accuracy, information quantity, neighborhood entropy and information granularity. Hu and Zhang et al. [39] proposed a fuzzy rough set model based on Gaussian kernel approximation and introduced information entropy to evaluate the uncertainty of the core matrix and to calculate the approximation operator. Wei et al. [40] proposed a new comprehensive entropy measurement method to measure the uncertainty of UHP hydrodynamics by considering the uncertainty of fuzziness and hysteresis. Huang and Guo et al. [41] discussed the hierarchical structure and uncertainty measures of the IF-approximation space. Intuitionistic fuzzy granularity, intuitionistic fuzzy information entropy, intuitionistic fuzzy rough entropy and intuitionistic fuzzy information Shannon entropy are used to describe the uncertainty of optimistic and pessimistic multi-granulation intuitionistic fuzzy rough sets in a multi-granulation intuitionistic fuzzy approximation space. Xiao et al. systematically studied uncertain databases in Refs. [42,43,44]. In this paper, we propose uncertainty measurement methods for the multi-granulation rough set models of MsFIS.

The rest of this paper is structured as follows. In Sect. 2, we review the basic concepts related to multi-granulation rough sets and multi-source information systems. In Sect. 3, we first define the distance between objects in a single information system and then propose a tolerance relation. Through this relation, the support characteristic function of MsFIS is defined, and the multi-granulation rough set models of MsFIS and their properties are studied. In Sect. 4, we propose different uncertainty measurement methods for the multi-granulation rough set models of MsFIS. In Sect. 5, a series of experiments is carried out to illustrate the relationship among the different models. The conclusion is given in Sect. 6.

2 Preliminaries

This section mainly reviews the basic concepts of Pawlak’s rough set model, multi-granulation rough set model and multi-source fuzzy information system. More detailed descriptions can be found in the literature [1, 14, 15, 45].

2.1 Rough Set Model

For a given family of equivalence relations P, we obtain the corresponding indiscernibility relation ind(P), which determines the corresponding basic knowledge. General knowledge can then be obtained by taking unions of basic knowledge. Based on this understanding, the Polish mathematician Pawlak put forward the concept of a rough set in 1982 and thus established rough set theory. Let R be an equivalence relation on the universe U, and call (U, R) an approximation space. For \(\forall \,X\subseteq U\), if X can be expressed as a union of R-basic knowledge (that is, of R-equivalence classes), then X is R-definable, or an R-exact set; otherwise, X is R-undefinable, or an R-rough set. An exact set can be expressed as a union of basic knowledge, so it can be described accurately. A rough set can be described approximately by two exact sets, one from above and one from below. These are called the upper approximation set and the lower approximation set.

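Let (U, R) be an approximation space. For \(\forall \,X\subseteq U\), the lower approximation set of X and the upper approximation set of X are defined as follows: \(\underline{R}(X)=\{x\in U\,|\,[x]_{R}\subseteq X\}\), \(\overline{R}(X)=\{x\in U\,|\,[x]_{R}\cap X\ne \emptyset \}\), where \([x]_{R}\) denotes the R-equivalence class of x.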

Through the upper approximation set and the lower approximation set, we can divide the universe into three regions: positive region \((POS_{R}(X)=\underline{R}(X))\), negative region \((NEG_{R}(X)=U-\overline{R}(X))\) and boundary region \((Bn_{R}(X)=\overline{R}(X)-\underline{R}(X))\).

From the uncertainty analysis of the set X, we know that the larger the boundary region of the set, the more inaccurate the set is. Next, we use numerical characteristics to measure the inaccuracy of the set; that is, we calculate the approximate accuracy or the roughness of the set to represent the size of the boundary region.

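Let (U, R) be an approximation space. For \(X\subseteq U\,(X\ne \emptyset )\), the approximate accuracy of X and the roughness of X are defined as follows: \(\alpha _{R}(X)=\frac{|\underline{R}(X)|}{|\overline{R}(X)|}\), \(\rho _{R}(X)=1-\alpha _{R}(X)\).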
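The approximations, the three regions and the two measures of this subsection can be illustrated with a minimal Python sketch. The universe, the partition and the concept X below are hypothetical data chosen only for illustration, and the function names are not taken from the paper.

```python
from fractions import Fraction


def lower_approximation(X, partition):
    """Union of the equivalence classes that are contained in X."""
    return {x for block in partition if block <= X for x in block}


def upper_approximation(X, partition):
    """Union of the equivalence classes that intersect X."""
    return {x for block in partition if block & X for x in block}


# Hypothetical universe U and partition U/R (illustrative data only).
U = {1, 2, 3, 4, 5, 6}
partition = [frozenset({1, 2}), frozenset({3, 4}), frozenset({5, 6})]
X = {1, 2, 3}

lower = lower_approximation(X, partition)
upper = upper_approximation(X, partition)

# The three regions induced by the two approximations.
positive = lower
negative = U - upper
boundary = upper - lower

# Approximate accuracy and roughness; X is non-empty, so upper is non-empty.
accuracy = Fraction(len(lower), len(upper))
roughness = 1 - accuracy
```

Here the class {3, 4} meets X without being contained in it, so it forms the boundary region and the accuracy drops below 1.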

2.2 Multi-granulation Rough Set Model

As we all know, the classical rough set theory is a theory formed on a single granularity space under an equivalence relation. In order to apply rough sets to more complex information systems, Qian et al. first proposed multi-granulation rough sets. Multi-granulation rough set is a rough set model based on multiple equivalence relations, which uses partition induced by multiple equivalence relations to carry out approximate characterization of concepts.

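The support characteristic function is defined by the inclusion relation between the equivalence class and the concept set. Given an information system \(I=(U,AT,V,F)\), \(A_{i}\subseteq AT, i=1,2,\ldots ,s\,(s\le 2^{|AT|})\). For any \(X\subseteq U\), the support characteristic function of x for X is denoted as \({\mathcal {S}}^{A_{i}}_{X}(x)\), where \({\mathcal {S}}^{A_{i}}_{X}(x)=1\) if \([x]_{A_{i}}\subseteq X\), and \({\mathcal {S}}^{A_{i}}_{X}(x)=0\) otherwise.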

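Let \(I=(U,AT,V,F)\) be an information system, \(A_{i}\subseteq AT\), \(i=1,2,\ldots ,s\,(s\le 2^{|AT|})\), and \(\beta \in (0.5,1]\) (\(\beta\) is the information level for \(\sum \nolimits _{i=1}^{s} A_{i}\)). For any \(X\subseteq U\), let \({\mathcal {S}}^{A_{i}}_{X}(x)\) be the support characteristic function of x for X. The lower approximation and upper approximation of X for \(\sum \nolimits _{i=1}^{s} A_{i}\) are defined as follows: the lower approximation is \(\left\{ x\in U \left| \right. \frac{\sum \nolimits _{i=1}^{s}{\mathcal {S}}^{A_{i}}_{X}(x)}{s}\ge \beta \right\}\), and the upper approximation is \(\left\{ x\in U \left| \right. \frac{\sum \nolimits _{i=1}^{s}(1-{\mathcal {S}}^{A_{i}}_{X^{c}}(x))}{s}>1-\beta \right\}\).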

The generalized multi-granulation rough set is a generalization of the optimistic multi-granulation rough set and the pessimistic multi-granulation rough set. Based on this, the definition of the pessimistic multi-granulation rough set is given from the perspective of the support characteristic function.

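Given an information system \(I=(U,AT,V,F)\), \(A_{i}\subseteq AT, i=1,2,\ldots ,s\,(s\le 2^{|AT|})\). For any \(X\subseteq U\), the pessimistic lower approximation and pessimistic upper approximation of X for \(\sum \nolimits _{i=1}^{s} A_{i}\) are defined as follows: the pessimistic lower approximation is \(\left\{ x\in U \left| \right. \bigwedge \nolimits _{i=1}^{s}{\mathcal {S}}^{A_{i}}_{X}(x)=1\right\}\), and the pessimistic upper approximation is \(\left\{ x\in U \left| \right. \bigvee \nolimits _{i=1}^{s}(1-{\mathcal {S}}^{A_{i}}_{X^{c}}(x))=1\right\}\),

where “\(\vee\)” and “\(\wedge\)” denote “or” and “and”, respectively.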

Similarly, the definition of the optimistic multi-granulation rough set is given from the perspective of the support characteristic function.

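Given an information system \(I=(U,AT,V,F)\), \(A_{i}\subseteq AT\), \(i=1,2,\ldots ,s\,(s\le 2^{|AT|})\). For any \(X\subseteq U\), the optimistic lower approximation and optimistic upper approximation of X for \(\sum \nolimits _{i=1}^{s} A_{i}\) are defined as follows: the optimistic lower approximation is \(\left\{ x\in U \left| \right. \bigvee \nolimits _{i=1}^{s}{\mathcal {S}}^{A_{i}}_{X}(x)=1\right\}\), and the optimistic upper approximation is \(\left\{ x\in U \left| \right. \bigwedge \nolimits _{i=1}^{s}(1-{\mathcal {S}}^{A_{i}}_{X^{c}}(x))=1\right\}\),

where “\(\vee\)” and “\(\wedge\)” denote “or” and “and”, respectively.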
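The three multi-granulation models of this subsection can be compared on a toy example. The sketch below is illustrative only: it assumes the usual reading in which a granulation supports x when the equivalence class of x lies inside X, takes the optimistic model as "at least one granulation supports x" and the pessimistic model as "every granulation supports x", and uses hypothetical partitions and function names.

```python
from fractions import Fraction


def support(x, X, partition):
    """Support characteristic function: 1 if the class of x lies inside X, else 0."""
    block = next(b for b in partition if x in b)
    return 1 if block <= X else 0


def generalized(X, U, partitions, beta):
    """Generalized lower/upper approximations at information level beta."""
    s, Xc = len(partitions), U - X
    lower = {x for x in U
             if Fraction(sum(support(x, X, p) for p in partitions), s) >= beta}
    upper = {x for x in U
             if Fraction(sum(1 - support(x, Xc, p) for p in partitions), s) > 1 - beta}
    return lower, upper


def optimistic(X, U, partitions):
    """Lower: some granulation supports x; upper: no granulation supports x for X^c."""
    Xc = U - X
    lower = {x for x in U if any(support(x, X, p) for p in partitions)}
    upper = {x for x in U if all(1 - support(x, Xc, p) for p in partitions)}
    return lower, upper


def pessimistic(X, U, partitions):
    """Lower: every granulation supports x; upper: some granulation does not support x for X^c."""
    Xc = U - X
    lower = {x for x in U if all(support(x, X, p) for p in partitions)}
    upper = {x for x in U if any(1 - support(x, Xc, p) for p in partitions)}
    return lower, upper


# Hypothetical universe and three granulations (illustrative data only).
U = {1, 2, 3, 4, 5, 6}
partitions = [
    [frozenset({1, 2}), frozenset({3, 4}), frozenset({5, 6})],
    [frozenset({1}), frozenset({2, 3}), frozenset({4, 5, 6})],
    [frozenset({1, 2, 3}), frozenset({4}), frozenset({5, 6})],
]
X = {1, 2, 3}

gen_two_thirds = generalized(X, U, partitions, Fraction(2, 3))
gen_one = generalized(X, U, partitions, Fraction(1))
opt = optimistic(X, U, partitions)
pes = pessimistic(X, U, partitions)
```

On this data the generalized model with \(\beta =1\) coincides with the pessimistic model, which matches the remark that the generalized model covers the pessimistic one as a special case.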

2.3 Multi-source Fuzzy Information Systems

As the name implies, a multi-source information system consists of information tables obtained from different sources. The tables obtained may have the same structure or different structures. This paper mainly discusses the case of the same structure; that is, the objects and attributes are the same under every information source, and the attribute values have the same numerical characteristics.

Let \(MsIS=\{IS_{1},IS_{2},\ldots ,IS_{q}\}\) be an isomorphic multi-source information system, where \(IS_{i}=(U,AT,V_{i},F_{i})\), U is the set of all objects under study, AT is the set of features or attributes of the objects, \(V_{i}\) is the range of values of the attributes under source i, and \(F_{i}\) is the mapping between objects and feature values under information source i. Similarly, an isomorphic multi-source fuzzy information system (MsFIS) is composed of several quadruples of the form \(FIS_{i}=(U,AT,V_{i},F_{i})\). Unlike in MsIS, the values in \(V_{i}\) in MsFIS lie between 0 and 1. A MsFIS is shown in Fig. 1.

3 Multi-granulation Rough Set Model for Multi-source Fuzzy Information System

In this section, we propose multi-granulation rough set models for multi-source fuzzy data sets: the generalized multi-granulation rough set model of MsFIS and two special multi-granulation rough set models, namely the optimistic multi-granulation rough set model of MsFIS and the pessimistic multi-granulation rough set model of MsFIS.

As we all know, classical rough set theory can help us perform knowledge discovery and data mining on inaccurate and incomplete data. However, when rough sets are applied to fuzzy data sets, the original equivalence relation is no longer applicable. Therefore, many scholars have extended the rough set model by defining different relations in order to solve different problems. In this paper, we propose a tolerance relation through Formula (9) in order to study the rough set model for multi-source fuzzy data sets. That is to say, when the distance between two objects is less than a threshold, we say that the two objects are related.

Definition 3.1

Let \(FIS=\{U,\tilde{A},V,F\}\) be a fuzzy information system, where \(U=\{x_{1},x_{2},\ldots ,x_{n}\}\), \(\tilde{A}=\{a_{1},a_{2},\ldots ,a_{p}\}\). For any \(x_{i},x_{j}\in U\), we define the distance between two objects in a fuzzy information system as follows:

Definition 3.2

Let \(FIS=\{U,\tilde{A},V,F\}\) be a fuzzy information system. We define a binary tolerance relation in a fuzzy information system as follows:

where d is a threshold. Obviously, this binary tolerance relation satisfies reflexivity and symmetry (but, in general, not transitivity). The tolerance class \([x]_{TR(\tilde{A})}\) induced by the tolerance relation \(TR(\tilde{A})\) is denoted as: \([x]_{TR(\tilde{A})}=\{y\,|\,(x,y)\in TR(\tilde{A})\}\).
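How a distance threshold induces tolerance classes can be sketched in Python. The distance used below (the mean absolute difference of membership degrees) is only a placeholder assumption and is not the paper's Formula (9); treating "within the threshold" as "less than or equal to d" is likewise an assumption, and the table and names are hypothetical.

```python
def distance(u, v):
    """Placeholder distance (assumption, not Formula (9)): mean absolute difference."""
    return sum(abs(a - b) for a, b in zip(u, v)) / len(u)


def tolerance_classes(table, d):
    """Map each object x to its tolerance class {y | distance(x, y) <= d}."""
    return {
        x: {y for y in table if distance(table[x], table[y]) <= d}
        for x in table
    }


# Hypothetical fuzzy information table: object -> membership degrees in [0, 1].
table = {
    "x1": (0.1, 0.2),
    "x2": (0.2, 0.3),
    "x3": (0.3, 0.4),
    "x4": (0.9, 0.8),
}

classes = tolerance_classes(table, d=0.15)

# Reflexivity and symmetry hold by construction of the relation.
reflexive = all(x in classes[x] for x in table)
symmetric = all(x in classes[y] for x in table for y in classes[x])

# Transitivity can fail: x1 ~ x2 and x2 ~ x3, but x1 and x3 are too far apart.
transitive_here = "x3" in classes["x1"]
```

Because transitivity fails, the tolerance classes overlap and form a covering of U rather than a partition, which is why the models in this section are phrased through the inclusion of a tolerance class in X.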

Definition 3.3

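Given a multi-source fuzzy information system \(MsFIS=\{FIS_{1},FIS_{2},\ldots ,FIS_{q}\}\), where \(FIS_{i}=(U,\tilde{A}_{i},V_{i},F_{i})\) (\(i\le q\)). For any \(X\subseteq U\) and \(x\in U\), denote \({\mathcal {F}}^{\tilde{A}_{i}}_{X}(x)=1\) if \([x]_{TR(\tilde{A}_{i})}\subseteq X\), and \({\mathcal {F}}^{\tilde{A}_{i}}_{X}(x)=0\) otherwise.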

The function \({\mathcal {F}}^{\tilde{A}_{i}}_{X}(x)\) is called the support characteristic function of x for X under the information source i. It describes the inclusion relation between the tolerance class \([x]_{TR(\tilde{A}_{i})}\) and the concept X, and indicates whether object x accurately supports X by \(\tilde{A}_{i}\).

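For convenience, we abbreviate the tolerance class \([x]_{TR(\tilde{A}_{i})}\) of x under the information source i to \([x]_{\tilde{A}_{i}}\). Therefore, in the subsequent analysis, the support characteristic function is expressed as follows: \({\mathcal {F}}^{\tilde{A}_{i}}_{X}(x)=1\) if \([x]_{\tilde{A}_{i}}\subseteq X\), and \({\mathcal {F}}^{\tilde{A}_{i}}_{X}(x)=0\) otherwise.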
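A small Python sketch may help to see the support characteristic function as a 0/1 vote per information source. The tolerance classes below are hypothetical (they are written out by hand rather than derived from a fuzzy table), and the names are illustrative.

```python
def support(x, X, tolerance_class):
    """Return 1 if the tolerance class of x under one source lies inside X, else 0."""
    return 1 if tolerance_class[x] <= X else 0


# Hypothetical tolerance classes of U = {1, 2, 3, 4} under three sources.
# Each mapping is reflexive and symmetric, as a tolerance relation requires.
SOURCES = [
    {1: {1, 2}, 2: {1, 2, 3}, 3: {2, 3}, 4: {4}},
    {1: {1}, 2: {2, 3}, 3: {2, 3, 4}, 4: {3, 4}},
    {1: {1, 2}, 2: {1, 2}, 3: {3}, 4: {4}},
]
X = {1, 2, 3}

# One row per object: the votes of source 1, source 2 and source 3.
support_table = {
    x: [support(x, X, classes) for classes in SOURCES]
    for x in (1, 2, 3, 4)
}
```

Object 3 is supported by the first and third sources but not by the second, whose tolerance class {2, 3, 4} reaches outside X.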

3.1 Generalized Multi-granulation Rough Set Model for Multi-source Fuzzy Information Systems

Definition 3.4

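Let \(MsFIS=\{FIS_{1},FIS_{2},\ldots ,FIS_{q}\}\) be a multi-source fuzzy information system, where \(FIS_{i}=(U,\tilde{A}_{i},V_{i},F_{i})\) (\(i\le q\)), let \(\beta \in (0.5,1]\) be a threshold, and let \({\mathcal {F}}^{\tilde{A}_{i}}_{X}(x)\) be the support characteristic function of x for X. For any \(X\subseteq U\), the lower approximation set and the upper approximation set of X in MsFIS are defined as follows: \(\underline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)_{\beta }=\left\{ x\in U \left| \right. \frac{\sum \nolimits _{i=1}^{q}{\mathcal {F}}^{\tilde{A}_{i}}_{X}(x)}{q}\ge \beta \right\}\), \(\overline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)_{\beta }=\left\{ x\in U \left| \right. \frac{\sum \nolimits _{i=1}^{q}(1-{\mathcal {F}}^{\tilde{A}_{i}}_{X^{c}}(x))}{q}>1-\beta \right\}\).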

The ordered pair \(\langle \underline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)_{\beta },\overline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)_{\beta }\rangle\) is called the generalized multi-granulation rough set model for MsFIS. And

Theorem 3.1

Let \(MsFIS=\{FIS_{1},FIS_{2},\ldots ,FIS_{q}\}\) be a multi-source fuzzy information system, where \(FIS_{i}=(U,\tilde{A}_{i},V_{i},F_{i})\) (\(i\le q\)), and let \(\beta \in (0.5,1]\) be a threshold. For any \(X,Y\subseteq U\), the following properties of the upper and lower approximation operators hold.

(1) \(\underline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(\sim X)_{\beta }=\sim \overline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)_{\beta },\,\,\overline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(\sim X)_{\beta }=\sim \underline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)_{\beta }.\)

(2) \(\underline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)_{\beta }\subseteq X\subseteq \overline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)_{\beta }.\)

(3) \(\underline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(\emptyset )_{\beta }= \overline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(\emptyset )_{\beta }=\emptyset .\)

(4) \(\underline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(U)_{\beta }= \overline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(U)_{\beta }=U.\)

(5) \(X\subseteq Y\Rightarrow \underline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)_{\beta }\subseteq \underline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(Y)_{\beta },\,\, \overline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)_{\beta }\subseteq \overline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(Y)_{\beta }.\)

(6) \(\underline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X\cap Y)_{\beta }\subseteq \underline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)_{\beta }\cap \underline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(Y)_{\beta },\,\, \overline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X\cap Y)_{\beta }\subseteq \overline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)_{\beta }\cap \overline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(Y)_{\beta }.\)

(7) \(\underline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X\cup Y)_{\beta }\supseteq \underline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)_{\beta }\cup \underline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(Y)_{\beta },\,\, \overline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X\cup Y)_{\beta }\supseteq \overline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)_{\beta }\cup \overline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(Y)_{\beta }.\)

Proof

(1) Since \(x\in \overline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)_{\beta }\Leftrightarrow \frac{\sum \nolimits _{i=1}^{q}(1-{\mathcal {F}}^{\tilde{A}_{i}}_{X^{c}}(x))}{q}>1-\beta\), we have \(x\in \sim \overline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)_{\beta }\Leftrightarrow \frac{\sum \nolimits _{i=1}^{q}(1-{\mathcal {F}}^{\tilde{A}_{i}}_{X^{c}}(x))}{q}\le 1-\beta \Leftrightarrow \frac{\sum \nolimits _{i=1}^{q}{\mathcal {F}}^{\tilde{A}_{i}}_{X^{c}}(x)}{q}\ge \beta \Leftrightarrow x\in \underline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(\sim X)_{\beta }.\) Since \(x\in \underline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)_{\beta }\Leftrightarrow \frac{\sum \nolimits _{i=1}^{q}{\mathcal {F}}^{\tilde{A}_{i}}_{X}(x)}{q}\ge \beta\), we have \(x\in \sim \underline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)_{\beta }\Leftrightarrow \frac{\sum \nolimits _{i=1}^{q}{\mathcal {F}}^{\tilde{A}_{i}}_{X}(x)}{q}<\beta \Leftrightarrow 1-\frac{\sum \nolimits _{i=1}^{q}{\mathcal {F}}^{\tilde{A}_{i}}_{X}(x)}{q}>1-\beta \Leftrightarrow \frac{\sum \nolimits _{i=1}^{q}(1-{\mathcal {F}}^{\tilde{A}_{i}}_{X}(x))}{q}=\frac{\sum \nolimits _{i=1}^{q}(1-{\mathcal {F}}^{\tilde{A}_{i}}_{(X^{c})^{c}}(x))}{q}>1-\beta \Leftrightarrow x\in \overline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(\sim X)_{\beta }.\)

(2) For any \(x\in \underline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)_{\beta }\), we know \(\frac{\sum \nolimits _{i=1}^{q}{\mathcal {F}}^{\tilde{A}_{i}}_{X}(x)}{q}\ge \beta\). Since \(\beta \in (0.5,1]\), in particular \(\beta >0\), so \(\exists \,i\le q\) such that \([x]_{\tilde{A}_{i}}\subseteq X\). By reflexivity, \(x\in [x]_{\tilde{A}_{i}}\), so \(x\in X\). Since x is arbitrary, \(\underline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)_{\beta }\subseteq X\). Applying this to \(\sim X\) and using property (1), \(\underline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(\sim X)_{\beta }=\sim \overline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)_{\beta }\subseteq \sim X\), so we obtain \(X\subseteq \overline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)_{\beta }\). Therefore, property (2) is proved.

(3) Since every tolerance class is non-empty, \({\mathcal {F}}^{\tilde{A}_{i}}_{\emptyset }(x)=0\), and we obtain \(\underline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(\emptyset )_{\beta }=\left\{ x\in U \left| \right. \frac{\sum \nolimits _{i=1}^{q}{\mathcal {F}}^{\tilde{A}_{i}}_{\emptyset }(x)}{q}=\frac{\sum \nolimits _{i=1}^{q}0}{q}=0\ge \beta \right\} =\emptyset .\) Since \(\emptyset ^{c}=U\) and \({\mathcal {F}}^{\tilde{A}_{i}}_{U}(x)=1\), we also obtain \(\overline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(\emptyset )_{\beta }=\left\{ x\in U \left| \right. \frac{\sum \nolimits _{i=1}^{q}(1-{\mathcal {F}}^{\tilde{A}_{i}}_{U}(x))}{q}=\frac{\sum \nolimits _{i=1}^{q}0}{q}=0> 1-\beta \right\} =\emptyset .\)

(4) Since \({\mathcal {F}}^{\tilde{A}_{i}}_{U}(x)=1\), we have \(\underline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(U)_{\beta }=\left\{ x\in U \left| \right. \frac{\sum \nolimits _{i=1}^{q}{\mathcal {F}}^{\tilde{A}_{i}}_{U}(x)}{q}=\frac{\sum \nolimits _{i=1}^{q}1}{q}=1\ge \beta \right\} =U.\) Since \(U^{c}=\emptyset\) and \({\mathcal {F}}^{\tilde{A}_{i}}_{\emptyset }(x)=0\), we also have \(\overline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(U)_{\beta }=\left\{ x\in U \left| \right. \frac{\sum \nolimits _{i=1}^{q}(1-{\mathcal {F}}^{\tilde{A}_{i}}_{\emptyset }(x))}{q}=\frac{\sum \nolimits _{i=1}^{q}1}{q}=1> 1-\beta \right\} =U.\)

(5) For any \(x\in \underline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)_{\beta }\), we know \(\frac{\sum \nolimits _{i=1}^{q}{\mathcal {F}}^{\tilde{A}_{i}}_{X}(x)}{q}\ge \beta\). Because \(X\subseteq Y\), we have \({\mathcal {F}}_{X}^{\tilde{A}_{i}}(x)\le {\mathcal {F}}_{Y}^{\tilde{A}_{i}}(x)\). So we obtain \(\frac{\sum \nolimits _{i=1}^{q}{\mathcal {F}}^{\tilde{A}_{i}}_{Y}(x)}{q}\ge \frac{\sum \nolimits _{i=1}^{q}{\mathcal {F}}^{\tilde{A}_{i}}_{X}(x)}{q}\ge \beta\). Therefore, \(x\in \underline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(Y)_{\beta }.\) For any \(x\in \overline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)_{\beta }\), we know \(\frac{\sum \nolimits _{i=1}^{q}(1-{\mathcal {F}}^{\tilde{A}_{i}}_{X^{c}}(x))}{q}>1-\beta\). Because \(X\subseteq Y\), we have \(Y^{c}\subseteq X^{c}\), and hence \({\mathcal {F}}_{Y^{c}}^{\tilde{A}_{i}}(x)\le {\mathcal {F}}_{X^{c}}^{\tilde{A}_{i}}(x)\). So we obtain \(\frac{\sum \nolimits _{i=1}^{q}(1-{\mathcal {F}}^{\tilde{A}_{i}}_{Y^{c}}(x))}{q}\ge \frac{\sum \nolimits _{i=1}^{q}(1-{\mathcal {F}}^{\tilde{A}_{i}}_{X^{c}}(x))}{q}>1-\beta\). Therefore, \(x\in \overline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(Y)_{\beta }.\)

(6) For any \(x\in \underline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X\cap Y)_{\beta }\), we know \(\frac{\sum \nolimits _{i=1}^{q}{\mathcal {F}}^{\tilde{A}_{i}}_{X\cap Y}(x)}{q}=\frac{\sum \nolimits _{i=1}^{q}({\mathcal {F}}^{\tilde{A}_{i}}_{X}(x))\wedge ({\mathcal {F}}^{\tilde{A}_{i}}_{Y}(x))}{q}\ge \beta\). Thus, \(\frac{\sum \nolimits _{i=1}^{q}{\mathcal {F}}^{\tilde{A}_{i}}_{X}(x)\wedge \sum \nolimits _{i=1}^{q}{\mathcal {F}}^{\tilde{A}_{i}}_{Y}(x)}{q}\ge \frac{\sum \nolimits _{i=1}^{q}({\mathcal {F}}^{\tilde{A}_{i}}_{X}(x)\wedge {\mathcal {F}}^{\tilde{A}_{i}}_{Y}(x))}{q}\ge \beta\). So \(\frac{\sum \nolimits _{i=1}^{q}{\mathcal {F}}^{\tilde{A}_{i}}_{X}(x)}{q}\ge \beta\) and \(\frac{\sum \nolimits _{i=1}^{q}{\mathcal {F}}^{\tilde{A}_{i}}_{Y}(x)}{q}\ge \beta\), i.e., \(x\in \underline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)_{\beta }\) and \(x\in \underline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(Y)_{\beta }\). Therefore, \(x\in \underline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)_{\beta }\cap \underline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(Y)_{\beta }.\) For any \(x\in \overline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X\cap Y)_{\beta }\), we know \(\frac{\sum \nolimits _{i=1}^{q}(1-{\mathcal {F}}^{\tilde{A}_{i}}_{(X\cap Y)^{c}}(x))}{q}>1-\beta \Leftrightarrow \frac{\sum \nolimits _{i=1}^{q}{\mathcal {F}}^{\tilde{A}_{i}}_{(X\cap Y)^{c}}(x)}{q}<\beta \Leftrightarrow \frac{\sum \nolimits _{i=1}^{q}{\mathcal {F}}^{\tilde{A}_{i}}_{(X^{c}\cup Y^{c})}(x)}{q}<\beta\). Thus, \(\frac{\sum \nolimits _{i=1}^{q}{\mathcal {F}}^{\tilde{A}_{i}}_{X^{c}}(x)\vee \sum \nolimits _{i=1}^{q}{\mathcal {F}}^{\tilde{A}_{i}}_{Y^{c}}(x)}{q}\le \frac{\sum \nolimits _{i=1}^{q}({\mathcal {F}}^{\tilde{A}_{i}}_{X^{c}}(x)\vee {\mathcal {F}}^{\tilde{A}_{i}}_{Y^{c}}(x))}{q}\le \frac{\sum \nolimits _{i=1}^{q}{\mathcal {F}}^{\tilde{A}_{i}}_{(X^{c}\cup Y^{c})}(x)}{q}<\beta\). So \(\frac{\sum \nolimits _{i=1}^{q}{\mathcal {F}}^{\tilde{A}_{i}}_{X^{c}}(x)}{q}<\beta\) and \(\frac{\sum \nolimits _{i=1}^{q}{\mathcal {F}}^{\tilde{A}_{i}}_{Y^{c}}(x)}{q}<\beta\), that is, \(1-\frac{\sum \nolimits _{i=1}^{q}{\mathcal {F}}^{\tilde{A}_{i}}_{X^{c}}(x)}{q}>1-\beta\) and \(1-\frac{\sum \nolimits _{i=1}^{q}{\mathcal {F}}^{\tilde{A}_{i}}_{Y^{c}}(x)}{q}>1-\beta\), i.e., \(x\in \overline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)_{\beta }\) and \(x\in \overline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(Y)_{\beta }\). Therefore, \(x\in \overline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)_{\beta }\cap \overline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(Y)_{\beta }.\)

(7) For any \(x\in \underline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)_{\beta }\cup \underline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(Y)_{\beta }\), i.e., \(x\in \underline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)_{\beta }\) or \(x\in \underline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(Y)_{\beta }\), we know \(\frac{\sum \nolimits _{i=1}^{q}{\mathcal {F}}^{\tilde{A}_{i}}_{X}(x)}{q}\ge \beta\) or \(\frac{\sum \nolimits _{i=1}^{q}{\mathcal {F}}^{\tilde{A}_{i}}_{Y}(x)}{q}\ge \beta\). Thus, \(\frac{\sum \nolimits _{i=1}^{q}{\mathcal {F}}^{\tilde{A}_{i}}_{X\cup Y}(x)}{q}\ge \frac{\sum \nolimits _{i=1}^{q}({\mathcal {F}}^{\tilde{A}_{i}}_{X}(x)\vee {\mathcal {F}}^{\tilde{A}_{i}}_{Y}(x))}{q}\ge \frac{\sum \nolimits _{i=1}^{q}{\mathcal {F}}^{\tilde{A}_{i}}_{X}(x)\vee \sum \nolimits _{i=1}^{q}{\mathcal {F}}^{\tilde{A}_{i}}_{Y}(x)}{q}\ge \beta\). So \(x\in \underline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X\cup Y)_{\beta }\). For any \(x\in \overline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)_{\beta }\cup \overline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(Y)_{\beta }\), i.e., \(x\in \overline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)_{\beta }\) or \(x\in \overline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(Y)_{\beta }\), we know \(\frac{\sum \nolimits _{i=1}^{q}(1-{\mathcal {F}}^{\tilde{A}_{i}}_{X^{c}}(x))}{q}>1-\beta\) or \(\frac{\sum \nolimits _{i=1}^{q}(1-{\mathcal {F}}^{\tilde{A}_{i}}_{Y^{c}}(x))}{q}>1-\beta\). Thus, \(\frac{\sum \nolimits _{i=1}^{q}{\mathcal {F}}^{\tilde{A}_{i}}_{X^{c}}(x)}{q}<\beta\) or \(\frac{\sum \nolimits _{i=1}^{q}{\mathcal {F}}^{\tilde{A}_{i}}_{Y^{c}}(x)}{q}<\beta\), and \(\frac{\sum \nolimits _{i=1}^{q}{\mathcal {F}}^{\tilde{A}_{i}}_{(X\cup Y)^{c}}(x)}{q}=\frac{\sum \nolimits _{i=1}^{q}{\mathcal {F}}^{\tilde{A}_{i}}_{(X^{c}\cap Y^{c})}(x)}{q}=\frac{\sum \nolimits _{i=1}^{q}({\mathcal {F}}^{\tilde{A}_{i}}_{X^{c}}(x)\wedge {\mathcal {F}}^{\tilde{A}_{i}}_{Y^{c}}(x))}{q}\le \frac{\sum \nolimits _{i=1}^{q}{\mathcal {F}}^{\tilde{A}_{i}}_{X^{c}}(x)\wedge \sum \nolimits _{i=1}^{q}{\mathcal {F}}^{\tilde{A}_{i}}_{Y^{c}}(x)}{q}<\beta\). So \(\frac{\sum \nolimits _{i=1}^{q}(1-{\mathcal {F}}^{\tilde{A}_{i}}_{(X\cup Y)^{c}}(x))}{q}>1-\beta\), i.e., \(x\in \overline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X\cup Y)_{\beta }\).

\(\square\)
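The properties of Theorem 3.1 can be checked by brute force on a small example. The sketch below is not part of the proof: it assumes the lower approximation collects objects whose average support is at least \(\beta\) and the upper approximation is its dual, as used in the proof above, and it relies on hypothetical tolerance classes for three sources. Exact fractions are used so that the threshold comparisons are not affected by rounding.

```python
from fractions import Fraction
from itertools import combinations

U = frozenset({1, 2, 3, 4})
# Hypothetical tolerance classes (reflexive and symmetric) under three sources.
SOURCES = [
    {1: {1, 2}, 2: {1, 2, 3}, 3: {2, 3}, 4: {4}},
    {1: {1}, 2: {2, 3}, 3: {2, 3, 4}, 4: {3, 4}},
    {1: {1, 2}, 2: {1, 2}, 3: {3}, 4: {4}},
]


def support(x, X, classes):
    return 1 if classes[x] <= X else 0


def lower(X, beta):
    q = len(SOURCES)
    return frozenset(x for x in U
                     if Fraction(sum(support(x, X, c) for c in SOURCES), q) >= beta)


def upper(X, beta):
    q, Xc = len(SOURCES), U - X
    return frozenset(x for x in U
                     if Fraction(sum(1 - support(x, Xc, c) for c in SOURCES), q) > 1 - beta)


def subsets(universe):
    items = sorted(universe)
    return [frozenset(c) for r in range(len(items) + 1) for c in combinations(items, r)]


def violations(beta):
    """Return the labels of the properties (1)-(7) that fail for some X, Y."""
    failed, all_sets = set(), subsets(U)
    if lower(frozenset(), beta) or upper(frozenset(), beta):
        failed.add("(3)")
    if lower(U, beta) != U or upper(U, beta) != U:
        failed.add("(4)")
    for X in all_sets:
        lo_x, up_x = lower(X, beta), upper(X, beta)
        if lower(U - X, beta) != U - up_x or upper(U - X, beta) != U - lo_x:
            failed.add("(1)")
        if not (lo_x <= X <= up_x):
            failed.add("(2)")
        for Y in all_sets:
            lo_y, up_y = lower(Y, beta), upper(Y, beta)
            if X <= Y and not (lo_x <= lo_y and up_x <= up_y):
                failed.add("(5)")
            if not (lower(X & Y, beta) <= lo_x & lo_y and upper(X & Y, beta) <= up_x & up_y):
                failed.add("(6)")
            if not (lower(X | Y, beta) >= lo_x | lo_y and upper(X | Y, beta) >= up_x | up_y):
                failed.add("(7)")
    return failed


results = {beta: violations(beta)
           for beta in (Fraction(3, 5), Fraction(2, 3), Fraction(1))}
```

An empty set for every tested \(\beta\) means that no counterexample exists on this data; it illustrates the theorem but does not replace the proof.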

Theorem 3.2

Let \(MsFIS=\{FIS_{1},FIS_{2},\ldots ,FIS_{q}\}\) be a multi-source fuzzy information system, where \(FIS_{i}=(U,\tilde{A}_{i},V_{i},F_{i})\) (\(i\le q\)), and let \(\alpha ,\beta \in (0.5,1]\) be thresholds. For \(X\subseteq U\) and different levels of information (\(\alpha \le \beta\)), the following properties hold.

(1) \(\underline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)_{\beta }\subseteq \underline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)_{\alpha }\).

(2) \(\overline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)_{\alpha }\subseteq \overline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)_{\beta }\).

Proof

(1) For \(\forall x\in \underline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)_{\beta }\), we know \(\frac{\sum \nolimits _{i=1}^{q}{\mathcal {F}}^{\tilde{A}_{i}}_{X}(x)}{q}\ge \beta\). Since \(\alpha \le \beta\), we have \(\frac{\sum \nolimits _{i=1}^{q}{\mathcal {F}}^{\tilde{A}_{i}}_{X}(x)}{q}\ge \alpha\), so \(x\in \underline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)_{\alpha }\). Since x is arbitrary, \(\underline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)_{\beta }\subseteq \underline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)_{\alpha }.\)

(2) For \(\forall x\in \overline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)_{\alpha }\), we know \(\frac{\sum \nolimits _{i=1}^{q}(1-{\mathcal {F}}^{\tilde{A}_{i}}_{X^{c}}(x))}{q}> 1-\alpha\). Since \(\alpha \le \beta\), we have \(1-\alpha \ge 1-\beta\), so \(\frac{\sum \nolimits _{i=1}^{q}(1-{\mathcal {F}}^{\tilde{A}_{i}}_{X^{c}}(x))}{q}> 1-\beta\) and \(x\in \overline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)_{\beta }\). Since x is arbitrary, \(\overline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)_{\alpha }\subseteq \overline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)_{\beta }.\)

\(\square\)
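The effect of the threshold described by Theorem 3.2 can be seen numerically: as \(\beta\) grows, the lower approximation shrinks and the upper approximation grows. The following Python sketch is illustrative only; the tolerance classes are hypothetical, and the approximations follow the threshold conditions used in the proof above.

```python
from fractions import Fraction

U = frozenset({1, 2, 3, 4})
# Hypothetical tolerance classes (reflexive and symmetric) under three sources.
SOURCES = [
    {1: {1, 2}, 2: {1, 2, 3}, 3: {2, 3}, 4: {4}},
    {1: {1}, 2: {2, 3}, 3: {2, 3, 4}, 4: {3, 4}},
    {1: {1, 2}, 2: {1, 2}, 3: {3}, 4: {4}},
]


def support(x, X, classes):
    return 1 if classes[x] <= X else 0


def lower(X, beta):
    q = len(SOURCES)
    return frozenset(x for x in U
                     if Fraction(sum(support(x, X, c) for c in SOURCES), q) >= beta)


def upper(X, beta):
    q, Xc = len(SOURCES), U - X
    return frozenset(x for x in U
                     if Fraction(sum(1 - support(x, Xc, c) for c in SOURCES), q) > 1 - beta)


X = frozenset({1, 2, 3})
betas = [Fraction(3, 5), Fraction(2, 3), Fraction(1)]  # increasing information levels

lowers = [lower(X, b) for b in betas]
uppers = [upper(X, b) for b in betas]

# Property (1): the lower approximations are nested downwards as beta increases.
lower_chain_ok = all(lowers[k + 1] <= lowers[k] for k in range(len(betas) - 1))
# Property (2): the upper approximations are nested upwards as beta increases.
upper_chain_ok = all(uppers[k] <= uppers[k + 1] for k in range(len(betas) - 1))
```

With three sources, the thresholds 3/5 and 2/3 both require two supporting sources, so the approximations only change when \(\beta\) reaches 1 and all three sources must agree.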

3.2 Optimistic Multi-granulation Rough Set Model for Multi-source Fuzzy Information Systems

Definition 3.5

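Let \(MsFIS=\{FIS_{1},FIS_{2},\ldots ,FIS_{q}\}\) be a multi-source fuzzy information system, where \(FIS_{i}=(U,\tilde{A}_{i},V_{i},F_{i})\) (\(i\le q\)), and let \({\mathcal {F}}^{\tilde{A}_{i}}_{X}(x)\) be the support characteristic function of x for X. For any \(X\subseteq U\), the optimistic lower approximation set and the optimistic upper approximation set of X in MsFIS are defined as follows: \(\underline{OMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)=\left\{ x\in U \left| \right. \bigvee \nolimits _{i=1}^{q}{\mathcal {F}}^{\tilde{A}_{i}}_{X}(x)=1\right\}\), \(\overline{OMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)=\left\{ x\in U \left| \right. \bigwedge \nolimits _{i=1}^{q}(1-{\mathcal {F}}^{\tilde{A}_{i}}_{X^{c}}(x))=1\right\}\).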

The ordered pair \(\langle \underline{OMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X),\overline{OMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)\rangle\) is called the optimistic multi-granulation rough set model for MsFIS. And

Theorem 3.3

Let \(MsFIS=\{FIS_{1},FIS_{2},\ldots ,FIS_{q}\}\) be a multi-source fuzzy information system, where \(FIS_{i}=(U,\tilde{A}_{i},V_{i},F_{i})\) (\(i\le q\)). For any \(X,Y\subseteq U\), the following properties of the optimistic upper and lower approximation operators hold.

(1) \(\underline{OMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(\sim X)=\sim \overline{OMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X),\,\,\overline{OMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(\sim X)=\sim \underline{OMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X).\)

(2) \(\underline{OMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)\subseteq X\subseteq \overline{OMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X).\)

(3) \(\underline{OMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(\emptyset )= \overline{OMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(\emptyset )=\emptyset .\)

(4) \(\underline{OMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(U)= \overline{OMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(U)=U.\)

(5) \(X\subseteq Y\Rightarrow \underline{OMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)\subseteq \underline{OMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(Y),\,\, \overline{OMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)\subseteq \overline{OMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(Y).\)

(6) \(\underline{OMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X\cap Y)\subseteq \underline{OMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)\cap \underline{OMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(Y),\,\, \overline{OMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X\cap Y)\subseteq \overline{OMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)\cap \overline{OMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(Y).\)

(7) \(\underline{OMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X\cup Y)\supseteq \underline{OMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)\cup \underline{OMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(Y),\,\, \overline{OMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X\cup Y)\supseteq \overline{OMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)\cup \overline{OMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(Y).\)

Proof

The proof is similar to that of Theorem 3.1. \(\square\)

3.3 Pessimistic Multi-granulation Rough Set Model for Multi-source Fuzzy Information Systems

Definition 3.6

Let \(MsFIS=\{FIS_{1},FIS_{2},\ldots ,FIS_{q}\}\) be a multi-source fuzzy information system, where \(FIS_{i}=(U,\tilde{A}_{i},V_{i},F_{i})\) (\(i\le q\)). \(X\subseteq U\), \({\mathcal {F}}^{\tilde{A}_{i}}_{X}(x)\) is the support characteristic function of x for X. For any \(X\subseteq U\), the pessimistic lower approximate set and the pessimistic upper approximate set of MsFIS are defined as follows:

The ordered pair \(\langle \underline{PMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X),\overline{PMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)\rangle\) is called the pessimistic multi-granulation rough set model for MsFIS.
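
One formulation of the pessimistic approximations is sketched below. It is consistent with the duality in Theorem 3.4(1) and with the results reported in Example 3.1. It assumes that \({\mathcal {F}}^{\tilde{A}_{i}}_{X}(x)\in \{0,1\}\) equals 1 exactly when the tolerance class \([x]_{\tilde{A}_{i}}\) is contained in X; the exact displayed form used by the authors may differ.

\[
\underline{PMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)=\Big\{x\in U \,\Big|\, \bigwedge \limits _{i=1}^{q}{\mathcal {F}}^{\tilde{A}_{i}}_{X}(x)=1\Big\},\qquad
\overline{PMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)=\Big\{x\in U \,\Big|\, \bigvee \limits _{i=1}^{q}\big(1-{\mathcal {F}}^{\tilde{A}_{i}}_{X^{c}}(x)\big)=1\Big\}.
\]

Under this reading, x belongs to the lower approximation only if every source supports X, and x belongs to the upper approximation as soon as one source fails to support \(X^{c}\).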

Theorem 3.4

Let \(MsFIS=\{FIS_{1},FIS_{2},\ldots ,FIS_{q}\}\) be a multi-source fuzzy information system, where \(FIS_{i}=(U,\tilde{A}_{i},V_{i},F_{i})\) (\(i\le q\)). For any \(X,Y\subseteq U\), the following properties of the pessimistic upper and lower approximation operators are true.

(1) \(\underline{PMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(\sim X)=\sim \overline{PMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X),\,\,\overline{PMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(\sim X)=\sim \underline{PMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X).\)

(2) \(\underline{PMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)\subseteq X\subseteq \overline{PMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X).\)

(3) \(\underline{PMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(\emptyset )= \overline{PMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(\emptyset )=\emptyset .\)

(4) \(\underline{PMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(U)= \overline{PMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(U)=U.\)

(5) \(X\subseteq Y\Rightarrow \underline{PMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)\subseteq \underline{PMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(Y),\,\, \overline{PMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)\subseteq \overline{PMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(Y).\)

(6) \(\underline{PMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X\cap Y)=\underline{PMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)\cap \underline{PMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(Y),\,\, \overline{PMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X\cap Y)\subseteq \overline{PMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)\cap \overline{PMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(Y).\)

(7) \(\underline{PMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X\cup Y)\supseteq \underline{PMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)\cup \underline{PMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(Y),\,\, \overline{PMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X\cup Y)=\overline{PMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)\cup \overline{PMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(Y).\)

Proof

The proof is similar to that of Theorem 3.1. \(\square\)

Theorem 3.5

Let \(MsFIS=\{FIS_{1},FIS_{2},\ldots ,FIS_{q}\}\) be a multi-source fuzzy information system, where \(FIS_{i}=(U,\tilde{A}_{i},V_{i},F_{i})\) (\(i\le q\)). For any \(X\subseteq U\), the following properties are established.

(1) \(\underline{PMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)\subseteq \underline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)_{\beta } \subseteq \underline{OMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)\),

(2) \(\overline{OMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)\subseteq \overline{MsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)_{\beta } \subseteq \overline{PMsF}_{\sum \nolimits _{i=1}^{q}\tilde{A}_{i}}(X)\).

Proof

It can be proved by Definitions 3.4–3.6 and Theorem 3.2. \(\square\)
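
The chain of inclusions can be spelled out as a short derivation sketch. It is not the authors' written proof and relies on stated assumptions: \({\mathcal {F}}^{\tilde{A}_{i}}_{X}(x)\in \{0,1\}\); \(\beta \in (0.5,1]\); the pessimistic lower approximation requires support for X in every source and the optimistic one in at least one source; the optimistic upper approximation requires that no source supports \(X^{c}\) and the pessimistic one that at least one source does not. The generalized conditions are those used in the proof of Theorem 3.2.

\[
{\mathcal {F}}^{\tilde{A}_{i}}_{X}(x)=1\ (\forall i)
\;\Rightarrow\; \frac{\sum \nolimits _{i=1}^{q}{\mathcal {F}}^{\tilde{A}_{i}}_{X}(x)}{q}=1\ge \beta
\;\Rightarrow\; \sum \nolimits _{i=1}^{q}{\mathcal {F}}^{\tilde{A}_{i}}_{X}(x)\ge q\beta >0
\;\Rightarrow\; {\mathcal {F}}^{\tilde{A}_{i}}_{X}(x)=1\ (\exists i),
\]

\[
{\mathcal {F}}^{\tilde{A}_{i}}_{X^{c}}(x)=0\ (\forall i)
\;\Rightarrow\; \frac{\sum \nolimits _{i=1}^{q}\big(1-{\mathcal {F}}^{\tilde{A}_{i}}_{X^{c}}(x)\big)}{q}=1>1-\beta
\;\Rightarrow\; \sum \nolimits _{i=1}^{q}\big(1-{\mathcal {F}}^{\tilde{A}_{i}}_{X^{c}}(x)\big)>q(1-\beta )\ge 0
\;\Rightarrow\; {\mathcal {F}}^{\tilde{A}_{i}}_{X^{c}}(x)=0\ (\exists i).
\]

The first line gives property (1) and the second gives property (2); in each line the middle condition is membership in the generalized approximation at level \(\beta\).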

Example 3.1

The multi-source fuzzy information system shown in Table 1 consists of three information tables obtained when six patients underwent physical examinations at three hospitals. Here \(u_{i}\) denotes a patient and \(a_{i}\) denotes a symptom. A value of 1 means that the patient has the symptom, and 0 means that the patient does not. In practice, however, a doctor often cannot determine exactly whether a patient has a certain symptom, so a number in [0,1] is used to indicate the severity of the symptom.

According to Definition 3.1, we calculate the distance of objects under three sources as shown in Tables 2, 3 and 4 and then calculate the tolerance classes of objects under each source.

Tolerance classes under Source 1 when threshold d = 0.06:

\([u_{1}]_{\tilde{A}_{1}}=\{u_{1},u_{2},u_{3}\}\), \([u_{2}]_{\tilde{A}_{1}}=\{u_{1},u_{2},u_{3}\}\), \([u_{3}]_{\tilde{A}_{1}}=\{u_{1},u_{2},u_{3}\}\), \([u_{4}]_{\tilde{A}_{1}}=\{u_{4},u_{5},u_{6}\}\), \([u_{5}]_{\tilde{A}_{1}}=\{u_{4},u_{5}\}\), \([u_{6}]_{\tilde{A}_{1}}=\{u_{4},u_{6}\}\).

Tolerance classes under Source 2 when threshold d = 0.06:

\([u_{1}]_{\tilde{A}_{2}}=\{u_{1},u_{2},u_{4},u_{5}\}\), \([u_{2}]_{\tilde{A}_{2}}=\{u_{1},u_{2},u_{4}\}\), \([u_{3}]_{\tilde{A}_{2}}=\{u_{3},u_{4},u_{5}\}\), \([u_{4}]_{\tilde{A}_{2}}=\{u_{1},u_{2},u_{3},u_{4},u_{5}\}\), \([u_{5}]_{\tilde{A}_{2}}=\{u_{1},u_{3},u_{4},u_{5}\}\), \([u_{6}]_{\tilde{A}_{2}}=\{u_{6}\}\).

Tolerance classes under Source 3 when threshold d = 0.06:

\([u_{1}]_{\tilde{A}_{3}}=\{u_{1},u_{2},u_{4}\}\), \([u_{2}]_{\tilde{A}_{3}}=\{u_{1},u_{2},u_{6}\}\), \([u_{3}]_{\tilde{A}_{3}}=\{u_{3}\}\), \([u_{4}]_{\tilde{A}_{3}}=\{u_{1},u_{4}\}\), \([u_{5}]_{\tilde{A}_{3}}=\{u_{5}\}\), \([u_{6}]_{\tilde{A}_{3}}=\{u_{1},u_{2},u_{6}\}\).

Finally, consider the concept set \(X=\{u_{1},u_{2},u_{4},u_{6}\}\), and suppose that the patients in X were finally diagnosed as not ill. The support characteristic functions of X and of \(X^{c}\) under each source are then computed.

According to Definition 3.4, the generalized lower and upper approximation set of set X when \(\beta =0.6\) are obtained as follows:

So the positive region, the negative region and the boundary region of the set X are as follows:

This means that patients No. 2 and No. 6 (the positive region) are definitely not sick, while patients No. 1, No. 3, No. 4 and No. 5 (the boundary region) may or may not be sick.

According to Definition 3.5, the optimistic lower and upper approximation set of set X are obtained as follows:

So the positive region, the negative region and the boundary region of the set X are as follows:

This means that patients No. 1, No. 2, No. 4 and No. 6 (the positive region) are definitely not sick, while patients No. 3 and No. 5 (the negative region) are definitely sick.

According to Definition 3.6, the pessimistic lower and upper approximation sets of set X are obtained as follows:

So the positive region, the negative region and the boundary region of the set X are as follows:

This means that patient No. 6 is definitely not sick, while patients No. 1, No. 2, No. 3, No. 4 and No. 5 may or may not be sick.
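
The conclusions of Example 3.1 can be checked with a short script. The following is a minimal sketch, not the authors' implementation. It takes the tolerance classes listed above as given and assumes that the support characteristic function equals 1 exactly when the tolerance class of x is contained in the concept. It further assumes the reading of the optimistic and pessimistic models in which "at least one source" and "every source" replace the averaged threshold. All function names are hypothetical.

```python
from fractions import Fraction

# Tolerance classes copied from Example 3.1 (u1..u6 written as 1..6), keyed by source.
TOLERANCE = {
    1: {1: {1, 2, 3}, 2: {1, 2, 3}, 3: {1, 2, 3}, 4: {4, 5, 6}, 5: {4, 5}, 6: {4, 6}},
    2: {1: {1, 2, 4, 5}, 2: {1, 2, 4}, 3: {3, 4, 5}, 4: {1, 2, 3, 4, 5},
        5: {1, 3, 4, 5}, 6: {6}},
    3: {1: {1, 2, 4}, 2: {1, 2, 6}, 3: {3}, 4: {1, 4}, 5: {5}, 6: {1, 2, 6}},
}
U = {1, 2, 3, 4, 5, 6}
X = {1, 2, 4, 6}


def support(classes, concept, x):
    """Assumed support characteristic function: 1 if the class of x lies inside the concept."""
    return 1 if classes[x] <= concept else 0


def generalized(concept, beta):
    """Generalized lower/upper approximations, using the conditions from the proof of Theorem 3.2."""
    q = len(TOLERANCE)
    comp = U - concept
    lower = {x for x in U
             if Fraction(sum(support(c, concept, x) for c in TOLERANCE.values()), q) >= beta}
    upper = {x for x in U
             if Fraction(sum(1 - support(c, comp, x) for c in TOLERANCE.values()), q) > 1 - beta}
    return lower, upper


def optimistic(concept):
    """Assumed optimistic model: one supporting source suffices for the lower approximation."""
    comp = U - concept
    lower = {x for x in U if any(support(c, concept, x) for c in TOLERANCE.values())}
    upper = {x for x in U if all(support(c, comp, x) == 0 for c in TOLERANCE.values())}
    return lower, upper


def pessimistic(concept):
    """Assumed pessimistic model: every source must support the concept for the lower approximation."""
    comp = U - concept
    lower = {x for x in U if all(support(c, concept, x) for c in TOLERANCE.values())}
    upper = {x for x in U if any(support(c, comp, x) == 0 for c in TOLERANCE.values())}
    return lower, upper


gen_lower, gen_upper = generalized(X, Fraction(3, 5))  # beta = 0.6, kept exact
opt_lower, opt_upper = optimistic(X)
pes_lower, pes_upper = pessimistic(X)

for name, (lower, upper) in [("generalized", (gen_lower, gen_upper)),
                             ("optimistic", (opt_lower, opt_upper)),
                             ("pessimistic", (pes_lower, pes_upper))]:
    print(name, "positive:", sorted(lower), "boundary:", sorted(upper - lower),
          "negative:", sorted(U - upper))
```

Exact fractions are used so that comparisons such as 1/3 against a threshold are not affected by floating-point rounding. Under the assumptions above, calling `generalized` with \(\beta =1\) gives the same sets as `pessimistic`, and with \(\beta =1/q\) the same sets as `optimistic` on this example.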

4 Uncertainty Measurement of Multi-source Fuzzy Information System

In this section, several uncertainty measures are proposed for the multi-granulation rough set models of MsFIS introduced in Sect. 3. It is well known that the uncertainty of knowledge is caused by the boundary region: the larger the boundary region, the lower the accuracy and the higher the roughness; conversely, the smaller the boundary region, the higher the accuracy and the lower the roughness.

Definition 4.1

Let \(MsFIS=\{FIS_{1},FIS_{2},\ldots ,FIS_{q}\}\) be a multi-source fuzzy information system, where \(FIS_{i}=(U,\tilde{A}_{i},V_{i},F_{i})\) (\(i\le q\)). \(\tilde{A}_{i}\) represents the condition attribute set under the source i. For any \(X\subseteq U\), the type-\(\text {I}\) generalized approximation accuracy and roughness of the set X with respect to \(\sum \nolimits _{i=1}^{q}\tilde{A}_{i}\) are defined as follows:

Similarly, the type-\(\text {I}\) optimistic approximation accuracy and roughness of the set X with respect to \(\sum \nolimits _{i=1}^{q}\tilde{A}_{i}\) are defined as follows:

The type-\(\text {I}\) pessimistic approximation accuracy and roughness of the set X with respect to \(\sum \nolimits _{i=1}^{q}\tilde{A}_{i}\) are defined as follows:

Definition 4.1 defines the accuracy and roughness of a set from the perspective of its approximation sets. Since the larger the boundary region of a rough set, the coarser the set, we also give a definition of type \(\text {II}\) roughness and approximation accuracy.

Definition 4.2

Let \(MsFIS=\{FIS_{1},FIS_{2},\ldots ,FIS_{q}\}\) be a multi-source fuzzy information system, where \(FIS_{i}=(U,\tilde{A}_{i},V_{i},F_{i})\) (\(i\le q\)). \(\tilde{A}_{i}\) represents the condition attribute set under the source i. For any \(X\subseteq U\), the type \(\text {II}\) generalized approximation accuracy and roughness of the set X with respect to \(\sum \nolimits _{i=1}^{q}\tilde{A}_{i}\) are defined as follows:

Similarly, the type \(\text {II}\) optimistic approximation accuracy and roughness of the set X with respect to \(\sum \nolimits _{i=1}^{q}\tilde{A}_{i}\) are defined as follows:

The type \(\text {II}\) pessimistic approximation accuracy and roughness of the set X with respect to \(\sum \nolimits _{i=1}^{q}\tilde{A}_{i}\) are defined as follows:

Definition 4.3

Let \(MsFIS=\{FIS_{1},FIS_{2},\ldots ,FIS_{q}\}\) be a multi-source fuzzy information system, where \(FIS_{i}=(U,\tilde{A}_{i},V_{i},F_{i})\) (\(i\le q\)). \(\tilde{A}_{i}\) represents the condition attribute set under the source i. For any \(X\subseteq U\), the generalized approximation quality of the set X with respect to \(\sum \nolimits _{i=1}^{q}\tilde{A}_{i}\) is defined as follows:

Similarly, the optimistic approximation quality and pessimistic approximation quality of the set X with respect to \(\sum \nolimits _{i=1}^{q}\tilde{A}_{i}\) are defined as follows:

Theorem 4.1

Let \(MsFIS=\{FIS_{1},FIS_{2},\ldots ,FIS_{q}\}\) be a multi-source fuzzy information system, where \(FIS_{i}=(U,\tilde{A}_{i},V_{i},F_{i})\) (\(i\le q\)). For any \(X\subseteq U\), the following relations hold among the uncertainty measures.

(1) \(\alpha _{PMsF}^{\text {I}}(X)\le \alpha _{MsF}^{\text {I}}(X)_{\beta }\le \alpha _{OMsF}^{\text {I}}(X) ,\,\,\,\rho _{OMsF}^{\text {I}}(X)\le \rho _{MsF}^{\text {I}}(X)_{\beta }\le \rho _{PMsF}^{\text {I}}(X).\)

(2) \(\alpha _{PMsF}^{\text {II}}(X)\le \alpha _{MsF}^{\text {II}}(X)_{\beta }\le \alpha _{OMsF}^{\text {II}}(X) ,\,\,\,\rho _{OMsF}^{\text {II}}(X)\le \rho _{MsF}^{\text {II}}(X)_{\beta }\le \rho _{PMsF}^{\text {II}}(X).\)

(3) \(\omega _{PMsF}(X)\le \omega _{MsF}(X)_{\beta }\le \omega _{OMsF}(X).\)

Proof

It follows directly from Definitions 4.1–4.3 and Theorem 3.5. \(\square\)

Example 4.1

(Continued from Example 3.1) For \(X=\{u_{1},u_{2},u_{4},u_{6}\}\), the type \(\text {I}\) generalized approximation accuracy and roughness of the set X with respect to \(\sum \nolimits _{i=1}^{3}\tilde{A}_{i}\) are computed as follows: \(\alpha _{MsF}^{\text {I}}(X)_{0.6}=\frac{1}{3},\,\rho _{MsF}^{\text {I}}(X)_{0.6}=\frac{2}{3}.\) The type \(\text {II}\) generalized approximation accuracy and roughness of the set X with respect to \(\sum \nolimits _{i=1}^{3}\tilde{A}_{i}\) are computed as follows: \(\alpha _{MsF}^{\text {II}}(X)_{0.6}=\frac{1}{3} ,\,\rho _{MsF}^{\text {II}}(X)_{0.6}=\frac{2}{3}.\) The generalized approximation quality of the set X with respect to \(\sum \nolimits _{i=1}^{3}\tilde{A}_{i}\) is computed as follows: \(\omega _{MsF}(X)_{0.6}=\frac{1}{3}.\)

For \(X=\{u_{1},u_{2},u_{4},u_{6}\}\), the type \(\text {I}\) optimistic approximation accuracy and roughness of the set X with respect to \(\sum \nolimits _{i=1}^{3}\tilde{A}_{i}\) are computed as follows: \(\alpha _{OMsF}^{\text {I}}(X)=1,\,\rho _{OMsF}^{\text {I}}(X)=0.\) The type \(\text {II}\) optimistic approximation accuracy and roughness of the set X with respect to \(\sum \nolimits _{i=1}^{3}\tilde{A}_{i}\) are computed as follows: \(\alpha _{OMsF}^{\text {II}}(X)=1 ,\,\rho _{OMsF}^{\text {II}}(X)=0.\) The optimistic approximation quality of the set X with respect to \(\sum \nolimits _{i=1}^{3}\tilde{A}_{i}\) is computed as follows: \(\omega _{OMsF}(X)=\frac{2}{3}.\)

For \(X=\{u_{1},u_{2},u_{4},u_{6}\}\), the type \(\text {I}\) pessimistic approximation accuracy and roughness of the set X with respect to \(\sum \nolimits _{i=1}^{3}\tilde{A}_{i}\) are computed as follows: \(\alpha _{PMsF}^{\text {I}}(X)=\frac{1}{6},\,\rho _{PMsF}^{\text {I}}(X)=\frac{5}{6}.\) The type \(\text {II}\) pessimistic approximation accuracy and roughness of the set X with respect to \(\sum \nolimits _{i=1}^{3}\tilde{A}_{i}\) are computed as follows: \(\alpha _{PMsF}^{\text {II}}(X)=\frac{1}{6} ,\,\rho _{PMsF}^{\text {II}}(X)=\frac{5}{6}.\) The pessimistic approximation quality of the set X with respect to \(\sum \nolimits _{i=1}^{3}\tilde{A}_{i}\) is computed as follows: \(\omega _{PMsF}(X)=\frac{1}{6}.\)
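
The values in Example 4.1 can be reproduced from the approximation sets of Example 3.1 with the sketch below. The formulas in `measures` are assumptions chosen because they match every value reported in the example: type I accuracy as the ratio of the lower to the upper approximation size, type II roughness as the share of the universe in the boundary region, and approximation quality as the share of the universe in the lower approximation. The exact definitions used by the authors may differ, and all names are hypothetical.

```python
from fractions import Fraction

U = {1, 2, 3, 4, 5, 6}

# (lower, upper) approximations of X = {u1, u2, u4, u6}, read off from the
# positive, boundary and negative regions described in Example 3.1.
APPROX = {
    "generalized": ({2, 6}, {1, 2, 3, 4, 5, 6}),  # beta = 0.6
    "optimistic": ({1, 2, 4, 6}, {1, 2, 4, 6}),
    "pessimistic": ({6}, {1, 2, 3, 4, 5, 6}),
}


def measures(lower, upper):
    """Assumed uncertainty measures; requires a non-empty upper approximation."""
    boundary = upper - lower
    alpha_1 = Fraction(len(lower), len(upper))   # assumed type I accuracy
    rho_2 = Fraction(len(boundary), len(U))      # assumed type II roughness
    return {
        "alpha_I": alpha_1,
        "rho_I": 1 - alpha_1,
        "alpha_II": 1 - rho_2,
        "rho_II": rho_2,
        "omega": Fraction(len(lower), len(U)),   # assumed approximation quality
    }


RESULTS = {name: measures(lower, upper) for name, (lower, upper) in APPROX.items()}
for name, values in RESULTS.items():
    print(name, {key: str(value) for key, value in values.items()})
```

On this example the type I and type II values coincide for the generalized and pessimistic models because the upper approximation is the whole universe; in general the two types need not agree.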

5 Experiment Evaluations

In this paper, three kinds of multi-granulation rough set models for MsFIS are proposed, and uncertainty measures based on the approximation sets are defined. In this section, in order to verify the correctness and practicability of the theory, we propose Algorithm 1 for calculating the uncertainty of multi-granulation rough sets of MsFIS. We only give the algorithm for the generalized multi-granulation model; the corresponding pessimistic and optimistic algorithms can be realized by changing the threshold \(\beta\) and are not discussed in this paper. A series of experiments on data sets downloaded from the UCI machine learning repository is then carried out to demonstrate the effectiveness of Algorithm 1.

We analyze the time complexity of Algorithm 1 as follows. From step 3 to step 9, the distance between objects under each source is calculated, and the time complexity is \(O(|U|^{2}*|A|*q)\), where q is the number of sources. From step 11 to step 20, the tolerance classes of each object under each source are computed, and the time complexity is \(O(|U|^{2}*q)\). From step 21 to step 35, we calculate the sum of the support characteristic functions of each element for a given concept and its complement according to \(\sum \nolimits _{i=1}^{q}FIS_{i}\), and the time complexity is \(O(|U|*q)\). From step 37 to step 44, the upper and lower approximation sets of X are calculated, and the time complexity is O(|U|).

5.1 Generation of Multi-source Fuzzy Information System

Multi-source fuzzy data sets cannot be obtained directly from UCI (http://archive.ics.uci.edu/ml/datasets.html). In order to obtain the data needed in the experiment, we first fuzzify a data set to make it a fuzzy information system and then obtain a multi-source fuzzy information system by adding white noise and random noise. We first introduce the method of adding white noise to the original data. A set of numbers \((n_{1},n_{2},\ldots ,n_{q})\) following a normal distribution is generated, and white noise is added as follows:

Similarly, the method of adding random noise is as follows:

where FIS(x, a) denotes the value of object x under attribute a in the original fuzzy information system, and \(FIS_{i}(x,a)\) denotes the value of object x under attribute a in the ith fuzzy information system after noise is added. We randomly select 40% of the original data table to add white noise and then randomly select 20% of the remaining data to add random noise. The rest of the data remain unchanged. In this way, a multi-source fuzzy information system is obtained.
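
The generation procedure can be sketched as follows. This is an illustration under several assumptions rather than the authors' code: the 40% and 20% selections are made over individual table cells and shared by all sources; each source i receives one normally distributed offset (standing in for \(n_{i}\)) and one uniformly distributed offset for the random noise; and a noisy value is kept only if it stays in [0,1], otherwise the original value is retained. The function name `make_sources` and the parameter `sigma` are hypothetical.

```python
import random


def make_sources(table, q, white_frac=0.4, random_frac=0.2, sigma=0.05, seed=0):
    """Return q noisy copies of a fuzzy table (list of rows with values in [0, 1])."""
    rng = random.Random(seed)
    cells = [(r, c) for r, row in enumerate(table) for c in range(len(row))]
    rng.shuffle(cells)
    n_white = int(white_frac * len(cells))                # cells that get white noise
    n_random = int(random_frac * (len(cells) - n_white))  # share of the remaining cells
    white_cells = cells[:n_white]
    random_cells = cells[n_white:n_white + n_random]

    sources = []
    for _ in range(q):
        n_i = rng.gauss(0.0, sigma)        # white-noise offset for this source
        r_i = rng.uniform(-sigma, sigma)   # random-noise offset for this source
        noisy = [list(row) for row in table]
        for offset, chosen in ((n_i, white_cells), (r_i, random_cells)):
            for r, c in chosen:
                value = table[r][c] + offset
                if 0.0 <= value <= 1.0:    # assumed rule: otherwise keep the original value
                    noisy[r][c] = value
        sources.append(noisy)
    return sources


# Small made-up fuzzy table with four objects and three attributes.
demo = [[0.2, 0.5, 0.8], [0.9, 0.1, 0.4], [0.3, 0.7, 0.6], [0.0, 1.0, 0.5]]
for index, source in enumerate(make_sources(demo, q=3), start=1):
    print(index, source)
```

Fixing the seed makes the generated sources reproducible, which is convenient when the experiment is repeated with different noise data.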

5.2 Comparison of Uncertainty Measures of Three Multi-granulation Rough Sets in MsFIS

In this experiment, we downloaded two data sets from UCI, named “winequality-red” and “winequality-white” (neither is originally a fuzzy information table; both become fuzzy information tables after processing), and randomly generated two further fuzzy data sets. The four data sets are used as the original information systems to generate multi-source fuzzy information systems with 10 sources each. More detailed information on these four data sets is given in Table 5. The entire experiment was run on a personal computer. The specific operating environment (including hardware and software) is shown in Table 6.

In this paper, we conducted 10 experiments on each data set by changing the noise data. Because the generalized multi-granulation rough set of MsFIS depends on the choice of threshold \(\beta\), we set \(\beta =0.6\) throughout the experiments. Because the distances between objects differ from one data set to another, we choose a different threshold d for each data set: \(d = 0.1\) for own-data1, \(d = 0.01\) for winequality-red and winequality-white, and \(d = 0.06\) for own-data2. Each concept is a randomly generated subset of the universe U. The uncertainties of the three rough set models corresponding to each data set are shown in Tables 7, 8, 9 and 10, respectively.

In order to see more intuitively and concisely the differences among the three models with different uncertainty measures, the experimental results in Tables 7, 8, 9 and 10 are shown in Figs. 2, 3, 4 and 5.

The following conclusions can be drawn from the experimental results.

-

From Figs. 2, 3, 4 and 5, we can see that, for type \(\text {I}\), the pessimistic approximation accuracy is no greater than the generalized approximation accuracy, which in turn is no greater than the optimistic approximation accuracy, while the optimistic roughness is no greater than the generalized roughness, which in turn is no greater than the pessimistic roughness. The same ordering holds for the type \(\text {II}\) approximation accuracy and roughness. Likewise, the pessimistic approximation quality is no greater than the generalized approximation quality, which is no greater than the optimistic approximation quality. The experimental results are therefore consistent with Theorem 4.1.

-

When selecting objects, the optimistic multi-granulation rough set of MsFIS is too loose to characterize the concept accurately. The main reason is that, for a positive description, an object only needs to support the concept in at least one source, whereas for a possible description the object must be compatible with the concept under all sources. This requirement adds many useless descriptions to the lower approximation, and in the upper approximation many possible descriptions may be lost, which makes the characterization of the concept inaccurate. Conversely, the pessimistic multi-granulation rough set of MsFIS is too strict in characterizing the concept.

-

Because the pessimistic and optimistic multi-granulation rough set models of MsFIS have limitations in practical application, we propose a generalized multi-granulation rough set model for MsFIS, which uses an information level \(\beta \in (0.5,1]\) to control the selection of objects. The threshold \(\beta\) ensures that an object in the lower approximation is described positively in most sources, while objects whose possible description falls below the corresponding level are excluded.

-

Obviously, from Figs. 2, 3, 4 and 5, we can see that the results of different models in different data sets are inconsistent. Therefore, in practical application, different fields should select models according to their own requirements. Moreover, the uncertainty obtained by different measurement methods is not entirely consistent, so it is necessary to adopt different measures. In actual application, we also need to choose reasonable measurement methods according to different schemes.

6 Conclusions

From the perspective of multi-granulation, this paper considers the source of MsFIS as granularity and proposes the rough set model suitable for MsFIS: generalized multi-granulation rough set of MsFIS, optimistic multi-granulation rough set of MsFIS and pessimistic multi-granulation rough set of MsFIS. The related properties of different multi-granulation models are also discussed. In order to deeply study the rough set model of MsFIS, we also discuss the uncertainty measurement methods applicable to MsFIS in different senses. Finally, we also design an algorithm for calculating the uncertainty of MsFIS and verify the algorithm with four data sets. The experimental results show that the generalized multi-granulation rough set model of MsFIS has wider applicability.

References

Pawlak, Z.: Rough sets. Int. J. Comput. Inf. Sci. 11(5), 341–356 (1982)

Pawlak, Z.: Rough set theory and its applications to data analysis. J. Cybernet. 29(7), 661–688 (1998)

Peng, X.S., Wen, J.Y., Li, Z.H., Yang, G.Y., Zhou, C.K., Reid, A., Hepburn, D.M., Judd, M.D., Siew, W.H.: Rough set theory applied to pattern recognition of Partial Discharge in noise affected cable data. IEEE Trans. Dielectr. Electr. Insul. 24(1), 147–156 (2017)

Zhu, W.: Generalized rough sets based on relations. Inf. Sci. 177(22), 4997–5011 (2007)

Macparthalain, N., Shen, Q.: On rough sets, their recent extensions and applications. Knowl. Eng. Rev. 25(4), 365–395 (2010)

Morsi, N.N., Yakout, M.M.: Axiomatics for fuzzy rough sets. Fuzzy Sets Syst. 100(1–3), 327–342 (1998)

Yeung, D.S., Chen, D.G., Tsang, E.C.C., Lee, J.W.T., Wang, X.Z.: On the generalization of fuzzy rough sets. IEEE Trans. Fuzzy Syst. 13(3), 343–361 (2005)

Cornelis, C., Cock, M.D., Radzikowska, A.M.: Vaguely quantified rough sets. In: Rough Sets, Fuzzy Sets, Data Mining Granular Computing, International Conference, RSFDGrC, Toronto, Canada (2007)

Bonikowski, Z., Bryniarski, E., Wybraniec-Skardowska, U.: Rough pragmatic description logic. Intell. Syst. Ref. Libr. 43, 157–184 (2013)

Zhu, W.: Topological approaches to covering rough sets. Inf. Sci. 177(6), 1499–1508 (2007)

Xu, W.H., Li, W.T.: Granular computing approach to two-way learning based on formal concept analysis in fuzzy datasets. IEEE Trans. Cybernet. 46(2), 366–379 (2016)

Bonikowski, Z., Bryniarski, E., Wybraniec-Skardowska, U.: Extensions and intentions in the rough set theory. Inf. Sci. 107(1–4), 149–167 (1998)

Wang, G.Y., Feng, H.U., Huang, H., Yu, W.U.: A granular computing model based on tolerance relation. J. China Univ. Posts Telecommun. 12(3), 86–90 (2005)

Qian, Y.H., Liang, J.Y., Dang, C.Y.: Incomplete multigranulation rough set. IEEE Trans. Syst. Man Cybernet. Part A Syst. Hum. 40(2), 420–431 (2010)

Qian, Y.H., Liang, J.Y., Yao, Y.Y., Dang, C.Y.: MGRS: a multi-granulation rough set. Inf. Sci. Int. J. 180(6), 949–970 (2010)

Lin, G.P., Liang, J.Y., Li, J.J.: A fuzzy multigranulation decision-theoretic approach to multi-source fuzzy information systems. Knowl. Based Syst. 91, 102–113 (2016)

Yu, J.H., Zhang, B., Chen, M.H., Xu, W.H.: Double-quantitative decision-theoretic approach to multigranulation approximate space. Int. J. Approx. Reason. 98, 236–258 (2018)

Xu, W.H., Li, W.T., Zhang, X.T.: Generalized multigranulation rough sets and optimal granularity selection. Granul. Comput. 2(4), 271–288 (2017)

Xu, W.H., Guo, Y.T.: Generalized multigranulation double-quantitative decision-theoretic rough set. Knowl. Based Syst. 105, 190–205 (2016)

She, Y.H., He, X.L., Shi, H.X., Qian, Y.H.: A multiple-valued logic approach for multigranulation rough set model. Int. J. Approx. Reason. 82(3), 270–284 (2017)

Li, Z.W., Liu, X.F., Zhang, G.Q., Xie, N.X., Wang, S.C.: A multi-granulation decision-theoretic rough set method for distributed fc-decision information systems: an application in medical diagnosis. Appl. Soft Comput. 56, 233–244 (2017)

Wei, W., Liang, J.: Information fusion in rough set theory: an overview. Inf. Fusion 48, 107–118 (2019)

Che, X.Y., Mi, J.S., Chen, D.G.: Information fusion and numerical characterization of a multi-source information system. Knowl. Based Syst. 145, 121–133 (2018)

Guo, Y.T., Xu, W.H.: Attribute reduction in multi-source decision systems. Lect. Notes Comput. Sci. 9920, 558–568 (2016)

Sang, B.B., Guo, Y.T., Shi, D.R., Xu, W.H.: Decision-theoretic rough set model of multi-source decision systems. Int. J. Mach. Learn. Cybernet. 9(1), 1–14 (2017)

Xu, W.H., Li, M.M., Wang, X.Z.: Information fusion based on information entropy in fuzzy multi-source incomplete information system. Int. J. Fuzzy Syst. 19(4), 1200–1216 (2017b)

Xu, W.H., Yu, J.H.: A novel approach to information fusion in multi-source datasets: a granular computing viewpoint. Inf. Sci. 378, 410–423 (2017)

Li, M.M., Zhang, X.Y.: Information fusion in a multi-source incomplete information system based on information entropy. Entropy 19(11), 570–586 (2017)

Huang, Y.Y., Li, T.R., Luo, C., Fujita, H., Horng, S.J.: Dynamic fusion of multi-source interval-valued data by fuzzy granulation. IEEE Trans. Fuzzy Syst. 26(6), 3403–3417 (2018)

Wu, J., Su, Y.H., Cheng, Y.W., Shao, X.Y., Chao, D., Cheng, L.: Multi-sensor information fusion for remaining useful life prediction of machining tools by adaptive network based fuzzy inference system. Appl. Soft Comput. 68, 13–23 (2018)

Elmadany, N.E.D., He, Y.F., Ling, G.: Information fusion for human action recognition via biset/multiset globality locality preserving canonical correlation analysis. IEEE Trans. Image Process. 27(11), 5275–5287 (2018)

Xiao, G.Q., Li, K.L., Li, K.Q., Xu, Z.: Efficient top-(k, l) range query processing for uncertain data based on multicore architectures. Distrib. Parallel Databases 33(3), 381–413 (2015)

Brunetti, A., Weder, B.: Investment and institutional uncertainty: a comparative study of different uncertainty measures. Weltwirtschaftliches Archiv 134(3), 513–533 (1998)

Wu, D.R., Mendel, J.M.: Uncertainty measures for interval type-2 fuzzy sets. Inf. Sci. 177(23), 5378–5393 (2007)

Dai, J.H., Xu, Q.: Approximations and uncertainty measures in incomplete information systems. Inf. Sci. 198(3), 62–80 (2012)

Xiao, G.Q., Li, K.L., Xu, Z., Li, K.Q.: Efficient monochromatic and bichromatic probabilistic reverse top-k query processing for uncertain big data. J. Comput. Syst. Sci. 89, 92–113 (2017)

Teng, S.H., Fan, L., Ma, Y.X., Mi, H., Nian, Y.J.: Uncertainty measures of rough sets based on discernibility capability in information systems. Soft Comput. 21(4), 1081–1096 (2017)

Chen, Y.M., Yu, X., Ying, M., Xu, F.F.: Measures of uncertainty for neighborhood rough sets. Knowl. Based Syst. 120, 226–235 (2017)

Hu, Q., Lei, Z., Chen, D., Pedrycz, W., Yu, D.: Gaussian kernel based fuzzy rough sets: model, uncertainty measures and applications. Int. J. Approx. Reason. 51(4), 453–471 (2010)

Wei, C.P., Rodriguez, R.M., Martinez, L.: Uncertainty measures of extended hesitant fuzzy linguistic term sets. IEEE Trans. Fuzzy Syst. 26(3), 1763–1768 (2018)

Huang, B., Guo, C.X., Li, H.X., Feng, G.F., Zhou, X.Z.: Hierarchical structures and uncertainty measures for intuitionistic fuzzy approximation space. Inf. Sci. 336, 92–114 (2016)

Xiao, G.Q., Wu, F., Zhou, X., Li, K.Q.: Probabilistic top-k range query processing for uncertain databases. J. Intell. Fuzzy Syst. 31(2), 1109–1120 (2016)

Xiao, G.Q., Li, K.L., Zhou, X., Li, K.Q.: Queueing analysis of continuous queries for uncertain data streams over sliding windows. Int. J. Pattern Recognit. Artif. Intell. 30(9), 1–16 (2016)

Xiao, G.Q., Li, K.L., Li, K.Q.: Reporting l most influential objects in uncertain databases based on probabilistic reverse top-k queries. Inf. Sci. 405, 207–226 (2017)

Lin, G.P., Liang, J.Y., Qian, Y.H.: An information fusion approach by combining multigranulation rough sets and evidence theory. Inf. Sci. 314, 184–199 (2015)

Acknowledgements

We would like to express our thanks to the Editor-in-Chief, the handling associate editor and the anonymous referees for their valuable comments and constructive suggestions. This paper is supported by the National Natural Science Foundation of China (Nos. 61472463, 61772002) and the Fundamental Research Funds for the Central Universities (No. XDJK2019B029).

Cite this article

Yang, L., Zhang, X., Xu, W. et al. Multi-granulation Rough Sets and Uncertainty Measurement for Multi-source Fuzzy Information System. Int. J. Fuzzy Syst. 21, 1919–1937 (2019). https://doi.org/10.1007/s40815-019-00667-1

DOI: https://doi.org/10.1007/s40815-019-00667-1