Abstract

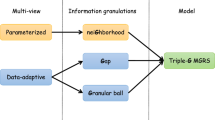

In recent years, more and more methods and theories of multi-granulation information systems have been explored. However, there is very limited investigation on the attribute reducts of multi-granulation rough sets. Therefore, the main objective of this paper is to draw attention to the attribute reducts of multi-granulation information system. For any subset of information system, we usually characterize it by its upper and lower approximations. In order to calculate the upper and lower approximations faster, we must reduce the redundant information of the information system. According to the preceding analysis, we first introduce three types of attribute reduct, which are called arbitrary union reduct, neighborhood union reduct and neighborhood intersection reduct, respectively. Then many basic and important results of these reducts are deeply explored. In order to apply the theories of attribute reducts to deal with practical issues, we develop three algorithms so as to compute multi-granulation upper and lower approximations. Next, we further study the interrelationships among these attribute reducts. Finally, we present a multi-granulation information system with respect to thirty students’ exam scores and calculate the corresponding attribute reducts by using the algorithms listed in the paper.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Rough set theory, proposed by Pawlak Pawlak (1982), provides a useful method for dealing with incomplete and inconsistent knowledge. The theory is widely used in various fields, such as knowledge recognition, data mining, image processing and so on.

We know that classical rough set theory is based on an equivalence relation. Objects in the same equivalence class are indiscernible. Pawlak’s rough sets are usually used to deal with data sets described with nominal features. However, in many practical situations, the data sets are not suitable for being handled by Pawlak’s rough sets (Bonikowski et al. 1998; Baszczyński et al. 2011; Cattaneo 1998; Chen et al. 2006, 2007, 2011; Diker and Ugur 2012). As a result, many generalized rough set models have been developed in terms of different requirements. Then, the neighborhood-based rough sets (Wang et al. 2016, 2017; Yao 2011), similarity relation rough sets (Slowinski and Vanderpooten 2000; Yao 1998), tolerance relation rough sets (Skowron and Stepaniuk 1996; Xu et al. 2013), and binary relation rough sets (Wang et al. 2017) were constructed, respectively. In particular, some objets have multiple attribute values for a multi-valued attribute. So, we need a covering of the universe instead of a partition. In 1983, Zakowski firstly proposed the covering rough set model Zakowski (1983). After Zakowski, many authors studied the properties of covering rough sets (Bonikowski et al. 1998; Chen et al. 2007; Ge and Li 2011; Kong and Xu 2018a, b; Kong and Wei 2015; Liu and Wang 2011; Liu et al. 2014; Liu and Zhu 2008; Liu and Sai 2009; Shi and Gong 2010). Especially, more and more scholars are working on the attribute reducts of covering rough sets or covering information systems. For example, a pioneering work related to the reducts of covering rough sets was constructed, where the concept of reducts of covering was introduced and the procedure to find a reduct for a covering was shown Zhu and Wang (2003). Meanwhile, the approach to attribute reducts of consistent and inconsistent covering decision systems are firstly introduced by Chen et al. (2007). At the same time, Chen et al. (2007) originally proposed the discernibility to design algorithms that compute all the reducts of consistent and inconsistent covering decision systems. Compared with the attribute reduct method presented in Chen et al. (2007), Wang et al. constructed a new discernibility matrix, which greatly reduced the computational complexity (Wang et al. 2014). In Wang et al. (2015), Wang et al. also provided a new method for constructing simpler discernibility matrix with covering rough sets, and improved some characterizations of attribute reduct provided by Tsang et al. (2008). Moreover, Tan et al. introduced matrix-based methods for computing set approximations and reducts of a covering information system (Tan et al. 2015).

From the perspective of granular computing, an equivalence relation on the universe can be regarded as a granularity, and the corresponding partition can be regarded as a granular structure. Hence, Pawlak’s rough set theory is based on a single granularity. In Qian et al. (2010), Qian and Liang firstly extended the single granulation rough sets to the multiple granulation rough sets, where the set approximations were defined by using multiple equivalence relations on the universe. At present, more and more attention has been paid to extending the multi-granulation rough set theory (Li et al. 2016, 2017; Lin et al. 2013; Liu and Wang 2011; Zhang and Kong 2016). Xu et al. developed the multi-granulation rough set model in ordered information systems (Xu et al. 2012). At the same time, based on the multi-granulation rough set theory and fuzzy set theory, Xu et al. proposed a multi-granulation fuzzy rough set model and a multi-granulation fuzzy rough sets in a fuzzy tolerance approximation space (Xu et al. 2014). Meanwhile, Yang also generalized the multi-granulation rough sets into fuzzy rough sets, and discussed the corresponding properties in incomplete information systems Yang et al. (2011). Kong et al. studied the operation and algebraic properties of multi-granulation rough sets and multi-granulation covering rough sets, respectively (Kong et al. 2018; Kong and Wei 2017). She et al. explored the topological structures and obtained many excellent conclusions (She and he 2003). Recently, Xu et al. proposed a generalized multi-granulation rough set model by introducing a support characteristic function and an information level (Xu et al. 2011). However, it is still an open problem regarding the attribute reducts of multi-granulation information systems. Therefore, the main objective of this paper is to study the attribute reduct theory of multi-granulation information system.

The rest of this paper is organized as follows. In Sect. 2, we briefly review some basic concepts of Pawlak’s rough sets, multi-granulation rough sets and covering. In Sect. 3, we firstly introduce the concept of the arbitrary union reduct, and discuss some basic properties of this reduct. Furthermore, we develop an algorithm to compute the arbitrary union reduct in an information system. In Sect. 4, we propose the concept of neighborhood union reduct, and study some important properties of neighborhood union reduct. In order to deal with real-life cases, we develop an algorithm to compute the neighborhood union reduct in an information system. In Sect. 5, we define the neighborhood intersection reduct. Many meaningful results of this reduct are explored. In Sect. 6, the interrelationships among the several types of reducts are discussed in detail. In Sect. 7, an illustrate example is given to show how to select the optimal reduct to compute the multi-granulation lower and upper approximations more efficiently. Finally, Sect. 8 concludes this study.

2 Preliminaries

In this section, we review some basic concepts and notions of Pawlak’s rough sets, multi-granulation rough sets and covering. More details can be seen in references Chen et al. (2007), Qian et al. (2010), Qian and Liang (2006), Zhu and Wang (2003).

Let  be an information system, where U is a nonempty finite set, called a universe; A is a nonempty attribute set; \(V=\cup _{a\in A}V_{a}\), \(V_{a}\) is a set of its values; \(f: U\times A\rightarrow V\) is an information function with \(f(x,a)\in V_{a}\) for each \(a\in A\) and \(x\in U\). The family of attribute subsets is denoted by

be an information system, where U is a nonempty finite set, called a universe; A is a nonempty attribute set; \(V=\cup _{a\in A}V_{a}\), \(V_{a}\) is a set of its values; \(f: U\times A\rightarrow V\) is an information function with \(f(x,a)\in V_{a}\) for each \(a\in A\) and \(x\in U\). The family of attribute subsets is denoted by  , where \(A_{i}\subseteq A,~ i=1,2,\ldots ,m.\) The equivalence class of an object x with respect to

, where \(A_{i}\subseteq A,~ i=1,2,\ldots ,m.\) The equivalence class of an object x with respect to  is defined by: \([x]_{A_{i}}=\{y\in U|f(x,a)=f(y,a), a\in A_{i}\}\). Let \(U/A_{i}=\{[x_{1_{i}}]_{A_{i}},[x_{2_{i}}]_{A_{i}},\ldots ,[x_{n_{i}}]_{A_{i}}\}\) is a partition of U and

is defined by: \([x]_{A_{i}}=\{y\in U|f(x,a)=f(y,a), a\in A_{i}\}\). Let \(U/A_{i}=\{[x_{1_{i}}]_{A_{i}},[x_{2_{i}}]_{A_{i}},\ldots ,[x_{n_{i}}]_{A_{i}}\}\) is a partition of U and  \([x_{1_{m}}]_{A_{m}},[x_{2_{m}}]_{A_{m}},\ldots ,[x_{n_{m}}]_{A_{m}}\}\). Then, for each \(X\subseteq U\), the lower and upper approximations of X with respect to \(A_{i}\) are defined as follows:

\([x_{1_{m}}]_{A_{m}},[x_{2_{m}}]_{A_{m}},\ldots ,[x_{n_{m}}]_{A_{m}}\}\). Then, for each \(X\subseteq U\), the lower and upper approximations of X with respect to \(A_{i}\) are defined as follows:

Definition 2.1

Qian et al. (2010) Let  be an information system, \(X\subseteq U\), and

be an information system, \(X\subseteq U\), and  . The optimistic multi-granulation lower and upper approximations of X with respect to

. The optimistic multi-granulation lower and upper approximations of X with respect to  are defined as follows:

are defined as follows:

where “\(\vee \)” means the logical operator “or”, which represents that the alternative conditions are satisfied, and “\(\wedge \)” means the logical operator “and”, which represents that all of the conditions are satisfied. Here, the word “optimistic” means that just one granular structure is needed to satisfy with the inclusion between an equivalence class and a target concept when multiple independent granular structures are available in problem processing.

Definition 2.2

Qian and Liang (2006) Let  be an information system, \(X\subseteq U\), and

be an information system, \(X\subseteq U\), and  . The pessimistic multi-granulation lower and upper approximations of X with respect to

. The pessimistic multi-granulation lower and upper approximations of X with respect to  are defined as follows:

are defined as follows:

Here, the word “pessimistic” means that all granular structures are needed to satisfy with the inclusion between an equivalence class and a target concept when multiple independent granular structures are available.

Definition 2.3

Zakowski (1983) Let  be a family of nonempty subsets of U.

be a family of nonempty subsets of U.  is called a covering of U if

is called a covering of U if  . The ordered pair

. The ordered pair  is called a covering approximation space.

is called a covering approximation space.

Definition 2.4

Zhu and Wang (2003) Let  be a covering approximation space and

be a covering approximation space and  . If K is a union of some sets in

. If K is a union of some sets in  , we say that K is a union reducible element of

, we say that K is a union reducible element of  ; Otherwise, we say that K is a union irreducible element of

; Otherwise, we say that K is a union irreducible element of  . Meanwhile, for a covering

. Meanwhile, for a covering  of U, the new union irreducible covering through above reduction is called a union reduct of

of U, the new union irreducible covering through above reduction is called a union reduct of  , and denoted by

, and denoted by  .

.

Notice that the union reduct of a covering can be computed by deleting all the union reducible elements. Therefore, the union reduct is the minimum covering by deleting redundancy. Meanwhile, for each subset of the universe, the union reduct of a covering can induce the same lower and upper approximations (Zhu and Wang 2003). However, for the union reduct of a covering, it may contain other redundant elements which are not the union reducible elements. To address this issue, the intersection reduct of a covering is proposed by Chen et al. (2015).

Definition 2.5

Chen et al. (2015) Let  be a covering approximation space and

be a covering approximation space and  . If K is an intersection of some sets in

. If K is an intersection of some sets in  , we say that K is an intersection reducible element of

, we say that K is an intersection reducible element of  ; Otherwise, we say that K is an intersection irreducible element of

; Otherwise, we say that K is an intersection irreducible element of  . Meanwhile, for a covering

. Meanwhile, for a covering  of U, the new intersection irreducible covering through above reduction is called an intersection reduct of

of U, the new intersection irreducible covering through above reduction is called an intersection reduct of  , and denoted by

, and denoted by  .

.

In Chen et al. (2015), the properties of the intersect reduct of a covering are examined. Particularly, the intersection reduct is investigated from the viewpoint of concept lattice theory.

3 Attribute reduction with respect to arbitrary union

In this section, we will introduce the concept of the arbitrary union reduct, and then discuss some interesting properties of this reduct. Meanwhile, we develop an algorithm to compute the arbitrary union reduct.

Definition 3.1

Let  be an information system, and

be an information system, and  the family of attribute subsets.

the family of attribute subsets.  is called an arbitrary union reducible element of

is called an arbitrary union reducible element of  , if for each \(x\in U\), there exist \(\Gamma _{x}\subseteq \{1,2,\ldots ,i-1,i+1,\ldots ,m\}\) and \(V_{x}\subseteq U\) such that \([x]_{A_{i}}=\cup _{j\in \Gamma _{x}} \cup _{y\in V_{x}} [y]_{A_{j}}\); Otherwise, \(A_{i}\) is called an arbitrary union irreducible element of

, if for each \(x\in U\), there exist \(\Gamma _{x}\subseteq \{1,2,\ldots ,i-1,i+1,\ldots ,m\}\) and \(V_{x}\subseteq U\) such that \([x]_{A_{i}}=\cup _{j\in \Gamma _{x}} \cup _{y\in V_{x}} [y]_{A_{j}}\); Otherwise, \(A_{i}\) is called an arbitrary union irreducible element of  . If every element in

. If every element in  is irreducible, we say that

is irreducible, we say that  is irreducible; Otherwise,

is irreducible; Otherwise,  is reducible.

is reducible.

Definition 3.2

Let  be an information system, and

be an information system, and  the family of attribute subsets. The new family of attribute subsets

the family of attribute subsets. The new family of attribute subsets  through the above reduct is called the arbitrary union reduct of

through the above reduct is called the arbitrary union reduct of  , and denoted by

, and denoted by  .

.

Notice that the covering  of a universe U has only one reduct. i.e.,

of a universe U has only one reduct. i.e.,  is unique (Zhu and Wang 2003). Here, we raise a question: is

is unique (Zhu and Wang 2003). Here, we raise a question: is  unique? In the following, we will employ an example to answer the question.

unique? In the following, we will employ an example to answer the question.

Example 3.1

Let  be an information system, where \(U=\{x_{1},x_{2},\ldots ,x_{20}\}\),

be an information system, where \(U=\{x_{1},x_{2},\ldots ,x_{20}\}\),  .

.

It is clear that  or \(\{A_{1},A_{2},A_{4}\}\). Therefore,

or \(\{A_{1},A_{2},A_{4}\}\). Therefore,  is not unique.

is not unique.

If  is a union reducible element of

is a union reducible element of  and

and  , then \(K_{1}\) is a union reducible element of

, then \(K_{1}\) is a union reducible element of  if and only if \(K_{1}\) is a union reducible element of

if and only if \(K_{1}\) is a union reducible element of  Zhu and Wang (2003). Similarly, we have the following result.

Zhu and Wang (2003). Similarly, we have the following result.

Proposition 3.1

Let  be an information system, and

be an information system, and  . Suppose

. Suppose  is an arbitrary union reducible element of

is an arbitrary union reducible element of  , and

, and  . If for each \(x\in U\), we have \([x]_{A_{i}}\ne [x]_{A_{j}}\), then \(A_{j}\) is an arbitrary union reducible element of

. If for each \(x\in U\), we have \([x]_{A_{i}}\ne [x]_{A_{j}}\), then \(A_{j}\) is an arbitrary union reducible element of  if and only if \(A_{j}\) is an arbitrary union reducible element of

if and only if \(A_{j}\) is an arbitrary union reducible element of  .

.

Proof

\((\Leftarrow )\) It is immediate.

\((\Rightarrow )\) Suppose \(A_{j}\) is an arbitrary union reducible element of  . For each \(x\in U\), there exist \(\Gamma _{x}\subseteq \{1,2,\ldots ,j-1,j+1,\ldots ,m\}\) and \(V_{x}\subseteq U\) such that \([x]_{A_{j}}=\cup _{t\in \Gamma _{x}} \cup _{y\in V_{x}} [y]_{A_{t}}\). Case1: If \(i~\overline{\in } ~\Gamma _{x}\), it is clear that \(A_{j}\) is an arbitrary union reducible element of

. For each \(x\in U\), there exist \(\Gamma _{x}\subseteq \{1,2,\ldots ,j-1,j+1,\ldots ,m\}\) and \(V_{x}\subseteq U\) such that \([x]_{A_{j}}=\cup _{t\in \Gamma _{x}} \cup _{y\in V_{x}} [y]_{A_{t}}\). Case1: If \(i~\overline{\in } ~\Gamma _{x}\), it is clear that \(A_{j}\) is an arbitrary union reducible element of  ; Case2: If \(i\in \Gamma _{x}\), without lost of generality, let \([x]_{A_{j}}=[z]_{A_{i}}\cup (\cup _{t\in \Gamma _{x}/\{i\}} \cup _{y\in V_{x}/\{z\}} [y]_{A_{t}})\), where \(z\in V_{x}\). On the one hand, if \([z]_{A_{i}}=[x]_{A_{i}}\), by the assumption in this proposition, we have \([z]_{A_{i}}\subset [x]_{A_{j}}\). Since \(A_{i}\) is an arbitrary union reducible element of

; Case2: If \(i\in \Gamma _{x}\), without lost of generality, let \([x]_{A_{j}}=[z]_{A_{i}}\cup (\cup _{t\in \Gamma _{x}/\{i\}} \cup _{y\in V_{x}/\{z\}} [y]_{A_{t}})\), where \(z\in V_{x}\). On the one hand, if \([z]_{A_{i}}=[x]_{A_{i}}\), by the assumption in this proposition, we have \([z]_{A_{i}}\subset [x]_{A_{j}}\). Since \(A_{i}\) is an arbitrary union reducible element of  , there exist \(\Gamma _{x}^{'}\subseteq \{1,2,\ldots ,i-1,i+1,\ldots ,m\}\) and \(V_{x}^{'}\subseteq U\) such that \([z]_{A_{i}}=\cup _{s\in \Gamma _{x}^{'}} \cup _{w\in V_{x}^{'}} [w]_{A_{s}}\). Because \(U/A_{i}\) is a partition of U, then \(j~\overline{\in }~ \Gamma _{x}^{'}\). Therefore, \([x]_{A_{j}}=(\cup _{s\in \Gamma _{x}^{'}} \cup _{w\in V_{x}^{'}} [w]_{A_{s}})\cup (\cup _{t\in \Gamma _{x}/\{i\}} \cup _{y\in V_{x}/\{z\}} [y]_{A_{t}})\). Denote \(\widetilde{\Gamma _{x}}=(\Gamma _{x}^{'}\cup \Gamma _{x})/\{i\},~\widetilde{V_{x}}=(V_{x}^{'}\cup V_{x})/\{z\}\), then \([x]_{A_{j}}=\cup _{k\in \widetilde{\Gamma _{x}}} \cup _{u\in \widetilde{V_{x}}} [u]_{A_{k}}\). So, \(A_{j}\) is an arbitrary union reducible element of

, there exist \(\Gamma _{x}^{'}\subseteq \{1,2,\ldots ,i-1,i+1,\ldots ,m\}\) and \(V_{x}^{'}\subseteq U\) such that \([z]_{A_{i}}=\cup _{s\in \Gamma _{x}^{'}} \cup _{w\in V_{x}^{'}} [w]_{A_{s}}\). Because \(U/A_{i}\) is a partition of U, then \(j~\overline{\in }~ \Gamma _{x}^{'}\). Therefore, \([x]_{A_{j}}=(\cup _{s\in \Gamma _{x}^{'}} \cup _{w\in V_{x}^{'}} [w]_{A_{s}})\cup (\cup _{t\in \Gamma _{x}/\{i\}} \cup _{y\in V_{x}/\{z\}} [y]_{A_{t}})\). Denote \(\widetilde{\Gamma _{x}}=(\Gamma _{x}^{'}\cup \Gamma _{x})/\{i\},~\widetilde{V_{x}}=(V_{x}^{'}\cup V_{x})/\{z\}\), then \([x]_{A_{j}}=\cup _{k\in \widetilde{\Gamma _{x}}} \cup _{u\in \widetilde{V_{x}}} [u]_{A_{k}}\). So, \(A_{j}\) is an arbitrary union reducible element of  . On the other hand, if \([z]_{A_{i}}\ne [x]_{A_{i}}\), then we have \([z]_{A_{i}}\subset [x]_{A_{j}}\). According to the above discussion, \(A_{j}\) is an arbitrary union reducible element of

. On the other hand, if \([z]_{A_{i}}\ne [x]_{A_{i}}\), then we have \([z]_{A_{i}}\subset [x]_{A_{j}}\). According to the above discussion, \(A_{j}\) is an arbitrary union reducible element of  . \(\square \)

. \(\square \)

In the following, we can develop an algorithm to compute the arbitrary union reduct  .

.

In Zhu and Wang (2003), Zhu and Wang indicated that if K is a union reducible element of  and \(X\subseteq U\), then the covering lower approximations of X with respect to

and \(X\subseteq U\), then the covering lower approximations of X with respect to  and

and  , respectively, are same. Here, we also raise a similar question: if

, respectively, are same. Here, we also raise a similar question: if  is an arbitrary union reducible element of

is an arbitrary union reducible element of  , and \(X\subseteq U\), are the optimistic multi-granulation lower approximations of X with respect to

, and \(X\subseteq U\), are the optimistic multi-granulation lower approximations of X with respect to  and

and  , respectively, same? To solve this problem, we have the following result.

, respectively, same? To solve this problem, we have the following result.

Proposition 3.2

Let  be an information system,

be an information system,  , and \(X\subseteq U\). If

, and \(X\subseteq U\). If  is an arbitrary union reducible element of

is an arbitrary union reducible element of  , then the optimistic multi-granulation lower approximations of X with respect to

, then the optimistic multi-granulation lower approximations of X with respect to  and

and  , respectively, are same.

, respectively, are same.

Proof

By Definition 2.1, it is obvious that \(\underline{OM_{\sum _{i=1,i\ne j}^m A_{i}}}(X)\subseteq \underline{OM_{\sum _{i=1}^m A_{i}}}(X)\). Then, for each \(x\in \underline{OM_{\sum _{i=1}^m A_{i}}}(X)\), there exists  such that \([x]_{A_{k}}\subseteq X\). If \(A_{k}\ne A_{j}\), then \(x\in \underline{OM_{\sum _{i=1,i\ne j}^m A_{i}}}(X)\). If \(A_{k}=A_{j}\), since \(A_{j}\) is a reducible element of

such that \([x]_{A_{k}}\subseteq X\). If \(A_{k}\ne A_{j}\), then \(x\in \underline{OM_{\sum _{i=1,i\ne j}^m A_{i}}}(X)\). If \(A_{k}=A_{j}\), since \(A_{j}\) is a reducible element of  , there exists

, there exists  such that \([x]_{A_{s}}\subseteq [x]_{A_{k}}\subseteq X\). According to Definition 2.1, we have \(x\in \underline{OM_{\sum _{i=1,i\ne j}^m A_{i}}}(X)\). So, \(\underline{OM_{\sum _{i=1}^m A_{i}}}(X)\subseteq \underline{OM_{\sum _{i=1,i\ne j}^m A_{i}}}(X)\). Therefore, \(\underline{OM_{\sum _{i=1,i\ne j}^m A_{i}}}(X)= \underline{OM_{\sum _{i=1}^m A_{i}}}(X)\). \(\square \)

such that \([x]_{A_{s}}\subseteq [x]_{A_{k}}\subseteq X\). According to Definition 2.1, we have \(x\in \underline{OM_{\sum _{i=1,i\ne j}^m A_{i}}}(X)\). So, \(\underline{OM_{\sum _{i=1}^m A_{i}}}(X)\subseteq \underline{OM_{\sum _{i=1,i\ne j}^m A_{i}}}(X)\). Therefore, \(\underline{OM_{\sum _{i=1,i\ne j}^m A_{i}}}(X)= \underline{OM_{\sum _{i=1}^m A_{i}}}(X)\). \(\square \)

Similarly, the result with respect to the optimistic multi-granulation upper approximation is shown as follows.

Proposition 3.3

Let  be an information system,

be an information system,  , and \(X\subseteq U\). If

, and \(X\subseteq U\). If  is an arbitrary union reducible element of

is an arbitrary union reducible element of  , then the optimistic multi-granulation upper approximations of X with respect to

, then the optimistic multi-granulation upper approximations of X with respect to  and

and  , respectively, are same.

, respectively, are same.

According to Propositions 3.2 and 3.3, we have the following result.

Theorem 3.1

Let  be an information system,

be an information system,  , and \(X\subseteq U\). Then the optimistic multi-granulation lower and upper approximations of X with respect to

, and \(X\subseteq U\). Then the optimistic multi-granulation lower and upper approximations of X with respect to  and

and  , respectively, are same.

, respectively, are same.

If  is an arbitrary union reducible element of

is an arbitrary union reducible element of  , and \(X\subseteq U\), then, are the pessimistic multi-granulation lower approximations of X with respect to

, and \(X\subseteq U\), then, are the pessimistic multi-granulation lower approximations of X with respect to  and

and  , respectively, same? In order to answer the question, a counterexample is given as follows:

, respectively, same? In order to answer the question, a counterexample is given as follows:

Example 3.2

Let  be an information system, where \(U=\{x_{1},x_{2},\ldots ,x_{9}\}\),

be an information system, where \(U=\{x_{1},x_{2},\ldots ,x_{9}\}\),  .

.

Clearly, \(A_{3}\) is an arbitrary union reducible element of  . For \(X=\{x_{7},x_{8},x_{9}\}\), we have \(\underline{PM_{\sum _{i=1}^3 A_{i}}}(X)=\{x_{8},x_{9}\}\). However, \(\underline{PM_{\sum _{i=1}^2 A_{i}}}(X)=\{x_{7},x_{8},x_{9}\}\). Therefore, \(\underline{PM_{\sum _{i=1}^3 A_{i}}}(X)\ne \underline{PM_{\sum _{i=1}^2 A_{i}}}(X)\).

. For \(X=\{x_{7},x_{8},x_{9}\}\), we have \(\underline{PM_{\sum _{i=1}^3 A_{i}}}(X)=\{x_{8},x_{9}\}\). However, \(\underline{PM_{\sum _{i=1}^2 A_{i}}}(X)=\{x_{7},x_{8},x_{9}\}\). Therefore, \(\underline{PM_{\sum _{i=1}^3 A_{i}}}(X)\ne \underline{PM_{\sum _{i=1}^2 A_{i}}}(X)\).

Similarly, it is not difficult to find that the pessimistic multi-granulation upper approximations of X with respect to  and

and  , respectively, are different.

, respectively, are different.

Proposition 3.4

Let  be an information system, and

be an information system, and  ,

,  two families of attribute subsets. If for each \(X\subseteq U\), the optimistic multi-granulation lower approximations of X with respect to

two families of attribute subsets. If for each \(X\subseteq U\), the optimistic multi-granulation lower approximations of X with respect to  and

and  , respectively, are same. Then we have that

, respectively, are same. Then we have that  .

.

Proof

Suppose that  . For each

. For each  , we have \(\underline{OM_{\sum _{k=1}^{m_{1}} A_{1k}}}(K)=K\). Since the optimistic multi-granulation lower or upper approximations of K with respect to

, we have \(\underline{OM_{\sum _{k=1}^{m_{1}} A_{1k}}}(K)=K\). Since the optimistic multi-granulation lower or upper approximations of K with respect to  and

and  , respectively, are same. Then we have \(\underline{OM_{\sum _{l=1}^{m_{2}} A_{2l}}}(K)=K\). Therefore, there exist

, respectively, are same. Then we have \(\underline{OM_{\sum _{l=1}^{m_{2}} A_{2l}}}(K)=K\). Therefore, there exist  such that \(K=\cup _{i=1}^{s}K_{i}\). Similar to the above proof, there exist

such that \(K=\cup _{i=1}^{s}K_{i}\). Similar to the above proof, there exist  such that \(K_{i}=\cup _{j=1}^{n_{i}}K_{ij}\). So, \(K=\cup _{i=1}^{s}\cup _{j=1}^{n_{i}}K_{ij}\). By the definition of the arbitrary union reduct, we have \(K_{ij}=K\) for all i, j. Therefore, for all i, \(K_{i}=K\). Hence, K is an element of

such that \(K_{i}=\cup _{j=1}^{n_{i}}K_{ij}\). So, \(K=\cup _{i=1}^{s}\cup _{j=1}^{n_{i}}K_{ij}\). By the definition of the arbitrary union reduct, we have \(K_{ij}=K\) for all i, j. Therefore, for all i, \(K_{i}=K\). Hence, K is an element of  .

.

On the other hand, we can similarly prove that any element of  is an element of

is an element of  . Therefore, we have

. Therefore, we have  . \(\square \)

. \(\square \)

Theorem 3.2

Let  be an information system, and

be an information system, and  ,

,  two families of attribute subsets. For each \(X\subseteq U\), the optimistic multi-granulation lower approximations of X with respect to

two families of attribute subsets. For each \(X\subseteq U\), the optimistic multi-granulation lower approximations of X with respect to  and

and  , respectively, are same if and only if

, respectively, are same if and only if  .

.

Proof

(\(\Rightarrow \)) It is immediate by Proposition 3.4.

(\(\Leftarrow \)) Since  . For each \(X\subseteq U\), we have that the optimistic multi-granulation lower approximations of X with respect to

. For each \(X\subseteq U\), we have that the optimistic multi-granulation lower approximations of X with respect to  and

and  , respectively, are same. By Theorem 3.1, we have that the optimistic multi-granulation lower approximations of X with respect to

, respectively, are same. By Theorem 3.1, we have that the optimistic multi-granulation lower approximations of X with respect to  and

and  , respectively, are same. \(\square \)

, respectively, are same. \(\square \)

Similar to Proposition 3.4 and Theorem 3.2, we have the following results.

Proposition 3.5

Let  be an information system, and

be an information system, and  ,

,  two families of attribute subsets. For each \(X\subseteq U\), the optimistic multi-granulation upper approximations of X with respect to

two families of attribute subsets. For each \(X\subseteq U\), the optimistic multi-granulation upper approximations of X with respect to  and

and  , respectively, are same. Then we have that

, respectively, are same. Then we have that  .

.

Theorem 3.3

Let  be an information system, and

be an information system, and  ,

,  two families of attribute subsets. For each \(X\subseteq U\), the optimistic multi-granulation upper approximations of X with respect to

two families of attribute subsets. For each \(X\subseteq U\), the optimistic multi-granulation upper approximations of X with respect to  and

and  , respectively, are same if and only if

, respectively, are same if and only if  .

.

4 Attribute reduction with respect to neighborhood union

In this section, we firstly propose the concept of neighborhood union reduct. Then we study some important properties of neighborhood union reduct. Finally, we develop an algorithm to compute the neighborhood union reduct in an information system.

Definition 4.1

Let  be an information system, and

be an information system, and  the family of attribute subsets.

the family of attribute subsets.  is called a neighborhood union reducible element of

is called a neighborhood union reducible element of  , if each \(x\in U\), there exists \(\Gamma _{x}\subseteq \{1,2,\ldots ,i-1,i+1,\ldots ,m\}\) such that \([x]_{A_{i}}=\cup _{j\in \Gamma _{x}} [x]_{A_{j}}\); Otherwise, \(A_{i}\) is called a neighborhood union irreducible element of

, if each \(x\in U\), there exists \(\Gamma _{x}\subseteq \{1,2,\ldots ,i-1,i+1,\ldots ,m\}\) such that \([x]_{A_{i}}=\cup _{j\in \Gamma _{x}} [x]_{A_{j}}\); Otherwise, \(A_{i}\) is called a neighborhood union irreducible element of  . If every element in

. If every element in  is irreducible, we say that

is irreducible, we say that  is irreducible; Otherwise,

is irreducible; Otherwise,  is reducible.

is reducible.

Definition 4.2

Let  be an information system, and

be an information system, and  the family of attribute subsets. The new family of attribute subsets

the family of attribute subsets. The new family of attribute subsets  through the above reduction is called the neighborhood union reduct of

through the above reduction is called the neighborhood union reduct of  , and denoted by

, and denoted by  .

.

Example 3.1 shows that the arbitrary union reduct  is not unique. Now, we investigate that whether the neighborhood union reduct

is not unique. Now, we investigate that whether the neighborhood union reduct  is unique. In the following, an example is employed to answer the question.

is unique. In the following, an example is employed to answer the question.

Example 4.1

Let  be an information system, where \(U=\{x_{1},x_{2},\ldots ,x_{9}\}\),

be an information system, where \(U=\{x_{1},x_{2},\ldots ,x_{9}\}\),  .

.

Then, we can find that  or \(\{A_{1},A_{2},A_{4},A_{5}\}\). So

or \(\{A_{1},A_{2},A_{4},A_{5}\}\). So  is not unique.

is not unique.

Compared with Proposition 3.1, the similar result with respect to the neighborhood union reduct is shown as follows:

Proposition 4.1

Let  be an information system, and

be an information system, and  . Suppose

. Suppose  is a neighborhood union reducible element of

is a neighborhood union reducible element of  , and

, and  . If for each \(x\in U\), we have \([x]_{A_{i}}\ne [x]_{A_{j}}\), then \(A_{j}\) is a neighborhood union reducible element of

. If for each \(x\in U\), we have \([x]_{A_{i}}\ne [x]_{A_{j}}\), then \(A_{j}\) is a neighborhood union reducible element of  if and only if \(A_{j}\) is a neighborhood union reducible element of

if and only if \(A_{j}\) is a neighborhood union reducible element of  .

.

In the following, we give an algorithm to find the neighborhood union reduct  .

.

Similarly, we have the following results.

Proposition 4.2

Let  be an information system,

be an information system,  , and \(X\subseteq U\). If

, and \(X\subseteq U\). If  is a neighborhood union reducible element of

is a neighborhood union reducible element of  , then the optimistic multi-granulation lower approximations of X with respect to

, then the optimistic multi-granulation lower approximations of X with respect to  and

and  , respectively, are same.

, respectively, are same.

Proof

The proof is similar to that of Proposition 3.2. \(\square \)

Proposition 4.3

Let  be an information system,

be an information system,  , and \(X\subseteq U\). If

, and \(X\subseteq U\). If  is a neighborhood union reducible element of

is a neighborhood union reducible element of  , then the optimistic multi-granulation upper approximations of X with respect to

, then the optimistic multi-granulation upper approximations of X with respect to  and

and  , respectively, are same.

, respectively, are same.

Proof

The proof is similar to that of Proposition 3.3. \(\square \)

According to Propositions 4.2 and 4.3, we have the following result.

Theorem 4.1

Let  be an information system,

be an information system,  , and \(X\subseteq U\). Then the optimistic multi-granulation lower and upper approximations of X with respect to

, and \(X\subseteq U\). Then the optimistic multi-granulation lower and upper approximations of X with respect to  and

and  , respectively, are same.

, respectively, are same.

If  is a neighborhood union reducible element of

is a neighborhood union reducible element of  , and \(X\subseteq U\), then, are the pessimistic multi-granulation lower approximations of X with respect to

, and \(X\subseteq U\), then, are the pessimistic multi-granulation lower approximations of X with respect to  and

and  , respectively, same?

, respectively, same?

Proposition 4.4

Let  be an information system,

be an information system,  , and \(X\subseteq U\). If

, and \(X\subseteq U\). If  is a neighborhood union reducible element of

is a neighborhood union reducible element of  , then the pessimistic multi-granulation lower approximations of X with respect to

, then the pessimistic multi-granulation lower approximations of X with respect to  and

and  , respectively, are same.

, respectively, are same.

Proof

Based on Definition 2.2, it is obvious that \(\underline{PM_{\sum _{i=1}^m A_{i}}}(X)\subseteq \underline{PM_{\sum _{i=1,i\ne j}^m A_{i}}}(X)\). On the other hand, for each \(x\in \underline{PM_{\sum _{i=1,i\ne j}^m A_{i}}}(X)\), we have that \(x\in [x]_{A_{i}},~i=1,2,\ldots ,j-1,j+1,\ldots ,m.\) Because  is a neighborhood union reducible element of

is a neighborhood union reducible element of  , then there exists \(\Gamma _{x}\subseteq \{1,2,\ldots ,j-1,j+1,\ldots ,m\}\) such that \([x]_{A_{j}}=\cup _{t\in \Gamma _{x}} [x]_{A_{t}}\). So \(x\in [x]_{A_{j}}\). i.e., \(x\in [x]_{A_{i}},~i=1,2,\ldots ,m.\) Therefore, \(\underline{PM_{\sum _{i=1,i\ne j}^m A_{i}}}(X)\subseteq \underline{PM_{\sum _{i=1}^m A_{i}}}(X)\). In a word, we have that \(\underline{PM_{\sum _{i=1}^m A_{i}}}(X)=\underline{PM_{\sum _{i=1,i\ne j}^m A_{i}}}(X)\). \(\square \)

, then there exists \(\Gamma _{x}\subseteq \{1,2,\ldots ,j-1,j+1,\ldots ,m\}\) such that \([x]_{A_{j}}=\cup _{t\in \Gamma _{x}} [x]_{A_{t}}\). So \(x\in [x]_{A_{j}}\). i.e., \(x\in [x]_{A_{i}},~i=1,2,\ldots ,m.\) Therefore, \(\underline{PM_{\sum _{i=1,i\ne j}^m A_{i}}}(X)\subseteq \underline{PM_{\sum _{i=1}^m A_{i}}}(X)\). In a word, we have that \(\underline{PM_{\sum _{i=1}^m A_{i}}}(X)=\underline{PM_{\sum _{i=1,i\ne j}^m A_{i}}}(X)\). \(\square \)

If  is a neighborhood union reducible element of

is a neighborhood union reducible element of  , and \(X\subseteq U\), then, are the pessimistic multi-granulation upper approximations of X with respect to

, and \(X\subseteq U\), then, are the pessimistic multi-granulation upper approximations of X with respect to  and

and  , respectively, still same?

, respectively, still same?

Proposition 4.5

Let  be an information system,

be an information system,  , and \(X\subseteq U\). If

, and \(X\subseteq U\). If  is a neighborhood union reducible element of

is a neighborhood union reducible element of  , then the pessimistic multi-granulation upper approximations of X with respect to

, then the pessimistic multi-granulation upper approximations of X with respect to  and

and  , respectively, are same.

, respectively, are same.

Proof

The proof is similar to that of Proposition 4.4. \(\square \)

From Propositions 4.4 and 4.5, we have the following result.

Theorem 4.2

Let  be an information system,

be an information system,  , and \(X\subseteq U\). Then the pessimistic multi-granulation lower and upper approximations of X with respect to

, and \(X\subseteq U\). Then the pessimistic multi-granulation lower and upper approximations of X with respect to  and

and  , respectively, are same.

, respectively, are same.

5 Attribute reduction with respect to neighborhood intersection

In this section, inspired by the concept of intersection reduct proposed by Chen et al. (2015), the concept of neighborhood intersection reduct are introduced. Meanwhile, some meaningful properties of neighborhood intersection reduct are studied.

Definition 5.1

Let  be an information system, and

be an information system, and  the family of attribute subsets.

the family of attribute subsets.  is called a neighborhood intersection reducible element of

is called a neighborhood intersection reducible element of  , if for each \(x\in U\), there exists \(\Gamma _{x}\subseteq \{1,2,\ldots ,i-1,i+1,\ldots ,m\}\) such that \([x]_{A_{i}}=\cap _{j\in \Gamma _{x}} [x]_{A_{j}}\); Otherwise, \(A_{i}\) is called a neighborhood intersection irreducible element of

, if for each \(x\in U\), there exists \(\Gamma _{x}\subseteq \{1,2,\ldots ,i-1,i+1,\ldots ,m\}\) such that \([x]_{A_{i}}=\cap _{j\in \Gamma _{x}} [x]_{A_{j}}\); Otherwise, \(A_{i}\) is called a neighborhood intersection irreducible element of  . If every element in

. If every element in  is irreducible, we say that

is irreducible, we say that  is irreducible; Otherwise,

is irreducible; Otherwise,  is reducible.

is reducible.

Definition 5.2

Let  be an information system, and

be an information system, and  the family of attribute subsets. The new family of attribute subsets

the family of attribute subsets. The new family of attribute subsets  through the above reduction is called the neighborhood intersection reduct of

through the above reduction is called the neighborhood intersection reduct of  , and denoted by

, and denoted by  .

.

Examples 3.1 and 4.1 show that the arbitrary union reduct  and the neighborhood union reduct

and the neighborhood union reduct  are both not unique. Next, we wonder if the neighborhood intersection reduct

are both not unique. Next, we wonder if the neighborhood intersection reduct  is unique. To address this issue, an example is shown as follows.

is unique. To address this issue, an example is shown as follows.

Example 5.1

Let  be an information system, where \(U=\{x_{1},x_{2},\ldots ,x_{9}\}\),

be an information system, where \(U=\{x_{1},x_{2},\ldots ,x_{9}\}\),  .

.

Then, we can find that  or \(\{A_{1},A_{3},A_{4},A_{5}\}\). Therefore,

or \(\{A_{1},A_{3},A_{4},A_{5}\}\). Therefore,  is not unique.

is not unique.

In the following, we will construct an algorithm for finding the neighborhood intersection reduct  .

.

Proposition 5.1

Let  be an information system,

be an information system,  , and \(X\subseteq U\). If

, and \(X\subseteq U\). If  is a neighborhood intersection reducible element of

is a neighborhood intersection reducible element of  , then the pessimistic multi-granulation lower approximations of X with respect to

, then the pessimistic multi-granulation lower approximations of X with respect to  and

and  , respectively, are same.

, respectively, are same.

Proof

It is clear that \(\underline{PM_{\sum _{i=1}^m A_{i}}}(X)\subseteq \underline{PM_{\sum _{i=1,i\ne j}^m A_{i}}}(X)\). Meanwhile, for each \(x\in \underline{PM_{\sum _{i=1,i\ne j}^m A_{i}}}(X)\), we have \([x]_{A_{s}}\subseteq X,~s\in \{1,2,\ldots ,j-1,j+1,\ldots ,m\}\). Because \(A_{j}\) is a reducible element of  , then there exists \(\Gamma _{x}\subseteq \{1,2,\ldots ,j-1,j+1,\ldots ,m\}\) such that \([x]_{A_{j}}=\cap _{t\in \Gamma _{x}} [x]_{A_{t}}\). Then, \([x]_{A_{j}}\subseteq [x]_{A_{t}},~t\in \Gamma _{x}\). i.e., \([x]_{A_{j}}\subseteq X\). So, \(x\in \underline{PM_{\sum _{i=1}^m A_{i}}}(X)\). Therefore, \(\underline{PM_{\sum _{i=1}^m A_{i}}}(X)=\underline{PM_{\sum _{i=1,i\ne j}^m A_{i}}}(X)\). \(\square \)

, then there exists \(\Gamma _{x}\subseteq \{1,2,\ldots ,j-1,j+1,\ldots ,m\}\) such that \([x]_{A_{j}}=\cap _{t\in \Gamma _{x}} [x]_{A_{t}}\). Then, \([x]_{A_{j}}\subseteq [x]_{A_{t}},~t\in \Gamma _{x}\). i.e., \([x]_{A_{j}}\subseteq X\). So, \(x\in \underline{PM_{\sum _{i=1}^m A_{i}}}(X)\). Therefore, \(\underline{PM_{\sum _{i=1}^m A_{i}}}(X)=\underline{PM_{\sum _{i=1,i\ne j}^m A_{i}}}(X)\). \(\square \)

Proposition 5.2

Let  be an information system,

be an information system,  , and \(X\subseteq U\). If

, and \(X\subseteq U\). If  is a neighborhood intersection reducible element of

is a neighborhood intersection reducible element of  , then the pessimistic multi-granulation upper approximations of X with respect to

, then the pessimistic multi-granulation upper approximations of X with respect to  and

and  , respectively, are same.

, respectively, are same.

According to Propositions 5.1 and 5.2, the following result holds.

Theorem 5.1

Let  be an information system,

be an information system,  , and \(X\subseteq U\). Then the pessimistic multi-granulation lower and upper approximations of X with respect to

, and \(X\subseteq U\). Then the pessimistic multi-granulation lower and upper approximations of X with respect to  and

and  , respectively, are same.

, respectively, are same.

If  is a neighborhood intersection reducible element of

is a neighborhood intersection reducible element of  , and \(X\subseteq U\), are the optimistic multi-granulation lower approximations of X with respect to

, and \(X\subseteq U\), are the optimistic multi-granulation lower approximations of X with respect to  and

and  , respectively, same? Then, a counterexample is given as follows:

, respectively, same? Then, a counterexample is given as follows:

Example 5.2

(Continued from Example 5.1) According to Example 5.1, we know that \(A_{5}\) is a neighborhood intersection reducible element of  . For \(X=\{x_{2},x_{4},x_{6},x_{7}\}\), we have that \(\underline{OM_{\sum _{i=1}^5 A_{i}}}(X)=\{x_{2},x_{4},x_{7}\}, \underline{OM_{\sum _{i=1}^4 A_{i}}}(X)=\{x_{4},x_{7}\}\). Therefore, \(\underline{OM_{\sum _{i=1}^5 A_{i}}}(X)\ne \underline{OM_{\sum _{i=1}^4 A_{i}}}(X)\).

. For \(X=\{x_{2},x_{4},x_{6},x_{7}\}\), we have that \(\underline{OM_{\sum _{i=1}^5 A_{i}}}(X)=\{x_{2},x_{4},x_{7}\}, \underline{OM_{\sum _{i=1}^4 A_{i}}}(X)=\{x_{4},x_{7}\}\). Therefore, \(\underline{OM_{\sum _{i=1}^5 A_{i}}}(X)\ne \underline{OM_{\sum _{i=1}^4 A_{i}}}(X)\).

Similarly, if \(A_{i}\) is a neighborhood intersection reducible element of  , then the optimistic multi-granulation upper approximations of X with respect to

, then the optimistic multi-granulation upper approximations of X with respect to  and

and  , respectively, are different.

, respectively, are different.

6 Interrelationships among the three types of attribute reductions

In this section, we will deeply explore the interrelationships among several types of attribute reducts.

Proposition 6.1

Let  be an information system, and

be an information system, and  . If

. If  is a neighborhood union reducible element of

is a neighborhood union reducible element of  , then \(A_{j}\) is an arbitrary union reducible element of

, then \(A_{j}\) is an arbitrary union reducible element of  .

.

Proof

It is clear by Definitions 3.1 and 4.1.

Conversely, if  is an arbitrary union reducible element of

is an arbitrary union reducible element of  , is \(A_{j}\) a neighborhood union reducible element of

, is \(A_{j}\) a neighborhood union reducible element of  ? A counterexample is given as follows: \(\square \)

? A counterexample is given as follows: \(\square \)

Example 6.1

(Continued from Example 3.2) It can be found that \(A_{3}\) is an arbitrary union reducible element of  . However, \(A_{3}\) is not a neighborhood union reducible element of

. However, \(A_{3}\) is not a neighborhood union reducible element of  .

.

Proposition 6.2

Let  be an information system,

be an information system,  , then

, then  and

and  are both the arbitrary union reducts of

are both the arbitrary union reducts of  .

.

Proof

It is clear by Definitions 3.1 and 4.1.\(\square \)

Theorem 6.1

Let  be an information system,

be an information system,  , and \(X\subseteq U\), then the optimistic multi-granulation lower and upper approximations of X with respect to

, and \(X\subseteq U\), then the optimistic multi-granulation lower and upper approximations of X with respect to  ,

,  and

and  , respectively, are same.

, respectively, are same.

Theorem 6.2

Let  be an information system,

be an information system,  , and \(X\subseteq U\), then the pessimistic multi-granulation lower and upper approximations of X with respect to

, and \(X\subseteq U\), then the pessimistic multi-granulation lower and upper approximations of X with respect to  ,

,  and

and  , respectively, are same.

, respectively, are same.

Example 6.2

Let  be an information system, where \(U=\{x_{1},x_{2},\ldots ,x_{10}\}\),

be an information system, where \(U=\{x_{1},x_{2},\ldots ,x_{10}\}\),  . Let

. Let

Then, It can be found that  or \(\{A_{1},A_{3}\}\),

or \(\{A_{1},A_{3}\}\),  . Furthermore, we have that

. Furthermore, we have that  .

.

Proposition 6.3

Let  be an information system, and

be an information system, and  . Then we have that

. Then we have that  .

.

Proof

It is clear by Definitions 3.1 and 4.1. \(\square \)

According to Proposition 6.3, we have that  . Then, does the equation

. Then, does the equation  hold? A counterexample is given as follows:

hold? A counterexample is given as follows:

Example 6.3

(Continued from Example 6.2) Let  . However,

. However,  . Therefore,

. Therefore,  . Furthermore, by Proposition 6.4, it can be easily found that

. Furthermore, by Proposition 6.4, it can be easily found that  .

.

Example 6.4

Let  be an information system, where \(U=\{x_{1},x_{2},\ldots ,x_{10}\}\),

be an information system, where \(U=\{x_{1},x_{2},\ldots ,x_{10}\}\),  . Let

. Let

It can be found that  ,

,  , and

, and  .

.

Then, we have  , and

, and  . Similarly, it can be found that

. Similarly, it can be found that  , and

, and  .

.

Here, we will raise a question: does the equation  hold? In the following, a counterexample is employed to answer the question.

hold? In the following, a counterexample is employed to answer the question.

Example 6.5

Let  be an information system, where \(U=\{x_{1},x_{2},\ldots ,x_{12}\}\), and

be an information system, where \(U=\{x_{1},x_{2},\ldots ,x_{12}\}\), and  . Let

. Let

Then we have  , and

, and  . Therefore,

. Therefore,  .

.

Next, the main results of this paper are shown in the following tables.

The main results with respect to optimistic multi-granulation lower and upper approximations

The main results with respect to pessimistic multi-granulation lower and upper approximations

Remark

In the above two tables, symbols  mean the optimistic multi-granulation lower approximations of \(X\subseteq U\) with respect to

mean the optimistic multi-granulation lower approximations of \(X\subseteq U\) with respect to  , respectively. Other symbols in tables indicate similar meanings.

, respectively. Other symbols in tables indicate similar meanings.

7 An example

As an application of several types of attribute reducts proposed in the paper, a real-world example of a multi-granulation information system with respect to exam scores is employed. Then, as many researchers are working on various optimization theories Xu et al. (2017) and algorithms (Abualigah et al. 2018a, b, c, d, 2017a, b, c; Abualigah and Khader 2017d; Abualigah et al. 2017e; Abualigah and Hanandeh 2015; Al-Betar and Abualigah 2017), we will explain how to choose the optimal attribute reduct so as to compute the multi-granulation lower and upper approximations more efficiently.

Example 7.1

The following table indicates a multi-granulation information system  with respect to exam scores, \(U=\{x_{1},x_{2},\ldots ,x_{30}\}\) is a universe including thirty students of mechanical engineering in Jimei University,

with respect to exam scores, \(U=\{x_{1},x_{2},\ldots ,x_{30}\}\) is a universe including thirty students of mechanical engineering in Jimei University,  is a family of attribute sets, where \(A_{1}=\{a_{1}^{1},a_{2}^{1},a_{3}^{1}\}\), and \(a_{1}^{1},a_{2}^{1},a_{3}^{1}\) stands for three courses: Advanced Mathematics, Linear Algebra, Probability and Statistics, respectively; \(A_{2}=\{a_{1}^{2},a_{2}^{2}\}\), and \(a_{1}^{2},a_{2}^{2}\) stands for two courses: College English 1, College English 2, respectively; \(A_{3}=\{a_{1}^{3},a_{2}^{3},a_{3}^{3}\}\), and \(a_{1}^{3},a_{2}^{3},a_{3}^{3}\) stands for three courses: Engineering Drawing, Engineering Mechanics, Feedback Control of Dynamic Systems, respectively; \(A_{4}=\{a_{1}^{4},a_{2}^{4}\}\), and \(a_{1}^{4},a_{2}^{4}\) stands for two courses: Programming in C, Computer Practice, respectively; \(A_{5}=\{a_{1}^{5}\}\), and \(a_{1}^{5}\) stands for College Physics; \(A_{6}=\{a_{1}^{6},a_{2}^{6}\}\), and \(a_{1}^{6},a_{2}^{6}\) stands for two courses: College PE 1, College PE 2, respectively; \(A_{7}=\{a_{1}^{7},a_{2}^{7}\}\), and \(a_{1}^{7},a_{2}^{7}\) stands for two courses: Experiment 1, Experiment 2, respectively. At the same time, the exam scores are divided into four grades: Good, Medium, Pass and Fail, denoted by 1, 2, 3 and 4, respectively.

is a family of attribute sets, where \(A_{1}=\{a_{1}^{1},a_{2}^{1},a_{3}^{1}\}\), and \(a_{1}^{1},a_{2}^{1},a_{3}^{1}\) stands for three courses: Advanced Mathematics, Linear Algebra, Probability and Statistics, respectively; \(A_{2}=\{a_{1}^{2},a_{2}^{2}\}\), and \(a_{1}^{2},a_{2}^{2}\) stands for two courses: College English 1, College English 2, respectively; \(A_{3}=\{a_{1}^{3},a_{2}^{3},a_{3}^{3}\}\), and \(a_{1}^{3},a_{2}^{3},a_{3}^{3}\) stands for three courses: Engineering Drawing, Engineering Mechanics, Feedback Control of Dynamic Systems, respectively; \(A_{4}=\{a_{1}^{4},a_{2}^{4}\}\), and \(a_{1}^{4},a_{2}^{4}\) stands for two courses: Programming in C, Computer Practice, respectively; \(A_{5}=\{a_{1}^{5}\}\), and \(a_{1}^{5}\) stands for College Physics; \(A_{6}=\{a_{1}^{6},a_{2}^{6}\}\), and \(a_{1}^{6},a_{2}^{6}\) stands for two courses: College PE 1, College PE 2, respectively; \(A_{7}=\{a_{1}^{7},a_{2}^{7}\}\), and \(a_{1}^{7},a_{2}^{7}\) stands for two courses: Experiment 1, Experiment 2, respectively. At the same time, the exam scores are divided into four grades: Good, Medium, Pass and Fail, denoted by 1, 2, 3 and 4, respectively.

An information system with respect to exam scores

U | \(~a_{1}^{1}\) | \(a_{2}^{1}\) | \(a_{3}^{1}\) | \(a_{1}^{2}\) | \(a_{2}^{2}\) | \(a_{1}^{3}\) | \(a_{2}^{3}\) | \(a_{3}^{3}\) | \(a_{1}^{4}\) | \(a_{2}^{4}\) | \(a_{1}^{5}\) | \(a_{1}^{6}\) | \(a_{2}^{6}\) | \(a_{1}^{7}\) | \(a_{2}^{7}\) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

\(x_{1}\) | 2 | 2 | 2 | 2 | 2 | 2 | 1 | 2 | 2 | 1 | 1 | 1 | 2 | 2 | 2 |

\(x_{2}\) | 2 | 2 | 3 | 2 | 2 | 2 | 2 | 3 | 2 | 2 | 1 | 2 | 3 | 2 | 2 |

\(x_{3}\) | 3 | 2 | 2 | 2 | 3 | 2 | 2 | 3 | 2 | 2 | 2 | 3 | 3 | 2 | 1 |

\(x_{4}\) | 3 | 3 | 3 | 3 | 3 | 3 | 3 | 3 | 3 | 2 | 4 | 3 | 4 | 3 | 2 |

\(x_{5}\) | 1 | 1 | 2 | 1 | 1 | 1 | 1 | 2 | 1 | 1 | 1 | 2 | 2 | 1 | 1 |

\(x_{6}\) | 2 | 1 | 2 | 1 | 2 | 2 | 1 | 2 | 1 | 2 | 1 | 1 | 2 | 1 | 2 |

\(x_{7}\) | 4 | 3 | 4 | 3 | 2 | 3 | 2 | 3 | 3 | 3 | 3 | 3 | 3 | 3 | 3 |

\(x_{8}\) | 3 | 3 | 3 | 3 | 3 | 3 | 3 | 3 | 3 | 2 | 4 | 3 | 4 | 3 | 2 |

\(x_{9}\) | 2 | 2 | 3 | 2 | 2 | 2 | 2 | 3 | 2 | 2 | 1 | 3 | 3 | 2 | 2 |

\(x_{10}\) | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 2 | 1 | 1 |

\(x_{11}\) | 2 | 2 | 3 | 2 | 2 | 2 | 2 | 3 | 2 | 2 | 1 | 2 | 3 | 2 | 2 |

\(x_{12}\) | 2 | 3 | 3 | 2 | 3 | 3 | 2 | 3 | 2 | 3 | 2 | 3 | 3 | 2 | 3 |

\(x_{13}\) | 2 | 1 | 1 | 2 | 2 | 1 | 1 | 1 | 1 | 2 | 1 | 2 | 3 | 2 | 2 |

\(x_{14}\) | 2 | 3 | 4 | 3 | 2 | 3 | 3 | 4 | 3 | 3 | 3 | 2 | 2 | 3 | 3 |

\(x_{15}\) | 2 | 3 | 3 | 2 | 3 | 3 | 2 | 3 | 2 | 3 | 2 | 3 | 4 | 2 | 3 |

\(x_{16}\) | 2 | 3 | 2 | 3 | 3 | 2 | 3 | 3 | 2 | 3 | 3 | 3 | 3 | 2 | 3 |

\(x_{17}\) | 1 | 1 | 2 | 1 | 2 | 1 | 1 | 2 | 1 | 1 | 1 | 2 | 2 | 1 | 1 |

\(x_{18}\) | 2 | 1 | 2 | 1 | 2 | 2 | 1 | 2 | 1 | 2 | 1 | 1 | 2 | 1 | 2 |

\(x_{19}\) | 3 | 2 | 2 | 2 | 3 | 2 | 2 | 3 | 2 | 2 | 2 | 3 | 3 | 2 | 1 |

\(x_{20}\) | 2 | 2 | 2 | 2 | 2 | 2 | 1 | 2 | 2 | 1 | 1 | 2 | 3 | 2 | 2 |

\(x_{21}\) | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 2 | 1 | 1 |

\(x_{22}\) | 2 | 3 | 2 | 3 | 3 | 2 | 3 | 3 | 2 | 3 | 3 | 3 | 4 | 2 | 3 |

\(x_{23}\) | 1 | 1 | 2 | 1 | 2 | 1 | 1 | 2 | 1 | 1 | 1 | 2 | 2 | 1 | 1 |

\(x_{24}\) | 2 | 2 | 3 | 2 | 2 | 2 | 2 | 3 | 2 | 2 | 1 | 2 | 3 | 2 | 2 |

\(x_{25}\) | 2 | 3 | 4 | 3 | 2 | 3 | 3 | 4 | 3 | 3 | 3 | 1 | 2 | 3 | 3 |

\(x_{26}\) | 3 | 2 | 2 | 2 | 3 | 2 | 2 | 3 | 2 | 2 | 2 | 3 | 3 | 2 | 1 |

\(x_{27}\) | 2 | 1 | 2 | 1 | 2 | 2 | 1 | 2 | 1 | 2 | 1 | 2 | 3 | 1 | 2 |

\(x_{28}\) | 2 | 3 | 3 | 2 | 3 | 3 | 2 | 3 | 2 | 3 | 2 | 3 | 4 | 2 | 3 |

\(x_{29}\) | 2 | 2 | 2 | 2 | 2 | 2 | 1 | 2 | 2 | 1 | 1 | 1 | 2 | 2 | 2 |

\(x_{30}\) | 2 | 2 | 2 | 2 | 2 | 2 | 1 | 2 | 2 | 1 | 1 | 2 | 3 | 2 | 2 |

From the above information system, we have that \(U/A_{1}=\{\{x_{1},x_{20},x_{29},x_{30}\},\{x_{2},x_{9},x_{11},x_{24}\},\{x_{3},x_{19},x_{26}\},\{x_{4},x_{8}\}, \{x_{5},x_{17},x_{23}\},\{x_{6},x_{18},x_{27}\},\{x_{7}\}, \{x_{10},x_{21}\},\{x_{12},x_{15},x_{28}\},\{x_{13}\},\{x_{14},x_{25}\},\{x_{16},x_{22}\}\}\). Similarly, \(U/A_{i}, i=2,3,4,5,6,7\) can be easily presented.

According to Algorithms 1, 2 and 3, we have  ,

,  ,

,  . Meanwhile, it is clear that

. Meanwhile, it is clear that  ,

,  , and

, and  . Therefore, we have seven attribute reducts with respect to

. Therefore, we have seven attribute reducts with respect to  . i.e.,

. i.e.,  ,

,  ,

,  ,

,  ,

,  ,

,  , and

, and  .

.

From the view of granular computing, we will raise a question: which reduct is the best selection to compute the multi-granulation lower and upper approximations? On the one hand, because the optimistic multi-granulation lower and upper approximations with respect to  ,

,  ,

,  , and

, and  , respectively, are same, and from above discussion, it can be found that

, respectively, are same, and from above discussion, it can be found that  . Therefore,

. Therefore,  ,

,  , and

, and  are all the optimal selections to compute the optimistic multi-granulation lower and upper approximations.

are all the optimal selections to compute the optimistic multi-granulation lower and upper approximations.

On the other hand, we can obtain the following relations:  and

and  , although the pessimistic multi-granulation lower and upper approximations with respect to

, although the pessimistic multi-granulation lower and upper approximations with respect to  ,

,  ,

,  and

and  , respectively, are same. Thus

, respectively, are same. Thus  is the optimal attribute reduct to compute the pessimistic multi-granulation lower and upper approximations.

is the optimal attribute reduct to compute the pessimistic multi-granulation lower and upper approximations.

8 Conclusion

In this part, we first introduce the main conclusions obtained in the paper. Then, we make further prospects for future research work.

(i) Main conclusions of our paper It is clear that reduct theory plays an important role in pattern recognition and machine learning. Although multi-granulation rough set theory has been widely studied, attribute reduct of multi-granulation rough sets has not yet been explored. Therefore, the main content of this paper is to investigate the attribute reduct theory of multi-granulation information system. Specifically, the following problems are solved in this paper. Based on the definitions of multi-granulation upper and lower approximations, three types of attribute reducts are proposed, which are called arbitrary union reduct, neighborhood union reduct and neighborhood intersection reduct, respectively. Then, many important and interesting properties of these attribute reducts are researched. In order to solve practical problems, three effective algorithms are designed. At the same time, the relationships among these three attribute reducts are deeply explored. At last, a real-world example of multi-granulation information system is employed. The multi-granulation information system consists of exam scores of thirty students of mechanical engineering in Jimei University. According to the given algorithms, all the reducts in the information system are calculated one by one. Meanwhile, how to select the optimal reduct is also discussed in detail.

(ii) Future research work The theoretical results in this paper establish a basis for studying attribute reducts of the multi-granulation information systems. On this basis, it is possible to further study the attribute reducts with respect to consistent and inconsistent multi-granulation information systems. Furthermore, we need to develop better algorithms to deal with practical issues in the future.

References

Abualigah L, Khader A, Hanandeh E (2018) Hybrid clustering analysis using improved krill herd algorithm. Appl Intell 48(11):4047–4071

Abualigah L, Khader A, Hanandeh E (2018) A combination of objective functions and hybrid krill herd algorithm for text document clustering analysis. Eng Appl Artif Intell 73:111–125

Abualigah L, Khader A, Hanandeh E (2018) A novel weighting scheme applied to improve the text document clustering techniques. Innovative computing, optimization and its applications. Springer, Cham, pp 305–320

Abualigah L, Khader A, Hanandeh E (2018) A hybrid strategy for krill herd algorithm with harmony search algorithm to improve the data clustering. intelligent decision technologies, preprint

Abualigah L, Khader A, Hanandeh E (2017) A new feature selection method to improve the document clustering using particle swarm optimization algorithm. J Comput Sci 25:456–466

Abualigah L, Khader A, Hanandeh E, Gandomi A (2017) A novel hybridization strategy for krill herd algorithm applied to clustering techniques. Appl Soft Comput 60:423–435

Abualigah L, Khader A, Al-Betar M, Hanandeh E (2017) A new hybridization strategy for krill herd algorithm and harmony search algorithm applied to improve the data clustering. Management 9:11

Abualigah L, Khader A (2017) Unsupervised text feature selection technique based on hybrid particle swarm optimization algorithm with genetic operators for the text clustering. J Supercomput 73(11):4773–4795

Abualigah L, Khader A, Al-Betar M, Alomari O (2017) Text feature selection with a robust weight scheme and dynamic dimension reduction to text document clustering. Expert Syst Appl 84:24–36

Abualigah L, Hanandeh E (2015) Applying genetic algorithms to information retrieval using vector space model. Int J Comput Sci Eng Appl 5(1):19

Al-Betar M, Abualigah L (2017) Big data and E-government: a review. In: The 8th IEEE international conference on information technology (ICIT). Amman, Jordan

Bonikowski Z, Bryniarski E, Wybraniec U (1998) Extensions and intentions in the rough set theory. Inf Sci 107:149–167

Baszczyński J, Slowiński R, Szelag M (2011) Sequential covering rule induction algorithm for variable consistency rough set approaches. Inf Sci 181(5):987–1002

Cattaneo G (1998) Abstract approximate spaces for rough theories. In: Polkowski Skowron (ed) Rough sets in knowledge discovery 1: methodology and applications. Physicaverlag, Heidelberg, pp 59–98

Chen D, hang W, Yeung D, Tsang E (2006) Rough approximation on a complete completely distributive lattice with applications to generalized rough sets. Inf Sci 176:1829–1848

Chen D, Wang C, Hu Q (2007) A new approach to attribute reduction of consistent and inconsistent covering decision systems with covering rough sets. Inf Sci 177(17):3500–3518

Chen D, Hu Q, Yang Y (2011) Parameterized attribute reduction with Gaussian kernel based fuzzy rough sets. Inf Sci 181(23):5169–5179

Chen J, Li J, Lin Y, Lin G, Ma Z (2015) Relations of reduction between covering generalized rough sets and concept lattices. Inf Sci 304:16–27

Diker M, Ugur A (2012) Textures and covering based rough sets. Inf Sci 184(1):44–63

Ge X, Li Z (2011) Definable subsets in covering approximation spaces. Int J Comput Math Sci 5(1):31–34

Kong Q, Xu W (2018) The comparative study of covering rough sets and multi-granulation rough sets. Soft Comput. https://doi.org/10.1007/s00500-018-3205-y

Kong Q, Xu W (2018) Operation properties and algebraic application of covering rough sets. Fundam Inf 160:385–408

Kong Q, Zhang X, Xu W (2018) Operation properties and algebraic properties of multi-covering rough sets. Granul Comput. https://doi.org/10.1007/s41066-018-0137-y

Kong Q, Wei Z (2017) Further study of multi-granulation fuzzy rough sets. J Intell Fuzzy Syst 32:2413–2424

Kong Q, Wei Z (2015) Covering-based fuzzy rough sets. J Intell Fuzzy Syst 29:2405–2411

Li J, Ren Y, Mei C, Qian Y, Yang X (2016) A comparative study of multi-granulation rough sets and concept lattices via rule acquisition. Knowl Based Syst 91:152–164

Li J, Huang C, Qi J, Qian Y, Liu W (2017) Three-way cognitive concept learning via multi-granularity. Inf Sci 378:244–263

Lin G, Liang J, Qian Y (2013) Multigranulation rough sers: from partition to covering. Inf Sci 241:101–118

Liu C, Wang M (2011) Covering fuzzy rough set based on multi-granulation. In: International conference on uncertainty reasoning and knowledge engineering. pp 146–149

Liu C, Miao D, Qian J (2014) On multi-granulation covering rough sets. Int J Approx Reason 55:1404–1418

Liu G, Zhu W (2008) The algebraic structures of generalized rough set theory. Inf Sci 178:4105–4113

Liu G, Sai Y (2009) A comparison of two types of rough sets induced by coverings. Int J Approx Reason 50:521–528

Pawlak Z (1982) Rough sets. Int J Comput Inf Sci 11(5):341–356

Qian Y, Liang J, Yao Y, Dang C (2010) MGRS: a multi-granulation rough set. Inf Sci 180:949–970

Qian Y, Liang J (2006) Rough set method based on multi-granulations, In: The 5th IEEE international conference on congnitive informatics. Beijing, China

She Y, he X (2003) On the structure of the multigranulation rough set model. Knowl Based Syst 151:81–92

Shi Z, Gong Z (2010) The futher investigation of covering-based rough sets: uncertainty characterization, similarity measure and generalized models. Inf Sci 180(19):3745–3763

Skowron A, Stepaniuk J (1996) Tolerance approximation spaces. Fundam Inf 27:245–253

Slowinski R, Vanderpooten D (2000) A generalized definition of rough approximations based on similarity. IEEE Trans Knowl Data Eng 12:331–336

Tan A, Li J, Lin Y (2015) Matrix-based set approximations and reductions in covering decision systems. Int J Approx Reason 59:68–80

Tsang E, Chen D, Yeung D (2008) Approximations and reducts with covering generalized rough sets. Comput Math Appl 56:279–289

Wang C, He Q, Chen D, Hu Q (2014) A novel method for attribute reduction of covering decision systems. Inf Sci 254:181–196

Wang C, Shao M, Sun B (2015) An improved attribute reduction scheme with covering based rough sets. Appl Soft Comput 26:235–243

Wang C, Shao M, He Q, Qian Y, Qi Y (2016) Feature subset selection based on fuzzy neighborhood rough sets. Knowl Based Syst 111(1):173–179

Wang C, Hu Q, Wang X, Chen D, Qian Y (2017) Feature selection based on neighborhood discrimination index. IEEE Trans Neural Netw Learn Syst. https://doi.org/10.1109/TNNLS.2710422

Wang C, He Q, Shao M, Xua Y, Hu Q (2017) A unified information measure for general binary relations. Knowl Based Syst 135(1):18–28

Xu W, Li Y, Liao X (2012) Approaches to attribute reductions based on rough set and matrix computation in inconsistent ordered information systems. Knowl Based Syst 41(5):78–91

Xu W, Wang Q, Zhang X (2011) Multi-granulation fuzzy rough sets in a fuzzy tolerance approximation space. Int J Fuzzy Syst 13:246–259

Xu W, Wang Q, Zhang X (2013) Multi-granulation rough sets based on tolerance relations. Soft Comput 17(7):1241–1252

Xu W, Wang Q, Luo S (2014) Multi-granulation fuzzy rough sets. J Intell Fuzzy Syst 26:1323–1340

Xu W, Li W, Zhang X (2017) Generalized multigranulation rough sets and optimal granularity selection. Granul Comput. https://doi.org/10.1007/s41066-017-0042-9

Yang X, Song X, Dou H, Yang J (2011) Multigranulation rough set: from crisp to fuzzy case. Ann Fuzzy Math Inf 1:55–70

Yao Y (2011) Information granulation and rough set approximation. Int J Intell Syst 16(1):87–104

Yao Y (1998) Constructive and algebraic methods of the theory of rough sets. Inf Sci 109:21–47

Zakowski W (1983) Approximations in the space (\(u,\pi \)). Demonstr Math 16:761–769

Zhang X, Kong Q (2016) On four types of multi-covering rough sets. Fundam Inf 147:457–476

Zhu W, Wang F (2003) Reduction and axiomization of covering generalized rough sets. Inf Sci 152:217–230

Acknowledgements

The authors are very grateful to the reviewers and editors for their valuable suggestions. This work is partially supported by National Natural Science Foundation of China (Nos.61472463, 61772002, 61402064), Fundamental Research Funds for the Central Universities (XDJK2019B029), Natural Science Foundation of Fujian Province (Nos. 2017J01763, 2017J01468, 2016J01310, 2016J01735, 2018J01538) and Research Startup Foundation of Jimei University (NO. ZQ2017004), Foundation of Education Department of Fujian Province, China (No. JAT160369).

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Kong, Q., Zhang, X., Xu, W. et al. Attribute reducts of multi-granulation information system. Artif Intell Rev 53, 1353–1371 (2020). https://doi.org/10.1007/s10462-019-09699-3

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10462-019-09699-3