Abstract

The probabilistic rough set model and the graded rough set model measure, respectively, the relative and the absolute quantitative information between equivalence classes and basic concepts. Since fuzzy concepts are more common in real life than classical concepts, how to use relative and absolute quantitative information to characterize fuzzy concepts is an important research topic. In this study, we propose a double-quantitative decision-theoretic rough fuzzy set framework based on the fusion of the decision-theoretic rough set and the graded rough set; the framework mainly studies fuzzy concepts in multigranulation approximation spaces. Three pairs of double-quantitative multigranulation decision-theoretic rough fuzzy set models are established, and some of their basic properties are discussed. Decision rules that incorporate both relative and absolute quantitative information are studied, and the intrinsic relationship between the double-quantitative decision-theoretic rough fuzzy set and the multigranulation rough set is analyzed. Finally, an illustrative medical diagnosis case is used to explain and evaluate the double-quantitative decision-theoretic approach.


1 Introduction

Rough set theory was proposed by Pawlak [1, 2] in 1982. It is an extension of classical set theory and serves as a mathematical and soft computing tool for dealing with imprecision, ambiguity and uncertainty in data analysis. In recent decades, it has attracted the attention of many researchers around the world [3,4,5,6,7] and has been applied in many fields, such as machine learning, artificial intelligence, pattern recognition and decision making [8,9,10,11]. After decades of development, rough set research has produced several important branches, including attribute reduction [12], generalizations of rough sets [13], mathematical structures [14] and uncertainty measures of rough sets [7, 15,16,17,18,19]. Because the Pawlak model has no fault-tolerance mechanism for the inclusion between equivalence classes and a basic concept set, several generalized quantitative rough set models have been developed to overcome this limitation by using graded set inclusion. The probabilistic rough set (PRS) introduces a probabilistic measure of uncertainty into rough set theory [20,21,22] and thereby extends the classical Pawlak model. The major change is that the probability of an element belonging to a set determines its inclusion in the approximation regions. Two probabilistic thresholds determine the division between the positive and boundary regions and between the boundary and negative regions. Over the last two decades, probabilistic rough set models such as the 0.5-probabilistic rough set [22], the decision-theoretic rough set (DTRS) [23], the rough membership function [24], the parameterized rough set [25], the Bayesian rough set [26], the game-theoretic rough set [27] and the naive Bayesian rough set [26, 28] have been proposed to solve probabilistic decision-making problems by allowing an acceptable level of error.
The graded rough set (GRS) model, another generalization of the Pawlak rough set model, primarily considers the absolute quantitative information regarding basic concepts and knowledge granules.

As two useful extensions of the rough set model, DTRS and GRS reflect, respectively, relative and absolute quantitative information about the degree of overlap between equivalence classes and a basic concept set. Relative and absolute quantitative information are two distinct objective aspects of an approximation space; each has its own virtues and application settings, so neither can be neglected. In recent years, the double-quantitative fusion of relative and absolute quantitative information has attracted considerable research interest [29,30,31,32].

Granular computing (GrC), proposed by Zadeh [33], is another powerful tool in artificial intelligence and data processing; in its classical form it is based on a single granulation structure. Because an element can be observed from different aspects [34] or at different levels [35, 36], and the same element is often described by several useful information sources, these sources need to be considered jointly. Thus, granular computing should be generalized to handle multiple information sources. To meet this need, Qian et al. [37] first proposed multigranulation rough sets (MGRS), which have a wide range of applications, such as decision making and feature selection [38,39,40,41,42,43,44]. Because a concept can be described by multiple binary relations to meet different requirements, many extensions of MGRS have been proposed. For example, Qian et al. [45] discussed multigranulation decision-theoretic rough sets, and She et al. [46] studied the topological structures of multigranulation rough sets. Wu extended the classical MGRS to a version based on a fuzzy binary relation [47]. Furthermore, multigranulation rough sets based on fuzzy binary relations [48] and multigranulation fuzzy rough sets based on classical tolerance relations [49] were defined. Liu et al. [50, 51] proposed fuzzy covering multigranulation rough sets. Liang et al. [52] proposed an efficient algorithm for feature selection in large-scale data sets with multiple granulations. These studies provide a rich theoretical basis for studying double-quantitative decision-theoretic approaches in multigranulation approximation spaces.

Decision making is an important issue in daily life. However, the objects of many decision-making problems, such as measuring student achievement in comprehensive testing or evaluating the credit of a credit card applicant, can have more than two states in practice. Moreover, the states of a decision object are not necessarily disjoint or opposite to each other. For a given student or credit card applicant, the evaluation result may not be describable by two completely opposite states such as Yes and No; such a description would apply only if every student were either good or bad, or every applicant either a good or a bad credit risk. In fact, evaluation results can be semantic states with preferences, such as excellent, good or medium; high, medium or low; or large, medium or small. For example, the evaluation result of a student or a credit card applicant could be excellent, good or medium. Clearly, these decision states are neither completely opposite nor disjoint; they are fuzzy descriptions of the states of objects in the universe. Hence, for such decision-making problems, the states approximated on the universe are fuzzy sets rather than crisp sets.

From the viewpoint of information quantification, DTRS and GRS reflect, respectively, relative and absolute quantitative information about the degree of overlap between equivalence classes and a concept set. Here we give an example that highlights the significance of combining relative and absolute quantification in a fuzzy approximation space and shows why both types of quantitative model are needed. Suppose a company plans to purchase a large quantity of a product in the near future. \(x_1\) and \(x_2\) are suppliers of this product (i.e., the universe is \(U=\{x_1, x_2\}\)), and the price difference between them is tiny. Therefore, the chief executive officer plans to check the credit ratings of the two suppliers over a period of 20 years to decide which one will win the bid. The set of states is \(\Omega =\{\widetilde{A},\widetilde{B},\widetilde{C}\}\), where \(\widetilde{A}=Excellent\,(0.7<\widetilde{A}\le 1)\), \(\widetilde{B}=Good\,(0.5\le \widetilde{B}\le 0.7)\) and \(\widetilde{C}=Medium\,(0\le \widetilde{C}<0.5)\) are three fuzzy sets on the universe U. We use the same symbol to denote a fuzzy set and the corresponding state. In practice, we only care whether the credit rating reaches the standard (0.5). The survey shows that company \(x_1\) failed to reach the credit rating standard \((<0.5)\) in 8 of the 20 years, whereas company \(x_2\) failed in 5 of the 20 years. Clearly, the number of years in which \(x_1\) fell short of the standard is greater than that of \(x_2\) \((8>5)\).
Nevertheless, we still regard \(x_1\) as the better candidate, because its compliance rate is higher \((48\%>37.5\%)\). As this example suggests, relative quantitative information should be given higher priority than absolute quantitative information.

Based on these considerations, this paper focuses on the rough approximation of fuzzy concepts in probabilistic approximation spaces. The motivation of this investigation is to develop a new double-quantitative multigranulation rough fuzzy decision approach by combining the graded rough set and the decision-theoretic rough set for fuzzy concepts in a multigranulation framework. Three pairs of double-quantitative multigranulation decision-theoretic rough fuzzy set models are established: two optimistic, two pessimistic and two mean double-quantitative multigranulation decision-theoretic rough fuzzy set models.

The rest of this paper is structured as follows. Section 2 reviews basic concepts of rough set theory, fuzzy sets, graded rough sets, multigranulation rough sets and decision-theoretic rough sets. In Sect. 3, we establish several novel double-quantitative multigranulation decision-theoretic rough fuzzy set models, discuss their properties and investigate their decision rules. Section 4 studies the relationships among the three pairs of proposed models. In Sect. 5, an illustrative case is used to evaluate the proposed double-quantitative multigranulation decision-theoretic approach, and some decision rules are exhibited. Finally, Sect. 6 concludes the paper.

2 Preliminaries

In this section, we review some basic concepts of rough set theory, fuzzy sets, graded rough sets, multigranulation rough sets and decision-theoretic rough sets.

2.1 Pawlak’s rough set

In Pawlak's rough set theory [1], an information system is a quadruple \(IS=(U,AT,V,f)\), where U is a nonempty finite set of objects, AT is a nonempty finite set of attributes, \(V=\bigcup \nolimits _{a\in AT}{{{V}_{a}}}\) is the union of the attribute domains, with \(V_a\) the domain of attribute a, and \(f : U \times AT\rightarrow V\) is an information function such that \(f(x,a)\in V_a\) for each \(a \in AT\) and \(x\in U\).

For any \(B\subseteq AT\), there is an associated indiscernibility (equivalence) relation \(R_B\):

\(R_B=\{(x,y)\in U\times U \mid f(x,a)=f(y,a),\ \forall a\in B\}.\)

Obviously, \(R_B\) determines a partition of U, i.e., \(U/R_B=\{[x]_B\mid x\in U\}\), where \([x]_B=\{y\in U\mid (x,y)\in R_B\}\) is the equivalence class of \(x \in U\) with respect to \(R_B\).

In particular, a decision system (DS) is a quadruple \(DS=(U,AT\cup DT,V,f)\), where AT is the condition attribute set, DT is the decision attribute set, and \(AT \cap DT= \varnothing\).

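Given an equivalence relation R on the universe U, for any \(X \subseteq U\) the lower and upper approximations of X are defined by

\(\underline{R}(X)=\{x\in U \mid [x]_R\subseteq X\},\quad \overline{R}(X)=\{x\in U \mid [x]_R\cap X\ne \varnothing \}.\)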
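To make these definitions concrete, the Python sketch below builds the partition \(U/R_B\) from a small information table and computes the Pawlak lower and upper approximations block by block. The table, the attribute names `a` and `b`, and the helper names are hypothetical illustrations, not taken from the paper.

```python
from collections import defaultdict

def partition(table, attrs):
    """Group objects that agree on every attribute in attrs (the classes of U/R_B)."""
    groups = defaultdict(set)
    for obj, row in table.items():
        groups[tuple(row[a] for a in attrs)].add(obj)
    return [frozenset(g) for g in groups.values()]

def pawlak_approximations(blocks, X):
    """Lower: union of classes contained in X; upper: union of classes meeting X."""
    lower, upper = set(), set()
    for block in blocks:
        if block <= X:
            lower |= block
        if block & X:
            upper |= block
    return lower, upper

# Hypothetical information table with two condition attributes a and b.
table = {
    "x1": {"a": 1, "b": 0},
    "x2": {"a": 1, "b": 0},
    "x3": {"a": 0, "b": 1},
    "x4": {"a": 0, "b": 0},
}
blocks = partition(table, ["a", "b"])
lower, upper = pawlak_approximations(blocks, {"x1", "x3"})
print(sorted(lower), sorted(upper))  # ['x3'] ['x1', 'x2', 'x3']
```

Working per equivalence class rather than per object avoids recomputing \([x]_B\) for every member of the same class.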

2.2 Fuzzy set

Zadeh [53] introduced fuzzy sets: a fuzzy subset \(\widetilde{X}\) of U is a function that assigns to each element x of U a value \(\widetilde{X}(x)\in [0, 1]\), called the membership degree of x in the fuzzy set \(\widetilde{X}\). Let \(\mathcal {F}(U)\) denote the family of all fuzzy subsets of U. For any fuzzy concepts \({\widetilde{X_1}}, {\widetilde{X_2}}\in \mathcal {F}(U)\), we say that \({\widetilde{X_1}}\) is contained in \({\widetilde{X_2}}\), denoted by \({\widetilde{X_1}} \subseteq {\widetilde{X_2}}\), if \({\widetilde{X_1}}(x)\le {\widetilde{X_2}}(x)\) for all \(x \in U\); and \({\widetilde{X_1}}={\widetilde{X_2}}\) if and only if \({\widetilde{X_1}} \subseteq {\widetilde{X_2}}\) and \({\widetilde{X_1}} \supseteq {\widetilde{X_2}}\). The basic operations on fuzzy sets are defined as follows: for all \(x\in U\),

\(({\widetilde{X_1}}\cup {\widetilde{X_2}})(x)={\widetilde{X_1}}(x)\vee {\widetilde{X_2}}(x),\quad ({\widetilde{X_1}}\cap {\widetilde{X_2}})(x)={\widetilde{X_1}}(x)\wedge {\widetilde{X_2}}(x),\quad {\widetilde{X_1}}^{c}(x)=1-{\widetilde{X_1}}(x).\)

Here “\(\vee\)” and “\(\wedge\)” denote the maximum and minimum operations, respectively, and \({\widetilde{X_{1}}}^{c}\) is the complement of \({\widetilde{X_{1}}}\). Sarkar [54] proposed a rough-fuzzy membership function for any two fuzzy sets \({\widetilde{X_{1}}}\) and \({\widetilde{X_{2}}}\) of the universe of discourse: \({{\mu }_{\widetilde{{{X}_{1}}}}}=\frac{\left| {\widetilde{{{X}_{1}}}}\cap {\widetilde{{{X}_{2}}}} \right| }{\left| {\widetilde{{{X}_{2}}}} \right| }\), where \(\left| {\widetilde{{{X}_{1}}}} \right| =\sum _{x\in U}{{\widetilde{{{X}_{1}}}}(x)}\).
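The fuzzy operations and the sigma-count cardinality can be sketched in Python as follows, with fuzzy sets stored as dictionaries from objects to membership degrees over a common universe. The rough-fuzzy membership is computed in the intersection form \(|\widetilde{X_1}\cap \widetilde{X_2}|/|\widetilde{X_2}|\), which stays in [0, 1]; the function names and membership values are illustrative assumptions.

```python
def f_union(A, B):
    """Pointwise maximum (the operator "∨"); A and B share the same keys."""
    return {x: max(A[x], B[x]) for x in A}

def f_intersection(A, B):
    """Pointwise minimum (the operator "∧")."""
    return {x: min(A[x], B[x]) for x in A}

def f_complement(A):
    """Standard complement: 1 - membership."""
    return {x: 1 - A[x] for x in A}

def sigma_count(A):
    """Fuzzy cardinality |A| = sum of membership degrees."""
    return sum(A.values())

def rough_fuzzy_membership(X1, X2):
    """Degree to which X2 is covered by X1: |X1 ∩ X2| / |X2|."""
    return sigma_count(f_intersection(X1, X2)) / sigma_count(X2)

# Memberships are multiples of 1/4, so the float arithmetic below is exact.
X1 = {"x1": 0.75, "x2": 0.5, "x3": 0.0}
X2 = {"x1": 1.0, "x2": 1.0, "x3": 0.0}   # the crisp set {x1, x2}
print(rough_fuzzy_membership(X1, X2))      # 0.625
print(f_union(X1, f_complement(X1)))       # {'x1': 0.75, 'x2': 0.5, 'x3': 1.0}
```

The second output shows that \(\widetilde{X_1}\cup \widetilde{X_1}^{c}\) need not equal U for fuzzy sets. When \(\widetilde{X_2}\) is crisp, the membership reduces to the average membership of \(\widetilde{X_1}\) over \(\widetilde{X_2}\).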

2.3 Graded rough set

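Yao and Lin [55] explored the relationships between rough set theory and modal logics and proposed the GRS model based on graded modal logics. Suppose \(k \in \mathbf {N}\) is a non-negative integer and A is an equivalence relation on U. For \(X\subseteq U\), the lower and upper approximations are defined as follows:

\(\underline{A}_{k}X=\{x\in U \mid |[x]_A|-|[x]_A\cap X|\le k\},\quad \overline{A}_{k}X=\{x\in U \mid |[x]_A\cap X|>k\}.\)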

These two approximations are called the grade-k lower and upper approximations of X with respect to A. If \(\overline{{{A}}}_{k}X = \underline{{{A}}}_{k}X\), then X is called a definable set by grade k; otherwise, X is called a rough set by grade k. Note that, in general, the lower approximation need not be included in the upper approximation. Hence the boundary region is defined as the union of the upper and lower boundary regions. Accordingly, we obtain the following regions:

\(Pos_k(X)=\underline{A}_{k}X\cap \overline{A}_{k}X,\quad Neg_k(X)=U-(\underline{A}_{k}X\cup \overline{A}_{k}X),\)

\(Ubn_k(X)=\overline{A}_{k}X-\underline{A}_{k}X,\quad Lbn_k(X)=\underline{A}_{k}X-\overline{A}_{k}X,\quad Bn_k(X)=Ubn_k(X)\cup Lbn_k(X).\)
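A small Python sketch of the grade-k operators and the four regions follows. It assumes the usual definitions of Yao and Lin, \(\underline{A}_kX=\{x : |[x]_A|-|[x]_A\cap X|\le k\}\) and \(\overline{A}_kX=\{x : |[x]_A\cap X|>k\}\), with the positive, negative, upper boundary and lower boundary regions taken as \(\underline{A}_kX\cap \overline{A}_kX\), \(U-(\underline{A}_kX\cup \overline{A}_kX)\), \(\overline{A}_kX-\underline{A}_kX\) and \(\underline{A}_kX-\overline{A}_kX\). The partition and the set X are made up; object 1 illustrates why the lower approximation need not lie inside the upper one.

```python
def graded_regions(blocks, X, k):
    """Grade-k approximations of a crisp set X and the four induced regions."""
    universe = set().union(*blocks)
    lower, upper = set(), set()
    for block in blocks:
        if len(block - X) <= k:   # |[x]| - |[x] ∩ X| <= k
            lower |= block
        if len(block & X) > k:    # |[x] ∩ X| > k
            upper |= block
    return {
        "pos": lower & upper,
        "neg": universe - (lower | upper),
        "ubn": upper - lower,
        "lbn": lower - upper,
    }

blocks = [{1}, {2, 3, 4, 5}, {6, 7}, {8, 9, 10}]
X = {1, 2, 3, 8, 9}
regions = graded_regions(blocks, X, k=1)
print({name: sorted(objs) for name, objs in regions.items()})
# {'pos': [8, 9, 10], 'neg': [6, 7], 'ubn': [2, 3, 4, 5], 'lbn': [1]}
```

The singleton class \(\{1\}\) lies entirely in X, so it passes the lower test, but it contributes only one element, which does not exceed k = 1.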

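Moreover, if the set X is generalized to a fuzzy set \(\widetilde{X}\in \mathcal {F}(U)\), the GRS model generalizes to the graded rough fuzzy set (GRFS) model, whose approximations are defined as follows:

\(\underline{A}_{k}(\widetilde{X})=\{x\in U \mid \sum _{y\in [x]_A}(1-\widetilde{X}(y))\le k\},\quad \overline{A}_{k}(\widetilde{X})=\{x\in U \mid \sum _{y\in [x]_A}\widetilde{X}(y)>k\}.\)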
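For the fuzzy case, the sketch below replaces the crisp counts by the sigma-counts \(\sum_{y\in[x]_A}\widetilde{X}(y)\) and \(\sum_{y\in[x]_A}(1-\widetilde{X}(y))\). This is an assumption consistent with the decision rules used later in the paper; with 0/1 memberships it reduces to the crisp grade-k operators. The data are illustrative.

```python
def graded_fuzzy_approximations(blocks, fuzzy_X, k):
    """Grade-k lower/upper approximations of a fuzzy set given as {object: degree}."""
    lower, upper = set(), set()
    for block in blocks:
        inside = sum(fuzzy_X[y] for y in block)        # fuzzy |[x] ∩ X|
        outside = sum(1 - fuzzy_X[y] for y in block)   # fuzzy |[x]| - |[x] ∩ X|
        if outside <= k:
            lower |= block
        if inside > k:
            upper |= block
    return lower, upper

blocks = [{"x1", "x2"}, {"x3", "x4", "x5"}]
fuzzy_X = {"x1": 0.75, "x2": 0.75, "x3": 0.25, "x4": 0.5, "x5": 0.0}
for k in (0, 1):
    lower, upper = graded_fuzzy_approximations(blocks, fuzzy_X, k)
    print(k, sorted(lower), sorted(upper))
# 0 [] ['x1', 'x2', 'x3', 'x4', 'x5']
# 1 ['x1', 'x2'] ['x1', 'x2']
```

All memberships are multiples of 1/4, so the floating-point sums are exact and the comparisons with k are reliable; for arbitrary degrees, `fractions.Fraction` would be a safer choice.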

2.4 Multigranulation rough set

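The multigranulation rough set (MGRS) differs from the Pawlak rough set in that it is constructed from a family of indiscernibility relations instead of a single one. Let \(IS=(U,AT,V,f)\) be an information system with \(A_1, A_2,\ldots , A_m \subseteq AT\). For any \(X\subseteq U\), the optimistic multigranulation lower and upper approximations are defined by:

\(\underline{\sum \nolimits _{i=1}^{m}A_{i}}^{O}(X)=\{x\in U \mid [x]_{A_1}\subseteq X\vee [x]_{A_2}\subseteq X\vee \cdots \vee [x]_{A_m}\subseteq X\},\)

\(\overline{\sum \nolimits _{i=1}^{m}A_{i}}^{O}(X)=\sim \underline{\sum \nolimits _{i=1}^{m}A_{i}}^{O}(\sim X),\)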

Here \([x]_{A_i}\) denotes the equivalence class of x with respect to the attribute set \(A_i\), and \(\sim X\) is the complement of X. Equivalently, the optimistic multigranulation upper approximation can be written as \({{\overline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}}^{O}}(X)=\{x\in U|{{[x]}_{{{A}_{1}}}}\cap X\ne \varnothing \wedge {{[x]}_{{{A}_{2}}}}\cap X\ne \varnothing \wedge \cdots \wedge {{[x]}_{{{A}_{m}}}}\cap X\ne \varnothing \}.\)

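Under the pessimistic strategy, the multigranulation lower and upper approximations are defined as follows:

\(\underline{\sum \nolimits _{i=1}^{m}A_{i}}^{P}(X)=\{x\in U \mid [x]_{A_1}\subseteq X\wedge [x]_{A_2}\subseteq X\wedge \cdots \wedge [x]_{A_m}\subseteq X\},\)

\(\overline{\sum \nolimits _{i=1}^{m}A_{i}}^{P}(X)=\sim \underline{\sum \nolimits _{i=1}^{m}A_{i}}^{P}(\sim X).\)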

Analogously, the pessimistic multigranulation upper approximation can be described as \({{\overline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}}^{P}}(X)=\{x\in U|{{[x]}_{{{A}_{1}}}}\cap X\ne \varnothing \vee {{[x]}_{{{A}_{2}}}}\cap X\ne \varnothing \vee \cdots \vee {{[x]}_{{{A}_{m}}}}\cap X\ne \varnothing \}.\)
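Both strategies can be sketched in Python by computing the upper approximation as the complement of the lower approximation of \(\sim X\): the optimistic strategy requires at least one granulation to certify inclusion, the pessimistic one requires all of them. The two partitions and the target set are hypothetical.

```python
def multigranulation_approximations(partitions, X, strategy="optimistic"):
    """Optimistic (any granulation) or pessimistic (all granulations) MGRS approximations."""
    universe = set().union(*partitions[0])
    combine = any if strategy == "optimistic" else all

    def block_of(blocks, x):
        return next(b for b in blocks if x in b)

    def lower(target):
        return {x for x in universe
                if combine(block_of(blocks, x) <= target for blocks in partitions)}

    # Duality: upper(X) = ~lower(~X).
    return lower(X), universe - lower(universe - X)

A1 = [{1, 2}, {3}, {4}]
A2 = [{1}, {2, 3}, {4}]
X = {1, 3}
for strategy in ("optimistic", "pessimistic"):
    low, up = multigranulation_approximations([A1, A2], X, strategy)
    print(strategy, sorted(low), sorted(up))
# optimistic [1, 3] [1, 2, 3]
# pessimistic [] [1, 2, 3]
```

Computing the upper approximation through duality keeps a single code path for both strategies; the explicit forms with "\(\wedge\)" and "\(\vee\)" over \([x]_{A_i}\cap X\ne \varnothing\) give the same sets.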

2.5 Decision-theoretic rough set

Pawlak and Skowron [24] redefined the two approximations by using the rough membership function \(\mu _A\), which is defined by

\(\mu _A(x)=\frac{|[x]_A\cap X|}{|[x]_A|}\)

and coincides with the conditional probability \(P(X|[x]_A)\) used below.

The Bayesian decision procedure mainly deals with making decisions that have minimum risk or cost under probabilistic uncertainty. In the Bayesian decision procedure, a finite set of states is written as \(\Omega =\{ \omega _1 , \omega _2 , \ldots , \omega _s\}\), and a finite set of r possible actions is denoted by \(A = \{ a_1 , a_2 , \ldots , a_r \}\). Let \(P(\omega _j|x)\) be the conditional probability of an object x being in state \(\omega _j\) given that the object is described by x, and let \(\lambda (a_i|\omega _j)\) denote the loss or cost of taking action \(a_i\) when the state is \(\omega _j\). The expected loss associated with taking action \(a_i\) is given by

\(R(a_i|x)=\sum _{j=1}^{s}\lambda (a_i|\omega _j)P(\omega _j|x).\)

With respect to the membership of an object in X, we have a set of two states and a set of three actions for each state. The set of states is given by \(\Omega = \{ X, X^C \}\), indicating that an element is in X and not in X, respectively. The set of actions with respect to a state is given by \(A =\{ a_P , a_B , a_N \}\), where P, B and N represent the three actions of deciding \(x \in pos ( X )\), \(x \in bn ( X )\) and \(x \in neg ( X )\), respectively. The loss function regarding the risk or cost of actions in different states is given by the following matrix:

\(\begin{array}{c|cc} & X\ (P) & X^{C}\ (N)\\ \hline a_P & \lambda _{PP} & \lambda _{PN}\\ a_B & \lambda _{BP} & \lambda _{BN}\\ a_N & \lambda _{NP} & \lambda _{NN}\end{array}\)

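\(\lambda _{PP}\), \(\lambda _{BP}\) and \(\lambda _{NP}\) denote the losses incurred for taking actions \(a_P\), \(a_B\) and \(a_N\), respectively, when an object belongs to X, and \(\lambda _{PN}\), \(\lambda _{BN}\) and \(\lambda _{NN}\) denote the losses incurred for taking the same actions when the object does not belong to X. The expected losses \(R ( a_i |[x]_A )\) associated with taking the individual actions can be expressed as [56]:

\(R(a_P|[x]_A)=\lambda _{PP}P(X|[x]_A)+\lambda _{PN}P(X^{C}|[x]_A),\)

\(R(a_B|[x]_A)=\lambda _{BP}P(X|[x]_A)+\lambda _{BN}P(X^{C}|[x]_A),\)

\(R(a_N|[x]_A)=\lambda _{NP}P(X|[x]_A)+\lambda _{NN}P(X^{C}|[x]_A).\)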
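The expected-loss computation can be sketched directly: given \(p=P(X|[x]_A)\), so that \(P(X^{C}|[x]_A)=1-p\), evaluate the three expected losses and pick the action with the smallest one. The loss values below are invented for illustration and satisfy \(\lambda_{PP}\le\lambda_{BP}<\lambda_{NP}\) and \(\lambda_{NN}\le\lambda_{BN}<\lambda_{PN}\).

```python
# Hypothetical losses keyed by (action, state); state "P" means x in X, "N" means x not in X.
LOSS = {
    ("P", "P"): 0, ("P", "N"): 4,
    ("B", "P"): 2, ("B", "N"): 1,
    ("N", "P"): 6, ("N", "N"): 0,
}

def expected_losses(p, loss):
    """R(a|[x]) = loss(a, in X) * p + loss(a, not in X) * (1 - p) for a in {P, B, N}."""
    return {a: loss[(a, "P")] * p + loss[(a, "N")] * (1 - p) for a in "PBN"}

def min_risk_action(p, loss):
    """Bayesian decision: the action with the smallest expected loss."""
    risks = expected_losses(p, loss)
    return min(risks, key=risks.get)

for p in (0.8, 0.4, 0.1):
    print(p, min_risk_action(p, LOSS))
# 0.8 P
# 0.4 B
# 0.1 N
```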

When \(\lambda _{PP}\le \lambda _{BP}<\lambda _{NP}\) and \(\lambda _{NN}\le \lambda _{BN}<\lambda _{PN}\), the Bayesian decision procedure leads to the following minimum-risk decision rules:

-

(P) If \(P(X|[x]_A) \ge \gamma\) and \(P(X|[x]_A) \ge \alpha\), decide pos(X);

-

(N) If \(P(X|[x]_A)\le \beta\) and \(P(X|[x]_A) \le \gamma\), decide neg(X);

-

(B) If \(\beta \le P(X|[x]_A)\le \alpha\), decide bn(X).

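where the parameters \(\alpha\), \(\beta\) and \(\gamma\) are defined as:

\(\alpha =\frac{\lambda _{PN}-\lambda _{BN}}{(\lambda _{PN}-\lambda _{BN})+(\lambda _{BP}-\lambda _{PP})},\quad \beta =\frac{\lambda _{BN}-\lambda _{NN}}{(\lambda _{BN}-\lambda _{NN})+(\lambda _{NP}-\lambda _{BP})},\quad \gamma =\frac{\lambda _{PN}-\lambda _{NN}}{(\lambda _{PN}-\lambda _{NN})+(\lambda _{NP}-\lambda _{PP})}.\)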

If the loss function further satisfies \((\lambda _{PN}-\lambda _{BN})(\lambda _{NP}-\lambda _{BP}) \ge (\lambda _{BN}-\lambda _{NN})(\lambda _{BP}-\lambda _{PP})\), then \(\alpha \ge \gamma \ge \beta\). Moreover, if \(\alpha >\beta\), then \(\alpha>\gamma >\beta\), and the DTRS has the following decision rules:

-

(P) If \(P(X|[x]_A)\ge \alpha\), decide pos(X);

-

(N) If \(P(X|[x]_A) \le \beta\), decide neg(X);

-

(B) If \(\beta<P(X|[x]_A) <\alpha\), decide bn(X).
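The thresholds in these rules can be computed mechanically from a loss function. The Python sketch below is a minimal illustration with invented integer losses (\(\lambda_{PP}=0\), \(\lambda_{BP}=2\), \(\lambda_{NP}=6\), \(\lambda_{NN}=0\), \(\lambda_{BN}=1\), \(\lambda_{PN}=4\)). It uses exact fractions, rejects losses that violate \(\lambda_{PP}\le\lambda_{BP}<\lambda_{NP}\) and \(\lambda_{NN}\le\lambda_{BN}<\lambda_{PN}\), and applies the standard DTRS formulas for \(\alpha\), \(\beta\) and \(\gamma\).

```python
from fractions import Fraction

def dtrs_thresholds(l):
    """Return (alpha, beta, gamma); losses are assumed to be ints or Fractions."""
    if not (l["PP"] <= l["BP"] < l["NP"] and l["NN"] <= l["BN"] < l["PN"]):
        raise ValueError("loss function violates the ordering conditions")
    alpha = Fraction(l["PN"] - l["BN"], (l["PN"] - l["BN"]) + (l["BP"] - l["PP"]))
    beta = Fraction(l["BN"] - l["NN"], (l["BN"] - l["NN"]) + (l["NP"] - l["BP"]))
    gamma = Fraction(l["PN"] - l["NN"], (l["PN"] - l["NN"]) + (l["NP"] - l["PP"]))
    return alpha, beta, gamma

losses = {"PP": 0, "BP": 2, "NP": 6, "NN": 0, "BN": 1, "PN": 4}
alpha, beta, gamma = dtrs_thresholds(losses)
print(alpha, gamma, beta)  # 3/5 2/5 1/5

# The extra condition (PN-BN)(NP-BP) >= (BN-NN)(BP-PP) holds here (12 >= 2),
# so alpha > gamma > beta and only the simplified rules (P), (N), (B) are needed.
assert (losses["PN"] - losses["BN"]) * (losses["NP"] - losses["BP"]) >= \
       (losses["BN"] - losses["NN"]) * (losses["BP"] - losses["PP"])
assert alpha > gamma > beta
```

The ordering check also guarantees that every denominator is positive, so no division by zero can occur.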

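Using these decision rules, we obtain the probabilistic lower and upper approximations of the DTRS model:

\({{\underline{A}}_{(\alpha ,\beta )}}(X)=\{x\in U \mid P(X|[x]_A)\ge \alpha \},\quad {{\overline{A}}_{(\alpha ,\beta )}}(X)=\{x\in U \mid P(X|[x]_A)>\beta \}.\)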

If \({{\overline{A}}_{(\alpha ,\beta )}}(X) = {{\underline{A}}_{(\alpha ,\beta )}}(X)\), then X is a definable set, otherwise X is a rough set. Here, \(pos_{(\alpha ,\beta )} (X) ={{\underline{A}}_{(\alpha ,\beta )}}(X)\), \(neg_{(\alpha ,\beta )} (X) = ( {{\overline{A}}_{(\alpha ,\beta )}}(X))^ c\), \(bn_{(\alpha ,\beta )}(X) = {{\overline{A}}_{(\alpha ,\beta )}}(X)-{{\underline{A}}_{(\alpha ,\beta )}}(X)\) are the positive region, negative region and boundary region, respectively.
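The resulting three-way classification can be sketched per equivalence class, assuming a crisp target X, a partition given as a list of sets, and thresholds with \(\beta<\alpha\). The data and the thresholds \(\alpha=3/5\) and \(\beta=1/5\) are illustrative only.

```python
from fractions import Fraction

def dtrs_regions(blocks, X, alpha, beta):
    """Three-way decision with P(X | [x]) = |[x] ∩ X| / |[x]| (assumes beta < alpha)."""
    pos, bnd, neg = set(), set(), set()
    for block in blocks:
        p = Fraction(len(block & X), len(block))
        if p >= alpha:
            pos |= block          # lower approximation
        elif p <= beta:
            neg |= block          # complement of the upper approximation
        else:
            bnd |= block          # upper minus lower
    return pos, bnd, neg

blocks = [{1, 2, 3, 4, 5}, {6, 7}, {8, 9, 10, 11, 12}]
X = {1, 2, 3, 4, 6}
pos, bnd, neg = dtrs_regions(blocks, X, Fraction(3, 5), Fraction(1, 5))
print(sorted(pos), sorted(bnd), sorted(neg))
# [1, 2, 3, 4, 5] [6, 7] [8, 9, 10, 11, 12]
```

The lower approximation is the positive region and the upper approximation is the union of the positive and boundary regions.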

Sun et al. [57] introduced the PRS model and its extensions. Let U be a non-empty finite universe, A an equivalence relation on U and P a probability measure. For any \(\widetilde{X}\in \mathcal {F}(U)\), \(P(\widetilde{X}|{{[x]}_{A}})\) is called the conditional probability of the fuzzy event \(\widetilde{X}\) given the description \([x]_A\), and it is defined as follows:

\(P(\widetilde{X}|[x]_{A})=\frac{\sum _{y\in [x]_A}\widetilde{X}(y)}{|[x]_A|}.\)

\(P(\widetilde{X}|{{[x]}_{A}})\) can also be interpreted as the probability that a randomly selected object \(x \in U\) belongs to the fuzzy concept \(\widetilde{X}\) given the description \([x]_A\). Based on this conditional probability, the lower and upper approximations of the fuzzy set \(\widetilde{X}\) with respect to \(\alpha\) and \(\beta\) are defined as follows:

\({\underline{A}}_{(\alpha ,\beta )}(\widetilde{X})=\{x\in U \mid P(\widetilde{X}|[x]_A)\ge \alpha \},\quad {\overline{A}}_{(\alpha ,\beta )}(\widetilde{X})=\{x\in U \mid P(\widetilde{X}|[x]_A)>\beta \}.\)
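For a fuzzy concept, the only change from the crisp DTRS computation is the conditional probability, which becomes the average membership over the equivalence class. The sketch below assumes \(P(\widetilde{X}|[x]_A)=\sum_{y\in[x]_A}\widetilde{X}(y)/|[x]_A|\), a lower approximation given by \(P\ge\alpha\) and an upper approximation given by \(P>\beta\); the memberships and thresholds are made up.

```python
from fractions import Fraction as F

def cond_prob(block, fuzzy_X):
    """P(X~ | [x]): average membership of the objects in the class."""
    return sum(fuzzy_X[y] for y in block) / len(block)

def prob_rough_fuzzy(blocks, fuzzy_X, alpha, beta):
    """Probabilistic lower (P >= alpha) and upper (P > beta) approximations."""
    lower, upper = set(), set()
    for block in blocks:
        p = cond_prob(block, fuzzy_X)
        if p >= alpha:
            lower |= block
        if p > beta:
            upper |= block
    return lower, upper

blocks = [{"x1", "x2"}, {"x3", "x4"}, {"x5"}]
fuzzy_X = {"x1": F("0.9"), "x2": F("0.7"), "x3": F("0.6"), "x4": F("0.2"), "x5": F("0.1")}
lower, upper = prob_rough_fuzzy(blocks, fuzzy_X, alpha=F("0.7"), beta=F("0.3"))
print(sorted(lower), sorted(upper))
# ['x1', 'x2'] ['x1', 'x2', 'x3', 'x4']
```

Exact fractions keep threshold comparisons such as \(0.8\ge 0.7\) free of rounding effects.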

3 Double-quantitative multigranulation decision-theoretic rough fuzzy set model

The DTRS model, initially proposed by Yao [56] on the basis of the Bayesian decision principle, captures relative quantitative information. The decision-theoretic approximations are computed with \(0 \le \beta <\alpha \le 1\), where the parameters \(\alpha\) and \(\beta\) are derived from the losses of the Bayesian decision procedure and are related to relative quantitative information. The GRS, in contrast, is related to absolute quantitative information. Therefore, combining DTRS and GRS quantifies the approximation space both relatively and absolutely and thus provides both relative and absolute fault tolerance. In practical management decision-making, it is often necessary to approximate a fuzzy concept in a probabilistic approximation space, that is, to study probabilistic rough sets in a fuzzy environment. Therefore, this paper establishes double-quantitative multigranulation decision-theoretic rough fuzzy set (DqMDTRFS) models by applying the idea of multigranulation in a fuzzy setting.

3.1 Decision-theoretic rough fuzzy set of Type-1 optimistic double-quantitative multigranulation (Type-1 O-DqMDTRFS)

Based on the previous introduction, we combine the DTRS and GRS models in a multigranulation framework to define an optimistic double-quantitative multigranulation decision-theoretic rough fuzzy set model. The model approximates a fuzzy concept while comprehensively describing relative and absolute quantitative information.

Definition 3.1

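Let \(IS=(U,AT,V,f)\) be an information system, and let \(A_1, A_2,\ldots , A_m\subseteq AT\) \((m\le 2^{|AT|})\) be granular structures. For any \(\widetilde{X}\in \mathcal {F}(U)\), \(\beta \le \alpha \in [0, 1]\) and \(k \in \mathbf {N}\), the lower and upper approximations of the first type of optimistic double-quantitative multigranulation decision-theoretic rough fuzzy set, denoted by \((I^{O})\underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{k}^{O}(\widetilde{X})\) and \((I^{O})\overline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{O}(\widetilde{X})\), respectively, are defined as follows:

\((I^{O})\underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{k}^{O}(\widetilde{X})=\{x\in U \mid \sum _{y\in [x]_{A_1}}(1-\widetilde{X}(y))\le k\vee \cdots \vee \sum _{y\in [x]_{A_m}}(1-\widetilde{X}(y))\le k\},\)

\((I^{O})\overline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{O}(\widetilde{X})=\{x\in U \mid P(\widetilde{X}|[x]_{A_1})>\beta \wedge \cdots \wedge P(\widetilde{X}|[x]_{A_m})>\beta \}.\)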

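Based on this pair of approximation operators, the positive region, negative region, upper boundary region and lower boundary region are obtained as follows:

\((I^{O})Pos(\widetilde{X})=(I^{O})\underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{k}^{O}(\widetilde{X})\cap (I^{O})\overline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{O}(\widetilde{X}),\)

\((I^{O})Neg(\widetilde{X})=U-\left((I^{O})\underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{k}^{O}(\widetilde{X})\cup (I^{O})\overline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{O}(\widetilde{X})\right),\)

\((I^{O})Ubn(\widetilde{X})=(I^{O})\overline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{O}(\widetilde{X})-(I^{O})\underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{k}^{O}(\widetilde{X}),\)

\((I^{O})Lbn(\widetilde{X})=(I^{O})\underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{k}^{O}(\widetilde{X})-(I^{O})\overline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{O}(\widetilde{X}),\)

\((I^{O})Bn(\widetilde{X})=(I^{O})\overline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{O}(\widetilde{X})\vartriangle (I^{O})\underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{k}^{O}(\widetilde{X}).\)

Here “\(\vartriangle\)” denotes the symmetric difference of the upper and lower approximations.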
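As an illustration, the Python sketch below evaluates the Type-1 O-DqMDTRFS regions on toy data. It assumes that an object is in the lower approximation when some granulation \(A_i\) gives \(\sum_{y\in[x]_{A_i}}(1-\widetilde{X}(y))\le k\), and in the upper approximation when every granulation gives \(P(\widetilde{X}|[x]_{A_i})>\beta\). The partitions `A1` and `A2`, the memberships, k and \(\beta\) are hypothetical.

```python
from fractions import Fraction

def block_of(blocks, x):
    """The equivalence class of x in one granulation."""
    return next(b for b in blocks if x in b)

def type1_optimistic_regions(partitions, fuzzy_X, k, beta):
    universe = set(fuzzy_X)

    def graded_ok(block):   # absolute part: fuzzy count outside X~ is at most k
        return sum(1 - fuzzy_X[y] for y in block) <= k

    def prob_ok(block):     # relative part: P(X~ | [x]) > beta
        return sum(fuzzy_X[y] for y in block) / len(block) > beta

    # Optimistic: the lower test needs one granulation, the upper test needs all of them.
    lower = {x for x in universe if any(graded_ok(block_of(p, x)) for p in partitions)}
    upper = {x for x in universe if all(prob_ok(block_of(p, x)) for p in partitions)}
    return {
        "Pos": lower & upper,
        "Neg": universe - (lower | upper),
        "Ubn": upper - lower,
        "Lbn": lower - upper,
    }

memberships = {1: "1", 2: "1", 3: "3/4", 4: "1/2", 5: "1/2", 6: "0", 7: "0", 8: "1/4"}
fuzzy_X = {x: Fraction(v) for x, v in memberships.items()}
A1 = [{1, 2}, {3, 4, 5}, {6}, {7, 8}]
A2 = [{1, 2, 3}, {4, 5, 6}, {7, 8}]
regions = type1_optimistic_regions([A1, A2], fuzzy_X, k=1, beta=Fraction(1, 4))
print({name: sorted(objs) for name, objs in regions.items()})
# {'Pos': [1, 2, 3], 'Neg': [7, 8], 'Ubn': [4, 5], 'Lbn': [6]}
```

Object 6 is a singleton under `A1` whose missing membership (1) does not exceed k, so it passes the absolute test, yet its conditional probability there is 0, so it fails the relative test and lands in the lower boundary region.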

Based on Definition 3.1, we obtain the following properties of Type-1 O-DqMDTRFS.

Proposition 3.1

Let \(IS=(U,AT,V,f)\) be an information system, and let \(A_1, A_2,\ldots , A_m\subseteq AT\) \((m\le 2^{|AT|})\) be granular structures. For any \(\widetilde{X}\in \mathcal {F}(U)\), \(\beta \le \alpha \in [0, 1]\) and \(k \in \mathbf {N}\), the following properties hold.

-

1.

\((I^O)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{O}(\widetilde{X})\supseteq {{\underline{{{A}_{i}}}}_{k}}(\widetilde{X})\);

-

2.

\((I^O)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{O}(\widetilde{X})\subseteq {{\overline{{{A}_{i}}}}_{(\alpha ,\beta )}}(\widetilde{X})\);

-

3.

\((I^O)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{O}(\widetilde{X})=\underset{i=1}{\overset{m}{\mathop \cup }}\,{{\underline{{{A}_{i}}}}_{k}}(\widetilde{X})\);

-

4.

\((I^O)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{O}(\widetilde{X})=\underset{i=1}{\overset{m}{\mathop \cap }}\,{{\overline{{{A}_{i}}}}_{(\alpha ,\beta )}}(\widetilde{X})\);

-

5.

\((I^O)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{O}(\widetilde{X})\subseteq (I^O)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{O}(\widetilde{Y})\), when \(\widetilde{X}\subseteq \widetilde{Y}\in \mathcal {F}(U)\);

-

6.

\((I^O)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{O}(\widetilde{X})\subseteq (I^O)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{O}(\widetilde{Y})\), when \(\widetilde{X}\subseteq \widetilde{Y}\in \mathcal {F}(U)\);

-

7.

\((I^O)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{{{k}_{1}}}^{O}(\widetilde{X})\subseteq (I^O)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{{{k}_{2}}}^{O}(\widetilde{X})\), when \(k_1\le k_2\in \mathbf {N}\);

-

8.

\((I^O)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,{{\beta }_{1}})}^{O}(\widetilde{X})\supseteq (I^O)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,{{\beta }_{2}})}^{O}(\widetilde{X})\), when \(\beta _1\le \beta _2\in [0, 1]\);

-

9.

\((I^O)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{O}(\widetilde{X}\cup \widetilde{Y})\supseteq (I^O)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{O}(\widetilde{X})\cup (I^O)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{O}(\widetilde{Y})\), \(\forall \widetilde{X}, \widetilde{Y}\in \mathcal {F}(U)\);

-

10.

\((I^O)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{O}(\widetilde{X}\cup \widetilde{Y})\supseteq (I^O)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{O}(\widetilde{X})\cup (I^O)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{O}(\widetilde{Y})\), \(\forall \widetilde{X}, \widetilde{Y}\in \mathcal {F}(U)\);

-

11.

\((I^O)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{O}(\widetilde{X}\cap \widetilde{Y})\subseteq (I^O)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{O}(\widetilde{X})\cap (I^O)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{O}(\widetilde{Y})\), \(\forall \widetilde{X}, \widetilde{Y}\in \mathcal {F}(U)\);

-

12.

\((I^O)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{O}(\widetilde{X}\cap \widetilde{Y})\subseteq (I^O)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{O}(\widetilde{X})\cap (I^O)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{O}(\widetilde{Y})\), \(\forall \widetilde{X}, \widetilde{Y}\in \mathcal {F}(U)\).

Proof

It can be easily verified by the Definition 3.1 and fuzzy set theory. \(\square\)
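As a quick numerical sanity check (not a proof), the sketch below tests items 3, 4, 7 and 8 of Proposition 3.1 on random partitions and random memberships in \(\{0, 1/4, \ldots, 1\}\). It assumes the Type-1 lower approximation requires some granulation with \(\sum_{y\in[x]_{A_i}}(1-\widetilde{X}(y))\le k\) and the upper approximation requires all granulations with \(P(\widetilde{X}|[x]_{A_i})>\beta\); the helper names, universe size and thresholds are arbitrary choices.

```python
import random
from fractions import Fraction

def block_of(blocks, x):
    return next(b for b in blocks if x in b)

def lower_k(partitions, X, k):
    """Optimistic graded lower approximation (some granulation suffices)."""
    return {x for x in X
            if any(sum(1 - X[y] for y in block_of(p, x)) <= k for p in partitions)}

def upper_beta(partitions, X, beta):
    """Optimistic probabilistic upper approximation (every granulation is required)."""
    return {x for x in X
            if all(sum(X[y] for y in block_of(p, x)) / len(block_of(p, x)) > beta
                   for p in partitions)}

def random_partition(universe, rng, labels=3):
    groups = {}
    for x in universe:
        groups.setdefault(rng.randrange(labels), set()).add(x)
    return list(groups.values())

def check_properties(trials=200, seed=0):
    rng = random.Random(seed)
    universe = range(8)
    for _ in range(trials):
        parts = [random_partition(universe, rng) for _ in range(3)]
        X = {x: Fraction(rng.randint(0, 4), 4) for x in universe}
        # Item 3: lower approximation = union of single-granulation lower approximations.
        assert lower_k(parts, X, 1) == set().union(*(lower_k([p], X, 1) for p in parts))
        # Item 4: upper approximation = intersection of single-granulation upper ones.
        assert upper_beta(parts, X, Fraction(1, 2)) == set.intersection(
            *(upper_beta([p], X, Fraction(1, 2)) for p in parts))
        # Item 7: monotone in k.  Item 8: antitone in beta.
        assert lower_k(parts, X, 0) <= lower_k(parts, X, 1) <= lower_k(parts, X, 2)
        assert upper_beta(parts, X, Fraction(1, 4)) >= upper_beta(parts, X, Fraction(1, 2))
    return trials

print(check_properties(), "random trials passed")
```

A passing run is only evidence that the operators as assumed here behave as the proposition states; it does not replace the verification from the definition.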

The Type-1 O-DqMDTRFS is a generalization of the optimistic multigranulation rough set model. According to Definition 3.1, we have:

-

1.

If \(\widetilde{X}\) is a crisp set of the universe, then \((I^O)\underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{k}^{O}(\widetilde{X})=\underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}^{O}({X})\) when \(k=0\);

-

2.

If \(\widetilde{X}\) is a crisp set of the universe, then \((I^O)\overline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{O}(\widetilde{X})=\overline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}^{O}({X})\) when \(\beta =0\).

Based on the descriptions of the regions and Proposition 3.1, the decision rules of Type-1 O-DqMDTRFS can be obtained as follows:

-

(\((I^O)P\)) If \(\exists A_i\) such that \(\sum \nolimits _{y\in {{[x]}_{A_i}}}{(1-\widetilde{X}(y))\le k}\) and \(P(\widetilde{X}|{{[x]}_{A_j}})>\beta\) for any \(A_j\), decide \((I^O)Pos(\widetilde{X})\).

-

(\((I^O)N\)) If \(\sum \nolimits _{y\in {{[x]}_{A_i}}}{(1-\widetilde{X}(y))> k}\) for any \(A_i\) and \(\exists A_j\) such that \(P(\widetilde{X}|{{[x]}_{A_j}})\le \beta\), decide \((I^O)Neg(\widetilde{X})\).

-

(\((I^O)UB\)) If \(\sum \nolimits _{y\in {{[x]}_{A_i}}}{(1-\widetilde{X}(y))> k}\) for any \(A_i\) and \(P(\widetilde{X}|{{[x]}_{A_j}})>\beta\) for any \(A_j\), decide \((I^O)Ubn(\widetilde{X})\).

-

(\((I^O)LB\)) If \(\exists A_i\) such that \(\sum \nolimits _{y\in {{[x]}_{A_i}}}{(1-\widetilde{X}(y))\le k}\) and \(\exists A_j\) such that \(P(\widetilde{X}|{{[x]}_{A_j}})\le \beta\), decide \((I^O)Lbn(\widetilde{X})\).
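The four rules can be written as Boolean predicates over the per-granulation tests, which makes it easy to confirm that they are mutually exclusive and jointly exhaustive. In the sketch below, `g[i]` is a hypothetical flag for \(\sum_{y\in[x]_{A_i}}(1-\widetilde{X}(y))\le k\) and `p[j]` a flag for \(P(\widetilde{X}|[x]_{A_j})>\beta\); the enumeration covers all flag patterns for m = 3 granulations.

```python
from itertools import product

# Each premise transcribed literally: "exists A_i" -> any(g); "for any A_i, not ..." -> not any(g).
RULES = {
    "Pos": lambda g, p: any(g) and all(p),
    "Neg": lambda g, p: not any(g) and not all(p),
    "Ubn": lambda g, p: not any(g) and all(p),
    "Lbn": lambda g, p: any(g) and not all(p),
}

def fired_rules(g, p):
    """Names of the Type-1 rules whose premises hold for one object."""
    return [name for name, premise in RULES.items() if premise(g, p)]

m = 3
counts = {name: 0 for name in RULES}
for g in product([False, True], repeat=m):
    for p in product([False, True], repeat=m):
        fired = fired_rules(g, p)
        assert len(fired) == 1  # exactly one rule applies to every pattern
        counts[fired[0]] += 1
print(counts)  # {'Pos': 7, 'Neg': 7, 'Ubn': 1, 'Lbn': 49}
```

The skewed counts only reflect the optimistic strategy (one granulation suffices for the absolute test, all are needed for the relative test); they count flag patterns, not objects.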

With these rules, each object can be assigned to the positive, upper boundary, lower boundary or negative region. For the Type-1 O-DqMDTRFS model, we have the following decisions:

Corresponding to the first type of optimistic double-quantitative multigranulation decision-theoretic rough fuzzy set model, we define another model as follows.

3.2 Decision-theoretic rough fuzzy set of Type-2 optimistic double-quantitative multigranulation (Type-2 O-DqMDTRFS)

Based on the Type-1 O-DqMDTRFS model, we give the following definition of the second type of optimistic double-quantitative multigranulation decision-theoretic rough fuzzy set.

Definition 3.2

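Let \(IS=(U,AT,V,f)\) be an information system, and let \(A_1, A_2,\ldots , A_m\subseteq AT\) \((m\le 2^{|AT|})\) be granular structures. For any \(\widetilde{X}\in \mathcal {F}(U)\), \(\beta \le \alpha \in [0, 1]\) and \(k \in \mathbf {N}\), the lower and upper approximations of the second type of optimistic double-quantitative multigranulation decision-theoretic rough fuzzy set, denoted by \((II^{O})\underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{O}(\widetilde{X})\) and \((II^{O})\overline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{k}^{O}(\widetilde{X})\), respectively, are defined as follows:

\((II^{O})\underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{O}(\widetilde{X})=\{x\in U \mid P(\widetilde{X}|[x]_{A_1})\ge \alpha \vee \cdots \vee P(\widetilde{X}|[x]_{A_m})\ge \alpha \},\)

\((II^{O})\overline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{k}^{O}(\widetilde{X})=\{x\in U \mid \sum _{y\in [x]_{A_1}}\widetilde{X}(y)>k\wedge \cdots \wedge \sum _{y\in [x]_{A_m}}\widetilde{X}(y)>k\}.\)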

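Similarly, from these lower and upper approximations we define the positive region, negative region, upper boundary region and lower boundary region as follows:

\((II^{O})Pos(\widetilde{X})=(II^{O})\underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{O}(\widetilde{X})\cap (II^{O})\overline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{k}^{O}(\widetilde{X}),\)

\((II^{O})Neg(\widetilde{X})=U-\left((II^{O})\underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{O}(\widetilde{X})\cup (II^{O})\overline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{k}^{O}(\widetilde{X})\right),\)

\((II^{O})Ubn(\widetilde{X})=(II^{O})\overline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{k}^{O}(\widetilde{X})-(II^{O})\underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{O}(\widetilde{X}),\)

\((II^{O})Lbn(\widetilde{X})=(II^{O})\underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{O}(\widetilde{X})-(II^{O})\overline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{k}^{O}(\widetilde{X}).\)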
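The Type-2 model swaps the roles of the two kinds of information. The Python sketch below assumes that the lower approximation requires some granulation with \(P(\widetilde{X}|[x]_{A_i})\ge\alpha\) and the upper approximation requires every granulation with \(\sum_{y\in[x]_{A_i}}\widetilde{X}(y)>k\); the partitions, memberships, k and \(\alpha\) are hypothetical. Object 1 lands in the lower boundary region because its singleton class under `A1` is fully inside \(\widetilde{X}\) but has a sigma-count of only 1, which does not exceed k = 1.

```python
from fractions import Fraction

def block_of(blocks, x):
    """The equivalence class of x in one granulation."""
    return next(b for b in blocks if x in b)

def type2_optimistic_regions(partitions, fuzzy_X, k, alpha):
    universe = set(fuzzy_X)

    def prob_ok(block):     # relative part: P(X~ | [x]) >= alpha
        return sum(fuzzy_X[y] for y in block) / len(block) >= alpha

    def graded_ok(block):   # absolute part: fuzzy count inside X~ exceeds k
        return sum(fuzzy_X[y] for y in block) > k

    # Optimistic: the lower test needs one granulation, the upper test needs all of them.
    lower = {x for x in universe if any(prob_ok(block_of(p, x)) for p in partitions)}
    upper = {x for x in universe if all(graded_ok(block_of(p, x)) for p in partitions)}
    return {
        "Pos": lower & upper,
        "Neg": universe - (lower | upper),
        "Ubn": upper - lower,
        "Lbn": lower - upper,
    }

memberships = {1: "1", 2: "3/4", 3: "3/4", 4: "1/2", 5: "1/2", 6: "1/2", 7: "0"}
fuzzy_X = {x: Fraction(v) for x, v in memberships.items()}
A1 = [{1}, {2, 3}, {4, 5, 6}, {7}]
A2 = [{1, 2, 3}, {4, 5, 6, 7}]
regions = type2_optimistic_regions([A1, A2], fuzzy_X, k=1, alpha=Fraction(3, 4))
print({name: sorted(objs) for name, objs in regions.items()})
# {'Pos': [2, 3], 'Neg': [7], 'Ubn': [4, 5, 6], 'Lbn': [1]}
```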

Analogously, we obtain the following properties of Type-2 O-DqMDTRFS.

Proposition 3.2

Let \(IS=(U,AT,V,f)\) be an information system, and let \(A_1, A_2,\ldots , A_m\subseteq AT\) \((m\le 2^{|AT|})\) be granular structures. For any \(\widetilde{X}\in \mathcal {F}(U)\), \(\beta \le \alpha \in [0, 1]\) and \(k \in \mathbf {N}\), the following properties hold.

-

1.

\((II^{O})\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{O}(\widetilde{X})\supseteq {{\underline{{{A}_{i}}}}_{(\alpha ,\beta )}}(\widetilde{X})\);

-

2.

\((II^{O})\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{O}(\widetilde{X})\subseteq {{\overline{{{A}_{i}}}}_{k}}(\widetilde{X})\);

-

3.

\((II^{O})\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{O}(\widetilde{X})=\underset{i=1}{\overset{m}{\mathop \cup }}\,{{\underline{{{A}_{i}}}}_{(\alpha ,\beta )}}(\widetilde{X})\);

-

4.

\((II^{O})\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{O}(\widetilde{X})=\underset{i=1}{\overset{m}{\mathop \cap }}\,{{\overline{{{A}_{i}}}}_{k}}(\widetilde{X})\);

-

5.

\((II^{O})\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{O}(\widetilde{X})\subseteq (II^{O})\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{O}(\widetilde{Y})\), when \(\widetilde{X}\subseteq \widetilde{Y}\in \mathcal {F}(U)\);

-

6.

\((II^{O})\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{O}(\widetilde{X})\subseteq (II^{O})\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{O}(\widetilde{Y})\), when \(\widetilde{X}\subseteq \widetilde{Y}\in \mathcal {F}(U)\);

-

7.

\((II^{O})\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k_1}^{O}(\widetilde{X})\supseteq (II^{O})\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k_2}^{O}(\widetilde{X})\), when \(k_1\le k_2\in \mathbf {N}\);

-

8.

\((II^{O})\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha _1,\beta )}^{O}(\widetilde{X})\supseteq (II^{O})\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha _2,\beta )}^{O}(\widetilde{X})\), when \(\alpha _1\le \alpha _2\in [0, 1]\);

-

9.

\((II^{O})\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{O}(\widetilde{X}\cup \widetilde{Y})\supseteq (II^{O})\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{O}(\widetilde{X})\cup (II^{O})\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{O}(\widetilde{Y})\), \(\forall \widetilde{X}, \widetilde{Y}\in \mathcal {F}(U)\);

-

10.

\((II^{O})\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{O}(\widetilde{X}\cup \widetilde{Y})\supseteq (II^{O})\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{O}(\widetilde{X})\cup (II^{O})\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{O}(\widetilde{Y})\), \(\forall \widetilde{X}, \widetilde{Y}\in \mathcal {F}(U)\);

-

11.

\((II^{O})\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{O}(\widetilde{X}\cap \widetilde{Y})\subseteq (II^{O})\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{O}(\widetilde{X})\cap (II^{O})\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{O}(\widetilde{Y})\), \(\forall \widetilde{X}, \widetilde{Y}\in \mathcal {F}(U)\);

-

12.

\((II^{O})\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{O}(\widetilde{X}\cap \widetilde{Y})\subseteq (II^{O})\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{O}(\widetilde{X})\cap (II^{O})\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{O}(\widetilde{Y})\), \(\forall \widetilde{X}, \widetilde{Y}\in \mathcal {F}(U)\).

Proof

It can be easily verified from Definition 3.2. \(\square\)

The Type-2 O-DqMDTRFS is also a generalization of the optimistic multigranulation rough set model. According to Definition 3.2, we have the following.

-

1.

If \(\widetilde{X}\) is a crisp set of the universe, then \((II^{O})\underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{O}(\widetilde{X})=\underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}^{O}({X})\) if and only if \(\alpha =1\);

-

2.

If \(\widetilde{X}\) is a crisp set of the universe, then \((II^{O})\overline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{k}^{O}(\widetilde{X})=\overline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}^{O}({X})\) if and only if \(k=0\).

Based on the previous discussion, the decision rules of Type-2 O-DqMDTRFS are listed as follows:

-

(\((II^{O})P\)) If \(\exists A_i\) such that \(P(\widetilde{X}|{{[x]}_{{{A}_{i}}}})\ge \alpha\) and \(\sum \nolimits _{y\in {{[x]}_{{{A}_{j}}}}}{\widetilde{X}(y)}>k\) for any \(A_j\), decide \((II^{O})Pos(\widetilde{X})\).

-

(\((II^{O})N\)) If \(P(\widetilde{X}|{{[x]}_{{{A}_{i}}}})< \alpha\) for any \(A_i\) and \(\exists A_j\) such that \(\sum \nolimits _{y\in {{[x]}_{{{A}_{j}}}}}{\widetilde{X}(y)}\le k\), decide \((II^{O})Neg(\widetilde{X})\).

-

(\((II^{O})UB\)) If \(P(\widetilde{X}|{{[x]}_{{{A}_{i}}}})< \alpha\) for any \(A_i\) and \(\sum \nolimits _{y\in {{[x]}_{{{A}_{j}}}}}{\widetilde{X}(y)}>k\) for any \(A_j\), decide \((II^{O})Ubn(\widetilde{X})\).

-

(\((II^{O})LB\)) If \(\exists A_i\) such that \(P(\widetilde{X}|{{[x]}_{{{A}_{i}}}})\ge \alpha\) and \(\exists A_j\) such that \(\sum \nolimits _{y\in {{[x]}_{{{A}_{j}}}}}{\widetilde{X}(y)}\le k\), decide \((II^{O})Lbn(\widetilde{X})\).
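The four rules above amount to a per-object classifier. The Python sketch below is only an illustration under stated assumptions, not the authors' implementation: each granular structure \(A_i\) is encoded as a list of class labels over \(U\) (objects with equal labels are indiscernible), the fuzzy concept \(\widetilde{X}\) is a list of membership degrees, and \(P(\widetilde{X}|[x]_{A_i})\) is assumed to be \(\sum _{y\in [x]_{A_i}}\widetilde{X}(y)/|[x]_{A_i}|\). The function names and the toy data are hypothetical.

```python
from typing import List, Sequence

def eq_class(labels: Sequence[int], x: int) -> List[int]:
    """[x]_A: indices of all objects that share x's label under granulation A."""
    return [y for y, lab in enumerate(labels) if lab == labels[x]]

def cond_prob(X: Sequence[float], cls: List[int]) -> float:
    """Assumed fuzzy conditional probability: mean membership over [x]."""
    return sum(X[y] for y in cls) / len(cls)

def type2_optimistic_region(grans, X, x, alpha, k) -> str:
    """Region of x under the (II^O) rules: lower uses 'exists', upper uses 'for all'."""
    classes = [eq_class(labels, x) for labels in grans]
    in_lower = any(cond_prob(X, c) >= alpha for c in classes)
    in_upper = all(sum(X[y] for y in c) > k for c in classes)
    if in_lower and in_upper:
        return "Pos"
    if in_upper:   # in the upper but not the lower approximation
        return "Ubn"
    if in_lower:   # in the lower but not the upper approximation
        return "Lbn"
    return "Neg"

# Hypothetical toy data: 4 objects, 2 granular structures.
X = [1.0, 0.75, 0.25, 0.0]
grans = [[0, 0, 1, 1], [0, 1, 1, 2]]
print([type2_optimistic_region(grans, X, x, alpha=0.75, k=0) for x in range(4)])
# ['Pos', 'Pos', 'Ubn', 'Neg']
print([type2_optimistic_region(grans, X, x, alpha=0.75, k=1) for x in range(4)])
# ['Lbn', 'Lbn', 'Neg', 'Neg']
```

Raising \(k\) from 0 to 1 shrinks the graded upper approximation, which moves the first two objects from the positive region to the lower boundary region in this toy example.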

With these decision rules, each object is assigned to the positive region, the upper boundary region, the lower boundary region or the negative region. For the Type-2 O-DqMDTRFS model, we have the following decisions:

3.3 Decision-theoretic rough fuzzy set of Type-1 pessimistic double-quantitative multigranulation (Type-1 P-DqMDTRFS)

Similar to the double-quantitative optimistic multigranulation decision-theoretic rough fuzzy set, we now establish pessimistic double-quantitative multigranulation decision-theoretic rough fuzzy set models and discuss their decision rules.

Definition 3.3

Let \(IS=(U,AT,V,f)\) be an information system and let \(A_1, A_2,\ldots , A_m\in AT(m\le 2^{|AT|})\) be granular structures. For any \(\widetilde{X}\in \mathcal {F}(U), \beta \le \alpha \in [0, 1]\) and \(k \in \mathbf {N}\), the lower and upper approximations of the first kind of pessimistic double-quantitative multigranulation decision-theoretic rough fuzzy set are denoted by \((I^P)\underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{k}^{P}(\widetilde{X})\) and \((I^P)\overline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{P}(\widetilde{X})\), respectively. They are defined as follows:

Similarly, the positive region, negative region, upper boundary region and lower boundary region can be obtained as follows:

According to Definition 3.3 and the description of these rough regions, we obtain the following properties of Type-1 P-DqMDTRFS.

Proposition 3.3

Let \(IS=(U,AT,V,f)\) be an information system and let \(A_1, A_2,\ldots , A_m\in AT(m\le 2^{|AT|})\) be granular structures. For any \(\widetilde{X}\in \mathcal {F}(U), \beta \le \alpha \in [0, 1]\) and \(k \in \mathbf {N}\), the following properties hold.

-

1.

\((I^P)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{P}(\widetilde{X})\subseteq {{\underline{{{A}_{i}}}}_{k}}(\widetilde{X})\);

-

2.

\((I^P)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{P}(\widetilde{X})\supseteq {{\overline{{{A}_{i}}}}_{(\alpha ,\beta )}}(\widetilde{X})\);

-

3.

\((I^P)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{P}(\widetilde{X})=\underset{i=1}{\overset{m}{\mathop \cap }}\,{{\underline{{{A}_{i}}}}_{k}}(\widetilde{X})\);

-

4.

\((I^P)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{P}(\widetilde{X})=\underset{i=1}{\overset{m}{\mathop \cup }}\,{{\overline{{{A}_{i}}}}_{(\alpha ,\beta )}}(\widetilde{X})\);

-

5.

\((I^P)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{P}(\widetilde{X})\subseteq (I^P)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{P}(\widetilde{Y})\), when \(\widetilde{X}\subseteq \widetilde{Y}\in \mathcal {F}(U)\);

-

6.

\((I^P)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{P}(\widetilde{X})\subseteq (I^P)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{P}(\widetilde{Y})\), when \(\widetilde{X}\subseteq \widetilde{Y}\in \mathcal {F}(U)\);

-

7.

\((I^P)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{{{k}_{1}}}^{P}(\widetilde{X})\subseteq (I^P)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{{{k}_{2}}}^{P}(\widetilde{X})\), when \(k_1\le k_2\in \mathbf {N}\);

-

8.

\((I^P)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,{{\beta }_{1}})}^{P}(\widetilde{X})\supseteq (I^P)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,{{\beta }_{2}})}^{P}(\widetilde{X})\), when \(\beta _1\le \beta _2\in [0, 1]\);

-

9.

\((I^P)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{P}(\widetilde{X}\cup \widetilde{Y})\supseteq (I^P)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{P}(\widetilde{X})\cup (I^P)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{P}(\widetilde{Y})\), \(\forall \widetilde{X}, \widetilde{Y}\in \mathcal {F}(U)\);

-

10.

\((I^P)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{P}(\widetilde{X}\cup \widetilde{Y})\supseteq (I^P)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{P}(\widetilde{X})\cup (I^P)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{P}(\widetilde{Y})\), \(\forall \widetilde{X}, \widetilde{Y}\in \mathcal {F}(U)\);

-

11.

\((I^P)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{P}(\widetilde{X}\cap \widetilde{Y})\subseteq (I^P)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{P}(\widetilde{X})\cap (I^P)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{P}(\widetilde{Y})\), \(\forall \widetilde{X}, \widetilde{Y}\in \mathcal {F}(U)\);

-

12.

\((I^P)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{P}(\widetilde{X}\cap \widetilde{Y})\subseteq (I^P)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{P}(\widetilde{X})\cap (I^P)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{P}(\widetilde{Y})\), \(\forall \widetilde{X}, \widetilde{Y}\in \mathcal {F}(U)\).

Proof

These propositions can be proved directly based on Definition 3.3. \(\square\)

The Type-1 P-DqMDTRFS is a generalization of the pessimistic multigranulation rough set model. According to Definition 3.3, we have the following.

-

1.

If \(\widetilde{X}\) is a crisp set of the universe, then \((I^P)\underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{k}^{P}(\widetilde{X})=\underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}^{P}({X})\) if and only if \(k=0\);

-

2.

If \(\widetilde{X}\) is a crisp set of the universe, then \((I^P)\overline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{P}(\widetilde{X})=\overline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}^{P}({X})\) if and only if \(\beta =0\).
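The reduction in items 1 and 2 for \(k=0\) and \(\beta =0\) can be checked exhaustively on a small universe. The sketch below is a sanity check on one hypothetical example, not a proof, and it only exercises the "if" direction. It assumes that each granular structure is a list of class labels over \(U\), that crisp sets are 0/1 membership vectors, that the Type-1 P lower approximation requires \(\sum _{y\in [x]_{A_i}}(1-\widetilde{X}(y))\le k\) for every \(A_i\) and the upper approximation requires \(P(\widetilde{X}|[x]_{A_i})>\beta\) for some \(A_i\) (matching Proposition 3.3(3)-(4)), with \(P\) taken as the mean membership over \([x]_{A_i}\). All function names are hypothetical.

```python
from itertools import product

def eq_class(labels, x):
    """[x]_A for a granulation given as a list of class labels."""
    return [y for y, lab in enumerate(labels) if lab == labels[x]]

def type1_pessimistic(grans, X, k, beta):
    """(I^P) lower_k and upper_(alpha,beta); alpha enters neither set."""
    n = len(X)
    lower = {x for x in range(n)
             if all(sum(1 - X[y] for y in eq_class(g, x)) <= k for g in grans)}
    upper = {x for x in range(n)
             if any(sum(X[y] for y in eq_class(g, x)) / len(eq_class(g, x)) > beta
                    for g in grans)}
    return lower, upper

def pessimistic_mgrs(grans, S):
    """Classical pessimistic multigranulation approximations of a crisp set S."""
    n = len(grans[0])
    lower = {x for x in range(n) if all(set(eq_class(g, x)) <= S for g in grans)}
    upper = {x for x in range(n) if any(set(eq_class(g, x)) & S for g in grans)}
    return lower, upper

def reduces_for_all_crisp_sets(grans, k=0, beta=0.0):
    """True if (I^P) coincides with pessimistic MGRS for every crisp subset of U."""
    n = len(grans[0])
    for bits in product([0, 1], repeat=n):
        S = {x for x in range(n) if bits[x]}
        if type1_pessimistic(grans, list(bits), k, beta) != pessimistic_mgrs(grans, S):
            return False
    return True

grans = [[0, 0, 1, 1], [0, 1, 1, 2]]   # hypothetical granular structures
print(reduces_for_all_crisp_sets(grans))        # True  (k = 0, beta = 0)
print(reduces_for_all_crisp_sets(grans, k=1))   # False (grade 1 tolerates one miss)
```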

Based on the rough regions and Proposition 3.3, we obtain the following decision rules of Type-1 P-DqMDTRFS:

-

(\((I^P)P\)) If \(\sum \nolimits _{y\in {{[x]}_{A_i}}}{(1-\widetilde{X}(y))\le k}\) for any \(A_i\) and \(\exists A_j\) such that \(P(\widetilde{X}|{{[x]}_{A_j}})>\beta\), decide \((I^P)Pos(\widetilde{X})\).

-

(\((I^P)N\)) If \(\exists A_i\) such that \(\sum \nolimits _{y\in {{[x]}_{A_i}}}{(1-\widetilde{X}(y))> k}\) and \(P(\widetilde{X}|{{[x]}_{A_j}})\le \beta\) for any \(A_j\), decide \((I^P)Neg(\widetilde{X})\).

-

(\((I^P)UB\)) If \(\exists A_i\) such that \(\sum \nolimits _{y\in {{[x]}_{A_i}}}{(1-\widetilde{X}(y))> k}\) and \(\exists A_j\) such that \(P(\widetilde{X}|{{[x]}_{A_j}})>\beta\), decide \((I^P)Ubn(\widetilde{X})\).

-

(\((I^P)LB\)) If \(\sum \nolimits _{y\in {{[x]}_{A_i}}}{(1-\widetilde{X}(y))}\le k\) for any \(A_i\) and \(P(\widetilde{X}|{{[x]}_{A_j}})\le \beta\) for any \(A_j\), decide \((I^P)Lbn(\widetilde{X})\).
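Since each region is an intersection or difference of the two approximations (positive = lower \(\cap\) upper, negative = \(U\) minus both, upper boundary = upper minus lower, lower boundary = lower minus upper), the Type-1 P regions can also be computed set-wise. The sketch below assumes the lower approximation requires \(\sum _{y\in [x]_{A_i}}(1-\widetilde{X}(y))\le k\) for every \(A_i\), the upper approximation requires \(P(\widetilde{X}|[x]_{A_i})>\beta\) for some \(A_i\) (as in Proposition 3.3(3)-(4)), \(P\) is the mean membership over the class, and granular structures are label lists; the data and function name are hypothetical.

```python
def eq_class(labels, x):
    """[x]_A for a granulation given as a list of class labels."""
    return [y for y, lab in enumerate(labels) if lab == labels[x]]

def type1_pessimistic_regions(grans, X, beta, k):
    """Pos/Neg/Ubn/Lbn of (I^P), obtained by set algebra on the two approximations."""
    U = set(range(len(X)))
    lower = {x for x in U
             if all(sum(1 - X[y] for y in eq_class(g, x)) <= k for g in grans)}
    upper = {x for x in U
             if any(sum(X[y] for y in eq_class(g, x)) / len(eq_class(g, x)) > beta
                    for g in grans)}
    return {
        "Pos": lower & upper,
        "Neg": U - lower - upper,
        "Ubn": upper - lower,
        "Lbn": lower - upper,
    }

X = [1.0, 0.75, 0.25, 0.0]             # hypothetical fuzzy concept
grans = [[0, 0, 1, 1], [0, 1, 1, 2]]   # hypothetical granular structures
regions = type1_pessimistic_regions(grans, X, beta=0.25, k=1)
# Pos = {0, 1}, Neg = {3}, Ubn = {2}, Lbn = empty

# The four regions are pairwise disjoint and together cover U.
assert set().union(*regions.values()) == set(range(len(X)))
assert sum(len(r) for r in regions.values()) == len(X)
```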

Similarly, for the Type-1 P-DqMDTRFS model, we have the following decisions:

3.4 Decision-theoretic rough fuzzy set of Type-2 pessimistic double-quantitative multigranulation (Type-2 P-DqMDTRFS)

Similar to the Type-1 P-DqMDTRFS, we can establish the second type of pessimistic double-quantitative multigranulation decision-theoretic rough fuzzy set model, which is defined as follows.

Definition 3.4

Let \(IS=(U,AT,V,f)\) be an information system and let \(A_1, A_2,\ldots , A_m\in AT(m\le 2^{|AT|})\) be granular structures. For any \(\widetilde{X}\in \mathcal {F}(U), \beta \le \alpha \in [0, 1]\) and \(k \in \mathbf {N}\), the lower and upper approximations of the second kind of pessimistic double-quantitative multigranulation decision-theoretic rough fuzzy set are denoted by \((II^P)\underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{P}(\widetilde{X})\) and \((II^P)\overline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{k}^{P}(\widetilde{X})\), respectively. They are defined as follows:

Analogously, the positive region, negative region, upper boundary region and lower boundary region of Type-2 P-DqMDTRFS can be obtained as follows:

From the definition of Type-2 P-DqMDTRFS, we obtain the following properties.

Proposition 3.4

Let \(IS=(U,AT,V,f)\) be an information system and let \(A_1, A_2,\ldots , A_m\in AT(m\le 2^{|AT|})\) be granular structures. For any \(\widetilde{X}\in \mathcal {F}(U), \beta \le \alpha \in [0, 1]\) and \(k \in \mathbf {N}\), the following properties hold.

-

1.

\((II^P)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{P}(\widetilde{X})\subseteq {{\underline{{{A}_{i}}}}_{(\alpha ,\beta )}}(\widetilde{X})\);

-

2.

\((II^P)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{P}(\widetilde{X})\supseteq {{\overline{{{A}_{i}}}}_{k}}(\widetilde{X})\);

-

3.

\((II^P)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{P}(\widetilde{X})=\underset{i=1}{\overset{m}{\mathop \cap }}\,{{\underline{{{A}_{i}}}}_{(\alpha ,\beta )}}(\widetilde{X})\);

-

4.

\((II^P)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{P}(\widetilde{X})=\underset{i=1}{\overset{m}{\mathop \cup }}\,{{\overline{{{A}_{i}}}}_{k}}(\widetilde{X})\);

-

5.

\((II^P)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{P}(\widetilde{X})\subseteq (II^P)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{P}(\widetilde{Y})\), when \(\widetilde{X}\subseteq \widetilde{Y}\in \mathcal {F}(U)\);

-

6.

\((II^P)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{P}(\widetilde{X})\subseteq (II^P)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{P}(\widetilde{Y})\), when \(\widetilde{X}\subseteq \widetilde{Y}\in \mathcal {F}(U)\);

-

7.

\((II^P)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k_1}^{P}(\widetilde{X})\supseteq (II^P)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k_2}^{P}(\widetilde{X})\), when \(k_1\le k_2\in \mathbf {N}\);

-

8.

\((II^P)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha _1,\beta )}^{P}(\widetilde{X})\supseteq (II^P)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha _2,\beta )}^{P}(\widetilde{X})\), when \(\alpha _1\le \alpha _2\in [0, 1]\);

-

9.

\((II^P)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{P}(\widetilde{X}\cup \widetilde{Y})\supseteq (II^P)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{P}(\widetilde{X})\cup (II^P)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{P}(\widetilde{Y})\), \(\forall \widetilde{X}, \widetilde{Y}\in \mathcal {F}(U)\);

-

10.

\((II^P)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{P}(\widetilde{X}\cup \widetilde{Y})\supseteq (II^P)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{P}(\widetilde{X})\cup (II^P)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{P}(\widetilde{Y})\), \(\forall \widetilde{X}, \widetilde{Y}\in \mathcal {F}(U)\);

-

11.

\((II^P)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{P}(\widetilde{X}\cap \widetilde{Y})\subseteq (II^P)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{P}(\widetilde{X})\cap (II^P)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{P}(\widetilde{Y})\), \(\forall \widetilde{X}, \widetilde{Y}\in \mathcal {F}(U)\);

-

12.

\((II^P)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{P}(\widetilde{X}\cap \widetilde{Y})\subseteq (II^P)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{P}(\widetilde{X})\cap (II^P)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{P}(\widetilde{Y})\), \(\forall \widetilde{X}, \widetilde{Y}\in \mathcal {F}(U)\).

Proof

These propositions can be proved directly based on the Definition 3.4. \(\square\)

The Type-2 P-DqMDTRFS is also a generalization of pessimistic multigranulation rough set model. According to Definition 3.4, we can get that

-

1.

If \(\widetilde{X}\) is a crisp set of the universe, then \((II^P)\underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{P}(\widetilde{X})=\underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}^{P}({X})\) if and only if \(\alpha =1\);

-

2.

If \(\widetilde{X}\) is a crisp set of the universe, then \((II^P)\overline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{k}^{P}(\widetilde{X})=\overline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}^{P}({X})\) if and only if \(k=0\).

Based on the rough regions and Proposition 3.4, the decision rules of Type-2 P-DqMDTRFS are as follows:

-

(\((II^P)P\)) If \(P(\widetilde{X}|{{[x]}_{{{A}_{i}}}})\ge \alpha\) for any \(A_i\) and \(\exists A_j\) such that \(\sum \nolimits _{y\in {{[x]}_{{{A}_{j}}}}}{\widetilde{X}(y)}>k\), decide \((II^P)Pos(\widetilde{X})\).

-

(\((II^P)N\)) If \(\exists A_i\) such that \(P(\widetilde{X}|{{[x]}_{{{A}_{i}}}})< \alpha\) and \(\sum \nolimits _{y\in {{[x]}_{{{A}_{j}}}}}{\widetilde{X}(y)}\le k\) for any \(A_j\), decide \((II^P)Neg(\widetilde{X})\).

-

(\((II^P)UB\)) If \(\exists A_i\) such that \(P(\widetilde{X}|{{[x]}_{{{A}_{i}}}})< \alpha\) and \(\exists A_j\) such that \(\sum \nolimits _{y\in {{[x]}_{{{A}_{j}}}}}{\widetilde{X}(y)}>k\), decide \((II^P)Ubn(\widetilde{X})\).

-

(\((II^P)LB\)) If \(P(\widetilde{X}|{{[x]}_{{{A}_{i}}}})\ge \alpha\) for any \(A_i\) and \(\sum \nolimits _{y\in {{[x]}_{{{A}_{j}}}}}{\widetilde{X}(y)}\le k\) for any \(A_j\), decide \((II^P)Lbn(\widetilde{X})\).
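The optimistic and pessimistic Type-2 models apply the same two tests (\(P(\widetilde{X}|[x]_{A_i})\ge \alpha\) for the lower approximation, \(\sum _{y\in [x]_{A_i}}\widetilde{X}(y)>k\) for the upper approximation) and differ only in the quantifier taken over the granular structures. The sketch below makes that explicit by passing Python's built-in `all` and `any` as quantifiers. It assumes label-list granulations, \(P\) as the mean membership over the class, a pessimistic lower approximation that requires every \(A_i\) and a pessimistic upper approximation that requires some \(A_j\) (as in rules \((II^P)P\) and \((II^P)N\)); the names and data are hypothetical.

```python
from typing import Callable, Iterable, List, Sequence

Quantifier = Callable[[Iterable[bool]], bool]

def eq_class(labels: Sequence[int], x: int) -> List[int]:
    """[x]_A: objects sharing x's label under granulation A."""
    return [y for y, lab in enumerate(labels) if lab == labels[x]]

# (in_lower, in_upper) -> region name
REGION = {(True, True): "Pos", (False, True): "Ubn",
          (True, False): "Lbn", (False, False): "Neg"}

def type2_region(grans, X, x, alpha, k,
                 lower_q: Quantifier, upper_q: Quantifier) -> str:
    """Type-2 region of x: lower tests P(X~|[x]) >= alpha, upper tests sum X~(y) > k."""
    classes = [eq_class(g, x) for g in grans]
    in_lower = lower_q(sum(X[y] for y in c) / len(c) >= alpha for c in classes)
    in_upper = upper_q(sum(X[y] for y in c) > k for c in classes)
    return REGION[(in_lower, in_upper)]

def type2_pessimistic_region(grans, X, x, alpha, k):
    # Pessimistic: every A_i must pass the lower test, some A_j the upper test.
    return type2_region(grans, X, x, alpha, k, lower_q=all, upper_q=any)

def type2_optimistic_region(grans, X, x, alpha, k):
    # Optimistic: the two quantifiers are swapped.
    return type2_region(grans, X, x, alpha, k, lower_q=any, upper_q=all)

X = [1.0, 0.75, 0.25, 0.0]             # hypothetical fuzzy concept
grans = [[0, 0, 1, 1], [0, 1, 1, 2]]   # hypothetical granular structures
print([type2_pessimistic_region(grans, X, x, alpha=0.75, k=1) for x in range(4)])
# ['Pos', 'Ubn', 'Neg', 'Neg']
print([type2_pessimistic_region(grans, X, x, alpha=0.5, k=2) for x in range(4)])
# ['Lbn', 'Lbn', 'Neg', 'Neg']
```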

For the Type-2 P-DqMDTRFS model, we also have the following decisions:

3.5 Decision-theoretic rough fuzzy set of Type-1 mean double-quantitative multigranulation (Type-1 M-DqMDTRFS)

We now establish two kinds of mean double-quantitative multigranulation decision-theoretic rough fuzzy set models, in which the quantitative conditions are averaged over the granular structures.

Definition 3.5

Let \(IS=(U,AT,V,f)\) be an information system and let \(A_1, A_2,\ldots , A_m\in AT(m\le 2^{|AT|})\) be granular structures. For any \(\widetilde{X}\in \mathcal {F}(U), \beta \le \alpha \in [0, 1]\) and \(k \in \mathbf {N}\), the lower and upper approximations of the first kind of mean double-quantitative multigranulation decision-theoretic rough fuzzy set are denoted by \((I^M)\underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{k}^{M}(\widetilde{X})\) and \((I^M)\overline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{M}(\widetilde{X})\), respectively. They are defined as follows:

Based on this pair of lower and upper approximation operators, the positive region, negative region, upper boundary region and lower boundary region can also be obtained as follows:

According to Definition 3.5, this model differs from the classical multigranulation rough set model: both the lower and upper approximations depend on a quantity obtained by averaging over the multiple granular structures, so some essential mathematical properties may change. We therefore investigate Type-1 M-DqMDTRFS and obtain the following proposition.

Proposition 3.5

Let \(IS=(U,AT,V,f)\) be an information system and let \(A_1, A_2,\ldots , A_m\in AT(m\le 2^{|AT|})\) be granular structures. For any \(\widetilde{X}\in \mathcal {F}(U), \beta \le \alpha \in [0, 1]\) and \(k \in \mathbf {N}\), the following properties hold.

-

1.

\((I^P)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{P}(\widetilde{X})\subseteq (I^M)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{M}(\widetilde{X})\subseteq (I^O)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{O}(\widetilde{X})\);

-

2.

\((I^P)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{P}(\widetilde{X})\supseteq (I^M)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{M}(\widetilde{X})\supseteq (I^O)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{O}(\widetilde{X})\);

-

3.

\((I^M)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{M}(\widetilde{X})\subseteq (I^M)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{M}(\widetilde{Y})\), when \(\widetilde{X}\subseteq \widetilde{Y}\in \mathcal {F}(U)\);

-

4.

\((I^M)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{M}(\widetilde{X})\subseteq (I^M)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{M}(\widetilde{Y})\), when \(\widetilde{X}\subseteq \widetilde{Y}\in \mathcal {F}(U)\);

-

5.

\((I^M)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{{{k}_{1}}}^{M}(\widetilde{X})\subseteq (I^M)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{{{k}_{2}}}^{M}(\widetilde{X})\), when \(k_1\le k_2\in \mathbf {N}\);

-

6.

\((I^M)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,{{\beta }_{1}})}^{M}(\widetilde{X})\supseteq (I^M)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,{{\beta }_{2}})}^{M}(\widetilde{X})\), when \(\beta _1\le \beta _2\in [0, 1]\).

Proof

-

1.

On the one hand, for any \(x\in (I^P)\underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{k}^{P}(\widetilde{X})\), we have \(\sum \nolimits _{y\in {{[x]}_{{{A}_{i}}}}}{(1-\widetilde{X}(y))}\le k\) for all \(i=1, 2, \ldots , m\). Summing over \(i\) gives \(\sum \nolimits _{i=1}^{m}{\left( \sum \nolimits _{y\in {{[x]}_{{{A}_{i}}}}}{(1-\widetilde{X}(y))}\right) }\le m\cdot k\), that is, \(\frac{1}{m}\sum \nolimits _{i=1}^{m}{\left( \sum \nolimits _{y\in {{[x]}_{{{A}_{i}}}}}{(1-\widetilde{X}(y))}\right) }\le k\). Hence \(x\in (I^M)\underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{k}^{M}(\widetilde{X})\), i.e. \((I^P)\underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{k}^{P}(\widetilde{X})\subseteq (I^M)\underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{k}^{M}(\widetilde{X})\). On the other hand, for any \(x\in (I^M)\underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{k}^{M}(\widetilde{X})\), we have \(\frac{1}{m}\sum \nolimits _{i=1}^{m}{\left( \sum \nolimits _{y\in {{[x]}_{{{A}_{i}}}}}{(1-\widetilde{X}(y))}\right) }\le k\). Since the smallest of \(m\) real numbers never exceeds their mean, there is at least one \(A_i\) such that \(\sum \nolimits _{y\in {{[x]}_{{{A}_{i}}}}}{(1-\widetilde{X}(y))}\le k\), that is, \(x\in (I^O)\underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{k}^{O}(\widetilde{X})\), i.e. \((I^M)\underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{k}^{M}(\widetilde{X})\subseteq (I^O)\underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{k}^{O}(\widetilde{X})\). Therefore, \((I^P)\underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{k}^{P}(\widetilde{X})\subseteq (I^M)\underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{k}^{M}(\widetilde{X})\subseteq (I^O)\underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{k}^{O}(\widetilde{X})\).

-

2.

The proof is similar to that of (1).

-

3.

For any \(x\in (I^M)\underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{k}^{M}(\widetilde{X})\), we have \(\frac{1}{m}\sum \nolimits _{i=1}^{m}{(\sum \nolimits _{y\in {{[x]}_{{{A}_{i}}}}}{(1-\widetilde{X}(y))}})\le k\). If \(\widetilde{X}\subseteq \widetilde{Y}\in \mathcal {F}(U)\), then \(\widetilde{X}(y)\le \widetilde{Y}(y)\), and hence \(1-\widetilde{Y}(y)\le 1-\widetilde{X}(y)\), for every \(y\in U\). So we obtain \(\frac{1}{m}\sum \nolimits _{i=1}^{m}{(\sum \nolimits _{y\in {{[x]}_{{{A}_{i}}}}}{(1-\widetilde{Y}(y))}}) \le \frac{1}{m}\sum \nolimits _{i=1}^{m}{\left( \sum \nolimits _{y\in {{[x]}_{{{A}_{i}}}}}{(1-\widetilde{X}(y))}\right) }\le k.\) That means \(x\in (I^M)\underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{k}^{M}(\widetilde{Y})\), i.e. \((I^M)\underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{k}^{M}(\widetilde{X})\subseteq (I^M)\underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{k}^{M}(\widetilde{Y})\) whenever \(\widetilde{X}\subseteq \widetilde{Y}\in \mathcal {F}(U)\).

-

4.

The proof is similar to that of (3).

-

5.

For any \(x\in (I^M)\underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{{{k}_{1}}}^{M}(\widetilde{X})\), we have \(\frac{1}{m}\sum \nolimits _{i=1}^{m}{\left( \sum \nolimits _{y\in {{[x]}_{{{A}_{i}}}}}{(1-\widetilde{X}(y))}\right) }\le k_1 \le k_2\). That means \(x\in (I^M) \underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{{{k}_{2}}}^{M}(\widetilde{X})\), i.e. \((I^M)\underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{{{k}_{1}}}^{M}(\widetilde{X})\subseteq (I^M)\underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{{{k}_{2}}}^{M}(\widetilde{X})\), when \(k_1\le k_2\in \mathbf {N}\).

-

6.

For any \(x\in (I^M)\overline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,{{\beta }_{2}})}^{M}(\widetilde{X})\), we have \(\frac{1}{m}\sum \nolimits _{i=1}^{m}{(P(\widetilde{X}|{{[x]}_{{{A}_{i}}}}))}>{\beta _{2}}\ge {\beta _{1}}\). Hence \(x\in (I^M) \overline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,{{\beta }_{1}})}^{M}(\widetilde{X})\), i.e. \((I^M)\overline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,{{\beta }_{1}})}^{M}(\widetilde{X})\supseteq (I^M)\overline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,{{\beta }_{2}})}^{M}(\widetilde{X}),\) when \(\beta _1\le \beta _2\in [0, 1]\).

Thus, the proof is accomplished. \(\square\)
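The proof of item (1) rests on the fact that, for each object, the smallest of the \(m\) values \(\sum _{y\in [x]_{A_i}}(1-\widetilde{X}(y))\) never exceeds their mean, which never exceeds the largest. The sketch below checks the resulting inclusion chain on randomly generated fuzzy sets; it is a sanity check, not a proof. It assumes granular structures encoded as label lists and membership degrees drawn from a small grid; the granular structures, seed and function names are hypothetical.

```python
import random
from statistics import mean

def eq_class(labels, x):
    """[x]_A: indices of the objects sharing x's label under granulation A."""
    return [y for y, lab in enumerate(labels) if lab == labels[x]]

def type1_lowers(grans, X, k):
    """(I^P), (I^M) and (I^O) lower_k approximations of X as sets."""
    P, M, O = set(), set(), set()
    for x in range(len(X)):
        # One value per granular structure: sum over [x]_{A_i} of (1 - X(y)).
        grades = [sum(1 - X[y] for y in eq_class(g, x)) for g in grans]
        if max(grades) <= k:    # every A_i within tolerance k
            P.add(x)
        if mean(grades) <= k:   # the average over the A_i within tolerance k
            M.add(x)
        if min(grades) <= k:    # at least one A_i within tolerance k
            O.add(x)
    return P, M, O

rng = random.Random(0)
grans = [[0, 0, 1, 1, 2], [0, 1, 1, 2, 2], [0, 0, 0, 1, 1]]  # hypothetical
for _ in range(1000):
    X = [rng.choice([0.0, 0.25, 0.5, 0.75, 1.0]) for _ in range(5)]
    P, M, O = type1_lowers(grans, X, k=1)
    assert P <= M <= O  # Proposition 3.5(1)
print("inclusion chain held on all samples")
```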

The Type-1 M-DqMDTRFS is a generalization of the pessimistic multigranulation rough set model. According to Definition 3.5, we have the following.

-

1.

If \(\widetilde{X}\) is a crisp set of the universe, then \((I^M)\underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{k}^{M}(\widetilde{X})=\underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}^{P}({X})\) if and only if \(k=0\);

-

2.

If \(\widetilde{X}\) is a crisp set of the universe, then \((I^M)\overline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{M}(\widetilde{X})=\overline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}^{P}({X})\) if and only if \(\beta =0\).

Similar to the previous double-quantitative multigranulation decision-theoretic rough fuzzy set, we can obtain the decision rules of Type-1 M-DqMDTRFS as follows:

-

(\((I^M)P\)) If \(\frac{1}{m}\sum \limits _{i=1}^{m}{\left( \sum \limits _{y\in {{[x]}_{{{A}_{i}}}}}{(1-\widetilde{X}(y))}\right) }\le k\) and \(\frac{1}{m}\sum \limits _{i=1}^{m}{(P(\widetilde{X}|{{[x]}_{{{A}_{i}}}}))}>{\beta }\) , decide \((I^M)Pos(\widetilde{X})\).

-

(\((I^M)N\)) If \(\frac{1}{m}\sum \limits _{i=1}^{m}{\left( \sum \limits _{y\in {{[x]}_{{{A}_{i}}}}}{(1-\widetilde{X}(y))}\right) }> k\) and \(\frac{1}{m}\sum \limits _{i=1}^{m}{(P(\widetilde{X}|{{[x]}_{{{A}_{i}}}}))}\le {\beta }\), decide \((I^M)Neg(\widetilde{X})\).

-

(\((I^M)UB\)) If \(\frac{1}{m}\sum \limits _{i=1}^{m}{\left( \sum \limits _{y\in {{[x]}_{{{A}_{i}}}}}{(1-\widetilde{X}(y))}\right) }> k\) and \(\frac{1}{m}\sum \limits _{i=1}^{m}{(P(\widetilde{X}|{{[x]}_{{{A}_{i}}}}))}>{\beta }\), decide \((I^M)Ubn(\widetilde{X})\).

-

(\((I^M)LB\)) If \(\frac{1}{m}\sum \limits _{i=1}^{m}{\left( \sum \limits _{y\in {{[x]}_{{{A}_{i}}}}}{(1-\widetilde{X}(y))}\right) }\le k\) and \(\frac{1}{m}\sum \limits _{i=1}^{m}{(P(\widetilde{X}|{{[x]}_{{{A}_{i}}}}))}\le {\beta }\), decide \((I^M)Lbn(\widetilde{X})\).
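Unlike the optimistic and pessimistic rules, the Type-1 M rules compare averaged quantities with the thresholds. The sketch below encodes the four rules directly; it assumes label-list granulations, \(P(\widetilde{X}|[x]_{A_i})\) equal to the mean membership over \([x]_{A_i}\), and hypothetical data and function names.

```python
from statistics import mean

def eq_class(labels, x):
    """[x]_A for a granulation given as a list of class labels."""
    return [y for y, lab in enumerate(labels) if lab == labels[x]]

def type1_mean_region(grans, X, x, beta, k):
    """Region of x under the (I^M) rules, which average over the m granulations."""
    classes = [eq_class(g, x) for g in grans]
    avg_miss = mean(sum(1 - X[y] for y in c) for c in classes)       # mean of sum(1 - X~(y))
    avg_prob = mean(sum(X[y] for y in c) / len(c) for c in classes)  # mean of P(X~|[x]_{A_i})
    in_lower = avg_miss <= k
    in_upper = avg_prob > beta
    if in_lower and in_upper:
        return "Pos"
    if in_upper:
        return "Ubn"
    if in_lower:
        return "Lbn"
    return "Neg"

X = [1.0, 0.75, 0.25, 0.0]             # hypothetical fuzzy concept
grans = [[0, 0, 1, 1], [0, 1, 1, 2]]   # hypothetical granular structures
print([type1_mean_region(grans, X, x, beta=0.25, k=1) for x in range(4)])
# ['Pos', 'Pos', 'Ubn', 'Neg']
print([type1_mean_region(grans, X, x, beta=0.75, k=2) for x in range(4)])
# ['Pos', 'Lbn', 'Lbn', 'Lbn']
```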

With these decision rules, for the Type-1 M-DqMDTRFS model we have the following decisions:

3.6 Decision-theoretic rough fuzzy set of Type-2 mean double-quantitative multigranulation (Type-2 M-DqMDTRFS)

Following the previous discussion, we now define the second type of mean double-quantitative multigranulation decision-theoretic rough fuzzy set.

Definition 3.6

Let \(IS=(U,AT,V,f)\) be an information system and let \(A_1, A_2,\ldots , A_m\in AT(m\le 2^{|AT|})\) be granular structures. For any \(\widetilde{X}\in \mathcal {F}(U), \beta \le \alpha \in [0, 1]\) and \(k \in \mathbf {N}\), the lower and upper approximations of the second kind of mean double-quantitative multigranulation decision-theoretic rough fuzzy set are denoted by \((II^M)\underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{M}(\widetilde{X})\) and \((II^M)\overline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{k}^{M}(\widetilde{X})\), respectively. They are defined as follows:

Similarly, the rough regions are characterized as follows:

The properties of Type-2 M-DqMDTRFS are given below.

Proposition 3.6

Let \(IS=(U,AT,V,f)\) be an information system and let \(A_1, A_2,\ldots , A_m\in AT(m\le 2^{|AT|})\) be granular structures. For any \(\widetilde{X}\in \mathcal {F}(U), \beta \le \alpha \in [0, 1]\) and \(k \in \mathbf {N}\), the following properties hold.

-

1.

\((II^P)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{P}(\widetilde{X})\subseteq (II^M)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{M}(\widetilde{X})\subseteq (II^O)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{O}(\widetilde{X})\);

-

2.

\((II^P)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{P}(\widetilde{X})\supseteq (II^M)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{M}(\widetilde{X})\supseteq (II^O)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{O}(\widetilde{X})\);

-

3.

\((II^M)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{M}(\widetilde{X})\subseteq (II^M)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{M}(\widetilde{Y})\), when \(\widetilde{X}\subseteq \widetilde{Y}\in \mathcal {F}(U)\);

-

4.

\((II^M)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{M}(\widetilde{X})\subseteq (II^M)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{M}(\widetilde{Y})\), when \(\widetilde{X}\subseteq \widetilde{Y}\in \mathcal {F}(U)\);

-

5.

\((II^M)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k_1}^{M}(\widetilde{X})\supseteq (II^M)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k_2}^{M}(\widetilde{X})\), when \(k_1\le k_2\in \mathbf {N}\);

-

6.

\((II^M)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha _1,\beta )}^{M}(\widetilde{X})\supseteq (II^M)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha _2,\beta )}^{M}(\widetilde{X})\), when \(\alpha _1\le \alpha _2\in [0, 1]\).

Proof

It is similar to the proof of Proposition 3.5. \(\square\)
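Items (1) and (2) can be sanity-checked numerically in the same min/mean/max style. The sketch below assumes label-list granulations, \(P(\widetilde{X}|[x]_{A_i})\) as the mean membership over \([x]_{A_i}\), and, as in the Type-2 optimistic and pessimistic rules, a graded upper approximation that tests \(\sum _{y\in [x]_{A_i}}\widetilde{X}(y)>k\), with the mean model averaging this sum over the granular structures. It is a randomized check on hypothetical data, not a proof.

```python
import random
from statistics import mean

def eq_class(labels, x):
    """[x]_A: indices of the objects sharing x's label under granulation A."""
    return [y for y, lab in enumerate(labels) if lab == labels[x]]

def type2_approximations(grans, X, alpha, k):
    """(II^P), (II^M), (II^O) lower_(alpha,beta) and upper_k approximations."""
    lows = {"P": set(), "M": set(), "O": set()}
    ups = {"P": set(), "M": set(), "O": set()}
    for x in range(len(X)):
        classes = [eq_class(g, x) for g in grans]
        probs = [sum(X[y] for y in c) / len(c) for c in classes]  # P(X~|[x]_{A_i})
        grades = [sum(X[y] for y in c) for c in classes]          # sum of X~(y)
        # Lower: pessimistic needs every A_i (min), optimistic some A_i (max).
        for name, agg in (("P", min), ("M", mean), ("O", max)):
            if agg(probs) >= alpha:
                lows[name].add(x)
        # Upper: pessimistic needs some A_i (max), optimistic every A_i (min).
        for name, agg in (("P", max), ("M", mean), ("O", min)):
            if agg(grades) > k:
                ups[name].add(x)
    return lows, ups

rng = random.Random(2)
grans = [[0, 0, 1, 1, 2], [0, 1, 1, 2, 2], [0, 0, 0, 1, 1]]  # hypothetical
for _ in range(1000):
    X = [rng.choice([0.0, 0.25, 0.5, 0.75, 1.0]) for _ in range(5)]
    lows, ups = type2_approximations(grans, X, alpha=0.6, k=1)
    assert lows["P"] <= lows["M"] <= lows["O"]  # Proposition 3.6(1)
    assert ups["P"] >= ups["M"] >= ups["O"]     # Proposition 3.6(2)
print("Proposition 3.6(1)-(2) held on all samples")
```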

According to Definition 3.6, we can get that

-

1.

If \(\widetilde{X}\) is a crisp set of the universe, then \((II^M)\underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{M}(\widetilde{X})=\underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}^{P}({X})\) if and only if \(\alpha =1\);

-

2.

If \(\widetilde{X}\) is a crisp set of the universe, then \((II^M)\overline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{k}^{M}(\widetilde{X})=\overline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}^{P}({X})\) if and only if \(k=0\).

Similar to the previous double-quantitative multigranulation decision-theoretic rough fuzzy set, we can obtain the decision rules of Type-2 M-DqMDTRFS as follows:

-

(\((II^M)P\)) If \(\frac{1}{m}\sum \nolimits _{i=1}^{m}{\left( \sum \nolimits _{y\in {{[x]}_{{{A}_{i}}}}}{\widetilde{X}(y)}\right) }> k\) and \(\frac{1}{m}\sum \nolimits _{i=1}^{m}{(P(\widetilde{X}|{{[x]}_{{{A}_{i}}}}))}\ge {\alpha }\), decide \((II^M)Pos(\widetilde{X})\).

-

(\((II^M)N\)) If \(\frac{1}{m}\sum \nolimits _{i=1}^{m}{\left( \sum \nolimits _{y\in {{[x]}_{{{A}_{i}}}}}{\widetilde{X}(y)}\right) }\le k\) and \(\frac{1}{m}\sum \nolimits _{i=1}^{m}{(P(\widetilde{X}|{{[x]}_{{{A}_{i}}}}))}< {\alpha }\), decide \((II^M)Neg(\widetilde{X})\).

-

(\((II^M)UB\)) If \(\frac{1}{m}\sum \nolimits _{i=1}^{m}{\left( \sum \nolimits _{y\in {{[x]}_{{{A}_{i}}}}}{\widetilde{X}(y)}\right) }> k\) and \(\frac{1}{m}\sum \nolimits _{i=1}^{m}{(P(\widetilde{X}|{{[x]}_{{{A}_{i}}}}))}<{\alpha }\), decide \((II^M)Ubn(\widetilde{X})\).

-

(\((II^M)LB\)) If \(\frac{1}{m}\sum \nolimits _{i=1}^{m}{\left( \sum \nolimits _{y\in {{[x]}_{{{A}_{i}}}}}{\widetilde{X}(y)}\right) }\le k\) and \(\frac{1}{m}\sum \nolimits _{i=1}^{m}{(P(\widetilde{X}|{{[x]}_{{{A}_{i}}}}))}\ge {\alpha }\), decide \((II^M)Lbn(\widetilde{X})\).

Analogously, for the Type-2 M-DqMDTRFS model, we have the following decisions:

4 Relationships among three pairs of DqMDTRFS models

Based on the previous discussions, we have obtained six DqMDTRFS models in this study. In these models, the conditional probability thresholds \(\alpha\) and \(\beta\) and the grade threshold \(k\) determine the specific form of the rough approximations. In this section, we investigate the relationships among the six types of DqMDTRFS models introduced in Sect. 3.

From the definitions of DqMDTRFS, we can obtain the following theorem.

Theorem 4.1

Let \(IS=(U,AT,V,f)\) be an information system and let \(A_1, A_2,\ldots , A_m\in AT(m\le 2^{|AT|})\) be granular structures. For any \(\widetilde{X}\in \mathcal {F}(U)\) and \(k \in \mathbf {N}\), we have

-

1.

\(\left[ (I^{O})\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{O}({\widetilde{X}}^c)\right] ^c =(II^{O})\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{O}(\widetilde{X})\);

-

2.

\(\left[ (I^P)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{P}({\widetilde{X}}^c)\right] ^c= (II^P)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{P}(\widetilde{X})\).

Proof

-

1.

Since \(1-{\widetilde{X}}^c(y)=\widetilde{X}(y)\) for every \(y\in U\), we know that \((I^{O})\underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{k}^{O}({\widetilde{X}}^c)=\{x\in U|\sum \nolimits _{y\in {{[x]}_{{{A}_{1}}}}}{\widetilde{X}(y)}\le k \vee \sum \nolimits _{y\in {{[x]}_{{{A}_{2}}}}}{\widetilde{X}(y)}\le k\vee \cdots \vee \sum \nolimits _{y\in {{[x]}_{{{A}_{m}}}}}{\widetilde{X}(y)} \le k\}.\) Hence \(\left[ (I^{O})\underline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{k}^{O}({\widetilde{X}}^c)\right] ^c=\{x\in U|\sum \nolimits _{y\in {{[x]}_{{{A}_{1}}}}}{\widetilde{X}(y)}>k\wedge \sum \nolimits _{y\in {{[x]}_{{{A}_{2}}}}}{\widetilde{X}(y)}>k\wedge \cdots \wedge \sum \nolimits _{y\in {{[x]}_{{{A}_{m}}}}}{\widetilde{X}(y)}>k\}=(II^{O})\overline{\sum \nolimits _{i=1}^{m}{{{A}_{i}}}}_{k}^{O}(\widetilde{X}).\)

-

2.

It is similar to the proof of (1).

\(\square\)
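Theorem 4.1 is a De Morgan-type duality between the Type-1 graded lower approximation and the Type-2 graded upper approximation. The sketch below checks both items on random fuzzy sets, assuming the standard fuzzy complement \({\widetilde{X}}^c(y)=1-\widetilde{X}(y)\), label-list granulations, and that the optimistic model uses "some \(A_i\)" for the lower and "every \(A_i\)" for the upper approximation while the pessimistic model swaps them. The data, seed and function names are hypothetical, and passing the test is evidence, not a proof.

```python
import random

def eq_class(labels, x):
    """[x]_A: indices of the objects sharing x's label under granulation A."""
    return [y for y, lab in enumerate(labels) if lab == labels[x]]

def lower_k(grans, X, k, quant):
    """Type-1 graded lower approximation: quant over A_i of sum(1 - X(y)) <= k."""
    return {x for x in range(len(X))
            if quant(sum(1 - X[y] for y in eq_class(g, x)) <= k for g in grans)}

def upper_k(grans, X, k, quant):
    """Type-2 graded upper approximation: quant over A_i of sum X(y) > k."""
    return {x for x in range(len(X))
            if quant(sum(X[y] for y in eq_class(g, x)) > k for g in grans)}

rng = random.Random(1)
grans = [[0, 0, 1, 1, 2], [0, 1, 1, 2, 2]]  # hypothetical granular structures
U = set(range(5))
for _ in range(500):
    X = [rng.choice([0.0, 0.25, 0.5, 0.75, 1.0]) for _ in range(5)]
    Xc = [1 - v for v in X]  # assumed standard fuzzy complement
    for k in range(3):
        # Item 1 (optimistic): lower uses "some A_i", upper uses "every A_i".
        assert U - lower_k(grans, Xc, k, any) == upper_k(grans, X, k, all)
        # Item 2 (pessimistic): lower uses "every A_i", upper uses "some A_i".
        assert U - lower_k(grans, Xc, k, all) == upper_k(grans, X, k, any)
print("Theorem 4.1 held on all samples")
```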

Theorem 4.2

Let \(IS=(U,AT,V,f)\) be an information system and let \(A_1, A_2,\ldots , A_m\in AT(m\le 2^{|AT|})\) be granular structures. For any \(\widetilde{X}\in \mathcal {F}(U)\) and \(\alpha \le \beta \in [0, 1]\), we have

-

1.

\((II^{O})\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{O}(\widetilde{X})\supseteq (I^{O})\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{O}(\widetilde{X});\)

-

2.

\((II^P)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{P}(\widetilde{X})\subseteq (I^P)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{P}(\widetilde{X}).\)

Proof

By definition, they are easy to prove. \(\square\)

Combining Proposition 3.5(1), Proposition 3.6(2) and Theorem 4.2, we obtain the following theorem.

Theorem 4.3

Let \(IS=(U,AT,V,f)\) be an information system and let \(A_1, A_2,\ldots , A_m\in AT(m\le 2^{|AT|})\) be granular structures. For any \(\widetilde{X}\in \mathcal {F}(U)\), \(\beta =\alpha \in [0, 1]\) and \(k \in \mathbf {N}\), we have

-

1.

\((I^P)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{P}(\widetilde{X})\subseteq (I^M)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{M}(\widetilde{X})\subseteq (I^O)\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{O}(\widetilde{X})\subseteq (II^{O})\underline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{O}(\widetilde{X})\);

-

2.

\((I^P)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{(\alpha ,\beta )}^{P}(\widetilde{X}) \supseteq (II^P)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{P}(\widetilde{X})\supseteq (II^M)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{M}(\widetilde{X})\supseteq (II^O)\overline{\sum \limits _{i=1}^{m}{{{A}_{i}}}}_{k}^{O}(\widetilde{X})\).

Proof

These properties are easy to prove. \(\square\)

5 Case study

Compared with the multigranulation decision-theoretic rough fuzzy set model, the double-quantitative multigranulation decision-theoretic rough fuzzy set models consider not only the relative quantitative information but also the absolute quantitative information between the indiscernibility classes and the fuzzy concept. The thresholds \(k\), \(\alpha\) and \(\beta\) improve the fault tolerance of the models. In this section, we present a medical example to illustrate the decision approach that combines relative and absolute quantitative information simultaneously.

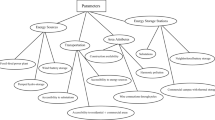

Let \(IS = (U, AT \cup \{\widetilde{d}\})\) be a fuzzy decision system, where \(U=\{x_1,x_2,...,x_{36}\}\) consists of 36 patients and the condition attributes \(a_1,a_2,a_3\) represent fever, headache and cough, respectively. The fuzzy decision attribute \(\widetilde{d}\) indicates the degree to which a patient has a cold and is given by \(\widetilde{d}=\{0.1,0.4,0.6,...,0.6,0.1,0.5\}\). The data set is shown in Table 1.

For simplicity and without loss of generality, suppose there are three granular structures \(A_1=\{a_1, a_2\}\), \(A_2=\{a_1, a_3\}\) and \(A_3=\{a_2, a_3\}\). For each of \(A_1\), \(A_2\) and \(A_3\), we compute \([x]_{A_i}\), \(\sum \nolimits _{y\in {{[x]}_{{{A}_{i}}}}}{\widetilde{d}(y)}\), \(P(\widetilde{d}|{{[x]}_{{{A}_{i}}}})\) and \(\sum \nolimits _{y\in {{[x]}_{{{A}_{i}}}}}{(1-\widetilde{d}(y))}\). These quantities, which characterize the double-quantitative decision-theoretic approach, are listed in Table 2.
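The four quantities in Table 2 can be computed mechanically from a decision table. The sketch below assumes the usual fuzzy conditional probability \(P(\widetilde{d}|[x]_{A_i})=\sum_{y\in[x]_{A_i}}\widetilde{d}(y)/|[x]_{A_i}|\); the five-patient table, its attribute values and its membership degrees are hypothetical and are not the data of Table 1.

```python
from fractions import Fraction

# Hypothetical toy data, NOT Table 1: a1 = fever, a2 = headache, a3 = cough
TABLE = {
    "x1": {"a1": "no",   "a2": "no",  "a3": "no"},
    "x2": {"a1": "high", "a2": "yes", "a3": "no"},
    "x3": {"a1": "high", "a2": "yes", "a3": "yes"},
    "x4": {"a1": "high", "a2": "yes", "a3": "yes"},
    "x5": {"a1": "no",   "a2": "no",  "a3": "yes"},
}
# Hypothetical fuzzy decision d~ (degree of having a cold)
D = {"x1": Fraction(1, 10), "x2": Fraction(4, 10), "x3": Fraction(6, 10),
     "x4": Fraction(9, 10), "x5": Fraction(3, 10)}

GRANULAR_STRUCTURES = {"A1": ("a1", "a2"), "A2": ("a1", "a3"), "A3": ("a2", "a3")}

def eq_class(x, attrs):
    """[x]_A: all objects that agree with x on every attribute in attrs."""
    return sorted(y for y in TABLE if all(TABLE[y][a] == TABLE[x][a] for a in attrs))

def table2_entry(x, attrs):
    """Return ([x]_A, sum of d~, assumed P(d~|[x]_A), sum of 1 - d~) for one object."""
    cls = eq_class(x, attrs)
    mass = sum(D[y] for y in cls)          # absolute membership mass
    prob = mass / len(cls)                 # relative degree (assumed definition)
    non_mass = sum(1 - D[y] for y in cls)  # absolute non-membership mass
    return cls, mass, prob, non_mass

for name, attrs in GRANULAR_STRUCTURES.items():
    cls, mass, prob, non_mass = table2_entry("x3", attrs)
    print(name, cls, mass, prob, non_mass)
```

The second and fourth quantities always add up to \(|[x]_{A_i}|\); both are kept because the graded lower and upper approximations are stated in terms of them separately.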

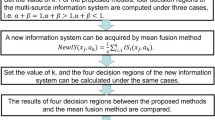

In the Bayesian decision procedure, decisions are based on a pair of thresholds \((\alpha ,\beta )\). We distinguish three cases: \(\alpha +\beta >1\), \(\alpha +\beta =1\) and \(\alpha +\beta <1\). Since the computation applies to an arbitrary threshold pair \((\alpha ,\beta )\), we discuss the decision rules for each of these combinations of parameters.

For convenience, we fix the grade \(k =2\) throughout this case study. In the Bayesian decision procedure, the loss values \(\lambda _{i1}\) and \(\lambda _{i2}\) \((i = 1 , 2 , 3)\) must be specified; below they are written as \(\lambda _{PP}, \lambda _{PN}\), \(\lambda _{BP}, \lambda _{BN}\) and \(\lambda _{NP}, \lambda _{NN}\). We modify the loss function defined in [53], and the thresholds for each case are calculated from these losses.
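Since this section does not restate how the thresholds are obtained from the losses, the sketch below assumes the standard decision-theoretic rough set formulas \(\alpha =\frac{\lambda _{PN}-\lambda _{BN}}{(\lambda _{PN}-\lambda _{BN})+(\lambda _{BP}-\lambda _{PP})}\) and \(\beta =\frac{\lambda _{BN}-\lambda _{NN}}{(\lambda _{BN}-\lambda _{NN})+(\lambda _{NP}-\lambda _{BP})}\), applied to the loss values of the three cases below. The function name is illustrative.

```python
from fractions import Fraction

def dtrs_thresholds(l_pp, l_pn, l_bp, l_bn, l_np, l_nn):
    """Return (alpha, beta) from the six losses via the standard DTRS formulas.

    Assumes l_pp <= l_bp < l_np and l_nn <= l_bn < l_pn.
    """
    alpha = Fraction(l_pn - l_bn, (l_pn - l_bn) + (l_bp - l_pp))
    beta = Fraction(l_bn - l_nn, (l_bn - l_nn) + (l_np - l_bp))
    return alpha, beta

# (lambda_PP, lambda_PN, lambda_BP, lambda_BN, lambda_NP, lambda_NN) from the three cases
CASES = {
    "Case 1": (0, 18, 9, 2, 12, 0),
    "Case 2": (0, 19, 12, 3, 19, 0),
    "Case 3": (0, 21, 7, 2, 9, 0),
}

for name, losses in CASES.items():
    alpha, beta = dtrs_thresholds(*losses)
    print(f"{name}: alpha = {alpha} ({float(alpha):.3f}), beta = {beta} ({float(beta):.3f})")
```

Under these formulas, \(\beta\) comes out as exactly 0.4, 0.3 and 0.5, matching the three cases, while \(\alpha\) comes out as \(16/25=0.64\), \(4/7\approx 0.571\) and \(19/26\approx 0.731\); the values \(\alpha =0.6\), 0.5 and 0.7 used in the case study agree with these only after truncation to one decimal place.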

Case 1 \(\alpha +\beta =1\). Consider the following loss function:

-

\(\lambda _{PP} = 0, \lambda _{PN} = 18\),

-

\(\lambda _{BP} = 9, \lambda _{BN} = 2\),

-

\(\lambda _{NP} = 12, \lambda _{NN} = 0\).

From this loss function we obtain \(\alpha = 0.6\) and \(\beta = 0.4\), i.e., \(\alpha +\beta =1\). The lower and upper approximations of \(\widetilde{d}\) for the proposed double-quantitative multigranulation decision-theoretic rough fuzzy set models are shown in Table 3.

From Table 3, we can compute the rough regions of Type-1 O-DqMDTRFS, Type-2 O-DqMDTRFS, Type-1 P-DqMDTRFS, Type-2 P-DqMDTRFS, Type-1 M-DqMDTRFS and Type-2 M-DqMDTRFS with respect to \(\alpha = 0.6, \beta = 0.4\) and \(k =2\). To illustrate the proposed models, we compute only the rough regions of Type-1 O-DqMDTRFS.

For \(\alpha = 0.6, \beta = 0.4\) and \(k=2\), each model has its own quantitative semantics for the relative and absolute degrees of quantification. Using the Type-1 O-DqMDTRFS model, we obtain the following decision rules for practical applications:

-

\(((I^O)P)\) The patients \(x_2,x_4,x_6,x_8,x_9,x_{10},x_{11},x_{14},x_{18},x_{20},x_{24},x_{26},x_{29},x_{31},x_{33}\) and \(x_{34}\) are suffering from a cold with respect to these diagnostic indexes and the given parameters;

-

\(((I^O)N)\) The patients \(x_1,x_7,x_{19},x_{22}\) and \(x_{35}\) are not suffering from a cold under the current diagnostic conditions;

-

\(((I^O)B)\) The patients \(x_3,x_5,x_{12},x_{13},x_{15},x_{16},x_{17},x_{21},x_{23},x_{25},x_{27},x_{28},x_{30},x_{32}\) and \(x_{36}\) cannot be diagnosed from the present information and need further diagnosis.

Case 2 \(\alpha +\beta <1\). Consider the following loss function:

-

\(\lambda _{PP} = 0, \lambda _{PN} = 19\),

-

\(\lambda _{BP} = 12, \lambda _{BN} = 3\),

-

\(\lambda _{NP} = 19, \lambda _{NN} = 0\).

From this loss function we obtain \(\alpha =0.5\) and \(\beta =0.3\), i.e., \(\alpha +\beta <1\). The lower and upper approximations of \(\widetilde{d}\) for the constructed double-quantitative multigranulation decision-theoretic rough fuzzy set models are shown in Table 4.

Table 4 gives the lower and upper approximations of Type-1 O-DqMDTRFS, Type-2 O-DqMDTRFS, Type-1 P-DqMDTRFS, Type-2 P-DqMDTRFS, Type-1 M-DqMDTRFS and Type-2 M-DqMDTRFS with respect to \(\alpha = 0.5, \beta = 0.3\) and \(k =2\). The rough regions then follow directly from the definitions. For simplicity and without loss of generality, we take Type-1 P-DqMDTRFS as an example.

For \(\alpha =0.5\), \(\beta =0.3\) and \(k =2\), each model again has its own quantitative semantics for the relative and absolute degrees of quantification. Analogously, the thresholds can be determined by practical requirements. The decision rules then follow from the decision mechanisms studied above:

-

\(((I^P)P)\) The patients \(x_3,x_4,x_6,x_9,x_{14},x_{15},x_{17},x_{18},x_{20},x_{24},x_{26},x_{29}\) and \(x_{33}\) are suffering from a cold with respect to these diagnostic indexes and the given parameters;

-

\(((I^P)N)\) The patients \(x_1,x_7,x_{19},x_{22},x_{30}\) and \(x_{35}\) are not suffering from a cold under the current diagnostic conditions;

-

\(((I^P)B)\) The patients \(x_2,x_5,x_8,x_{10},x_{11},x_{16},x_{21},x_{23},x_{27},x_{28},x_{31},x_{34}\) and \(x_{36}\) cannot be diagnosed from the present information; further diagnosis is needed before a decision can be made.

Case 3 \(\alpha +\beta >1\). Consider the following loss function:

-

\(\lambda _{PP} = 0, \lambda _{PN} = 21\),

-

\(\lambda _{BP} = 7, \lambda _{BN} = 2\),

-

\(\lambda _{NP} = 9, \lambda _{NN} = 0\).

From this loss function we obtain \(\alpha =0.7\) and \(\beta =0.5\), i.e., \(\alpha +\beta >1\). The lower and upper approximations of \(\widetilde{d}\) for the designed double-quantitative multigranulation decision-theoretic rough fuzzy set models are shown in Table 5.

From Table 5, we can compute the rough regions of Type-1 O-DqMDTRFS, Type-2 O-DqMDTRFS, Type-1 P-DqMDTRFS, Type-2 P-DqMDTRFS, Type-1 M-DqMDTRFS and Type-2 M-DqMDTRFS with respect to \(\alpha = 0.7, \beta = 0.5\) and \(k =2\). Without loss of generality, we take Type-1 M-DqMDTRFS as an example.

For \(\alpha =0.7\), \(\beta =0.5\) and \(k =2\), these rough regions again have their own quantitative semantics for the relative and absolute degrees of quantification. The results show that the rough regions vary with the thresholds. Using the Type-1 M-DqMDTRFS model, we obtain the following decision rules:

-

\(((I^M)P)\) The patients \(x_3,x_4,x_6,x_8,x_9,x_{11},x_{14},x_{17},x_{18},x_{20},x_{23},x_{24},x_{26},x_{29},x_{31}\) and \(x_{33}\) are suffering from a cold with respect to these diagnostic indexes and the given parameters;

-

\(((I^M)N)\) The patients \(x_1,x_5,x_7,x_{12},x_{13},x_{16},x_{19},x_{22},x_{25},x_{27},x_{30},x_{32}\) and \(x_{35}\) are not suffering from a cold under the current diagnostic conditions;

-

\(((I^M)B)\) The patients \(x_2,x_{10},x_{15},x_{21},x_{28},x_{34}\) and \(x_{36}\) cannot be diagnosed from the present information and need further diagnosis.

For a given information system, the rough regions and decision rules depend on the parameters, which can be chosen to address different problems. The case studies show that the rough regions and decision rules vary with the thresholds, and that the decision for a given patient depends on both the model and the thresholds. With \(\alpha =0.6\), \(\beta =0.4\) and \(k =2\), the decision rules indicate that patients \(x_2\), \(x_4\), \(x_6\), \(x_8\), \(x_9\), \(x_{10}\), \(x_{11}\), \(x_{14}\), \(x_{18}\), \(x_{20}\), \(x_{24}\), \(x_{26}\), \(x_{29}\), \(x_{31}\), \(x_{33}\) and \(x_{34}\) are suffering from a cold. Patient \(x_2\) is diagnosed with a cold by Type-1 O-DqMDTRFS but is not treated as sick in the original Table 1; an analysis of the symptoms of \(x_2\) suggests that the original medical data may contain a misdiagnosis. With \(\alpha =0.5\), \(\beta =0.3\) and \(k =2\), patients \(x_3,x_4,x_6,x_9,x_{14},x_{15},x_{17},x_{18},x_{20},x_{24},x_{26},x_{29}\) and \(x_{33}\) are diagnosed with a cold by Type-1 P-DqMDTRFS. In particular, \(x_{15}\) is classified as sick here, although it cannot be diagnosed from the present information by Type-1 O-DqMDTRFS. Considering the symptoms of \(x_{15}\) shown in Table 1, we think that \(x_{15}\) is unlikely to have a cold. With \(\alpha =0.7\), \(\beta =0.5\) and \(k =2\), the results show that patients \(x_3,x_4,x_6,x_8,x_9,x_{11},x_{14},x_{17},x_{18},x_{20},x_{23},x_{24},x_{26},x_{29},x_{31}\) and \(x_{33}\) are suffering from a cold according to Type-1 M-DqMDTRFS. These results are largely consistent with those of Type-1 O-DqMDTRFS: 13 of the 16 patients coincide. Although the sets of healthy patients differ among these models, patients \(x_1, x_7, x_{19}, x_{22}\) and \(x_{35}\) are diagnosed as healthy by Type-1 O-DqMDTRFS, Type-1 P-DqMDTRFS and Type-1 M-DqMDTRFS alike.

Their symptoms indicate that they are healthy. Using these models, we can perform a preliminary diagnostic analysis of patients. Since the diagnostic results for different models and thresholds are not completely consistent, an appropriate model and appropriate thresholds should be chosen according to the practical requirements of the application.
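The cross-model comparison above reduces to set operations on the positive and negative regions. The sketch below encodes the patient indices exactly as listed in the three sets of decision rules (the dictionary keys "O", "P" and "M" are illustrative labels for Type-1 O-, P- and M-DqMDTRFS under their respective thresholds) and recovers the patients diagnosed as healthy by all three models and the overlap between the Type-1 O and Type-1 M positive regions.

```python
# Positive (P) and negative (N) decision regions exactly as listed in the three cases;
# the integers are patient indices i of x_i.
POS = {
    "O": {2, 4, 6, 8, 9, 10, 11, 14, 18, 20, 24, 26, 29, 31, 33, 34},   # Type-1 O, alpha=0.6, beta=0.4
    "P": {3, 4, 6, 9, 14, 15, 17, 18, 20, 24, 26, 29, 33},              # Type-1 P, alpha=0.5, beta=0.3
    "M": {3, 4, 6, 8, 9, 11, 14, 17, 18, 20, 23, 24, 26, 29, 31, 33},   # Type-1 M, alpha=0.7, beta=0.5
}
NEG = {
    "O": {1, 7, 19, 22, 35},
    "P": {1, 7, 19, 22, 30, 35},
    "M": {1, 5, 7, 12, 13, 16, 19, 22, 25, 27, 30, 32, 35},
}

healthy_in_all = set.intersection(*NEG.values())  # diagnosed healthy by every model
sick_in_all = set.intersection(*POS.values())     # diagnosed with a cold by every model
o_and_m = POS["O"] & POS["M"]                     # overlap of Type-1 O and Type-1 M positive regions

print("healthy in all models:", sorted(healthy_in_all))
print("cold in all models:", sorted(sick_in_all))
print("Type-1 O vs Type-1 M overlap:", len(o_and_m), "of", len(POS["O"]))
```

The same intersection shows that ten patients, \(x_4,x_6,x_9,x_{14},x_{18},x_{20},x_{24},x_{26},x_{29}\) and \(x_{33}\), lie in the positive region of all three models.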

6 Conclusions

In this paper, to study a double-quantitative decision-theoretic approach that fuses relative and absolute quantitative information in fuzzy approximation spaces, we introduce the idea of double-quantitative decision theory into the multigranulation framework. The paper investigates double quantification, namely relative and absolute quantitative information, by combining the DTRFS and GRFS models with a fuzzy concept. By recombining the DTRFS and GRFS models, three pairs of DqMDTRFS models are established. We study the basic properties of the three pairs of models, derive the decision rules of each model based on Bayesian decision-making, analyse the relationship between each model and multigranulation rough sets, and discuss the relationships among the three pairs of DqMDTRFS models. A case study on diagnosing colds illustrates the feasibility of the proposed models and method. Using these models, we can make a preliminary diagnostic analysis of patients. Since the diagnostic results of different models and thresholds are not completely consistent, the appropriate model and thresholds should be chosen according to the actual needs of the application. The proposed decision-theoretic rough fuzzy set models open up a way for decision-making research on probabilistic rough sets in fuzzy environments.

References

Pawlak Z (1982) Rough sets. Int J Comput Inf Sci 11(5):341–356

Pawlak Z (1991) Rough sets: theoretical aspects of reasoning about data. Kluwer Academic, Dordrecht

Zhao XR, Hu BQ (2016) Fuzzy probabilistic rough sets and their corresponding three-way decisions. Knowl Based Syst 91:126–142

Zhang XH, Miao DQ, Liu CH, Le ML (2016) Constructive methods of rough approximation operators and multigranulation rough sets. Knowl Based Syst 91:114–125

Zhang YL, Li CQ, Lin ML, Lin YJ (2015) Relationships between generalized rough sets based on covering and reflexive neighborhood system. Inf Sci 319:56–67