Abstract

In this paper, a new general approach is presented to fit a fuzzy regression model when the response variable and the parameters of model are as fuzzy numbers. In this approach, for estimating the parameters of fuzzy regression model, a new definition of objective function is introduced based on the different loss functions and under the averages of differences between the \(\alpha \)-cuts of errors. The application of the proposed approach is studied using a simulated data set and some real data sets in the presence of different types of outliers.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

The fuzzy regression analysis is based on some regression models between a response variable and some explanatory variables when some quantities are as imprecise. Also, among the observed data, we may encounter with some outliers. In such situations, we need to introduce a regression model which supports these limitations. An approach for solving these limitations is using of the robust fuzzy regression models.

In the following, we review some works on robust fuzzy regression models. Arefi (2020) studied a robust fuzzy regression based on a generalized quantile loss function under the fuzzy outputs and fuzzy parameters. Chang and Lee (1994) presented fuzzy least absolute deviations regression based on the ranking of fuzzy numbers. An approach to fit a robust least squares fuzzy regression based on a kernel function is investigated by Khammar et al. (2020). Oussalah and De Schutter (2002) proposed a fuzzy regression model with the combination of least trimmed squares (LTS) and least median squares (LMS). They studied the performance of the proposed model when the data are contaminated by outliers. Sanli and Apaydin (2004) investigated a robust estimation procedure for fuzzy linear regression model with fuzzy input–output data based on the least median squares method. A robust approach to model a fuzzy linear regression based on M-estimators is studied by Shon (2005). Choi and Buckley (2008) utilized the least absolute deviations (LAD) method for estimating the parameters of a fuzzy regression model and investigated the performance of the proposed model under the fuzzy outlier data. D’Urso et al. (2011) and D’Urso and Massari (2013) proposed a robust fuzzy linear regression model with crisp inputs and fuzzy outputs based on the least median squares-weighted least squares (LMS-WLS) estimation procedure. Some approaches to fit the fuzzy regression models based the least absolutes method are investigated by Chachi and Taheri (2016), Taheri and Kelkinnama (2012), and Zeng et al. (2016). Based on the least trimmed squares estimation, Chachi and Roozbeh (2017) proposed a estimation procedure for determining the coefficients of a fuzzy regression model with crisp input–fuzzy output data. A weighted least-squares fuzzy regression model under crisp input–fuzzy output data and fuzzy coefficients is provided by Chachi (2019). For considering some other approaches of fuzzy regression models, see Chen and Hsueh (2007, 2009), Nasrabadi and Hashemi (2008), Hao and Chiang (2008), Kula et al. (2012), Mosleh et al. (2010), Arefi and Taheri (2015), Lopez et al. (2016), Hesamian and Akbari (2019), and Rapaic et al. (2019).

This paper is organized as follows: In Sect. 2, some preliminary concepts about fuzzy sets and fuzzy numbers are provided. In Sect. 3, a new general approach based on the different loss functions is investigated to fit the fuzzy regression models when the response variable and the parameters of models are as fuzzy numbers. In this section, we present some indices of goodness of fit to evaluate the proposed fuzzy regression models. Also, the cross-validation method is provided to examine the predictive ability of the proposed fuzzy regression models. Some numerical examples to assess the effectiveness of the proposed method are presented in Sect. 4. Finally, in Sect. 5, some concluding remarks are provided.

2 Preliminary concepts

In this section, we recall some notations and preliminary concepts on fuzzy sets (see Zimmermann 2001).

Let \(\Omega \) be an universal set. A fuzzy set \(\tilde{N}\) of \(\Omega \) is defined by the membership function \(\tilde{N}:\Omega \rightarrow [0, 1]\). The \(\alpha \)-cut of \(\tilde{N}\) is as \(\tilde{N}[\alpha ]=\{x\in R:\tilde{N}(x)\ge \alpha \}\) for \(0<\alpha \le 1\).

Definition 1

A fuzzy set \(\tilde{N}\) on \(\Omega \) is called a fuzzy number, if

-

(i)

\(\widetilde{N}(x)=1\) for some \(x\in \Omega \),

-

(ii)

\(\widetilde{N}[\alpha ]\) is a closed bounded interval for \(0<\alpha \le 1\).

Definition 2

Let \(\tilde{N}\) be a fuzzy number, then a LR fuzzy number is defined by the following membership function

where \(l, r\ge 0\) and L(.) and R(.) are the strictly decreasing functions as \(L, R :R^+ \rightarrow [0,1]\). It is denoted by \(\tilde{N}=(m, l,r)_{LR}\).

Remark 1

In a LR fuzzy number \(\tilde{N}\), if \(L(x) = R(x)\), then \(\tilde{N}\) is called the LL fuzzy number and is denoted as \(\tilde{N}=(m,l,r)_{LL}\). For \(L(x) = R(x) = 1-x\) for all \(x\in [0,1]\), \(\tilde{N}\) is called a triangular fuzzy number and is denoted by \(\tilde{N}=(m,l,r)_T\). Also, for \(l=r\), \(\tilde{N}\) is a symmetric triangular fuzzy number as \(\tilde{N}=(m,l)_T\).

Proposition 1

Assume that \(\tilde{A}=(m_a, l_a,r_a)_{LR}\) and \(\tilde{B}=(m_b, l_b,r_b)_{LR}\) are two LR fuzzy numbers and \(\lambda \in R-\{0\}\). Some of the arithmetic operations based on extension principle (Zimmermann 2001) are given as follows

3 Methodology

In this section, we introduce a new approach in fuzzy regression theory based on the concept of \(\alpha \)-cuts for the crisp inputs and the fuzzy output when the parameters of model are as fuzzy quantities.

3.1 Fuzzy regression model

In the following, we introduce fuzzy regression model under crisp input–fuzzy output variables. Our aim is to fit an optimal fuzzy linear regression model to this data set. Suppose that the fuzzy linear regression model based on crisp input–fuzzy output variables and the fuzzy parameters is given as follows

where, \(\tilde{\beta _j}=(\beta _j, \gamma _j)_{LL}\), \(j=0,\ldots ,p\), and \(\widetilde{Y}_i=(y_i, s_{i})_{LL}\), \(i=1,\ldots ,n\). The estimated fuzzy response variables are obtained as follows:

where \(x_{i0}=1\), \(i=1,\ldots ,n\).

3.2 Objective function

In the following, we first introduce some differences between the \(\alpha \)-cuts of fuzzy numbers, and then the objective functions of fuzzy regression models are provided based on loss function on these differences as follows.

Definition 1

Let \(\tilde{A}\) and \(\tilde{B}\) be two fuzzy numbers with \(\alpha \)-cuts \(\tilde{A}[\alpha ]=[\tilde{A}^L[\alpha ], \tilde{A}^R[\alpha ]]\) and \(\tilde{B}[\alpha ]=[\tilde{B}^L[\alpha ], \tilde{B}^R[\alpha ]]\). The averages of differences between the lower and upper \(\alpha \)-cuts of \(\tilde{A}\) and \(\tilde{B}\) are defined as

Remark 2

Let \(\tilde{A}\), \(\tilde{B}\) and \(\tilde{C}\) be three fuzzy numbers. Then, DL(., .) and DR(., .) in Definition 1 satisfy the following properties:

-

(i)

\(DL(\tilde{A},\tilde{A})=0\) and \(DR(\tilde{A},\tilde{A})=0\),

-

(ii)

\(DL(\tilde{A},\tilde{B})=-DL(\tilde{B},\tilde{A})\) and \(DR(\tilde{A},\tilde{B})=-DR(\tilde{B},\tilde{A})\),

-

(iii)

\(DL(\tilde{A},\tilde{B})+DL(\tilde{B},\tilde{C})=DL(\tilde{A},\tilde{C})\) and \(DR(\tilde{A},\tilde{B})+DR(\tilde{B},\tilde{C})=DR(\tilde{A},\tilde{C})\).

Remark 3

In a special case, if \(\tilde{A}=(m_a, l_a,r_a)_{LR}\) and \(\tilde{B}=(m_b, l_b,r_b)_{LR}\) are two LR fuzzy numbers, then

where \(\lambda =\int _{0}^{1}L^{-1}(\alpha )d\alpha \) and \(\rho =\int _{0}^{1}R^{-1}(\alpha )d\alpha \). Furthermore, if \(\tilde{A}=(m_a, l_a,r_a)_{T}\) and \(\tilde{B}=(m_b, l_b,r_b)_{T}\) are two triangular fuzzy numbers, then

Remark 4

If the LR fuzzy numbers \(\tilde{A}=(m_a, l_a,r_a)_{LR}\) and \(\tilde{B}=(m_b, l_b,r_b)_{LR}\) are reduced to the crisp numbers (i.e. \(l_a=r_a=l_b=r_b=0\)), then \(DL(\tilde{A},\tilde{B})=DR(\tilde{A},\tilde{B})=m_a-m_b\).

Definition 3

Suppose that \(\tilde{Y}_i\) and \(\hat{\widetilde{Y}}_i\) are the observed fuzzy response variable and the estimated fuzzy response variable, respectively. The objective function based on Definition 1 is defined as

where \(\psi (.)\) is a loss function.

In the objective function O, the relation \(\frac{1}{2}\big (\psi (DL(\tilde{Y_i},\hat{\tilde{Y_i}}))+\psi ((DR(\tilde{Y_i},\hat{\tilde{Y_i}}))\big )\) can be considered as the loss value of error between \(\tilde{Y}_i\) and \(\hat{\tilde{Y}}_i\). Note that based on the different loss functions \(\psi (.)\), we can obtain the different fuzzy regression models. Some loss functions are listed as follows:

Remark 5

In the loss function \(\psi ^3_\tau \), \(\tau \) is the quantile level and for \(\tau =0.5\), \(\psi ^3_\tau \) is reduced to the loss function \(\psi ^2\). Also, in the loss function \(\psi ^4_c\), based on some of indices of goodness of fit, we choose the value of c in interval [0, 2] (Huber 1981 says that the good value of c is in interval [1, 2]. So, it is taken as \(c=1.5\)).

From the relation (2), the objective functions based on the above loss functions are provided as follows:

By minimizing these objective functions under the parameters of model, we can obtain the optimal fuzzy regression models.

3.3 Estimation of model parameters

Based on the above objective functions, we can calculate the estimations of parameters of models as follows (assume that \(x_{ij}\ge 0\)).

(A) Squared error loss function: Based on relation (1), the objective function \(O_{\psi ^1}\) is rewritten as

where

By differentiating of \(O_{\psi ^1}\) with respect to \(\beta _j\) and \(\gamma _j\), the estimations of parameters are obtained as follows:

Remark 6

If we encounter with some negative spreads, we can again run the fuzzy regression model with considering such parameters as crisp (i.e. the related spreads are zero). Thus, the centers are obtained as before, but the spreads are calculated as follows

where \(\mathbf{X^{*}}\) is like \(\mathbf{X}\) in which the columns corresponding to crisp parameters are removed.

(B) Absolute error loss function and quantile loss function: For minimizing the objective functions \(O_{\psi ^2}\) and \(O_{\psi ^3}\), we can translate it into the linear programming problems as follows. Assume that \(DL(\tilde{Y_i},\hat{\tilde{Y_i}})=d^{L+}_i-d^{L-}_i\) and \(DR(\tilde{Y_i}, \hat{\tilde{Y_i}})=d^{R+}_i-d^{R-}_i\) with \(d^{L+}_i=\max (DL(\tilde{Y_i},\hat{\tilde{Y_i}}),0)\), \(d^{L-}_i=-\min (DL(\tilde{Y_i},\hat{\tilde{Y_i}}),0)\), \(d^{R+}_i=\max (DR(\tilde{Y_i},\hat{\tilde{Y_i}}),0)\), and \(d^{R-}_i=-\min (DR(\tilde{Y_i},\hat{\tilde{Y_i}}),0)\), then the objective functions are presented as

Hence, the linear programming problems are given as follows:

and

Remark 7

Note that for solving the above linear programming problems, we can use “LINGO” software (Schrage 2006) or “Mathematica” software (Wolfram 2015).

(C) Huber loss function: It is simple to verify that the Huber loss function can be equivalently rewritten as

Hence, the objective functions \(O_{\psi ^4_c}\) based on matrix forms are obtained as follows

where \(\mathbf{L}=(1, 1,\ldots ,1)'\). By differentiating (3) with respect to the vectors \(\beta \) and \(\gamma \), we have

where

Now, the estimations of parameters are obtained by the following simple iterative algorithm:

3.4 Goodness of fit of the model

In order to evaluate the proposed fuzzy regression models, we introduce some indices of goodness of fit. Also, using cross-validation method, the performance and predictive ability of proposed regression models are evaluated (see Geisser 1993; Wasserman 2006)

3.4.1 Goodness of fit

Definition 4

(Pappis and Karacapilidis 1993) Suppose that \(\tilde{Y}_i\) and \(\hat{\tilde{Y}}_i\) are the observed fuzzy response variable and the estimated fuzzy response variable, respectively. The mean of similarity measures (MSM) is defined as

where \(S_{PK}(\hat{\tilde{Y_i}},\tilde{Y_i})=\frac{\text {Card}(\hat{\tilde{Y_i}}\cap \tilde{Y_i})}{\text {Card}(\hat{\tilde{Y_i}}\cup \tilde{Y_i})}\) and \(\text {Card}(\tilde{A})\) denotes the cardinal number of \(\tilde{A}\) as \(\text {Card}(\tilde{A})=\int _{R}^{}\tilde{A}(x)dx\).

Definition 5

(Chen and Dang 2008) Suppose that \(\tilde{Y}_i\) and \(\hat{\tilde{Y}}_i\) are the observed fuzzy response variable and the estimated fuzzy response variable, respectively. The index for goodness of fit of regression model is defined as

where \(S_{CD}(\hat{\tilde{Y_i}},\tilde{Y_i})=\frac{1}{1+E(\hat{\tilde{Y_i}},\tilde{Y_i})}\) and \(E(\hat{\tilde{Y_i}},\tilde{Y_i})=\frac{\int _{S_{\tilde{Y_i}}\cup S_{\hat{\tilde{Y_i}}}}\mid \hat{\tilde{Y_i}}(y)-\tilde{Y_i}(y)\mid dy}{\int _{S_{\tilde{Y_i}}}\tilde{Y_i}(y)dy}\). Also, \(\hat{\tilde{Y_i}}(y)\) and \(\tilde{Y_i}(y)\) are the membership functions of \(\hat{\tilde{Y_i}}\) and \(\tilde{Y_i}\) with the supports \(S_{\hat{\tilde{Y_i}}}\) and \(S_{\tilde{Y_i}}\), respectively.

Definition 6

Suppose that \(\tilde{Y}_i\) and \(\hat{\tilde{Y}}_i\) are the observed fuzzy response variable and the estimated fuzzy response variable, respectively. Based on the objective function \(O_{\psi ^2}\), the index of goodness of fit of the model is defined as

where \(S_{O}(\hat{\tilde{Y_i}},\tilde{Y_i})=\frac{1}{1+O_{\psi ^2}(\hat{\tilde{Y_i}},\tilde{Y_i})}\).

Remark 8

The indices MSM, \(\bar{G}_1\) and \(\bar{G}_2\) are on the interval [0, 1]. The optimal model is the model with maximum values of MSM, \(\bar{G}_1\) or \(\bar{G}_2\).

3.4.2 Cross-validation

The cross-validation method is a well-known method for evaluating the performance and predictive ability of regression models. For doing this purpose, we divide the data set with size n into two sets. The first set contains the training data of size \(n-1\), which we can use to develop the regression model, and the second set is the testing data of size \(k=1\), which is used to evaluate the predictive ability of the presented regression model.

Comparison between fuzzy regression models based on the different loss functions and outliers in Example 2

In this method, we consider n steps. In each step, the ith observation is first deleted from the data set for \(i=1,2,\ldots ,n\), and then, the fuzzy regression model is obtained based on the remaining observations (training data set). In final, the value of the ith response variable is predicted based on the proposed fuzzy regression model and is denoted as \(\hat{\tilde{Y_i}}^{(-i)}\). To evaluate the performance of fuzzy regression model, we calculate the indices of goodness of fit MSM, \(\bar{G}_1\) and \(\bar{G}_2\) between \(\tilde{Y_i}\) and \(\hat{\tilde{Y_i}}^{(-i)}\).

4 Numerical examples

In this section, we present some numerical and simulation examples to illustrate our proposed approach.

Example 1

One of the classical problems in soil science is the measurement of physical, chemical, and/or biological soil properties. The problem results from the difficulty, time, and cost of direct measurements. Mohammadi and Taheri (2004) provided a data set that it includes some soil properties such as the cation exchange capacity (CEC), the sand content percentage (SAND), and the organic matter content (OM) (see Table 1). Now, we wish to model a relationship between CEC (as the response variable) and SAND and OM (as the explanatory variables) by the regression model:

where \(\tilde{\beta }_j=(\beta _j,\gamma _j)_T\), \(j=0,1,2\). But due to some impreciseness in related experimental environment, the response variable \(\tilde{Y}_i\) is reported as a symmetric triangular fuzzy number \((y_i, s_i)_T\), in which the spreads are proportional to centers. Here, we consider the sensitivity analysis of model by fuzzifying for different values of spreads as \(s_i=wy_i\) with \(w =0.05,0.10,0.20\). Note that Arefi (2020) recently presented a quantile fuzzy linear regression model on this data set. Since Arefi’s approach (AR) provided a optimal fuzzy regression model on this data set, we compare our work with this approach (the results are listed in Table 2). Results show that our proposed models based on the quantile loss function with \(\tau = 0.5\) and Huber loss function with \(c=0.5\) have better performance than the fuzzy model given by Arefi (2020) (for each \(w=0.05, 0.1, 0.2\)).

Now, to evaluate the performance and predictive ability of optimal regression model based on the quantile loss function with \(\tau = 0.5\), we apply the cross-validation method on data set in Table 1 (with \(s_i = 0.2y_i\)). The results are given in Table 3. Based on the values of goodness-of-fit indices (see Table 3), we can suggest the proposed fuzzy regression model has a suitable performance for predicting the response variables.

Example 2

Consider the data set in Table 4 (Tanaka and Lee 1998). To study the effect of outliers on the our proposed models, we have listed some of different types of outliers in Table 5. Assume that the original fuzzy regression model is as

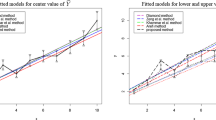

Table 6 lists the optimal fitted fuzzy regression models in the presence of outlier data. In original model, the models proposed based on the absolute error loss function and quantile loss functions (at \(\tau =0.75\)) have better performance than other models. In the presence of outlier data, the fitted fuzzy regression model based on the quantile loss function at \(\tau =0.75\) is robust because the obtained fuzzy regression models based on the quantile loss function at \(\tau =0.75\) are approximately similar in all cases (see Fig. 1).

Now, we apply the cross-validation method on data set in Table 4 based on the optimal fuzzy regression model with the quantile loss function at \(\tau =0.75\). The results are given in Table 7. The values of goodness-of-fit indices show that the predictive ability of the proposed regression model is suitable.

Example 3

(Tanaka et al. 1982) Consider the data set in Table 8. In this data set, the observations of independent variable are crisp and the observations of dependent variable are presented as the symmetric triangular fuzzy numbers. This data set has been considered by many numbers of researchers. Here, we compare our approach with some other approaches introduced by Diamond (1988) (DM), Chen and Hsueh (2007) \((CH_2)\), Chen and Hsueh (2009) \((CH_1)\), Mosleh et al. (2010) (ME), Taheri and Kelkinnama (2012) (TK), Roldan et al. (2012) (RE), Zeng et al. (2016) (ZE), and Lopez et al. (2016) (HE). Results are given in Table 9. By using the indices of goodness of fit, we can suggest the following cases:

-

In between the fuzzy linear regression models based on the least squared errors, the models (SLF), (DM), and \((CH_1)\) have the approximately similar results.

-

In between the fuzzy linear regression models based on the least absolute errors, the model (ALF) has better performance than the models \((CH_2)\), (TK), and (ZE).

-

The fuzzy linear regression model based on the quantile loss function (QLF) at \(\tau =0.6\) provides a model with better performance than other models considered in this example.

Example 4

(Simulation study) In this example, we want to design a data set that empirically assesses the robust performance of the proposed fuzzy regression model based on the quantile loss function. Based on a sample of size \(n=100\) simulated under a fuzzy regression model, we obtain a data set \((x_i,\tilde{Y_i})\), \(i=1,\ldots ,100\). Also, we add three different percentages of simulated outliers as \(p.out=\{0.05, 0.10, 0.20\}\) in this the data set, and we want to evaluate the proposed models. Note that we assume that the data set is simulated based on the following model

Fuzzy regression model under original data and based on quantile loss function with \(\tau =0.5\) in Example 4

Fuzzy regression models in the presence of outliers and based on quantile loss function with \(\tau =0.5\) in Example 4

where \(x_i\) and \(\varepsilon _i\) are generated from \(U(-40, 50)\) and N(0, 1.5), respectively. The outlier observations are generated according to two different types: Type I is the outliers in the centers of \(\tilde{Y}_i\), and Type II is the outliers in the spreads of \(\tilde{Y}_i\). We generate these two types of outliers from the bivariate Gaussian distribution \(N_2\left( \left[ \begin{array}{ll} \mu _x\\ \mu _y\\ \end{array} \right] , \left[ \begin{array}{ll} \sigma _X^2 \ \ \ \rho \sigma _X\sigma _Y\\ \rho \sigma _X\sigma _Y\ \ \ \sigma _Y^2\\ \end{array} \right] \right) \) and uniform distribution, respectively. In Type I, we generate observations \((x_i, y_i)\) from two bivariate Gaussian distributions \(N_2\left( \left[ \begin{array}{ll} \bar{x}+\varepsilon _i\\ max(y_i)+1.5s_y\\ \end{array} \right] , \left[ \begin{array}{ll} 20 \ \ \ 0\\ 0\ \ \ \ 5\\ \end{array} \right] \right) \) and \(N_2\left( \left[ \begin{array}{ll} \bar{x}+\varepsilon _i\\ min(y_i)-1.5s_y\\ \end{array} \right] , \left[ \begin{array}{ll} 20 \ \ \ 0\\ 0\ \ \ \ 5\\ \end{array} \right] \right) \), where \(\varepsilon _i\) is generated from \(U(-20, 40)\) and \(s_y=\sqrt{\frac{1}{n}\sum _{i=1}^{100}(y_i-\overline{y})^2}\). In Type II, we generate the spreads of triangular fuzzy response observations \((y_i, s_{i})_T\) based on U(9, 13). The results of the fitted fuzzy regression models based on the quantile loss function with \(\tau =0.25,0.5,0.75\) are given in Table 10. Based on the index of MSM, the model based on the quantile loss function with \(\tau =0.5\) has the best results. See Figs. 2 and 3 for the performance of the best fuzzy regression models (using quantile loss function at \(\tau =0.5\)). They show the robustness performances from our proposed model in original data set and in the models with the presence of outliers. Also, we can consider the similar results based on the results given in Tables 10 and 11. Based on these tables, we have

-

The MSM in the original data and the MSM without outliers (in the models with outliers) are approximately similar (see Table 10).

-

The values of the estimated parameters with original data and the mean values of the estimated parameters in the presence of outliers are approximately similar (see Table 11).

5 Conclusion

In this paper, we present a new approach to fit the fuzzy regression models based on some loss functions when the response variable and the parameters of model are as fuzzy numbers. Some of certain merits in this approach are as follows:

- (1):

-

It is a general approach for fitting the fuzzy regression models based on the different types of loss functions.

- (2):

-

A new definition of the objective function is presented based on loss function and the average of differences between the \(\alpha \)-cuts of errors.

- (3):

-

Among the proposed loss functions, the fuzzy regression models based on the quantile loss function and Huber loss function are robust under outlier data.

- (4):

-

To evaluate the goodness of fit of the proposed fuzzy regression models, we introduce three indices.

References

Arefi M (2020) Quantile fuzzy regression based on fuzzy outputs and fuzzy parameters. Soft Comput 24:311–320

Arefi M, Taheri SM (2015) Least-squares regression based on Atanassov’s intuitionistic fuzzy inputs–outputs and Atanassov’s intuitionistic fuzzy parameters. IEEE Trans Fuzzy Syst 23:1142–1154

Chachi J (2019) A weighted least-squares fuzzy regression for crisp input-fuzzy output data. IEEE Trans Fuzzy Syst 27:739–748

Chachi J, Roozbeh M (2017) A fuzzy robust regression approach applied to bedload transport data. Commun Stat Simul Comput 47:1703–1714

Chachi J, Taheri SM (2016) Multiple fuzzy regression model for fuzzy input–output data. Iran J Fuzzy Syst 13:63–78

Chang PT, Lee CH (1994) Fuzzy least absolute deviations regression based on the ranking of fuzzy numbers. In: Proceedings of the third IEEE world congress on computational intelligence, Orlando, FL, vol 2, pp 1365–1369

Chen SP, Dang JF (2008) A variable spread fuzzy linear regression model with higher explanatory power and forecasting accuracy. Inf Sci 178:3973–3988

Chen LH, Hsueh CC (2007) A mathematical programming method for formulating a fuzzy regression model based on distance criterion. IEEE Trans Cybern 37:705–12

Chen LH, Hsueh CC (2009) Fuzzy regression models using the least-squares method based on the concept of distance. IEEE Trans Fuzzy Syst 17:1259–1272

Choi SH, Buckley JJ (2008) Fuzzy regression using least absolute deviation estimators. Soft Comput 12:257–263

Diamond P (1988) Fuzzy least squares. Inf Sci 46:141–157

D’Urso P, Massari R (2013) Weighted least squares and least median squares estimation for the fuzzy linear regression analysis. Metron 71:279–306

D’Urso P, Massari R, Santoro A (2011) Robust fuzzy regression analysis. Inf Sci 181:4154–4174

Geisser S (1993) Predictive inference, vol 55. CRC Press, Boca Raton

Hao PY, Chiang JH (2008) Fuzzy regression analysis by support vector learning approach. IEEE Trans Fuzzy Syst 16:428–441

Hesamian G, Akbari MG (2019) Fuzzy quantile linear regression model adopted with a semi-parametric technique based on fuzzy predictors and fuzzy responses. Expert Syst Appl 118:585–597

Huber PJ (1981) Robust statistics. Wiley, New York, pp 153–195

Khammar AH, Arefi M, Akbari MG (2020) A robust least squares fuzzy regression model based on kernel function. Iran J Fuzzy Syst 17:105–119

Koenker R (2005) Quantile regression. Cambridge University Press, Cambridge

Kula KS, Tank F, Dalkyly TE (2012) A study on fuzzy robust regression and its application to insurance. Math Comput Appl 17:223–234

Lopez R, de Hierro AF, Martinez-Morenob J, Aguilar-Pena C, Lopez R, de Hierro C (2016) A fuzzy regression approach using Bernstein polynomials for the spreads: computational aspects and applications to economic models. Math Comput Simul 128:13–25

Mohammadi J, Taheri SM (2004) Pedomodels fitting with fuzzy least squares regression. Iran J Fuzzy Syst 1:45–61

Mosleh M, Otadi M, Abbasbandy S (2010) Evaluation of fuzzy regression models by fuzzy neural network. J Comput Appl Math 234:825–834

Nasrabadi E, Hashemi SM (2008) Robust fuzzy regression analysis using neural networks. Int J Uncertainty Fuzziness Knowl Based Syst 16:579–598

Oussalah M, De Schutter J (2002) Robust fuzzy linear regression and application for contact identification. Intell Autom Soft Comput 8:31–39

Pappis CP, Karacapilidis NI (1993) A comparative assessment of measure of similarity of fuzzy values. Fuzzy Sets Syst 56:171–174

Rapaic D, Krstanovic L, Ralevic N, Obradovic R, Klipa C (2019) Sparse regularized fuzzy regression. Appl Anal Discrete Math 13:583–604

Roldan C, Roldan A, Martinez-Moreno J (2012) A fuzzy regression model based on distances and random variables with crisp input and fuzzy output data: a case study in biomass production. Soft Comput 16:785–795

Sanli K, Apaydin A (2004) The fuzzy robust regression analysis, the case of fuzzy data set has outlier. Gazi Univ J Sci 17:71–84

Schrage L (2006) Optimization Modeling with Lingo, 6th edn. Lindo Systems, Chicago

Shon BY (2005) Robust fuzzy linear regression based on M-estimators. Appl Math Comput 18:591–601

Taheri SM, Kelkinnama M (2012) Fuzzy linear regression based on least absolute deviations. Iran J Fuzzy Syst 9:121–140

Tanaka H, Lee H (1998) Interval regression analysis by quadratic programming approach. IEEE Trans Syst 6:473–481

Tanaka H, Uegima S, Asai K (1982) Linear regression analysis with fuzzy model. IEEE Trans Syst 12:903–907

Wasserman L (2006) All of nonparametric statistics. Springer, Berlin

Wolfram S (2003) The Mathematica Book, 5th edn. Wolfram Media Inc, USA

Zeng W, Feng Q, Lia J (2016) Fuzzy least absolute linear regression. Appl Soft Comput 52:1009–1019

Zimmermann HJ (2001) Fuzzy set theory and its applications, 4th edn. Kluwer Nihoff, Boston

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that there is no conflict of interest regarding the publication of this paper.

Ethical approval

This article does not contain any studies with human participants or animals.

Additional information

Communicated by A. Di Nola.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Khammar, A.H., Arefi, M. & Akbari, M.G. A general approach to fuzzy regression models based on different loss functions. Soft Comput 25, 835–849 (2021). https://doi.org/10.1007/s00500-020-05441-2

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00500-020-05441-2