Abstract

A major concern in the design of foundations is to achieve a precise estimation of bearing capacity of the underlying soil or rock mass. The present study proposes a new design equation for the prediction of the bearing capacity of shallow foundations on rock masses utilizing artificial neural network (ANN). The bearing capacity is formulated in terms of rock mass rating, unconfined compressive strength of rock, ratio of joint spacing to foundation width, and angle of internal friction for the rock mass. Further, a conventional calculation procedure is proposed based on the fixed connection weights and bias factors of the best ANN structure. A comprehensive database of rock socket, centrifuge rock socket, plate load, and large-scaled footing load test results is used for the model development. Sensitivity and parametric analyses are conducted and discussed. The results clearly demonstrate the acceptable performance of the derived model for estimating the bearing capacity of shallow foundations. The proposed prediction equation has a notably better performance than the traditional equations.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

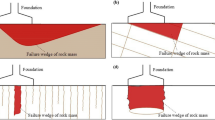

A major concern for foundation design of structures is to precisely estimate the bearing capacity of the underlying layer. The bearing capacity can be defined as the pressure required for causing failure through rupture of underlying soil or rock mass. Rock masses are commonly chosen as the underlying layer for important structures due to less settlement and high bearing capacity compared to soils. Figure 1 represents a typical sketch of a shallow foundation resting on a jointed rock mass. Bearing capacity failure in overloaded rock foundations is one of the common failure mechanisms in rocks (Sowers 1979). This failure mechanism mainly depends on the ratio of space between joints to foundation width (S/B), joint conditions (open or closed), the direction of joints, and rock type (Sowers 1979).

Direct determination of the ultimate bearing capacity using testing methods requires cumbersome and expensive laboratory or field tests. Therefore, several analytical and semi-empirical methods have been conducted to estimate the ultimate bearing capacity of rock beneath the foundations. Analytical methods such as finite element and limit equilibrium methods use initial assumptions for relating the bearing capacity to the footing geometry and rock properties (Terzaghi 1946; Bishoni 1968; Sowers 1979; Goodman 1989). The semi-empirical methods often propose a correlation between the bearing capacity and other properties of rock mass based on the empirical observations and experimental test results (Bowles 1996; Hoek and Brown 1988; Carter and Kulhawy 1988). One of the major drawbacks of the analytical methods is that they do not take into account the important role of the rock type and its qualitative mass parameters such as rock mass rating (RMR). On the other hand, the empirical methods often relate the bearing capacity to qualitative and rock mass classification parameters and do not account for the geometry of the foundations or space between joints. The limitations of the existing analytical and empirical methods imply the necessity of developing new models correlating the bearing capacity factor to both quantitative and qualitative parameters.

Soft computing techniques are considered as alternatives to traditional methods for tackling real-world problems. They automatically learn from data to determine the structure of a prediction model. Artificial neural network (ANN) is a well-known branch of soft computing (Alavi et al. 2010). This technique has been successfully employed to solve problems in civil engineering field (e.g., Kayadelen et al. 2009; Günaydın 2009; Kolay et al. 2010; Das et al. 2010; Yilmaz 2010a, b; Akgun and Türk 2010; Kaunda et al. 2010; Das et al. 2011a, b, c; Mert et al. 2011; Alavi and Gandomi 2011; Mollahasani et al. 2011; Yilmaz et al. 2012; Sattari et al. 2012; Tasdemir et al. 2013; Ocak and Seker 2012, 2013; Isik and Ozden 2013; Alkhasawneh et al. 2014; Wu et al. 2013; Maiti and Tiwari 2014; Park et al. 2013; Ceryan et al. 2013; Manouchehrian et al. 2014). Besides, ANN has been used to predict the bearing capacity of shallow foundations resting on soil layers (Soleimanbeigi and Hataf 2005; Padmini et al. 2008; Kuo et al. 2009; Kalinli et al. 2011).

This study is aimed at developing a new ANN model for the prediction of the bearing capacity of shallow foundations on rock masses. Despite the good performance of ANN in most cases, it is considered a black-box model. That is, it is not capable of generating practical prediction equations. To overcome this limitation, this study proposes an efficient approach to convert the derived ANN model into a relatively simple design equation through the interpretation of the fixed connection weights and bias factors of the best network structure. Multilayer perceptron (MLP), as one of the most popular ANN structures, is chosen for the analysis. A comparative database is used for the establishment of the models.

Artificial neural network

Artificial neural network is a computational simulated system that follows the neural networks of the human brain. The current interest in ANN is largely due to its ability to mimic natural intelligence in its learning from experience (Zurada 1992). ANN typically includes a series of processing elements, nodes or neurons, generally arranged in different layers such as input layer, output layer and one or more hidden layers between them. ANN and similar soft computing techniques are usually utilized to find the relationship or program between input and output variables. Unlike conventional methods, ANN has the ability to achieve acceptable results in less time and without need for predefined criteria, assumptions or rules. In the ANN process, inputs are adapted in hidden layer and after exit from output layer turn into the network’s results. ANN uses a learning rule to find a set of weights on training data. Then, the network produces new output with a particular accuracy. Thereafter, another data set is needed to validate the performance of training phase. This process is developed until the error reaches the minimum value (Alavi and Gandomi 2011).

Multilayer perceptron networks

Multilayer perceptron is one of the most widely used ANN structures utilizing feed-forward architecture. Rumelhart and McClelland (1986) and McClelland and Rumelhart (1988) developed back propagation (BP) or backward propagation of errors algorithm for training multilayer perceptrons. In MLP, each neuron of a layer is interconnected with weighted connections to all neurons of the next layer. Each layer may perform independent calculations on data that is received from the previous layer. In an artificial neuron, each input (x i ) from the previous layer is multiplied by an adaptive weight coefficient (w ij ) that connects two layers. Thereafter, the weighted inputs are summed (Summation Function) and a bias value (Bias j ) is added. This activity is then changed by a function (Transfer Function) to produce the output of the layer (y j ) or input of next layer. For nonlinear problems, the sigmoid functions (Hyperbolic tangent sigmoid or log-sigmoid) are usually selected as the transfer function (Alavi et al. 2010; Alavi and Gandomi 2011; Mollahasani et al. 2011). This process is typically shown by Eq. (1) and represented in Fig. 2.

The BP algorithm adjusts network weights by error propagation from the output to the input. In this algorithm, the process reverted and weight values changed to minimize the error (Alavi et al. 2010; Alavi and Gandomi 2011). Modifying the interconnections between layers will reduce the following error function (E):

where \( t_{k}^{n} \) and \( h_{k}^{n} \) are, respectively, the calculated output and the actual output value. n is the number of sample; and k is the number of output neurons.

Numerical simulation of bearing capacity

In order to reach reliable estimations of the bearing capacity of shallow foundations on rock masses, the impact of several parameters should be incorporated into the model development. The general forms of the existing prediction equations indicate that the ultimate bearing capacity of shallow foundations on rock mass mainly depends on the foundation width and properties of the rock beneath it (Terzaghi 1946; Bishoni 1968; Sowers 1979; Goodman 1989; Bowles 1996; Hoek and Brown 1988; Carter and Kulhawy 1988). The rock mass qualitative parameters such as rock quality designation (RQD) index and geological strength index (GSI) are widely used to develop empirical and semi-empirical equations for the evaluation of rock mass properties (Hoek and Brown 1988; Bowles 1996, 1988; Carter and Kulhawy 1988; AASHTO 2007; Paikowsky et al. 2010). The RMR index is another qualitative parameter that has found wide applications in various types of geological engineering projects. This parameter was introduced by Bieniawski (1978, 1989) to provide reliable estimation of rock mass properties. The RMR value represents different geologic parameters such as RQD index, joint or discontinuity spacing, joint condition, ground water condition, etc. Thus, the RMR parameter implicitly includes the effect of several important parameters for characterizing the rock mass behavior. The present study takes into account the effects of both these qualitative parameters, as well as other influencing quantitative parameters to predict the bearing capacity of shallow foundations on rock masses. It is notable that the rock mass is considered as a continuum equivalent medium. Consequently, the proposed model for the prediction of the ultimate bearing capacity (q ult) is considered to be a function of the following parameters:

where RMR is the rock mass rating, q u (MPa) the unconfined compressive strength of rock, S/B the ratio of joint spacing to foundation width (equivalent diameter), \( \phi \) (°) the angle of internal friction for rock mass.

Experimental database

The ANN-based models are developed using an extensive database including 102 elaborate experimental data obtained from different studies (Abu-Hejleh and Attwooll 2005; Baker 1985; Burland and Lord 1970; Carrubba 1997; Lord 1997; Glos and Briggs 1983; Goek and Hustad 1979; Hummert and Cooling 1988; Jubenville and Hepworth 1981; Lake and Simons 1970; Leung and Ko 1993; Maleki and Hollberg 1995; Mallard 1977; McVay et al. 2006; Nitta et al. 1995; Pellegrino 1974; Pells and Turner 1979, 1980; Radhakrishnan and Leung 1989; Spanovich and Garvin 1979; Thorne 1980; Ward and Burland 1968; Webb 1976; Williams 1980; Wilson 1976). The database contains results of 49 rock socket tests (6 centrifuge rock socket tests), 40 plate load tests and 13 load test on scaled model of footings. The database includes the results of experiments on circle and square footings of different sizes tested on various types of masses such as sandstone, claystone, shale, chalk, and basalt. The major bearing capacity values of rock mass is q ult, initially obtained or interpreted from load–displacement curves proposed by Hirany and Kulhawy (1988). Different parts of the employed database have been used by other researchers for the behavioral analysis of q ult (AASHTO 2007; Paikowsky et al. 2010). The descriptive statistics of the experimental results are given in Table 1. The complete list of the collected data is represented in Table 2.

Data preparation

Overfitting is one of the essential problems in generalization of ANN. Overfitting generally occurs when a model is excessively complex, such as having too many parameters relative to the number of observations. A model which has been overfitted will generally have poor predictive performance, as it can exaggerate minor fluctuations in the data. An approach to avoid overfitting is to test individuals from the run on a validation set to find a better generalization. Then, another data set should be used at the end of the data analysis to verify the generalization performance of the model (Banzhaf et al. 1998; Gandomi et al. 2011). Accordingly, in the present study, the available data sets are randomly classified into three subsets: (1) learning, (2) validation (check), and (3) test subsets. The learning set is used to fit the models and the validation set is used to estimate the prediction error for model selection. Since both of the learning and validation data are involved in the modeling process, they can be categorized into one group, namely training data. Finally, the test set is employed for the evaluation of the generalization ability of the final chosen model. The learning, validation and test data are usually taken as 50–70, 15–25 and 15–25 % of all data, respectively (Shahin and Jaksa 2005; Alavi et al. 2011). In the present study, 85 % of the data sets are taken for the learning and validation processes (72 data vectors for the learning process and 15 data sets as the validation data). The remaining 15 % of the data sets are used for the testing of the obtained models.

Statistical criteria for measuring performance

The best ANN models are chosen on the basis of a multi-objective strategy as follows: (Alavi and Gandomi 2011; Gandomi et al. 2011):

-

1.

The simplest model, although this is not a predominant factor.

-

2.

The best fitness value on the learning data sets.

-

3.

The best fitness value on the validation data sets.

In order to assess the performance of ANN model, correlation coefficient (R), root mean squared error (RMSE) and mean absolute error (MAE) are considered which are calculated using the following equations:

in which h i and t i are, respectively, the actual and predicted output values for the ith output, \( \overline{{h_{i} }} \) and \( \overline{{t_{i} }} \) are the average of the actual and predicted outputs, and n is the number of sample.

Data normalization

An important step to optimize the learning process is data scaling or normalization. Normalization of data increases the speed of learning in neural networks and is especially efficient where the inputs are in widely different scales. More, it is recommended to normalize or standardize the inputs in order to reduce the chances of getting stuck in local optima or unchanged outputs (Alavi et al. 2010). The activation functions tangent sigmoid or log-sigmoid provide an output in the ranges [−1 1] and [0 1], respectively. There are several normalization methods (Swingler 1996; Mesbahi 2000). In this study, after controlling several normalization methods, the following method is used to normalize the variables to a range of [L, U]:

where X max and X min are the maximum and minimum values of the variable and X n is the normalized value. In the present study, L = 0.05 and U = 0.95. Since the US Standard units are considered for the parameters in the original ANN modeling, the maximum and minimum values of the q u and q ult variables in Eq. (7) should be in kips per square foot (ksf). Consequently, q u,n and q ult,n , respectively, represent the normalized forms of q u and q ult, and can be readily determined using the following equations:

in which, q u and q ult are in MPa. Evidently, the RMR, S/B, and ϕ parameters are not affected by this issue. These three parameters are normalized using Eq. (7) and their corresponding X max and X min values shown in Table 1.

Model development

The available database is used for establishing the ANN prediction models. After developing different models with different combinations of the input parameters, the final explanatory variables (RMR, q u , S/B, and \( \phi \)) are selected as the inputs of the optimal model. For the development of the ANN models, a script is written in the MATLAB environment using Neural Network Toolbox 5.1 (MathWorks 2007). The performance of an ANN model mainly depends on the network architecture and parameter settings. According to a universal approximation theorem (Cybenko 1989), a single hidden layer network is sufficient for the traditional MLP to uniformly approximate any continuous and nonlinear function. Choosing the number of the hidden layers, hidden nodes, learning rate, epochs, and activation function type plays an important role in the model construction (Alavi et al. 2010; Alavi and Gandomi 2011; Mollahasani et al. 2011). Hence, several MLP network models with different settings for the mentioned characters were trained to reach the optimal configurations with the desired precision (Eberhart and Dobbins 1990). The written program automatically tries various numbers of neurons in the hidden layer and reports the R, RMSE and MAE values for each model. The model that provided the highest R and lowest RMSE and MAE values on the learning and validation data sets is chosen as the optimal model. Various training algorithms are implemented for the training of the MLP network such as gradient descent (traingd), Levenberg–Marquardt (trainlm), quasi-Newton back-propagation (trainbfg), and resilient (trainrp) back-propagation algorithms. The best results are obtained by Levenberg–Marquardt method. Also, the transfer function between the input and hidden layer is log-sigmoid of form 1/(1 + e −x). A linear transfer function (purelin) is adopted between the hidden layer and output layer.

The weights and biases are randomly assigned for each run. These assignments considerably change the performance of a newly trained network even when all the previous parameter settings and the architecture are kept constant. This leads to extra difficulties in selection of optimal architecture and parameter settings. To overcome this difficulty, the weights and biases are frozen after the networks are well-trained. Thereafter, the following function is used to convert the optimal ANN model into mathematical equations relating the input parameters and the output parameter (h) (Goh et al. 2005; Alavi and Gandomi 2011):

where bias h is the hidden layer bias, V k the weight connection between neuron k of the hidden layer and the single output neuron, bias hk the bias at neuron k of the hidden layer (k = 1, h), w ik the weight connection between the input variable (i = 1, m) and neuron k of the hidden layer, x i the input parameter i, f HO the transfer function between the hidden layer and output layer, and f IH is the transfer function between the input and hidden layer (Alavi and Gandomi 2011).

ANN-based formulation for q ult

The model architecture that gave the best results for the formulation of q ult is found to contain:

-

One invariant input layer, with 4 (n = 4) arguments (RMR, q u , S/B, and ϕ) and a bias term;

-

One invariant output layer with 1 node providing the value of q ult.

-

One hidden layer having 5 (m = 5) nodes.

Figure 3 shows a schematic illustration of the produced ANN network. The ANN model is built with a learning rate of 0.05 and trained for 1,000 epochs. After de-normalization of the output, the final ANN-based formulation of q ult (MPa) is as follows:

where,

in which RMR n , q u,n , (S/B) n , and ϕ n , respectively, represent rock mass rating, unconfined compressive strength of rock, ratio of joint spacing to foundation width, and angle of internal friction for rock mass normalized using Eqs. (7) and (8). k is the number of the hidden layer neurons. The input layer weights (W k ), input layer biases (bias k ), hidden layer weights (V k ), and hidden layer biases (bias h ) of the optimum ANN model are presented in Tables 3 and 4. A comparison of the measured and predicted q ult values by ANN is shown in Fig. 4.

Calculation procedure: design example

A calculation procedure is proposed based on the fixed connection weights and bias factors of the best obtained ANN structure (Alavi and Gandomi 2011). The provided illustrative design example clearly explains the implementation of the ANN prediction equation. For this aim, one of the samples used for the testing of the models is taken. The RMR, q u , S/B, and ϕ values for this sample are equal to 50.00, 12.5 MPa, 1.35, and 34°, respectively, q ult is required. The calculation procedure can be divided into three sections: (1) normalization of the input data; (2) calculation of the hidden layers; and (3) prediction of the output (Alavi and Gandomi 2011). The calculation procedure is outlined in the following steps:

Step 1: Normalization of the input data (RMR, q u , S/B, and ϕ) to lie in a range of 0.05–0.95 and calculation of the input neurons (RMR n , q u,n , (S/B) n , and ϕ n ) for each input data vector using Eqs. (7) and (8). The input neurons are calculated as:

For RMR: the maximum and minimum values of the variable are 15 and 100, respectively. Thus:

For q u : the q u,n value is obtained using Eq. (8). Thus:

Similarly,

Step 2: Calculation of the hidden layer. The input value of each neuron in the hidden layer is determined for five neurons using the input layer weights and biases shown in Table 3. Given the information provided, the input values of the neuron (F 1, …, F 5) are calculated using Eq. (13):

Similarly, \( F_{2} = \, 2.5286, \, F_{3} = \, 3.1551, \, F_{4} = \, - 4.0540,\quad{\text{and}}\quad F_{5} = \, 0.7077 \).

Step 3: Prediction of q ult. The input value of each output neuron is calculated using an activation function (log-sigmoid function). The calculated values are multiplied by the hidden layer connection weights (Table 4) and the summation is obtained:

where f(x) is the a log-sigmoid function of form 1/(1 + e −x). Using Eq. (11), the value of q ult is calculated as follows:

In this example, the results are in good agreement with the measured values (q ult = 14 MPa). The predicted q ult value is 3.4 % higher than the measured value.

Results and discussions

According to Smith (1986), if a model gives R > 0.8, and the error values (e.g., RMSE and MAE) are at the minimum, there is a strong correlation between the predicted and measured values. It can be observed from Fig. 4 that the ANN model with high R and low RMSE and MAE values is able to predict the target values with an acceptable degree of accuracy. The performance of the model on the training and testing data suggests that it has both good predictive abilities and generalization performance. Besides, new criteria recommended by Golbraikh and Tropsha (2002) are checked for external validation of the model on the testing data sets. It is suggested that at least one slope of regression lines (k or k′) through the origin should be close to 1. Also, the performance indexes of m and n should be lower than 0.1. Recently, Roy and Roy (2008) introduced a confirm indicator of the external predictability of models (R m ). For R m > 0.5, the condition is satisfied. Either the squared correlation coefficient (through the origin) between predicted and experimental values (Ro 2), or the coefficient between experimental and predicted values (Ro′2) should be close to \( R_{\text{Test}}^{2} \), and close to 1. The considered validation criteria and the relevant results obtained by the models are presented in Table 5. As it is seen, the derived model satisfies the required conditions. The validation phase ensures the derived ANN model is strongly valid.

In order to have an idea about the prediction performance of the proposed model against a classical model, a comparative study is conducted. For this aim, the obtained results are compared with those provided by the following well-known model developed by Goodman (1989) for the estimation of q ult of the non-fractured rocks:

where, q u is the unconfined compressive strength of rock, S/B the ratio of joint spacing to foundation width, ϕ the angle of internal friction for the rock mass, \( N_{\phi } \) the non-dimensional bearing capacity factor as a function of ϕ.

Figure 5 represents the prediction made by the Goodman’s and ANN models for the entire database. As can be observed, the proposed ANN model (R = 0.976, RMSE = 60.38, MAE = 32.97) model significantly outperforms the Goodman’s model (R = 0.880, RMSE = 443.22, MAE = 140.97). Note that another major advantage of the proposed model over the Goodman’s model is that it considers the important effect of rock mass classification through using RMR. It is worth mentioning that the most of the existing models are derived based on traditional statistical analyses (e.g., regression analysis). The major limitation of this type of analysis is that the structures of the models are designated after controlling only few equations established in advance. Thus, such models cannot efficiently consider the interactions between the dependent and independent variables. Conversely from the empirical and analytical methods, a distinction of ANN for determining the bearing capacity lies in its powerful ability to model the mechanical behavior without requesting a prior form of the existing relationships or any assumptions.

ANN sensitivity analysis of independent variables

It is known that the ANN weight values cannot be interpreted as regression coefficients nor can be used to compute the impact or response of variables. Considering the necessity of realizing the relative importance and output response, several approaches are proposed by various researchers to interpret the ANN weights (Garson 1991; Goh 1994; Olden et al. 2004). In this study, Garson (1991) approach is employed to obtain the relative importance of each variable. It is worth mentioning that the important role of RMR, q u , S/B and ϕ in the prediction of q ult is well-understood. Removing each of these parameters from the conducted analyses has resulted in decreasing the performance of the model. Thus, the sensitivity analysis is only performed to have a comparison between these important parameters.

However, according to the Garson’s approach, interconnection weights between layers of a trained neural network are partitioned and the absolute values of weights are taken to calculate the relative importance of each input variable. This approach has been implemented by several researchers (Das and Basudhar 2008; Alavi et al. 2010; Mollahasani et al. 2011). Figure 6 shows the procedure of this algorithm (Alavi et al. 2010). The relative importance contributions of RMR, q u , S/B and ϕ in the prediction of q ult obtained by ANN model are represented in Fig. 7. As represented in Fig. 6, the relative importance values for RMR, q u , S/B and ϕ are 19, 28, 35 and 19 %, respectively. These values indicate that the bearing capacity of shallow foundations on jointed (non-fractured) rock masses is more sensitive to q u and S/B compared to other input variables. The results generally conform to those noticed by Goodman (1989), Paikowsky et al. (2004, 2010).

Parametric study

A comparative parametric study is performed to evaluate the response of the ANN model to the variation of each independent variable. The methodology is based on changing only one predictor variable at a time while the other variables are kept constant at the average values of their entire data sets. This procedure is repeated using another variable until the model response is obtained for all of the predictor variables (Alavi et al. 2011). In order to determine and compare the capability of the proposed model, the results of the parametric analysis of the Goodman’ model are also included. Figure 8 represents the parametric analysis results. The results for the ANN model indicate that q ult increases with increasing RMR, q u , S/B and ϕ. For S/B, the increasing trend for the ANN model is not as intense as that for the Goodman’ model. On the other hand, while q ult is remarkably increasing with increasing ϕ in the ANN model, the Goodman’ model seems not be very sensitive to the changes of this parameter.

Summary and conclusion

In the present study, a new model is proposed for the estimation of the q ult of shallow foundations on jointed rock masses using the ANN technique. For this aim, a comprehensive and reliable set of data including rock socket, centrifuge rock socket, plate load and large-scaled footing load test results is collected to develop the model. One of the major criticisms about ANN is that it usually does not provide practical prediction equations. To deal with this issue, the optimal ANN model is converted to a relatively simple equation. The tractable ANN-based design equation provides an analysis tool accessible to practicing engineers. The calculation procedure can readily be performed using a spreadsheet or hand calculations to provide precise predictions of q ult. Besides, the proposed model performs significantly better than the widely used Goodman’s model. Moreover, the derived model takes into account the important role of rock classification through using RMR. The results of the sensitivity and parametric analyses are generally expected cases from an engineering viewpoint. The sensitivity analysis indicates that q ult is more sensitive to q u and S/B compared to RMR and ϕ.

References

AASHTO (2007) LRFD bridge design specifications, 4th edn. AASHTO, Washington, DC

Abu-Hejleh N, Attwooll WJ (2005) Colorado’s axial load tests on drilled shafts socketed in weak rocks: synthesis and future needs. Colorado Department of Transportation Research Branch, Report No. CDOT-DTD-R-2005-4, September, Colorado

Akgun A, Türk N (2010) Landslide susceptibility mapping for Ayvalik (Western Turkey) and its vicinity by multicriteria decision analysis. Environ Earth Sci 61(3):595–611

Alavi AH, Gandomi AH (2011) Prediction of principal ground-motion parameters using a hybrid method coupling artificial neural networks and simulated annealing. Comput Struct 89(23–24):2176–2194

Alavi AH, Gandomi AH, Mollahasani A, Heshmati AAR, Rashed A (2010) Modeling of maximum dry density and optimum moisture content of stabilized soil using artificial neural networks. J Plant Nutr Soil Sci 173(3):368–379

Alavi AH, Ameri M, Gandomi AH, Mirzahosseini MR (2011) Formulation of flow number of asphalt mixes using a hybrid computational method. Constr Build Mater 25(3):1338–1355

Alkhasawneh MS, Ngah UK, Tay LT, Isa NAM (2014) Determination of importance for comprehensive topographic factors on landslide hazard mapping using artificial neural network. Environ Earth Sci 72(3):787–799

Baker CN (1985) Comparison of caisson load tests on Chicago hardpan. In: Baker CN Jr (ed) Drilled piers and caissons II. Proceedings of Session at the ASCE Notional Convention, ASCE, Reston, VA, pp 99–113

Banzhaf W, Nordin P, Keller R, Francone F (1998) Genetic programming: an introduction on the automatic evolution of computer programs and its application. Morgan Kaufmann, San Francisco

Bieniawski ZT (1978) Determining rock mass deformability. Int J Rock Mech Min Sci 15:335–343

Bieniawski ZT (1989) Engineering rock mass classifications: a complete manual for engineers and geologists in mining, civil, and petroleum engineering. Wiley-Interscience, pp. 40–47. ISBN 0-471-60172-1

Bishoni L (1968) Bearing capacity of a closely tointed rock. Ph.D. Dissertation, Georgia Institute of Technology

Bowles JE (1996) Foundation analysis and design, 5th edn. McGraw-Hill Inc., New York

Burland JB, Lord JA (1970) The load deformation behavior of Middle Chalk at Mundford, Norfolk: a comparison between full-scale performance and in situ and laboratory measurements. In: Proceedings of the conference on in situ investigations in soils and rocks, British Geotechnical Society, London, pp 3–15

Butler A, Lord JA (1970) Discussion session A, Written Contributions. In: Proceedings of conference on in situ investigations in soils and rocks, May 13–15, 1969, British Geotechnical Society, London, pp 39–54

Carrubba P (1997) Skin friction of large-diameter piles socketed into rock. Can Geotech J 34(2):230–240

Carter P, Kulhawy FH (1988) Analysis and design of foundations socketed into rock. Report No. EL-5918. Empire State Electric Engineering Research Corporation and Electric Power Research Institute, New York

Ceryan N, Okkan U, Kesimal A (2013) Prediction of unconfined compressive strength of carbonate rocks using artificial neural networks. Environ Earth Sci 68(3):807–819

Cybenko J (1989) Approximations by superpositions of a sigmoidal function. Math Control Signal Syst 2:303–314

Das SK, Basudhar PK (2008) Prediction of residual friction angle of clays using artificial neural network. Eng Geol 100(3–4):142–145

Das SK, Samui P, Sabat AK, Sitharam TG (2010) Prediction of swelling pressure of soil using artificial intelligence techniques. Environ Earth Sci 61(2):393–403

Das SK, Biswal RK, Sivakugan N, Das B (2011a) Classification of slopes and prediction of factor of safety using differential evolution neural networks. Environ Earth Sci 64(1):201–210

Das SK, Samui P, Khan SZ, Sivakugan N (2011b) Machine learning techniques applied to prediction of residual strength of clay. Cent Eur J Geosci 3(4):449–461

Das SK, Samui P, Sabat AK (2011c) Application of artificial intelligence to maximum dry density and unconfined compressive strength of cement stabilized soil. Geotech Geol Eng 29(3):329–342

Eberhart RC, Dobbins RW (1990) Neural network PC tools: a practical guide. Academic Press, San Diego

Gandomi AH, Alavi AH, Yun GJ (2011) Nonlinear modeling of shear strength of SFRCB beams using linear genetic programming. Struct Eng Mech 38(1):1–25

Garson D (1991) Interpreting neural-network connection weights. Art Int Expert 6:47–51

Glos III, Briggs OH (1983) Rock sockets in soft rock. J Geotech Eng ASCE 110(10):525–535

Goeke PM, Hustad PA, (1979) Instrumented drilled shafts in clay-shale. In: Fuller EM (ed) Proceeding symposium on deep foundations. ASCE, New York, pp 149–164

Goh ATC (1994) Seismic liquefaction potential assessed by neural network. J Geotech Eng ASCE 120(9):1467–1480

Goh ATC, Kulhawy FH, Chua CG (2005) Bayesian neural network analysis of undrained side resistance of drilled shafts. J Geotech Geoenviron Eng 131(1):84–93

Golbraikh A, Tropsha A (2002) Beware of q2. J Mol Gr Model 20(4):269–276

Goodman RE (1989) Introduction to rock mechanics, 2nd edn. John Wiley & Sons, New York

Günaydın O (2009) Estimation of soil compaction parameters by using statistical analyses and artificial neural networks. Environ Geol 57(1):203–215

Hirany A, Kulhawy FH (1988) Conduct and interpretation of load tests on drilled shafts. Report EL-5915. Electric Power Research Institute, Palo Alto

Hoek E, Brown ET (1988) The Hoek–Brown failure criterion a 1988 update. In: Curran JH (ed) Proceedings of 15th Canadian rock mechanics symposium, Toronto, Civil Engineering Department, University of Toronto

Hummert JB, Cooling TL (1988) Drilled pier test, fort callings, Colorado. In: Prakash S (ed) Proceedings, 2nd international conference on case histories in geotechnical engineering, vol 3, Rolla, pp 1375–1382

Isik F, Ozden G (2013) Estimating compaction parameters of fine- and coarse-grained soils by means of artificial neural networks. Environ Earth Sci 69(7):2287–2297

Jubenville M, Hepworth RC (1981) Drilled pier foundations in shale––Denver, Colorado Area. In: O’Neill MW (ed) Drilled piers and caissons. ASCE, New York, pp 66–81

Kalinli A, Cemal Acar M, Gündüz Z (2011) New approaches to determine the ultimate bearing capacity of shallow foundations based on artificial neural networks and ant colony optimization. Eng Geol 117:29–38

Kaunda RB, Chase RB, Kehew AE, Kaugars K, Selegean JP (2010) Neural network modeling applications in active slope stability problems. Environ Earth Sci 60(7):1545–1558

Kayadelen C, Taşkıran T, Günaydın O, Fener M (2009) Adaptive neuro-fuzzy modeling for the swelling potential of compacted soils. Environ Earth Sci 59(1):109–115

Kolay E, Kayabali K, Tasdemir Y (2010) Modeling the slake durability index using regression analysis, artificial neural networks and adaptive neuro-fuzzy methods. Bull Eng Geol Environ 69(2):275–286

Kuo YL, Jaksa MB, Lyamin AV, Kaggwa WS (2009) ANN-based model for predicting the bearing capacity of strip footing on multi-layered cohesive soil. Comput Geotech 36:503–516

Lake LM, Simons NE (1970) Investigations into the engineering properties of chalk at Welford Theale, Berkshire. In: Proceedings of conference on in situ investigations into soils and rocks, British Geotechnical Society, London, pp 23–29

Leung CF, KO HY (1993) Centrifuge model study of piles socketed in soft rock. Soils Found 33(3):80–91

Lord JA (1997) Foundations, excavations and retaining structures. The geotechnics of hard soils––soft rocks: Proceedings of the second international symposium on hard soils–soft rocks, Athens, Greece

Maiti S, Tiwari RK (2014) A comparative study of artificial neural networks, Bayesian neural networks and adaptive neuro-fuzzy inference system in groundwater level prediction. Environ Earth Sci 71(7):3147–3160

Maleki H, Hollberg K (1995) Structural stability assessment through measurements. In: Proceedings of ISRM international workshop on rock foundations, Tokyo, Japan, pp 449–455

Mallard DJ (1977) Discussion: session 1—Chalk. In: Proceedings of ICE conference on piles in weak rock, pp 177–180

Manouchehrian A, Gholamnejad J, Sharifzadeh M (2014) Development of a model for analysis of slope stability for circular mode failure using genetic algorithm. Environ Earth Sci 71(3):1267–1277

MathWorks (2007) MATLAB the language of technical computing, version 9.1. Natick, MA, USA

McClelland L, Rumelhart DE (1988) Explorations in parallel distributed processing. MIT Press, Cambridge

McVay MC, Ko J, Otero J (2006) Distribution of end bearing and tip shearing on drilled shafts in Florida Limestone. 2006 Geotechnical Research in Progress Meeting, Florida Department of Transportation, August 16th, Florida

Mert E, Yilmaz S, Inal M (2011) An assessment of total RMR classification system using unified simulation model based on artificial neural networks. Neural Comput Appl 20:603–610

Mesbahi E (2000) Application of artificial neural networks in modelling and control of diesel engines. Ph.D. Thesis, University of Newcastle, UK

Mollahasani A, Alavi AH, Gandomi AH, Rashed A (2011) Nonlinear neural-based modeling of soil cohesion intercept. KSCE J Civ Eng 15(5):831–840

Nitta A, Yamamoto S, Sonoda T, Husono T (1995) Bearing capacity of soft rock foundation on in-situ bearing capacity tests under inclined load. In: Proceedings of ISRM international workshop on rock foundations, Tokyo. Japan, pp 327–331

Ocak I, Seker S (2012) Estimation of elastic modulus of intact rocks by artificial neural network. Rock Mech Rock Eng 45(6):1047–1054

Ocak I, Seker SE (2013) Calculation of surface settlements caused by EPBM tunneling using artificial neural network, SVM, and Gaussian processes. Environ Earth Sci 70(3):1263–1276

Olden JD, Joy MK, Death RG (2004) An accurate comparison of methods for quantifying variable importance in artificial neural networks using simulated data. Econ Model 178(3):389–397

Padmini D, Ilamparuthi K, Sudheer KP (2008) Ultimate bearing capacity prediction of shallow foundations on cohesionless soils using neurofuzzy models. Comput Geotech 35:33–46

Paikowsky S, Birgission G, McVay M, Nguyen T, Kuo C, Baecher G, Ayyub B, Stenerson K, O’Mally KK, Chernauskas L, O’Neill M (2004) NCHRP report 507: load and resistance factor design (LRFD) for deep foundations. Transportation Research Board of the National Academies

Paikowsky S, Cannif M, Lensy K, Aloys K, Amatya S, Muganga R (2010) NCHRP report 651: LRFD design and construction of shallow foundations for highway bridge structures. Transportation Research Board of the National Academies

Park S, Choi C, Kim B, Kim J (2013) Landslide susceptibility mapping using frequency ratio, analytic hierarchy process, logistic regression, and artificial neural network methods at the Inje area, Korea. Environ Earth Sci 68(5):1443–1464

Pellegrino A (1974) Surface footings on soft rocks. In: Proceedings, 3rd Congress of International Society for Rock Mechanics, vol 2, Denver, pp 733–738

Pells PJN, Turner RM (1979) Elastic solutions for the design and analysis of rocksocketed piles. Can Geotech J 16:481–487

Pells PJN, Turner RM (1980) End bearing on rock with particular reference to sandstone. In: Proceedings of the international conference on structural foundations on rock, vol 1. Sydney, Australia, pp 181–190

Radhakrishnan R, Leung CF (1989) Load transfer behavior of rock-socketed piles. J Geotech Eng ASCE 115(6):755–768

Roy PP, Roy K (2008) On some aspects of variable selection for partial least squares regression models. QSAR Comb Sci 27:302–313

Rumelhart DE, McClelland JL (1986) Parallel distributed processing, vol. 1: Foundations. The MIT Press, Cambridge

Sattari MT, Apaydin H, Ozturk F (2012) Flow estimations for the Sohu Stream using artificial neural networks. Environ Earth Sci 66(7):2031–2045

Shahin M, Jaksa M (2005) Neural networks prediction of pullout capacity of marquee ground anchors. Comput Geotech 32(3):153–163

Smith GN (1986) Probability and statistics in civil engineering. Collins, London

Soleimanbeigi A, Hataf N (2005) Predicting ultimate bearing capacity of shallow foundations on reinforced cohesionless soils using artificial neural networks. Geosynth Int 12(6):321–332

Sowers GF (1979) Introductory soil mechanics and foundations: geotechnical engineering, 4th edn. MacMillan, New York

Spanovich M, Garvin RG (1979) Field evaluation of Caisson–Shale interaction. In: Lundgren R (ed) Behavior of Deer, foundations (STP 670). ASTM, pp 537–557

Swingler K (1996) Applying neural networks a practical guide. Academic Press, New York

Tasdemir Y, Kolay E, Kayabali K (2013) Comparison of three artificial neural network approaches for estimating of slake durability index. Environ Earth Sci 68(1):23–31

Terzaghi K (1946) Rock defects and loads on tunnel supports. In: Proctor RV, White TL (eds) Rock tunneling with steel supports. Commercial Shearing and Stamping Company, Youngstown, pp 17–99

Thorne CP (1980) Capacity of piers drilled into rock. In: Proceedings, international conference on structural foundations on rock, vol 1. Sydney, pp 223–233

Ward WH, Burland JB (1968) Assessment of the deformation properties of jointed rock in the mass. In: Proceedings of the international symposium on rock mechanics, October 22nd–24th, Madrid, Spain, pp 35–44

Webb DL (1976) Behavior of bored piles in weathered diabase. Geotechnique 26(1):63–72

Williams AF (1980) Design and performance of piles socketed into weak rock. Ph.D. Dissertation, Monash University, Melbourne, Australia

Wilson LC (1976) Tests of bored and driven piles in cretaceous mudstone at Port Elizabeth, South Africa. Geotechnique 26(1):5–12

Wu X, Niu R, Ren F, Peng L (2013) Landslide susceptibility mapping using rough sets and back-propagation neural networks in the Three Gorges, China. Environ Earth Sci 70(3):1307–1318

Yilmaz I (2010a) The effect of the sampling strategies on the landslide susceptibility mapping by conditional probability and artificial neural networks. Environ Earth Sci 60(3):505–519

Yilmaz I (2010b) Comparison of landslide susceptibility mapping methodologies for Koyulhisar, Turkey: conditional probability, logistic regression, artificial neural networks, and support vector machine. Environ Earth Sci 61(4):821–836

Yilmaz I, Marschalko M, Bednarik M, Kaynar O, Fojtova L (2012) Neural computing models for prediction of permeability coefficient of coarse-grained soils. Neural Comput Appl 21(5):957–968

Zurada JM (1992) Introduction to artificial neural systems. West, St Paul

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Ziaee, S.A., Sadrossadat, E., Alavi, A.H. et al. Explicit formulation of bearing capacity of shallow foundations on rock masses using artificial neural networks: application and supplementary studies. Environ Earth Sci 73, 3417–3431 (2015). https://doi.org/10.1007/s12665-014-3630-x

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s12665-014-3630-x