Abstract

For a \(C^{m+1}\) differential system on \(\mathbb {R}^n\), we study the limit cycles that can bifurcate from a zero–Hopf singularity, i.e., from a singularity with eigenvalues \(\pm ~bi\) and \(n-2\) zeros for \(n\ge 3\). If the singularity is at the origin and the Taylor expansion of the differential system (excluding the linear terms) starts with terms of order m, then \(\ell \) limit cycles can bifurcate from the origin with \(\ell \in \{0,1,\ldots , 2^{n-3}\}\) for \(m=2\) [see Llibre and Zhang (Pac J Math 240:321–341, 2009)], with \(\ell \in \{ 0,1,\ldots ,3^{n-2}\}\) for \(m=3\), with \(\ell \le 6^{n-2}\) for \(m=4\), and with \(\ell \le 4\cdot 5^{n-2}\) for \(m=5\). Moreover, \(\ell \in \{0,1,2\}\) for \(m=4\) and \(n=3\), and \(\ell \in \{0,1,2,3,4,5\}\) for \(m=5\) and \(n=3\). In particular, the maximum number of limit cycles bifurcating from the zero–Hopf singularity grows exponentially with n for \(m=2,3\).

1 Introduction and statement of the main results

We recall that a zero–Hopf singularity is an isolated equilibrium point of an n-dimensional autonomous system, \(n\ge 3\), whose linear part has \(n-2\) zero eigenvalues and a pair of purely imaginary eigenvalues. Its unfolding has rich dynamics in a neighborhood of the singularity (see, for example, Guckenheimer [4], Guckenheimer and Holmes [5], Scheurle and Marsden [12], Kuznetsov [6] and the references therein). Moreover, a zero–Hopf bifurcation may lead to a local birth of “chaos.” More precisely, it was shown that, under appropriate conditions, some invariant sets of the unfolding can be obtained from the bifurcation of the singularity (cf. [3, 12]).

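In this paper, we use first-order averaging theory to study the zero–Hopf bifurcation of \(C^{m+1}\) differential systems on \(\mathbb {R}^n\) with \(n \ge 3\) and \(m\le 5\). We assume that these systems have a singularity at the origin whose linear part has eigenvalues \(\varepsilon a \pm b i\) and \(\varepsilon c_k\) for \(k=3,\ldots ,n\), where \(\varepsilon \) is a small parameter. Each of these systems can be written in the form

\[
\begin{aligned}
\dot x &= \varepsilon a x - b y + \sum_{i_1+\cdots+i_n=m} a_{i_1\cdots i_n}\, x^{i_1} y^{i_2} z_3^{i_3}\cdots z_n^{i_n} + P(x,y,z),\\
\dot y &= b x + \varepsilon a y + \sum_{i_1+\cdots+i_n=m} b_{i_1\cdots i_n}\, x^{i_1} y^{i_2} z_3^{i_3}\cdots z_n^{i_n} + Q(x,y,z),\\
\dot z_k &= \varepsilon c_k z_k + \sum_{i_1+\cdots+i_n=m} c_{i_1\cdots i_n,k}\, x^{i_1} y^{i_2} z_3^{i_3}\cdots z_n^{i_n} + R_k(x,y,z), \quad k=3,\ldots,n,
\end{aligned}
\qquad (1)
\]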

where the constants \(a_{i_1\cdots i_n}\), \(b_{i_1\cdots i_n}\), \(c_{i_1\cdots i_n,k}\), a, b and \(c_k\) are real, \(ab \ne 0\) and P, Q and \(R_k\) are the remainder terms in the Taylor series. We refer the reader to [1, 2, 10, 11, 14] and the references therein for details on the study of limit cycles and averaging theory.

Our main result concerns the number of limit cycles that can bifurcate from the origin in a zero–Hopf bifurcation. We show that this number can grow exponentially with the dimension of the system.

Theorem 1

For \(m=3\), there exist \(C^4\) differential systems of the form (1) for which \(\ell \) limit cycles, with \(\ell \in \{0,1,\ldots ,3^{n-2}\}\), bifurcate from the origin at \(\varepsilon =0\). In other words, for \(\varepsilon >0\) sufficiently small there are systems having exactly \(\ell \) limit cycles in a neighborhood of the origin and these tend to the origin when \(\varepsilon \searrow 0\).

Theorem 1 is proved in Sect. 3. For \(n=3\), this result was established in [7], together with a description of the stability of the limit cycles. For \(m=2\), a corresponding result was established earlier in [8], showing that there exist \(C^3\) differential systems of the form (1) for which \(\ell \) limit cycles, with \(\ell \in \{0,1,\ldots ,2^{n-3}\}\), bifurcate from the origin at \(\varepsilon =0\).

Now we consider the cases \(m=4\) and \(m=5\).

Theorem 2

For \(m=4\) and \(\varepsilon >0\) sufficiently small, any \(C^5\) differential system of the form (1) can have at most \(6^{n-2}\) limit cycles in a neighborhood of the origin, and these tend to the origin when \(\varepsilon \searrow 0\). When \(n=3\), the maximum number of limit cycles that can bifurcate from the origin is 2 and this bound is attained.

The proof of Theorem 2 is given in Sect. 4.

Theorem 3

For \(m=5\) and \(\varepsilon >0\) sufficiently small, any \(C^6\) differential system of the form (1) can have at most \(4 \cdot 5^{n-2}\) limit cycles in a neighborhood of the origin, and these tend to the origin when \(\varepsilon \searrow 0\). When \(n=3\), the maximum number of limit cycles which can bifurcate from the origin is 5 and this bound is attained.

2 First-order averaging method for periodic orbits

In this section, we briefly describe the first-order averaging method based on the Brouwer degree developed in [1] (see [11] for the general theory). Roughly speaking, the method relates the periodic solutions of a non-autonomous periodic differential system to the singularities of its averaged differential system, that is, to the zeros of its averaged function. The existence of these periodic solutions is guaranteed by a condition on the Brouwer degree of the averaged function at its isolated zeros. We emphasize that the vector field need not be differentiable.

Consider the system

\[
\dot x = \varepsilon f(t,x) + \varepsilon^2 g(t,x,\varepsilon), \qquad (2)
\]

where \(f :\mathbb {R}\times D\rightarrow \mathbb {R}^n\) and \(g :\mathbb {R}\times D \times (-\delta ,\delta ) \rightarrow \mathbb {R}^n\) are continuous functions, T-periodic in the first variable, and D is a bounded open subset of \(\mathbb {R}^n\). We define the averaged function \(f^0 :D \rightarrow \mathbb {R}^n\) by

\[
f^0(y) = \frac{1}{T}\int_0^T f(s,y)\,ds.
\]

Finally, we denote by \(d_B(f^0,V, v)\) the Brouwer degree of \(f^0\) in a neighborhood V of v.
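To make the averaged function concrete, here is a minimal Python sketch that approximates \(f^0(y)=\frac{1}{T}\int_0^T f(s,y)\,ds\) by sampling one period on a uniform grid. The normalization by \(1/T\) is assumed here; a positive constant factor changes neither the zeros of \(f^0\) nor their Brouwer degree. The helper `averaged_function` and the scalar toy field \(f(t,x)=x-x^3+\cos t\) with \(T=2\pi\) are hypothetical illustrations, not taken from the paper; for this field \(f^0(x)=x-x^3\).

```python
import math


def averaged_function(f, y, T, num_nodes=64):
    """Approximate f0(y) = (1/T) * integral_0^T f(s, y) ds.

    f(s, y) must return a tuple (one entry per component) and be T-periodic in s.
    For a smooth periodic integrand, the trapezoidal rule on a uniform grid is the
    plain mean of the samples and converges very quickly.
    """
    h = T / num_nodes
    total = [0.0] * len(f(0.0, y))
    for k in range(num_nodes):
        for i, value in enumerate(f(k * h, y)):
            total[i] += value
    return tuple(component / num_nodes for component in total)


# Hypothetical scalar example (not from the paper): f(t, x) = x - x**3 + cos(t), T = 2*pi.
def toy_f(t, y):
    x = y[0]
    return (x - x**3 + math.cos(t),)


print(averaged_function(toy_f, (0.5,), 2 * math.pi))  # close to (0.375,), since f0(x) = x - x**3
```

The periodic forcing \(\cos t\) averages out, leaving only the autonomous part of the field.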

Theorem 4

Assume that:

(i) f and g are locally Lipschitz with respect to x;

(ii) for \(v \in D\) with \(f^0(v)=0\), there exists a neighborhood V of v such that \(f^0(z) \ne 0\) for \(z \in \overline{V} {\setminus } \{v\}\) and \(d_B (f^0, V, v) \ne 0\).

Then for \(|\varepsilon |> 0\) sufficiently small, there exists an isolated T-periodic solution \(x(t,\varepsilon )\) of system (2) such that \(x(0,\varepsilon ) \rightarrow v\) when \(\varepsilon \rightarrow 0\). Moreover, if f is \(C^2\) and g is \(C^1\) in a neighborhood of a simple zero v of \(f^0\), the stability of the limit cycle \(x(t,\varepsilon )\) is given by the stability of the singularity v of the averaged system \(\dot{z} =\varepsilon f^0(z)\).
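Theorem 4 can be checked numerically on a toy example. The sketch below assumes the hypothetical scalar system \(\dot x=\varepsilon(x-x^3+\cos t)\), which has the form (2) with \(g=0\) and \(T=2\pi\), and whose averaged function \(x-x^3\) has the simple zero \(v=1\). It locates the initial condition of the periodic solution by a secant iteration on the displacement \(x(2\pi;x_0)-x_0\), using a fixed-step RK4 integrator; the function names, step count and tolerances are illustrative choices.

```python
import math


def period_map(rhs, x0, T, steps=2000):
    """Integrate the scalar ODE x' = rhs(t, x) on [0, T] with classical RK4; return x(T)."""
    h = T / steps
    t, x = 0.0, x0
    for _ in range(steps):
        k1 = rhs(t, x)
        k2 = rhs(t + h / 2, x + h * k1 / 2)
        k3 = rhs(t + h / 2, x + h * k2 / 2)
        k4 = rhs(t + h, x + h * k3)
        x += h * (k1 + 2 * k2 + 2 * k3 + k4) / 6
        t += h
    return x


def periodic_orbit_start(eps, guess, T=2 * math.pi, tol=1e-12, max_iter=50):
    """Initial condition of a T-periodic solution of x' = eps*(x - x**3 + cos t).

    Applies the secant method to the displacement D(x0) = x(T; x0) - x0.
    """
    def rhs(t, x):
        return eps * (x - x**3 + math.cos(t))

    def displacement(x0):
        return period_map(rhs, x0, T) - x0

    a, b = guess, guess + 1e-2
    da, db = displacement(a), displacement(b)
    for _ in range(max_iter):
        if abs(db) < tol or db == da:
            break
        a, b = b, b - db * (b - a) / (db - da)
        da, db = db, displacement(b)
    return b


for eps in (0.1, 0.01, 0.001):
    # x(0, eps) should approach the simple zero v = 1 of f0(x) = x - x**3.
    print(eps, periodic_orbit_start(eps, guess=1.0))
```

The printed initial conditions move toward 1 as \(\varepsilon\) decreases, in line with the conclusion \(x(0,\varepsilon)\to v\). Since \((x-x^3)'=-2<0\) at \(v=1\), the theorem also predicts that this periodic solution is stable.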

We recall that if \(f^0\) is of class \(C^1\) and the determinant of the Jacobian matrix of \(f^0\) at a zero v is nonzero, then v is called a simple zero of \(f^0\); in this case \(d_B(f^0,V,v)=\operatorname{sign}\det Df^0(v) \ne 0\) (see [9]).
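Because the local Brouwer degree at a simple zero is the sign of the Jacobian determinant, condition (ii) of Theorem 4 can be checked numerically. A minimal sketch, assuming a hypothetical averaged function \(F(x,y)=(x-x^3,-y)\) on \(\mathbb{R}^2\) and a central-difference Jacobian (the step `h` and the tolerance `tol` are arbitrary choices):

```python
def jacobian_fd(F, v, h=1e-6):
    """Central-difference Jacobian J[i][j] = dF_i/dv_j of F: R^n -> R^n at v."""
    n = len(v)
    J = [[0.0] * n for _ in range(n)]
    for j in range(n):
        vp, vm = list(v), list(v)
        vp[j] += h
        vm[j] -= h
        Fp, Fm = F(vp), F(vm)
        for i in range(n):
            J[i][j] = (Fp[i] - Fm[i]) / (2 * h)
    return J


def determinant(M):
    """Determinant by Gaussian elimination with partial pivoting."""
    A = [list(row) for row in M]
    n = len(A)
    det = 1.0
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(A[r][c]))
        if A[p][c] == 0.0:
            return 0.0
        if p != c:
            A[c], A[p] = A[p], A[c]
            det = -det
        det *= A[c][c]
        for r in range(c + 1, n):
            factor = A[r][c] / A[c][c]
            for k in range(c, n):
                A[r][k] -= factor * A[c][k]
    return det


def local_degree(F, v, tol=1e-8):
    """Return sign(det DF(v)) at a zero v; raise if the zero is not (numerically) simple."""
    d = determinant(jacobian_fd(F, v))
    if abs(d) < tol:
        raise ValueError("Jacobian is (numerically) singular: zero is not simple")
    return 1 if d > 0 else -1


# Hypothetical averaged function on R^2 (not from the paper).
def F(v):
    x, y = v
    return (x - x**3, -y)


print(local_degree(F, (1.0, 0.0)), local_degree(F, (0.0, 0.0)))  # 1 -1
```

A zero where the Jacobian is singular is rejected rather than assigned a degree, since the degree is then not determined by the linear part alone.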

3 Proof of Theorem 1

Making the change of variables

\[
x = r\cos\theta, \quad y = r\sin\theta, \quad z_k = z_k, \quad k=3,\ldots,n, \qquad (3)
\]

with \(r > 0\), system (1) becomes

where \(O_4=O_4(r,z_3,\ldots ,z_n)\) and the sums run over \(i_1+\cdots +i_n=3\). Here \(\mathbb {Z}_+\) denotes the set of all nonnegative integers. We take \(a_{00e_{ij}}=b_{00e_{ij}}=0\), where \(e_{ij} \in \mathbb {Z}_+^{n-2}\) has the sum of its entries equal to 3; otherwise \(\dot{\theta }\) would contain terms of the form \(z_3^{i_3}\cdots z_n^{i_n}/r\), which are unbounded near \(r=0\). With this choice, since \(b \ne 0\), one can easily verify that \(\dot{\theta }\ne 0\) in some neighborhood of \((r,z_3,\ldots ,z_n)=(0,0,\ldots ,0)\) with \(r>0\). Taking \(\theta \) as the new independent variable in such a neighborhood, system (4) becomes

for \(k=3,\ldots ,n\), with the sums going over \(i_1+\cdots + i_n=3\). Note that this system is \(2 \pi \)-periodic in \(\theta \).
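The passage to cylindrical coordinates uses \(\dot r=\cos\theta\,\dot x+\sin\theta\,\dot y\) and \(\dot\theta=(\cos\theta\,\dot y-\sin\theta\,\dot x)/r\). The sketch below evaluates these rates for a hypothetical field whose linear part is assumed to be \(\dot x=\varepsilon a x-by\), \(\dot y=bx+\varepsilon a y\), \(\dot z_k=\varepsilon c_k z_k\) (one convention with eigenvalues \(\varepsilon a\pm bi\)), for which \(\dot r=\varepsilon a r\) and \(\dot\theta=b\). Adding a pure \(z\) cubic term \(a_{00e}z_3^3\) to \(\dot x\) illustrates why such coefficients are set to zero: their contribution to \(\dot\theta\) is divided by r. The helpers `cylindrical_rates` and `make_field` are hypothetical names.

```python
import math


def cylindrical_rates(field, r, theta, z):
    """Rates (r', theta', z') for x = r cos(theta), y = r sin(theta), z unchanged.

    field(x, y, z) returns (x', y', z'), with z and z' lists (z_3, ..., z_n).
    """
    c, s = math.cos(theta), math.sin(theta)
    xdot, ydot, zdot = field(r * c, r * s, z)
    rdot = c * xdot + s * ydot
    thetadot = (c * ydot - s * xdot) / r
    return rdot, thetadot, zdot


def make_field(eps, a, b, cs, a00):
    """Hypothetical field: assumed linear part plus one pure-z cubic term a00 * z_3**3 in x'."""
    def field(x, y, z):
        xdot = eps * a * x - b * y + a00 * z[0] ** 3
        ydot = b * x + eps * a * y
        zdot = [eps * ck * zk for ck, zk in zip(cs, z)]
        return xdot, ydot, zdot
    return field


linear = make_field(eps=0.1, a=1.0, b=2.0, cs=[-1.0], a00=0.0)
print(cylindrical_rates(linear, 0.3, 0.7, [0.2]))  # about (0.03, 2.0, [-0.02])

with_pure_z = make_field(eps=0.1, a=1.0, b=2.0, cs=[-1.0], a00=1.0)
print(cylindrical_rates(with_pure_z, 1e-6, math.pi / 2, [0.1])[1])  # about -998: z**3 / r dominates
```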

In order to apply the averaging theory, we rescale the variables by setting

Then system (5) becomes

for \(k=3,\ldots ,n\), where

Now system (7) has the normal form (2) for applying the averaging theory with \(x=(\sigma ,\tau _3,\ldots ,\tau _n)\), \(t =\theta \), \(T=2 \pi \), and

The averaged system of system (7) is given by

where \(\Omega \) is some neighborhood of the origin \((\sigma ,\tau _3,\ldots ,\tau _n)=(0,0,\ldots ,0)\), with \(\sigma > 0\) and

where

One can show after some calculations that

for \(k=3,\ldots ,n\), where \(e_j \in \mathbb {Z}_+^{n-2}\) is the unit vector with the jth entry equal to 1, \(e_{ij} \in \mathbb {Z}_+^{n-2}\) has the sum of the ith and jth entries equal to 2 and the others equal to 0 (note that i can be equal to j), and \(e_{ijl} \in \mathbb {Z}_+^{n-2}\) has the sum of the ith, jth and lth entries equal to 3 and the others equal to 0 (again, i, j and l can be equal).
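The multi-indices \(e_j\), \(e_{ij}\), \(e_{ijl}\) (and, in the later proofs, \(e_{ijlu}\), \(e_{ijluv}\)) are exponent vectors in \(\mathbb{Z}_+^{n-2}\) whose entries sum to 1, 2, 3 (respectively 4, 5), one for each multiset of indices. A small sketch that enumerates them; the helper name is hypothetical, and indices are 0-based rather than running from 3 to n. For entry sum d there are \(\binom{n-2+d-1}{d}\) such vectors.

```python
from itertools import combinations_with_replacement
from math import comb


def exponent_vectors(num_vars, degree):
    """All e in Z_+^num_vars whose entries sum to `degree`.

    Each vector comes from a multiset of indices {i, j, l, ...} (repetitions allowed),
    as in the notation e_j, e_ij, e_ijl, ...
    """
    vectors = []
    for indices in combinations_with_replacement(range(num_vars), degree):
        e = [0] * num_vars
        for i in indices:
            e[i] += 1
        vectors.append(tuple(e))
    return vectors


# n = 4, so the z-variables are (z_3, z_4) and num_vars = n - 2 = 2.
print(exponent_vectors(2, 3))  # [(3, 0), (2, 1), (1, 2), (0, 3)]
print(len(exponent_vectors(3, 5)), comb(3 + 5 - 1, 5))  # 21 21
```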

Now we apply Theorem 4 to obtain limit cycles of system (7). Undoing the rescaling (6), these become infinitesimal limit cycles of system (5) that tend to the origin when \(\varepsilon \searrow 0\). Consequently, they are limit cycles bifurcating from the zero–Hopf singularity of (1) at the origin.

We first compute the simple singularities of system (9). Since the transformation from the Cartesian coordinates \((x,y,z_3,\ldots ,z_n)\) to the cylindrical coordinates \((r,\theta ,z_3,\ldots ,z_n)\) is not a diffeomorphism at \(r=0\), we consider the zeros of the averaged function \(f^0\) of (11) with \(\sigma > 0\). So we need to compute the zeros of the equations

for \(k=3,\ldots ,n\). Since the coefficients of these equations are arbitrary, we can simplify the notation by writing them in the form

for \(k=3,\ldots ,n\), where \(a_1, a_{ij}, c_{j,k}\) and \(c_{ijl,k}\) are arbitrary constants.

Let \(\mathcal {C}\) be the set of all algebraic systems of the form (13). We claim that there is a system in \(\mathcal {C}\) with exactly \(3^{n-2}\) simple zeros. An example is

with all the coefficients nonzero. Equation (14) is linear in \(\sigma ^2\), and Eq. (15) is a cubic algebraic equation in the \(\tau _j\)’s. Substituting the unique positive solution \(\sigma _{0}\) of (14) into (15) with \(k=3\), we find that, for an appropriate choice of the coefficients \(c_{3,3}\) and \(c_{333,3}\), this last equation has exactly three different real solutions \(\tau _{30}\), \(\tau _{31}\) and \(\tau _{32}\). Substituting each of the three solutions \((\sigma _{0}, \tau _{3i})\), \(i=0,1,2\), into (15) with \(k=4\), and choosing appropriately the coefficients of (15) with \(k=4\), we obtain three different solutions \(\tau ^i_{40}\), \(\tau ^i_{41}\) and \(\tau ^i_{42}\) for \(\tau _4\). Moreover, one can choose the coefficients so that the nine solutions \((\tau _{3i},\tau ^i_{4j})\), \(i,j=0,1,2\), are distinct. Repeating this process, one can show that for an appropriate choice of the coefficients in (14) and (15), these equations have \(3^{n-2}\) different zeros. By Bezout's theorem (see [13]), \(3^{n-2}\) is the maximum number of solutions, counted with multiplicity, that equations (13) can have. Hence every solution is simple, that is, the determinant of the Jacobian matrix of the system is nonzero at each of these solutions. This establishes the claim.
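The counting argument can be illustrated on a hypothetical triangular cubic system in \(\tau_3,\ldots,\tau_n\) (with \(\sigma=\sigma_0\) already fixed), which is not the paper's system (14)–(15): \(G_k=u_k^3-u_k\), where \(u_3=\tau_3\) and \(u_k=\tau_k-\tau_{k-1}\) for \(k\ge 4\). Each \(G_k\) expands into linear and cubic monomials only (the kinds of terms carried by the coefficients \(c_{j,k}\) and \(c_{ijl,k}\)). Every step offers three choices \(u_k\in\{-1,0,1\}\), which gives \(3^{n-2}\) distinct zeros, and the Jacobian is lower triangular with diagonal entries \(3u_k^2-1\in\{2,-1\}\), so every zero is simple. The sketch checks this with exact integer arithmetic; all names are illustrative.

```python
from itertools import product


def triangular_system(tau):
    """Hypothetical residuals G_k = u_k**3 - u_k, with u_3 = tau_3 and u_k = tau_k - tau_{k-1}."""
    residuals, prev = [], 0
    for t in tau:
        u = t - prev
        residuals.append(u**3 - u)
        prev = t
    return residuals


def jacobian_determinant(tau):
    """Lower-triangular Jacobian: det is the product of dG_k/dtau_k = 3*u_k**2 - 1."""
    det, prev = 1, 0
    for t in tau:
        u = t - prev
        det *= 3 * u * u - 1
        prev = t
    return det


def all_zeros(num_vars):
    """Build every zero step by step: choose u_k in {-1, 0, 1} and set tau_k = tau_{k-1} + u_k."""
    zeros = []
    for steps in product((-1, 0, 1), repeat=num_vars):
        tau, prev = [], 0
        for u in steps:
            prev += u
            tau.append(prev)
        zeros.append(tuple(tau))
    return zeros


for n in range(3, 7):
    zeros = all_zeros(n - 2)
    simple = [z for z in zeros
              if all(g == 0 for g in triangular_system(z)) and jacobian_determinant(z) != 0]
    print(n, len(set(simple)), 3 ** (n - 2))  # the two counts agree: the Bezout bound is reached
```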

Using similar arguments, one can also choose the coefficients of system (14)–(15) so that it has exactly \(\ell \) simple real solutions, for any \(\ell \in \{0,1,\ldots ,3^{n-2}\}\). Taking the averaged system (9) with \(f^0\) having the coefficients as in (14)–(15), the averaged system (9) has exactly \(\ell \) singularities with \(\sigma > 0\). Moreover, the determinant of the Jacobian matrix \(\partial f^0/\partial y\) at each of these singularities does not vanish, because all the singularities are simple. By Theorem 4, we conclude that for each \(\ell \in \{0,1,\ldots ,3^{n-2}\}\) there are systems of the form (1) with exactly \(\ell \) limit cycles bifurcating from the origin. This completes the proof of Theorem 1.

4 Proof of Theorem 2

Making the cylindrical change of variables (3) in the region \(r > 0\), system (1) becomes system (4), now with the sums running over \(i_1+\cdots + i_n=4\). Taking \(a_{00e_{ij}}=b_{00e_{ij}}=0\), where \(e_{ij} \in \mathbb {Z}_+^{n-2}\) now has the sum of its entries equal to 4, it is easy to show that \(\dot{\theta }\ne 0\) in an appropriate neighborhood of \((r,z_3,\ldots ,z_n)=(0,0,\ldots ,0)\) with \(r>0\). Choosing \(\theta \) as the new independent variable in this neighborhood, system (4) becomes system (5), again with the sums running over \(i_1+\cdots + i_n=4\). Note that this system is \(2 \pi \)-periodic in \(\theta \).

To apply the averaging theory in the proof of Theorem 2, we rescale the variables, setting

Then system (5) becomes system (7) with

Now (7) has the normal form (2) of the averaging theory with \(x=(\sigma ,\tau _3,\ldots ,\tau _n)\), \(t =\theta \), \(T=2 \pi \), and f as in (8). The averaged system of system (7) with the previous functions \(f_1\) and \(f_k\) can be written as system (9), where \(\Omega \) is an appropriate neighborhood of the origin \((\sigma ,\tau _3,\ldots ,\tau _n)=(0,0,\ldots ,0)\) with \(\sigma > 0\), and \(f^0(y)\) is given by (10). After some computations, we obtain

for \(k=3,\ldots ,n\), where \(e_{ijlu} \in \mathbb {Z}_+^{n-2}\) has the sum of the ith, jth, lth and uth entries equal to 4 and the others equal to 0 (the indices i, j, l and u may coincide).

Now we apply Theorem 4 to obtain the limit cycles of system (7) (with the sums running over \(i_1+\cdots + i_n=4\)). Undoing the rescaling, these become infinitesimal limit cycles of system (5) that tend to the origin when \(\varepsilon \searrow 0\). Consequently, they are limit cycles bifurcating from the zero–Hopf singularity of system (1) at the origin.

By Theorem 4, to study the limit cycles of system (7) we only need to compute the simple zeros of the averaged function of system (9) (with the sums running over \(i_1+\cdots + i_n=4\)). So we need to compute the zeros of the equations

for \(k=3,\ldots ,n\).

Isolating \(\sigma \) from the first equation in (16) (recall that \(\sigma > 0\)) and substituting it into the other equations of (16), we find that their numerators are polynomials of degree 6. By Bezout's theorem, system (16) has at most \(6^{n-2}\) solutions. We do not know whether this bound is reached, because the first equation of (16) involves the terms \(\sigma ^2 \tau _j\) where the first equation of (12) involves \(\sigma ^2\). Hence the arguments used in the proof of Theorem 1 do not apply, and we cannot show that the bound is attained.

Now we consider the particular case of \(\mathbb {R}^3\). In this case, we have

We need to compute the zeros of

Setting \(\sigma ^2 =R\), from the first equation we get

Substituting this expression of \(\sigma ^2 \) into the second equation and taking the numerator, we get a polynomial of degree two in the variable \(\tau _3^3\), which has at most two real roots. Since, for each real A, the cubic equation \(x^3=A\) has exactly one real solution, the second equation has at most two real solutions \(\tau _3\). We claim that \(\sigma \) can be chosen positive for these two real solutions and that these solutions are simple. The claim can be verified using the example

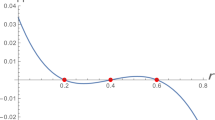

The averaged function for this system is

It has exactly two zeros with \(\sigma >0\), which give the two periodic solutions

for \(i=1,2\) such that

and

when \(\varepsilon \rightarrow 0\). This completes the proof of Theorem 2.
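The counting step for \(n=3\), \(m=4\) (a quadratic polynomial in \(u=\tau_3^3\) yields at most two real values of \(\tau_3\), because each real u has a unique real cube root) can be sketched as follows. The coefficients A, B, C are hypothetical stand-ins for those of the numerator obtained in the proof; the admissibility condition \(\sigma^2>0\) must still be checked separately with the expression of \(\sigma^2\) from the first equation, which this sketch does not model.

```python
import math


def real_cube_root(u):
    """The unique real solution x of x**3 = u."""
    return math.copysign(abs(u) ** (1.0 / 3.0), u)


def tau3_candidates(A, B, C):
    """Real tau_3 with A*u**2 + B*u + C = 0 and u = tau_3**3 (A != 0 assumed)."""
    disc = B * B - 4 * A * C
    if disc < 0:
        return []
    roots_u = {(-B + math.sqrt(disc)) / (2 * A), (-B - math.sqrt(disc)) / (2 * A)}
    return sorted(real_cube_root(u) for u in roots_u)


print(tau3_candidates(1.0, -7.0, -8.0))  # approximately [-1.0, 2.0], from u = -1 and u = 8
```

A double root in u (zero discriminant) collapses to a single candidate, which would not give a simple zero.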

5 Proof of Theorem 3

Making the cylindrical change of variables (3) in the region \(r > 0\), system (1) becomes system (4), with the sums running over \(i_1+\cdots + i_n=5\). Proceeding as in the proofs of Theorems 1 and 2, in a neighborhood of \((r,z_3,\ldots ,z_n)=(0,0,\ldots , 0)\) with \(r > 0\), system (4) becomes system (5), again with the sums running over \(i_1+\cdots + i_n=5\). Note that this system is \(2 \pi \)-periodic in \(\theta \).

To apply the averaging theory, we rescale the variables, setting

Then system (5) becomes system (7), now with the sums running over \(i_1+\cdots +i_n=5\). Note that system (7) has the form (2) required by the averaging theory. Proceeding as in the proofs of Theorems 1 and 2, we obtain

for \(k=3,\ldots ,n\), where \(e_{ijluv} \in \mathbb {Z}_+^{n-2}\) has the sum of the ith, jth, lth, uth and vth entries equal to 5 and the other entries equal to 0 (the indices i, j, l, u and v may coincide).

We need to compute the zeros of the equations

for \(k=3,\ldots ,n\). By Bezout's theorem, these equations have at most \(4 \cdot 5^{n-2}\) solutions. As in the proof of Theorem 2, this upper bound need not be reached, as we verify below in dimension three.
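For orientation, a short sketch tabulating, as functions of n, the bounds stated in the abstract. For m = 2, 3 these are maximal numbers that are attained; for m = 4, 5 they are Bezout upper bounds, and for n = 3 the sharp values are 2 and 5 rather than 6 and 20. The function name is hypothetical.

```python
def limit_cycle_bounds(n):
    """Bounds on the number of bifurcating limit cycles from the abstract, keyed by m."""
    if n < 3:
        raise ValueError("a zero-Hopf singularity requires n >= 3")
    return {2: 2 ** (n - 3), 3: 3 ** (n - 2), 4: 6 ** (n - 2), 5: 4 * 5 ** (n - 2)}


for n in range(3, 7):
    print(n, limit_cycle_bounds(n))
```

Each bound is multiplied by a constant factor (2, 3, 6 or 5) when n increases by one, which is the exponential growth in the dimension noted in the abstract.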

Now consider the particular case of \(\mathbb {R}^3\). In this case, we have

where

We need to compute the zeros of the system

If \(\tau _3=0\), then \(f_1^0=0\) has a unique positive solution \(\sigma =\sigma _0\), which gives the zero \((\sigma _0,0)\). Now we consider the case \(\tau _3 \ne 0\). Let

where

Writing the equations \((f_1^0,f_2^0)=0\) in the variables \(R=\sigma ^2\) and \(W=\tau _3^2\), we get four solutions, say

Since \(R=\sigma ^2\) must be positive, only two of them are admissible. Hence we can have the five simple solutions \((\sigma _0,0)\), \((\sqrt{R_1}, \pm ~\sqrt{W_1})\) and \((\sqrt{R_3}, \pm ~ \sqrt{W_3})\), as illustrated by the system

The averaged function \((f_1^0,f_2^0)\) for this system is

This function has five zeros with \(\sigma >0\), which provide five periodic solutions \((x_i(t,\varepsilon ), y_i(t,\varepsilon ), z_i(t,\varepsilon ))\) for \(i=0,\ldots ,4\) such that

and

when \(\varepsilon \rightarrow 0\). This completes the proof of Theorem 3.
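The bookkeeping at the end of this proof (one zero with \(\tau_3=0\), plus two zeros \((\sqrt R,\pm\sqrt W)\) for each admissible pair \((R,W)\)) can be sketched as follows. The numerical pairs are hypothetical placeholders, not values from the example; the sketch also requires \(W>0\), since \(\tau_3=\pm\sqrt W\) must be real and nonzero.

```python
import math


def assemble_zeros(sigma0, rw_pairs):
    """Zeros (sigma, tau_3) with sigma > 0 built from (sigma0, 0) and candidate pairs (R, W)."""
    zeros = [(sigma0, 0.0)]
    for R, W in rw_pairs:
        if R > 0 and W > 0:  # sigma = sqrt(R) > 0 and tau_3 = +-sqrt(W) real and nonzero
            s, t = math.sqrt(R), math.sqrt(W)
            zeros.extend([(s, t), (s, -t)])
    return zeros


# Hypothetical numbers: two of the four (R, W) pairs have R > 0, as in the proof.
example = assemble_zeros(1.0, [(2.0, 1.0), (-1.0, 3.0), (0.25, 4.0), (-3.0, 0.5)])
print(len(example))  # 5
```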

References

Buica, A., Llibre, J.: Averaging methods for finding periodic orbits via Brouwer degree. Bull. Sci. Math. 128, 7–22 (2004)

Cardin, P.T., Llibre, J.: Transcritical and zero–Hopf bifurcations in the Genesio system. Nonlinear Dynam. 88, 547–553 (2017)

Champneys, A.R., Kirk, V.: The entwined wiggling of homoclinic curves emerging from saddle-node/Hopf instabilities. Phys. D 195, 77–105 (2004)

Guckenheimer, J.: On a codimension two bifurcation. Lect. Notes Math. 898, 99–142 (1980)

Guckenheimer, J., Holmes, P.: Nonlinear Oscillations, Dynamical Systems and Bifurcations of Vector Fields. Springer, Berlin (1983)

Kuznetsov, Y.A.: Elements of Applied Bifurcation Theory, 3rd edn. Springer, Berlin (2004)

Llibre, J., Valls, C.: Hopf bifurcation for some analytic differential systems in \(R^3\) via averaging theory. Discret. Contin. Dyn. Syst. Ser. A 30, 779–790 (2011)

Llibre, J., Zhang, X.: Hopf bifurcation in higher dimensional differential systems via the averaging method. Pac. J. Math. 240, 321–341 (2009)

Lloyd, N.G.: Degree Theory. Cambridge University Press, Cambridge (1978)

Marsden, J.E., McCracken, M.: The Hopf bifurcation and its applications. In: Applied Mathematical Sciences, vol. 19. Springer, New York (1976)

Sanders, J.A., Verhulst, F., Murdock, J.: Averaging methods in nonlinear dynamical systems. In: Applied Mathematical Sciences, vol. 59, 2nd edn. Springer, New York (2007)

Scheurle, J., Marsden, J.: Bifurcation to quasi-periodic tori in the interaction of steady state and Hopf bifurcations. SIAM J. Math. Anal. 15, 1055–1074 (1984)

Shafarevich, I.R.: Basic Algebraic Geometry. Springer, Berlin (1974)

Zhang, Z.F., Ding, T.R., Huang, W.Z., Dong, Z.X.: Qualitative theory of differential equations. Translations of Mathematical Monographs, vol. 101. American Mathematical Society, Providence (1992)

Acknowledgements

The first and third authors are partially supported by FCT/Portugal through UID/MAT/04459/2013. The second author is partially supported by a FEDER-MINECO Grant MTM2016-77278-P, a MINECO Grant MTM2013-40998-P, and an AGAUR Grant 2014SGR-568.

Cite this article

Barreira, L., Llibre, J. & Valls, C. Limit cycles bifurcating from a zero–Hopf singularity in arbitrary dimension. Nonlinear Dyn 92, 1159–1166 (2018). https://doi.org/10.1007/s11071-018-4115-3
