Abstract

An adjustable quantized approach is adopted to treat the \(\mathcal {H}_{\infty }\) sliding mode control of Markov jump systems with general transition probabilities. To solve this problem, an integral sliding mode surface is constructed from an observer driven by the quantized output measurement, and a new bound is developed to relate the system output to its quantization. Nonlinearities incurred by controller synthesis and general transition probabilities are handled by separation strategies. With the help of these measures, linear matrix inequality-based conditions are established to ensure the stochastic stability of the sliding motion and to meet the required \(\mathcal {H}_{\infty }\) performance level. Finally, a single-link robot arm system is simulated to demonstrate the validity of the approach.

1 Introduction

Recently, much research effort has been devoted to Markov jump systems (MJSs) due to their promising applications in economic systems, flight control systems and robot systems [1]. In most studies, the transition probabilities (TPs), which dominate the system behavior in the jumping process, are required to be known [2,3,4,5,6,7,8,9,10,11,12]. Unfortunately, it is often impossible to obtain TPs precisely owing to environmental factors, instrument errors and measurement costs [13]. Hence, from an engineering viewpoint, partially known TPs are investigated in [14,15,16,17] by means of robust methodologies, Gaussian transition probability density functions and the Kronecker product technique.

Alternatively, sliding mode control (SMC) is an effective method to eliminate the impact of uncertainty and has the advantages of fast response and robustness [18, 19]. Regarding the sliding mode technique for uncertain MJSs, [20] proposes a linear matrix inequality solution to the existence of linear sliding surfaces. This result is extended by [21], where an integral sliding mode surface is developed for singular MJSs. In [22], a dynamic sliding surface relying on system states and inputs is developed for descriptor MJSs. Wei et al. [23] utilized a descriptor system setup to treat the sliding mode output control of semi-MJSs. In contrast to the above results with ideal TPs, [24] constructs an integral sliding mode control approach for stochastic MJSs with incomplete TPs. In this result, the sliding mode controller depends on the accessibility of partly known TPs. Along this line, robust sliding mode synthesis of MJSs with time delay is addressed in [25], where TPs may be known, uncertain but time varying, or unknown.

Notably, most of the above results assume that system outputs are transmitted via analog channels. To be consistent with digital channels in a network environment, signal quantization is necessary [26]. Although quantization can improve the transmission efficiency, it can cause nonlinearities which degrade system performance or even destroy system stability [27,28,29,30,31]. Therefore, MJSs with quantization have been investigated in [32,33,34,35]. To be specific, [32] adopts the non-conservative sector bound approach to cope with the logarithmic quantized state feedback control of MJSs. Rasool and Nguang [33] treat the network delay as a finite state Markov process and utilize a logarithmic quantization strategy to tackle the quantized robust \(\mathcal {H}_\infty \) control problem. By augmenting the system vector with the quantization error, [34] converts the quantized fault-tolerant control of MJSs into the sliding mode control of a descriptor system. In contrast to the above results with known TPs, quantized output \(\mathcal {H}_\infty \) filtering of MJSs with known, uncertain and unknown TPs is built in [35]. While these quantized results have enriched the investigation of MJSs, the quantizer is nonuniform and requires infinitely many quantization levels around the equilibrium.

To propose a feasible solution, this paper is dedicated to the \(\mathcal {H}_{\infty }\) sliding mode control of MJSs with an adjustable quantization parameter. The TPs cover the known, uncertain and unknown cases. Based on the quantized system output, an observer-based integral sliding mode surface is developed, and the relationship between the system output and the quantization parameter is built explicitly by a technical lemma. Separation techniques are utilized to cope with the nonlinearities caused by controller synthesis and general TPs. A mode-dependent sliding mode controller is developed via the known TP information to ensure the sliding reachability. Synthesis conditions for the observer and controller gains with the required disturbance attenuation performance level \(\gamma \) are solved in a unified framework. A single-link robot arm (SRA) system is simulated to show the validity of the proposed method.

The rest of the paper is organized as follows. Some preliminaries are supplied in Sect. 2. In Sect. 3, an integral sliding mode surface based on the quantized system outputs and a sliding mode quantized controller are presented. Then, separation strategies are introduced to linearize the nonlinearities induced by quantization and incomplete TPs. A simulation is carried out in Sect. 4. Section 5 concludes this paper.

1.1 Notation

The transpose of H is denoted by \(H^{\mathrm{T}}\). Positive (negative) definiteness of H is written as \(H>0\) \((H<0)\). \(|x |\) and \(|H |\) denote the 1-norm of the vector x and the matrix H, respectively. Similarly, \(||x ||\) and \(||H ||\) denote the 2-norm. \(\mathcal {L}_2\) denotes the space of square integrable vector functions over \( \left[ {0,\infty } \right) \) with \(\varepsilon \left\{ {{{\left\| x \right\| }^2}} \right\} < \infty \). Finally, the symbol He(X) is used to represent \(\left( X + X^{\mathrm{T}}\right) \).

2 Problem statement and preliminaries

Consider Markov jump systems with the following evolution

where \(x(t)\in \mathbb {R}^n\) is the system state vector, \(\omega (t)\in \mathbb {R}^r\) is an energy-bounded disturbance belonging to \(\mathcal {L}_2\), \(u(t)\in \mathbb {R}^m\) is the control input, y(t) is the measured output and z(t) is the regulated output. \( \tilde{A}(r(t))=M(r(t))E(t)N(r(t))\), where M(r(t)), N(r(t)) are known and \(E(t)^{\mathrm{T}}E(t)\le I\). r(t) is a continuous-time Markov process taking values in a finite set \(\mathcal {I}=\{1,2,\ldots ,N\}\) and satisfies

where \(h>0\), \(\lambda _{ij}\geqslant 0\) for \(i\ne j\) and \(\lambda _{ii}=-\sum \nolimits ^{N}_{j=1,j\ne i}\lambda _{ij}\) for each mode i, \(\lim \nolimits _{h\rightarrow 0}o(h)/h=0\).
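To make the role of the rates \(\lambda _{ij}\) concrete, the following minimal Python sketch builds a rate matrix whose diagonal obeys \(\lambda _{ii}=-\sum _{j\ne i}\lambda _{ij}\) and samples one mode path r(t). The function names `make_generator` and `simulate_modes`, the three-mode rate values and the 0-based mode indices are illustrative assumptions, not taken from the paper.

```python
import numpy as np

def make_generator(off_diag_rates):
    """Fill the diagonal so that every row of the rate matrix sums to zero."""
    Q = np.array(off_diag_rates, dtype=float)
    np.fill_diagonal(Q, 0.0)
    np.fill_diagonal(Q, -Q.sum(axis=1))   # lambda_ii = -sum_{j != i} lambda_ij
    return Q

def simulate_modes(Q, r0, t_end, rng):
    """Sample a mode path on [0, t_end]; returns jump times and modes (0-based)."""
    times, modes = [0.0], [r0]
    t, i = 0.0, r0
    while True:
        rate_out = -Q[i, i]
        if rate_out <= 0.0:                    # absorbing mode: no further jumps
            break
        t += rng.exponential(1.0 / rate_out)   # sojourn time in mode i
        if t >= t_end:
            break
        probs = Q[i].copy()
        probs[i] = 0.0
        probs /= rate_out                      # embedded jump-chain probabilities
        i = int(rng.choice(len(probs), p=probs))
        times.append(t)
        modes.append(i)
    return times, modes

# Hypothetical off-diagonal rates for a three-mode chain.
Q = make_generator([[0, 0.5, 0.3], [0.2, 0, 0.6], [0.4, 0.1, 0]])
times, modes = simulate_modes(Q, r0=0, t_end=10.0, rng=np.random.default_rng(0))
```

The sojourn time in mode i is exponentially distributed with rate \(-\lambda _{ii}\), and the next mode is drawn with probability \(\lambda _{ij}/(-\lambda _{ii})\), which is the standard sampling scheme for a continuous-time Markov chain.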

Considering the fact that TPs may be known, uncertain and unknown [14, 36], the incomplete TP matrix with four operation modes is presented below

where \(\bar{\lambda }_{ij}=\lambda _{ij}+\varphi _{ij}\), \(\lambda _{ij}\) denotes the known elements, \(\varphi _{ij}\left( \varphi _{ij} \in \left[ -\,\delta _{ij},\delta _{ij}\right] \right) \) represents the uncertain estimation error with known upper and lower bounds, and “?” denotes an unknown element. Furthermore, \(\mathcal {I}_k^i\) is used to denote the set of known and uncertain TPs in the ith row, while \(\mathcal {I}_{uk}^i\) represents the set of unknown ones. For convenience, let \(\hat{\lambda }_{ij}\) denote an element in any of the possible cases (known, uncertain and unknown).
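As an illustration of this bookkeeping, the sketch below encodes one row of an incomplete TP matrix and splits its column indices into \(\mathcal {I}_k^i\) and \(\mathcal {I}_{uk}^i\). The encoding (a float for a known rate, a pair for an uncertain rate, `None` for “?”), the helper names and the numerical row are hypothetical; indices are 0-based.

```python
from typing import List, Optional, Set, Tuple, Union

# Hypothetical encoding of one TP entry:
#   float          -> exactly known rate lambda_ij
#   (float, float) -> uncertain rate given as (nominal lambda_ij, bound delta_ij)
#   None           -> unknown rate ("?")
Entry = Union[float, Tuple[float, float], None]

def split_row(row: List[Entry]) -> Tuple[Set[int], Set[int]]:
    """Return (I_k, I_uk): indices of known-or-uncertain and of unknown entries."""
    known_or_uncertain = {j for j, e in enumerate(row) if e is not None}
    unknown = {j for j, e in enumerate(row) if e is None}
    return known_or_uncertain, unknown

def interval(entry: Entry) -> Optional[Tuple[float, float]]:
    """Range of values the entry may take; None when the entry is unknown."""
    if entry is None:
        return None
    if isinstance(entry, tuple):
        lam, delta = entry
        return (lam - delta, lam + delta)
    return (float(entry), float(entry))

# Hypothetical row: uncertain diagonal, one known rate, two unknown rates.
row = [(-1.5, 0.25), 0.5, None, None]
I_k, I_uk = split_row(row)
```

Whether the diagonal index i falls in \(\mathcal {I}_k^i\) or \(\mathcal {I}_{uk}^i\) is what separates Case I from Case II in the later derivations.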

To streamline the derivation, an assumption, two definitions and some lemmas are given below:

Assumption 1

[36] \(\omega (t)\) is bounded by a constant d (\(d>0\)) as follows

Definition 1

[2] The autonomous system (1) is stochastically stable (SS), if the following inequality holds:

under initial conditions \(x_0\) and \(r_0\),

Definition 2

[2] Given \(\gamma >0\) and \(x_0=0\), the system (1) meets the required \(\mathcal {H}_\infty \) level \(\gamma \) if

holds for all nonzero \(\omega (t) \in \mathcal {L}_2\).

Lemma 1

[37] The following inequality holds, for \(F(t)^{\mathrm{T}}F(t)\le I\),

where \(\varepsilon >0\).
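Since the displayed inequality is not reproduced here, the following sketch assumes the commonly used form of this bound, \(He(MFN)\le \varepsilon MM^{\mathrm{T}}+\varepsilon ^{-1}N^{\mathrm{T}}N\), and checks it numerically for random data. The matrix sizes, the random seed and the helper `is_psd` are illustrative assumptions.

```python
import numpy as np

def is_psd(S, tol=1e-9):
    """True when the symmetric part of S has no eigenvalue below -tol."""
    return np.linalg.eigvalsh((S + S.T) / 2).min() >= -tol

rng = np.random.default_rng(1)
n, k, l = 3, 2, 2
M = rng.standard_normal((n, k))
N = rng.standard_normal((l, n))
F = rng.standard_normal((k, l))
F /= max(1.0, np.linalg.norm(F, 2))    # scale so that F^T F <= I

checks = []
for eps in (0.1, 1.0, 10.0):
    lhs = M @ F @ N + (M @ F @ N).T               # He(M F N)
    rhs = eps * M @ M.T + (1.0 / eps) * N.T @ N   # assumed upper bound
    checks.append(is_psd(rhs - lhs))
```

The gap `rhs - lhs` equals a Gram matrix plus \(\varepsilon ^{-1}N^{\mathrm{T}}(I-F^{\mathrm{T}}F)N\), so it is positive semidefinite for every \(\varepsilon >0\); the free scalar is what later appears as \(\varepsilon _{1i}\), \(\varepsilon _{2i}\) in the synthesis conditions.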

Lemma 2

[37] Let \(\upsilon \in {\mathcal {R}^n}\), \(\mathcal {P} = {\mathcal {P}^{\mathrm{T}}} \in {\mathcal {R}^{n \times n}}\) and \(rank(\mathcal {H})=r<n\) (\(\mathcal {H} \in \mathcal {R}^{m\times n}\)), then equivalent statements are given below:

1. \(\upsilon ^{\mathrm{T}}\mathcal {P}\upsilon <0\), for all \(\upsilon \ne 0\), \(\mathcal {H}\upsilon =0\);

2. \(\mathcal {H}^{\bot T}\mathcal {P}\mathcal {H}^{\bot } < 0\);

3. \(\exists \mathcal {X}\in \mathcal {R}^{n \times m}\) such that \(\mathcal {P}+He(\mathcal {XH})<0\).
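The equivalence of the second and third statements can be illustrated on a small example. In the sketch below, the matrices P and H, the scalar `sigma` and the choice \(\mathcal {X}=-\frac{\sigma }{2}\mathcal {H}^{\mathrm{T}}\) are assumptions made for illustration; the null-space basis \(\mathcal {H}^{\bot }\) is computed from a singular value decomposition.

```python
import numpy as np

def null_space_basis(H, tol=1e-12):
    """Columns span the kernel of H (an orthonormal basis obtained from the SVD)."""
    _, s, vt = np.linalg.svd(H)
    rank = int((s > tol).sum())
    return vt[rank:].T

# Hypothetical data: P is indefinite, H has rank 1 < n = 2.
P = np.diag([1.0, -1.0])
H = np.array([[1.0, 0.0]])

# Statement 2: P restricted to the kernel of H is negative definite.
H_perp = null_space_basis(H)
stmt2 = np.linalg.eigvalsh(H_perp.T @ P @ H_perp).max() < 0

# Statement 3: one admissible multiplier is X = -(sigma/2) H^T,
# which gives P + He(XH) = P - sigma * H^T H.
sigma = 2.0
X = -0.5 * sigma * H.T
XH = X @ H
stmt3 = np.linalg.eigvalsh(P + XH + XH.T).max() < 0
```

Here P itself is not negative definite, yet both tests succeed: the multiplier only has to compensate P in the directions where \(\mathcal {H}\upsilon \ne 0\).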

For \(r(t)=i\in \mathcal {I}\), system matrices in the ith mode are simplified as \(A_i\), \(\tilde{A}_i\), \(B_{1i}\), \(B_{2i}\), \(C_{i}\), \(C_{di}\), \(M_{i}\) and \(N_{i}\).

As in [28], the dynamical uniform quantizer is given as follows:

where \(\mu (t)\) is the quantizer parameter.

Choosing the quantization error \({e_\mu (t) }\) as \({e_\mu (t) } = {q_\mu }(\alpha ) - \alpha \) gives

where \(\varDelta =\frac{\sqrt{p} }{2}\) and p is the dimension of \(\alpha \).
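A minimal sketch of a uniform quantizer with adjustable parameter is given next. It assumes the common form \(q_\mu (\alpha )=\mu \,\mathrm{round}(\alpha /\mu )\) and measures the error in the Euclidean norm; both are assumptions, since the displayed definition is not reproduced here. Under these assumptions, every component of the error is at most \(\mu /2\) in magnitude, which gives the bound \(\varDelta \mu \) with \(\varDelta =\sqrt{p}/2\). The function names and test values are hypothetical.

```python
import numpy as np

def quantize(alpha, mu):
    """Uniform quantizer with step mu (assumed form: mu * round(alpha / mu))."""
    alpha = np.asarray(alpha, dtype=float)
    return mu * np.round(alpha / mu)

def error_bound(p, mu):
    """Delta * mu with Delta = sqrt(p) / 2, p being the dimension of alpha."""
    return 0.5 * np.sqrt(p) * mu

rng = np.random.default_rng(0)
mu = 0.3
samples = rng.uniform(-5.0, 5.0, size=(1000, 2))       # p = 2
errors = np.linalg.norm(quantize(samples, mu) - samples, axis=1)
worst_error = errors.max()
```

A smaller \(\mu \) tightens the error bound at the cost of more quantization levels over a given range, which is the trade-off that the adjustment policy for \(\mu (t)\) addresses.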

Lemma 3

For a positive constant \(\beta >1\), if the quantizer parameter \(\mu >0\) satisfies

then the following inequalities

hold.

Proof

Based on (8) and the result given in [28], the proof is completed. \(\square \)

Remark 1

Since the integral sliding mode surface is constructed from an observed state, the quantization parameter \(\mu (t)\) is determined by the system output. Although this lemma is an extension of [28], it can render a tighter bound on \(e_{\mu (t)}\).

3 Main results

3.1 Observer-based sliding manifold design

Consider the following integral sliding manifold

where \(X_i\) is a matrix variable to be designed and \(\hat{x}(t)\) is the observer state as below

where \(L_i\) is to be designed.

Remark 2

The choice of \(B_{1i}L_i\) facilitates the sliding mode reachability analysis, as will be shown in the following derivation. Although this form has been adopted in [38], the observer gain matrix \(L_i\) there must be given beforehand and no quantization is considered.

To facilitate the controller construction in the following, Fig. 1 is given below.

For simplicity, \({s}(\hat{x}(t))\) is abbreviated as s. Then the time derivative of \({s}_i\) is obtained from (11):

3.2 SMC design

In the following theorem, the sliding motion to the specified integral sliding surface \( s=0\) is ensured in finite time by exploiting a proper controller.

Theorem 1

Consider system (1) with the sliding mode function (13). With the SMC law designed as (14), (15), (16), the state trajectories are driven onto \(s=0\) in finite time and remain there afterwards.

where

Proof

Choose the candidate Lyapunov functional as

where \(\bar{X}_i=\left( B_{1i}^{\mathrm{T}}X_iB_{1i}\right) ^{-1}\).

Calculating the differential of (17) with (13) yields

Taking the controller (14)–(16) into (18) gives

Since \({\hat{\lambda } _{ij}}\) is incomplete, according to the accessibility of diagonal elements, two cases are discussed as below to ensure the negative definiteness of \(\dot{V}_{1i}\).

Case I \(\left( i\in \mathcal {I}_{k}^i\right) \):

Using the property \(\sum \nolimits _{l \in {I_{uk}^i}}^N {{\hat{\lambda } _{il}}} \mathrm{{ + }}\sum \nolimits _{j \in {I_k^i}}^N {{\hat{\lambda } _{ij}}} = 0\), one has the following fact

Resorting to \(\frac{{\sum \nolimits _{l \in {I_{uk}^i}}^N {{\hat{\lambda } _{il}}} }}{{ - \sum \nolimits _{j \in {I_k^i}}^N {{\hat{\lambda } _{ij}}} }}=1\), one further has

Consequently, taking (21) into (19) supplies

To get \(\dot{V}_{1i}<0\), a direct way is to ensure that the following terms from (22) satisfy

Resorting to norm calculation, the left-hand side of (23) is scaled as

Applying the fact that \(\left| {s} \right| \ge \left\| {s} \right\| \), (23) holds when \(\rho _i\) satisfies

Since \({\sum \nolimits _{j \in {I_k^i}}^N {{\hat{\lambda } _{ij}}\left( { {{{\bar{X}}_j}} - {{{\bar{X}}_l}} } \right) } }\) includes uncertain TPs \(\bar{\lambda }_{ij}\), it is handled with the help of Lemma 1 as below

Substituting (26) into (25) produces

As a result, in this case, \( {\rho _i} \) should meet

which is the condition in (14)–(16).

Case II (\(i\in \mathcal {I}_{uk}^i\)):

Utilizing the property (2) as \( {\hat{\lambda }_{ii}}\) \(= - \sum \nolimits _{j \in {I_k^i}}^N {{\hat{\lambda }_{ij}}} - \sum \nolimits _{l \in {I_{uk}^i}}^N {{\hat{\lambda }_{il}}} \) and taking it into (21) gives

Let \(\bar{X}_j\), \(\bar{X}_l\) satisfy \( {{{\bar{X}}_l}} < {{{\bar{X}}_j}} \), then it leads to:

With respect to uncertain TPs, \(\sum \nolimits _{j=1}^N{\bar{\lambda }_{ij} {\bar{X}_j} } \) has the property as follows.

In a similar way, \(\sum \nolimits _{j = 1}^N {{\hat{\lambda } _{ij}} {{{\bar{X}}_j}} } \) is substituted by (30) and \(\rho _i\) is required to satisfy

which is presented in (14)–(16). \(\square \)

Remark 3

Due to the sign function, the sliding mode controller may cause chattering. To avoid this phenomenon, \(-\rho _i \mathrm{sign}(s)\) is replaced by \(-\rho _i\frac{s}{\left\| {s}\right\| +\iota }\) \((\iota >0)\) in the simulation part.
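The effect of this replacement can be seen in a short sketch. The function name `switching_term` and the sample values of s, \(\rho \) and \(\iota \) are assumptions for illustration; passing `iota=None` selects the discontinuous sign function.

```python
import numpy as np

def switching_term(s, rho, iota=None):
    """Discontinuous term -rho*sign(s), or its smoothed version when iota > 0 is given."""
    s = np.asarray(s, dtype=float)
    if iota is None:
        return -rho * np.sign(s)
    return -rho * s / (np.linalg.norm(s) + iota)

near = np.array([1e-6])     # very close to the sliding surface
far = np.array([10.0])      # far from the sliding surface

hard_near = switching_term(near, rho=2.0)               # full magnitude rho
soft_near = switching_term(near, rho=2.0, iota=0.01)    # magnitude close to zero
soft_far = switching_term(far, rho=2.0, iota=0.01)      # magnitude close to rho
```

Far from the surface the smoothed term behaves almost like the sign function, while near \(s=0\) it scales down continuously, so the control no longer switches at full magnitude. The price is that the trajectories are driven into a small boundary layer around \(s=0\) rather than exactly onto it.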

Remark 4

In [39], system states are assumed to be available and the integral sliding mode surface is quantized. In this paper, however, the surface is built on the quantized system output at the controller side. Moreover, the distinction between this paper and [38] is that the incomplete TPs in the former cover the cases in the latter.

3.3 Adjustment policy for \(\mu (t)\)

In this section, similar to [31], an adjustment policy of parameter \(\mu (t)\) in the quantizer is proposed as follows.

Initialization:

Choose \(\mu (t_0)\) as an arbitrary positive constant and set \(\mu (t)=\mu (t_0)\);

Adjustment steps:

If \(|y(t)|\ge 1\), \(\mu (t)\) is taken as \(\mu (t)=\frac{\lfloor |y(t)| \rfloor }{(\beta +1)\varDelta }\), where \(\lfloor \cdot \rfloor \) denotes the function \(\hbox {floor}(\cdot )\), which rounds its argument to the nearest integer less than or equal to it.

If \(0<|y(t)|<1\), take \(\alpha \) \((0<\alpha <1)\) as a fixed positive constant. There always exists a positive integer i such that \(\alpha ^i \le |y(t)| < \alpha ^{i-1}\); then, we take \(\mu (t)=\frac{\alpha ^i}{(\beta +1)\varDelta }\).

If \(|y(t)|=0\), which means the sliding surface has been reached, then we take \(\mu (t)=0\).
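The adjustment steps above can be sketched as a single function. The name `adjust_mu` is hypothetical; \(|y|\) is taken as the 1-norm following the Notation section, and \(\varDelta =\sqrt{p}/2\) with p the dimension of y. The integer i is found by a simple loop rather than by logarithms to avoid rounding issues at the boundaries \(|y|=\alpha ^i\).

```python
import math
import numpy as np

def adjust_mu(y, beta, alpha):
    """Quantizer parameter mu chosen from the current output y (three-case policy)."""
    y = np.atleast_1d(np.asarray(y, dtype=float))
    delta = math.sqrt(y.size) / 2.0          # Delta = sqrt(p) / 2
    y_norm = float(np.abs(y).sum())          # |y|: 1-norm of the output
    if y_norm >= 1.0:
        return math.floor(y_norm) / ((beta + 1.0) * delta)
    if y_norm > 0.0:
        i = 1
        while alpha ** i > y_norm:           # smallest i with alpha^i <= |y|
            i += 1
        return alpha ** i / ((beta + 1.0) * delta)
    return 0.0                               # |y| = 0

# Values beta = 4 and alpha = 0.5 match the simulation section; the outputs are hypothetical.
mu_large = adjust_mu([3.7], beta=4.0, alpha=0.5)   # floor(3.7) / (5 * 0.5)
mu_small = adjust_mu([0.3], beta=4.0, alpha=0.5)   # 0.5**2 / (5 * 0.5)
mu_zero = adjust_mu([0.0], beta=4.0, alpha=0.5)
```

In both nonzero cases the numerator is a lower estimate of \(|y(t)|\) (its integer part, or the nearest power of \(\alpha \) below it), so \(\mu (t)\) shrinks as the output approaches zero and the quantization becomes finer near the equilibrium.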

3.4 Stability analysis

The subsequent work addresses the stability of the closed-loop system under the controller composed of (14)–(16). Since the designed sliding mode surface has been proved to be reachable, a sliding motion occurs on the surface in finite time. Then, the system stability is analyzed through \(\hat{x}(t)\) and e(t).

Taking into account \(s=0,\dot{s}=0\), the equivalent control law is given as follows:

Subsequently, the state trajectory under (32) is described as

Setting \(e(t)={x}(t)-\hat{x}(t)\) yields

To obtain the controller and observer gains, sufficient conditions for SS with the required \(\mathcal {H}_\infty \) performance are established in Theorem 2.

Theorem 2

For given scalars \(\tau _{1i}\), \(\tau _{2i}\), \(\upsilon _{1i}\), \(\upsilon _{2i}\), \(\upsilon _{3i}\), \(\upsilon _{4i}\), if there exist symmetric matrices \(X_i,{T}_{ij}>0\), matrices \(U_{i},V_{i},W_{i},Y_{i}\) and positive scalars \(\varepsilon _{1i},\varepsilon _{2i} (i \in \mathbf {I})\) such that the following inequalities hold:

where

the controller (14) renders the system (1) to be SS with the \(\mathcal {H}_{\infty }\) index \(\gamma \). Moreover, \(K_i =U_i^{ - 1}{W_i}\) and \(L_i =-\,V_i^{ - 1}Y_i\).
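Once a semidefinite programming solver has returned feasible \(U_i\), \(W_i\), \(V_i\), \(Y_i\), the gains follow from the two formulas above. The sketch below shows this recovery step only; the function name `recover_gains` and the numerical matrices are placeholders, not solutions of the conditions in Theorem 2.

```python
import numpy as np

def recover_gains(U, W, V, Y):
    """Return K = U^{-1} W and L = -V^{-1} Y without forming explicit inverses."""
    K = np.linalg.solve(U, W)
    L = -np.linalg.solve(V, Y)
    return K, L

# Placeholder solver outputs for one mode (hypothetical sizes and values).
U = np.array([[2.0]])
W = np.array([[-4.0, -1.0]])
V = np.array([[0.5]])
Y = np.array([[-1.5]])

K, L = recover_gains(U, W, V, Y)
```

Solving the linear systems \(U_iK_i=W_i\) and \(V_iL_i=-Y_i\) is numerically preferable to inverting \(U_i\) and \(V_i\), and it fails loudly if the solver returns a singular slack matrix.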

Proof

The candidate Lyapunov function is chosen as:

where \(X_i>0\). Calculating \(\dot{V}_{2i}\) yields

Substituting (33)–(38) renders

Utilizing Lemma 1, the following inequality holds from (39):

Integrating \(z^{\mathrm{T}}(t)z(t) - \gamma ^2w^{\mathrm{T}}(t)w(t)\) into (40), one has the inequality (41).

To deal with \(2{e^{\mathrm{T}}}(t){X_i}{B_{1i}}{L_i}e_{\mu }(t) \), applying Lemma 3 gives

Substituting (42) into (41) yields

where

To ensure the stochastic stability with the required \(\mathcal {H}_\infty \) performance, one just needs to prove \({\varXi _{i}}<0\). With the help of Schur complement to \(\varXi _{i}\), one obtains

where \(\bar{\varLambda }_{11} = He({X_i}({A_i} + {B_{1i}}{K_i})) + {\varepsilon _{2i}}N_i^{\mathrm{T}}{N_i}+ \sum \nolimits _{j = 1}^N {{\hat{\lambda }_{ij}}{X_j}}\), \(\bar{\varLambda }_{22} = He({X_i}({A_i} - {B_{1i}}{L_i}{C_i})) + {\varepsilon _{1i}}N_i^{\mathrm{T}}{N_i}+ \sum \nolimits _{j = 1}^N {{\hat{\lambda }_{ij}}{X_j}}\), and \(\bar{\varLambda }_{77} = -{(\beta +1)^2}I\).

To linearize \(X_iB_{1i}K_i\) and \(X_iB_{1i}L_iC_i\), by setting \(K_i =U_i^{ - 1}{W_i}\), \(L_i =-\,V_i^{ - 1}Y_i\), a separated approach in [36] is utilized as below

Alternatively, \(\varXi _i\) is equivalent to

where

Calculating the kernel space of \({\mathcal {H}^ \bot }\) gives

Combining (45), (46) and Lemma 2, \(\varXi _i\) is rewritten as (48).

where

Along similar lines as in Theorem 1 to handle general TPs, one gets that \(\sum \nolimits _{j = 1}^N {{\hat{\lambda } _{ij}}{X_j}}\) satisfies the following inequalities

Case I (\(i\in \mathcal {I}_{k}^i\)):

Case II (\(i\in \mathcal {I}_{uk}^i\)):

Substituting (49) and (50) into (48) yields \(\varXi _i<0\) in Case I and Case II, respectively. \(\square \)

Remark 5

In the course of the derivation, to make the obtained controller and observer synthesis conditions solvable, Lemma 3 is utilized to deal with the nonlinear term \(e^{\mathrm{T}}_{\mu }(t)e_{\mu }(t)\). As a result, the established synthesis conditions are formulated as linear matrix inequalities.

4 Numerical simulation

The simulation is carried out on an SRA system taken from [40] to verify the proposed method.

The dynamics of the SRA system are

where \(\theta (t)\), \(\mathcal {M}\) and \(\mathcal {L}\) are the arm’s angle position, mass payload and length, respectively. u(t), w(t), J, \(\mathcal {D}(t)\) and g are the control input, external disturbance, moment of inertia, uncertain viscous friction coefficient and gravitational acceleration, respectively. Moreover, \(g= 9.81\), \(\mathcal {L}=0.8\), and \(\mathcal {D}(t)=2\). As in [40], linearizing (51) gives, for \(\mathcal {M}\) and J with four modes,

where \(x(t)=\left[ {\begin{array}{*{20}{c}}x_1(t)&x_2(t) \end{array}}\right] ^{\mathrm{T}}\), \(r(t)=\{1,2,3,4\}\), \(\tilde{A}(r(t),t)=M(r(t))E(t)N(r(t))\),\({D(1)} = -\, 0.1\), \({D(2)} = 0.3\),\({D(3)} = 0.5\),\({D(4)} = 0.2\), \(\mathcal {M}(r(t))\) and J(r(t)) change depending on the jump mode. \(J(1)=0.82\), \(J(2)=0.74\), \(J(3)=0.93\) and \(J(4)=0.89\) \((\mathcal {M}(r(t))=J(r(t)))\).

The matrices of parameter perturbation and disturbance are given as:

For initialization, the real and estimated state vectors are given as \(x = {\left[ {\begin{array}{*{20}{c}} 4&5 \end{array}} \right] ^{\mathrm{T}}}\), \(\hat{x} = {\left[ {\begin{array}{*{20}{c}} 0&0 \end{array}} \right] ^{\mathrm{T}}}\). Other parameters are selected as follows \(\theta =0.01;\iota =0.01;\tau _{1i}=1;\tau _{2i}=1; \upsilon _{1i}=1; \upsilon _{2i}=1 ; \upsilon _{3i}=1 ;\upsilon _{4i}=1; \eta _{ij}=1(i\in \{1,2,3,4\})\) and the parameters in quantizer \(\beta =4;\alpha =0.5\).

The general TP matrix is

where \(\varphi _{11}=\varphi _{33}=[-\,0.1,0.1]\).

Solving conditions (35) and (36) in Theorem 2 yields

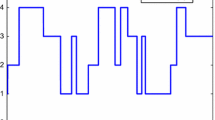

Applying the proposed sliding mode controller, the response curves of the system states, control input, measured and quantized outputs, and quantizer parameter are given in Figs. 2–5.

Figure 2 shows that the system state trajectories are stochastically stable. Figure 3 shows the variation of the mode-dependent sliding mode control input with the possible mode evolution. The system output y(t) and its quantized counterpart are depicted in Fig. 4. Figure 5 shows how the quantization range varies under the proposed adjustment steps.

In summary, these figures demonstrate the validity of the proposed sliding mode control approach.

5 Conclusions

The quantized sliding mode control of MJSs with incomplete TPs is investigated. An observer-based integral sliding mode surface is built on the quantized system output, and effective techniques are established to cope with the quantization nonlinearities, unknown and uncertain TPs. Linear matrix inequalities-based sufficient conditions are obtained to guarantee the sliding mode reachability and the required \(\mathcal {H}_\infty \) performance. The SRA system is employed to verify the proposed method.

References

Mariton, M.: Jump Linear Systems in Automatic Control. M. Dekker, New York (1990)

Revathi, V.M., Balasubramaniam, P., Park, J.H., Lee, T.H.: \({\cal{H}}_ {\infty }\) filtering for sample data systems with stochastic sampling and Markovian jumping parameters. Nonlinear Dyn. 78(2), 813–830 (2014)

Ramasamy, S., Nagamani, G., Zhu, Q.: Robust dissipativity and passivity analysis for discrete-time stochastic T–S fuzzy Cohen–Grossberg Markovian jump neural networks with mixed time delays. Nonlinear Dyn. 85(4), 2777–2799 (2016)

Shen, H., Park, J.H., Wu, Z.: Finite-time synchronization control for uncertain Markov jump neural networks with input constraints. Nonlinear Dyn. 77(4), 1709–1720 (2014)

Samidurai, R., Manivannan, R., Ahn, C.K., Karimi, H.R.: New criteria for stability of generalized neural networks including Markov jump parameters and additive time delays. IEEE Trans. Syst. Man Cybern. Syst. (2016). https://doi.org/10.1109/TSMC.2016.2609147

Gonçalves, A.P.C., Fioravanti, A.R., Geromel, J.C.: Markov jump linear systems and filtering through network transmitted measurements. Sig. Process. 90(10), 2842–2850 (2010)

Saravanakumar, R., Ali, M.S., Ahn, C.K., Karimi, H.R., Shi, P.: Stability of Markovian jump generalized neural networks with interval time-varying delays. IEEE Trans. Neural Netw. Learn. Syst. (2016). https://doi.org/10.1109/TNNLS.2016.2552491

Zhao, S., Ahn, C.K., Shmaliy, Y.S., Shi, P., Agarwal, R.K.: An iterative filter with finite measurements for suddenly maneuvering targets. AIAA J. Guid. Control Dyn. 40, 2316–2322 (2017)

Mathiyalagan, K., Park, J.H., Sakthivel, R., Anthoni, S.M.: Robust mixed \(\cal{H}_\infty \) and passive filtering for networked Markov jump systems with impulses. Sig. Process. 101, 162–173 (2014)

Orey, S.: Markov chains with stochastically stationary transition probabilities. Ann. Probab. 19(3), 907–928 (1991)

Shen, M., Yan, S., Zhang, G., Park, J.H.: Finite-time \(\cal{H}_\infty \) static output control of Markov jump systems with an auxiliary approach. Appl. Math. Comput. 273, 553–561 (2016)

Wu, Z.-G., Shen, Y., Su, H., Lu, R., Huang, T.: \(\cal{H}_2\) performance analysis and applications of two-dimensional Hidden Bernoulli jump system. IEEE Trans. Syst. Man Cybern. Syst. (2017). https://doi.org/10.1109/TSMC.2017.2745679

Baik, H.-S., Jeong, H.S., Abraham, D.M.: Estimating transition probabilities in Markov chain-based deterioration models for management of wastewater systems. J. Water Resour. Plan. Manag. 132(1), 15–24 (2006)

Zhang, L., Boukas, E.-K.: Stability and stabilization of Markovian jump linear systems with partly unknown transition probabilities. Automatica 45(2), 463–468 (2009)

Shen, M., Ye, D., Wang, Q.: Mode-dependent filter design for Markov jump systems with sensor nonlinearities in finite frequency domain. Sig. Process. 134, 1–8 (2017)

Luan, X., Zhao, S., Liu, F.: \(\cal{H}_\infty \) control for discrete-time Markov jump systems with uncertain transition probabilities. IEEE Trans. Autom. Control 58(6), 1566–1572 (2013)

Kim, S.H.: Control synthesis of Markovian jump fuzzy systems based on a relaxation scheme for incomplete transition probability descriptions. Nonlinear Dyn. 78(1), 691–701 (2014)

Edwards, C., Spurgeon, S.K.: On the development of discontinuous observers. Int. J. Control 59(5), 1211–1229 (1994)

Almutairi, N.B., Zribi, M.: On the sliding mode control of a ball on a beam system. Nonlinear Dyn. 59(1), 221–238 (2010)

Shi, P., Xia, Y., Liu, G.P., Rees, D.: On designing of sliding-mode control for stochastic jump systems. IEEE Trans. Autom. Control 51(1), 97–103 (2006)

Wu, L., Shi, P., Gao, H.: State estimation and sliding-mode control of Markovian jump singular systems. IEEE Trans. Autom. Control 55(5), 1213–1219 (2010)

Li, J., Zhang, Q., Zhai, D., Zhang, Y.: Sliding mode control for descriptor Markovian jump systems with mode-dependent derivative-term coefficient. Nonlinear Dyn. 82(1–2), 465–480 (2015)

Wei, Y., Park, J.H., Qiu, J., Wu, L., Jung, H.Y.: Sliding mode control for semi-Markovian jump systems via output feedback. Automatica 81, 133–141 (2017)

Chen, B., Niu, Y., Zou, Y.: Sliding mode control for stochastic Markovian jumping systems with incomplete transition rate. IET Control Theory Appl. 7(10), 1330–1338 (2013)

Kao, Y., Xie, J., Zhang, L., Karimi, H.R.: A sliding mode approach to robust stabilisation of Markovian jump linear time-delay systems with generally incomplete transition rates. Nonlinear Anal. Hybrid Syst. 17, 70–80 (2015)

Khalili, A., Rastegarnia, A., Sanei, S.: Quantized augmented complex least-mean square algorithm: derivation and performance analysis. Sig. Process. 121, 54–59 (2016)

Liberzon, D.: Hybrid feedback stabilization of systems with quantized signals. Automatica 39(9), 1543–1554 (2003)

Zheng, B., Yang, G.: Quantised feedback stabilisation of planar systems via switching-based sliding-mode control. IET Control Theory Appl. 6(1), 149–156 (2012)

Zheng, B., Park, J.H.: Sliding mode control design for linear systems subject to quantization parameter mismatch. J. Frankl. Inst. 353(1), 37–53 (2016)

Song, G., Li, T., Kai, H., Zheng, B.: Observer-based quantized control of nonlinear systems with input saturation. Nonlinear Dyn. 86(2), 1157–1169 (2016)

Zheng, B., Yang, G.: Robust quantized feedback stabilization of linear systems based on sliding mode control. Opt. Control Appl. Methods 34(4), 458–471 (2013)

Xiao, N., Xie, L., Fu, M.: Stabilization of Markov jump linear systems using quantized state feedback. Automatica 46(10), 1696–1702 (2010)

Rasool, F., Nguang, S.K.: Quantized robust \(\cal{H}_\infty \) control of discrete-time systems with random communication delays. Int. J. Syst. Sci. 42(1), 129–138 (2011)

Shi, P., Liu, M., Zhang, L.: Fault-tolerant sliding-mode-observer synthesis of Markovian jump systems using quantized measurements. IEEE Trans. Ind. Electron. 62(9), 5910–5918 (2015)

Shen, M., Park, J.H.: \(\cal{H}_\infty \) filtering of Markov jump linear systems with general transition probabilities and output quantization. ISA Trans. 63, 204–210 (2016)

Shen, M., Park, J.H., Ye, D.: A separated approach to control of Markov jump nonlinear systems with general transition probabilities. IEEE Trans. Cybern. 46(9), 2010–2018 (2016)

Boyd, S., El Ghaoui, L., Feron, E., Balakrishnan, V.: Linear Matrix Inequalities in System and Control Theory. SIAM, Philadelphia (1994)

Chen, B., Niu, Y., Huang, H.: Output feedback control for stochastic Markovian jumping systems via sliding mode design. Opt. Control Appl. Methods 32(1), 83–94 (2011)

Liu, M., Zhang, L., Shi, P., Zhao, Y.: Sliding mode control of continuous-time Markovian jump systems with digital data transmission. Automatica 80, 200–209 (2017)

Yang, D., Zhao, J.: Robust finite-time output feedback \(\cal{H}_\infty \) control for stochastic jump systems with incomplete transition rates. Circuits Syst. Sig. Process. 34, 1799–1824 (2015)

Acknowledgements

This work was supported by Basic Science Research Programs through the National Research Foundation of Korea (NRF) funded by the Ministry of Education (Grant No. NRF-2017R1A2B2004671), the National Natural Science Foundation of China under Grants (61403189, 61773200), the peak of six talents in Jiangsu Province under Grant 2015XXRJ-011, the China Postdoctoral Science Foundation under Grant 2015M570397, the Doctoral Foundation of Ministry of Education of China under Grant 20133221120012, the Natural Science Foundation of Jiangsu Province of China under Grant BK20130949.

Ethics declarations

Conflict of interest

The authors declare that there is no conflict of interest to this work.

Cite this article

Shen, M., Zhang, H. & Park, J.H. Observer-based quantized sliding mode \({\varvec{\mathcal {H}}}_{\varvec{\infty }}\) control of Markov jump systems. Nonlinear Dyn 92, 415–427 (2018). https://doi.org/10.1007/s11071-018-4064-x

DOI: https://doi.org/10.1007/s11071-018-4064-x