Abstract

This paper is concerned with the delay-independent stability of Riemann–Liouville fractional-order neutral-type delayed neural networks. By constructing a suitable Lyapunov functional that involves fractional integral and fractional derivative terms, we obtain several sufficient conditions ensuring the delay-independent asymptotic stability of the equilibrium point. The results are easy to check because they are expressed as matrix inequalities or algebraic inequalities in terms of the network parameters only. Two numerical examples are given to show the validity and feasibility of the theoretical results.


1 Introduction

Fractional calculus deals with derivatives and integrals of arbitrary non-integer order. As an extension of integer-order differentiation and integration, it has drawn much attention from many researchers. Since fractional-order derivatives are nonlocal and have weakly singular kernels [1], fractional-order models describe the memory and hereditary properties of various processes better than integer-order ones. In recent decades, fractional differential equations [2, 3] have proved to be an excellent tool for modelling many phenomena in fields such as electrochemistry [4], diffusion [5], viscoelastic materials [6], control systems [7, 8] and biological systems [9].

Stability is one of the central problems for any dynamic system. Recently, various kinds of stability of fractional-order dynamic systems, including Mittag-Leffler stability [10, 11], Ulam stability [12], uniform stability [13] and asymptotic stability [14], have been extensively investigated. At the same time, time delays are unavoidable in many dynamic systems and usually have an important effect on their stability and performance. For example, the finite-time stability of fractional-order delayed differential equations was discussed in [15] using a Gronwall inequality approach, and the resulting finite-time stability conditions depend on the size of the time delays.

Various classes of neural networks, such as Hopfield neural networks [16, 17], recurrent neural networks [18,19,20], cellular neural networks [21, 22], Cohen–Grossberg neural networks [23] and bidirectional associative memory neural networks [24, 25], have been widely used in signal processing, image processing and optimal control problems [26]. A reference model approach and a nonlinear measure approach to the stability analysis of real-valued and complex-valued neural networks were proposed in [27] and [28], respectively. The stability of the equilibrium of various classes of neural networks in the presence of time delays has received a great deal of attention in the recent literature [17,18,19,20,21,22,23,24,25,26]. In [29,30,31], the influence of time delays on the dynamical behaviors of network systems was taken fully into account. In classical neural network models, time delays usually appear only in the states of the neural system. However, since the time derivatives of the states are themselves functions of time, delay parameters must also be introduced into the time derivatives of the states in order to completely determine the stability properties of the equilibrium point. Neural network models with time delays in the time derivatives of the states are called delayed neutral-type neural networks [23, 25, 32,33,34,35,36,37,38]. Such integer-order neutral systems have been applied extensively in fields such as population ecology [32], distributed networks with lossless transmission lines [32], propagation and diffusion models [33] and VLSI systems [33].

Recently, some researchers have introduced fractional-order operators into neural networks to form fractional-order neural models [39,40,41], which can better describe the dynamical behavior of neurons. Notable contributions have been made to various kinds of stability analysis of fractional-order neural networks [42,43,44,45,46]. For instance, the multi-stability of fractional-order neural networks without delay was investigated by characteristic root analysis [39]. The uniform stability of fractional-order neural networks on fixed time intervals was established by an analysis technique [40]. Mittag-Leffler stability of impulsive Caputo fractional-order delayed neural networks was investigated by applying a fractional Razumikhin approach [42]. The uniform stability and global stability of Riemann–Liouville fractional-order delayed neural networks were considered based on a fractional Lyapunov functional method and a Leibniz rule for fractional differentiation [44]. The finite-time stability of Caputo fractional-order delayed neural networks was studied by a Gronwall inequality approach [45, 46].

There is no doubt that the Lyapunov functional method provides a very effective approach to analyzing the stability of integer-order nonlinear systems. Compared with integer-order systems, it is more difficult to choose Lyapunov functionals in the fractional-order case, which creates many difficulties in investigating the asymptotic behavior of such systems. For example, the well-known Leibniz rule does not hold for fractional derivatives, and a counterexample given in [47] exposed some incorrect uses of the Leibniz rule for fractional derivatives in the existing literature. In fact, it is very difficult to calculate the fractional-order derivative of a Lyapunov functional, which is the main reason why there are very few practical algebraic criteria for the stability of fractional-order neural networks. To the best of our knowledge, studies of neutral-type neural networks have mainly focused on networks involving only the first-order derivatives of the states and delayed states [23, 25, 32,33,34,35,36,37,38], and there are few results on the asymptotic stability of fractional-order neutral-type delayed neural networks. Thus, it is both necessary and challenging to take fractional-order derivatives into account in neutral-type delayed neural networks.

Motivated by the above discussion, this paper considers the delay-independent stability of Riemann–Liouville fractional-order neutral-type delayed neural networks. Compared with integer-order neural networks with or without time delays, research on the stability of fractional-order neutral-type delayed neural networks is still at an early stage. The main challenges and contributions of this paper lie in the following aspects:

(i) It is difficult to calculate fractional-order derivatives of Lyapunov functionals. To overcome this difficulty, we construct a suitable functional including fractional integral and fractional derivative terms, and calculate its first-order derivative to derive the delay-independent stability conditions;

(ii) The considered system includes state time delays, fractional-order derivatives of the states and time delays in the fractional-order derivatives of the states, and the impact of all these factors on the stability of the network is taken into account simultaneously. The presented results contribute to the control and design of Riemann–Liouville fractional-order neutral-type delayed network systems. Moreover, numerical simulations of two examples are carried out to show the effectiveness of the theoretical results;

(iii) The neuron activation functions in the Riemann–Liouville fractional-order neutral-type neural network model are not required to be differentiable. Compared with the existing results on integer-order neutral-type neural networks [34, 35], the results of this paper are more general and less conservative.

This paper is organized as follows. In Sect. 2, we recall some definitions from fractional calculus and describe the Riemann–Liouville fractional-order neutral-type neural network model. In Sect. 3, sufficient conditions for the asymptotic stability of Riemann–Liouville fractional-order neutral-type neural networks are derived and expressed as matrix inequalities or algebraic inequalities in terms of the network parameters only. Two numerical examples are given in Sect. 4 to show the effectiveness and applicability of the proposed results. Finally, concluding remarks are drawn in Sect. 5.

Notations

Throughout the paper, \(\mathbb {R}^n\) denotes the n-dimensional Euclidean space, \(\mathbb {R}^{n\times m}\) is the set of all \(n\times m\) real matrices, I stands for the identity matrix of appropriate dimension, \(U^T\) denotes the transpose of a real matrix or vector U, and \(V>0\) means that the symmetric matrix V is positive definite. The matrix norm is defined by \(\Vert P\Vert _2=[\lambda _{M}(P^TP)]^{\frac{1}{2}}\), where \(\lambda _{M}(\cdot )\) denotes the maximum eigenvalue of the matrix \((\cdot )\).
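The spectral norm \(\Vert P\Vert _2=[\lambda _{M}(P^TP)]^{1/2}\) is what the algebraic criteria use (for instance \(\Vert B\Vert _2\), \(\Vert C\Vert _2\) and \(\Vert E\Vert _2\) in Example 4.2). The following is a minimal dependency-free sketch, assuming power iteration on \(P^TP\) (our choice, not a method from the paper) and a starting vector that is not orthogonal to the dominant eigenvector; the helper names are hypothetical.

```python
import math


def mat_mul(X, Y):
    """Product of two matrices stored as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]


def transpose(X):
    return [list(col) for col in zip(*X)]


def spectral_norm(P, iters=1000, tol=1e-13):
    """||P||_2 = sqrt(lambda_max(P^T P)), estimated by power iteration on P^T P."""
    S = mat_mul(transpose(P), P)            # symmetric positive semidefinite
    if all(x == 0 for row in S for x in row):
        return 0.0                          # P is the zero matrix
    n = len(S)
    v = [1.0 / math.sqrt(n)] * n            # unit starting vector (assumption)
    lam = 0.0
    for _ in range(iters):
        w = [sum(S[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm_w = math.sqrt(sum(x * x for x in w))   # ||S v|| tends to lambda_max
        if norm_w == 0.0:
            raise ValueError("starting vector lies in the null space; choose another")
        v = [x / norm_w for x in w]
        if abs(norm_w - lam) <= tol * norm_w:
            return math.sqrt(norm_w)
        lam = norm_w
    return math.sqrt(lam)


print(spectral_norm([[1.0, 2.0], [0.0, 1.0]]))   # approximately 1 + sqrt(2)
```

In practice a linear-algebra library would normally be used; the plain version only makes the definition concrete.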

2 Preliminaries and Model Description

In this section, we recall definitions of fractional calculus and several lemmas which will be used later. Moreover, the problem formulation on fractional-order neutral-type delayed neural networks is presented.

Definition 2.1

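[3] The Riemann–Liouville fractional integral of order q for a function f is defined as

\[
{}_{\ t_0}^{RL}D_{t}^{-q}f(t)=\frac{1}{\Gamma (q)}\int _{t_0}^{t}(t-s)^{q-1}f(s)\,ds,
\]

where \(q>0, t\geqslant t_0\). The Gamma function \(\Gamma (q)\) is defined by the integral

\[
\Gamma (q)=\int _{0}^{\infty }t^{q-1}e^{-t}\,dt .
\]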
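As a numerical illustration of Definition 2.1, the sketch below approximates the Riemann–Liouville integral \(\frac{1}{\Gamma (q)}\int _{t_0}^{t}(t-s)^{q-1}f(s)\,ds\) by a product-rectangle rule: f is frozen at the midpoint of each subinterval while the weakly singular kernel is integrated exactly, so the singularity at \(s=t\) causes no trouble. The name rl_integral and the discretization are our own assumptions, not part of the paper. For \(f\equiv 1\) the rule reproduces the closed form \((t-t_0)^q/\Gamma (q+1)\) up to rounding.

```python
import math


def rl_integral(f, t, q, t0=0.0, n=1000):
    """Product-rectangle approximation of the Riemann-Liouville integral of order q > 0:
        (1 / Gamma(q)) * integral_{t0}^{t} (t - s)^(q - 1) f(s) ds,  for t >= t0.
    f is frozen at each subinterval midpoint; the kernel is integrated exactly.
    """
    if q <= 0:
        raise ValueError("order q must be positive")
    length = t - t0
    total = 0.0
    for j in range(n):
        u_hi = length * (n - j) / n          # t - s at the left end of piece j
        u_lo = length * (n - j - 1) / n      # t - s at the right end (0 for the last piece)
        kernel_mass = (u_hi ** q - u_lo ** q) / q   # exact integral of (t - s)^(q - 1)
        total += f(t - 0.5 * (u_hi + u_lo)) * kernel_mass
    return total / math.gamma(q)


# For f = 1 the exact value is (t - t0)^q / Gamma(q + 1).
print(rl_integral(lambda s: 1.0, 2.0, 0.5), 2.0 ** 0.5 / math.gamma(1.5))
```

Working with the distances \(t-s\) rather than the nodes s keeps the last kernel increment exactly at zero, which avoids raising a tiny negative number to a fractional power.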

Currently, there exist several definitions of the fractional derivative of order \(q>0\), including the Grünwald–Letnikov (GL), Riemann–Liouville (RL) and Caputo definitions. Among these, the Riemann–Liouville fractional operator often plays an important role in the stability analysis of fractional-order systems. In this paper, we consider fractional-order neutral-type delayed neural networks with the Riemann–Liouville derivative, whose definition and properties are given below (see [2, 3]).

Definition 2.2

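The Riemann–Liouville fractional derivative of order q for a function f is defined as

\[
{}_{\ t_0}^{RL}D_{t}^{q}f(t)=\frac{1}{\Gamma (m-q)}\frac{d^{m}}{dt^{m}}\int _{t_0}^{t}(t-s)^{m-q-1}f(s)\,ds,
\]

where \(0\leqslant m-1\leqslant q<m, m\in \mathbb {Z}^{+}\).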

Property 2.1

\(_{\ t_0}^{RL}D_{t}^{q}C=\frac{C(t-t_0)^{-q}}{\Gamma (1-q)}\) holds, where C is any constant.
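Property 2.1 can be checked numerically with the Grünwald–Letnikov approximation, which converges to the Riemann–Liouville derivative for sufficiently smooth functions. The sketch below is illustrative only: gl_weights and gl_derivative are hypothetical helper names, the weights are \(w_j=(-1)^j\binom{q}{j}\) generated by the recursion \(w_j=w_{j-1}\big(1-\frac{q+1}{j}\big)\), and the step is \(h=(t-t_0)/n\).

```python
import math


def gl_weights(q, n):
    """Grunwald-Letnikov weights w_j = (-1)^j * binom(q, j) for j = 0..n."""
    w = [1.0]
    for j in range(1, n + 1):
        w.append(w[-1] * (1.0 - (q + 1.0) / j))
    return w


def gl_derivative(f, t, q, t0=0.0, n=1000):
    """Grunwald-Letnikov approximation of the order-q derivative of f on [t0, t]."""
    h = (t - t0) / n
    w = gl_weights(q, n)
    return sum(w[j] * f(t - j * h) for j in range(n + 1)) / h ** q


C0, q, t = 3.0, 0.5, 1.0
approx = gl_derivative(lambda s: C0, t, q)
exact = C0 * t ** (-q) / math.gamma(1.0 - q)   # Property 2.1 with t0 = 0
print(approx, exact)
```

For \(q=1\) the weights reduce to \(1,-1,0,0,\ldots \), i.e. the ordinary backward difference, which is consistent with the continuity in the order invoked in Remark 3.5.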

Property 2.2

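For any constants \(k_1\in \mathbb {R}\) and \(k_2\in \mathbb {R}\), the linearity of the Riemann–Liouville fractional derivative is described by

\[
{}_{\ t_0}^{RL}D_{t}^{q}\big(k_1f(t)+k_2g(t)\big)=k_1\,{}_{\ t_0}^{RL}D_{t}^{q}f(t)+k_2\,{}_{\ t_0}^{RL}D_{t}^{q}g(t).
\]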

Property 2.3

If \(p>q>0\), then the following equality

holds for sufficiently good functions f(t). In particular, this relation holds if f(t) is integrable.

The following two lemmas will be used in the proof of our main results.

Lemma 2.1

[14] Let \(x(t)\in \mathbb {R}^n\) be a vector of differentiable functions. Then, for any time instant \(t\geqslant t_0\), the following relationship holds

where \(P\in \mathbb {R}^{n\times n}\) is a constant, square, symmetric and positive definite matrix.

Lemma 2.2

[21] Let G, H and P be real matrices of appropriate dimensions with \(P>0\). Then, for any vectors x, y of appropriate dimensions, the following inequality holds:

\[
2x^TG^THy\leqslant x^TG^TPGx+y^TH^TP^{-1}Hy .
\]
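Assuming Lemma 2.2 takes the standard form \(2x^TG^THy\leqslant x^TG^TPGx+y^TH^TP^{-1}Hy\) (an assumption here; the two sides differ by \(\Vert P^{1/2}Gx-P^{-1/2}Hy\Vert ^2\geqslant 0\)), the sketch below checks it on random data. For simplicity P is taken diagonal with positive entries, a special case of \(P>0\); lemma_gap and the random test harness are hypothetical.

```python
import random


def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def lemma_gap(G, H, p_diag, x, y):
    """RHS minus LHS of 2 x^T G^T H y <= x^T G^T P G x + y^T H^T P^{-1} H y,
    with P = diag(p_diag) and all entries of p_diag > 0."""
    gx = matvec(G, x)                                         # G x
    hy = matvec(H, y)                                         # H y
    lhs = 2.0 * dot(gx, hy)                                   # 2 x^T G^T H y
    rhs = (sum(p * g * g for p, g in zip(p_diag, gx))         # x^T G^T P G x
           + sum(hh * hh / p for p, hh in zip(p_diag, hy)))   # y^T H^T P^{-1} H y
    return rhs - lhs


def random_matrix(rows, cols, rng):
    return [[rng.uniform(-2.0, 2.0) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
worst = min(
    lemma_gap(random_matrix(3, 4, rng), random_matrix(3, 2, rng),
              [rng.uniform(0.1, 5.0) for _ in range(3)],
              [rng.uniform(-1.0, 1.0) for _ in range(4)],
              [rng.uniform(-1.0, 1.0) for _ in range(2)])
    for _ in range(1000))
print(worst >= -1e-9)   # the gap is a sum of squares, so this is expected to print True
```

Equality holds exactly when \(PGx=Hy\), which is why the gap is zero for \(G=H=P=I\) and \(x=y\).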

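In this paper, we consider the delay-independent stability of a class of Riemann–Liouville fractional-order neutral-type delayed neural networks with the state equations

\[
{}_{\ 0}^{RL}D_{t}^{\alpha }x_i(t)=-a_ix_i(t)+\sum _{j=1}^{n}b_{ij}f_j(x_j(t))+\sum _{j=1}^{n}c_{ij}f_j(x_j(t-\tau ))+\sum _{j=1}^{n}e_{ij}\,{}_{\ 0}^{RL}D_{t}^{\alpha }x_j(t-\tau ),\quad i=1,2,\ldots ,n,
\]

or, writing \(f(x(t))=(f_1(x_1(t)),\ldots ,f_n(x_n(t)))^T\), in the matrix-vector notation

\[
{}_{\ 0}^{RL}D_{t}^{\alpha }x(t)=-Ax(t)+Bf(x(t))+Cf(x(t-\tau ))+E\,{}_{\ 0}^{RL}D_{t}^{\alpha }x(t-\tau ), \tag{1}
\]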

where \(_{ \ 0}^{RL}D_{t}^{\alpha } x(\cdot )\) denotes the Riemann–Liouville fractional derivative of order \(\alpha \) of \(x(\cdot )\), the constant \(\alpha \) satisfies \(0<\alpha <1\), n is the number of neurons in the network, \(x(t)=(x_1(t),x_2(t),\ldots ,x_n(t))^T\) is the state vector of the network at time t, the functions \(f_i(\cdot )\) are the neuron activation functions with \(f_i(0)=0\), \(A=\) diag \((a_1,a_2,\ldots ,a_n)\) is a diagonal matrix with \(a_i>0\) for \(i=1,2,\ldots ,n\), \(B=(b_{ij})_{n\times n},\ C=({c_{ij}})_{n\times n}, E=({e_{ij}})_{n\times n}\) are constant matrices, and \(\tau >0\) denotes the maximum possible transmission delay from one neuron to another.

The initial condition associated with Riemann–Liouville fractional system (1) can be written as [2, 3]

where \(\varphi (t)\in \mathbf {C}([-\tau ,0],\mathbb {R}^n)\) is the initial state function, and \(\mathbf {C}([-\tau ,0],\mathbb {R}^n)\) denotes the space of all continuous functions mapping the interval \([-\tau ,0]\) into \(\mathbb {R}^n\).

Throughout this paper, we assume that the activation functions \(f_i(\cdot )\) are Lipschitz continuous. Since \(f_i(0)=0\), there exist constants \(k_i>0\ (i=1,2,\ldots ,n)\) such that

\[
|f_i(u)|\leqslant k_i|u|\quad \text {for all } u\in \mathbb {R},\ i=1,2,\ldots ,n. \tag{3}
\]

According to Definition 2.2 and \(f_i(0)=0\), it follows immediately that the origin \(x^*=0\) is an equilibrium point of system (1).
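One possible way to simulate a system of the form \({}_{\ 0}^{RL}D_{t}^{\alpha }x(t)=-Ax(t)+Bf(x(t))+Cf(x(t-\tau ))+E\,{}_{\ 0}^{RL}D_{t}^{\alpha }x(t-\tau )\) is an explicit Grünwald–Letnikov scheme. The sketch below is a hypothetical illustration, not the authors' simulation method: it assumes that the memory of the fractional operator starts at \(t=0\), that \(x(t)=\varphi \) is constant on \([-\tau ,0]\), and that the delayed derivative is 0 while \(t-\tau \leqslant 0\). It uses \(f_i=\tanh \), which satisfies \(|f_i(u)|\leqslant |u|\) (so \(k_i=1\)), together with made-up matrices A, B, C and E.

```python
import math


def gl_weights(alpha, n):
    """Grunwald-Letnikov weights w_j = (-1)^j * binom(alpha, j), j = 0..n."""
    w = [1.0]
    for j in range(1, n + 1):
        w.append(w[-1] * (1.0 - (alpha + 1.0) / j))
    return w


def simulate(A, B, C, E, phi, alpha=0.8, tau=0.4, h=0.02, T=10.0, f=math.tanh):
    """Explicit GL sketch for D^a x = -A x + B f(x) + C f(x(t - tau)) + E D^a x(t - tau).

    Hypothetical choices: memory starts at t = 0, x = phi on [-tau, 0],
    and D^a x(t - tau) is taken as 0 while t - tau <= 0.
    """
    n = len(phi)
    steps = int(round(T / h))
    d = int(round(tau / h))                # delay measured in time steps
    if d < 1:
        raise ValueError("tau must be at least one step h")
    w = gl_weights(alpha, steps)
    ha = h ** alpha
    xs = [list(phi)]                       # xs[k] approximates x(k h)
    ys = [[0.0] * n]                       # ys[k] approximates D^a x(k h); ys[0] is a placeholder
    for k in range(1, steps + 1):
        x_prev = xs[k - 1]
        x_del = xs[k - 1 - d] if k - 1 - d >= 0 else list(phi)
        y_del = ys[k - 1 - d] if k - 1 - d >= 1 else [0.0] * n
        F = [-A[i][i] * x_prev[i]
             + sum(B[i][j] * f(x_prev[j]) for j in range(n))
             + sum(C[i][j] * f(x_del[j]) for j in range(n))
             + sum(E[i][j] * y_del[j] for j in range(n))
             for i in range(n)]
        # h^(-a) * sum_{j=0..k} w_j x_{k-j} = F with w_0 = 1, solved for x_k.
        x_new = [ha * F[i] - sum(w[j] * xs[k - j][i] for j in range(1, k + 1))
                 for i in range(n)]
        xs.append(x_new)
        ys.append(F)                       # the GL derivative at step k equals F
    return xs


# Hypothetical parameters for illustration only (not those of Example 4.1).
A = [[1.0, 0.0], [0.0, 1.5]]
B = [[0.1, -0.1], [0.1, 0.1]]
C = [[-0.1, 0.05], [0.05, -0.1]]
E = [[0.1, 0.0], [0.0, 0.1]]
traj = simulate(A, B, C, E, phi=[1.0, -0.5])
print(traj[-1])   # state at t = T
```

Because the GL sum at step k equals the right-hand side by construction, the stored values double as the delayed derivative needed by the neutral term; the cost grows quadratically with the number of steps because the full memory is retained.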

Remark 2.1

The purpose of this paper is to investigate delay-independent stability conditions for the equilibrium point of the Riemann–Liouville fractional neutral-type delayed neural networks (1). In stability analyses of neural networks, the neuron activation functions are usually assumed to be bounded and monotonic [24] or differentiable [43, 44]. In this paper, however, we adopt an assumption on the neuron activation functions that does not require differentiability.

3 Delay-Independent Stability Criteria

In this section, by constructing a suitable Lyapunov functional including fractional integral and fractional derivative terms, we present several sufficient conditions ensuring that the origin of system (1) is asymptotically stable. These stability criteria are independent of the size of the delays and are expressed as matrix inequalities or algebraic inequalities in terms of the network parameters only.

Theorem 3.1

The origin of system (1) is asymptotically stable, if there exist a positive diagonal matrix M and positive definite matrices P, Q and R such that the following matrix inequalities hold:

where \(K=diag \{k_1, k_2, \ldots , k_n\}>0\) and I is the identity matrix with dimension \(n\times n\).

Proof

Construct a Lyapunov functional including fractional integral and fractional derivative terms:

where \(\alpha \in (0,1)\), \(A=\) diag\(\{a_i\}\ (a_i>0)\) and \(M=\) diag\(\{m_i\}\ (m_i>0)\) are both positive definite diagonal matrices.

From Definitions 2.1 and 2.2, we know that \(V(x_t)\) is a positive definite functional. According to Property 2.3, the time derivative of \(V(x_t)\) along the trajectories of system (1) is obtained as follows:

An application of Lemma 2.1 yields that

Substituting (1) into (9), we obtain

By direct computation, we get

Note that the following equalities hold:

Combining the above equalities with (10) yields

From Lemma 2.2, we get the following inequalities:

Substituting (12)–(14) into (11), we have

From the assumption (3) on the activation functions, we obtain

Using (16) and (17) in (15) yields

Since \(\Omega _1<0\), \(\Omega _2<0\) and \(\Omega _3<0\), we have \(\dot{V}(x_t)<0\). Thus, it follows from the standard Lyapunov theorem [48] that the origin of system (1) is asymptotically stable. \(\square \)
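The conditions \(\Omega _1<0\), \(\Omega _2<0\) and \(\Omega _3<0\) are negative-definiteness tests on symmetric matrices built from the network parameters and the chosen M, P, Q and R. Since their explicit forms depend on those choices, the sketch below only shows a generic check, assuming a numeric symmetric matrix is already available: \(\Omega <0\) holds exactly when \(-\Omega \) admits a Cholesky factorization with positive pivots. The helper is_negative_definite is hypothetical.

```python
import math


def is_negative_definite(omega, margin=0.0):
    """True if the symmetric matrix omega satisfies omega < 0.

    Attempts a Cholesky factorization of -omega; a pivot <= margin means failure.
    """
    n = len(omega)
    for i in range(n):
        for j in range(n):
            if abs(omega[i][j] - omega[j][i]) > 1e-12:
                raise ValueError("matrix must be symmetric")
    a = [[-omega[i][j] for j in range(n)] for i in range(n)]
    L = [[0.0] * n for _ in range(n)]
    for j in range(n):
        d = a[j][j] - sum(L[j][k] ** 2 for k in range(j))   # squared pivot
        if d <= margin:
            return False
        L[j][j] = math.sqrt(d)
        for i in range(j + 1, n):
            L[i][j] = (a[i][j] - sum(L[i][k] * L[j][k] for k in range(j))) / L[j][j]
    return True


print(is_negative_definite([[-2.0, 1.0], [1.0, -2.0]]))   # True: eigenvalues -1 and -3
print(is_negative_definite([[-1.0, 2.0], [2.0, -1.0]]))   # False: eigenvalues 1 and -3
```

A small positive margin can be passed to demand strict negativity with some numerical slack.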

Remark 3.1

In the proof of Theorem 3.1, a suitable Lyapunov functional including fractional integral and fractional derivative terms is constructed. It should be pointed out that the positive definiteness of the constructed Lyapunov functional is guaranteed by the definitions of Riemann–Liouville fractional-order differentiation and integration.

Remark 3.2

Recently, various approaches have been applied to the stability problems of fractional-order neural networks, including an analysis technique [40], the fractional Razumikhin approach [42], a fractional Lyapunov functional method combined with a Leibniz rule for fractional differentiation [44], and a Gronwall inequality approach [45, 46]. Unlike these approaches, we only need to calculate the first-order derivative of the Lyapunov functional to derive the delay-independent stability conditions based on the standard Lyapunov theorem. Thus, we avoid computing the fractional-order derivative of the Lyapunov functional, which is often very difficult.

The following algebraic criteria are the direct results of Theorem 3.1.

Corollary 3.1

The origin of system (1) is asymptotically stable, if there exist positive constants m, p, q and r such that the following conditions hold:

where \(K=diag\{k_1, k_2, \ldots , k_n\}>0\).

Corollary 3.2

The origin of system (1) is asymptotically stable, if there exist positive constants m, p, q and r such that the following conditions hold:

where \(\lambda =\min \nolimits _{1\leqslant i\leqslant n}\Big \{\frac{a_i}{k_i}\Big \}\).

Theorem 3.2

The origin of system (1) is asymptotically stable, if there exist a positive diagonal matrix M and positive constants \(\beta \) and \(\gamma \) such that the following conditions hold:

where \(K=diag \{k_1, k_2, \ldots , k_n\}>0\) and I is the \(n\times n\) identity matrix.

Proof

Proceeding as in the proof of Theorem 3.1, we note that the following inequalities hold:

Substituting (19)–(21) into (11), we have

Using (16) and (17) in (22) yields

Since \(\Theta _1<0\), \(\Theta _2<0\) and \(\Theta _3<0\), we have \(\dot{V}(x_t)<0\). Thus, it follows from the standard Lyapunov theorem [48] that the origin of system (1) is asymptotically stable. \(\square \)

The following corollary is the direct result of Theorem 3.2.

Corollary 3.3

The origin of system (1) is asymptotically stable, if there exist a positive diagonal matrix M and positive constants \(\beta \) and \(\gamma \) such that the following conditions hold:

where \(\lambda =\min \nolimits _{1\leqslant i\leqslant n}\Big \{\frac{a_i}{k_i}\Big \}\).

Remark 3.3

In [45, 46], the authors focused on the finite-time stability of fractional-order delayed neural networks. However, finite-time stability and Lyapunov asymptotic stability are independent concepts: finite-time stability does not imply Lyapunov asymptotic stability, and vice versa. Here, the asymptotic stability of fractional-order neutral-type delayed neural networks is discussed for the first time.

Remark 3.4

Several sufficient conditions ensuring the asymptotic stability of system (1) are derived in this paper; they are concise and expressed as matrix inequalities or algebraic inequalities. Depending on the network parameters, one can flexibly choose an appropriate criterion to check stability.

Remark 3.5

Note that the Riemann–Liouville derivative is continuous with respect to the order \(\alpha \) (see [2, 3]). Therefore, delay-independent stability criteria for integer-order neutral-type delayed neural networks can be obtained from the results of this paper. In the past few decades, integer-order neutral-type delayed neural networks have been extensively discussed [23, 25, 32,33,34,35,36,37,38]. For example, delay-dependent stability criteria for integer-order neutral-type neural networks with time-varying delays were obtained in terms of linear matrix inequalities (LMIs) in [38]. Generally speaking, the LMI approach to the stability problem of neutral-type neural networks makes it difficult to identify explicit constraint conditions on the network parameters, since it requires testing the positive definiteness of high-dimensional matrices.

4 Illustrative Examples

In this section, two examples for Riemann–Liouville fractional-order neutral-type delayed neural networks are given to illustrate the effectiveness and feasibility of the theoretical results.

Example 4.1

Consider the two-state Riemann–Liouville fractional-order neutral-type neural network model (1), where

The positive definite matrices P, Q and R in the conditions of Theorem 3.1 are chosen as follows:

Then we obtain

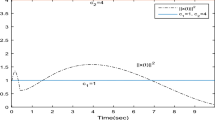

Thus, the conditions of Theorem 3.1 are satisfied. The state trajectories of the neural network are depicted in Figs. 1 and 2, which show that the state \((x_1(t), \ x_2(t))^T\) converges to the equilibrium point \((0,0)^T\). Therefore, the simulation confirms the result of Theorem 3.1.

Fig. 1 State trajectories of neural network model (1) with \(\alpha =0.8, \ \tau =0.4\) under different initial values

Fig. 2 State trajectories of neural network model (1) with different fractional order \(\alpha =0.6,\ 0.8, \ 1\)

Fig. 3 State trajectories of neural network model (1) with \(\alpha =0.8, \ \tau =0.4\) under different initial values

Example 4.2

Consider the four-state Riemann–Liouville fractional-order neutral-type neural network model (1), where

We assume that \(\Vert E\Vert _2=\frac{1}{2}\) and \(k_1=k_2=k_3=k_4=1\). By computation, we have \(\Vert B\Vert _2=\Vert C\Vert _2=\frac{1}{4}, \ \lambda =1\). Here, we choose \(m=\frac{1}{2}, \beta =\gamma =\frac{1}{10}\). Then one has

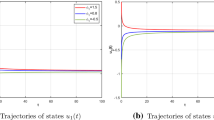

Thus, the conditions of Corollary 3.3 are satisfied. The state trajectories are depicted in Figs. 3 and 4 for different initial conditions and different fractional orders, respectively. The simulations agree with Corollary 3.3.

Remark 4.1

In particular, if the fractional order \(\alpha =1\), then system (1) reduces to an integer-order neutral-type delayed neural network [23, 25, 32,33,34,35,36,37,38]. From the proofs of Theorems 3.1 and 3.2, it is clear that the results of this paper still hold in the case \(\alpha =1\).

For the integer-order case, we carry out the following analysis to compare our results with existing ones in the literature.

Fig. 4 State trajectories of neural network model (1) with different fractional order \(\alpha =0.4,\ 0.6, \ 1\)

For the case \(\alpha =1\), similarly to [36], we consider the following network parameters:

We assume that \(\Vert E\Vert _2=\frac{1}{2}\) and \(k_1=k_2=k_3=k_4=1\). By computation, we have \(\Vert B\Vert _2=\Vert C\Vert _2=\frac{1}{4}, \ \lambda =1\). Here, we choose \(m=\frac{1}{2}, \beta =\gamma =\frac{1}{10}\). We first check the conditions of Corollary 3.3. In this case,

hence the conditions of Corollary 3.3 are satisfied if \(\eta <\frac{1}{6}\). When \(\frac{1}{12}\leqslant \eta <\frac{1}{6}\), the conditions of Corollary 3.3 are satisfied but the condition in [34] does not hold. Furthermore, the condition in [35] holds if \(\eta \leqslant \frac{\sqrt{3}}{24}\), which is more restrictive than the requirement \(\eta <\frac{1}{6}\) imposed by Corollary 3.3.
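For orientation, the three thresholds on \(\eta \) quoted above are ordered as follows, so every \(\eta \in [\frac{1}{12},\frac{1}{6})\) is admitted by Corollary 3.3 while the bound \(\eta \leqslant \frac{\sqrt{3}}{24}\) quoted for [35] is violated there:

\[
\frac{\sqrt{3}}{24}\approx 0.0722\;<\;\frac{1}{12}\approx 0.0833\;<\;\frac{1}{6}\approx 0.1667 .
\]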

Remark 4.2

Asymptotic stability criteria for integer-order neutral-type delayed neural networks were derived in [34, 35] under strict constraint conditions on the network parameters. Compared with the stability conditions in [34, 35], the results of this paper are more general, less conservative and easier to verify. The above numerical simulations further confirm the theoretical results.

5 Conclusions

In this paper, we construct an appropriate functional including fractional integral and fractional derivative terms and calculate its first-order derivative to derive delay-independent stability conditions. Several criteria for the delay-independent stability of Riemann–Liouville fractional neutral-type delayed neural networks are obtained. The proposed method avoids computing the fractional-order derivative of the Lyapunov functional. Furthermore, the results are expressed as matrix or algebraic inequalities, which are easy to check. The presented results contribute to the control and design of Riemann–Liouville fractional-order neutral-type delayed network systems. Future work will focus on the dynamical behaviors of Riemann–Liouville and Caputo fractional-order neutral-type neural networks with both leakage and time-varying delays.

References

[1] Wang JR, Zhou Y, Fečkan M (2012) Nonlinear impulsive problems for fractional differential equations and Ulam stability. Comput Math Appl 64(10):3389–3405

[2] Podlubny I (1999) Fractional differential equations. Academic Press, New York

[3] Kilbas AA, Srivastava HM, Trujillo JJ (2006) Theory and applications of fractional differential equations. Elsevier, Amsterdam

[4] Laskin N (2000) Fractional market dynamics. Phys A Stat Mech Appl 287(3–4):482–492

[5] Metzler R, Klafter J (2000) The random walk’s guide to anomalous diffusion: a fractional dynamics approach. Phys Rep 339(1):1–77

[6] Shimizu N, Zhang W (1999) Fractional calculus approach to dynamic problems of viscoelastic materials. JSME Int J Ser C Mech Syst Mach Elem Manuf 42:825–837

[7] Sabatier J, Agrawal OP, Machado JAT (2007) Advances in fractional calculus. Springer, Dordrecht

[8] Baleanu D (2012) Fractional dynamics and control. Springer, Berlin

[9] Magin R (2010) Fractional calculus models of complex dynamics in biological tissues. Comput Math Appl 59(5):1586–1593

[10] Li Y, Chen YQ, Podlubny I (2010) Stability of fractional-order nonlinear dynamic systems: Lyapunov direct method and generalized Mittag-Leffler stability. Comput Math Appl 59(5):1810–1821

[11] Liu S, Li XY, Jiang W, Zhou XF (2012) Mittag-Leffler stability of nonlinear fractional neutral singular systems. Commun Nonlinear Sci Numer Simul 17(10):3961–3966

[12] Wang JR, Lv LL, Zhou Y (2012) New concepts and results in stability of fractional differential equations. Commun Nonlinear Sci Numer Simul 17(6):2530–2538

[13] Duarte-Mermoud MA, Aguila-Camacho N, Gallegos JA, Castro-Linares R (2015) Using general quadratic Lyapunov functions to prove Lyapunov uniform stability for fractional order systems. Commun Nonlinear Sci Numer Simul 22(1–3):650–659

[14] Liu S, Zhou XF, Li XY, Jiang W (2016) Stability of fractional nonlinear singular systems and its applications in synchronization of complex dynamical networks. Nonlinear Dyn 84(4):2377–2385

[15] Lazarević MP, Spasić AM (2009) Finite-time stability analysis of fractional order time-delay systems: Gronwall’s approach. Math Comput Model 49(3–4):475–481

[16] Cao JD (2001) Global exponential stability of Hopfield neural networks. Int J Syst Sci 32(2):233–236

[17] Dharani S, Rakkiyappan R, Cao JD (2015) New delay-dependent stability criteria for switched Hopfield neural networks of neutral type with additive time-varying delay components. Neurocomputing 151(2):827–834

[18] Cao JD, Huang DS, Qu YZ (2005) Global robust stability of delayed recurrent neural networks. Chaos Solitons Fractals 23(1):221–229

[19] Liu Y, Wang ZD, Liu X (2006) Global exponential stability of generalized recurrent neural networks with discrete and distributed delays. Neural Netw 19(5):667–675

[20] Gong WQ, Liang JL, Cao JD (2015) Matrix measure method for global exponential stability of complex-valued recurrent neural networks with time-varying delays. Neural Netw 70:81–89

[21] Xu SY, Lam J, Ho DWC, Zou Y (2005) Novel global asymptotic stability criteria for delayed cellular neural networks. IEEE Trans Circuits Syst II Express Briefs 52(6):349–353

[22] Zhang Q, Wei XP, Xu J (2007) Stability of delayed cellular neural networks. Chaos Solitons Fractals 31(2):514–520

[23] Xiong WJ, Shi YB, Cao JD (2017) Stability analysis of two-dimensional neutral-type Cohen–Grossberg BAM neural networks. Neural Comput Appl 28(4):703–716

[24] Huang ZT, Luo XS, Yang QG (2007) Global asymptotic stability analysis of bidirectional associative memory neural networks with distributed delays and impulse. Chaos Solitons Fractals 34(3):878–885

[25] Park JH, Park CH, Kwon OM, Lee SM (2008) A new stability criterion for bidirectional associative memory neural networks of neutral-type. Appl Math Comput 199(2):716–722

[26] Wang F, Liang JL, Wang F (2016) Optimal control for discrete-time singular stochastic systems with input delay. Optim Control Appl Methods 37(6):1282–1313

[27] Qiao H, Peng JG, Xu ZB, Zhang B (2003) A reference model approach to stability analysis of neural networks. IEEE Trans Syst Man Cybern Part B Cybern 33(6):925–936

[28] Gong WQ, Liang JL, Zhang CJ, Cao JD (2016) Nonlinear measure approach for the stability analysis of complex-valued neural networks. Neural Process Lett 44(2):539–554

[29] Shen B, Wang ZD, Qiao H (2016) Event-triggered state estimation for discrete-time multidelayed neural networks with stochastic parameters and incomplete measurements. IEEE Trans Neural Netw Learn Syst 28(5):1152–1163

[30] Li Q, Shen B, Liu YR, Huang TW (2016) Event-triggered \(H_\infty \) state estimation for discrete-time neural networks with mixed time delays and sensor saturations. Neural Comput Appl. doi:10.1007/s00521-016-2271-2

[31] Wang F, Liang JL, Huang TW (2016) Synchronization of stochastic delayed multi-agent systems with uncertain communication links and directed topologies. IET Control Theory Appl 11(1):90–100

[32] Lien CH, Yu KW, Lin YF, Chung YJ, Chung LY (2008) Global exponential stability for uncertain delayed neural networks of neutral type with mixed time delays. IEEE Trans Syst Man Cybern Part B Cybern 38(3):709–720

[33] Cheng L, Hou ZG, Tan M (2008) A neutral-type delayed projection neural network for solving nonlinear variational inequalities. IEEE Trans Circuits Syst II Express Briefs 55(8):806–810

[34] Cheng CJ, Liao TL, Yan JJ, Hwang CC (2006) Globally asymptotic stability of a class of neutral-type neural networks with delays. IEEE Trans Syst Man Cybern Part B Cybern 36(5):1191–1195

[35] Samli R, Arik S (2009) New results for global stability of a class of neutral-type neural systems with time delays. Appl Math Comput 210(2):564–570

[36] Orman Z (2012) New sufficient conditions for global stability of neutral-type neural networks with time delays. Neurocomputing 97:141–148

[37] Rakkiyappan R, Zhu Q, Chandrasekar A (2014) Stability of stochastic neural networks of neutral type with Markovian jumping parameters: a delay-fractioning approach. J Frankl Inst 351(3):1553–1570

[38] Shi K, Zhong S, Zhu H, Liu X, Zeng Y (2015) New delay-dependent stability criteria for neutral-type neural networks with mixed random time-varying delays. Neurocomputing 168:896–907

[39] Kaslik E, Sivasundaram S (2012) Nonlinear dynamics and chaos in fractional-order neural networks. Neural Netw 32:245–256

[40] Song C, Cao JD (2014) Dynamics in fractional-order neural networks. Neurocomputing 142:494–498

[41] Ren FL, Cao F, Cao JD (2015) Mittag-Leffler stability and generalized Mittag-Leffler stability of fractional-order gene regulatory networks. Neurocomputing 160:185–190

[42] Stamova I (2014) Global Mittag-Leffler stability and synchronization of impulsive fractional-order neural networks with time-varying delays. Nonlinear Dyn 77(4):1251–1260

[43] Rakkiyappan R, Cao JD, Velmurugan G (2015) Existence and uniform stability analysis of fractional-order complex-valued neural networks with time delays. IEEE Trans Neural Netw Learn Syst 26(1):84–97

[44] Rakkiyappan R, Sivaranjani R, Velmurugan G, Cao JD (2016) Analysis of global \(o(t^{-\alpha })\) stability and global asymptotical periodicity for a class of fractional-order complex-valued neural networks with time varying delays. Neural Netw 77:51–69

[45] Yang XJ, Song QK, Liu YR, Zhao ZJ (2015) Finite-time stability analysis of fractional-order neural networks with delay. Neurocomputing 152:19–26

[46] Ding XS, Cao JD, Zhao X, Alsaadi FE (2017) Finite-time stability of fractional-order complex-valued neural networks with time delays. Neural Process Lett. doi:10.1007/s11063-017-9604-8

[47] Aguila-Camacho N, Duarte-Mermoud MA (2015) Comments on “fractional order Lyapunov stability theorem and its applications in synchronization of complex dynamical networks”. Commun Nonlinear Sci Numer Simul 25(1–3):145–148

[48] Hale JK, Verduyn Lunel SM (1993) Introduction to functional differential equations. Springer, New York

Acknowledgements

The authors are very grateful to the Editors, and the anonymous reviewers for their helpful and valuable comments and suggestions which improved the quality of the paper.


Additional information

This work is jointly supported by National Natural Science Fund of China (11301308, 61573096, 61272530), the 333 Engineering Fund of Jiangsu Province of China (BRA2015286), the Natural Science Fund of Anhui Province of China (1608085MA14), the Key Project of Natural Science Research of Anhui Higher Education Institutions of China (gxyqZD2016205, KJ2015A152), and the Natural Science Youth Fund of Jiangsu Province of China (BK20160660).


About this article

Cite this article

Zhang, H., Ye, R., Cao, J. et al. Delay-Independent Stability of Riemann–Liouville Fractional Neutral-Type Delayed Neural Networks. Neural Process Lett 47, 427–442 (2018). https://doi.org/10.1007/s11063-017-9658-7
