Abstract

In this paper, we propose an analytical approach based on the Laplace transform and Mittag–Leffler functions, combined with linear matrix inequality techniques, to study the finite-time stability of fractional-order neural networks (FONNs) with time-varying delay. The concept of finite-time stability is extended to fractional-order neural networks, and the delay function is assumed to be continuous and bounded but not necessarily differentiable. We first prove some important lemmas on the existence of solutions and on the estimation of the Caputo derivative of specific quadratic functions. Then, new delay-dependent sufficient conditions for the finite-time stability of FONNs with time-varying delay are derived in terms of a tractable linear matrix inequality and Mittag–Leffler functions. Finally, a numerical example with simulations is provided to demonstrate the effectiveness and validity of the theoretical results.


1 Introduction

In the real world, neural networks are widely used, for example in weather forecasting and business processes, because they can simulate and predict complex systems and relationships [1,2,3,4,5,6]. Fractional-order systems (FOSs) have attracted much attention over the past decades due to their important applications in various areas of applied science [7,8,9]. Fractional calculus has also been developed in the context of neural networks, such as artificial neural networks and Hopfield neural networks. In fact, the fractional-order derivative provides neurons with a fundamental and general computational ability that contributes to efficient information processing and frequency-independent phase shifts in oscillatory neuronal firings [10,11,12,13,14,15,16]. So far, most of the existing literature has been concerned with Lyapunov asymptotic stability; however, in many practical cases one is interested in the behavior of a system over a finite-time interval, i.e., in finite-time stability (FTS) [17]. The concept of FTS has been extended to control problems, which concern the design of admissible controllers ensuring the FTS of the closed-loop system. Many valuable results on finite-time control problems, such as finite-time stabilization, finite-time optimal control and adaptive fuzzy finite-time optimal control, have been obtained; see [18,19,20,21] and the references therein. Therefore, the problem of finite-time stability for neural networks described by fractional differential equations has attracted considerable attention. It is notable that most of the results on the stability of fractional-order neural networks did not consider time delay. In many practical applications, time delay is well known to be unavoidable, and it can cause oscillation or instability of the system.

There are various approaches to studying FTS for FOSs with delays, including the Lyapunov function method and the Gronwall and Hölder inequality approach. The authors of [22,23,24] studied the FTS of linear FOSs by using a generalized fractional Gronwall inequality. In [25], Yang et al. studied the FTS of fractional-order neural networks (FONNs) with delay. Chen et al. [26] used some Hölder-type inequalities to propose new criteria for FTS. Combining the Hölder and Gronwall inequalities, Wu et al. [27] obtained sufficient conditions for the FTS of FONNs with constant delay. Based on this approach, the authors of [28, 29] developed similar results for systems with proportional delays. On the other hand, although the Lyapunov–Krasovskii functional (LKF) method is one of the most powerful techniques for studying the stability of dynamical systems with delays, it cannot be readily applied to fractional-order time-delay systems. The difficulty lies in finding an LKF to which the fractional Lyapunov stability theorem can be applied. In [30,31,32,33], the authors used the fractional Lyapunov stability theorem to construct LKFs for FOSs with time-varying delay; however, the proofs of the main theorems contain a gap due to an incorrect application of the fractional Lyapunov stability theorem. Hence, it is worth investigating the stability of FONNs with time-varying delay. In [34], the authors provided sufficient conditions for the FTS of singular fractional-order systems with time-varying delay. Very recently, to avoid constructing an LKF, the authors of [35] employed a fractional-order Razumikhin stability theorem to derive criteria for \(H_\infty \) control of FONNs with time-varying delay. To our knowledge, the problem of FTS for fractional-order neural networks with time-varying delays has not yet been fully studied in the literature.

Motivated by the above discussion, in this paper we investigate the problem of FTS for a class of FONNs with time-varying delay. In particular, the time-varying delay is only required to be continuous and interval bounded, i.e., \(0<d_1\le d(t)\le d_2\). The contribution of this paper is twofold. First, for FONNs with interval time-varying delay, we prove some auxiliary lemmas on the existence of solutions and on estimating the Caputo derivative of certain quadratic functions. Second, using an analytical approach based on fractional calculus combined with the LMI technique, we provide sufficient conditions for FTS. The conditions are established in terms of a tractable LMI and Mittag–Leffler functions. It should be noted that the proposed approach, based on Laplace transforms and the inf–sup method, has not yet been used for FONNs with time-varying delay, and the stability conditions obtained in this paper are delay-dependent and novel.

The article is structured as follows. Section 2 presents the formulation of the problem and some auxiliary technical lemmas. In Sect. 3, the main result on FTS is presented together with an illustrative example and its simulation. Conclusions are given in Sect. 4.

Notations. \(\mathbb {R}^+\) denotes the set of positive real numbers; \(\mathbb {R}^n\) denotes the \(n\)-dimensional Euclidean space with the scalar product \(x^{\top}y;\) \(\mathbb {R}^{n\times r}\) denotes the space of all \((n\times r)\)-matrices; \(A^{\top}\) denotes the transpose of \(A\); a matrix \(A\) is positive semi-definite \((A\ge 0)\) if \(x^{\top }Ax\ge 0\) for all \(x\in \mathbb {R}^n;\) \(A\) is positive definite \((A>0)\) if \(x^{\top }Ax>0\) for all \(x\ne 0;\) \(A\ge B\) means \(A-B\ge 0\); \(C([-\tau ,0], \mathbb {R}^n)\) denotes the set of continuous vector-valued functions from \([-\tau ,0]\) to \(\mathbb {R}^n\).

2 Preliminaries

We first recall from [7] the basic concepts of fractional calculus and some auxiliary results for use in the next section.

Definition 1

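[7] For \(\alpha \in (0,1)\) and \( f\in L^1[0,T],\) the fractional integral \(I^{\alpha }f(t),\) the Riemann–Liouville derivative \(D_R^{\alpha }f(t)\) and the Caputo derivative \(D^\alpha _Cf(t)\) of order \(\alpha\) are defined, respectively, as

\[ I^{\alpha }f(t)=\frac{1}{\Gamma (\alpha )}\int _0^t (t-s)^{\alpha -1}f(s)\,ds,\qquad D_R^{\alpha }f(t)=\frac{d}{dt}I^{1-\alpha }f(t),\qquad D^{\alpha }_Cf(t)=D_R^{\alpha }\big [f(t)-f(0)\big ], \]

where \(t\in [0,T]\) and \(\Gamma (s) =\int \limits _{0}^{\infty }e^{-t}t^{s-1}dt,\ s > 0,\) is the Gamma function.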
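To make the Caputo derivative concrete, the sketch below approximates \(D^{\alpha }_Cf(t)=D_R^{\alpha }[f-f(0)](t)\) by the first-order Grünwald–Letnikov sum and compares it with the closed form \(D^{\alpha }_C t = t^{1-\alpha }/\Gamma (2-\alpha )\). This is an illustration only, assuming a uniform step \(h\) that divides \(t\); the function names are hypothetical and the scheme is not part of the paper's analysis.

```python
import math


def gl_weights(alpha, n):
    """Grünwald–Letnikov weights w_j = (-1)^j * binom(alpha, j), j = 0..n."""
    w = [1.0]
    for j in range(1, n + 1):
        w.append(w[-1] * (1.0 - (alpha + 1.0) / j))
    return w


def caputo_gl(f, alpha, t, h):
    """First-order approximation of D_C^alpha f(t) = D_R^alpha[f - f(0)](t).

    The Grünwald–Letnikov sum is applied to f(s) - f(0) on the grid s = t - j*h;
    h is assumed to divide t.
    """
    n = round(t / h)
    w = gl_weights(alpha, n)
    f0 = f(0.0)
    return sum(w[j] * (f(t - j * h) - f0) for j in range(n + 1)) / h ** alpha


alpha = 0.5
approx = caputo_gl(lambda s: s, alpha, t=1.0, h=1e-3)
exact = 1.0 / math.gamma(2.0 - alpha)  # D_C^alpha t = t^(1 - alpha) / Gamma(2 - alpha) at t = 1
print(approx, exact)
```

The error of this scheme is of order \(h\), so the two printed values agree to roughly three decimal places for \(h=10^{-3}\).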

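The function

\[ E_{\alpha ,\beta }(z)=\sum _{k=0}^{\infty }\frac{z^k}{\Gamma (\alpha k+\beta )},\qquad \alpha ,\beta >0, \]

denotes the two-parameter Mittag–Leffler function, and \(E_{\alpha }(z):=E_{\alpha ,1}(z).\) The Laplace transform of an integrable function g(.) is defined by \( \mathcal {L}[g(t)](s)=\int \limits _0^{\infty }e^{-s t}g(t)dt. \)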
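The Mittag–Leffler values that enter the stability conditions can be evaluated directly from the series \(E_{\alpha ,\beta }(z)=\sum _{k\ge 0} z^k/\Gamma (\alpha k+\beta )\). The sketch below truncates that series, assuming a moderate argument \(|z|\); the function name and the truncation length are illustrative choices, not taken from the paper. It can be checked against the known special cases \(E_{1}(z)=e^z\), \(E_{2}(-z^2)=\cos z\) and \(E_{1/2}(z)=e^{z^2}\operatorname{erfc}(-z)\).

```python
import math


def mittag_leffler(z, alpha, beta=1.0, terms=200):
    """Truncated series E_{alpha,beta}(z) = sum_{k>=0} z^k / Gamma(alpha*k + beta).

    Suitable for moderate |z| only: for large negative z the alternating series
    loses accuracy and dedicated algorithms should be used instead.
    """
    if z == 0:
        return 1.0 / math.gamma(beta)
    log_abs = math.log(abs(z))
    total = 0.0
    for k in range(terms):
        # |z|^k / Gamma(alpha*k + beta) evaluated in log form to avoid overflow
        mag = math.exp(k * log_abs - math.lgamma(alpha * k + beta))
        total += mag if (z > 0 or k % 2 == 0) else -mag
    return total


print(mittag_leffler(1.0, 1.0))   # approximately e, since E_1(z) = exp(z)
print(mittag_leffler(-1.0, 2.0))  # approximately cos(1), since E_2(-z^2) = cos(z)
```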

Lemma 1

[7] Assume that \(f_1(.), f_2(.)\) are exponentially bounded integrable functions on \(\mathbb {R}^+,\) and \(0<\alpha <1, \beta >0.\) Then

(i) \(\mathcal {L}[D^{\alpha }_Cf_1(t)](s)=s^{\alpha }\mathcal {L}[f_1(t)](s)-s^{\alpha -1}f_1(0), \)

(ii) \( \mathcal {L}[t^{\alpha -1} E_{\alpha ,\alpha }(\beta t^{\alpha })](s)=\dfrac{1}{s^{\alpha }-\beta }, \quad \ \mathcal {L}[ E_{\alpha }(\beta t^{\alpha }) ](s)=\dfrac{s^{\alpha -1}}{s^{\alpha }-\beta }\quad (s^{\alpha }>\beta ), \)

(iii) \( \mathcal {L}[f_1*f_2(t)](s)=\mathcal {L}[f_1(t)](s)\cdot \mathcal {L}[f_2(t)](s), \)

where \(f_1(t)*f_2(t):=\int \limits _{0}^t f_1(t-\tau )f_2(\tau )d\tau .\)
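The two Laplace-transform identities for Mittag–Leffler functions in Lemma 1 can be checked formally by transforming the series term by term. The sketch below assumes \(s>0\) with \(s^{\alpha }>\beta \), so that the geometric series converges, and uses the standard rule \(\mathcal {L}[t^{\gamma }](s)=\Gamma (\gamma +1)/s^{\gamma +1}\) for \(\gamma >-1\); it is a heuristic derivation, not a substitute for the proof in [7].

```latex
\begin{aligned}
\mathcal{L}[E_{\alpha}(\beta t^{\alpha})](s)
 &= \sum_{k=0}^{\infty}\frac{\beta^{k}}{\Gamma(\alpha k+1)}\,\mathcal{L}[t^{\alpha k}](s)
  = \sum_{k=0}^{\infty}\frac{\beta^{k}}{s^{\alpha k+1}}
  = \frac{1}{s}\cdot\frac{1}{1-\beta s^{-\alpha}}
  = \frac{s^{\alpha-1}}{s^{\alpha}-\beta},\\
\mathcal{L}[t^{\alpha-1}E_{\alpha,\alpha}(\beta t^{\alpha})](s)
 &= \sum_{k=0}^{\infty}\frac{\beta^{k}}{\Gamma(\alpha k+\alpha)}\,\mathcal{L}[t^{\alpha k+\alpha-1}](s)
  = \sum_{k=0}^{\infty}\frac{\beta^{k}}{s^{\alpha k+\alpha}}
  = \frac{1}{s^{\alpha}-\beta}.
\end{aligned}
```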

Consider the following FONNs with time-varying delay:

or in the matrix form:

where \(x(t)= (x_1(t), ..., x_n(t))^{\top }\) is the state; the delay d(t) satisfies \( 0<d_1\le d(t)\le d_2,\ \forall t\ge 0; \) \(\phi (t)= (\phi _1(t), ..., \phi _n(t))^{\top }\) is the initial condition with the norm

the neuron activation functions

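satisfy \(f(0)=0,\ g(0)=0,\) and, for all \(\xi ,\eta \in \mathbb {R},\ i=\overline{1,n},\) the Lipschitz conditions

\[ |f_i(\xi )-f_i(\eta )|\le l_i|\xi -\eta |,\qquad |g_i(\xi )-g_i(\eta )|\le k_i|\xi -\eta |; \qquad (3) \]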

\(M=diag(m_1,m_2,\dots ,m_n);\) \(F=(a_{ij})_{n\times n}\) and \(G=(b_{ij})_{n\times n}\) are the connection weight matrices, whose entries \(a_{ij}\) and \(b_{ij}\) describe the connection of the \(j^{th}\) neuron to the \(i^{th}\) neuron.

Definition 2

Let \(c_1,c_2, T\) be given positive numbers. System (1) is FTS with respect to \((c_1,c_2, T)\) if

Lemma 2

If \(\phi \in C([-d_2,0],\mathbb {R}^n)\) and condition (3) holds, then system (1) has a unique solution \(x\in C([-d_2,T],\mathbb {R}^n).\)

Proof

From the Volterra integral form of system (2), we have

and consider the function

where

Note that the function \(v_y(t)\) is continuous on [0, T] if \(y\in C([-d_2,T],\mathbb {R}^n),\) so the operator \(H(\cdot )\) maps \(C([-d_2,T],\mathbb {R}^n)\) into itself. Indeed, by the uniform continuity of \(v_y(t)\) on [0, T], there is a \(\delta >0\) such that for all \(t_1,t_2\in [0,T]\) with \(t_2\le t_1\) and

hence

which also shows the continuity of H(y)(t) on \([-d_2,T].\) Next, for \(t\in [0,T],\ y,z\in C([-d_2,T],\mathbb {R}^n):\)

which leads to

where \(\gamma _1= |M| + |F| \max \limits _i l_i+ |G| \max \limits _i k_i .\) Similarly, by induction, we have for \( m=1,2,\dots \)

Moreover, the space \(C([-d_2,T],\mathbb {R}^n)\) with the norm \(\Vert y\Vert =\sup \limits _{s\in [-d_2,T]} |y(s)|\) is a Banach space. Hence,

is a contraction with respect to this sup norm for sufficiently large \(m\). Applying the Banach fixed-point theorem, we obtain the existence of a unique solution \(x\in C([-d_2,T],\mathbb {R}^n).\) \(\square \)
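The induction estimate used above is not reproduced in this text. Assuming it has the standard form for Volterra operators with kernel \((t-s)^{\alpha -1}/\Gamma (\alpha )\), namely \(\Vert H^m y-H^m z\Vert \le \frac{(\gamma _1 T^{\alpha })^m}{\Gamma (m\alpha +1)}\Vert y-z\Vert \), the sketch below finds the smallest power \(m\) for which this constant drops below 1. It shows why "sufficiently large \(m\)" always exists: \(\Gamma (m\alpha +1)\) eventually outgrows any geometric factor. The values \(\gamma _1=2,\ T=10,\ \alpha =0.5\) are hypothetical.

```python
import math


def log_contraction_constant(gamma1, T, alpha, m):
    """log of (gamma1 * T**alpha)**m / Gamma(m*alpha + 1); log form avoids overflow."""
    return m * math.log(gamma1 * T ** alpha) - math.lgamma(m * alpha + 1.0)


def smallest_contracting_power(gamma1, T, alpha, m_max=10_000):
    """Smallest m whose assumed contraction constant is < 1 (i.e. its log is < 0)."""
    for m in range(1, m_max + 1):
        if log_contraction_constant(gamma1, T, alpha, m) < 0.0:
            return m
    raise ValueError("no contracting power found up to m_max")


# Hypothetical values: gamma1 = 2, T = 10, alpha = 0.5
print(smallest_contracting_power(2.0, 10.0, 0.5))
```

Note that \(H\) itself need not be a contraction on \([-d_2,T]\) when \(\gamma _1T^{\alpha }\) is large; only some power \(H^m\) is, which is enough for a unique fixed point.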

Lemma 3

[34] For \(d>0\) and \(N> 0,\) if a function \(S: [-d,N]\rightarrow \mathbb {R}^+\) is non-decreasing and satisfies

then

3 Main result

This section provides new conditions for the FTS of system (1) in terms of a tractable LMI and a Mittag–Leffler condition. Before stating the theorem, let \([d]\) denote the integer part of a number \(d\), and set

Theorem 1

Let \(c_1, c_2, T\) be given positive numbers. System (1) is FTS with respect to \((c_1,c_2,T)\) if there exist a number \(\beta >0\) and a symmetric matrix \(P >0\) such that

Proof

Let us consider the non-negative quadratic function \( V(x(t))=x(t)^{\top }Px(t). \) Since the solution \(x(t)\) may not be differentiable, we first establish the following estimate of the Caputo derivative of \(V(x(t))\).

Lemma 4

For the solution \(x(t)\in C([-d_2,T],\mathbb {R}^n),\) the Caputo derivative \(D^{\alpha }_C(V(x(t)))\) exists, belongs to \(C([0,T],\mathbb {R}),\) and \( D^{\alpha }_C[V(x(t))]\le 2x(t)^{\top }P D^{\alpha }_C x(t),\quad t\in [0,T]. \)

To prove the lemma, we note that since \(x(t)\in C([-d_2,T],\mathbb {R}^n)\) (by Lemma 2), the function

is continuous on [0, T]. Hence, we get

as \(t\rightarrow 0.\) In other words,

Consequently,

It is easy to calculate the following integral

From Theorem 2.2 of [36] it follows that \( D^{\alpha }_Cx=u\in C([0,T],\mathbb {R}^n),\) and when \(\xi \rightarrow 1^-,\) we have

when \(\xi \rightarrow 1^-,\) and

Hence, for \(0\le \xi t\le \tau < t\le T,\ \xi \in (0,1],\) we obtain that

where \(c\in (\tau ,t).\) Thus, as \(\xi \rightarrow 1^-,\) we get

because \(k(\xi )\) is independent of \(\tau , t,\) and \(x_0\in H_0^{\alpha }[0,T].\) From (8), (9) and (10), as \(\xi \rightarrow 1^-,\)

Using Theorem 2.2 of [36] together with (7) and (11), we conclude that \(D^{\alpha }_CV(x(t))\) exists, belongs to \(C[0,T]\), and

Moreover, \(D^{\alpha }_C x\in C[0,T]\) and

The identities (12) and (13) imply that \(D^{\alpha }_C(V(x(t)))- 2(x(t),P D^{\alpha }_C x(t) )=0\) at \(t=0,\) and, for \(t\in (0,T],\) that

which completes the proof of Lemma 4.
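As a sanity check on Lemma 4, consider the scalar case \(n=1\), \(P=p>0\) and the non-smooth test function \(x(t)=t^{g}\) with \(g\ge \alpha \) (so that \(D^{\alpha }_C x\) is continuous on \([0,T]\)). Both sides of the inequality then have closed forms via \(D^{\alpha }_C t^{g}=\frac{\Gamma (g+1)}{\Gamma (g+1-\alpha )}t^{g-\alpha }\). The sketch below evaluates them; the function names are hypothetical, and this only illustrates the lemma on one family of functions rather than proving it.

```python
import math


def caputo_power(g, alpha, t):
    """Caputo derivative of t**g (g > 0): Gamma(g+1)/Gamma(g+1-alpha) * t**(g-alpha)."""
    return math.gamma(g + 1.0) / math.gamma(g + 1.0 - alpha) * t ** (g - alpha)


def lemma4_sides(g, alpha, t, p=1.0):
    """Scalar case n = 1, P = p > 0, x(t) = t**g: return (D^alpha[p x^2], 2 x p D^alpha x)."""
    lhs = p * caputo_power(2.0 * g, alpha, t)
    rhs = 2.0 * p * t ** g * caputo_power(g, alpha, t)
    return lhs, rhs


lhs, rhs = lemma4_sides(1.0, 0.5, 1.0)
print(lhs, rhs)   # lhs < rhs, as Lemma 4 predicts
```

For \(x(t)=t\) and \(\alpha =0.5\) the two sides are \(2/\Gamma (2.5)\) and \(2/\Gamma (1.5)\), so the inequality is strict; in general the chain rule of classical calculus is replaced by this one-sided estimate.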

To finish the theorem’s proof, denoting

we obtain, by using Lemma 4, that

because \(\Vert f(\cdot )\Vert ^2\le \max \limits _i l_i^2\ \Vert x(t)\Vert ^2\) and \([E_{ij}]_{3\times 3}<0\) by condition (4). Let

Applying the Laplace transform to both sides of (15) and using Lemma 1-(i) gives

equivalently

Applying Lemma 1-(ii) and (iii), we obtain

hence

Taking the inverse Laplace transform of the derived equation gives
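The Laplace-transform step above follows the usual variation-of-constants pattern for Caputo equations. Since the exact right-hand side of (15) is not reproduced in this text, the sketch below shows the same three steps (Lemma 1-(i), then Lemma 1-(ii) and (iii)) for a generic scalar equation \(D^{\alpha }_C w(t)=\lambda w(t)+u(t)\) with a hypothetical constant \(\lambda >0\) and forcing \(u\); \(W\) and \(U\) denote the Laplace transforms of \(w\) and \(u\).

```latex
\begin{aligned}
D^{\alpha}_C w(t) &= \lambda w(t) + u(t), \qquad w(0)=w_0,\\
s^{\alpha}W(s) - s^{\alpha-1}w_0 &= \lambda W(s) + U(s)
  &&\text{(Lemma 1-(i))},\\
W(s) &= \frac{s^{\alpha-1}}{s^{\alpha}-\lambda}\,w_0 + \frac{1}{s^{\alpha}-\lambda}\,U(s),\\
w(t) &= w_0\,E_{\alpha}(\lambda t^{\alpha})
  + \int_0^t (t-\tau)^{\alpha-1}E_{\alpha,\alpha}\big(\lambda (t-\tau)^{\alpha}\big)\,u(\tau)\,d\tau
  &&\text{(Lemma 1-(ii), (iii))}.
\end{aligned}
```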

Using (14) and the inequality (3) we have

then

Moreover, we have

Applying Lemma 3 with \( S(t)=\sup \limits _{\theta \in [-d_2,t]}V(x(\theta )),\ a=E_{\alpha }(d_2 T^{\alpha }), \) \(b=E_{\alpha }(d_2 T^{\alpha }) -1, \) and using (18), we obtain

where \( q=E_{\alpha }(d_2 T^{\alpha })\sum \limits _{j=0}^{[T/d_1]+1}(E_{\alpha }(d_2 T^{\alpha }) -1)^j . \) For \(t\in [0,T],\) conditions (5) and (19) show that

which shows that system (1) is FTS with respect to \((c_1,c_2,T).\)
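The constant \(q\) in the proof is explicit and can be evaluated once \(\alpha ,d_1,d_2,T\) are fixed. The sketch below computes it exactly as written, \(q=E_{\alpha }(d_2T^{\alpha })\sum _{j=0}^{[T/d_1]+1}(E_{\alpha }(d_2T^{\alpha })-1)^j\), using a truncated Mittag–Leffler series (adequate here because the argument \(d_2T^{\alpha }\) is positive and moderate). The function names are hypothetical; the sample call uses \(\alpha =0.5,\ d_1=0.1,\ d_2=0.15,\ T=10\) from Example 1.

```python
import math


def mittag_leffler(z, alpha, terms=200):
    """Truncated series E_alpha(z) = sum_k z^k / Gamma(alpha*k + 1), for z >= 0."""
    if z == 0:
        return 1.0
    return sum(math.exp(k * math.log(z) - math.lgamma(alpha * k + 1.0)) for k in range(terms))


def q_constant(alpha, d1, d2, T):
    """q = E_alpha(d2 T^alpha) * sum_{j=0}^{[T/d1]+1} (E_alpha(d2 T^alpha) - 1)^j."""
    a = mittag_leffler(d2 * T ** alpha, alpha)
    n = math.floor(T / d1) + 1  # [T/d1] + 1; floating-point division may need care near integers
    return a * sum((a - 1.0) ** j for j in range(n + 1))


print(q_constant(0.5, 0.1, 0.15, 10.0))  # alpha, d1, d2, T of Example 1
```

When \(E_{\alpha }(d_2T^{\alpha })<2\) the sum is a convergent-type geometric sum and \(q\) stays moderate; otherwise \(q\) grows geometrically with \([T/d_1]\), which makes condition (5) harder to satisfy.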

Remark 1

Note that the numbers \(c_1, c_2\) do not appear in the LMI (4). Hence, one can first find \(P,\beta \) by solving LMI (4), and then condition (5) can be easily verified.
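When an LMI solver returns numerical values for \(P\) and \(\beta \), it can be worth double-checking definiteness before verifying condition (5), because solvers work to a tolerance. The sketch below is a generic check, not the paper's LMI (4), whose blocks are not reproduced here: it tests positive definiteness of a symmetric matrix with a Cholesky factorization, and negative definiteness (as required of \([E_{ij}]_{3\times 3}\)) by applying the same test to \(-A\). The function names and the tolerance are hypothetical.

```python
import math


def is_positive_definite(A, tol=1e-12):
    """Cholesky test for a symmetric matrix given as a list of lists."""
    n = len(A)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = A[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                if s <= tol:          # non-positive pivot: not positive definite
                    return False
                L[i][i] = math.sqrt(s)
            else:
                L[i][j] = s / L[j][j]
    return True


def is_negative_definite(A, tol=1e-12):
    """A < 0 exactly when -A > 0."""
    return is_positive_definite([[-a for a in row] for row in A], tol)


print(is_positive_definite([[2.0, 1.0], [1.0, 2.0]]))  # eigenvalues 1 and 3
```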

Remark 2

Theorem 1 provides delay-dependent sufficient conditions for the finite-time stability of FONNs with an interval time-varying delay that is not required to be differentiable; hence, it extends some existing results obtained in [23, 30,31,32,33], where the time delay is assumed to be differentiable. Moreover, for the derivative order \(\alpha =1,\) system (1) reduces to a classical (integer-order) neural network with time-varying delay, and some existing results on the FTS of such systems obtained in [4, 34, 37,38,39] can be derived from Theorem 1.

Remark 3

It should be pointed out that the main advantage of this paper is an approach based on the Laplace transform combined with the inf–sup method for studying the stability of FONNs with interval time-varying delay without using the fractional Lyapunov stability theorem.

Example 1

Consider FONNs (1) with the following system parameters

the neuron activation functions \(f,g: \mathbb {R}^2\rightarrow \mathbb {R}^2\) defined by

for all \(t\in \mathbb {R},\ (x_1,x_2)\in \mathbb {R}^2.\)

It can be shown that \( 0<d_1=0.1\le d(t)\le d_2=0.15, \) \(f(0)=g(0)=0,\) and that the neuron activation functions satisfy the Lipschitz conditions (3) with \( l_1=l_2=k_1=k_2=0.1. \) Since the delay function d(t) is non-differentiable, the methods used in [20, 30,31,32,33] cannot be applied. Using the LMI Control Toolbox in MATLAB [40], we find the following solution of (4):

In this case, it can be computed that

For \(c_1=1,\ c_2=4,\ T=10,\) we can check the condition (5) as

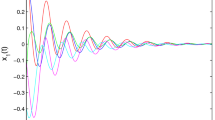

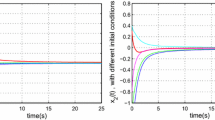

Hence, by Theorem 1, system (1) is FTS with respect to (1, 4, 10). Figures 1 and 2 show the time history of \(\Vert x(t)\Vert ^2\) for the initial condition \(\phi (t)=[0.65, 0.65],\ t\in [-0.15,\ 0],\) with \(\alpha = 0.5\) and \(\alpha = 0.6\), respectively.
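A time history like those in Figures 1 and 2 can be produced with a simple explicit Grünwald–Letnikov scheme. The sketch below assumes that (1) has the common form \(D^{\alpha }_Cx(t)=-Mx(t)+Ff(x(t))+Gg(x(t-d(t)))\), which is consistent with the constant \(\gamma _1\) in Lemma 2 but is an assumption here. Apart from \(\alpha =0.5\) and \(\phi =[0.65,0.65]\), taken from Example 1, every numerical value (the matrices, the activations \(0.1\tanh \), the delay \(d(t)=0.1+0.05|\sin t|\), the horizon and step) is hypothetical, and the scheme is a first-order illustration rather than the authors' simulation method.

```python
import math


def simulate_fonn(alpha, M, F, G, f, g, phi, delay, T, h):
    """Explicit Grünwald–Letnikov sketch for the ASSUMED model
        D_C^alpha x(t) = -M x(t) + F f(x(t)) + G g(x(t - d(t))),  x(t) = phi for t <= 0,
    with phi a constant vector. Returns grid states x_k ~ x(k*h), k = 0..T/h.
    """
    n = len(phi)
    steps = round(T / h)
    w = [1.0]  # GL weights w_j = (-1)^j * binom(alpha, j)
    for j in range(1, steps + 1):
        w.append(w[-1] * (1.0 - (alpha + 1.0) / j))
    x0 = list(phi)
    xs = [x0]

    def state_at(t):
        # Constant initial function for t <= 0, else the latest grid value at or before t.
        if t <= 0.0:
            return x0
        return xs[min(int(t / h), len(xs) - 1)]

    for k in range(1, steps + 1):
        t_prev = (k - 1) * h
        x_prev = xs[k - 1]
        fx = f(x_prev)
        gx = g(state_at(t_prev - delay(t_prev)))
        rhs = [-sum(M[i][j] * x_prev[j] for j in range(n))
               + sum(F[i][j] * fx[j] for j in range(n))
               + sum(G[i][j] * gx[j] for j in range(n))
               for i in range(n)]
        # Memory term of the Caputo derivative: sum_{j>=1} w_j (x_{k-j} - x_0)
        memory = [sum(w[j] * (xs[k - j][i] - x0[i]) for j in range(1, k + 1))
                  for i in range(n)]
        xs.append([x0[i] + h ** alpha * rhs[i] - memory[i] for i in range(n)])
    return xs


# Hypothetical parameters (not the matrices of Example 1)
M = [[3.0, 0.0], [0.0, 3.0]]
F = [[0.2, -0.1], [0.1, 0.2]]
G = [[-0.1, 0.2], [0.1, -0.2]]
act = lambda x: [0.1 * math.tanh(v) for v in x]      # Lipschitz constant 0.1
delay = lambda t: 0.1 + 0.05 * abs(math.sin(t))       # in [0.1, 0.15], non-differentiable at t = k*pi
traj = simulate_fonn(0.5, M, F, G, act, act, [0.65, 0.65], delay, T=2.0, h=0.01)
norms = [x[0] ** 2 + x[1] ** 2 for x in traj]
print(max(norms), norms[-1])
```

Comparing `max(norms)` with \(c_2\) gives an empirical FTS check on \([0,T]\). The memory sum makes the cost quadratic in the number of steps, which is why a short horizon is used here; for \(\alpha =1\) the scheme reduces to the forward Euler method.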

4 Conclusions

In this paper, the finite-time stability problem for a class of FONNs with interval time-varying delay has been addressed. Based on a novel analytical approach, delay-dependent sufficient conditions for FTS have been proposed. The conditions are presented in the form of a tractable LMI and Mittag–Leffler functions. Finite-time stability analysis of FONNs with unbounded time-varying delay is an interesting topic for future study, and the extension of this study to non-autonomous FONNs with delays remains an open problem.

References

Forti M, Tesi A (1995) New conditions for global stability of neural networks with application to linear and quadratic programming problems. IEEE Trans Circuits Syst I Fundam Theory Appl 42(7):354–366

Arik S (2002) An analysis of global asymptotic stability of delayed cellular neural networks. IEEE Trans Neural Netw 13(5):1239–1242

Gupta D, Cohn LF (2012) Intelligent transportation systems and NO2 emissions: predictive modeling approach using artificial neural networks. J Infrastruct Syst 18(2):113–118

Phat VN, Fernando T, Trinh H (2014) Observer-based control for time-varying delay neural networks with nonlinear observation. Neural Computing and Applications 24:1639–1645

Abdelsalam SI, Velasco-Hernandez JX, Zaher AZ (2012) Electro-magnetically modulated self-propulsion of swimming sperms via cervical canal. Biomech Model Mechanobiol 20:861–878

Abdelsalam SI, Bhatti MM (2020) Anomalous reactivity of thermo-bioconvective nanofluid towards oxytactic microorganisms. Appl Math Mech 41:711–724

Kilbas AA, Srivastava HM, Trujillo JJ (2006) Theory and Applications of Fractional Differential Equations. Elsevier, Amsterdam

Bhatti MM, Alamri SZ, Ellahi R, Abdelsalam SI (2021) Intra-uterine particle-fluid motion through a compliant asymmetric tapered channel with heat transfer. J Therm Anal Calorim 144:2259–2267

Elmaboud YA, Abdelsalam SI (2019) DC/AC magnetohydrodynamic-micropump of a generalized Burger’s fluid in an annulus. Phys Scr 94(11):115209

Lundstrom B, Higgs M, Spain W, Fairhall A (2008) Fractional differentiation by neocortical pyramidal neurons. Nat Neurosci 11:1335–1342

Zhang S, Yu Y, Wang H (2015) Mittag-Leffler stability of fractional-order Hopfield neural networks. Nonlinear Anal. Hybrid Syst. 16:104–121

Chen L, Liu C, Wu R et al. (2016) Finite-time stability criteria for a class of fractional-order neural networks with delay. Neural Computing and Applications 27:549–556

Liu S, Yu Y, Zhang S (2019) Robust synchronization of memristor-based fractional-order Hopfield neural networks with parameter uncertainties. Neural Computing and Applications 31:3533–3542

Baleanu D, Asad JH, Petras I (2012) Fractional-order two-electric pendulum. Rom Rep Phys 64(4):907–914

Baleanu D, Petras I, Asad JH, Velasco MP (2012) Fractional Pais-Uhlenbeck oscillator. Int J Theor Phys 51(4):1253–1258

Baleanu D, Asad JH, Petras I (2015) Numerical solution of the fractional Euler-Lagrange’s equations of a thin elastica model. Nonlinear Dyn 81(1–2):97–102

Dorato P (1961) Short time stability in linear time-varying systems. Proceedings of IRE International Convention Record 4:83–87

Amato F, Ambrosino R, Ariola M, Cosentino C (2014) Finite-Time Stability and Control. Lecture Notes in Control and Information Sciences. Springer, New York

Cai M, Xiang Z (2015) Adaptive fuzzy finite-time control for a class of switched nonlinear systems with unknown control coefficients. Neurocomputing 162:105–115

Fan Y, Li Y (2020) Adaptive fuzzy finite-time optimal control for switched nonlinear systems. Optim Control Appl Methods. https://doi.org/10.1002/oca.2623

Ruan Y, Huang T (2020) Finite-time control for nonlinear systems with time-varying delay and exogenous disturbance. Symmetry 12(3):447

Lazarević MP, Spasić AM (2009) Finite-time stability analysis of fractional order time-delay systems: Gronwall’s approach. Math Comput Model 49:475–481

Phat VN, Thanh NT (2018) New criteria for finite-time stability of nonlinear fractional-order delay systems: a Gronwall inequality approach. Appl Math Lett 83:169–175

Ye H, Gao J, Ding Y (2007) A generalized Gronwall inequality and its application to a fractional differential equation. J Math Anal Appl 328:1075–1081

Yang X, Song Q, Liu Y, Zhao Z (2015) Finite-time stability analysis of fractional-order neural networks with delay. Neurocomputing 152:19–26

Chen L, Liu C, Wu R, He Y, Chai Y (2016) Finite-time stability criteria for a class of fractional-order neural networks with delay. Neural Computing and Applications 27:549–556

Wu RC, Lu YF, Chen LP (2015) Finite-time stability of fractional delayed neural networks. Neurocomputing 149:700–707

Xu C, Li P (2019) On finite-time stability for fractional-order neural networks with proportional delays. Neural Processing Letters 50:1241–1256

Rajivganthi C, Rihan FA, Lakshmanan S, Muthukumar P (2018) Finite-time stability analysis for fractional-order Cohen-Grossberg BAM neural networks with time delays. Neural Computing and Applications 29:1309–1320

Hua C, Zhang T, Li Y, Guan X (2016) Robust output feedback control for fractional-order nonlinear systems with time-varying delays. IEEE/CAA J Autom Sinica 3:47–482

Zhang H, Ye R, Cao J, Ahmed A et al (2018) Lyapunov functional approach to stability analysis of Riemann-Liouville fractional neural networks with time-varying delays. Asian J Control 20:1–14

Zhang H, Ye R, Liu S et al (2018) LMI-based approach to stability analysis for fractional-order neural networks with discrete and distributed delays. Int J Syst Sci 49:537–545

Zhang F, Zeng Z (2020) Multistability of fractional-order neural networks with unbounded time-varying delays. IEEE Trans Neural Netw Learn Syst. https://doi.org/10.1109/TNNLS.2020.2977994

Thanh NT, Niamsup P, Phat VN (2020) New finite-time stability analysis of singular fractional differential equations with time-varying delay. Fract Calc Appl Anal 23:504–517

Sau NH, Hong DT, Huyen NT et al (2021) Delay-dependent and order-dependent \(H_\infty \) control for fractional-order neural networks with time-varying delay. Differ Equ Dyn Syst. https://doi.org/10.1007/s12591-020-00559-z

Vainikko G (2016) Which functions are fractionally differentiable? Zeitschrift für Analysis und ihre Anwendungen 35:465–48

Cheng J, Zhong S, Zhong Q, Zhu H, Du YH (2014) Finite-time boundedness of state estimation for neural networks with time-varying delays. Neurocomputing 129:257–264

Prasertsang P, Botmart T (2021) Improvement of finite-time stability for delayed neural networks via a new Lyapunov-Krasovskii functional. AIMS Math 6(1):998–1023

Saravanan S, Ali MS (2018) Improved results on finite-time stability analysis of neural networks with time-varying delays. J Dyn Syst Meas Control 140(10):101003

Gahinet P, Nemirovskii A, Laub AJ, Chilali M (1995) LMI Control Toolbox for use with MATLAB. The MathWorks Inc, Massachusetts

Acknowledgements

This paper was written while the authors were studying at the Vietnam Institute for Advanced Study in Mathematics (VIASM). We sincerely thank the Institute for its support and hospitality. The research of N.T. Thanh and V.N. Phat is supported by the National Foundation for Science and Technology Development (No. 101.01-2021.01). The research of P. Niamsup is supported by Chiang Mai University, Thailand. The authors wish to thank the associate editor and anonymous reviewers for their valuable comments and suggestions, which allowed us to improve the paper.


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest related to this work.


Cite this article

Thanh, N.T., Niamsup, P. & Phat, V.N. New results on finite-time stability of fractional-order neural networks with time-varying delay. Neural Comput & Applic 33, 17489–17496 (2021). https://doi.org/10.1007/s00521-021-06339-2


DOI: https://doi.org/10.1007/s00521-021-06339-2