Abstract

Based on the nonlinear measure method and matrix inequality techniques, this paper addresses the global asymptotic stability of complex-valued neural networks with delay. Furthermore, the robust stability of the addressed neural networks with norm-bounded parameter uncertainties is also tackled. By constructing an appropriate Lyapunov functional candidate, several sufficient criteria are obtained to ascertain the existence, uniqueness and global stability of the equilibrium point of the addressed complex-valued neural networks; these criteria are easy to verify and implement in practice. Finally, an example is given to illustrate the effectiveness of the obtained results.

1 Introduction

In the past decades, increasing attention has been paid to the dynamical behaviors of neural networks owing to their extensive applications in associative memory, pattern recognition and classification, engineering optimization, image processing, signal processing and so on; see Refs. [1–6] and the references cited therein. Complex-valued signals frequently arise in practical applications, which has motivated the introduction and study of complex-valued neural networks [7]. Complex-valued neural networks differ from real-valued ones in many respects: their states, connection weights and activation functions are all complex-valued. In fact, complex-valued networks have much richer properties than real-valued ones, which makes it possible to solve many problems that cannot be solved by their real-valued counterparts. For example, neither the XOR problem nor the symmetry detection problem can be solved by a single real-valued neuron, whereas both can be solved by a single complex-valued neuron with orthogonal decision boundaries [8]. Therefore, it is necessary to investigate the dynamics of complex-valued neural networks, especially their stability.

Research interest in the dynamical analysis of complex-valued networks has grown steadily; see, for example, Refs. [9–14]. In [15], by utilizing the method of local inhibition and energy minimization, several criteria have been obtained to guarantee the complete stability and boundedness of continuous-time complex-valued neural networks with delay. In [16], the activation dynamics of complex-valued neural networks on a general time scale have been investigated, and the global exponential stability of the considered networks has also been discussed. In [17], a class of discrete-time neural networks with complex-valued linear threshold neurons has been studied, and conditions have been derived to ascertain the global attractivity, boundedness and complete stability of such networks. For more work on stability analysis, one may refer to Refs. [18, 19].

In practice, time delays arise from the finite switching speed of amplifiers and also occur during signal transmission in the electronic implementation of neural networks; they may greatly affect the dynamical behaviors of the networks, causing instability, bifurcation and oscillation [20, 21]. Hence, it is very important to study the dynamics of delayed complex-valued neural networks, and many results in this area have been obtained in the past several years [22]. For example, in [23], a delayed complex-valued recurrent neural network with two classes of complex-valued activation functions has been investigated, and several sufficient criteria have been presented to assure the existence, uniqueness and global asymptotic/exponential stability of its equilibrium point. On another front, activation functions also play a remarkable role in the dynamics of neural systems. By Liouville's theorem [24], every bounded entire function in the complex domain must be constant. Consequently, activation functions that are both analytic on the whole complex plane and bounded, the natural analogue of the smooth bounded activation functions used in real-valued neural networks, are trivial and unsuitable for complex-valued networks. Choosing appropriate activation functions for complex-valued networks is therefore a major challenge [25]. It should be noted that the restrictions imposed on the activation functions in Refs. [23, 26] are too strong, which forms the first motivation of the present paper.

Normally, the parameters of neural networks, including the release rates of neurons and the connection weights, may be subject to deviations owing to the tolerances of the electronic components used in the design or electronic implementation of neural networks. Moreover, the stability of neural networks may be destroyed by modeling errors, parameter fluctuations or external disturbances. Hence, it is important and necessary to study the robust stability of neural networks. For real-valued neural networks, many significant results have been developed in the literature [27–29]. For complex-valued networks, however, to the best of the authors' knowledge, only a few results are available [9], which motivates us to further consider the global robust stability of complex-valued neural networks.

There are various approaches for investigating the dynamics of neural networks, such as the Lyapunov function method, the energy function method and the matrix measure method. In [30], the global asymptotic stability of complex-valued neural networks has been studied by constructing an appropriate Lyapunov function. In [31], the properties of activation functions have been discussed via the energy function method in order to find complex functions with good properties. There are also some results on the stability of neural networks obtained with the matrix measure method [32, 33]. However, few results have applied the nonlinear measure method to the global stability of complex-valued networks with delay, which forms another motivation for the present research.

Inspired by the above discussions, under a general class of activation functions, several sufficient conditions are derived that ensure the global asymptotic stability of the addressed complex-valued network with and without parameter uncertainties, by utilizing the nonlinear measure method and matrix inequality techniques. Compared with previous related works on complex-valued systems, the main contributions of the present paper can be summarized as follows. (1) The restrictions on the activation functions are relaxed, i.e., neither the real nor the imaginary parts of the activation functions are required to be differentiable. (2) Global asymptotic stability is investigated for complex-valued neural networks with deterministic as well as norm-bounded uncertain parameters. (3) A novel nonlinear measure approach is developed to investigate the stability of the neural system, which makes it easy to ascertain the existence and uniqueness of the equilibrium point of the networks.

The remainder of the paper is organized as follows. In Sect. 2, the complex-valued model is presented and some preliminaries are briefly outlined. In Sect. 3, a novel nonlinear measure approach is employed and, by constructing an appropriate Lyapunov functional candidate, several criteria are proposed to ascertain the global stability of the complex-valued networks with and without parameter uncertainties. In Sect. 4, a numerical example is given to show the effectiveness of the obtained conditions. Finally, conclusions are drawn in Sect. 5.

Notations The notation used throughout this paper is fairly standard. \(\mathbb{C}^n\), \(\mathbb{C}^{m\times n}\) and \(\mathbb{R}^{m\times n}\) denote the set of n-dimensional complex vectors, the set of \(m\times n\) complex matrices and the set of \(m\times n\) real matrices, respectively. \(i=\sqrt{-1}\) denotes the imaginary unit. The superscript ‘T’ denotes matrix transposition. \(X \ge Y\) (respectively, \(X > Y\)) means that \(X-Y\) is real, symmetric and positive semi-definite (respectively, positive definite). \(P^R\) and \(P^I\) denote, respectively, the real and imaginary parts of a matrix \(P\in \mathbb{C}^{m\times n}\). \(\otimes\) and \(\langle\cdot,\cdot\rangle\) denote, respectively, the Kronecker product and the inner product of vectors.

2 Problem Formulation and some Preliminaries

Consider the complex-valued neural network described by the following nonlinear delay differential equations:

\[\dot{u}(t)=-Cu(t)+Af(u(t))+Bg(u(t-\tau))+L, \tag{1}\]

where \(u(t) = (u_1(t),u_2(t), \ldots ,u_n(t))^T \in \mathbb{C}^n\) is the state vector of the neural network with n neurons at time t; \(C = \mathrm{diag}\{c_1, c_2, \ldots, c_n\} \in \mathbb{R}^{n\times n}\) with \(c_k>0\) (\(k=1,2,\ldots ,n\)) is the self-feedback connection weight matrix; \(A = (a_{kj})_{n \times n} \in \mathbb{C}^{n\times n}\) and \(B = (b_{kj})_{n \times n} \in \mathbb{C}^{n\times n}\) are, respectively, the connection weight matrix and the delayed connection weight matrix; \(L=(l_1,l_2,\ldots ,l_n)^T \in \mathbb{C}^n\) is the external input vector; \(f(u(t)) = (f_1(u_1(t)),f_2(u_2(t)), \ldots ,f_n(u_n(t)))^T : \mathbb{C}^n \rightarrow \mathbb{C}^n\) and \(g(u(t-\tau)) = (g_1(u_1(t-\tau)),g_2(u_2(t-\tau)), \ldots ,g_n(u_n(t-\tau)))^T : \mathbb{C}^n \rightarrow \mathbb{C}^n\) denote, respectively, the vector-valued activation functions without and with time delay, where \(\tau\) is the transmission delay. The nonlinear activation functions are assumed to satisfy the following conditions.

Assumption 1

For \(u=x+iy\in \mathbb{C}\) with \(x, y\in \mathbb{R}\), \(f_k(u)\) and \(g_k(u)\) are expressed as

\[f_k(u)=f_k^R(x,y)+if_k^I(x,y),\qquad g_k(u)=g_k^R(x,y)+ig_k^I(x,y),\]

where \(k=1, 2, \cdots , n\). There exist positive constants \(\lambda _{k}^{RR},\lambda _{k}^{RI},\lambda _{k}^{IR},\lambda _{k}^{II}\) and \(\xi _k^{RR},\xi _k^{RI},\xi _k^{IR},\xi _k^{II}\) such that the following inequalities

hold for any \(x_1, x_2, y_1, y_2 \in \mathbb{R}\).

Remark 1

In Assumption 1 of Refs. [23] and [26], where the global stability of complex-valued neural networks with time-varying delays has been studied, the activation functions are assumed to have the same form as above, with the additional restriction that the partial derivatives of \(f_j^R(\cdot ,\cdot )\) and \(f_j^I(\cdot ,\cdot )\) exist and are continuous. In this paper, by contrast, neither the real nor the imaginary parts of the activation functions are required to be differentiable.

Denote \(u(t)=x(t)+iy(t)\) with \(x(t), y(t)\in \mathbb {R}^n\), then the complex-valued neural network (1) can be rewritten as follows:

where

The aim of this paper is to derive conditions, via the nonlinear measure method, under which system (2) (equivalently, system (1)) is globally asymptotically stable.
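The real-imaginary split behind system (2) rests on the identity \((A^R+iA^I)(f^R+if^I)=(A^Rf^R-A^If^I)+i(A^If^R+A^Rf^I)\). The following Python sketch checks numerically that the complex right-hand side of (1) equals the stacked real and imaginary right-hand sides. It is a minimal illustration under stated assumptions: the random matrices and the activation `fR`, `fI` (a hypothetical real-imaginary-type function that is not differentiable in y) are not taken from the paper, the same activation is used for both f and g, and the block matrices \(C_1, A_1, A_2, B_1, B_2\) of system (2) are not reproduced.

```python
import numpy as np

# Hypothetical real-imaginary-type activation (not from the paper):
# f(u) = f^R(x, y) + i f^I(x, y) with u = x + i y.
def fR(x, y):
    return np.tanh(x) + 0.1 * np.abs(y)   # not differentiable in y at y = 0

def fI(x, y):
    return np.tanh(y)

def f(u):
    return fR(u.real, u.imag) + 1j * fI(u.real, u.imag)

def complex_rhs(u, ud, C, A, B, L):
    """-C u + A f(u) + B f(u(t - tau)) + L, using the same f for f and g."""
    return -C @ u + A @ f(u) + B @ f(ud) + L

def split_rhs(x, y, xd, yd, C, A, B, L):
    """The same vector field written for real parts x and imaginary parts y."""
    AR, AI, BR, BI = A.real, A.imag, B.real, B.imag
    f_r, f_i = fR(x, y), fI(x, y)          # current-state activations
    g_r, g_i = fR(xd, yd), fI(xd, yd)      # delayed-state activations
    dx = -C @ x + AR @ f_r - AI @ f_i + BR @ g_r - BI @ g_i + L.real
    dy = -C @ y + AI @ f_r + AR @ f_i + BI @ g_r + BR @ g_i + L.imag
    return dx, dy

rng = np.random.default_rng(0)
n = 3
C = np.diag(rng.uniform(1, 5, n))
A = rng.normal(size=(n, n)) + 1j * rng.normal(size=(n, n))
B = rng.normal(size=(n, n)) + 1j * rng.normal(size=(n, n))
L = rng.normal(size=n) + 1j * rng.normal(size=n)
u = rng.normal(size=n) + 1j * rng.normal(size=n)    # u(t)
ud = rng.normal(size=n) + 1j * rng.normal(size=n)   # u(t - tau)

dx, dy = split_rhs(u.real, u.imag, ud.real, ud.imag, C, A, B, L)
print(np.allclose(dx + 1j * dy, complex_rhs(u, ud, C, A, B, L)))  # True
```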

First, the definition of the nonlinear measure is introduced. This notion was first introduced in Ref. [34] to investigate the global/local stability of Hopfield-type networks, and was later extended in Ref. [35] to study the dynamics of static neural networks.

Definition 1

[35] Suppose that \(\Omega\) is an open set of \(\mathbb{R}^n\), and \(G: \Omega \rightarrow \mathbb{R}^n\) is an operator. The constant

\[m_{\Omega}(G)=\sup_{x,y\in\Omega,\ x\ne y}\frac{\langle G(x)-G(y),\,x-y\rangle}{\Vert x-y\Vert_2^2}\]

is called the nonlinear measure of G on \(\Omega\) with respect to the Euclidean norm \(\Vert \cdot \Vert_2\).
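Definition 1 can be explored numerically. The sketch below, under assumptions of its own, computes a Monte Carlo estimate of \(m_\Omega(G)\) by sampling pairs from a box; because only finitely many pairs in a bounded region are tried, it can only underestimate the supremum and is no substitute for the analytical bounds used in Sect. 3. For a linear map \(G(w)=Mw\) the measure equals the largest eigenvalue of \((M+M^T)/2\), which provides a check. The nonlinear map \(G(w)=-Cw+A\tanh(w)\) uses \(C=\mathrm{diag}\{8,6\}\) from Example 1 with a hypothetical real matrix A, and is compared with the elementary bound \(-\min_k c_k+\Vert A\Vert_2\), which holds because tanh is 1-Lipschitz.

```python
import numpy as np

def nonlinear_measure_estimate(G, dim, n_pairs=20000, scale=5.0, seed=0):
    """Monte Carlo lower estimate of m(G) = sup <G(a)-G(b), a-b> / ||a-b||_2^2.

    Pairs are drawn uniformly from the box [-scale, scale]^dim, so the result
    underestimates the supremum over that box (and hence over R^dim).
    """
    rng = np.random.default_rng(seed)
    best = -np.inf
    for _ in range(n_pairs):
        a = rng.uniform(-scale, scale, dim)
        b = rng.uniform(-scale, scale, dim)
        d = a - b
        nd2 = d @ d
        if nd2 == 0.0:
            continue
        best = max(best, (G(a) - G(b)) @ d / nd2)
    return best

# Linear check: for G(w) = M w the measure is lambda_max((M + M^T) / 2).
M = np.array([[-3.0, 1.0], [0.5, -2.0]])
exact = np.linalg.eigvalsh((M + M.T) / 2).max()
est_lin = nonlinear_measure_estimate(lambda w: M @ w, dim=2)

# Nonlinear example: C from Example 1, A hypothetical (real-valued here).
C = np.diag([8.0, 6.0])
A = np.array([[1.0, -0.5], [0.3, 0.8]])
G = lambda w: -C @ w + A @ np.tanh(w)
bound = -np.diag(C).min() + np.linalg.norm(A, 2)   # valid upper bound on m(G)
est_G = nonlinear_measure_estimate(G, dim=2)

print(exact, est_lin)
print(est_G <= bound < 0)   # negative measure, so G is injective (Lemma 3)
```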

Before deriving our main results, some useful lemmas are introduced as follows.

Lemma 1

[36] For any vectors \(X, Y\in \mathbb{R}^n\), matrix \(0<W\in \mathbb{R}^{n\times n}\) and positive real constant \(\gamma\), we have

\[2X^TY\le \gamma X^TWX+\gamma^{-1}Y^TW^{-1}Y.\]
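Assuming Lemma 1 takes the standard form \(2X^TY\le \gamma X^TWX+\gamma^{-1}Y^TW^{-1}Y\) (it follows because the squared norm of \(\sqrt{\gamma}W^{1/2}X-\gamma^{-1/2}W^{-1/2}Y\) is nonnegative), the short Python check below samples random positive definite W, random vectors and random \(\gamma\), and confirms that the gap between the two sides is never negative; it also confirms that equality holds when \(Y=\gamma WX\). This is an illustration, not a proof.

```python
import numpy as np

def young_gap(X, Y, W, gamma):
    """Right side minus left side of 2 X^T Y <= gamma X^T W X + Y^T W^{-1} Y / gamma."""
    lhs = 2.0 * X @ Y
    rhs = gamma * X @ W @ X + Y @ np.linalg.solve(W, Y) / gamma
    return rhs - lhs

rng = np.random.default_rng(1)
n = 4
gaps = []
for _ in range(1000):
    R = rng.normal(size=(n, n))
    W = R @ R.T + 0.1 * np.eye(n)          # random symmetric positive definite W
    X, Y = rng.normal(size=n), rng.normal(size=n)
    gamma = rng.uniform(0.1, 10.0)
    gaps.append(young_gap(X, Y, W, gamma))
print(min(gaps) >= -1e-9)                  # True: the inequality never fails

# Equality is attained when Y = gamma * W X.
W = np.diag([1.0, 2.0, 3.0, 4.0])
X = np.ones(4)
gamma = 0.5
print(abs(young_gap(X, gamma * W @ X, W, gamma)) < 1e-12)  # True
```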

Lemma 2

[37] Given constant matrices P, Q and R with \(P=P^T\) and \(Q=Q^T\), the linear matrix inequality

\[\begin{bmatrix} P & R\\ R^T & Q\end{bmatrix}<0\]

is equivalent to either of the following conditions:

- (1): \(Q<0, P-RQ^{-1}R^T<0\).
- (2): \(P<0, Q-R^TP^{-1}R<0\).
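Lemma 2 is the Schur complement lemma, which is how the nonlinear terms in the proofs below are absorbed into a single block inequality. The Python sketch that follows checks the equivalence on randomly generated symmetric P, Q and arbitrary R (all hypothetical test data): for every sample, negativity of the block matrix agrees with both Schur-complement tests. Negative definiteness is decided by the largest eigenvalue with a small tolerance, an assumption that only matters for matrices lying essentially on the boundary.

```python
import numpy as np

def is_neg_def(M, tol=1e-10):
    """True if the symmetric part of M is negative definite (largest eigenvalue < -tol)."""
    return bool(np.linalg.eigvalsh((M + M.T) / 2).max() < -tol)

def schur_tests(P, Q, R):
    """Evaluate the block inequality of Lemma 2 and its two Schur-complement tests."""
    block = np.block([[P, R], [R.T, Q]])
    # 'and' short-circuits, so Q (or P) is only inverted once it is known to be negative definite.
    cond1 = is_neg_def(Q) and is_neg_def(P - R @ np.linalg.solve(Q, R.T))
    cond2 = is_neg_def(P) and is_neg_def(Q - R.T @ np.linalg.solve(P, R))
    return is_neg_def(block), cond1, cond2

rng = np.random.default_rng(2)
agree = feasible = 0
trials = 2000
for _ in range(trials):
    Sp, Sq = rng.normal(size=(3, 3)), rng.normal(size=(2, 2))
    P = (Sp + Sp.T) / 2 - rng.uniform(0.0, 4.0) * np.eye(3)
    Q = (Sq + Sq.T) / 2 - rng.uniform(0.0, 4.0) * np.eye(2)
    R = 0.5 * rng.normal(size=(3, 2))
    b, c1, c2 = schur_tests(P, Q, R)
    agree += int(b == c1 == c2)
    feasible += int(b)
print(agree, feasible, trials)   # agree == trials
```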

Lemma 3

[35] If \(m_{\Omega }(G)< 0\), then G is an injective mapping on \(\Omega \). In addition, if \(\Omega =\mathbb {R}^n\), then G is a homeomorphism of \(\mathbb {R}^n\).

Remark 2

From Lemma 3, if \(m_{\Omega}(G)< 0\) and \(\Omega =\mathbb{R}^n\), then the equation \(G(w)=0\) has a unique solution \(w\in \mathbb{R}^n\), since a homeomorphism of \(\mathbb{R}^n\) is bijective. In this paper, sufficient conditions assuring the stability of the addressed neural networks are derived by combining the nonlinear measure with respect to the Euclidean norm \(\Vert \cdot \Vert_2\) with matrix inequality techniques.
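Remark 2 reduces existence and uniqueness of an equilibrium to the unique solvability of \(G(w)=0\). As a hedged illustration of what that unique zero looks like in practice, the sketch below computes the equilibrium of network (1) for hypothetical data by a fixed-point (Banach contraction) iteration. The contraction test \(\Vert C^{-1}\Vert_2(\Vert A\Vert_2+\Vert B\Vert_2)<1\) is a simpler sufficient condition swapped in for illustration; it is not the nonlinear-measure criterion of this paper. C and L are those of Example 1, while A, B and the activation are assumptions, since the example's matrices and activation functions are not reproduced here.

```python
import numpy as np

# Hypothetical data: C and L follow Example 1, whereas A, B and the split
# activation act(u) = tanh(x) + i tanh(y) are illustrative assumptions.
C = np.diag([8.0, 6.0])
L = np.array([1 - 1j, 3 + 2j])
A = np.array([[1.0 + 0.5j, -0.5 + 0.2j], [0.3 - 0.4j, 0.8 + 0.1j]])
B = np.array([[0.5 - 0.3j, 0.2 + 0.1j], [-0.2 + 0.4j, 0.6 - 0.2j]])

def act(u):
    """Real-imaginary-type activation; |act(u1) - act(u2)| <= |u1 - u2| entrywise."""
    return np.tanh(u.real) + 1j * np.tanh(u.imag)

def residual(u):
    """Equilibrium equation of (1) with g = f: -C u + A f(u) + B f(u) + L."""
    return -C @ u + A @ act(u) + B @ act(u) + L

def equilibrium(u0, tol=1e-12, max_iter=1000):
    """Solve residual(u) = 0 by iterating u <- C^{-1}(A f(u) + B f(u) + L).

    The map is a contraction in the Euclidean norm whenever
    ||C^{-1}||_2 (||A||_2 + ||B||_2) < 1, which is checked first.
    """
    Cinv = np.linalg.inv(C)
    k = np.linalg.norm(Cinv, 2) * (np.linalg.norm(A, 2) + np.linalg.norm(B, 2))
    if k >= 1:
        raise ValueError("contraction condition fails; iteration may diverge")
    u = np.asarray(u0, dtype=complex)
    for _ in range(max_iter):
        u_new = Cinv @ (A @ act(u) + B @ act(u) + L)
        if np.linalg.norm(u_new - u) < tol:
            return u_new
        u = u_new
    raise RuntimeError("no convergence within max_iter")

u_a = equilibrium([0.0, 0.0])
u_b = equilibrium([-6.7 + 0.3j, 2.3 - 3.7j])   # start from the Case 1 initial value
print(np.linalg.norm(u_a - u_b), np.linalg.norm(residual(u_a)))
```

Both starting points end at the same point, which is what uniqueness of the zero predicts.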

3 Main Results

In this section, based on the nonlinear measure method and by constructing an appropriate Lyapunov functional candidate, several criteria are presented to ascertain the global asymptotic stability of the complex-valued neural network (1) with constant time delay. The main results are stated as follows.

Theorem 1

Suppose that Assumption 1 holds. The complex-valued neural network (1) has a unique equilibrium point, which is globally asymptotically stable, if there exist a matrix \(P>0\) and positive diagonal matrices \(Q_k\) and \(S_k=\mathrm{diag}\{s_k^1,s_k^2,\ldots ,s_k^n\}~(k=1,2,3,4)\) such that

where \(\Psi =-PC_1-C_1P+2Q_1+2Q_2+2Q_3+2Q_4\), \(I_2\) is the identity matrix in \(\mathbb{R}^{2\times 2}\),

with \(\Lambda _1^{RR}=\mathrm{diag}\{\lambda _1^{RR},\lambda _2^{RR},\ldots ,\lambda _n^{RR}\}, \Lambda _1^{RI}=\mathrm{diag}\{\lambda _1^{RI},\lambda _2^{RI},\ldots ,\lambda _n^{RI}\}, \Lambda _{1}^{IR}=\mathrm{diag}\{\lambda _1^{IR},\lambda _2^{IR},\ldots ,\lambda _n^{IR}\}, \Lambda _1^{II}=\mathrm{diag}\{\lambda _1^{II},\lambda _2^{II},\ldots ,\lambda _n^{II}\}, \Lambda _2^{RR}=\mathrm{diag}\{\xi _{1}^{RR},\xi _2^{RR},\ldots ,\xi _{n}^{RR}\}, \Lambda _2^{RI}=\mathrm{diag}\{\xi _1^{RI},\xi _2^{RI},\ldots ,\xi _n^{RI}\}, \Lambda _2^{IR}=\mathrm{diag}\{\xi _1^{IR},\xi _2^{IR},\ldots ,\xi _n^{IR}\}, \Lambda _2^{II}=\mathrm{diag}\{\xi _1^{II},\xi _2^{II},\ldots ,\xi _n^{II}\}\).

Proof

The result is proved in two steps. First, the equilibrium point of system (2) is shown to exist and be unique. Second, this unique equilibrium point is shown to be globally asymptotically stable.

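Step 1 Define an operator \(H: \mathbb{R}^{2n}\rightarrow \mathbb{R}^{2n}\) by

\[H(\alpha)=-C_1\alpha+A_1\overline{f_1}(\alpha)+A_2\overline{f_2}(\alpha)+B_1\overline{g_1}(\alpha)+B_2\overline{g_2}(\alpha)+\zeta.\]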

Then consider the following differential system:

Since the matrix P is invertible, systems (2) and (5) have the same set of equilibrium points. In the following, we prove that \(m_{\mathbb{R}^{2n}}(PH)<0\).

For \(\alpha =((x^{\alpha })^T,(y^{\alpha })^T)^T, \beta =((x^{\beta })^T,(y^{\beta })^T)^T \in \mathbb {R}^{2n}\) with \(\alpha \ne \beta \) and \(x^{\alpha }, x^{\beta }, y^{\alpha }, y^{\beta }\in \mathbb {R}^n\), one has that

in which Lemma 1 has been used in the second step of (6), and \(S_k~(k=1,2,3,4)\) are the positive diagonal matrices satisfying conditions (3)–(4). By Assumption 1, one gets

where condition (4) has been used in the fifth step, \(x^\alpha =(x_1^\alpha ,x_2^\alpha ,\ldots ,x_n^\alpha )^T\), and \(y^\alpha , x^\beta , y^\beta\) are defined similarly. Similarly, one also has

Substituting (7)–(10) into (6) yields

By Lemma 2, condition (3) implies that \(\Psi +PA_1(I_2\otimes S_1^{-1})A_1^TP+PA_2(I_2\otimes S_2^{-1})A_2^TP+PB_1(I_2\otimes S_3^{-1})B_1^TP+PB_2(I_2\otimes S_4^{-1})B_2^TP<0\), which, together with (11) and the fact that \(\alpha \ne \beta\), yields

From (12), according to Definition 1, one gets that \(m_{\mathbb {R}^{2n}}(PH)<0\). Therefore, it follows from Lemma 3 that system (5) has a unique equilibrium point, which implies that system (2) also has a unique equilibrium point.

Step 2 Suppose that \(\alpha ^*=((x^*)^T,(y^*)^T)^T\) is an equilibrium point of (2), i.e., \(-C_1\alpha ^*+A_1\overline{f_1}(\alpha ^*)+ A_2\overline{f_2}(\alpha ^*)+B_1\overline{g_1}(\alpha ^*) +B_2\overline{g_2}(\alpha ^*)+\zeta =0\). Letting \(e(t)=\alpha (t)-\alpha ^*\), system (2) can be transformed into the following form:

where

Consider the following Lyapunov functional candidate:

where \(e_t(\theta )\triangleq e(t+\theta )\) for \(\theta \in [-\tau ,0]\). Calculating the derivative of \(V(t,e_t)\) along the trajectories of system (13), one obtains

In a similar way to (7)–(10), one obtains

Substituting (15)–(16) into (14) yields

It follows from Lemma 2 and condition (3) that \(\dot{V}(t,e_t)<0\) for all \(e(t)\ne 0\), which implies that the zero solution of system (13), and consequently the equilibrium point of system (1), is globally asymptotically stable. The proof is complete.

Corollary 1

Suppose that Assumption 1 holds. The complex-valued neural network (1) has a unique equilibrium point, which is globally asymptotically stable, if there exist a matrix \(P>0\) and positive diagonal matrices \(S_k~(k=1,2,3,4)\) such that

where \(\widetilde{\Psi }=-PC_1-C_1P+4I_2\otimes (S_1\Gamma _1^T\Gamma _1) +4I_2\otimes (S_2\Gamma _2^T\Gamma _2)+4 {I}_2\otimes (S_3\Gamma _3^T\Gamma _3)+4I_2\otimes (S_4\Gamma _4^T\Gamma _4), \Gamma _1=\mathrm{diag}\{\lambda _1^M,\lambda _2^M,\ldots ,\lambda _n^M\}, \Gamma _2=\mathrm{diag}\{\lambda _1^N,\lambda _2^N,\ldots ,\lambda _n^N\}, \Gamma _3=\mathrm{diag}\{\xi _1^M,\xi _2^M,\ldots ,\xi _n^M\}, \Gamma _4=\mathrm{diag}\{\xi _1^N,\xi _2^N,\ldots ,\xi _n^N\}\), and \(\lambda _k^M=\max \{\lambda _k^{RR},\lambda _k^{RI}\}, \lambda _k^N=\max \{\lambda _k^{IR},\lambda _k^{II}\}, \xi _k^M=\max \{\xi _k^{RR},\xi _k^{RI}\}, \xi _k^N=\max \{\xi _k^{IR},\xi _k^{II}\}, k=1,2,\dots ,n\).
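The matrices \(\Gamma_1,\ldots,\Gamma_4\) of Corollary 1 are entrywise maxima of the Assumption 1 constants. The Python sketch below builds them from the constants reported in Example 1 and assembles the sum of the four terms \(4I_2\otimes(S_k\Gamma_k^T\Gamma_k)\) appearing in \(\widetilde{\Psi}\). The choice \(S_k=I\) is a hypothetical placeholder rather than an LMI solution, and the terms involving P and \(C_1\) are omitted.

```python
import numpy as np

def gamma_matrices(lam, xi):
    """Build Gamma_1..Gamma_4 of Corollary 1 from per-neuron Assumption 1 constants.

    lam and xi map the keys 'RR', 'RI', 'IR', 'II' to arrays of length n.
    """
    G1 = np.diag(np.maximum(lam["RR"], lam["RI"]))  # lambda_k^M
    G2 = np.diag(np.maximum(lam["IR"], lam["II"]))  # lambda_k^N
    G3 = np.diag(np.maximum(xi["RR"], xi["RI"]))    # xi_k^M
    G4 = np.diag(np.maximum(xi["IR"], xi["II"]))    # xi_k^N
    return G1, G2, G3, G4

def penalty_term(S, G):
    """The term 4 I_2 (x) (S Gamma^T Gamma) appearing in tilde-Psi."""
    return 4.0 * np.kron(np.eye(2), S @ G.T @ G)

n = 2
# Constants of Example 1 (identical for k = 1, 2).
lam = {"RR": np.full(n, 0.5), "RI": np.zeros(n), "IR": np.zeros(n), "II": np.full(n, 0.25)}
xi = {"RR": np.full(n, 0.25), "RI": np.zeros(n), "IR": np.zeros(n), "II": np.full(n, 0.5)}
G1, G2, G3, G4 = gamma_matrices(lam, xi)

S = np.eye(n)  # hypothetical S_k = I, only to show the structure of the term
T = sum(penalty_term(S, G) for G in (G1, G2, G3, G4))
print(np.diag(G1), np.diag(G2))  # [0.5 0.5] [0.25 0.25]
print(np.diag(T))                # [2.5 2.5 2.5 2.5]
```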

Proof

Following the proof of Theorem 1 and using Assumption 1, one gets

which immediately implies that, for the positive diagonal matrix \(S_1\), the following inequality holds:

Similarly, one gets

The result then follows along the same lines as the proof of Theorem 1. The proof is complete.

It is well known that system parameters are often affected by external disturbances. In this paper, the parameter uncertainties are assumed to enter system (13) in the following form:

where the uncertain matrices \(\Delta C_1(t),\Delta A_1(t),\Delta A_2(t),\Delta B_1(t)\) and \(\Delta B_2(t)\) have the following norm-bounded form:

\[[\Delta C_1(t),\ \Delta A_1(t),\ \Delta A_2(t),\ \Delta B_1(t),\ \Delta B_2(t)]=DF(t)[E_{C_1},\ E_{A_1},\ E_{A_2},\ E_{B_1},\ E_{B_2}], \tag{19}\]

in which \(D,E_{C_1},E_{A_1},E_{A_2},E_{B_1}\) and \(E_{B_2}\) are known constant real matrices of appropriate dimensions, and F(t) is an unknown matrix function with Lebesgue-measurable elements bounded by

\[F^T(t)F(t)\le I. \tag{20}\]

Lemma 4

[36] Given a symmetric matrix \(\Xi\) and matrices D and E of appropriate dimensions,

\[\Xi+DF(t)E+E^TF^T(t)D^T<0\]

holds for all F(t) satisfying \(F(t)^TF(t)\le I\) if and only if there exists \(\varepsilon >0\) such that

\[\Xi+\varepsilon^{-1}DD^T+\varepsilon E^TE<0.\]
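Lemma 4 removes the uncertain F(t) at the price of a scalar \(\varepsilon\): \(\Xi+DF(t)E+E^TF^T(t)D^T<0\) for all \(F^TF\le I\) if and only if \(\Xi+\varepsilon^{-1}DD^T+\varepsilon E^TE<0\) for some \(\varepsilon>0\). The following Python sketch, using hypothetical random \(\Xi\), D and E, searches a logarithmic grid for such an \(\varepsilon\) and then samples contractions F (\(\Vert F\Vert_2\le 1\), i.e. \(F^TF\le I\)) to confirm the uncertain inequality. A grid search is only a crude stand-in for the LMI solvers normally used with Theorem 2, and sampling F illustrates, rather than proves, the "for all F" statement.

```python
import numpy as np

def lambda_max(M):
    """Largest eigenvalue of the symmetric part of M."""
    return np.linalg.eigvalsh((M + M.T) / 2).max()

def find_epsilon(Xi, D, E, grid=None):
    """First eps on a log grid with Xi + D D^T / eps + eps E^T E < 0, else None."""
    if grid is None:
        grid = np.logspace(-3, 3, 601)
    for eps in grid:
        if lambda_max(Xi + D @ D.T / eps + eps * E.T @ E) < 0:
            return eps
    return None

rng = np.random.default_rng(3)
n, p, q = 3, 2, 2
S = rng.normal(size=(n, n))
Xi = -5.0 * np.eye(n) + 0.3 * (S + S.T) / 2   # hypothetical symmetric, negative definite
D = 0.4 * rng.normal(size=(n, p))             # hypothetical uncertainty structure
E = 0.4 * rng.normal(size=(q, n))
eps = find_epsilon(Xi, D, E)

# Sampled check of the uncertain inequality over contractions F.
worst = -np.inf
if eps is not None:
    for _ in range(2000):
        F = rng.normal(size=(p, q))
        F /= max(1.0, np.linalg.norm(F, 2))   # scale so that F^T F <= I
        worst = max(worst, lambda_max(Xi + D @ F @ E + E.T @ F.T @ D.T))
print(eps, worst)   # worst < 0 whenever a suitable eps exists
```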

Now, we are ready to investigate the global asymptotic robust stability of system (18).

Theorem 2

Suppose that Assumption 1 holds. System (18) with parameter uncertainties (19)–(20) is globally robustly asymptotically stable if there exist a matrix \(P>0\), positive diagonal matrices \(Q_k\) and \(S_k~(k=1,2,3,4)\) and a scalar \(\varepsilon >0\) such that inequality (4) and the following inequality

hold, where the matrix \(\Psi \) is the same as defined in Theorem 1.

Proof

Let \(\Upsilon _D=[D^TP, 0, 0, 0, 0]^T\) and \(\Upsilon _E=[-E_{C_1},E_{A_1},E_{A_2},E_{B_1},E_{B_2}]\). Using Lemma 2 and condition (3), one can verify that inequality (21) is equivalent to

It follows from Lemma 4 that (22) holds if and only if

holds for all F(t) satisfying \(F(t)^TF(t)\le I\), which implies that

By Theorem 1 and (24), system (18) is globally robustly asymptotically stable. The proof is complete.

Corollary 2

Suppose that Assumption 1 holds. System (18) with parameter uncertainties (19)–(20) is globally robustly asymptotically stable if there exist a matrix \(P>0\), positive diagonal matrices \(S_k~(k=1,2,3,4)\) and a scalar \(\varepsilon >0\) such that

where \(\widetilde{\Psi }\) is the same as defined in Corollary 1.

Remark 3

In [22], the global stability of complex-valued neural networks with both leakage time delay and discrete time delay on time scales has been studied, but global attractiveness was not taken into account. In this paper, global stability and global attractiveness are considered simultaneously. From this viewpoint, the criteria here are more general than the results in Ref. [22].

Remark 4

In Ref. [38], the global \(\mu\)-stability of complex-valued neural networks with unbounded time-varying delays has been investigated under the assumption that an equilibrium point of the network exists. However, the equilibrium points of a complex-valued system may not exist, in which case the results in Ref. [38] cannot be applied. By contrast, the nonlinear measure method makes it easy to ascertain the existence and uniqueness of the equilibrium point of the complex-valued neural networks.

Remark 5

Recently, global exponential periodicity and stability have been investigated for discrete-time complex-valued neural networks in Ref. [39], and exponential stability for continuous-time ones in Ref. [40]. If the network parameters are perturbed by the external environment, these results cannot determine whether the complex-valued system remains stable. In this paper, by contrast, besides global asymptotic stability, the robust stability of complex-valued networks with norm-bounded parameter uncertainties has also been considered.

4 Numerical Examples

In this section, a numerical example is given to illustrate the effectiveness of the obtained results.

Example 1

Consider a two-neuron complex-valued neural network with constant delay described in (1) with \(C=\mathrm{diag}\{8,6\}, \tau =0.8, L=(1-i,3+2i)^T\),

For \(u_k=x_k+iy_k\) with \(x_k, y_k\in \mathbb{R}\), \(k=1,2\), the activation functions are taken to be the same as those in Ref. [14]:

It is easy to verify that \(\lambda _k^{RR}=0.5, \lambda _k^{RI}=0, \lambda _k^{IR}=0, \lambda _k^{II}=0.25\) and \(\xi _k^{RR}=0.25, \xi _k^{RI}=0, \xi _k^{IR}=0, \xi _k^{II}=0.5\).

It can be checked that there is no positive definite Hermitian matrix satisfying the conditions of Theorem 1 in Ref. [14], which means that the criteria in Ref. [14] cannot determine whether the complex-valued neural network (1) is globally asymptotically stable. However, feasible solutions to (3) and (4) in Theorem 1 of this paper are easily found with the MATLAB toolbox. For reasons of space, only part of the solution is listed: \(Q_1=\mathrm{diag}\{0.9146, 0.8224, 0.7407, 0.6438\}, S_2=\mathrm{diag}\{ 1.2238, 1.2597\}, Q_3=\mathrm{diag}\{0.7829, 0.6874, 0.7407, 0.6438\}, S_4=\mathrm{diag}\{ 1.2467, 1.2627\}\) and

Therefore, our result is less conservative than that of Theorem 1 in Ref. [14].

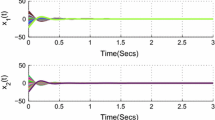

Figures 1 and 2 show the time responses of the states of the complex-valued neural network (1) under the following six initial conditions, which further illustrates the effectiveness of the obtained results:

- Case 1: \(u_1(t)=-6.7+0.3i, u_2(t)=2.3-3.7i\) for \(t\in [-0.8,0]\).
- Case 2: \(u_1(t)=-0.7-1.7i, u_2(t)=-5.7-0.2i\) for \(t\in [-0.8,0]\).
- Case 3: \(u_1(t)=0.3-7.7i, u_2(t)=3.3+0.3i\) for \(t\in [-0.8,0]\).
- Case 4: \(u_1(t)=-4.7-6.7i, u_2(t)=-9.7+1.3i\) for \(t\in [-0.8,0]\).
- Case 5: \(u_1(t)=-1.7+1.3i, u_2(t)=-7.7-4.2i\) for \(t\in [-0.8,0]\).
- Case 6: \(u_1(t)=1.8-4.7i, u_2(t)=1.8-8.7i\) for \(t\in [-0.8,0]\).

Fig. 1 Trajectories of the real parts x(t) of the states u(t) for neural network (1)

Fig. 2 Trajectories of the imaginary parts y(t) of the states u(t) for neural network (1)

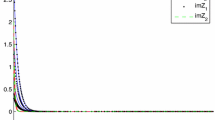
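Figures 1 and 2 are obtained by numerically integrating the delayed system (1). Because the matrices A, B and the activation functions of Example 1 are not reproduced in this text, the following Python sketch uses hypothetical A, B and the split activation \(\tanh(x)+i\tanh(y)\), together with \(C=\mathrm{diag}\{8,6\}\), \(\tau=0.8\), \(L=(1-i,3+2i)^T\) and two of the six constant initial functions listed above (Cases 1 and 6). It applies a forward-Euler scheme with a step h chosen so that \(\tau/h\) is an integer, storing the history on \([-\tau,0]\) in the same array; the step size and horizon are assumptions, and a higher-order delay solver would normally be preferred for publication-quality plots. For these hypothetical matrices, \(\min_k c_k=6\) exceeds \(\Vert A\Vert_2+\Vert B\Vert_2\), so a Halanay-type norm argument already predicts convergence to a single equilibrium.

```python
import numpy as np

# C, tau, L and the initial values follow Example 1; A, B and the activation
# are hypothetical, since the example's matrices are not reproduced here.
C = np.diag([8.0, 6.0])
L = np.array([1 - 1j, 3 + 2j])
A = np.array([[1.0 + 0.5j, -0.5 + 0.2j], [0.3 - 0.4j, 0.8 + 0.1j]])
B = np.array([[0.5 - 0.3j, 0.2 + 0.1j], [-0.2 + 0.4j, 0.6 - 0.2j]])
tau, h, T_end = 0.8, 0.001, 20.0

def act(u):
    """Split activation tanh(x) + i tanh(y), used for both f and g."""
    return np.tanh(u.real) + 1j * np.tanh(u.imag)

def simulate(u_init):
    """Forward-Euler integration of u' = -C u + A f(u) + B g(u(t - tau)) + L.

    The history on [-tau, 0] is the constant u_init, as in the six cases above.
    """
    d = int(round(tau / h))              # delay measured in steps
    steps = int(round(T_end / h))
    u = np.empty((d + steps + 1, 2), dtype=complex)
    u[: d + 1] = u_init                  # constant initial function on [-tau, 0]
    for k in range(d, d + steps):        # index k corresponds to t = (k - d) h
        du = -C @ u[k] + A @ act(u[k]) + B @ act(u[k - d]) + L
        u[k + 1] = u[k] + h * du
    return u[d:]                         # samples at t = 0, h, ..., T_end

traj1 = simulate(np.array([-6.7 + 0.3j, 2.3 - 3.7j]))   # Case 1
traj2 = simulate(np.array([1.8 - 4.7j, 1.8 - 8.7j]))    # Case 6
print(np.abs(traj1[-1] - traj2[-1]).max())              # both runs reach the same state
```

Plotting `traj1.real` and `traj1.imag` against time gives panels analogous to Figs. 1 and 2 for these hypothetical parameters.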

Next, consider system (18) with parameter uncertainties satisfying (19)–(20), where

and the other parameters are the same as above. A feasible solution to (4) and (21) in Theorem 2 can be obtained, part of which is given as follows:

\(S_1=\mathrm{diag}\{ 1072.3, 867.1\}, S_4=\mathrm{diag}\{ 2604.6, 660.3\}, Q_2=\mathrm{diag}\{ 56.5595, 58.5228, 353.4201, 140.5313\}, Q_3=\mathrm{diag}\{113.2508, 125.0990, 178.1286, 15.2007\}\) and \(\varepsilon = 88.3343\). Therefore, it follows from Theorem 2 that the system (18) is globally robustly asymptotically stable.

Taking \(F(t)=I\) in the simulation, Fig. 3 shows the time responses of the states of system (18) with the same initial conditions as above, which further verifies the validity and effectiveness of the obtained criteria.

Fig. 3 Trajectories of the states e(t) for system (18)

5 Conclusions

In this paper, the global stability of complex-valued neural networks with and without parameter uncertainties has been investigated. Based on the nonlinear measure method and by constructing an appropriate Lyapunov functional candidate, several sufficient criteria have been obtained to ascertain the global stability and global robust stability of the addressed networks under a general class of activation functions. Finally, a numerical example has been given to show the effectiveness of the main results.

In the future, topics such as multistability will be investigated for complex-valued neural systems with real-imaginary-type activation functions and distributed delays. Moreover, motivated by the works in Refs. [41, 42], other research topics, such as state estimation of complex-valued systems with incomplete information, will also be considered.

References

[1] Liang J, Wang Z, Liu X (2009) State estimation for coupled uncertain stochastic networks with missing measurements and time-varying delays: the discrete-time case. IEEE Trans Neural Netw 20(5):781–793

[2] Wang Z, Zhang H, Yu W (2009) Robust stability of Cohen–Grossberg neural networks via state transmission matrix. IEEE Trans Neural Netw 20(1):169–174

[3] Liao X, Wang J, Zeng Z (2005) Global asymptotic stability and global exponential stability of delayed cellular neural networks. IEEE Trans Circuits Syst II 52(7):403–409

[4] Hu S, Wang J (2002) Global stability of a class of continuous-time recurrent neural networks. IEEE Trans Circuits Syst I 49(9):1334–1347

[5] Jian J, Zhao Z (2015) Global stability in Lagrange sense for BAM-type Cohen–Grossberg neural networks with time-varying delays. Syst Sci Control Eng Open Access J 3(1):1–7

[6] Li C, Liao X, Yu J (2002) Complex-valued recurrent neural network with IIR neural model: training and applications. Circuits Syst Signal Process 21(5):461–471

[7] Hirose A (1994) Fractal variation of attractors in complex-valued neural networks. Neural Process Lett 1(1):6–8

[8] Jankowski S, Lozowski A, Zurada J (1996) Complex-valued multistate neural associative memory. IEEE Trans Neural Netw 7(6):1491–1496

[9] Zhang W, Li C, Huang T (2014) Global robust stability of complex-valued recurrent neural networks with time-delays and uncertainties. Int J Biomath 7(2):1450016

[10] Goh SL, Mandic DP (2004) A complex-valued RTRL algorithm for recurrent neural networks. Neural Comput 16(12):2699–2713

[11] Lee DL (2001) Relaxation of the stability condition of the complex-valued neural networks. IEEE Trans Neural Netw 12(5):1260–1262

[12] Rakkiyappan R, Velmurugan G, Li X (2015) Complete stability analysis of complex-valued neural networks with time delays and impulses. Neural Process Lett 41(3):435–468

[13] Rakkiyappan R, Cao J, Velmurugan G (2015) Existence and uniform stability analysis of fractional-order complex-valued neural networks with time delay. IEEE Trans Neural Netw Learn Syst 26(1):84–97

[14] Fang T, Sun J (2014) Further investigate the stability of complex-valued recurrent neural networks with time-delays. IEEE Trans Neural Netw Learn Syst 25(9):1709–1713

[15] Zou B, Song Q (2013) Boundedness and complete stability of complex-valued neural networks with time delay. IEEE Trans Neural Netw Learn Syst 24(8):1227–1238

[16] Bohner M, Rao VSH, Sanyal S (2011) Global stability of complex-valued neural networks on time scales. Differ Equ Dyn Syst 19:3–11

[17] Zhou W, Zurada JM (2009) Discrete-time recurrent neural networks with complex-valued linear threshold neurons. IEEE Trans Circuits Syst 56(8):669–673

[18] Song Q, Zhao Z, Liu Y (2015) Stability analysis of complex-valued neural networks with probabilistic time-varying delays. Neurocomputing 159:96–104

[19] Song Q, Zhao Z, Liu Y (2015) Impulsive effects on stability of discrete-time complex-valued neural networks with both discrete and distributed time-varying delays. Neurocomputing 168:1044–1050

[20] He X, Li C, Huang T, Li C (2013) Codimension two bifurcation in a delayed neural network with unidirectional coupling. Nonlinear Anal 14(2):1191–1202

[21] Khajanchi S, Banerjee S (2014) Stability and bifurcation analysis of delay induced tumor immune interaction model. Appl Math Comput 248:652–671

[22] Chen X, Song Q (2013) Global stability of complex-valued neural networks with both leakage time delay and discrete time delay on time scales. Neurocomputing 121:254–264

[23] Hu J, Wang J (2012) Global stability of complex-valued recurrent neural networks with time-delays. IEEE Trans Neural Netw 23(6):853–865

[24] Rudin W (1987) Real and complex analysis. McGraw-Hill, New York

[25] Hirose A (2006) Complex-valued neural networks. Springer, New York

[26] Xu X, Zhang J, Shi J (2013) Exponential stability of complex-valued neural networks with mixed delays. Neurocomputing 128:483–490

[27] Ozcan N, Arik S (2006) An analysis of global robust stability of neural networks with discrete time delays. Phys Lett A 359(5):445–450

[28] Liao X, Wong K, Wu Z, Chen G (2001) Novel robust stability criteria for interval-delayed Hopfield neural networks. IEEE Trans Circuits Syst I 48(11):1355–1359

[29] Hu S, Wang J (2002) Global exponential stability of continuous-time interval neural networks. Phys Rev E 65:036133

[30] Zhang Z, Lin C, Chen B (2014) Global stability criterion for delayed complex-valued recurrent neural networks. IEEE Trans Neural Netw Learn Syst 25(9):1704–1708

[31] Kuroe Y, Yoshida M, Mori T (2003) On activation functions for complex-valued neural networks: existence of energy functions. Lect Notes Comput Sci 2714:985–992

[32] Fang T, Sun J (2013) Stability analysis of complex-valued nonlinear delay differential systems. Syst Control Lett 62:910–914

[33] Fang T, Sun J (2014) Stability of complex-valued impulsive and switching system and application to the Lü system. Nonlinear Anal 14:38–46

[34] Qiao H, Peng J, Xu Z (2001) Nonlinear measures: a new approach to exponential stability analysis for Hopfield-type neural networks. IEEE Trans Neural Netw 12(2):360–370

[35] Li P, Cao J (2006) Stability in static delayed neural networks: a nonlinear measure approach. Neurocomputing 69(13–15):1776–1781

[36] Wang Y, Xie L, De Souza CE (1992) Robust control of a class of uncertain nonlinear systems. Syst Control Lett 19:139–149

[37] Boyd S, El Ghaoui L, Feron E, Balakrishnan V (1994) Linear matrix inequalities in system and control theory. SIAM, Philadelphia

[38] Chen X, Song Q, Liu X, Zhao Z (2014) Global \(\mu \)-stability of complex-valued neural networks with unbounded time-varying delays. Abstr Appl Anal, Article ID 263847

[39] Hu J, Wang J (2015) Global exponential periodicity and stability of discrete-time complex-valued recurrent neural networks with time-delays. Neural Netw 66:119–130

[40] Pan J, Liu X, Xie W (2015) Exponential stability of a class of complex-valued neural networks with time-varying delays. Neurocomputing 164:293–299

[41] Liang J, Wang Z, Liu X (2011) Distributed state estimation for discrete-time sensor networks with randomly varying nonlinearities and missing measurements. IEEE Trans Neural Netw 22(3):486–496

[42] Ding D, Wang Z, Shen B, Dong H (2015) \(H_\infty \) state estimation with fading measurements, randomly varying nonlinearities and probabilistic distributed delays. Int J Robust Nonlinear Control 25(13):2180–2195

Acknowledgments

This work is supported in part by the National Natural Science Foundation of China under Grants 61174136 and 61272530, the Natural Science Foundation of Jiangsu Province of China under Grant BK20130017, the 333 Project Foundation of Jiangsu Province, the Programme for New Century Excellent Talents in University under Grant NCET-12-0117, the Fundamental Research Funds for the Central Universities and the Graduate Research and Innovation Program of Jiangsu Province (No. KYLX_0083).

Cite this article

Gong, W., Liang, J., Zhang, C. et al. Nonlinear Measure Approach for the Stability Analysis of Complex-Valued Neural Networks. Neural Process Lett 44, 539–554 (2016). https://doi.org/10.1007/s11063-015-9475-9
