Abstract

This paper presents a new method for evaluating the dynamic reliability of sensors based on evidence theory and intuitionistic fuzzy sets when no prior knowledge is available. The dynamic reliability of sensors is evaluated based on the supporting degree between the basic probability assignments (BPAs) provided by the sensors. First, the concept of asymmetric supporting degree is proposed. By transforming BPAs to intuitionistic fuzzy sets, the supporting degree between BPAs is calculated based on intuitionistic fuzzy operations and a similarity measure. Then the relationship between dynamic reliability and supporting degree is analyzed, and the process of dynamic reliability evaluation is proposed. Finally, the proposed dynamic reliability evaluation is applied to evidence combination. A new evidence combination rule is proposed based on the evidence discounting operation and Dempster’s rule. A comparative analysis of the performance of the proposed reliability evaluation method and evidence combination rule is carried out on numerical examples. The proposed data fusion method is also applied to target recognition to show its feasibility and validity.

1 Introduction

As an important component in many fields dealing with pattern recognition, identification, diagnosis, etc., multi-sensor data fusion technology has received considerable attention in both military and nonmilitary applications. In a multi-sensor system, the information derived from different sources is usually imperfect, i.e., imprecise, uncertain, or even conflicting. To handle such information, uncertainty theories such as probability theory, evidence theory, fuzzy set theory, and possibility theory have been proposed. Among these theories, evidence theory has been widely applied in multi-sensor data fusion because of its flexibility in managing uncertainty [14, 27,28,29, 34,35,36]. Traditionally, information sources in fusion systems are regarded as equally reliable, and most attention is paid to uncertainty modeling and fusion methods. However, the performance of a fusion system depends heavily on sensor performance (e.g., accuracy, work efficiency, and the ability to understand the dynamic working environment) and on the capability to estimate the reliability of each sensor for each input. Information provided by different sensors does not have the same degree of reliability, which may be caused by many sensor-specific factors. For instance, measurements can differ from one sensor to another in terms of completeness, precision, and certainty. Additionally, the working environment can affect sensor reliability, since some sensors may be better adapted than others to the conditions encountered in the environment.

Therefore, the information to be fused should be modified according to the reliability of their sources, which reflects the ability of each source to provide a correct assessment of the given problem. The effects of information provided by more reliable sources should be strengthened, while the effects of information coming from less reliable sources should be weakened. Thus, sensor reliabilities must be assessed before information fusion.

This leaves us with the question of how to determine the reliability of evidence sources. When the prior information is available, the reliability of sensor can be evaluated by training or optimization. Elouedi et al. [8] have proposed a method of assessing the sensor reliability in a classification problem based on the transferable belief model (TBM), an extension of evidence theory. In this method, the sensor reliability is assessed by minimizing the mean square error between the discounted sensor readings and the actual values of data.

Guo et al. [10] extended Elouedi’s work in two respects. On one hand, they developed a new evaluation method to improve Elouedi’s method and called it the static evaluation method. On the other hand, they treated the evaluation task as a two-stage training process, namely supervised (or static) and unsupervised (or dynamic) evaluation, and proposed to combine the two. This leads to a deeper insight into the issue of sensor reliability evaluation. The first stage is what they called the static supervised evaluation method: the static discounting factor assigned to a sensor is based on the comparison between its original readings and the actual values of the data. The information content contained in the actual values of each target is extracted to determine its influence on the evaluation. This method also permits evaluation of the reliability of the fusion result. The second stage is the dynamic evaluation method, which can be used to evaluate evidence reliability dynamically by adaptive learning and regulation in real-time situations. The dynamic reliability is related to the contexts of sensor acquisitions and to the dynamic performance of the sensors.

But the crucial problem is how to assess the dynamic reliability of each sensor when there is no prior information. As explained by Guo et al. [10], the evidential distance measure, the conflict measure, and other induced dissimilarity measures are used to evaluate sensor reliability: the reliability of a sensor increases with the similarity between its readings and the readings of the other sensors. This is called the principle of majority, and many reliability evaluation methods have been built on it. For example, Schubert [19] proposed a degree of falsity to evaluate the reliability of evidence sources. Based on Jousselme’s [12] distance measure, Klein and Colot [13] proposed the degree of dissent, which is evaluated by comparing a basic probability assignment (BPA) to the average BPA in a set; the distance between a BPA and the average BPA is used to estimate its reliability. Based on Jousselme’s distance measure and Schubert’s idea, Yang et al. [31] defined a new disagreement measure, borrowing ideas from the design of Schubert’s degree of falsity, to estimate the reliability of evidence sources. Liu et al. [15] noted that the distance represents the difference between BPAs, whereas the conflict coefficient reveals the divergence between the hypotheses that two belief functions strongly support. These two aspects of dissimilarity are complementary in a certain sense, and their fusion can be used as a dissimilarity measure. They therefore presented a new dissimilarity measure that fuses the distance and conflict measures based on the Hamacher T-conorm fusion rule. In evaluating the reliability of a source, both its dissimilarity with other sources and their reliability factors were considered.

However, a closer look at these methods shows that they all boil down to the definition of a similarity or dissimilarity measure between BPAs. We also note that the supporting degree between belief functions is regarded as identical to the similarity degree between them. In fact, supporting degree and similarity degree are two different concepts. The similarity degree measures the characteristics shared by two belief functions, so similarity is usually a symmetric concept: given two BPAs \(m_{1}\) and \(m_{2}\), a similarity measure \(Sim\) satisfies \(Sim(m_{1},m_{2})=Sim(m_{2},m_{1})\). The supporting degree is different from the similarity degree, although the two are related. The fact that \(m_{1}\) supports \(m_{2}\) does not imply that \(m_{2}\) supports \(m_{1}\) to the same degree; support is not mutual. For a supporting degree measure \(Sup\), \(Sup(m_{1},m_{2})=Sup(m_{2},m_{1})\) does not always hold.

So the concept of supporting degree in evidence theory should be investigated further to reveal its connection with similarity and distance measures. To answer this question, we propose an asymmetric supporting degree measure for BPAs. Moreover, when assessing sensor reliability, it is necessary to take all uncertain information into account, so sensor reliability cannot be evaluated comprehensively by relying on evidence theory alone. Taking inspiration from the relations between evidence theory and intuitionistic fuzzy sets (IFSs) [22], we improve the method of evaluating sensor reliability in the combined framework of evidence theory and IFSs. In this paper, the concept of asymmetric supporting degree is proposed. By transforming BPAs to intuitionistic fuzzy sets, the supporting degree between BPAs is calculated based on intuitionistic fuzzy operations and a similarity measure. Then the relationship between dynamic reliability and supporting degree is analyzed, and a procedure for dynamic reliability evaluation is proposed. Finally, the proposed dynamic reliability evaluation is applied to evidence combination: a new evidence combination rule is proposed based on the evidence discounting operation and Dempster’s rule.

The rest of this paper is organized as follows. In Section 2, we briefly recall the relevant foundations of evidence theory. In Section 3, we present the evaluation of dynamic reliability based on supporting degree. To facilitate the construction of the supporting degree, some basic definitions of intuitionistic fuzzy sets, together with the relationship between BPAs and IFSs, are discussed in this section. A new method for data fusion based on the sensors’ dynamic reliability is also proposed there. In Section 4, numerical examples illustrate the performance of the proposed methods. An application of the proposed method to target recognition is presented in Section 5. Conclusions are given in Section 6.

2 Evidence theory

2.1 Basic concepts

Dempster-Shafer evidence theory is built on a finite set of mutually exclusive elements, called the frame of discernment and denoted by \({\Theta }\) [6]. The power set of \({\Theta }\), denoted by \(2^{{\Theta }}\), contains all subsets of \({\Theta }\), including the empty set and \({\Theta }\) itself. Singleton sets in a frame of discernment \({\Theta }\) are called atomic sets because they contain no nonempty proper subsets. The following terminology is central in Dempster-Shafer theory.

Let \({\Theta } =\{{\theta }_{1}, {\theta }_{2},\cdots ,{\theta }_{n}\}\) be the frame of discernment. A basic probability assignment (BPA) is a function \(m:2^{{\Theta }}\to [0,1]\) satisfying the following two conditions:

\[ m(\emptyset)=0,\qquad \sum_{A\subseteq\Theta} m(A)=1, \]

where \(\emptyset \) denotes the empty set and \(A\) is any subset of \({\Theta } \). Such a function is also called a belief structure. For each subset \(A\subseteq {\Theta } \), the value taken by the BPA at \(A\) is called the basic probability mass of \(A\), denoted by \(m(A)\).

A subset \(A\) of \({\Theta } \) is called the focal element of a belief structure \(m\) if \(m(A)>0\). The set of all focal elements is expressed by \({\mathbb {F}=\left \{{A\vert A\subseteq {\Theta },m(A)>0} \right \}}\).

A Bayesian belief structure (BBS) on \({\Theta }\) is a belief structure on \({\Theta }\) whose focal elements are atomic sets (singletons) of \({\Theta }\). A categorical belief structure is a normalized belief structure such that \(m(A)= 1\) for some \(A\subseteq {\Theta }\) and \(m(B)= 0\) for all \(B\subseteq {\Theta }\), \(B\ne A\). A vacuous belief structure on \({\Theta }\) is defined as \(m({\Theta })= 1\) and \(m(A)= 0,\forall A\ne {\Theta }\).

Given a belief structure \(m\) on \({\Theta } \), the belief function and the plausibility function, which are in one-to-one correspondence with \(m\), are defined respectively as:

\[ Bel(A)=\sum_{B\subseteq A,\,B\neq\emptyset} m(B), \tag{3} \]
\[ Pl(A)=\sum_{B\cap A\neq\emptyset} m(B). \tag{4} \]

\(Bel(A)\) is the total basic probability mass assigned exactly to \(A\) and its nonempty subsets, and \(Pl(A)\) is the total mass of all sets that intersect \(A\), i.e., all mass that could potentially be transferred to \(A\). As such, \(Bel(A)\) and \(Pl(A)\) can be interpreted as the lower and upper bounds of the probability with which \(A\) is supported. So we can consider the belief degree of \(A\) as an interval number \(BI(A)=[Bel(A),Pl(A)]\).
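
The belief and plausibility functions in (3) and (4) can be sketched in Python as follows. This is a minimal illustration under our own assumptions: a BPA is stored as a dictionary mapping focal elements (frozensets of hypothesis labels) to masses, and the function names `bel` and `pl`, the labels `t1`, `t2`, `t3`, and the masses are hypothetical.

```python
from typing import Dict, FrozenSet

# A BPA maps focal elements (frozensets of hypothesis labels) to their masses.
BPA = Dict[FrozenSet[str], float]


def bel(m: BPA, a: FrozenSet[str]) -> float:
    """Bel(A): total mass of the non-empty focal elements contained in A, as in (3)."""
    return sum(v for b, v in m.items() if b and b <= a)


def pl(m: BPA, a: FrozenSet[str]) -> float:
    """Pl(A): total mass of the focal elements that intersect A, as in (4)."""
    return sum(v for b, v in m.items() if b & a)


# Hypothetical BPA on Theta = {t1, t2, t3}, used only for illustration.
theta = frozenset({"t1", "t2", "t3"})
m = {frozenset({"t1"}): 0.5, frozenset({"t1", "t2"}): 0.3, theta: 0.2}

for hyp in ("t1", "t2", "t3"):
    single = frozenset({hyp})
    print(hyp, bel(m, single), pl(m, single))  # belief interval [Bel, Pl]
```

Each printed pair is the belief interval \(BI(\{\theta\})\) of one singleton.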

Definition 2.1

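[21] The pignistic transformation maps a belief structure \(m\) to the so-called pignistic probability function. The pignistic transformation of a belief structure \(m\) on \({\Theta } =\{{\theta }_{1}, {\theta }_{2}, \cdots ,{\theta }_{n}\}\) is given by

\[ BetP(B)=\sum_{A\subseteq\Theta,\,A\neq\emptyset}\frac{\vert A\cap B\vert}{\vert A\vert}\,\frac{m(A)}{1-m(\emptyset)},\qquad B\subseteq\Theta, \]
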
where \(\vert A\vert \) is the cardinality of set \(A\).

In particular, given \(m(\emptyset )= 0\) and \({\theta } \in {\Theta } \), we have

\[ BetP(\theta)=\sum_{A\subseteq\Theta,\,\theta\in A}\frac{m(A)}{\vert A\vert}. \]

It follows directly that \(Bel(A)\le BetP(A)\le Pl(A)\).
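
A minimal sketch of the pignistic transformation for singletons, using the same hypothetical dictionary representation of a BPA (the name `betp` and the masses are ours, for illustration only):

```python
from typing import Dict, FrozenSet, Sequence

BPA = Dict[FrozenSet[str], float]


def betp(m: BPA, frame: Sequence[str]) -> Dict[str, float]:
    """Pignistic probability of each singleton: every non-empty focal element
    shares its mass equally among its elements, renormalized by 1 - m(empty)."""
    norm = 1.0 - m.get(frozenset(), 0.0)
    p = {t: 0.0 for t in frame}
    for a, v in m.items():
        for t in a:  # the empty set has no elements, so it contributes nothing
            p[t] += v / (len(a) * norm)
    return p


frame = ("t1", "t2", "t3")
m = {frozenset({"t1"}): 0.5, frozenset({"t1", "t2"}): 0.3, frozenset(frame): 0.2}
print(betp(m, frame))
```

For this BPA, \(BetP(\theta_{1})\) lies between \(Bel(\{\theta_{1}\})=0.5\) and \(Pl(\{\theta_{1}\})=1\), as the inequality requires.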

2.2 Combination of belief functions

Definition 2.2

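[6] Given two belief structures \(m_{1}\) and \(m_{2}\) on \({\Theta }\), the belief structure that results from the application of Dempster’s combination rule, denoted as \(m_{1} \odot m_{2}\), or \(m_{12}\) for short, is given by:

\[ m_{12}(A)=\begin{cases}\dfrac{1}{1-k}\displaystyle\sum_{B\cap C=A} m_{1}(B)\,m_{2}(C), & A\neq\emptyset,\\ 0, & A=\emptyset,\end{cases}\qquad k=\sum_{B\cap C=\emptyset} m_{1}(B)\,m_{2}(C). \]
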
When multiple independent sources of evidence are available, the combined evidence can be obtained as:

\[ m(A)=\begin{cases}\dfrac{1}{1-k}\displaystyle\sum_{\cap_{i=1}^{n} A_{i}=A}\ \prod_{i=1}^{n} m_{i}(A_{i}), & A\neq\emptyset,\\ 0, & A=\emptyset,\end{cases} \tag{8} \]
\[ k=\sum_{\cap_{i=1}^{n} A_{i}=\emptyset}\ \prod_{i=1}^{n} m_{i}(A_{i}). \]

Here, \(n\) is the number of pieces of evidence being combined, \(i\) indexes the \(i\)th piece of evidence, and \(m_{i}(A_{i})\) is the mass that evidence \(i\) assigns to hypothesis \(A_{i}\). The value \(m(A)\) reflects the degree of combined support (joint mass) from the \(n\) mutually independent sources of evidence corresponding to \(m_{1},m_{2},\cdots ,m_{n}\), respectively. The quantity \(k\) in the combination rule is the amount of conflict among the \(n\) mutually independent pieces of evidence; it equals the mass of the empty set after the conjunctive combination and before the normalization step, and represents contradictory evidence.

The value \(k = 0\) corresponds to the absence of conflict among the evidence from different sources, whereas \(k = 1\) implies complete contradiction among the pieces of evidence. Indeed, \(k = 0\) if and only if no empty set is created when all pieces of evidence are combined, and \(k = 1\) if and only if all the sets produced by the combination are empty. The weight of a partial conflict is \(\prod \nolimits _{i = 1}^{n} {m_{i} (A_{i} )}\) with \(\cap A_{i} =\emptyset\), and the global conflict \(k\) is the sum of all partial conflicts.

Dempster’s rule, however, has an inherent problem. When the pieces of evidence are completely contradictory, i.e. \(k = 1\), combination cannot be performed. When they are highly conflicting, i.e. \(k\to 1\), the combination results seldom agree with the actual situation, and are counter-intuitive (see the example given by Zadeh [33]).

Example 2.1

Consider a situation in which we have two belief structures \(m_{1} \) and \(m_{2} \) over the same frame of discernment \({\Theta } =\{{\theta }_{1} ,{\theta }_{2} ,{\theta }_{3} \}\). Let these two structures be as follows:

Applying Dempster’s rule to these structures yields \(m(\{{\theta }_{1} \})=m(\{{\theta }_{3} \})= 0\) and \(m(\{{\theta }_{2} \})= 1\). Both \(m_{1}\) and \(m_{2}\) give low support to hypothesis \({\theta }_{2}\), yet the resulting structure supports \({\theta }_{2}\) completely. Conversely, \(m_{1}\) and \(m_{2}\) strongly support hypotheses \({\theta }_{1}\) and \({\theta }_{3}\), respectively, yet \({\theta }_{1}\) and \({\theta }_{3}\) are completely ruled out in the result. This is counter-intuitive.
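
The counter-intuitive behavior above can be reproduced with a short sketch of Dempster’s rule. The masses below are hypothetical values in the spirit of Zadeh’s example, not taken from Example 2.1, and the helper names `dempster_pair` and `dempster` are illustrative; the n-source version combines pairwise, relying on the commutativity and associativity of the rule.

```python
from itertools import product
from typing import Dict, FrozenSet, Tuple

BPA = Dict[FrozenSet[str], float]


def dempster_pair(m1: BPA, m2: BPA) -> Tuple[BPA, float]:
    """Dempster's rule for two BPAs; returns the combined BPA and the conflict k."""
    joint: BPA = {}
    k = 0.0
    for (b, v1), (c, v2) in product(m1.items(), m2.items()):
        inter = b & c
        if inter:
            joint[inter] = joint.get(inter, 0.0) + v1 * v2
        else:
            k += v1 * v2  # partial conflict: focal elements with empty intersection
    if not joint:
        raise ValueError("total conflict (k = 1): Dempster's rule is undefined")
    return {a: v / (1.0 - k) for a, v in joint.items()}, k


def dempster(*bpas: BPA) -> Tuple[BPA, float]:
    """Combine n BPAs pairwise. The global conflict satisfies
    1 - k = product of (1 - k_step) over the pairwise steps."""
    result, keep = bpas[0], 1.0
    for m in bpas[1:]:
        result, k_step = dempster_pair(result, m)
        keep *= 1.0 - k_step
    return result, 1.0 - keep


# Hypothetical high-conflict masses (not the values of Example 2.1).
t1, t2, t3 = frozenset({"t1"}), frozenset({"t2"}), frozenset({"t3"})
m1 = {t1: 0.9, t2: 0.1}
m2 = {t2: 0.1, t3: 0.9}
combined, k = dempster(m1, m2)
print(combined, k)
```

With these inputs, \(k\) is about 0.99 and the normalized result puts all of its mass on \(t_2\), which both sources support only weakly.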

The reason for such counter-intuitive behavior is that Dempster’s rule cannot handle highly conflicting evidence. Such problems can be handled from two main points of view. If the counter-intuitive behavior is believed to be caused by unreliable evidence, then the evidence should be discounted. However, if the counter-intuitive behavior is attributed to the combination rule, improvements of the combination rule, as done in several studies [15, 21, 31], should then be made.

2.3 Evidence discounting

When a source of evidence is only partially reliable to a known reliability degree \(\lambda \in [0,1]\), a discounting operation can be defined on the associated BPA [9]. The most common discounting operation was first introduced by Shafer in [20]. The discounting operation is given by

\[ m^{\lambda}(A)=\begin{cases}\lambda\, m(A), & A\subsetneq\Theta,\\ \lambda\, m(\Theta)+1-\lambda, & A=\Theta,\end{cases} \tag{10} \]

where \(\lambda \) represents the degree of reliability of the evidence. If \(\lambda = 1\) (i.e., the evidence is completely reliable), the BPA remains unchanged. If \(\lambda = 0\) (i.e., the evidence is completely unreliable), the BPA becomes \(m({\Theta } )= 1\), which means that the evidence provides no support for decision-making.
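
Shafer’s discounting operation (10) can be sketched as below, assuming the same dictionary representation of a BPA; `discount` is a hypothetical helper name and the masses are illustrative. With `lam = 1` every mass is kept (an explicit zero entry for \({\Theta}\) may be added), and with `lam = 0` the result is the vacuous BPA.

```python
from typing import Dict, FrozenSet, Sequence

BPA = Dict[FrozenSet[str], float]


def discount(m: BPA, frame: Sequence[str], lam: float) -> BPA:
    """Shafer's discounting (10): scale every mass by lam and move the
    remaining 1 - lam to the whole frame Theta."""
    if not 0.0 <= lam <= 1.0:
        raise ValueError("reliability lam must lie in [0, 1]")
    theta = frozenset(frame)
    out = {a: lam * v for a, v in m.items() if a != theta}
    out[theta] = lam * m.get(theta, 0.0) + (1.0 - lam)
    return out


frame = ("t1", "t2", "t3")
m = {frozenset({"t1"}): 0.9, frozenset({"t2"}): 0.1}
print(discount(m, frame, 0.6))  # 40% of the total mass is transferred to Theta
```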

3 Evaluating the dynamic reliability of sensor

The static discounting factor of a sensor defined in the previous section is obtained from its application at the evaluation stage and can then be regarded as its prior reliability for subsequent applications. However, static evaluation does not take into account changes in sensor reliability in varying environments. Because environmental noise, incremental effects, and opposing disturbances may cause sensors to degrade or fail, multisensor fusion systems must be able to monitor and assess sensors dynamically. Otherwise, data with large variations can severely corrupt the fusion result and degrade system performance. Worse still, this may induce the conflict problem of evidence theory [7, 33]. Dynamic discounting factors are one of the representative indices that can express the dynamic performance of sensors.

In this section, we shall address this problem. The discounting factor of a sensor is assessed for one target to be classified and depends on the overall support degree for the sensor afforded by the other sensors.

3.1 Evaluate dynamic reliability based on support degree

A. Consider BPA in the view of IFS

Since Atanassov’s intuitionistic fuzzy set can be considered a generalization of Zadeh’s fuzzy set, we first give the definition of Zadeh’s fuzzy set, followed by a brief description of the basic concepts of IFSs.

Definition 3.1

[32] Let \(X=\{x_{1} ,x_{2} ,\cdots ,x_{n} \}\) be a universe of discourse; then a fuzzy set \(A\) in \(X\) is defined as follows:

\[ A=\{\langle x,\mu_{A}(x)\rangle \mid x\in X\}, \]

where \(\mu _{A} (x):X\to [0,1]\) is the membership degree.

Definition 3.2

[1] An Atanassov’s intuitionistic fuzzy set \(A\) in \(X\) can be written as:

\[ A=\{\langle x,\mu_{A}(x),v_{A}(x)\rangle \mid x\in X\}, \]

where \(\mu _{A} (x):X\to [0,1]\) and \(v_{A} (x):X\to [0,1]\) are the membership degree and non-membership degree, respectively, with the condition:

\[ 0\le \mu_{A}(x)+v_{A}(x)\le 1,\qquad \forall x\in X. \]

The quantity \(\pi _{A} (x)\) determined by the following expression:

\[ \pi_{A}(x)=1-\mu_{A}(x)-v_{A}(x) \]

is called the hesitancy degree of the element \(x\in X\) to the set \(A\), and \(\pi _{A} (x)\in [0,1]\), \(\forall x\in X\). \(\pi _{A} (x)\) is also called the intuitionistic index of \(x\) to \(A\). A greater \(\pi _{A} (x)\) indicates more vagueness about \(x\). Obviously, when \(\pi _{A} (x)= 0,\forall x\in X\), an IFS degenerates to Zadeh’s fuzzy set.

It is worth noting that besides Definition 3.2 there are other possible representations of IFSs proposed in the literature [3, 5]. Atanassov and Gargov [3] proposed to use an interval representation \([\mu _{A} (x),1-v_{A} (x)]\) of IFS \(A\) in \(X\) instead of pair \(\langle \mu _{A} (x),v_{A} (x)\rangle \). This approach is equivalent to the interval valued fuzzy sets interpretation of IFS, where \(\mu _{A} (x)\) and \(1-v_{A} (x)\) represent the lower bound and upper bound of membership degree, respectively. Obviously, \([\mu _{A} (x),1-v_{A} (x)]\) is a valid interval, since \(\mu _{A} (x)\le 1-v_{A} (x)\) always holds for \(\mu _{A} (x)+v_{A} (x)\le 1\).

In the sequel, \(IFSs(X)\) denotes the set of all IFSs in \(X\). If \(\vert X\vert = 1\), i.e., there is only one element \(x\) in \(X\), the IFS \(A\) in \(X\) usually is denoted by \(A=\langle \mu _{A} ,v_{A} \rangle \) for short, which is also called an intuitionistic fuzzy value (IFV).

In the IFS theory, an IFS \(\langle \mu _{A} (x),v_{A} (x)\rangle \) has some physical interpretations. For example, if \(\langle \mu _{A} (x),v_{A} (x)\rangle =\langle 0.2,0.3\rangle \), then the degree of indeterminacy \(\pi _{A} (x)\) can be easily determined as 0.5. These can be interpreted as “the degree that element \(x\) belongs to \(A\) is 0.2, the degree that element \(x\) does not belong to \(A\) is 0.3, and the degree that element \(x\) belongs indeterminately to \(A\) is 0.5”. In a voting model, they can be interpreted as “the vote for resolution is two in favor and three against, with five abstentions”. In addition, for a fuzzy set \(B\) in \(X\), since \(v_{B} (x)= 1-\mu _{B} (x)\), the indeterminacy degree of \(x\) to \(B\) can be expressed as \(\pi _{B} (x)= 1-\mu _{B} (x)-(1-\mu _{B} (x))= 0\). The fuzzy set is thus a particular case of the IFS.
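
The hesitancy degree and the interval representation of Atanassov and Gargov can be illustrated with a small value type. The class name `IFV` and its fields are our own choices for this sketch; `v` stands for the non-membership degree as in the text, and the values repeat the example above.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class IFV:
    """Intuitionistic fuzzy value <mu, v> of a single element."""
    mu: float  # membership degree
    v: float   # non-membership degree

    def __post_init__(self) -> None:
        if not (0.0 <= self.mu <= 1.0 and 0.0 <= self.v <= 1.0 and self.mu + self.v <= 1.0):
            raise ValueError("need mu, v in [0, 1] with mu + v <= 1")

    @property
    def hesitancy(self) -> float:
        """pi = 1 - mu - v, the degree of indeterminacy."""
        return 1.0 - self.mu - self.v

    def as_interval(self) -> Tuple[float, float]:
        """Interval [mu, 1 - v] of possible membership degrees."""
        return (self.mu, 1.0 - self.v)


x = IFV(0.2, 0.3)      # 2 in favor, 3 against, 5 abstentions out of 10 votes
print(x.hesitancy, x.as_interval())
fuzzy = IFV(0.4, 0.6)  # ordinary fuzzy value: v = 1 - mu, so hesitancy is zero
```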

Definition 3.3

[2] For \(A\in IFSs(X)\) and \(B\in IFSs(X)\), some relations between them are defined as:

-

(R1)

\(A\subseteq B\) iff \(\forall x\in X,\ \mu _{A} (x)\le \mu _{B} (x),\ v_{A} (x)\ge v_{B} (x)\);

-

(R2)

\(A=B\) iff \(\forall x\in X,\ \mu _{A} (x)=\mu _{B} (x),\ v_{A} (x)=v_{B} (x)\);

-

(R3)

\(A^{C}=\{\langle x,v_{A} (x),\mu _{A} (x)\rangle \vert x\in X\}\), where \(A^{C}\) is the complement of \(A\).

Definition 3.4

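[2] Let \(A=\{\langle x,\mu _{A} (x),v_{A} (x)\rangle \vert x\in X\}\), \(B=\{\langle x,\mu _{B} (x),v_{B} (x)\rangle \vert x\in X\}\) be two IFSs in \(X\); then the following operations can be defined:

\[ A\cap B=\{\langle x,\min(\mu_{A}(x),\mu_{B}(x)),\max(v_{A}(x),v_{B}(x))\rangle \mid x\in X\}, \]
\[ A\cup B=\{\langle x,\max(\mu_{A}(x),\mu_{B}(x)),\min(v_{A}(x),v_{B}(x))\rangle \mid x\in X\}. \]
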
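
The relations of Definition 3.3 and Atanassov’s standard min/max intersection and union [2], which we assume to be the operations of Definition 3.4 used later, can be sketched as follows. Representing an IFS as a dictionary from elements to (membership, non-membership) pairs is our own choice, and the sets `A` and `B` are hypothetical.

```python
from typing import Dict, Tuple

# An IFS on a finite universe: element -> (membership mu, non-membership v).
IFS = Dict[str, Tuple[float, float]]


def subset(a: IFS, b: IFS) -> bool:
    """(R1): A is contained in B iff mu_A <= mu_B and v_A >= v_B everywhere."""
    return all(a[x][0] <= b[x][0] and a[x][1] >= b[x][1] for x in a)


def complement(a: IFS) -> IFS:
    """(R3): swap membership and non-membership."""
    return {x: (v, mu) for x, (mu, v) in a.items()}


def intersection(a: IFS, b: IFS) -> IFS:
    """Minimum of memberships, maximum of non-memberships."""
    return {x: (min(a[x][0], b[x][0]), max(a[x][1], b[x][1])) for x in a}


def union(a: IFS, b: IFS) -> IFS:
    """Maximum of memberships, minimum of non-memberships."""
    return {x: (max(a[x][0], b[x][0]), min(a[x][1], b[x][1])) for x in a}


# Hypothetical IFSs on X = {x1, x2}.
A = {"x1": (0.6, 0.3), "x2": (0.1, 0.7)}
B = {"x1": (0.4, 0.2), "x2": (0.5, 0.4)}
print(intersection(A, B), union(A, B))
```

The intersection is contained in both operands and the union contains both, which is what the supporting degree construction relies on.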
In the framework of evidence theory, the reading of each sensor can be expressed by a BPA. As discussed earlier, in evidence theory, \([Bel({\theta }),Pl({\theta } )]\) is the confidence interval that describes the uncertainty about \({\theta }\). It can be used to define the lower and upper bounds of the imprecise probability of \({\theta }\): \(Bel({\theta } )\) is the lower probability, and \(Pl({\theta } )\) is the upper probability. Thus, the probability \(P({\theta } )\) lies in the interval \([Bel({\theta } ),Pl({\theta } )]\). If we regard \(m\) as an IFS \(A\) in \({\Theta } =\{{\theta }_{{1}} ,{\theta } _{{2}} ,\cdots ,{\theta }_{n} \}\), \(Bel({\theta } )\) can be taken as the membership degree to which \({\theta }\) belongs to \(A\), while \(1-Pl({\theta } )\) is the non-membership degree of \({\theta }\). Because \(Bel({\theta })\le Pl({\theta })\), the sum of these two degrees never exceeds 1, and the hesitancy degree equals \(Pl({\theta })-Bel({\theta })\), the width of the belief interval. Based on this analysis, a BPA \(m\) on the discernment frame \({\Theta }\) can be transformed to an IFS \(A\) on \({\Theta }\). The corresponding IFS can be expressed as:

\[ A=\{\langle \theta,Bel(\theta),1-Pl(\theta)\rangle \mid \theta\in\Theta\}. \tag{15} \]

The relation between BPAs in evidence theory and IFSs can be illustrated with a pattern recognition application. Suppose that the discernment frame is \({\Theta } =\{{\theta }_{{1}} ,{\theta }_{{2}} ,{\theta }_{3} \}\), i.e., the set of possible classes of an unknown object \(o\). The reading of a sensor \(S\), expressed by the BPA \(m\), indicates that the sensor identifies the object as the IFS \(A\), where

\[ A=\{\langle \theta_{1},Bel(\theta_{1}),1-Pl(\theta_{1})\rangle,\ \langle \theta_{2},Bel(\theta_{2}),1-Pl(\theta_{2})\rangle,\ \langle \theta_{3},Bel(\theta_{3}),1-Pl(\theta_{3})\rangle\}. \]

In particular, if the sensor identifies the object as a singleton subset of \({\Theta }\), taking \(\{{\theta }_{{1}} \}\) as an example, the BPA can be written as:

\[ m(\{\theta_{1}\})=1,\qquad m(B)=0,\ \forall B\subseteq\Theta,\ B\neq\{\theta_{1}\}. \]

Then the corresponding IFS is \(A=\{\langle {\theta }_{1} ,1,0\rangle ,\langle {\theta }_{2} ,0,1\rangle ,\langle {\theta }_{3} ,0,1\rangle \}\), which is identical to the set \(\{{\theta }_{1} \}\).

If the object is totally unknown to the sensor, i.e., the sensor cannot provide any information about the object, the BPA \(m\) is the vacuous belief structure \(m({\Theta } )= 1\). Thus, we have:

\[ Bel(\theta_{i})=0,\qquad Pl(\theta_{i})=1,\qquad i=1,2,3. \]

So the IFS can be written as \(A=\{\langle {\theta }_{1} ,0,0\rangle ,\langle {\theta }_{2} ,0,0\rangle ,\langle {\theta }_{3} ,0,0\rangle \}\). This indicates that the sensor identifies the object as the full set \({\Theta } \), which coincides with the sensor’s total ignorance on the object.

The above analysis can easily be adapted to other domains of multi-sensor data fusion, since the underlying scheme is quite general. Hence, each BPA derived from the readings of a sensor can be transformed to an IFS defined over the discernment frame.
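
The transformation from a BPA to an IFS, and the two special cases discussed above, can be checked with the following sketch. The helper name `bpa_to_ifs` and the dictionary representations are assumptions made for illustration only.

```python
from typing import Dict, FrozenSet, Sequence, Tuple

BPA = Dict[FrozenSet[str], float]
IFS = Dict[str, Tuple[float, float]]


def bpa_to_ifs(m: BPA, frame: Sequence[str]) -> IFS:
    """Map a BPA to the IFS {<theta, Bel(theta), 1 - Pl(theta)>}."""
    out: IFS = {}
    for t in frame:
        s = frozenset({t})
        bel = sum(v for a, v in m.items() if a and a <= s)  # only m({t}) qualifies
        pl = sum(v for a, v in m.items() if a & s)
        out[t] = (bel, 1.0 - pl)  # hesitancy pi = Pl - Bel >= 0
    return out


frame = ("t1", "t2", "t3")
categorical = {frozenset({"t1"}): 1.0}  # sensor is certain the object is t1
vacuous = {frozenset(frame): 1.0}       # sensor knows nothing about the object
print(bpa_to_ifs(categorical, frame))
print(bpa_to_ifs(vacuous, frame))
```

The categorical BPA yields the crisp set \(\{\theta_{1}\}\), and the vacuous BPA yields membership and non-membership degrees of 0 for every class, i.e., total hesitancy.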

B. Supporting degree between BPAs

The concept of supporting degree between BPAs has been used in some modified evidence combination rules [7, 15]. Supporting degree is usually considered a symmetric measurement related to the similarity and distance between BPAs. Hence, taking \(Sup\) as the supporting degree between two BPAs \(m_{1}\) and \(m_{2}\), we have \(Sup(m_{1},m_{2})=Sup(m_{2},m_{1})\); that is, two BPAs are assumed to support each other to the same degree. Suppose that \(Sim\) and \(Dis\) are a similarity measure and a distance measure between BPAs, respectively; the following relations have been widely accepted:

In other words, high similarity degree and low distance degree between two BPAs both indicate high supporting degree between them. Thus, supporting degree is usually considered equivalent to similarity degree for BPAs.

A closer examination of these metrics shows that the similarity degree describes how alike two objects are; it reflects the distance between them. If two objects are close to each other, the similarity degree between them is high. The supporting degree, however, need not be a symmetric measurement. Object \(o_{1}\) may support \(o_{2}\) to a great degree, but this does not mean that \(o_{2}\) supports \(o_{1}\) to the same degree. The supporting degree of \(o_{1}\) to \(o_{2}\) is determined by the similarity between \(o_{1}\) and their intersection, denoted as \(o_{1}\cap o_{2}\). That is to say, the more \(o_{1}\) agrees with \(o_{1}\cap o_{2}\), the more \(o_{1}\) supports \(o_{2}\). This notion extends easily to the supporting degree between BPAs.

For two BPAs \(m_{1}\) and \(m_{2}\), the supporting degree Sup satisfies the following property: \(Sup(m_{1} ,m_{2} )\propto Sim(m_{1} ,m_{1} \cap m_{2} )\). Moreover, the relation \(Sup(m_{1} ,m_{2} )\ne Sup(m_{2} ,m_{1} )\) holds for most cases. For clarity, we can take the similarity degree between \(m_{1} \) and the intersection \(m_{1} \cap m_{2} \) as the degree of \(m_{1} \) supporting \(m_{2} \), i.e., \(Sup(m_{1} ,m_{2} )=Sim(m_{1} ,m_{1} \cap m_{2} )\). Similarly, we have \(Sup(m_{2} ,m_{1} )=Sim(m_{2} ,m_{1} \cap m_{2} )\).

Considering the relation between BPA and IFS, we can calculate the supporting degree of BPAs in the framework of IFS, which will bring much convenience in defining the intersection operation on BPAs. Hence, the supporting degree \(Sup(m_{1} ,m_{2} )\) can be calculated by the supporting degree \(Sup(A_{1} ,A_{2} )\), where \(A_{1} \) and \(A_{2} \) are IFSs derived from \(m_{1} \) and \(m_{2} \) respectively. So we have:

\[ Sup(m_{1},m_{2})=Sup(A_{1},A_{2})=Sim(A_{1},A_{1}\cap A_{2}). \]

In recent years, many methods have been proposed to define similarity measures for IFSs [4, 23]. When calculating the supporting degree, we use the similarity measure for IFSs based on the Euclidean distance proposed in [24]. It is defined as follows:

Let \(A=\{\langle x,\mu _{A} (x),v_{A} (x)\rangle \vert x\in X\}\) and \(B=\{\langle x,\mu _{B} (x),v_{B} (x)\rangle \vert x\in X\}\) be two IFSs in \(X=\{x_{1} ,x_{2} ,\cdots ,x_{n} \}\). The similarity degree between \(A\) and \(B\) can be expressed by:

It has been proved that \(S_{E} (A,B)\) satisfies all desired properties of similarity measure between IFSs as shown in [23].
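
As an illustration only, the sketch below assumes a common Euclidean-distance-based similarity for IFSs: one minus the normalized Euclidean distance over membership, non-membership, and hesitancy degrees. It may not coincide exactly with the measure \(S_{E}\) of [24], and `similarity_euclid` and the sets `A`, `B` are hypothetical.

```python
import math
from typing import Dict, Tuple

IFS = Dict[str, Tuple[float, float]]


def similarity_euclid(a: IFS, b: IFS) -> float:
    """ASSUMED form: 1 - sqrt(1/(2n) * sum[(mu_A-mu_B)^2 + (v_A-v_B)^2 + (pi_A-pi_B)^2]).
    Each element contributes at most 2 to the sum, so the result lies in [0, 1]."""
    total = 0.0
    for x in a:
        (mu_a, v_a), (mu_b, v_b) = a[x], b[x]
        pi_a, pi_b = 1.0 - mu_a - v_a, 1.0 - mu_b - v_b
        total += (mu_a - mu_b) ** 2 + (v_a - v_b) ** 2 + (pi_a - pi_b) ** 2
    return 1.0 - math.sqrt(total / (2 * len(a)))


A = {"x1": (1.0, 0.0), "x2": (0.3, 0.5)}
B = {"x1": (0.0, 1.0), "x2": (0.3, 0.5)}
print(similarity_euclid(A, A), similarity_euclid(A, B))
```

Any measure with the usual properties (value 1 for identical sets, symmetry, range \([0,1]\)) could be substituted without changing the rest of the construction.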

Based on above analysis, we can construct the supporting degree for two BPAs \(m_{1} \) and \(m_{2} \) by the following steps:

-

Step 1.

By (3) and (4), get the belief functions and plausibility functions of the singleton subsets corresponding to \(m_{1}\) and \(m_{2}\).

-

Step 2.

By (15), get two IFSs \(A_{1} \) and \(A_{2} \) according to \(m_{1} \) and \(m_{2} \).

-

Step 3.

According to Definition 3.4, get the intersection of \(A_{1}\) and \(A_{2}\), denoted by \(A_{1} \cap A_{2}\).

-

Step 4.

Following (17), calculate the similarity degrees \(S_{E} (A_{1} ,A_{1} \cap A_{2} )\) and \(S_{E} (A_{2} ,A_{1} \cap A_{2} )\).

Finally, the degree to which \(m_{1}\) supports \(m_{2}\) is \(Sup(m_{1} ,m_{2} )=S_{E} (A_{1} ,A_{1} \cap A_{2} )\), and the degree to which \(m_{2}\) supports \(m_{1}\) is \(Sup(m_{2} ,m_{1} )=S_{E} (A_{2} ,A_{1} \cap A_{2} )\).

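Considering the properties of \(S_{E} (A,B)\), we get \(m_{1} =m_{2} \Rightarrow Sup(m_{1} ,m_{2} )=Sup(m_{2} ,m_{1} )= 1\), since in that case \(A_{1}\cap A_{2}=A_{1}=A_{2}\) and a similarity measure equals 1 for identical IFSs.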
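
Steps 1 to 4 can be combined in a short sketch. It assumes the min/max intersection of IFSs and, in place of \(S_{E}\), the normalized Euclidean similarity (an assumption; the measure of [24] may differ). The BPAs are hypothetical and were chosen so that the two supporting degrees differ; all function names are illustrative.

```python
import math
from typing import Dict, FrozenSet, Sequence, Tuple

BPA = Dict[FrozenSet[str], float]
IFS = Dict[str, Tuple[float, float]]


def bpa_to_ifs(m: BPA, frame: Sequence[str]) -> IFS:
    # Steps 1-2: <theta, Bel(theta), 1 - Pl(theta)> for every singleton.
    out: IFS = {}
    for t in frame:
        s = frozenset({t})
        bel = sum(v for a, v in m.items() if a and a <= s)
        pl = sum(v for a, v in m.items() if a & s)
        out[t] = (bel, 1.0 - pl)
    return out


def intersection(a: IFS, b: IFS) -> IFS:
    # Step 3: assumed min/max intersection of IFSs.
    return {x: (min(a[x][0], b[x][0]), max(a[x][1], b[x][1])) for x in a}


def similarity(a: IFS, b: IFS) -> float:
    # Step 4: ASSUMED normalized-Euclidean similarity standing in for S_E.
    total = 0.0
    for x in a:
        (ma, va), (mb, vb) = a[x], b[x]
        total += (ma - mb) ** 2 + (va - vb) ** 2 + ((1 - ma - va) - (1 - mb - vb)) ** 2
    return 1.0 - math.sqrt(total / (2 * len(a)))


def sup(m1: BPA, m2: BPA, frame: Sequence[str]) -> float:
    """Degree to which m1 supports m2: similarity of A1 to (A1 intersect A2)."""
    a1, a2 = bpa_to_ifs(m1, frame), bpa_to_ifs(m2, frame)
    return similarity(a1, intersection(a1, a2))


frame = ("t1", "t2", "t3")
theta = frozenset(frame)
m1 = {frozenset({"t1"}): 0.8, theta: 0.2}  # hypothetical, fairly committed BPA
m2 = {frozenset({"t2"}): 0.3, theta: 0.7}  # hypothetical, mostly ignorant BPA
print(sup(m1, m2, frame), sup(m2, m1, frame))
```

Here `sup(m1, m2, frame)` is about 0.60 and `sup(m2, m1, frame)` about 0.50, so the measure is not symmetric in general, while `sup(m1, m1, frame)` equals 1.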

C. Dynamic reliability of sensors

Suppose the number of sensors is \(N\), and the BPA provided by sensor \(S_{i}\) is \(m_{i}\), \(i = 1,2,\cdots ,N\). After all supporting degrees between BPAs are obtained, we can construct a supporting degree matrix (SDM), expressed as:

\[ SDM=\begin{pmatrix} 1 & Sup(m_{1},m_{2}) & \cdots & Sup(m_{1},m_{N})\\ Sup(m_{2},m_{1}) & 1 & \cdots & Sup(m_{2},m_{N})\\ \vdots & \vdots & \ddots & \vdots\\ Sup(m_{N},m_{1}) & Sup(m_{N},m_{2}) & \cdots & 1 \end{pmatrix}. \tag{18} \]

The elements in column \(j\) represent the degree to which \(m_{j}\) is supported by the other BPAs. Thus, the total supporting degree that \(m_{j}\) receives from all other BPAs can be defined as:

\[ Sup(m_{j})=\sum_{i=1,\,i\neq j}^{N} Sup(m_{i},m_{j}),\qquad j=1,2,\cdots ,N. \tag{19} \]

Generally, the larger the supporting degree a sensor receives, the more reliable the sensor; otherwise, the sensor is regarded as less reliable. More formally, as suggested by Yager [30], the reliability associated with a sensor is a function of the sensor’s compatibility with the others. Intuitively, the relative reliability of each sensor can be defined as the relative total supporting degree of the BPA it provides. So we have:

\[ R_{i}=\frac{Sup(m_{i})}{\sum_{j=1}^{N} Sup(m_{j})},\qquad i=1,2,\cdots ,N. \tag{20} \]

For \(N\) sensors, the sensor with the highest relative reliability is regarded as the primary sensor, and its dynamic reliability factor is set to 1. So the absolute dynamic reliability of sensor \(S_{i}\) (\(i = 1,2,\cdots ,N\)) can be obtained as:

\[ \hat{R}_{i}=\frac{R_{i}}{\max_{1\le j\le N} R_{j}}. \tag{21} \]

Combining (20) and (21), we get the absolute dynamic reliability of sensor \(S_{i}\) (\(i = 1,2,\cdots ,N\)) as follows:

\[ \hat{R}_{i}=\frac{Sup(m_{i})}{\max_{1\le j\le N} Sup(m_{j})}. \tag{22} \]

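
The computation of the dynamic reliabilities from a given SDM can be sketched as follows. The SDM entries are hypothetical, the column sums exclude the diagonal because only the support a BPA receives from the other BPAs is counted, and `dynamic_reliability` is an illustrative name.

```python
from typing import List, Sequence


def dynamic_reliability(sdm: Sequence[Sequence[float]]) -> List[float]:
    """sdm[i][j] = Sup(m_i, m_j). Returns the absolute dynamic reliabilities."""
    n = len(sdm)
    # Total support received by m_j: column j without its diagonal entry.
    totals = [sum(sdm[i][j] for i in range(n) if i != j) for j in range(n)]
    # Relative reliability: share of the overall support.
    s = sum(totals)
    relative = [t / s for t in totals]
    # Absolute reliability: the primary (most supported) sensor gets 1.
    top = max(relative)
    return [r / top for r in relative]


# Hypothetical SDM for three sensors (diagonal entries Sup(m_i, m_i) = 1).
sdm = [
    [1.0, 0.9, 0.3],
    [0.8, 1.0, 0.2],
    [0.5, 0.3, 1.0],
]
print(dynamic_reliability(sdm))
```

For this matrix the first sensor is the primary sensor with reliability 1, and the third sensor, which receives little support, gets roughly 0.38. Dividing the totals directly by their maximum gives the same values, since the normalizing sum cancels.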
3.1.1 A new method for data fusion

Once the dynamic reliability factors of all sensors are obtained, the question arises of how to incorporate them into the fusion process. Several approaches have been proposed for this purpose in the information fusion literature [18]. For example, the dynamic reliability factors can be substituted into the discounting rule, and the discounted belief functions can then be combined by Dempster’s combination rule. We adopt this approach and apply the dynamic reliability factors, together with the evidence discounting operation, to fuse uncertain information from multiple sensors. Given \(N\) sensors \(S_{1}\), \(S_{2},\cdots ,S_{N}\) with uncertain outputs, the procedure for combining uncertain information from all sensors is as follows.

-

Step 1. Uncertain data modeling with BPA.

In real applications, the information or data can take any form, so the first step of information processing in the framework of Dempster–Shafer evidence theory is to model the uncertain information with BPAs. The uncertain outputs of sensors \(S_{1}\), \(S_{2},\cdots ,S_{N}\) are expressed as BPAs \(m_{1}\), \(m_{2},\cdots ,m_{N}\).

-

Step 2. Calculate the supporting degree of BPA \(m_{k}\)

-

(i)

By (3) and (4), get the belief functions and plausibility functions of singleton subsets corresponding to each BPA \(m_{i}\), \(i = 1,2,\cdots ,N\).

-

(ii)

By (15), get IFSs corresponding to each BPA.

-

(iii)

According to Definition 3.4, get the intersection of \(A_{k}\) and \(A_{i}\), denoted by \(A_{k} \cap A_{i}\), \(i = 1,2,\cdots ,N\).

-

(iv)

Following (17), calculate the similarity degrees \(S_{E} (A_{i} ,A_{k} \cap A_{i} )\), \(i = 1,2,\cdots ,N\).

-

(v)

Finally, the degree to which \(m_{i}\) supports \(m_{k}\) is \(Sup(m_{i} ,m_{k} )=S_{E} (A_{i} ,A_{k} \cap A_{i} )\).

-

Step 3. Calculate the dynamic reliability of each sensor.

According to the supporting degree of each BPA from all other BPAs, we can construct the support degree matrix as (18). Then the dynamic reliability of each sensor can be obtained based on (19) and (22).

-

Step 4. Modify the original BPAs from all sensors.

Based on the evidence discounting operation shown in (10), with the dynamic reliability of each sensor used as its discounting factor \(\lambda\), we modify the original BPAs \(m_{1}\), \(m_{2},\cdots \), \(m_{N}\). The discounted BPAs are denoted by \({m_{1}^{R}} ,{m_{2}^{R}} ,\cdots ,{m_{N}^{R}} \).

-

Step 5. Data fusion by Dempster’s combination rule.

Combine the discounted BPAs \({m_{1}^{R}} ,{m_{2}^{R}} ,\cdots ,{m_{N}^{R}} \) by Dempster’s combination rule shown in (8).

For clarity, the five steps of this procedure for fusing uncertain data from multiple sensors are summarized in the flow chart in Fig. 1.
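
Step 2 can be sketched as follows to make the asymmetry of the supporting degree concrete. Equations (15) and (17) and Definition 3.4 are not reproduced in this section, so the sketch rests on stated assumptions. Each BPA is mapped to an IFS over the singletons with membership \(Bel(\{\theta \})\) and non-membership \(1-Pl(\{\theta \})\). The intersection is taken to be Atanassov's standard min/max intersection. \(S_{E}\) is replaced by one minus the normalized Euclidean distance between IFSs, including the hesitancy degree \(\pi = 1-\mu -\nu \). The function names (`to_ifs`, `intersect`, `similarity`, `support`) are hypothetical.

```python
import math

# Illustrative frame; BPAs are dicts mapping frozensets of labels to masses.
FRAME = ("t1", "t2", "t3")


def to_ifs(m, frame=FRAME):
    """Assumed BPA -> IFS map over singletons: mu = Bel({x}), nu = 1 - Pl({x})."""
    ifs = []
    for x in frame:
        bel = m.get(frozenset({x}), 0.0)            # Bel of a singleton is its own mass
        pl = sum(v for A, v in m.items() if x in A)  # Pl sums masses of sets containing x
        ifs.append((bel, 1.0 - pl))
    return ifs


def intersect(a, b):
    """Atanassov intersection: min of memberships, max of non-memberships."""
    return [(min(ma, mb), max(na, nb)) for (ma, na), (mb, nb) in zip(a, b)]


def similarity(a, b):
    """Stand-in for S_E: 1 - normalized Euclidean distance, hesitancy included."""
    total = 0.0
    for (ma, na), (mb, nb) in zip(a, b):
        pa, pb = 1.0 - ma - na, 1.0 - mb - nb
        total += (ma - mb) ** 2 + (na - nb) ** 2 + (pa - pb) ** 2
    return 1.0 - math.sqrt(total / (2 * len(a)))


def support(mi, mk):
    """Degree to which m_i supports m_k: S(A_i, A_k ∩ A_i); not symmetric in general."""
    a_i, a_k = to_ifs(mi), to_ifs(mk)
    return similarity(a_i, intersect(a_k, a_i))


# A committed BPA and the vacuous BPA support each other to different degrees.
m_a = {frozenset({"t1"}): 1.0}
m_b = {frozenset(FRAME): 1.0}
print(round(support(m_a, m_b), 4))  # 0.4226
print(round(support(m_b, m_a), 4))  # 0.1835
```

Under these assumptions \(Sup(m_{i},m_{k})\) equals 1 whenever the two BPAs coincide, and the two directions generally differ, which is the asymmetry the method exploits.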

4 Numerical examples and discussion

In this section, we apply the proposed dynamic reliability evaluation method and evidence combination rule to identification fusion to illustrate their performance.

First, a numerical example is presented to show the implementation of the sensor dynamic reliability evaluation method and its application in evidence combination.

Example 4.1

In a multi-sensor target recognition system, three sensors \(S_{1}\), \(S_{2}\), and \(S_{3}\) are employed to identify sea targets. The three possible target types are denoted as \({\theta }_{1}\), \({\theta }_{2}\), and \({\theta } _{3}\), so the discernment frame \({\Theta } \) can be written as \(\{{\theta }_{1} ,{\theta }_{2} ,{\theta }_{3} \}\). The sensor readings on the classes are expressed by the following BPAs:

The three intuitionistic fuzzy sets in \({\Theta }=\{{\theta }_{1} ,{\theta } _{2} ,{\theta }_{3}\}\) generated by these BPAs can be expressed as follows:

The supporting degree matrix (SDM) for three BPAs is:

Based on the intersection operation on IFS and the definition of similarity \(S_{E}\), we can get the SDM as:

Based on (19), the total supporting degree obtained by each BPA can be calculated:

Then we can get the relative reliability factor of each sensor by Eq. (20):

Finally, the absolute dynamic reliability of each sensor can be obtained according to (21):

Based on the dynamic reliability factor, the original BPAs can be modified by the discounting operation. The discounted BPAs are:

This example demonstrates that the proposed approach provides a new alternative for combining uncertain sources of evidence with different reliabilities without a priori knowledge of the sources. In this example, the information \(m_{2}\) provided by sensor \(S_{2}\) is quite different from the others. The proposed method is well suited to fusing highly conflicting sources of information for decision-making support: thanks to the new supporting degree measure, sources that conflict strongly with the majority of the other sources are automatically assigned a low reliability factor, which reduces their adverse influence on the fusion process.
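
How a conflicting source ends up with a low reliability factor can be illustrated with a small sketch of Step 3. Equations (19) to (21) are not reproduced in this section, so the sketch uses a hypothetical stand-in. The total support of \(m_{k}\) is the sum of \(Sup(m_{i},m_{k})\) over \(i\ne k\), i.e. column \(k\) of the SDM without its diagonal. The relative reliability is that total divided by the largest total, which keeps reliability monotonically increasing in the support received. The matrix values and function names are invented for illustration and are not those of Example 4.1.

```python
def total_support(sdm):
    """Support each BPA receives from the others.

    sdm[i][k] holds Sup(m_i, m_k), the degree to which m_i supports m_k,
    so the total for m_k is column k without the diagonal entry.
    """
    n = len(sdm)
    return [sum(sdm[i][k] for i in range(n) if i != k) for k in range(n)]


def relative_reliability(sdm):
    """Hypothetical normalization: divide each total by the largest one."""
    totals = total_support(sdm)
    best = max(totals)
    return [t / best for t in totals] if best > 0 else [1.0] * len(totals)


# Hypothetical SDM in which the second source disagrees with the other two.
sdm = [
    [1.00, 0.35, 0.90],
    [0.40, 1.00, 0.30],
    [0.85, 0.30, 1.00],
]
print([round(r, 3) for r in relative_reliability(sdm)])  # [1.0, 0.52, 0.96]
```

The second source receives little support from the other two, so it gets the smallest factor; no prior knowledge about the sensors is needed.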

Next, an illustrative example adopted from [15] is presented to compare the performance of our new approach with that of other methods.

Example 4.2

In a multi-sensor information fusion system, a set of five sensors (\(S_{1}\), \(S_{2}\), \(S_{3}\), \(S_{4}\), \(S_{5}\)) is applied for sea target identification. They provide five normalized BPAs with imprecise focal elements over the frame of discernment \({\Theta } =\{{\theta }_{1}, {\theta }_{2} ,{\theta }_{3} \}\), as given in Table 1.

Table 1 shows that the BPAs \(m_{1}\), \(m_{2}\), \(m_{4}\), and \(m_{5}\) assign most of their belief to \({\theta } _{1}\), whereas \(m_{3}\) commits its largest mass of belief to \({\theta }_{2}\). Therefore, based on the aforementioned underlying principle, \(m_{3}\) is regarded as the least reliable or least important source, and it can be considered a noisy source (outlier).

Using our proposed dynamic reliability evaluation method based on the supporting degree, we obtain the absolute dynamic reliability factors of the five sensors as follows.

The dynamic reliability degree of \(S_{3}\) is the lowest among the five sensors. This coincides with our intuitive analysis that the information provided by \(S_{3}\) may come from a noisy source.

Based on evidence discounting operation and Dempster’s combination rule, we can get the fusion results as:

The fusion result shows that the target is identified as \({\theta }_{1} \). Since the dynamic reliability of \(S_{3}\) is very low, the influence of its readings on the final result is very limited.

Table 2 shows the fusion results obtained with the different methods. From Table 2, we see that the classical Dempster’s rule (without discounting) concludes that hypothesis \({\theta }_{1}\) is very unlikely whereas \({\theta }_{2}\) is almost certain. Such a result is unreasonable, since the majority of the sources assign most of their belief to \({\theta }_{1}\) and only one source assigns its largest mass of belief to \({\theta }_{2}\). This unexpected behavior shows that Dempster’s rule is risky to use for combining sources of evidence in highly conflicting situations.
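
The veto effect behind this behavior is easy to reproduce. In the minimal sketch below, which uses hypothetical BPAs rather than the values of Table 1, four sources favor \({\theta }_{1}\) and one source gives \({\theta }_{1}\) zero mass. Because Dempster's rule multiplies masses and all focal elements here are singletons, that single zero removes \({\theta }_{1}\) from the result. The labels `t1`, `t2`, `t3` and the names `majority` and `outlier` are illustrative.

```python
from functools import reduce
from itertools import product


def dempster(m1, m2):
    """Dempster's rule for BPAs stored as {frozenset: mass}."""
    combined, conflict = {}, 0.0
    for (A, a), (B, b) in product(m1.items(), m2.items()):
        C = A & B
        if C:
            combined[C] = combined.get(C, 0.0) + a * b
        else:
            conflict += a * b  # mass that would fall on the empty set
    if conflict >= 1.0 - 1e-12:
        raise ValueError("total conflict: Dempster's rule is undefined")
    return {C: v / (1.0 - conflict) for C, v in combined.items()}


T1, T2, T3 = frozenset({"t1"}), frozenset({"t2"}), frozenset({"t3"})
majority = {T1: 0.8, T2: 0.1, T3: 0.1}  # hypothetical source favoring t1
outlier = {T2: 0.9, T3: 0.1}            # hypothetical source giving t1 no mass

# Combine the five sources in sequence without any discounting.
fused = reduce(dempster, [majority, majority, outlier, majority, majority])
print({min(A): round(v, 3) for A, v in fused.items()})  # {'t2': 0.9, 't3': 0.1}
```

Although four of the five sources give \({\theta }_{2}\) only 0.1, the undiscounted combination ends up almost certain of it, which mirrors the counterintuitive outcome reported for the classical rule.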

Once the reliability factors are applied as discounting factors, \(m_{3}\) is strongly discounted because it is the most dissimilar to the other sources. When the evidential distance \(d_{J}\) and the dissimilarity measure DismP are used to generate the reliability factors, sensor \(S_{3}\) is assigned a very low reliability degree, so the information it provides has little influence on the final fusion result. This can cause considerable information loss. In fact, if only the three sensors \(S_{1}\), \(S_{2}\), and \(S_{3}\) are considered, we cannot conclude that \(m_{3}\) is an outlier, so discounting \(m_{3}\) so heavily may be an arbitrary choice; more information is needed to determine the reliability factor of \(S_{3}\). In this sense, the methods proposed in [7] and [15] may be risky in a sequential fusion process.

The final result of our proposed method indicates that \({\theta } _{1}\) has a higher mass of belief than \({\theta }_{2}\) (as expected) after the fusion of the five sources, even though \({\theta }_{2}\) receives a larger mass than \({\theta }_{1}\) at some intermediate steps of the sequential fusion process. The mass assigned to \({\theta }_{2}\) increases when \(S_{3}\) participates in the fusion, but \(m(\{{\theta }_{2}\})\) decreases gradually as \(S_{4}\) and \(S_{5}\) are added. When the five sensors are considered sequentially, the fusion result at each step is therefore more reasonable and cautious. This behavior follows from the nature of our proposed method: the new supporting degree is defined based on a distance measure between IFSs, which yields smaller values than the evidential distance \(d_{J}\) and the dissimilarity measure DismP. Consequently, the dynamic reliability factors assigned to unreliable sensors are greater than those generated by \(d_{J}\) and DismP. The new method can therefore provide interesting results and valuable help for temporal information fusion.
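
The effect of a moderate, rather than severe, discount on sequential fusion can be illustrated with hypothetical BPAs in which four sources favor \({\theta }_{1}\) and one outlier favors \({\theta }_{2}\). In the sketch below, the outlier is discounted with Shafer's rule using an invented reliability factor of 0.3 before it enters the sequence. Neither the BPAs nor the factor come from Table 1 or from our method; the names `stream` and `trajectory` are illustrative. The printed trajectory tracks \(m(\{{\theta }_{2}\})\) after each source is added.

```python
from itertools import product

THETA = frozenset({"t1", "t2", "t3"})
T1, T2, T3 = frozenset({"t1"}), frozenset({"t2"}), frozenset({"t3"})


def discount(m, alpha, frame=THETA):
    """Shafer discounting with reliability factor alpha."""
    out = {A: alpha * v for A, v in m.items() if A != frame}
    out[frame] = alpha * m.get(frame, 0.0) + (1.0 - alpha)
    return out


def dempster(m1, m2):
    """Dempster's rule: conjunctive combination normalized by 1 - K."""
    combined, conflict = {}, 0.0
    for (A, a), (B, b) in product(m1.items(), m2.items()):
        C = A & B
        if C:
            combined[C] = combined.get(C, 0.0) + a * b
        else:
            conflict += a * b
    if conflict >= 1.0 - 1e-12:
        raise ValueError("total conflict: Dempster's rule is undefined")
    return {C: v / (1.0 - conflict) for C, v in combined.items()}


majority = {T1: 0.8, T2: 0.1, T3: 0.1}  # hypothetical
outlier = {T2: 0.9, T3: 0.1}            # hypothetical
# (BPA, reliability factor) pairs in arrival order; 0.3 is an invented value.
stream = [(majority, 1.0), (majority, 1.0), (outlier, 0.3),
          (majority, 1.0), (majority, 1.0)]

fused = discount(*stream[0])
trajectory = [fused.get(T2, 0.0)]
for m, alpha in stream[1:]:
    fused = dempster(fused, discount(m, alpha))
    trajectory.append(fused.get(T2, 0.0))

print([round(x, 4) for x in trajectory])  # [0.1, 0.0152, 0.0209, 0.0027, 0.0003]
print(round(fused[T1], 4))
```

The mass on \({\theta }_{2}\) rises when the discounted outlier arrives and falls again as further sources are combined. The outlier is weakened but not silenced, which is the cautious behavior described above.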

5 Application in target recognition

In this section, we apply our proposed method for data fusion in target recognition to further show its rationality. Target recognition based on information fusion has become a successful application of evidence theory, receiving considerable attention in both military and civilian areas [16, 17, 26]. In target recognition, due to the limitations of sensors and interference from the environment, the information derived from different sensors is usually imperfect. Hence, the target cannot be reliably identified by a single sensor, and it is necessary to fuse information coming from multiple sensors to achieve better results. Due to its flexibility in combination and its ease of use by decision makers, evidence theory has been widely applied in target recognition based on multi-sensor data fusion.

5.1 Problem description

Suppose that an unknown aerial target is detected by a radar. The three possible target types, Airplane, Helicopter, and Rocket, are denoted as \(A\), \(H\), and \(R\), respectively. So in the framework of evidence theory, the discernment frame \({\Theta } \) can be written as \(\{A, H, R\}\). To identify the class of this target, three sensors \(S_{1}\), \(S_{2}\), and \(S_{3}\) are applied to track and recognize it continuously. These three sensors output identification information at three time nodes \(t_{1}\), \(t_{2}\), and \(t_{3}\). The sensor reports at each time node, modelled as BPAs, are presented in Table 3.

5.2 Data fusion based on the proposed method

Based on the proposed asymmetric supporting degree, we obtain the supporting degree matrix at each time node as follows:

Then the dynamic reliability of all sensors at each time node can be calculated according to (19) and Section 3.1.1. The dynamic reliability of each sensor is obtained as:

By modifying the original BPAs with the evidence discounting operation, we obtain the modified BPAs listed in Table 4.

At each time node, we fuse the information provided by all sensors using Dempster’s combination rule. The fusion results at the three time nodes are listed in Table 5.
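
The per-time-node computation can be tied together in one sketch that runs Steps 2 to 5 for a single time node. It rests on assumptions that should be checked against the equations of Section 3, which are not reproduced here:

- the BPA-to-IFS map uses \(\mu = Bel(\{x\})\) and \(\nu = 1-Pl(\{x\})\);
- the IFS intersection is Atanassov's min/max intersection;
- \(S_{E}\) is one minus the normalized Euclidean distance between IFSs;
- reliability is the received support divided by the largest received support, a hypothetical stand-in for (19) to (21);
- discounting is Shafer's rule.

The sensor reports are invented for illustration and are not the values of Table 3. All function names are hypothetical.

```python
import math
from functools import reduce
from itertools import product

FRAME = ("A", "H", "R")  # Airplane, Helicopter, Rocket
THETA = frozenset(FRAME)
AIRPLANE, HELICOPTER, ROCKET = (frozenset({x}) for x in FRAME)


def to_ifs(m):
    """Assumed BPA -> IFS map: mu = Bel({x}), nu = 1 - Pl({x}) for each x in FRAME."""
    return [(m.get(frozenset({x}), 0.0),
             1.0 - sum(v for S, v in m.items() if x in S)) for x in FRAME]


def support(mi, mk):
    """Sup(m_i, m_k): similarity of A_i to A_k ∩ A_i (min/max intersection),
    with similarity assumed to be 1 - normalized Euclidean IFS distance."""
    a_i, a_k = to_ifs(mi), to_ifs(mk)
    inter = [(min(mu_k, mu_i), max(nu_k, nu_i))
             for (mu_k, nu_k), (mu_i, nu_i) in zip(a_k, a_i)]
    d2 = 0.0
    for (ma, na), (mb, nb) in zip(a_i, inter):
        d2 += (ma - mb) ** 2 + (na - nb) ** 2 + ((1 - ma - na) - (1 - mb - nb)) ** 2
    return 1.0 - math.sqrt(d2 / (2 * len(FRAME)))


def reliabilities(bpas):
    """Hypothetical stand-in for (19)-(21): received support over the maximum."""
    n = len(bpas)
    totals = [sum(support(bpas[i], bpas[k]) for i in range(n) if i != k)
              for k in range(n)]
    best = max(totals)
    return [t / best for t in totals] if best > 0 else [1.0] * n


def discount(m, alpha):
    """Shafer discounting: scale masses by alpha, move 1 - alpha to THETA."""
    out = {S: alpha * v for S, v in m.items() if S != THETA}
    out[THETA] = alpha * m.get(THETA, 0.0) + (1.0 - alpha)
    return out


def dempster(m1, m2):
    """Dempster's rule: conjunctive combination normalized by 1 - K."""
    combined, conflict = {}, 0.0
    for (S1, a), (S2, b) in product(m1.items(), m2.items()):
        S = S1 & S2
        if S:
            combined[S] = combined.get(S, 0.0) + a * b
        else:
            conflict += a * b
    if conflict >= 1.0 - 1e-12:
        raise ValueError("total conflict: Dempster's rule is undefined")
    return {S: v / (1.0 - conflict) for S, v in combined.items()}


def fuse_time_node(bpas):
    """Steps 3-5 for one time node: reliabilities, discounting, combination."""
    rel = reliabilities(bpas)
    return rel, reduce(dempster, [discount(m, r) for m, r in zip(bpas, rel)])


# Hypothetical reports from S1, S2, S3 at one time node (not Table 3 values).
reports = [
    {HELICOPTER: 0.6, AIRPLANE: 0.2, THETA: 0.2},
    {HELICOPTER: 0.5, ROCKET: 0.3, THETA: 0.2},
    {AIRPLANE: 0.6, HELICOPTER: 0.1, THETA: 0.3},
]
rel, fused = fuse_time_node(reports)
print([round(r, 3) for r in rel])  # [1.0, 0.959, 0.949]
print({"".join(sorted(S)): round(v, 3) for S, v in fused.items()})
```

Repeating `fuse_time_node` on the reports of each time node reproduces the structure of the procedure in this subsection. The sensor whose report disagrees most with the others, here \(S_{3}\), receives the smallest factor.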

5.3 Discussions

From the fusion results presented in Table 5, the combination of the three sensors’ reports at all time nodes indicates that the unknown aerial target is a Helicopter. The results also show that, from time node \(t_{1}\) to \(t_{3}\), the basic probability assigned to \(\{H\}\) in the final result increases. This indicates that, in the process of continuous recognition, the reliability of the decision increases as the latest information is collected, which coincides with intuitive analysis of target recognition.

For comparison, Table 6 shows the results obtained by the methods developed in [11, 25]. The fusion results obtained by our proposed method are consistent with those of the other methods. Moreover, our proposed method assigns more support to \(\{H\}\) in the fusion results at all time nodes.

These comparative results demonstrate the feasibility and validity of our proposed method for data fusion. Since the dynamic reliability of each sensor is evaluated based on the developed asymmetric supporting degree between BPAs, more uncertain information can be retained in the fusion. Thus, the uncertain information from the sensors’ reports can be well preprocessed based on the dynamic reliability and the evidence discounting operation. These features of the proposed data fusion method lead to a reasonable and reliable final decision.

6 Conclusions

In this paper, a new dynamic reliability evaluation method for sensors is proposed based on a supporting degree measure between BPAs in evidence theory. The supporting degree measure is defined based on the relationship between belief functions and intuitionistic fuzzy sets. The proposed asymmetric supporting degree is related to the similarity degree between the IFSs of the original BPAs and their intersections. The dynamic reliability of a sensor is monotonically increasing in the supporting degree its readings obtain from the other sensors’ readings. The dynamic reliability factors are then applied to evidence combination based on the evidence discounting operation and Dempster’s rule. We have shown through simple examples that the proposed dynamic reliability evaluation method can assign a reasonable reliability factor to sensors that provide conflicting information. Moreover, numerical examples demonstrate that the combination rule based on dynamic reliability can reduce the influence of conflicting information on the final fusion result. Owing to the definition of the supporting degree and the intuitionistic fuzzy similarity measure, the proposed combination rule is more cautious when dealing with conflicting information. More reasonable and effective definitions of supporting degree in evidence theory are left for future investigation.

References

Atanassov KT (1986) Intuitionistic fuzzy sets. Fuzzy Sets Syst 20(1):87–96

Atanassov KT (2012) On intuitionistic fuzzy sets theory. Springer-Verlag, Berlin

Atanassov KT, Gargov G (1989) Interval-valued intuitionistic fuzzy sets. Fuzzy Sets Syst 31(3):343–349

Baccour L, Alimi AM, John RI (2013) Similarity measures for intuitionistic fuzzy sets: State of the art. J Intell Fuzzy Syst 24(1):37–49

Bustince H, Burillo P (1996) Vague sets are intuitionistic fuzzy sets. Fuzzy Sets Syst 79(3):403–405

Dempster AP (1967) Upper and lower probabilities induced by a multiple valued mapping. Ann Math Stat 38:325–339

Deng Y, Shi W, Zhu Z, Liu Q (2004) Combining belief functions based on distance of evidence. Decis Support Syst 38:489–493

Elouedi Z, Mellouli K, Smets P (2004) Assessing sensor reliability for multisensor data fusion within the transferable belief model. IEEE Trans Syst Man Cybern B Cybern 34(4):782–787

Florea MC, Jousselme A-L, Bosse E (2009) Robust combination rules for evidence theory. Inf Fusion 10(2):183–197

Guo H, Shi W, Deng Y (2006) Evaluating sensor reliability in classification problems based on evidence theory. IEEE Trans Syst Man Cybern B Cybern 36(5):970–981

Jiang W, Xie C, Zhuang M, Shou Y, Tang Y (2016) Sensor data fusion with z-numbers and its application in fault diagnosis. Sensors 16:1509

Jousselme A-L, Grenier D, Bosse E (2001) A new distance between two bodies of evidence. Inf Fusion 2(2):91–101

Klein J, Colot O (2010) Automatic discounting rate computation using a dissent criterion. In: Proceedings of the Workshop on the Theory of Belief Functions, Brest, France, pp 1–6

Li G, Zhou Z, Hu C, Chang L, Zhou Z, Zhao F (2017) A new safety assessment model for complex system based on the conditional generalized minimum variance and the belief rule base. Saf Sci 93:108–120

Liu Z, Dezert J, Pan Q, Mercier G (2011) Combination of sources of evidence with different discounting factors based on a new dissimilarity measure. Decis Support Syst 52:133–141

Liu Z, Pan Q, Dezert J, Han J, He Y (2017) Classifier fusion with contextual reliability evaluation. IEEE Trans Cybern. https://doi.org/10.1109/TCYB.2017.2710205

Liu Z, Pan Q, Dezert J, Martin A Combination of classifiers with optimal weight based on evidential reasoning. IEEE Trans Fuzzy Syst. https://doi.org/10.1109/TFUZZ.2017.2718483

Rogova G, Nimier V (2004) Reliability in information fusion: Literature survey. In: Proc. 7th Int. Conf. Inf. Fusion, Stockholm, Sweden, pp 1158–1165

Schubert J (2011) Conflict management in Dempster–Shafer theory using the degree of falsity. Int J Approx Reason 52(3):449–460

Shafer G (1976) A Mathematical Theory of Evidence. Princeton University Press, Princeton

Smets P (2000) Data fusion in the transferable belief model. In: Proceedings of the 3rd International Conference on Information Fusion, Paris, France, pp PS21–PS33

Song Y, Wang X, Lei L, Xue A (2014) Combination of interval-valued belief structures based on intuitionistic fuzzy set. Knowl-Based Syst 67:61–70

Song Y, Wang X, Quan W, Huang W (2017) A new approach to construct similarity measure for intuitionistic fuzzy sets. Soft Comput. https://doi.org/10.1007/s00500-017-2912-0

Song Y, Wang X, Lei L, Quan W, Huang W (2016) An evidential view of similarity measure for Atanassov’s intuitionistic fuzzy sets. J Intell Fuzzy Syst 31:1653–1668

Tang Y, Zhou D, Xu S, He Z (2017) A weighted belief entropy-based uncertainty measure for multi-sensor data fusion. Sensors 17:928

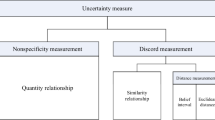

Wang X, Song Y (2017) Uncertainty measure in evidence theory with its applications. Appl Intell. https://doi.org/10.1007/s10489-017-1024-y

Xu X, Li S, Song X, Wen C, Xu D (2016) The optimal design of industrial alarm systems based on evidence theory. Control Eng Pract 46:142–156

Xu X, Zhang Z, Xu D, Chen Y (2016) Interval-valued evidence updating with reliability and sensitivity analysis for fault diagnosis. Int J Comput Intell Syst 9(3):396–415

Xu X, Zheng J, Yang J, Xu D, Chen Y (2017) Data classification using evidence reasoning rule. Knowl-Based Syst 116:144–151

Yager RR (1992) On considerations of credibility of evidence. Int J Approx Reason 7(1):45–72

Yang Y, Han D, Han C (2013) Discounted combination of unreliable evidence using degree of disagreement. Int J Approx Reason 54:1197–1216

Zadeh LA (1965) Fuzzy sets. Inf Control 8:338–353

Zadeh LA (1986) A simple view of the Dempster–Shafer theory of evidence and its implication for the rule of combination. AI Magazine 2:85–90

Zhao F, Zhou Z, Hu C, Chang L, Zhou Z, Li G (2016) A new evidential reasoning-based method for online safety assessment of complex systems. IEEE Trans Syst Man Cybern Syst. https://doi.org/10.1109/TSMC.2016.2630800

Zhou Z, Chang L, Hu C, Han X, Zhou Z (2016) A new BRB-ER-based model for assessing the lives of products using both failure data and expert knowledge. IEEE Trans Syst Man Cybern Syst 46(11):1529–1543

Zhou Z, Hu G, Zhang B, Hu C, Zhou Z, Qiao P (2017) A model for hidden behavior prediction of complex systems based on belief rule base and power set. IEEE Trans Syst Man Cybern Syst. https://doi.org/10.1109/TSMC.2017.2665880

Acknowledgments

This work was supported by the National Natural Science Foundation of China (Nos. 61703426, 61273275, 61573375, 61503407 and 60975026).


Cite this article

Song, Y., Wang, X., Zhu, J. et al. Sensor dynamic reliability evaluation based on evidence theory and intuitionistic fuzzy sets. Appl Intell 48, 3950–3962 (2018). https://doi.org/10.1007/s10489-018-1188-0


DOI: https://doi.org/10.1007/s10489-018-1188-0