Abstract

Dempster-Shafer (D-S) evidence theory has extensive applications in the field of data fusion. It uses the mass function to replace the probability distribution in Bayesian Probability theory, which has the advantages of weak constraints and representing uncertainty. However, many existing uncertainty measures can not be well applied to the mass function, leading to many defects in some scenarios. For example, many methods can not fully reflect the relationship between focal elements. Therefore, how to improve the uncertainty measurement, and make full use of the advantages of the mass function, so as to further accurately express the system state, is still a hot topic worth studying. To solve this problem, we propose a Belief Interval Euclidean Distance (BIED) entropy of the mass function. This method combines nonspecificity and discord measurement of the mass function and is suitable for various situations. In this paper, we introduce a large number of numerical examples to demonstrate the practicality of the BIED entropy. Compared with existing advanced methods, the BIED entropy can better identify the quantity relationship and similarity relationship between focal elements, and exhibit a linear trend with the changing cardinality of focal elements. Finally, we implement a BIED entropy-based multi-sensor data fusion on various kinds of datasets. The experimental results indicate that BIED entropy has relatively high robustness, with the target recognition accuracy close to 79.33% and the F1-Score close to 77.29%.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

The sources of information in the real world are always complex and uncertain. In the scientific research of multi-sensor data fusion, the uncertainty of information always has an impact on our decision-making. To address this issue, many theories related to uncertainty measurement have been proposed, such as Bayesian theory [1], rough set theory [2], fuzzy set theory [3], and D-S evidence theory [4,5,6]. These methods can effectively avoid the adverse effects of information redundancy, conflict, and fuzziness on decision-making.

Among these methods, D-S evidence theory is derived from Bayesian theory, which is simple and feasible, and does not need to give a prior probability, so it has a wider range of applications in uncertainty measure. Due to its commutativity and associativity, it can reduce computational complexity when faced with large-scale data fusion. The mass function defined in this theory represents the relationship between focal elements, which can better describe the uncertainty than probability theory and other methods. It plays a significant role in multi-sensor data fusion [7, 8], pattern recognition [9, 10], group decision-making [11, 12], etc. However, when the mass functions are highly conflicting, using Dempster’s combination rule to fuse mass functions may result in abnormal results [13]. Therefore, the management of the conflicting mass function remains an unresolved issue.

The concept of information entropy was first introduced by Shannon, also known as Shannon entropy, which uses physical disorder to describe the complexity of information systems [14]. However, it is confined to the probability distribution and has a narrow applicability. Then how to improve information entropy and better apply it to D-S evidence theory has been a highly valued issue in recent years. The study of entropy in information field can be divided into two aspects: nonspecificity measurement and discord measurement. In the early studies, scholars studied the relationship between focal elements to improve Shannon entropy, but mostly only considered one aspect separately [15]. Deng proposed the belief entropy, also called Deng entropy, which combines nonspecificity and discord measurement, and emphasized the importance of nonspecificity measurement in the mass function [16]. In contrast to the probability distribution, the cardinality of focal elements in the mass function may be greater than 1, so Deng’s uncertainty measure considered the quantity relationship between focal elements. However, Deng entropy did not reflect the similarity relationship between focal elements. In subsequent studies, people used conflict coefficients [17], the negation of evidence [18], and belief intervals [19, 20] to further determine the intersection between focal elements. In general, how to combine nonspecificity measurement and discord measurement to identify the quantity and similarity relationships between focal elements, making the entropy more applicable to D-S evidence theory, is an important content of this study.

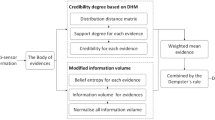

The belief interval represents the gap between the lower and upper bounds support of subsets in the mass function, and is an important measure of uncertainty that has been studied by many scholars [21]. Inspired by Deng’s distance-based total uncertainty measure [22], in this paper, we combine belief interval and Euclidean distance to measure the uncertainty. Due to the insufficient application of this uncertainty measure in mass functions and the lack of related research, we improve nonspecificity and discord measurement in the entropy and take into account the quantity and similarity relationships between focal elements. Then we propose a Belief Interval Euclidean Distance (BIED) entropy and a multi-sensor data fusion method based on it. The specific content can be seen in Fig. 1. In this paper, we evaluated BIED entropy based on Klir and Wierman’s five axioms [23]. Moreover, to verify the superior performance of BIED entropy, we mainly use belief entropy [16], Deng’s distance-based \(iTU^I\) method [22], Cui’s improved belief entropy method [24], Chen’s Renyi entropy-based method [25] and Gao’s Tsallis entropy-based method [26] to compare with it. According to a large number of numerical examples and experiments, we have ultimately demonstrated the robustness of BIED entropy. The main contributions of this paper can be summarized as follows

-

(1)

We further implement uncertainty measurement in the mass function by combining belief interval and Euclidean distance.

-

(2)

We propose BIED entropy, which combines nonspecificity and discord measurement to measure uncertainty.

-

(3)

We improve the applicability of entropy in mass functions from two aspects of focal elements: quantity and similarity relationships.

-

(4)

We propose a BIED entropy-based multi-sensor data fusion method. A large number of numerical examples show that BIED entropy has advantages in identifying the quantity relationship and similarity relationship between focal elements, and exhibits a linear growth trend, making it suitable for various scenarios.

The other sections of this paper can be summarized as follows. In Section 2, we introduced relevant literature and works. In Section 3, we reviewed the existing methods related to this paper. In Section 4, we proposed the BIED entropy, discussed its properties, and demonstrated its superiority through a large number of numerical examples. In Section 5, we proposed the BIED entropy-based multi-sensor data fusion method and conducted experiments to demonstrate its practicality. Finally, in Section 6, we summarize our work.

2 Literature review

Scholars have proposed many theories for uncertainty measurement. Firstly, various optimization algorithms related to machine learning are used to measure uncertainty, such as discrete particle swarm optimization algorithms [27], failure mode and effects analysis [28], Bayesian networks [29, 30], etc. However, these uncertainty measures based on machine learning algorithms cannot adapt to all scenarios, and the modification and calculation of them are relatively complex and difficult. Secondly, many physical uncertainty measures, such as entropy, have been applied to information systems. Shannon first introduced the concept of entropy into the information field, called information entropy, which became the baseline method [14]. The physical concept of entropy is easy to understand, and has strong flexibility, making it well combined with the existing uncertainty measures. In this paper, we use the entropy to deal with information decision and target detection.

In recent years, D-S theory has become popular in uncertainty measurement because of its advantages of simplicity and unconstrained by prior probability. The main applications of this theory include Dempster’s combination rule, which uses the fusion of mass functions to make decisions in the case of multiple sensors. Because the commutativity and associativity of D-S theory can reduce the computational complexity, its effective combination with information entropy can be helpful to uncertainty measurement. However, the definition of Shannon entropy is limited to the probability distribution and cannot measure the uncertainty of the mass function. Many scholars have made improvements to it. Yager [31] introduced the plausibility function to describe inconsistency, which preliminarily improved the application of Shannon entropy. Dubois and Prade [32] proposed Hartley entropy to deal with multiple sets in the mass function. Subsequently, the interrelationships between focal elements were also studied to improve information entropy, such as Klir and Ramer’s method [33], Jousselme’s method [34], etc. However, the above methods can not fully reflect the quantity relationship and similarity relationship between focal elements, and they only use simple linear functions to represent the cardinality of focal elements.

To improve the previous methods, Deng proposed the belief entropy [16] and pointed out that the uncertainty measurement of the mass function should comprehensively consider total nonspecificity and discord measurement. Total nonspecificity, also known as fuzziness, is due to the particularity of the mass function. When multiple sets are included, the uncertainty measure is related to the exponential function of the cardinality of focal elements. Discord is also known as conflict or randomness, which continues the idea of entropy in physics and measures the complexity of the state of evidence. Therefore, many subsequent studies take the belief entropy as a new baseline method and focus on the above two main aspects [17].

Although belief entropy is effective in uncertainty measure, it does not consider the correlation within the mass function, namely, the similarity relationship between focal elements. Cui [24] made improvements based on it, but the similarity evaluation of this method is related to the cardinality of frame of discernment. Therefore, when the frame of discernment is large, its recognition of the similarity relationship between focal elements will be weak. Recently, various types of entropy, including Renyi entropy-based method [25, 35], Tsallis entropy-based method with exponential variables [26] and other types of entropy [36,37,38], have been proposed to make uncertainty measurement more flexible. However, these methods also exposed disadvantages in some cases. For example, the exponential changing trend of the Tsallis entropy-based method caused the problem of exponential type growth for uncertainty measurement. In the case of a large cardinality of focal elements, a small increase in the cardinality of focal elements will significantly accelerate the increase rate of uncertainty. Therefore, this method is not only inconsistent with the actual situation, but also not suitable for storage and statistics under a large number of data. The exploration of uncertainty measures suitable for D-S evidence theory is still an important work. In addition, the research process described in this section is provided in Fig. 2.

In general, the new uncertainty measure will be able to combine various factors, strengthen its adaptability to the mass function, and be well applied to a wider range of system measurement. We find that the combination of belief interval and Euclidean distance proposed by Deng [22] is a good indicator of the differences between focal elements but could not explain the application of belief interval well. In our method, we introduce this quantitative indicator and better combine it with the quantity relationship and similarity relationship of focal elements, to represent nonspecificity and discord measurement more comprehensively.

3 Preliminaries

In this section, we will review several classic uncertainty measures.

3.1 Dempster-Shafer evidence theory

Dempster-Shafer evidence theory is also called D-S evidence theory, and several important definitions are as follows.

Definition 1: Frame of discernment Assuming X is a set containing \(\left| X \right| \) assumptions that are independent and mutually exclusive. Then X is called a frame of discernment, which is defined as follows [4, 5]

The power set of the frame of discernment is defined as

Definition 2: The mass function D-S evidence theory uses the mass function m to replace the probability distribution of Bayesian Probability theory, which is defined as [4, 5]

For any subset \(A \in 2^X\), the following condition is satisfied

If \(A \in 2^X\) and \({m\left( A \right) }\ne 0\), then A is called the focal element of m.

Definition 3: Dempster’s combination rule Assuming there are two mass functions \(m_1\) and \(m_2\). In addition, A, B, and C are all focal elements, and Dempster’s combination rule is defined as follows [4, 5]

where \(K\in \left[ {0, 1}\right) \) is the conflict coefficient between two mass functions, defined as

Definition 4: Belief function and plausibility function For any subset \(A \in 2^X\), its belief function \(Bel\left( A\right) \) represents the overall belief in A. The definition is as follows [4, 5]

In addition, its plausibility function \(Pl\left( A\right) \) for the subset A represents the max belief in A. The definition is as follows

The relationship between them is as follows

To be noticed, \(Bel\left( A \right) \) and \(Pl\left( A\right) \) are the upper and lower limit functions of subset A respectively. \(\left[ {Bel\left( A \right) ,Pl\left( A\right) }\right] \) is denoted as the belief interval of subset A.

3.2 Shannon entropy

This entropy can be used to measure the uncertainty of information.

Definition 5: Shannon entropy Assuming \(H\!=\!(p_1,p_2,\ldots , \) \(p_n)\) is a probability distribution containing n events, and the probability of event i occurring is \(p_i\). According to the knowledge of probability theory, we know that \(\forall p_i \in H\left| {p_i \ge 0} \right. \). The sum of all probabilities should be 1, represented as follows

Then, Shannon entropy is defined as [14]

3.3 Previous uncertainty measures

Since Shannon entropy is only defined in the probability distribution, it is not applicable to the uncertainty measurement of mass functions. In these years, many scholars have been committed to improving Shannon entropy. In the following equations, X is the frame of discernment, A and B are two different focal elements, and \(\left| A \right| \) is the cardinality of focal element A.

Deng [16] proposed the belief entropy, also called Deng entropy, which well improved the nonspecificity in the mass function as follows:

where \(\sum \limits _{A \subseteq X } {m\left( A \right) \log \left( {2^{\left| A \right| } - 1} \right) } \) represents the nonspecificity of the mass function and \(\sum \limits _{A \subseteq X } {m\left( A \right) \log m\left( A \right) }\) represents the conflict and randomness of the mass function, also known as discord. To be noticed, when \(\left| A \right| =1\), this belief entropy degenerates into Shannon entropy. This combination of non-specificity and discord allows for a more comprehensive management of mass functions. However, according to (12), belief entropy lacks the similarity relationship between focal elements and needs improvement.

To reflect the similar relationship between focal elements, Cui’s improved belief entropy (IBE) [24] is defined as follows:

As can be seen from (13), Cui’s method introduces the intersection between focal elements and the cardinality of focal elements, and can identify quantity relationship and similarity relationship. However, the equation contains the cardinality of frame of discernment \(\left| X \right| \), which is not stable for the recognition of similarity relationship.

There are also some improved methods based on other related uncertainty theories. For example, Chen’s Renyi entropy-based method (R) [25] is proposed as follows:

where n represents all the cardinalities of focal elements involved in the calculation.

Analyzing (14), because this method is rewritten in Renyi entropy, the relationship between exponential coefficient \(2^n - 1\) and linear coefficient n is not completely processed, which leads to instability in the quantity relationship. The similar relationship between focal elements cannot be reflected in this method.

Moreover, Gao’s Tsallis entropy-based method (T) [26] is given as follows:

The exponential coefficient \(2^{\left| A \right| } - 1\) in (15) can reflect the quantity relationship, but it leads to the exponential change trend when the cardinality of focal elements increases, which is not conducive to data analysis. It also fails to show similarity relationship.

3.4 Evidence distance

Deng [22] proposed \(iTU^I\) method to handle the uncertainty of the mass function by calculating the distance between belief intervals.

Definition 7: The \(iTU^I\) measurement Suppose X is the frame of discernment, and m is a mass function. Then the overall uncertainty measurement of m on X is represented by \(iTU^I\), which is defined as

where \(d_E^I\) represents the Euclidean distance, which is defined as

Equation (16) shows that the greater the difference between the belief interval \(\left[ {Bel\left( \omega \right) ,Pl\left( \omega \right) }\right] \) and \(\left[ {0,1}\right] \), the smaller \(iTU^I \left( m \right) \), indicating a smaller uncertainty in the mass function. The belief function and plausibility function in (16) can reflect the relationship between focal elements. However, since the elements \(\omega \) in \(iTU^I\) method are all singletons, the assigned belief value varies according to the cardinality of focal elements, but the recognition of similarity relationship could be easily affected. This method could not combine belief interval with mass function well.

After discussion, we summarize the performance of the existing methods from the following aspects: (1) Nonspecificity measurement(NM);(2) Discord measurement(DM);(3) Identifying quantity relationship between focal elements(IQR);(4) Identifying similarity relationship between focal elements(ISR);(5) Stability of similarity recognition(SSR);(6) Linear growth trend with changing cardinality(LGT);(7) Reasonable utilization of belief interval(RUBI). We list them in Table 1, and the work in this paper aims to fill in the gaps in these approaches.

4 Belief interval euclidean distance entropy

In this section, we will propose a new uncertainty measurement approach. To enhance the readability of subsequent sections, we provide a notation list in Appendix A.

4.1 BIED entropy

We propose a Belief Interval Euclidean Distance (BIED) entropy to measure the uncertainty of the mass function, defined as follows

where the combination coefficient \(d_M\) represents the relationship between focal elements, defined as follows

where the redistribution coefficient \(d_N\) is defined as follows

In Equation (20), inspired by the \(iTU^I\) method [22], we propose the improved Euclidean distance \(d_E\) to measure the difference between the belief interval of the mass function and [0,1], defined as follows

The BIED entropy considers two influencing factors of uncertainty measure shown in (18), where the former term \(\sum \limits _{A \subseteq X } {m\left( A \right) \log \frac{1}{{d_M \left( A \right) }}}\) represents discord measurement and the latter term \(\sum \limits _{A \subseteq X } {m\left( A \right) \log \left( {2^{\left| A \right| } - 1} \right) }\) performs nonspecificity.

In terms of nonspecificity measurement, due to the multiple sets in the mass function, we apply \(2^{\left| A \right| }-1\) defined in Deng entropy [16] to measure the fuzziness. In terms of discord measurement, we introduce \(d_E\left( A\right) \) for distance measurement and combine it with the relationship between focal elements to measure conflict. To help understand, (20) can also be written as

We can see from (22) that \(d_N\left( A\right) \) approximately reflects the redistribution of the probabilities of focal elements based on distance measurement, except the ignorance. The redistribution probability of focal element is related to the \(Bel\left( A\right) \), \(Pl\left( A\right) \) and the difference between them, shown separately in (22). Because the \(iTU^I\) method based on Euclidean distance does not consider the focal element as a whole, but only considers the difference between the belief function and plausibility function of singletons, our definition of \(d_N\left( A\right) \) eliminates this defect. For the mass functions with the same probability distribution, if there are more intersections between focal elements, the values of \(Bel\left( A\right) \) and \(Pl\left( A\right) \) are relatively large, and the uncertainty could be reduced, so it is necessary to consider the three together.

In addition, we define \(d_M\left( A\right) \) in (19) to identify the similarity relationship between focal elements. By combining these equations, we know that the more intersections between focal elements are, the greater \(d_M\left( A\right) \) is, the smaller BIED will be. To further explain the BIED entropy, we give the specific content of BIED entropy in Fig. 3 and the specific workflow of BIED entropy in Fig. 4.

By quantifying the distance between the belief interval of the given mass function and of the extreme case, combined with the correlation between focal elements, we have established a connection between \(d_E \left( A \right) \) and \(m \left( A \right) \) to enhance the practicality of Euclidean distance measures in the mass function.

Suppose X and Y are two different frames of discernment, \(m_X\) and \(m_Y\) are two mass functions. The BIED entropy has the following properties. The proof of these properties can be seen in Appendix B.

-

(1)

Non-negativity: \(BIED\left( m\right) \ge 0\).

-

(2)

Probability Consistency: When the mass function degenerates into a probability distribution, BIED entropy can degenerate into Shannon entropy.

-

(3)

Set Inconsistency: There exists a focal element \(A \subseteq X\), when \(m\left( A\right) =1\), \(BIED\left( A\right) \ne \log \left| A \right| \).

-

(4)

Nonadditivity: \(BIED\left( {m_X \oplus m_Y } \right) \ne BIED\left( {m_X } \right) + BIED\left( {m_Y } \right) \).

-

(5)

Nonsubadditivity: \(BIED\left( {m_X } \right) + BIED\left( {m_Y } \right) \!\ge \! BIED\) \(\left( {m_X \oplus m_Y } \right) \)does not always valid.

We also list the properties of some classic methods for comparison in Table 2, consistent with Table 1. The reader can follow the specific equations of the various methods given in Section 3, or find the proofs in the references mentioned in Table 2.

4.2 Numerical examples

In this subsection, we use numerical examples to demonstrate the calculation process and performance of the BIED entropy. To verify the research gap filled by BIED entropy, we choose several classical methods for comparison.

Example 1

Suppose \(X = \left\{ a,b,c\right\} \) is a frame of discernment. Given a mass function as follows

The calculation process of BIED entropy is as follows

In this example, the given mass function assigns the same probability to each focal element. However, in the calculation process of the BIED entropy, we found that \(d_M \left( {a,b,c} \right) \) is greater than \(d_M \left( a \right) \), \(d_M \left( b \right) \) and \(d_M \left( c \right) \). It can be understood that the BIED entropy allocates more probabilities to the ignorance, which can increase uncertainty. We can know that the more focal elements in the mass function, the more complex it is, and the greater the uncertainty.

Example 2

Suppose \(X = \left\{ a,b\right\} \) is a frame of discernment. Given two mass functions as follows

We calculate the uncertainty of two mass functions using BIED entropy and obtain the following results

Analyzing the results of Example 2, it can be seen that the mass function \(m_2\) assigns all probabilities to \(\left\{ a\right\} \), indicating that \(\left\{ a\right\} \) must occur with an uncertainty of 0. However, \(m_1\) assigns all probabilities to multiple subsets \(\left\{ a,b\right\} \), and contains a high uncertainty due to the nonspecificity measurement shown in (18). Therefore, the calculation results of BIED entropy are in line with common sense.

Example 3

Suppose \(X = \left\{ a,b,c,d\right\} \) is a frame of discernment. Given two mass functions as follows

We use several existing methods for comparison, and the results are recorded in Table 3.

-

(1)

Belief entropy [16]: We use the belief entropy shown in (12) to calculate.

$$\begin{aligned} E_d \left( {m_1 } \right)= & {} - {m_1 \left( {a,b,c,d} \right) \log \left( {\frac{{m_1 \left( {a,b,c,d} \right) }}{{2^4 - 1}}} \right) }= 3.9069 \\ E_d \left( {m_2 } \right)= & {} - {m_2 \left( a \right) \log \left( {\frac{{m_2 \left( a \right) }}{{2^1 - 1}}} \right) - {m_2 \left( b \right) \log \left( {\frac{{m_2 \left( b \right) }}{{2^1 - 1}}} \right) } } \nonumber \\{} & {} - {m_2 \left( c \right) \log \left( {\frac{{m_2 \left( c \right) }}{{2^1 - 1}}} \right) \!-\! {m_2 \left( d \right) \log \left( {\frac{{m_2 \left( d \right) }}{{2^1 - 1}}} \right) } } \!=\! 2 \\ \end{aligned}$$ -

(2)

\(iTU^I\) method [22]: We use the \(iTU^I\) method shown in (16) to calculate.

$$\begin{aligned} iTU^I \left( {m_1 } \right)= & {} 4 \left[ 1 - d_E^I \left( {\left[ {0,1} \right] ,\left[ {0,1} \right] } \right) \right] {\text { = }}4 \\ iTU^I \left( {m_2 } \right)= & {} 4 \left[ 1 - d_E^I \left( {\left[ {\frac{1}{4},\frac{1}{4}} \right] ,\left[ {0,1} \right] } \right) \right] {\text { = 0}}{\text {.8377}} \\ \end{aligned}$$ -

(3)

IBE method [24]: We use the IBE method shown in (13) to calculate.

$$\begin{aligned} E_{cui} \left( {m_1 } \right)= & {} - m_1 \left( {a,b,c,d} \right) \log \left( {\frac{m_1 \left( {a,b,c,d} \right) }{{2^4 - 1}}e^{\frac{{0}}{{15}}} } \right) = 3.9069 \\ E_{cui} \left( {m_2 } \right)= & {} - m_2 \left( a \right) \log \left( {\frac{{m_2 \left( a \right) }}{{2^1 - 1}}e^{\frac{0}{{15}}} } \right) \!-\! m_2 \left( b \right) \log \left( {\frac{{m_2 \left( b \right) }}{{2^1 - 1}}e^{\frac{0}{{15}}} } \right) \\{} & {} - m_2 \left( c \right) \log \left( {\frac{{m_2 \left( c \right) }}{{2^1 - 1}}e^{\frac{0}{{15}}} } \right) \!-\! m_2 \left( d \right) \log \left( {\frac{{m_2 \left( d \right) }}{{2^1 - 1}}e^{\frac{0}{{15}}} } \right) \\= & {} 2\\ \end{aligned}$$ -

(4)

Renyi entropy-based method [25]: We use the Renyi entropy-based method shown in (14) to calculate.

$$\begin{aligned} R\left( {m_1 } \right)= & {} \frac{{4\log \left[ {\left( {\frac{{m_1 \left( {a,b,c,d} \right) }}{{2^{\left| 4 \right| } - 1}}} \right) \left( {1 + \left( {\frac{{m_1 \left( {a,b,c,d} \right) }}{{2^4 - 1}}} \right) ^{4 - 1} - \frac{1}{{2^4 - 1}}} \right) }\right] }}{{1 - 4}}\\= & {} 5.3413 \nonumber \\ R\left( {m_2 } \right)= & {} - m_2 \left( a \right) \log m_2 \left( a \right) - m_2 \left( b \right) \log m_2 \left( b \right) - m_2 \left( c \right) \\{} & {} \times \log m_2 \left( c \right) - m_2 \left( d \right) \log m_2 \left( d \right) = 2 \\ \end{aligned}$$ -

(5)

Tsallis entropy-based method [26]: We use the Tsallis entropy-based method shown in (15) to calculate.

$$\begin{aligned} T\left( {m_1 } \right)\!=\! & {} {\frac{\left( {2^4 - 1}\right) m_1 \left( {a,b,c,d} \right) {\left( {1 \!-\! {\left( {\frac{{m_1 \left( {a,b,c,d} \right) }}{{2^4 - 1}}} \right) }^{4 - 1}}\right) }}{4 - 1}} \!=\!4.9985 \nonumber \\ T\left( {m_2 } \right)= & {} - m_2 \left( a \right) \log m_2 \left( a \right) - m_2 \left( b \right) \log m_2 \left( b \right) - m_2 \left( c \right) \\{} & {} \times \log m_2 \left( c \right) - m_2 \left( d \right) \log m_2 \left( d \right) = 2 \\ \end{aligned}$$ -

(6)

BIED entropy: Here we use our proposed method shown in (18) to calculate.

$$\begin{aligned} BIED\left( {m_1 } \right)= & {} m_1 \left( {a,b,c,d} \right) \log \frac{1}{{d_M \left( {a,b,c,d} \right) }} \\{} & {} + m_1 \left( {a,b,c,d} \right) \log \frac{1}{{2^4 - 1}} = 3.9069 \nonumber \\ BIED\left( {m_2 } \right)= & {} m_2 \left( a \right) \log \frac{1}{{d_M \left( a \right) }} + m_2 \left( b \right) \log \frac{1}{{d_M \left( b \right) }} \nonumber \\{} & {} + m_2 \left( c \right) \log \frac{1}{{d_M \left( c \right) }} + m_2 \left( d \right) \log \frac{1}{{d_M \left( d \right) }} \nonumber \\{} & {} + m_2 \left( a \right) \log \frac{1}{{2^1 - 1}} + m_2 \left( b \right) \log \frac{1}{{2^1 - 1}} \nonumber \\{} & {} + m_2 \left( c \right) \log \frac{1}{{2^1 - 1}} + m_2 \left( d \right) \log \frac{1}{{2^1 - 1}} = 2 \\ \end{aligned}$$

Record the comparison results in Table 3, where \(E_d\) is belief entropy [16], \(iTU^I\) is the \(iTU^I\) method [22], IBE is Cui’s IBE method [24], R is Renyi entropy-based method [25], T is Gao’s Tsallis entropy-based method [26], and BIED is proposed method. In Example 3, the mass function \(m_1\) assigns all probabilities to \(\left\{ a, b, c, d\right\} \), representing the total uncertainty of the ignorance. But \(m_2\) shows the total uncertainty of all singletons of X, with equal probabilities for them. Intuitively, \(m_1\) contains less information than \(m_2\). Therefore, \(m_1\) should demonstrate higher uncertainty.

Observing the results in Table 3, we found that among all methods, \(m_1\) has greater uncertainty than \(m_2\). Therefore, all of these methods can distinguish ’ignorance’ and ’equal probability’. Since \(m_2\) degenerates into a probability distribution, belief entropy, IBE, Tsallis entropy-based method, Renyi entropy-based method, and the proposed BIED entropy can degenerate into Shannon entropy in this situation. However, the uncertainty measure of \(iTU ^I\) for \(m_2\) is very small. Because \(m_2\) assigns equal probabilities to each single set, we cannot determine which event tends to occur. It is expected to contain great uncertainty.

Example 4

Suppose \(X = \left\{ a,b,c,d\right\} \) is a frame of discernment. Given two mass functions as follows

We also use several existing methods for comparison in Table 4.

-

(1)

Belief entropy [16]:

$$\begin{aligned} E_d \left( {m_1 } \right)= & {} - m_1 \left( {a,b} \right) \log \frac{{m_1 \left( {a,b} \right) }}{{2^2 - 1}} - m_1 \left( {c,d} \right) \\{} & {} \times \log \frac{{m_1 \left( {c,d} \right) }}{{2^2 - 1}} = 2.5559 \nonumber \\ E_d \left( {m_2 } \right)= & {} - m_2 \left( {a,c} \right) \log \frac{{m_2 \left( {a,c} \right) }}{{2^2 - 1}} - m_2 \left( {b,c} \right) \\{} & {} \times \log \frac{{m_2 \left( {b,c} \right) }}{{2^2 - 1}} = 2.5559 \\ \end{aligned}$$ -

(2)

\(iTU^I\) method [22]:

$$\begin{aligned} iTU^I \left( {m_1 } \right)= & {} 2 \left( { 1- \left[ d_E^I \left( {\left[ {0,0.4} \right] ,\left[ {0,1} \right] } \right) \right] } \right) \\{} & {} + 2 \left( { 1- \left[ d_E^I \left( {\left[ {0,0.6} \right] ,\left[ {0,1} \right] } \right) \right] } \right) = 2 \\ iTU^I \left( {m_2 } \right)= & {} \left[ 1 - d_E^I \left( {\left[ {0,0.4} \right] ,\left[ {0,1} \right] } \right) \right] \\{} & {} + \left[ 1 - d_E^I \left( {\left[ {0,0.6} \right] ,\left[ {0,1} \right] } \right) \right] \nonumber \\{} & {} + \left[ 1 - d_E^I \left( {\left[ {0,1} \right] ,\left[ {0,1} \right] } \right) \right] \\{} & {} + \left[ 1 - d_E^I \left( {\left[ {0,0} \right] ,\left[ {0,1} \right] } \right) \right] = 2 \\ \end{aligned}$$ -

(3)

IBE method [24]:

$$\begin{aligned} E_{cui} \left( {m_1 } \right)\!=\! & {} - m_1 \left( {a,b} \right) \log \left( {\frac{{m_1 \left( {a,b} \right) }}{{2^2 - 1}}e^{\frac{0}{15}} } \right) \!-\! m_1 \left( {c,d} \right) \\{} & {} \times \log \left( {\frac{{m_1 \left( {c,d} \right) }}{{2^2 - 1}}e^{\frac{0}{15}} } \right) = 2.5559\\ E_{cui} \left( {m_2 } \right)\!=\! & {} - m_2 \left( {a,c} \right) \log \left( {\frac{{m_2 \left( {a,c} \right) }}{{2^2 - 1}}e^{\frac{1}{{15}}} } \right) \!-\! m_2 \left( {b,c} \right) \\{} & {} \times \log \left( {\frac{{m_2 \left( {b,c} \right) }}{{2^2 - 1}}e^{\frac{1}{{15}}} } \right) = 2.4597 \\ \end{aligned}$$ -

(4)

Renyi entropy-based method [25]:

$$\begin{aligned} R\left( {m_1 } \right)\!=\! & {} \frac{{2\log \left( {\frac{{m_1 \left( {a,b} \right) }}{{2^2 - 1}}} \right) \left( {1 + \left( {\frac{{m_1 \left( {a,b} \right) }}{{2^2 - 1}}} \right) ^{2 - 1} - \frac{1}{{2^2 - 1}}} \right) }}{{1 - 2}} \nonumber \\{} & {} + \frac{{2\log \left( {\frac{{m_1 \left( {c,d} \right) }}{{2^2 - 1}}} \right) \left( {1 + \left( {\frac{{m_1 \left( {c,d} \right) }}{{2^2 - 1}}} \right) ^{2 - 1} - \frac{1}{{2^2 - 1}}} \right) }}{{1 - 2}} \!= 3.6730 \\ R\left( {m_2 } \right)\!=\! & {} \frac{{2\log \left( {\frac{{m_2 \left( {a,c} \right) }}{{2^2 - 1}}} \right) \left( {1 + \left( {\frac{{m_2 \left( {a,c} \right) }}{{2^2 - 1}}} \right) ^{2 - 1} - \frac{1}{{2^2 - 1}}} \right) }}{{1 - 2}} \nonumber \\{} & {} + \frac{{2\log \left( {\frac{{m_2 \left( {b,c} \right) }}{{2^2 - 1}}} \right) \left( {1 + \left( {\frac{{m_2 \left( {b,c} \right) }}{{2^2 - 1}}} \right) ^{2 - 1} - \frac{1}{{2^2 - 1}}} \right) }}{{1 - 2}} = 3.6730\\ \end{aligned}$$ -

(5)

Tsallis entropy-based method [26]:

$$\begin{aligned} T\left( {m_1 } \right)= & {} {\frac{\left( {2^2 - 1}\right) m_1 \left( {a,b} \right) {\left( {1 - {\left( {\frac{{m_1 \left( {a,b} \right) }}{{2^2 - 1}}} \right) }^{2 - 1}}\right) }}{2 - 1}} \nonumber \\{} & {} + {\frac{\left( {2^2 - 1}\right) m_1 \left( {c,d} \right) {\left( {1 - {\left( {\frac{{m_1 \left( {c,d} \right) }}{{2^2 - 1}}} \right) }^{2 - 1}}\right) }}{2 - 1}} =2.4800 \\ T\left( {m_2 } \right)= & {} {\frac{\left( {2^2 - 1}\right) m_2 \left( {a,c} \right) {\left( {1 - {\left( {\frac{{m_2 \left( {a,c} \right) }}{{2^2 - 1}}} \right) }^{2 - 1}}\right) }}{2 - 1}} \nonumber \\{} & {} + {\frac{\left( {2^2 - 1}\right) m_2 \left( {b,c} \right) {\left( {1 - {\left( {\frac{{m_2 \left( {b,c} \right) }}{{2^2 - 1}}} \right) }^{2 - 1}}\right) }}{2 - 1}} =2.4800 \\ \end{aligned}$$Table 5 The values of three variables in Example 5 -

(6)

BIED entropy:

$$\begin{aligned} BIED\left( {m_1 } \right)= & {} m_1 \log \frac{1}{{d_M \left( {a,b} \right) }} + m_1 \log \frac{1}{{d_M \left( {c,d} \right) }} \nonumber \\{} & {} + m_1 \left( {a,b} \right) \log \left( {2^2 - 1} \right) + m_1 \left( {c,d} \right) \\{} & {} \times \log \left( {2^2 - 1} \right) = 2.5559\\ BIED\left( {m_2 } \right)= & {} m_2 \log \frac{1}{{d_M \left( {a,c} \right) }} + m_2 \log \frac{1}{{d_M \left( {b,c} \right) }} \nonumber \\{} & {} + m_2 \left( {a,c} \right) \log \left( {2^2 - 1} \right) + m_2 \left( {b,c} \right) \\{} & {} \times \log \left( {2^2 - 1} \right) = 1.9839 \\ \end{aligned}$$

Example 4 shows that there is no intersection between the two focal elements of the mass function \(m_1\), while the two focal elements of \(m_2\) have an intersection \(\left\{ c \right\} \). Therefore, under the same probability distribution, the mass function \(m_1\) should have greater uncertainty. However, in Table 4, belief entropy, \(iTU^U\), Renyi entropy-based method, and Tsallis entropy-based method give the same results for the two mass functions, indicating that they cannot identify the similarity between focal elements. The IBE method and our proposed BIED entropy have obtained correct results. And in Example 5, we conduct a further comparative analysis of these two methods.

Example 5

Suppose \(X = \left\{ \omega _1,\omega _2,\ldots ,\omega _{22} \right\} \) is a frame of discernment. Given two mass functions as follows

where \(A_t\), \(B_t\), and \(C_t\) are all unknown variables listed in Table 5.

Comparison of results obtained by IBE and BIED in Example 5

From Table 5, it can be seen that these three variables always have the same cardinality in each calculation. In these 10 tests of uncertainty, \(A_t\) has no intersection with \(B_t\), but always has an intersection \(\left\{ \omega _1 \right\} \) with \(C_t\). Therefore, the uncertainty of \(m_1\) should always be greater than \(m_2\).

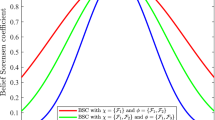

We present the uncertainty measure results obtained by the IBE and BIED in Fig. 5. In Figure 5a, with the increase of cardinality of the set, the gap of the uncertainty of the two mass functions obtained by IBE gradually decreases. When the cardinality is greater than 5, the uncertainty values of the two mass functions are almost the same. However, as shown in Table 5, there is always an intersection \(\left\{ \omega _1 \right\} \) between \(A_t\) and \(C_t\), so this result is inaccurate. Observing the results obtained by BIED in Fig. 5b, the uncertainty of \(m_1\) is always greater than \(m_2\) and maintains a certain gap with \(m_2\). As the cardinality increases, BIED can always distinguish the uncertainty of the two mass functions.

Then we analyze the results obtained from Example 5. For IBE, its reflection of the similarity relationship between focal elements is related to \(\left| X \right| \) shown in (13). As the value of \(\left| X \right| \) increases, the exponential term gradually tends to 1, and its ability to express the difference in the similarity of focal elements will weaken. However, the BIED entropy directly reflects the differences based on the relationship of focal elements themselves and is less affected by the cardinality of frame of discernment. Therefore, it has high availability even when the cardinality of frame of discernment is large.

Example 6

Suppose \(X = \left\{ a,b\right\} \) is a frame of discernment. Given two mass functions as follows

We still use the methods in Example 3 for comparative calculation, and the results are recorded in Table 6.

-

(1)

Belief entropy [16]:

$$\begin{aligned} E_d \left( {m_1 } \right)= & {} - {m_1 \left( {a} \right) \log \left( {\frac{{m_1 \left( {a} \right) }}{{2^1 - 1}}} \right) }-{m_1 \left( {b} \right) \log \left( {\frac{{m_1 \left( {b} \right) }}{{2^1 - 1}}} \right) } \nonumber \\{} & {} -{m_1 \left( {a,b} \right) \log \left( {\frac{{m_1 \left( {a,b} \right) }}{{2^2 - 1}}} \right) } = 2.1133 \\ E_d \left( {m_2 } \right)= & {} - {m_2 \left( {a} \right) \log \left( {\frac{{m_2 \left( {a} \right) }}{{2^1 - 1}}} \right) }-{m_2 \left( {b} \right) \log \left( {\frac{{m_2 \left( {b} \right) }}{{2^1 - 1}}} \right) } \nonumber \\{} & {} -{m_2 \left( {a,b} \right) \log \left( {\frac{{m_2 \left( {a,b} \right) }}{{2^2 - 1}}} \right) } = 2.3219 \\ \end{aligned}$$ -

(2)

\(iTU^I\) method [22]:

$$\begin{aligned} iTU^I \left( {m_1 } \right)= & {} {\left[ 1 - d_E^I \left( {\left[ {{\frac{1}{3}},{\frac{2}{3}}} \right] ,\left[ {0,1} \right] } \right) \right] }\\{} & {} + \left[ 1 - d_E^I \left( {\left[ {{\frac{1}{3}},{\frac{2}{3}}} \right] ,\left[ {0,1} \right] } \right) \right] {\text { = }}1.0666 \\ iTU^I \left( {m_2 } \right)= & {} {\left[ 1 - d_E^I \left( {\left[ {{\frac{1}{5}},{\frac{4}{5}}} \right] ,\left[ {0,1} \right] } \right) \right] } \\{} & {} +{ \left[ 1 - d_E^I \left( {\left[ {{\frac{1}{5}},{\frac{4}{5}}} \right] ,\left[ {0,1} \right] } \right) \right] } {\text { = 1}}{\text {.4343}} \\ \end{aligned}$$Table 6 Comparison of calculation results in Example 6 -

(3)

IBE method [24]:

$$\begin{aligned} E_{cui} \left( {m_1 } \right)\!=\! & {} - {\frac{1}{3}} \log \left( {\frac{{\frac{1}{3}}}{{2^1 - 1}}e^{\frac{{1}}{{3}}} } \right) -{\frac{1}{3}} \log \left( {\frac{{\frac{1}{3}}}{{2^1 - 1}}e^{\frac{{1}}{{3}}} } \right) \\{} & {} \!-{\frac{1}{3}} \log \left( {\frac{{\frac{1}{3}}}{{2^2 - 1}}e^{\frac{{2}}{{3}}} } \right) = 1.4721 \\ E_{cui} \left( {m_2 } \right)\!=\! & {} - {\frac{1}{5}} \log \left( {\frac{{\frac{1}{5}}}{{2^1 - 1}}e^{\frac{{1}}{{3}}} } \right) -{\frac{1}{5}} \log \left( {\frac{{\frac{1}{5}}}{{2^1 - 1}}e^{\frac{{1}}{{3}}} } \right) \\{} & {} \!-{\frac{3}{5}} \log \left( {\frac{{\frac{3}{5}}}{{2^2 - 1}}e^{\frac{{2}}{{3}}} } \right) = 1.5525 \\ \end{aligned}$$ -

(4)

Renyi entropy-based method [25]:

$$\begin{aligned} R\left( {m_1 } \right)= & {} -{m_1 \left( {a} \right) } \log {m_1 \left( {a} \right) }-{m_1 \left( {b} \right) } \log {m_1 \left( {b} \right) } \nonumber \\{} & {} +\frac{{2\log {\left( {\frac{{m_1 \left( {a,b} \right) }}{{2^2 - 1}}} \right) \left( {1 + \left( {\frac{{m_1 \left( {a,b} \right) }}{{2^2 - 1}}} \right) ^{2 - 1} - \frac{1}{{2^2 - 1}}} \right) } }}{{1 - 2}} \!=\! 8.1216 \\ R\left( {m_2 } \right) \nonumber \\= & {} -{m_2 \left( {a} \right) } \log {m_2 \left( {a} \right) }-{m_2 \left( {b} \right) } \log {m_2 \left( {b} \right) } \nonumber \\{} & {} +\frac{{2\log {\left( {\frac{{m_2 \left( {a,b} \right) }}{{2^2 - 1}}} \right) \left( {1 + \left( {\frac{{m_2 \left( {a,b} \right) }}{{2^2 - 1}}} \right) ^{2 - 1} - \frac{1}{{2^2 - 1}}} \right) } }}{{1 - 2}} \!=\! 5.9855 \nonumber \\ \end{aligned}$$ -

(5)

Tsallis entropy-based method [26]:

$$\begin{aligned} T\left( {m_1 } \right)= & {} -{m_1 \left( a \right) } \log {m_1 \left( a \right) } - {m_1 \left( b \right) } \log {m_1 \left( b \right) } \nonumber \\{} & {} +{\frac{\left( {2^2 - 1}\right) m_1 \left( {a,b} \right) {\left( {1 - {\left( {\frac{{m_1 \left( {a,b} \right) }}{{2^2 - 1}}} \right) }^{2 - 1}}\right) }}{ 2 - 1}} =1.9455 \\ T\left( {m_2 } \right)= & {} -{m_2 \left( a \right) } \log {m_2 \left( a \right) } - {m_2 \left( b \right) } \log {m_2 \left( b \right) } \nonumber \\{} & {} +{\frac{\left( {2^2 - 1}\right) m_2 \left( {a,b} \right) {\left( {1 - {\left( {\frac{{m_2 \left( {a,b} \right) }}{{2^2 - 1}}} \right) }^{2 - 1}}\right) }}{2 - 1}} =2.3688 \\ \end{aligned}$$ -

(6)

BIED entropy:

$$\begin{aligned} BIED\left( {m_1 } \right)= & {} m_1 \left( a \right) \log \frac{1}{{d_M \left( a \right) }} + m_1 \left( b \right) \log \frac{1}{{d_M \left( b \right) }} \\{} & {} +m_1 \left( {a,b} \right) \log \frac{1}{{d_M \left( {a,b} \right) }} + m_1 \left( a \right) \\{} & {} \times \log \frac{1}{{2^1 - 1}} + m_1 \left( b \right) \log \frac{1}{{2^1 - 1}} \\{} & {} + m_1 \left( {a,b} \right) \log \frac{1}{{2^2 - 1}} = 0.9655 \\ BIED\left( {m_2 } \right)= & {} m_2 \left( a \right) \log \frac{1}{{d_M \left( a \right) }} + m_2 \left( b \right) \log \frac{1}{{d_M \left( b \right) }} \\{} & {} +m_2 \left( {a,b} \right) \log \frac{1}{{d_M \left( {a,b} \right) }} + m_2 \left( a \right) \\{} & {} \times \log \frac{1}{{2^1 - 1}} + m_2 \left( b \right) \log \frac{1}{{2^1 - 1}} \\{} & {} + m_2 \left( {a,b} \right) \log \frac{1}{{2^2 - 1}} = 1.1493 \\ \end{aligned}$$

In this example, mass function \(m_1\) distributes the probability equally among the three subsets, while \(m_2\) allocates the probability according to the cardinality of focal elements, assigning the maximum probability to \(\left\{ a,b\right\} \). Intuitively, \(m_2\) is supposed to contain greater uncertainty. Analyzing the results shown in Table 6, we found that belief entropy, \(iTU ^I\), IBE, Tsallis entropy-based method, and our proposed BIED entropy were consistent with the prediction and can identify the quantity relationship of focal elements, while the uncertainty of \(m_2\) calculated by Renyi entropy-based method is significantly lower than that of \(m_1\).

Example 7

Suppose \(X=\left\{ a,b,c,d,e,f,g,h,i,j\right\} \) and given a mass function as follows

where the focal element Y is an unknown value. We measure the uncertainty of the mass function with the changing variable \(\left| Y \right| \).

The trend of uncertainty measurement with the increase of the cardinality of focal elements is an important criterion to measure the performance of the method. The positive and linear trend is useful for data storage and statistical analysis. First, the uncertainty measure without a steady growth trend has a weak ability to identify the change in the cardinality of focal elements. Second, the method of exponential or rapid growth trend is not conducive to statistics, and when the cardinality of focal elements is large, the influence of the cardinality will be significantly greater than that of the similarity relationship of focal elements. Simply, the nonspecificity will have a much greater impact on the uncertainty measurement than the discord, which is not logical. The importance of this property has been discussed in several previous studies [15, 25]. In this example, we use several methods to indicate the variation trend of the uncertainty. The calculation results are shown in Fig. 6 and Table 7.

According on the calculation results and variation trends, it can be seen that among these six methods, the changing trend of BIED entropy in this paper is linear and consistent with the belief entropy \(E_d\). Other methods, such as IBE and \(iTU^I\), also exhibit similar trends.

On the contrary, Gao’s Tsallis entropy-based method (T) [26] shows an exponential trend due to the term of \(2^{\left| A \right| } - 1\) in (15). In the actual analysis process, this changing trend of T makes the uncertainty of the mass function increase greatly due to the addition of a few events in a large number of events, which is unreasonable.

Chen’s Renyi entropy-based method (R) [25] exhibits an anomaly at \(\left| Y \right| = 3\), and in other cases, it also follows a linear trend. Through analysis, we believe that the reason for this phenomenon is that when \(\left| Y \right| = 3\), only \(\left\{ e\right\} \), \(\left\{ a,b,c\right\} \), and X exist in the mass function. However, in this method, the overall uncertainty is accumulated from all the focal elements of each cardinality, as shown in (14), so the value is relatively small in this case.

Taking into account Examples 3, 4, 5, 6, and 7, we compare the performance from five aspects: (1) Distinguishing the ’ignorance’ and ’equal probability’; (2) Identifying the similarity relationship between focal elements; (3)Maintaining good similarity measurement under high cardinality of frame of discernment; (4) Identifying the quantity relationship between focal elements; (5) Exhibiting a linear trend with the changing cardinality of focal elements. In Table 8, it can be seen that our proposed BIED entropy has the best performance compared to other methods.

Example 8

Suppose \(X=\left\{ a,b,c,d,e,f,g,h,i,j\right\} \) and given a mass function as follows

where the probability \(\alpha \) and the focal element \(S_t\) are both changing, with probability \(\alpha \) ranging from 0.01 to 0.99, and values of \(S_t\) being listed in Table 9. Compared to the previous examples, the situation in Example 8 is more complicated. We use BIED entropy to calculate the uncertainty of the mass function, and the results are shown in Fig. 7.

In Figure 7a, it can be seen that \(BIED \ge 0\), which also verifies the non-negativity of the method.

In Figure 7b, when \(S_t=\left\{ a\right\} \), BIED shows a parabolic trend. Since the cardinality of the focal elements in the mass function is all equal to 1, the mass function degenerates into a probability distribution, and when \(\alpha =0.5\), the uncertainty is maximum. When \(\left| {S_t } \right| >1\), in this case, the greater the probability assigned to \(S_t\), the greater the uncertainty, and they are positively linearly correlated.

In Figure 7c, except for the case where \(\left| {S_t } \right| =1\), the uncertainty always shows a positive linear correlation with \(\left| {S_t } \right| \), which is consistent with the results in Example 7.

Through the above examples, we verify that BIED entropy can effectively reflect the uncertainty of the mass function, identify the quantity and similarity relationships between various focal elements, and exhibit a linear trend when the cardinality of focal elements changes. Therefore, it can be well applied in decision-making.

5 Applications in multi-sensor data fusion

In this section, we apply BIED entropy to multi-sensor data fusion.

5.1 BIED entropy-based multi-sensor data fusion method

After analyzing the performance of BIED entropy, we propose a multi-sensor data fusion method based on BIED entropy. The process is as follows. Step 1. Assuming that the information source is X, n pieces of data are collected based on the information source and n mass functions are generated. Step 2. Calculate the BIED entropy of each mass function, and then allocate the weight of each mass function. To be noticed, the larger the BIED entropy of the mass function, the greater its uncertainty will be, so it contains more information and should be given greater weight. We define the weight allocation equation as follows

The relationship between uncertainty and \(\alpha -S_t\) in Example 8

Step 3. According to (23), the original mass functions are reconstructed into a new mass function \(m^*\). The reconstruction calculation equation is as follows

Step 4. Use the Dempster’s combination rule to perform \(n-1\) fusion on \(m^*\).

The process of the multi-sensor data fusion method based on BIED entropy is shown in Fig. 8 and Algorithm 1. Because the BIED entropy does not satisfy the additivity, at the end of Algorithm 1, we use Dempster’s combination rule to obtain the fusion result. This property makes BIED entropy unable to judge the overall state of the system by adding individuals. It can only reflect the uncertainty of a single mass function. The set inconsistency and non-subadditivity of BIED entropy cannot fully satisfy the five properties of information entropy proposed by Klir and Wierman [23], which makes it limited in reflecting entropy change.

5.2 Experiments

In this subsection, we use seven advanced methods: belief entropy(\(E_d\)) [16], the \(iTU^I\) method(\(iTU^I\)) [22], Cui’s IBE method(IBE) [24], Renyi entropy-based method(R) [25], Gao’s Tsallis entropy-based method(T) [26], plausibility entropy(\(H_{Pl}\)) [37] and fractal-based belief entropy(\(E_{FB}\)) [38] for comparison. The data related to these experiments can be obtained from the UCI machine learning database. We use six types of datasets from this database to analyze the performance of these methods. A brief description of the datasets is provided as follows:

-

(1)

Iris Dataset: The Iris dataset contains 150 samples, divided into three categories: Setosa, Versicolor, and Virginica. Each category includes 50 samples, each of them containing 4 attributes, namely sepal length (SL), sepal width (SW), petal length (PL), and petal width(PW).

-

(2)

Wine Recognition (WR) Dataset: This dataset includes 13 different chemical characteristics measured from three types of wine, including a total of 178 samples. It has been widely used in classification and clustering testing.

-

(3)

Boston House Price (BHP) Dataset: This dataset contains 506 housing data from the Boston area, each with 13 variables (such as crime rate, property tax rate, number of rooms, etc.) and one target variable (median housing price). To better apply to the experiments, we divide these samples into three categories according to the median value of housing prices.

-

(4)

Wheat Seeds (WS) Dataset: This dataset stores the area, perimeter, compaction, grain length, grain width, asymmetry coefficient, grain ventral groove length, and category of different varieties of wheat seeds. It has a total of 210 records, 7 features, and three categories.

-

(5)

Rice Dataset: This dataset contains 3810 pieces of data and two rice varieties, Osmancik and Cammeo. Each grain of rice includes 7 morphological characteristics, such as area, perimeter, major axis length, minor axis length, eccentricity, convex area, and extent.

-

(6)

Heart Disease (HD) Dataset: This dataset contains 1025 examples and 13 characteristics such as age, sex, resting blood pressure, maximum heart rate, etc. The target attribute is whether the patient has heart disease.

Before experimenting, for each dataset, we convert the values of attributes into mass functions according to the interval number-based mass function generation method [39]. Then the fusion of mass functions and robustness measurement are carried out.

5.2.1 Fusion of mass functions

In this experiment, we calculate the fusion results of different measures for data sets and compare their target recognition ability. First, as an example, we randomly selected 80% samples of Iris data as the training set to construct the model, with an equal amount of data extracted for each category. Then we randomly select a piece of data (SL: 7.3, SW: 2.9, PL: 6.3, PW: 1.8) as test data, and use the interval number to generate four mass functions listed in Table 10. In the table, Se represents Setosa, Ve represents Versicolour, and Vi represents Virginica.

Given that the actual category is Virginica, we compare the ability of several different uncertainty measures to identify this target. The fusion results obtained by these methods are recorded in Table 11.

By analyzing these methods, the BIED entropy assigns the greatest probability 0.6287 to the actual category Virginica, and the smallest probability of incorrect categories Setosa and Versicolor, making it the most accurate for target recognition. Moreover, BIED entropy allocates less probability to multiple sets such as \(\left\{ Se, Ve\right\} \) and \(\left\{ Se, Vi\right\} \), which reduces the difficulty of decision-making.

To make the results more convincing, we use the same method as the Iris dataset to perform experiments on other datasets. The experimental process is shown in Algorithm 2. The parameter BPA is the mass function generated by this data, targetSet is the target set of each data set, and methodSet is different existing methods participating in the comparison.

After that, we present the values of belief assigned to the correct target for each dataset using different uncertainty measures in Fig. 9. For all six datasets, BIED entropy allocates higher belief to the correct target sets, while other methods such as \(E_{FB}\) and R assign lower belief for WR and WS datasets and are less stable. This can verify the high recognition capability of BIED entropy.

5.2.2 Robustness measurement

In this measurement, we take the proportion of training sets as the experimental variable, ranging from 20% to 80%, to test the stability and availability of BIED entropy under different conditions. We still compare BIED entropy with several existing methods. First, we calculate the recognition accuracy of several methods in the test set and provide details of the target recognition performance in different data sets. Second, we add F1-Score as a new metric, and for each dataset and each method, the average F1-Score values across all test sets are provided. Finally, we collect the total results of these two metrics to prove the robustness of BIED entropy.

The specific process of different measurement methods in a data set can be seen in Algorithm 3. As an explanation, the parameter testSet is the test set data, correctSet is the correct category in each test set data, targetSet is the target set identified by the method, methodSet is different methods for comparison, and Proportion is the proportion of the training set.

Metric of accuracy: First, we provide the accuracy obtained by different methods in Fig. 10, with the proportion of training sets as the variable. Specifically, for a data set, if 20% of the data is the training set, the remaining 80% is the test set. It can be seen that excluding the WS dataset, BIED entropy has a relatively superior performance in different datasets. In Figure10a, the BIED entropy consistently maintains the highest accuracy. In Figure 10b, although it does not perform well in the low training set proportion, it shows higher accuracy than other methods when the proportion increases. In Figure 10c, e, and f, there is a relatively small difference between the results obtained by different measures. Although some methods, such as R and \(H_{Pl}\), have high accuracy in Fig. 10d, they have large fluctuations in WR and Rice datasets and have less stability.

Second, we take the average of all the test sets and show the details of the recognition accuracy of each data set in Fig. 11, where the targets for Iris dataset, WR dataset, BHP dataset, and WS dataset are marked as \(O_1\), \(O_2\), and \(O_3\) respectively, and the targets for Rice dataset and HD dataset are represented by \(O_1\) and \(O_2\) respectively.

The proposed BIED entropy shows high accuracy in both Fig. 11a and b. In Figure 11d, although the accuracy of BIED entropy in the WS dataset decreases, it still maintains around 0.85. The method based on Renyi entropy [25] exhibits the highest accuracy in the recognition of \(O_2\) and \(O_3\), but its recognition accuracy of \(O_1\) is the lowest, and has the largest variation range in the WS dataset. It also has less accuracy in the Iris, WR, and Rice datasets. Other methods such as \(iTU^I\) and \(E_d\) are also unstable. In Figure 11c, e and f, the BIED entropy also shows relatively high accuracy compared to other methods, staying above the medium level.

Metric of F1-Score: According to Algorithm 3, we analyze the calculation results of F1-Score here. The F1-Score combines Recall rate(Rr) and precision rate(Pr), and the closer the value is to 1, the better the model is. In Figure 12, we clearly list the average F1-Score values across all test sets for different methods and different data sets, i.e., \(\overline{FS}^*\) in Algorithm 3. It can be seen that BIED entropy still has good stability, with high values in Iris and WR datasets. Although its result in the WS dataset is relatively low, the difference with other methods is slight. In the BHP, Rice and HD datasets, the results of all methods are similar. It is worth noting that the BHP dataset has overall low values in these experiments, which may be related to the conversion of its continuous data into categorical data.

At this point, we have completed the experiment on the two metrics of accuracy and F1-Score. In Table 12, for each dataset and each method, we present specific values for the average accuracy and average F1-Score across all test sets, \(\overline{Acc}^*\) and \(\overline{FS}^*\) in Algorithm 3 respectively. For each method, we calculate the means of the two metrics and list them at the end of the table. Obviously, the BIED entropy exhibits the highest mean in both accuracy and F1-Score, 0.7933 and 0.7729 respectively. Although its performance in the WS dataset is not prominent, it maintains a good recognition in the other datasets and wins in the overall average. Through the above experiments, we have verified the robustness of BIED entropy, which can maintain high accuracy and stability in a large number of tests, and is suitable for practical target recognition.

6 Conclusion

The D-S evidence theory can effectively fuse information in several scenarios, however, the uncertainty measure of the mass function still needs to be studied. In this paper, we propose a Belief Interval Euclidean Distance (BIED) entropy. This method includes two main factors of uncertainty measure, namely nonspecificity and discord, and can identify the quantity and similarity relationships between different focal elements. It shows outstanding performance in various numerical examples and experiments of multi-sensor data fusion. With the increase of the cardinality of focal elements, the uncertainty measure of BIED entropy exhibits a positive linear correlation similar to Deng entropy, which is available in the practical application of data fusion. Some topics related to this method are discussed as follows:

-

(1)

Computational complexity and applicability Similar to some existing methods, BIED entropy does not satisfy the additivity and subadditivity, and the fusion of mass functions relies on Dempster’s combination rule. The calculation process of BIED entropy is complicated and involves many variables, which is caused by fully reflecting the relationship between focal elements. With the increase of cardinality of focal elements, the similarity relationship will be more complex, and the computational complexity may increase exponentially, which is a problem that these existing methods have not solved. Therefore, BIED entropy is more suitable for data sets with small and medium-sized target sets, and more sensitive to mass functions with similar cardinality of focal elements. It is also useful in distinguishing several mass functions with different similarity relationships of focal elements. When the difference of cardinality is large but the similarity relationship is small, relatively simple methods such as Deng entropy can be used.

-

(2)

Physical property of BIED entropy Since BIED entropy is based on belief interval and Euclidean distance, how to reasonably use the belief function and plausibility function to measure the gap from the extreme case [0, 1] is a physical property. Similar methods, such as the \(iTU^I\), have a relatively one-sided definition of this distance, and in some cases, the results are contrary to common sense. The \(d_N\) and \(d_M\) proposed in this paper make entropy applicable to more scenes, but it is limited to a simple accumulation of focal elements with similar relations. Moreover, a reasonable physical explanation does not involve too much machine learning knowledge, which can more vividly describe the characteristics of the method and point out the research gaps for subsequent research.

-

(3)

Future research direction Since fuzzy sets can reflect multiple decision attributes, in future work, we will study the distance measure based on pythagorean hesitate fuzzy sets to further improve the nonspecificity and discord measurement of the mass function, and improve the accuracy and efficiency of uncertainty measure.

Availability of data and materials

All data and models generated or used during the study appear in the submitted article.

Code availability

The code generated or used during the study is available in a repository or online in accordance with funder data retention policies (https://codeocean.com/capsule/8789477/tree)

References

Dong X, Chisci L, Cai Y (2021) An adaptive variational Bayesian filter for nonlinear multi-sensor systems with unknown noise statistics. Signal Processing 179

Trabelsi A, Elouedi Z, Lefevre E (2023) An ensemble classifier through rough set reducts for handling data with evidential attributes. Inf Sci 635:414–429

Gao X, Pan L, Pelusi D et al (2023) Fuzzy Markov Decision-Making Model for Interference Effects. IEEE Trans Fuzzy Syst 31(1):199–212

Dempster AP (1967) Upper and Lower Probabilities Induced by a Multivalued Mapping. Ann Math Stat 38(2):325–339

Shafer GA (1978) A Mathematical Theory of Evidence. Technometrics 20(1):106

Deng X, Jiang W (2020) On the negation of a Dempster-Shafer belief structure based on maximum uncertainty allocation. Inf Sci 516:346–352

Chen Z, Cai R (2024) Symmetric Renyi-Permutation divergence and conflict management for random permutation set. Expert Systems with Applications 238

Chen L, Deng Y, Cheong KH (2023) The Distance of Random Permutation Set. Inf Sci 628:226–239

Zhu C, Xiao F (2022) A belief Rényi divergence for multi-source information fusion and its application in pattern recognition. Applied Intelligence pp 1–18

Niu J, Liu Z, Lu Y et al (2022) Evidential Combination of Classifiers for Imbalanced Data. IEEE Transactions on Systems, Man, and Cybernetics: Systems 52(12):7642–7653

Wang J, Jiang W, Tao X et al (2023) Belief Structure-Based Pythagorean Fuzzy LINMAP for Multi-Attribute Group Decision-Making with Spatial Information. Appl Intell 25(4):1444–1464

Liu Z, He X, Deng Y (2021) Network-based evidential three-way theoretic model for large-scale group decision analysis. Inf Sci 547:689–709

Xiao F, Cao Z, Jolfaei A (2021) A Novel Conflict Measurement in Decision-Making and Its Application in Fault Diagnosis. IEEE Trans Fuzzy Syst 29(1):186–197

Shannon CE (1948) A mathematical theory of communication. The Bell system technical journal 27(3):379–423

Deng J, Deng Y (2023) DBE: Dynamic belief entropy for evidence theory with its application in data fusion. Engineering Applications of Artificial Intelligence 123

Deng Y (2016) Deng entropy. Chaos, Solitons & Fractals 91:549–553

Zhou M, Zhu SS, Chen YW et al (2022) A generalized belief entropy with nonspecificity and structural conflict. IEEE Transactions on Systems, Man, and Cybernetics: Systems 52(9):5532–5545

Yang C, Xiao F (2023) An exponential negation of complex basic belief assignment in complex evidence theory. Inf Sci 622:1228–1251

Mao H, Deng Y (2022) Negation of BPA: a belief interval approach and its application in medical pattern recognition. Appl Intell 52(4):4226–4243

Li R, Chen Z, Li H et al (2022) A new distance-based total uncertainty measure in Dempster-Shafer evidence theory. Appl Intell 52(2):1209–1237

Zhang Z, Xiao F (2022) Complex belief interval-based distance measure with its application in pattern recognition. Int J Intell Syst 37(10):6811–6832

Deng X, Xiao F, Deng Y (2017) An improved distance-based total uncertainty measure in belief function theory. Appl Intell 46:898–915

Klir G, Wierman M (1999) Uncertainty-based information: elements of generalized information theory, vol 15. Springer Science & Business Media

Cui H, Liu Q, Zhang J et al (2019) An Improved Deng Entropy and Its Application in Pattern Recognition. IEEE Access 7:18284–18292

Chen Z, Luo X (2021) Uncertainty Measure of Basic Probability Assignment Based on Renyi Entropy and Its Application in Decision-Making. IEEE Access 9:130032–130041

Gao X, Liu F, Pan L et al (2019) Uncertainty measure based on Tsallis entropy in evidence theory. Int J Intell Syst 34(11):3105–3120

Kong X, Cai B, Liu Y, et al (2022) Optimal sensor placement methodology of hydraulic control system for fault diagnosis. Mechanical Systems and Signal Processing 174

Tang Y, Sun Z, Zhou D, et al (2023) Failure mode and effects analysis using an improved pignistic probability transformation function and grey relational projection method. Complex & Intelligent Systems

Liu X, Cai B, Yuan X et al (2023) A hybrid multi-stage methodology for remaining useful life prediction of control system: Subsea Christmas tree as a case study. Expert Syst Appl 215:119335

Yang C, Cai B, Wu Q, et al (2023) Digital twin-driven fault diagnosis method for composite faults by combining virtual and real data. Journal of Industrial Information Integration 33

Yager RR (1983) Entropy and specificity in a mathematical theory of evidence. Int J Gen Syst 9(4):249–260

Dubois D, Prade H (1985) A note on measures of specificity for fuzzy sets. Int J Gen Syst 10(4):279–283

Klir GJ, Ramer A (1990) Uncertainty in the Dempster-Shafer theory: a critical re-examination. Int J Gen Syst 18(2):155–166

Jousselme AL, Liu C, Grenier D et al (2006) Measuring ambiguity in the evidence theory. IEEE Transactions on Systems, Man, and Cybernetics-Part A: Systems and Humans 36(5):890–903

Chen Z, Cai R (2022) A novel divergence measure of mass function for conflict management. Int J Intell Syst 37(6):3709–3735

Zhou Q, Deng Y (2022) Higher order information volume of mass function. Inf Sci 586:501–513

Cui Y, Deng X (2023) Plausibility entropy: A new total uncertainty measure in evidence theory based on plausibility function. IEEE Transactions on Systems Man Cybernetics-Systems 53(6):3833–3844

Zhou Q, Deng Y (2022) Fractal-based belief entropy. Inf Sci 587:265–282

Xiao F (2020) A new divergence measure for belief functions in D-S evidence theory for multisensor data fusion. Inf Sci 514:462–483

Funding

No funding was received for conducting this study.

Author information

Authors and Affiliations

Contributions

All authors took part in this study.

Corresponding author

Ethics declarations

Competing interests

The authors have no relevant financial or non-financial interests to disclose.

Ethics approval

Not applicable.

Consent to participate

Not applicable.

Consent for publication

Not applicable.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A

Some commonly used notations in this article are provided in Table 13 to help readers understand.

Appendix B

-

1.

Non-negativity. This property means \(BIED\left( m\right) \ge 0\).

Proof

Suppose X is a frame of discernment, there exists a focal element \(A \subseteq X\),

If \(m\left( A \right) \) = 1, then

We know that

If \(m\left( A \right) <1\),

Equation (18) can be written as

For any subset \(B \subseteq A\), we know that

First,

Because

It is easy to know that

Second,

so

Therefore,

Then

In summary, \(BIED\left( m\right) \ge 0\). Therefore, BIED entropy has non-negativity. \(\square \)

-

2.

Probability Consistency. In the frame of discernment X, when the cardinality of all focal elements in the mass function is 1, BIED entropy will degenerate into Shannon entropy.

Proof

If \(\forall A \subseteq X\left| {\left| A \right| = 1} \right. \), and \(m\left( A \right) \ne 1\)

and

Then, Equation (18) can be written as

This form is consistent with Shannon entropy. Specifically, when \(m\left( A \right) \) = 1, \(BIED\left( m \right) \) = 0, shows the same value as Shannon entropy. Therefore, BIED entropy can degenerate into Shannon entropy. \(\square \)

-

3.

Set Inconsistency. There exists a focal element \(A \subseteq X\), and when \(m\left( A\right) =1\), \(BIED\left( A\right) \ne \log \left| A \right| \), so it does not meet Hartley measurement requirements.

Proof

For \(m\left( A\right) =1\),

then

It’s obvious that only when \(\left| A\right| = 1\), \(BIED=\log \left| A \right| \). For \(\left| A\right| \>1\), \({2^{\left| A \right| } - 1} \>\left| A\right| \), \(BIED\left( A\right) \ne \log \left| A \right| \).

Therefore, it does not satisfy set consistency. \(\square \)

-

4.

Nonadditivity. Suppose X and Y are two different frames of discernment, \(m_X\) and \(m_Y\) are two mass functions respectively. This property means \(BIED\left( {m_X \oplus m_Y } \right) \ne BIED\left( {m_X } \right) + BIED\left( {m_Y } \right) \).

Proof

According to the thought of proof by contradiction, if it satisfies additivity, then

where

And

Then, we can know that

Therefore,

\(\square \)

-

5.

Nonsubadditivity. Suppose X and Y are two different frames of discernment, \(m_X\) and \(m_Y\) are two mass functions respectively. This property indicates that \(BIED\left( {m_X } \right) \) \(+ BIED\left( {m_Y } \right) \ge BIED\left( {m_X \oplus m_Y } \right) \) does not always valid.

Proof

Suppose \(X=\left\{ x_1,x_2\right\} \) and \(Y=\left\{ y_1,y_2\right\} \) are two frames of discernment. Given two mass functions as follows

Then, the synthesized mass function \(m\left( {X \times Y} \right) \)is represented as follows

Using BIED entropy to calculate its uncertainty, the results are as follows

Because

BIED entropy does not have nonsubadditivity. \(\square \)

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zhang, F., Chen, Z. & Cai, R. A belief interval euclidean distance entropy of the mass function and its application in multi-sensor data fusion. Appl Intell 54, 7545–7569 (2024). https://doi.org/10.1007/s10489-024-05563-2

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10489-024-05563-2