Abstract

Uncertainty measures in evidence theory supply a new criterion for assessing the quality and quantity of knowledge conveyed by belief structures. As generalizations of uncertainty measures in the probabilistic framework, several uncertainty measures for belief structures have been developed. Among them, the aggregate uncertainty AU and the ambiguity measure AM are well known. However, the inconsistency between the evidential and probabilistic frameworks limits existing measures: they are quite insensitive to changes in belief functions. In this paper, we define a novel uncertainty measure for belief structures based on belief intervals. Based on the relation between evidence theory and probability theory, belief structures are transformed into belief intervals on singleton subsets, with the belief function Bel and the plausibility function Pl as their lower and upper bounds, respectively. An uncertainty measure SU for belief structures is then defined based on interval probabilities within the framework of evidence theory, without switching theoretical frameworks. The center and the span of each interval are used to define the total uncertainty degree of the belief structure. It is proved that SU is identical to Shannon entropy and AM for Bayesian belief structures. Moreover, the proposed uncertainty measure has a wider range, determined by the cardinality of the discernment frame, which is more practical. Numerical examples, applications, and related analyses are provided to verify the rationality of the new measure.


1 Introduction

With the sharply increasing interest in data fusion, especially for military applications, the evidence theory [3, 31], also known as the Dempster-Shafer theory, or the D-S theory for short, has attracted considerable attention for its effectiveness in modeling and fusing uncertain information. Its application has extended to many areas, such as pattern classification [24, 26], clustering analysis [27], and decision making support [2, 37]. Often presented as a generalization of probability theory, the evidence theory outperforms probability theory due to its capability in uncertainty reasoning regardless of the prior knowledge. In evidence theory, the Dempster’s rule, which is famous for its commutativity and associativity, is used to combine belief functions. Although Dempster’s combination rule is well-modeled theoretically, its lack of robustness is considered as a limitation by researchers in this field. This is because counterintuitive results are obtained in some cases, especially when there is high conflict among bodies of evidence. So alternative rules of combination have been proposed to improve classical Dempster’s rule [7, 14, 25, 29].

Another issue in evidence theory is how to measure the uncertainty of belief functions, which is significant for processing and assessing evidence. Three main types of uncertainty have been identified by Klir and Yuan [21]: fuzziness, discord, and non-specificity. Generally, a fuzzy set represents fuzziness, a probability distribution represents only discord, and a classical set represents only non-specificity. Total uncertainty in evidence theory is modeled by assigning a confidence value (not necessarily 1) to the whole universe of discourse. This is impossible in the probability-theory framework, because the additivity axiom requires that the probability of an event and the probability of its complement sum to 1. Consequently, a belief structure defined in the framework of evidence theory can model both non-specificity and discord, whereas a probability distribution can model only discord (and even only a special kind of discord). Therefore, in evidence theory, a belief structure (or equivalently a body of evidence) conveys two types of uncertainty: discord and non-specificity.

With the development of evidence theory, various kinds of measures for discord, non-specificity, and the total uncertainty including both of them have been proposed. The discord measure [17], the strife [17], and the confusion [13] were defined to measure the discord in evidence theory. Dubois and Prade [9] generalized the Hartley entropy [12] in the classical set theory to measure the non-specificity degree of belief structure. Yager’s specificity measure [38] and Korner’s specificity definition [23] can also be used to induce non-specificity measures.

The existing total uncertainty measures are represented by the aggregate uncertainty (AU) [11, 28] and the ambiguity measure (AM) [15]. Following Maeda’s work [28], Harmanec and Klir [11] proposed a measure of aggregate uncertainty named AU, which is defined in the framework of evidence theory by aggregating non-specificity and discord. It has been proved that AU satisfies the requirements proposed by Klir et al. [18]. However, it is computationally complex and insensitive to changes of evidence. Moreover, AU cannot discriminate between the two types of uncertainty that coexist in evidence theory. To overcome the insensitivity problem, Klir and Smith proposed a total uncertainty measure TU, defined as a linear combination of AU and a non-specificity measure. However, Jousselme et al. [15] claimed that TU does not solve the problem of computational complexity and introduces the new problem of determining the parameter δ, whose choice is subjective. They therefore presented a new aggregate uncertainty measure, the ambiguity measure (AM) [15]. This ambiguity measure satisfies all the requirements for a general uncertainty measure and overcomes some of the shortcomings of AU, so it has been widely applied in evidence theory. However, AM cannot discriminate the uncertainty degrees of different belief structures with an identical pignistic probability distribution. In particular, total ignorance and the assignment of equal basic probability mass to each element of the universe are different situations, yet AM regards their uncertainty degrees as identical, which is counterintuitive.

We can note that AU, AM, and TU are all developed from Shannon entropy [32] in probability theory. They are defined through a conversion from the basic probability assignment (BPA) to a probability distribution according to some criteria or constraints, and they quantify uncertainty by calculating the Shannon entropy of the elicited probability distribution. Since they are not directly defined in the framework of evidence theory, the information loss caused by the conversion limits these measures; in particular, they are all insensitive to changes of the BPA. These limitations to some extent reflect the inconsistency between the framework of evidence theory and that of probability theory [39]. Therefore, to avoid these limitations of traditional uncertainty measures, it is desirable to define an uncertainty measure in the framework of evidence theory, without the conversion from BPA to probability. As an attempt to improve existing uncertainty measures, Deng [6] proposed the Deng entropy to simplify the calculation of the uncertainty of BPAs by considering total non-specificity and discord simultaneously.

In this paper, we analyze the uncertainty degree of a BPA based on belief intervals consisting of lower and upper probabilities. We conclude that belief intervals convey both discord and imprecision, which correspond to the two parts of the uncertainty of a BPA. So it is feasible to define a total uncertainty measure for a BPA based on probability intervals. To agree with the well-known Shannon entropy, we define the discord of belief intervals by borrowing Shannon’s idea. The non-specificity of a probability interval is quantified by its span.

We notice that Yang and Han [39] have defined a distance-based total uncertainty measure for BPA based on belief intervals. Deng et al. [5] have improved this measure to avoid counter-intuitive results caused by it. They defined the uncertainty measure by calculating the distance between the belief intervals and the so-called most uncertain interval. Although it can overcome some limitations in traditional uncertainty measures, the choice of distance measure is still an open problem. Moreover, this uncertainty measure is not consistent with Shannon entropy when the BPA reduces into the probability distribution. Since evidence theory is usually regarded as the extension of probability theory, it is necessary to define a unified uncertainty measure for both evidence theory and probability theory. Our proposed uncertainty measure is competent enough to quantify uncertainty degree in evidence theory and probability theory simultaneously. Since there is no switch between evidence theory and probability theory, it can overcome these limitations in traditional measures. It also has desired properties including the probability consistency, the set consistency, the ideal value range and the monotonicity. Furthermore, the new uncertainty measure can provide more rational results when compared with the traditional ones, which can be supported by experimental results and related analyses.

The rest of this paper is organized as follows. Section 2 gives a brief recall of the evidence theory, together with introductions of interval-valued belief structure and operations on interval values. In Section 3, we briefly review the existing uncertainty measure for precise belief structure. A new uncertainty measure for interval-valued belief structures and its properties are proposed in Section 4. Numerical experiments are given to illustrate the performance of the proposed measure in Section 5. This paper is concluded in Section 6.

2 Basics of evidence theory

Dempster-Shafer evidence theory is built on a finite set of mutually exclusive elements, called the frame of discernment and denoted by Θ [3]. The power set of Θ, denoted by \(2^{\Theta}\), contains all subsets of Θ, including the empty set and Θ itself. Singleton sets in a frame of discernment Θ are called atomic sets because they do not contain nonempty proper subsets. The following terminologies are central in the Dempster-Shafer theory.

Let \(\Theta = \{\theta_{1}, \theta_{2}, \cdots, \theta_{n}\}\) be the frame of discernment. A basic probability assignment (BPA) is a function \(m: 2^{\Theta} \to [0,1]\) satisfying the following two conditions:

$$ m(\emptyset)=0, \qquad \sum\limits_{A\subseteq {\Theta}} {m(A)} =1 $$

where ∅ denotes the empty set, and A is any subset of Θ. Such a function is also called a basic belief assignment by Smets [33], and a belief structure (BS) by Yager [36]. The terminology of belief structure will be adopted in this paper. For each subset A ⊆ Θ, the value taken by the BPA at A is called the basic probability mass of A, denoted by m(A).

A subset A of Θ is called the focal element of a belief structure m if m(A) > 0. The set of all focal elements is expressed by F = {A|A ⊆ Θ, m(A) > 0}.

A Bayesian belief structure (BBS) on Θ is a belief structure on Θ whose focal elements are atomic sets (singletons) of Θ. A categorical belief structure is a normalized belief structure with a single focal element: m(A) = 1 for some A ⊆ Θ, and m(B) = 0, ∀B ⊆ Θ, B ≠ A. A vacuous belief structure on Θ is defined as: m(Θ) = 1 and m(A) = 0, ∀A ≠ Θ.

Given a belief structure m on Θ, the belief function and plausibility function, which are in one-to-one correspondence with m, can be defined respectively as:

$$ Bel(A)=\sum\limits_{B\subseteq A} {m(B)} , \qquad Pl(A)=\sum\limits_{B\cap A\ne \emptyset} {m(B)} $$

Bel(A) represents the total basic probability mass committed exactly to A and its subsets, and Pl(A) represents the total basic probability mass that could possibly be committed to A, i.e., the mass of all focal elements that intersect A. As such, Bel(A) and Pl(A) can be interpreted as the lower and upper bounds of the probability to which A is supported. So we can consider the belief degree of A as an interval number BI(A) = [Bel(A), Pl(A)].
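The belief interval can be computed directly from a BPA. The following is a minimal sketch, assuming a BPA is stored as a Python dictionary from `frozenset` focal elements to masses; the function names and the sample BPA are illustrative and not part of the original paper.

```python
from typing import Dict, FrozenSet, Tuple

BPA = Dict[FrozenSet[str], float]  # hypothetical representation of a belief structure


def bel(m: BPA, a: FrozenSet[str]) -> float:
    """Belief of a: total mass of nonempty focal elements contained in a."""
    return sum(mass for focal, mass in m.items() if focal and focal <= a)


def pl(m: BPA, a: FrozenSet[str]) -> float:
    """Plausibility of a: total mass of focal elements that intersect a."""
    return sum(mass for focal, mass in m.items() if focal & a)


def belief_interval(m: BPA, a: FrozenSet[str]) -> Tuple[float, float]:
    """Belief interval BI(a) = [Bel(a), Pl(a)]."""
    return bel(m, a), pl(m, a)


# Illustrative BPA on the frame {a, b, c} (not taken from the paper).
m_demo = {frozenset("a"): 0.5, frozenset("bc"): 0.3, frozenset("abc"): 0.2}
print(belief_interval(m_demo, frozenset("a")))
```

For the sample BPA, the mass on {a, b, c} counts toward the plausibility of {a} but not toward its belief, which is what opens the interval.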

Definition 1

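[33] The pignistic transformation maps a belief structure m to the so-called pignistic probability function. The pignistic transformation of a belief structure m on \(\Theta = \{\theta_{1}, \theta_{2}, \cdots, \theta_{n}\}\) is given by

$$ BetP(A)=\sum\limits_{B\subseteq {\Theta}, B\ne \emptyset} {\frac{\left| {A\cap B} \right|}{\left| B \right|}\frac{m(B)}{1-m(\emptyset)}} , \quad \forall A\subseteq {\Theta} $$

where |A| is the cardinality of set A.

In particular, given m(∅) = 0 and 𝜃 ∈ Θ, we have

$$ BetP(\{\theta\})=\sum\limits_{\theta \in B} {\frac{m(B)}{\left| B \right|}} $$

Then we can get Bel(A) ≤ BetP(A) ≤ Pl(A).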
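The singleton form of the pignistic transformation amounts to splitting each focal mass evenly among its elements. A minimal sketch, assuming m(∅) = 0 and the same dictionary-of-`frozenset` representation (an assumption of this sketch, with illustrative names):

```python
from typing import Dict, FrozenSet, Iterable


def pignistic(m: Dict[FrozenSet[str], float], frame: Iterable[str]) -> Dict[str, float]:
    """Pignistic probability of each singleton, assuming m(emptyset) = 0."""
    betp = {theta: 0.0 for theta in frame}
    for focal, mass in m.items():
        for theta in focal:
            # Each element of a focal set receives an equal share of its mass.
            betp[theta] += mass / len(focal)
    return betp


# Illustrative BPA on the frame {a, b, c} (not taken from the paper).
m_demo = {frozenset("a"): 0.5, frozenset("bc"): 0.3, frozenset("abc"): 0.2}
print(pignistic(m_demo, "abc"))
```

The resulting values sum to 1, and each lies between the belief and the plausibility of the corresponding singleton.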

Definition 2

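[3] Given two belief structures \(m_{1}\) and \(m_{2}\) on Θ, the belief structure that results from the application of Dempster’s combination rule, denoted as \(m_{1} \oplus m_{2}\), or \(m_{12}\) for short, is given by:

$$ m_{12}(A)=\left\{ {\begin{array}{ll} \frac{\sum\limits_{B\cap C=A} {m_{1}(B)m_{2}(C)}}{1-\sum\limits_{B\cap C=\emptyset} {m_{1}(B)m_{2}(C)}}, & A\ne \emptyset \\ 0, & A=\emptyset \end{array}} \right. $$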
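Dempster’s rule can be sketched in a few lines: multiply masses over all pairs of focal elements, accumulate products on the intersections, and renormalize by the non-conflicting mass. The code below is an illustrative sketch under the dictionary-of-`frozenset` representation (an assumption), with hypothetical names and sample data.

```python
from itertools import product
from typing import Dict, FrozenSet

BPA = Dict[FrozenSet[str], float]


def dempster_combine(m1: BPA, m2: BPA) -> BPA:
    """Combine two belief structures with Dempster's rule."""
    combined: BPA = {}
    conflict = 0.0
    for (b, mass_b), (c, mass_c) in product(m1.items(), m2.items()):
        inter = b & c
        if inter:
            combined[inter] = combined.get(inter, 0.0) + mass_b * mass_c
        else:
            conflict += mass_b * mass_c  # mass falling on the empty set
    if 1.0 - conflict <= 1e-12:
        raise ValueError("total conflict: Dempster's rule is undefined")
    return {a: v / (1.0 - conflict) for a, v in combined.items()}


# Illustrative belief structures on the frame {a, b} (not from the paper).
m1_demo = {frozenset("a"): 0.6, frozenset("ab"): 0.4}
m2_demo = {frozenset("b"): 0.5, frozenset("ab"): 0.5}
print(dempster_combine(m1_demo, m2_demo))
```

In this sample the conflicting mass is 0.6 × 0.5 = 0.3, so the remaining products are divided by 0.7. Swapping the arguments gives the same result, reflecting the commutativity of the rule.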

3 Existing uncertainty measures for belief structures

In evidence theory, there are two types of uncertainty, namely, the discord and the non-specificity. Some definitions of discord and non-specificity have been proposed [39]. The discord measure and non-specificity measure are also aggregated in different forms to measure the uncertainty of belief structures [15].

3.1 Measure of discord in belief structure

Shannon entropy is the classical measure of discord in probability theory. It is defined as follows.

Definition 3

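(Shannon entropy [32]) Let \(p = \{p_{\theta} \mid \theta \in \Theta\}\) be a probability distribution defined on the discernment frame Θ. Then, the Shannon entropy is defined as:

$$ H(p)=-\sum\limits_{\theta \in {\Theta}} {p_{\theta} \log_{2} (p_{\theta} )} $$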

In evidence theory, measures of discord are defined to describe the randomness (or discord or conflict) in a BPA [15]. Several widely used definitions are listed below. Despite their different names, they are all proposed for measuring the discord part of the uncertainty in a belief structure.

(1) Confusion measure [13]

$$ Conf(m)=-\sum\limits_{A\subseteq {\Theta}} {m(A)\log_{2} (Bel(A))} $$(9)

(2) Dissonance measure [38]

$$ Diss(m)=-\sum\limits_{A\subseteq {\Theta}} {m(A)\log_{2} (Pl(A))} $$(10)

(3) Discord measure [18]

$$ Disc(m)=-\sum\limits_{A\subseteq {\Theta}} {m(A)\log_{2} \left[ {1-\sum\limits_{B\subseteq {\Theta}} {m(B)\frac{\left| {B-A} \right|}{\left| B \right|}}} \right]} $$(11)

(4) Strife measure [17]

$$ Stri(m)=-\sum\limits_{A\subseteq {\Theta}} {m(A)\log_{2} \left[ {1-\sum\limits_{B\subseteq {\Theta}} {m(B)\frac{\left| {A-B} \right|}{\left| A \right|}}} \right]} $$(12)

We can note that all of the above measures are defined on the basis of Shannon entropy. Klir and Parviz have discussed these definitions in [17], where more detailed information on these measures can be found.
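As an illustration of Eqs. (9) and (10), the sketch below evaluates the confusion and dissonance measures for a BPA stored as a dictionary of `frozenset` focal elements (an assumed representation with illustrative names). The sums are restricted to focal elements, since terms with m(A) = 0 contribute nothing.

```python
from math import log2
from typing import Dict, FrozenSet

BPA = Dict[FrozenSet[str], float]


def bel(m: BPA, a: FrozenSet[str]) -> float:
    return sum(v for f, v in m.items() if f and f <= a)


def pl(m: BPA, a: FrozenSet[str]) -> float:
    return sum(v for f, v in m.items() if f & a)


def confusion(m: BPA) -> float:
    """Conf(m) = -sum m(A) log2 Bel(A), summed over focal elements."""
    return -sum(v * log2(bel(m, a)) for a, v in m.items() if v > 0)


def dissonance(m: BPA) -> float:
    """Diss(m) = -sum m(A) log2 Pl(A), summed over focal elements."""
    return -sum(v * log2(pl(m, a)) for a, v in m.items() if v > 0)


# For a Bayesian belief structure, Bel = Pl = m on singletons, so both
# measures coincide with the Shannon entropy of the distribution.
bayes_demo = {frozenset("a"): 0.5, frozenset("b"): 0.5}
print(confusion(bayes_demo), dissonance(bayes_demo))
```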

3.2 Measure of non-specificity for belief structure

In probability theory, belief is only assigned to singleton subsets of the discernment frame, so there is merely discord-type uncertainty in the probabilistic framework. In evidence theory, by contrast, basic probability masses may be assigned to subsets with more than one element, which brings non-specificity-type uncertainty [10, 23]. Non-specificity means that two or more alternatives are left unspecified, and it represents a degree of imprecision. In classical set theory, the only measurable kind of uncertainty is non-specificity, which is related to the cardinality of the set. The Hartley measure is regarded as the standard measure of non-specificity for classical sets.

Definition 4

(Hartley measure [12]) Let Θ be a frame of discernment, and let A be any subset of Θ. Then, the Hartley measure is defined as:

$$ H(A)=\log_{2} (\left| A \right|) $$

In evidence theory, non-specificity is only related to the focal elements whose cardinality is larger than one. Considering the basic probability mass on the focal elements, researchers have defined some non-specificity measures [9, 23, 38]. The most commonly used non-specificity measure for belief structures is developed from the Hartley measure. It is defined as [9]

$$ NS(m)=\sum\limits_{A\subseteq {\Theta}, A\ne \emptyset} {m(A)\log_{2} (\left| A \right|)} $$

When m is a Bayesian belief structure, i.e., it only has singleton focal elements, NS(m) reaches the minimum value 0. When m is a categorical belief structure, i.e., m(A) = 1, NS(m) = \(\log_{2}(|A|)\), which coincides with the Hartley measure for classical sets. In particular, if m is a vacuous belief structure, i.e., m(Θ) = 1, it reaches the maximum value \(\log_{2}(|\Theta|)\). This definition was proved to be unique by Ramer [30], and it satisfies all the requirements of a non-specificity measure.
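The three special cases just described are easy to check numerically. A minimal sketch, assuming the dictionary-of-`frozenset` representation of a BPA (an assumption, with an illustrative function name):

```python
from math import log2
from typing import Dict, FrozenSet


def nonspecificity(m: Dict[FrozenSet[str], float]) -> float:
    """Generalized Hartley measure: sum of m(A) * log2|A| over focal elements."""
    return sum(mass * log2(len(a)) for a, mass in m.items() if mass > 0)


bayesian = {frozenset("a"): 0.3, frozenset("b"): 0.7}  # singletons only
categorical = {frozenset("ab"): 1.0}                   # m(A) = 1 with |A| = 2
vacuous = {frozenset("abcd"): 1.0}                     # m(Theta) = 1, |Theta| = 4

print(nonspecificity(bayesian), nonspecificity(categorical), nonspecificity(vacuous))
```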

3.3 Total uncertainty measure in evidence theory

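Based on these two kinds of uncertainty measures, Klir and Wierman gave some axiomatic requirements for a general uncertainty measure of belief structures [20]. Harmanec and Klir [11] defined the aggregated uncertainty (AU) to quantify the total uncertainty of a belief structure as

$$ AU(m)=\max\limits_{\{p_{\theta}\}} \left[ {-\sum\limits_{\theta \in {\Theta}} {p_{\theta} \log_{2} (p_{\theta} )}} \right] \quad \text{s.t.} \quad p_{\theta} \in [0,1]\; \forall \theta \in {\Theta}, \quad \sum\limits_{\theta \in {\Theta}} {p_{\theta}} =1, \quad Bel(A)\le \sum\limits_{\theta \in A} {p_{\theta}} \le 1-Bel(\bar{A})\; \forall A\subseteq {\Theta} $$(15)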

The definition of AU shows that it is the maximum Shannon entropy over all probability distributions consistent with the constraints induced by the given BPA. Therefore, it is also called the “upper entropy” [1]. It can be seen as an aggregated total uncertainty (ATU) measure, which captures both non-specificity and discord. It can be proved that AU satisfies all the requirements for an uncertainty measure in evidence theory, including probability consistency, set consistency, value range, monotonicity, sub-additivity, and additivity for the joint BPA in Cartesian space [20]. So it is regarded as a well-justified measure of uncertainty for Dempster-Shafer theory. However, this measure has several shortcomings: high computational complexity, strong insensitivity to changes of evidence, and no distinction between the two types of uncertainty (discord and non-specificity) [9]. To overcome the insensitivity problem, Klir and Smith proposed a total uncertainty measure TU defined as a linear combination of AU and the non-specificity measure N [19].

Definition 5

(Total Uncertainty [19]) Let m be a BPA defined on a discernment frame Θ. Then the total uncertainty TU(m,δ) is a linear combination of the non-specificity measure N(m) and AU(m) as follows:

$$ TU(m,\delta )=\delta \cdot AU(m)+(1-\delta )\cdot N(m) $$

where δ ∈ [0,1] is a constant, viewed as a discounting factor of AU.

It can be proved that TU satisfies all the requirements for an uncertainty measure. However, Jousselme et al. [15] pointed out that TU does not solve the problem of computational complexity and brings a new problem with the choice of the linear parameter δ. So they presented the ambiguity measure (AM) as an alternative for quantifying the ambiguity of belief structures [15].

Definition 6

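(Ambiguity Measure [15]) Let Θ be a frame of discernment with n elements, \(\Theta = \{\theta_{1}, \theta_{2}, \cdots, \theta_{n}\}\), and let m be a BPA defined on Θ. An ambiguity measure AM can be defined as:

$$ AM(m)=-\sum\limits_{\theta \in {\Theta}} {BetP_{m} (\theta )\log_{2} (BetP_{m} (\theta ))} $$

where \(BetP_{m}\) is the pignistic probability distribution of m.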
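Because AM depends on m only through its pignistic distribution, any two belief structures with the same BetP receive the same value. The sketch below illustrates this with total ignorance and a uniform Bayesian structure on a four-element frame; the dictionary-of-`frozenset` representation and the function names are assumptions of this sketch.

```python
from math import log2
from typing import Dict, FrozenSet

BPA = Dict[FrozenSet[str], float]


def pignistic(m: BPA) -> Dict[str, float]:
    """Pignistic probabilities on the union of focal elements (m(emptyset) = 0)."""
    betp: Dict[str, float] = {}
    for focal, mass in m.items():
        for theta in focal:
            betp[theta] = betp.get(theta, 0.0) + mass / len(focal)
    return betp


def ambiguity_measure(m: BPA) -> float:
    """AM(m): Shannon entropy of the pignistic distribution of m."""
    return -sum(p * log2(p) for p in pignistic(m).values() if p > 0)


vacuous = {frozenset("abcd"): 1.0}              # total ignorance
uniform = {frozenset(t): 0.25 for t in "abcd"}  # equal mass on each singleton

print(ambiguity_measure(vacuous), ambiguity_measure(uniform))
```

Both calls return the same value, log2(4) = 2, although the two structures carry different kinds of uncertainty.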

Besides satisfying all the requirements for general uncertainty measures, the ambiguity measure also overcomes some of the shortcomings of the AU measure. The AM measure has been a popular uncertainty measure for belief structures thanks to its good performance. However, it is not sensitive to changes of belief structures; in particular, it cannot discriminate the uncertainty degrees of different belief structures with an identical pignistic probability distribution. This is demonstrated by the examples in Section 3.4.

3.4 Analysis on available total uncertainty measures

The traditional total uncertainty measures have their own drawbacks. As pointed out by Klir and Lewis [22], AM does not satisfy sub-additivity (for the joint BPA in Cartesian space). Moreover, AM is criticized for its logical non-monotonicity [22]. Some examples are presented below to analyze the performance of existing total uncertainty measures.

Example 1

The discernment frame is denoted as Θ = {𝜃 1,𝜃 2,𝜃 3}. Two BPAs are given as:

The belief function and plausibility function of m 1 and m 2 can be obtained as follows:

From these belief and plausibility functions, we can get:

The belief intervals corresponding to \(m_{1}\) and \(m_{2}\) can be expressed by \(BI_{1}(A) = [Bel_{1}(A), Pl_{1}(A)]\) and \(BI_{2}(A) = [Bel_{2}(A), Pl_{2}(A)]\), respectively. Then we have \(BI_{1}(A) \subseteq BI_{2}(A)\), ∀A ⊆ Θ. That is to say, every belief interval of \(m_{1}\) is contained in the corresponding belief interval of \(m_{2}\), which means that \(m_{2}\) has a higher level of uncertainty. However, their corresponding AM and AU values listed below are counter-intuitive.

We can see that AM decreases the total uncertainty of m 2 in this example although there is a clear increment of uncertainty of m 2 compared with that of m 1. The AU measure does not bring any decrease on the uncertainty of m 2, but it generates two equal values, which is also counter-intuitive, since the uncertainty degree of m 2 should be higher than that of m 1.

Taking a closer look at AU, we can note that AU tries to find a probability distribution that maximizes the Shannon entropy. In this example, the Shannon entropy reaches its maximum value \(\log_{2} 3 = 1.5850\) when the probability masses assigned to all singletons are equal, i.e., \(p(\{\theta_{1}\}) = p(\{\theta_{2}\}) = p(\{\theta_{3}\}) = 1/3\). Moreover, this uniform probability distribution satisfies all the constraints (shown in Eq. 15) established by \(Bel_{1}\), \(Pl_{1}\) and \(Bel_{2}\), \(Pl_{2}\). Therefore, both \(AU_{1}\) and \(AU_{2}\) take the maximum Shannon entropy value \(\log_{2} 3 = 1.5850\).

Example 2

Let Θ = {𝜃 1,𝜃 2,𝜃 3,𝜃 4} denote the discernment frame. Three belief structures on Θ are given as:

It is easy to get that \(BetP_{m}(\{\theta_{1}\}) = BetP_{m}(\{\theta_{2}\}) = BetP_{m}(\{\theta_{3}\}) = BetP_{m}(\{\theta_{4}\}) = 0.25\) for all of these belief structures, i.e., they have the same pignistic probability distribution. Therefore, their uncertainty degrees calculated by AM are identical. We can also get \(AU(m_{1}) = AU(m_{2}) = AU(m_{3}) = 2\). Intuitively, among these three belief structures, \(m_{1}\) represents total ignorance, so it is the most uncertain. As for \(m_{3}\), the basic probability masses assigned to the four singleton focal elements are equal. \(m_{3}\) indicates that the support degree of each element is precisely known, while the other two belief structures give interval probabilities for each element. Hence, the uncertainty degree of \(m_{3}\) should be the smallest. However, neither AM nor AU can discriminate the differences in the uncertainty of these three belief structures.

As we can see above, both AM and AU are defined in the framework of probability theory. They are calculated by transforming a BPA to certain probability distribution. Such a switch between different frameworks might cause problems in representing the uncertainty in belief functions. Since the belief functions theory is not a successful generalization of the probability theory, there exists inconsistency between the two frameworks. Information loss may be caused in such switch, which brings drawbacks to AM and AU. In our work, we will design the uncertainty measure of belief structures directly in the framework of evidence theory as introduced in the next section.

4 Uncertainty measure based on interval probabilities

4.1 Belief intervals and interval probabilities

In evidence theory, the belief interval [Bel(A), Pl(A)] can be obtained based on the basic probability assigned to each focal element. The belief intervals on all singletons can be regarded as an interval probability distribution on the discernment frame. To facilitate the following exposition, we first give some background on interval probability distributions [8, 34, 35].

Definition 7

Let \(\Theta = \{\theta_{1}, \theta_{2}, \cdots, \theta_{n}\}\) be the frame of discernment, and let \([a_{i}, b_{i}]\) (i = 1,2,⋯,n) be n intervals with \(0 \le a_{i} \le b_{i} \le 1\). Then \(P(\theta_{i}) = [a_{i}, b_{i}]\) (i = 1,2,⋯,n) constitute an interval probability distribution on Θ if:

(1) \(\sum \limits _{i=1}^{n} {a_{i}} \le 1\) and \(\sum \limits _{i=1}^{n} {b_{i}} \ge 1\);

(2) \(\sum \limits _{i=1}^{n} {b_{i}} -(b_{k} -a_{k} )\ge 1\) and \(\sum \limits _{i=1}^{n} {a_{i}} +(b_{k} -a_{k} )\le 1\), \(\forall k\in \left \{ {1,{\cdots } ,n} \right \}\);

(3) p(H) = 0, ∀H∉Θ.

Theorem 1

For a belief structure m on the discernment frame \(\Theta = \{\theta_{1}, \theta_{2}, \cdots, \theta_{n}\}\), all belief intervals \([Bel(\theta_{i}), Pl(\theta_{i})]\) (i = 1,2,⋯,n) constitute an interval probability distribution on Θ.

Proof

Let \(F_{j}\) (j = 1,2,⋯,N) be the focal elements of m, and let F be the set of focal elements. The non-singleton focal elements in F are denoted by \(B_{1}, B_{2}, \cdots, B_{q}\), q ≤ N. We can get \(0 \le Bel(\theta_{i}) \le Pl(\theta_{i}) \le 1\) by the definitions of the belief function and the plausibility function.

(1) For a singleton \(\theta_{i} \in \Theta\), \(Bel(\theta_{i})\) and \(m(\theta_{i})\) are identical. Then we have:

$$\sum\limits_{i=1}^{n} {Bel(\theta_{i} )} =\sum\limits_{i=1}^{n} {m(\theta_{i} )} \le \sum\limits_{j=1}^{N} {m(F_{j} )} =1. $$

According to \(Pl(\theta _{i} )=\sum \limits _{F_{j} \cap \theta _{i} \ne \emptyset } {m(F_{j} )} =m(\theta _{i} )+\sum \limits _{B_{k} \cap \theta _{i} \ne \emptyset } {m(B_{k} )} \), we can get:

$$\begin{array}{rcl} \sum\limits_{i=1}^{n} {Pl(\theta_{i} )} &=&\sum\limits_{i=1}^{n} {\left( {m(\theta_{i} )+\sum\limits_{B_{k} \cap \theta_{i} \ne \emptyset} {m(B_{k} )}} \right)} \\ &\ge& \sum\limits_{i=1}^{n} {m(\theta_{i} )} +\sum\limits_{k=1}^{q} {m(B_{k} )} =\sum\limits_{j=1}^{N} {m(F_{j} )} =1. \end{array} $$

(2) For any \(\theta_{k} \in \Theta\), every non-singleton focal element \(B_{j}\) contains at least one element \(\theta_{i} \ne \theta_{k}\), i.e., \(B_{j} \cap \theta_{i} \ne \emptyset\) for some i ≠ k. So the following inequality holds:

$$\sum\limits_{i\ne k} {\sum\limits_{B_{j} \cap \theta_{i} \ne \emptyset} {m(B_{j} )}} \ge \sum\limits_{j=1}^{q} {m(B_{j} )} . $$

For ∀k ∈ {1,2,⋯,n}, we have

$$\begin{array}{rcl} \sum\limits_{i\ne k} {Pl(\theta_{i} )} &=&\sum\limits_{i\ne k} {\left( {\sum\limits_{F_{j} \cap \theta_{i} \ne \emptyset} {m(F_{j} )}} \right)}\\ &=&\sum\limits_{i\ne k} {\left( {m(\theta_{i} )+\sum\limits_{B_{j} \cap \theta_{i} \ne \emptyset} {m(B_{j} )}} \right)} \\ &=&\sum\limits_{i\ne k} {m(\theta_{i} )} +\sum\limits_{i\ne k} {\left( {\sum\limits_{B_{j} \cap \theta_{i} \ne \emptyset} {m(B_{j} )}} \right)}\\ &\ge& \sum\limits_{i\ne k} {m(\theta_{i} )} +\sum\limits_{j=1}^{q} {m(B_{j} )} \\ &=&\sum {m(F_{j} )} -m(\theta_{k} ) \\ &=&1-Bel(\theta_{k} ) \end{array} $$

That is to say, \(\sum \limits _{i=1}^{n} {Pl(\theta _{i} )} -Pl(\theta _{k} )\ge 1-Bel(\theta _{k} )\) holds for any k ∈ {1,2,⋯,n}. So we get \(\sum \limits _{i=1}^{n} {Pl(\theta _{i} )} -\left ({Pl(\theta _{k} )-Bel(\theta _{k} )} \right )\ge 1\), ∀k ∈ {1,2,⋯,n}.

Similarly, for ∀k ∈ {1,2,⋯,n}, we have

$$\begin{array}{rcl} \sum\limits_{i\ne k} {Bel(\theta_{i} )} &=&\sum\limits_{i\ne k} {m(\theta_{i} )} =\sum {m(F_{j} )} -\sum {m(B_{j} )} -m(\theta_{k} ) \\ &\le& \sum {m(F_{j} )} -\sum\limits_{B_{j} \cap \theta_{k} \ne \emptyset} {m(B_{j} )} -m(\theta_{k} ) \\ &=&1-\sum\limits_{F_{j} \cap \theta_{k} \ne \emptyset} {m(F_{j} )} =1-Pl(\theta_{k} ) \end{array} $$

which means \(\sum \limits _{i=1}^{n} {Bel(\theta _{i} )} -Bel(\theta _{k} )\le 1-Pl(\theta _{k} )\) holds for any k ∈ {1,2,⋯,n}. Thus, \(\sum \limits _{i=1}^{n} {Bel(\theta _{i} )} +\left ({Pl(\theta _{k} )-Bel(\theta _{k} )} \right )\le 1\), ∀k ∈ {1,2,⋯,n}.

(3) p(H) = 0, ∀H∉Θ is straightforward.

So all belief intervals \([Bel(\theta_{i}), Pl(\theta_{i})]\) (i = 1,2,⋯,n) constitute an interval probability distribution over Θ.

□
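Theorem 1 can also be checked numerically for a given belief structure. The sketch below builds the singleton belief intervals of a BPA and tests conditions (1) and (2) of Definition 7; the dictionary-of-`frozenset` representation, the function names, the tolerance, and the sample BPA are assumptions made for illustration.

```python
from typing import Dict, FrozenSet, Iterable, List, Tuple

Interval = Tuple[float, float]


def singleton_intervals(m: Dict[FrozenSet[str], float], frame: Iterable[str]) -> List[Interval]:
    """Belief intervals [Bel(theta), Pl(theta)] for every singleton of the frame."""
    out = []
    for theta in frame:
        lo = m.get(frozenset([theta]), 0.0)               # Bel(theta) = m({theta})
        hi = sum(v for a, v in m.items() if theta in a)   # Pl(theta)
        out.append((lo, hi))
    return out


def is_interval_probability(intervals: List[Interval], tol: float = 1e-9) -> bool:
    """Check conditions (1) and (2) of Definition 7 up to a numerical tolerance."""
    if not all(-tol <= a <= b + tol and b <= 1 + tol for a, b in intervals):
        return False
    sum_a = sum(a for a, _ in intervals)
    sum_b = sum(b for _, b in intervals)
    if sum_a > 1 + tol or sum_b < 1 - tol:                # condition (1)
        return False
    return all(sum_b - (b - a) >= 1 - tol and sum_a + (b - a) <= 1 + tol
               for a, b in intervals)                     # condition (2)


# Illustrative BPA on {a, b, c} (hypothetical data).
m_demo = {frozenset("a"): 0.2, frozenset("b"): 0.1,
          frozenset("bc"): 0.4, frozenset("abc"): 0.3}
print(is_interval_probability(singleton_intervals(m_demo, "abc")))
```

Arbitrary intervals need not pass the check; for instance, two intervals [0.6, 0.7] violate condition (1) because their lower bounds sum to more than 1.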

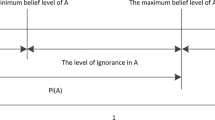

On the basis of interval probabilities, we can measure the uncertainty of belief structures. For an interval probability distribution \([Bel(\theta_{i}), Pl(\theta_{i})]\) over \(\Theta = \{\theta_{1}, \theta_{2}, \cdots, \theta_{n}\}\), the uncertainty degree can be read from the intervals, as analyzed below.

Case I: The most uncertain case is \(Bel(\theta_{i}) = 0\) and \(Pl(\theta_{i}) = 1\) for each \(\theta_{i} \in \Theta\), i.e., the probability intervals of all elements are equal to [0,1]. The equal probability intervals indicate the greatest discord degree, and the interval [0,1] represents the largest non-specificity.

Case II: The clearest case is \(Bel(\theta_{k}) = Pl(\theta_{k}) = 1\) and \(Bel(\theta_{i}) = Pl(\theta_{i}) = 0\) for i ≠ k. That is to say, \(\theta_{k}\) is certain to occur, while the others are certain not to occur.

Case III: When \(Bel(\theta_{i}) = a_{i}\), \(Pl(\theta_{i}) = b_{i}\), \(a_{i}, b_{i} \in [0,1]\), the probability interval of \(\theta_{i}\) is \([a_{i}, b_{i}]\). For \(\theta_{i}\), the degree of non-specificity can be represented by its imprecision \(b_{i} - a_{i}\). The degree of discord is quantified by the relation between all probability intervals.

Case IV: When \(Bel(\theta_{i}) = Pl(\theta_{i}) = a_{i}\), \(a_{i} \in [0,1]\), \(\sum \limits _{i=1}^{n} {a_{i}} =1\), the probability intervals reduce to a precise probability distribution. In this case there is no imprecision, but whether \(\theta_{i}\) occurs still cannot be determined with certainty, so only discord remains.

Therefore, the uncertainty information carried by a probability interval includes both a discord part and a non-specificity part, which coincides with the composition of a BPA’s uncertainty. Thus, given a BPA, we can fully utilize the information of the probability intervals to measure its total uncertainty. How, then, should the information of belief intervals be used? Given the interval probabilities \([Bel(\theta_{i}), Pl(\theta_{i})]\) on \(\Theta = \{\theta_{1}, \theta_{2}, \cdots, \theta_{n}\}\), we can use the relationship between all central values \((Pl(\theta_{i}) + Bel(\theta_{i}))/2\) to assess the discord degree, and use the imprecision degree \(Pl(\theta_{i}) - Bel(\theta_{i})\) to measure the non-specificity degree. Then, a new uncertainty measure for belief structures can be constructed based on probability intervals.

4.2 Interval probability-based uncertainty measure

As mentioned previously, the belief intervals on singletons are equivalent to an interval probability distribution whose lower and upper bounds are the belief function Bel and the plausibility function Pl, respectively. For an interval probability distribution, the uncertainty contains both discord and non-specificity. The discord part represents the difference between the probability masses assigned to all propositions. In other words, discord is determined by the distribution of all probability masses. So we can use the Shannon entropy to quantify the discord of an interval probability distribution. Moreover, interval values can be compared by comparing their central values. Therefore, we can measure the discord of an interval probability distribution on the basis of Shannon entropy and central probabilities. We have also pointed out that the non-specificity of an interval probability can be quantified by its imprecision degree, which is related to the span of the interval. So in the definition of an uncertainty measure for belief structures, both the Shannon entropy and the span of the interval probability should be taken into account. Hence, the interval probabilities-based uncertainty measure for a belief structure can be defined as follows.

Definition 8

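Let m be a belief structure on the discernment frame \(\Theta = \{\theta_{1}, \theta_{2}, \cdots, \theta_{n}\}\). The interval probabilities-based uncertainty measure of m can be expressed as below:

$$ SU(m)=\sum\limits_{i=1}^{n} {\left[ {-\frac{Bel(\theta_{i} )+Pl(\theta_{i} )}{2}\log_{2} \frac{Bel(\theta_{i} )+Pl(\theta_{i} )}{2}+\frac{Pl(\theta_{i} )-Bel(\theta_{i} )}{2}} \right]} $$

The newly proposed SU does not suffer from the drawbacks of AU and AM pointed out in Examples 1 and 2. A detailed comparative analysis will be presented in Section 5.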

Here, we provide an illustrative example to show the calculation of SU.

Example 3

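Suppose that the discernment frame is \(\Theta = \{\theta_{1}, \theta_{2}, \theta_{3}\}\). A BPA m over Θ is \(m(\{\theta_{1}\}) = 0.2\), \(m(\{\theta_{2}\}) = 0.1\), \(m(\{\theta_{2}, \theta_{3}\}) = 0.4\), \(m(\Theta) = 0.3\).

First calculate the belief function and plausibility function for singletons.

$$\begin{array}{ll} Bel(\theta_{1} )=0.2, & Pl(\theta_{1} )=0.2+0.3=0.5 \\ Bel(\theta_{2} )=0.1, & Pl(\theta_{2} )=0.1+0.4+0.3=0.8 \\ Bel(\theta_{3} )=0, & Pl(\theta_{3} )=0.4+0.3=0.7 \end{array} $$

The probability intervals are:

$$ BI(\theta_{1} )=[0.2,0.5], \quad BI(\theta_{2} )=[0.1,0.8], \quad BI(\theta_{3} )=[0,0.7] $$

The total uncertainty of m can be calculated as:

$$\begin{array}{rcl} SU(m) &=& \left[ {-0.35\log_{2} 0.35+0.15} \right]+\left[ {-0.45\log_{2} 0.45+0.35} \right]+\left[ {-0.35\log_{2} 0.35+0.35} \right] \\ &=& 2.4286 \end{array} $$

We find that the uncertainty degree of m is 2.4286, which is greater than \(\log_{2} 3 = 1.585\). It seems that the proposed uncertainty measure violates the requirement on the range of the uncertainty degree [20]. In the following subsection, we will discuss the range of the proposed SU.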
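The value reported in Example 3 can be reproduced with a short script. This is a sketch under two assumptions: SU is taken as the sum, over singletons, of the Shannon-type term of the interval center plus half the interval span (the form consistent with the value 2.4286 and with the function f used in the proof of Theorem 3), and the second singleton mass of Example 3 is read as m({θ2}) = 0.1. The data representation and names are illustrative.

```python
from math import log2
from typing import Dict, FrozenSet, Iterable


def su(m: Dict[FrozenSet[str], float], frame: Iterable[str]) -> float:
    """Interval-probability-based total uncertainty (assumed form of SU)."""
    total = 0.0
    for theta in frame:
        bel = m.get(frozenset([theta]), 0.0)              # Bel(theta) = m({theta})
        pl = sum(v for a, v in m.items() if theta in a)   # Pl(theta)
        center = (bel + pl) / 2
        if center > 0:
            total -= center * log2(center)                # discord part
        total += (pl - bel) / 2                           # non-specificity part
    return total


frame = ["t1", "t2", "t3"]
m_example3 = {
    frozenset(["t1"]): 0.2,
    frozenset(["t2"]): 0.1,          # assumed reading of the second singleton
    frozenset(["t2", "t3"]): 0.4,
    frozenset(frame): 0.3,
}
print(round(su(m_example3, frame), 4))
```

Under the same assumptions, a vacuous belief structure on this frame gives SU = 3, and a uniform Bayesian structure gives log2(3).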

4.3 Some properties of the proposed SU

Theorem 2

The uncertainty measure SU satisfies probability consistency, i.e., when m reduces to a Bayesian belief structure, SU is identical to Shannon entropy.

Proof

If m reduces to a Bayesian belief structure on Θ = {𝜃_1, 𝜃_2, ⋯, 𝜃_n} with m({𝜃_i}) = p_i for i = 1, 2, ⋯, n, we get Bel({𝜃_i}) = Pl({𝜃_i}) = p_i. So the interval probability distribution reduces to a precise probability distribution over Θ.

Then it follows that

\[ SU(m) = \sum_{i=1}^{n} \left[ -\frac{p_i+p_i}{2}\log_2\frac{p_i+p_i}{2} + \frac{p_i-p_i}{2} \right] = -\sum_{i=1}^{n} p_i \log_2 p_i. \]

Hence, SU is identical to the Shannon entropy. □
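A quick numerical check of Theorem 2 is sketched below. It assumes the same form of SU as above (entropy term of the interval center plus half of the span), written here directly on a list of [Bel, Pl] intervals. The probabilities in `p` are hypothetical and chosen only for illustration.

```python
from math import log2

def su_from_intervals(intervals):
    """Assumed SU on a list of (Bel, Pl) pairs, one pair per singleton."""
    total = 0.0
    for lower, upper in intervals:
        center = (lower + upper) / 2
        if center > 0:
            total -= center * log2(center)
        total += (upper - lower) / 2
    return total

def shannon(p):
    """Shannon entropy in bits."""
    return -sum(q * log2(q) for q in p if q > 0)

p = [0.5, 0.3, 0.2]                    # hypothetical Bayesian masses m({theta_i}) = p_i
bayes_intervals = [(q, q) for q in p]  # Bel = Pl = p_i, so every span is zero
print(su_from_intervals(bayes_intervals), shannon(p))
```

Both printed values coincide up to floating-point rounding, because every span term vanishes and every center equals p_i.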

Theorem 3

The range of measure SU is [0, |Θ|], where |Θ| is the cardinality of Θ.

Proof

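For 0 ≤ x ≤ y ≤ 1, we can define a function f(x,y) as:

\[ f(x,y) = -\frac{x+y}{2}\log_2\frac{x+y}{2} + \frac{y-x}{2}. \]

In order to obtain the maximum of f(x,y), we calculate the partial derivatives:

\[ \frac{\partial f}{\partial x} = -\frac{1}{2}\log_2\bigl(e(x+y)\bigr), \qquad \frac{\partial f}{\partial y} = -\frac{1}{2}\log_2\frac{e(x+y)}{4}. \]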

Given 0 ≤ x ≤ y ≤ 1, 0 ≤ x + y ≤ 2 < e, we have:

1) \(\frac{\partial f}{\partial x}>0\) for \(x+y<\frac{1}{e}\), \(\frac{\partial f}{\partial x}<0\) for \(x+y>\frac{1}{e}\), and \(\frac{\partial f}{\partial x}=0\) for \(x+y=\frac{1}{e}\);

2) \(\frac{\partial f}{\partial y}>0\) for \(x+y<\frac{4}{e}\), \(\frac{\partial f}{\partial y}<0\) for \(x+y>\frac{4}{e}\), and \(\frac{\partial f}{\partial y}=0\) for \(x+y=\frac{4}{e}\).

Therefore, f(x, y) is not monotone in x or in y. The maximum of f is attained either at a stationary point or on the boundary of the domain of (x,y). Moreover, \(\frac {\partial f}{\partial x}=0\) and \(\frac {\partial f}{\partial y}=0\) cannot hold simultaneously, i.e., no stationary point exists. Hence, the maximum of f can occur at three possible positions: (0, 1), (0, 0), and (1, 1).

Since f(1,1) = 0, f(0,0) = 0, f(0,1) = 1, we get f_max(x,y) = f(0,1) = 1.

The graph of the function shown in Fig. 1 also illustrates f_max(x,y) = f(0,1) = 1, which agrees with the theoretical analysis.

Therefore, for a belief structure m on Θ = {𝜃_1, 𝜃_2, ⋯, 𝜃_n}, SU(m) attains its maximum value SU_max(m) = n = |Θ| if and only if Bel(𝜃_i) = 0 and Pl(𝜃_i) = 1 for i = 1, 2, ⋯, n. This case occurs iff m is a vacuous belief structure.

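Next, we look for the lower bound.

Since 0 ≤ Bel(𝜃_i) ≤ Pl(𝜃_i) ≤ 1, we have

\[ -\frac{Bel(\theta_i)+Pl(\theta_i)}{2}\log_2\frac{Bel(\theta_i)+Pl(\theta_i)}{2} \ge 0, \qquad \frac{Pl(\theta_i)-Bel(\theta_i)}{2} \ge 0. \]

So SU(m) attains its minimum when both terms equal 0 for all 𝜃_i ∈ Θ. The second condition gives Bel(𝜃_i) = Pl(𝜃_i), which indicates that the focal elements of m are all singletons.

Then we have:

\[ -m(\theta_i)\log_2 m(\theta_i) = 0, \quad \text{i.e., } m(\theta_i) \in \{0, 1\}, \quad i = 1, 2, \cdots, n. \]

Considering the condition that \(\sum \limits _{i=1}^{n} {m(\theta _{i} )} =1\), we can get:

\[ m(\theta_j) = 1 \text{ for exactly one } j, \qquad m(\theta_i) = 0 \text{ for } i \ne j. \]

Therefore, SU(m) attains its minimum when m is a categorical belief structure whose focal element is a singleton.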

Finally, the range of SU is [0, |Θ|]. □

This theorem indicates that a vacuous BPA contains the most uncertainty, while a categorical BPA with a singleton focal element has the least uncertainty, which coincides with intuition. It is worth noting that the range of SU is identical to the axiomatic range of uncertainty proposed by Deng [4], but different from Klir and Wierman's requirement that the range of an uncertainty measure be [0, log_2 |Θ|] [20]. Klir has also mentioned that the range of uncertainty for evidence is [0, N], where 0 must be assigned to the unique uncertainty function that describes full certainty and N depends on the cardinality of the universal set involved and on the chosen unit of measurement [16]. The range of our uncertainty measure clearly satisfies this requirement.
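The per-singleton bound used in the proof of Theorem 3 can also be checked numerically. The sketch below evaluates an assumed form of f(x, y), namely the entropy term of the center (x + y)/2 plus the half-span (y − x)/2, on a grid over 0 ≤ x ≤ y ≤ 1. It is a sanity check on a finite grid, not a substitute for the proof, and the grid resolution `steps` is an arbitrary choice.

```python
from math import log2

def f(x, y):
    """Assumed per-singleton contribution for Bel = x and Pl = y."""
    center = (x + y) / 2
    entropy_term = -center * log2(center) if center > 0 else 0.0
    return entropy_term + (y - x) / 2

steps = 100
grid = [
    (f(i / steps, j / steps), i / steps, j / steps)
    for i in range(steps + 1)
    for j in range(i, steps + 1)  # enforce x <= y
]
best_value, best_x, best_y = max(grid)
worst_value = min(value for value, _, _ in grid)
print(best_value, best_x, best_y)  # largest grid value and where it occurs
print(worst_value)                 # smallest grid value
```

On this grid the largest value is reached at (x, y) = (0, 1) and the smallest value is 0, in line with the range [0, 1] for each singleton and hence [0, |Θ|] for SU.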

Theorem 4

If a BPA m is a categorical BPA focusing on A, then the uncertainty of m is |A|.

Proof

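Suppose that m is a categorical BPA on Θ = {𝜃_1, 𝜃_2, ⋯, 𝜃_n} with m(A) = 1. Without loss of generality, let A = {𝜃_1, 𝜃_2, ⋯, 𝜃_k}, k ≤ n.

Then we can get the belief function and plausibility function for each singleton. For k ≥ 2 (when k = 1, Bel({𝜃_1}) = Pl({𝜃_1}) = 1 and m is the minimum-uncertainty case of Theorem 3):

\[ Bel(\{\theta_i\}) = 0,\ Pl(\{\theta_i\}) = 1 \ \text{ for } i = 1, \cdots, k; \qquad Bel(\{\theta_i\}) = Pl(\{\theta_i\}) = 0 \ \text{ for } i = k+1, \cdots, n. \]

So we can calculate the uncertainty of m as:

\[ SU(m) = \sum_{i=1}^{k} \left[ -\frac{0+1}{2}\log_2\frac{0+1}{2} + \frac{1-0}{2} \right] + \sum_{i=k+1}^{n} 0 = k = |A|. \]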

Hence, the uncertainty of m is identical to the cardinality of A. □
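Theorem 4 can be illustrated on a small frame. The following sketch assumes the same form of SU as in Definition 8 (entropy term of the interval center plus half of the span) and a hypothetical five-element frame. It also covers the singleton case |A| = 1, where Bel = Pl = 1 and the assumed formula returns 0, which is the minimum identified in Theorem 3.

```python
from math import log2

def su(m, frame):
    """Assumed SU: entropy term of interval centers plus half-spans."""
    total = 0.0
    for theta in frame:
        lower = m.get(frozenset([theta]), 0.0)                    # Bel({theta})
        upper = sum(v for focal, v in m.items() if theta in focal)  # Pl({theta})
        center = (lower + upper) / 2
        if center > 0:
            total -= center * log2(center)
        total += (upper - lower) / 2
    return total

frame = ["t1", "t2", "t3", "t4", "t5"]  # hypothetical frame
values = {}
for k in range(1, len(frame) + 1):
    categorical = {frozenset(frame[:k]): 1.0}  # m(A) = 1 with |A| = k
    values[k] = su(categorical, frame)
print(values)
```

For k ≥ 2 every element of A contributes exactly 1, so the printed value equals |A|.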

We notice that m(A) = 1 says that A is sure to occur. In this case, the belief structure m coincides with the classical set A. Since the set consistency in [20] is proposed on the basis of the Hartley measure, this theorem indicates that the measure SU is not set consistent in that sense. However, the uncertainty degree of a classical set is related to its cardinality. So we can give a generalized form of the uncertainty measure for a classical set A as:

\[ U(A) = f(|A|), \]

where f is an increasing function with respect to |A|.

In this sense, we can say that our proposed uncertainty measure SU is set consistent. Considering the generalized form of the uncertainty measure for classical sets, we call this property generalized set consistency.

5 Comparative examples

Firstly, let us revisit Example 1. By Definition 8, we get SU(m_1) = 2.5546 and SU(m_2) = 2.6929. This indicates that m_2 has a higher level of uncertainty, which is consistent with the previous intuitive analysis.

Then we reconsider Example 2, where the three belief structures have the same pignistic probability. By Eq. (18), we have SU(m_1) = 4, SU(m_2) = 3, SU(m_3) = 2. Our proposed uncertainty measure SU can therefore discriminate the different uncertainty levels of different belief structures, even though they have the same pignistic probability distribution. This result coincides with our previous intuitive analysis.

In the sequel, examples presented in [39] are used for reference.

Example 4

Let m be a belief structure on Θ = {𝜃_1, 𝜃_2}. A BPA is given as: m({𝜃_1}) = a, m({𝜃_2}) = b, m(Θ) = 1 − a − b, with a, b ∈ [0, 0.5]. Here, we calculate the AU, AM and SU values corresponding to different values of a and b. The changes of the AU, AM and SU values with a and b are illustrated in Fig. 2.

As shown in Fig. 2, AM reaches its maximum value whenever a = b, regardless of the common value of a and b, because when a = b the pignistic probability is uniformly distributed, i.e., BetP({𝜃_1}) = BetP({𝜃_2}) = 1/2. This is counter-intuitive, as analyzed in Example 2. The effect of total ignorance on the uncertainty degree cannot be reflected by AM. For example, for m_1({𝜃_1}) = m_1({𝜃_2}) = 0.5 and m_2({𝜃_1}) = m_2({𝜃_2}) = 0.25, m_2(Θ) = 0.5, we have AM(m_1) = AM(m_2). Obviously, it is irrational to say that m_1 and m_2 have the same degree of total uncertainty.

AU never changes with a and b. The value of AU is always log_2 2 = 1. Since Bel({𝜃_1}) = a, Pl({𝜃_1}) = a + 1 − a − b = 1 − b, Bel({𝜃_2}) = b, Pl({𝜃_2}) = b + 1 − a − b = 1 − a, the constraints for calculating AU in this example are:

\[ a \le p(\{\theta_1\}) \le 1-b, \qquad b \le p(\{\theta_2\}) \le 1-a, \qquad p(\{\theta_1\}) + p(\{\theta_2\}) = 1. \]

In the calculation of AU, we look for the probability distribution with the maximum Shannon entropy. Since a, b ∈ [0, 0.5], the uniform distribution p({𝜃_1}) = p({𝜃_2}) = 0.5 always satisfies the constraints above. So no matter how a and b change, p({𝜃_1}) = p({𝜃_2}) = 0.5 is always taken when calculating AU. Hence, AU always equals log_2 2 = 1. However, intuitively, the degree of uncertainty should change with a and b. So AU cannot describe the degree of total uncertainty well here.

In Fig. 2, we can see that SU gives rational results. It reaches its maximum when a = b = 0, i.e., m(Θ) = 1. When a + b is fixed, the maximum value is reached if a = b holds. For example, suppose that a + b = 0.3. The change of the SU value with respect to a is shown in Fig. 3. We can see that SU attains its maximum value when a = 0.15, i.e., a = b = 0.15. Since fixing a + b means that m(Θ) = 1 − (a + b) is fixed, the non-specificity part is fixed. The case a = b makes the discord part reach its maximum value. Therefore, the maximum SU is reached when a = b.

Similarly, in the case of a = b, the value of SU as a function of a is shown in Fig. 4. We can see that when a = b, the value of SU decreases as a increases. The uncertainty degree attains its maximum value when a = b = 0. Since a = b implies that the discord part of the uncertainty is equal to 1, the value of SU is determined by the non-specificity part, which is related to the basic probability assigned to Θ. So SU attains its maximum value in the case of a = b = 0. This is consistent with intuition.
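The behaviour described in Example 4 can be reproduced with the sketch below. It assumes the SU form of Definition 8 (entropy term of interval centers plus half-spans), computes AM as the Shannon entropy of the pignistic probability, and uses a closed form for AU that is valid only on this two-element frame: the admissible p({𝜃_1}) lies in [a, 1 − b], and entropy is largest at the admissible point closest to 0.5. The function names are illustrative.

```python
from math import log2

def entropy(p):
    """Sum of -q * log2(q) over the positive entries of p."""
    return -sum(q * log2(q) for q in p if q > 0)

def measures(a, b):
    """Return (SU, AM, AU) for m({t1}) = a, m({t2}) = b, m(Theta) = 1 - a - b."""
    ignorance = 1.0 - a - b
    # Belief intervals [Bel, Pl] of the two singletons.
    intervals = [(a, a + ignorance), (b, b + ignorance)]
    su = entropy([(lo + hi) / 2 for lo, hi in intervals])
    su += sum((hi - lo) / 2 for lo, hi in intervals)
    # AM: Shannon entropy of the pignistic probability BetP.
    am = entropy([a + ignorance / 2, b + ignorance / 2])
    # AU on a two-element frame only: clamp 0.5 into the interval [a, 1 - b].
    p1 = min(max(0.5, a), 1.0 - b)
    au = entropy([p1, 1.0 - p1])
    return su, am, au

print(measures(0.5, 0.5))    # m_1: Bayesian, no mass on Theta
print(measures(0.25, 0.25))  # m_2: half of the mass on Theta

# With a + b = 0.3 fixed, scan a on a 0.01 grid and locate the largest SU.
best_k = max(range(31), key=lambda k: measures(k / 100, (30 - k) / 100)[0])
print(best_k / 100)
```

In this sketch AM and AU return the same value for m_1 and m_2, while SU separates them, and the scan over a + b = 0.3 peaks at a = 0.15.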

Example 5

Suppose that the discernment frame is Θ = {𝜃_1, 𝜃_2, 𝜃_3} and a BPA over Θ is m(Θ) = 1. We make changes to the BPA step by step. In each step, m(Θ) decreases by Δ = 0.05 and each singleton mass m({𝜃_i}), i = 1, 2, 3, increases by Δ/3. Finally, m(Θ) reaches 0 and m({𝜃_i}) = 1/3. We calculate the total uncertainty measures SU, AM and AU at each step. The changes of the uncertainty values are shown in Fig. 5.

We can see that the total uncertainty measures AU and AM stay unchanged at the value log_2 3. Intuitively, when the mass assignments are transferred from the full set Θ to the singletons, the uncertainty level should decrease. Hence, the results of AU and AM are counter-intuitive. These counter-intuitive results are caused by the fact that AU and AM are defined through a probabilistic transformation of BPAs. In this example, the probabilistic transformation used in the calculation of AM and AU yields the uniform probability distribution P(𝜃_i) = 1/3, i = 1, 2, 3, at every step. Therefore, AM and AU never change while the BPA changes from a vacuous BPA to a Bayesian BPA. As we can see in Fig. 5, our new measure SU provides rational results. It becomes smaller and smaller as the BPA changes from a vacuous one to a Bayesian one. Therefore, it is inappropriate to define the total uncertainty measure for belief structures by switching from the evidence theory framework to the probability framework. It is better to design the total uncertainty measure directly in the framework of evidence theory. This is also the motivation of our work in this paper.
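The stepwise transfer in Example 5 can be simulated as follows. This is a sketch under the same assumptions as before: SU is taken as the entropy term of interval centers plus half-spans, and AM is the Shannon entropy of the pignistic probability. AU is left out because its general computation needs a constrained optimization. The labels `t1`, `t2`, `t3` are placeholders.

```python
from math import log2

FRAME = ("t1", "t2", "t3")

def pignistic(m):
    """BetP: split the mass of each focal element equally among its elements."""
    return {t: sum(v / len(focal) for focal, v in m.items() if t in focal)
            for t in FRAME}

def am(m):
    """AM: Shannon entropy of the pignistic probability."""
    return -sum(p * log2(p) for p in pignistic(m).values() if p > 0)

def su(m):
    """Assumed SU: entropy term of interval centers plus half-spans."""
    total = 0.0
    for t in FRAME:
        lower = m.get(frozenset([t]), 0.0)                    # Bel({t})
        upper = sum(v for focal, v in m.items() if t in focal)  # Pl({t})
        center = (lower + upper) / 2
        if center > 0:
            total -= center * log2(center)
        total += (upper - lower) / 2
    return total

su_values, am_values = [], []
for step in range(21):  # 20 transfers of 0.05 take m(Theta) from 1 to 0
    moved = step / 20
    m = {frozenset(FRAME): 1.0 - moved}
    for t in FRAME:
        m[frozenset([t])] = moved / 3
    su_values.append(su(m))
    am_values.append(am(m))

print([round(v, 3) for v in su_values])
print([round(v, 3) for v in am_values])
```

The SU sequence falls from 3 for the vacuous BPA to log_2 3 for the uniform Bayesian BPA, whereas the AM sequence stays at log_2 3 throughout.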

Example 6

Suppose that the discernment frame is Θ = {𝜃_1, 𝜃_2, 𝜃_3} and a BPA over Θ is m(Θ) = 1. We make changes to the BPA step by step. In each step, m(Θ) decreases by Δ = 0.05 and the singleton mass m({𝜃_1}) increases by Δ. In the final step, m(Θ) = 0 and m({𝜃_1}) = 1. We calculate the total uncertainty measures SU, AM and AU at each step. The changes of the uncertainty values are shown in Fig. 6.

In this example, the BPA changes from a vacuous one to a categorical one. As shown in Fig. 6, the total uncertainty values calculated by AU, AM and SU all become smaller and smaller, which is intuitive. However, we note that AU is not sensitive to the change of the BPA in the beginning stage. This can be explained as follows. Since the constraint for calculating AU is Bel({𝜃_1}) ≤ p({𝜃_1}) ≤ 1, the uniform distribution p({𝜃_1}) = p({𝜃_2}) = p({𝜃_3}) = 1/3 satisfies the constraint whenever Bel({𝜃_1}) = m({𝜃_1}) ≤ 1/3. So the uncertainty degree AU stays unchanged in the first 6 steps. This has already been criticized by Jousselme et al. [15]. Although both AM and SU change with the BPA in each step, we can see that the SU measure is more sensitive to the change of the BPA.
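The flat initial stage of AU in Example 6 can be seen in a small simulation. The sketch assumes the SU form of Definition 8 and uses a closed form for AU that holds only for this particular BPA: the sole binding constraint is p({𝜃_1}) ≥ m({𝜃_1}), so the maximum-entropy distribution is uniform until m({𝜃_1}) exceeds 1/3, after which p({𝜃_1}) = m({𝜃_1}) and the remainder is split equally. A general AU computation would need an optimization routine instead.

```python
from math import log2

def entropy(p):
    """Sum of -q * log2(q) over the positive entries of p."""
    return -sum(q * log2(q) for q in p if q > 0)

def measures(moved):
    """(SU, AM, AU) for m({t1}) = moved, m(Theta) = 1 - moved, |Theta| = 3."""
    rest = 1.0 - moved
    # Belief intervals: [moved, 1] for t1 and [0, rest] for t2 and t3.
    intervals = [(moved, 1.0), (0.0, rest), (0.0, rest)]
    su = entropy([(lo + hi) / 2 for lo, hi in intervals])
    su += sum((hi - lo) / 2 for lo, hi in intervals)
    # AM: entropy of the pignistic probability.
    am = entropy([moved + rest / 3, rest / 3, rest / 3])
    # AU for this BPA only: uniform while moved <= 1/3, then p(t1) = moved.
    p1 = max(moved, 1 / 3)
    au = entropy([p1, (1 - p1) / 2, (1 - p1) / 2])
    return su, am, au

rows = [measures(step / 20) for step in range(21)]
for step, (su, am, au) in enumerate(rows):
    print(step, round(su, 4), round(am, 4), round(au, 4))
```

In the printed table the AU column stays at log_2 3 through step 6 and only then starts to fall, while the SU and AM columns decrease at every step.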

Example 7

Suppose that the discernment frame is Θ = {𝜃_1, 𝜃_2, 𝜃_3, 𝜃_4, 𝜃_5, 𝜃_6, 𝜃_7, 𝜃_8}. A BPA over Θ is m(Θ) = 1. We make changes to the BPA step by step. In each step, m(Θ) decreases by Δ = 0.05, and the mass assignment for one focal element B with cardinality s < 8 increases by Δ = 0.05. In the final step, m(B) = 1. Here, we set s = 2, 4, 6, respectively. For each value of s, we repeat the whole procedure of BPA change. For the different values of s, we investigate the changes of the uncertainty measures SU, AM and AU.

For the convenience of comparison, we normalize the uncertainty values to the interval [0, 1]. The normalized uncertainty measures corresponding to AU, AM and SU are defined as follows, respectively:

\[ AU'(m) = \frac{AU(m)}{\log_2 n}, \qquad AM'(m) = \frac{AM(m)}{\log_2 n}, \qquad SU'(m) = \frac{SU(m)}{n}, \]

where n is the cardinality of the discernment frame.

For different cardinalities of B, the changes of the normalized uncertainty measures SU′, AU′ and AM′ are shown in Fig. 7.

As shown in Fig. 7, the total uncertainty values SU, AU and AM all decrease when more mass is transferred to B. Intuitively, when the mass is transferred to a focal element with smaller cardinality, the non-specificity decreases faster. So a smaller s brings a greater decrease of the total uncertainty. Comparatively, AM is not sensitive to the change of the BPA, since it is calculated from the pignistic probability, which changes only slightly with the BPA. In Fig. 7, we can also see that in the earlier steps AU stays unchanged for every s. Moreover, as s increases, the unchanged stage of AU is extended. The reason is as follows. At the beginning, the BPA is vacuous, and AU attains its maximum value with p({𝜃_i}) = 1/8, i = 1, 2, ⋯, 8. When more probability mass is transferred to B, the constraints for the calculation of AU show that the uniform distribution can still be used as long as m(B) ≤ s/8. Since m(B) = 0.05N after N steps, it follows that AU = log_2 8 = 3 holds whenever N ≤ 2.5s, where N is the step number. Therefore, AU stays unchanged in the first 2.5s steps.
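The length of the flat stage of AU can be checked with the sketch below. It rests on three assumptions that are not taken verbatim from the text: the SU form of Definition 8, a normalization that divides each measure by its maximum value (n for SU and log_2 n for AU and AM), and a closed form for AU that holds only for a BPA with the two focal elements B and Θ, where the binding constraint is p(B) ≥ m(B).

```python
from math import log2

N_FRAME = 8  # |Theta| in Example 7

def entropy(p):
    """Sum of -q * log2(q) over the positive entries of p."""
    return -sum(q * log2(q) for q in p if q > 0)

def normalized_measures(moved, s):
    """(SU', AM', AU') for m(B) = moved, m(Theta) = 1 - moved, 2 <= |B| = s < 8."""
    rest = 1.0 - moved
    inside = [(0.0, 1.0)] * s                 # [Bel, Pl] for elements of B
    outside = [(0.0, rest)] * (N_FRAME - s)   # [Bel, Pl] for the other elements
    intervals = inside + outside
    su = entropy([(lo + hi) / 2 for lo, hi in intervals])
    su += sum((hi - lo) / 2 for lo, hi in intervals)
    # AM: entropy of the pignistic probability.
    betp_in = moved / s + rest / N_FRAME
    betp_out = rest / N_FRAME
    am = entropy([betp_in] * s + [betp_out] * (N_FRAME - s))
    # AU for this BPA only: uniform is admissible while moved <= s / 8.
    p_b = max(moved, s / N_FRAME)
    au = entropy([p_b / s] * s + [(1 - p_b) / (N_FRAME - s)] * (N_FRAME - s))
    # Assumed normalization: divide each measure by its maximum value.
    return su / N_FRAME, am / log2(N_FRAME), au / log2(N_FRAME)

last_flat_step = {}
for s in (2, 4, 6):
    au_norm = [normalized_measures(step / 20, s)[2] for step in range(21)]
    last_flat_step[s] = max(
        step for step, v in enumerate(au_norm) if abs(v - 1.0) < 1e-12
    )
print(last_flat_step)  # last step at which AU' still equals 1, per s
```

The last flat step found for each s matches the bound N ≤ 2.5s derived above.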

Example 8

Suppose that the discernment frame is Θ = {𝜃_1, 𝜃_2, 𝜃_3, 𝜃_4, 𝜃_5, 𝜃_6, 𝜃_7, 𝜃_8, 𝜃_9, 𝜃_10}. A BPA over Θ is m(Θ) = 1 − a and m(A) = a, with |A| = s ≤ 10. Given a and the initial |A| = 1, we make changes to the BPA step by step. |A| increases by 1 in each step. In the end, the BPA becomes a vacuous one. We set a = 0.3, 0.5, 0.8, respectively. For each value of a, we repeat the whole procedure of BPA change. For the different values of a, the changes of the normalized uncertainty measures SU′, AU′ and AM′ are shown in Fig. 8.

As shown in Fig. 8, the total uncertainty measures AU, AM and our SU all increase with the change of the BPA at each step. This is intuitively reasonable, since increasing the cardinality of A makes the non-specificity increase. It can be noted that if a is given a larger value, they increase faster, because with the increase of |A| relatively more mass is assigned to a larger focal element. Figure 8 shows that AU stays unchanged when |A| is larger than a certain value. By the definition of AU, when |A| ≥ 10a, AU attains its maximum value log_2 10. Compared with our proposed SU, AM is not sensitive to the change of the BPA.

From the above illustrative examples, we can see that our proposed uncertainty measure SU is more sensitive to the change of BPAs. Due to the complexity of the optimization algorithm, the computational burden of AU is heavier than those of SU and AM. Moreover, since our proposed uncertainty measure SU avoids the probabilistic transformation of the BPA, it is more convenient for direct application.

6 Application of uncertainty measure SU

In this section, we apply our new total uncertainty measure in the evaluation of sensors’ importance for identification fusion to further show its rationality.

A successful application of evidence theory is identification fusion, which has received considerable attention in both military and civilian areas [24, 26, 27]. In target identification, due to the limitations of sensors and interference from the environment, the information derived from different sensors is usually imperfect. Hence, the target cannot be reliably identified by a single sensor. It is necessary to fuse information coming from multiple sensors to achieve better results. Due to its flexibility in combination and ease of use by the decision maker, evidence theory has been widely applied in multi-sensor data fusion.

When fusing the information coming from different sensors, it is necessary to assess the importance of each sensor. Generally, the importance of a sensor is quantified by a weighting factor, which is evaluated based on the similarity (distance) between the BPAs provided by different sensors [7, 25]. If the BPA provided by a sensor is similar to the others, then it is supported by the other sensors to a great degree and should be assigned a higher weighting factor. In our opinion, the importance of a sensor is also determined by the quality of the information it provides. We can suppose that if the information has a lower uncertainty degree, the corresponding sensor is more important. So the weighting factor of a sensor is simultaneously determined by the similarity degree between BPAs and the uncertainty degree of its own BPA. Here, we divide a sensor's importance into two parts, credibility and discriminability, which are determined by the similarity (distance) measure and the uncertainty measure, respectively. Intuitively, the sensor with a smaller total uncertainty should be better (have higher discriminability). Hence, based on the uncertainty measure, we can get the discriminability part of the importance degree of each sensor. Considering the range of each uncertainty measure, we use the normalized uncertainty measures to calculate the discriminability.

In a multi-sensor target recognition system, p sensors S_1, S_2, ⋯, S_p are employed to identify the target. The BPAs obtained from these sensors are m_1, m_2, ⋯, m_p, which are defined over the discernment frame Θ = {𝜃_1, 𝜃_2, ⋯, 𝜃_n}. Then the relative discriminability of each sensor can be calculated by:

where U ′ is the normalized uncertainty measure for belief structure.

Suppose that five sensors S_1, S_2, ⋯, S_5 are employed to identify aerial targets. The three possible types of targets are Airplane, Helicopter and Rocket, denoted as A, H and R, respectively. So the discernment frame Θ can be written as {A, H, R}. The sensor readings on the classes are expressed by the BPAs detailed as follows:

The normalized uncertainty values of each BPA calculated by SU′, AU′ and AM′ are shown in Table 1. We can see that the uncertainty values calculated by our proposed SU are all different from one another. So the discriminability of the sensors can be ranked as D_2 > D_5 > D_4 > D_3 > D_1. For the uncertainty measure AU, it is shown that AU(m_2) = AU(m_5) and AU(m_3) = AU(m_4). Hence, the discriminability degrees of S_2 and S_5 are evaluated as equal, and so are those of S_3 and S_4. Table 1 also shows that the uncertainty measure AM is not sensitive to the difference between m_3 and m_4. Based on AM, the discriminability of sensors S_3 and S_4 cannot be ranked well.

For clarity, Table 2 presents the relative discriminability degrees derived from the normalized uncertainty values. We find that the uncertainty measure SU provides a discriminability order for all sensors. The largest discriminability degree is assigned to sensor S_2 by SU and AM, and the smallest one is assigned to S_1 by SU and AM. However, S_3 and S_4 get the same discriminability degree based on AM. Table 2 shows that AU assigns the largest discriminability to S_2 and S_5, and the second largest one to S_3 and S_4. So it is difficult to determine the discriminability of each sensor based on AU.

In summary, our proposed uncertainty measure SU can be well applied in discriminability evaluation for identification fusion based on multi-sensor, and it outperforms AM and AU.

The relative discriminability can be used to evaluate the importance of each sensor in information fusion. Considering the method proposed in [7], we can fuse the information provided by five sensors by the modified Murphy’s combination rule [29]. The weighted average of all BPAs can be given as:

Based on the proposed uncertainty measure SU, we can get the weighting factor of each sensor as:

So the weighted average BPA can be obtained as:

Then we use the classical Dempster’s rule to combine the weighted average BPA 4 times, which is the same as Murphy’s approach [29]. The final result can be expressed as:

Hence, the target is recognized as an Airplane. Reexamining the BPAs provided by the five sensors, we note that all of them assign the maximum probability mass to the focal element {A}. This indicates that their fusion result should also point to {A} as the identity of the target. The combination rule based on our proposed uncertainty measure and the modified Murphy's approach thus gives a reasonable result that agrees with the intuitive analysis.
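The fusion procedure described above (weighted averaging followed by repeated Dempster combination, as in Murphy's approach) can be sketched as follows. The sensor BPAs and the weights in this example are hypothetical and are not the values used in the paper. The function names are illustrative as well.

```python
def dempster(m1, m2):
    """Dempster's rule: conjunctive combination followed by normalization."""
    combined, conflict = {}, 0.0
    for a, v1 in m1.items():
        for b, v2 in m2.items():
            common = a & b
            if common:
                combined[common] = combined.get(common, 0.0) + v1 * v2
            else:
                conflict += v1 * v2  # mass that would fall on the empty set
    if conflict >= 1.0:
        raise ValueError("totally conflicting evidence cannot be combined")
    return {focal: v / (1.0 - conflict) for focal, v in combined.items()}

def weighted_average(bpas, weights):
    """Weighted average of BPAs; the weights are expected to sum to 1."""
    avg = {}
    for m, w in zip(bpas, weights):
        for focal, v in m.items():
            avg[focal] = avg.get(focal, 0.0) + w * v
    return avg

A, H, R = frozenset("A"), frozenset("H"), frozenset("R")
THETA = A | H | R
# Hypothetical sensor reports and weights, NOT the values from the paper.
bpas = [
    {A: 0.4, H: 0.3, R: 0.1, THETA: 0.2},
    {A: 0.7, H: 0.1, THETA: 0.2},
    {A: 0.5, A | H: 0.3, THETA: 0.2},
    {A: 0.5, R: 0.2, THETA: 0.3},
    {A: 0.6, H: 0.2, R: 0.1, THETA: 0.1},
]
weights = [0.1, 0.3, 0.2, 0.2, 0.2]

avg = weighted_average(bpas, weights)
fused = avg
for _ in range(len(bpas) - 1):  # combine the averaged BPA with itself 4 times
    fused = dempster(fused, avg)
decision = max(fused, key=fused.get)
print(sorted(decision), round(fused[decision], 4))
```

With these illustrative inputs every sensor favours {A}, and the repeated combination concentrates even more mass on {A} than the averaged BPA does.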

7 Conclusions

In this paper, we investigated uncertainty measures for belief structures and defined an alternative uncertainty measure based on interval probabilities. We first critically reviewed existing uncertainty measures for belief structures. Then we proved that the belief interval [Bel(⋅), Pl(⋅)] is an interval probability distribution. The presentation of our uncertainty measure SU then followed. It has been proved that SU satisfies probability consistency. The range of SU is established by both theoretical proof and numerical examples. Although this wider range differs from that of existing measures, it is determined by the cardinality of the discernment frame, which is of great significance in the application of the uncertainty measure. Illustrative examples have revealed that our proposed measure is more sensitive to changes of belief structures than AM and AU. Furthermore, our new measure can provide more rational results in practical applications such as sensor evaluation. As for computational complexity, we compare our proposal with AM and AU. Considering the expressions of SU and AM, SU needs some addition and arithmetic-average operations, which are also necessary in the calculation of AM. These operations are much simpler than the logarithm operation and, in particular, than the nonlinear optimization needed for AU. So we can conclude that our proposed uncertainty measure does not incur a heavy computational burden.

References

[1] Abellán J, Masegosa A (2008) Requirements for total uncertainty measures in Dempster–Shafer theory of evidence. Int J Gen Syst 37(6):733–747

[2] Awasthi A, Chauhan SS (2011) Using AHP and Dempster-Shafer theory for evaluating sustainable transport solutions. Environ Modell Softw 26(6):787–796

[3] Dempster A (1967) Upper and lower probabilities induced by a multivalued mapping. Ann Math Stat 38(2):325–339

[4] Deng X, Deng Y (2014) On the axiomatic requirement of range to measure uncertainty. Physica A 406:163–168

[5] Deng X, Xiao F, Deng Y (2016) An improved distance-based total uncertainty measure in belief function theory. Appl Intell 46(4):898–915

[6] Deng Y (2016) Deng entropy. Chaos, Solitons & Fractals 91:549–553

[7] Deng Y, Shi W, Zhu Z, Liu Q (2004) Combining belief functions based on distance of evidence. Decis Support Syst 38(3):489–493

[8] Denoeux T (1999) Reasoning with imprecise belief structures. Int J Approx Reason 20(1):79–111

[9] Dubois D, Prade H (1985) A note on measures of specificity for fuzzy sets. Int J Gen Syst 10(4):279–283

[10] Dubois D, Prade H (1999) Properties of measures of information in evidence and possibility theories. Fuzzy Sets Syst 100(1):35–49

[11] Harmanec D, Klir GJ (1994) Measuring total uncertainty in Dempster-Shafer theory. Int J Gen Syst 22(4):405–419

[12] Hartley RV (1928) Transmission of information. Bell Syst Tech J 7(3):535–563

[13] Höhle U (1982) Entropy with respect to plausibility measures. In: Proceedings of the 12th IEEE international symposium on multiple-valued logic, pp 167–169

[14] Jiang W, Zhan J (2017) A modified combination rule in generalized evidence theory. Appl Intell 46(3):630–640

[15] Jousselme AL, Liu C, Grenier D, Bossé É (2006) Measuring ambiguity in the evidence theory. IEEE Trans Syst Man Cybern Syst Hum 36(5):890–903

[16] Klir GJ (2001) Measuring uncertainty and uncertainty-based information for imprecise probabilities. In: International fuzzy systems association (IFSA) world congress and 20th North American fuzzy information processing society (NAFIPS) international conference. IEEE, pp 1927–1934

[17] Klir GJ, Parviz B (1992) A note on the measure of discord. In: Proceedings of the eighth international conference on uncertainty in artificial intelligence. Morgan Kaufmann Publishers Inc., pp 138–141

[18] Klir GJ, Ramer A (1990) Uncertainty in Dempster-Shafer theory: a critical re-examination. Int J Gen Syst 18(2):155–166

[19] Klir GJ, Smith RM (1999) Recent developments in generalized information theory. Int J Fuzzy Syst 1(1):1–13

[20] Klir GJ, Wierman MJ (1999) Uncertainty-based information: elements of generalized information theory, vol 15. Springer Science & Business Media

[21] Klir GJ, Yuan B (1995) Fuzzy sets and fuzzy logic: theory and applications. Prentice-Hall, Upper Saddle River

[22] Klir G, Lewis HW (2008) Remarks on “measuring ambiguity in the evidence theory”. IEEE Trans Syst Man Cybern Syst Hum 38(4):995–999

[23] Körner R, Näther W (1995) On the specificity of evidences. Fuzzy Sets Syst 71(2):183–196

[24] Liu Z, Pan Q, Dezert J, Martin A (2016) Adaptive imputation of missing values for incomplete pattern classification. Pattern Recog 52:85–95

[25] Liu Z, Pan Q, Dezert J, Mercier G (2011) Combination of sources of evidence with different discounting factors based on a new dissimilarity measure. Decis Support Syst 52:133–141

[26] Liu Z, Pan Q, Dezert J, Mercier G (2014) Credal classification rule for uncertain data based on belief functions. Pattern Recog 47(7):2532–2541

[27] Liu Z, Pan Q, Dezert J, Mercier G (2015) Credal c-means clustering method based on belief functions. Knowl-Based Syst 74:119–132

[28] Maeda Y, Nguyen HT, Ichihashi H (1993) Maximum entropy algorithms for uncertainty measures. Int J Uncertainty Fuzzy and Know-Based Syst 1(1):69–93

[29] Murphy CK (2000) Combining belief functions when evidence conflicts. Decis Support Syst 29(1):1–9

[30] Ramer A (1987) Uniqueness of information measure in the theory of evidence. Fuzzy Sets Syst 24(2):183–196

[31] Shafer G (1976) A mathematical theory of evidence. Princeton University Press, Princeton

[32] Shannon CE (2001) A mathematical theory of communication. ACM SIGMOBILE Mobile Comput Commun Rev 5(1):3–55

[33] Smets P (2005) Decision making in the TBM: the necessity of the pignistic transformation. Int J Approx Reason 38(2):133–147

[34] Wang YM, Yang JB, Xu DL, Chin KS (2006) The evidential reasoning approach for multiple attribute decision analysis using interval belief degrees. Eur J Oper Res 175(1):35–66

[35] Wang YM, Yang JB, Xu DL, Chin KS (2007) On the combination and normalization of interval-valued belief structures. Inf Sci 177:1230–1247

[36] Yager RR (2001) Dempster-Shafer belief structures with interval valued focal weights. Int J Intell Syst 16:497–512

[37] Yager RR, Alajlan N (2015) Dempster–Shafer belief structures for decision making under uncertainty. Knowl-Based Syst 80:58–66

[38] Yager RR (1983) Entropy and specificity in a mathematical theory of evidence. Int J Gen Syst 9(4):249–260

[39] Yang Y, Han D (2016) A new distance-based total uncertainty measure in the theory of belief functions. Knowl-Based Syst 94:114–123

Acknowledgments

This work is supported by the Natural Science Foundation of China under grants No. 61273275, No. 60975026, No. 61573375 and No. 61503407.

Cite this article

Wang, X., Song, Y. Uncertainty measure in evidence theory with its applications. Appl Intell 48, 1672–1688 (2018). https://doi.org/10.1007/s10489-017-1024-y

DOI: https://doi.org/10.1007/s10489-017-1024-y