Abstract

Artificial intelligence (AI) models and optimization algorithms (OA) are broadly employed in different fields of technology and science and have recently been applied to improve different stages of plant tissue culture. The usefulness of the application of AI-OA has been demonstrated in the prediction and optimization of length and number of microshoots or roots, biomass in plant cell cultures or hairy root culture, and optimization of environmental conditions to achieve maximum productivity and efficiency, as well as classification of microshoots and somatic embryos. Despite its potential, the use of AI and OA in this field has been limited due to complex definition terms and computational algorithms. Therefore, a systematic review to unravel modeling and optimizing methods is important for plant researchers and has been acknowledged in this study. First, the main steps for AI-OA development (from data selection to evaluation of prediction and classification models), as well as several AI models such as artificial neural networks (ANNs), neurofuzzy logic, support vector machines (SVMs), decision trees, random forest (FR), and genetic algorithms (GA), have been represented. Then, the application of AI-OA models in different steps of plant tissue culture has been discussed and highlighted. This review also points out limitations in the application of AI-OA in different plant tissue culture processes and provides a new view for future study objectives.

Key points

• Artificial intelligence models and optimization algorithms can be considered a novel and reliable computational method in plant tissue culture.

• This review provides the main steps and concepts for model development.

• The application of machine learning algorithms in different steps of plant tissue culture has been discussed and highlighted.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

Plant tissue culture can be considered the culture or cultivation of specific plant cells, organs, or tissues (explant) under axenic conditions, which is based on “totipotency” (Bhojwani and Dantu 2013). The term totipotency means that all plant cells (except male and female gametes) include the full range of genes, which makes it theoretically possible for individual cells under in vitro condition to develop into healthy and true-to-type plants (Bhojwani and Dantu 2013). This process provides the foundation for “micropropagation” in which culture vessels are used for propagation from various explants. Nowadays, in vitro culture can be considered one of the most important methodologies for the breeding and propagation of many plant species. Without in vitro culture, different methods such as micropropagation, in vitro shoot regeneration, gynogenesis, androgenesis, the production of plant-derived metabolites, or somatic embryogenesis would not be achievable (Raj and Saudagar 2019; Hesami et al. 2018c). However, it is necessary to optimize the in vitro culture conditions (Fig. 1) for each species, and in some cases each genotype within a species, and for different stages of growth and development such as callogenesis, embryogenesis, shooting, and rooting (Gray and Trigiano 2018). For instance, the composition and concentrations of macro and micro nutrients, vitamins, and amino acids have a profound effect on organogenesis as reported in several studies with different plants. Although a plethora of studies have used MS (Murashige and Skoog 1962) salts as a basal medium for organogenesis in different plants, the composition of MS medium is based on analysis of tissue ashes of tobacco (Arab et al. 2018; Jamshidi et al. 2019; Akin et al. 2020; Nezami-Alanagh et al. 2018). Since the nutrient requirements for different tissue culture systems and plant species vary, it is necessary to develop medium formulations optimized for specific species and stages of development for maximal efficiency. However, due to the large number of media components, design and modification of a medium for specific purpose needs high expertise and is time-consuming (Phillips and Garda 2019). Hildebrandt et al. (1946) indicated that more than 16000 different treatments were required for designing a new culture medium. Also, Murashige and Skoog (1962) spent about five years to establish and develop the culture medium by using eighty-one different combinations of macro- and micro-elements and vitamins. To ease this problem, computer technologies such as artificial intelligence (AI) would be helpful to reduce this long and cumbersome process.

Although there are numerous biological events that can readily be observed in different stages of in vitro culture, all of them are non-linear and non-deterministic and, furthermore, are impacted by multiple other factors as well (Osama et al. 2015; Zielinska and Kepczynska 2013). The complex interactions of many factors make optimization using traditional statistics problematic and would require unrealistic numbers of treatments. Therefore, the application of the appropriate AI models can be considered a useful and precise methodology to simulate and predict different growth and developmental processes under in vitro conditions to help optimize protocols with fewer treatments.

Recently, there has been an increase in plant tissue culture modeling using data-driven (also known as machine learning or AI) models (Prasad and Gupta 2008a; Osama et al. 2015; García-Pérez et al. 2020a; Kaur et al. 2020). AI models include various designs that might cover different views to in vitro processes (Tani et al. 1992). Modeling different steps of plant tissue culture is one of the most remarkable challenges in the field of in vitro culture (Frossyniotis et al. 2008). This rise is a consequence of the physical complexity of plant tissue culture and the time and cost needed for analyzing different elements of the in vitro culture process (Shiotani et al. 1994; Molto and Harrell 1993; Hesami et al. 2017c). AI models have been found to be very applicable and reliable methods to help cope with those challenges and problems by providing opportunities to construct AI models from experimental and observed data and, also, improving the response of decision-makers facing complex systems in plant tissue culture (Osama et al. 2013; Araghinejad et al. 2017; Hesami et al. 2020b). Since AI tools are able to model different in vitro systems and subsequent outcomes of biological processes without the requirement for a profound knowledge of the physical systems neighboring the process, these methods are becoming common among plant tissue culture researchers (Osama et al. 2015; Zielinska and Kepczynska 2013).

Data-driven models, as understood by the name, refer to various methods that predict and model a process based on real information obtained from the process (Maddalena et al. 2020; Moravej et al. 2020; Dezfooli et al. 2018). They consist of various models, generally classified into soft computing and statistical methods (Akbari and Deligani 2020; Ebrahimian et al. 2020). Data-driven models can be generally categorized as accurate, inexpensive, precise, and flexible methods, which make them appropriate approaches for predicting and studying different biological systems with various complexity degrees according to our knowledge about a process (Araghinejad et al. 2017; Hosseini-Moghari et al. 2017). Although data-driven models were primarily used in different fields of science and technology, they could be considered novel methods regarding soft computing (Maddalena et al. 2020; Araghinejad et al. 2018). Modeling through AI models, which is dependent on the levels of our knowledge of mathematical and statistical equations, can be described as a solution determined by “engineering thinking and judgment” to the field of computational biology.

Recently, several reports have been published regarding various applications of artificial intelligence models in different procedures of plant tissue culture (Hesami et al. 2019b; Zielinska and Kepczynska 2013; Osama et al. 2015). All the reports confirmed the reliability and accuracy of AI in forecasting and optimizing growth and developmental processes under in vitro culture conditions. Thus, this review focuses on the recent developments in plant tissue culture using AI-OA. First, we have introduced the principle of modeling and optimizing as well as different well- known algorithms. Then, the application of AI-OA in different stages of plant tissue culture has been discussed.

Data-driven modeling basics

Preprocessing of data: first step before modeling

Although preprocessing data before using AI models is not required, it can sometimes improve the performance and accuracy of the models (Silva et al. 2019). Indeed, data preprocessing can guarantee that all data receive equivalent consideration during the training set. Two common preprocessing methods that can be applied are principle component analysis (PCA) and standardizing the data (Araghinejad et al. 2017). These approaches are described in this section.

Principal component analysis

The principal component analysis (PCA) is an approach that can be used for two purposes. The first is to eliminate the linear correlations among variables and the second is to decrease the data dimension. PCA replaces correlated variables by principal components (new uncorrelated variables). PCA uses a new orthogonal coordinate set to replace xy (Cartesian) coordinate set, where the first line crosses via the data scatter axis and the novel origin. The new coordinate process has merit over the previous version of the coordinate system that the first axis can be utilized to explain most of the variance, while the second axis provides only a little description. Therefore, the reduction of data dimension can be obtained by decreasing the second axis without missing much data when a significant correlation is available (Aït-Sahalia and Xiu 2019).

Linear combinations of the n vector of correlated vectors are shown by PCs and Xi. The PC number is equal to n, so, the full variance of the dataset is obtained through all PCs together. The first few PCs explain most of the variance, therefore, some PCs which represent a little of the variance can be ignored. n PCs are determined based on the following equations:

Figure 2 represents PCA for a two-dimensional (2D) dataset.

Standardizing

Data standardization as a simple method can improve the model efficiency. In this method, the specified range (usually between zero and one) is used for transmitting all variables. Data standardization prevents the negative effects of input variables with various ranges on the model efficiency. The following equation is one of the most common equations for the data standardization:

where xmin, xmax, and xnormal are the minimum, maximum, and normalized values of x, respectively. By using this equation for each of the output and input vectors, all variables are transmitted between 0 and 1 (Kumari and Swarnkar 2020).

Network selection

Network selection involves choosing the network, initial weight matrix, size and number of hidden layers, etc. (Osama et al. 2015).

Training selection

Training selection should be started with initial weight and network topology and training the network on the training dataset. After reaching the satisfactory minimum error, the weights will be saved (Silva et al. 2019).

Testing and interpretation of results

The trained network is employed to test the dataset to obtain the error. If it is not satisfactory, the network architecture or training set requires to be modified (Silva et al. 2019).

Assessment of the developed model

The concluding assessment of the network quality is addressed after the training processes completed through testing datasets based on different performance criteria such as the sum of the squares of the error (SSE), mean square error (MSE), root mean square error (RMSE), mean absolute error (MAE), mean bias error (MBE), the linear Pearson’s correlation coefficient (R), and coefficient of determination (R2) (Silva et al. 2019; Osama et al. 2015).

where yi presents the ih observed data, \( \overline{y} \) shows the mean of observed values, \( \hat{y_i} \)indicates the mean of predicted values, and n is the total number of predicted values.

Artificial intelligence models

Artificial neural networks

Different kinds of artificial neural networks (ANNs) including multilayer perceptron (MLP), generalized regression neural network (GRNN), radial basis function (RBF), and probabilistic neural network (PNN) are described in this section. Before introducing these ANNs, some terms should be defined:

Neuron: is the main unit of ANN, which based on a specific input variable and applying a transfer function, provides an appropriate response.

Architecture: is a network construction consists of input, hidden and output layers, number of neurons in each layer, the way of neuron connection, the flow of data (recurrent or straight), and specific transfer functions.

Train network: is the process of calibrating the ANN through input/output pairs.

Multilayer perceptron

The MLP as one of the most well-known ANNs includes one or more hidden layers, an input layer, and an output layer (Silva et al. 2019; Osama et al. 2015; Sheikhi et al. 2020) (Fig. 3a). A supervised training procedure is implemented by MLP that provides input and output variables to the network; the training set continues until the following equation would be minimized:

where K, yk, and\( {\hat{y}}_k \) are the number of datapoints, the kth observed data, and the kth forecasted data. In a three-layer MLP with n inputs and m neurons in the hidden layer \( \hat{y} \)determined as:

where xi is the ith input variable, w0 represents bias related to the neuron of output, wj0 is bias of the jth neuron of the hidden layer, f represents transfer functions for the output layer, g is the transfer functions for hidden layer, wji is the weight connecting the jth neuron of hidden layer and the ith input variable, and wj represents weight linking the neuron of output layer and the jth neuron of the hidden layer. Some of the well-known transfer functions are presented in Table 1.

Determining the construction of the MLP has the main function in its performance (Domingues et al. 2020; Niazian et al. 2018a). In the construction of this model, it is necessary to determine the number of neurons in each layer and the number of hidden layers (Niazian et al. 2018a). Hornik et al. (1989) revealed that the MLP with a sigmoid transfer function is general approximators; which shows that it could be trained to build any construction between the input and output variables. Therefore, the number of neurons in the hidden layer plays an important role in determining the construction of the MLP. Some studies (Sheikhi et al. 2020; Niazian et al. 2018a) have recommended the proper number of neurons (m) based on the number of data (K) or the number of input (n). For example, Tang and Fishwick (1993), Wong (1991), and Wanas et al. (1998) suggested “n,” “2n,” and “log (K)” as the suitable neuron number. Eventually, the optimal number of neurons in the hidden layer should be calculated by using trial and error, however, the reported offers could be employed as an initiating point. A large number of neurons contributes to the complexity of the network while a low number of them makes for simplicity of the network, therefore should be noted that a too simple network results in under-fitting, and, conversely, becoming too complex causes over-fitting (Domingues et al. 2020; Hosseini-Moghari and Araghinejad 2015).

Radial basis function

RBF is a three-layer ANN consisting of an input layer, a hidden layer, and an output layer (Fig. 3b). This is the basis and principal for radial basis networks, which organizes statistical ANNs. Statistical ANNs refer to networks which in contrast to the traditional ANNs implement regression-based approaches and have not been emulated by the biological neural networks (Lin et al. 2020). In an RBF model, Euclidean distance between the center of each neuron and the input is considered an input of transfer function for that neuron. The most well-known transfer function in RBF is the Gaussian function, which is determined based on the following equation:

where Xr,Xb, and h are input with unknown output, observed inputs in time b, and spread, respectively. The output of the function close to 1 when‖Xr − Xb‖approaches 0 and close to 0 when ‖Xr − Xb‖approaches a large value. Finally, the dependent variable (Yr) by predictor Xr is determined as follows:

where w0 andwjare the bias and weight of linkage between the bth hidden layer and the output layer, respectively.

Generalized regression neural network

GRNN introduced by Specht (1991) is another kind of statistical ANNs with a very fast training process. In the GRNN model, the number of observed data and the number of neurons in the hidden layer are equal. This model consists of an input layer, pattern layer, summation layer, and output layer (Fig. 3c). The pattern layer is completely connected to the input layer. D-summation and S-summation neurons of the summation layer are connected to the output derived from each neuron of the pattern layer. D-summation and S-summation neurons calculate the sum of the unweighted and weighted of the pattern layer, respectively. The connection weight between S-summation neuron and a neuron of the pattern layer is equal to the target output, while the connection weight for D-summation is unity. The output layer obtains the unknown value of output corresponding to the input vector, only via dividing the output of each S-summation neuron through the output of each D-summation neuron (Lan et al. 2020). Consequently, the following equation is used to determine the output value:

where Yrrepresents the output value, andTb is target associated with the bth observed data.

Probabilistic neural network

The probabilistic neural network (PNN) is a type of ANNs for classification aims. This model has a construction similar to that of the RBF model (Fig. 3d). When an input is provided, the first layer calculates distances from the calibration input vectors to the input vector and generates a probabilities vector as f(Xr,Xb). In the last layer, the maximum of these probabilities is picked by a complete transfer function in the output. PNN is not a regression model and cannot be forecasted continuous data, therefore, it can be employed as a method to qualitative predicting (Ying et al. 2020).

Neurofuzzy logic

Neurofuzzy logic is the result of the combination of the adaptive learning capabilities of ANNs and fuzzy logic (Dorzhigulov and James 2020). Designing neurofuzzy logic needs qualitative knowledge, but not quantitative. Neurofuzzy logic produces certain rules in an understandable and clear form: if there is a requirement, then there is a decision; therefore, demonstrating the relationships in observations. ANN models are applied to find the optimal levels of certain fuzzy logic parameters in neurofuzzy system and automatically determine fuzzy linguistic terms (rules) from numerical variables. The simplest and the most well-known method for constructing such models is to develop linguistic terms and membership functions by following these functions and then to review how this model operates. The network structure employed in neurofuzzy model consists of inference, fuzzyfication, and defuzzyfication facilities. The classic construction of the fuzzy logic indicates the description of operations conducted at each stage: (i) fuzzyfication, involving in defining the membership level of a special input variable to the size of each fuzzy sets comprising the possible ranges of inputs—this step is decreased to estimating functions or finding suitable variables in the tables, (ii) the application of fuzzy system indicators to define the degree to which a condition is reached in each of the functions, (iii) the use of the implication model, which causes the production of fuzzy sets representing each of the output variables happening in the conclusion, (iv) gathering all combinations of outputs for each of the representing outputs and all functions in one set of fuzzy, and (v) defuzzification, which involves in the specific value assigned to each of the outputs of the fuzzy set taken after gathering (Dorzhigulov and James 2020). Recently, several neuro-fuzzy approaches such as ASMOD (adaptive spline modeling of data), ANFIS (adaptive neuro-fuzzy inference systems), and NEFPROX (neuro-fuzzy systems for function approximation) have been developed and implemented. Among them, the ANFIS model gives a directed and systematic strategy for modeling and generates the best design parameters in the minimum time (Prado et al. 2020).

ANFIS can explain the complex system behavior according to the fuzzy if–then rules which are based on Sugeno fuzzy inference system. Consider the fuzzy inference system with x and y as input variables and z as an output. A typical ruleset, for the first order Sugeno fuzzy model, with four fuzzy if–then rules can be represented as:

where Ai and Bi (i = 1,2,3,4) are the fuzzy sets, pi,qi, and ri(i = 1,2,3,4) are the design parameters that are calculated during the training set. The construction of ANFIS includes input node layer, rule node layer, average node layer, consequent node layer, and output node layer (Fig. 4a).

Layer 1 (input node layer): All the nodes in layer 1 are adaptive nodes. The outputs of the input node layer are the fuzzy membership grade of the input variables, which are determined by:

where μand \( {O}_i^1 \)are membership functions and output from node i, respectively.

Layer 2 (rule node layer): In the second layer, the nodes are considered fixed nodes and labeled ∏, the AND operator is implemented to achieve one output that displays the result of the prior for that rule. The kth output of Layer 2 (wk) is calculated as:

which elucidates the firing strength of each rule. The firing strength indicates the level to which the prior section of the rule is satisfied, and it develops the function of the output for the rule.

Layer 3 (average node layer): In the third layer, the nodes are fixed nodes and labelledN. The normalization of firing strengths from the prior layer is the main function of layer 3. The normalized firing strengths are the outputs of the average node layer (\( \overline{w_i} \)), and can be determined as:

Layer 4 (consequent node layer): This layer determines the contribution of each ith rule in the whole output. The product of the first-order Sugeno model and the normalized firing strength is the output of each node in the fourth layer. Therefore, the outputs of the fourth layer can be shown as:

Layer 5 (output node layer): One single fixed node labeled Sis the only node in the fifth layer. Summing all incoming signals is the task of this layer. As a result, the following equation represents the final output of the ANFIS:

As can be seen, two sets of parameters should be determined and adjusted. The first set is premise parameters that are based on the input membership functions. The second set is consequent parameters {p,q,r} which are based on the first-order Sugeno model. The least-squares approach is implemented to adjust the consequent parameters and backpropagation algorithm is used to optimize premise parameters. It has been documented that high performance in training the ANFIS can be obtained by a hybrid algorithm (Prado et al. 2020).

Support vector machine

Support vector machines (SVMs) developed by Vapnik (2013) are the types of AI models with supervised and unsupervised learning that are utilized for classification, clustering, and regression analysis. Two types of SVM including support vector classification (SVC) and support vector regression (SVR) have been introduced in this section.

Support vector classification

SVC is a classification version of SVM; therefore, it can only be applied for qualitative predicting. SVC minimizes the risk of classification by dividing the decision space, which means that two groups have the highest distance from both lines. This means that among different separators, the separator is selected to have the highest distance of all groups. Figure 5 represents the differences between SVC classification and ANN classification methods. In Fig. 5, a and b are separators with error = 0; however, if a new input is added, these lines may lose their accuracy, while line c has the minimum risk for losing its precision. The following equation shows line c:

Where w and b are the classifier parameters and x is the variables in the decision space. As can be seen in Fig. 5, for classification, a margin is considered by SVC which determines as follow:

\( \frac{\left|b\right|}{\left\Vert w\right\Vert } \) represents the distance of a line from the origin. Therefore, the distance between the classifier line and the upper marginal line is computed as:

Thus, the width of the margin is calculated as:

The objective function of the SVC model can be considered the D value maximization or:

Also, when variables in the decision are in the ownership of the first class (y = 1),wTx + b should be ≥ 1, while with variables belonging to the second class (y = -1) in the decision space,wTx + b should be ≤ − 1. Thus, the optimization problem can be presented as:

The mentioned optimization problem is applied for the “hard margin” method where a solid fringe is taken account of the SVs. However, more flexibility is needed for practical purposes. This SVC will be achieved by receiving an error of ξ for each of the marginal lines. Therefore, the optimization can be represented as:

where ξi and C are a slack variable providing a soft classification margin and a penalty parameter, respectively. The dual problem can be solved to simplifying optimization. The dual solution can be written as:

whereαi ∈ ℜn presents Lagrange multipliers. In the training process, one αiexists for each vector. Support vectors (SVs) correspond to the nonzero subset (αi) and determine the decision surface, these SVs are the most informative. SVs present the location of the borderline (Fig. 5).

Mapping a higher-dimensional space through function φ(x) can be obtained by the decision space variables (x). Therefore, the dual problem can be shown as:

where k(xi, xj) = φ(xi). φ(xj) presents the kernel function. Thus, the SVC can be performed as follows:

This distinctive function is named as SVC. Table 2 has shown the kernel functions implemented in SVM’s formulations.

SVC presented in this section can be classified into two classes. When more than two categories are subjected, a suitable multiclass approach is required. There are two possible methods for this aim as following:

-

1.

Modification of the SVC design to directly combine the quadratic solving algorithm and multiclass learning.

-

2.

Incorporating several binary classifiers with two approaches:

“One against one” which employs pair comparisons among categories.

“One against the others” which compares a particular category with all the other categories.

Based on a comparative study (Kranjčić et al. 2019), the precision of these approaches has approximately been the same.

Support vector regression

The main difference between SVR and SVC is that in SVR, y instead of a binary number is considered a real number. When \( {\left\{\left({x}_i,{t}_i\right)\right\}}_i^n \)is considered a dataset, n, ti, and xi are the total number of observed data, ith output vector, and ith input vector. The following equation can be used for SVR determination:

Where b shows bias, w indicates weights, and the high dimensional feature space is shown asφ(x), which is non-linearly constructed based on the input space x. SVR tries to minimize the risk by placing predicted variables between the lower and upper bounds of the dataset. Lower and upper borderlines are written asy = wφ(x) + b − ε and y = wφ(x) + b + ε, respectively. Therefore, if a data is placed outside the marginal line, it must be adjusted (Fig. 4b). The following equations as an optimization set are applied for determining b and w coefficients:

where C is the penalty parameter, Lε shows insensitive loss function, andεis acceptable error (tube size). εand C are user-prescribed items. The following equation is used for the dual function with using Lagrange multipliers:

Then, w and b are calculated. The supporting vector is considered the Lagrange multipliers with non-zero values. Finally, the following equation is used for performing the SVR:

RBF can be considered one of the common kernel functions (Tong et al. 2020). Thus, an SVR with RBF as the kernel function can be shown as SVR(γ, C, ε).

Decision trees

Decision trees, as supervised machine learning models, are broadly employed in regression and classification tasks (Thomas et al. 2020). The decision tree structure consists of a root node, internal and leaf nodes. The internal nodes represent the values of the attributes, and each leaf node of the tree includes the probability distribution and the label of the class. There are different decision tree building algorithms such as chi-squared automatic interaction detector (CHAID), exhaustive CHAID, and Classification and regression tree (CART). These models construct data in an easily interpretable way (Akin et al. 2018). The foremost differences among these models occur in the tree construction process. In CART, each node dissects into two nodes (binary splits), while in CHAID and exhaustive CHAID, each node dissects into more than two nodes (multiway splits) by default (Akin et al. 2018; Kusiak et al. 2010). All the methods restrict the tree size by avoiding over-fitting. CART first produces the whole tree and then makes a backward pruning (post-pruning) to optimize the size of the tree, while CHAID and exhaustive CHAID restrict any growth at the optimal size of the tree by employing a stopping criterion (Thomas et al. 2020). All descriptive variables are checked for the optimal split in the tree construction process, and trial splits are employed to find the best split point. The best split can be considered the one that maximizes the variance between groups and minimizes the variance within a group. Backward pruning (post-pruning) is carried out based on V-fold cross-validation. The whole tree is randomly allocated to the V number of groups. One of these groups is employed to validate the model and the residual is used to construct a model, and this step is repeated V times. V number of models are created and confirmed in this step (Thomas et al. 2020; Akin et al. 2018). The CHAID and exhaustive CHAID tree-producing methods include merging, splitting, and stopping processes. When the output variable is continuous (regression-type variables), the CHAID approach performs the best-next split based on the F test, while chi-square is employed when the output is categorical. Continuous variables are divided into groups with a similar sample size. The smallest Bonferroni adjusted p value is utilized for partitioning response variables. Descriptive variable pairs with the highest p-value are monitored for significance (which is generally considered 0.05). The pair is merged into a single node if the p-value is larger than 0.05. A group with three or more classes is analyzed to define the most significant split. When the node size is less than the predetermined minimum child and parent node size value, the tree growth process stops, otherwise it continues (Rashidi et al. 2014). The exhaustive CHAID, as a modified CHAID method, needs more computing time because it uses more dependent variable testing and merging (Akin et al. 2018; Kusiak et al. 2010).

Random forest

RF as an ensemble learning method is introduced firstly by Breiman (2001). RF can be categorized as an ensemble of unpruned trees. This algorithm has been successfully employed in both classification and regression due to its simplicity in design and superior efficiency. Several merit points of the RF model such as prevent overfitting, the ability to handle noise, and the ability to manage a large number of features have been reported (Silva et al. 2019; Biau et al. 2019). The final output of the FR model is the combination of the output data of the individual trees (Fig. 4c). To maintain the minimum correlation among the individual trees while keeping the strength of the individual trees, each of the individual trees is thus built with two random injected sources for classification accuracy. First, each individual tree is trained based on randomly drawn with replacement (bootstrap replica) of the training data. Second, the algorithm defines the best split, at each node of each tree, based on a small variable subset that is randomly chosen from the whole variable set. Furthermore, each tree is completely grown to obtain a high variance of the tree outputs and low bias (Biau et al. 2019).

To solve regression problems, the mean squared error (MSE) is used to how data branches from each node. In fact, MSE determines the distance of each node from the forecasted real value, serving to determine which branch is better for the forest.

where N is the number of data points, fi is the value returned by the RF algorithm, and yi is the value of data point i.To solve classification problems, the Gini index is applied to determine how nodes on the branch of a decision tree. Equation 31 determines which of the branches is more likely to happen based on the probability and class to calculates the Gini of each branch on a node.

where pi corresponds to the relative frequency of the class in the dataset and c is the class number.

Entropy can be also used to calculate how nodes branch in a decision tree.

Entropy employs a certain outcome probability to decide how the node should branch. Unlike the Gini index, entropy is more mathematical intensive because of the logarithmic function applied in its formula.

Data fusion model

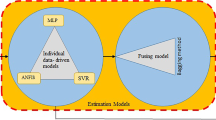

Nowadays, the necessity of increased precision and accuracy of AI models has encouraged researchers to establish applicable methods such as multi-model fusion-based (ensemble) approaches. The key idea of the ensemble model is fusing or combining data derived from fused information in order to obtain more precise predictions in comparing with implementing individual models (Alizadeh and Nikoo 2018). Many researchers in several fields of study have used data fusion (Alizadeh and Nikoo 2018; Hararuk et al. 2018; Meng et al. 2020). At more complex systems such as different stages of plant tissue culture, the ensemble model can be used to fuse the advantages and strengths of individual AI models. Several studies have demonstrated that ensemble models can be more reliable and accurate to model complex systems (Alizadeh and Nikoo 2018; Hararuk et al. 2018; Meng et al. 2020).

Data fusion is known as the process of combining and mixing data from various sources such as single outputs of several individual data-driven models (Fig. 4d) that the overall equation can be as follows:

where \( {\overset{\wedge }{y}}_i \) stands for target variable, x is a vector of independent indicators, ε stands for corresponding estimation error, and n is a number of observation data.

In order to develop data fusion models, Eq. (40) can be introduced to the following equation where several individual AI models are used as follows;

where m stands for the number of individual model and [\( {\overset{\wedge }{y}}_i \)] stands as matrix of estimations provided by each model.

Subsequently, the matrix of [\( {\overset{\wedge }{y}}_i \)] will be considered input data in fusion models.

Many methods such as bagging, K-nearest neighbors (KNN) algorithm, ordered weighted averaged (OWA) method based on the ORLIKE weighting method (ORLIKE-OWA) and ORNESS weighting method (ORNESS-OWA) have been recommended for fusing individual models, which reported that the most powerful and uncomplicated among different approaches is the bagging method for data fusing.

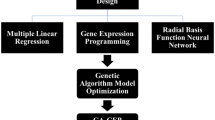

Optimization algorithms

In plant tissue culture, the optimum selection between existing various alternatives can significantly reduce the costs and time. Thus, the use of optimization methods in the field of plant tissue culture is of particular importance (Osama et al. 2015; Zielinska and Kepczynska 2013; Guangrong et al. 2008). In general, optimization methods are categorized into two groups including classic and evolutionary algorithms. Among classic methods, linear programming (LP), dynamic programming (DP), stochastic dynamic programming (SDP), and non-linear programming (NLP) can be mentioned (Goudarzi et al. 2020; Moravej 2017). Each of these methods has limitations that restrict their use. The LP method has the limitation of being linear. In this method, the entire set of relations should be linear; therefore, this method is not applicable to the nonlinear problems, which are common in plant tissue culture. In the DP method, the linear limitation was removed, but this method is only applicable to the discrete case. The SDP method, which is a stochastic form of the DP, also has this limitation; moreover, the computation volume in the DP and SDP will exponentially increase if the dimensions of the problem increase that this case is the so-called curse of dimensionality. The NLP method has higher accuracy than other methods, although in this way, the process of solving the problem is time-consuming, and in complicated problems, it may stop in the local optimums and does not reach a global optimum solution (Goudarzi et al. 2020; Moravej 2018; Bozorg-Haddad et al. 2017). The second category of methods includes evolutionary or metaheuristic ones, which have been developed based on a natural process (Moravej and Hosseini-Moghari 2016; Bozorg-Haddad et al. 2016; Hosseini-Moghari et al. 2015; Haddad Omid et al. 2016). Since these methods are not dependent on the problem type in terms of being linear or nonlinear and converge in the global optimum solution (close to the optimum) with a high-speed, are enormously popular. In this regard, in this paper, the Genetic Algorithm (GA), which is the most well-known evolutionary method, was introduced.

Among the particular contexts that the GA can be potentially applied, optimization of different in vitro culture stages, optimization of the MLP neural network weights, optimization of the spread parameter in the RBF, GRNN, etc., neural networks can be pointed out. In general, at any situation in which there are unknown variables or variables that their optimum amounts should be obtained based on a given criterion (objective function), the GA can be employed (Jamshidi et al. 2019).

In this section, firstly, we will discuss the basic concepts of optimization; some expressions such as the objective function, decision variable, and state variable will be introduced, and the GA will be explained. Then, the concepts of chromosome, gene, genetic and evolutionary operators, and the performance quality of the GA will be stated.

Basic concepts of optimization

Since the GA is an optimization algorithm, before entering into the discussions explaining the GA, at first, basic concepts of optimization will be stated. These concepts are general and exist in all optimization methods.

Decision variable: the variable that an optimization process is conducted to find its optimum value.

State variable: the variable that will not be optimized directly, but after determining the optimum amount of the decision variable, the value of this variable is also calculated; in fact, its value depends on the decision variable value.

Objective function: the criterion that optimum values of the decision variables are obtained based on it. This criterion can be defined as minimizing or maximizing.

The presence of the state variable in the optimization problems is not permanent, though the decision variable and objective function are two principal parts of any optimization method. For example, consider a function that gives an input such as x, and concludes an output like y. In a simple situation, it can be shown in the form of y = ax + b; however, if we want to determine the appropriate amounts of a and b according to the available data, we will require to a criterion for comparing between calculated ys and observed ones for different values of a and b. This criterion can be MSE or RMSE or any other criterion. In this example, a and b are decision variables of the problem, and MSE or RMSE are the objective functions of the problem, which we intend to minimize them.

Genetic algorithm

The GA, for the first time, was designed by Holland (1992) and developed as a powerful tool of optimization. The GA is a searching algorithm, which is derived from the biological nature and the process of natural selection. This method is based on Darwin’s notion, which says that in the environment, organisms that are much more stable than the others can always survive (Yun et al. 2020). Before entering into the discussion of the GA algorithm behavior, it is essential to know some of the basic concepts about GA.

Basic definitions

In the genetic algorithm, seven concepts are widely used; these concepts include (1) gene, (2) chromosome, (3) population, (4) evolutionary operators, (5) genetic operators, (6) elitism, and finally (7) generation. Genetic algorithm is based on these concepts, which will be defined as follows:

-

1.

Gene: in the GA, each decision variable of the problem is called a gene.

-

2.

Chromosome: a set of genes that are actually a series of answers for the studied problem are called a chromosome. In a problem with one decision variable, the gene and chromosome are the same.

-

3.

Population: a set of answers (chromosomes) is called population.

-

4.

Evolutionary operators: in order to create new answers in the GA, it is needed to select parent chromosomes; this selection is performed by the evolutionary operators.

-

5

Genetic operators: after selecting the parent chromosomes, creating new generations is performed by genetic operations of crossover and mutation.

-

6.

Elitism: In each iteration of the algorithm, the best answers are not undergone crossover and mutation, and are transferred to the next iteration intact, that they are called elite.

-

7.

Generation: each iteration of the algorithm is called a generation.

Overall flowchart of genetic algorithm

Before implementing the GA, some parameters such as selection method, crossover fraction, mutation rate etc. should be determined. Then, a set of probable answers will be created. Indeed, a population is created through chromosomes. These chromosomes possess the genes equal to the number of problem dimensions. During the process of optimization, these genes are improved by means of genetic operators of the crossover and mutation. Chromosomes are chosen for moving to the next generation according to the sufficiency of their corresponding objective function. In this selection, the operators such as the roulette wheel and tournament selection, which are evolutionary operators, are used. Using a crossover operator, a number of genes from two selected chromosomes are replaced with each other, moreover, some genes randomly changed by mutation operator. In addition, using the elitism parameter, we are able to increases the chance of selecting the best chromosomes, and consequently, improve the algorithm convergence. In the producing of each new generation, three operators including selection, crossover, and mutation, handle the process of optimization in such a way that created chromosomes make the objective function value better and better at each iteration to the extent that the optimization process will be ended by one of the stopping conditions (Yun et al. 2020). Figure 6 illustrates flowchart of the GA. The flowchart steps have been described in the following section.

Determining the parameters and stopping criteria

In order to implement the GA, the values of its parameters must first be determined, and then, for finishing algorithm process, the stopping conditions are necessary. In the determining part, some parameters such as the number of populations, crossover fraction, mutation rate, and the number of elites are determined. Optimum amounts of these values are given through the trial and error method. Crossover fraction is a percentage of the population on which crossover operation is performed. The mutation rate is a percentage of the genes of the population on which the mutation process is performed. Elites are a number of population members who are transferred unchanged to the next generation.

In order to end the algorithm procedure, we must consider the criterion; this criterion is arbitrary, but usually, the number of iterations (generation) is taken as the stopping criteria. In this way, the algorithm will stop after passing the maximum iterations. However, other criteria such as “to achieve a satisfactory answer” or “passing a certain number of iterations without observed improvement” or “time of algorithm implementation” can be selected as the stopping criteria.

Creating the initial population

The initial population, which its number is one of the algorithm parameters, is created randomly. This population is the number of chromosomes. The manner of creating an initial population is arbitrary but creating population between the upper and lower band of decision variables may be a good choice for the initial population.

Calculating the objective function value

Based on the created population and written cost function, the objective function value is calculated. In fact, equal to the number of population members, the objective function is calculated. Each objective function calculation is called an assessment; therefore, in a generation with a population equal to 50, the number of iterations is equal to 1, but the assessment number will be 50. The objective function value corresponding to each chromosome is used in the next stages of the algorithm.

The selection

In order to perform the crossover, two chromosomes should be selected. These chromosomes are considered parent, and by combining their genes, two offspring are established that are replaced with parents in the next generation. The selection process is usually based on the fitness value of the relevant objective function of each chromosome. Each chromosome that has more optimal objective function values is more likely to be selected and, as a result, to create the next generation. There are several ways to select that some of them are mentioned below.

Tournament Selection: In this method, some of the population members are randomly chosen. Among the selected set, a chromosome that shows the best fitness is considered parent. Selected members come back to the population, and once again, this procedure is performed, and the second parent is selected.

Roulette wheel: roulette wheel selection method is one of the most efficient and best-known methods of selecting. In this way, the probability of selecting chromosomes with higher fitness value of the objective function is greater; in other words, the probability of selection allocates to each chromosome corresponding to its fitness value.

To understand the mechanism of the roulette wheel, consider a circular plate (Fig. 7a), which has been divided into n parts (unequally). If we put an indicator in one side of the plate, and roll the plate, when the revolving plate stops, the parts that cover greater portions of the circle perimeter, have more probability to be the front of the indicator. The roulette wheel also employs a similar process for the selection of parent chromosomes. If the circle perimeter is equal to one, so the amount less than one will be allocated to the perimeter of each part (pi). Thus, if we cut the circle perimeter from a point, we will consider it like a line (Fig. 7a).

The schematic view of a Roulette wheel, b one-point crossover, c two-point crossover, d uniform crossover, e the mutation on a chromosome, f the objective space of an optimization problem with two objectives, where the yellow point in the center of areas is considered a solution, g the objective space of a two-objective optimization problem, and h the binary tournament selection

As over the line, perimeters (probabilities) are placed in the form of cumulative; if we create a random number in the range of zero to one, it is more likely to be placed in front of the ranges with a greater perimeter when the upper limit of each band is considered an index of the location of the random number; in this way, if the random number q is placed in the range of zero to p1, the index 1 will be selected, and If is placed in the range of p1 and p2 + p1, index 2, and so on.

The crossover

Survival of generations in nature is done by intercourse. In the GA, consequently, there is the nature of combination and intercourse. The combination is performed among chromosomes with gene replacement, and each parent chromosomes transfer their characteristics to their children. This process in the GA is arranged by means of the crossover operation. Crossover is a process in which the current generations of chromosomes mix, and create a new generation of chromosomes. Although the crossover operator may be applied on a chromosome or more than two chromosomes, usually, for each combination, two chromosomes are selected as the parent, and by joining them, two offspring are created. In the following section, some of the well-known crossover methods have been mentioned in the GA.

One-point crossover: this method was developed by Holland (1992). For mixing two chromosomes, parent chromosomes are cut from one given point and their genes are replaced with each other. Figure 7 b displays the single point crossover operator.

Two-point crossover: in this method, the parent chromosomes are randomly cut from two points. In this way, each chromosome is divided into three parts, which in order to combine, the middle section is fixed and the surrounding parts of two chromosomes are replaced (Fig. 7c).

Uniform crossover: in the previous methods, replacements were merely possible at cut points, whoever, in the uniform method, potential of replacement is uniformly considered for all genes. The number of replaced genes in this method is not fixed, but usually, half of the chromosome length is taken into account. Figure 7 d shows a uniform crossover operator.

These abovementioned methods are well used for binary problems, but in the continuous problems, replacement without changing genes is not appropriate, because in the continuous problems infinite answers are possible. Moreover, in the case of raw replacement of genes, their values are only replaced between established amounts in the initial population. In continuous problems, special methods are used to replace genes. Some of these methods include the arithmetic method and the sequential method.

Arithmetic method: In this method, the genes of two chromosomes of A and the B, which are selected for the crossover process, are transferred to offspring chromosomes (a,b) by using the following equations:

where α is selected in the interval of [0,1]. The amount of α = 0.5 causes that the children are gained out of parental genes. We can consider the α as a random number in the interval of [0,1] with different values for each gene.

Sequential method: in this method, parent chromosomes are cut from two points. Then, the middle section is kept constant for both chromosomes. The left and right parts, which are calculated for the first child in the second parent, have to start from the beginning of the chromosomes; genes that are absent in the middle of the first child are placed in the blank spaces randomly. In the second child, also, the middle part of the second parent is kept constant, and the previous process is performed based on the first parent.

The mutation

The aim of mutation in the GA is to create variety in the solutions. This operator beside the crossover operator leads to converge the GA toward the optimum solution. Mutation on chromosomes creates random variations. These changes contribute to enter the new genes into the population; as a result, creating new genes causes the comprehensive search of the algorithm. The mutation operator prevents the algorithm from trapping in the local optimum solutions. Furthermore, a low mutation rate causes that changes in the low number of genes does not have clear effects on the problem solution; in the other words, the high mutation rate makes children have little resemblance to their parent; so, this fact results in the loss of the historical memory of the algorithm. Thus, the mutation rate should be optimally determined by the trial and error method. Figure 7 e shows the mutation on a chromosome.

Random mutation operator (uniform): in this method, a number of genes are randomly replaced with new genes. Having a mutation rate and generating random numbers in the interval of [0,1], we can replace the genes, which their relevant random number is less than the rate of mutation, with a new gene. Creating a new gene could be based on the relationship of the initial population production.

Gaussian mutation operator: in this method, also, selection of a gene, which is supposed to be replaced by another gene by mutation operator, is similar to the previous method. In this way, if the selected gene is equal to xk, its value with the aid of a random number will be replaced with a normal distribution with mean xk and standard deviation σ. In this case, σ can be appropriated according to the permissible range of xk (upper and lower limit of the variable).

The presented methods in the previous section concerning the selection, crossover, and mutation are only a part of the existing methods in this area, although, knowing the basic concepts, the use of other methods will be possible; in addition, the inventive methods can be applied in this context as well. With respect to what was stated, the population in the next generation will be composed of three parts: the first part, elites that will be moved to the next generation without any change; the second part, the population obtained from crossover process; and the third part, the population obtained from mutation process.

The cost function

In the previous sections, we observed that the genetic and evolutionary operators act based on being appropriate for the objective function value corresponding to each chromosome. Nevertheless, the question is how to calculate the objective function value? To this end, we need a cost function. The cost function is not related to the implementation of the GA algorithm. Conversely, it is related to the optimization problem and should be written for each optimization problem separately. As it is clear from the name of the cost function, a function has to be defined. So, the desired function needs to input, and based on the input, it concludes output. A cost function in the GA takes chromosome into account as the input of the function, and the output of the function is the objective function value corresponding to that chromosome. In fact, the cost function is the main core for solving an optimization problem that the modeling system is carried out inside of it.

Multi-objective optimization

In many real problems of plant tissue culture, simultaneous optimization of multiple objective functions is considered. These functions are often in conflict with each other (George and Amudha 2020). For example, in the sterilization step, the sterilant has a negative effect on the explant viability and a positive impact on controlling contamination. Therefore, the ultimate aim of in vitro sterilization protocols would be the maximum explant viability and the minimum contamination.

In the multi-objective problems, in addition to the decision space of the problem, there is another space that is called objective space in which coordinate axes show the objective function value. Therefore, in order to define a multi-objective problem, if K ⊆ Rnis considered the n-dimensional search space, D ⊆ K as the justified space of multi-objective problem, andO ⊆ Rm as the m-dimensional objective space, then we will have:

where gp(x) is the pth inequality constraint, hq(x) is the qth equality constraint, P is the number of inequality constraints, Q is the number of equality constraints, \( {x}_i^{\mathrm{min}} \) is the lower limit of decision variables, and \( {x}_i^{\mathrm{max}} \) is the upper limit of decision variables.

In the single-objective optimization problems, judgment on the final optimal point was simple, because in this kind of problems, we only talk about one objective function. Hence, the optimal solution corresponding to the best value of the objective function can be regarded as the optimum solution of the problem. However, in judgment, in the multi-objective problems, it is not simple because the objective functions are usually in conflict with each other, so improving one will worsen the other. Therefore, we must establish a balance between the objectives. The aim of balancing is to find an equivalent solution between different objective functions of the problem. A balanced solution is concluded when it is not already possible to improve each of the objective functions without deteriorating other function values. According to the offered definition, a balanced solution of a problem may be more than one, and here, an individual solution will not be presented for the problem like the single-objective optimization. Each balanced solution is so-called a non-dominated solution, and the set of these solutions is known as non-dominated set or Pareto Optimal Set. In addition, these non-dominated solutions in the objective space of the problem form a front namely Pareto Front.

Domination: The solution x1 dominates solution x2 if and only if:

1. The solution x1 is worse than x2 in none of the objective functions.

2. The solution x1 is better than x2 at least in one objective function.

The mathematical expression of domination is presented as follows:

The definition above for both objective vectors (Z1, Z2) in the objective space can be defined similarly. If Z1 is not worse than Z2 in any objective function and is better than it at least in one objective function, then we will haveZ1 ≺ Z2. According to what was stated, it can be concluded that the solution x1 dominates the x2 solution when Z1 ≺ Z2.

Pareto Optimal: decision vector of x ∗ ∈ D will be called Pareto optimal if there is no other decision vector similar to x ≠ x∗ that dominates it. Moreover, decision vector of Z (x) will be Pareto optimal when x = x∗.

Pareto Optimal Set: the set of all decision vectors related to Pareto Optimal is named Pareto Optimal Set. If POS is Pareto Optimal Set, then it will be defined as follows.

Pareto Optimal front: The set of all objective vectors corresponding to the POS set is called the Pareto optimal front.

Figure 7 f is associated with the objective space of an optimization problem with two objectives. In this figure, if the yellow point in the center of four areas including 1, 2, 3, and 4 is placed as a solution, this point dominates all existing points in area 1, but all the existing points in area 3 dominate this point, whereas in areas 2 and 4, no point dominates this point as well as this point dominates no point in these areas. Considering areas 2 and 4, the necessity of providing a set of optimal solutions rather than a single solution is completely clear, because in this case, it is not certain which solution is better than other solutions. In fact, solutions have no superiority over each other.

Figure 7 g shows the objective space of a two-objective optimization problem. As it is obvious, the points that have not been dominated by any other points (Pareto point) form Pareto front. A good Pareto front is a one that has a maximum possible length, which means that covers all the non-dominated feasible solutions of the problem (George and Amudha 2020). In this regard, finding the points of both Pareto front sides will be more valuable. Infeasible points are known as points that do not meet the problem constraints. In fact, they are a mapping form of the infeasible decision variables in the decision space over objective space. Feasible points are points that meet the problem constraints, but was defeated by other point or points. Thus, the Pareto points are Feasible points that are non-dominated. It is necessary to note that in solving the problem, Pareto points are usually an estimation of Pareto front and cannot be fully compliant. The more compatibility among them is gained, the more quality of the obtained solutions is observed. (George and Amudha 2020).

Multi-objective optimization methods such as single-objective methods are divided into two categories: classic and smart (evolutionary). Of the classic solving methods related to multi-objective optimization problems, weighted sum method, goal programming, and goal attainment method can be noted. In these methods, a multi-objective problem by considering different weights for each objective will repeatedly be solved again and again, and thus, the Pareto curve is obtained. In other words, in these methods, with regard to the weight for each objective, the problem is changed into a single-objective problem. Therefore, these methods are so-called decomposition methods. Evolutionary multi-objective methods are very diverse, which include the first and second versions of the micro genetic algorithm (μGA, μGA2), and the first and second versions of non-dominated sorting genetic algorithm (NSGA-II, NSGA). The most well-known evolutionary multi-objective method is without doubt the NSGA-II (George and Amudha 2020). This algorithm will be described in the following section.

The second version of the non-dominated sorting genetic algorithm

NSGA-II algorithm is one of the most well-known and powerful algorithms that is applied for solving multi-objective optimization problems and its effectiveness has been proven in solving various problems. The first version of the algorithm (NSGA) was presented by Srinivas and Deb (1994). In this method, the concept of dominance was used and the Pareto that had an appropriate scattering plot (diversity in solutions) as well was considered more suitable Pareto. Important matters that exist in this optimization approach include:

-

The solution over which there is no other solution certainly better than is rated further. The solutions are ranked and sorted based on how many solutions dominate them.

-

Competence and quality of solutions is determined according to their rating.

-

Uses the Fitness Sharing method to diversify the solutions. In this method, if the Pareto points are closer to each other from a certain distance such as σ that is called sharing parameters, the fitness amount will be decreased with sharing between points.

The sensitivity of performance and quality of solutions in the algorithm NSGA to the parameter σ was very high, so that it was so difficult to determine its appropriate amount; moreover, the lack of elitism and complex calculations in determining the non-dominated solutions was so inappropriate in such a way that it was not simply negligible. In this regard, the second version of the algorithm NSGA namely NSGA-II was introduced by Deb et al. (2000) and Deb et al. (2002). This algorithm and its unique manner of dealing with the multi-objective optimization problems has been repeatedly applied by different researchers to create newer various multi-objective optimization algorithms. In the NSGA-II version, instead of the concept of the fitness sharing method, another concept called crowding distance has been used. Furthermore, the algorithm used to find the non-dominated solutions has reflected a significant improvement in terms of computational issues.

As mentioned before, a good Pareto front has two characteristics: the first feature is the solution quality, which means that the non-dominated solutions are in the Pareto front, and the second feature is the appropriate distribution and diversity of Pareto points. In the algorithm NSGA-II, the quality of solutions is determined by the relevant rank of each solution. Determining the rank of each solution is done by an algorithm called non-dominated sorting (NS) algorithm. the diversity of solutions is another criterion that is quantized by crowding distance. Therefore, a good solution is a solution that, in the first place, has the best quality and, in the second place, includes the highest crowding distance. In the following parts, these subjects will be discussed.

Fast non-dominated sorting algorithm

Discussed Pareto front until now is called the first front or F 1. If we do not take the F1 into account, another Pareto front can be extracted namely F2. This process can be applied until determining all fronts. For non-dominated sorting of a population with the size of N, any solution will be compared with other solutions. All individuals of the population, which are not dominated by any member, are placed in F1. For finding individuals of the next front, the previous process can be repeated by temporary ignoring of F1. This approach will nearly impose extra computational issues on us. In this section, the fast non-dominated sorting approach, which was introduced by Deb et al. (2000), will be discussed.

At first, for each solution, we consider two features; first feature ni, which is the number of solutions that dominates i solution. The second feature Si, which is a collection of the population individuals that are dominated by i solution. Whole solutions that have a ni equal to zero, are known as members of F1 (these solutions are rated as 1, the rate of anybody is the number front that is placed in). When F1 is called the current front, for each solution in the current front if the jth individual is observed in the Si group, one unit will be decreased from nj. In this way, if nj is equal to zero, that solution will be located in a separate list called H.

When all individuals of the current front are evaluated, individuals of the F1 will be introduced as the first front, and the process by considering H as the current front will be repeated. From now, the NS is known the same as fast non-dominated sorting (FNS). Mathematical description of the abovementioned matters related to the non-dominated sorting of the population (P) are presented as follows:

Crowding distance

Among the optimal solutions presented with the same rank, the solution that could bring more diversity has superiority. Thus, the solutions that are in the vacant regions of the objective space of the problem are considered superior. This advantage is quantified using the crowding distance. This distance for individuals over each front is calculated separately. To this end, individuals existing in each front should be ranked in an ascending format according to each objective function. As the smallest and largest values of the objective function are of great importance, the amount of crowding distance is considered equal to ∞ for them. For the rest of the solutions, crowding distance is computed based on the normalized difference amount between the objective function of two sides (before and after) of each solution. If we are going to calculate the crowding distance for L solutions existing in the τ front, we will have:

By calculating the crowding distance, we are able to define an operator, which includes in the first place domination and in the second place the crowding distance. This operator can be employed in different steps of the NSGA-II algorithm as a guide to move toward an optimum Pareto with an even distribution. This operator, which is called the crowded-comparison operator (≺n) is defined as follows; assume that each member of the population, such as i , has two characteristics including rank (irank) and crowding distance (idistance)

Therefore, between two solutions with unequal ranks, the superior solution is one with a lower rank, or if the rank of solutions is equal, the solution that has more crowding distance is a better solution. The criterion (≺n) is used to select the parent and create the new population.

Here, it is clearly characterized the difference between the single-objective GA algorithm and NSGA-II algorithm. Since in the NSGA-II the objective space is not sortable, we are not able to find the best solution by a sorting. So, in any part of the GA, that amount of the Cost was considered a criterion for selecting, in the NSGA-II criterion of (≺n) will be replaced with the Cost.

Binary tournament selection

The selection process in the algorithm NSGA-II is performed using the binary tournament selection method. In this method, the following steps are pursued:

-

Two members of the population are chosen randomly.

-

If two members are not of the same rank, the members with a lower ranking are selected.

-

If two members are of the same rank, a member is selected that has further crowding distance.

-

Selected members are returned into the population, and the previous process is repeated for the second parent selection.

After selecting the parents, the crossover operator is applied over them and offspring are created; then, mutation is applied on the population. So, the existing population will consist of three sections; the first section, the previous population; the second section, the population obtained from the crossover; the third section, the population achieved from mutation. After the merging of these populations, to the number of N, superior individuals (N is the allowable size of the population) should be selected. In this regard, at first, the population is sorted in ascending order based on non-dominated sorting (NS), and we begin to take individuals from F1 to create a new population. If the number of F1 individuals is less than N, all of its members will be transferred to the new population, and the remaining members will be transferred from subsequent Fs until the new population size would be equal to N. Continuing this process in a front like Fk, the size of the new population reaches N, in this front, all individuals are the same rank; so, in such condition, the front Fk based on the crowding distance is sorted in decreasing order, and decision-making criteria would be the crowding distance. Until the new population reaches size N, individuals are transferred to the next generation from the beginning of the front Fk. Figure 7 h illustrates this process well. If the number of F1 members is more than N, because the rank of all members in this front is equal to one, existing individuals in the front according to crowding distance are sorted in decreasing order, and the first N members are elected as the new population. Figure 7 h shows how to select a new population. In this figure, P is the main population and Q is the population resulting from the crossover and mutation.

The algorithm procedure such as the GA will continue until satisfying one of the stopping conditions. The obtained non-dominated solutions from solving the multi-objective optimization problem have no priority over other solutions, and depending on the circumstances, each of them can be considered an optimal decision.

AI-OA in plant tissue culture

In vitro culture consists of non-linear and non-deterministic developmental processes. In fact, in vitro culture stages are multi-variable procedures impacted by different factors such as plant genotype, culture medium, different types and concentrations of plant growth regulators (PGRs), etc. (Fig. 1) (Zielinska and Kepczynska 2013). The data derived from the plant tissue culture process can be categorized as (1) binary inputs which have only two grades, e.g., non-embryogenic/embryogenic callus, (2) discrete variables which include more than two grades, such as the number of roots, shoots, and embryos, (3) continuous variables which can consist of any grade, e.g., length of shoots or roots, and callus weight, (4) time-series data, (5) temporal data, (6) fuzzy inputs that relate to the degree of vitrification, callus color, and the developmental stages of embryos, and (7) categorical variables, e.g., the type of reaction, or the type of phytohormones and carbohydrate sources (Prasad and Gupta 2008a; Osama et al. 2015). The complexity of this situation and the interactive nature of the variables makes optimization challenging using traditional approaches in which single variable are generally evaluated sequentially in isolation. To address this challenge, artificial intelligence models and optimization algorithms have recently been used for modeling, forecasting and optimizing different stages of plant tissue culture. AI and OA methods used in various steps of in vitro culture were presented in this section and the AI-OA application was summarized in Table 3. As summary of various studies using AI systems to optimize different stages of plant tissue is presented below.

In vitro sterilization

Surface sterilization is an initial step of micropropagation in which the final success of plant tissue culture is directly dependent on. The surface sterilization performance can be impacted by various factors, e.g., the type, age, and size of the explant, physiological phase (vegetative or reproductive) of the mother plant, physical in vitro conditions (temperature and light), type and concentration of sterilant, and immersion time. Several studies (Teixeira da Silva et al. 2016; Hesami et al. 2017a; Hesami et al. 2018b; Cuba-Díaz et al. 2020) revealed that treatments with longer immersion time and greater concentration of disinfectants led to better surface disinfection. However, there is a negative correlation between explant viability and high concentration of disinfectants with long immersion time such that the efficiency of sterilization must be balanced with explant health (Hesami et al. 2019b; Cuba-Díaz et al. 2020). Thus, the type/level of sterilant and immersion time must be optimized for each species and explant to obtain the best outcomes during the surface sterilization. Optimizing this step is costly and time-consuming and some disinfectants are not environmentally friendly and/or hazardous to human health. To ease this problem, A hybrid AI-OA could be a reliable and useful statistical methodology for forecasting and optimizing this step. For instance, Ivashchuk et al. (2018) used MLP and RBF methods for studying and predicting in vitro sterilization of Bellevalia sarmatica, Nigella damascene, and Echinacea purpurea. Different concentrations of lysoformin, biocide, liquid bleach, chloramine B, and silver nitrate and various immersion time were considered inputs and the percentage of contamination and explant viability were taken as outputs. Also, a different range from 9 to 19 of neurons in the hidden layer and function activation (linear and sigmoid) were used for constructing the MLP model. According to Ivashchuk et al. (2018), MLP models with different neurons in the hidden layer and both linear and sigmoid function activations can precisely predict sterilization efficacy. Furthermore, they reported that there were no significant differences between MLP and RBF for modeling and predicting sterilization. In another study, Hesami et al. (2019b) applied MLP-NSGA-II to model and optimize in vitro sterilization of chrysanthemum. They considered NaOCl, nano-silver, HgCl2, AgNO3, Ca(ClO)2, H2O2, and immersion times as inputs, and explant viability and contamination rate as outputs. They used the 3-layer backpropagation network to run the MLP model. For output and hidden layers transfer functions of the linear (purelin) and the hyperbolic tangent sigmoid (tansig) were used, respectively. Furthermore, a Levenberg-Marquardt algorithm was used to determine the optimum bias and weights. They reported that the MLP model could precisely forecast contamination frequency (R2 > 0.97) and explant viability (R2 > 0.94). Moreover, they considered contamination frequency and explant viability as two objective functions in the NSGA-II process to determine the optimum values of sterilants and immersion time. One thousand generation, 200 initial population, 0.05 mutation rate, 0.7 crossover rate, two-point crossover function, a binary tournament selection function, and the uniform of mutation function were considered. The ideal point of Pareto was selected such that explant viability and contamination frequency became the maximum and minimum, respectively. Based on sensitivity analysis, Hesami et al. (2019b) reported that NaOCl was the best sterilant for in vitro sterilization of chrysanthemum. Also, according to MLP-NSGAII, 1.62% Sodium hypochlorite at 13.96 minutes immersion time can cause the highest explant viability (99.98%) with no contamination. Moreover, according to their validation experiment, in vitro sterilization can be precisely predicted and optimized by MLP-NSGA-II.

Microenvironment inside the culture medium containers