Abstract

This paper presents an adaptive discretization strategy for level set topology optimization of structures based on hierarchical B-splines. This work focuses on the influence of the discretization approach and the adaptation strategy on the optimization results and computational cost. The geometry of the design is represented implicitly by the iso-contour of a level set function. The extended finite element method is used to predict the structural response. The level set function and the state variable fields are discretized by hierarchical B-splines. While first-order B-splines are used for the state variable fields, up to third-order B-splines are considered for discretizing the level set function. The discretizations of the design and the state variable fields are locally refined along the material interfaces and selectively coarsened within the bulk phases. For locally refined meshes, truncated B-splines are considered. The properties of the proposed mesh adaptation strategy are studied for level set topology optimization where either the initial design is comprised of a uniform array of inclusions or inclusions are generated during the optimization process. Numerical studies employing static linear elastic material/void problems in 2D and 3D demonstrate the ability of the proposed method to start from a coarse mesh and converge to designs with complex geometries, reducing the overall computational cost. Comparing optimization results for different B-spline orders suggests that higher interpolation order promote the development of smooth designs and suppress the emergence of small features, without providing an explicit feature size control. A distinct advantage of cubic over quadratic B-splines is not observed.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

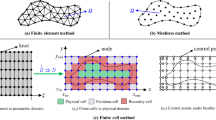

Following the seminal work of Bendsøe and Kikuchi (1988), topology optimization has become, over the last decades, a reliable and efficient design tool in various application fields (see Sigmund and Maute (2013) and Deaton and Grandhi (2014)). In general, an optimization problem is formulated to find the optimal material distribution, within a design domain, that maximizes a given objective while satisfying some given constraints on the geometry and/or the physical response. In specific regions of the design domains, the state variable fields may exhibit large gradients or discontinuities. Additionally, the design variable fields may have to resolve complex shapes, intricate material arrangements, or extremely thin features. In these cases, an increased mesh resolution is necessary to achieve a sufficient accuracy of both the physical responses and the geometry description. Most topology optimization approaches operate on uniformly refined meshes, for which extreme resolution requirements can lead to a drastic increase in computational cost since the number of design and state degrees of freedom (DOFs) are increased. Hence, there is a need for efficient adaptive strategies in topology optimization.

In the 1980s, Bennet and Botkin (1985) and Kikuchi et al. (1986) explored mesh adaptation in combination with shape optimization. Their objective was to avoid the distortions of the finite element mesh arising from shape modifications and thus to control the quality of the finite element solution. Later, Maute and Ramm (1995) and Maute et al. (1998) and Schleupen et al. (2000) performed topology optimization and used separate models for the design and the analysis to allow for more flexibility in the optimization process. In these works, the effective design space is adapted at each optimization step with respect to the current material distribution, to decrease both the number of finite elements in the analysis model and the number of design variables used for the geometry description. Ever since, adaptive topology optimization has been an active research topic, as it can provide both precise geometry representations and accurate mechanical responses at a reduced computational cost, substantially speeding up the design process.

Working with density-based approaches and solving compliance minimization problems, several adaptive strategies for topology optimization have been proposed. Costa and Alves (2003) carried out a refinement-only approach based on a density criterion. After a given number of optimization steps, the material and boundary elements are refined using h-adaptivity, i.e., the elements are split into smaller ones (see Yserentant (1986) and Krysl et al. (2003)). As no coarsening is introduced in the void regions and as no regularization is used, the optimization leads to mesh dependent designs that differ from the ones obtained with uniformly refined meshes. Stainko (2006) developed a refinement criterion based on a regularization filter indicator that locates the material interface. In this case, h-refinement is only applied around the interface and a reduced number of elements is generated during the optimization process. Extending the previous approaches, Wang et al. (2010) introduced mesh coarsening in the void regions, allowing for further cost reduction and achieving designs that only slightly differ from uniform ones. A similar approach is adopted in Nana et al. (2016) using unstructured meshes and in Nguyen-Xuan (2017) using polygonal elements. Also exploiting h-refinement, Bruggi and Verani (2011) trigger mesh adaptivity based on error estimators; one related to the geometric error and one to the analysis error.

In the previous approaches, the geometry description and the analysis are strongly coupled, as both the state and the design variables are defined on the same mesh. Following the initial idea by Maute and Ramm (1995), Guest and Smith Genut (2010) exploited the separation of the geometry and the analysis fields using Heaviside projection methods. After projection, multiple elements are influenced by a single design variable, introducing a redundancy in the design. The latter can be exploited to reduce the number of design variables, and therefore enhance computational performance. Wang et al. (2013, 2014) also refined the state and design fields separately by using two distinct meshes adapted by h-refinement and resorting to independent geometric and analysis error estimator criteria.

Later on, adaptive mesh refinement strategies were extended to other optimization techniques by, for example Wallin et al. (2012) in the context of phase fields and Panesar et al. (2017) for Bi-directional Evolutionary Structural Optimization (BESO). Adaptive mesh refinement has also been used to address stress-based optimization problems. Salazar de Troya and Tortorelli (2018) used a density-based approach and adapted the mesh following a stress error estimator, while Zhang et al. (2018) used moving morphable voids described explicitly by B-splines and refined regions located around the design boundaries.

So far, most topology optimization approaches have been implemented within the framework of classical finite element, i.e., relying on low-order Lagrange interpolation functions. Nonetheless, using B-splines or NURBS as basis functions has become an increasingly popular approach, along with the development of isogeometric analysis (IGA) (see Hughes et al. (2005) and Cottrell et al. (2009)). B-Splines are used to both describe the geometry of a structure and solve for its mechanical responses in IGA. From a geometry point of view, using B-splines and NURBS facilitates compatibility with computer-aided design (CAD) software that are based on this type of basis functions. From an analysis point of view, using smooth and higher order bases, such as quadratic and cubic B-splines, leads to more accurate structural responses per DOF than classical C0 finite element approaches (see Hughes et al. (2008), Evans et al. (2009), and Hughes et al. (2014)). For topology optimization, B-splines can offer additional advantages. They tend to promote smoother designs, prevent the development of spurious features, and limit the need for filtering techniques. Several works successfully apply topology optimization in combination with IGA; see for example Dedè et al. (2012) for a phase-field approach, Qian (2013) for a density approach, Wang and Benson (2016) or Jahangiry and Tavakkoli (2017) for a level set approach, Wang et al. (2017) for lattice structure designs, or Lieu and Lee (2017) for multi-material designs. A comprehensive review is presented in Wang et al. (2018).

Classical B-splines approaches do not allow for local refinement, as their tensor-product structure inherently enforces global refinement. However, in solving topology optimization problems, local mesh adaptivity is key to achieve both a precise description of the design boundaries and accurate mechanical response computations while maintaining a reasonable computational cost. Hierarchical B-splines (HB-splines), as introduced by Forsey and Bartels (1988), naturally support local refinement, but do not form a partition of unity (PU), a beneficial property for numerical purposes. Therefore, the concept was extended to so-called truncated hierarchical B-splines (THB-splines) to recover the PU and other advantageous properties (see Giannelli et al. (2012)). Local adaptive mesh refinement relying on HB-splines or THB-splines was implemented by Schillinger et al. (2012) and Garau and Vázquez (2018).

Recently, research efforts have been dedicated to the exploitation of B-spline refinement in conjunction with level set topology optimization. Solving compliance minimization problems, Bandara et al. (2016) used B-spline shape functions for both the geometry representation and the analysis with immersed boundary techniques. They proposed a global mesh refinement and coarsening strategy based on Catmull-Clark subdivision surfaces. Wang et al. (2019) focused on cellular structures and used B-spline shape functions to represent the geometry of each representative cell. In their approach, local refinement, i.e., cell subdivision, is achieved by knot insertion. Recently, Xie et al. (2020) used THB-splines as interpolation functions and the Solid Isotropic Material with Penalization (SIMP) method to perform density-based topology optimization with local refinement.

This paper proposes an adaptive mesh refinement strategy using HB-splines to perform level set topology optimization. We use a level set function (LSF) to describe the design geometry implicitly. The structural analysis relies on an immersed boundary technique to predict the system responses, here the eXtended Finite Element Method (XFEM) with a generalized Heaviside enrichment strategy. We discretize both the design and the state variable fields, i.e., the level set and the displacement fields, with HB-splines. Contrary to most approaches relying on lower order basis functions, we interpolate the design variables with higher order B-splines. For simplicity, the interpolation of the displacement field is restricted to first-order functions. For local refinement, we consider truncated B-splines. Although not widely used in the literature so far, THB-splines satisfy the PU and form a convex hull, allowing us to conveniently impose bounds on the design variables. Refinement is triggered according to a user-defined criterion, here the location of the design boundaries, and the mesh is refined along the interfaces and coarsened in the solid and void phases. As the design and state variable fields are defined on the same mesh, they are refined or coarsened simultaneously, which allows for a sufficiently accurate resolution of both the geometry and the physical response.

Two approaches to handle the design space in level set topology optimization are considered: (i) seeding an initial hole pattern and (ii) nucleating holes during the optimization process using a combined level set/density approach. We solve the optimization problems with mathematical programming techniques and in particular the Globally Convergent Method of Moving Asymptotes (GCMMA) (see Svanberg (2002)). The required sensitivity analysis is carried out with an adjoint formulation.

The ability of the proposed approach to generate complex geometries at a reduced computational cost starting from initial coarse meshes is assessed with two- and three-dimensional topology optimization problems. By varying the adaptive strategy and the underlying B-spline discretization, we can characterize the influence of the mesh adaptivity on the optimization results and the computational cost. Our numerical study results in interesting findings. First, the mesh adaptivity strategy influences the optimization process. Finer initial meshes and more frequent refinement operations lead to the development of more complex geometries exhibiting thin structural members. The properties of B-splines have an impact on the generated designs and an educated choice of the B-splines features can lead to advantageous behaviors for topology optimization. Higher order B-splines promote smoother geometries and tend to eliminate small spurious features from the design. Truncation allows us to conveniently handle enforcement of bounds on the design variables. The numerical examples also reveal that hole nucleation is crucial for the computational efficiency of the adaptive strategy, as it allows starting the optimization process on coarser meshes.

The remainder of the paper is organized as follows. Section 2 presents the level set description of the geometry using B-splines. Section 3 discusses the strategies adopted to achieve a sufficiently rich design space working with level set topology optimization. Section 4 details the mesh refinement and coarsening strategies, while Section 5 focuses on the construction of hierarchical B-spline bases. The structural analysis is described in Section 6; governing equations are detailed and the basic principles of XFEM are recalled. Section 7 is devoted to the optimization problem formulation, the used regularization schemes, and the sensitivity analysis implementation. Section 8 studies the proposed approach with 2D and 3D solid/void design examples. Finally, Section 9 summarizes the work and draws some conclusions.

2 Geometry description

Since the development of the level set method (LSM) by Osher and Sethian (1988), it has been extensively used in combination with shape and topology optimization to implicitly describe the geometry of the design (see van Dijk et al. (2013) and Sigmund and Maute (2013)).

A general description of the two-phase problems addressed in this paper is given in Fig. 1. Two material phases, A and B, are distributed over a d-dimensional design domain \(\varOmega \subset \mathbb {R}^{d}\). Material subdomains are non-overlapping, i.e., Ω =Ω A ∪ΩB. The boundaries of material subdomains A and B are denoted ∂ΩA and ∂ΩB. Dirichlet and Neumann boundary conditions are applied to \({{\Gamma }^{I}_{D}} = \partial \varOmega ^{I} \cap \partial \varOmega _{D}\) and \({{\Gamma }^{I}_{N}} = \partial \varOmega ^{I} \cap \partial \varOmega _{N}\) respectively, with I = A,B. The material phases are separated by an interface ΓAB = ∂ΩA ∩ ∂ΩB.

A LSF is used to describe the material distribution within the design domain Ω. At a given point x in space, the design geometry is defined as:

The LSF is interpolated using B-spline shape functions Bi(x) as:

where ϕi are the B-spline coefficients. In this work, the B-spline coefficients and corresponding shape functions are used to evaluated nodal level set values on the integration mesh, that is the mesh over which the weak form of the governing equations is integrated. These nodal values are used to interpolate the level set field linearly within an integration element. The linear approximation within an element simplifies the construction of the intersection of the LSF with the element edges and does not affect the accuracy of the analysis, as linear interpolation functions are used for the state variable field. Further explanations about the level set field interpolation on the analysis mesh are provided in Section 5.

Contrary to classical approaches by Wang et al. (2003) or Allaire et al. (2004), where the level set is updated in the optimization process by solving Hamilton-Jacobi-type equations, the coefficients of the LSF are here defined as explicit functions of the design variables. They are updated by mathematical programming techniques driven by shape sensitivity computations.

It should be noted that, in this work, no filter is used to widen the zone of influence of the design variables and thus enhance the convergence of the optimization problem, as proposed in Kreissl and Maute (2012). Proceeding this way, the B-spline basis function support is not altered and its influence on the optimization process and the obtained designs can be emphasized and compared for different B-spline orders.

3 Seeding of inclusions

The design updates in level set topology optimization is solely driven by shape sensitivities (see van Dijk et al. (2013)). To allow for a sufficient freedom in the design, level set optimization techniques require either seeding inclusions in the initial design or introducing inclusions during the optimization process. In the context of solid/void problem, the inclusion represents a hole.

Seeding inclusions in the initial design domain is a commonly used strategy and has been successfully implemented in level set topology optimization (see for example Villanueva and Maute (2014)). Nonetheless, this strategy leads to several difficulties. To avoid a premature removal of holes and geometric features which may lead to suboptimal designs, the optimization process is started from a feasible configuration. In this case, finding a hole pattern that satisfies the design constraints is not a trivial task. Moreover, this strategy requires a sufficiently fine mesh able to resolve both the physics and the design of the initial layout, as the initial hole pattern can easily constitute the most geometrically complex configuration over the entire optimization process. Thus, as will be shown in Section 8, seeding initial holes limits the computational effectiveness of the proposed adaptive discretization strategy. Therefore, allowing for hole seeding during the optimization process proves to be advantageous in the context of mesh adaptivity.

Topological derivatives constitute a systematic approach to seed new holes in the design domain during the optimization process (see Novotny and Sokołowski (2013) for a detailed introduction). The basic concept of evaluating the influence, i.e., the sensitivity, of introducing an infinitesimal hole on the objective and constraint functions was introduced by Eschenauer et al. (1994). A finite-sized hole is inserted at a location where the topological derivative field associated to an objective or considered cost function is minimal. The shape of the new hole is then optimized along with existing domain boundaries. The reader is referred to Sigmund and Maute (2013) or Maute (2017) for further details. Although proven useful, topological derivatives only provide information about where to place an infinitesimal hole and it remains unclear what the size and shape of a finite-sized hole should be.

Alternatively, hole seeding during the optimization process can be achieved by combining level set and density-based techniques, as explored recently in Kang and Wang (2013), Geiss et al. (2019b), and Burman et al. (2019) or Jansen (2019). Following the single-field approach of Barrera et al. (2019), an abstract design variable field, s(x), is introduced with \(s \in \mathbb {R}\), 0 ≤ s(x) ≤ 1, to define both the level set field, ϕ, and spatially variable material properties, such as the density ρ and the Young’s modulus E, using a SIMP interpolation scheme:

where ϕsc is a scaling parameter that accounts for the mesh size h and is set to \(\phi _{\text {sc}} \simeq 3h {\dots } 5h\), ϕth is a threshold that defines the void/solid phases in terms of s(x) and ρsh, a parameter that controls the minimum density in the solid domain. The properties of the bulk material are denoted by ρ0 and E0, and β is the SIMP exponent. The resulting interpolation scheme is illustrated in Fig. 2. Throughout the optimization process, the parameter ϕth is kept constant and equal to ϕth = 0.5, while ρsh is a continuation parameter and is gradually increased to ρsh = 1 during the process. To avoid ill-conditioning, its initial value is set to \(\rho _{\text {sh}} \simeq 0.1 {\dots } 0.2\), unless a smaller value is required to satisfy an initial mass or volume constraint. Contrary to classical density-based approaches, the density here is used primarily for hole seeding and for convergence acceleration. A 0 − 1 material distribution is achieved through continuation on the parameter ρsh. A low SIMP exponent, e.g., β = 2.0, is used to reduce the bias of the density method to rapidly separate the material distribution into a solid and void phase. For the proposed mesh adaptation strategy, we have observed that using a low SIMP exponent promotes the formation of fine features, especially when starting from coarse meshes.

In this paper, both approaches, i.e., the seeding of holes in the initial design and the combined level set/density scheme, are considered and compared.

4 Mesh adaptivity

This section focuses on the strategies used to perform hierarchical mesh refinement. First, we introduce the notion of a hierarchical mesh. We recall the basic concepts of mesh refinement and detail our implementation of the refinement strategy. Finally, the considered user-defined criteria, used to trigger mesh adaptation, are explained in detail.

4.1 Hierarchical mesh

In this work, a regular background tensor grid is used to build hierarchical meshes. The elements of the background grid do not carry any notion of interpolation, i.e., an element of the background grid is not associated with B-spline bases or Lagrange nodes. Starting from a uniform background grid with a refinement level l0 and subdividing its elements, elements with a higher refinement level l > l0 are created.

Formally to define a hierarchical mesh of depth n, a sequence of subdomains Ωl is introduced:

where each subdomain Ωl is the subregion of Ω selected to be refined at the level l and is the union of elements on the level l − 1. The creation of such a refinement pattern is illustrated in Fig. 3.

In Section 5, these subdomains Ωl, refined to a level l, are used to create hierarchical B-spline bases to interpolate the design and state variable fields.

4.2 Local refinement strategies

This subsection focuses on the refinement strategies used in this paper and explains their implementation. Before refinement is carried out by element subdivision, elements need to be flagged for adaptation. Elements can be flagged based on one or several user-defined criteria and on additional mesh regularity requirements.

A complete refinement step proceeds as stated in Algorithm 1. Initially, elements are flagged for refinement based on user-defined criteria, as further explained in Section 4.2.1. Additionally, elements are flagged within a so-called buffer region, around the previously flagged elements, so that the obtained refined mesh complies with specific regularity requirements, as further detailed in Section 4.2.2. Finally, a minimum refinement level \(l_{\min \limits }\) is enforced and elements with \(l< l_{\min \limits }\) are flagged and refined. To increase performance, flagged elements are collected in a refinement queue first and refined afterwards.

In this paper, design and state variables are defined on a common mesh, although the interpolation order may differ. Thus, the discretization of both fields are adapted simultaneously. While the proposed framework allows for separately refining state and design variable fields, this option is not considered here for the sake of simplicity.

After each adaptation of the mesh, the design variable field ϕ, as described in (2), is mapped to the new refined mesh through an L2 projection. The B-spline coefficients ϕi,new on the new mesh are obtained from the ones on the old mesh ϕi,old by solving:

where

This projection step requires an additional linear solve for a system whose size depends on the number of design variables of the new mesh.

4.2.1 User-defined refinement criteria

Refinement can be triggered by one or several user-defined criteria, including geometry criteria or finite element error indicators, although the latter are not considered here. In this paper, refinement is applied after a given number of optimization steps, defined by the user, and using a purely geometric criterion, i.e., the distance to the solid-void interfaces.

During each refinement step, an element is flagged for refinement or for keeping its current refinement level by the Algorithm 2. Each element mesh is flagged for refinement depending on its nodal level set values ϕ. The parameter ϕbw defines the bandwidth around the interface which is refined. This allows fine-tuning the zone of refinement in addition to the buffer introduced in Section 4.2.2. Over the course of the optimization process, at the solid-void boundary, the mesh is refined up to a maximum refinement level defined for the interface \(l_{\text {ifc},\max \limits }\). Within the solid phase, the mesh is refined up to a maximum refinement level \(l_{\text {solid},\max \limits }\), that is usually equal or smaller than the interface one, \(l_{\text {solid},\max \limits } \leq l_{\text {ifc},\max \limits }\). The void phase is never flagged for refinement. A minimum refinement level \(l_{\min \limits } \geq 0\) is applied. As each refinement operation is started from the coarsest refinement level possible, an element that is not flagged will not be refined at all. At the beginning of the optimization, \(l_{\min \limits }\) is generally set to \(l_{\min \limits } > 0\). By lowering \(l_{\min \limits }\) throughout the optimization process, a coarsening effect is achieved.

In the course of the optimization process, the shape of the solid-void interface changes and the interface may intersect elements previously not refined. In this study, the mesh is adapted to maintain a uniform refinement level for all intersected elements. Although not required by the proposed framework, this strategy is used here as it simplifies the XFEM enrichment (see Section 6.2). The same enrichment algorithm can be used for all intersected elements, as is the case for uniformly refined meshes. To this end, Algorithm 1 is executed, omitting Step 2 of Algorithm 2.

4.2.2 Mesh regularity requirements

In this paper, we limit the size difference between adjacent elements in the refined mesh, i.e., a refinement of more than a factor 4 in 2D and 8 in 3D is not allowed. Although not mandatory (see studies by Jensen (2016) and Panesar et al. (2017)), this rule is adopted in most works dedicated to adaptivity as it promotes accurate analysis results. This requirement can be achieved by enforcing a so-called buffer zone around elements primarily flagged for refinement. The width of the buffer zone dbuffer for a particular flagged element is calculated by multiplying its size with a user-defined buffer parameter bbuffer. The width of the buffer zone must be larger than the support size of the considered interpolation functions. Using B-splines, it means that bbuffer ≥ p, where p is the polynomial degree of the used B-spline basis.

The mesh refinement procedure for building a buffer zone is summarized in Algorithm 3. Within a refined mesh, coarse elements are called parents; refined elements, children. The algorithm is applied to each flagged element and starts by determining its parent element. The refinement status of the parent’s neighbors, i.e., elements lying within the buffer range of the considered parent, is checked. If these neighbors are neither refined nor flagged for refinement, the distance \(d_{\max \limits }\) between the considered parent element and its neighbors is calculated. If the distance \(d_{\max \limits }\) is smaller than the buffer size dbuffer, the neighbor elements are flagged for refinement. The algorithm is then applied recursively to all newly flagged neighbor elements, until no extra elements are flagged. An easy and efficient access to hierarchical mesh information, such as neighborhood relationships, is provided by using a quadtree and an octree data structure in 2D and 3D respectively.

5 Hierarchical B-splines

This section focuses on hierarchical B-splines for adaptively discretizing design variable and state variable fields. First the basic concepts of B-splines in one and multiple dimensions are recalled. Then, the principle of B-spline refinement and the construction of truncated and non-truncated hierarchical B-spline bases are briefly described.

5.1 Univariate B-spline basis functions

Starting from a knot vector \({\Xi } = \{ \xi _{1},\xi _{2}, {\dots } ,\xi _{n+p+1} \}\), for which \(\xi \in \mathbb {R}\) and \(\xi _{1} \leq \xi _{2} \leq {\dots } \leq \xi _{n+p+1}\), a univariate B-spline basis function Ni,p(ξ) of degree p is constructed recursively starting from the piecewise constant basis function:

and using the Cox de Boor recursion formula for higher degrees p > 0 (see de Boor (1972)):

A knot is said to have a multiplicity k if it is repeated in the knot vector. The corresponding B-spline basis exhibits a Cp−k continuity at that specific knot, while it is \(C^{\infty }\) in between the knots. A knot span is defined as the half-open interval [ξi,ξi+ 1). Within this context, an element is defined as a nonempty knot span.

5.2 Tensor-product B-spline basis functions

Tensor-product B-spline basis functions Bi,p(ξ) are obtained by applying the tensor product to univariate B-spline basis functions. Denoting the parametric space dimension as dp, a tensor-product B-spline basis is constructed starting from dp knot vectors Ξm = {\({\xi _{1}^{m}},{\xi _{2}^{m}}, \dots ,\)\(\xi _{n_{m}+p_{m}+1}^{m}\)} with pm donating the polynomial degree and nm the number of basis functions in the parametric direction \(m = 1, \dots , d_{p}\). A tensor-product B-spline basis function is generated from dp univariate B-splines \(N_{i_{m},p_{m}}^{m}(\xi ^{m})\) in each parametric direction m as:

where the position in the tensor-product structure is given by the index \(\mathbf {i} =\{ i_{1}, \dots , i_{d_{p}} \}\) and \(\mathbf {p} = \{ p_{1}, \dots , p_{d_{p}} \}\) is the polynomial degree. Similar to the univariate case, an element is defined as the tensor product of dp nonempty knot spans. Additionally, a B-spline space \(\mathcal {V}\) is defined as the span of B-spline basis functions.

5.3 B-Spline refinement

Hierarchical refinement of uniform B-splines can be achieved by subdivision. A univariate B-spline basis function can be expressed as a linear combination of p + 2 contracted, translated, and scaled copies of itself:

where the binomial coefficient is defined as:

Figure 4 shows the refinement of a quadratic univariate B-spline basis function obtained by subdivision.

The extension of the subdivision property in (11) to tensor-product B-spline basis functions Bp is straightforward as they exhibit a tensor-product structure:

where the indices \(\mathbf {j} = \{i_{1}, \dots , i_{d_{p}}\}\) indicate the position in the tensor-product structure.

5.4 Hierarchical B-splines

To build a hierarchical B-spline basis, a sequence of tensor-product B-spline spaces is introduced:

Each B-spline space \(\mathcal {V}^{l}\) has a corresponding basis \({\mathscr{B}}^{l}\).

A hierarchical B-spline basis \({\mathscr{H}}\) can be constructed recursively based on the sequence of B-spline bases \({\mathscr{B}}^{l}\) that span the domains Ωl. In an initial step, the basis functions defined on the coarsest level, l = 0, are collected and assigned to \({\mathscr{H}}^{0}\). The hierarchical B-spline basis \({\mathscr{H}}^{l+1}\) is constructed by taking the union of all basis functions B in \({\mathscr{H}}^{l}\) whose support is not fully enclosed in Ωl+ 1 and all basis functions B in \({\mathscr{B}}^{l+1}\) whose support lies in Ωl+ 1. Following Garau and Vázquez (2018), the recursive algorithm reads:

where the index l gives the level of refinement. Basis functions collected in \({\mathscr{H}}\), where \({\mathscr{H}} = {\mathscr{H}}^{n-1}\), are called active, while basis functions in \({\mathscr{B}}^{l}\) not present in \({\mathscr{H}}\) are said to be inactive.

A hierarchical B-spline basis \({\mathscr{H}}\) is illustrated for a one-dimensional example in Fig. 5. The top row shows a one-dimensional hierarchical refined mesh. Following (16) a B-spline basis \({\mathscr{H}}\) is created through an initialization step with all bases in the subdomain Ω0 refined to a level l = 0. Recursively, all bases in the subdomain Ωl+ 1 with higher refinement level l + 1 are added, while existing basis functions of level l fully enclosed in Ωl+ 1 are discarded.

Three levels of refinement on a given mesh. The refined mesh is depicted in the top figure. The three underlying figures present the three levels of refinement considered here. Within these three levels, the active B-spline basis \({\mathscr{H}}\) is displayed in black, while the inactive B-spline basis functions are displayed in gray

Contrary to h-refinement in classical finite element where extra treatments, such as the introduction of multi-point constraints, are necessary, hanging nodes in hierarchical refined meshes are naturally handled by the B-spline basis.

5.5 Truncated B-splines

A major drawback of hierarchical B-spline bases is the loss of the PU property. The truncated hierarchical B-spline basis constitutes an extension of the hierarchical B-spline basis, aiming at recovering the PU principle and at reducing the number of overlapping functions on adjacent hierarchical levels (see Giannelli et al. (2012)). Considering a basis function Bl, part of \({\mathscr{B}}^{l}\) and defined on the domain Ωl, its representation in terms of the finer basis of level l + 1 is given as:

where \(c_{B^{l+1}}^{l+1}\) is the coefficient associated to a basis function Bl+ 1.

As described in Giannelli et al. (2012) and Garau and Vázquez (2018), the truncation of this basis function Bl, whose support overlaps with the support of finer basis functions Bl+ 1, part of \({\mathscr{B}}^{l+1}\) and defined on Ωl+ 1, is expressed as:

Similar to the creation of a hierarchical B-spline basis \({\mathscr{H}}\), a truncated hierarchical B-spline basis \(\mathcal {T}\) is constructed recursively, but by additionally applying the truncation in (18) at each iteration:

The truncated basis \(\mathcal {T}\) spans the same space as the non-truncated basis \({\mathscr{H}}\), but its underlying functions exhibit the following properties. They are defined on smaller supports which result in sparser stiffness matrices in a finite element analysis. The PU property is restored which allows for the imposition of bounds on variables. In addition, truncated bases admit a strong stability property (see Giannelli et al. (2014)) which enables the construction of optimal multi-level solvers (see Hofreither et al. (2016)). However, this property is not exploited in this paper, as the displacements are interpolated by linear B-splines.

The effect of the truncation is illustrated in Fig. 6. Univariate truncated and non-truncated bases \(\mathcal {T}\) and \({\mathscr{H}}\) are juxtaposed for comparison. The comparison shows the reduced support of the truncated B-splines. The effect of truncation on the support of a multivariate, two-dimensional B-spline basis is shown in Fig. 7. Truncated multivariate B-splines present a characteristic kidney-shaped support zone.

6 Structural analysis

Whereas the proposed adaptive discretization scheme is not limited to any particular physics problem, in this paper, we consider solid-void problems as illustrated in Fig. 1, where the material subdomain A is filled with a linear elastic solid, while the material subdomain B is void. In this section, we present the variational form of the governing equations for the linear elastic analysis model. As the XFEM is used to discretize the state variable fields in the solid domain, the basic principles of the method are briefly recalled.

6.1 Governing equations

We solve for static equilibrium to enforce balance of linear momentum within the solid domain ΩA. In this work, the total residual \(\mathcal {R}\), i.e., the weak form of the governing equations, consists of four terms which are discussed subsequently:

The weak form of the linear elastic governing equation reads:

where u and δu are the displacement field and the test function, respectively. Traction forces, tN, are applied on the Neumann boundary, \({{\Gamma }_{N}^{A}}\). The Cauchy stress tensor is denoted by σ = D ε and is obtained by multiplication of the infinitesimal strain tensor \(\boldsymbol {\varepsilon } = \frac {1}{2} \left (\nabla \mathbf {u} + \nabla \mathbf {u}^{T} \right )\) with the fourth-order constitutive tensor D, here for isotropic linear elasticity.

To weakly enforce prescribed displacements on Dirichlet boundaries, the static equilibrium in (21) is augmented with Nitsche’s method (see Nitsche (1971)):

where uD are the displacement imposed on the Dirichlet boundary, \({{\Gamma }^{A}_{D}}\). The parameter γN is chosen to achieve a certain accuracy in satisfying the boundary conditions and is a multiple of the ratio E/h, where E is the Young’s modulus of the considered material and h is the edge length of the intersected elements.

Using immersed boundary techniques (see Section 6.2), numerical instabilities arise when either the contributions of the DOFs interpolating the displacement field on the residual vanish and/or these contributions become linearly dependent. These issues typically arise when the level set field intersects elements such that small material subdomains emerge. This results in an ill-conditioning of the equation system and inaccurate prediction of displacement gradients along the interface. The face-oriented ghost stabilization proposed by Burman and Hansbo (2014) is used in this work to mitigate this issue. Using a virtual work–based formulation, the jump in the displacements gradients is penalized across the faces belonging to intersected elements by augmenting the residual equations with:

where F is a specific face belonging to \(\mathcal {F}_{\text {cut}}\), the set of element faces cut by the interface, and nF is the outward normal to face F. The jump operator ⟦∙⟧ computes quantities between adjacent elements A and B as ⟦∙⟧ = ∙A −∙B. The ghost penalty parameter is denoted γG and typically takes its value in the range \(0.1 {\dots } 0.001\). In contrast to the displacement-based formulation of Burman and Hansbo (2014), (23) allows for different materials in adjacent elements.

During the optimization process, isolated islands of material can emerge and develop in the design domain. This leads to a singular system of equations, as the rigid body modes associated to these free-floating material islands are not suppressed or constrained. To prevent this issue, we adopt the selective structural spring approach proposed and successfully implemented in Villanueva and Maute (2017), Geiss and Maute (2018), and Geiss et al. (2019b). An additional stiffness term is added to the weak form of the governing equations:

where the parameter γS is set to a value ranging from 0 to 1 depending on the solution of an additional diffusion problem, so that a fictitious spring stiffness kS is only applied to solid subdomains disconnected from any mechanical boundary conditions. The spring stiffness kS is set to E/h2, where E is Young’s modulus of the considered material and h the element edge width.

6.2 The extended finite element method

The XFEM was developed by Moës et al. (1999) to model crack propagation without remeshing. The method allows capturing discontinuous or singular behaviors within a mesh element by adding specific enrichment functions to the classical finite element approximation. Here, following the work by Terada et al. (2003), Hansbo and Hansbo (2004), and Makhija and Maute (2014), a generalized Heaviside enrichment strategy with multiple enrichment levels is used. This particular enrichment enhances the classical finite element interpolation with additional shape functions which then results in independent interpolation into disconnected subdomains.

Considering a two-phase problem, the displacement field u(x) is approximated with the Heaviside enrichment:

where I⋆ is the set of all the B-spline coefficients in the analysis mesh, Bi(x) is the B-spline basis function associated with the ith coefficient, and \(u^{I}_{im}\) is the vector of displacement DOF associated with the ith coefficient for material phase I = A,B. The number of active enrichment levels is denoted M and the Kronecker delta \(\delta _{ml}^{Ii}\) is used to select the active enrichment level m for the ith coefficient and material phase I. This selection ensures the satisfaction of the PU principle (see Babuška and Melenk (1997)), as only one set of DOFs is used to interpolate the solution at a given point x. For simplicity, the interpolation of the displacement field is restricted to first-order functions. The Heaviside function H is defined as:

Using the Heaviside enrichment, the integration of the weak form of the governing equations has to be performed separately on each material phase. Therefore, intersected elements are decomposed into integration subdomains, i.e., into triangles in two dimensions and into tetrahedra in three dimensions. For further details on the decomposition approach, the reader is referred to Villanueva and Maute (2014).

7 Optimization problem

In this paper, we focus on minimum compliance designs considering mass either via a constraint or a component of the objective. The optimization problem is formulated as follows:

The functional \(\mathcal {Z}\) is a weighted sum of the compliance and the mass, both evaluated over the solid domain:

where \(\mathcal {S}\) is the strain energy, \(\mathcal {S}_{0}\) is the initial strain energy value, \({\mathscr{M}}^{A}\) the mass of material phase A, \({\mathscr{M}}_{0}\) is the initial mass value, and ws and wm are the weighting factors. The perimeter associated to the domain boundary is denoted by \(\mathcal {P}_{p}\) and its initial value is \(\mathcal {P}_{0}\). The penalty functions \(\mathcal {P}_{\phi }\) and \(\mathcal {P}_{\nabla \phi }\) are used to regularize the level set field around and away from the interface and are further discussed in Section 7.1. The parameters cp and cϕ are the penalties associated with the perimeter and the regularization respectively. The mass constraint ensures that the ratio of material phase A, \({\mathscr{M}}^{A}\), and the total mass, \({\mathscr{M}}\), is smaller or equal to cm. Note that enforcing the bounds on the design variable s is conveniently achieved using THB-splines as they form a convex hull, while it requires extra handling when using HB-splines.

On purpose, we do not consider any means to control the feature size. One goal of this paper is to study the influence of the proposed adaptive hierarchical B-spline discretization on the optimization results, including the ability to resolve fine features. To allow the emergence of such features, no feature size control is imposed.

In this work, we solve the discretized optimization problem in the reduced space of only the design variables by a gradient-based algorithm. For each candidate design generated in the optimization process, the state variables are determined by solving the discretized governing equations (see Section 6).

7.1 Level set regularization scheme

To ensure that the spatial gradient near the solid-void interface is approximately uniform and matches a target gradient norm over the span [−ϕvt, ϕvt], a level set regularization scheme is used. The scheme also enforces convergence to a target positive ϕup or negative ϕlow value away from the interface, as depicted in Fig. 8.

Such a scheme requires the identification of the vicinity of the solid-void interface. The level set values are often used for this purpose. However, unless the LSF is constructed as an approximation or as an exact signed distance field, this approach lacks robustness and may lead to spurious oscillations in the level set field (see Geiss et al. (2019a)). In this study, we do not compute a signed distance field. Instead, we identify the proximity of a point to the solid-void interface by building a so-called level of neighborhood (LoN) \(\mathcal {I}(\mathbf {x})\) of a point with respect to a point belonging to an intersected element. The LoN is evaluated at the nodes of the considered mesh, providing the LoN value \(\mathcal {I}_{i}\) at each node i. The approach is illustrated in Fig. 9. The process starts with identifying intersected elements and setting the LoN \(\mathcal {I}\) of all nodes belonging to these elements to one; otherwise, \(\mathcal {I}\) is set to zero. In a recursive loop over all elements and nodes, first the elements having a node with a LoN value larger than 0 are flagged and then the LoN values of nodes belonging to a flagged element are increased by 1. The region with LoN values larger than 0 widens with the number of times the loop, NLoN, is executed. Thus, the maximum LoN value \(\mathcal {I}_{\max \limits }\) equals NLoN. The use of these nodal LoN values is discussed below.

To promote the convergence of the level set field to a target field, two penalty functions, \(\mathcal {P}_{\phi }\) and \(\mathcal {P}_{\nabla \phi }\), are added to the optimization problem objective in (27). The first term penalizes the difference between the level set value ϕ and, depending on its sign, the lower or upper target value ϕtarget away from the interface:

where ϕtarget is set as a multiple of the element edge size of the initial mesh hinit, i.e., the mesh with the initial refinement level.

The second term penalizes both the difference between the norm of the spatial gradient of the level set field ∇ϕ and a target gradient norm ∇ϕtarget near the interface and the norm of the spatial gradient of the level set field ∇ϕ away from the interface:

where α1, α2 and α3 are weighting factors. The parameter w is a measure of the distance of a point to the solid-void interface as:

where \(\mathcal {I}(\mathbf {x})\) is the local LoN value, interpolated by the element shape functions using the nodal \(\mathcal {I}_{i}\) values. The parameter γI determines the region around and away from the interface in which the integrals in (29) and (30) are evaluated. As the value of γI is increased, the area considered to be in the vicinity of the interface, i.e., the area where the target slope ||∇ϕtarget|| is promoted, shrinks. The parameters ϕtarget, ∇ϕtarget, and γI are user-defined.

7.2 Sensitivity analysis

In this study, we compute the design sensitivities by the adjoint approach. Let us consider an objective or constraint function \(\mathcal {F}(\mathbf {u}(\mathbf {s}), \mathbf {s})\) dependent on the design variables. The derivative of this response function with respect to a design variable si is computed as follows:

where the first term of the right-hand side accounts for the explicit dependency on the design variables and the second term for the implicit dependency on the design variables through the state variables. The implicit term is evaluated by the adjoint approach:

where \(\mathcal {R}\) is the residual defined in (20) for the forward analysis and λ are the adjoint responses, evaluated through:

The derivatives with respect to the design variables are evaluated following the semi-analytical approach presented in Sharma et al. (2017). It should be noted that all the stabilization parameters introduced for the forward analysis in Sections 6.1 and 7.1 are accounted for during the sensitivity analysis.

As truncated B-spline basis functions have smaller support than non-truncated ones (see Fig. 6 and 7), the zone of influence associated with a given truncated B-spline coefficient is smaller. Therefore, the perturbation of a truncated B-spline coefficient influences a smaller number of nodal level set values on the integration mesh and a reduced number of derivatives with respect to the level set nodal values needs to be evaluated per B-spline coefficient. Depending on the implementation of the sensitivity analysis, this property may reduce the computational cost.

When computing the design sensitivities with a LSM, only the intersected elements need to be considered for evaluating (34), as only the finite element residuals of these elements depend on the level set field. However, when the seeding approach presented in Section 3 is used, the residual contributions of non-intersected elements within the solid domain also depend on the design variables. Thus, in this case, the partial derivative \(\frac {\partial \mathcal {R}}{\partial \mathbf {u}}\) needs to be integrated over all elements in the solid domain.

8 Numerical examples

We study the proposed level set topology optimization with hierarchical mesh refinement with 2D and 3D examples, focusing on minimum compliance designs considering mass either via a constraint or a component of the objective as defined in (27).

The state variable field, i.e., the displacement field, is discretized in space with bilinear Lagrange quadrangular elements for 2D, and with trilinear Lagrange hexahedral elements for 3D design domains. The design variable field, i.e., the level set field, is discretized with HB- and THB-splines. The design variables are the B-splines coefficients and are used to interpolate nodal values on the Lagrange analysis mesh.

The optimization problems are solved by GCMMA from Svanberg (2002) and the required sensitivity analysis is performed following the adjoint approach described in Section 7.2. The parameters for the initial, lower, and upper asymptote adaptation in GCMMA are set to 0.05, 0.65, and 1.05, respectively. The optimization problem is considered converged if the absolute change of the objective function relative to the mean of the objective function in the five previous optimization steps drops below 10− 5 and the constraint is satisfied.

The refinement strategy described in Section 4.2 is adopted and the maximum refinement level for interface and solid elements is set to \(l_{\text {ifc},\max \limits } = l_{\text {solid},\max \limits } = l_{\max \limits } = 4\). The minimum refinement level is set to \(l_{\min \limits } = 0\), the size of the coarsest mesh, the initial mesh refinement level, and the number of iterations between two mesh adaptations are given with each example.

The system of discretized governing equations and adjoint sensitivity equations are solved by the direct solver PARDISO for the 2D problems (see Kourounis et al. (2018)), and by a GMRES algorithm for 3D problems, preconditioned by an algebraic multi-grid solver (see Gee et al. (2006)). A relative drop of the linear residual of 10− 12 is required. This strict tolerance is imposed to reduce the influence of the iterative solver on the optimization results.

For the first two examples, we provide detailed comparisons between designs generated on uniformly and adaptively refined meshes in terms of performance, achieved geometries, and computational cost. Furthermore, we investigate the influence of the B-spline interpolation by varying the interpolation order.

The effect of applying or omitting truncation for the B-splines is investigated for 2D examples and presented in Appendix A1. The numerical studies suggest that using THB-splines does not affect the geometric complexity, the performance, or the computational efficiency gains achieved with the proposed adaptive strategy. THB-Splines offer several advantageous properties; they form a PU, a desirable property when solving finite element problems. They also lead to an improved conditioning of the system of equations and offer a convenient way to impose bounds on design variables, i.e., an operation largely applied when solving optimization problems. Therefore, all subsequent optimization problems are solved using THB-splines.

Providing meaningful computational efficiency measures for the adaptive simulations is not trivial as various factors influence the computational cost. A simple wall-clock time comparison is provided for the 2D examples and takes into account both the time spent in the forward and sensitivity analyses. It is given as the ratio between the total runtime for the most refined uniform mesh and the total runtime for an adaptive mesh:

where \(t_{\text {uniform}}^{(k)}\) and \(t_{\text {adaptive}}^{(k)}\) are the runtimes for the uniform and adaptive meshes at the optimization iteration k. Nopt is the number of optimization steps until convergence and is set to \(N_{\text {opt}} = \min \limits (N_{\text {opt}, \text {uniform}},\)Nopt,adaptive).

Providing wall-clock times for all of the optimization examples presented in the paper would not be meaningful, as different problem configurations of the same example were run on different hardware due to limited availability to dedicated computing resources. Furthermore, different linear solvers and software implementations lead to different dependencies of the computational time on the number of finite element DOFs. Therefore, in addition to a runtime comparison, if available, we measure the computational cost in terms of the evolution of the number of unconstrained DOFs of the finite element models during the optimization process.

An efficiency factor Exfem is defined as the ratio between the total number of unconstrained DOFs in the XFEM model for the most refined uniform mesh and the total number of unconstrained DOFs in the XFEM model for an adaptive mesh. This measure is indicative of the computational gains achieved for XFEM solid-void problems and is expressed as follows:

where \(\#DOFs_{\text {uniform},\text {xfem}}^{(k)}\) and \(\#DOFs_{\text {adaptive}}^{(k)}\) are the numbers of unconstrained DOFs in the XFEM models for the uniform and the adaptive meshes at the optimization iteration k. The parameter ns is an exponent that can be fitted to relate the number of DOFs to the computational effort in terms of floating-point operations or wall-clock time (see Woźniak et al. (2014)), for example when a sparse direct linear solver is used and is set to ns = 1 for the 2D and ns = 4/3 for the 3D cases.

Alternatively, a measure of the peak resource requirement Rxfem is defined as the ratio between the maximum number of unconstrained DOFs in the XFEM model for the uniform mesh with highest refinement level and the maximum number of unconstrained DOFs in the XFEM model for the adaptive mesh:

It should be noted that for an XFEM model, the number of unconstrained DOFs is changing in the course of the optimization process, even on a uniformly refined mesh. For the solid-void problems studied in this paper and because the void domain is omitted in the analysis, the XFEM model reduces the number of DOFs compared with a classical FEM model. To account for these computational gains, we define an efficiency factor Efem, indicative for solid-solid XFEM or solid-void FEM problems, as:

where \(\#DOFs_{\text {uniform},\text {fem}}^{(k)}\) is an approximation of the number of unconstrained DOFs for the uniform mesh with highest refinement level at the optimization iteration k, assuming that all DOFs are active as is the case for a classical FEM model.

A corresponding measure of the peak resource need Rfem is defined as follows:

The measures given by (36), (38), (37), and (39) for estimating the gains in computational efficiency are solely based on the costs for solving the linear finite element system. It does not account for the costs for building the XFEM model for initializing the linear solver, for the L2 projection of the design variable fields (see Section 4.2), for computing the right-hand side of the adjoint system in (33), and for the optimization algorithm. The latter computational costs are, however, small typically when compared with building and solving the system of governing equations.

8.1 Two-dimensional beam

As illustrated in Fig. 10, the first problem setup consists of a solid-void 2D beam with a length L = 6.0 and a height l = 1.0. The solid is described by a linear elastic model and an isotropic constitutive behavior, with Young’s modulus E = 1.0 and Poisson’s ratio ν = 0.3. The beam is supported on its two lower corners over a length ls = 0.025 and a pressure p = 1.0 is applied in the middle of its top span over a length lp = 2 × 0.025. The data are provided in self-consistent units.

The structure is designed for minimum compliance with a mass constraint of 40% of the total mass of the design domain. Taking advantage of the symmetry of the problem, only one-half of the design domain is considered. Table 1 summarizes the problem parameters.

The compliance minimization problem, defined in (27), is solved on uniformly and adaptively refined meshes, considering five levels of refinement lrefine = 0, 1, 2, 3, and 4, corresponding to 30 × 10, 60 × 20, 120 × 40, 240 × 80, and 480 × 160 element meshes. The influence of the B-spline interpolation is investigated by solving the same design problem with linear, quadratic, and cubic B-spline functions. These influences are studied separately considering the two approaches for introducing inclusions, i.e., the initial hole seeding and the combined level set/density scheme.

8.1.1 Design with initial hole seeding

In this subsection, the design domain is initially seeded with holes, so that the mass constraint is satisfied at the beginning of the optimization process. The initial configuration is depicted in Fig. 11. The complexity of such a hole pattern requires a rather fine initial mesh and cannot be represented with the coarsest refinement levels lref = 0,1. Thus, the optimization process needs to start from a mesh with lref > 1.

Figure 12 shows the optimized designs generated on uniformly refined meshes with lref = 2,3, and 4 using THB-splines and for different B-spline orders, along with the associated mechanical performance in terms of strain energy. The solid phase is depicted in black and the meshes are omitted as they are uniform. The designs generated on uniform meshes for different B-spline orders become increasingly similar as the mesh is refined. The performances of the designs are also rather similar. As expected, the results show that refining the mesh allows for a higher geometric complexity, including thinner structural members.

Figure 13 shows the optimized designs generated on adaptively refined meshes using linear, quadratic, and cubic THB-splines. For each layout, the solid phase is depicted in gray and the refined mesh is shown in the void phase. The computational gains achieved with the adaptive strategy are given in Table 2.

Applying hierarchical mesh refinement, the optimization process is started with an initial uniform mesh with a refinement level \(l_{\text {ref}}^{0} = 2\) and is refined up to a refinement level \(l_{\text {ref},\max \limits } = 4\) and coarsened to a refinement level lmin = 0. The minimum and maximum refinement levels are updated after every 10, 25, or 50 iterations. Additionally, the mesh is adapted to maintain a uniform refinement level in all intersected elements, as mentioned in Section 4.2.1. First, the designs demonstrate the ability of the method to recover similar layouts to the ones obtained on uniform meshes with higher mesh refinement. No significant loss is observed regarding the level of details or the complexity of the geometry achieved. The strain energy values are quite similar regardless of the use of uniform or adaptive meshes. The remaining differences in the strain energy values are not only due to differences in the designs, but also arise from differences in the discretization of the finite element model.

The results in Fig. 13 show, however, that the adaptive designs are sensitive to the number of iterations after which the refinement is applied. More frequent refinement operations, specifically early in the optimization process, allow for the emergence of finer features in the design. In terms of computational efficiency, the cost reduction with the adaptive strategy remains limited as initial configurations require rather fine meshes, i.e., lref > 1. The runtime ratios and the efficiency factors in Table 2 show that the achieved gain slightly increases with the B-spline order. The largest factors, in terms of runtime, efficiency, and peak need, are achieved when the number of iterations before refinement is increased. Performing mesh refinement and updating the minimum and maximum refinement levels every 50 iterations lead to the largest computational gains. Using XFEM alone reduces the computational cost by about 50%. The adaptation strategy allows for a reduction of the computational cost by more than 3.5 over the uniform FEM model and by more than 1.75 over the uniform XFEM model. The peak resource needs Rxfem and Rfem follow the same trend.

Considering the refined meshes in Fig. 13, it is noticeable that the refined bandwidth around the solid-void interfaces increases in size with the order of the B-spline interpolation. This is a direct consequence of the refined buffer zone described in Section 4.2.2, whose size depends on the interpolation order. For both, the uniform and adaptive designs the influence of the B-spline interpolation order on the designs is mainly noticeable when working with lower order B-splines. Using a linear interpolation allows for the emergence of finer members and details in the structure, at the increased risk of converging to a local minimum with inferior performance. Higher order designs tend to be smoother and to eliminate thin members. For this 2D case, it is noteworthy that working with linear B-splines does not lead to any smoothness issues or irregular shapes which might be caused by a spurious interplay between geometry and finite element prediction.

To further investigate the influence of mesh adaptivity, the optimization problem is solved starting from a uniform mesh with the highest refinement level, here \(l_{\text {ref},\max \limits }=4\) and using THB-splines. The mesh adaptation operation, triggered here every 25 iterations, involves coarsening of the mesh in void regions. The generated designs are shown in Fig. 14 along with the ones generated on the finest uniform mesh lref = 4. They slightly differ from the layouts obtained on uniform meshes, but present quite similar performance in terms of strain energy. These differences might result from the restart of the optimization process, as the mesh is adapted. After refinement, the level set field is mapped onto the newly generated mesh, which might result in a slight perturbation of the field values. Furthermore, the GCMMA is restarted with uniform lower and upper asymptotes for all variables which may alter the evolution of the design. Additionally, these results show that the obtained designs are dependent on the initial mesh refinement \(l_{\text {ref}}^{0}\).

The efficiency factors are given in Table 3. They show that the initial refinement level affects the computational cost. In this case, the mesh adaptivity does not provide an actual computational gain with respect to an XFEM model, as Exfem and Rxfem are about equal to 1.

8.1.2 Design with simultaneous hole seeding

In this section, the combined level set/density scheme, presented in Section 3, is used to nucleate holes during the optimization process. The combined scheme uses a SIMP exponent β = 2.0. The density shift parameter ρsh is initially set to 0.2 and is increased every 25 optimization steps. In this case, the optimization process can be started with rather coarse meshes. The minimum and maximum refinement levels are updated every 25 iterations. All other parameters are kept the same as in Section 8.1.1. Numerical studies have shown that the chosen strategy for updating the density shift parameter and the minimum/maximum refinement levels leads to satisfactory results. Refining this strategy or using alternate approaches may lead to improved performance and should be explored in future studies.

Figure 15 shows the optimized designs generated on uniformly refined meshes with lref = 1,2,3, and 4 using THB-splines and considering different B-spline orders, along with the associated performance in terms of strain energy. The solid phase is depicted in black and the meshes are omitted as they are uniform. For coarse meshes, the designs exhibit different performances. The differences in the strain energy measure disappear as the meshes are refined. As expected, the complexity of the designs increases with mesh refinement. Remaining differences in both the geometry and the performance are likely caused by the non-convexity of the optimization problem.

Figure 16 shows the optimized designs generated on adaptively refined meshes with initial refinement level \(l_{\text {ref}}^{0} = 1, 2\), and 3 and using linear, quadratic, and cubic THB-splines. The performance in terms of strain energy values is provided for each design. For the adaptive designs, the mesh refinement is depicted in the void phase and the solid phase is represented in gray. The gains in terms of computational cost are given in Table 4.

Using the adaptive strategy, the results clearly show that, starting from coarser meshes, it is possible to recover designs rather similar, in terms of geometry and performance, to the ones obtained with finer uniform meshes, at least up to one or two refinement level higher. It is also noticeable that the layouts obtained with the refinement strategy depend on the initial mesh refinement. Finer structural members are more likely to develop and be maintained through the optimization process as the initial mesh is refined.

The runtime ratios and the efficiency factors are given in Table 4. They show that more significant gains are obtained when starting from coarser meshes, with lower refinement levels. The adaptation strategy allows for a reduction of the computational cost by more than a factor of 3 over the uniform FEM model and by more than 1.75 over the uniform XFEM model. The achieved runtime and efficiency factors starting from the same initial refinement with different B-splines order match closely.

As a direct consequence of the buffer zone definition (see Section 4.2.2), the refined meshes in Fig. 16 exhibit a wider refined bandwidth as the interpolation order increases from linear to cubic. As observed in the previous section and in Figs. 15 and 16, linear B-splines can resolve finer structural members, while keeping a smooth description of the geometry at least in this 2D setting. Designs obtained with quadratic and cubic B-splines are smoother and similar.

A comparison of the results from Sections 8.1.1 and 8.1.2 suggests that the ability to nucleate holes during the optimization process is crucial to increase the computational cost reduction achieved with mesh adaptation. Creating an initial hole pattern requires the use of rather fine initial meshes, which limits the computational cost savings. Comparing the efficiency ratios in Tables 2 and 4 shows that starting from a 120 × 40 initial mesh and refining every 25 iterations, an efficiency factor Exfem of about 2.0 is achieved for the initial seeding approach, while a ratio of about 2.5 is obtained for the combined scheme. Moreover, although a dependency on the initial mesh refinement is observed in both cases, the combined level set/density scheme seems to mitigate this dependency.

8.2 Three-dimensional beam

In this section, the previous 2D beam example is extended to 3D. This example allows studying the influence of the mesh adaptivity on 3D designs in terms of geometry, performance, and computational cost. In particular, we assess the ability of our approach to generate well-known three-dimensional optimal members such as shear webs that usually develop when solving optimization problems on fine uniform meshes. For 3D designs, the dependency on the initial hole pattern is mitigated by the ability to develop holes in the third dimension, which might counterbalance the performance issues encountered when using the initial seeding approach with the refinement strategy.

Figure 17 illustrates the setup for the solid-void 3D beam with a length L = 6.0, a height l = 1.0, and a thickness h = 1.0. The structural response is modeled by an isotropic linear elastic model with Young’s modulus E = 1.0 and Poisson’s ratio ν = 0.3. The structure is supported on its two lower sides over a length ls = 0.025 and a pressure p = 1.0 is applied in the middle of its top span over an area lp × h = 2 × 0.025 × 1.0. The values above are provided in self-consistent units.

We optimize the beam for minimum compliance under a mass constraint of 10% of the total mass of the design domain. Taking advantage of the symmetry, we only study a quarter of the design domain. A list of problem parameters is given in Table 5.

As for the 2D case, the initial hole seeding and the combined level set/density schemes are studied. The optimization problem in (27) is solved on uniformly and adaptively refined meshes considering four levels of refinements, lref = 0,1,2,3, corresponding to 18 × 6 × 3, 36 × 12 × 6, 72 × 24 × 12, and 144 × 48 × 24 element meshes. The influence of the B-spline interpolation order is investigated by solving the same problem with linear, quadratic, and cubic B-spline functions. All the simulations are performed with THB-splines.

8.2.1 Design with initial hole seeding

The initial seeding of holes in the design domain is presented in Fig. 18. This configuration satisfies the mass constraint, but requires a rather fine initial mesh to resolve the hole pattern, i.e., lref > 1.

The optimized designs generated on uniformly and adaptively refined meshes are given in Figs. 19 and 20, respectively, along with their performance in terms of strain energy. Additionally, we depict vertical cross-sections of the designs in blue, to visualize the layouts’ internal material arrangement. The efficiency factors are summarized in Table 6.

In Fig. 19, uniform meshes with levels of refinement lref = 2 and 3 are considered, as coarser meshes cannot resolve the initial hole pattern. The design geometries and their performance in terms of strain energy are quite similar for linear B-splines. Each optimized layout is characterized by two shear webs and a truss-type structure that develops under the loaded surface. For higher B-spline orders, similar designs are observed only when using a finer mesh, i.e., lref = 3. For coarser meshes with refinement level lref = 2, a single shear web develops in the center of the design. This behavior suggests that the obtained design depends on both the B-spline order and the refinement level. As the mesh is refined, smaller members are included in the layouts, which exhibit smoother surfaces. This can be observed in particular for the shear webs that become thin and smooth with mesh refinement.

Applying the refinement strategy every 25 iterations, the optimization process is started on a uniform mesh with a refinement level \(l_{\text {ref}}^{0} = 2\) (see Fig. 20). In the course of the optimization process, the mesh is locally refined up to level \(l_{\text {ref},\max \limits } = 3\) or coarsened to a level \(l_{\min \limits } = 0\). The adaptive strategy generates designs that display a slightly higher geometric complexity and smoothness than the designs on corresponding initial uniform meshes. However, they are visibly dependent on the initial mesh refinement as the obtained design topologies, i.e., two shear webs for linear B-splines versus one shear web for higher order B-splines, are similar to the ones generated on a uniform mesh with lref = 2. The efficiency ratios are given in Table 6. They show that a moderate reduction of the computational cost is achieved as the optimization process with adaptivity is started on a rather fine mesh, a reduction by a factor of about 4 over the uniform FEM model and by about 1.5 over the uniform XFEM model.

It is interesting to note that, as for the 2D case, linear B-splines support the development of smaller structural features such as the beams observed for both the uniform and the adaptive designs. At the same time, using these low-order B-splines leads to spurious oscillations of the level set field, which result in layouts with rough surfaces. Higher order B-splines promote the generation of smoother designs, as is the case for both quadratic and cubic B-splines.

8.2.2 Design with simultaneous hole seeding

In this section, holes are nucleated in the design domain during the optimization process using the combined level set/density scheme presented in Section 3. For the combined scheme, the SIMP exponent β is set to 2.0. The density shift parameter ρsh is initially set to 0.2 and is updated every 25 optimization steps. Contrary to Section 8.2.1, coarser meshes can be used as a starting point for the adaptive optimization, since the initial design geometry is not complex.

The designs obtained on uniformly and adaptively refined meshes are shown in Figs. 21 and 22 with their performance in terms of strain energy. Additionally, we depict vertical cross-sections of the designs in blue, to visualize the layouts’ internal material arrangement. Table 7 gives the design performance in terms of computational cost.

In Fig. 21, three levels of refinement are considered lref = 1,2, and 3. The uniform design geometries are quite similar, as each layout is characterized by two shear webs and a truss-type structure that develops under the loaded surface. Also, the performance in terms of strain energy is quite similar for the designs obtained on meshes with refinement levels lref = 2 and 3. These results demonstrate that the combined level set/density scheme reduces the dependency on the initial design that was observed with the initial hole seeding approach. Using extremely coarse meshes with lref = 1 leads to spurious oscillations of the level set field and thus a rough solid/void interface surface. This behavior is more strongly pronounced when using linear B-splines.

Working with an adaptive mesh, the optimization process is started on a uniformly refined mesh with the lowest level of refinement \(l_{\text {ref}}^{0} = 1\) and is refined up to \(l_{\text {ref},\max \limits } = 3\) or coarsened to \(l_{\min \limits } = 0\) (see Fig. 22). The results demonstrate the ability of the adaptive optimization approach to create designs similar to the ones obtained on uniform meshes with a higher level of refinement. The designs also compare well in terms of strain energy performance. The refinement strategy leads to a significant reduction of the computational cost, as the optimization is started on a coarser mesh. Table 7 shows high efficiency ratios, i.e., a reduction by a factor of about 7 over the uniform FEM model and by about 3 over the uniform XFEM model.

As observed in the previous section, using low-order B-splines promotes the development of thinner features, which is not observed with higher polynomial orders. However, linear B-spline designs lack smoothness, even as the mesh is refined.

The effect of hole seeding during the optimization process is not as pronounced as in 2D. In 3D, holes can be created more easily by effectively changing the thickness, i.e., changing the shape in the third dimension. The main advantage of the combined scheme lies in the ability to use coarser meshes as a starting point for the adaptive topology optimization, which leads to larger efficiency ratios Exfem, i.e., around 3 for the combined scheme versus around 1.5 with the initial seeding strategy when compared with uniform XFEM meshes.

Both the 2D and 3D beam examples show that using linear B-splines allows for the development of thinner structural members. However, in the 3D case, the latter details develop at the cost of spurious oscillations in the level set field, leading to a rough representation of the surface. Higher order B-splines promote the development of smoother designs. In 2D and in 3D, the designs generated with quadratic and cubic B-splines match closely. Therefore, all upcoming simulations are carried out with quadratic B-splines. They lead to smooth designs without reducing the complexity of the geometry achieved. Moreover, they present smaller support and a lower level of complexity than cubic B-splines, which leads to simpler implementation and a reduced overall computational cost.

8.3 Short beam under uniform pressure

This final example aims at demonstrating the capabilities of the proposed mesh adaptivity to generate geometrically complex designs with limited computational resources. The optimization problem formulation is modified to generate designs with increasingly lower volume fractions and show that mesh adaptation allows for the development of extremely thin structural members.

We consider a short beam subject to a uniform pressure on its top. The design domain with boundary conditions is shown in Fig. 23 and is defined by a rectangular cuboid with a length L = 2.0, a height l = 0.6, and a thickness h = 1.0. The structural response is described by an isotropic linear elastic model with Young’s modulus E = 6.9 1010 and Poisson’s ratio ν = 0.3. The presence of material is enforced on the top of the table, where a uniform pressure load p = 0.67 106 is applied. The structure is supported on the side at the top corners, and on the bottom at each corner with ls = 0.01 and hs = 0.2.

We optimize the structure for minimum compliance and mass; no constraints are imposed. Applying the adaptive refinement strategy, we focus on the influence of the mass of the designs on the achieved computational cost reduction. Taking advantage of the symmetry, we only study a quarter of the design domain. The problem parameters are given in Table 8 in self-consistent units.