Abstract

A design of experiments (DoE) strategy is proposed to reduce the number of calls to the performance function in kriging-based structural reliability analysis. Using the local uncertainty provided by the kriging model and the joint probability density function of the performance function values at untried points, which is derived in this research, the epistemic variance of the target failure probability is calculated approximately. This variance is treated as the accuracy measure of the estimated failure probability. The next best point is defined as the untried point that minimizes the variance of the failure probability in the sense of expectation, where the expectation is computed by Gauss–Hermite quadrature. The basic idea of the proposed strategy is to refresh the current DoE by adding the next best point to it. The candidates for the next best point are randomly generated by the Markov chain Monte Carlo method from the kriging-based conditional distribution. A structural reliability analysis procedure is introduced to apply the proposed DoE strategy; its stopping criterion is based mainly on the coefficient of variation of the failure probability. To validate the efficiency of the proposed DoE strategy, three examples with explicit and implicit performance functions are analyzed. The results show that the proposed strategy outperforms the compared methods.



1 Introduction

The main purpose of structural reliability analysis is to estimate the reliability or the failure probability of a mechanical structure whose safety is affected by the randomness of its input variables. This research focuses on the estimation of failure probability, which is defined as follows:

G(x) is the performance function of the studied structure. The random vector X = [X1, X2, ..., XM]T with joint probability density function f(x) contains all uncertain input variables. The performance function divides the whole space of X into the safe domain G(x) > 0 and the failure domain G(x) ≤ 0. IG ≤ 0(x) is the failure indicator function defined as (2):

The multidimensional integral is difficult to evaluate because the performance function of interest is usually implicit and time-consuming to compute in engineering practice. With the development of computer codes, complex numerical models are widely employed to define a performance function with a scalar or vector output, which improves the estimate of failure probability. Currently, three kinds of methods are used to approximate (1): random simulation methods, the first- and second-order reliability methods (FORM and SORM), and surrogate model-based methods.
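To make the cost issue concrete, the following minimal sketch estimates a failure probability by crude Monte Carlo simulation. The linear performance function used here is a hypothetical stand-in chosen only because its exact failure probability, Φ(−3), is known; it is not one of the examples studied in this paper.

```python
import math
import random

def performance(x):
    # Hypothetical explicit performance function (illustration only).
    # Failure is G(x) <= 0; for standard normal inputs P_f = Phi(-3).
    return 3.0 - (x[0] + x[1]) / math.sqrt(2.0)

def crude_mcs(g, dim, n_samples, seed=0):
    """Estimate P(G(X) <= 0) for standard normal X by crude Monte Carlo."""
    rng = random.Random(seed)
    failures = 0
    for _ in range(n_samples):
        x = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        if g(x) <= 0.0:
            failures += 1
    p_f = failures / n_samples
    # Coefficient of variation of the crude MCS estimator.
    cov = math.sqrt((1.0 - p_f) / (n_samples * p_f)) if p_f > 0 else float("inf")
    return p_f, cov

p_f, cov = crude_mcs(performance, dim=2, n_samples=200_000)
exact = 0.5 * math.erfc(3.0 / math.sqrt(2.0))  # Phi(-3)
print(p_f, cov, exact)
```

Every sample costs one call to the performance function, which is why this approach becomes unaffordable when a single call means running an expensive numerical model.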

Random simulation methods, including Monte Carlo simulation (MCS) (Sobol and Tutunnikov 1996; Gaspar et al. 2014; Zhang et al. 2010), importance sampling (Melchers 1990; Richard and Zhang 2007; Cornuet et al. 2012), subset simulation (Au and Beck 2001; Au 2016), line sampling (Pradlwarter et al. 2007), etc., need a large number of calls to the performance function to obtain an accurate result, which is generally unaffordable. The accuracy of FORM and SORM is unsatisfactory for engineering when the performance function is highly nonlinear (Zhao and Ono 1999). In recent years, surrogate models have gained much popularity (Bucher and Most 2008; Kleijnen 2009; Bourinet et al. 2011; Schueremans and Van Gemert 2005). The basic idea of this kind of method is to construct an explicit expression based on the data from the design of experiments (DoE) of computer codes, and the explicit expression is treated as a surrogate of the real performance function to estimate the failure probability (Bucher and Most 2008). An accurate surrogate is essential to guarantee the accuracy of the estimated failure probability. Several surrogate models, including polynomial response surface (Gayton et al. 2003), sparse polynomial expansion (Blatman and Sudret 2008; Yu et al. 2012), kriging (Kaymaz 2005; Shimoyama et al. 2013; Bae et al. 2018), support vector machine (Song et al. 2013; Alibrandi et al. 2015), neural network (Schueremans and Van Gemert 2005), etc., are available for structural reliability analysis.

This research focuses on the kriging model, which has two characteristics that are valuable for structural reliability analysis. First, the kriging model is an interpolation method, which makes it possible to improve the local accuracy of the surrogate model. Second, it provides both the best linear unbiased estimator and the so-called kriging variance, which quantifies the local accuracy of the kriging model or the local epistemic uncertainty of the performance function value. Jones et al. (1998) apply kriging to global optimization and construct the expected improvement function based on the statistical information mentioned above to obtain an explicit tradeoff between improving the global accuracy of the kriging model and exploring the area of interest. In structural reliability analysis, (1) indicates that only the sign of G(x), or equivalently the limit state G(x) = 0, matters to the estimate of failure probability. To obtain a global fit of the limit state, Bichon et al. (2008) propose the expected feasibility function (EFF) to quantify the degree to which a point satisfies G(x) = 0 in the sense of expectation, and refresh the DoE iteratively by adding the maximum point of EFF (the next best point) to it until the maximum value of EFF is lower than a given threshold. After Bichon et al. (2008), Echard et al. (2011, 2013), Lv et al. (2015), and Yang et al. (2015a, b) construct learning function U, learning function H, and the expected risk function, respectively. These “learning functions” for reliability analysis evaluate a point using only the statistical information provided by the kriging model and ignore its importance, i.e., the joint PDF f(x). The next best point from the above-mentioned learning functions may be located in an area of little significance for the target failure probability. The least improvement function (LIF) in ref. (Sun et al. 2017) is designed to quantify, in terms of expectation, at least how much the fraction of the domain with uncertain signs could be reduced if a point is added to the current DoE. It provides a tradeoff between the local uncertainty of signs and the joint PDF. The hypotheses made during the derivation of LIF make its efficiency vary from problem to problem.

To further reduce the number of calls to the performance function during structural reliability analysis, this research proposes a DoE strategy named the stepwise variance reduction strategy. The epistemic variance of the target failure probability is proposed as the accuracy measure of the estimated failure probability and is calculated approximately. The basic idea of the strategy is to search for the next best point, i.e., the point that minimizes the epistemic uncertainty of the target failure probability (equivalently, improves the accuracy of the failure probability estimate most) in the sense of expectation, and to refresh the DoE by adding this point to it. The Markov chain Monte Carlo (MCMC) method is employed to generate approximately i.i.d. (independent and identically distributed) candidates for the next best point from the domain that contributes most of the epistemic uncertainty of the failure probability. Gauss–Hermite quadrature is used to approximate the expectation of the variance of the failure probability after adding a given point to the current DoE. The next best point is defined as the one that minimizes this expectation. A reliability analysis procedure is introduced to apply the proposed DoE strategy. In this procedure, the stopping criterion is based on the idea that the estimated failure probability meets the accuracy requirement if the coefficient of variation of the failure probability is smaller than a given threshold.

The remainder of this paper is organized as follows. Section 2 reviews the theory of the kriging model briefly and derives the joint PDF of the performance function values of untried points in detail, which is the key to the approximation of the epistemic variance of failure probability. The proposed strategy is constructed in Sect. 3 and applied in Sect. 4. Section 5 studies three examples to validate the efficiency of the proposed strategy. Section 6 is the conclusion.

2 Theory of the kriging model

2.1 Review of the kriging model

In the framework of the kriging model, the target performance function G(x) is treated as a realization of a Gaussian process. G(x) consists of two parts:

where g(x) is generally a scalar or multivariable polynomial basis. Suitable basis functions improve the accuracy of the kriging model, especially when the number of points in the DoE used to build the kriging model is small. β is the coefficient vector of g(x); it is unknown and is estimated with generalized least squares. gT(x)β is the deterministic part of G(x), while z(x) is the stochastic part, a realization of a stationary Gaussian process with zero mean and constant variance (σ2). The covariance function of z(x) is defined as follows:

where R(xi, xj; θ) denotes the correlation between z(xi) and z(xj) and θ is an undetermined parameter vector. Among several correlation functions, the Gaussian correlation function performs well when dealing with nonlinear performance function. Therefore, it is employed in this research.

where θ(m) and x(m)i are the mth elements of θ and xi, respectively.
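As an illustration, the sketch below evaluates a Gaussian correlation matrix in its common anisotropic form, R(xi, xj; θ) = exp(−Σm θ(m)(x(m)i − x(m)j)2). The display equation is not reproduced in this text, so this form is an assumption based on the standard definition, and the function name and sample values are hypothetical.

```python
import numpy as np

def gaussian_corr(A, B, theta):
    """Matrix with entries exp(-sum_m theta[m] * (A[i, m] - B[j, m])**2)."""
    A = np.atleast_2d(np.asarray(A, dtype=float))
    B = np.atleast_2d(np.asarray(B, dtype=float))
    theta = np.asarray(theta, dtype=float)
    diff = A[:, None, :] - B[None, :, :]      # shape (nA, nB, M)
    return np.exp(-np.sum(theta * diff**2, axis=2))

# Three points in a two-dimensional input space (illustrative values).
X = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 2.0]])
R = gaussian_corr(X, X, theta=[0.5, 0.25])
print(R)
```

A larger θ(m) makes the correlation decay faster along the mth input, so the surrogate is allowed to vary more quickly in that direction.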

Given a DoE containing N points SDoE = [x1,x2,…,xN] and performance function values of these points Y = [y1, y2,…, yN]T, the best linear unbiased estimator of G(x) for an untried point x is written as follows:

where

The mean square error of \( \hat{G}\left(\boldsymbol{x}\right) \) or the so-called kriging variance is

where

The subscript N in (6) and (12) denotes the number of points in DoE.
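The predictor and the kriging variance can be sketched as follows for the simplest case of a constant trend, g(x) = 1 (ordinary kriging), with θ held fixed. The formulas are the standard universal-kriging expressions and are assumed to match (6) to (13); all function names, the small nugget added for numerical stability, and the test data are illustrative assumptions.

```python
import numpy as np

def corr(A, B, theta):
    d = A[:, None, :] - B[None, :, :]
    return np.exp(-np.sum(theta * d**2, axis=2))

def fit_kriging(X, y, theta, nugget=1e-10):
    """Ordinary kriging (constant trend) with a fixed correlation parameter."""
    n = X.shape[0]
    R = corr(X, X, theta) + nugget * np.eye(n)
    F = np.ones((n, 1))
    Ri_F = np.linalg.solve(R, F)
    A = F.T @ Ri_F                               # F^T R^-1 F
    beta = np.linalg.solve(A, Ri_F.T @ y)        # generalized least squares
    resid = y - F @ beta
    Ri_resid = np.linalg.solve(R, resid)
    sigma2 = float(resid @ Ri_resid) / n         # process variance estimate
    return dict(X=X, theta=theta, R=R, F=F, A=A, beta=beta,
                Ri_resid=Ri_resid, sigma2=sigma2)

def predict(model, Xnew):
    """Return the kriging mean and kriging variance at the rows of Xnew."""
    r = corr(model["X"], Xnew, model["theta"])   # shape (n, n_new)
    g = np.ones((Xnew.shape[0], 1))
    mean = g @ model["beta"] + r.T @ model["Ri_resid"]
    Ri_r = np.linalg.solve(model["R"], r)
    u = model["F"].T @ Ri_r - g.T                # trend-estimation correction
    var = model["sigma2"] * (1.0 - np.sum(r * Ri_r, axis=0)
                             + np.sum(u * np.linalg.solve(model["A"], u), axis=0))
    return mean, np.maximum(var, 0.0)

X = np.array([[0.0], [1.0], [2.0], [3.0], [4.0]])
y = np.sin(X[:, 0])
model = fit_kriging(X, y, theta=np.array([1.0]))
mean_train, var_train = predict(model, X)
mean_mid, var_mid = predict(model, X[:-1] + 0.5)
print(mean_mid, var_mid)
```

At the DoE points the predictor reproduces the observed values and the variance vanishes (up to the nugget), which is the interpolation property mentioned in Sect. 1.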

In the framework of Gaussian process, G(x) is treated as an epistemic random variable and subject to normal distribution.

Equation (15) provides the epistemic uncertainty (or the conditional distribution) of G(x) given SDoE and Y, which makes it possible to quantify the local accuracy of the kriging surrogate model. Most of the kriging-based DoE strategies for reliability analysis, optimization, global sensitivity analysis, etc. are based on the statistical information provided by (15) (Jones et al. 1998; Bichon et al. 2008; Echard et al. 2011; Lv et al. 2015; Yang et al. 2015b; Sun et al. 2017). To find an optimal θ, both cross-validation and maximum likelihood estimation are available; the latter is used in this research. The core issue of this article is how to use the Gaussian process rather than how to improve it, so the model-form uncertainty is ignored.

2.2 The joint distribution of the performance function values of untried points

According to (15), the local uncertainty at any single untried point x can be measured, but this is far from sufficient for reliability analysis. The estimated performance function values at a huge number of untried points are needed to estimate the target failure probability, and any two of the untried points are correlated. This research is interested in the joint distribution of \( {\boldsymbol{Y}}_{\mathrm{U}}={\left[G\left({\boldsymbol{x}}_{\mathrm{U},1}\right),G\left({\boldsymbol{x}}_{\mathrm{U},2}\right),...,G\left({\boldsymbol{x}}_{\mathrm{U},{N}_{\mathrm{U}}}\right)\right]}^T \) of an untried sample of points \( {\boldsymbol{S}}_{\mathrm{U}}=\left[{\boldsymbol{x}}_{\mathrm{U},1},{\boldsymbol{x}}_{\mathrm{U},2},...,{\boldsymbol{x}}_{\mathrm{U},{N}_{\mathrm{U}}}\right] \), which may be helpful for constructing a global accuracy measure of the kriging model or an uncertainty quantification of a kriging-based estimate of the failure probability.

To derive the joint PDF of \( {\boldsymbol{Y}}_{\mathrm{U}}={\left[G\left({\boldsymbol{x}}_{\mathrm{U},1}\right),G\left({\boldsymbol{x}}_{\mathrm{U},2}\right),...,G\left({\boldsymbol{x}}_{\mathrm{U},{N}_{\mathrm{U}}}\right)\right]}^T \), a preparatory theorem is necessary and shown as follows:

Theorem 1: Yt = [Y1, Y2, ..., Yk]T is a multivariate normally distributed vector with mean vector μ and covariance matrix Σ. \( {\boldsymbol{Y}}_1={\left[{Y}_1,...,{Y}_{k_1}\right]}^T \) and \( {\boldsymbol{Y}}_2={\left[{Y}_{k_1+1},...,{Y}_k\right]}^T \) are two sub-vectors of Yt and satisfy (17),

where

Suppose that vector y2 is a realization of Y2. Then, the conditional distribution of Y1 is shown as follows:

where

Proof: Construct an auxiliary vector W,

It can be derived from (25),

Therefore, W1 and W2 are independent of each other. Then, the joint PDF of W can be written as

One can notice,

Now taking (25), (27), and (28) into account, the conditional PDF of Y1 is

Hence, it can be concluded as follows:

This completes the proof.
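The content of Theorem 1 can be checked numerically. The sketch below uses the standard conditioning formulas for a partitioned normal vector, mean μ1 + Σ12Σ22−1(y2 − μ2) and covariance Σ11 − Σ12Σ22−1Σ21, which are assumed to be what (21) to (23) state. It also verifies the decorrelation step that an auxiliary-vector proof of this kind relies on; the function name and the numbers are illustrative.

```python
import numpy as np

def condition_gaussian(mu, Sigma, k1, y2):
    """Mean and covariance of Y1 | Y2 = y2 for a jointly normal [Y1, Y2]."""
    mu1, mu2 = mu[:k1], mu[k1:]
    S11, S12 = Sigma[:k1, :k1], Sigma[:k1, k1:]
    S21, S22 = Sigma[k1:, :k1], Sigma[k1:, k1:]
    K = np.linalg.solve(S22, S21).T          # equals S12 @ inv(S22)
    cond_mean = mu1 + K @ (y2 - mu2)
    cond_cov = S11 - K @ S21
    # Cov(Y1 - K Y2, Y2) = S12 - K S22, which should vanish.
    cross_cov = S12 - K @ S22
    return cond_mean, cond_cov, cross_cov

mu = np.array([1.0, 2.0])
Sigma = np.array([[4.0, 1.2],
                  [1.2, 1.0]])               # correlation 0.6
cond_mean, cond_cov, cross_cov = condition_gaussian(mu, Sigma, 1, np.array([3.0]))
print(cond_mean, cond_cov, cross_cov)
```

For this bivariate case the result agrees with the textbook expressions: the conditional mean is μ1 + ρσ1(y2 − μ2)/σ2 and the conditional variance is σ12(1 − ρ2).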

Theorem 2: X is the input vector of a studied mechanical structure. Given SDoE = [x1,x2,…,xN] and Y = [y1,y2,…,yN]T, construct the kriging model (6) and (12) based on the theory in Sect. 2.1. \( {\boldsymbol{S}}_{\mathrm{U}}=\left[{\boldsymbol{x}}_{\mathrm{U},1},{\boldsymbol{x}}_{\mathrm{U},2},...,{\boldsymbol{x}}_{\mathrm{U},{N}_{\mathrm{U}}}\right] \) contains NU untried points belonging to the X space, and \( {\boldsymbol{Y}}_{\mathrm{U}}={\left[G\left({\boldsymbol{x}}_{\mathrm{U},1}\right),G\left({\boldsymbol{x}}_{\mathrm{U},2}\right),...,G\left({\boldsymbol{x}}_{\mathrm{U},{N}_{\mathrm{U}}}\right)\right]}^T \) collects the performance function values with respect to SU. The vector YU conditional on Y = [y1,y2,…,yN]T follows a multivariate normal distribution.

where

Proof: According to the theory listed in Sect. 2.1, the performance function G(x) is treated as a realization of a Gaussian process with mean function gT(x)β and covariance function σ2R(⋅, ⋅ ; θ). And, [SU, SDoE] is a finite set of points contained in the X space. Therefore, \( {\boldsymbol{Y}}_A={\left[G\left({\boldsymbol{x}}_{\mathrm{U},1}\right),...,G\left({\boldsymbol{x}}_{\mathrm{U},{N}_{\mathrm{U}}}\right),{y}_1,\dots, {y}_N\right]}^T \) is subject to multivariate normal distribution if the values of yn (n = 1,…,N) are not calculated.

where

Now given the realization of Y = [y1,y2,…,yN]T, the conditional distribution of YU on the condition of Y = [y1,y2,…,yN]T and β can be obtained according to (21) in Theorem 1.

where

Notice that the coefficient vector β is still unknown and needs to be estimated with generalized least squares as shown by (7). And, according to the theory of generalized least squares, one can easily derive the following:

where

It is obvious that

Taking (41), (44), and (45) into consideration, the conditional PDF of YU (\( {f}_{{\boldsymbol{Y}}_{\mathrm{U}}\mid \boldsymbol{Y}}\left({\boldsymbol{y}}_{\mathrm{U}}\right) \)) on the condition of Y = [y1,y2,…,yN]T can be derived as follows:

Then,

where

Therefore, the conclusion is

or

This completes the proof.

Similar to (12), the epistemic uncertainty of σ2 is neglected, and σ2 in (34) is replaced with \( {\hat{\sigma}}^2 \) estimated as (13). Then, (32) provides the joint distribution of YU conditional on Y, while (15) focuses on the epistemic randomness of the performance function value at one single point, which is the most important difference between them. Besides, (32) coincides exactly with (15) if NU = 1.
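A minimal sketch of this joint conditional distribution is given below for a constant trend with β estimated by generalized least squares. It assumes the standard universal-kriging posterior covariance, σ2[R(x, x′) − rT(x)R−1r(x′) + uT(x)(FTR−1F)−1u(x′)], as the content of (32) to (34); the function names, nugget, and data are illustrative. The last lines check the remark above that the joint result reduces to the single-point kriging variance when NU = 1.

```python
import numpy as np

def corr(A, B, theta):
    d = A[:, None, :] - B[None, :, :]
    return np.exp(-np.sum(theta * d**2, axis=2))

def joint_posterior(X, y, theta, Xu, nugget=1e-10):
    """Mean vector and covariance matrix of G at the untried points Xu,
    conditional on the DoE (X, y); constant trend, fixed theta."""
    n = X.shape[0]
    R = corr(X, X, theta) + nugget * np.eye(n)
    F = np.ones((n, 1))
    Fu = np.ones((Xu.shape[0], 1))
    Ri_F = np.linalg.solve(R, F)
    A = F.T @ Ri_F
    beta = np.linalg.solve(A, Ri_F.T @ y)
    resid = y - F @ beta
    Ri_resid = np.linalg.solve(R, resid)
    sigma2 = float(resid @ Ri_resid) / n
    r = corr(X, Xu, theta)                       # shape (n, n_u)
    Ri_r = np.linalg.solve(R, r)
    U = Fu.T - F.T @ Ri_r                        # shape (1, n_u)
    mean = Fu @ beta + r.T @ Ri_resid
    cov = sigma2 * (corr(Xu, Xu, theta) - r.T @ Ri_r
                    + U.T @ np.linalg.solve(A, U))
    return mean, cov

X = np.array([[0.0], [1.0], [2.0], [3.0], [4.0]])
y = np.sin(X[:, 0])
theta = np.array([1.0])
Xu = np.array([[0.5], [1.5], [2.2]])
mean_u, cov_u = joint_posterior(X, y, theta, Xu)
# Single-point variances, computed one untried point at a time.
single = [joint_posterior(X, y, theta, Xu[i:i + 1])[1][0, 0] for i in range(3)]
print(cov_u, single)
```

The off-diagonal entries of the covariance matrix carry the correlation between untried points that the single-point distribution cannot express.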

3 The stepwise variance reduction strategy for structural reliability analysis

3.1 Estimate of the target failure probability

The kriging model serves as a surrogate of the target performance function during the reliability analysis procedure. According to the definition of failure probability, only the sign of G(x) matters, and almost all points in the X space are untried. For a given kriging model, the sign of G(x) carries epistemic uncertainty caused by the randomness of G(x). Taking (15) into account, one can obtain

where

πN(x) is the probabilistic classification function proposed by Dubourg et al. (2013). Taking the expectation of both sides of (1) gives

\( {\hat{P}}_{f,N} \) defined by (56) is the kriging-based estimate of the failure probability employed in this research.

Numerical integration and simulation methods are both available for the multivariate integral in (56). Because πN(x) has an explicit expression and can therefore be evaluated millions of times in a short time, MCS, the most robust method, is used here.

where {xMC, i} (i = 1,…,NMC) are i.i.d. (independent and identically distributed) random points subject to f(x) and NMC is the number of MCS points. Any prescribed accuracy of (57) can be reached by increasing NMC. The coefficient of variation of (57) is measured as follows:
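The MCS estimate can be sketched as below, assuming the usual form of the probabilistic classification function, πN(x) = Φ(−Ĝ(x)/σG(x)). The surrogate mean and standard deviation are hypothetical stand-ins for a fitted kriging model, chosen so that a closed-form reference value exists. The coefficient of variation here is computed from the sample variance of πN, which is one common choice and is an assumption rather than a reproduction of (58).

```python
import math
import random

def norm_cdf(t):
    return 0.5 * math.erfc(-t / math.sqrt(2.0))

def estimate_pf(mean_fn, std_fn, dim, n_mc, seed=0):
    """Average pi_N(x) = Phi(-mean/std) over standard normal MCS points."""
    rng = random.Random(seed)
    total, total_sq = 0.0, 0.0
    for _ in range(n_mc):
        x = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        pi = norm_cdf(-mean_fn(x) / std_fn(x))
        total += pi
        total_sq += pi * pi
    p_hat = total / n_mc
    var_pi = max(total_sq / n_mc - p_hat**2, 0.0)
    cov = math.sqrt(var_pi / n_mc) / p_hat       # sample-based CoV of the estimate
    return p_hat, cov

# Hypothetical stand-in for a kriging model: linear mean, constant std.
def surrogate_mean(x):
    return 3.0 - (x[0] + x[1]) / math.sqrt(2.0)

def surrogate_std(x):
    return 0.2

p_hat, cov_p_hat = estimate_pf(surrogate_mean, surrogate_std, dim=2, n_mc=200_000)
# For this stand-in, the expectation has the closed form Phi(-3 / sqrt(1 + 0.2^2)).
reference = norm_cdf(-3.0 / math.sqrt(1.0 + 0.2**2))
print(p_hat, cov_p_hat, reference)
```

No call to the real performance function is made in this step; only the surrogate is evaluated, which is why a large NMC is affordable.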

3.2 Variance of the target failure probability

Because of the epistemic uncertainty of IG ≤ 0(x) and G(x), Pf defined by (1) is also a random variable. Its exact distribution is almost impossible to derive because it involves an infinite number of Bernoulli-distributed variables, any two of which are correlated. This research approximately calculates the variance of Pf. By definition, \( {\hat{P}}_{f,N} \) is the expectation of Pf. So, the variance of Pf can be written as follows:

It is understandable that \( {\sigma}_{P_f,N}^2 \) quantifies the epistemic uncertainty of Pf as well as the accuracy of \( {\hat{P}}_{f,N} \). A small value of \( {\sigma}_{P_f,N}^2 \) or \( {\sigma}_{P_f,N} \) indicates that the difference between Pf and \( {\hat{P}}_{f,N} \) is negligible with a large probability, in the case of which the corresponding kriging model is accurate enough and unnecessary to improve. Otherwise, more points are needed to refresh current DoE and construct a better kriging model.

Similar to the distribution of Pf, \( {\sigma}_{P_f,N}^2 \) is also difficult to obtain. To handle this difficulty, this research replaces Pf and \( {\hat{P}}_{f,N} \) in (59) with their corresponding MCS estimates.

The idea of (60) is to approximate an expectation that involves infinitely many points with a finite number of points. It is worth emphasizing that the MCS estimates of Pf and \( {\hat{P}}_{f,N} \) are computed from the same i.i.d. random points. Equation (60) can be rewritten as follows:

where

The computation of P(G(xMC, i) ≤ 0, G(xMC, j) ≤ 0) needs the joint distribution of [G(xMC, i), G(xMC, j)]T, which has already been derived in Sect. 2.2 (see (32)). The approximation of \( {\sigma}_{P_f,N}^2 \) shown in (61) includes \( 2{N}_{\mathrm{MC}}^2-{N}_{\mathrm{MC}} \) terms. In addition, the failure of an engineering structure may be a rare event, which requires a large NMC. Therefore, (61) may be computationally unaffordable even though all of the terms have explicit expressions. Actually, the epistemic uncertainty of Pf mainly originates from the domain UG, N ≤ 2, where the signs of G(x) and \( \hat{G}\left(\boldsymbol{x}\right) \) differ with considerable probability. Following ref. (Echard et al. 2011), this research neglects the probability that points in the domain UG, N > 2 are wrongly predicted in terms of the sign of the performance function and treats IG ≤ 0(x) as non-random if UG, N(x) > 2; that is to say, (59) can be approximated in the following way:

So, (61) can be further simplified as follows:

Equation (63) or (64) is the proposed approximation of the variance of Pf, which is treated as the measure of the accuracy of \( {\hat{P}}_{f,N} \) defined by (56) or (57).
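The structure of this approximation can be sketched as a double sum of indicator covariances, Cov(Ii, Ij) = P(Gi ≤ 0, Gj ≤ 0) − πiπj, restricted to points with U ≤ 2 and divided by NMC squared. This is a reading of the description above, not a transcription of (63) or (64). The sketch assumes U = |Ĝ|/σG, takes the joint posterior mean and covariance at the MCS points as given, and evaluates the bivariate normal term through the identity ∂Φ2(h, k; ρ)/∂ρ = φ2(h, k; ρ) with Gauss–Legendre integration, which is an implementation choice made here.

```python
import math
import numpy as np

def norm_cdf(t):
    return 0.5 * math.erfc(-t / math.sqrt(2.0))

def indicator_cov(h, k, rho, n_nodes=24):
    """Cov(1[Z1 <= h], 1[Z2 <= k]) for standard normals with correlation rho,
    computed as the integral of the bivariate normal density over [0, rho]."""
    rho = float(np.clip(rho, -0.999, 0.999))
    xi, w = np.polynomial.legendre.leggauss(n_nodes)
    t = 0.5 * rho * (xi + 1.0)                   # map [-1, 1] to [0, rho]
    dens = (np.exp(-(h * h - 2.0 * t * h * k + k * k) / (2.0 * (1.0 - t * t)))
            / (2.0 * math.pi * np.sqrt(1.0 - t * t)))
    return 0.5 * rho * float(np.sum(w * dens))

def pf_variance(mean, cov, u_max=2.0):
    """Approximate variance of P_f from the joint posterior of G at all MCS
    points, keeping only points with U = |mean| / std <= u_max."""
    n_mc = len(mean)
    std = np.sqrt(np.diag(cov))
    idx = np.where(np.abs(mean) / std <= u_max)[0]
    total = 0.0
    for a, i in enumerate(idx):
        hi = -mean[i] / std[i]
        pi_i = norm_cdf(hi)
        total += pi_i * (1.0 - pi_i)             # i == j term (Bernoulli variance)
        for j in idx[a + 1:]:
            hj = -mean[j] / std[j]
            rho = cov[i, j] / (std[i] * std[j])
            total += 2.0 * indicator_cov(hi, hj, rho)   # symmetric pair (i, j), (j, i)
    return total / n_mc**2

var_indep = pf_variance(np.array([0.0, 0.0]), np.eye(2))
var_corr = pf_variance(np.array([0.0, 0.0]), np.array([[1.0, 0.5], [0.5, 1.0]]))
var_far = pf_variance(np.array([0.0, 5.0]), np.eye(2))
print(var_indep, var_corr, var_far)
```

Positive correlation between nearby points increases the variance relative to the independent case, and a point with U > 2 contributes nothing, which is exactly the simplification introduced above.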

3.3 The proposed stepwise variance reduction strategy

This section constructs an innovative DoE strategy named stepwise variance reduction strategy whose principle is to find the point that can minimize the proposed accuracy measurement of \( {\hat{P}}_{f,N} \) in the sense of expectation. The optimal point is named as the next best point.

To search for the next best point, a new symbol is introduced here.

where \( {\boldsymbol{S}}_{\sigma }=\left\{{\boldsymbol{x}}_{\sigma, 1},...,{\boldsymbol{x}}_{\sigma, {N}_{\sigma }}\right\} \) is a sample of i.i.d. random points subject to the conditional PDF f(x| UG, N(x) ≤ 2). They can be generated with either the MC method or the MCMC method. As the quality of the kriging model improves, the fraction of the domain UG, N ≤ 2 tends to become insignificant, which decreases the efficiency of the MC method. The MCMC method performs well in sampling random points from a conditional distribution: it can generate approximately i.i.d. random points from a given conditional PDF if the parameters involved are set appropriately. Besides, the efficiency of the MCMC method does not degrade seriously as the domain UG, N ≤ 2 becomes narrow. Therefore, this research employs MCMC simulation to sample Sσ from f(x| UG, N(x) ≤ 2).
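One simple way to draw such candidates is a random-walk Metropolis sampler whose target is the standard normal PDF restricted to {x : U(x) ≤ 2}. The paper does not state which MCMC variant is used at this point, so the sampler, its step size, and the thinning interval are assumptions; the function U below is a hypothetical stand-in for |Ĝ(x)|/σG(x) of a fitted kriging model.

```python
import math
import random

def sample_conditional(u_fn, x0, n_samples, step=0.8, thin=5, seed=0):
    """Random-walk Metropolis for the standard normal PDF restricted to
    {x : u_fn(x) <= 2}. The start x0 must already lie in that region."""
    rng = random.Random(seed)
    x = list(x0)
    if u_fn(x) > 2.0:
        raise ValueError("starting point must satisfy U <= 2")
    log_f = -0.5 * sum(v * v for v in x)
    samples = []
    while len(samples) < n_samples:
        for _ in range(thin):                    # thinning reduces correlation
            cand = [v + step * rng.gauss(0.0, 1.0) for v in x]
            cand_log_f = -0.5 * sum(v * v for v in cand)
            accept_prob = math.exp(min(0.0, cand_log_f - log_f))
            # Candidates outside the region have zero target density.
            if u_fn(cand) <= 2.0 and rng.random() < accept_prob:
                x, log_f = cand, cand_log_f
        samples.append(list(x))
    return samples

# Hypothetical U function: surrogate mean 3 - (x1 + x2)/sqrt(2), constant std 0.5.
def u_fn(x):
    return abs(3.0 - (x[0] + x[1]) / math.sqrt(2.0)) / 0.5

x0 = [3.0 / math.sqrt(2.0), 3.0 / math.sqrt(2.0)]   # lies on the predicted limit state
samples = sample_conditional(u_fn, x0, n_samples=200)
print(samples[:3])
```

The resulting points are only approximately independent; a larger thinning interval trades extra surrogate evaluations for lower correlation between consecutive candidates.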

3.3.1 Candidates of the next best point

Obviously, \( {\tilde{\sigma}}_{P_f,N}^2 \) is proportional to the proposed approximation of \( {\sigma}_{P_f,N}^2 \). So, the next best point can also be defined as the one that minimizes \( {\tilde{\sigma}}_{P_f,N}^2 \) in the sense of expectation. To simplify the search for the next best point, two hypotheses are made as follows:

1. The uncertainty of Pf caused by the current domain UG, N > 2 is limited and remains negligible if point x is added to the DoE and the kriging model is rebuilt.

2. The next best point is located in the domain UG, N ≤ 2.

While searching for the next best point, one needs to compute the expectation of \( {\tilde{\sigma}}_{P_f,N+1}^2 \) or \( {\sigma}_{P_f,N+1}^2 \) by virtually adding a point to the current DoE and rebuilding the kriging model several times. The first hypothesis means that the uncertainty of Pf for the virtually updated kriging model still comes mainly from the domain UG, N ≤ 2 rather than UG, N > 2. This hypothesis is difficult to prove and may not be rigorous in theory, but it is reasonable in engineering practice and reduces the computational cost of evaluating a point. The second hypothesis shrinks the search area from the whole X space to UG, N ≤ 2, which is intuitive.

Gradient descent algorithms and swarm intelligence-based algorithms may not be suitable for searching for the next best point. The domain UG, N ≤ 2 may be multiply connected, and the objective may have more than one local optimum, especially when the performance function has multiple design points. Besides, when the kriging model is highly accurate, the domain UG, N ≤ 2 is very narrow and difficult to locate. To overcome these difficulties, a second-best alternative is to reduce the candidates for the next best point from the domain UG, N ≤ 2 to Sσ. In other words, this research treats the best point in Sσ as the next best one rather than searching the whole domain UG, N ≤ 2.

3.3.2 The expectation of \( {\tilde{\sigma}}_{P_f,N+1}^2 \)

To quantify \( {\boldsymbol{x}}_{\sigma, {n}_{\sigma }} \) (\( {\boldsymbol{x}}_{\sigma, {n}_{\sigma }}\in {\boldsymbol{S}}_{\sigma } \)) in terms of the expectation of \( {\tilde{\sigma}}_{P_f,N+1}^2 \) after adding it into the current DoE, one needs to reconstruct the kriging model based on \( {\boldsymbol{S}}_{\mathrm{DOE}}=\left[{\boldsymbol{x}}_1,...,{\boldsymbol{x}}_N,{\boldsymbol{x}}_{\sigma, {n}_{\sigma }}\right] \) and \( \boldsymbol{Y}={\left[{y}_1,...,{y}_N,G\left({\boldsymbol{x}}_{\sigma, {n}_{\sigma }}\right)\right]}^{\mathrm{T}} \), where \( {\boldsymbol{x}}_{\sigma, {n}_{\sigma }} \) is one of the candidate points generated by MCMC method. The value of \( {\tilde{\sigma}}_{P_f,N+1}^2\left({\boldsymbol{x}}_{\sigma, {n}_{\sigma }},G\left({\boldsymbol{x}}_{\sigma, {n}_{\sigma }}\right)\right) \) can be estimated as follows:

Obviously, \( {\tilde{\sigma}}_{P_f,N+1}^2 \) depends on both \( {\boldsymbol{x}}_{\sigma, {n}_{\sigma }} \) and \( G\left({\boldsymbol{x}}_{\sigma, {n}_{\sigma }}\right) \). It is worth emphasizing that \( G\left({\boldsymbol{x}}_{\sigma, {n}_{\sigma }}\right) \) is a normally distributed variable defined by (15) before the structural model is run to calculate it. Given \( {\boldsymbol{x}}_{\sigma, {n}_{\sigma }} \), \( {\tilde{\sigma}}_{P_f,N+1}^2\left({\boldsymbol{x}}_{\sigma, {n}_{\sigma }},G\left({\boldsymbol{x}}_{\sigma, {n}_{\sigma }}\right)\right) \) is a random variable whose randomness comes from the epistemic uncertainty of \( G\left({\boldsymbol{x}}_{\sigma, {n}_{\sigma }}\right) \). The expectation of \( {\tilde{\sigma}}_{P_f,N+1}^2\left({\boldsymbol{x}}_{\sigma, {n}_{\sigma }}\right) \) with respect to \( G\left({\boldsymbol{x}}_{\sigma, {n}_{\sigma }}\right) \) is

where

Therefore, the next best point is the point in Sσ that minimizes (67).

3.3.3 About the calculation of (67)

Equation(67) can be rewritten as

where

Obviously, Gauss–Hermite quadrature is available to perform the integral of (70).

where vj (j = 1,…,nG) denote the quadrature points and wj is the weight associated with vj. As the number of quadrature points nG grows, (72) becomes accurate enough to meet engineering requirements.
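For a normally distributed variable Y with mean μ and standard deviation σ, Gauss–Hermite quadrature approximates an expectation as E[h(Y)] ≈ π−1/2 Σj wj h(μ + √2 σ vj). The sketch below applies this standard rule to a hypothetical integrand with a known answer; it is assumed, not confirmed, that (72) uses the same change of variables.

```python
import math
import numpy as np

def gauss_hermite_expectation(h, mu, sigma, n_gauss=10):
    """Approximate E[h(Y)] for Y ~ N(mu, sigma^2) by Gauss-Hermite quadrature."""
    # Nodes v_j and weights w_j for the weight function exp(-v^2).
    v, w = np.polynomial.hermite.hermgauss(n_gauss)
    y = mu + math.sqrt(2.0) * sigma * v          # change of variables
    return float(np.sum(w * h(y))) / math.sqrt(math.pi)

# Check on a case with a closed form: E[Y^2] = mu^2 + sigma^2.
second_moment = gauss_hermite_expectation(lambda y: y**2, mu=1.0, sigma=2.0)
total_mass = gauss_hermite_expectation(lambda y: np.ones_like(y), mu=1.0, sigma=2.0)
print(second_moment, total_mass)
```

In the proposed strategy each quadrature node corresponds to one virtual response at the candidate point, so nG directly sets how many times the kriging model is rebuilt per candidate.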

3.3.4 The procedure of the proposed stepwise variance reduction strategy

The main steps of the proposed stepwise variance reduction strategy are summarized as follows:

Step 1. Generate Nσ i.i.d. points (\( {\boldsymbol{S}}_{\sigma }=\left\{{\boldsymbol{x}}_{\sigma, 1},...,{\boldsymbol{x}}_{\sigma, {N}_{\sigma }}\right\} \)) approximately from the conditional PDF f(x| UG, N(x) ≤ 2) by the MCMC method. Points in Sσ are candidates for the next best one.

Step 2. For each point \( {\boldsymbol{x}}_{\sigma, {n}_{\sigma }}\in {\boldsymbol{S}}_{\sigma } \)

For each Gauss–Hermite quadrature point vj (j = 1,…,nG)

Construct the kriging model using \( {\boldsymbol{S}}_{\mathrm{DOE}}=\left[{\boldsymbol{x}}_1,...,{\boldsymbol{x}}_N,{\boldsymbol{x}}_{\sigma, {n}_{\sigma }}\right] \) and \( \boldsymbol{Y}={\left[{y}_1,...,{y}_N,{y}_{\sigma, {n}_{\sigma },j}\right]}^{\mathrm{T}} \).

Compute \( {\tilde{\sigma}}_{P_f,N+1}^2\left({\boldsymbol{x}}_{\sigma, {n}_{\sigma }},{y}_{\sigma, {n}_{\sigma },j}\right) \) according to (66).

Step 3. According to (72), calculate \( E\left({\boldsymbol{x}}_{\sigma, {n}_{\sigma }}\right) \) for each \( {\boldsymbol{x}}_{\sigma, {n}_{\sigma }}\in {\boldsymbol{S}}_{\sigma } \) based on the results of step 2.

Step 4. Find the next best point that minimizes \( E\left({\boldsymbol{x}}_{\sigma, {n}_{\sigma }}\right) \) ((69)).

In the proposed strategy, the only role of the MCMC method is to generate i.i.d. candidates for the next best point from the given conditional PDF f(x| UG, N(x) ≤ 2). Efficiency aside, other random simulation methods could also be used, provided that the random points are independent and identically distributed. If the sampling PDF is not f(x| UG, N(x) ≤ 2), (65) and the related equations need to be modified accordingly.
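Steps 2 to 4 above can be sketched as a loop over candidates and quadrature nodes. The callbacks `mean_fn`, `std_fn`, and `variance_after` are hypothetical: the first two stand for the current kriging mean and standard deviation, and the third stands for "rebuild the kriging model with the virtual pair (x, y) appended and return the variance measure". The toy callbacks at the end exist only to exercise the loop.

```python
import math
import numpy as np

def select_next_best(candidates, mean_fn, std_fn, variance_after, n_gauss=5):
    """Return the index of the candidate with the smallest Gauss-Hermite
    estimate of the expected post-update variance, plus all scores."""
    v, w = np.polynomial.hermite.hermgauss(n_gauss)
    scores = []
    for x in candidates:                          # Step 2: loop over candidates
        mu, sd = mean_fn(x), std_fn(x)
        ys = mu + math.sqrt(2.0) * sd * v         # virtual responses at the nodes
        vals = [variance_after(x, y) for y in ys] # one model rebuild per node
        # Step 3: quadrature estimate of the expectation.
        scores.append(float(np.dot(w, vals)) / math.sqrt(math.pi))
    return int(np.argmin(scores)), scores         # Step 4: minimizer

# Toy stand-ins (not a kriging model): the "variance" is smallest near x = 1.
candidates = [0.0, 0.9, 2.5]
best, scores = select_next_best(
    candidates,
    mean_fn=lambda x: 0.0,
    std_fn=lambda x: 1.0,
    variance_after=lambda x, y: (x - 1.0) ** 2 + y ** 2,
)
print(best, scores)
```

The cost of one selection is Nσ × nG surrogate rebuilds and no calls to the real performance function, which is the trade the strategy makes to save expensive model runs.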

4 Application of the proposed stepwise variance reduction strategy

As illustrated above, the proposed strategy is designed for structural reliability analysis. It plays a role similar to that of learning functions: finding the next best point with respect to a given criterion (Bichon et al. 2008; Echard et al. 2011). In theory, any kriging-based structural reliability analysis procedure that involves a sequential DoE strategy can employ the proposed strategy. This research applies it to the procedure constructed in ref. (Sun et al. 2017), where it replaces the learning function called LIF. Besides, the stopping criterion of the reliability procedure is also reconstructed. The new criterion proposed below is based mainly on the variance of Pf approximated in Sect. 3.2. The main steps of the procedure, which employs the proposed DoE strategy and the new stopping criterion, are summarized as follows:

Step 1: Generate the initial DoE with N0 points \( {\boldsymbol{S}}_{\mathrm{DoE}}=\left[{\boldsymbol{x}}_1,...,{\boldsymbol{x}}_{N_0}\right] \) and run the model of the studied structure to calculate \( \boldsymbol{Y}={\left[{y}_1,...,{y}_{N_0}\right]}^{\mathrm{T}} \). Latin hypercube sampling (LHS) is used to produce SDoE. Since a non-normal random vector can be transformed into a normal one exactly or approximately, this research supposes that the input random vector X follows the standard multivariate normal distribution. The hypercube for generating SDoE is [− 5,5]M.

Step 2: Construct the kriging surrogate model based on the current SDoE and Y.

Step 3: Estimate the failure probability (\( {\hat{P}}_{f,N} \)) and the variance of Pf (\( {\sigma}_{P_f,N}^2 \)) with MCS according to (57) in Sect. 3.1 and (64) in Sect. 3.2, respectively. The sample of random points used for \( {\hat{P}}_{f,N} \) is the same as the one used for \( {\sigma}_{P_f,N}^2 \). If \( {\hat{P}}_{f,N} \) and \( {\sigma}_{P_f,N} \) satisfy (74), terminate the reliability analysis procedure; \( {\hat{P}}_{f,N} \) is then the estimate of the target failure probability that meets the given accuracy requirement. Otherwise, continue to Step 4.

where [δ] is the threshold coefficient of variation of Pf. Equation (74) means that the potential error of \( {\hat{P}}_{f,N} \) is negligible if the coefficient of variation of Pf (δN) is smaller than the given threshold value ([δ]).

It is worth emphasizing that this step is optional. Its main purpose is to judge whether the kriging model constructed in Step 2 is accurate enough. Therefore, it may be unnecessary to perform this step in every iteration.

Step 4: Perform the proposed stepwise variance reduction strategy to find the next best point according to the procedure introduced in Sect. 3.3.4. Add the next best point to the DoE and calculate its performance function value. Return to Step 2.
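The initial DoE of Step 1 can be generated with a basic Latin hypercube sampler such as the one sketched below. The function name and the random (rather than centered) placement of each point inside its stratum are choices made for this illustration; the bounds default to the hypercube [−5, 5]M stated in Step 1.

```python
import numpy as np

def latin_hypercube(n_points, dim, lower=-5.0, upper=5.0, seed=0):
    """Latin hypercube sample: each dimension is cut into n_points equal
    strata and every stratum receives exactly one point."""
    rng = np.random.default_rng(seed)
    # Independent random ordering of the strata in every dimension.
    strata = np.stack([rng.permutation(n_points) for _ in range(dim)], axis=1)
    unit = (strata + rng.random((n_points, dim))) / n_points   # in [0, 1)
    return lower + (upper - lower) * unit

doe = latin_hypercube(8, 3)                        # initial DoE in [-5, 5]^3
doe_unit = latin_hypercube(8, 3, lower=0.0, upper=1.0)
print(doe)
```

Each row of `doe` would then be passed once to the real performance function to obtain the initial response vector Y.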

The flow chart is shown below. The left part of Fig. 1 is the main body of the reliability analysis procedure, and the right part is the proposed DoE strategy.
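The overall procedure can be sketched as the loop below, assuming that (74) has the form δN = σPf,N / P̂f,N ≤ [δ], as the description in Step 3 suggests. The callbacks `fit`, `estimate`, and `select_next` are hypothetical placeholders for Steps 2 to 4, and the cap `max_calls` is a safeguard added for this sketch that is not part of the described procedure.

```python
import math

def run_reliability_analysis(doe_x, doe_y, performance, fit, estimate,
                             select_next, delta_max=0.05, max_calls=200):
    """Sequential loop: fit the surrogate, check the coefficient of variation
    of P_f, and add the next best point until the criterion is met."""
    doe_x, doe_y = list(doe_x), list(doe_y)
    while True:
        model = fit(doe_x, doe_y)                      # Step 2
        p_hat, var_pf = estimate(model)                # Step 3
        delta = math.sqrt(var_pf) / p_hat if p_hat > 0 else float("inf")
        if delta <= delta_max or len(doe_y) >= max_calls:
            return p_hat, delta, len(doe_y)
        x_new = select_next(model)                     # Step 4
        doe_x.append(x_new)
        doe_y.append(performance(x_new))               # one real model call

# Toy stand-ins: the "model" is just the DoE size n, and the standard
# deviation of P_f shrinks like 1/n.
p_hat, delta, n_calls = run_reliability_analysis(
    doe_x=[0.0, 1.0, 2.0],
    doe_y=[0.0, 0.0, 0.0],
    performance=lambda x: 0.0,
    fit=lambda xs, ys: len(ys),
    estimate=lambda n: (0.01, (0.01 / n) ** 2),
    select_next=lambda n: 0.0,
    delta_max=0.055,
)
print(p_hat, delta, n_calls)
```

The returned number of DoE points corresponds to Ncall, the quantity used in Sect. 5 to compare the efficiency of different strategies.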

5 Examples for validation

Three benchmark examples are analyzed in this section. Results from different methods are compared to validate the efficiency of the proposed DoE strategy.

5.1 A series system with four branches

This section studies a series system that includes four branches. Its performance function is explicit and has two independent variables.

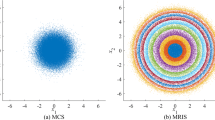

where X = [X1,X2]T is the input variable vector and subject to standard multivariate normal distribution. The main purpose of employing this example is to visualize the efficiency of the proposed DoE strategy in improving the accuracy of the kriging model and the estimation of failure probability.

The reliability analysis procedure introduced in Sect. 4 is applied to this example with Nσ = 3000. Results are summarized in Table 1. Results from some other methods are also listed for comparison. As most existing learning functions are not suitable for a reliability procedure that generates candidates for the next best point in every iteration (such as the procedure in Sect. 4), AK-MCS-based methods are employed (Echard et al. 2011). AK-MCS+the proposed DoE strategy means that the proposed DoE strategy is combined with AK-MCS, in which the candidates for the next best point come from a given sample of i.i.d. points rather than from the MCMC method. It is included to provide a fair comparison between the proposed strategy in Sect. 3 and other learning functions. Ncall denotes the number of calls to the real performance function ((75)). [δ] is the threshold coefficient of variation of Pf. Different values of [δ] are set to investigate how well the proposed estimate of the variance (or standard deviation) of Pf reflects the real accuracy of \( {\hat{P}}_f \) and to demonstrate the efficiency of the proposed DoE strategy in terms of Ncall when the accuracy requirement is given. ε in Table 1 is the relative error of the estimate of failure probability when \( {\hat{P}}_f \) satisfies (74).

Figure 2 details the convergence of \( {\hat{P}}_f \) and the decrease of δN (74) over the iterations of the different methods. For this series system, the proposed strategy tends to give a rough estimate of the target failure probability quickly. Figure 3 compares the estimated limit states with the real one and shows how the proposed DoE strategy stands out from the others. As shown in Fig. 3, the proposed strategy provides a more accurate estimated limit state for a given Ncall; as a result, it needs fewer calls to (75) to meet a given accuracy requirement (Table 1 and Fig. 2).

Given the meaning of [δ] and the stopping criterion (74), a relative error of \( {\hat{P}}_f \) with respect to the reference value may be tolerable for engineering applications if it is less than 3[δ]. As listed in Table 1, the accuracy of the methods based on the proposed strategy is acceptable by this measure, even though, for a given value of [δ] and regardless of Ncall, they may give a less accurate estimate of Pf than other methods. Figure 3 shows that the AK-MCS+U method first concentrates on only one of the four branches and moves to another only after the kriging model is accurate enough in that region. The same behavior is reported in refs. (Echard et al. 2011; Sun et al. 2017). This characteristic explains the large fluctuations of the δN curve of AK-MCS+U (Fig. 2).
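The 3[δ] tolerance can be checked mechanically. The short sketch below computes the relative error ε and compares it with 3[δ]; reading [δ] as a relative standard deviation, this resembles a three-standard-deviation band, which is an interpretation and not a statement from the text. The function names and the numerical values are hypothetical.

```python
def relative_error(pf_hat, pf_ref):
    """Relative error of an estimate with respect to a reference value."""
    return abs(pf_hat - pf_ref) / pf_ref

def is_tolerable(pf_hat, pf_ref, delta_threshold):
    """True if the relative error is below three times the threshold [delta]."""
    return relative_error(pf_hat, pf_ref) < 3.0 * delta_threshold

# Hypothetical values: reference 2.0e-3, threshold [delta] = 0.05.
print(is_tolerable(2.1e-3, 2.0e-3, 0.05))  # relative error 0.05, bound 0.15
print(is_tolerable(2.5e-3, 2.0e-3, 0.05))  # relative error 0.25, bound 0.15
```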

5.2 A truss structure

This section analyzes a widely referenced truss structure (Sun et al. 2017; Blatman and Sudret 2010; Roussouly et al. 2013). As shown in Fig. 4, it contains 23 bars. Eleven of them are horizontal, with random cross section A1 and Young’s modulus E1, and the others are sloping, with random cross section A2 and Young’s modulus E2. Six Gumbel-distributed loads are applied to the nodes of the horizontal bars. The distribution information of these inputs is listed in Table 2. As in the first example, all variables involved are mutually independent.

The structure is considered to have failed if the deflection of node E, |s(x)|, exceeds a given threshold, which is set to 0.14 m in this research for consistency with refs. (Sun et al. 2017; Blatman and Sudret 2010). Therefore, the performance function of the structure is defined as

\( G\left(\boldsymbol{x}\right)=0.14-\left|s\left(\boldsymbol{x}\right)\right| \)

Reference (Blatman and Sudret 2010) gives the reference value of failure probability, which is obtained by IS with 500,000 simulations. References (Sun et al. 2017; Roussouly et al. 2013) indicate that the result is credible.

The reliability analysis method in Sect. 4 and several other methods are applied to this truss structure. All results are summarized in Table 3. The proposed DoE strategy is not combined with AK-MCS here, because failure of this structure is a rare event and AK-MCS+the proposed DoE strategy would require too much computing time.

Figure 5 shows the curves of \( {\hat{P}}_f \) and δN. As Fig. 5 shows, several methods, including the AK-MCS-based ones, produce a rough estimate of the failure probability very quickly, so the advantage of the proposed DoE strategy is not obvious at that stage. However, the proposed strategy needs fewer calls to the real performance function for \( {\hat{P}}_f \) to satisfy the stopping criterion (74) at the different values of [δ].

Comparison of methods in terms of \( {\hat{P}}_f \) and δN (LIF means the method proposed in ref. (Sun et al. 2017))

5.3 A frame structure

Figure 6 shows a frame structure that contains 8 finite elements. Table 4 lists properties of these elements including Young’s modulus (E), Moment of Inertia (I), and cross section (A). P1, P2, and P3 are three random loads. Distribution information of involved variables is summarized in Table 5. Unlike the above examples, some correlation exists between variables of this structure.

The remaining variables are mutually independent.

The performance function of this frame structure is defined as (81).

where s(x) denotes the top displacement as depicted in Fig. 6.

To obtain a reference value of the failure probability, 1.3 × 10^6 i.i.d. simulations are performed, of which 154 random points fall in the failure domain. The reference value of the failure probability is therefore 1.185 × 10^−4, with a coefficient of variation of 0.081.
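The two reference figures follow directly from the counts. The sketch below reproduces them with the standard crude Monte Carlo formulas, Pf ≈ Nfail / N and CoV = sqrt((1 − Pf) / (N · Pf)); the variable names are illustrative.

```python
import math

n_sim = 1_300_000   # number of i.i.d. simulations
n_fail = 154        # points that fall in the failure domain

pf_ref = n_fail / n_sim
# Coefficient of variation of the crude Monte Carlo estimator.
cov_ref = math.sqrt((1.0 - pf_ref) / (n_sim * pf_ref))

print(f"Pf = {pf_ref:.3e}, CoV = {cov_ref:.3f}")
# prints: Pf = 1.185e-04, CoV = 0.081
```

A coefficient of variation of about 8% is larger than the thresholds [δ] = 0.05 and 0.03 considered for the kriging-based estimates, which is why the reference value alone is too noisy to judge them.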

The kriging-based methods mentioned in Sects. 5.1 and 5.2 are applied to the frame structure. Table 6 summarizes all results. As the reference value of Pf is not accurate enough, the estimates of the failure probability (\( {\hat{P}}_f \)) from all kriging models are computed on the same sample of random points mentioned above, which removes the sampling inaccuracy of the reference value from the comparison. Curves of \( {\hat{P}}_f \) and δN are shown in Fig. 7. For this example, the proposed strategy clearly outperforms the other methods.
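The idea of evaluating every surrogate on the same random sample can be sketched as follows. Both `g_true` and `g_surrogate` are toy stand-ins (a linear function and a slightly biased copy of it), not the frame model or a kriging predictor; they only show that, with a shared sample, the difference between the two estimates reflects surrogate error and not sampling noise.

```python
import numpy as np

def failure_fraction(g_values):
    """Fraction of sample points with G <= 0."""
    return float(np.mean(np.asarray(g_values) <= 0.0))

rng = np.random.default_rng(1)
x = rng.standard_normal((100_000, 2))  # one shared sample for all models

def g_true(x):
    # Toy stand-in for the real performance function (assumption).
    return 3.0 - x[:, 0] - 0.5 * x[:, 1]

def g_surrogate(x):
    # Toy stand-in for a kriging mean with a small bias (assumption).
    return 3.05 - x[:, 0] - 0.5 * x[:, 1]

pf_ref = failure_fraction(g_true(x))       # reference on the shared sample
pf_hat = failure_fraction(g_surrogate(x))  # surrogate on the same sample
eps = abs(pf_hat - pf_ref) / pf_ref        # relative error, free of sampling noise
print(pf_ref, pf_hat, eps)
```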

As Fig. 7 shows, the stepwise variance reduction strategy fails to make \( {\hat{P}}_f \) satisfy (74) with fewer than 180 DoE points if [δ] equals 0.05 or 0.03, and the other learning functions perform even worse. As this example has 21 random inputs, the region of the variable space that matters for the failure probability is very broad, and most points in the vicinity of \( \hat{G}\left(\boldsymbol{x}\right)=0 \) or G(x) = 0 are locally inaccurate. Many more DoE points are needed to reduce the value of δ substantially. Moreover, as with the series system in Sect. 5.1, the performance function of the frame structure may contain multiple design points, or the limit state near the design point may be approximately a hyperspherical surface centered at the origin. In such cases, it is difficult to fit the target limit state quickly, even roughly. The instability of AK-MCS+U and AK-MCS+EFF also indicates the complexity of the problem.

6 Conclusion

A stepwise variance reduction DoE strategy for structural reliability analysis is proposed in this research. Its principle is to find the point that minimizes the epistemic variance of Pf in the sense of expectation. The variance of Pf, defined by (56), measures the accuracy of the failure probability estimate and can also be treated as a global accuracy measure of the kriging model. To assess it, the joint distribution of the performance function values at untried points is derived in detail; this distribution is the key to approximating the variance of Pf and to the proposed strategy. In a reliability analysis procedure, the strategy plays a role similar to that of a learning function: it determines the next best point among many candidates. A kriging-based reliability analysis procedure is introduced, which is based mainly on the proposed DoE strategy and the procedure constructed in ref. (Sun et al. 2017). A new stopping criterion is also proposed. Its basic idea is that the reliability analysis procedure can be terminated if the coefficient of variation of Pf is less than a given threshold or the potential error of \( {\hat{P}}_f \) is negligible.

To validate the efficiency of the proposed DoE strategy, three examples are analyzed. One of them is a series system with an explicit performance function. The others are structures with implicit performance functions. The results of the validation lead to the following conclusions: (1) Most of the next best points selected by the proposed strategy are located in the region of importance, so the strategy can roughly estimate the target failure probability and the target limit state quickly. (2) The stepwise variance reduction strategy performs well on problems with multiple design points, implicit and nonlinear performance functions, and high-dimensional input vectors. (3) The proposed stopping criterion has a clear meaning and terminates the reliability analysis procedure in a timely manner according to the accuracy requirement. (4) The proposed DoE strategy is advantageous when a single simulation of the model takes a few hours or more. In addition, the decrease of δN is generally log-linear when the proposed DoE strategy is employed in a reliability analysis procedure, which may be useful for further simplifying the reliability analysis steps and reducing the computational burden.
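One possible use of the log-linear trend of δN is to extrapolate how many calls would be needed to reach a target threshold. The sketch below fits a straight line to log δN against the number of calls and solves for the target. The history used here is synthetic, generated from an exact exponential purely for illustration, and `calls_to_reach` is a hypothetical helper that is not part of the proposed procedure.

```python
import numpy as np

def calls_to_reach(n_calls, delta, target):
    """Extrapolate the number of calls at which delta reaches the target,
    assuming log(delta) decreases linearly with the number of calls."""
    slope, intercept = np.polyfit(n_calls, np.log(delta), 1)
    if slope >= 0.0:
        raise ValueError("delta is not decreasing; extrapolation is meaningless")
    return (np.log(target) - intercept) / slope

# Synthetic history (not data from the paper): delta = 0.8 * exp(-0.04 * N).
n_calls = np.arange(20, 70, 10)
delta = 0.8 * np.exp(-0.04 * n_calls)

n_needed = calls_to_reach(n_calls, delta, target=0.03)
print(f"estimated calls to reach delta = 0.03: {n_needed:.1f}")
```

Real δN histories are noisy, so such an extrapolation would only give a rough planning figure.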

References

Alibrandi U, Alani AM, Ricciardi G (2015) A new sampling strategy for SVM-based response surface for structural reliability analysis. Probab Eng Mech 41:1–12

Au S-K (2016) On MCMC algorithm for subset simulation. Probab Eng Mech 43:117–120

Au SK, Beck JL (2001) Estimation of small failure probabilities in high dimensions by subset simulation. Probab Eng Mech 16(4):263–277

Bae S, Park C, Kim NH (2018) Uncertainty quantification of reliability analysis under surrogate model uncertainty using Gaussian process. In: ASME 2018 International Design Engineering Technical Conferences and Computers and Information in Engineering Conference, IDETC/CIE 2018, August 26–29, 2018. American Society of Mechanical Engineers (ASME), Quebec City

Bichon BJ et al (2008) Efficient global reliability analysis for nonlinear implicit performance functions. AIAA J 46(10):2459–2468

Blatman G, Sudret B (2008) Sparse polynomial chaos expansions and adaptive stochastic finite elements using a regression approach. CR Mec 336(6):518–523

Blatman G, Sudret B (2010) An adaptive algorithm to build up sparse polynomial chaos expansions for stochastic finite element analysis. Probab Eng Mech 25(2):183–197

Bourinet JM, Deheeger F, Lemaire M (2011) Assessing small failure probabilities by combined subset simulation and support vector machines. Struct Saf 33(6):343–353

Bucher C, Most T (2008) A comparison of approximate response functions in structural reliability analysis. Probab Eng Mech 23(2–3):154–163

Cornuet JM et al (2012) Adaptive multiple importance sampling. Scand J Stat 39(4):798–812

Dubourg V, Sudret B, Deheeger F (2013) Metamodel-based importance sampling for structural reliability analysis. Probab Eng Mech 33:47–57

Echard B, Gayton N, Lemaire M (2011) AK-MCS: an active learning reliability method combining kriging and Monte Carlo simulation. Struct Saf 33(2):145–154

Echard B et al (2013) A combined importance sampling and kriging reliability method for small failure probabilities with time-demanding numerical models. Reliab Eng Syst Saf 111:232–240

Gaspar B et al (2014) System reliability analysis by Monte Carlo based method and finite element structural models. J Offshore Mech Arct Eng Trans ASME 136(3):1–09

Gayton N, Bourinet JM, Lemaire M (2003) CQ2RS: a new statistical approach to the response surface method for reliability analysis. Struct Saf 25(1):99–121

Jones DR, Schonlau M, Welch WJ (1998) Efficient global optimization of expensive black-box functions. J Glob Optim 13(4):455–492

Kaymaz I (2005) Application of kriging method to structural reliability problems. Struct Saf 27(2):133–151

Kleijnen JPC (2009) Kriging metamodeling in simulation: a review. Eur J Oper Res 192(3):707–716

Lv Z, Lu Z, Wang P (2015) A new learning function for kriging and its applications to solve reliability problems in engineering. Comput Math Appl 70(5):1182–1197

Melchers RE (1990) Radial importance sampling for structural reliability. J Eng Mech ASCE 116(1):189–203

Pradlwarter HJ et al (2007) Application of line sampling simulation method to reliability benchmark problems. Struct Saf 29(3):208–221

Richard J-F, Zhang W (2007) Efficient high-dimensional importance sampling. J Econ 141(2):1385–1411

Roussouly N, Petitjean F, Salaun M (2013) A new adaptive response surface method for reliability analysis. Probab Eng Mech 32:103–115

Schueremans L, Van Gemert D (2005) Benefit of splines and neural networks in simulation based structural reliability analysis. Struct Saf 27(3):246–261

Shimoyama K et al (2013) Updating kriging surrogate models based on the hypervolume indicator in multi-objective optimization. J Mech Des 135(9):094503

Sobol IM, Tutunnikov AV (1996) A variance reducing multiplier for Monte Carlo integrations. Math Comput Model 23(8–9):87–96

Song H et al (2013) Adaptive virtual support vector machine for reliability analysis of high-dimensional problems. Struct Multidiscip Optim 47(4):479–491

Sun Z et al (2017) LIF: a new kriging based learning function and its application to structural reliability analysis. Reliab Eng Syst Saf 157:152–165

Yang X et al (2015a) An active learning kriging model for hybrid reliability analysis with both random and interval variables. Struct Multidiscip Optim 51(5):1003–1016

Yang X et al (2015b) Hybrid reliability analysis with both random and probability-box variables. Acta Mech 226(5):1341–1357

Yu H, Gillot F, Ichchou M (2012) A polynomial chaos expansion based reliability method for linear random structures. Adv Struct Eng 15(12):2097–2111

Zhang H, Mullen RL, Muhanna RL (2010) Interval Monte Carlo methods for structural reliability. Struct Saf 32(3):183–190

Zhao YG, Ono T (1999) A general procedure for first/second-order reliability method (FORM/SORM). Struct Saf 21(2):95–112

Funding

The study was funded by National Science and Technology Major Project of China (Grant No. 2013ZX04011-011) and the National Defense Technology Foundation of China (Grant No. JSZL2015208B001).

Ethics declarations

Conflict of Interest

The authors declared that they have no conflicts of interest to this work. We declare that we do not have any commercial or associative interest that represents a conflict of interest in connection with the work submitted.

Additional information

Responsible Editor: Raphael Haftka

Cite this article

Yin, M., Wang, J. & Sun, Z. An innovative DoE strategy of the kriging model for structural reliability analysis. Struct Multidisc Optim 60, 2493–2509 (2019). https://doi.org/10.1007/s00158-019-02337-0

DOI: https://doi.org/10.1007/s00158-019-02337-0