Abstract

In this contribution, we review mortar finite element methods (FEM), which are nowadays the most well-established computational technique for contact modeling of solids and structures in the context of finite deformations and frictional sliding. Based on some concepts of nonlinear continuum mechanics, the mortar approach is first presented for the more accessible case of mesh tying (also referred to as tied contact). Mortar methods for unilateral contact then follow in a rather straightforward manner, even though several complexities, such as inequality constraints, are added to the problem formulation. A special focus is set on practical aspects of the implementation of mortar methods within a fully nonlinear, 3D finite element environment. Specifically, the choice of suitable discrete Lagrange multiplier bases, aspects of high performance computing (HPC), numerical integration procedures and new discretization techniques such as isogeometric analysis (IGA) using NURBS are discussed. Finally, the great potential of mortar methods in the more general field of computational interface mechanics is exemplified through applications such as wear modeling and coupled thermo-mechanical interfaces.



1 Introduction and Motivation

Contact phenomena are virtually omnipresent in nature and biological systems. The associated length and time scales cover the entire spectrum from the nanoscale to the macroscopic level and from hypervelocity impact to quasi-static contact interaction, respectively. For example, the plate tectonics process of the continental drift, the simple motion sequence when walking or the flow of red blood cells (erythrocytes) through blood vessels are all representatives of processes largely dominated by contact and associated physical effects. Beyond that, science and engineering have exploited the principles of contact mechanics to develop processes, such as deep-drawing or extrusion-molding, as well as technical systems and machine parts, including car tires, fluid bearings, gears, shafts and splines or elastomeric seals.

Contact mechanics can be looked at from several different perspectives. For some scenarios, e.g. in nanotribology, it is helpful or even mandatory to investigate contact interaction at an atomistic level. For many contact applications, however, a purely macroscopic viewpoint based on classical continuum assumptions is sufficient. Throughout this chapter, a continuum approach is followed, mainly considering contact mechanics as a particularly challenging subclass of solid and structural mechanics. The geometrical constraint of non-penetration of different solid bodies can then easily be identified as the most important underlying principle of contact interaction. In addition, the overall contact phenomenon is commonly influenced by one or several closely related interface effects, for example sticking and sliding friction, adhesion, elastohydrodynamic lubrication and wear. Altogether, contact and its associated phenomena introduce strong additional nonlinearities into solid mechanics problems, where contact itself can basically be interpreted as a set of complex boundary conditions that may change over time. Together with the nonlinearities already inherent in general solid mechanics, i.e. large deformations and nonlinear constitutive (material) behavior, this illustrates the difficulty of mathematically describing and solving contact interactions, even for quite simple problem setups. Due to this complexity, analytical solution techniques are applicable to only very few contact problems. The work of Hertz (1882) on pressure distributions between contacting elastic bodies, published more than a century ago, is commonly considered to be the origin of modern contact analysis. A comprehensive overview of the basic principles of contact mechanics, together with the most important analytical solution techniques, can be found in the textbooks by Johnson (1985) and Timoshenko and Goodier (1970).

With general contact problems being hardly accessible to mathematical analysis, experimental procedures and numerical modeling naturally become the focus of attention. Physical experiments are a convenient way of gaining information about certain aspects of contact mechanics, e.g. for determining coefficients of friction for different material pairings. However, for the majority of contact scenarios, experimental procedures are of limited applicability or practically infeasible. As a prominent example, experimental crashworthiness assessment in accordance with safety regulations and consumer protection tests causes considerable costs in the automotive industry. Complex contact phenomena in patient-specific surgery planning or during the design of medical devices, e.g. guaranteeing the optimal placement and minimum leakage of arterial stents, do not allow for meaningful experimental tests at all. Taken together, these examples show that there is a high and ever-growing demand for powerful numerical modeling and simulation techniques in contact mechanics. Improved contact simulation approaches are all the more promising because the resulting numerical algorithms can typically be employed for a very broad range of scientific and technical interests. In fundamental physical, chemical or biological research, as well as in the applied sciences, novel methods and tools of computational contact mechanics allow for a better understanding of complex systems influenced by contact phenomena. Likewise, many aspects of engineering practice and product development (e.g. minimizing the frictional loss in gear transmissions, optimizing the structural integrity of car bodies in crash situations) benefit substantially from improvements in contact modeling and simulation.

2 Contact Mechanics and FEM

All ideas and methods of computational contact mechanics are discussed exclusively in the context of the finite element method (FEM) throughout this chapter. Since the 1960s, the FEM has gradually evolved into the dominant numerical approximation technique for the solution of partial differential equations (PDEs) in various fields, especially solid and structural mechanics including contact mechanics, but also fluid mechanics, thermodynamics and coupled problems. The general FEM literature is abundant; the interested reader is referred, for example, to the monographs by Bathe (1996), Hughes (2000), Belytschko et al. (2000), Reddy (2004), Zienkiewicz et al. (2005) and Zienkiewicz and Taylor (2005). Other approaches for the numerical simulation of contact mechanics are mentioned only very briefly here for the sake of completeness. Multibody dynamics is a suitable tool for analyzing contact and impact phenomena of rigid bodies, with possible extensions to elastic multibody dynamics allowing for a certain degree of deformation of the contacting bodies. Moreover, particle methods such as the discrete element method (DEM) are frequently used for investigating granular and particulate materials, whose mechanical behavior is largely dominated by contact interaction. While finite elements are usually not the method of choice for such applications, this chapter is mainly concerned with contact of elastic solid bodies, possibly including very large deformations, and in this context the FEM undoubtedly provides a very convenient framework for numerical modeling and simulation. Furthermore, there is an increasing interest in the interplay of contact mechanics with other physical phenomena, such as thermomechanics, wear and the lubrication behavior of thin fluid films, where finite elements are also a suitable approach, e.g. due to their generality and geometrical flexibility.

First contributions to the treatment of contact mechanics within the FEM can be traced back to the 1970s and 1980s. In Francavilla and Zienkiewicz (1975) and Hughes et al. (1976), contact conditions are formulated based on a very simple, purely node-based approach, which requires node-matching finite element meshes at the contact interface and is restricted to small deformations. Subsequently, a different idea was pursued, typically denoted as the node-to-surface or node-to-segment (NTS) approach and characterized by a discrete, point-wise enforcement of the non-penetration condition at the finite element nodes. This NTS approach could readily be applied to finite deformations and large sliding motions and therefore soon became the standard procedure in computational contact mechanics. For a comprehensive overview, the reader is referred to Bathe and Chaudhary (1985), Hallquist et al. (1985), Benson and Hallquist (1990), Simo and Laursen (1992), Laursen (1992), Laursen and Simo (1993) and Wriggers et al. (1990), without any claim that this list is exhaustive. An important basis for the methods proposed in this chapter is formed by the first investigations of the so-called segment-to-segment (STS) approach in Papadopoulos and Taylor (1992) and Simo et al. (1985). In contrast to the purely point-wise procedure typical of NTS methods, the STS approach is based on a thorough subdivision of the contact surface into individual segments for numerical integration, together with an independent approximation of the contact pressure. Thereby, the STS approach can be interpreted as a precursor of mortar finite element methods for computational contact mechanics, which are the main topic here.

Before reviewing the literature on mortar methods, an overview of other important aspects of computational contact mechanics aside from the discretization approach (NTS, STS, mortar) is given. Much attention has been paid to different procedures for the enforcement of contact constraints, the most prominent representatives being penalty methods, Lagrange multiplier methods and augmented Lagrangian methods; see Alart and Curnier (1991) for an excellent overview and discussion. Further questions related to contact modeling within a finite element framework comprise efficient search algorithms (Williams and O’Connor 1999), mesh adaptivity (Wriggers and Scherf 1995; Carstensen et al. 1999; Hüeber and Wohlmuth 2012), covariant surface description (Laursen and Simo 1993; Schweizerhof and Konyukhov 2005), surface smoothing (Wriggers et al. 2001; Puso and Laursen 2002), the treatment of contact on enriched and embedded interfaces (Laursen et al. 2012), modeling of interface effects other than friction (Yang and Laursen 2009; Sauer 2011), beam contact (Wriggers and Zavarise 1997; Zavarise and Wriggers 2000) and energy conservation in the context of contact dynamics (Laursen and Chawla 1997; Laursen and Love 2002; Hager et al. 2008; Hesch and Betsch 2009), among others. Apart from numerous original papers, a comprehensive introduction to most of these topics can be found in the textbooks by Laursen (2002) and Wriggers (2006).

Nevertheless, novel robust discretization techniques for finite deformation contact problems, and especially mortar finite elements adapted for this purpose, have arguably received the most attention in computational contact mechanics in recent years. Mortar methods, which were originally introduced as an abstract domain decomposition technique (Bernardi et al. 1994; Ben Belgacem 1999; Seshaiyer and Suri 2000), are characterized by a weak imposition of the interface constraints and by provable mathematical optimality. In the context of contact analysis, this allows for a variationally consistent treatment of non-penetration and frictional sliding conditions despite the inevitably non-matching interface meshes for finite deformations and large sliding motions. Early applications of mortar finite element methods to contact mechanics can, for example, be found in Ben Belgacem et al. (1998), Hild (2000) and McDevitt and Laursen (2000), though limited to small deformations. Gradually, the restrictions of mortar-based contact formulations with respect to nonlinear kinematics have been removed, leading to the implementations given in Puso and Laursen (2004a, b), Fischer and Wriggers (2005), Fischer and Wriggers (2006), Hesch and Betsch (2009), Tur et al. (2009) and Hesch and Betsch (2011).

An alternative choice for the discrete Lagrange multiplier space, so-called dual Lagrange multipliers, was proposed in Wohlmuth (2000, 2001). In contrast to the standard mortar approach, it generates interface coupling conditions that are much easier to realize, without compromising the optimality of the method: owing to a biorthogonality condition between the Lagrange multiplier and displacement shape functions, the slave-side mortar coupling matrix becomes diagonal. Applications of this approach to small deformation contact problems can be found in Hüeber and Wohlmuth (2005), Flemisch and Wohlmuth (2007), Brunssen et al. (2007) and Hüeber et al. (2008), and first steps towards finite deformations have been undertaken in Hartmann (2007) and Hartmann et al. (2007). A fully nonlinear extension of the dual mortar approach including consistent linearization of all deformation-dependent quantities has been proposed in Popp et al. (2009, 2010), with extensions to frictional sliding, second-order finite elements and a consistent treatment of dropping-edge problems following shortly afterwards (Cichosz and Bischoff 2011; Popp et al. 2012; Wohlmuth et al. 2012; Popp et al. 2013; Popp and Wall 2014). Another interesting feature of dual Lagrange multiplier interpolation is that it naturally fits together with so-called primal-dual active set strategies for constraint enforcement. It is well known from the mathematical literature on constrained optimization problems, and also from applications in computational contact mechanics, that primal-dual active set strategies can equivalently be interpreted as semi-smooth Newton methods (Alart and Curnier 1991; Qi and Sun 1993; Christensen et al. 1998; Christensen 2002; Hintermüller et al. 2002), which allows for the design of very efficient global solution algorithms, especially in the context of nonlinear material behavior and finite deformations.
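The equivalence between active set updates and semi-smooth Newton steps can be made concrete on a toy problem. The following minimal sketch is not the mortar contact algorithm of the works cited above; it applies a primal-dual active set strategy to a hypothetical 1D obstacle problem, namely a string discretized by finite differences and pressed onto a flat rigid obstacle. The function name `pdass_obstacle`, the complementarity parameter `c` and all data are illustrative assumptions. Nodes are classified as active through the nonlinear complementarity function \(\lambda - \max (0, \lambda - c\, g)\), and each iteration solves a single linear saddle-point system.

```python
import numpy as np


def pdass_obstacle(A, f, phi, c=1.0, max_iter=50):
    """Primal-dual active set strategy (semi-smooth Newton) for the toy problem
        A u - f - lam = 0,  g = u - phi >= 0,  lam >= 0,  lam * g = 0.
    Returns displacements u, contact forces lam, the active set and the
    iteration count at which the active set stopped changing."""
    n = len(f)
    u = np.zeros(n)
    lam = np.zeros(n)
    active = np.zeros(n, dtype=bool)
    idx = np.arange(n)
    for it in range(max_iter):
        # Active set prediction from the NCP function lam - max(0, lam - c*g)
        new_active = lam - c * (u - phi) > 0.0
        if it > 0 and np.array_equal(new_active, active):
            return u, lam, active, it  # active set unchanged: converged
        active = new_active
        # Linear saddle-point system in the unknowns (u, lam)
        K = np.zeros((2 * n, 2 * n))
        rhs = np.zeros(2 * n)
        K[:n, :n] = A
        K[:n, n:] = -np.eye(n)
        rhs[:n] = f
        # Active nodes: closed gap, u_i = phi_i
        K[n + idx[active], idx[active]] = 1.0
        rhs[n + idx[active]] = phi[active]
        # Inactive nodes: no contact force, lam_i = 0
        K[n + idx[~active], n + idx[~active]] = 1.0
        sol = np.linalg.solve(K, rhs)
        u, lam = sol[:n], sol[n:]
    raise RuntimeError("active set did not settle within max_iter iterations")


# Hypothetical 1D example: string on (0, 1), fixed ends, pressed down onto phi
n = 49
h = 1.0 / (n + 1)
A = (2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)) / h**2
f = -10.0 * np.ones(n)   # downward load
phi = -0.5 * np.ones(n)  # flat rigid obstacle below the string
u, lam, active, iterations = pdass_obstacle(A, f, phi)
```

Once the active set no longer changes, every inactive node satisfies \(u_i \ge \phi _i\) with zero force and every active node has a positive contact force, so all complementarity conditions hold. The parameter `c` only influences the very first prediction, because after each solve either the gap or the force of a node is zero by construction.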

Recent developments in the by now rather broad field of mortar finite element methods for computational contact mechanics include, among others, the following topics: smoothing techniques (Tur et al. 2012), isogeometric analysis using NURBS (Temizer et al. 2011, 2012; De Lorenzis et al. 2014; Brivadis et al. 2015), improved numerical integration schemes (Farah et al. 2015), complex interface models such as wear (Cavalieri and Cardona 2013; Farah et al. 2016, 2017), treatment of embedded interfaces (Laursen et al. 2012) as well as aspects of adaptivity and high performance computing (Popp and Wall 2014; Kindo et al. 2014). While a few different discretization approaches have been suggested, see e.g. the contact domain method proposed in Hartmann et al. (2009) and Oliver et al. (2009), and while NTS methods are still very popular in engineering practice, mortar-based contact formulations have become well established in the meantime and can arguably be seen as the state-of-the-art method for computational contact mechanics.

3 Overview of Nonlinear Continuum Mechanics

In this section, the basic concepts of nonlinear continuum mechanics are reviewed with a focus on the governing equations for solid dynamics and contact interaction required later. These remarks are not intended to give an exhaustive overview of the topic, but are rather geared towards outlining the necessary basics for contact mechanics. For more extensive reviews in the field of solid and structural dynamics, the reader is referred to the corresponding literature, e.g., Gurtin (1981), Marsden and Hughes (1994), Ogden (1997), Bonet and Wood (1997), Holzapfel (2000) and Simo and Hughes (1998). Large parts of this section are based on the author’s previously published work (Popp 2012).

3.1 Kinematics

In this subsection, the fundamental kinematic relationships describing the deformation of a homogeneous body are presented. The classical (Boltzmann) continuum model in a three-dimensional Euclidean space is assumed. Two configurations are distinguished: the reference configuration \(\Omega _0 \subset \mathbb {R}^3\) denotes the domain occupied by all material points \(\varvec{X}\) at time \(t=0\), while the current configuration \(\Omega _t \subset \mathbb {R}^3\) describes the changed positions \(\varvec{x}\) at a certain time t. The motion and deformation from reference to current configuration are tracked with the bijective nonlinear deformation map

\[ \Phi _t : \Omega _0 \rightarrow \Omega _t, \qquad \varvec{X} \mapsto \varvec{x}, \]

which also allows for the notations \(\varvec{x}=\Phi _t(\varvec{X},t)\) and \(\varvec{X}=\Phi _t^{\mathsf {-1}}(\varvec{x},t)\). The absolute displacement of a material point (see again Fig. 1) is then described as

\[ \varvec{u}(\varvec{X},t) = \varvec{x}(\varvec{X},t) - \varvec{X}. \]

Within the total Lagrangian approach, kinematic relations and all derived quantities are described with respect to the material points in the reference configuration \(\Omega _0\). Thus, the material point position \(\varvec{X}\) plays the role of an independent variable for the problem formulation, while the primary unknown to be solved for is the time-dependent deformation map \(\Phi _t(\varvec{X},t)\), or equivalently the displacement vector \(\varvec{u}(\varvec{X},t)\).

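A fundamental measure of deformation and strain in finite deformation solid mechanics is the deformation gradient \({\varvec{F}}\), defined as the partial derivative of the current position \(\varvec{x}\) with respect to the reference position \(\varvec{X}\):

\[ {\varvec{F}} = \frac{\partial \Phi _t(\varvec{X},t)}{\partial \varvec{X}} = \frac{\partial \varvec{x}}{\partial \varvec{X}} = {\varvec{I}} + \frac{\partial \varvec{u}}{\partial \varvec{X}}, \]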

where \({\varvec{I}}\) is the second-order identity tensor. Assuming as usual bijectivity and smoothness of the deformation map \(\Phi _t\), the inverse deformation gradient \({\varvec{F}}^{\mathsf {-1}}=\partial \varvec{X} / \partial \varvec{x}\) is also well defined, so that \(J = \det {\varvec{F}} \ne 0\). Since \(J = 1\) in the reference configuration and J changes continuously with the motion, this guarantees a positive determinant \(J > 0\). This quantity, commonly denoted as the Jacobian determinant of the deformation, describes the transformation of an infinitesimal volume element between the two configurations:

\[ \mathrm {d}V = J \, \mathrm {d}V_0. \]

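The deformation gradient also allows for the mapping of an infinitesimal, oriented area element from reference to current configuration, yielding

\[ \mathrm {d} \varvec{A} = J \, {\varvec{F}}^{\mathsf {-T}} \cdot \mathrm {d} \varvec{A}_0, \tag{5} \]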

which is commonly referred to as Nanson’s formula. Herein, the infinitesimal area elements are interpreted as vectors \(\mathrm {d} \varvec{A}_0 = \mathrm {d} A_0 \, \varvec{N}\) and \(\mathrm {d} \varvec{A} = \mathrm {d} A \, \varvec{n}\), where \(\varvec{N}\) and \(\varvec{n}\) denote unit normal vectors of the area element in the reference and current configuration, respectively.
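A quick numerical check of these kinematic relations can help when implementing them. The sketch below assumes NumPy and a hypothetical quadratic displacement field; it approximates \({\varvec{F}} = {\varvec{I}} + \partial \varvec{u}/\partial \varvec{X}\) with central differences, evaluates \(J = \det {\varvec{F}}\), and verifies Nanson’s formula \(\mathrm {d}\varvec{A} = J\,{\varvec{F}}^{\mathsf {-T}} \cdot \mathrm {d}\varvec{A}_0\) by comparing it with the area vector spanned by two mapped material line elements. All function names and numbers are illustrative.

```python
import numpy as np


def displacement(X, t):
    """Hypothetical displacement field u(X, t), used only for illustration."""
    x1, x2, x3 = X
    return t * np.array([0.2 * x1 + 0.1 * x2**2,
                         0.3 * x1,
                         -0.1 * x3])


def deformation_gradient(u, X, t, h=1e-6):
    """F = I + du/dX, approximated column by column with central differences."""
    F = np.eye(3)
    for j in range(3):
        dX = np.zeros(3)
        dX[j] = h
        F[:, j] += (u(X + dX, t) - u(X - dX, t)) / (2.0 * h)
    return F


X = np.array([1.0, 0.5, 2.0])
F = deformation_gradient(displacement, X, t=1.0)
J = np.linalg.det(F)  # volume ratio dV / dV0; must be positive

# Nanson's formula: oriented area spanned by two material line elements a, b
a = np.array([1.0, 0.0, 0.0])
b = np.array([0.0, 1.0, 0.0])
dA0 = np.cross(a, b)                        # reference area vector dA0 * N
dA_mapped = np.cross(F @ a, F @ b)          # area spanned by the deformed edges
dA_nanson = J * np.linalg.inv(F).T @ dA0    # J F^{-T} dA0
```

Because \((\boldsymbol{F}\boldsymbol{a}) \times (\boldsymbol{F}\boldsymbol{b}) = \det \boldsymbol{F}\, \boldsymbol{F}^{-\mathsf{T}} (\boldsymbol{a} \times \boldsymbol{b})\) is an exact algebraic identity, `dA_mapped` and `dA_nanson` agree up to round-off for any invertible F.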

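An obvious choice for a suitable nonlinear strain measure is the so-called Green–Lagrange strain tensor \({\varvec{E}}\), defined in the material configuration as

\[ {\varvec{E}} = \frac{1}{2} \left( {\varvec{F}}^{\mathsf {T}} \cdot {\varvec{F}} - {\varvec{I}} \right) = \frac{1}{2} \left( {\varvec{C}} - {\varvec{I}} \right), \]

where \({\varvec{C}} = {\varvec{F}}^{\mathsf {T}} \cdot {\varvec{F}}\) denotes the right Cauchy–Green deformation tensor.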

Although the choice of a strain measure is never unique, the Green–Lagrange strain tensor is a very common choice in nonlinear solid mechanics, and it is particularly convenient if large deformations (in particular large rotations) occur while stretches and compressions remain moderate.
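The main reason for using \({\varvec{E}}\) instead of the linearized strain can be seen directly: for a pure rigid body rotation, the Green–Lagrange strain vanishes, whereas the small-strain tensor reports spurious strain. A minimal NumPy sketch with illustrative function names:

```python
import numpy as np


def green_lagrange(F):
    """E = 1/2 (F^T F - I)."""
    return 0.5 * (F.T @ F - np.eye(3))


def small_strain(F):
    """Linearized strain 1/2 (H + H^T) with displacement gradient H = F - I."""
    H = F - np.eye(3)
    return 0.5 * (H + H.T)


theta = np.deg2rad(60.0)
R = np.array([[np.cos(theta), -np.sin(theta), 0.0],
              [np.sin(theta),  np.cos(theta), 0.0],
              [0.0,            0.0,           1.0]])

E_rot = green_lagrange(R)   # rigid rotation: zero strain up to round-off
eps_rot = small_strain(R)   # linearized strain: nonzero for a rigid rotation
```

Here `eps_rot` has diagonal entries \(\cos \theta - 1 = -0.5\) in the rotation plane, although the body is not strained at all.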

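The first and second time derivatives of the displacement vector \(\varvec{u}(\varvec{X},t)\) in material description, i.e. velocities \(\dot{\varvec{u}}(\varvec{X},t)\) and accelerations \(\ddot{\varvec{u}}(\varvec{X},t)\), are defined as follows:

\[ \dot{\varvec{u}}(\varvec{X},t) = \frac{\partial \varvec{u}(\varvec{X},t)}{\partial t}, \qquad \ddot{\varvec{u}}(\varvec{X},t) = \frac{\partial ^2 \varvec{u}(\varvec{X},t)}{\partial t^2}. \]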

Corresponding rate forms (i.e. time derivatives) of the deformation measures, such as the material velocity gradient \({\varvec{L}}=\dot{{\varvec{F}}}\) or the material strain rate tensor \(\dot{{\varvec{E}}} = \frac{1}{2} (\dot{{\varvec{F}}}^{\mathsf {T}} \cdot {\varvec{F}} + {\varvec{F}}^{\mathsf {T}} \cdot \dot{{\varvec{F}}}) = \frac{1}{2} \dot{{\varvec{C}}}\) are readily defined, too.
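The rate relation \(\dot{{\varvec{E}}} = \frac{1}{2} (\dot{{\varvec{F}}}^{\mathsf {T}} \cdot {\varvec{F}} + {\varvec{F}}^{\mathsf {T}} \cdot \dot{{\varvec{F}}}) = \frac{1}{2} \dot{{\varvec{C}}}\) can be verified numerically. The sketch below assumes a hypothetical deformation history F(t) built from a constant matrix G and compares the formula with central differences in time; all names and values are illustrative.

```python
import numpy as np

# Constant matrix used to build an illustrative deformation history F(t)
G = np.array([[0.1, 0.3, 0.0],
              [0.0, -0.2, 0.1],
              [0.05, 0.0, 0.2]])


def F_of_t(t):
    """Illustrative deformation gradient history with F(0) = I."""
    return np.eye(3) + t * G + 0.5 * t**2 * (G @ G)


def F_dot(t):
    """Exact time derivative of F_of_t."""
    return G + t * (G @ G)


def green_lagrange(F):
    return 0.5 * (F.T @ F - np.eye(3))


t, dt = 0.7, 1e-6
F, Fd = F_of_t(t), F_dot(t)
E_dot = 0.5 * (Fd.T @ F + F.T @ Fd)  # rate formula from the text
E_dot_fd = (green_lagrange(F_of_t(t + dt))
            - green_lagrange(F_of_t(t - dt))) / (2.0 * dt)
C_dot_fd = (F_of_t(t + dt).T @ F_of_t(t + dt)
            - F_of_t(t - dt).T @ F_of_t(t - dt)) / (2.0 * dt)
```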

3.2 Stresses and Constitutive Laws

The motion and deformation of an elastic body give rise to internal stresses. These are described by the traction vector \(\varvec{t}\) in the current configuration,

\[ \varvec{t} = \lim _{\Delta A \rightarrow 0} \frac{\varvec{f}}{\Delta A}, \]

i.e. the limit value of the resulting force \(\varvec{f}\) acting on an arbitrary surface area \(\Delta A\) characterized by its unit surface normal vector \(\varvec{n}\). The Cauchy theorem then relates tractions and stresses via

\[ \varvec{t} = {\varvec{\sigma }} \cdot \varvec{n}. \]

Herein, the symmetric Cauchy stress tensor \({\varvec{\sigma }}\) represents the true internal stress state within a body in its a priori unknown current configuration; its diagonal and off-diagonal components can be interpreted as normal stresses and shear stresses, respectively. Several alternative stress measures are common in nonlinear continuum mechanics. For example, the first Piola–Kirchhoff stress tensor \({\varvec{P}}\) maps the material surface element \(\mathrm {d} \varvec{A}_0 = \mathrm {d} A_0 \varvec{N}\) onto the spatial resulting force \(\varvec{f}\). Its definition is obtained from the Cauchy stress tensor \({\varvec{\sigma }}\) by applying Nanson’s formula (5), yielding

\[ {\varvec{P}} = J \, {\varvec{\sigma }} \cdot {\varvec{F}}^{\mathsf {-T}}. \]

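Consequently, a stress tensor based purely on quantities in the reference configuration can be constructed as well. By additionally pulling back the resulting force vector \(\varvec{f}\) with \({\varvec{F}}^{\mathsf {-1}}\), the symmetric second Piola–Kirchhoff stress tensor \({\varvec{S}}\) emerges as

\[ {\varvec{S}} = {\varvec{F}}^{\mathsf {-1}} \cdot {\varvec{P}} = J \, {\varvec{F}}^{\mathsf {-1}} \cdot {\varvec{\sigma }} \cdot {\varvec{F}}^{\mathsf {-T}}. \]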

With typical measures for both strains and stresses established, constitutive relations provide the missing link between kinematics and material response. Throughout this chapter, only homogeneous bodies undergoing purely elastic deformation processes without internal dissipation are considered. Moreover, the existence of a so-called strain energy function or elastic potential \(\Psi ({\varvec{F}})\) is assumed, which depends only on the current state of deformation (hyperelastic material behavior). The requirement of objectivity implies that \(\Psi \) remains unchanged when an arbitrary rigid body rotation is superposed on the current configuration, so that \(\Psi \) can equivalently be expressed in terms of \({\varvec{E}}\). A common formulation of hyperelastic materials in the reference frame then follows as

\[ {\varvec{S}} = \frac{\partial \Psi }{\partial {\varvec{E}}}. \tag{13} \]

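The relation between \({\varvec{S}}\) and \({\varvec{E}}\) given by (13) will in general be nonlinear. Consequently, the fourth-order material elasticity tensor \(\pmb {\mathscr {C}}_{\mathsf {m}}\), which is required for the linearization within typical finite element procedures, is determined by a further differentiation, yielding

\[ \pmb {\mathscr {C}}_{\mathsf {m}} = \frac{\partial {\varvec{S}}}{\partial {\varvec{E}}} = \frac{\partial ^2 \Psi }{\partial {\varvec{E}} \, \partial {\varvec{E}}}. \tag{14} \]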

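Only one widely used constitutive model is presented here as an example: the St.-Venant–Kirchhoff material model, an isotropic, hyperelastic model based on the quadratic strain energy function

\[ \Psi = \frac{\lambda }{2} \left( \mathrm {tr}\, {\varvec{E}} \right) ^2 + \mu \, \mathrm {tr} \left( {\varvec{E}}^2 \right). \tag{15} \]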

Here, \(\lambda \) and \(\mu \) denote the so-called Lamé parameters, which are related to the more common Young’s modulus E and Poisson’s ratio \(\nu \) via

\[ \lambda = \frac{E \nu }{(1+\nu )(1-2\nu )}, \qquad \mu = \frac{E}{2(1+\nu )}. \]

Inserting (15) into (13) and (14) yields \({\varvec{S}} = \lambda \, (\mathrm {tr}\, {\varvec{E}}) \, {\varvec{I}} + 2 \mu \, {\varvec{E}}\) together with a constant elasticity tensor \(\pmb {\mathscr {C}}_{\mathsf {m}}\). Hence, the St.-Venant–Kirchhoff material model defines a linear relationship between Green–Lagrange strains \({\varvec{E}}\) and second Piola–Kirchhoff stresses \({\varvec{S}}\), and can therefore be interpreted as an objective generalization of Hooke’s law to the geometrically nonlinear realm. Many other constitutive laws exist for various applications (e.g. the well-known Neo–Hookean, Mooney–Rivlin or Ogden models for rubber materials). However, with the focus of this chapter being on contact interaction rather than constitutive modeling, the interested reader is referred to the abundant literature on hyperelasticity, viscoelasticity or elastoplasticity for further details, e.g. Holzapfel (2000), Ogden (1997) and Simo and Hughes (1998).
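The stress measures of Sect. 3.2 and the St.-Venant–Kirchhoff model fit together in a few lines of code. The sketch below assumes NumPy, arbitrary illustrative material parameters and an arbitrary deformation gradient; it computes \({\varvec{S}}\) from the quadratic strain energy, converts it into \({\varvec{P}} = {\varvec{F}} \cdot {\varvec{S}}\) and \({\varvec{\sigma }} = J^{-1} {\varvec{P}} \cdot {\varvec{F}}^{\mathsf {T}}\), and checks \({\varvec{S}} = \partial \Psi / \partial {\varvec{E}}\) by central differences. The variable `E_young` is used for Young’s modulus to avoid a clash with the strain tensor `E`; all function names are hypothetical.

```python
import numpy as np


def lame(E_young, nu):
    """Lame parameters (lambda, mu) from Young's modulus and Poisson's ratio."""
    lam = E_young * nu / ((1.0 + nu) * (1.0 - 2.0 * nu))
    mu = E_young / (2.0 * (1.0 + nu))
    return lam, mu


def svk_energy(E, lam, mu):
    """St.-Venant-Kirchhoff strain energy Psi = lam/2 (tr E)^2 + mu tr(E^2)."""
    return 0.5 * lam * np.trace(E) ** 2 + mu * np.trace(E @ E)


def svk_stress(E, lam, mu):
    """Second Piola-Kirchhoff stress S = dPsi/dE = lam tr(E) I + 2 mu E."""
    return lam * np.trace(E) * np.eye(3) + 2.0 * mu * E


lam, mu = lame(E_young=1000.0, nu=0.3)  # illustrative material parameters
F = np.array([[1.2, 0.1, 0.0],
              [0.3, 1.0, 0.0],
              [0.0, 0.0, 0.9]])         # illustrative deformation gradient
J = np.linalg.det(F)
E = 0.5 * (F.T @ F - np.eye(3))         # Green-Lagrange strain
S = svk_stress(E, lam, mu)              # second Piola-Kirchhoff stress
P = F @ S                               # first Piola-Kirchhoff stress (S = F^-1 P)
sigma = P @ F.T / J                     # Cauchy stress (P = J sigma F^-T)

# Central-difference check of S = dPsi/dE using symmetric perturbations of E
h = 1e-6
S_fd = np.zeros((3, 3))
for i in range(3):
    for j in range(3):
        dE = np.zeros((3, 3))
        dE[i, j] += 0.5 * h
        dE[j, i] += 0.5 * h
        S_fd[i, j] = (svk_energy(E + dE, lam, mu)
                      - svk_energy(E - dE, lam, mu)) / (2.0 * h)
```

The perturbation is applied symmetrically because \({\varvec{E}}\) is symmetric; since \(\Psi \) is quadratic, the central difference reproduces \({\varvec{S}}\) up to round-off, and doubling \({\varvec{E}}\) doubles \({\varvec{S}}\), which is the linearity noted above.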

3.3 Initial Boundary Value Problem

In the following, the initial boundary value problem (IBVP) is presented in the reference configuration; the spatial description is derived analogously. For the definition of suitable boundary conditions, \(\partial \Omega _0\) is decomposed into two complementary sets in the absence of contact: \(\Gamma _{\sigma }\) represents the Neumann boundary, where the tractions \(\hat{\varvec{t}}_0\) are given, and \(\Gamma _{{\mathsf {u}}}\) denotes the Dirichlet boundary, where displacements \(\hat{\varvec{u}}\) are prescribed. Neumann and Dirichlet boundaries are disjoint sets, i.e.

\[ \Gamma _{\sigma } \cup \Gamma _{{\mathsf {u}}} = \partial \Omega _0, \qquad \Gamma _{\sigma } \cap \Gamma _{{\mathsf {u}}} = \emptyset . \]

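The initial boundary value problem in material description can be summarized as follows:

\[ \mathrm {Div}\, ({\varvec{F}} \cdot {\varvec{S}}) + \hat{\varvec{b}}_0 = \rho _0 \, \ddot{\varvec{u}} \quad \text {in } \Omega _0 \times [0,T], \tag{18} \]
\[ \varvec{u} = \hat{\varvec{u}} \quad \text {on } \Gamma _{{\mathsf {u}}} \times [0,T], \tag{19} \]
\[ ({\varvec{F}} \cdot {\varvec{S}}) \cdot \varvec{N} = \hat{\varvec{t}}_0 \quad \text {on } \Gamma _{\sigma } \times [0,T]. \tag{20} \]

Herein, \(\rho _0\) denotes the mass density and \(\hat{\varvec{b}}_0\) the body force, both per unit volume of the reference configuration, \(\varvec{N}\) is the outward unit normal on \(\Gamma _{\sigma }\), and T denotes the end of the considered time interval. Due to the time dependency within the balance of linear momentum in (18), which contains second derivatives with respect to time t, suitable initial conditions for the displacements \(\hat{\varvec{u}}_0(\varvec{X})\) and velocities \(\hat{\dot{\varvec{u}}}_0(\varvec{X})\) at time \(t=0\) are needed, viz.

\[ \varvec{u}(\varvec{X},0) = \hat{\varvec{u}}_0(\varvec{X}) \quad \text {in } \Omega _0, \tag{21} \]
\[ \dot{\varvec{u}}(\varvec{X},0) = \hat{\dot{\varvec{u}}}_0(\varvec{X}) \quad \text {in } \Omega _0. \tag{22} \]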

The definition of a material model, such as the one given in (15), completes the initial boundary value problem of finite deformation solid mechanics. The IBVP is also commonly referred to as the strong formulation of nonlinear solid mechanics, as Eqs. (18)–(22) are enforced at each individual point of the domain \(\Omega _0\) and its boundary.

3.4 Contact Kinematics

From the viewpoint of mathematical problem formulation, contact and impact procedures can be classified into several different categories. A problem setup consisting of a single deformable body and a rigid obstacle is commonly referred to as Signorini contact, while the typical general problem formulation rests upon the assumption of two deformable bodies undergoing contact interaction. Moreover, self-contact and contact involving multiple bodies represent well-known special cases. While it is usually advantageous or even essential to design specific numerical algorithms for these special cases, all mathematical basics concerning contact kinematics and contact constraints can nevertheless be fully derived for the case of two deformable bodies.

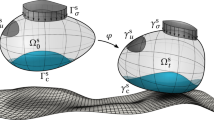

Hence, deformable-deformable contact of two bodies undergoing finite deformations, as illustrated in Fig. 2, serves as the prototype problem considered exclusively here. Let the open sets \(\Omega _0^{(1)}\), \(\Omega _0^{(2)} \subset \mathbb {R}^3\) and \(\Omega _t^{(1)}\), \(\Omega _t^{(2)} \subset \mathbb {R}^3\) represent two bodies in the reference and current configuration, respectively. As the two bodies approach each other and may come into contact on parts of their boundaries, the surfaces \(\partial \Omega _0^{(i)}\), \(i=1,2\), are now divided into three disjoint subsets, viz.

\[ \partial \Omega _0^{(i)} = \Gamma _{{\mathsf {u}}}^{(i)} \cup \Gamma _{\sigma }^{(i)} \cup \Gamma _{{\mathsf {c}}}^{(i)}, \]

where \(\Gamma _{{\mathsf {u}}}^{(i)}\) and \(\Gamma _{\sigma }^{(i)}\) are the well-known Dirichlet and Neumann boundaries, and \( \Gamma _{{\mathsf {c}}}^{(i)}\) represents the potential contact surface. The counterparts in the current configuration are denoted as \(\gamma _{{\mathsf {u}}}^{(i)}\), \(\gamma _{\sigma }^{(i)}\) and \(\gamma _{{\mathsf {c}}}^{(i)}\). It is characteristic of contact problems that the actual, so-called active contact surface \(\Gamma _{{\mathsf {a}}}^{(i)} \subseteq \Gamma _{{\mathsf {c}}}^{(i)}\) is unknown, possibly continuously changing over time and thus has to be determined as part of the nonlinear solution process. For the sake of completeness, and to be mathematically precise, the currently inactive contact surface \(\Gamma _{{\mathsf {i}}}^{(i)} = \Gamma _{{\mathsf {c}}}^{(i)} \setminus \Gamma _{{\mathsf {a}}}^{(i)}\) should technically be interpreted as part of the Neumann boundary \(\Gamma _{\sigma }^{(i)}\).

A classical nomenclature in contact mechanics is retained throughout this chapter by referring to \(\Gamma _{{\mathsf {c}}}^{(1)}\) as the slave surface and to \(\Gamma _{{\mathsf {c}}}^{(2)}\) as the master surface, although the master-slave concept actually only makes sense in the context of finite element discretization and although its traditional meaning will not be entirely conveyed to the mortar FE approach presented later on.

Both bodies are required to satisfy the initial boundary value problem previously presented in Sect. 3.3, with the motion and deformation being described by the absolute displacement vectors \(\varvec{u}^{(i)} = \varvec{x}^{(i)} - \varvec{X}^{(i)}\). Moreover, a new fundamental geometric measure for proximity, potential contact and penetration of the two bodies is introduced with the so-called gap function \(\varvec{g}_{\mathsf {n}}(\varvec{X},t)\) in the current configuration. Note that the gap function and other contact-related quantities need to be evaluated in a spatial description, even though the IBVP may still be formulated with respect to the reference configuration. The gap function is defined as

\[ \varvec{g}_{\mathsf {n}}(\varvec{X},t) = - \varvec{n}_{\mathsf {c}} \cdot \left( \varvec{x}^{(1)} - \hat{\varvec{x}}^{(2)} \right), \]

where some alternatives exist for the identification of the contact point \(\hat{\varvec{x}}^{(2)}\) on the master surface associated with each point \(\varvec{x}^{(1)}\) on the slave surface, and also for the corresponding contact normal vector \(\varvec{n}_{\mathsf {c}}\). The classical and perhaps most intuitive choice in contact mechanics is based on the so-called closest point projection (CPP), which determines \(\hat{\varvec{x}}^{(2)}\) as

\[ \hat{\varvec{x}}^{(2)} = \underset{\varvec{x}^{(2)} \in \gamma _{{\mathsf {c}}}^{(2)}}{\arg \min } \left\Vert \varvec{x}^{(1)} - \varvec{x}^{(2)} \right\Vert . \]

In this classical approach, \(\varvec{n}_{\mathsf {c}}\) is chosen to be the outward unit normal to the current master surface \(\gamma _{\mathsf {c}}^{(2)}\) at \(\hat{\varvec{x}}^{(2)}\). A very comprehensive overview of the closest point projection, its mathematical properties and possible pitfalls due to non-uniqueness and certain pathological cases can be found in Konyukhov and Schweizerhof (2008). However, a slightly different approach is followed here, with the outward unit normal to the current slave surface \(\gamma _{\mathsf {c}}^{(1)}\) being taken as the contact normal \(\varvec{n}_{\mathsf {c}}\). Hence, the master-side contact point \(\hat{\varvec{x}}^{(2)}\) is the result of a smooth interface mapping \(\chi : \gamma _{{\mathsf {c}}}^{(1)} \rightarrow \gamma _{{\mathsf {c}}}^{(2)}\) of \(\varvec{x}^{(1)}\) onto the master surface \(\gamma _{{\mathsf {c}}}^{(2)}\) along \(\varvec{n}_{\mathsf {c}}\), see Fig. 2. Especially in the context of mortar finite element discretization, this choice has some practical advantages over the classical closest point projection commonly used for node-to-segment discretization.
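The difference between the two projection choices can be illustrated in a simplified setting. The following sketch assumes a 2D configuration with a single straight master segment (a linear element), a prescribed slave normal, and the convention that a positive gap means separation; the function names `normal_projection` and `closest_point_projection` are hypothetical. The normal projection solves \(\varvec{x}^{(1)} + g\, \varvec{n}_{\mathsf {c}} = \varvec{a} + s\,(\varvec{b} - \varvec{a})\) for the gap g and the segment parameter s, whereas the closest point projection minimizes the distance and, in this simple form, returns an unsigned distance.

```python
import numpy as np


def normal_projection(x1, n_c, a, b):
    """Project slave point x1 along the slave normal n_c onto segment [a, b].

    Solves x1 + g * n_c = a + s * (b - a) for the gap g and the segment
    parameter s; s outside [0, 1] means the ray misses this segment.
    """
    M = np.column_stack((n_c, -(b - a)))
    g, s = np.linalg.solve(M, a - x1)
    return g, s, a + s * (b - a)


def closest_point_projection(x1, a, b):
    """Closest point on segment [a, b] to x1 (parameter clipped to [0, 1])."""
    d = b - a
    s = np.clip(np.dot(x1 - a, d) / np.dot(d, d), 0.0, 1.0)
    x_hat = a + s * d
    return np.linalg.norm(x1 - x_hat), s, x_hat


# Slightly inclined master segment below a slave point with downward normal
a, b = np.array([0.0, 0.0]), np.array([2.0, 0.2])
x1 = np.array([1.0, 0.5])
n_c = np.array([0.0, -1.0])  # outward unit normal of the slave body

g_np, s_np, x_np = normal_projection(x1, n_c, a, b)
d_cpp, s_cpp, x_cpp = closest_point_projection(x1, a, b)
```

For non-parallel surfaces the two approaches select different master points: here the normal projection gives a gap of 0.4 at \(s = 0.5\), whereas the closest point lies slightly further along the segment at a marginally smaller distance.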

Together with two vectors \(\varvec{\tau }_{\mathsf {c}}^{\xi }\) and \(\varvec{\tau }_{\mathsf {c}}^{\eta }\) taken from the tangential plane, \(\varvec{n}_{\mathsf {c}}\) forms a set of orthonormal basis vectors at the slave surface point \(\varvec{x}^{(1)}\). As these basis vectors are attached to \(\varvec{x}^{(1)}\) and move accordingly, they are commonly referred to as slip advected basis vectors. In this context, it is worth noting that the contact surface \(\gamma _{\mathsf {c}}^{(1)}\) is a two-dimensional manifold, which means that the tangential plane at each point \(\varvec{x}^{(1)}\) locally defines an \(\mathbb {R}^2\) space embedded into the global \(\mathbb {R}^3\). Therefore, any quantity on \(\gamma _{\mathsf {c}}^{(1)}\) is readily parametrized with the two local coordinates \(\xi (\varvec{X}^{(1)},t)\) and \(\eta (\varvec{X}^{(1)},t)\). While the gap function characterizes contact interaction in normal direction, the primary kinematic variable for frictional sliding in tangential direction is given by the relative tangential velocity

Note that this expression for \(\varvec{v}_{\tau ,{\mathsf {rel}}}\) is only exact in the case of perfect sliding and persistent contact, i.e. assuming \(\varvec{g}_{\mathsf {n}} = \dot{\varvec{g}}_{\mathsf {n}}=0\). Nevertheless, it is typically employed for quantifying the relative tangential movement of contacting bodies in all cases, even if these prerequisites are not met exactly. To clarify the notation in (26), it is pointed out that \(\dot{\hat{\varvec{x}}}^{(2)}\) represents the current velocity of the material point \(\widehat{\varvec{X}}^{(2)}\), viz. the material contact point associated with \(\varvec{X}^{(1)}\) at time t. Therefore, it does not include a change of the material contact point \(\widehat{\varvec{X}}^{(2)}\) itself, or in other words, it does not include a change of the master-side projection point of slave point \(\varvec{x}^{(1)}\). Based on the tangential plane defined above, \(\varvec{v}_{\tau ,{\mathsf {rel}}}\) can be decomposed into

\[ \varvec{v}_{\tau ,{\mathsf {rel}}} = v_{\tau }^{\xi } \, \varvec{\tau }_{\mathsf {c}}^{\xi } + v_{\tau }^{\eta } \, \varvec{\tau }_{\mathsf {c}}^{\eta }, \qquad v_{\tau }^{\xi } = \varvec{v}_{\tau ,{\mathsf {rel}}} \cdot \varvec{\tau }_{\mathsf {c}}^{\xi }, \quad v_{\tau }^{\eta } = \varvec{v}_{\tau ,{\mathsf {rel}}} \cdot \varvec{\tau }_{\mathsf {c}}^{\eta }. \]

As already mentioned, the definition of the relative tangential velocity given above is only frame-indifferent when perfect sliding occurs (\(\varvec{g}_{\mathsf {n}}=0\)), see e.g. Laursen (2002). However, since an objective measure of the slip rate is essential for formulating frictional contact conditions in finite deformation formulations, an appropriate algorithmic modification of the slip rate is typically carried out later in the course of finite element discretization.

Similar to the kinematic measures \(\varvec{g}_{\mathsf {n}}\) and \(\varvec{v}_{\tau ,{\mathsf {rel}}}\), the contact traction \(\varvec{t}_{\mathsf {c}}^{(1)}\) on the slave surface \(\gamma _{\mathsf {c}}^{(1)}\) can be split into normal and tangential components, yielding

Moreover, due to the balance of linear momentum on the contact interface, the traction vectors on slave side \(\gamma _{\mathsf {c}}^{(1)}\) and master side \(\gamma _{\mathsf {c}}^{(2)}\) are identical except for opposite signs, i.e.

For further details on these topics, the interested reader is referred to classical textbooks on contact mechanics, e.g. Johnson (1985) and Kikuchi and Oden (1988), or to more recent monographs on computational methods for contact mechanics, e.g. Laursen (2002) and Wriggers (2006).
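As a small illustration of the normal/tangential splits used above, the following Python sketch projects a vector (for instance a slave traction or a relative velocity) onto a unit normal \(\varvec{n}_{\mathsf {c}}\) and onto the tangential plane, i.e. it evaluates \(\varvec{v}\cdot \varvec{n}_{\mathsf {c}}\) and \((\varvec{I}-\varvec{n}_{\mathsf {c}}\otimes \varvec{n}_{\mathsf {c}})\varvec{v}\), and then expresses the tangential part in two orthonormal tangent vectors. The function names and numbers are hypothetical, and the normal is simply assumed to be given (in practice it follows from the discretized slave surface).

```python
import math


def dot(a, b):
    """Euclidean inner product of two vectors given as sequences."""
    return sum(x * y for x, y in zip(a, b))


def split_normal_tangential(v, n, tol=1e-12):
    """Split v into its scalar normal component (v . n) and its tangential
    part (I - n (x) n) v; n is assumed to be a unit normal vector."""
    if abs(math.sqrt(dot(n, n)) - 1.0) > tol:
        raise ValueError("n must be a unit vector")
    v_n = dot(v, n)                                   # normal component
    v_tau = [vi - v_n * ni for vi, ni in zip(v, n)]   # projection onto tangent plane
    return v_n, v_tau


def tangential_components(v_tau, tau_xi, tau_eta):
    """Components of a tangential vector along two orthonormal tangent vectors."""
    return dot(v_tau, tau_xi), dot(v_tau, tau_eta)


# usage: traction on a surface with normal e_z (hypothetical values)
p_n, t_tau = split_normal_tangential([0.3, -0.1, -2.0], [0.0, 0.0, 1.0])
print(p_n, t_tau)
```

The tangential part is orthogonal to the normal by construction, so the two tangent components carry all remaining information about the vector.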

3.5 Tied Contact Constraints

While the main focus of this chapter is on unilateral contact problems, it is natural to also include mesh tying (tied contact) problems for connecting dissimilar meshes, given their numerous conceptual similarities. Mesh tying applications are also closely connected to the notion of domain decomposition. Thus, in Sect. 5, mesh tying serves as a simplified model problem through which many methodological and, later, also implementation aspects of computational contact mechanics can be clearly illustrated.

As will be seen in the following, mesh tying (or tied contact) fits perfectly into the framework of contact kinematics defined above and can, from now on, simply be interpreted as a special case. The fundamental kinematic measure for mesh tying is the relative displacement between the two bodies, sometimes also referred to as the gap vector \(\varvec{g}(\varvec{X},t)\), viz.

Since it is typically assumed that the two bodies to be tied together share a common interface \(\Gamma _{\mathsf {c}}^{(1)} \equiv \Gamma _{\mathsf {c}}^{(2)} \equiv \Gamma _{\mathsf {c}}\) in the reference configuration, the gap vector is equivalently expressed as

thus demonstrating the similarity with the scalar gap function \(\varvec{g}_{\mathsf {n}}(\varvec{X},t)\) for unilateral contact defined in (24) even more clearly. As compared with unilateral contact, mesh tying firstly requires no distinction between normal and tangential directions at the interface, and secondly results in a simple vector-valued equality constraint:

3.6 Normal Contact Constraints

After the short interlude on mesh tying, the focus is now again set on unilateral contact conditions. Examining the gap function defined in (24) in more detail, it becomes obvious that a positive value \(\varvec{g}_{\mathsf {n}}(\varvec{X},t)>0\) characterizes points currently not in contact, while a negative value \(\varvec{g}_{\mathsf {n}}(\varvec{X},t)<0\) denotes the (physically non-admissible) state of penetration. Therefore, the classical set of Karush–Kuhn–Tucker (KKT) conditions for frictionless contact on the contact boundary, commonly also referred to as Hertz–Signorini–Moreau (HSM) conditions, can be stated as

As can be seen from Fig. 3, the KKT conditions not only define a non-smooth and nonlinear contact law, but one that is multi-valued at \(\varvec{g}_{\mathsf {n}}(\varvec{X},t)=0\). However, this set of inequality conditions also allows for a very intuitive physical interpretation. Due to the sign convention of the gap function introduced here, the first KKT condition simply represents the geometric constraint of non-penetration, whereas the second KKT condition implies that no adhesive stresses are allowed in the contact zone. Finally, the third KKT condition, well known as the complementarity condition, forces the gap to be closed when non-zero contact pressure occurs (contact) and the contact pressure to be zero when the gap is open (no contact). Note that KKT conditions of the type defined in (33) also arise in many other problem classes of constrained optimization, and thus standard solution techniques from optimization theory (e.g. based on Lagrange multiplier methods and active set strategies) can readily be adapted for contact mechanics.
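The KKT conditions can be checked pointwise, and they can also be recast as a single equation by means of a nonlinear complementarity function, which is one common route to the active set strategies mentioned above. The sketch below assumes that compressive contact pressure is negative, so that \(\lambda _{\mathsf {n}} := -p_{\mathsf {n}} \ge 0\) is its magnitude; this sign convention, the constant \(c>0\) and all function names are assumptions of the example rather than a transcription of (33).

```python
def kkt_satisfied(g_n, lam_n, tol=1e-12):
    """Check g_n >= 0, lam_n >= 0 and lam_n * g_n = 0 (within tol), where
    lam_n = -p_n is the compressive pressure magnitude (assumed convention)."""
    return g_n >= -tol and lam_n >= -tol and abs(lam_n * g_n) <= tol


def ncp_residual(g_n, lam_n, c=1.0):
    """Complementarity function C = lam_n - max(0, lam_n - c * g_n), c > 0.
    C == 0 holds exactly when all three KKT conditions hold."""
    return lam_n - max(0.0, lam_n - c * g_n)


# usage on a few hypothetical (gap, pressure magnitude) states
states = {
    "closed, pressed": (0.0, 2.0),
    "open, unloaded": (0.5, 0.0),
    "open but loaded": (0.5, 1.0),   # violates complementarity
    "penetrating": (-0.1, 0.0),      # violates non-penetration
}
for name, (g, lam) in states.items():
    print(f"{name:16s} KKT={kkt_satisfied(g, lam)}  C={ncp_residual(g, lam):+.2f}")
```

The equivalence holds for any \(c>0\): if \(\lambda _{\mathsf {n}} - c\,g_{\mathsf {n}} \le 0\), then \(C=0\) forces \(\lambda _{\mathsf {n}}=0\) and \(g_{\mathsf {n}}\ge 0\); otherwise it forces \(g_{\mathsf {n}}=0\) with \(\lambda _{\mathsf {n}}>0\).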

For the sake of completeness, the so-called persistency condition is also mentioned here. In the context of contact dynamics, the persistency condition is sometimes considered as an additional contact condition, requiring that

Herein, \(\dot{\varvec{g}}_{\mathsf {n}}(\varvec{X},t)\) represents the material time derivative of the gap function. Therefore, the persistency condition in combination with the KKT conditions in (33) demands that the contact pressure is only non-zero when the bodies are in contact and also remain so (persistent contact). Conversely, the contact pressure is zero at the instant the bodies come into contact and at the instant of separation. The persistency condition plays an important role in the design of energy conserving numerical algorithms for contact dynamics, see e.g. Laursen and Chawla (1997), Laursen and Love (2002), and bears a certain resemblance to the consistency condition in plasticity, see e.g. Simo and Hughes (1998).

3.7 Frictional Contact Constraints

While frictionless response (i.e. \(\varvec{t}_{\tau }=\varvec{0}\)) is a common modeling assumption, and especially helpful for a thorough development of computational methods for contact mechanics, the real contact behavior of many technical systems is determined by the frictional response to tangential loading. The associated scientific field of tribology is extremely broad, also encompassing physical phenomena such as adhesion, wear or elastohydrodynamic lubrication. The following overview is restricted to a purely macroscopic observation of dry friction, classically described by Coulomb’s law. One possible and widely used formulation of Coulomb friction is given by

Herein, \(\Vert \ \cdot \, \Vert \) denotes the Euclidean norm in \(\mathbb {R}^3\), \(\mathfrak {F} \ge 0\) is the friction coefficient and \(\beta \ge 0\) is a scalar parameter. Coulomb’s law as described in (35) also admits an intuitive physical interpretation. The first (inequality) condition, commonly referred to as slip condition, requires that the magnitude of the tangential stress \(\varvec{t}_{\tau }\) does not exceed a threshold defined by the coefficient of friction \(\mathfrak {F}\) and the normal contact pressure \(p_{\mathsf {n}}\). The frictional response is then characterized by two physically distinct situations. The stick state, defined by \(\beta = 0\), does not allow for any relative tangential movement in the contact zone, i.e. \(\varvec{v}_{\tau ,{\mathsf {rel}}}=\varvec{0}\). In contrast, the slip state, defined by \(\beta > 0\), implies relative tangential sliding of the two bodies in accordance with the so-called slip rule given as the second equation in (35). The last equation in (35) is again a complementarity condition, here separating the two mutually exclusive solution branches of stick and slip. Coulomb’s law is commonly noted to resemble the simplest formulations of elastoplasticity, see e.g. Simo and Hughes (1998). This similarity is especially useful when developing numerical algorithms for friction, which usually reuse well-known methodologies from computational inelasticity.
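The analogy with elastoplasticity can be made concrete with a radial return: a trial tangential traction is accepted if it satisfies the slip condition (stick), and otherwise it is scaled back onto the Coulomb cone (slip), keeping its direction and ending up with \(\Vert \varvec{t}_{\tau }\Vert = \mathfrak {F}|p_{\mathsf {n}}|\). This is a minimal sketch, not the algorithm of this chapter; how the trial traction is obtained (for instance from a penalty-regularized stick predictor) is an assumption left outside the code, and the parameter `mu` plays the role of \(\mathfrak {F}\).

```python
import math


def coulomb_return_map(t_trial, p_n, mu):
    """Project a trial tangential traction onto the Coulomb cone
    ||t_tau|| <= mu * |p_n| (radial return, as in simple plasticity)."""
    bound = mu * abs(p_n)                       # slip threshold mu * |p_n|
    norm_trial = math.sqrt(sum(c * c for c in t_trial))
    phi_trial = norm_trial - bound              # slip function at the trial state
    if phi_trial <= 0.0:
        return list(t_trial), "stick"           # admissible: keep trial traction
    scale = bound / norm_trial                  # scale back onto the cone
    return [scale * c for c in t_trial], "slip"


# usage with hypothetical values (mu = 0.5, p_n = -2.0)
t_tau, state = coulomb_return_map([3.0, 4.0, 0.0], p_n=-2.0, mu=0.5)
print(state, t_tau)
```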

Finally, it is pointed out that frictional response in contact is a path-dependent process, thus introducing mechanical dissipation and making a system representation based on elastic potentials infeasible. Path-dependency can easily be observed in the fact that the tangential contact traction \(\varvec{t}_{\tau }\) depends on the velocity \(\varvec{v}_{\tau ,{\mathsf {rel}}}\) or on the rate of change of the tangential displacement if interpreted incrementally.

4 Overview of Nonlinear FEM

This section provides a brief introduction to the numerical treatment of nonlinear solid mechanics problems with finite element methods. Based on a weak formulation of the previously derived IBVP, the FEM for space discretization as well as typical implicit time stepping schemes for time discretization are presented. Large parts of this section are based on the author’s previously published work (Popp 2012).

4.1 From Strong Formulation to Weak Formulation

Many numerical methods for the solution of partial differential equations, and finite element methods in particular, require a transformation of the IBVP defined in (18)–(22) into a so-called weak or variational formulation. Although other variational principles exist, only the well-known principle of virtual work (PVW) is derived here, with the starting point being a weighted residual form of the balance equation (18) and the traction boundary condition (20), i.e.

Herein, the weighting or test functions \(\varvec{w}\) are initially arbitrary and can be interpreted as virtual displacements, i.e. \(\varvec{w} = \delta \varvec{u}\). Since the solution for the displacements is known on the Dirichlet boundary \(\Gamma _{\mathsf {u}}\), it is required that

Applying the Gauss divergence theorem and inserting (37) and (12) yields

Three distinct contributions to the PVW can be identified. The first term in (38) represents the kinetic virtual work contribution \(\delta \mathcal {W}_{{\mathsf {kin}}}\), the second term denotes the internal virtual work contribution \(\delta \mathcal {W}_{{\mathsf {int}}}\), and the third and fourth terms together form the virtual work of external loads \(\delta \mathcal {W}_{{\mathsf {ext}}}\). The PVW emerges as a very general principle of solid mechanics, as it does not require the existence of an associated potential \(\mathcal {W}\). For instance, no constitutive assumptions whatsoever enter the weak formulation in (38), which makes it equally applicable to problems such as elastoplasticity, frictional sliding or non-conservative loading.

It can easily be shown that solutions of the IBVP (i.e. of the strong formulation) also satisfy the weak formulation (38). As long as no restrictions are set on the choice of the weighting functions \(\delta \varvec{u}\), the two are formally equivalent, see e.g. Hughes (2000). However, due to the manipulations introduced above, the weak formulation imposes weaker differentiability requirements on the solution functions \(\varvec{u}\), because only first derivatives of \(\varvec{u}\) with respect to \(\varvec{X}\) appear in (38) instead of second derivatives as in (18). Thus, the following solution and weighting spaces can be defined:

Herein, \( H^1 (\Omega )\) denotes the Sobolev space of functions whose values and first derivatives are square integrable. While the solution space \(\varvec{\mathcal {U}}\) may in general depend on the time t due to a possible time dependency of the Dirichlet boundary conditions, the weighting space \(\varvec{\mathcal {V}}\) does not depend on the time t in any way. In conclusion, the weak formulation of the nonlinear solid mechanics problem at hand can be restated as follows: Find \(\varvec{u} \in \varvec{\mathcal {U}}\) such that

4.2 Space Discretization

Space discretization is exclusively considered in the context of finite element methods here. However, as a detailed introduction to all important aspects of the FEM is beyond the scope of this chapter, only the basic ideas and notation will be highlighted. For a more elaborate survey of finite element methods, the reader is again referred to the corresponding literature, e.g. in Bathe (1996), Hughes (2000), Belytschko et al. (2000), Reddy (2004), Zienkiewicz and Taylor (2005) and Zienkiewicz et al. (2005).

Simply put, finite element discretization in this context is based on finding a numerical solution to (41) at discrete points, commonly referred to as nodes. The nodes are connected to form elements, which yields the following approximate partitioning of the domain \(\Omega _0\) into \({\mathsf {nele}}\) element subdomains:

The displacement solution \(\varvec{u}^{(e)}\) on element e is then typically approximated by local interpolation functions \(N_k(\varvec{X})\), yielding

where the discrete nodal values of the displacements \({\varvec{\mathsf {d}}}_k(t)\) have been introduced. Furthermore, the subscript \(\cdot _h\) signifies a spatially discretized quantity throughout this chapter and \({\mathsf {nnod}}^{(e)}\) represents the number of nodes associated with the element e. The interpolation functions \(N_k(\varvec{X})\), commonly referred to as shape functions, are typically (but not exclusively) low-order polynomials, e.g. Lagrange polynomials, thus meeting the differentiability requirements of the weak form. Based on the so-called isoparametric concept, the element geometry in the reference configuration \(\varvec{X}^{(e)}\) and current configuration \(\varvec{x}^{(e)}\) is approximated using the same shape functions. Typically, \(\Omega _0^{(e)}\) is mapped to a reference element geometry or parameter space \(\varvec{\xi } = (\xi ,\eta ,\zeta )\), e.g. the cube \([-1,1]\times [-1,1]\times [-1,1]\), which defines an element Jacobian matrix \(\varvec{J}^{(e)} = \partial \varvec{X}^{(e)} / \partial \varvec{\xi }\). Thus, the interpolation of displacements, current geometry and reference geometry at the element level is alternatively expressed as

with nodal positions \({\varvec{\mathsf {X}}}_k\) and \({\varvec{\mathsf {x}}}_k(t)\) in the reference and current configuration, respectively. Finally, time derivatives of the displacements, e.g. the accelerations \(\ddot{\varvec{u}}\), and the weighting functions \(\delta \varvec{u}\) are also interpolated using the same shape functions. The latter convention is commonly referred to as Bubnov–Galerkin approach, as compared with a Petrov–Galerkin approach, where an independent set of shape functions is chosen for interpolating the weighting functions.
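To make the isoparametric concept concrete, the sketch below uses the 2D analogue of the hex8 element, the bilinear quad4 element on the reference square \([-1,1]\times [-1,1]\): the same shape functions interpolate nodal coordinates (and, equally, nodal displacements or virtual displacements), and their parametric derivatives give the element Jacobian. The counter-clockwise node ordering and all function names are assumptions of this example.

```python
def quad4_shape(xi, eta):
    """Bilinear shape functions N_k(xi, eta) on [-1,1]^2, nodes ordered
    counter-clockwise starting at (-1,-1) (assumed ordering)."""
    return [0.25 * (1 - xi) * (1 - eta),
            0.25 * (1 + xi) * (1 - eta),
            0.25 * (1 + xi) * (1 + eta),
            0.25 * (1 - xi) * (1 + eta)]


def quad4_shape_derivs(xi, eta):
    """Parametric derivatives dN_k/dxi and dN_k/deta."""
    dN_dxi = [-0.25 * (1 - eta), 0.25 * (1 - eta), 0.25 * (1 + eta), -0.25 * (1 + eta)]
    dN_deta = [-0.25 * (1 - xi), -0.25 * (1 + xi), 0.25 * (1 + xi), 0.25 * (1 - xi)]
    return dN_dxi, dN_deta


def interpolate(N, nodal_values):
    """Isoparametric interpolation sum_k N_k * value_k, componentwise."""
    dim = len(nodal_values[0])
    return [sum(Nk * v[i] for Nk, v in zip(N, nodal_values)) for i in range(dim)]


def jacobian(xi, eta, X_nodes):
    """Element Jacobian J[i][j] = dX_i / dxi_j as a 2x2 nested list."""
    dN_dxi, dN_deta = quad4_shape_derivs(xi, eta)
    J = [[0.0, 0.0], [0.0, 0.0]]
    for k, (X, Y) in enumerate(X_nodes):
        J[0][0] += dN_dxi[k] * X
        J[0][1] += dN_deta[k] * X
        J[1][0] += dN_dxi[k] * Y
        J[1][1] += dN_deta[k] * Y
    return J


# usage: a 2 x 1 rectangle in the reference configuration (hypothetical)
X_nodes = [(0.0, 0.0), (2.0, 0.0), (2.0, 1.0), (0.0, 1.0)]
center = interpolate(quad4_shape(0.0, 0.0), X_nodes)
J = jacobian(0.3, -0.7, X_nodes)
detJ = J[0][0] * J[1][1] - J[0][1] * J[1][0]
print(center, detJ)
```

For this undistorted rectangle the Jacobian is constant, and its determinant equals the element area divided by the area of the reference square.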

Examining (44) more closely, it becomes obvious that the finite element method essentially restricts the solution and weighting spaces defined in (39) and (40). In the discrete setting, these spaces only contain a finite number of solution and weighting functions, respectively, which is expressed mathematically in terms of finite dimensional subspaces \(\varvec{\mathcal {U}}_h \subset \varvec{\mathcal {U}}\) and \(\varvec{\mathcal {V}}_h \subset \varvec{\mathcal {V}}\). The limited selection of solution and weighting functions then serves as a basis for the numerical solution, i.e. the weak formulation is recast into a discrete form, which is no longer equivalent to the strong and weak formulations, but rather represents an approximation.

The individual contributions to the discretized weak form are integrated element-by-element using Gauss quadrature and then sorted into global vectors based on the so-called assembly operator, which governs the arrangement of local vectorial quantities into global vectors. After inserting the interpolations given by (44) into the weak formulation (38), the final spatially discretized formulation emerges as

with the global mass matrix \({\varvec{\mathsf {M}}}\), the global vector of nonlinear internal forces \({\varvec{\mathsf {f}}}_{{\mathsf {int}}}\) and the global vector of external forces \({\varvec{\mathsf {f}}}_{{\mathsf {ext}}}\). Moreover, \(\delta {\varvec{\mathsf {d}}}\), \(\ddot{{\varvec{\mathsf {d}}}}\) and \({\varvec{\mathsf {d}}}\) are global vectors comprising all discrete nodal values of virtual displacements, accelerations and displacements. Due to the interpolation introduced above, all vectors in (47) are of the size \({\mathsf {ndof}} = {\mathsf {ndim}} \cdot {\mathsf {nnod}}\), where \({\mathsf {nnod}}\) is the total number of nodes in the entire domain and \({\mathsf {ndim}}\) is the number of spatial dimensions. The variable name \({\mathsf {ndof}}\) refers to the fact that the discrete values of the nodal displacements \({\varvec{\mathsf {d}}}\) are also denoted as degrees of freedom. Since (47) must hold for arbitrary virtual displacements \(\delta {\varvec{\mathsf {d}}}\), it can equivalently be written as

This defines a system of \({\mathsf {ndof}}\) ordinary differential equations (ODEs), commonly referred to as semi-discrete equations of motion. So far, only space discretization with the finite element method has been established, but the system is still continuous with respect to time.
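The element-by-element Gauss quadrature and the assembly operator can be illustrated on a deliberately simple, hypothetical case: a 1D linear elastic bar discretized with 2-node elements, so that \({\mathsf {ndim}}=1\) and \({\mathsf {ndof}}={\mathsf {nnod}}\). For the nonlinear problem the element integrand would depend on the current displacements and on the Gauss point, but the local-to-global mapping performed by the assembly loop is the same.

```python
import math

# 2-point Gauss-Legendre rule on the reference interval [-1, 1]
GAUSS_PTS = [-1.0 / math.sqrt(3.0), 1.0 / math.sqrt(3.0)]
GAUSS_WTS = [1.0, 1.0]


def bar_element_stiffness(x_a, x_b, EA):
    """Stiffness of a 2-node linear bar element, integrated with Gauss quadrature."""
    L = x_b - x_a
    detJ = L / 2.0                     # dx/dxi of the linear mapping
    dN_dx = [-1.0 / L, 1.0 / L]        # constant for linear shape functions
    ke = [[0.0, 0.0], [0.0, 0.0]]
    for _xi, w in zip(GAUSS_PTS, GAUSS_WTS):
        # integrand is constant here; in general it is evaluated at _xi
        for a in range(2):
            for b in range(2):
                ke[a][b] += dN_dx[a] * EA * dN_dx[b] * detJ * w
    return ke


def assemble(node_coords, connectivity, EA):
    """Assembly operator: scatter element matrices into the global matrix."""
    ndim = 1
    ndof = ndim * len(node_coords)     # ndof = ndim * nnod
    K = [[0.0] * ndof for _ in range(ndof)]
    for conn in connectivity:          # loop over elements
        ke = bar_element_stiffness(node_coords[conn[0]], node_coords[conn[1]], EA)
        for a, A in enumerate(conn):   # local -> global dof map
            for b, B in enumerate(conn):
                K[A][B] += ke[a][b]
    return K


# usage: three nodes, two elements (hypothetical data)
K = assemble([0.0, 1.0, 2.0], [(0, 1), (1, 2)], EA=2.0)
print(K)
```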

4.3 Time Discretization

There exists a large variety of finite difference methods suitable for time discretization of the semi-discrete equations of motion (48). In doing so, time derivatives are approximated by their discrete counterparts, the difference quotients. Based on the introduction of a constant time step size \(\Delta t\), the time interval of interest \(t \in [0,T]\) is subdivided into several intervals \([t_n,t_{n+1}]\), where \(n \in \mathbb {N}_0\) is the time step index, and thus the spatially discretized displacement solution \({\varvec{\mathsf {d}}}(t)\) is computed at a series of discrete points in time.

In principle, time integration methods can be divided into implicit and explicit schemes. While implicit methods lead to a fully coupled system of \({\mathsf {ndof}}\) nonlinear discrete algebraic equations for the unknown displacements \({\varvec{\mathsf {d}}}_{n+1} := {\varvec{\mathsf {d}}}(t_{n+1})\), explicit methods allow for a direct extrapolation towards \({\varvec{\mathsf {d}}}_{n+1}\) without requiring a solution step. Here, only implicit schemes will be considered. They represent the method of choice for problems dominated by a low frequency response, while explicit methods are widely used in the context of high frequency responses and wave-like phenomena, e.g. in high velocity impact situations. Many implicit time integration methods, such as the generalized-\(\alpha \) method discussed below, can be shown to be unconditionally stable for linear problems, thus allowing for relatively large time step sizes as compared with explicit schemes, whose stability is restricted by a critical time step size. However, implicit methods are more challenging to implement, because nonlinear solution methods (see Sect. 4.4), including a linearization of the entire finite element formulation, are required.

Here, the presentation is restricted to one exemplary and widely used implicit time integration scheme, viz. the generalized-\(\alpha \) method introduced by Chung and Hulbert (1993). This one-step time integration scheme is based on the well-known Newmark method, which allows for expressing the approximate discrete velocities \({\varvec{\mathsf {v}}}_{n+1} \approx \dot{{\varvec{\mathsf {d}}}}(t_{n+1})\) and accelerations \({\varvec{\mathsf {a}}}_{n+1} \approx \ddot{{\varvec{\mathsf {d}}}}(t_{n+1})\) at the end of the considered time interval \([t_n,t_{n+1}]\) solely in terms of already known quantities at time \(t_n\) and the unknown displacements \({\varvec{\mathsf {d}}}_{n+1}\), i.e.

where \(\beta \in [0,1/2]\) and \(\gamma \in [0,1]\) are two parameters characterizing the behavior of the method. The generalized-\(\alpha \) method introduces generalized mid-points \(t_{n+1-\alpha _{\mathsf {m}}}\) and \(t_{n+1-\alpha _{\mathsf {f}}}\) and shifts the evaluation of the individual terms in (48) from \(t_{n+1}\) to these midpoints. The following linear interpolation rules are commonly established for the generalized-\(\alpha \) method:

Eventually, the fully (i.e. space and time) discretized finite element formulation of nonlinear solid mechanics, also referred to as discrete linear momentum balance, is obtained as

One important advantage of the generalized-\(\alpha \) method is that it allows for introducing controllable numerical dissipation into the considered system, while at the same time retaining the important properties of unconditional stability and second-order accuracy. Controllable numerical dissipation in this context means that the parameters \(\beta \), \(\gamma \), \(\alpha _{\mathsf {m}}\) and \(\alpha _{\mathsf {f}}\) can be tuned such that the desired damping acts only on the spurious high frequency modes, while damping in the low frequency range is kept to a minimum. In practice, this tuning is condensed into a single free parameter of the generalized-\(\alpha \) method, the spectral radius \(\rho _{\infty }\) in the high-frequency limit. The other parameters then follow directly from the requirements of unconditional stability, second-order accuracy and optimized numerical dissipation as

Note that no numerical dissipation is introduced into the system for the choice \(\rho _{\infty }=1\). Moreover, the generalized-\(\alpha \) method also contains the classical Newmark method as a special case by setting \(\alpha _{\mathsf {m}}=\alpha _{\mathsf {f}}=0\).
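The parameter relations of Chung and Hulbert (1993) and one step of the resulting scheme can be sketched for a linear single-degree-of-freedom oscillator \(m\ddot{d} + kd = f\), combining the Newmark relations with generalized midpoint rules of the form \((\cdot )_{n+1-\alpha } = (1-\alpha )(\cdot )_{n+1} + \alpha (\cdot )_{n}\). The sketch assumes this form of the interpolation rules together with \(\alpha _{\mathsf {m}} = (2\rho _{\infty }-1)/(\rho _{\infty }+1)\), \(\alpha _{\mathsf {f}} = \rho _{\infty }/(\rho _{\infty }+1)\), \(\beta = \frac{1}{4}(1-\alpha _{\mathsf {m}}+\alpha _{\mathsf {f}})^2\) and \(\gamma = \frac{1}{2}-\alpha _{\mathsf {m}}+\alpha _{\mathsf {f}}\). Because this toy problem is linear, no Newton iteration is needed; the coefficient of \(d_{n+1}\) is the scalar counterpart of a dynamic effective stiffness \((1-\alpha _{\mathsf {m}})/(\beta \Delta t^2)\,m + (1-\alpha _{\mathsf {f}})\,k\). Function names and data are hypothetical.

```python
import math


def generalized_alpha_params(rho_inf):
    """Chung-Hulbert parameters (alpha_m, alpha_f, beta, gamma) from rho_inf in [0, 1]."""
    if not 0.0 <= rho_inf <= 1.0:
        raise ValueError("rho_inf must lie in [0, 1]")
    alpha_m = (2.0 * rho_inf - 1.0) / (rho_inf + 1.0)
    alpha_f = rho_inf / (rho_inf + 1.0)
    beta = 0.25 * (1.0 - alpha_m + alpha_f) ** 2
    gamma = 0.5 - alpha_m + alpha_f
    return alpha_m, alpha_f, beta, gamma


def gen_alpha_step_sdof(m, k, f, d_n, v_n, a_n, dt, rho_inf):
    """One generalized-alpha step for m*a + k*d = f with constant load f."""
    am, af, beta, gamma = generalized_alpha_params(rho_inf)
    c1 = 1.0 / (beta * dt * dt)
    c2 = 1.0 / (beta * dt)
    c3 = (1.0 - 2.0 * beta) / (2.0 * beta)
    # Newmark: a_{n+1} = c1*(d_{n+1} - d_n) - c2*v_n - c3*a_n
    # balance: m*[(1-am)*a_{n+1} + am*a_n] + k*[(1-af)*d_{n+1} + af*d_n] = f
    k_eff = m * (1.0 - am) * c1 + k * (1.0 - af)
    rhs = (f - k * af * d_n
           - m * ((1.0 - am) * (-c1 * d_n - c2 * v_n - c3 * a_n) + am * a_n))
    d_new = rhs / k_eff
    a_new = c1 * (d_new - d_n) - c2 * v_n - c3 * a_n
    v_new = v_n + dt * ((1.0 - gamma) * a_n + gamma * a_new)
    return d_new, v_new, a_new


# usage: free vibration with m = k = 1, d(0) = 1, v(0) = 0; exact d(t) = cos(t)
d, v, a = 1.0, 0.0, -1.0          # consistent a(0) from m*a + k*d = 0
for _ in range(100):
    d, v, a = gen_alpha_step_sdof(1.0, 1.0, 0.0, d, v, a, dt=0.01, rho_inf=0.8)
print(d, math.cos(1.0))
```

For \(\rho _{\infty }=1\) the sketch gives \(\alpha _{\mathsf {m}}=\alpha _{\mathsf {f}}=1/2\), \(\beta =1/4\) and \(\gamma =1/2\), and for this linear problem the step then coincides with the trapezoidal (average acceleration) rule.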

For the sake of completeness, it is pointed out that quasistatic problems, i.e. neglecting inertia effects, are also considered in the following. In that case, the time parameter t only plays the role of a pseudo-time and no time integration method is needed, but the quasistatic solution is rather computed as a series of static equilibrium states.

4.4 Linearization and Solution Techniques for Nonlinear Equations

Within each time step, the system of \({\mathsf {ndof}}\) nonlinear discrete algebraic equations (55) needs to be solved for the unknown displacements \({\varvec{\mathsf {d}}}_{n+1}\). Throughout this contribution, the Newton–Raphson method is employed as an iterative nonlinear solution technique. Within each iteration step i, the residual of the discrete linear momentum balance can be defined as

The Newton–Raphson method is based on repeated linearization of the residual in (57), solution of the resulting linearized system of equations and incremental update of the unknown displacements until a user-defined convergence criterion is met. At first, the linearization is obtained from the truncated Taylor expansion of (57), viz.

where the partial derivative of \({\varvec{\mathsf {r}}}_{{\mathsf {effdyn}}}({\varvec{\mathsf {d}}}_{n+1}^i)\) with respect to the displacements is commonly referred to as dynamic effective tangential stiffness matrix \({\varvec{\mathsf {K}}}_{{\mathsf {effdyn}}}({\varvec{\mathsf {d}}}_{n+1}^i)\) of size \({\mathsf {ndof}} \times {\mathsf {ndof}}\). In the context of the generalized-\(\alpha \) method, the dynamic effective tangential stiffness matrix can be determined based on Newmark’s approximation given in (49) and (50) and the generalized midpoints defined in (51)–(54), yielding

where \({\varvec{\mathsf {K}}}_{\mathsf {T}}({\varvec{\mathsf {d}}}_{n+1-\alpha _{\mathsf {f}}})\) is the tangential stiffness matrix associated with the internal forces as

To sum up, the Newton–Raphson method provides an iterative procedure for finding the unknown solution \({\varvec{\mathsf {d}}}_{n+1}\) for which the residual \({\varvec{\mathsf {r}}}_{{\mathsf {effdyn}}}({\varvec{\mathsf {d}}}_{n+1})\) vanishes. Within each iteration, it is required that

or in other words, the following linear system of equations has to be solved:

Having solved (62), the displacements \({\varvec{\mathsf {d}}}_{n+1}^{i+1}\) at the end of the time step can be updated via

and the iteration counter is increased by one, i.e. \(i \rightarrow i+1\). The procedure in (62) and (63) is repeated until a user-defined convergence criterion, usually formulated in terms of the \(L^2\)-norm of the residual \(\Vert {\varvec{\mathsf {r}}}_{{\mathsf {effdyn}}}({\varvec{\mathsf {d}}}_{n+1}^i)\Vert \), is met. The most advantageous property of the Newton–Raphson method is its local quadratic convergence. This means that if the initial estimate \({\varvec{\mathsf {d}}}_{n+1}^{0}\) is sufficiently close to the actual solution \({\varvec{\mathsf {d}}}_{n+1}\), i.e. within the problem-dependent convergence radius, then the residual norm approaches zero with a quadratic convergence rate.
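A minimal Newton–Raphson loop with the residual-norm convergence check described above can be sketched for a hypothetical quasistatic problem: two nonlinear (hardening) springs in series, fixed at the left end and loaded at the right end. The tangent is the exact derivative of the residual, so the recorded residual norms should drop quadratically once the iterate is close to the solution. The spring law, the load and all names are assumptions of the example.

```python
import math


def spring_force(e, k=1.0, c=1.0):
    """Hypothetical hardening spring law s(e) = k*e + c*e**3."""
    return k * e + c * e ** 3


def spring_stiffness(e, k=1.0, c=1.0):
    """Derivative ds/de = k + 3*c*e**2."""
    return k + 3.0 * c * e ** 2


def residual(d, F):
    """r = f_int(d) - f_ext for two springs in series (left end fixed)."""
    e1, e2 = d[0], d[1] - d[0]
    return [spring_force(e1) - spring_force(e2), spring_force(e2) - F]


def tangent(d):
    """Consistent tangent stiffness K_T = dr/dd."""
    k1, k2 = spring_stiffness(d[0]), spring_stiffness(d[1] - d[0])
    return [[k1 + k2, -k2], [-k2, k2]]


def newton_raphson(F, d0=(0.0, 0.0), tol=1e-10, max_iter=25):
    d = list(d0)
    history = []
    for _ in range(max_iter):
        r = residual(d, F)
        norm_r = math.sqrt(r[0] ** 2 + r[1] ** 2)
        history.append(norm_r)
        if norm_r < tol:                      # convergence check on ||r||
            return d, history
        K = tangent(d)
        det = K[0][0] * K[1][1] - K[0][1] * K[1][0]
        # solve K * dd = -r with Cramer's rule (2x2 system)
        dd0 = (-r[0] * K[1][1] + K[0][1] * r[1]) / det
        dd1 = (-K[0][0] * r[1] + K[1][0] * r[0]) / det
        d = [d[0] + dd0, d[1] + dd1]          # incremental update
    raise RuntimeError("Newton-Raphson did not converge")


d, history = newton_raphson(F=2.0)
for i, rn in enumerate(history):
    print(i, rn)
```

With \(F=2\) both springs carry the full load, so each elongation solves \(e + e^3 = 2\), i.e. \(e=1\) and \({\varvec{\mathsf {d}}} = (1, 2)\).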

In this contribution, only exact Newton–Raphson methods as described above are considered, and later also their semi-smooth variants for the inclusion of contact constraints. However, the computational cost associated with such an approach can be considerable for nonlinear solid mechanics problems, bearing in mind that it requires a consistent linearization and thus a determination of the tangential stiffness matrix \({\varvec{\mathsf {K}}}_{\mathsf {T}}({\varvec{\mathsf {d}}}_{n+1-\alpha _{\mathsf {f}}})\) within each iteration step. In practice, this often leads to the application of quasi-Newton methods or modified Newton methods, which are based on a computationally cheaper approximation of the stiffness matrix (e.g. via secants), but sacrifice the optimal (quadratic) convergence behavior. Apart from that, many extensions of the Newton–Raphson method aim at enlarging its local convergence radius. Popular examples of such globalization strategies are line search methods and the pseudo-transient continuation (PTC) technique, see e.g. Gee et al. (2009) and references therein.

5 Mortar Methods for Tied Contact

Mesh tying (also referred to as tied contact) serves as a model problem for the introduction to mortar finite element methods here. The basic motivation for such mortar mesh tying algorithms is to connect dissimilar meshes in nonlinear solid mechanics in a variationally consistent manner. Non-matching meshes can arise for many reasons, ranging from different resolution requirements in the individual subdomains and the use of different types of finite element interpolation to the rather practical fact that the submodels to be connected are commonly meshed independently. Further details and a full derivation of all formulations can be found in the author’s original work (Popp 2012).

5.1 Strong Formulation

Without loss of generality, only the case of a body with a single tied contact interface is considered. On each subdomain \(\Omega _0^{(i)}\), the initial boundary value problem of finite deformation elastodynamics needs to be satisfied, viz.

The tied contact constraint, also formulated in the reference configuration, is given as

Equations (64)–(69) represent the final strong form of a mesh tying problem in nonlinear solid mechanics. In the course of deriving a weak formulation (see the next section), the balance of linear momentum at the mesh tying interface \(\Gamma _{\mathsf {c}}\) is typically exploited and a Lagrange multiplier vector field \(\varvec{\lambda }\) is introduced, thus setting the basis for a mixed variational approach.

5.2 Weak Formulation

To start the derivation of a weak formulation of (64)–(69), appropriate solution spaces \(\varvec{\mathcal {U}}^{(i)}\) and weighting spaces \(\varvec{\mathcal {V}}^{(i)}\) need to be defined as

Moreover, the Lagrange multiplier vector \(\varvec{\lambda }=-\varvec{t}_{\mathsf {c}}^{(1)}\), which represents the negative slave side contact traction \(\varvec{t}_{\mathsf {c}}^{(1)}\) and is supposed to enforce the mesh tying constraint (69), is chosen from a corresponding solution space denoted as \(\varvec{\mathcal {M}}\). In terms of its classification in functional analysis, this space represents the dual space of the trace space \(\varvec{\mathcal {W}}^{(1)}\) of \(\varvec{\mathcal {V}}^{(1)}\). In the given context, this means that \(\mathcal {M} = H^{-1/2}(\Gamma _{\mathsf {c}})\) and \(\mathcal {W}^{(1)} = H^{1/2}(\Gamma _{\mathsf {c}})\), where \(\mathcal {M}\) and \(\mathcal {W}^{(1)}\) denote single scalar components of the corresponding vector-valued spaces \(\varvec{\mathcal {M}}\) and \(\varvec{\mathcal {W}}^{(1)}\).

Based on these considerations, a saddle point type weak formulation is derived next. Basically, this can be done by extending the standard weak formulation of nonlinear solid mechanics as defined in (38) to two subdomains and combining it with Lagrange multiplier coupling terms. Find \(\varvec{u}^{(i)} \in \varvec{\mathcal {U}}^{(i)}\) and \(\varvec{\lambda } \in \varvec{\mathcal {M}}\) such that

Herein, the kinetic contribution \(\delta \mathcal {W}_{{\mathsf {kin}}}\), the internal and external contributions \(\delta \mathcal {W}_{{\mathsf {int,ext}}}\) and the mesh tying interface contribution \(\delta \mathcal {W}_{{\mathsf {mt}}}\) to the overall virtual work on the two subdomains, as well as the weak form of the mesh tying constraint \(\delta \mathcal {W}_{\lambda }\), have been abbreviated as

It is important to point out that, strictly speaking, the coupling bilinear forms \(\delta \mathcal {W}_{{\mathsf {mt}}}\) and \(\delta \mathcal {W}_{\lambda }\) cannot be represented by integrals, because the involved spaces \(H^{1/2}(\Gamma _{\mathsf {c}})\) and \(H^{-1/2}(\Gamma _{\mathsf {c}})\) do not satisfy the requirements for a proper integral definition. Instead, a mathematically correct notation would use so-called duality pairings \(\langle \varvec{\lambda } , (\delta \varvec{u}^{(1)} - \delta \varvec{u}^{(2)}) \rangle _{\Gamma _{\mathsf {c}}}\) and \(\langle \delta \varvec{\lambda } , (\varvec{u}^{(1)} - \varvec{u}^{(2)}) \rangle _{\Gamma _{\mathsf {c}}}\), see e.g. Wohlmuth (2000). However, during finite element discretization the solution spaces are restricted to discrete subsets of \(L^2(\Gamma _{\mathsf {c}})\) functions, and at that point at the latest the coupling terms may be formulated as surface integrals. Moreover, even in the mathematical literature the distinction between duality pairing and integral is not treated consistently, and thus the slightly inaccurate formulation in (76) and (77) is preferred here for the sake of readability.

The coupling terms on \(\Gamma _{\mathsf {c}}\) also allow for a direct interpretation in terms of variational formulations and the principle of virtual work. Whereas the contribution in (76) represents the virtual work of the unknown interface tractions \(\varvec{\lambda } = -\varvec{t}_{\mathsf {c}}^{(1)} = \varvec{t}_{\mathsf {c}}^{(2)}\), the contribution in (77) ensures a weak, variationally consistent enforcement of the tied contact constraint (69). Unlike in unilateral contact with its inequality constraints, there are no further restrictions on the Lagrange multiplier space \(\varvec{\mathcal {M}}\) here (such as positivity). Nevertheless, the concrete choice of the discrete Lagrange multiplier space \(\varvec{\mathcal {M}}_h\) in the context of mortar finite element discretizations is decisive for the stability of the method and for optimal a priori error bounds, cf. Sect. 7.1. Finally, it is pointed out that the weak formulation (72) and (73) possesses all characteristics of saddle point problems and Lagrange multiplier methods.
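The saddle point character can be seen in a minimal discrete analogue: two linear springs (bodies 1 and 2) whose interface nodes carry separate unknowns and are tied together by a single Lagrange multiplier. Here the interface nodes match, so the discrete constraint reduces to \(u_1 - u_2 = 0\); in a mortar method the coupling entries would instead result from interface integrals. The resulting system matrix has a zero diagonal block, which is why the small solver uses partial pivoting. Stiffnesses, load and names are hypothetical, and the multiplier sign is chosen to be consistent with \(\varvec{\lambda } = -\varvec{t}_{\mathsf {c}}^{(1)}\).

```python
def solve_dense(A, b):
    """Gaussian elimination with partial pivoting; pivoting is needed because
    the saddle point matrix has a zero diagonal block."""
    n = len(b)
    M = [list(row) + [bi] for row, bi in zip(A, b)]   # augmented matrix
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[piv][col]) < 1e-14:
            raise ValueError("matrix is singular")
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            fac = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= fac * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):                    # back substitution
        s = M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = s / M[r][r]
    return x


def tied_springs(k1, k2, F):
    """Unknowns [u1, u2, u3, lam]: u1 is the slave interface node (body 1),
    u2 the master interface node (body 2), u3 the loaded end of body 2."""
    A = [[k1, 0.0, 0.0, 1.0],     # body 1:        k1*u1 + lam = 0
         [0.0, k2, -k2, -1.0],    # body 2 (u2):   k2*(u2 - u3) - lam = 0
         [0.0, -k2, k2, 0.0],     # body 2 (u3):   k2*(u3 - u2) = F
         [1.0, -1.0, 0.0, 0.0]]   # tying constraint: u1 - u2 = 0
    b = [0.0, 0.0, F, 0.0]
    return solve_dense(A, b)


u1, u2, u3, lam = tied_springs(k1=2.0, k2=4.0, F=8.0)
print(u1, u2, u3, lam)
```

With these values the interface displacements coincide (\(u_1=u_2=4\)), the loaded end moves by \(F/k_1 + F/k_2 = 6\), and the multiplier equals \(-F\), i.e. the negative of the traction transmitted to body 1.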

5.3 Finite Element Discretization

For the spatial discretization of the tied contact problem (72) and (73), standard isoparametric finite elements are employed. This defines the usual finite dimensional subspaces \(\varvec{\mathcal {U}}^{(i)}_h\) and \(\varvec{\mathcal {V}}^{(i)}_h\) being approximations of \(\varvec{\mathcal {U}}^{(i)}\) and \(\varvec{\mathcal {V}}^{(i)}\), respectively. Throughout this chapter, both first-order and second-order interpolation is considered with finite element meshes typically consisting of 3-node triangular (tri3), 4-node quadrilateral (quad4), 6-node triangular (tri6), 8-node quadrilateral (quad8) and 9-node quadrilateral (quad9) elements in 2D, and of 4-node tetrahedral (tet4), 8-node hexahedral (hex8), 10-node tetrahedral (tet10), 20-node hexahedral (hex20) and 27-node hexahedral (hex27) elements in 3D.

With the focus being on the finite element discretization of the coupling terms here, only the geometry, displacement and Lagrange multiplier interpolations on \(\Gamma _{{\mathsf {c}},h}^{(i)}\) will be considered in the following. Discretization of the remaining contributions to (72) is not discussed, but the reader is instead referred to the abundant literature. As explained in Sect. 4.2, the subscript \(\cdot _h\) refers to a spatially discretized quantity. Obviously, there exists a connection between the employed finite elements in the domains \(\Omega _{0,h}^{(i)}\) and the resulting surface facets on the mesh tying interfaces \(\Gamma _{{\mathsf {c}},h}^{(i)}\). For example, a mixed 3D finite element mesh composed of tet4 and hex8 elements yields tri3 and quad4 facets on the surface of tied contact. Consequently, the following general form of geometry and displacement interpolation on the discrete mesh tying surfaces holds:

The total number of slave nodes on \(\Gamma ^{(1)}_{{\mathsf {c}},h}\) is \(n^{(1)}\), and the total number of master nodes on \(\Gamma ^{(2)}_{{\mathsf {c}},h}\) is \(n^{(2)}\). Discrete nodal positions and discrete nodal displacements are given by \({\varvec{\mathsf {x}}}_k^{(1)}\), \({\varvec{\mathsf {x}}}_l^{(2)}\), \({\varvec{\mathsf {d}}}_k^{(1)}\) and \({\varvec{\mathsf {d}}}_l^{(2)}\). The shape functions \(N_k^{(1)}\) and \(N_l^{(2)}\) are defined with respect to the usual finite element parameter space, commonly denoted as \(\xi ^{(i)}\) for two-dimensional problems (i.e. 1D mesh tying interfaces) and as \(\varvec{\xi }^{(i)}=(\xi ^{(i)},\eta ^{(i)})\) for three-dimensional problems (i.e. 2D mesh tying interfaces). As mentioned above, the shape functions are derived from the underlying bulk discretization. Although not studied here, the proposed algorithms can in principle be transferred to higher-order interpolation and alternative shape functions, such as non-uniform rational B-splines (NURBS), see e.g. Cottrell et al. (2009), De Lorenzis et al. (2011) and Temizer et al. (2011, 2012).
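The interpolation above can be illustrated for a single quad4 facet with a minimal sketch in plain Python. The corner ordering and the helper names `quad4_shape` and `interpolate` are illustrative assumptions, not part of the original formulation. The same routine evaluates positions and displacements, which mirrors the fact that geometry and displacements share the shape functions \(N_k\).

```python
# Minimal sketch: bilinear (quad4) interpolation on one slave facet.
# Assumption: corners ordered counter-clockwise at (-1,-1), (1,-1), (1,1), (-1,1).

def quad4_shape(xi, eta):
    """Return the four bilinear shape functions N_k(xi, eta) on [-1, 1]^2."""
    return [0.25 * (1.0 - xi) * (1.0 - eta),
            0.25 * (1.0 + xi) * (1.0 - eta),
            0.25 * (1.0 + xi) * (1.0 + eta),
            0.25 * (1.0 - xi) * (1.0 + eta)]


def interpolate(nodal_values, xi, eta):
    """Evaluate sum_k N_k(xi, eta) * v_k for nodal vectors v_k (positions or displacements)."""
    N = quad4_shape(xi, eta)
    dim = len(nodal_values[0])
    return [sum(N[k] * nodal_values[k][c] for k in range(4)) for c in range(dim)]


# Usage: a slightly warped facet moved by a uniform nodal displacement.
x_nodes = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.1), (1.0, 1.0, 0.0), (0.0, 1.0, 0.1)]
d_nodes = [(0.0, 0.0, -0.2)] * 4
x_mid = interpolate(x_nodes, 0.0, 0.0)  # facet centre in parameter space
d_mid = interpolate(d_nodes, 0.0, 0.0)
```

The shape functions form a partition of unity. A uniform nodal displacement is therefore reproduced exactly at every point of the facet.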

In addition, the Lagrange multiplier vector \(\varvec{\lambda }\) must be discretized. This is based on a discrete Lagrange multiplier space \(\varvec{\mathcal {M}}_h\) approximating \(\varvec{\mathcal {M}}\). Details concerning the choice of \(\varvec{\mathcal {M}}_h\), in particular the two families of standard and dual Lagrange multipliers, follow in Sect. 7.1. Thus, only a general notation is given at this point:

\[
\varvec{\lambda }_h = \sum _{j=1}^{m^{(1)}} \Phi _j(\varvec{\xi }^{(1)})\, \varvec{\uplambda }_j, \tag{80}
\]

with the (still to be defined) shape functions \(\Phi _j\) and the discrete nodal Lagrange multipliers \(\varvec{\uplambda }_j\). The total number of slave nodes carrying additional Lagrange multiplier degrees of freedom is \(m^{(1)}\). As is typical for mortar methods, every slave node also serves as a coupling node, so that \(m^{(1)} = n^{(1)}\) holds in the majority of cases. For second-order finite elements, however, it can be favorable to choose \(m^{(1)} < n^{(1)}\) in certain cases. Substituting (78) and (80) into the interface virtual work \(\delta \mathcal {W}_{{\mathsf {mt}}}\) in (72) yields

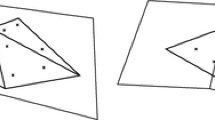

where \(\chi _h : \Gamma _{{\mathsf {c}},h}^{(1)} \rightarrow \Gamma _{{\mathsf {c}},h}^{(2)}\) defines a suitable discrete mapping from the slave to the master side of the mesh tying interface. Such a mapping (or projection) is necessary because the discretized coupling surfaces \(\Gamma _{{\mathsf {c}},h}^{(1)}\) and \(\Gamma _{{\mathsf {c}},h}^{(2)}\) are, in general, no longer geometrically coincident. This is evident for a curved mesh tying interface discretized with non-matching finite element meshes on its two sides. As illustrated in Fig. 4, small gaps and overlaps may arise in the discretized setting, even though the surfaces coincide in the continuum framework. Throughout this contribution, numerical integration of the mortar coupling terms is performed exclusively on the slave side \(\Gamma _{{\mathsf {c}},h}^{(1)}\) of the interface. In (81), nodal blocks of the two mortar integral matrices, commonly denoted as \({\varvec{\mathsf {D}}}\) and \({\varvec{\mathsf {M}}}\), can be identified. This leads to the following definitions:

\[
{\varvec{\mathsf {D}}}[j,k] = \int _{\Gamma _{{\mathsf {c}},h}^{(1)}} \Phi _j \, N_k^{(1)} \, \mathrm {d}A_0 \; {\varvec{\mathsf {I}}}_{{\mathsf {ndim}}}, \tag{82}
\]

\[
{\varvec{\mathsf {M}}}[j,l] = \int _{\Gamma _{{\mathsf {c}},h}^{(1)}} \Phi _j \, (N_l^{(2)} \circ \chi _h) \, \mathrm {d}A_0 \; {\varvec{\mathsf {I}}}_{{\mathsf {ndim}}}, \tag{83}
\]

where \(j\,{=}\,1, \ldots ,m^{(1)}, \; k\,{=}\,1, \ldots ,n^{(1)}, \; l=1, \ldots ,n^{(2)}\). Note that \({\varvec{\mathsf {I}}}_{{\mathsf {ndim}}} \in \mathbb {R}^{{\mathsf {ndim}} \times {\mathsf {ndim}}}\) is an identity matrix whose size is determined by the global problem dimension \({\mathsf {ndim}}\), i.e. either \({\mathsf {ndim}}=2\) or \({\mathsf {ndim}}=3\). In general, both mortar matrices \({\varvec{\mathsf {D}}}\) and \({\varvec{\mathsf {M}}}\) are rectangular. However, \({\varvec{\mathsf {D}}}\) becomes square for the common choice \(m^{(1)}=n^{(1)}\). Sects. 5.4 and 7.3 give more details on two topics: the numerical integration of the mass-matrix-type entries in \({\varvec{\mathsf {D}}}\) and \({\varvec{\mathsf {M}}}\), and the implementation of the interface mapping \(\chi _h\) in 3D.
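The following minimal sketch shows how \({\varvec{\mathsf {D}}}\) and \({\varvec{\mathsf {M}}}\) can be evaluated on mortar segments for the simplest case. It rests on several assumptions:

- The 2D problem has a straight, coincident interface, so \(\chi _h\) reduces to the identity.
- Both sides use line2 elements.
- The multipliers are standard Lagrange multipliers \(\Phi _j = N_j^{(1)}\), which is only one possible choice (Sect. 7.1).
- The master side covers the slave side.
- The scalar factor in front of \({\varvec{\mathsf {I}}}_{{\mathsf {ndim}}}\) is stored.

The function names are hypothetical. Each segment lies inside exactly one slave element and one master element, so every integrand is a quadratic polynomial there. Two-point Gauss quadrature is therefore exact.

```python
import math


def hat(nodes, i, x):
    """Piecewise-linear nodal basis function i of a 1D mesh with sorted `nodes`."""
    if i > 0 and nodes[i - 1] <= x <= nodes[i]:
        return (x - nodes[i - 1]) / (nodes[i] - nodes[i - 1])
    if i < len(nodes) - 1 and nodes[i] <= x <= nodes[i + 1]:
        return (nodes[i + 1] - x) / (nodes[i + 1] - nodes[i])
    return 0.0


def mortar_matrices_2d(xs, xm):
    """Scalar D[j][k] and M[j][l] (I_ndim omitted) for slave nodes xs and
    master nodes xm on a straight interface, with Phi_j = N_j^(1)."""
    assert xm[0] <= xs[0] and xs[-1] <= xm[-1], "sketch assumes master covers slave"
    # Mortar segments: the slave side split at every slave and master node inside it.
    cuts = sorted(set(x for x in xs + xm if xs[0] <= x <= xs[-1]))
    gauss = (-1.0 / math.sqrt(3.0), 1.0 / math.sqrt(3.0))  # both weights equal 1
    D = [[0.0] * len(xs) for _ in xs]
    M = [[0.0] * len(xm) for _ in xs]
    for a, b in zip(cuts[:-1], cuts[1:]):
        half = 0.5 * (b - a)  # Jacobian of the map from [-1, 1] onto [a, b]
        for g in gauss:
            x = 0.5 * (a + b) + half * g
            for j in range(len(xs)):
                phi = hat(xs, j, x)
                if phi == 0.0:
                    continue
                for k in range(len(xs)):
                    D[j][k] += half * phi * hat(xs, k, x)
                for l in range(len(xm)):
                    M[j][l] += half * phi * hat(xm, l, x)
    return D, M


# Usage: one slave element on [0, 1] tied to two master elements.
D, M = mortar_matrices_2d([0.0, 1.0], [0.0, 0.5, 1.0])
```

For this call, \({\varvec{\mathsf {D}}}\) equals the consistent line mass matrix \(\left[\begin{smallmatrix}1/3 & 1/6\\ 1/6 & 1/3\end{smallmatrix}\right]\), and the first row of \({\varvec{\mathsf {M}}}\) is approximately \((5/24, 1/4, 1/24)\). In every row, the entries of \({\varvec{\mathsf {D}}}\) and \({\varvec{\mathsf {M}}}\) sum to the same value. This follows from the partition of unity of \(N_k^{(1)}\) and \(N_l^{(2)}\) together with exact integration.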

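For ease of notation, all nodes of the two subdomains \(\Omega _0^{(1)}\) and \(\Omega _0^{(2)}\), and correspondingly all degrees of freedom (DOFs) in the global discrete displacement vector \({\varvec{\mathsf {d}}}\), are sorted into three groups:

- a group \(\mathcal {S}\) containing all slave interface quantities,
- a group \(\mathcal {M}\) of all master quantities, and
- a group \(\mathcal {N}\) comprising all remaining nodes or DOFs.

The global discrete displacement vector is sorted accordingly, yielding \({\varvec{\mathsf {d}}} = ({\varvec{\mathsf {d}}}_{\mathcal {N}},{\varvec{\mathsf {d}}}_{\mathcal {M}},{\varvec{\mathsf {d}}}_{\mathcal {S}})\). Returning to (81), this allows for the following definition:

\[
\delta \mathcal {W}_{{\mathsf {mt}},h} = \delta {\varvec{\mathsf {d}}}_{\mathcal {S}}^{\mathsf {T}} {\varvec{\mathsf {D}}}^{\mathsf {T}} \varvec{\uplambda } - \delta {\varvec{\mathsf {d}}}_{\mathcal {M}}^{\mathsf {T}} {\varvec{\mathsf {M}}}^{\mathsf {T}} \varvec{\uplambda } = \delta {\varvec{\mathsf {d}}}^{\mathsf {T}} {\varvec{\mathsf {B}}}_{{\mathsf {mt}}}^{\mathsf {T}} \varvec{\uplambda } = \delta {\varvec{\mathsf {d}}}^{\mathsf {T}} {\varvec{\mathsf {f}}}_{{\mathsf {mt}}}(\varvec{\uplambda }), \qquad {\varvec{\mathsf {B}}}_{{\mathsf {mt}}} = \begin{bmatrix} {\varvec{\mathsf {0}}} & -{\varvec{\mathsf {M}}} & {\varvec{\mathsf {D}}} \end{bmatrix}. \tag{84}
\]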

This introduces two quantities: the discrete mortar mesh tying operator \({\varvec{\mathsf {B}}}_{{\mathsf {mt}}}\), and the resulting discrete vector of mesh tying forces \({\varvec{\mathsf {f}}}_{{\mathsf {mt}}}(\varvec{\uplambda }) = {\varvec{\mathsf {B}}}_{{\mathsf {mt}}}^{\mathsf {T}} \varvec{\uplambda }\), which acts on the slave and master sides of the interface. To complete the discretization of the mesh tying problem, it remains to consider the weak constraint contribution \(\delta \mathcal {W}_{\lambda }\) in (73). The mixed variational formulation (72) and (73) has a saddle point structure and is therefore symmetric. Consequently, all discrete components of \(\delta \mathcal {W}_{\lambda }\) have already been introduced, and the final formulation reads

\[
\delta \mathcal {W}_{\lambda ,h} = \delta \varvec{\uplambda }^{\mathsf {T}} {\varvec{\mathsf {B}}}_{{\mathsf {mt}}} {\varvec{\mathsf {d}}} = \delta \varvec{\uplambda }^{\mathsf {T}} {\varvec{\mathsf {g}}}_{{\mathsf {mt}}}({\varvec{\mathsf {d}}}), \tag{85}
\]

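with \({\varvec{\mathsf {g}}}_{{\mathsf {mt}}}({\varvec{\mathsf {d}}})={\varvec{\mathsf {B}}}_{{\mathsf {mt}}} {\varvec{\mathsf {d}}}\) representing the discrete mesh tying constraint at the coupling interface. The remaining contributions to the first part of the weak formulation (72) are discretized with standard finite elements, as outlined in Sect. 4.2. This yields the semi-discrete equations of motion, including the tied contact forces, together with the constraint equations:

\[
{\varvec{\mathsf {M}}} \ddot{{\varvec{\mathsf {d}}}} + {\varvec{\mathsf {C}}} \dot{{\varvec{\mathsf {d}}}} + {\varvec{\mathsf {f}}}_{{\mathsf {int}}}({\varvec{\mathsf {d}}}) + {\varvec{\mathsf {f}}}_{{\mathsf {mt}}}(\varvec{\uplambda }) - {\varvec{\mathsf {f}}}_{{\mathsf {ext}}} = {\varvec{\mathsf {0}}}, \tag{86}
\]

\[
{\varvec{\mathsf {g}}}_{{\mathsf {mt}}}({\varvec{\mathsf {d}}}) = {\varvec{\mathsf {0}}}. \tag{87}
\]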

Mass matrix \({\varvec{\mathsf {M}}}\), damping matrix \({\varvec{\mathsf {C}}}\), internal forces \({\varvec{\mathsf {f}}}_{{\mathsf {int}}}({\varvec{\mathsf {d}}})\) and external forces \({\varvec{\mathsf {f}}}_{{\mathsf {ext}}}\) result from standard FE discretization. The mortar-based interface coupling described here is completely independent of the concrete choice of the underlying finite element formulation. The same holds for the choice of material model. Both topics, i.e. nonlinear finite elements for continua and complex material models, are discussed at length in the literature. They are therefore not repeated here, and the focus remains solely on the mesh tying terms \({\varvec{\mathsf {f}}}_{{\mathsf {mt}}}(\varvec{\uplambda })\) and \({\varvec{\mathsf {g}}}_{{\mathsf {mt}}}({\varvec{\mathsf {d}}})\).

A closer look at the semi-discrete problem (86) and (87) reveals the well-known nonlinearity of the internal forces \({\varvec{\mathsf {f}}}_{{\mathsf {int}}}({\varvec{\mathsf {d}}})\), which stems from finite deformation kinematics and nonlinear material behavior. However, neither the discrete interface forces \({\varvec{\mathsf {f}}}_{{\mathsf {mt}}}(\varvec{\uplambda })\) nor the mesh tying constraints \({\varvec{\mathsf {g}}}_{{\mathsf {mt}}}({\varvec{\mathsf {d}}})\) introduce additional nonlinearity: the former is linear in \(\varvec{\uplambda }\) and the latter is linear in \({\varvec{\mathsf {d}}}\). The reason is that no relative movement of the subdomains is permitted in mesh tying problems. Hence the mortar integral matrices \({\varvec{\mathsf {D}}}\) and \({\varvec{\mathsf {M}}}\), and with them the discrete mesh tying operator \({\varvec{\mathsf {B}}}_{{\mathsf {mt}}}\), do not depend on the current displacements, even if the body undergoes finite deformations. They therefore need to be evaluated only once, at problem initialization. In terms of numerical efficiency, evaluating the mortar coupling terms for tied contact problems is thus a one-time cost, which is usually negligible compared with the remaining computational costs. This no longer holds for the unilateral contact case discussed in Sect. 6. The numerical evaluation of the entries of \({\varvec{\mathsf {B}}}_{{\mathsf {mt}}}\) in 3D problems is discussed in the following subsection.
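The following small sketch illustrates the statements about \({\varvec{\mathsf {B}}}_{{\mathsf {mt}}}\). It uses the DOF ordering \({\varvec{\mathsf {d}}} = ({\varvec{\mathsf {d}}}_{\mathcal {N}},{\varvec{\mathsf {d}}}_{\mathcal {M}},{\varvec{\mathsf {d}}}_{\mathcal {S}})\) and assembles the operator as \([\,{\varvec{\mathsf {0}}}\;\; -{\varvec{\mathsf {M}}}\;\; {\varvec{\mathsf {D}}}\,]\). It then checks two consequences of the row sums of \({\varvec{\mathsf {D}}}\) and \({\varvec{\mathsf {M}}}\) being equal. First, a rigid translation satisfies \({\varvec{\mathsf {g}}}_{{\mathsf {mt}}}({\varvec{\mathsf {d}}}) = {\varvec{\mathsf {0}}}\). Second, the mesh tying forces \({\varvec{\mathsf {f}}}_{{\mathsf {mt}}}\) sum to zero. The data are assumptions chosen for illustration:

- one DOF per node (\({\varvec{\mathsf {I}}}_{{\mathsf {ndim}}}\) omitted);
- one linear slave element on \([0,1]\) tied to two master elements \([0, 0.5]\) and \([0.5, 1]\);
- standard multipliers \(\Phi _j = N_j^{(1)}\);
- exact integrals entered as fractions.

Two unrelated nodes form the group \(\mathcal {N}\). All function names are hypothetical.

```python
from fractions import Fraction as F


def assemble_B_mt(D, M, n_other):
    """Rows of B_mt = [0 | -M | D] for the DOF ordering d = (d_N, d_M, d_S)."""
    return [[F(0)] * n_other + [-m for m in m_row] + list(d_row)
            for d_row, m_row in zip(D, M)]


def matvec(A, x):
    """A @ x for a list-of-rows matrix."""
    return [sum(a * b for a, b in zip(row, x)) for row in A]


def matvec_T(A, y):
    """A^T @ y for a list-of-rows matrix."""
    return [sum(A[i][c] * y[i] for i in range(len(A))) for c in range(len(A[0]))]


# Exact mortar integrals (82) and (83) for the hypothetical setup described above.
D = [[F(1, 3), F(1, 6)], [F(1, 6), F(1, 3)]]
M = [[F(5, 24), F(1, 4), F(1, 24)], [F(1, 24), F(1, 4), F(5, 24)]]
B_mt = assemble_B_mt(D, M, n_other=2)

d_rigid = [F(7, 10)] * 7                  # 2 (N) + 3 (M) + 2 (S) DOFs, same translation
g_mt = matvec(B_mt, d_rigid)              # g_mt(d) = B_mt d
f_mt = matvec_T(B_mt, [F(2), F(-3)])      # f_mt(lambda) = B_mt^T lambda
```

Exact rational arithmetic makes both checks hold without round-off. Since \({\varvec{\mathsf {B}}}_{{\mathsf {mt}}}\) is constant for tied contact, it can be assembled once and reused in every time step.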

5.4 Evaluation of Mortar Integrals in 3D

All general concepts for the evaluation of mortar integrals in 3D can also be transferred back to the simpler 2D case. The integral entries of both matrices \({\varvec{\mathsf {D}}}\) and \({\varvec{\mathsf {M}}}\) are computed on so-called mortar segments. This achieves the maximum possible accuracy of Gauss quadrature and guarantees linear momentum conservation in the semi-discrete setting. The slave surface \(\Gamma _{{\mathsf {c}},h}^{(1)}\) and the master surface \(\Gamma _{{\mathsf {c}},h}^{(2)}\) both consist of two-dimensional facets. Projection operations between them are based on nodal averaging and a \(C^0\)-continuous field of normal vectors, cf. Fig. 17. For 3D situations, the averaged nodal normal vector \({\varvec{\mathsf {n}}}_k\) is obtained from the unit normal vectors \({\varvec{\mathsf {n}}}_k^{e}\) of all slave facets \(e\) adjacent to slave node \(k\) as

\[
{\varvec{\mathsf {n}}}_k = \frac{\sum _{e=1}^{n^{{\mathsf {adj}}}_k} {\varvec{\mathsf {n}}}_k^{e}}{\left\| \sum _{e=1}^{n^{{\mathsf {adj}}}_k} {\varvec{\mathsf {n}}}_k^{e} \right\| }, \tag{88}
\]

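where the total number \(n^{{\mathsf {adj}}}_k\) of slave facets adjacent to slave node \(k\) may vary within a much wider range than in 2D (for instance, \(n^{{\mathsf {adj}}}_k=4\) in Fig. 17). In anticipation of unilateral contact formulations, (88) also defines a tangential plane at slave node \(k\). From this plane, the two unit tangent vectors \(\varvec{\uptau }_k^{\xi }\) and \(\varvec{\uptau }_k^{\eta }\) can be chosen such that they form an orthonormal basis together with \({\varvec{\mathsf {n}}}_k\), i.e.

\[
\varvec{\uptau }_k^{\xi } \cdot {\varvec{\mathsf {n}}}_k = 0, \qquad \left\| \varvec{\uptau }_k^{\xi } \right\| = 1, \qquad \varvec{\uptau }_k^{\eta } = {\varvec{\mathsf {n}}}_k \times \varvec{\uptau }_k^{\xi }.
\]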

Mortar segments must be defined such that the shape function integrands in (82) and (83) are \(C^1\)-continuous on these surface subsets. This task is considerably more complex in three dimensions than in two, because mortar segments are arbitrarily shaped polygons rather than line segments. Moreover, even the choice of an adequate mortar integration surface is nontrivial. In the 2D mortar mesh tying formulation, which is not discussed here, integration is performed directly on the slave surface \(\Gamma ^{(1)}_{{\mathsf {c}},h}\). Unfortunately, this approach cannot be transferred directly to three dimensions, because surface facets may be warped.
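The nodal averaging of facet normals described in this subsection can be sketched in plain Python. The sketch assumes that each adjacent facet (tri3 or quad4) is given by its corner coordinates. The corners are ordered counter-clockwise as seen from the side into which the normal should point. The facet normal at the node is taken from the two facet edges meeting there. The choice of \(\varvec{\uptau }_k^{\xi }\) in `tangent_basis` is a hypothetical convention: it projects the global axis least aligned with \({\varvec{\mathsf {n}}}_k\) onto the tangent plane. The text only requires an orthonormal basis.

```python
import math


def cross(a, b):
    """3D cross product a x b."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def unit(v):
    """Normalize a 3D vector."""
    norm = math.sqrt(sum(c * c for c in v))
    return tuple(c / norm for c in v)


def averaged_nodal_normal(node, facets):
    """Sum the unit normals of all facets adjacent to `node`, then renormalize."""
    acc = (0.0, 0.0, 0.0)
    for facet in facets:
        i = facet.index(node)
        nxt, prev = facet[(i + 1) % len(facet)], facet[i - 1]
        e1 = tuple(p - q for p, q in zip(nxt, node))   # edge towards next corner
        e2 = tuple(p - q for p, q in zip(prev, node))  # edge towards previous corner
        acc = tuple(a + b for a, b in zip(acc, unit(cross(e1, e2))))
    return unit(acc)


def tangent_basis(n):
    """One possible orthonormal pair (tau_xi, tau_eta) with tau_eta = n x tau_xi."""
    axis = min(range(3), key=lambda c: abs(n[c]))
    ref = tuple(1.0 if c == axis else 0.0 for c in range(3))
    dot = sum(r * m for r, m in zip(ref, n))
    tau_xi = unit(tuple(r - dot * m for r, m in zip(ref, n)))
    return tau_xi, cross(n, tau_xi)


# Usage: four quad4 facets around the origin forming a symmetric ridge z = -|x|/2.
def ridge(x, y):
    return (x, y, -0.5 * abs(x))


origin = ridge(0.0, 0.0)
facets = [
    [origin, ridge(1.0, 0.0), ridge(1.0, 1.0), ridge(0.0, 1.0)],
    [origin, ridge(0.0, 1.0), ridge(-1.0, 1.0), ridge(-1.0, 0.0)],
    [origin, ridge(-1.0, 0.0), ridge(-1.0, -1.0), ridge(0.0, -1.0)],
    [origin, ridge(0.0, -1.0), ridge(1.0, -1.0), ridge(1.0, 0.0)],
]
n_k = averaged_nodal_normal(origin, facets)  # (0, 0, 1) by symmetry
tau_xi, tau_eta = tangent_basis(n_k)
```

The individual facet normals of the ridge are tilted. Their average is vertical, which gives the \(C^0\)-continuous normal field at the shared node.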

The general topic of numerical integration, including an overview of the available segment-based and element-based integration schemes, is addressed in Sect. 7.3.
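In segment-based 3D integration, a mortar segment is the overlap polygon of a slave facet and a master facet after both have been projected onto a common integration surface. The geometric core of this step can be sketched with Sutherland–Hodgman polygon clipping, followed by a fan triangulation into integration cells. This is a standard algorithm swapped in for illustration, not necessarily the procedure of Sect. 7.3. The sketch assumes that the projection has already been performed and that both polygons are given in 2D in-plane coordinates. It further assumes that the clipping polygon is convex and counter-clockwise, which holds for projected tri3 and non-degenerate quad4 facets. The Gauss quadrature on the resulting triangles is not shown.

```python
def clip(subject, clipper):
    """Sutherland-Hodgman: clip polygon `subject` by convex CCW polygon `clipper`."""
    def inside(p, a, b):
        # p lies on or to the left of the directed edge a -> b
        return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0]) >= 0.0

    def intersect(p, q, a, b):
        # intersection of segment p -> q with the infinite line through a and b
        dx, dy = q[0] - p[0], q[1] - p[1]
        ex, ey = b[0] - a[0], b[1] - a[1]
        t = (ex * (a[1] - p[1]) - ey * (a[0] - p[0])) / (ex * dy - ey * dx)
        return (p[0] + t * dx, p[1] + t * dy)

    output = list(subject)
    for i in range(len(clipper)):
        a, b = clipper[i], clipper[(i + 1) % len(clipper)]
        inp, output = output, []
        if not inp:
            break
        s = inp[-1]
        for e in inp:
            if inside(e, a, b):
                if not inside(s, a, b):
                    output.append(intersect(s, e, a, b))
                output.append(e)
            elif inside(s, a, b):
                output.append(intersect(s, e, a, b))
            s = e
    return output


def polygon_area(poly):
    """Signed area by the shoelace formula (positive for CCW ordering)."""
    n = len(poly)
    return 0.5 * sum(poly[i][0] * poly[(i + 1) % n][1] - poly[(i + 1) % n][0] * poly[i][1]
                     for i in range(n))


def triangulate(poly):
    """Fan triangulation of a convex polygon into integration cells."""
    return [(poly[0], poly[i], poly[i + 1]) for i in range(1, len(poly) - 1)]


# Usage: a projected slave quad overlapped by a shifted master quad.
slave = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
master = [(0.5, 0.5), (1.5, 0.5), (1.5, 1.5), (0.5, 1.5)]
segment = clip(slave, master)   # mortar segment polygon
cells = triangulate(segment)
```

The overlap of the two unit squares in the usage example is a square of area 0.25. It splits into two triangular integration cells.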

5.5 Solution Methods

We now return to the mortar finite element approach for tied contact derived in Sect. 5.3, in particular to the fully discretized version of (86) and (87). Here, "fully discretized" means after time discretization with the generalized-\(\alpha \) method discussed in Sect. 4.3. The result is a system of \({\mathsf {ndof}}+{\mathsf {nco}}\) nonlinear discrete algebraic equations, where the global number of constraints is \({\mathsf {nco}} = {\mathsf {ndim}} \cdot m^{(1)}\). All solution methods for this system are based on a standard Newton–Raphson iteration as introduced in Sect. 4.4. Since only equality constraints are present, mesh tying systems require no active set strategy. The iterative solution techniques can be applied directly, yielding standard (or smooth) Newton methods. Primal-dual active set strategies and the associated semi-smooth Newton methods only become important for the unilateral contact problems considered in Sect. 6.

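As explained in Sect. 4.4, the Newton–Raphson method (or Newton's method) is based on successive linearization of the residual. Here, the residual consists of the discrete balance of linear momentum and the discrete mesh tying constraints in the time-discretized versions of (86) and (87). Each nonlinear solution step (iteration index \(i\)) consists of solving the resulting linearized system of equations and updating the unknown displacements \({\varvec{\mathsf {d}}}_{n+1}\) and Lagrange multipliers \(\varvec{\uplambda }_{n+1-\alpha _{\mathsf {f}}}\). The iteration continues until a user-defined convergence criterion is met. Since the discrete mesh tying operator \({\varvec{\mathsf {B}}}_{{\mathsf {mt}}}\) defined in (84) does not depend on the displacements, consistent linearization in iteration step \(i\) yields:

\[
\begin{bmatrix} {\varvec{\mathsf {K}}}_{{\mathsf {effdyn}}}({\varvec{\mathsf {d}}}_{n+1}^i) & {\varvec{\mathsf {B}}}_{{\mathsf {mt}}}^{\mathsf {T}} \\ {\varvec{\mathsf {B}}}_{{\mathsf {mt}}} & {\varvec{\mathsf {0}}} \end{bmatrix}
\begin{bmatrix} \Delta {\varvec{\mathsf {d}}}_{n+1}^i \\ \varvec{\uplambda }_{n+1-\alpha _{\mathsf {f}}}^i \end{bmatrix}
= - \begin{bmatrix} {\varvec{\mathsf {r}}}_{{\mathsf {effdyn}}}({\varvec{\mathsf {d}}}_{n+1}^i) \\ {\varvec{\mathsf {g}}}_{{\mathsf {mt}}}({\varvec{\mathsf {d}}}_{n+1}^i) \end{bmatrix}. \tag{91}
\]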

Herein, use has been made of the fact that the Lagrange multipliers enter the discrete mesh tying forces linearly. Owing to this linearity, the unknown Lagrange multipliers \(\varvec{\uplambda }_{n+1-\alpha _{\mathsf {f}}}^i\) can be solved for directly in each iteration step instead of using an incremental formulation. Moreover, as mentioned in Sect. 4.4, all discrete force terms except the additional mesh tying forces \({\varvec{\mathsf {f}}}_{{\mathsf {mt}}}(\varvec{\uplambda }_{n+1-\alpha _{\mathsf {f}}}^i)\) are collected in the residual \({\varvec{\mathsf {r}}}_{{\mathsf {effdyn}}}({\varvec{\mathsf {d}}}_{n+1}^i)\). These are the inertia, damping, internal and external forces. The partial derivative of \({\varvec{\mathsf {r}}}_{{\mathsf {effdyn}}}({\varvec{\mathsf {d}}}_{n+1}^i)\) with respect to the displacements \({\varvec{\mathsf {d}}}\) is referred to as the dynamic effective tangential stiffness matrix \({\varvec{\mathsf {K}}}_{{\mathsf {effdyn}}}({\varvec{\mathsf {d}}}_{n+1}^i)\), as introduced in (58). Finally, the constraints \({\varvec{\mathsf {g}}}_{{\mathsf {mt}}}({\varvec{\mathsf {d}}}_{n+1})={\varvec{\mathsf {0}}}\) are already enforced at time \(t=0\) to ensure angular momentum conservation. Thus, the right-hand side of the linearized constraint equation in (91) simply reduces to zero.
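The structure of one such Newton step can be sketched as a dense saddle-point solve in plain Python. The sketch is generic. `K` and `r` are placeholders for \({\varvec{\mathsf {K}}}_{{\mathsf {effdyn}}}\) and \({\varvec{\mathsf {r}}}_{{\mathsf {effdyn}}}\), and the generalized-\(\alpha \) details are not modeled. The right-hand side of the constraint rows is set to zero, as argued above. The toy data are hypothetical: a node of group \(\mathcal {N}\) is loaded by a unit force and connected by a spring of stiffness 1 to a slave node. That slave node is tied to a master node, which is anchored by a spring of stiffness 2. Because this toy problem is linear, a single step reaches the solution.

```python
def solve_dense(A, b):
    """Gaussian elimination with partial pivoting (the zero block needs pivoting)."""
    n = len(b)
    aug = [list(row) + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[piv][col]) < 1e-14:
            raise ValueError("singular system")
        aug[col], aug[piv] = aug[piv], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (aug[r][n] - sum(aug[r][c] * x[c] for c in range(r + 1, n))) / aug[r][r]
    return x


def tied_newton_step(K, B, r):
    """Solve [[K, B^T], [B, 0]] [dd; lam] = [-r; 0] and return (dd, lam)."""
    ndof, nco = len(K), len(B)
    A = [K[i] + [B[j][i] for j in range(nco)] for i in range(ndof)]
    A += [B[j] + [0.0] * nco for j in range(nco)]
    sol = solve_dense(A, [-ri for ri in r] + [0.0] * nco)
    return sol[:ndof], sol[ndof:]


# Hypothetical toy data, DOFs ordered (d_N, d_M, d_S) and starting from d = 0.
K = [[1.0, 0.0, -1.0],
     [0.0, 2.0, 0.0],
     [-1.0, 0.0, 1.0]]
f_ext = [1.0, 0.0, 0.0]
r = [-f for f in f_ext]           # r = K d - f_ext evaluated at d = 0
B = [[0.0, -1.0, 1.0]]            # matching nodes: D = M = 1
dd, lam = tied_newton_step(K, B, r)
```

Here the increments come out as \((1.5, 0.5, 0.5)\) and the multiplier equals the transmitted force 1. This matches two unit-load springs of stiffness 1 and 2 in series. For realistic problem sizes, the dense elimination would be replaced by a sparse direct or iterative solver.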