Abstract

In many applications such as data compression, imaging or genomic data analysis, it is important to approximate a given tensor by a tensor that is sparsely representable. For matrices, i.e. 2-tensors, such a representation can be obtained via the singular value decomposition, which allows one to compute best rank-k approximations. For very big matrices a low rank approximation using the SVD is not computationally feasible. In this case different approximations are available. It seems that variants of the CUR-decomposition are most suitable. For d-mode tensors \(\mathcal{T} \in \otimes _{i=1}^{d}\mathbb{R}^{n_{i}}\), with d > 2, many generalizations of the singular value decomposition have been proposed to obtain low tensor rank decompositions. The most appropriate approximation seems to be the best \((r_{1},\ldots,r_{d})\)-approximation, which maximizes the \(\ell_{2}\) norm of the projection of \(\mathcal{T}\) on \(\otimes_{i=1}^{d}\mathbf{U}_{i}\), where \(\mathbf{U}_{i}\) is an \(r_{i}\)-dimensional subspace of \(\mathbb{R}^{n_{i}}\). One of the most common methods is the alternating maximization method (AMM). It is obtained by maximizing over one subspace \(\mathbf{U}_{i}\), while keeping all the others fixed, and alternating the procedure repeatedly for i = 1, …, d. Usually, AMM will converge to a local best approximation. This approximation is a fixed point of a corresponding map on Grassmannians. We suggest a Newton method for finding the corresponding fixed point. We also discuss variants of the CUR-approximation method for tensors. The first part of the paper is a survey on low rank approximation of tensors. The second part is new: a Newton method for the best \((r_{1},\ldots,r_{d})\)-approximation. We compare different approximation methods numerically.

1 Introduction

Let \(\mathbb{R}\) be the field of real numbers. Denote by \(\mathbb{R}^{\mathbf{n}} = \mathbb{R}^{n_{1}\times \ldots \times n_{d}}:= \otimes _{i=1}^{d}\mathbb{R}^{n_{i}}\), where \(\mathbf{n} = (n_{1},\ldots,n_{d})\), the tensor product of \(\mathbb{R}^{n_{1}},\ldots, \mathbb{R}^{n_{d}}\). A tensor \(\mathcal{T} = [t_{i_{1},\ldots,i_{d}}] \in \mathbb{R}^{\mathbf{n}}\) is called a d-mode tensor. Note that the number of coordinates of \(\mathcal{T}\) is \(N = n_{1}\cdots n_{d}\). A tensor \(\mathcal{T}\) is called sparsely representable if it can be represented with a number of coordinates that is much smaller than N.

Apart from sparse matrices, the best known example of a sparsely representable 2-tensor is a low rank approximation of a matrix \(A \in \mathbb{R}^{n_{1}\times n_{2}}\). A rank k-approximation of A is given by \(A_{\text{appr}}:=\sum _{ i=1}^{k}\mathbf{u}_{i}\mathbf{v}_{i}^{\top }\), which can be identified with \(\sum _{i=1}^{k}\mathbf{u}_{i} \otimes \mathbf{v}_{i}\). To store A appr we need only the 2k vectors \(\mathbf{u}_{1},\ldots,\mathbf{u}_{k} \in \mathbb{R}^{n_{1}},\;\mathbf{v}_{1},\ldots,\mathbf{v}_{k} \in \mathbb{R}^{n_{2}}\). A best rank k-approximation of \(A \in \mathbb{R}^{n_{1}\times n_{2}}\) can be computed via the singular value decomposition, abbreviated here as SVD, [19]. Recall that if A is a real symmetric matrix, then the best rank k-approximation must be symmetric, and is determined by the spectral decomposition of A.

The computation of the SVD requires \(\mathcal{O}(n_{1}n_{2}^{2})\) operations and at least \(\mathcal{O}(n_{1}n_{2})\) storage, assuming that \(n_{2} \leq n_{1}\). Thus, if the dimensions \(n_{1}\) and \(n_{2}\) are very large, then the computation of the SVD is often infeasible. In this case other types of low rank approximations are considered, see e.g. [1, 5, 7, 11, 13, 18, 21].

For d-tensors with d > 2 the situation is rather unsatisfactory. It is a major theoretical and computational problem to formulate good generalizations of low rank approximation for tensors and to give efficient algorithms to compute these approximations, see e.g. [3, 4, 8, 13, 15, 29, 30, 33, 35, 36, 43].

We now discuss briefly the main ideas of the approximation methods for tensors discussed in this paper. We need to introduce (mostly) standard notation for tensors. Let [n]: = { 1, …, n} for \(n \in \mathbb{N}\). For \(\mathbf{x}_{i}:= (x_{1,i},\ldots,x_{n_{i},i})^{\top }\in \mathbb{R}^{n_{i}},i \in [d]\), the tensor \(\otimes _{i\in [d]}\mathbf{x}_{i} = \mathbf{x}_{1} \otimes \cdots \otimes \mathbf{x}_{d} = \mathcal{X} = [x_{j_{1},\ldots,j_{d}}] \in \mathbb{R}^{\mathbf{n}}\) is called a decomposable tensor, or rank one tensor if x i ≠ 0 for i ∈ [d]. That is, \(x_{j_{1},\ldots,j_{d}} = x_{j_{1},1}\cdots x_{j_{d},d}\) for j i ∈ [n i ], i ∈ [d]. Let \(\langle \mathbf{x}_{i},\mathbf{y}_{i}\rangle _{i}:= \mathbf{y}_{i}^{\top }\mathbf{x}_{i}\) be the standard inner product on \(\mathbb{R}^{n_{i}}\) for i ∈ [d]. Assume that \(\mathcal{S} = [s_{j_{1},\ldots,j_{d}}]\) and \(\mathcal{T} = [t_{j_{1},\ldots,j_{d}}]\) are two given tensors in \(\mathbb{R}^{\mathbf{n}}\). Then \(\langle \mathcal{S},\mathcal{T}\rangle:=\sum _{j_{i}\in [n_{i}],i\in [d]}s_{j_{1},\ldots,j_{d}}t_{j_{1},\ldots,j_{d}}\) is the standard inner product on \(\mathbb{R}^{\mathbf{n}}\). Note that

\[\Big\langle \otimes_{i\in[d]}\mathbf{x}_{i},\ \otimes_{i\in[d]}\mathbf{y}_{i}\Big\rangle =\prod_{i\in[d]}\langle \mathbf{x}_{i},\mathbf{y}_{i}\rangle_{i}.\]

The norm \(\|\mathcal{T}\|:= \sqrt{\langle \mathcal{T}, \mathcal{T}\rangle }\) is called the Hilbert-Schmidt norm. (For matrices, i.e. d = 2, it is called the Frobenius norm.)
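
The inner product and the Hilbert-Schmidt norm defined above are easy to check numerically. The following is a minimal NumPy sketch for d = 3; the sizes, the random seed and the helper names `rank_one`, `inner` and `hs_norm` are illustrative assumptions, not part of the paper. It also checks the standard fact that the inner product of two decomposable tensors is the product of the inner products of their factors.

```python
import numpy as np

rng = np.random.default_rng(0)
dims = (3, 4, 5)  # hypothetical sizes n_1, n_2, n_3
xs = [rng.standard_normal(n) for n in dims]
ys = [rng.standard_normal(n) for n in dims]


def rank_one(vectors):
    """Decomposable 3-tensor x_1 (x) x_2 (x) x_3 as a NumPy array."""
    return np.einsum("i,j,k->ijk", *vectors)


def inner(S, T):
    """Standard inner product: sum of entrywise products."""
    return float(np.sum(S * T))


def hs_norm(T):
    """Hilbert-Schmidt norm sqrt(<T, T>)."""
    return float(np.sqrt(inner(T, T)))


X, Y = rank_one(xs), rank_one(ys)
lhs = inner(X, Y)                                      # <X, Y> on the tensor space
rhs = float(np.prod([x @ y for x, y in zip(xs, ys)]))  # product of factor inner products
```

For d = 2 the function `hs_norm` reduces to the Frobenius norm of a matrix.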

Let I = { 1 ≤ i 1 < ⋯ < i l ≤ d} ⊂ [d]. Assume that \(\mathcal{X} = [x_{j_{i_{ 1}},\cdots \,,j_{i_{l}}}] \in \otimes _{k\in [l]}\mathbb{R}^{n_{i_{k}} }\). Then the contraction \(\mathcal{T} \times \mathcal{X}\) on the set of indices I is given by:

\[\mathcal{T}\times\mathcal{X} =\sum_{j_{i_{k}}\in[n_{i_{k}}],\,k\in[l]} t_{j_{1},\ldots,j_{d}}\,x_{j_{i_{1}},\ldots,j_{i_{l}}} \in \otimes_{p\in[d]\setminus I}\mathbb{R}^{n_{p}}.\]

Assume that \(\mathbf{U}_{i} \subset \mathbb{R}^{n_{i}}\) is a subspace of dimension \(r_{i}\) with an orthonormal basis \(\mathbf{u}_{1,i},\ldots,\mathbf{u}_{r_{i},i}\) for i ∈ [d]. Let \(\mathbf{U}:= \otimes _{i=1}^{d}\mathbf{U}_{i} \subset \mathbb{R}^{\mathbf{n}}\). Then \(\otimes _{i=1}^{d}\mathbf{u}_{j_{i},i}\), where \(j_{i} \in [r_{i}]\), i ∈ [d], is an orthonormal basis in U. We are approximating \(\mathcal{T} \in \mathbb{R}^{n_{1}\times \cdots \times n_{d}}\) by a tensor

\[\mathcal{S} =\sum_{j_{i}\in[r_{i}],\,i\in[d]} s_{j_{1},\ldots,j_{d}}\,\mathbf{u}_{j_{1},1}\otimes\cdots\otimes\mathbf{u}_{j_{d},d} \in \mathbf{U}. \tag{14.1}\]

The tensor \(\mathcal{S}' = [s_{j_{1},\ldots,j_{d}}] \in \mathbb{R}^{r_{1}\times \cdots \times r_{d}}\) is the core tensor corresponding to \(\mathcal{S}\) in the terminology of [42].

There are two major problems: The first one is how to choose the subspaces U 1, …, U d . The second one is the choice of the core tensor \(\mathcal{S}'\). Suppose we already made the choice of U 1, …, U d . Then \(\mathcal{S} = P_{\mathbf{U}}(\mathcal{T} )\) is the orthogonal projection of \(\mathcal{T}\) on U:

\[P_{\mathbf{U}}(\mathcal{T}) =\sum_{j_{i}\in[r_{i}],\,i\in[d]} \Big\langle \mathcal{T},\otimes_{i=1}^{d}\mathbf{u}_{j_{i},i}\Big\rangle\, \otimes_{i=1}^{d}\mathbf{u}_{j_{i},i}.\]

If the dimensions \(n_{1},\ldots,n_{d}\) are not too big, then this projection can be carried out explicitly. If the dimensions \(n_{1},\ldots,n_{d}\) are too big to compute the above projection, then one needs to introduce other approximations. That is, one needs to compute the core tensor \(\mathcal{S}'\) appearing in (14.1) accordingly. The papers [1, 5, 7, 11, 13, 18, 21, 29, 33, 35, 36] essentially choose \(\mathcal{S}'\) in a particular way.

We now assume that the computation of \(P_{\mathbf{U}}(\mathcal{T} )\) is feasible. Recall that

\[\|P_{\mathbf{U}}(\mathcal{T})\|^{2} =\sum_{j_{i}\in[r_{i}],\,i\in[d]} \Big\langle \mathcal{T},\otimes_{i=1}^{d}\mathbf{u}_{j_{i},i}\Big\rangle^{2}.\]

The best r-approximation of \(\mathcal{T}\), where r = (r 1, …, r d ), in Hilbert-Schmidt norm is the solution of the minimal problem:

\[\min_{\mathbf{U}_{i}\subset\mathbb{R}^{n_{i}},\ \dim\mathbf{U}_{i}=r_{i},\ i\in[d]}\ \big\|\mathcal{T}-P_{\otimes_{i\in[d]}\mathbf{U}_{i}}(\mathcal{T})\big\|. \tag{14.4}\]

This problem is equivalent to the following maximum

\[\max_{\mathbf{U}_{i}\subset\mathbb{R}^{n_{i}},\ \dim\mathbf{U}_{i}=r_{i},\ i\in[d]}\ \big\|P_{\otimes_{i\in[d]}\mathbf{U}_{i}}(\mathcal{T})\big\|. \tag{14.5}\]

The standard alternating maximization method, denoted by AMM, for solving (14.5) is to solve the maximum problem where all subspaces except \(\mathbf{U}_{i}\) are fixed. This maximum problem is equivalent to finding an \(r_{i}\)-dimensional subspace \(\mathbf{U}_{i}\) spanned by eigenvectors corresponding to the \(r_{i}\) biggest eigenvalues of a corresponding nonnegative definite matrix \(A_{i}(\mathbf{U}_{1},\ldots,\mathbf{U}_{i-1},\mathbf{U}_{i+1},\ldots,\mathbf{U}_{d}) \in \mathrm{ S}_{n_{i}}\). Alternating between \(\mathbf{U}_{1},\mathbf{U}_{2},\ldots,\mathbf{U}_{d}\) we obtain a nondecreasing sequence of norms of projections which converges to a value v. Usually, v is a critical value of \(\|P_{\otimes _{i\in [d]}\mathbf{U}_{i}}(\mathcal{T} )\|\). See [4] for details.
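
The AMM sweep described above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the implementation used in the paper: the function names, the random test tensor and the ranks are hypothetical, each subspace is represented by a matrix with orthonormal columns, and the nonnegative definite matrix for mode i is formed explicitly as \(WW^{\top}\), where W is the unfolding of the tensor contracted with the other bases.

```python
import numpy as np


def change_basis(T, Us, skip=None):
    """Contract every mode j != skip of T with the orthonormal basis Us[j]."""
    W = T
    for j, U in enumerate(Us):
        if j != skip:
            # Mode j of size n_j is replaced by r_j coordinates in the basis U.
            W = np.moveaxis(np.tensordot(W, U, axes=(j, 0)), -1, j)
    return W


def unfold(T, mode):
    """Mode unfolding: rows are indexed by the chosen mode."""
    return np.moveaxis(T, mode, 0).reshape(T.shape[mode], -1)


def projection_norm(T, Us):
    """Norm of the projection of T on the tensor product of the subspaces."""
    return float(np.linalg.norm(change_basis(T, Us)))


def amm_sweep(T, Us):
    """One AMM sweep: update each subspace in turn, the others kept fixed."""
    Us = list(Us)
    for i in range(T.ndim):
        W = unfold(change_basis(T, Us, skip=i), i)
        A_i = W @ W.T                            # symmetric, nonnegative definite
        _, eigvecs = np.linalg.eigh(A_i)         # eigenvalues in ascending order
        r_i = Us[i].shape[1]
        Us[i] = eigvecs[:, ::-1][:, :r_i]        # eigenvectors of the r_i largest
    return Us


rng = np.random.default_rng(0)
T = rng.standard_normal((6, 7, 8))               # hypothetical test tensor
ranks = (2, 3, 2)
Us = [np.linalg.qr(rng.standard_normal((n, r)))[0] for n, r in zip(T.shape, ranks)]

norms = [projection_norm(T, Us)]
for _ in range(10):
    Us = amm_sweep(T, Us)
    norms.append(projection_norm(T, Us))
```

The list `norms` records the nondecreasing sequence of projection norms; it is bounded above by the Hilbert-Schmidt norm of the tensor.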

Assume that r i = 1 for i ∈ [d]. Then \(\dim \mathbf{U}_{i} = 1\) for i ∈ [d]. In this case the minimal problem (14.4) is called a best rank one approximation of \(\mathcal{T}\). For d = 2 a best rank one approximation of a matrix \(\mathcal{T} = T \in \mathbb{R}^{n_{1}\times n_{2}}\) is accomplished by the first left and right singular vectors and the corresponding maximal singular value σ 1(T). The complexity of this computation is \(\mathcal{O}(n_{1}n_{2})\) [19]. Recall that the maximum (14.5) is equal to σ 1(T), which is also called the spectral norm \(\|T\|_{2}\). For d > 2 the maximum (14.5) is called the spectral norm of \(\mathcal{T}\), and denoted by \(\|\mathcal{T}\|_{\sigma }\). The fundamental result of Hillar-Lim [27] states that the computation of \(\|\mathcal{T}\|_{\sigma }\) is NP-hard in general. Hence the computation of best r-approximation is NP-hard in general.

Denote by \(\mathop{\mathrm{Gr}}\nolimits (r, \mathbb{R}^{n})\) the variety of all r-dimensional subspaces in \(\mathbb{R}^{n}\), which is called Grassmannian or Grassmann manifold. Let

\[\mathrm{Gr}(\mathbf{r},\mathbf{n}):=\mathrm{Gr}(r_{1},\mathbb{R}^{n_{1}})\times\cdots\times\mathrm{Gr}(r_{d},\mathbb{R}^{n_{d}}).\]

Usually, the AMM for best r-approximation of \(\mathcal{T}\) will converge to a fixed point of a corresponding map \(\mathbf{F}_{\mathcal{T}}:\mathop{ \mathrm{Gr}}\nolimits (\mathbf{r},\mathbf{n}) \rightarrow \mathop{\mathrm{Gr}}\nolimits (\mathbf{r},\mathbf{n})\). This observation enables us to give a new Newton method for finding a best r-approximation to \(\mathcal{T}\). For best rank one approximation the map \(\mathbf{F}_{\mathcal{T}}\) and the corresponding Newton method were stated in [14].

This paper consists of two parts. The first part surveys a number of common methods for low rank approximation of matrices and tensors. We did not cover all existing methods here. We were concerned mainly with the methods that the first author and his collaborators were studying, and closely related methods. The second part of this paper is a new contribution to Newton algorithms related to best r-approximations. These algorithms are different from the ones given in [8, 39, 43]. Our Newton algorithms are based on finding the fixed points of the map induced by the AMM. In general it is known that for large problems, where each \(n_{i}\) is big for i ∈ [d] and d ≥ 3, Newton methods are not efficient: the computation associated with the matrix of derivatives (the Jacobian) is too expensive in operations and time. In this case AMM or MAMM (modified AMM) are much more cost effective. This well known fact is demonstrated in our simulations.

We now briefly summarize the contents of this paper. In Sect. 14.2 we review the well known facts on the singular value decomposition (SVD) and its use for best rank-k approximation of matrices. For large matrices, approximation methods using the SVD are computationally infeasible. Section 14.3 discusses a number of approximation methods for matrices which do not use the SVD. The common feature of these methods is sampling of rows, or columns, or both to find a low rank approximation. The basic observation in Sect. 14.3.1 is that, with high probability, a best rank-k approximation of a given matrix based on a subspace spanned by the sampled rows is within a relative ε error of the best rank-k approximation given by the SVD. We list a few methods that use this observation. However, the complexity of finding a particular rank-k approximation to an m × n matrix is still \(\mathcal{O}(\mathit{kmn})\), as is the complexity of truncated SVD algorithms using Arnoldi or Lanczos methods [19, 31]. In Sect. 14.3.2 we recall the CUR-approximation introduced in [21]. The main idea of CUR-approximation is to choose k columns and k rows of A, viewed as matrices C and R, and then to choose a square matrix U of order k in such a way that CUR is an optimal approximation of A. The matrix U is chosen to be the inverse of the corresponding k × k submatrix A′ of A. The quality of the CUR-approximation can be determined by the ratio of \(\vert \det A'\vert\) to the maximum possible absolute value of a k × k minor of A. In practice one searches for this maximum using a number of random choices of such minors. A modification of this search algorithm is given in [13]. The complexity of storage of C, R, U is \(\mathcal{O}(k\max (m,n))\). The complexity of finding the value of each entry of CUR is \(\mathcal{O}(k^{2})\). The complexity of computation of CUR is \(\mathcal{O}(k^{2}mn)\). In Sect. 14.4 we survey CUR-approximation of tensors given in [13].

In Sect. 14.5 we discuss preliminary results on best r-approximation of tensors. In Sect. 14.5.1 we show that the minimum problem (14.4) is equivalent to the maximum problem (14.5). In Sect. 14.5.2 we discuss the notion of singular tuples and singular values of a tensor introduced in [32]. In Sect. 14.5.3 we recall the well known solution of maximizing \(\|P_{\otimes _{i\in [d]}\mathbf{U}_{i}}(\mathcal{T} )\|^{2}\) with respect to one subspace, while keeping the other subspaces fixed. In Sect. 14.6 we discuss AMM for best r-approximation and its variations. (In [4, 15] AMM is called alternating least squares, abbreviated as ALS.) In Sect. 14.6.1 we discuss the AMM on a product space. We mention a modified alternating maximization method and a 2-alternating maximization method, abbreviated as MAMM and 2AMM respectively, introduced in [15]. The MAMM method consists of choosing the one variable which gives the steepest ascent of AMM. 2AMM consists of maximization with respect to a pair of variables, while keeping all other variables fixed. In Sect. 14.6.2 we discuss briefly AMM and MAMM for best r-approximations of tensors. In Sect. 14.6.3 we give the complexity analysis of AMM for d = 3, \(r_{1} \approx r_{2} \approx r_{3}\) and \(n_{1} \approx n_{2} \approx n_{3}\). In Sect. 14.7 we state a working assumption of this paper that AMM converges to a fixed point of the induced map, which satisfies certain smoothness assumptions. Under these assumptions we can apply the Newton method, which can be stated in the standard form in \(\mathbb{R}^{L}\). Thus, we first do a number of AMM iterations and then switch to the Newton method. In Sect. 14.7.1 we give a simple application of these ideas to state a Newton method for best rank one approximation. This Newton method was suggested in [14]. It is different from the Newton method in [43]. The new contribution of this paper is the Newton method which is discussed in Sects. 14.8 and 14.9.

The advantage of our Newton method is its simplicity, which avoids the notions and tools of Riemannian geometry used for example in [8, 39]. In simulations that we ran, the Newton method in [8] was 20% faster than our Newton method for best r-approximation of 3-mode tensors. However, the number of iterations of our Newton method was 40% less than in [8]. In the last section we give numerical results of our methods for best r-approximation of tensors. In Sect. 14.11 we give numerical simulations of our different methods applied to a real computer tomography (CT) data set (the so-called MELANIX data set of OsiriX). The summary of these results is given in Sect. 14.12.

2 Singular Value Decomposition

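Let \(A \in \mathbb{R}^{m\times n}\setminus \{0\}\). We now recall well known facts on the SVD of A [19]. See [40] for the early history of the SVD. Assume that r = rank A. Then there exist two orthonormal sets of r vectors \(\mathbf{u}_{1},\ldots,\mathbf{u}_{r} \in \mathbb{R}^{m}\) and \(\mathbf{v}_{1},\ldots,\mathbf{v}_{r} \in \mathbb{R}^{n}\) such that we have:

\[A\mathbf{v}_{i}=\sigma_{i}(A)\mathbf{u}_{i},\quad A^{\top}\mathbf{u}_{i}=\sigma_{i}(A)\mathbf{v}_{i},\quad i\in[r],\qquad \sigma_{1}(A)\geq\cdots\geq\sigma_{r}(A)>0,\qquad A_{k}:=\sum_{i\in[k]}\sigma_{i}(A)\mathbf{u}_{i}\mathbf{v}_{i}^{\top},\ k\in[r],\quad A=A_{r}.\]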

The quantities u i , v i and σ i (A) are called the left, right i-th singular vectors and i-th singular value of A respectively, for i ∈ [r]. Note that u k and v k are uniquely defined up to ± 1 if and only if σ k−1(A) > σ k (A) > σ k+1(A). Furthermore for k ∈ [r − 1] the matrix A k is uniquely defined if and only if σ k (A) > σ k+1(A). Denote by \(\mathcal{R}(m,n,k) \subset \mathbb{R}^{m\times n}\) the variety of all matrices of rank at most k. Then A k is a best rank-k approximation of A:

\[\min_{B\in\mathcal{R}(m,n,k)}\|A-B\| =\|A-A_{k}\|.\]

Let \(\mathbf{U} \in \mathop{\mathrm{Gr}}\nolimits (p, \mathbb{R}^{m}),\mathbf{V} \in \mathop{\mathrm{Gr}}\nolimits (q, \mathbb{R}^{n})\). We identify U ⊗V with

\[\mathbf{U}\mathbf{V}^{\top}:=\mathrm{span}\{\mathbf{u}\mathbf{v}^{\top}:\ \mathbf{u}\in\mathbf{U},\ \mathbf{v}\in\mathbf{V}\}\subset\mathbb{R}^{m\times n}. \tag{14.7}\]

Then P U⊗V (A) is identified with the projection of A on UV ⊤ with respect to the standard inner product on \(\mathbb{R}^{m\times n}\) given by \(\langle X,Y \rangle =\mathop{ \mathrm{tr}}\nolimits XY ^{\top }\). Observe that

Hence

Thus

To compute U l ⋆, V l ⋆ and σ 1(A), …, σ l (A) of a large scale matrix A one can use Arnoldi or Lanczos methods [19, 31], which are implemented in the partial singular value decomposition. This requires a substantial number of matrix-vector multiplications with the matrix A and thus a complexity of at least \(\mathcal{O}(\mathit{lmn})\).
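
For a small dense matrix the best rank-k approximation discussed in this section can be computed directly from a full SVD. The sketch below uses NumPy's dense `np.linalg.svd` rather than an Arnoldi or Lanczos based partial SVD, which is an assumption made only to keep the example short; the matrix size, the seed and k are hypothetical. It checks that the Frobenius error of the truncation equals the square root of the sum of the squared discarded singular values.

```python
import numpy as np

rng = np.random.default_rng(1)
A = rng.standard_normal((8, 6))   # hypothetical small test matrix
k = 2

# Thin SVD; NumPy returns the singular values in decreasing order.
U, s, Vt = np.linalg.svd(A, full_matrices=False)

# A_k = sum_{i <= k} sigma_i u_i v_i^T
A_k = (U[:, :k] * s[:k]) @ Vt[:k, :]

err = np.linalg.norm(A - A_k)            # Frobenius norm of the residual
tail = np.sqrt(np.sum(s[k:] ** 2))       # sqrt(sigma_{k+1}^2 + ... + sigma_r^2)

# Any other matrix of rank at most k should not approximate A better.
B = rng.standard_normal((8, k)) @ rng.standard_normal((k, 6))
err_B = np.linalg.norm(A - B)
```

Storing `U[:, :k]`, `s[:k]` and `Vt[:k, :]` takes k(m + n + 1) numbers instead of mn, which is the sparse representation referred to in the Introduction.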

3 Sampling in Low Rank Approximation of Matrices

Let \(A = [a_{i,j}]_{i=j=1}^{m,n} \in \mathbb{R}^{m\times n}\) be given. Assume that \(\mathbf{b}_{1},\ldots,\mathbf{b}_{m} \in \mathbb{R}^{n}\) and \(\mathbf{c}_{1},\ldots,\mathbf{c}_{n} \in \mathbb{R}^{m}\) are the columns of \(A^{\top}\) and A respectively. (Thus \(\mathbf{b}_{1}^{\top},\ldots,\mathbf{b}_{m}^{\top}\) are the rows of A.) Most of the known fast rank-k approximation methods use sampling of rows or columns of A, or both.

3.1 Low Rank Approximations Using Sampling of Rows

Suppose that we sample a set

\[I=\{1\leq i_{1}<\cdots<i_{s}\leq m\}\subset[m] \tag{14.9}\]

of rows \(\mathbf{b}_{i_{1}}^{\top },\ldots,\mathbf{b}_{i_{s}}^{\top }\), where s ≥ k. Let \(\mathbf{W}(I):=\mathrm{ span}(\mathbf{b}_{i_{1}},\ldots,\mathbf{b}_{i_{s}})\). Then with high probability the projection of the i-th right singular vector \(\mathbf{v}_{i}\) on W(I) is very close to \(\mathbf{v}_{i}\) for i ∈ [k], provided that s ≫ k. In particular, [5, Theorem 2] claims:

Theorem 1 (Deshpande-Vempala)

Any \(A \in \mathbb{R}^{m\times n}\) contains a subset I of \(s = \frac{4k} {\epsilon } + 2k\log (k + 1)\) rows such that there is a matrix \(\tilde{A}_{k}\) of rank at most k whose rows lie in W (I) and

\[\|A-\tilde{A}_{k}\|^{2}\leq(1+\epsilon)\,\|A-A_{k}\|^{2}.\]

To find a rank-k approximation of A, one projects each row of A on W(I) to obtain the matrix \(P_{\mathbb{R}^{m}\otimes \mathbf{W}(I)}(A)\). Note that we can view \(P_{\mathbb{R}^{m}\otimes \mathbf{W}(I)}(A)\) as an m × s′ matrix, where \(s' =\dim \mathbf{W}(I) \leq s\). Then find a best rank k-approximation to \(P_{\mathbb{R}^{m}\otimes \mathbf{W}(I)}(A)\), denoted as \(P_{\mathbb{R}^{m}\otimes \mathbf{W}(I)}(A)_{k}\). Theorem 1 and the results of [18] yield that

Here η is proportional to \(\frac{k} {s}\), and can be decreased with more rounds of sampling. Note that the complexity of computing \(P_{\mathbb{R}^{m}\otimes \mathbf{W}(I)}(A)_{k}\) is \(\mathcal{O}(ks'm)\). The key weakness of this method is that to compute \(P_{\mathbb{R}^{m}\otimes \mathbf{W}(I)}(A)\) one needs \(\mathcal{O}(s'mn)\) operations. Indeed, after having computed an orthonormal basis of W(I), to compute the projection of each row of A on W(I) one needs s′n multiplications.

An approach for finding low rank approximations of A using random sampling of rows or columns is given in Friedland-Kave-Niknejad-Zare [11]. Start with a random choice of I rows of A, where | I | ≥ k and \(\dim \mathbf{W}(I) \geq k\). Find \(P_{\mathbb{R}^{m}\otimes \mathbf{W}(I)}(A)\) and \(B_{1}:= P_{\mathbb{R}^{m}\otimes \mathbf{W}(I)}(A)_{k}\) as above. Let \(\mathbf{U}_{1} \in \mathop{\mathrm{Gr}}\nolimits (k, \mathbb{R}^{m})\) be the subspace spanned by the first k left singular vectors of B 1. Find \(B_{2} = P_{\mathbf{U}_{1}\otimes \mathbb{R}^{n}}(A)\). Let \(\mathbf{V}_{2} \in \mathop{\mathrm{Gr}}\nolimits (k, \mathbb{R}^{n})\) correspond to the first k right singular vectors of B 2. Continuing in this manner we obtain a sequence of rank k-approximations B 1, B 2, …. It is shown in [11] that \(\|A - B_{1}\| \geq \| A - B_{2}\| \geq \ldots\) and \(\|B_{1}\| \leq \| B_{2}\| \leq \ldots\). One stops the iterations when the relative improvement of the approximation falls below the specified threshold. Assume that σ k (A) > σ k+1(A). Since best rank-k approximation is a unique local minimum for the function \(\|A - B\|,B \in \mathcal{R}(m,n,k)\) [19], it follows that in general the sequence \(B_{j},j \in \mathbb{N}\) converges to A k . It is straightforward to show that this algorithm is the AMM for low rank approximations given in Sect. 14.6.2. Again, the complexity of this method is \(\mathcal{O}({\it \text{kmn}})\).
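
The alternating iteration described above can be sketched as follows. This is a minimal NumPy illustration, not the implementation of [11]: the function name `alternating_low_rank`, the test matrix, the number of sampled rows and the fixed iteration count are assumptions, dense SVDs are used for every step, and the sampled rows are assumed to be linearly independent so that the QR factor spans W(I).

```python
import numpy as np


def alternating_low_rank(A, k, rows, iters=20):
    """Alternate between left and right rank-k projections of A."""
    # Orthonormal basis of W(I), the span of the sampled rows (n x s).
    Q, _ = np.linalg.qr(A[rows, :].T)
    P = (A @ Q) @ Q.T                       # each row of A projected on W(I)
    U, s, Vt = np.linalg.svd(P, full_matrices=False)
    B = (U[:, :k] * s[:k]) @ Vt[:k, :]      # B_1: best rank-k approximation of P
    errors = [np.linalg.norm(A - B)]
    for it in range(iters):
        if it % 2 == 0:
            # First k left singular vectors of B, then project the columns of A.
            Uk = np.linalg.svd(B, full_matrices=False)[0][:, :k]
            B = Uk @ (Uk.T @ A)
        else:
            # First k right singular vectors of B, then project the rows of A.
            Vk = np.linalg.svd(B, full_matrices=False)[2][:k, :].T
            B = (A @ Vk) @ Vk.T
        errors.append(np.linalg.norm(A - B))
    return B, errors


rng = np.random.default_rng(2)
m, n, k = 30, 20, 3
# Hypothetical test matrix: rank k plus small noise.
A = rng.standard_normal((m, k)) @ rng.standard_normal((k, n))
A = A + 0.01 * rng.standard_normal((m, n))
rows = rng.choice(m, size=6, replace=False)

B, errors = alternating_low_rank(A, k, rows)
best = np.sqrt(np.sum(np.linalg.svd(A, compute_uv=False)[k:] ** 2))
```

The list `errors` is nonincreasing, in line with the inequalities quoted from [11], and it can never drop below `best`, the error of the SVD truncation. A practical version would stop when the relative improvement falls below a threshold instead of running a fixed number of iterations.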

Other suggested methods, such as [1, 7, 37, 38], seem to have the same complexity \(\mathcal{O}({\it \text{kmn}})\), since they project each row of A on some k-dimensional subspace of \(\mathbb{R}^{n}\).

3.2 CUR-Approximations

Let

\[J=\{1\leq j_{1}<\cdots<j_{t}\leq n\}\subset[n]\]

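and I ⊂ [m] as in (14.9) be given. Denote \(A[I,J]:= [a_{i_{p},j_{q}}]_{p=q=1}^{s,t} \in \mathbb{R}^{s\times t}\). CUR-approximation is based on sampling simultaneously the set of I rows and J columns of A, and the approximation matrix A(I, J, U) to A is given by

\[A(I,J,U):=CUR,\quad C:=A[[m],J]\in\mathbb{R}^{m\times t},\quad R:=A[I,[n]]\in\mathbb{R}^{s\times n},\quad U\in\mathbb{R}^{t\times s}.\]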

Once the sets I and J are chosen, the approximation A(I, J, U) depends on the choice of U. Clearly the row and the column spaces of A(I, J, U) are contained in the row and column spaces of A[I, [n]] and A[[m], J] respectively. Note that to store the approximation A(I, J, U) we need to store the matrices C, R and U. The number of these entries is tm + sn + st. So if n, m are of order \(10^{5}\) and s, t are of order \(10^{2}\), then C, R, U can be stored in Random Access Memory (RAM), while the entries of A are stored in external memory. To compute an entry of A(I, J, U), which is an approximation of the corresponding entry of A, we need st flops.

Let U and V be subspaces spanned by the columns of A[[m], J] and A[I, [n]]⊤ respectively. Then A(I, J, U) ∈ UV ⊤, see (14.7).

Clearly, a best CUR approximation is chosen by the least squares principle:

\[\min_{U\in\mathbb{R}^{t\times s}}\ \sum_{(i,j)\in[m]\times[n]}\big(a_{i,j}-(CUR)_{i,j}\big)^{2}. \tag{14.12}\]

The results in [17] show that the least squares solution of (14.12) is given by:

\[U^{\star}=A[[m],J]^{\dagger}\,A\,A[I,[n]]^{\dagger}. \tag{14.13}\]

Here F † denotes the Moore-Penrose pseudoinverse of a matrix F. Note that U ⋆ is unique if and only if

\[\operatorname{rank}A[[m],J]=t,\qquad \operatorname{rank}A[I,[n]]=s.\]

The complexities of computing \(A[[m],J]^{\dagger}\) and \(A[I,[n]]^{\dagger}\) are \(\mathcal{O}(t^{2}m)\) and \(\mathcal{O}(s^{2}n)\) respectively. Because of the multiplication formula for U ⋆, the complexity of computing U ⋆ is \(\mathcal{O}(\mathit{stmn})\).
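
The least squares choice of the middle factor can be illustrated numerically. The sketch below assumes the standard closed form, the pseudoinverse of the column sample times A times the pseudoinverse of the row sample, and uses `np.linalg.pinv`; the matrix, the index sets (zero-based) and the helper `cur_error` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(3)
m, n = 12, 9
A = rng.standard_normal((m, n))   # hypothetical test matrix
I = [0, 3, 7, 10]                 # s = 4 sampled rows (zero-based)
J = [1, 4, 8]                     # t = 3 sampled columns (zero-based)

C = A[:, J]                       # m x t
R = A[I, :]                       # s x n

# Least squares middle factor: pinv(C) A pinv(R), a t x s matrix.
U_star = np.linalg.pinv(C) @ A @ np.linalg.pinv(R)


def cur_error(U):
    """Frobenius norm of A - C U R."""
    return float(np.linalg.norm(A - C @ U @ R))


# Random perturbations of U_star should never give a smaller residual.
perturbed = [
    cur_error(U_star + 0.1 * rng.standard_normal(U_star.shape)) for _ in range(5)
]
```

Forming `U_star` touches every entry of A, which is why the cheaper choices of the middle factor discussed next are of interest for very large matrices.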

One can significantly improve the computation of U, if one tries to best fit the entries of the submatrix A[I′, J′] for given subsets I′ ⊂ [m], J′ ⊂ [n]. That is, let

\[U^{\star}(I',J'):=\arg\min_{U\in\mathbb{R}^{t\times s}}\ \sum_{(i,j)\in I'\times J'}\big(a_{i,j}-(CUR)_{i,j}\big)^{2} =A[I',J]^{\dagger}\,A[I',J']\,A[I,J']^{\dagger}. \tag{14.15}\]

(The last equality follows from (14.13).) The complexity of computation of U ⋆(I′, J′) is \(\mathcal{O}(st\vert I'\vert \vert J'\vert )\).

Suppose finally that I′ = I and J′ = J. Then (14.15) and the properties of the Moore-Penrose inverse yield that

In particular B(I, J)[I, J] = A[I, J]. Hence

The original CUR approximation of rank k has the form B(I, J) given by (14.17) [21].
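
A minimal NumPy sketch of this CUR approximation, assuming the square case s = t = k with an invertible submatrix A[I, J], so that the middle factor is the inverse of A[I, J]. The function name `cur_inverse`, the index sets (zero-based) and the test matrices are hypothetical. The sketch checks two standard facts: a matrix of rank exactly k is reproduced exactly, and for a general matrix the sampled rows and columns are reproduced.

```python
import numpy as np


def cur_inverse(A, I, J):
    """A[:, J] A[I, J]^{-1} A[I, :] for |I| = |J| and invertible A[I, J]."""
    # Solve a linear system instead of forming the inverse explicitly.
    return A[:, J] @ np.linalg.solve(A[np.ix_(I, J)], A[I, :])


rng = np.random.default_rng(4)
k = 3
I, J = [1, 4, 6], [0, 2, 5]       # hypothetical row and column samples

A_low = rng.standard_normal((10, k)) @ rng.standard_normal((k, 8))   # rank k
A_full = rng.standard_normal((10, 8))                                # full rank

B_low = cur_inverse(A_low, I, J)
B_full = cur_inverse(A_full, I, J)
```

Each entry of the approximation is a bilinear form in one row of `A[:, J]` and one column of `A[I, :]`, so it can be evaluated on demand without forming the whole matrix.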

Assume that rank A ≥ k. We want to choose an approximation B(I, J) of the form (14.17) which gives a good approximation to A. It is possible to give an upper estimate for the maximum of the absolute values of the entries of A − B(I, J) in terms of σ k+1(A), provided that detA[I, J] is relatively close to

Let

The results of [21, 22] yield:

(See also [10, Chapter 4, §13].)

To find μ k is probably an NP-hard problem in general [9]. A standard way to find μ k is either a random search or greedy search [9, 20]. In the special case when A is a symmetric positive definite matrix one can give the exact conditions when the greedy search gives a relatively good result [9].

In the paper by Friedland-Mehrmann-Miedlar-Nkengla [13] a good approximation B(I, J) of the form (14.17) is obtained by a random search on the maximum value of the product of the significant singular values of A[I, J]. The approximations found in this way are experimentally better than the approximations found by searching for μ k .

4 Fast Approximation of Tensors

The fast approximation of tensors can be based on several decompositions of tensors such as: the Tucker decomposition [42]; matricizations of tensors, i.e. unfolding and applying the SVD once or several times recursively (see below); the higher order singular value decomposition (HOSVD) [3]; Tensor-Train decompositions [34, 35]; and the hierarchical Tucker decomposition [23, 25]. A very recent survey [24] gives an overview of this dynamic field. In this paper we will discuss only the CUR-approximation.

4.1 CUR-Approximations of Tensors

Let \(\mathcal{T} \in \mathbb{R}^{n_{1}\times \ldots \times n_{d}}\). In this subsection we denote the entries of \(\mathcal{T}\) as \(\mathcal{T} (i_{1},\ldots,i_{d})\) for \(i_{j} \in [n_{j}]\) and j ∈ [d]. CUR-approximation of tensors is based on matricizations of tensors. The unfolding of \(\mathcal{T}\) in the mode l ∈ [d] consists of rearranging the entries of \(\mathcal{T}\) as a matrix \(T_{l}(\mathcal{T} ) \in \mathbb{R}^{n_{l}\times N_{l}}\), where \(N_{l} = \frac{\prod _{i\in [d]}n_{i}} {n_{l}}\). More generally, let K ∪ L = [d] be a partition of [d] into two disjoint nonempty sets. Denote \(N(K) =\prod _{i\in K}n_{i},N(L) =\prod _{j\in L}n_{j}\). Then unfolding \(\mathcal{T}\) into the two modes K and L consists of rearranging the entries of \(\mathcal{T}\) as a matrix \(T(K,L,\mathcal{T} ) \in \mathbb{R}^{N(K)\times N(L)}\).
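
The two kinds of unfolding can be written with NumPy axis permutations and reshapes. This is a sketch under assumptions: indices are zero-based, the grouped indices are ordered lexicographically (NumPy's row-major order), and the function names `unfold` and `unfold_groups` are hypothetical.

```python
import numpy as np


def unfold(T, mode):
    """Mode unfolding: an n_mode x (product of the other sizes) matrix."""
    return np.moveaxis(T, mode, 0).reshape(T.shape[mode], -1)


def unfold_groups(T, K, L):
    """Unfolding with respect to a partition K, L of the modes of T."""
    K, L = list(K), list(L)
    rows = int(np.prod([T.shape[i] for i in K]))
    cols = int(np.prod([T.shape[j] for j in L]))
    # Bring the modes in K to the front, then flatten each group.
    return np.transpose(T, K + L).reshape(rows, cols)


T3 = np.arange(24).reshape(2, 3, 4)         # hypothetical 3-mode tensor
T4 = np.arange(120).reshape(2, 3, 4, 5)     # hypothetical 4-mode tensor

M = unfold(T3, 1)                           # 3 x 8 matrix
X = unfold_groups(T4, (0, 1), (2, 3))       # 6 x 20 matrix
```

With this ordering the entry of `T4` at position (i1, i2, i3, i4) sits in row `i1 * 3 + i2` and column `i3 * 5 + i4` of `X`.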

We now describe briefly the CUR-approximation of 3 and 4-tensors as described by Friedland-Mehrmann-Miedlar-Nkengla [13]. (See [33] for another approach to CUR-approximations for tensors.) We start with the case d = 3. Let I i be a nonempty subset of [n i ] for i ∈ [3]. Assume that the following conditions hold:

We identify [n 2] × [n 3] with [n 2 n 3] using a lexicographical order. We now take the CUR-approximation of \(T_{1}(\mathcal{T} )\) as given in (14.17):

We view \(T_{1}(\mathcal{T} )[[n_{1}],J]\) as an n 1 × k 2 matrix. For each α 1 ∈ I 1 we view \(T_{1}(\mathcal{T} )[\{\alpha _{1}\},[n_{2}n_{3}]]\) as an n 2 × n 3 matrix \(Q(\alpha _{1}):= [\mathcal{T} (\alpha _{1},i_{2},i_{3})]_{i_{2}\in [n_{2}],i_{3}\in [n_{3}]}\). Let R(α 1) be the CUR-approximation of Q(α 1) based on the sets I 2, I 3:

Let \(F:= T_{1}(\mathcal{T} )[I_{1},J]^{-1} \in \mathbb{R}^{k^{2}\times k^{2} }\). We view the entries of this matrix indexed by the row (α 2, α 3) ∈ I 2 × I 3 and column α 1 ∈ I 1. We write these entries as \(\mathcal{F}(\alpha _{1},\alpha _{2},\alpha _{3}),\alpha _{j} \in I_{j},j \in [3]\), which represent a tensor \(\mathcal{F}\in \mathbb{R}^{I_{1}\times I_{2}\times I_{3}}\). The entries of Q(α 1)[I 2, I 3]−1 are indexed by the row α 3 ∈ I 3 and column α 2 ∈ I 2. We write these entries as \(\mathcal{G}(\alpha _{1},\alpha _{2},\alpha _{3}),\alpha _{2} \in I_{2},\alpha _{3} \in I_{3}\), which represent a tensor \(\mathcal{G}\in \mathbb{R}^{I_{1}\times I_{2}\times I_{3}}\). Then the approximation tensor \(\mathcal{B} = [\mathcal{B}(j_{1},j_{2},j_{3})] \in \mathbb{R}^{n_{1}\times n_{2}\times n_{3}}\) is given by:

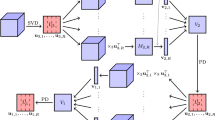

We now discuss a CUR-approximation for 4-tensors, i.e. d = 4. Let \(\mathcal{T} \in \mathbb{R}^{n_{1}\times n_{2}\times n_{3}\times n_{4}}\) and K = { 1, 2}, L = { 3, 4}. The rows and columns of \(X:= T(K,L,\mathcal{T} ) \in \mathbb{R}^{(n_{1}n_{2})\times (n_{3}n_{4})}\) are indexed by pairs (i 1, i 2) and (i 3, i 4) respectively. Let

First consider the CUR-approximation X[[n 1 n 2], J 2]X[J 1, J 2]−1 X[J 1, [n 3 n 4]] viewed as tensor \(\mathcal{C}\in \mathbb{R}^{n_{1}\times n_{2}\times n_{3}\times n_{4}}\). Denote by \(\mathcal{H}(\alpha _{1},\alpha _{2},\alpha _{3},\alpha _{4})\) the ((α 3, α 4), (α 1, α 2)) entry of the matrix X[J 1, J 2]−1. So \(\mathcal{H}\in \mathbb{R}^{I_{1}\times I_{2}\times I_{3}\times I_{4}}\). Then

For α j ∈ I j , j ∈ [4] view vectors X[[n 1 n 2], (α 3, α 4)] and X[(α 1, α 2), [n 3 n 4]] as matrices \(Y (\alpha _{3},\alpha _{4}) \in \mathbb{R}^{n_{1}\times n_{2}}\) and \(Z(\alpha _{1},\alpha _{2}) \in \mathbb{R}^{n_{3}\times n_{4}}\) respectively. Next we find the CUR-approximations to these two matrices using the subsets (I 1, I 2) and (I 3, I 4) respectively:

We denote the entries of Y (α 3, α 4)[I 1, I 2]−1 and Z(α 1, α 2)[I 3, I 4]−1 by

respectively. Then the CUR-approximation tensor \(\mathcal{B}\) of \(\mathcal{T}\) is given by:

We now discuss briefly the complexity of the storage and computing an entry of the CUR-approximation \(\mathcal{B}\). Assume first that d = 3. Then we need to store \(k^{2}\) columns of the matrix \(T_{1}(\mathcal{T} )\), \(k^{3}\) columns of \(T_{2}(\mathcal{T} )\) and \(T_{3}(\mathcal{T} )\), and \(k^{4}\) entries of the tensors \(\mathcal{F}\) and \(\mathcal{G}\). The total storage space is \(k^{2}n_{1} + k^{3}(n_{2} + n_{3}) + 2k^{4}\). To compute each entry of \(\mathcal{B}\) we need to perform \(4k^{6}\) multiplications and \(k^{6}\) additions.

Assume now that d = 4. Then we need to store \(k^{3}\) columns of \(T_{l}(\mathcal{T} ),l \in [4]\) and \(k^{4}\) entries of \(\mathcal{F},\mathcal{G},\mathcal{H}\). Total storage needed is \(k^{3}(n_{1} + n_{2} + n_{3} + n_{4} + 3k)\). To compute each entry of \(\mathcal{B}\) we need to perform \(6k^{8}\) multiplications and \(k^{8}\) additions.

5 Preliminary Results on Best r-Approximation

5.1 The Maximization Problem

We first show that the best approximation problem (14.4) is equivalent to the maximum problem (14.5), see [4] and [26, §10.3]. The Pythagoras theorem yields that

\[\|\mathcal{T}\|^{2}=\big\|P_{\otimes_{i=1}^{d}\mathbf{U}_{i}}(\mathcal{T})\big\|^{2}+\big\|P_{(\otimes_{i=1}^{d}\mathbf{U}_{i})^{\perp}}(\mathcal{T})\big\|^{2},\qquad \big\|\mathcal{T}-P_{\otimes_{i=1}^{d}\mathbf{U}_{i}}(\mathcal{T})\big\|=\big\|P_{(\otimes_{i=1}^{d}\mathbf{U}_{i})^{\perp}}(\mathcal{T})\big\|.\]

(Here (⊗ i = 1 d U i ) ⊥ is the orthogonal complement of ⊗ i = 1 d U i in \(\otimes _{i=1}^{d}\mathbb{R}^{n_{i}}\).) Hence

\[\min_{\dim\mathbf{U}_{i}=r_{i},\,i\in[d]}\big\|\mathcal{T}-P_{\otimes_{i=1}^{d}\mathbf{U}_{i}}(\mathcal{T})\big\|^{2} =\|\mathcal{T}\|^{2}-\max_{\dim\mathbf{U}_{i}=r_{i},\,i\in[d]}\big\|P_{\otimes_{i=1}^{d}\mathbf{U}_{i}}(\mathcal{T})\big\|^{2}.\]

This shows the equivalence of (14.4) and (14.5).
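
The Pythagoras identity behind this equivalence can be verified numerically for d = 3. In the following sketch the tensor, the ranks and the random orthonormal bases are hypothetical; the projection is computed by forming the core tensor with `np.einsum` and expanding it back in the chosen bases.

```python
import numpy as np

rng = np.random.default_rng(5)
T = rng.standard_normal((4, 5, 6))        # hypothetical test tensor
ranks = (2, 3, 2)

# Random orthonormal bases of the subspaces U_1, U_2, U_3 (via QR).
Us = [np.linalg.qr(rng.standard_normal((n, r)))[0] for n, r in zip(T.shape, ranks)]

# Core tensor: coordinates of the projection in the product basis.
core = np.einsum("ijk,ia,jb,kc->abc", T, *Us)

# Orthogonal projection of T on the tensor product of the subspaces.
P = np.einsum("abc,ia,jb,kc->ijk", core, *Us)

total = np.linalg.norm(T) ** 2
proj = np.linalg.norm(P) ** 2
resid = np.linalg.norm(T - P) ** 2
```

Since `total` is fixed, making `resid` small is the same as making `proj` large, and `proj` can be read off the much smaller core tensor.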

5.2 Singular Values and Singular Tuples of Tensors

Let \(\mathrm{S}(n) =\{ \mathbf{x} \in \mathbb{R}^{n},\;\|\mathbf{x}\| = 1\}\). Note that one dimensional subspace \(\mathbf{U} \in \mathop{\mathrm{Gr}}\nolimits (1, \mathbb{R}^{n})\) is span(u), where u ∈ S(n). Let S(n): = S(n 1) ×⋯ × S(n d ). Then best rank one approximation problem for \(\mathcal{T} \in \mathbb{R}^{\mathbf{n}}\) is equivalent to finding

\[\max_{(\mathbf{x}_{1},\ldots,\mathbf{x}_{d})\in\mathrm{S}(\mathbf{n})}\Big\langle\mathcal{T},\otimes_{i\in[d]}\mathbf{x}_{i}\Big\rangle.\]

Let \(f_{\mathcal{T}}: \mathbb{R}^{\mathbf{n}} \rightarrow \mathbb{R}\) be given by \(f_{\mathcal{T}}(\mathcal{X}) =\langle \mathcal{X},\mathcal{T}\rangle\). Denote by \(\mathrm{S}'(\mathbf{n}) \subset \mathbb{R}^{\mathbf{n}}\) all rank one tensors of the form \(\otimes_{i\in[d]}\mathbf{x}_{i}\), where \((\mathbf{x}_{1},\ldots,\mathbf{x}_{d}) \in \mathrm{S}(\mathbf{n})\). Let \(f_{\mathcal{T}}(\mathbf{x}_{1},\ldots,\mathbf{x}_{d}):= f_{\mathcal{T}}(\otimes _{i\in [d]}\mathbf{x}_{i})\). Then the critical points of \(f_{\mathcal{T}}\vert \mathrm{S}'(\mathbf{n})\) are given by the Lagrange multipliers formulas [32]:

\[\mathcal{T}\times\big(\otimes_{j\in[d]\setminus\{i\}}\mathbf{u}_{j}\big)=\lambda\,\mathbf{u}_{i},\quad i\in[d],\qquad(\mathbf{u}_{1},\ldots,\mathbf{u}_{d})\in\mathrm{S}(\mathbf{n}). \tag{14.23}\]

One calls λ and (u 1, …, u d ) a singular value and singular tuple of \(\mathcal{T}\). For d = 2 these are the singular values and singular vectors of \(\mathcal{T}\). The number of complex singular values of a generic \(\mathcal{T}\) is given in [16]. This number increases exponentially with d. For example for n 1 = ⋯ = n d = 2 the number of distinct singular values is d! . (The number of real singular values as given by (14.23) is bounded by the numbers given in [16].)

Consider first the maximization problem of \(f_{\mathcal{T}}(\mathbf{x}_{1},\ldots,\mathbf{x}_{d})\) over S(n) where we vary x i ∈ S(n i ) and keep the other variables fixed. This problem is equivalent to the maximization of the linear form \(\mathbf{x}_{i}^{\top }(\mathcal{T} \times (\otimes _{j\in [d]\setminus \{i\}}\mathbf{x}_{j}))\). Note that if \(\mathcal{T} \times (\otimes _{j\in [d]\setminus \{i\}}\mathbf{x}_{j})\neq \mathbf{0}\) then this maximum is achieved for \(\mathbf{x}_{i} = \frac{1} {\|\mathcal{T}\times (\otimes _{j\in [d]\setminus \{i\}}\mathbf{x}_{j})\|}\mathcal{T} \times (\otimes _{j\in [d]\setminus \{i\}}\mathbf{x}_{j})\).
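
The one-variable update above, applied cyclically to all modes, gives the alternating maximization iteration for best rank one approximation. The following NumPy sketch is an illustration under assumptions: the names `contract_all_but` and `rank_one_amm`, the random start and the fixed number of sweeps are hypothetical, and the contraction is assumed to be nonzero at every step.

```python
import numpy as np


def contract_all_but(T, xs, i):
    """Contract T with xs[j] for every mode j != i; returns a vector in R^{n_i}."""
    g = T
    # Go from the last mode to the first so that axis numbers stay valid.
    for j in reversed(range(T.ndim)):
        if j != i:
            g = np.tensordot(g, xs[j], axes=(j, 0))
    return g


def rank_one_amm(T, sweeps=20, seed=0):
    """Cyclic one-variable maximization of <T, x_1 (x) ... (x) x_d> on unit spheres."""
    rng = np.random.default_rng(seed)
    xs = [v / np.linalg.norm(v) for v in (rng.standard_normal(n) for n in T.shape)]
    values = []
    for _ in range(sweeps):
        for i in range(T.ndim):
            g = contract_all_but(T, xs, i)
            xs[i] = g / np.linalg.norm(g)     # assumes the contraction g is nonzero
        # After the last update the objective equals the norm of g.
        values.append(float(np.linalg.norm(g)))
    return xs, values


rng = np.random.default_rng(6)
T = rng.standard_normal((4, 5, 6))            # hypothetical test tensor
xs, values = rank_one_amm(T)

# Sanity check on a rank one tensor 3 * a (x) b (x) c with unit vectors a, b, c.
a, b, c = (v / np.linalg.norm(v) for v in
           (rng.standard_normal(4), rng.standard_normal(5), rng.standard_normal(6)))
R1 = 3.0 * np.einsum("i,j,k->ijk", a, b, c)
_, values_r1 = rank_one_amm(R1, sweeps=2)
```

The list `values` is nondecreasing and bounded by the Hilbert-Schmidt norm of the tensor. Its limit is a singular value of the tensor in the sense above, but it need not be the spectral norm, since the iteration may stop at a local maximum.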

Consider second the maximization problem of \(f_{\mathcal{T}}(\mathbf{x}_{1},\ldots,\mathbf{x}_{d})\) over \(\mathrm{S}(\mathbf{n})\) where we vary \((\mathbf{x}_{i},\mathbf{x}_{j}) \in \mathrm{S}(n_{i}) \times \mathrm{S}(n_{j})\), 1 ≤ i < j ≤ d, and keep the other variables fixed. This problem is equivalent to finding the first singular value and the corresponding right and left singular vectors of the matrix \(\mathcal{T} \times (\otimes _{k\in [d]\setminus \{i,j\}}\mathbf{x}_{k})\). This can be done by using Arnoldi or Lanczos methods [19, 31]. The complexity of this method is \(\mathcal{O}(n_{i}n_{j})\), given the matrix \(\mathcal{T} \times (\otimes _{k\in [d]\setminus \{i,j\}}\mathbf{x}_{k})\).

5.3 A Basic Maximization Problem for Best r-Approximation

Denote by \(\mathrm{S}_{n} \subset \mathbb{R}^{n\times n}\) the space of real symmetric matrices. For A ∈ S n denote by λ 1(A) ≥ … ≥ λ n (A) the eigenvalues of A arranged in decreasing order and repeated according to their multiplicities. Let \(\mathrm{O}(n,k) \subset \mathbb{R}^{n\times k}\) be the set of all n × k matrices X with k orthonormal columns, i.e. X ⊤ X = I k , where I k is the k × k identity matrix. We view \(X \in \mathbb{R}^{n\times k}\) as composed of k columns [x 1 … x k ]. The column space of X ∈ O(n, k) corresponds to a k-dimensional subspace \(\mathbf{U} \subset \mathbb{R}^{n}\). Note that \(\mathbf{U} \in \mathop{\mathrm{Gr}}\nolimits (k, \mathbb{R}^{n})\) is spanned by the orthonormal columns of a matrix Y ∈ O(n, k) if and only if Y = XO, for some O ∈ O(k, k).

For A ∈ S n one has the Ky-Fan maximal characterization [28, Cor. 4.3.18]

Equality holds if and only if the column space of X = [x 1 … x k ] is a subspace spanned by k eigenvectors corresponding to the k largest eigenvalues of A.
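A small numerical check of the Ky-Fan characterization may help. The sketch assumes the standard form of the statement, namely that the trace of \(X^{\top }AX\) over X ∈ O(n, k) is at most \(\sum _{i=1}^{k}\lambda _{i}(A)\), and uses `numpy.linalg.eigh` to build a maximizer; the function name `ky_fan_maximizer` is hypothetical.

```python
import numpy as np

def ky_fan_maximizer(A, k):
    """Return X in O(n, k) spanning the top-k eigenspace of symmetric A,
    together with the sum of the k largest eigenvalues."""
    w, V = np.linalg.eigh(A)            # eigenvalues in ascending order
    idx = np.argsort(w)[::-1][:k]       # indices of the k largest eigenvalues
    return V[:, idx], w[idx].sum()
```

Any other matrix with orthonormal columns, for instance the Q factor of a random matrix, gives a trace that does not exceed the returned sum.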

We now reformulate the maximum problem (14.5) in terms of orthonormal bases of U i , i ∈ [d]. Let \(\mathbf{u}_{1,i},\ldots,\mathbf{u}_{r_{i},i}\) be an orthonormal basis of U i for i ∈ [d]. Then \(\otimes _{i=1}^{d}\mathbf{u}_{j_{i},i},\ j_{i} \in [r_{i}],\ i \in [d]\) is an orthonormal basis of \(\otimes _{i=1}^{d}\mathbf{U}_{i}\). Hence

Hence (14.5) is equivalent to

A simpler problem is to find

for a fixed i ∈ [d]. Let

The maximization problem (14.25) reduces to the maximum problem (14.24) with \(A = A_{i}(\underline{U}_{i})\). Note that each \(A_{i}(\underline{U}_{i})\) is a positive semi-definite matrix. Hence \(\sigma _{j}(A_{i}(\underline{U}_{i})) =\lambda _{j}(A_{i}(\underline{U}_{i}))\) for j ∈ [n i ]. Thus the complexity to find the first r i eigenvectors of \(A_{i}(\underline{U}_{i})\) is \(\mathcal{O}(r_{i}n_{i}^{2})\). Denote by \(\mathbf{U}_{i}(\underline{U}_{i}) \in \mathop{\mathrm{Gr}}\nolimits (r_{i}, \mathbb{R}^{n_{i}})\) a subspace spanned by the first r i eigenvectors of \(A_{i}(\underline{U}_{i})\). Note that this subspace is unique if and only if

Finally, if r = 1 d then each \(A_{i}(\underline{U}_{i})\) is a rank one matrix. Hence \(\mathbf{U}_{i}(\underline{U}_{i}) =\mathrm{ span}(\mathcal{T} \times \otimes _{k\in [d]\setminus \{i\}}\mathbf{u}_{1,k})\). For more details see [12].
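The basic maximization step for one subspace can be sketched as below. The sketch assumes that \(A_{i}(\underline{U}_{i})\) is the sum of the outer products of the vectors \(\mathcal{T} \times (\otimes _{k\neq i}\mathbf{u}_{j_{k},k})\) with themselves, which is how the matrix is assembled in the complexity discussion later in the text; the names `mode_gram` and `update_subspace` are hypothetical, and modes are indexed from 0.

```python
import numpy as np

def mode_gram(T, Us, i):
    """Assemble A_i from orthonormal bases Us[k] (n_k x r_k) of the other subspaces."""
    B = T
    for k in range(T.ndim):
        if k != i:
            # Replace axis k (size n_k) by coordinates in the basis Us[k] (size r_k).
            B = np.moveaxis(np.tensordot(B, Us[k], axes=([k], [0])), -1, k)
    M = np.moveaxis(B, i, 0).reshape(T.shape[i], -1)   # columns: contracted vectors
    return M @ M.T                                      # symmetric positive semi-definite

def update_subspace(T, Us, i, r_i):
    """Orthonormal basis of a subspace spanned by the first r_i eigenvectors of A_i."""
    w, V = np.linalg.eigh(mode_gram(T, Us, i))          # ascending eigenvalues
    return V[:, ::-1][:, :r_i]
```

The entry `Us[i]` is ignored when updating mode i, in line with the fact that the maximization over U i depends only on the other subspaces.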

6 Alternating Maximization Methods for Best r-Approximation

6.1 General Definition and Properties

Let Ψ i be a compact smooth manifold for i ∈ [d]. Define

We denote by ψ i , ψ = (ψ 1, …, ψ d ) and \(\hat{\psi }_{i} = (\psi _{1},\ldots,\psi _{i-1},\psi _{i+1},\ldots,\psi _{d})\) the points in Ψ i , Ψ and \(\hat{\varPsi }_{i}\) respectively. Identify ψ with \((\psi _{i},\hat{\psi }_{i})\) for each i ∈ [d]. Assume that \(f:\varPsi \rightarrow \mathbb{R}\) is a continuous function with continuous first and second partial derivatives. (In our applications it may happen that f has discontinuities in first and second partial derivatives.) We want to find the maximum value of f and a corresponding maximum point ψ ⋆:

Usually, this is a hard problem, where f has many critical points and a number of these critical points are local maximum points. In some cases, such as best r-approximation to a given tensor \(\mathcal{T} \in \mathbb{R}^{\mathbf{n}}\), we can solve the maximization problem with respect to one variable ψ i for any fixed \(\hat{\psi }_{i}\):

for each i ∈ [d].

Then the alternating maximization method, abbreviated as AMM, is as follows. Assume that we start with an initial point \(\psi ^{(0)} = (\psi _{1}^{(0)},\ldots,\psi _{d}^{(0)}) = (\psi _{1}^{(0)},\hat{\psi }_{1}^{(0,1)})\). Then we consider the maximum problem (14.30) for i = 1 and \(\hat{\psi }_{1}:=\hat{\psi }_{ 1}^{(0,1)}\). This maximum is achieved for \(\psi _{1}^{(1)}:=\psi _{ 1}^{\star }(\hat{\psi }_{1}^{(0,1)})\). Assume that the coordinates \(\psi _{1}^{(1)},\ldots,\psi _{j}^{(1)}\) are already defined for j ∈ [d − 1]. Let \(\hat{\psi }_{j+1}^{(0,j+1)}:= (\psi _{1}^{(1)},\ldots,\psi _{j}^{(1)},\psi _{j+2}^{(0)},\ldots,\psi _{d}^{(0)})\). Then we consider the maximum problem (14.30) for i = j + 1 and \(\hat{\psi }_{j+1}:=\hat{\psi }_{j+1}^{(0,j+1)}\). This maximum is achieved for \(\psi _{j+1}^{(1)}:=\psi _{ j+1}^{\star }(\hat{\psi }_{j+1}^{(0,j+1)})\). Executing these d iterations we obtain \(\psi ^{(1)}:= (\psi _{1}^{(1)},\ldots,\psi _{d}^{(1)})\). Note that we have a sequence of inequalities:

Replace ψ (0) with ψ (1) and continue these iterations to obtain a sequence ψ (l) = (ψ 1 (l), …, ψ d (l)) for l = 0, …, N. Clearly,

Usually, the sequence ψ (l), l = 0, 1, …, will converge to a 1-semi maximum point ϕ = (ϕ 1, …, ϕ d ) ∈ Ψ. That is, \(f(\phi ) =\max _{\psi _{i}\in \varPsi _{i}}f((\psi _{i},\hat{\phi }_{i}))\) for i ∈ [d]. Note that if f is differentiable at ϕ then ϕ is a critical point of f. Assume that f is twice differentiable at ϕ. Then ϕ does not have to be a local maximum point [15, Appendix].

The modified alternating maximization method, abbreviated as MAMM, is as follows. Assume that we start with an initial point ψ (0) = (ψ 1 (0), …, ψ d (0)). Let \(\psi ^{(0)} = (\psi _{i}^{(0)},\hat{\psi }_{i}^{(0)})\) for i ∈ [d]. Compute \(f_{i,0} =\max _{\psi _{i}\in \varPsi _{i}}f((\psi _{i},\hat{\psi }_{i}^{(0)}))\) for i ∈ [d]. Let \(j_{1} \in \arg \max _{i\in [d]}f_{i,0}\). Then \(\psi ^{(1)} = (\psi _{j_{1}}^{\star }(\hat{\psi }_{j_{1}}^{(0)}),\hat{\psi }_{j_{1}}^{(0)})\) and \(f_{1} = f_{j_{1},0} = f(\psi ^{(1)})\). Note that it takes d iterations to compute ψ (1). Now replace ψ (0) with ψ (1) and compute \(f_{i,1} =\max _{\psi _{i}\in \varPsi _{i}}f((\psi _{i},\hat{\psi }_{i}^{(1)}))\) for i ∈ [d]∖{j 1}. Continue as above to find ψ (l) for l = 2, …, N. Note that for l ≥ 2 it takes d − 1 iterations to determine ψ (l). Clearly, (14.31) holds. It is shown in [15] that the limit ϕ of each convergent subsequence of the points ψ (j) is a 1-semi maximum point of f.
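For the rank one case, where the one-variable maximum is the norm of the contracted vector, MAMM can be sketched as follows. This simplified illustration recomputes all d candidate updates in every step instead of skipping the mode that was just updated, so it does slightly more work than the scheme above; the function names are hypothetical.

```python
import numpy as np

def contract_all_but(T, xs, i):
    """Return the vector T x (tensor product of x_j, j != i)."""
    v = T
    for j in reversed(range(T.ndim)):
        if j != i:
            v = np.tensordot(v, xs[j], axes=([j], [0]))
    return v

def mamm_rank_one(T, xs, steps=20):
    """Greedy variant: in each step update only the mode with the largest gain."""
    xs = [x / np.linalg.norm(x) for x in xs]
    values = []
    for _ in range(steps):
        cands = [contract_all_but(T, xs, i) for i in range(T.ndim)]
        norms = [np.linalg.norm(v) for v in cands]   # f_{i,l}: best value per mode
        j = int(np.argmax(norms))
        if norms[j] == 0:
            break
        xs[j] = cands[j] / norms[j]
        values.append(norms[j])                      # f(psi^(l+1))
    return xs, values
```

The recorded values form a non-decreasing sequence, which mirrors the monotonicity property (14.31).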

In certain very special cases, as for best rank one approximation, we can solve the maximization problem with respect to any pair of variables ψ i , ψ j for 1 ≤ i < j ≤ d, where d ≥ 3 and all other variables are fixed. Let

View ψ = (ψ 1, …, ψ d ) as \((\psi _{i,j},\hat{\psi }_{i,j})\) for each pair 1 ≤ i < j ≤ d. Then

A point ψ is called 2-semi maximum point if the above maximum equals to f(ψ) for each pair 1 ≤ i < j ≤ d.

The 2-alternating maximization method, abbreviated here as 2AMM, is as follows. Assume that we start with an initial point ψ (0) = (ψ 1 (0), …, ψ d (0)). Suppose first that d = 3. Then we consider the maximization problem (14.32) for i = 2, j = 3 and \(\hat{\psi }_{2,3} =\psi _{ 1}^{(0)}\). Let \((\psi _{2}^{(0,1)},\psi _{3}^{(0,1)}) =\psi _{2,3}^{\star }(\psi _{1}^{(0)})\). Next let i = 1, j = 3 and \(\hat{\psi }_{1,3} =\psi _{2}^{(0,1)}\). Then \((\psi _{1}^{(0,2)},\psi _{3}^{(0,2)}) =\psi _{1,3}^{\star }(\psi _{2}^{(0,1)})\). Next let i = 1, j = 2 and \(\hat{\psi }_{1,2} =\psi _{ 3}^{(0,2)}\). Then \(\psi ^{(1)} = (\psi _{1,2}^{\star }(\psi _{3}^{(0,2)}),\psi _{3}^{(0,2)})\). Continue these iterations to obtain ψ (l) for l = 2, …. Again, (14.31) holds. Usually the sequence \(\psi ^{(l)},l \in \mathbb{N}\) will converge to a 2-semi maximum point ϕ. For d ≥ 4 the 2AMM can be defined appropriately; see [15].

A modified 2-alternating maximization method, abbreviated here as M2AMM, is as follows. Start with an initial point ψ (0) = (ψ 1 (0), …, ψ d (0)) viewed as \((\psi _{i,j}^{(0)},\hat{\psi }_{i,j}^{(0)})\), for each pair 1 ≤ i < j ≤ d. Let \(f_{i,j,0}:=\max _{\psi _{i,j}\in \varPsi _{i}\times \varPsi _{j}}f((\psi _{i,j},\hat{\psi }_{i,j}^{(0)}))\). Assume that \((i_{1},j_{1}) \in \arg \max _{1\leq i<j\leq d}f_{i,j,0}\). Then \(\psi ^{(1)} = (\psi _{i_{1},j_{1}}^{\star }(\hat{\psi }_{i_{1},j_{1}}^{(0)}),\hat{\psi }_{i_{1},j_{1}}^{(0)})\). Let \(f_{i_{1},j_{1},1}:= f(\psi ^{(1)})\). Note that it takes \({d\choose 2}\) iterations to compute ψ (1). Now replace ψ (0) with ψ (1) and compute \(f_{i,j,1} =\max _{\psi _{i,j}\in \varPsi _{i}\times \varPsi _{j}}f((\psi _{i,j},\hat{\psi }_{i,j}^{(1)}))\) for all pairs 1 ≤ i < j ≤ d except the pair (i 1, j 1). Continue as above to find ψ (l) for l = 2, …, N. Note that for l ≥ 2 it takes \({d\choose 2} - 1\) iterations to determine ψ (l). Clearly, (14.31) holds. It is shown in [15] that the limit ϕ of each convergent subsequence of the points ψ (j) is a 2-semi maximum point of f.

6.2 AMM for Best r-Approximations of Tensors

Let \(\mathcal{T} \in \mathbb{R}^{\mathbf{n}}\). For best rank one approximation one searches for the maximum of the function \(f_{\mathcal{T}} = \mathcal{T} \times (\otimes _{i\in [d]}\mathbf{x}_{i})\) on S(n), as in (14.22). For best r-approximation one searches for the maximum of the function \(f_{\mathcal{T}} =\| P_{\otimes _{i\in [d]}\mathbf{U}_{i}}(\mathcal{T})\|^{2}\) on \(\mathop{\mathrm{Gr}}\nolimits (\mathbf{r},\mathbf{n})\), as in (14.26). A solution to the basic maximization problem with respect to one subspace U i is given in Sect. 14.5.3.

The AMM for best r-approximation was studied first by de Lathauwer-Moor-Vandewalle [4]. The AMM is called in [4] alternating least squares, abbreviated as ALS. A crucial problem is the starting point of AMM. A higher order SVD, abbreviated as HOSVD, for \(\mathcal{T}\), see [3], gives a good starting point for AMM. That is, let \(T_{l}(\mathcal{T} ) \in \mathbb{R}^{n_{l}\times N_{l}}\) be the unfolded matrix of \(\mathcal{T}\) in the mode l, as in Sect. 14.4.1. Then U l is the subspace spanned by the first r l left singular vectors of \(T_{l}(\mathcal{T} )\). The complexity of computing U l is \(\mathcal{O}(r_{l}N)\), where \(N =\prod _{i\in [d]}n_{i}\). Hence for large N the complexity of computing partial HOSVD is high. Another approach is to choose the starting subspaces at random, and repeat the AMM for several choices of random starting points.
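A compact sketch of AMM with an HOSVD starting point is given below. It is an illustration in NumPy rather than the Matlab implementation used for the experiments; it obtains the leading eigenvectors of \(A_{i}(\underline{U}_{i})\) as left singular vectors of the unfolded, partially projected tensor, and it assumes each r i does not exceed the number of columns of that unfolding. All function names are hypothetical.

```python
import numpy as np

def project(T, Us, skip=None):
    """Express T in the bases Us[k] along every mode k except `skip`."""
    B = T
    for k, U in enumerate(Us):
        if k != skip:
            B = np.moveaxis(np.tensordot(B, U, axes=([k], [0])), -1, k)
    return B

def hosvd_init(T, ranks):
    """Mode-l subspace: span of the first r_l left singular vectors of the unfolding."""
    Us = []
    for l, r in enumerate(ranks):
        Tl = np.moveaxis(T, l, 0).reshape(T.shape[l], -1)
        U, _, _ = np.linalg.svd(Tl, full_matrices=False)
        Us.append(U[:, :r])
    return Us

def amm(T, ranks, sweeps=10):
    """Alternating maximization of the squared norm of the projection of T."""
    Us = hosvd_init(T, ranks)
    history = [np.linalg.norm(project(T, Us)) ** 2]
    for _ in range(sweeps):
        for i, r in enumerate(ranks):
            M = np.moveaxis(project(T, Us, skip=i), i, 0).reshape(T.shape[i], -1)
            U, _, _ = np.linalg.svd(M, full_matrices=False)  # M M^T plays the role of A_i
            Us[i] = U[:, :r]
        history.append(np.linalg.norm(project(T, Us)) ** 2)
    return Us, history
```

The list `history` records the objective after each full sweep; it should be non-decreasing and bounded by the squared norm of the tensor.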

MAMM for best rank one approximation of tensors was introduced by Friedland-Mehrmann-Pajarola-Suter in [15] by the name modified alternating least squares, abbreviated as MALS. 2AMM for best rank one approximation was introduced in [15] by the name alternating SVD, abbreviated as ASVD. It follows from the observation that \(A:= \mathcal{T} \times (\otimes _{l\in [d]\setminus \{i,j\}}\mathbf{x}_{l})\) is an n i × n j matrix. Hence the maximum of the bilinear form x ⊤ A y on S((n i , n j )) is σ 1(A). See Sect. 14.2. M2AMM was introduced in [15] by the name MASVD.

We now introduce the following variant of 2AMM for best r-rank approximation, called 2-alternating maximization method variant and abbreviated as 2AMMV. Consider the maximization problem for a pair of variables as in (14.32). Since for r ≠ 1 d we do not have a closed solution to this problem, we apply the AMM for two variables ψ i and ψ j , while keeping \(\hat{\psi }_{i,j}\) fixed. We then continue as in 2AMM method.

6.3 Complexity Analysis of AMM for Best r-Approximation

Let \(\underline{U} = (\mathbf{U}_{1},\ldots,\mathbf{U}_{d})\). Assume that

For each i ∈ [d] compute the symmetric positive semi-definite matrix \(A_{i}(\underline{U}_{i})\) given by (14.27). For simplicity of exposition we give the complexity analysis for d = 3. To compute \(A_{1}(\underline{U}_{1})\) we need first to compute the vectors \(\mathcal{T} \times (\mathbf{u}_{j_{2},2} \otimes \mathbf{u}_{j_{3},3})\) for j 2 ∈ [r 2] and j 3 ∈ [r 3]. Each computation of such a vector has complexity \(\mathcal{O}(N)\), where N = n 1 n 2 n 3. The number of such vectors is r 2 r 3. To form the matrix \(A_{1}(\underline{U}_{1})\) we need \(\mathcal{O}(r_{2}r_{3}n_{1}^{2})\) flops. To find the first r 1 eigenvectors of \(A_{1}(\underline{U}_{1})\) we need \(\mathcal{O}(r_{1}n_{1}^{2})\) flops. Assuming that n 1, n 2, n 3 ≈ n and r 1, r 2, r 3 ≈ r we deduce that we need \(\mathcal{O}(r^{2}n^{3})\) flops to find the first r 1 orthonormal eigenvectors of \(A_{1}(\underline{U}_{1})\) which span \(\mathbf{U}_{1}(\underline{U}_{1})\). Hence the complexity of finding orthonormal bases of \(\mathbf{U}_{1}(\underline{U}_{1})\) is \(\mathcal{O}(r^{2}n^{3})\), which is the complexity of computing \(A_{1}(\underline{U}_{1})\). Hence the complexity of each step of AMM for best r-approximation, i.e. computing ψ (l), is \(\mathcal{O}(r^{2}n^{3})\).

It is possible to reduce the complexity of AMM for best r-approximation to \(\mathcal{O}(rn^{3})\) if we compute and store the matrices \(\mathcal{T} \times \mathbf{u}_{j_{1},1},\mathcal{T} \times \mathbf{u}_{j_{2},2},\mathcal{T} \times \mathbf{u}_{j_{3},3}\). See Sect. 14.10.

We now analyze the complexity of AMM for rank one approximation. In this case we need only to compute the vector of the form \(\mathbf{v}_{i}:= \mathcal{T} \times (\otimes _{j\in [d]\setminus \{i\}}\mathbf{u}_{j})\) for each i ∈ [d], where U j = span(u j ) for j ∈ [d]. The computation of each v i needs \(\mathcal{O}((d - 2)N)\) flops, where \(N =\prod _{j\in [d]}n_{j}\). Hence each step of AMM for best rank one approximation is \(\mathcal{O}(d(d - 2)N)\). So for d = 3 and n 1 ≈ n 2 ≈ n 3 the complexity is \(\mathcal{O}(n^{3})\), which is the same complexity as above with r = 1.

7 Fixed Points of AMM and Newton Method

Consider the AMM as described in Sect. 14.6.1. Assume that the sequence \(\psi ^{(l)},l \in \mathbb{N}\) converges to a point ϕ ∈ Ψ. Then ϕ is a fixed point of the map:

In general, the map \(\tilde{\mathbf{F}}\) is a multivalued map, since the maximum given in (14.30) may be achieved at a number of points denoted by \(\psi _{i}^{\star }(\hat{\psi }_{i})\). In what follows we assume:

Assumption 1

The AMM converges to a fixed point ϕ of \(\tilde{\mathbf{F}}\) i.e. \(\tilde{\mathbf{F}}(\phi ) =\phi\) , such that the following conditions hold:

1. There is a connected open neighborhood O ⊂ Ψ such that \(\tilde{\mathbf{F}}: O \rightarrow O\) is a one valued map.

2. \(\tilde{\mathbf{F}}\) is a contraction on O with respect to some norm on O.

3. \(\tilde{\mathbf{F}} \in \mathrm{ C}^{2}(O)\), i.e. \(\tilde{\mathbf{F}}\) has two continuous partial derivatives in O.

4. O is diffeomorphic to an open subset in \(\mathbb{R}^{L}\). That is, there exists a smooth one-to-one map \(H: O \rightarrow \mathbb{R}^{L}\) such that the Jacobian D(H) is invertible at each point ψ ∈ O.

Assume that the conditions of Assumption 1 hold. Then the map \(\tilde{\mathbf{F}}: O \rightarrow O\) can be represented as

Hence to find a fixed point of \(\tilde{\mathbf{F}}\) in O it is enough to find a fixed point of F in O 1. A fixed point of F is a zero point of the system

To find a zero of G we use the standard Newton method.
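As a generic illustration, Newton's method for a fixed point can be sketched as below, assuming the system has the form G(x) = x − F(x), so that each step solves a linear system with the matrix I − DF(x). The Jacobian is approximated here by forward differences purely for brevity (the closed formulas derived later in the text would replace it), and the function name `newton_fixed_point` is hypothetical.

```python
import numpy as np

def newton_fixed_point(F, x0, iters=20, h=1e-7):
    """Newton iteration for G(x) = x - F(x) = 0 with a finite-difference Jacobian."""
    x = np.asarray(x0, dtype=float)
    n = x.size
    for _ in range(iters):
        Fx = F(x)
        g = x - Fx
        # Forward-difference approximation of DF(x), one column per variable.
        DF = np.empty((n, n))
        for c in range(n):
            e = np.zeros(n)
            e[c] = h
            DF[:, c] = (F(x + e) - Fx) / h
        x = x - np.linalg.solve(np.eye(n) - DF, g)
    return x
```

For example, the contraction F(x, y) = (0.5 cos y, 0.5 sin x) has a unique fixed point, which the iteration finds from the starting point (0, 0).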

In this paper we propose new Newton methods. We make a few iterations of AMM and switch to a Newton method assuming that the conditions of Assumption 1 hold as explained above. A fixed point of the map \(\tilde{\mathbf{F}}\) for best rank one approximation induces a fixed point of map \(\mathbf{F}: \mathbb{R}^{\mathbf{n}} \rightarrow \mathbb{R}^{\mathbf{n}}\) [15, Lemma 2]. Then the corresponding Newton method to find a zero of G is straightforward to state and implement, as explained in the next subsection. This Newton method was given in [14, §5]. See also Zhang-Golub [43] for a different Newton method for best (1, 1, 1) approximation.

Let \(\tilde{\mathbf{F}}:\mathop{ \mathrm{Gr}}\nolimits (\mathbf{r},\mathbf{n}) \rightarrow \mathop{\mathrm{Gr}}\nolimits (\mathbf{r},\mathbf{n})\) be the map induced by AMM. Each \(\mathop{\mathrm{Gr}}\nolimits (r, \mathbb{R}^{n})\) can be decomposed as a compact manifold to a finite number of charts as explained in Sect. 14.8. These charts induce standard charts of \(\mathop{\mathrm{Gr}}\nolimits (\mathbf{r},\mathbf{n})\). After a few AMM iterations we assume that the neighborhood O of the fixed point of \(\tilde{\mathbf{F}}\) lies in one of the charts of \(\mathop{\mathrm{Gr}}\nolimits (\mathbf{r},\mathbf{n})\). We then construct the corresponding map F in this chart. Next we apply the standard Newton method to G. The papers by Eldén-Savas [8] and Savas-Lim [39] discuss Newton and quasi-Newton methods for (r 1, r 2, r 3) approximation of 3-tensors using the concepts of differential geometry.

7.1 Newton Method for Best Rank One Approximation

Let \(\mathcal{T} \in \mathbb{R}^{\mathbf{n}}\setminus \{0\}\). Define:

Recall the results of Sect. 14.5.2: Any critical point of \(f_{\mathcal{T}}\vert \mathrm{S}(\mathbf{n})\) satisfies (14.23). Suppose we start the AMM with \(\psi ^{(0)} = (\mathbf{x}_{1}^{(0)},\ldots,\mathbf{x}_{d}^{(0)}) \in \mathrm{ S}(\mathbf{n})\) such that \(f_{\mathcal{T}}(\psi ^{(0)})\neq 0\). Then it is straightforward to see that \(f_{\mathcal{T}}(\psi ^{(l)}) > 0\) for \(l \in \mathbb{N}\). Assume that \(\lim _{l\rightarrow \infty }\psi ^{(l)} =\omega = (\mathbf{u}_{1},\ldots,\mathbf{u}_{d}) \in \mathrm{ S}(\mathbf{n})\). Then ω is the singular tuple of \(\mathcal{T}\) satisfying (14.23). Clearly, \(\lambda = f_{\mathcal{T}}(\omega ) > 0\). Let

Then ϕ is a fixed point of F.

Our Newton algorithm for finding the fixed point ϕ of F corresponding to a fixed point ω of AMM is as follows. We do a number of iterations of AMM to obtain ψ (m). Then we renormalize ψ (m) according to (14.38):

Let D F(ψ) denote the Jacobian of F at ψ, i.e. the matrix of partial derivatives of F at ψ. Then we perform Newton iterations of the form:

After performing a number of Newton iterations we obtain ϕ (m′) = (z 1, …, z d ) which is an approximation of ϕ. We then renormalize each z i to obtain \(\omega ^{(m')}:= ( \frac{1} {\|\mathbf{z}_{1}\|} \mathbf{z}_{1},\ldots, \frac{1} {\|\mathbf{z}_{d}\|}\mathbf{z}_{d})\) which is an approximation to the fixed point ω. We call this Newton method Newton-1.

We now give the explicit formulas for 3-tensors, where n 1 = m, n 2 = n, n 3 = l. First

Then

Hence Newton-1 iteration is given by the formula

for i = 0, 1, …. Here we abuse notation by viewing (u, v, w) as a column vector \((\mathbf{u}^{\top },\mathbf{v}^{\top },\mathbf{w}^{\top })^{\top }\in \mathbb{C}^{m+n+l}\).

Numerically, to find \((D\mathbf{G}(\mathbf{u}_{i},\mathbf{v}_{i},\mathbf{w}_{i}))^{-1}\mathbf{G}(\mathbf{u}_{i},\mathbf{v}_{i},\mathbf{w}_{i})\) one solves the linear system

The final vector (u j , v j , w j ) of Newton-1 iterations is followed by a scaling to vectors of unit length \(\mathbf{x}_{j} = \frac{1} {\|\mathbf{u}_{j}\|}\mathbf{u}_{j},\mathbf{y}_{j} = \frac{1} {\|\mathbf{v}_{j}\|}\mathbf{v}_{j},\mathbf{z}_{j} = \frac{1} {\|\mathbf{w}_{j}\|}\mathbf{w}_{j}\).

We now discuss the complexity of Newton-1 method for d = 3. Assuming that m ≈ n ≈ l we deduce that the computation of the matrix D G is \(\mathcal{O}(n^{3})\). As the dimension of D G is m + n + l it follows that the complexity of each iteration of Newton-1 method is \(\mathcal{O}(n^{3})\).
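The following sketch illustrates Newton-1 for a 3-tensor. It rests on assumptions that should be checked against the displayed formulas: that F(u, v, w) = (𝒯 × (v ⊗ w), 𝒯 × (u ⊗ w), 𝒯 × (u ⊗ v)), that G = (u, v, w) − F(u, v, w), and that the renormalization for d = 3 divides the unit singular tuple by λ. Under these assumptions the Jacobian of F consists of the blocks 𝒯 × w, 𝒯 × v, 𝒯 × u and their transposes. The function names are hypothetical.

```python
import numpy as np

def F(T, u, v, w):
    """Assumed fixed-point map for a 3-tensor."""
    return (np.einsum('ijk,j,k->i', T, v, w),
            np.einsum('ijk,i,k->j', T, u, w),
            np.einsum('ijk,i,j->k', T, u, v))

def DG(T, u, v, w):
    """Jacobian of G = identity - F, assembled from the matrices T x u, T x v, T x w."""
    m, n, l = T.shape
    Tu = np.einsum('ijk,i->jk', T, u)   # n x l
    Tv = np.einsum('ijk,j->ik', T, v)   # m x l
    Tw = np.einsum('ijk,k->ij', T, w)   # m x n
    DF = np.block([[np.zeros((m, m)), Tw, Tv],
                   [Tw.T, np.zeros((n, n)), Tu],
                   [Tv.T, Tu.T, np.zeros((l, l))]])
    return np.eye(m + n + l) - DF

def newton1(T, u, v, w, amm_iters=5, newton_iters=10):
    unit = lambda x: x / np.linalg.norm(x)
    for _ in range(amm_iters):                      # a few AMM sweeps as a warm start
        u = unit(F(T, u, v, w)[0])
        v = unit(F(T, u, v, w)[1])
        w = unit(F(T, u, v, w)[2])
    lam = np.einsum('ijk,i,j,k->', T, u, v, w)      # current value of f_T, positive here
    z = np.concatenate([u, v, w]) / lam             # assumed renormalization for d = 3
    m, n, _ = T.shape
    for _ in range(newton_iters):
        u, v, w = z[:m], z[m:m + n], z[m + n:]
        g = z - np.concatenate(F(T, u, v, w))
        z = z - np.linalg.solve(DG(T, u, v, w), g)  # solve a linear system, no inverse
    u, v, w = z[:m], z[m:m + n], z[m + n:]
    residual = np.linalg.norm(z - np.concatenate(F(T, u, v, w)))
    return unit(u), unit(v), unit(w), residual
```

As in the text, the Newton correction is obtained by solving a linear system rather than forming the inverse, and the final vectors are rescaled to unit length.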

8 Newton Method for Best r-Approximation

Recall that an r-dimensional subspace \(\mathbf{U} \in \mathop{\mathrm{Gr}}\nolimits (r, \mathbb{R}^{n})\) is given by a matrix \(U = [u_{ij}]_{i,j=1}^{n,r} \in \mathbb{R}^{n\times r}\) of rank r. In particular there is a subset α ⊂ [n] of cardinality r so that detU[α, [r]] ≠ 0. Here α = (α 1, …, α r ), 1 ≤ α 1 < … < α r ≤ n. So \(U[\alpha,[r]]:= [u_{\alpha _{i}j}]_{i,j=1}^{r} \in \mathbf{GL}(r, \mathbb{R})\), (the group of invertible matrices). Clearly, V: = UU[α, [r]]−1 represents another basis in U. Note that V [α, [r]] = I r . Hence the set of all \(V \in \mathbb{R}^{n\times r}\) with the condition: V [α, [r]] = I r represent an open cell in \(\mathop{\mathrm{Gr}}\nolimits (r,n)\) of dimension r(n − r) denoted by \(\mathop{\mathrm{Gr}}\nolimits (r, \mathbb{R}^{n})(\alpha )\). (The number of free parameters in all such V ’s is (n − r)r.) Assume for simplicity of exposition that α = [r]. Note that \(V _{0} = \left [\begin{array}{c} I_{r}\\ 0\end{array} \right ] \in \mathop{\mathrm{Gr}}\nolimits (r, \mathbb{R}^{n})([r])\). Let \(\mathbf{e}_{i} = (\delta _{1i},\ldots,\delta _{ni})^{\top }\in \mathbb{R}^{n},i = 1,\ldots,n\) be the standard basis in \(\mathbb{R}^{n}\). So \(\mathbf{U}_{0} =\mathrm{ span}(\mathbf{e}_{1},\ldots,\mathbf{e}_{r}) \in \mathop{\mathrm{Gr}}\nolimits (r, \mathbb{R}^{n})([r])\), and V 0 is the unique representative of U 0. Note that U 0 ⊥ , the orthogonal complement of U 0, is span(e r+1, …, e n ). It is straightforward to see that \(\mathbf{V} \in \mathop{\mathrm{Gr}}\nolimits (r, \mathbb{R}^{n})([r])\) if and only if \(\mathbf{V} \cap \mathbf{U}_{0}^{\perp } =\{ \mathbf{0}\}\).
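The change of basis V = U U[α, [r]]⁻¹ is easy to carry out numerically. In the sketch below the function name `chart_representative` is hypothetical and the index set α is passed with 0-based row indices.

```python
import numpy as np

def chart_representative(U, alpha):
    """Return V = U @ inv(U[alpha, :]), the basis with identity rows at alpha."""
    block = U[list(alpha), :]
    if abs(np.linalg.det(block)) < 1e-12:
        raise ValueError("the subspace does not lie in the chart indexed by alpha")
    # Solve V @ block = U without forming the inverse explicitly.
    return np.linalg.solve(block.T, U.T).T
```

The remaining (n − r) rows of V are the r(n − r) free parameters of the chart.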

The following definition is a geometric generalization of \(\mathop{\mathrm{Gr}}\nolimits (r, \mathbb{R}^{n})(\alpha )\):

A basis for \(\mathop{\mathrm{Gr}}\nolimits (r, \mathbb{R}^{n})(\mathbf{U})\), which can be identified with the tangent hyperplane \(T_{\mathbf{U}}\mathop{ \mathrm{Gr}}\nolimits (r, \mathbb{R}^{n})\), can be represented as \(\oplus ^{r}\mathbf{U}^{\perp }\): Let u 1, …, u r and u r+1, …, u n be orthonormal bases of U and U ⊥ respectively. Then each subspace \(\mathbf{V} \in \mathop{\mathrm{Gr}}\nolimits (r, \mathbb{R}^{n})(\mathbf{U})\) has a unique basis of the form u 1 +x 1, …, u r +x r for unique x 1, …, x r ∈ U ⊥ . Equivalently, every matrix \(X \in \mathbb{R}^{(n-r)\times r}\) induces a unique subspace V using the equality

Recall the results of Sect. 14.5.3. Let \(\underline{U} = (U_{1},\ldots,U_{d}) \in \mathop{\mathrm{Gr}}\nolimits (\mathbf{r},\mathbf{n})\). Then

where \(\mathbf{U}_{i}(\underline{U}_{i})\) is a subspace spanned by the first r i eigenvectors of \(A_{i}(\underline{U}_{i})\). Assume that \(\tilde{\mathbf{F}}\) is one valued at \(\underline{U}\), i.e. (14.28) holds. Then it is straightforward to show that \(\tilde{\mathbf{F}}\) is smooth (real analytic) in a neighborhood of \(\underline{U}\). Assume next that there exists a neighborhood O of \(\underline{U}\) such that

such that the conditions 1–3 of Assumption 1 hold. Observe next that \(\mathop{\mathrm{Gr}}\nolimits (\mathbf{r},\mathbf{n})(\underline{U})\) is diffeomorphic to

We say that \(\tilde{\mathbf{F}}\) is regular at \(\underline{U}\) if in addition to the above condition the matrix \(I - D\tilde{\mathbf{F}}(\underline{U})\) is invertible. We can view \(X = [X_{1}\;\ldots \;X_{d}] \in \mathbb{R}^{(n_{1}-r_{1})\times r_{1}}\ldots \times \mathbb{R}^{(n_{d}-r_{d})\times r_{d}}\). Then \(\tilde{\mathbf{F}}\) on O can be viewed as

Note that F i (X) does not depend on X i for each i ∈ [d]. In our numerical simulations we first do a small number of AMM iterations and then switch to the Newton method given by (14.40). Observe that \(\underline{U}\) corresponds to \(X(\underline{U}) = [X_{1}(\underline{U}),\ldots,X_{d}(\underline{U})]\). When referring to (14.40) we identify X = [X 1, …, X d ] with ϕ = (ϕ 1, …, ϕ d ) and no ambiguity will arise.

Note that the case r = 1 d corresponds to best rank one approximation. The above Newton method in this case is different from Newton method given in Sect. 14.7.1.

9 A Closed Formula for \(D\mathbf{F}(X(\underline{U}))\)

Recall the definitions and results of Sect. 14.5.3. Given \(\underline{U}\) we compute \(\tilde{F}_{i}(\underline{U}) = \mathbf{U}_{i}(\underline{U}_{i})\), which is the subspace spanned by the first r i eigenvectors of \(A_{i}(\underline{U}_{i})\), which is given by (14.27), for i ∈ [d]. Assume that (14.33) holds. Let

With each \(X = [X_{1},\ldots,X_{d}] \in \mathbb{R}^{L}\) we associate the following point \((\mathbf{W}_{1},\ldots,\mathbf{W}_{d}) \in \mathop{\mathrm{Gr}}\nolimits (\mathbf{r},\mathbf{n})(\underline{U})\). Suppose that \(X_{i} = [x_{pq,i}] \in \mathbb{R}^{(n_{i}-r_{i})\times r_{i}}\). Then W i has a basis of the form

One can use the following notation for a basis \(\mathbf{w}_{1,i},\ldots,\mathbf{w}_{r_{i},i}\), written as a vector with vector coordinates \([\mathbf{w}_{1,i}\cdots \mathbf{w}_{r_{i},i}]\):

Note that to the point \(\underline{U} \in \mathop{\mathrm{Gr}}\nolimits (\mathbf{r},\mathbf{n})(\underline{U})\) corresponds the point X = 0. Since \(\mathbf{u}_{1,i},\ldots,\mathbf{u}_{n_{i},i}\) is a basis in \(\mathbb{R}^{n_{i}}\) it follows that

View \([\mathbf{v}_{1,i}\cdots \mathbf{v}_{r_{i},i}],[\mathbf{u}_{1,i}\cdots \mathbf{u}_{n_{i},i}]\) as n i × r i and n i × n i matrices with orthonormal columns. Then

The assumption that \(\tilde{\mathbf{F}}: O \rightarrow O\) implies that Y i, 0 is an invertible matrix. Hence \([\mathbf{v}_{1,i}\cdots \mathbf{v}_{r_{i},i}]Y _{i,0}^{-1}\) is also a basis in \(\mathbf{U}_{i}(\underline{U}_{i})\). Clearly,

Hence \(\tilde{\mathbf{F}}(\underline{U})\) corresponds to F(0) where

We now find the matrix of derivatives. So \(D_{i}F_{j} \in \mathbb{R}^{((n_{i}-r_{i})r_{i})\times ((n_{j}-r_{j})r_{j})}\) is the partial derivative matrix of the (n j − r j )r j coordinates of F j with respect to the (n i − r i )r i coordinates of U i viewed as the matrix \(\left [\begin{array}{c} I_{r_{i}} \\ G_{i} \end{array} \right ]\). So \(G_{i} \in \mathbb{R}^{(n_{i}-r_{i})\times r_{i}}\) are the variables representing the subspace U i . Observe first that D i F i = 0 just as in Newton method for best rank one approximation in Sect. 14.7.1.

Let us now find D i F j (0). Recall that D i F j (0) is a matrix of size (n i − r i )r i × (n j − r j )r j . The entries of D i F j (0) are indexed by ((p, q), (s, t)) as follows: The entries of \(G_{i} = [g_{pq,i}] \in \mathbb{R}^{(n_{i}-r_{i})\times r_{i}}\) are viewed as (n i − r i )r i variables, and are indexed by (p, q), where p ∈ [n i − r i ], q ∈ [r i ]. F j is viewed as a matrix \(G_{j} \in \mathbb{R}^{(n_{j}-r_{j})\times r_{j}}\). The entries of F j are indexed by (s, t), where s ∈ [n j − r j ] and t ∈ [r j ]. Since \(\underline{U} \in \mathop{\mathrm{Gr}}\nolimits (\mathbf{r},\mathbf{n})(\underline{U})\) corresponds to \(0 \in \mathbb{R}^{L}\) we denote by A j (0) the matrix \(A_{j}(\underline{U}_{j})\) for j ∈ [d]. We now give the formula for \(\frac{\partial A_{j}(0)} {\partial g_{pq,i}}\). This is done by noting that we vary U i by changing the orthonormal basis \(\mathbf{u}_{1,i},\ldots,\mathbf{u}_{r_{i},i}\) up to the first perturbation with respect to the real variable \(\varepsilon\) to

We denote the subspace spanned by these vectors as \(\mathbf{U}_{i}(\varepsilon,p,q)\). That is, we change only the q-th orthonormal vector of the standard basis in U i , for q = 1, …, r i . The new basis is an orthogonal basis, and up to order \(\varepsilon\), the vector \(\mathbf{u}_{q,i} +\varepsilon \mathbf{u}_{r_{i}+p,i}\) is also of length 1. Let \(\underline{U}(\varepsilon,i,p,q) = (\mathbf{U}_{1},\ldots,\mathbf{U}_{i-1},\mathbf{U}_{i}(\varepsilon,p,q),\mathbf{U}_{i+1},\ldots,\mathbf{U}_{d})\). Then \(\underline{U}(\varepsilon,i,p,q)_{j}\) is obtained by dropping the subspace U j from \(\underline{U}(\varepsilon,i,p,q)\). We will show that

We now give a formula to compute B j, i, p, q . Assume that i, j ∈ [d] is a pair of different integers. Let J be a set of d − 2 pairs \(\cup _{l\in [d]\setminus \{i,j\}}\{(k_{l},l)\}\), where k l ∈ [r l ]. Denote by \(\mathcal{J}_{ij}\) the set of all such J’s. Note that \(\mathcal{J}_{ij} = \mathcal{J}_{ji}\). Furthermore, the number of elements in \(\mathcal{J}_{ij}\) is \(R_{ij} =\prod _{l\in [d]\setminus \{i,j\}}r_{l}\). We now introduce the following matrices

Note that C ij (J) = C ji (J)⊤.

Lemma 1

Let i,j ∈ [d],i ≠ j. Assume that p ∈ [n i − r i ],q ∈ [r i ]. Then (14.53) holds. Furthermore

Proof

The identity (14.55) is just a restatement of (14.27). To compute \(A_{j}(\underline{U}(\varepsilon,i,p,q)_{j})\) use (14.54) by replacing u k, i with \(\hat{u}_{k,i}\) for k ∈ [n i ]. Deduce first (14.53) and then (14.56). □

Recall that \(\mathbf{v}_{1,j},\ldots,\mathbf{v}_{r_{j},j}\) is an orthonormal basis of \(\mathbf{U}_{j}(\underline{U}_{j})\), and these vectors are the eigenvectors of \(A_{j}(\underline{U}_{j})\) corresponding to its first r j eigenvalues. Let \(\mathbf{v}_{r_{j}+1,j},\ldots,\mathbf{v}_{n_{j},j}\) be the last n j − r j orthonormal eigenvectors of \(A_{j}(\underline{U}_{j})\). We now find the first perturbation of the first r j eigenvectors for the matrix \(A_{j}(\underline{U}_{j}) +\varepsilon B_{j,i,p,q}\). Assume first, for simplicity of exposition, that each \(\lambda _{k}(A_{j}(\underline{U}_{j}))\) is simple for k ∈ [r j ]. Then it is known, e.g. [10, Chapter 4, §19, (4.19.2)]:

The assumption that \(\lambda _{k}(A_{j}(\underline{U}_{j}))\) is a simple eigenvalue for k ∈ [r j ] yields

for \(\mathbf{y} \in \mathbb{R}^{n_{j}}\).
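The first-order eigenvector perturbation used here can be checked numerically. The sketch assumes the standard formula for a simple eigenvalue of a symmetric matrix, v k (ε) = v k + ε Σ l≠k (v l ⊤ B v k )/(λ k − λ l ) v l + O(ε²), which is what applying the pseudo-inverse of λ k I − A to B v k amounts to; the function name `eigvec_first_order` is hypothetical and k is 0-based.

```python
import numpy as np

def eigvec_first_order(A, B, k):
    """Eigenvector v_k of symmetric A (eigenvalues in decreasing order) and the
    first-order change dv of that eigenvector for the perturbed matrix A + eps * B."""
    lam, V = np.linalg.eigh(A)
    lam, V = lam[::-1], V[:, ::-1]          # reorder to decreasing eigenvalues
    v = V[:, k]
    dv = np.zeros_like(v)
    for l in range(len(lam)):
        if l != k:                          # requires lam[k] to be a simple eigenvalue
            dv += V[:, l] * (V[:, l] @ B @ v) / (lam[k] - lam[l])
    return v, dv
```

The correction `dv` is orthogonal to `v`, so `v + eps * dv` has unit length up to order ε, in agreement with the normalization used in the text.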

Since we are interested in a basis of \(\mathbf{U}_{j}(\underline{U}(\varepsilon,i,p,q)_{j})\) up to the order of \(\varepsilon\) we can assume that this basis is of the form

Hence

Note that the assumption (14.28) yields that w k, j is well defined for k ∈ [r j ]. Let

Up to the order of \(\varepsilon\) we have that a basis of \(\mathbf{U}_{j}(\underline{U}(\varepsilon,i,p,q)_{j})\) is given by columns of matrix \(Z_{j,0}+\varepsilon W_{j}(i,p,q) = \left [\begin{array}{c} Y _{j,0} +\varepsilon V _{j}(i,p,q) \\ X_{j,0} +\varepsilon U_{j}(i,p,q) \end{array} \right ]\). Note that

Observe next

Hence

Thus \(D\mathbf{F}(0) = [D_{i}F_{j}]_{i,j\in [d]} \in \mathbb{R}^{L\times L}\). We now make one iteration of Newton method given by (14.40) for l = 1, where ϕ (0) = 0:

Let \(\mathbf{U}_{i,1} \in \mathop{\mathrm{Gr}}\nolimits (r_{i}, \mathbb{R}^{n_{i}})\) be the subspace represented by the matrix X i, 1:

for i ∈ [d]. Perform the Gram-Schmidt process on \(\tilde{\mathbf{u}}_{1,i,1},\ldots,\tilde{\mathbf{u}}_{r_{i},i,1}\) to obtain an orthonormal basis \(\mathbf{u}_{1,i,1},\ldots,\mathbf{u}_{r_{i},i,1}\) of U i, 1. Let \(\underline{U}:= (\mathbf{U}_{1,1},\ldots,\mathbf{U}_{d,1})\) and repeat the algorithm which is described above. We call this Newton method Newton-2.
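The passage from the chart coordinates back to an orthonormal basis can be sketched as follows. The sketch assumes that the subspace represented by a matrix X has the basis vectors u q + Σ p x pq u r+p , and it uses a QR factorization (`numpy.linalg.qr`) in place of the Gram-Schmidt process; both yield an orthonormal basis of the same subspace, possibly with different signs. The function name `subspace_from_chart` is hypothetical.

```python
import numpy as np

def subspace_from_chart(U_full, X):
    """U_full: n x n orthogonal matrix whose first r columns span U.
    X: (n - r) x r chart coordinates. Returns an n x r orthonormal basis."""
    r = X.shape[1]
    U_tilde = U_full[:, :r] + U_full[:, r:] @ X   # columns u_q + sum_p x_pq u_{r+p}
    Q, _ = np.linalg.qr(U_tilde)                  # orthonormalize the columns
    return Q
```

With X = 0 the function returns a basis of the original subspace U, which corresponds to the point 0 of the chart.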

10 Complexity of Newton-2

In this section we assume for simplicity that d = 3, r 1 = r 2 = r 3 = r, n i ≈ n for i ∈ [3]. We assume that the AMM has been executed a number of times for a given \(\mathcal{T} \in \mathbb{R}^{\mathbf{n}}\). So we are given \(\underline{U} = (\mathbf{U}_{1},\ldots,\mathbf{U}_{d})\), and an orthonormal basis u 1, i , …, u r, i of U i for i ∈ [d]. First we complete each u 1, i , …, u r, i to an orthonormal basis \(\mathbf{u}_{1,i},\ldots,\mathbf{u}_{n_{i},i}\) of \(\mathbb{R}^{n_{i}}\), which needs \(\mathcal{O}(n^{3})\) flops. Since d = 3 we still need only \(\mathcal{O}(n^{3})\) flops to carry out this completion for each i ∈ [3].

Next we compute the matrices C ij (J). Since d = 3, we need n flops to compute each entry of C ij (J). Since we have roughly n 2 entries, the complexity of computing C ij (J) is \(\mathcal{O}(n^{3})\). As the cardinality of \(\mathcal{J}_{ij}\) is r we need \(\mathcal{O}(rn^{3})\) flops to compute all C ij (J) for \(J \in \mathcal{J}_{ij}\). As the number of pairs in [3] is 3 it follows that the complexity of computing all C ij (J) is \(\mathcal{O}(rn^{3})\).

The identity (14.55) yields that the complexity of computing \(A_{j}(\underline{U}_{j})\) is \(\mathcal{O}(r^{2}n^{2})\). Recall next that \(A_{j}(\underline{U}_{j})\) is an n j × n j symmetric positive semi-definite matrix. The complexity of computing the eigenvalues and the orthonormal eigenvectors of \(A_{j}(\underline{U}_{j})\) is \(\mathcal{O}(n^{3})\). Hence the complexity of computing \(\underline{U}\) is \(\mathcal{O}(rn^{3})\), as we pointed out at the end of Sect. 14.6.3.

The complexity of computing B j, i, p, q using (14.56) is \(\mathcal{O}(rn^{2})\). The complexity of computing w k, j (i, p, q), given by (14.58) is \(\mathcal{O}(n^{2})\). Hence the complexity of computing W j (i, p, q) is \(\mathcal{O}(rn^{2})\). Therefore the complexity of computing D i F j is \(\mathcal{O}(r^{2}n^{3})\). Since d = 3, the complexity of computing the matrix D F(0) is also \(\mathcal{O}(r^{2}n^{3})\).

As \(D\mathbf{F}(0) \in \mathbb{R}^{L\times L}\), where L ≈ 3rn, the complexity of computing (I − D F(0))−1 is \(\mathcal{O}(r^{3}n^{3})\). In summary, the complexity of one step in Newton-2 is \(\mathcal{O}(r^{3}n^{3})\).

11 Numerical Results

We have implemented a Matlab library for tensor decomposition using the Tensor Toolbox given by [30]. The performance was measured via the actual CPU-time (seconds) needed for the computation. All performance tests have been carried out on a 2.8 GHz Quad-Core Intel Xeon Macintosh computer with 16 GB RAM. The performance results are discussed for real data sets of third-order tensors. We worked with a real computed tomography (CT) data set (the so-called MELANIX data set of OsiriX) [15].

Our simulation results are averaged over 10 different runs of each algorithm. In each run we changed the initial guess, that is, we generated new random start vectors. We always initialized the algorithms by random start vectors, because this is cheaper than initialization via HOSVD. We note that for the Newton methods the initial guess is the set of subspaces returned by one iteration of AMM.
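A random start of this kind, and the averaging over runs, might look as follows in Python. This is a sketch, not the Matlab code used for the experiments: the names `random_start` and `average_over_runs` are hypothetical, and orthonormalizing Gaussian matrices by a QR factorization is an assumption about how the random start vectors are turned into bases.

```python
import numpy as np

def random_start(dims, ranks, seed=None):
    """Return one random orthonormal basis (n_i x r_i) per mode."""
    rng = np.random.default_rng(seed)
    bases = []
    for n, r in zip(dims, ranks):
        # Reduced QR of a Gaussian n x r matrix gives an n x r orthonormal Q.
        Q, _ = np.linalg.qr(rng.standard_normal((n, r)))
        bases.append(Q)
    return bases

def average_over_runs(run_once, dims, ranks, n_runs=10):
    """Average a scalar result of run_once over n_runs different random starts.

    run_once is any callable that takes the list of start bases and returns
    a number, for example a CPU time or an achieved norm.
    """
    results = [run_once(random_start(dims, ranks, seed=s)) for s in range(n_runs)]
    return float(np.mean(results))
```

Using a different seed in each run reproduces the protocol of changing the initial guess while keeping the experiment repeatable.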

All the alternating algorithms have the same stopping criterion: convergence is declared if one of the following two conditions is met: iterations > 10 or fitchange < 0.0001. All the Newton algorithms have the same stopping criterion: convergence is declared if one of the following two conditions is met: iterations > 10 or change < exp(−10).
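The two stopping rules can be written down directly. In the sketch below the function names are hypothetical, and taking the absolute value of `fitchange` and `change` is an assumption, since the text does not say whether these quantities are signed. Note that exp(−10) ≈ 4.54 · 10⁻⁵, so the Newton tolerance is roughly half the tolerance used for the alternating methods.

```python
import math

MAX_ITERATIONS = 10

def alternating_converged(iterations, fitchange, tol=1e-4):
    """Stopping test for the alternating methods."""
    return iterations > MAX_ITERATIONS or abs(fitchange) < tol

def newton_converged(iterations, change, tol=math.exp(-10)):
    """Stopping test for the Newton methods."""
    return iterations > MAX_ITERATIONS or abs(change) < tol
```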

Our numerical simulations demonstrate the well known fact that for large tensors Newton methods are not efficient. Although the Newton methods converge in fewer iterations than the alternating methods, the computation of the matrix of derivatives (Jacobian) in each iteration is too expensive, which makes alternating maximization methods much more cost effective. Our simulations also demonstrate that our Newton-1 for best rank one approximation is as fast as AMM. However, our Newton-2 is much slower than the alternating methods. We also give a comparison between our Newton-2 and the Newton method based on Grassmannian manifolds by [8], abbreviated as Newton-ES.

We also observe that for large tensors and large rank approximations two alternating maximization methods, namely MAMM and 2AMMV, seem to outperform the other alternating maximization methods. For rank one approximation we recommend Newton-1, both for large and small tensors. For higher rank approximation we recommend 2AMMV for large tensors and AMM or MAMM for small tensors.

Our Newton-2 performs a bit slower than Newton-ES; however, we would like to point out a couple of advantages. Compared to Newton-ES, our method is easily extendable to higher dimensions (the case d > 3), both analytically and numerically. Our method is also highly parallelizable, which can bring down the computation time drastically. Computation of the \(D_{i}F_{j}\) matrices in each iteration accounts for about 50 % of the total time, but this computation can be parallelized. Finally, the number of iterations in Newton-2 is at least 30 % less than in Newton-ES (Figs. 14.1–14.3).

It is not only important to check how fast the different algorithms perform but also what quality they achieve. This was measured by checking the Hilbert-Schmidt norm, abbreviated as HS norm, of the resulting decompositions, which serves as a measure for the quality of the approximation. In general, we can say that the higher the HS norm, the more likely it is that we find a global maximum. Accordingly, we compared the HS norms to say whether the different algorithms converged to the same stationary point. In Figs. 14.4 and 14.5, we show the average HS norms achieved by different algorithms and compared them with the input norm. We observe all the algorithms seem to attain the same local maximum.
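The quality measure used here can be sketched in a few lines of Python. The function name `projection_hs_norm` is hypothetical, and the sketch assumes that the columns of each basis matrix are orthonormal, so that the HS norm of the projection equals the norm of the core tensor.

```python
import numpy as np

def projection_hs_norm(T, U):
    """HS norm of the projection of a 3-tensor T on the span of U[0] x U[1] x U[2].

    U[i] is an n_i x r_i matrix with orthonormal columns. The core tensor is
    obtained by contracting every mode of T with the corresponding basis.
    """
    core = np.einsum('ijk,ia,jb,kc->abc', T, U[0], U[1], U[2])
    return np.linalg.norm(core)  # 2-norm of the flattened core tensor
```

The returned value never exceeds the norm of the input tensor, which is why comparing it with the input norm indicates how much of \(\mathcal{T}\) the approximation captures.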

11.1 Best (2,2,2) and Rank Two Approximations

Assume that \(\mathcal{T}\) is a 3-tensor of rank at least three, and let \(\mathcal{S}\) be a best (2, 2, 2)-approximation to \(\mathcal{T}\) given by (14.1). It is easy to show that \(\mathcal{S}\) has rank at least 2. Let \(\mathcal{S}' = [s_{j_{1},j_{2},j_{3}}] \in \mathbb{R}^{2\times 2\times 2}\) be the core tensor corresponding to \(\mathcal{S}\). Clearly \(\mathrm{rank\;}\mathcal{S} =\mathrm{ rank\;}\mathcal{S}'\geq 2\). Recall that a real nonzero 2 × 2 × 2 tensor has rank one, two or three [41]. So \(\mathrm{rank\;}\mathcal{S}\in \{ 2,3\}\). Observe next that if \(\mathrm{rank\;}\mathcal{S} =\mathrm{ rank\;}\mathcal{S}' = 2\) then \(\mathcal{S}\) is also a best rank two approximation of \(\mathcal{T}\). Recall that a best rank two approximation of \(\mathcal{T}\) may not always exist, in particular when \(\mathrm{rank\;}\mathcal{T} > 2\) and the border rank of \(\mathcal{T}\) is 2 [6]. In all the numerical simulations for best (2, 2, 2)-approximation that we performed on large random tensors, the tensor \(\mathcal{S}'\) had rank two. Note that a 2 × 2 × 2 tensor whose entries are independent and normally distributed with mean 0 and variance 1 has rank 2 with probability \(\frac{\pi }{4}\) [2].
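The probability \(\frac{\pi }{4}\) can be checked by a small Monte Carlo experiment. The sketch below is not part of the reported simulations; it relies on the standard fact that a generic real 2 × 2 × 2 tensor with frontal slices A and B has rank two exactly when the binary quadratic form det(xA + yB) has positive discriminant, and rank three when the discriminant is negative. The function name `fraction_rank_two` is hypothetical.

```python
import numpy as np

def fraction_rank_two(n_samples=200_000, seed=0):
    """Estimate the probability that a Gaussian 2 x 2 x 2 tensor has rank two."""
    rng = np.random.default_rng(seed)
    T = rng.standard_normal((n_samples, 2, 2, 2))
    A, B = T[..., 0], T[..., 1]        # the two frontal slices of each sample
    det_a = np.linalg.det(A)
    det_b = np.linalg.det(B)
    # det(xA + yB) = det(A) x^2 + mixed * x y + det(B) y^2 for 2 x 2 matrices,
    # so the mixed coefficient is det(A + B) - det(A) - det(B).
    mixed = np.linalg.det(A + B) - det_a - det_b
    disc = mixed**2 - 4.0 * det_a * det_b
    return float(np.mean(disc > 0))
```

With the default sample size the estimate should lie close to \(\frac{\pi }{4} \approx 0.785\), up to the usual sampling error.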

12 Conclusions

We have extended the alternating maximization method (AMM) and the modified alternating maximization method (MAMM), given in [15] for the computation of best rank one approximation, to best r-approximations. We have also presented new algorithms, namely the 2-alternating maximization method variant (2AMMV) and a Newton method for best r-approximation (Newton-2). We have provided closed form solutions for computing the \(D\mathbf{F}\) matrix in Newton-2. We implemented Newton-1 for best rank one approximation [14] and Newton-2. From the simulations, we have found that for rank one approximation of both large and small tensors, Newton-1 performed the best. For higher rank approximation, the best performers were 2AMMV for large tensors and AMM or MAMM for small tensors.

Notes

1. This work was supported by NSF grant DMS-1216393.

References

Achlioptas, D., McSherry, F.: Fast computation of low rank approximations. In: Proceedings of the 33rd Annual Symposium on Theory of Computing, Heraklion, pp. 1–18 (2001)

Bergqvist, G.: Exact probabilities for typical ranks of 2 × 2 × 2 and 3 × 3 × 2 tensors. Linear Algebra Appl. 438, 663–667 (2013)

de Lathauwer, L., de Moor, B., Vandewalle, J.: A multilinear singular value decomposition. SIAM J. Matrix Anal. Appl. 21, 1253–1278 (2000)

de Lathauwer, L., de Moor, B., Vandewalle, J.: On the best rank-1 and rank-(R 1, R 2, …, R N ) approximation of higher-order tensors. SIAM J. Matrix Anal. Appl. 21, 1324–1342 (2000)

Deshpande, A., Vempala, S.: Adaptive sampling and fast low-rank matrix approximation, Electronic Colloquium on Computational Complexity, Report No. 42 pp. 1–11 (2006)

de Silva, V., Lim, L.-H.: Tensor rank and the ill-posedness of the best low-rank approximation problem. SIAM J. Matrix Anal. Appl. 30, 1084–1127 (2008)

Drineas, P., Kannan, R., Mahoney, M.W.: Fast Monte Carlo algorithms for matrices I–III: computing a compressed approximate matrix decomposition. SIAM J. Comput. 36, 132–206 (2006)

Eldén, L., Savas, B.: A Newton-Grassmann method for computing the best multilinear rank-(r 1, r 2, r 3) approximation of a tensor. SIAM J. Matrix Anal. Appl. 31, 248–271 (2009)

Friedland, S.: Nonnegative definite hermitian matrices with increasing principal minors. Spec. Matrices 1, 1–2 (2013)

Friedland, S.: MATRICES, a book draft in preparation. http://homepages.math.uic.edu/~friedlan/bookm.pdf, to be published by World Scientific

Friedland, S., Kaveh, M., Niknejad, A., Zare, H.: Fast Monte-Carlo low rank approximations for matrices. In: Proceedings of IEEE Conference SoSE, Los Angeles, pp. 218–223 (2006)

Friedland, S., Mehrmann, V.: Best subspace tensor approximations, arXiv:0805.4220v1

Friedland, S., Mehrmann, V., Miedlar, A., Nkengla, M.: Fast low rank approximations of matrices and tensors. J. Electron. Linear Algebra 22, 1031–1048 (2011)

Friedland, S., Mehrmann, V., Pajarola, R., Suter, S.K.: On best rank one approximation of tensors. http://arxiv.org/pdf/1112.5914v1.pdf

Friedland, S., Mehrmann, V., Pajarola, R., Suter, S.K.: On best rank one approximation of tensors. Numer. Linear Algebra Appl. 20, 942–955 (2013)

Friedland, S., Ottaviani, G.: The number of singular vector tuples and uniqueness of best rank one approximation of tensors. Found. Comput. Math. 14, 1209–1242 (2014)

Friedland, S., Torokhti, A.: Generalized rank-constrained matrix approximations. SIAM J. Matrix Anal. Appl. 29, 656–659 (2007)

Frieze, A., Kannan, R., Vempala, S.: Fast Monte-Carlo algorithms for finding low-rank approximations. J. ACM 51, 1025–1041 (2004)

Golub, G.H., Van Loan, C.F.: Matrix Computations, 3rd edn. Johns Hopkins University Press, Baltimore (1996)

Goreinov, S.A., Oseledets, I.V., Savostyanov, D.V., Tyrtyshnikov, E.E., Zamarashkin, N.L.: How to find a good submatrix. In: Matrix Methods: Theory, Algorithms and Applications, pp. 247–256. World Scientific Publishing, Hackensack (2010)

Goreinov, S.A., Tyrtyshnikov, E.E., Zamarashkin, N.L.: A theory of pseudo-skeleton approximations of matrices. Linear Algebra Appl. 261, 1–21 (1997)

Goreinov, S.A., Tyrtyshnikov, E.E.: The maximum-volume concept in approximation by low-rank matrices. Contemp. Math. 280, 47–51 (2001)

Grasedyck, L.: Hierarchical singular value decomposition of tensors. SIAM J. Matrix Anal. Appl. 31, 2029–2054 (2010)

Grasedyck, L., Kressner, D., Tobler, C.: A literature survey of low-rank tensor approximation techniques. GAMM-Mitteilungen 36, 53–78 (2013)

Hackbusch, W.: Tensorisation of vectors and their efficient convolution. Numer. Math. 119, 465–488 (2011)

Hackbusch, W.: Tensor Spaces and Numerical Tensor Calculus. Springer, Heidelberg (2012)

Hillar, C.J., Lim, L.-H.: Most tensor problems are NP-hard. J. ACM 60, Art. 45, 39 pp. (2013)

Horn, R.A., Johnson, C.R.: Matrix Analysis. Cambridge University Press, Cambridge/New York (1988)

Khoromskij, B.N.: Methods of Tensor Approximation for Multidimensional Operators and Functions with Applications, Lecture at the workshop, Schnelle Löser für partielle Differentialgleichungen, Oberwolfach, 18.-23.05 (2008)

Kolda, T.G., Bader, B.W.: Tensor decompositions and applications. SIAM Rev. 51, 455–500 (2009)

Lehoucq, R.B., Sorensen, D.C., Yang, C.: ARPACK User’s Guide: Solution of Large-Scale Eigenvalue Problems With Implicitly Restarted Arnoldi Methods (Software, Environments, Tools). SIAM, Philadelphia (1998)

Lim, L.-H.: Singular values and eigenvalues of tensors: a variational approach. In: Proceedings of IEEE International Workshop on Computational Advances in Multi-Sensor Adaptive Processing (CAMSAP ’05), Puerto Vallarta, vol. 1, pp. 129–132 (2005)

Mahoney, M.W., Maggioni, M., Drineas, P.: Tensor-CUR decompositions for tensor-based data. In: Proceedings of the 12th Annual ACM SIGKDD Conference, Philadelphia, pp. 327–336 (2006)

Oseledets, I.V.: On a new tensor decomposition. Dokl. Math. 80, 495–496 (2009)

Oseledets, I.V.: Tensor-Train decompositions. SIAM J. Sci. Comput. 33, 2295–2317 (2011)

Oseledets, I.V., Tyrtyshnikov, E.E.: Breaking the curse of dimensionality, or how to use SVD in many dimensions. SIAM J. Sci. Comput. 31, 3744–3759 (2009)

Rudelson, M., Vershynin, R.: Sampling from large matrices: an approach through geometric functional analysis. J. ACM 54, Art. 21, 19 pp. (2007)

Sarlos, T.: Improved approximation algorithms for large matrices via random projections. In: Proceedings of the 47th Annual IEEE Symposium on Foundations of Computer Science (FOCS), Berkeley, pp. 143–152 (2006)

Savas, B., Lim, L.-H.: Quasi-Newton methods on Grassmannians and multilinear approximations of tensors. SIAM J. Sci. Comput. 32, 3352–3393 (2010)

Stewart, G.W.: On the early history of the singular value decomposition. SIAM Rev. 35, 551–566 (1993)

ten Berge, J.M.F., Kiers, H.A.L.: Simplicity of core arrays in three-way principal component analysis and the typical rank of p × q × 2 arrays. Linear Algebra Appl. 294, 169–179 (1999)

Tucker, L.R.: Some mathematical notes on three-mode factor analysis. Psychometrika 31, 279–311 (1966)

Zhang, T., Golub, G.H.: Rank-one approximation to high order tensors. SIAM J. Matrix Anal. Appl. 23, 534–550 (2001)

Acknowledgements

We thank Daniel Kressner for his remarks.

Copyright information

© 2015 Springer International Publishing Switzerland

About this chapter

Cite this chapter

Friedland, S., Tammali, V. (2015). Low-Rank Approximation of Tensors. In: Benner, P., Bollhöfer, M., Kressner, D., Mehl, C., Stykel, T. (eds) Numerical Algebra, Matrix Theory, Differential-Algebraic Equations and Control Theory. Springer, Cham. https://doi.org/10.1007/978-3-319-15260-8_14

DOI: https://doi.org/10.1007/978-3-319-15260-8_14

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-15259-2

Online ISBN: 978-3-319-15260-8

eBook Packages: Mathematics and Statistics (R0)