Abstract

Although several methods are available to compute the multilinear rank of multi-way arrays, they are not sufficiently robust with respect to the noise. In this paper, we propose a novel Multilinear Tensor Decomposition (MTD) method, namely R-MTD (Robust MTD), which is able to identify the multilinear rank even in the presence of noise. The latter is based on sparsity and group sparsity of the core tensor imposed by means of the \(l_1\) norm and the mixed-norm, respectively. After several iterations of R-MTD, the underlying core tensor is sufficiently well estimated, which allows for an efficient use of the minimum description length approach and an accurate estimation of the multilinear rank. Computer results show the good behavior of R-MTD.

Access provided by CONRICYT-eBooks. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

The rank estimation problem has been considered for several decades. The noisy matrix case was firstly considered. Methods based on the computation of singular values and the use of either Akaike’s information criterion [1], the Bayesian Information Criterion (BIC) [2] or the Minimum Description Length (MDL) principle [3] were proposed. The singular values of a low-rank noiseless matrix can be sorted in decreasing order (see the red diamonds in Fig. 1). Then the estimated rank \(R^{est}\) can be computed by searching the breaking point of the singular value curve, which minimizes the MDL criterion [3]:

where \(\lambda _{i}\) is the i-th highest singular value and where \((I\times J)\) is the size of the considered matrix. Unfortunately, when the matrix is strongly noisy the MDL approach has difficulty finding the breaking point (see the blue circles in Fig. 1).

The rank estimation problem was also considered for arrays with more than two entries, commonly named tensors. Contrarily to the matrix case, there are several definitions of tensor rank. In the following, we will consider the definition related to the Multilinear Tensor Decomposition (MTD) model [4]:

where \(\mathbf {A}\in \mathbb {R}^{I_{1}\times R_{1}}\), \(\mathbf {B}\in \mathbb {R}^{I_{2}\times R_{2}}\) and \(\mathbf {C}\in \mathbb {R}^{I_{3}\times R_{3}}\) are the loading matrices and where \(\varvec{\mathcal {G}}\in \mathbb {R}^{R_{1}\times R_{2}\times R_{3}}\) is the core tensor of a third order tensor \(\varvec{\mathcal {F}}\). Note that \(\mathbf {X}_{:,j}\) denotes the j-th column of \(\mathbf {X}\) and \(\circ \) denotes the vector outer product operator. The multilinear rank of \(\varvec{\mathcal {F}}\) is given by the minimal values of \(R_{1}\), \(R_{2}\) and \(R_{3}\) for which the equality (2) holds. The classical Higher-Order Singular Value Decomposition (HOSVD) [5] is a special MTD, which is a direct extension of the matrix SVD to tensors. While the multilinear rank can be derived from the rank of the unfolding matrices of the considered tensor, the latter matrix ranks are difficult to estimate as explained previously. Thus authors proposed to minimize the nuclear norm of each unfolding matrix in order to find the minimal MTD [8] and consequently the multilinear rank. More recently, the SCORE algorithm [7], based on the modified eigenvalues of the considered noisy tensor, was proposed too. Unfortunately, such methods are not sufficiently robust with respect to the presence of noise.

In this paper, we propose a novel MTD method, namely R-MTD (Robust MTD), which is able to identify the multilinear rank even in the presence of noise. The latter is based on sparsity and group sparsity of the core tensor imposed by means of the \(l_1\) norm and the mixed-norm, respectively. Note that the mixed-norm was proved to be a convex envelope of rank and used to provide robust canonical polyadic and block term decomposition algorithms [9, 10]. After several iterations of R-MTD, the underlying core tensor is sufficiently well estimated, which allows for an efficient use of the MDL approach and an accurate estimation of the multilinear rank. Computer results show the good behavior of R-MTD.

2 Notions and Preliminaries

A scalar is denoted by an italic letter, e.g., x and I. A vector is denoted by a bold lowercase letter, e.g., \(\mathbf {x}\in \mathbb {R}^{I}\) and a matrix is represented by a bold capital letter, e.g., \(\mathbf {X}\in \mathbb {R}^{I\times J}\) and specially, \(\mathbf {I}\) is the identity matrix. The vectorization of \(\mathbf {X}\) is denoted by \(\mathrm {vec}(\mathbf {X})\in \mathbb {R}^{IJ\times 1}\). \(\mathbf {X}^{\dag }\) is the pseudo inverse of matrix \(\mathbf {X}\). The nuclear norm of a matrix \(\mathbf {X}\in \mathbb {R}^{I\times J}\) with rank r is equal to the sum of its singular values, i.e., \(\Vert \mathbf {X}\Vert _{*}=\sum _{i=1}^{r}\sigma _{i}\), and \(\Vert \mathbf {X}\Vert \) is the largest singular value. The symbol \(\mathrm {rank(.)}\) denotes the rank operator. The \(l_{0}\) norm, \(l_{1}\) norm and Frobenius norm of \(\mathbf {X}\in \mathbb {R}^{I\times J}\) are defined by \(\Vert \mathbf {X}\Vert _{0}=\sum _{i=1}^{I}\,\sum _{j=1}^{J}\,\mathbf {X}_{i,j}/{\mathbf {X}_{i,j}}\) with \({\mathbf {X}_{i,j}}\not =0\), \(\Vert \mathbf {X}\Vert _{1}=\sum _{i=1}^{I}\,\sum _{j=1}^{J}\,|\mathbf {X}_{i,j}|\) and \(\Vert \mathbf {X}\Vert _{F}=\sqrt{\sum _{i=1}^{I}\,\sum _{j=1}^{J}\,\mathbf {X}^{2}_{i,j}}\), respectively. The mixed-norm pair of \(\mathbf {X}\in \mathbb {R}^{I\times J}\) is given by  and

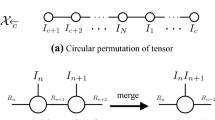

and  where Tr[.] is the trace operator, where \(\mathbf {\Phi }\) is a diagonal matrix with \(\mathbf {\Phi }_{i,i}=1/\sqrt{\sum _{j = 1}^{J}\,\mathbf {X}^{2}_{i,j}}\) denoting the (i, i)-th component of \(\mathbf {\Phi }\) and where \(\mathbf {\Psi }\) is a diagonal matrix with \(\mathbf {\Psi }_{j,j}=1/\sqrt{\sum _{i = 1}^{I}\,\mathbf {X}^{2}_{i,j}}\) standing for the (j, j)-th component of \(\mathbf {\Psi }\). Note that this trace compact definition is convenient for a convex optimization if \(\mathbf {\Phi }\) and \(\mathbf {\Psi }\) are fixed as weight matrices. A high-order tensor is denoted by a bold calligraphic letter, e.g., \(\varvec{\mathcal {X}}\in \mathbb {R}^{I_{1}\times I_{2}\times \dots \times I_{N}}\). The symbol \(\otimes \) denotes the Kronecker product operator. The n-th mode unfolding matrix of the tensor \(\varvec{\mathcal {X}}\in \mathbb {R}^{I_{1}\times I_{2}\times \dots \times I_{N}}\) is denoted by \(\mathbf {X}^{(n)}\in \mathbb {R}^{I_{n}\times (I_{n+1}I_{n+2}\dots I_{N}I_{1}I_{2}\dots I_{n-1})}\). The sub-tensor \(\varvec{\mathcal {X}}_{i_{n}=k}\) is obtained by fixing the n-th index to k. The scalar product of two tensors \(\varvec{\mathcal {X}}\in \mathbb {R}^{I_{1}\times I_{2}\times \dots \times I_{N}}\) and \(\varvec{\mathcal {Y}}\in \mathbb {R}^{I_{1}\times I_{2}\times \dots \times I_{N}}\) is defined by \(\left\langle \varvec{\mathcal {X}}, \varvec{\mathcal {Y}} \right\rangle =\sum _{i_1=1}^{I_1}\cdots \sum _{i_N=1}^{I_N}\, \varvec{\mathcal {X}}_{i_1, \cdots , i_N} \, \varvec{\mathcal {Y}}_{i_1,\cdots , i_N}\). The Frobenius norm of tensor \(\varvec{\mathcal {X}}\) is defined by \(\Vert \varvec{\mathcal {X}}\Vert _F = \sqrt{\sum _{i_1\ldots i_N}\,\varvec{\mathcal {X}}^{2}_{i_1,\ldots , i_N}}\).

where Tr[.] is the trace operator, where \(\mathbf {\Phi }\) is a diagonal matrix with \(\mathbf {\Phi }_{i,i}=1/\sqrt{\sum _{j = 1}^{J}\,\mathbf {X}^{2}_{i,j}}\) denoting the (i, i)-th component of \(\mathbf {\Phi }\) and where \(\mathbf {\Psi }\) is a diagonal matrix with \(\mathbf {\Psi }_{j,j}=1/\sqrt{\sum _{i = 1}^{I}\,\mathbf {X}^{2}_{i,j}}\) standing for the (j, j)-th component of \(\mathbf {\Psi }\). Note that this trace compact definition is convenient for a convex optimization if \(\mathbf {\Phi }\) and \(\mathbf {\Psi }\) are fixed as weight matrices. A high-order tensor is denoted by a bold calligraphic letter, e.g., \(\varvec{\mathcal {X}}\in \mathbb {R}^{I_{1}\times I_{2}\times \dots \times I_{N}}\). The symbol \(\otimes \) denotes the Kronecker product operator. The n-th mode unfolding matrix of the tensor \(\varvec{\mathcal {X}}\in \mathbb {R}^{I_{1}\times I_{2}\times \dots \times I_{N}}\) is denoted by \(\mathbf {X}^{(n)}\in \mathbb {R}^{I_{n}\times (I_{n+1}I_{n+2}\dots I_{N}I_{1}I_{2}\dots I_{n-1})}\). The sub-tensor \(\varvec{\mathcal {X}}_{i_{n}=k}\) is obtained by fixing the n-th index to k. The scalar product of two tensors \(\varvec{\mathcal {X}}\in \mathbb {R}^{I_{1}\times I_{2}\times \dots \times I_{N}}\) and \(\varvec{\mathcal {Y}}\in \mathbb {R}^{I_{1}\times I_{2}\times \dots \times I_{N}}\) is defined by \(\left\langle \varvec{\mathcal {X}}, \varvec{\mathcal {Y}} \right\rangle =\sum _{i_1=1}^{I_1}\cdots \sum _{i_N=1}^{I_N}\, \varvec{\mathcal {X}}_{i_1, \cdots , i_N} \, \varvec{\mathcal {Y}}_{i_1,\cdots , i_N}\). The Frobenius norm of tensor \(\varvec{\mathcal {X}}\) is defined by \(\Vert \varvec{\mathcal {X}}\Vert _F = \sqrt{\sum _{i_1\ldots i_N}\,\varvec{\mathcal {X}}^{2}_{i_1,\ldots , i_N}}\).

3 The R-MTD Method

3.1 Preliminary Tools

First let’s recall the definition of a convex envelope which will be used in the following analysis.

Definition 1

(Lower convex envelope). Let \( f : \mathbb {R}^{n}\rightarrow \mathbb {R}\) be a real-valued function. The convex envelope of \( f \), represented by \(\mathcal {C}_{f}\), is the convex pointwise largest function \(\mathcal {C}_{f} : \mathbb {R}^{n}\rightarrow \mathbb {R}\) which is pointwise less than \( f \). In other words, we have \(\mathcal {C}_{f} = sup\{ g : \mathbb {R}^{n}\rightarrow \mathbb {R} \, | \, g ~{ is~convex~and~} g (x) \le f (x) {~ for~ any~ } x\in \mathbb {R}^{n} \}\).

Now let’s study the lower convex envelopes of rank. The nuclear norm of \(\mathbf {X}\) is one of them in the norm ball, i.e., \(\Vert \mathbf {X}\Vert \le 1\), as shown in [12, Theorem 1] and since the low rank constraint involves a NP-hard optimization problem it is better to minimize the nuclear norm. On the other hand, an interesting relationship between the nuclear norm and the mixed-norm was established in [11, Proposition 1] for a thin matrix case, i.e., \(\mathbf {X}\in \mathbb {R}^{m \times n}\) with \(m>n\). We extended this result by means of the following Theorem 1 and Corollary 1.

Theorem 1

Given any matrix \(\mathbf {X}\in \mathbb {R}^{m \times n}\) and its orthonormal subspace decomposition denoted by \(\mathbf {X}=\mathbf {D}\varvec{\alpha }\) and \(\mathbf {X}=\varvec{\theta }\mathbf {Z}\), where \(\mathbf {D}\in \mathbb {R}^{m \times m}\) and \(\mathbf {Z}\in \mathbb {R}^{n \times n}\) are orthonormal matrices, with \(\varvec{\alpha }\in \mathbb {R}^{m \times n}\) and \(\varvec{\theta }\in \mathbb {R}^{m \times n}\), the mixed-norms of \(\varvec{\alpha }\) and \(\varvec{\theta }\) are larger than or equal to the nuclear norm of \(\mathbf {X}\), i.e. \(\Vert \varvec{\alpha }\Vert _{2,1} \ge \Vert \mathbf {X}\Vert _*\) and \(\Vert \varvec{\theta }\Vert _{1,2} \ge \Vert \mathbf {X}\Vert _*\).

Then we can easily derive the following corollary by fixing \(\mathbf {D}\) and \(\mathbf {Z}\) equal to the identity matrix in Theorem 1.

Corollary 1

We have \(\Vert \mathbf {X}\Vert _{2,1} \ge \Vert \mathbf {X}\Vert _*\) and \(\Vert \mathbf {X}\Vert _{1,2} \ge \Vert \mathbf {X}\Vert _*\).

In fact, for a matrix with full column rank or full row rank, the nuclear norm is not the tightest convex envelope of rank and the mixed-norm is more qualified to be a lower convex envelop of rank compared with the nuclear norm based on the Definition 1 and the explanation is given in the following theorem.

Theorem 2

Given any matrix \(\mathbf {X}\in \mathbb {R}^{m \times n}\) with linearly independent rows or columns, we have:

-

1.

If \(\mathbf {X}\) has linearly independent rows, which means that \(\mathrm {rank(\mathbf {X})}=m\) and \(m\le n\), then we get \(\mathrm {rank(\mathbf {X})}\ge \frac{\Vert \mathbf {X}\Vert _{2,1}}{\Vert \mathbf {X}\Vert } \ge \frac{\Vert \mathbf {X}\Vert _{*}}{\Vert \mathbf {X}\Vert }\).

-

2.

If \(\mathbf {X}\) has linearly independent columns, which means that \(\mathrm {rank(\mathbf {X})}=n\) and \(m\ge n\), then we get \(\mathrm {rank(\mathbf {X})}\ge \frac{\Vert \mathbf {X}\Vert _{1,2}}{\Vert \mathbf {X}\Vert } \ge \frac{\Vert \mathbf {X}\Vert _{*}}{\Vert \mathbf {X}\Vert }\).

Consequently, we will use the mixed-norm in the following subsection in order to impose the low rank property. Besides, we will also minimize the \(l_{1}\) norm of the over-estimated core tensor in order to vanish the residual nonzero elements for which the absolute values are close to zero.

3.2 Towards the R-MTD Algorithm

In order to guarantee a sufficient group sparsity of each unfolding matrix along the different dimensions, and each unfolding matrix consist with linear independent rows, the proposed method is implemented under the following assumption: \(R_{i}\ll \mathrm {min}\{I_{i}, \prod _{k=1,k\not =i}^{3}I_{k}\}\) and \(R_{i}\le \prod _{k=1,k\not =i}^{3}R_{k}\), \(i=1,2,3\).

R-MTD for Rank Estimation: The mixed-norm \(\big \Vert \widehat{\mathbf {G}}^{(i)}\big \Vert _{2,1}\) is considered as a lower convex envelope of rank in the objective function and the other mixed-norm \(\big \Vert \widehat{\mathbf {G}}^{(i)}\big \Vert _{1,2}\) is also adopted as a convex upper bound of the nuclear norm for a more robust estimation. The objective function is presented below for a third order multilinear tensor as an example but it is not hard to generalize it to higher orders:

where \(\widehat{\varvec{\mathcal {G}}}\) is the over-estimated core tensor, where \(\widehat{\mathbf {G}}^{(i)}\) are the unfolding matrices along the different dimensions and where \(\widehat{\mathbf {A}}\), \(\widehat{\mathbf {B}},\widehat{\mathbf {C}}\) are the corresponding over-estimated loading matrices. Here, we should note that \(\widehat{R}_{i}\) should be larger than \({R}_{i}\). The minimization problem (3) is solved by means of the Alternating Directions Method of Multiplier (ADMM) [14]:

The augmented Lagrangian function \(\mathcal {L}\) of the variables \(\widehat{\varvec{\mathcal {G}}}\), \(\widehat{\mathbf {A}}\), \(\widehat{\mathbf {B}}\), \(\widehat{\mathbf {C}}\), \(\widehat{\varvec{\mathcal {P}}}\), \(\widehat{\varvec{\mathcal {Y}}}\), \(\beta \), \(\mathbf {\Phi }^{(i)}\) and \(\mathbf {\Psi }^{(i)}\) can be written as follows using the mixed-norm definition given in Sect. 2:

where the parameters \(\lambda _{i}\), u and \(\beta \) are penalty coefficients and where \(\widehat{\varvec{\mathcal {Y}}}\) is the Lagrangian multiplier tensor. Now let’s derive the update rule of each variable. The mode-1 unfolding matrix of the core tensor \(\widehat{\varvec{\mathcal {G}}}\) is computed from the following gradient equation:

The solution of (6) can be calculated by Encapsulating Sum in [15], the \((k+1)\)-th iteration of \(\widehat{\mathbf {G}}^{(1)}\) is given by:

Consecutively, \(\widehat{\mathbf {G}}^{(2)}\) and \(\widehat{\mathbf {G}}^{(3)}\) at the \((k+1)\)-th iteration can be computed as follows:

Note that, the weighting matrices \(\mathbf {\Phi }_{k}^{(1)}\) and \(\mathbf {\Psi }_{k}^{(1)}\) are derived from \(\widehat{\mathbf {G}}_{k}^{(1)}\) as the definition in Sect. 2. For the computation of \(\mathbf {\Phi }_{k}^{(2)}\) and \(\mathbf {\Psi }_{k}^{(2)}\), they are calculated using the 2-th mode unfolding matrix of the updated core tensor \({\widehat{\mathbf {G}}_{k+1}^{(1)}}\). Identically, \(\mathbf {\Phi }_{k}^{(3)}\) and \(\mathbf {\Psi }_{k}^{(3)}\) are given using the updated core tensor \({\widehat{\mathbf {G}}_{k+1}^{(2)}}\). Then, updating factor matrices by using tensor \(\widehat{\varvec{\mathcal {G}}}_{k+1}\), i.e., \({\widehat{\mathbf {G}}_{k+1}^{(3)}}\). The sub-problem function about factor matrix \(\widehat{\mathbf {A}}\) in \((k+1)\)-th iteration is given as following:

The updated \(\widehat{\mathbf {A}}_{k+1}\) matrix at the \((k+1)\)-th iteration is given by:

Using \(\widehat{\mathbf {A}}_{k+1}\), \(\widehat{\mathbf {B}}_{k+1}\) at the \((k+1)\)-th iteration can be computed as follows:

Similarly, \(\widehat{\mathbf {C}}_{k+1}\) at the \((k+1)\)-th iteration is given by:

Regarding variable \(\widehat{\varvec{\mathcal {P}}}\), we have to minimize:

An equivalent problem of (14) is given by:

The solution of \(\widehat{\varvec{\mathcal {P}}}\) in problem (15) has been solved in [13] as follows:

where \(\mathbb {S}_{\tau }(x)=\mathrm {sign}(x)(|x|-\tau ,0), x\in \mathbb {R}\) is the soft-thresholding operator and it is used elementwise. The multiplier tensor \(\widehat{\varvec{\mathcal {Y}}}\) in \((k+1)\)-th iteration is calculated based on the following update rule:

The parameter \(\beta \) in \((k+1)\)-th iteration is updated as:

Finally, the proposed algorithm R-MTD stops when the convergence criterion is reached, i.e.,

with \(\mathrm {error}_{k+1}=\big \Vert \varvec{\mathcal {T}}-\widehat{\varvec{\mathcal {G}}}_{k+1}\times _{1}\widehat{\mathbf {A}}_{k+1}\times _{2}\widehat{\mathbf {B}}_{k+1}\times _{3}\widehat{\mathbf {C}}_{k+1}\Vert _{F}\) and \(\mathrm {error}_{k}=\big \Vert \varvec{\mathcal {T}}-\widehat{\varvec{\mathcal {G}}}_{k}\times _{1}\widehat{\mathbf {A}}_{k}\times _{2}\widehat{\mathbf {B}}_{k}\times _{3}\widehat{\mathbf {C}}_{k} \Vert _{F}\). Eventually, the values \(R_{i}^{est}\) are estimated using the MDL criterion (2) on the singular values derived from the SVD of the unfolding matrices \(\mathbf {\widehat{\mathbf {G}}}_{k+1}^{(i)}, i=1,2,3\).

R-MTD for Denoising: Once the multilinear rank is estimated, we can use it to replace the over-estimated rank in (3) and the penalty function for denoising is rewritten as follows:

Here, the weight parameters of the constraints are set to zero, i.e., \(\lambda _{i}=\gamma =0, i=1,2,3\). With these zero weight parameters, we can repeat the same procedure as described previously.

4 Experiment Results

In this section, the noisy simulated data is utilized to test the performance of R-MTD on rank estimation and signal denoising. A comparative study of our method with the recent and efficient SCORE algorithm [7] is also provided. For the proposed method, the selection of parameter \(\lambda _{i}, i=1,2,3\) should be larger when the size of underlying core tensor is smaller than the over-estimated core tensor size. Typically, these coefficients are set as \(\lambda _{i}=5, i=1,2,3\) and the other parameters \(\mu \), \(\beta \) and \(\rho \) are fixed as 0.025, 0.3 and 20, respectively. The selected parameters of SCORE method are chosen as the paper [7] advised. All results reported in this section are averaged over 100 Monte Carlo (MC) realizations.

Experimental Setting. The size of \(\varvec{\mathcal {F}}\) is fixed with (\(I_{1}=I_{2}=I_{3}=100\)) and we consider different sizes of the core tensor \(\varvec{\mathcal {G}}\): (\(R_{1}=R_{2}=R_{3}=3\)), (\(R_{1}=R_{2}=R_{3}=4\)) and (\(R_{1}=R_{2}=R_{3}=5\)). All the elements of factor matrices and the core tensor follow a Gaussian distribution. To implement our method, the over-estimated core size is set to \(\widehat{R}_{1}=\widehat{R}_{2}=\widehat{R}_{3}=10\). The noise tensor \(\varvec{\mathcal {E}}\in \mathbb {R}^{I_{1} \times I_{2} \times I_{3}}\) also follows Gaussian distribution with different Signal-to-Noise Ratio (SNR). Finally, the noisy tensor \(\varvec{\mathcal {T}}\) is obtained as follows:

where the parameter \(\sigma \) controls the SNR, i.e. \(\mathrm {SNR} = -20\mathrm {log}_{10}(\sigma )\).

Rank Estimation Performance. To evaluate the performance of all methods on rank estimation, we propose two criteria. The first one named Accuracy Rate (AR) measures the good estimate rate of all ranks, i.e. \(R_{1}=R_{1}^{est}, R_{2}=R_{2}^{est}\) and \(R_{3}=R_{3}^{est}\), and is defined as the following:

The second criterion, called Average Rank Estimation Error (AREE), measures the deviation between the estimated ranks and exact ranks. It is given by:

Figure 2, displays the obtained results of the rank estimation for different SNR and different sizes of the core tensor. We can see that the AR criterion obtained for R-MTD and SCORE is increasing as the SNR increases (first line of Fig. 2). We also observed that, for all cases of the size of the core tensor, the robustness to noise of the R-MTD outperforms the SCORE whatever the SNR is. The second line of Fig. 2 shows the AREE criterion. Clearly, the rank estimation errors of R-MTD are always smaller than those of the SCORE method for all scenarios and SNR.

Signal Denoising Efficiency. In this section, we consider the effectiveness and robustness of the proposed method on signal denoising. To do so, four combination cases of rank estimation methods and tensor decomposition ones are considered: R-MTD+R-MTD, R-MTD+HOOI [6], SCORE+T-HOSVD [5] and SCORE+HOOI. The reconstruction error is defined as the Average Relative Error (ARE), given by:

Figure 3 gives the effectiveness of the four compared methods. For SNR higher or equal to \(-5\) dB, all methods seem to have similar performances. Regarding the lower SNR, we can see that the performances of the SCORE+HOOI, R-MTD+R-MTD and R-MTD+HOOI are better than the SCORE+T-HOSVD. This can be explained by the fact that the T-HOSVD method only extract the first several components corresponding to the estimated rank without any denoising process, it cannot gives excellent denoising result for lower SNR cases. Besides, although AR results of the R-MTD is more effective than the SCORE, the denoising efficiency of SCORE+HOOI is almost the same as the efficiency of R-MTD+R-MTD and R-MTD+HOOI because of the smaller difference of AREE between the SCORE and the R-MTD.

5 Conclusion

We have proposed a new MTD method, named R-MTD, that permits to identify the multilinear rank of noisy data. To do so, a sparsity and group sparsity of the core tensor are imposed by means of the \(l_{1}\) norm and the mixed-norm, respectively. More precisely, we first over-estimate the core tensor, then an optimal core tensor is estimated from noisy multilinear tensor after several iterations of the R-MTD algorithm. We also assessed the importance of a good estimation of the rank of the core tensor to signal denoising. Different experiments conducted using simulated data demonstrated the effectiveness of the R-MTD algorithm both for rank estimation and for data denoising.

References

Wax, M., Kailath, T.: Detection of signals by information theoretic criteria. IEEE Trans. Acoust. Speech Signal Process. 33(2), 387–392 (1985)

Schwarz, G.: Estimating the dimension of a model. Ann. Stat. 6(2), 461–464 (1978)

Rissanen, J.: Modeling by shortest data description. Automatica 14(5), 465–471 (1978)

Tucker, L.R.: Implications of factor analysis of three-way matrices for measurement of change. In: Problems in Measuring Change, pp. 122–137 (1963)

De Lathauwer, L., De Moor, B., Vandewalle, J.: A multilinear singular value decomposition. SIAM J. Matrix Anal. Appl. 21(4), 1253–1278 (2000)

De Lathauwer, L., De Moor, B., Vandewalle, J.: On the best rank-1 and rank-(\(\mathit{R}_{1}, \mathit{R}_{2},\ldots, \mathit{R}_{n}\)) approximation of higher-order tensors. SIAM J. Matrix Anal. 21(4), 1324–1342 (2000)

Yokota, T., Lee, N., Cichocki, A.: Robust multilinear tensor rank estimation using higher order singular value decomposition and information criteria. IEEE Trans. Signal Process. 65(5), 1196–1206 (2017)

Xie, Q., Zhao, Q., Meng, D., Xu, Z.: Kronecker-basis-representation based tensor sparsity and its applications to tensor recovery. IEEE Trans. Pattern Anal. Mach. Intell. (2017)

Han, X., Albera, L., Kachenoura, A., Senhadji, L., Shu, H.: Low rank canonical polyadic decomposition of tensors based on group sparsity. In: IEEE 25th European Signal Processing Conference (EUSIPCO), pp. 668–672 (2017)

Han, X., Albera, L., Kachenoura, A., Senhadji, L., Shu, H.: Block term decomposition with rank estimation using group sparsity. In: Computational Advances in Multi-Sensor Adaptive Processing (CAMSAP) (2017)

Shu, X., Porikli, F., Ahuja, N.: Robust orthonormal subspace learning: efficient recovery of corrupted low-rank matrices. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 3874–3881 (2014)

Fazel, M.: Matrix rank minimization with applications. Ph.D. dissertation, Ph.D. thesis, Stanford University (2002)

Donoho, D.L., Johnstone, I.M.: Adapting to unknown smoothness via wavelet shrinkage. J. Am. Stat. Assoc. 90(432), 1200–1224 (1995)

Boyd, S., Parikh, N., Chu, E., Peleato, B., Eckstein, J.: Distributed optimization and statistical learning via the alternating direction method of multipliers. Found. \(Trends^{\textregistered }\) Mach. Learn. 3(1), 1–122 (2011)

Petersen, K.B., Pedersen, M.S., et al.: The Matrix Cookbook. Technical University of Denmark, pp. 7–15 (2008)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2018 Springer International Publishing AG, part of Springer Nature

About this paper

Cite this paper

Han, X., Albera, L., Kachenoura, A., Shu, H., Senhadji, L. (2018). Robust Multilinear Decomposition of Low Rank Tensors. In: Deville, Y., Gannot, S., Mason, R., Plumbley, M., Ward, D. (eds) Latent Variable Analysis and Signal Separation. LVA/ICA 2018. Lecture Notes in Computer Science(), vol 10891. Springer, Cham. https://doi.org/10.1007/978-3-319-93764-9_1

Download citation

DOI: https://doi.org/10.1007/978-3-319-93764-9_1

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-93763-2

Online ISBN: 978-3-319-93764-9

eBook Packages: Computer ScienceComputer Science (R0)