Abstract

In this paper we discuss the notion of singular vector tuples of a complex-valued \(d\)-mode tensor of dimension \(m_1\times \cdots \times m_d\). We show that a generic tensor has a finite number of singular vector tuples, viewed as points in the corresponding Segre product. We give a formula for the number of singular vector tuples. We show similar results for tensors with partial symmetry. We give analogous results for the homogeneous pencil eigenvalue problem for cubic tensors, i.e., \(m_1=\cdots =m_d\). We show the uniqueness of best approximations for almost all real tensors in the following cases: rank-one approximation; rank-one approximation for partially symmetric tensors (this approximation is also partially symmetric); rank-\((r_1,\ldots ,r_d)\) approximation for \(d\)-mode tensors.



1 Introduction

The object of this paper is to study two closely related topics: counting the number of singular vector tuples of complex tensors, and the uniqueness of a best rank-one approximation of real tensors. To state our results, we introduce notation that will be used in the paper. Unless stated otherwise, let \(\mathbb {F}\) be either the field of real numbers \(\mathbb {R}\) or the field of complex numbers \(\mathbb {C}\). For each \(\mathbf {x}\in \mathbb {F}^m\setminus \{\mathbf {0}\}\) we denote by \([\mathbf {x}]:=\mathrm {span}(\mathbf {x})\) the line through the origin spanned by \(\mathbf {x}\) in \(\mathbb {F}^m\). Then \(\mathbb {P}(\mathbb {F}^{m})\) is the space of all lines through the origin in \(\mathbb {F}^m\). We say that a property holds for generic \(\mathbf {x}\in \mathbb {F}^m\) (respectively, generic \([\mathbf {y}]\in \mathbb {P}(\mathbb {F}^m)\)) if there exists a subvariety \(U\subsetneq \mathbb {F}^m\) (respectively, \(V\subsetneq \mathbb {P}(\mathbb {F}^m)\)) such that the property holds for all \(\mathbf {x}\in \mathbb {F}^m\setminus U\) (respectively, all \([\mathbf {y}]\in \mathbb {P}(\mathbb {F}^m)\setminus V\)). A set \(S\subset \mathbb {F}^m\) is called closed if it is closed in the Euclidean topology. We say that a property \(P\) holds almost everywhere (a.e.) in \(\mathbb {R}^n\) if the set of points where \(P\) fails is contained in a measurable set \(S\subset \mathbb {R}^n\) of zero Lebesgue measure. Equivalently, we say that almost all (a.a.) \(\mathbf {x}\in \mathbb {R}^n\) satisfy \(P\).

For \(d\in \mathbb {N}\) denote \([d]:=\{1,\ldots ,d\}\). Let \(m_i\ge 2\) be an integer for \(i\in [d]\). Denote \(\mathbf {m}:=(m_1,\ldots ,m_d)\). Let \(\Pi _\mathbb {F}(\mathbf {m}):=\mathbb {P}(\mathbb {F}^{m_1})\times \ldots \times \mathbb {P}(\mathbb {F}^{m_d})\). We call \(\Pi _\mathbb {F}(\mathbf {m})\) the Segre product. Set \(\Pi (\mathbf {m}):=\Pi _\mathbb {C}(\mathbf {m})\). Denote by \(\mathbb {F}^{\mathbf {m}}=\mathbb {F}^{m_1\times \ldots \times m_d}:=\otimes _{i=1}^d \mathbb {F}^{m_i}\) the vector space of \(d\)-mode tensors \(\mathcal {T}=[t_{i_1,\ldots ,i_d}], i_j=1,\ldots ,m_j, j=1,\ldots ,d\) over \(\mathbb {F}\). (We assume that \(d\ge 3\) unless stated otherwise.) For an integer \(p\in [d]\) and for \(\mathbf {x}_{j_r}\in \mathbb {F}^{m_{j_r}}, r\in [p] \), we use the notation \(\otimes _{j_r,r\in [p]}\mathbf {x}_{j_r}:=\mathbf {x}_{j_1}\otimes \ldots \otimes \mathbf {x}_{j_p}\). For a subset \(P=\{j_1,\ldots ,j_p\}\subseteq [d]\) of cardinality \(p=|P|\), consider a \(p\)-mode tensor \(\mathcal {X}=[x_{i_{j_1},\ldots ,i_{j_p}}]\in \otimes _{j_r, r\in [p]}\mathbb {F}^{m_{j_r}}\), where \(j_1<\ldots <j_p\). For \(\mathcal {T}\in \mathbb {F}^{\mathbf {m}}\) define

\[ \mathcal {T}\times \mathcal {X}:=\sum _{i_{j_1}\in [m_{j_1}],\ldots ,i_{j_p}\in [m_{j_p}]} t_{i_1,\ldots ,i_d}\,x_{i_{j_1},\ldots ,i_{j_p}} \]

as a \((d-p)\)-mode tensor obtained by contraction on the indices \(i_{j_1},\ldots ,i_{j_p}\).

To motivate our results, let us consider the classical case of matrices, i.e., \(d=2\) and \(A\in \mathbb {R}^{m_1 \times m_2}\). We call a pair \((\mathbf {x}_1,\mathbf {x}_2)\in (\mathbb {R}^{m_1}\setminus \{\mathbf {0}\})\times (\mathbb {R}^{m_2}\setminus \{\mathbf {0}\})\) a singular vector pair if

\[ A\mathbf {x}_2=\lambda _1\mathbf {x}_1,\qquad A^\top \mathbf {x}_1=\lambda _2\mathbf {x}_2 \]

for some \(\lambda _1,\lambda _2\in \mathbb {R}\). For \(\mathbf {x}\in \mathbb {R}^m\) let \(\Vert \mathbf {x}\Vert :=\sqrt{\mathbf {x}^\top \mathbf {x}}\) be the Euclidean norm on \(\mathbb {R}^m\). Choosing \(\mathbf {x}_1,\mathbf {x}_2\) to be of Euclidean length one, we deduce that \(\lambda _1=\mathbf {x}_1^\top A\mathbf {x}_2=\lambda _2\), and \(|\lambda _1|\) is equal to some singular value of \(A\). It is natural to identify all singular vector pairs of the form \((a_1\mathbf {x}_1,a_2\mathbf {x}_2)\), where \(a_1a_2\ne 0\), as one class of singular vector pairs. Thus \(([\mathbf {x}_1],[\mathbf {x}_2])\in \mathbb {P}(\mathbb {R}^{m_1})\times \mathbb {P}(\mathbb {R}^{m_2})\) is called a singular vector pair of \(A\).

For a generic \(A\), i.e., \(A\) of the maximal rank \(r=\min (m_1,m_2)\) and \(r\) distinct positive singular values, \(A\) has exactly \(r\) distinct singular vector pairs. Furthermore, under these conditions \(A\) has a unique best rank-one approximation in the Frobenius norm given by the singular vector pair corresponding to the maximal singular value [10].
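As a quick numerical illustration of the matrix case (our sketch, not part of the paper's argument; the helper `best_rank_one` and the random test matrix are assumptions), the NumPy code below checks that the top singular pair satisfies the singular-pair equations with \(\lambda _1=\lambda _2=\sigma _1\), and that \(\sigma _1\mathbf {u}_1\mathbf {v}_1^\top \) attains the Frobenius error \(\sqrt{\sigma _2^2+\cdots +\sigma _r^2}\) predicted by the Eckart–Young theorem.

```python
import numpy as np


def best_rank_one(A):
    """Return sigma_1 * u_1 v_1^T (Eckart-Young: optimal in the Frobenius norm)."""
    U, s, Vt = np.linalg.svd(A)
    return s[0] * np.outer(U[:, 0], Vt[0, :])


rng = np.random.default_rng(0)
A = rng.standard_normal((4, 6))   # a random matrix is generic with probability one
U, s, Vt = np.linalg.svd(A)
u1, v1 = U[:, 0], Vt[0, :]

# (u1, v1) is a singular vector pair with lambda_1 = lambda_2 = sigma_1.
print(np.allclose(A @ v1, s[0] * u1), np.allclose(A.T @ u1, s[0] * v1))  # True True

# The best rank-one error equals the norm of the discarded singular values.
B = best_rank_one(A)
print(np.isclose(np.linalg.norm(A - B), np.sqrt(np.sum(s[1:] ** 2))))  # True
```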

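Assume now that \(m=m_1=m_2\) and \(A\) is a real symmetric matrix. Then the singular values of \(A\) are the absolute values of the eigenvalues of \(A\). Furthermore, if all the absolute values of the eigenvalues of \(A\) are pairwise distinct, then \(A\) has a unique best rank-one approximation, which is symmetric. In any case, if \(\lambda \) is an eigenvalue of \(A\) of maximal absolute value and \(\mathbf {v}\) is a corresponding unit eigenvector, then \(\lambda \mathbf {v}\mathbf {v}^\top \) is a best rank-one approximation of \(A\), since \(\Vert A-\lambda \mathbf {v}\mathbf {v}^\top \Vert ^2=\Vert A\Vert ^2-\lambda ^2\) is the sum of the squares of the remaining singular values. Hence, for any real symmetric matrix \(A\) there exists a best rank-one approximation which is symmetric.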
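The symmetric case can be checked in the same way. In the sketch below (an illustration that assumes the eigenvalue of largest absolute value is simple, which holds for a random symmetric matrix with probability one), the symmetric matrix \(\lambda \mathbf {v}\mathbf {v}^\top \) built from that eigenpair coincides with the SVD-based best rank-one approximation.

```python
import numpy as np

rng = np.random.default_rng(1)
M = rng.standard_normal((5, 5))
A = (M + M.T) / 2                        # a random real symmetric matrix

w, V = np.linalg.eigh(A)                 # eigenvalues w, orthonormal eigenvectors V
k = np.argmax(np.abs(w))                 # eigenvalue of largest absolute value
sym_approx = w[k] * np.outer(V[:, k], V[:, k])      # symmetric rank-one matrix

U, s, Vt = np.linalg.svd(A)
svd_approx = s[0] * np.outer(U[:, 0], Vt[0, :])     # best rank-one approximation

print(np.isclose(s[0], abs(w[k])))           # True: sigma_1 = max |eigenvalue|
print(np.allclose(sym_approx, svd_approx))   # True: the best approximation is symmetric
```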

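In this paper we derive similar results for tensors. Let \(\mathcal {T}\in \mathbb {F}^{\mathbf {m}}\). We first define the notion of a singular vector tuple \((\mathbf {x}_1,\ldots ,\mathbf {x}_d)\in (\mathbb {F}^{m_1}\setminus \{\mathbf {0}\})\times \ldots \times (\mathbb {F}^{m_d}\setminus \{\mathbf {0}\})\) [16]:

\[ \mathcal {T}\times \otimes _{j\in [d]\setminus \{i\}}\mathbf {x}_j=\lambda _i\mathbf {x}_i,\quad i\in [d], \]

for some \(\lambda _1,\ldots ,\lambda _d\in \mathbb {F}\).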

As for matrices, we identify all singular vector tuples of the form \((a_1\mathbf {x}_1,\ldots ,a_d\mathbf {x}_d)\), \(a_1\cdots a_d\ne 0\), with one singular vector tuple \(([\mathbf {x}_1],\ldots ,[\mathbf {x}_d])\in \Pi _\mathbb {F}(\mathbf {m})\). (Note that for \(d=2\) and \(\mathbb {F}=\mathbb {C}\) our notion of singular vector pair differs from the classical notion of singular vectors for complex-valued matrices; see § 3.)
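To see how the complex notion for \(d=2\) differs from the classical one, note that the defining equations use the transpose rather than the conjugate transpose, so \(\mathbf {x}_2\) must be an eigenvector of \(A^\top A\) (not \(A^{*}A\)) with eigenvalue \(\lambda _1\lambda _2\). The NumPy sketch below is our illustration with a random \(3\times 5\) complex matrix; it finds three such pairs with \(\lambda _1\lambda _2\ne 0\), i.e., \(\min (3,5)\) of them. The tolerance used to decide which eigenvalues vanish is an arbitrary choice.

```python
import numpy as np

rng = np.random.default_rng(2)
A = rng.standard_normal((3, 5)) + 1j * rng.standard_normal((3, 5))

mu, X2 = np.linalg.eig(A.T @ A)          # transpose, not conjugate transpose
nonzero = np.abs(mu) > 1e-8 * np.abs(mu).max()
print(nonzero.sum())                     # 3 = min(3, 5)

for k in np.flatnonzero(nonzero):
    x2 = X2[:, k]
    x1 = A @ x2                          # A x2 = lambda_1 x1 with lambda_1 = 1
    # A^T x1 = A^T A x2 = mu_k x2, i.e. lambda_2 = mu_k: a singular vector pair
    assert np.allclose(A.T @ x1, mu[k] * x2)
```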

Let \(([\mathbf {x}_1],\ldots ,[\mathbf {x}_d])\in \Pi (\mathbf {m})\) be a singular vector tuple of \(\mathcal {T}\in \mathbb {C}^{\mathbf {m}}\). This tuple corresponds to a zero (nonzero) singular value if \(\prod _{i\in [d]}\lambda _i=0\; (\ne 0)\). This tuple is called a simple singular vector tuple (or just simple) if the global section corresponding to \(\mathcal {T}\) has a simple zero at \(([\mathbf {x}_1],\ldots ,[\mathbf {x}_d])\); see Lemma 11 in § 3.

Our first major result is the following theorem.

Theorem 1

Let \(\mathcal {T}\in \mathbb {C}^{\mathbf {m}}\) be generic. Then \(\mathcal {T}\) has exactly \(c(\mathbf {m})\) simple singular vector tuples that correspond to nonzero singular values. Furthermore, \(\mathcal {T}\) does not have a zero singular value. In particular, a generic real-valued tensor \(\mathcal {T}\in \mathbb {R}^\mathbf {m}\) has at most \(c(\mathbf {m})\) real singular vector tuples corresponding to nonzero singular values, and all of them are simple. The integer \(c(\mathbf {m})\) is the coefficient of the monomial \(\prod _{i=1}^ d t_i^{m_i-1}\) in the polynomial

\[ \prod _{i=1}^{d}\frac{\hat{t}_i^{m_i}-t_i^{m_i}}{\hat{t}_i-t_i},\qquad \hat{t}_i:=\sum _{k\in [d]\setminus \{i\}}t_k. \tag{1.3} \]

At the end of §3 we list the first values of \(c(\mathbf {m})\) for \(d=3\). We generalize the preceding results to the class of tensors with given partial symmetry.
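The coefficient in Theorem 1 can be extracted mechanically. The plain-Python sketch below (our illustration; the function names are hypothetical) expands \(\prod _i\sum _{j=0}^{m_i-1}\hat{t}_i^{m_i-1-j}t_i^j\), using the fact that this finite sum equals the quotient in the theorem, and reads off the coefficient of \(\prod _i t_i^{m_i-1}\). Monomials whose exponent in some \(t_i\) exceeds \(m_i-1\) are discarded during multiplication, since they can never contribute to the target monomial.

```python
from collections import defaultdict


def poly_mul(p, q, bound):
    """Multiply polynomials stored as {exponent tuple: coefficient}, dropping any
    monomial whose exponent in variable k exceeds bound[k]."""
    out = defaultdict(int)
    for ea, ca in p.items():
        for eb, cb in q.items():
            e = tuple(a + b for a, b in zip(ea, eb))
            if all(x <= lim for x, lim in zip(e, bound)):
                out[e] += ca * cb
    return dict(out)


def c_of_m(m):
    """Coefficient of prod_i t_i^(m_i - 1) in prod_i (t_hat_i^m_i - t_i^m_i)/(t_hat_i - t_i),
    where t_hat_i is the sum of all t_k with k != i."""
    d = len(m)
    bound = tuple(mi - 1 for mi in m)
    one = {(0,) * d: 1}

    def var(k):
        return {tuple(int(j == k) for j in range(d)): 1}

    result = one
    for i, mi in enumerate(m):
        t_hat = {}
        for k in range(d):
            if k != i:
                t_hat.update(var(k))
        # The quotient equals the geometric sum sum_{j=0}^{m_i-1} t_hat^(m_i-1-j) t_i^j.
        factor = defaultdict(int)
        for j in range(mi):
            term = one
            for _ in range(mi - 1 - j):
                term = poly_mul(term, t_hat, bound)
            for _ in range(j):
                term = poly_mul(term, var(i), bound)
            for e, coeff in term.items():
                factor[e] += coeff
        result = poly_mul(result, dict(factor), bound)
    return result.get(bound, 0)


print(c_of_m((2, 2, 2)))   # 6
```

For example, for \(\mathbf {m}=(2,2,2)\) the polynomial reduces to \((t_1+t_2+t_3)^3\), whose coefficient of \(t_1t_2t_3\) is 6; for \(d=2\) the function returns \(\min (m_1,m_2)\), in agreement with the matrix case.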

We now consider the cubic case where \(m_1=\cdots =m_d=m\). For an integer \(m\ge 2\) let \(m^{\times d}:=(\underbrace{m,\ldots ,m}_d)\). Then \(\mathcal {T}\in \mathbb {F}^{m^{\times d}}\) is called a \(d\)-cube tensor, or simply a cube tensor. For a vector \(\mathbf {x}\in \mathbb {C}^m\) let \(\otimes ^k\mathbf {x}:=\underbrace{\mathbf {x}\otimes \ldots \otimes \mathbf {x}}_{k}\). Assume that \(\mathcal {T},\mathcal {S}\in \mathbb {C}^{m^{\times d}}\). Then the homogeneous pencil eigenvalue problem is to find all nonzero vectors \(\mathbf {x}\) and scalars \(\lambda \) satisfying \(\mathcal {T}\times \otimes ^{d-1}\mathbf {x}=\lambda \mathcal {S}\times \otimes ^{d-1}\mathbf {x}\). The contraction here is with respect to the last \(d-1\) indices of \(\mathcal {T},\mathcal {S}\). We assume without loss of generality that \(\mathcal {T}=[t_{i_1,\ldots ,i_d}],\mathcal {S}=[s_{i_1,\ldots ,i_d}]\) are symmetric with respect to the indices \(i_2,\ldots ,i_d\). \(\mathcal {S}\) is called nonsingular if the system \(\mathcal {S}\times \otimes ^{d-1}\mathbf {x}=\mathbf {0}\) implies that \(\mathbf {x}=\mathbf {0}\). Assume that \(\mathcal {S}\) is nonsingular and fixed. Then \(\mathcal {T}\) has exactly \(m(d-1)^{m-1}\) eigenvalues counted with their multiplicities, and for a generic \(\mathcal {T}\) it has \(m(d-1)^{m-1}\) distinct eigenvectors in \(\mathbb {P}(\mathbb {C}^m)\). See [21] for the case where \(\mathcal {S}\) is the identity tensor.
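For \(m=2\) the count \(m(d-1)^{m-1}=2(d-1)\) can be checked directly: \([\mathbf {x}]\in \mathbb {P}(\mathbb {C}^2)\) is an eigenvector exactly when \(\mathcal {T}\times \otimes ^{d-1}\mathbf {x}\) and \(\mathcal {S}\times \otimes ^{d-1}\mathbf {x}\) are parallel, i.e., when a \(2\times 2\) determinant, a binary form of degree \(2(d-1)\) in \(\mathbf {x}\), vanishes. The NumPy sketch below is our illustration; it assumes that \(\mathcal {S}\) is the (nonsingular) diagonal identity tensor and dehomogenizes \(\mathbf {x}=(1,s)\), which loses no eigenvectors for a generic \(\mathcal {T}\). The helper names are hypothetical.

```python
import itertools

import numpy as np
from numpy.polynomial import Polynomial


def contract_last(T, x):
    """Entries of T x ... x (contraction on the last d-1 indices); x is a list of
    Polynomial objects, so every entry is a Polynomial in s."""
    m, d = T.shape[0], T.ndim
    out = []
    for i in range(m):
        acc = Polynomial([0.0])
        for idx in itertools.product(range(m), repeat=d - 1):
            term = Polynomial([T[(i,) + idx]])
            for k in idx:
                term = term * x[k]
            acc = acc + term
        out.append(acc)
    return out


def eigenvectors_m2(T, S):
    """Roots s of det[T x^{d-1}, S x^{d-1}] with x = (1, s)."""
    x = [Polynomial([1.0]), Polynomial([0.0, 1.0])]
    a, b = contract_last(T, x), contract_last(S, x)
    f = a[0] * b[1] - a[1] * b[0]   # vanishes iff the two vectors are parallel
    return f.roots()


d = 3
rng = np.random.default_rng(3)
T = rng.standard_normal((2,) * d)
S = np.zeros((2,) * d)
S[(0,) * d] = S[(1,) * d] = 1.0     # identity tensor: S x^{d-1} = (x_1^{d-1}, x_2^{d-1})

roots = eigenvectors_m2(T, S)
print(len(roots))                   # 4 = m (d-1)^(m-1) with m = 2, d = 3
```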

View \(\mathbb {R}^{m_1\times \ldots \times m_d}\) as an inner product space, where for two \(d\)-mode tensors \(\mathcal {T},\mathcal {S}\in \mathbb {R}^{m_1\times \ldots \times m_d}\) we let \(\langle \mathcal {T},\mathcal {S}\rangle := \mathcal {T}\times \mathcal {S}\). Then the Hilbert–Schmidt norm is defined as \(\Vert \mathcal {T}\Vert :=\sqrt{\langle \mathcal {T},\mathcal {T}\rangle }\). [Recall that for \(d=2\) (matrices) the Hilbert–Schmidt norm is called the Frobenius norm.] A best rank-one approximation is a solution to the minimization problem

\[ \min _{\mathbf {x}_i\in \mathbb {R}^{m_i},\, i\in [d]}\Vert \mathcal {T}-\otimes _{i\in [d]}\mathbf {x}_i\Vert =\Vert \mathcal {T}-\otimes _{i\in [d]}\mathbf {u}_i\Vert . \]

A tensor \(\otimes _{i\in [d]}\mathbf {u}_i\) attaining this minimum is called a best rank-one approximation of \(\mathcal {T}\). Our second major result is as follows.

Theorem 2

1. For a.a. \(\mathcal {T}\in \mathbb {R}^{\mathbf {m}}\) a best rank-one approximation is unique.

2. Let \(\mathrm {S}^d(\mathbb {R}^m)\subset \mathbb {R}^{m^{\times d}}\) be the space of \(d\)-mode symmetric tensors. For a.a. \(\mathcal {S}\in \mathrm {S}^d(\mathbb {R}^m)\) a best rank-one approximation of \(\mathcal {S}\) is unique and symmetric. In particular, for each \(\mathcal {S}\in \mathrm {S}^d(\mathbb {R}^m)\) there exists a best rank-one approximation that is symmetric.
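Theorem 2 concerns the exact minimizer; computing it is a separate matter. A common heuristic, not used in this paper, is the alternating (higher-order power) iteration sketched below in NumPy: each step replaces \(\mathbf {x}_i\) by the normalized contraction \(\mathcal {T}\times \otimes _{j\ne i}\mathbf {x}_j\), so its fixed points are real singular vector tuples. It may stop at a non-optimal singular vector tuple, so it should be read as a local method; the helper names are hypothetical. For unit vectors \(\mathbf {x}_i\) and \(\lambda =\langle \mathcal {T},\otimes _i\mathbf {x}_i\rangle \) one has \(\Vert \mathcal {T}-\lambda \otimes _i\mathbf {x}_i\Vert ^2=\Vert \mathcal {T}\Vert ^2-\lambda ^2\), so maximizing \(|\lambda |\) is equivalent to finding a best rank-one approximation.

```python
import numpy as np


def contract_except(T, xs, i):
    """Contract T with every xs[j], j != i; the result is a vector of length T.shape[i]."""
    v = T
    for j in reversed(range(T.ndim)):   # highest axis first keeps lower axis positions fixed
        if j != i:
            v = np.tensordot(v, xs[j], axes=([j], [0]))
    return v


def rank_one_power_method(T, iters=200, seed=0):
    """Alternating normalized updates x_i <- T x (all j != i); returns (lam, [x_1..x_d])."""
    rng = np.random.default_rng(seed)
    xs = [rng.standard_normal(n) for n in T.shape]
    xs = [x / np.linalg.norm(x) for x in xs]
    for _ in range(iters):
        for i in range(T.ndim):
            y = contract_except(T, xs, i)
            xs[i] = y / np.linalg.norm(y)
    lam = contract_except(T, xs, 0) @ xs[0]   # lam = <T, x_1 (x) ... (x) x_d>
    return lam, xs


def outer(xs):
    """The rank-one tensor x_1 (x) ... (x) x_d."""
    out = xs[0]
    for x in xs[1:]:
        out = np.multiply.outer(out, x)
    return out


# A rank-one tensor is recovered exactly (the signs of the x_i may flip in pairs).
a, b, c = (np.asarray(v) / np.linalg.norm(v)
           for v in ([1.0, 2.0], [0.0, 1.0, 1.0], [3.0, 0.0, 4.0, 0.0]))
T1 = 3.0 * outer([a, b, c])
lam, xs = rank_one_power_method(T1)
print(np.isclose(lam, 3.0), np.allclose(lam * outer(xs), T1))  # True True
```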

The last statement of part 2 of Theorem 2 was demonstrated by the first named author in [7]. In fact, this result is equivalent to Banach’s theorem [1]. See [23] for another proof of Banach’s theorem. In Theorem 12 we generalize part 2 of Theorem 2 to the class of tensors with given partial symmetry.
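Since \(\Vert \mathcal {T}-\lambda \otimes _i\mathbf {x}_i\Vert ^2=\Vert \mathcal {T}\Vert ^2-\lambda ^2\) for unit \(\mathbf {x}_i\) and \(\lambda =\langle \mathcal {T},\otimes _i\mathbf {x}_i\rangle \), the existence of a symmetric best rank-one approximation of a symmetric \(\mathcal {S}\) amounts to Banach's statement that the maximum of \(|\langle \mathcal {S},\otimes _i\mathbf {x}_i\rangle |\) over unit vectors is attained with \(\mathbf {x}_1=\cdots =\mathbf {x}_d\). The NumPy sketch below checks this numerically for a random symmetric \(2\times 2\times 2\) tensor by parametrizing unit vectors in \(\mathbb {R}^2\) by angles; the grid sizes and tolerances are arbitrary choices of ours.

```python
import math
from itertools import permutations

import numpy as np

d = 3
rng = np.random.default_rng(4)
A = rng.standard_normal((2,) * d)
# Symmetrize by averaging over all permutations of the d indices.
S = sum(np.transpose(A, p) for p in permutations(range(d))) / math.factorial(d)


def unit_circle(n):
    """n unit vectors in R^2; half the circle suffices since x and -x give the same |value|."""
    theta = np.linspace(0.0, np.pi, n, endpoint=False)
    return np.stack([np.cos(theta), np.sin(theta)], axis=1)


# Symmetric candidates x (x) x (x) x on a fine grid.
X = unit_circle(20000)
sym_max = np.abs(np.einsum("ijk,ni,nj,nk->n", S, X, X, X)).max()

# Unrestricted candidates x1 (x) x2 (x) x3 on a coarser grid.
Y = unit_circle(90)
gen_max = np.abs(np.einsum("ijk,ai,bj,ck->abc", S, Y, Y, Y)).max()

print(sym_max, gen_max)   # the two maxima agree up to grid error
```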

Let \(\mathbf {r}=(r_1,\ldots ,r_d)\), where \(r_i\in [m_i]\) for \(i\in [d]\). In the last section of this paper we study a best rank-\(\mathbf {r}\) approximation for a real \(d\)-mode tensor [6]. We show that for a.a. tensors a best rank-\(\mathbf {r}\) approximation is unique.
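Here we assume the standard multilinear (Tucker) meaning of rank-\(\mathbf {r}\): a tensor has rank at most \(\mathbf {r}\) if, for each \(i\), its mode-\(i\) unfolding (the \(m_i\times \prod _{j\ne i}m_j\) matrix of its entries) has rank at most \(r_i\). The NumPy sketch below (helper names are ours) computes these unfolding ranks for a tensor built from a random \(2\times 3\times 4\) core.

```python
import numpy as np


def unfold(T, i):
    """Mode-i unfolding: move axis i to the front and flatten the remaining axes."""
    return np.moveaxis(T, i, 0).reshape(T.shape[i], -1)


def multilinear_rank(T):
    """Tuple of the ranks of all mode-i unfoldings."""
    return tuple(int(np.linalg.matrix_rank(unfold(T, i))) for i in range(T.ndim))


rng = np.random.default_rng(6)
core = rng.standard_normal((2, 3, 4))
U = [rng.standard_normal((n, r)) for n, r in zip((4, 5, 6), core.shape)]
# Tucker tensor: multiply mode i of the core by U[i]; generically of rank exactly (2, 3, 4).
T = np.einsum("abc,ia,jb,kc->ijk", core, *U)
print(multilinear_rank(T))   # (2, 3, 4)
```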

We now describe briefly the contents of our paper. In § 2 we give a layman’s introduction to some basic notions of vector bundles over compact complex manifolds and Chern classes of certain bundles over the Segre product needed for this paper. We hope that this introduction will make our paper accessible to a wider audience. § 3 discusses the first main contribution of this paper, namely, that the number of singular vector tuples of a generic complex tensor is finite and is equal to \(c(\mathbf {m})\). We give a closed formula for \(c(\mathbf {m})\), as in (1.3). § 4 generalizes these results to partially symmetric tensors. In particular, we reproduce the result of Cartwright and Sturmfels for symmetric tensors [3]. In § 5 we discuss a homogeneous pencil eigenvalue problem. In § 6 we give certain conditions on a general best approximation problem in \(\mathbb {R}^n\), which are probably well known to the experts. In § 7 we give uniqueness results on the best rank-one approximation of partially symmetric tensors. In § 8 we discuss a best rank-\(\mathbf {r}\) approximation.

We thank J. Draisma, who pointed out the importance of distinguishing between isotropic and nonisotropic vectors, as we do in § 3.

2 Vector Bundles over Compact Complex Manifolds

In this section we recall some basic results on complex manifolds and holomorphic vector bundles that we use in this paper. Our object is to give the simplest possible intuitive description of the basic results in algebraic geometry needed in this paper, sometimes at the expense of rigor. An interested reader can consult [11] for general facts about complex manifolds and complex vector bundles, and [15] for a simple axiomatic exposition of complex vector bundles. For a Bertini-type theorem we refer the reader to Fulton [8] and Hartshorne [12].

2.1 Complex Compact Manifolds

Let \(M\) be a compact complex manifold of dimension \(n\). Thus, there exists a finite open cover \(\{U_i\}, i\in [N]\), with coordinate homeomorphisms \(\phi _i: U_i\rightarrow \mathbb {C}^n\) onto open subsets of \(\mathbb {C}^n\) such that \(\phi _i\circ \phi _j^{-1}\) is holomorphic on \(\phi _j(U_i\cap U_j)\) for all \(i,j\).

As an example, consider the \((m-1)\)-dimensional complex projective space \(\mathbb {P}(\mathbb {C}^m)\), which is the set of all complex lines in \(\mathbb {C}^{m}\) through the origin. Any point in \(\mathbb {P}(\mathbb {C}^{m})\) is represented by a one-dimensional subspace spanned by a vector \(\mathbf {x}=(x_1,\ldots ,x_{m})^\top \in \mathbb {C}^{m}\setminus \{\mathbf {0}\}\). The standard open cover of \(\mathbb {P}(\mathbb {C}^m)\) consists of \(m\) open sets \(U_1,\ldots ,U_{m}\), where \(U_i\) consists of the lines spanned by vectors \(\mathbf {x}\) with \(x_i\ne 0\). The homeomorphism \(\phi _i\) is given by \(\phi _i([\mathbf {x}])=(\frac{x_1}{x_i},\ldots , \frac{x_{i-1}}{x_i},\frac{x_{i+1}}{x_i},\ldots ,\frac{x_{m}}{x_i})^\top \). Thus, each \(U_i\) is homeomorphic to \(\mathbb {C}^{m-1}\).
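The charts can be written out directly. In the NumPy sketch below (our illustration; indices are 0-based, unlike the text), `phi` implements \(\phi _i\), `phi_inv` returns the representative whose \(i\)th entry is 1, and the checks confirm that \(\phi _i\) depends only on the line \([\mathbf {x}]\) and that on \(\mathbb {P}(\mathbb {C}^3)\) the transition map is \(\phi _0\circ \phi _1^{-1}(z_0,z_1)=(1/z_0,z_1/z_0)\), which is holomorphic wherever \(z_0\ne 0\).

```python
import numpy as np


def phi(x, i):
    """Chart phi_i on U_i = {x_i != 0}: divide by x_i and drop the i-th entry."""
    x = np.asarray(x, dtype=complex)
    if x[i] == 0:
        raise ValueError("point is not in U_i")
    return np.delete(x / x[i], i)


def phi_inv(z, i):
    """Inverse chart: insert a 1 in position i (a representative of the line)."""
    return np.insert(np.asarray(z, dtype=complex), i, 1.0)


x = np.array([2.0 + 1.0j, -1.0, 0.5j])           # lies in U_0, U_1 and U_2 (0-based)
print(np.allclose(phi(x, 0), phi(3.7j * x, 0)))  # True: phi_i depends only on [x]

# Transition map phi_0 o phi_1^{-1} on phi_1(U_0 cap U_1): z -> (1/z_0, z_1/z_0)
z = phi(x, 1)
print(np.allclose(phi(phi_inv(z, 1), 0), np.array([1 / z[0], z[1] / z[0]])))  # True
```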

Let \(M\) be an \(n\)-dimensional compact complex manifold, as previously. For \(\zeta \in U_i\), the coordinates of the vector \(\phi _i(\zeta )=\mathbf {z}=(z_1,\ldots ,z_n)^\top \) are called the local coordinates of \(\zeta \). Since \(\mathbb {C}^n\equiv \mathbb {R}^{2n}\), \(M\) is a real manifold of real dimension \(2n\). Let \(z_j=x_j+\mathbf {i}y_j, \bar{z}_j=x_j-\mathbf {i}y_j,j\in [n]\), where \(\mathbf {i}=\sqrt{-1}\). For simplicity of notation we let \(\mathbf {u}=(u_1,\ldots ,u_{2n})= (x_1,y_1,\ldots ,x_n,y_n)\) be the real local coordinates on \(U_i\). Any function \(f:U_i\rightarrow \mathbb {C}\) in the local coordinates is viewed as \(f(\mathbf {u})=g(\mathbf {u})+\mathbf {i}h(\mathbf {u})\), where \(h,g: U_i\rightarrow \mathbb {R}\). Thus, for differentiable \(f\), \(df=\sum _{j\in [2n]} \frac{\partial f}{\partial u_j} du_j\). For a positive integer \(p\), a (differential) \(p\)-form \(\omega \) on \(U_i\) is given in the local coordinates as follows:

\[ \omega =\sum _{1\le i_1<\cdots <i_p\le 2n} f_{i_1,\ldots ,i_p}(\mathbf {u})\, du_{i_1}\wedge \cdots \wedge du_{i_p}. \]

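(Here \( f_{i_1,\ldots ,i_p}(\mathbf {u})\) are differentiable functions in the local coordinates \(\mathbf {u}\) for \(1\le i_1<\ldots <i_p\le 2n\).) Recall that the wedge product of differentials is anticommutative, i.e., \(du_k\wedge du_l=-du_l\wedge du_k\). Then

\[ d\omega =\sum _{1\le i_1<\cdots <i_p\le 2n}\ \sum _{j\in [2n]}\frac{\partial f_{i_1,\ldots ,i_p}}{\partial u_j}(\mathbf {u})\, du_j\wedge du_{i_1}\wedge \cdots \wedge du_{i_p}. \]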

(Recall that a differential \(0\)-form is a function.) Note that for \(p>2n\) every differential \(p\)-form is zero. A straightforward calculation shows that \(d(d\omega )=0\). \(\omega \) is a \(p\)-form on \(M\) if its restriction to each \(U_i\) is a \(p\)-form, and on each \(U_i\cap U_j\) the two local expressions are obtained from one another by the change of coordinates \(\phi _i\circ \phi _j^{-1}\). \(\omega \) is called closed if \(d\omega =0\), and a form of the type \(d\eta \) is called exact. The space of closed \(p\)-forms modulo exact \(p\)-forms is a finite-dimensional vector space over \(\mathbb {C}\), which is denoted by \(\mathrm {H}^p(M)\). Each element of \(\mathrm {H}^p(M)\) is represented by a closed \(p\)-form, and the difference between two representatives is an exact form. Since the wedge product of two closed forms is closed, and the wedge product of a closed form with an exact form is exact, the space of all closed forms modulo exact forms is a finite-dimensional algebra, where the identity \(1\) corresponds to the constant function with value \(1\) on \(M\).

2.2 Holomorphic Vector Bundles

A holomorphic vector bundle \(E\) on \(M\) of rank \(k\), where \(k\) is a nonnegative integer, is a complex manifold of dimension \(n+k\), which can be simply described as follows. There exists a finite open cover \(\{U_i\},i\in [N]\) of \(M\) with the aforementioned properties satisfying the following additional conditions. At each \(\zeta \in U_i\) we are given a \(k\)-dimensional vector space \(E_\zeta \), called the fiber of \(E\) over \(\zeta \), all of which can be identified with a fixed vector space \(\mathbf {V}_i\) having a basis \([\mathbf {e}_{1,i},\ldots ,\mathbf {e}_{k,i}]\). For \(\zeta \in U_i\cap U_j, i\ne j\) the transition matrix from \([\mathbf {e}_{1,i},\ldots ,\mathbf {e}_{k,i}]\) to \([\mathbf {e}_{1,j},\ldots ,\mathbf {e}_{k,j}]\) is given by a \(k\times k\) invertible matrix \(g_{U_j U_i}(\zeta )\). Thus, \([\mathbf {e}_{1,i},\ldots ,\mathbf {e}_{k,i}]=[\mathbf {e}_{1,j},\ldots ,\mathbf {e}_{k,j}] g_{U_j U_i}(\zeta )\). Each entry of \(g_{U_j U_i}(\zeta )\) is a holomorphic function in the local coordinates of \(U_j\). We have the following relations:

\[ g_{U_iU_j}(\zeta )\,g_{U_jU_i}(\zeta )=I_k\ \ (\zeta \in U_i\cap U_j),\qquad g_{U_iU_l}(\zeta )\,g_{U_lU_j}(\zeta )\,g_{U_jU_i}(\zeta )=I_k\ \ (\zeta \in U_i\cap U_j\cap U_l). \]

(\(I_k\) is an identity matrix of order \(k\).)
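As an illustration of these transition matrices (our example, with hypothetical helper names), consider the line bundle on \(\mathbb {P}(\mathbb {C}^m)\) whose fiber over \([\mathbf {x}]\) is \(\mathrm {span}(\mathbf {x})\), i.e., the tautological bundle \(T(m)\) of this section. On \(U_i\) the vector \(\mathbf {e}_i([\mathbf {x}])=\mathbf {x}/x_i\) is a basis of the fiber, and \(\mathbf {e}_i=(x_j/x_i)\,\mathbf {e}_j\), so with the convention \([\mathbf {e}_{1,i}]=[\mathbf {e}_{1,j}]g_{U_jU_i}\) we get the \(1\times 1\) matrix \(g_{U_jU_i}([\mathbf {x}])=x_j/x_i\). The NumPy sketch checks \(g_{U_iU_j}g_{U_jU_i}=1\) and the cocycle identity on a triple overlap at a random point (indices 0-based).

```python
import numpy as np


def frame(x, i):
    """Local basis vector e_i([x]) = x / x_i of the fiber span(x) over U_i."""
    return x / x[i]


def g(x, j, i):
    """1x1 transition 'matrix' g_{U_j U_i}([x]) = x_j / x_i, so that e_i = e_j * g."""
    return x[j] / x[i]


rng = np.random.default_rng(7)
x = rng.standard_normal(3) + 1j * rng.standard_normal(3)    # generic point: all x_i != 0

print(np.allclose(frame(x, 0), frame(x, 1) * g(x, 1, 0)))     # True: e_0 = e_1 g_{U_1U_0}
print(np.isclose(g(x, 0, 1) * g(x, 1, 0), 1.0))               # True
print(np.isclose(g(x, 0, 2) * g(x, 2, 1) * g(x, 1, 0), 1.0))  # True: triple overlap
```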

For \(k=0\), \(E\) is called a zero bundle. \(E\) is called a line bundle if \(k=1\). \(E\) is called a trivial bundle if there exists a finite open cover such that each \(g_{U_i U_j}(\zeta )\) is an identity matrix. A vector bundle \(F\) on \(M\) is called a subbundle of \(E\) if \(F\) is a submanifold of \(E\) such that \(F_\zeta \) is a subspace of \(E_\zeta \) for each \(\zeta \in M\). Assume that \(F\) is a subbundle of \(E\). Then \(G:=E/F\) is the quotient bundle of \(E\) by \(F\), where \(G_\zeta \) is the quotient vector space \(E_\zeta /F_\zeta \). Let \(E_1,E_2\) be two vector bundles on \(M\). We can create the following new bundles on \(M\): \(E:=E_1\oplus E_2, F:=E_1\otimes E_2, H:=\mathrm {Hom}(E_1,E_2)\). Here, \(E_\zeta =E_{1,\zeta }\oplus E_{2,\zeta }, F_\zeta =E_{1,\zeta }\otimes E_{2,\zeta }\), and \(H_\zeta \) consists of all linear transformations from \(E_{1,\zeta }\) to \(E_{2,\zeta }\). In particular, the vector bundle \(\mathrm {Hom}(E_1,E_2)\), where \(E_2\) is the trivial line bundle, is called the dual bundle of \(E_1\) and is denoted by \(E_1^\vee \). Recall that \(\mathrm {Hom}(E_1,E_2)\) is isomorphic to \(E_2\otimes E_1^\vee \). For a given vector bundle \(E\) on \(M\) we can define the bundle \(F:=\otimes ^d E\). Here \(F_\zeta =\otimes ^d E_\zeta \) is a space of \(d\)-mode tensors.

Let \(M,M'\) be compact complex manifolds, and assume that \(f:M'\rightarrow M\) is holomorphic. Assume that \(\pi :E\rightarrow M\) is a holomorphic vector bundle. Then one can pull back \(E\) to obtain a bundle \(\pi ':E'\rightarrow M'\), where \(E'=f^*E\) and the fiber of \(E'\) over \(\zeta '\in M'\) is \(E_{f(\zeta ')}\).

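Given a manifold \(M_i\) with a vector bundle \(E_i\) for \(i=1,2\), we can define the bundles \(F:=E_1\oplus E_2\) and \(G:=E_1\otimes E_2\) on \(M:= M_1\times M_2\) by the equalities

\[ F_{(\zeta _1,\zeta _2)}=E_{1,\zeta _1}\oplus E_{2,\zeta _2},\qquad G_{(\zeta _1,\zeta _2)}=E_{1,\zeta _1}\otimes E_{2,\zeta _2},\qquad (\zeta _1,\zeta _2)\in M_1\times M_2. \]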

A special case for \(F\) occurs when one of the factors \(E_i\) is a zero bundle, say \(E_2=0\). Then \(E_1\oplus 0\) is the pullback of the bundle \(E_1\) on \(M_1\) by the projection \(\pi _1: M_1\times M_2\rightarrow M_1\), and it is denoted by \(\pi _1^* E_1\). Thus, \(E_1\oplus E_2\) is the bundle \(\pi _1^* E_1\oplus \pi _2^* E_2\) on \(M_1\times M_2\). Similarly, \(E_1\otimes E_2\) is the bundle \(\pi _1^* E_1\otimes \pi _2^* E_2\).

We now discuss a basic example used in this paper. Consider the trivial bundle \(F(m)\) on \(\mathbb {P}(\mathbb {C}^{m})\) of rank \(m\). Thus, \(F(m)_\zeta =\mathbb {C}^m\). The tautological line bundle \(T(m)\) on \(\mathbb {P}(\mathbb {C}^{m})\), customarily denoted by \(\mathcal {O}(-1)\), is given by \(T(m)_{[\mathbf {x}]}=\mathrm {span}(\mathbf {x})\subset \mathbb {C}^m\). Thus, \(T(m)\) is a subbundle of \(F(m)\). Denote by \(Q(m)\) the quotient bundle \(F(m)/T(m)\). Hence, \(\mathrm {rank\;}Q(m)=m-1\). We have an exact sequence of the following bundles on \(\mathbb {P}(\mathbb {C}^{m})\):

\[ 0\rightarrow T(m)\rightarrow F(m)\rightarrow Q(m)\rightarrow 0. \tag{2.1} \]

The dual of the bundle of \(T(m)\), also called the hyperplane line bundle, is denoted here by \(H(m)\). [\(H(m)\) is customarily denoted by \(\mathcal {O}(1)\) in the algebraic geometry literature.]

2.3 Chern Polynomials

We now return to a holomorphic vector bundle \(E\) on a compact complex manifold \(M\). The seminal work of Chern [5] associates with each \(\pi :E\rightarrow M\) the Chern class \(c_j(E)\) for each \(j\in [\dim M]\). One can view \(c_j(E)\) as an element in \(\mathrm {H}^{2j}(M)\). The Chern classes needed in this paper can be determined by the following well-known rules [15].

One associates with \(E\) the Chern polynomial \(C(t,E)=1+\sum _{j=1}^{\mathrm {rank\;}E} c_j(E)t^j\). Note that \(c_j(E)=0\) for \(j>\dim M\). The total Chern class \(c(E)\) is \(C(1,E)=\sum _{j=0}^{\infty } c_j(E)\), where \(c_0(E)=1\) and \(c_j(E)=0\) for \(j>\mathrm {rank\;}E\). Consider the formal factorization \(C(t,E)=\prod _{j=1}^{\mathrm {rank\;}E}(1+\xi _j(E)t)\). Then the Chern character \(ch(E)\) of \(E\) is defined as \(\sum _{j=1}^{\mathrm {rank\;}E}e^{\xi _j(E)}\).

\(C(t,E)=1\) if \(E\) is a trivial bundle. The Chern polynomial of the dual bundle is given by \(C(t,E^\vee )=C(-t,E)\). Given an exact sequence of bundles

\[ 0\rightarrow E\rightarrow F\rightarrow G\rightarrow 0, \]

we have the identity

\[ C(t,F)=C(t,E)\,C(t,G), \tag{2.2} \]

which is equivalent to \(c(F)=c(E)c(G)\).

The product formula is the identity \(ch(E_1\otimes E_2)=ch(E_1)ch(E_2)\). Let \(f:M'\rightarrow M\) be a holomorphic map. Then \(c_j(f^*E)\), viewed as a differential form in \(\mathrm {H}^{2j}(M')\), is obtained by pullback of the differential form \(c_j(E)\). In particular, for the pullback bundle \(\pi _1^* E_1\) described previously, we have the equality \(c_j(\pi _1^* E_1)= c_j(E_1)\) when we use the local coordinates \(\zeta =(\zeta _1,\zeta _2)\) on \(M_1\times M_2\).

Assume that \(\mathrm {rank\;}E=\dim M=n\). Then \(c_n(E)=\nu (E)\omega \), where \(\omega \in \mathrm {H}^{2n}(M)\) is the volume form on \(M\) normalized so that \(\omega \) is a generator of \(\mathrm {H}^{2n}(M,\mathbb {Z})\). Then \(\nu (E)\) is an integer, which is called the top Chern number of \(E\).

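Denote by \(s_m\) the first Chern class of \(H(m)\), which belongs to \(\mathrm {H}^2(\mathbb {P}(\mathbb {C}^m))\). Then \(s_m^k\) represents the differential form \( \wedge ^k s_m\in \mathrm {H}^{2k}(\mathbb {P}(\mathbb {C}^m))\). Observe that \(s_m^m=0\), since \(\mathrm {H}^{2m}(\mathbb {P}(\mathbb {C}^m))=0\) (the real dimension of \(\mathbb {P}(\mathbb {C}^m)\) is \(2(m-1)\)). Moreover, the algebra of all closed forms modulo the exact forms on \(\mathbb {P}(\mathbb {C}^m)\) is \(\mathbb {C}[s_m]/(s_m^m)\), i.e., all polynomials in the variable \(s_m\) modulo the relation \(s_m^m=0\). So \(C(t,H(m))=1+ s_mt\) and \(C(t,T(m))=1- s_mt\). The exact sequence (2.1) and the formula (2.2) imply that

\[ C(t,Q(m))=\frac{C(t,F(m))}{C(t,T(m))}=\frac{1}{1-s_mt}=\sum _{j=0}^{m-1}s_m^jt^j. \]

Therefore

\[ c_j(Q(m))=s_m^j\ \text{ for } j\in [m-1],\qquad c(Q(m))=\sum _{j=0}^{m-1}s_m^j. \]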
2.4 Certain Bundles on Segre Product

Let \(m_1,\ldots ,m_d\ge 2\) be given integers with \(d>1\). Use the notation \(\mathbf {m}_i=(m_1,\ldots ,m_{i-1},m_{i+1},\ldots ,m_d)\) for \(i\in [d]\). Consider the Segre product \(\Pi (\mathbf {m}):=\mathbb {P}(\mathbb {C}^{m_1})\times \ldots \times \mathbb {P}(\mathbb {C}^{m_d})\). Let \(\pi _i:\Pi (\mathbf {m})\rightarrow \mathbb {P}(\mathbb {C}^{m_i})\) be the projection on the \(i\)th component. Then \(\pi _i^* H(m_i),\pi _i^* Q(m_i),\pi _i^*F(m_i)\) are the pullbacks of the bundles \(H(m_i),Q(m_i),F(m_i)\) on \(\mathbb {P}(\mathbb {C}^{m_i})\) to \(\Pi (\mathbf {m})\), respectively.

Consider the map \(\iota _\mathbf {m}: \Pi (\mathbf {m})\rightarrow \mathbb {P}(\mathbb {C}^\mathbf {m})\) given by \(\iota _\mathbf {m}([\mathbf {x}_1],\ldots ,[\mathbf {x}_d])=[\otimes _{i\in [d]}\mathbf {x}_i]\). It is straightforward to show that \(\iota _\mathbf {m}\) is injective. Then \(\Sigma (\mathbf {m}):=\iota _\mathbf {m}(\Pi (\mathbf {m}))\subset \mathbb {P}(\mathbb {C}^\mathbf {m})\) is the Segre variety. Let \(T(\mathbf {m})\) be the tautological line bundle on \(\mathbb {P}(\mathbb {C}^\mathbf {m})\). The identity \(\mathrm {span}(\otimes _{j\in [d]}\mathbf {x}_j)=\otimes _{j\in [d]}\mathrm {span}(\mathbf {x}_j)\) implies that the line bundle \(\iota _\mathbf {m}^*T(\mathbf {m})\) is isomorphic to \(\otimes _{j\in [d]}\pi _j^*T(m_j)\). Hence the dual bundles \(\iota _\mathbf {m}^*H(\mathbf {m})\) and \(\otimes _{j\in [d]}\pi _j^*H(m_j)\) are isomorphic. Consider next the bundle \(\hat{T}(\mathbf {m}_i)\) on \(\Pi (\mathbf {m})\), which is

\[ \hat{T}(\mathbf {m}_i):=\otimes _{j\in [d]\setminus \{i\}}\pi _j^*T(m_j). \]

Hence the dual bundle \(\hat{T}(\mathbf {m}_i)^\vee \) is isomorphic to \(\otimes _{j\in [d]\setminus \{i\}} \pi _j^* H(m_j)\). In particular,

\[ c_1(\hat{T}(\mathbf {m}_i)^\vee )=\sum _{j\in [d]\setminus \{i\}}c_1(\pi _j^*H(m_j)). \]
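Define the following vector bundles on \(\Pi (\mathbf {m})\):

\[ R(i,\mathbf {m}):=\mathrm {Hom}(\hat{T}(\mathbf {m}_i),\pi _i^*Q(m_i)),\ i\in [d],\qquad R(\mathbf {m}):=\oplus _{i\in [d]}R(i,\mathbf {m}). \tag{2.6} \]

Observe that

\[ \mathrm {rank\;}R(i,\mathbf {m})=m_i-1,\qquad \mathrm {rank\;}R(\mathbf {m})=\sum _{i\in [d]}(m_i-1)=\dim \Pi (\mathbf {m}). \]

Since \(\mathrm {Hom}(E_1,E_2)\sim E_2\otimes E_1^\vee \), we obtain the following relations:

\[ R(i,\mathbf {m})\sim \pi _i^*Q(m_i)\otimes \hat{T}(\mathbf {m}_i)^\vee \sim \pi _i^*Q(m_i)\otimes \Big (\otimes _{j\in [d]\setminus \{i\}}\pi _j^*H(m_j)\Big ). \]

The formula (2.2) yields

\[ c(R(\mathbf {m}))=\prod _{i\in [d]}c(R(i,\mathbf {m})). \]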
Use the notation \(t_i=c_1(\pi _i^* H(m_i))\). The cohomology ring \(H^*(\Pi (\mathbf {m}))\) is generated by \(t_1,\ldots , t_d\), with the relations \(t_i^{m_i}=0\), that is, \(H^*(\Pi (\mathbf {m}))\simeq \mathbb {C}[t_1,\ldots , t_d]/(t_1^{m_1},\ldots ,t_d^{m_d})\), and in what follows we interpret \(t_i\) just as variables. Correspondingly, for any vector bundle \(E\) on \(\Pi (\mathbf {m})\), the \(k\)th Chern class \(c_k(E)\) is equal to \(p_k(t_1,\ldots ,t_d)\) for some homogeneous polynomial \(p_k\) of degree \(k\), for \(k=1,\ldots ,\dim \Pi (\mathbf {m})\). [Recall that \(c_0(E)=1\) and \(c_k(E)=0\) for \(k>\dim \Pi (\mathbf {m}).\)]
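Computations in these truncated rings are ordinary polynomial arithmetic followed by discarding powers \(s^k\) with \(k\ge m\) (or \(t_i^k\) with \(k\ge m_i\)). As a small illustration (our plain-Python sketch, with hypothetical helper names), applying (2.2) to the exact sequence (2.1) with \(c(F(m))=1\) gives \(c(T(m))\,c(Q(m))=1\), so \(c(Q(m))\) is the inverse of \(1-s_m\) in \(\mathbb {Z}[s_m]/(s_m^m)\), namely \(1+s_m+\cdots +s_m^{m-1}\).

```python
def mul_trunc(p, q, m):
    """Multiply coefficient lists p, q (p[k] = coefficient of s^k) in Z[s]/(s^m)."""
    out = [0] * m
    for a, pa in enumerate(p):
        for b, qb in enumerate(q):
            if a + b < m:
                out[a + b] += pa * qb
    return out


def inverse_trunc(p, m):
    """Inverse of p (with p[0] = 1) in Z[s]/(s^m), solving p * q = 1 degree by degree."""
    assert p[0] == 1
    q = [0] * m
    q[0] = 1
    for k in range(1, m):
        q[k] = -sum(p[j] * q[k - j] for j in range(1, min(k, len(p) - 1) + 1))
    return q


m = 5
c_T = [1, -1]                    # total Chern class of T(m): 1 - s_m
c_Q = inverse_trunc(c_T, m)      # c(T) c(Q) = c(F) = 1
print(c_Q)                       # [1, 1, 1, 1, 1]
print(mul_trunc(c_T, c_Q, m))    # [1, 0, 0, 0, 0]
```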

In what follows we need to compute the top Chern class of \(R(\mathbf {m})\). Since \(\mathrm {rank\;}R(\mathbf {m})=\dim \Pi (\mathbf {m})\), and the top-degree part of \(H^*(\Pi (\mathbf {m}))\) is spanned by \(\prod _{i\in [d]}t_i^{m_i-1}\), it follows that the top Chern class of \(R(\mathbf {m})\) is of the form

\[ c_{\dim \Pi (\mathbf {m})}(R(\mathbf {m}))=c(\mathbf {m})\prod _{i\in [d]}t_i^{m_i-1}, \]

where \(c(\mathbf {m})\) is an integer. Thus, \(c(\mathbf {m})=\nu (R(\mathbf {m}))\) is the top Chern number of \(R(\mathbf {m})\).

Lemma 3

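Let \(R(i,\mathbf {m})\) and \(R(\mathbf {m})\) be the vector bundles on the Segre product \(\Pi (\mathbf {m})\) given by (2.6). Then the total Chern classes of these vector bundles are given as follows:

\[ c(R(i,\mathbf {m}))=\sum _{j=0}^{m_i-1}(1+\hat{t}_i)^{m_i-1-j}\,t_i^j,\quad i\in [d], \tag{2.11} \]

\[ c(R(\mathbf {m}))=\prod _{i\in [d]}\sum _{j=0}^{m_i-1}(1+\hat{t}_i)^{m_i-1-j}\,t_i^j, \tag{2.12} \]

where \(\hat{t}_i:=\sum _{k\in [d]\setminus \{i\}}t_k\).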

The top Chern number of \(R(\mathbf {m})\), \(c(\mathbf {m})\), is the coefficient of the monomial \(\prod _{i\in [d]} t_i^{m_i-1}\) in the polynomial \(\prod _{i\in [d]}\frac{\hat{t}_i^{m_i} -t_i^{m_i}}{\hat{t}_i - t_i}\). (In this formula of \(c(\mathbf {m})\) we do not assume the identities \(t_i^{m_i}=0\) for \(i\in [d]\).)

Proof

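Let \(\zeta _i:=e^{\frac{2\pi \mathbf {i}}{m_i}}\) be a primitive \(m_i\)th root of unity. Then

\[ 1-x^{m_i}=\prod _{k=0}^{m_i-1}(1-\zeta _i^kx),\qquad \frac{1-x^{m_i}}{1-x}=\prod _{k=1}^{m_i-1}(1-\zeta _i^kx). \tag{2.13} \]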

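The second equality of (2.13) and (2.3) yield that

\[ C(t,\pi _i^*Q(m_i))=\sum _{j=0}^{m_i-1}t_i^jt^j=\frac{1-t_i^{m_i}t^{m_i}}{1-t_it}=\prod _{k=1}^{m_i-1}(1-\zeta _i^kt_it). \]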

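Hence, \(ch(\pi _i^*Q(m_i))=\sum _{k\in [m_i-1]} e^{-\zeta _i^k t_i}\). Clearly, \(ch(\pi _j^*H(m_j))=e^{t_j}\). The product formula for Chern characters yields

\[ ch(R(i,\mathbf {m}))=ch(\pi _i^*Q(m_i))\prod _{j\in [d]\setminus \{i\}}ch(\pi _j^*H(m_j))=\sum _{k\in [m_i-1]}e^{\hat{t}_i-\zeta _i^kt_i}. \]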

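Hence,

\[ c(R(i,\mathbf {m}))=\prod _{k\in [m_i-1]}(1+\hat{t}_i-\zeta _i^kt_i)=(1+\hat{t}_i)^{m_i-1}\prod _{k\in [m_i-1]}(1-\zeta _i^kx)=(1+\hat{t}_i)^{m_i-1}\,\frac{1-x^{m_i}}{1-x}, \]

where \(x=\frac{t_i}{1+\hat{t}_i}\). Since \(t_i^{m_i}=0\), we deduce

\[ c(R(i,\mathbf {m}))=(1+\hat{t}_i)^{m_i-1}\sum _{j=0}^{m_i-1}x^j=\sum _{j=0}^{m_i-1}(1+\hat{t}_i)^{m_i-1-j}\,t_i^j. \]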
This establishes (2.11). Equation (2.12) follows from formula (2.2). Note that the degree of the polynomial in \(\mathbf {t}:=(t_1,\ldots ,t_d)\) appearing on the right-hand side of (2.11) is \(m_i-1\). The polynomial \(\sum _{j=0}^{m_i-1} \hat{t}_i^{m_i-1-j} t_i^j=\frac{\hat{t}_i^{m_i}-t_i^{m_i}}{\hat{t}_i-t_i}\) is the homogeneous part of degree \(m_i-1\) of the right-hand side of (2.11). Hence, the homogeneous part of degree \(\dim \Pi (\mathbf {m})\) of the right-hand side of (2.12) is \(\prod _{i\in [d]} \frac{\hat{t}_i^{m_i}-t_i^{m_i}}{\hat{t}_i-t_i}\). Assuming the relations \(t_i^{m_i}=0, i\in [d]\), we obtain that this polynomial is \(c(\mathbf {m})\prod _{i\in [d]} t_i^{m_i-1}\). This is equivalent to the statement that \(c(\mathbf {m})\) is the coefficient of \(\prod _{i\in [d]} t_i^{m_i-1}\) in the polynomial \(\prod _{i\in [d]} \frac{\hat{t}_i^{m_i}-t_i^{m_i}}{\hat{t}_i-t_i}\), where we do not assume the relations \(t_i^{m_i}=0, i\in [d]\). \(\square \)
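The key algebraic step in the proof is the identity \(\prod _{k=1}^{m-1}(1+\hat{t}-\zeta ^kt)=\sum _{j=0}^{m-1}(1+\hat{t})^{m-1-j}t^j\), which in fact holds exactly, because \(\prod _{k=1}^{m-1}(1-\zeta ^kx)=1+x+\cdots +x^{m-1}\). The NumPy snippet below spot-checks it at random complex values of \(\hat{t}\) and \(t\); it is an illustration only, not a proof.

```python
import numpy as np

rng = np.random.default_rng(8)
for m in range(2, 8):
    zeta = np.exp(2j * np.pi / m)                          # primitive m-th root of unity
    t_hat, t = rng.standard_normal(2) + 1j * rng.standard_normal(2)
    lhs = np.prod([1 + t_hat - zeta**k * t for k in range(1, m)])   # product over Chern roots
    rhs = sum((1 + t_hat) ** (m - 1 - j) * t**j for j in range(m))  # right-hand side of (2.11)
    assert np.isclose(lhs, rhs)
print("identity verified for m = 2, ..., 7")
```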

2.5 Bertini-Type Theorems

Let \(M\) be a compact complex manifold and \(E\) a holomorphic vector bundle on \(M\). A holomorphic section \(\sigma \) of \(E\) on an open set \(U\subset M\) is a holomorphic map \(\sigma : U\rightarrow E\) with \(\sigma (\zeta )\in E_\zeta \) for each \(\zeta \in U\), where \(E\) is viewed as a complex manifold. Specifically, let \(U_i,i\in [N]\) be the finite cover of \(M\) such that the bundle \(E\) restricted to \(U_i\) is \(U_i\times \mathbb {C}^k\) with the standard basis \([\mathbf {e}_{1,i},\ldots ,\mathbf {e}_{k,i}]\), as in §2.2. Then \(\sigma (\zeta )=\sum _{j=1}^k \sigma _{j,i}(\zeta )\mathbf {e}_{j,i}\) for \(\zeta \in U\cap U_i\), where \(\sigma _{j,i}(\zeta ), j\in [k]\) are holomorphic on \(U\cap U_i\). \(\sigma \) is called a global section if \(U=M\). Denote by \(\mathrm {H}^0(E)\) the linear space of global sections of \(E\). A subspace \(\mathbf {V}\subset \mathrm {H}^0(E)\) is said to generate \(E\) if for each \(\zeta \in M\) the set of values \(\mathbf {V}(\zeta ):=\{\sigma (\zeta ):\sigma \in \mathbf {V}\}\) is equal to \(E_\zeta \).

The following theorem is a generalization of the classical Bertini theorem in algebraic geometry, and it is a standard consequence of the generic smoothness theorem. For the convenience of the reader we state it and give a short proof.

Theorem 4

(“Bertini-type” theorem) Let \(E\) be a vector bundle on \(M\). Let \(\mathbf {V}\subset \mathrm {H}^0(E)\) be a subspace that generates \(E\). Then the following statements hold:

1. If \(\mathrm {rank\;}E>\dim M\), then for a generic \(\sigma \in \mathbf {V}\) the zero locus of \(\sigma \) is empty.

2. If \(\mathrm {rank\;}E\le \dim M\), then for a generic \(\sigma \in \mathbf {V}\) the zero locus of \(\sigma \) is either empty or smooth of codimension \(\mathrm {rank\;}E\).

3. If \(\mathrm {rank\;}E=\dim M\), then the zero locus of a generic \(\sigma \in \mathbf {V}\) consists of \(\nu (E)\) simple points, where \(\nu (E)\) is the top Chern number of \(E\).

Proof

We identify the vector bundle \(E\) with its locally free sheaf of sections; see [8, B.3]. We have the projection \(E{\mathop {\longrightarrow }\limits ^{\pi }} M\), where the fiber \(\pi ^{-1}(\zeta )\) is isomorphic to the vector space \(E_\zeta \). Let \(\Pi \subset E\) be the zero section. By assumption we have a natural projection of maximal rank

\[ M\times \mathbf {V}\xrightarrow {\;p\;}E,\qquad (\zeta ,\sigma )\mapsto \sigma (\zeta ). \]

Let \(Z=p^{-1}(\Pi )\); then \(Z\) is isomorphic to the variety \(\{(\zeta ,\sigma )\in M\times \mathbf {V}|\sigma (\zeta )=0\}\), and it has dimension \(\dim M+\dim \mathbf {V}-\mathrm {rank\;}E\). Consider the natural projection \(Z{\mathop {\longrightarrow }\limits ^{q}}\mathbf {V}\); for every \(\sigma \in \mathbf {V}\) the fiber \(q^{-1}(\sigma )\) is naturally isomorphic to the zero locus of \(\sigma \). We have two cases. If \(q\) is dominant (namely, the image of \(q\) is dense), then by the generic smoothness theorem [12, Corollary III 10.7] \(q^{-1}(\sigma )\) is smooth of dimension \(\dim M-\mathrm {rank\;}E\) for generic \(\sigma \).

If \(q\) is not dominant (and this always happens in the case \(\mathrm {rank\;}E>\dim M\)), then \(q^{-1}(\sigma )\) is empty for generic \(\sigma \). This concludes the proof of the first two parts. The third part follows from [8, Example 3.2.16]. \(\square \)

For our purposes we need the following refinement of Theorem 4.

Definition 5

Let \(\pi : E\rightarrow M\) be a vector bundle on a smooth projective variety \(M\) such that \(\mathrm {rank\;}E\ge \dim M\). Let \(\mathbf {V}\subset \mathrm {H}^0(E)\) be a subspace. Then \(\mathbf {V}\) almost generates \(E\) if the following conditions hold. Either \(\mathbf {V}\) generates \(E\) (in this case \(k=0\)), or there exist \(k\ge 1\) smooth strict irreducible subvarieties \(Y_1,\ldots ,Y_k\) of \(M\) satisfying the following properties. First, on each \(Y_j\) there is a vector bundle \(E_j\). Second, setting \(Y_0=M\) and \(E_0=E\), the following conditions hold:

1. \(\mathrm {rank\;}E_j > \dim Y_j\) for each \(j\ge 1\).

2. Let \(\pi _j: E_j \rightarrow Y_j\). For any \(i,j\ge 0\) such that \(Y_i\) is a subvariety of \(Y_j\), \(E_i\) is a subbundle of \({E_j}_{|Y_i}\).

3. \(\mathbf {V}(\zeta )\subset (E_j)_\zeta \) for \(\zeta \in Y_j\).

4. Denote by \(P_j\subset [k]\) the set of all \(i\in [k]\) such that \(Y_i\) is a strict subvariety of \(Y_j\). Then \(\mathbf {V}(\zeta )=(E_j)_{\zeta }\) for \(\zeta \in Y_j\setminus \cup _{i\in P_j} Y_i\).

Theorem 6

Let \(E\) be a vector bundle on a smooth projective variety \(M\). Assume that \(\mathrm {rank\;}E\ge \dim M\). Let \(\mathbf {V}\subset \mathrm {H}^0(E)\) be a subspace that almost generates \(E\). Then the following conditions hold:

1. If \(\mathrm {rank\;}E>\dim M\), then for a generic \(\sigma \in \mathbf {V}\) the zero locus of \(\sigma \) is empty.

2. If \(\mathrm {rank\;}E=\dim M\), then the zero locus of a generic \(\sigma \in \mathbf {V}\) consists of \(\nu (E)\) simple points lying outside \(\cup _{j\in [k]} Y_j\), where \(\nu (E)\) is the top Chern number of \(E\).

Proof

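As in the proof of Theorem 4 we consider the variety

$$\begin{aligned} Z=\{(\zeta ,v)\in M\times \mathbf {V}\mid v(\zeta )=0\}. \end{aligned}$$

We consider the two projections \(p:Z\rightarrow M\) and \(q:Z\rightarrow \mathbf {V}\).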

The fiber \(q^{-1}(v)\) can be identified with the zero locus of \(v\). If \(j\ge 1\) and \(\zeta \in Y_j\setminus \cup _{i\in P_j}Y_i\), then, by condition 4 of Definition 5, the fiber \(p^{-1}(\zeta )\) can be identified with a subspace of \(\mathbf {V}\) of codimension \(\mathrm {rank\;}E_j\). It follows that the dimension of \(p^{-1}(Y_j\setminus \cup _{i\in P_j}Y_i)\) is equal to \(\dim \mathbf {V}-\mathrm {rank\;}E_j+\dim Y_j\), which, by condition 1 of Definition 5, is strictly smaller than \(\dim \mathbf {V}\). Let \(Y=\cup _{j\ge 1}Y_j\); since \(Y\) is the union of the sets \(Y_j\setminus \cup _{i\in P_j}Y_i\), \(j\ge 1\), we get \(\dim p^{-1}(Y)<\dim \mathbf {V}\). Over \(M\setminus Y\) the evaluation map \(\mathbf {V}\rightarrow E_\zeta \) is onto, so \(p^{-1}(M\setminus Y)\subset Z\) is a fibration with fibers of codimension \(\mathrm {rank\;}E\) in \(\mathbf {V}\); in particular, it is smooth of dimension \(\dim M+\dim \mathbf {V}-\mathrm {rank\;}E\). Let \(\overline{q}\) denote the restriction of \(q\) to \(p^{-1}(M\setminus Y)\). If \(\mathrm {rank\;}E>\dim M\), then \(\dim p^{-1}(M\setminus Y)<\dim \mathbf {V}\), so \(\overline{q}\) is not dominant and the generic fiber \({\overline{q}}^{-1}(v)\) is empty. If \(\mathrm {rank\;}E=\dim M\), then by the generic smoothness theorem applied to \(\overline{q}:p^{-1}(M\setminus Y)\rightarrow \mathbf {V}\), there exists an open set \(V_0\subset \mathbf {V}\) such that the fiber \({\overline{q}}^{-1}(v)\) is smooth for \(v\in V_0\).

Moreover, the dimension count above shows that \(q(p^{-1}(Y))\) is a closed proper subset of \(\mathbf {V}\) (note that \(q\) is a proper map). Let \(V_1=\mathbf {V}\setminus q(p^{-1}(Y))\), which is again open.

It follows that for \(v\in V_0\cap V_1\) the fiber \(q^{-1}(v)\) coincides with the fiber \({\overline{q}}^{-1}(v)\). When \(\mathrm {rank\;}E=\dim M\), this fiber is smooth by the previous argument and zero-dimensional (since \(\dim p^{-1}(M\setminus Y)=\dim \mathbf {V}\)), so it consists of finitely many simple points. Their number is \(\nu (E)\), again by [8, Example 3.2.16]. \(\square \)

3 Number of Singular Vector Tuples of a Generic Tensor

In this section we compute the number of singular vector tuples of a generic tensor \(\mathcal {T}\in \mathbb {C}^{\mathbf {m}}\). We need the following two lemmas; the first one is well known, and we leave its proof to the reader. Denote by \(Q_m:=\{\mathbf {x}\in \mathbb {C}^m\mid \mathbf {x}^\top \mathbf {x}=0\}\) the quadric of isotropic vectors.

Lemma 7

Let \(\mathbf {x}\in \mathbb {C}^m\setminus \{\mathbf {0}\}\), and use the notation \(\mathbf {U}:=\mathbb {C}^m/[\mathbf {x}]\). For \(\mathbf {y}\in \mathbb {C}^m\) denote by \([[\mathbf {y}]]\) the element in \(\mathbf {U}\) induced by \(\mathbf {y}\). Then the following statements hold:

1. Any linear functional \(\mathbf {g}:\mathbf {U}\rightarrow \mathbb {C}\) is uniquely represented by a vector \(\mathbf {w}\in \mathbb {C}^m\) such that \(\mathbf {w}^\top \mathbf {x}=0\) and \(\mathbf {g}([[\mathbf {y}]])=\mathbf {w}^\top \mathbf {y}\). In particular, if \(\mathbf {x}\in Q_m\), then \(\mathbf {g}_\mathbf {x}([[\mathbf {y}]]):=\mathbf {x}^\top \mathbf {y}\) defines a linear functional \(\mathbf {g}_\mathbf {x}:\mathbf {U}\rightarrow \mathbb {C}\); it is well defined because \(\mathbf {x}^\top \mathbf {x}=0\).

2. Suppose that \(\mathbf {x}\not \in Q_m\) and \(a\in \mathbb {C}\) is given. Then for each \(\mathbf {y}\in \mathbb {C}^m\) there exists a unique \(\mathbf {z}\in \mathbb {C}^m\) such that \([[\mathbf {z}]]=[[\mathbf {y}]]\) and \(\mathbf {x}^\top \mathbf {z}=a\), namely \(\mathbf {z}=\mathbf {y}+\frac{a-\mathbf {x}^\top \mathbf {y}}{\mathbf {x}^\top \mathbf {x}}\mathbf {x}\).

Lemma 8

Let \(\mathbf {m}=(m_1,\ldots ,m_d)\in \mathbb {N}^d\). Assume that \(\mathbf {x}_i\in \mathbb {F}^{m_i}\setminus \{\mathbf {0}\}, \mathbf {y}_i\in \mathbb {F}^{m_i}\) are given for \( i\in [d]\).

1. There exists \(\mathcal {T}\in \mathbb {F}^{\mathbf {m}}\) satisfying

$$\begin{aligned} \mathcal {T}\times \otimes _{j\in [d]\setminus \{i\}}\mathbf {x}_j=\mathbf {y}_i \end{aligned}$$ (3.1)

for every \(i\in [d]\) if and only if the following compatibility conditions hold:

$$\begin{aligned} \mathbf {x}_1^\top \mathbf {y}_1=\ldots =\mathbf {x}_d^\top \mathbf {y}_d. \end{aligned}$$ (3.2)

2. Let \(P\subset [d]\) be the set of all \(p\in [d]\) such that \(\mathbf {x}_p\) is isotropic. Consider the system of equations

$$\begin{aligned}{}[[\mathcal {T}\times \otimes _{j\in [d]\setminus \{l\}}\mathbf {x}_j]]=[[\mathbf {y}_l]] \end{aligned}$$ (3.3)

for every \(l\in [d]\). Then there exists \(\mathcal {T}\in \mathbb {F}^{\mathbf {m}}\) satisfying (3.3) if and only if one of the following conditions holds:

   (a) \(|P|\le 1\), i.e., at most one of the vectors \(\mathbf {x}_1,\ldots ,\mathbf {x}_d\) is isotropic.

   (b) \(|P|=k\ge 2\), \(P=\{i_1,\ldots ,i_k\}\), and

$$\begin{aligned} \mathbf {x}_{i_1}^\top \mathbf {y}_{i_1}=\mathbf {x}_{i_2}^\top \mathbf {y}_{i_2}=\ldots =\mathbf {x}_{i_k}^\top \mathbf {y}_{i_k}. \end{aligned}$$ (3.4)

3. Fix \(i\in [d]\), and let \(P\subset [d]\setminus \{i\}\) be the set of all \(p\in [d]\setminus \{i\}\) such that \(\mathbf {x}_p\) is isotropic. Then there exists \(\mathcal {T}\in \mathbb {F}^{\mathbf {m}}\) satisfying (3.1) for this \(i\) together with (3.3) for all \(l\in [d]\setminus \{i\}\) if and only if one of the following conditions holds:

   (a) \(|P|=0\).

   (b) \(|P|=k-1 \ge 1\), \(P=\{i_1,\ldots ,i_{k-1}\}\), and, with \(i_k:=i\), condition (3.4) holds.

Proof

1. Assume first that (3.1) holds. Then \(\mathcal {T}\times \otimes _{j\in [d]}\mathbf {x}_j=\mathbf {x}_i^\top \mathbf {y}_i\) for \(i\in [d]\). Hence, (3.2) holds. Suppose now that (3.2) holds. We now show that there exists \(\mathcal {T}\in \mathbb {F}^\mathbf {m}\) satisfying (3.1).

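Let \(U_j=[u_{pq,j}]_{p,q=1}^{m_j}\in \mathbf {GL}(m_j,\mathbb {F})\) for \(j\in [d]\), and let \(U:=\otimes _{j\in [d]}U_j\). Then \(U\) acts on \(\mathbb {F}^{\mathbf {m}}\) as a matrix acting on the corresponding vector space. That is, let \(\mathcal {T}'=U\mathcal {T}\), and write \(\mathcal {T}=[t_{i_1,\ldots ,i_d}]\), \(\mathcal {T}'=[t'_{j_1,\ldots ,j_d}]\). Then

$$\begin{aligned} t'_{j_1,\ldots ,j_d}=\sum _{i_1,\ldots ,i_d} u_{j_1i_1,1}\cdots u_{j_di_d,d}\,t_{i_1,\ldots ,i_d}. \end{aligned}$$

Setting \(\mathbf {x}_j':=(U_j^\top )^{-1}\mathbf {x}_j\) and \(\mathbf {y}_i':=U_i\mathbf {y}_i\), the conditions (3.1) for \(\mathcal {T}\) become the following conditions for \(\mathcal {T}'\):

$$\begin{aligned} \mathcal {T}'\times \otimes _{j\in [d]\setminus \{i\}}\mathbf {x}_j'=\mathbf {y}_i',\quad i\in [d]. \end{aligned}$$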

Clearly, \(\mathbf {x}_i^\top \mathbf {y}_i=(\mathbf {x}_i')^\top \mathbf {y}_i'\) for \(i\in [d]\). Since \(\mathbf {x}_i\ne \mathbf {0}\), there exists \(U_i\in \mathbf {GL}(m_i,\mathbb {F})\) such that \((U_i^\top )^{-1}\mathbf {x}_i=\mathbf {e}_{1,i}=(1,0,\ldots ,0)^\top \in \mathbb {F}^{m_i}\) for \(i\in [d]\). Hence, it is enough to show that (3.1) is satisfied for some \(\mathcal {T}\) when \(\mathbf {x}_i=\mathbf {e}_{1,i}\) for \(i\in [d]\) and \(\mathbf {e}_{1,1}^\top \mathbf {y}_1=\ldots =\mathbf {e}_{1,d}^\top \mathbf {y}_d\). Let \(\mathbf {y}_i=(y_{1,i},\ldots ,y_{m_i,i})^\top \) for \(i\in [d]\). Then conditions (3.2) read \(y_{1,1}=\ldots =y_{1,d}\). Define \(\mathcal {T}=[t_{i_1,\ldots , i_d}]\) as follows: \(t_{i_1,\ldots , i_d}=y_{i_j,j}\) if \(i_k=1\) for all \(k\ne j\), and \(t_{i_1,\ldots , i_d}=0\) otherwise. This is well defined at the index \((1,\ldots ,1)\) because \(y_{1,1}=\ldots =y_{1,d}\). Then (3.1) holds.
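As a numerical sanity check of the "if" direction of part 1 over \(\mathbb {R}\), the Python sketch below uses a basis-free construction that we supply for illustration; it is not the change-of-basis construction of the proof. Pick \(\mathbf {g}_j=\mathbf {x}_j/(\mathbf {x}_j^\top \mathbf {x}_j)\), so that \(\mathbf {g}_j^\top \mathbf {x}_j=1\), let \(a\) be the common value in (3.2), and set \(\mathcal {T}=\sum _{i\in [d]}\mathbf {g}_1\otimes \cdots \otimes \mathbf {y}_i\otimes \cdots \otimes \mathbf {g}_d-(d-1)a\,\mathbf {g}_1\otimes \cdots \otimes \mathbf {g}_d\). Contracting with \(\otimes _{j\ne k}\mathbf {x}_j\), the term \(i=k\) gives \(\mathbf {y}_k\), each of the other \(d-1\) terms gives \(a\,\mathbf {g}_k\), and the last term cancels them. The helper names `outer`, `contract_except` and `build_tensor` are hypothetical, and real nonzero \(\mathbf {x}_j\) are assumed (for complex isotropic \(\mathbf {x}_j\) this choice of \(\mathbf {g}_j\) is not available).

```python
import numpy as np


def outer(vectors):
    """Outer product v_1 ⊗ v_2 ⊗ ... ⊗ v_d as a d-way array."""
    T = vectors[0]
    for v in vectors[1:]:
        T = np.multiply.outer(T, v)
    return T


def contract_except(T, xs, i):
    """Compute T x ⊗_{j != i} x_j by contracting every mode except mode i."""
    out = T
    # Go from the last mode down so the axis numbers still to be used stay valid.
    for j in reversed(range(T.ndim)):
        if j != i:
            out = np.tensordot(out, xs[j], axes=([j], [0]))
    return out


def build_tensor(xs, ys):
    """Hypothetical helper: T with T x ⊗_{j != i} x_j = y_i for all i, given (3.2)."""
    d = len(xs)
    a = xs[0] @ ys[0]
    if not all(np.isclose(x @ y, a) for x, y in zip(xs, ys)):
        raise ValueError("compatibility condition (3.2) fails")
    gs = [x / (x @ x) for x in xs]      # g_j^T x_j = 1 (assumes x_j^T x_j != 0)
    T = -(d - 1) * a * outer(gs)        # cancels the a * g_k contributions
    for i in range(d):
        T = T + outer(gs[:i] + [ys[i]] + gs[i + 1:])
    return T


rng = np.random.default_rng(1)
dims = (2, 3, 4)
xs = [rng.standard_normal(m) for m in dims]
ys = [rng.standard_normal(m) for m in dims]
# Shift each y_i along x_i so that x_i^T y_i = 1.5 for all i, enforcing (3.2).
ys = [y + (1.5 - x @ y) / (x @ x) * x for x, y in zip(xs, ys)]
T = build_tensor(xs, ys)
```

The "only if" direction is visible in the code as the `ValueError`: contracting (3.1) once more with \(\mathbf {x}_i\) forces every \(\mathbf {x}_i^\top \mathbf {y}_i\) to equal the full contraction of \(\mathcal {T}\).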

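2. We now consider system (3.3). This system is solvable if and only if we can find \(t_1,\ldots ,t_d\in \mathbb {F}\) such that the system \(\mathcal {T}\times \otimes _{j\in [d]\setminus \{i\}}\mathbf {x}_j=\mathbf {y}_i+t_i\mathbf {x}_i\), \(i\in [d]\), is solvable, i.e., by part 1, such that

$$\begin{aligned} \mathbf {x}_1^\top (\mathbf {y}_1+t_1\mathbf {x}_1)=\ldots =\mathbf {x}_d^\top (\mathbf {y}_d+t_d\mathbf {x}_d). \end{aligned}$$ (3.6)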

Suppose first that \(\mathbf {x}_i\not \in Q_{m_i}\) for all \(i\in [d]\). Fix \(a\in \mathbb {F}\), and choose \(t_i=\frac{a-\mathbf {x}_i^\top \mathbf {y}_i}{\mathbf {x}_i^\top \mathbf {x}_i}\) for \(i\in [d]\). Then \(\mathbf {x}_i^\top (\mathbf {y}_i+t_i\mathbf {x}_i)=a\) for each \(i\), so (3.6) holds, and system (3.3) is solvable. Suppose next that \(\mathbf {x}_j\in Q_{m_j}\). Then \(\mathbf {x}_j^\top (\mathbf {y}_j+t_j\mathbf {x}_j)=\mathbf {x}_j^\top \mathbf {y}_j\) for every \(t_j\). Assume that \(P=\{j\}\), and let \(a=\mathbf {x}_j^\top \mathbf {y}_j\). Choosing \(t_i\), \(i\ne j\), as above, we deduce that (3.6) holds. Hence, (3.3) is solvable.

Assume finally that \(k\ge 2\) and \(P=\{i_1,\ldots ,i_k\}\). Equation (3.6) yields that if (3.3) is solvable, then (3.4) holds. Suppose that (3.4) holds. Let \(a=\mathbf {x}_{i_1}^\top \mathbf {y}_{i_1}=\ldots =\mathbf {x}_{i_k}^\top \mathbf {y}_{i_k}\). For \(i\not \in P\) let \(t_i=\frac{a-\mathbf {x}_i^\top \mathbf {y}_i}{\mathbf {x}_i^\top \mathbf {x}_i}\) to deduce that condition (3.6) holds. Hence, (3.3) is solvable.

3. Consider Eq. (3.1) for the fixed \(i\) and Eqs. (3.3) for \(l\in [d]\setminus \{i\}\). This system is solvable if and only if (3.6) is solvable with \(t_i=0\) and some \(t_l\in \mathbb {F}\), \(l\in [d]\setminus \{i\}\). Let \(a=\mathbf {x}_i^\top \mathbf {y}_i\). Assume that \(|P|=0\). Choosing \(t_l=\frac{a-\mathbf {x}_l^\top \mathbf {y}_l}{\mathbf {x}_l^\top \mathbf {x}_l}\) for \(l\in [d]\setminus \{i\}\) as above shows that this system is solvable. Assume that \(P=\{i_1,\ldots ,i_{k-1}\}\) for \(k\ge 2\), and let \(i_k:=i\). If the system is solvable for some \(\mathcal {T}\in \mathbb {F}^\mathbf {m}\), then \(a=\mathbf {x}_j^\top \mathbf {y}_j\) for each \(j\in P\); hence (3.4) holds. Conversely, assume that (3.4) holds. Choose \(t_l=\frac{a-\mathbf {x}_l^\top \mathbf {y}_l}{\mathbf {x}_l^\top \mathbf {x}_l}\) for \(l\not \in P\cup \{i\}\). Then (3.6) holds with \(t_i=0\). Hence, our system is solvable. \(\square \)
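The case analysis in part 2 of the proof can be mirrored in a few lines of Python. The function `compatible_shifts` below is a hypothetical name of ours: it looks for shifts \(t_i\) that make the values \(\mathbf {x}_i^\top (\mathbf {y}_i+t_i\mathbf {x}_i)\) equal, as in (3.6). A nonisotropic \(\mathbf {x}_i\) receives \(t_i=(a-\mathbf {x}_i^\top \mathbf {y}_i)/(\mathbf {x}_i^\top \mathbf {x}_i)\), while an isotropic \(\mathbf {x}_i\) ignores \(t_i\), so all isotropic indices must already agree, which is condition (3.4). The sketch uses the bilinear form \(\mathbf {x}^\top \mathbf {y}\) (NumPy's `@` does not conjugate) and decides isotropy with a numerical tolerance; both choices are our assumptions for floating-point input.

```python
import numpy as np


def compatible_shifts(xs, ys, a=0.0, tol=1e-12):
    """Return (t, a) with x_i^T (y_i + t_i x_i) = a for all i, or None.

    None means two isotropic x_i have different values x_i^T y_i,
    i.e. condition (3.4) fails. The value a is free only if no x_i is isotropic.
    """
    iso = [i for i, x in enumerate(xs) if abs(x @ x) < tol]
    if iso:
        # For isotropic x_i the value x_i^T (y_i + t x_i) = x_i^T y_i does not depend on t.
        a = xs[iso[0]] @ ys[iso[0]]
        if any(abs(xs[i] @ ys[i] - a) > tol for i in iso):
            return None
    ts = []
    for i, (x, y) in enumerate(zip(xs, ys)):
        # Isotropic indices get t_i = 0 (any value would do).
        ts.append(0.0 if i in iso else (a - x @ y) / (x @ x))
    return ts, a


x1 = np.array([1, 1j])        # isotropic: 1 + (1j)**2 = 0
x2 = np.array([1, 1j, 0])     # isotropic
x3 = np.array([1.0, 0.0])     # not isotropic
y1 = np.array([1, 2])         # x1^T y1 = 1 + 2j
y3 = np.array([3.0, 4.0])

bad = compatible_shifts([x1, x2, x3], [y1, np.array([0, 0, 5]), y3])    # x2^T y2 = 0
ts, a = compatible_shifts([x1, x2, x3], [y1, np.array([1, 2, 7]), y3])  # x2^T y2 = 1 + 2j
```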

Lemma 9

Let \(R(i,\mathbf {m})\) and \(R(\mathbf {m})\) be the vector bundles over the Segre product \(\Pi (\mathbf {m})\) defined in (2.6). Denote by \(\mathrm {H}^0(R(i,\mathbf {m}))\) and \(\mathrm {H}^0(R(\mathbf {m}))\) the linear spaces of global sections of \(R(i,\mathbf {m})\) and \(R(\mathbf {m})\), respectively. Then the following conditions hold:

1. For each \(i\in [d]\) there exists a monomorphism \(L_i:\mathbb {C}^{\mathbf {m}}\rightarrow \mathrm {H}^0(R(i,\mathbf {m}))\) such that \(L_i(\mathbb {C}^{\mathbf {m}})\) generates \(R(i,\mathbf {m})\) (see §2.5).

2. \(L=(L_1,\ldots ,L_d)\) is a monomorphism of the direct sum of \(d\) copies of \(\mathbb {C}^{\mathbf {m}}\) (denoted by \(\oplus ^d \mathbb {C}^{\mathbf {m}}\)) to \(\mathrm {H}^0(R(\mathbf {m}))\), which generates \(R(\mathbf {m})\).

3. Let \(\delta : \mathbb {C}^{\mathbf {m}}\rightarrow \oplus ^d \mathbb {C}^{\mathbf {m}}\) be the diagonal map \(\delta (\mathcal {T})=(\mathcal {T},\ldots ,\mathcal {T})\). Consider \(([\mathbf {x}_1],\ldots , [\mathbf {x}_d])\in \Pi (\mathbf {m})\).

   (a) If at most one of \(\mathbf {x}_1,\ldots ,\mathbf {x}_d\) is isotropic, then \(L\circ \delta (\mathbb {C}^{\mathbf {m}})\) [as a space of sections of \(R(\mathbf {m})\)] generates \(R(\mathbf {m})\) at \(([\mathbf {x}_1],\ldots ,[\mathbf {x}_d])\).

   (b) Let \(P\subset [d]\) be the set of all \(i\in [d]\) such that \(\mathbf {x}_i\) is isotropic. Assume that \(P=\{i_1,\ldots ,i_k\}\), where \(k\ge 2\). Let \(\mathbf {g}_{\mathbf {x}_{i_p}}\) be the linear functional on the fiber of \(\pi _{i_p}^*Q(m_{i_p})\) at \(([\mathbf {x}_1],\ldots ,[\mathbf {x}_d])\) as defined in Lemma 7 for \(p=1,\ldots ,k\). Let \(\mathbf {U}(P)\) be the subspace of all linear transformations \(\tau =(\tau _1,\ldots ,\tau _d)\in R(\mathbf {m})_{([\mathbf {x}_1],\ldots ,[\mathbf {x}_d])}, \tau _i\in R(i,\mathbf {m})_{([\mathbf {x}_1],\ldots ,[\mathbf {x}_d])}, i\in [d]\) satisfying

$$\begin{aligned} \mathbf {g}_{\mathbf {x}_{i_1}}(\tau _{i_1}(\otimes _{j\in [d]\setminus \{i_1\}} \mathbf {x}_j))=\ldots =\mathbf {g}_{\mathbf {x}_{i_k}}(\tau _{i_k}(\otimes _{j\in [d]\setminus \{i_k\}} \mathbf {x}_j)). \end{aligned}$$ (3.7)

Then \(L\circ \delta (\mathcal {T})([\mathbf {x}_1],\ldots ,[\mathbf {x}_d])\in \mathbf {U}(P)\) for each \(\mathcal {T}\in \mathbb {C}^\mathbf {m}\). Furthermore, \(L\circ \delta (\mathbb {C}^{\mathbf {m}})([\mathbf {x}_1],\ldots ,[\mathbf {x}_d])=\mathbf {U}(P)\).

Proof

For \(\mathcal {T}\in \mathbb {C}^{\mathbf {m}}\) we define the section \( L_i(\mathcal {T})(([\mathbf {x}_1],\ldots ,[\mathbf {x}_d]))\!\in \! R(i,\mathbf {m})_{([\mathbf {x}_1],\ldots ,[\mathbf {x}_d])}\) as follows:

It is straightforward to check that \(L_i(\mathcal {T})\) is a global section of \(R(i,\mathbf {m})\).

Assume \(\mathcal {T}\ne 0\). Then there exist \(\mathbf {v}_j\in \mathbb {C}^{m_j}, j\in [d]\), such that \(\mathcal {T}\times \otimes _{j\in [d]}\mathbf {v}_j\ne 0\). Hence, \(\mathbf {u}_i:=\mathcal {T}\times \otimes _{j\in [d]\setminus \{i\}}\mathbf {v}_j\in \mathbb {C}^{m_i}\setminus \{\mathbf {0}\}\). Let \(\mathbf {x}_j=\mathbf {v}_j\) for \(j\ne i\), and choose \(\mathbf {x}_i\in \mathbb {C}^{m_i}\setminus [\mathbf {u}_i]\). Since \(\mathbf {u}_i\not \in [\mathbf {x}_i]\), we get \(L_i(\mathcal {T})(([\mathbf {x}_1],\ldots ,[\mathbf {x}_d]))\ne 0\). Hence, \(L_i\) is injective.

We now show that \(L_i(\mathbb {C}^{\mathbf {m}})\) generates \(R(i,\mathbf {m})\). Let \(\mathbf {y}_i\in \mathbb {C}^{m_i}\). Choose \(\mathbf {g}_j\in \mathbb {C}^{m_j}\) such that \(\mathbf {g}_j^\top \mathbf {x}_j=1\) for \(j\in [d]\). Set \(\mathcal {T}=(\otimes _{j\in [i-1]}\mathbf {g}_j)\otimes \mathbf {y}_i\otimes (\otimes _{j\in [d]\setminus [i]} \mathbf {g}_j)\). Then \( L_i(\mathcal {T})(([\mathbf {x}_1],\ldots ,[\mathbf {x}_d]))= [[\mathbf {y}_i]]\). This proves 1.

Define \(L((\mathcal {T}_1,\ldots ,\mathcal {T}_d))(([\mathbf {x}_1],\ldots ,[\mathbf {x}_d]))=\oplus _{i\in [d]}L_i(\mathcal {T}_i)(([\mathbf {x}_1],\ldots ,[\mathbf {x}_d]))\). Then \(L((\mathcal {T}_1,\ldots ,\mathcal {T}_d))\in \mathrm {H}^0(R(\mathbf {m}))\). Clearly \(L\) is a monomorphism. Furthermore, \(L(\oplus ^d \mathbb {C}^\mathbf {m})\) generates \(R(\mathbf {m})\). This shows 2.

Cases 3a and 3b of our lemma follow from parts 2a and 2b of Lemma 8, respectively. \(\square \)

Künneth’s formula [14] yields the equalities

The following result is a corollary to Lemma 8.

Corollary 10

Assume that \(([\mathbf {x}_1],\ldots ,[\mathbf {x}_d])\in \Pi (\mathbf {m})\) is a singular vector tuple of a tensor \(\mathcal {T}\) corresponding to a nonzero singular value. Then one of the following holds:

1. All \(\mathbf {x}_i\) are isotropic.

2. All \(\mathbf {x}_i\) are nonisotropic.

For \(\mathcal {T}\in \mathbb {R}^{\mathbf {m}}\) with a real singular vector tuple \(([\mathbf {x}_1],\ldots ,[\mathbf {x}_d])\in \Pi _\mathbb {R}(\mathbf {m})\) the condition \(\prod _{i\in [d]}\lambda _i=0\) implies that \(\lambda _i=0\) for each \(i\). Indeed, since \(\mathbf {x}_i\in \mathbb {R}^{m_i}\setminus \{\mathbf {0}\}\), it follows from (1.2) that \(\lambda _i=\frac{\mathcal {T}\times \otimes _{j\in [d]} \mathbf {x}_j}{\mathbf {x}_i^\top \mathbf {x}_i}\) for each \(i\in [d]\). Thus \(\lambda _k=0\) for some \(k\in [d]\) yields that \(\mathcal {T}\times \otimes _{j\in [d]} \mathbf {x}_j=0\). Hence, each \(\lambda _i=0\).

However, this observation fails for complex tensors, already for complex matrices (\(d=2\)), as the example below shows. It is straightforward to see that a singular vector pair \(([\mathbf {x}_1],[\mathbf {x}_2])\) of \(A\in \mathbb {C}^{m_1\times m_2}\) is given by the following conditions:

Consider the following simple example:

Then \(A^\top \mathbf {x}_1=\mathbf {x}_2, A\mathbf {x}_2=\mathbf {0}\), i.e., \(\lambda _1=1,\lambda _2=0\).
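For a concrete instance, here is a matrix of our own choosing, which need not coincide with the example displayed above: \(A=\begin{pmatrix}1&\sqrt{-1}\\ 0&0\end{pmatrix}\), \(\mathbf {x}_1=(1,0)^\top \), \(\mathbf {x}_2=(1,\sqrt{-1})^\top \). The NumPy sketch below checks, with the bilinear product \(\mathbf {x}^\top \mathbf {y}\) (NumPy's `@` does not conjugate), that \(A^\top \mathbf {x}_1=\mathbf {x}_2\) and \(A\mathbf {x}_2=\mathbf {0}\), that \(\mathbf {x}_2\) is isotropic, and that the full contraction \(\mathbf {x}_1^\top A\mathbf {x}_2\) vanishes. The real-case argument breaks down exactly here: the scalar \(1\) in \(A^\top \mathbf {x}_1=1\cdot \mathbf {x}_2\) cannot be recovered as \(\mathbf {x}_1^\top A\mathbf {x}_2/(\mathbf {x}_2^\top \mathbf {x}_2)\), since both numerator and denominator are zero.

```python
import numpy as np

# Hypothetical 2 x 2 example (our choice); all products below are bilinear x^T y.
A = np.array([[1, 1j], [0, 0]])
x1 = np.array([1, 0], dtype=complex)
x2 = np.array([1, 1j])

lhs_1 = A.T @ x1            # equals x2: this relation holds with scalar 1
lhs_2 = A @ x2              # equals 0: this relation holds with scalar 0
iso_2 = x2 @ x2             # x2^T x2 = 1 + (1j)**2 = 0, so x2 is isotropic
full = x1 @ A @ x2          # full contraction x1^T A x2
```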

Lemma 11

Let \(\mathcal {T}\in \mathbb {C}^\mathbf {m}\), and consider the section \(\hat{\mathcal {T}}:=L\circ \delta ({\mathcal {T}}) \in \mathrm {H}^0(R(\mathbf {m}))\). We have that \(([\mathbf {x}_1],\ldots ,[\mathbf {x}_d])\in \Pi (\mathbf {m})\) is a zero of \(\hat{\mathcal {T}}\) if and only if \(([\mathbf {x}_1],\ldots ,[\mathbf {x}_d])\) is a singular vector tuple corresponding to \({\mathcal {T}}\).

Proof

Suppose first that \(\hat{\mathcal {T}}(([\mathbf {x}_1],\ldots ,[\mathbf {x}_d]))=0\). Then, for each \(i\in [d]\), \(L_i({\mathcal {T}})(([\mathbf {x}_1],\ldots ,[\mathbf {x}_d]))\) is the zero vector in the fiber of \(R(i,\mathbf {m})\) at \(([\mathbf {x}_1],\ldots ,[\mathbf {x}_d])\), i.e., \({\mathcal {T}}\times (\otimes _{j\in [d]\setminus \{i\}}\mathbf {x}_j)\in [\mathbf {x}_i]\). If \({\mathcal {T}}\times (\otimes _{j\in [d]\setminus \{i\}}\mathbf {x}_j)\ne \mathbf {0}\), then \({\mathcal {T}}\times (\otimes _{j\in [d]\setminus \{i\}}\mathbf {x}_j)=\lambda _i\mathbf {x}_i\) for some \(\lambda _i\ne 0\); otherwise, the same equality holds with \(\lambda _i=0\). Hence, \(([\mathbf {x}_1],\ldots ,[\mathbf {x}_d])\) is a singular vector tuple of \({\mathcal {T}}\). Conversely, it is straightforward to see that if \(([\mathbf {x}_1],\ldots ,[\mathbf {x}_d])\) is a singular vector tuple of \(\mathcal {T}\), then the section \(\hat{\mathcal {T}}\) vanishes at \(([\mathbf {x}_1],\ldots ,[\mathbf {x}_d])\in \Pi (\mathbf {m})\). \(\square \)

We now present the proof of Theorem 1, which was stated in § 1.

Proof of Theorem 1. Let \(\mathbf {V}=L\circ \delta (\mathbb {C}^{\mathbf {m}})\) be the subspace of sections of \(R(\mathbf {m})\) given by tensors (embedded diagonally). We now show that \(\mathbf {V}\) almost generates \(R(\mathbf {m})\) in the sense of Definition 5. First, \(\mathrm {rank\;}R(\mathbf {m})=\dim \Pi (\mathbf {m})\). Second, for each \(k\in [d]\) let \(2^{[d]_k}\) be the set of all subsets of \([d]\) of cardinality \(k\), and let \(\alpha \in 2^{[d]_k}\). Define \(Y_\alpha =X_1\times \cdots \times X_d\), where \(X_i=\mathbb {P}(Q_{m_i})\) if \(i\in \alpha \) and \(X_i=\mathbb {P}(\mathbb {C}^{m_i})\) otherwise. Clearly, \(Y_\alpha \) is a strict smooth subvariety of \(\Pi (\mathbf {m})\) of codimension \(k\). Note that \(Y_\beta \subsetneq Y_\alpha \) if and only if \(\alpha \subsetneq \beta \). We now define the subbundle \(E_\alpha \) of \(\pi ^{-1}(Y_{\alpha })\). If \(\alpha \in 2^{[d]_1}\), then \(E_\alpha =\pi ^{-1}(Y_{\alpha })\). Assume now that \(k>1\), let \(\alpha =\{i_1,\ldots ,i_k\}\), and let \(([\mathbf {x}_1],\ldots ,[\mathbf {x}_d])\in Y_{\alpha }\), so that \(\mathbf {x}_{i_l}\in Q_{m_{i_l}}\) for \(l=1,\ldots ,k\). Then the fiber of \(E_{\alpha }\) at \(([\mathbf {x}_1],\ldots ,[\mathbf {x}_d])\) is the set of all vectors satisfying (3.7). Since (3.7) consists of \(k-1\) independent linear conditions, \(\mathrm {rank\;}E_{\alpha }=\dim \Pi (\mathbf {m})-(k-1)=\dim Y_\alpha +1\). Assume that \(\alpha \subsetneq \beta \). Clearly, \(E_\beta \) is a strict subbundle of \(\pi _\alpha ^{-1}(Y_\beta )\). Hence conditions 1 and 2 of Definition 5 hold. Lemma 9 implies that conditions 3 and 4 of Definition 5 hold. Theorem 6 then implies that for a generic \(\mathcal {T}\in \mathbb {C}^\mathbf {m}\) the section \(L\circ \delta (\mathcal {T})\) has a finite number of simple zeros, and that this number is equal to the top Chern number of \(R(\mathbf {m})\). Lemma 3 yields that the top Chern number of \(R(\mathbf {m})\) is \(c(\mathbf {m})\).

It remains to show that a generic \(\mathcal {T}\in \mathbb {C}^\mathbf {m}\) does not have a zero singular value. Fix \(i\in [d]\), and consider the set of all \(\mathcal {T}\in \mathbb {C}^\mathbf {m}\) that have a singular vector tuple \(([\mathbf {x}_1],\ldots ,[\mathbf {x}_d])\in \Pi (\mathbf {m})\) with \(\lambda _i=0\).

Let \(R(i,\mathbf {m})'\) and \(R_i(\mathbf {m})'\) be as defined in (2.6). Similarly to definition (3.8), we can define a monomorphism \(L_i':\mathbb {C}^\mathbf {m}\rightarrow \mathrm {H}^0(R(i,\mathbf {m})')\) by the equality

Let \(\tilde{L}_i=(L_1,\ldots ,L_{i-1},L_i',L_{i+1},\ldots ,L_d):\oplus _{j\in [d]}\mathbb {C}^\mathbf {m}\rightarrow \mathrm {H}^0(R_i(\mathbf {m})')\).

We claim that \(\tilde{L}_i\circ \delta (\mathbb {C}^\mathbf {m})\) almost generates \(R_i(\mathbf {m})'\). Clearly, \(\mathrm {rank\;}R_i(\mathbf {m})'\!=\!\dim \Pi (\mathbf {m})\!+\!1\). Recall that a vector \((\tau _1,\ldots ,\tau _d)\in R_i(\mathbf {m})'_{([\mathbf {x}_1],\ldots ,[\mathbf {x}_d])}\) is of the form

Let \(\alpha \subset [d]\setminus \{i\}\) be a nonempty set. Then \(Y_\alpha =X_1\times \cdots \times X_d\), where \(X_j=\mathbb {P}(Q_{m_j})\) if \(j\in \alpha \) and \(X_j=\mathbb {P}(\mathbb {C}^{m_j})\) if \(j\not \in \alpha \). [Note that \(X_i=\mathbb {P}(\mathbb {C}^{m_i})\).] We now define the vector bundles \(\pi _{\alpha }:E_\alpha \rightarrow Y_{\alpha }\). Let \(\pi : R_i(\mathbf {m})'\rightarrow \Pi (\mathbf {m})\). Assume that \(\alpha =\{i_1,\ldots ,i_{k-1}\}\subset [d]\setminus \{i\}\), where \(k-1\ge 1\). Then \(E_\alpha \) is the subbundle of \(\pi ^{-1}(Y_{\alpha })\) defined as follows: for \(([\mathbf {x}_1],\ldots ,[\mathbf {x}_d])\in Y_{\alpha }\), its fiber consists of all vectors of the form (3.11) satisfying the following variant of condition (3.7):

Note that \(\mathrm {rank\;}E_\alpha =\dim Y_\alpha +1\). Clearly, conditions 1–2 of Definition 5 hold, and part 3 of Lemma 8 implies conditions 3 and 4 of Definition 5. Since \(\mathrm {rank\;}R_i(\mathbf {m})'=\dim \Pi (\mathbf {m})+1>\dim \Pi (\mathbf {m})\), Theorem 6 yields that for a generic \(\mathcal {T}\in \mathbb {C}^\mathbf {m}\) the section \(\tilde{L}_i\circ \delta (\mathcal {T})\) has no zeros. Thus, a generic \(\mathcal {T}\) does not have a singular vector tuple satisfying (1.2) with \(\lambda _i=0\). Since \(i\in [d]\) was arbitrary, a generic tensor \(\mathcal {T}\in \mathbb {C}^\mathbf {m}\) does not have a zero singular value.

Finally, since \(\mathbb {R}^\mathbf {m}\) is Zariski dense in \(\mathbb {C}^\mathbf {m}\), a generic \(\mathcal {T}\in \mathbb {R}^\mathbf {m}\) has exactly \(c(\mathbf {m})\) simple complex singular vector tuples. In general, only some of them are real, i.e., lie in \(\Pi _\mathbb {R}(\mathbf {m})\). \(\square \)

We first observe that Theorem 1 agrees with the standard theory of singular values for \(m\times n\) real matrices. That is, a generic \(A\in \mathbb {R}^{m\times n}\) has exactly \(\min (m,n)\) nonzero singular values all of which are positive and pairwise distinct. The corresponding singular vector pairs are simple.

We now point out a matrix proof of Theorem 1 for \(d=2\). Let \(\mathrm {O}(m)=\{U\in \mathbb {C}^{m\times m}\mid U^\top U=I\}\) be the variety of complex orthogonal \(m\times m\) matrices, and let \(\mathrm {D}_{m,n}\subset \mathbb {C}^{m\times n}\) be the linear subspace of all diagonal matrices. Consider the trilinear polynomial map \(F:\mathrm {O}(m_1)\times \mathrm {D}_{m_1,m_2}\times \mathrm {O}(m_2)\rightarrow \mathbb {C}^{m_1\times m_2}\) given by \((U_1,D,U_2)\mapsto U_1 D U_2^\top \). The singular value decomposition shows that any \(A\in \mathbb {R}^{m_1\times m_2}\) is of the form \(U_1 D U_2^\top \), where \(U_1,U_2\) are real orthogonal and \(D\) is a nonnegative diagonal matrix. Hence, the image \(F(\mathrm {O}(m_1)\times \mathrm {D}_{m_1,m_2}\times \mathrm {O}(m_2))\) contains \(\mathbb {R}^{m_1\times m_2}\), which is Zariski dense in \(\mathbb {C}^{m_1\times m_2}\). Therefore, the image of \(F\) is dense in \(\mathbb {C}^{m_1\times m_2}\), and a generic \(A\in \mathbb {C}^{m_1\times m_2}\) is of the form \(U_1 D U_2^\top \). Furthermore, we can assume that \(D=\mathop {\mathrm{diag}}\nolimits (\lambda _1,\ldots ,\lambda _l)\), \(l=\min (m_1,m_2)\), where the diagonal entries are nonzero and pairwise distinct. Let \(\mathbf {x}_i,\mathbf {y}_i\) be the \(i\)th columns of \(U_1,U_2\), respectively, for \(i=1,\ldots ,l\). Then \(A\mathbf {y}_i=\lambda _i\mathbf {x}_i\) and \(A^\top \mathbf {x}_i=\lambda _i\mathbf {y}_i\), so \(([\mathbf {x}_i],[\mathbf {y}_i])\) is a simple singular vector tuple corresponding to \(\lambda _i\) for \(i=1,\ldots ,l\).
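For real matrices the statement can be checked numerically with an ordinary SVD. The sketch below (NumPy; the sizes \(4\times 6\) and the random seed are arbitrary choices of ours) takes a random real matrix, whose singular values are almost surely positive and pairwise distinct, and verifies that each pair \((\mathbf {x}_i,\mathbf {y}_i)\) of columns of \(U_1,U_2\) satisfies \(A\mathbf {y}_i=\lambda _i\mathbf {x}_i\) and \(A^\top \mathbf {x}_i=\lambda _i\mathbf {y}_i\). Note that `np.linalg.svd` returns \(U_2^\top \) rather than \(U_2\), so \(\mathbf {y}_i\) is the \(i\)th row of that factor.

```python
import numpy as np

rng = np.random.default_rng(0)
m1, m2 = 4, 6
A = rng.standard_normal((m1, m2))

# NumPy returns A = U1 @ diag(lam) @ U2t with U2t = U2^T and lam sorted decreasingly.
U1, lam, U2t = np.linalg.svd(A)
l = min(m1, m2)

pairs = []
for i in range(l):
    x, y = U1[:, i], U2t[i, :]        # i-th columns of U1 and U2
    pairs.append((x, y))
    assert np.allclose(A @ y, lam[i] * x)
    assert np.allclose(A.T @ x, lam[i] * y)

# Generic real case: l = min(m1, m2) singular values, all positive and distinct.
assert lam.shape == (l,)
assert np.all(lam > 0) and np.all(np.diff(lam) < 0)
```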

We list for the convenience of the reader a few values of \(c(\mathbf {m})\). First,

$$\begin{aligned} c(\underbrace{2,\ldots ,2}_{d})=d!. \end{aligned}$$

Indeed, with \(\hat{t}_i=\sum _{j\in [d]\setminus \{i\}} t_j\), we have \(\frac{\hat{t}_i^2-t_i^2}{\hat{t}_i-t_i}=\hat{t}_i+t_i=\sum _{j\in [d]} t_j\). Therefore, \(\prod _{i\in [d]} \frac{\hat{t}_i^2-t_i^2}{\hat{t}_i-t_i}= (\sum _{j\in [d]} t_j)^d\), and by the multinomial theorem the coefficient of \(t_1\cdots t_d\) in this polynomial is \(d!\).

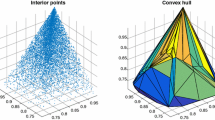

Second, we list in Table 1 the first values in the case where \(d=3\). From this table one sees that \(c(m_1,m_2,m_3)\) stabilizes for \(m_3\ge m_1+m_2-1\), and the case where equality holds is called the boundary format case in the theory of hyperdeterminants ([9]). It is the case where a “diagonal” naturally occurs, as in Fig. 1:

In the \(d=2\) case, a boundary format means a square.
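Values such as those in Table 1 can be generated mechanically. The Python sketch below assumes that \(c(\mathbf {m})\) is the coefficient of \(\prod _i t_i^{m_i-1}\) in \(\prod _{i\in [d]}\frac{\hat{t}_i^{m_i}-t_i^{m_i}}{\hat{t}_i-t_i}\) with \(\hat{t}_i=\sum _{j\ne i}t_j\), the polynomial whose case \(\mathbf {m}=(2,\ldots ,2)\) is computed above. An optional weight vector `omega` replaces \(\hat{t}_i\) by \((\sum _j\omega _jt_j)-t_i\); we assume this is the polynomial behind \(c(\mathbf {m}',\varvec{\omega })\) in §4 (it reproduces the values 7 and 13 quoted there). Polynomials are stored as dictionaries from exponent tuples to integers, and monomials with an exponent of \(t_i\) above \(m_i-1\) are discarded, since they cannot contribute to the target coefficient. All function names are hypothetical.

```python
def poly_mul(p, q, caps):
    """Multiply polynomials {exponent tuple: coefficient}, dropping monomials
    whose exponent of t_i exceeds caps[i] (they cannot reach the target)."""
    out = {}
    for e1, c1 in p.items():
        for e2, c2 in q.items():
            e = tuple(a + b for a, b in zip(e1, e2))
            if all(ei <= cap for ei, cap in zip(e, caps)):
                out[e] = out.get(e, 0) + c1 * c2
    return out


def poly_pow(p, k, caps):
    """p**k, truncated as in poly_mul."""
    result = {(0,) * len(caps): 1}
    for _ in range(k):
        result = poly_mul(result, p, caps)
    return result


def linear_form(coeffs):
    """The linear polynomial sum_j coeffs[j] * t_j."""
    n = len(coeffs)
    return {tuple(int(k == j) for k in range(n)): c
            for j, c in enumerate(coeffs) if c != 0}


def singular_tuple_count(ms, omega=None):
    """Coefficient of prod t_i^(m_i - 1) in prod_i (hat_i^m_i - t_i^m_i) / (hat_i - t_i),
    where hat_i = sum_j omega_j t_j - t_i and omega defaults to all ones."""
    n = len(ms)
    omega = [1] * n if omega is None else list(omega)
    caps = [m - 1 for m in ms]
    total = {(0,) * n: 1}
    for i, m in enumerate(ms):
        hat = linear_form([w - (1 if j == i else 0) for j, w in enumerate(omega)])
        t_i = linear_form([1 if j == i else 0 for j in range(n)])
        # (hat^m - t_i^m) / (hat - t_i) = sum_{k=0}^{m-1} hat^k * t_i^(m-1-k)
        factor = {}
        for k in range(m):
            term = poly_mul(poly_pow(hat, k, caps), poly_pow(t_i, m - 1 - k, caps), caps)
            for e, c in term.items():
                factor[e] = factor.get(e, 0) + c
        total = poly_mul(total, factor, caps)
    return total.get(tuple(caps), 0)


# Some d = 3 values, illustrating stabilization once m3 >= m1 + m2 - 1:
for m3 in range(2, 6):
    print((2, 2, m3), singular_tuple_count([2, 2, m3]))
```

For \(d=2\) the function returns \(\min (m_1,m_2)\), in agreement with the matrix case discussed above.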

4 Partially Symmetric Singular Vector Tuples

For an integer \(m\ge 2\) let \(m^{\times d}:=(\underbrace{m,\ldots ,m}_d)\). A tensor \(\mathcal {T}\in \mathbb {F}^{m^{\times d}}\) is called a \(d\)-cube, or simply a cube tensor. Denote by \(\mathrm {S}^d(\mathbb {F}^{m})\subset \mathbb {F}^{m^{\times d}}\) the subspace of symmetric tensors. For \(\mathcal {T}\in \mathrm {S}^d(\mathbb {F}^{m})\) it is natural to consider a singular vector tuple (1.2) with \(\mathbf {x}_1=\ldots =\mathbf {x}_d=\mathbf {x}\) ([16, Formula (7)], with \(p=2\)). This is equivalent to the system

$$\begin{aligned} \mathcal {T}\times \otimes ^{d-1}\mathbf {x}=\lambda \mathbf {x}. \end{aligned}$$ (4.1)

Here \(\otimes ^{d-1}\mathbf {x}:=\underbrace{\mathbf {x}\otimes \ldots \otimes \mathbf {x}}_{d-1}\). Furthermore, the contraction in (4.1) is on the last \(d-1\) indices. Equation (4.1) makes sense for any cube tensor \(\mathcal {T}\in \mathbb {C}^{m^{\times d}}\) [16, 19, 22]. For \(d=2\), \(\mathbf {x}\) is an eigenvector of the square matrix \(\mathcal {T}\). Hence, for a \(d\)-cube tensor (\(d\ge 3\)), \(\mathbf {x}\) is referred to as a nonlinear eigenvector of \(\mathcal {T}\). Abusing notation slightly, we call \(([\mathbf {x}],\ldots ,[\mathbf {x}]) \in \Pi (m^{\times d})\) a symmetric singular vector tuple of \(\mathcal {T}\). [Note that if \(\mathcal {T}\in \mathrm {S}^d(\mathbb {C}^m)\), then \(([\mathbf {x}],\ldots ,[\mathbf {x}])\) is a proper symmetric singular vector tuple of \(\mathcal {T}\).]

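Let \(s_{d-1}(\mathcal {T})=[t'_{i_1,\ldots ,i_d}]\) be the symmetrization of a \(d\)-cube \(\mathcal {T}=[t_{i_1,\ldots ,i_d}]\) with respect to the last \(d-1\) indices:

$$\begin{aligned} t'_{i_1,i_2,\ldots ,i_d}=\frac{1}{p(i_2,\ldots ,i_d)}\sum _{\{j_2,\ldots ,j_d\}=\{i_2,\ldots ,i_d\}} t_{i_1,j_2,\ldots ,j_d}. \end{aligned}$$

Here the sum runs over all sequences \((j_2,\ldots ,j_d)\) whose multiset \(\{j_2,\ldots ,j_d\}\) equals \(\{i_2,\ldots ,i_d\}\), and \(p(i_2,\ldots ,i_d)\) is the number of such sequences. [Note that for \(d=2\), \(s_1(\mathcal {T})=\mathcal {T}\).] It is straightforward to see that

$$\begin{aligned} \mathcal {T}\times \otimes ^{d-1}\mathbf {x}=s_{d-1}(\mathcal {T})\times \otimes ^{d-1}\mathbf {x}\quad \text {for all } \mathbf {x}\in \mathbb {C}^m. \end{aligned}$$

Hence, in (4.1) we can assume that \(\mathcal {T}\) is symmetric with respect to the last \(d-1\) indices.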
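The reduction to tensors symmetric in the last \(d-1\) indices rests on the fact that contracting with \(\otimes ^{d-1}\mathbf {x}\) cannot distinguish \(\mathcal {T}\) from \(s_{d-1}(\mathcal {T})\). The NumPy sketch below checks this numerically for a random \(4\)-cube of dimension \(3\) (sizes and seed are our arbitrary choices). It computes the symmetrization by averaging over all \((d-1)!\) permutations of the last \(d-1\) axes; each distinct rearrangement of \((i_2,\ldots ,i_d)\) is hit by the same number of permutations, so this gives the same tensor as averaging over distinct rearrangements. The function names are hypothetical.

```python
import itertools

import numpy as np


def symmetrize_last(T):
    """Average a d-cube T over all (d-1)! permutations of its last d-1 axes."""
    perms = list(itertools.permutations(range(1, T.ndim)))
    return sum(np.transpose(T, (0,) + p) for p in perms) / len(perms)


def contract_last(T, x):
    """T x (x ⊗ ... ⊗ x): contract the last d-1 indices of T with x."""
    out = T
    for _ in range(T.ndim - 1):
        out = out @ x        # matmul with a 1-D x contracts the current last axis
    return out


rng = np.random.default_rng(2)
m, d = 3, 4
T = rng.standard_normal((m,) * d)
S = symmetrize_last(T)
x = rng.standard_normal(m)
```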

As for singular vector tuples we view the eigenvectors of \(\mathcal {T}\) as elements of \(\mathbb {P}(\mathbb {C}^m)\). It was shown by Cartwright and Sturmfels [3] that a generic \(\mathcal {T}\in \mathbb {C}^{m^{\times d}}\) has exactly \(\frac{(d-1)^{m}-1}{d-2}\) distinct eigenvectors. (This formula was conjectured in [19].)

The aim of this section is to consider partially symmetric singular vector tuples and their number for a generic tensor. This number interpolates between our formula \(c(\mathbf {m})\) for the number of singular vector tuples of a generic \(\mathcal {T}\in \mathbb {C}^\mathbf {m}\) and the number of eigenvectors of a generic \(\mathcal {T}\in \mathbb {C}^{m^{\times d}}\) given in [3].

Let \(d=\omega _1+\ldots +\omega _p\) be a partition of \(d\). Thus, each \(\omega _i\) is a positive integer. Let \(\omega _0=m_0'=0\) and \( \varvec{\omega }=(\omega _1,\ldots ,\omega _p)\), and denote by \(\mathbf {m}(\varvec{\omega })\) the \(d\)-tuple

Denote by \(\mathrm {S}^{\varvec{\omega }}(\mathbb {F})\subset \mathbb {F}^{\mathbf {m}(\varvec{\omega })}\) the subspace of tensors that are partially symmetric with respect to the partition \(\varvec{\omega }\). That is, the entries of \(\mathcal {T}=[t_{i_1,\ldots ,i_d}]\in \mathrm {S}^{\varvec{\omega }}(\mathbb {F})\) are invariant if we permute indices within the \(k\)th group of indices \([\sum _{j=0}^k \omega _j]\setminus [\sum _{j=0}^{k-1} \omega _j]\) for \(k\in [p]\). Note that \(\mathrm {S}^{\varvec{\omega }}(\mathbb {F})=\mathrm {S}^d(\mathbb {F}^{m_1'})\) for \(p=1\) and \(\mathrm {S}^{\varvec{\omega }}(\mathbb {F})=\mathbb {F}^{\mathbf {m}(\varvec{\omega })}\) for \(p=d\). We call \(\varvec{\omega }=(1,\ldots ,1)\), i.e., \(p=d\), a trivial partition.

For simplicity of notation we let \(\mathrm {S}^{\varvec{\omega }}:=\mathrm {S}^{\varvec{\omega }}(\mathbb {C})\). Assume that \(\mathcal {T}\in \mathrm {S}^{\varvec{\omega }}\). Consider a singular vector tuple \(([\mathbf {x}_1],\ldots ,[\mathbf {x}_d])\) satisfying (1.2) and \(\varvec{\omega }\)-symmetric conditions

We rewrite (1.2) for an \(\varvec{\omega }\)-symmetric singular vector tuple \(([\mathbf {x}_1],\ldots ,[\mathbf {x}_d])\) as follows. Define

Hence, the equations for an \(\varvec{\omega }\)-symmetric singular vector tuple of \(\mathcal {T}\in \mathrm {S}^{\varvec{\omega }}\) are given by

In view of the definition of \(\otimes _{l\in [p]} (\otimes ^{\omega _l-\delta _{lk}} \mathbf {z}_l)\), we agree that the contraction on the left-hand side of (4.7) is done on all indices except the index \(1+\sum _{i=0}^{k-1} \omega _i\), the first index of the \(k\)th group. As for the \(d\)-cube tensor, system (4.7) makes sense for any \(\mathcal {T}\in \mathbb {C}^{\mathbf {m}(\varvec{\omega })}\).

Let \(\mathbf {m}':=(m_1',\ldots ,m_p')\). We call \(([\mathbf {z}_1],\ldots ,[\mathbf {z}_p])\in \Pi (\mathbf {m}')\) satisfying (4.7), a \(\varvec{\omega }\)-symmetric singular vector tuple of \(\mathcal {T}\in \mathbb {C}^{\mathbf {m}(\varvec{\omega })}\). We say that \(([\mathbf {z}_1],\ldots ,[\mathbf {z}_p])\) corresponds to a zero (nonzero) singular value if \(\prod _{i=1}^ p \lambda _i=0 \; (\ne 0)\).

The aim of this section is to generalize Theorem 1 to tensors in \(\mathrm {S}^{\varvec{\omega }}\).

Theorem 12

Let \(d\ge 3\) be an integer, and assume that \(\varvec{\omega }=(\omega _1,\ldots ,\omega _p)\) is a partition of \(d\). Let \(\mathbf {m}(\varvec{\omega })\) be defined by (4.6). Denote by \(\mathrm {S}^{\varvec{\omega }}\subset \mathbb {C}^{\mathbf {m}(\varvec{\omega })}\) the subspace of tensors partially symmetric with respect to \(\varvec{\omega }\). Let \(c(\mathbf {m}',\varvec{\omega })\) be the coefficient of the monomial \(\prod _{i=1}^ p t_i^{m_i'-1}\) in the polynomial

A generic \(\mathcal {T}\in \mathrm {S}^{\varvec{\omega }}\) has exactly \(c(\mathbf {m}',\varvec{\omega })\) simple \(\varvec{\omega }\)-symmetric singular vector tuples that correspond to nonzero singular values. A generic \(\mathcal {T}\in \mathrm {S}^{\varvec{\omega }}\) does not have a zero singular value. In particular, a generic real-valued tensor \(\mathcal {T}\in \mathrm {S}^{\varvec{\omega }}_{\mathbb {R}}\) has at most \(c(\mathbf {m}',\varvec{\omega })\) real \(\varvec{\omega }\)-symmetric singular vector tuples, and all of them are simple.

Proof

The proof of this theorem is analogous to the proof of Theorem 1, so we point out briefly the needed modifications. Let \(H(m_i')\), \(Q(m_i')\), and \(F(m_i')\) be the vector bundles defined in §2.4. Let \(\pi _i\) be the projection of \(\Pi (\mathbf {m}')\) on the component \(\mathbb {P}(\mathbb {C}^{m_i'})\). Then \(\pi _i^* H(m_i'), \pi _i^* Q(m_i'),\pi _i^*F(m_i')\) are the pullbacks of the vector bundles \(H(m_i'),Q(m_i'),F(m_i')\) to \(\Pi (\mathbf {m}')\), respectively. Clearly, \(c(\pi _i^* H(m_i'))=1+t_i\), and moreover \(c(\otimes ^k \pi _i^* H(m_i'))=1+kt_i\), where \(t_i^{m_i'}=0\).

We next observe that we can view \(\Pi (\mathbf {m}')\) as a submanifold of \(\Pi (\mathbf {m}(\omega ))\) using the embedding

where we assume relations (4.5). Let \(\tilde{R}(i,\mathbf {m}')\) and \(\tilde{R}(i,\mathbf {m}')'\) be the pullbacks of \(R(j,\mathbf {m}(\varvec{\omega }))\) and \(R(j,\mathbf {m}(\varvec{\omega }))'\), respectively, where \(j=1+\sum _{k=0}^{i-1} \omega _k\) [see (2.6)]. Then

Note that

As in the proof of Lemma 3 we deduce that the top Chern class of \(\tilde{R}(i,\mathbf {m}')\) is given by the polynomial

where we assume the relations \(t_i^{m_i'}=0\) for \(i\in [p]\). Use (2.2) to deduce that the top Chern number of \(\tilde{R}(\mathbf {m}')\) is \(c(\mathbf {m}',\varvec{\omega })\).

From the results of §3, in particular Lemma 9, we deduce that there exists a monomorphism \(L_i: \mathbb {C}^{\mathbf {m}(\varvec{\omega })}\rightarrow \mathrm {H}^0(\tilde{R}(i,\mathbf {m}'))\) such that \(L_i(\mathbb {C}^{\mathbf {m}(\varvec{\omega })})\) generates \(\tilde{R}(i,\mathbf {m}')\). Let \(L=(L_1,\ldots ,L_p):\oplus _{i\in [p]}\mathbb {C}^{\mathbf {m}(\varvec{\omega })}\rightarrow \mathrm {H}^0(\tilde{R}(\mathbf {m}'))\). Then \(L(\oplus _{i\in [p]}\mathbb {C}^{\mathbf {m}(\varvec{\omega })})\) generates \(\tilde{R}(\mathbf {m}')\). Let \(\delta : \mathbb {C}^{\mathbf {m}(\varvec{\omega })}\rightarrow \oplus ^p \mathbb {C}^{\mathbf {m}(\varvec{\omega })}\) be the diagonal map. We claim that \(L\circ \delta (\mathrm {S}^{\varvec{\omega }})\) almost generates \(\tilde{R}(\mathbf {m}')\).

First, we consider a special case of Lemma 8 for \(\mathcal {T}\in \mathrm {S}^{\varvec{\omega }}\). Here we assume that \(\mathbf {x}_1,\ldots ,\mathbf {x}_d\) and \(\mathbf {y}_1,\ldots ,\mathbf {y}_d\) satisfy the conditions induced by the equalities (4.5):

Then all parts of the lemma need to be stated in terms of \(\mathbf {z}_1,\ldots ,\mathbf {z}_p\) and \(\mathbf {w}_1,\ldots ,\mathbf {w}_p\). Second, we restate Lemma 9 for \(\mathcal {T}\in \mathrm {S}^{\varvec{\omega }}\) and for \(\mathbf {x}_1,\ldots ,\mathbf {x}_d\) and \(\mathbf {y}_1,\ldots ,\mathbf {y}_d\) of the preceding form. Third, let \(Y_\alpha \subsetneq \Pi (\mathbf {m}')\), where \(\alpha \) ranges over the nonempty subsets of \([p]\), be the varieties defined as in the proof of Theorem 1. The proof of Theorem 1 then shows that \(L\circ \delta (\mathrm {S}^{\varvec{\omega }})\) almost generates \(\tilde{R}(\mathbf {m}')\) with respect to the varieties \(Y_\alpha \). Theorem 6 yields that a generic \(\mathcal {T}\in \mathrm {S}^{\varvec{\omega }}\) has exactly \(c(\mathbf {m}',\varvec{\omega })\) simple \(\varvec{\omega }\)-symmetric singular vector tuples. The proof that a generic \(\mathcal {T}\in \mathrm {S}^{\varvec{\omega }}\) does not have a zero singular value is analogous to the one given for Theorem 1. \(\square \)

Remark 13

In the special case where \(\varvec{\omega }=(1,1,\ldots ,1)\), we have \(c(\mathbf {m}',\varvec{\omega })=c(\mathbf {m}')\), and Theorem 12 reduces to Theorem 1. In the case where \(\varvec{\omega }=(d)\), we have \(c(m,\varvec{\omega })=\frac{(d-1)^{m}-1}{d-2}\), and Theorem 12 reduces to the results in [3]. This last reduction was already performed in [20].

Lemma 14

In the case where \(\varvec{\omega }=(d-1,1)\), we have

If \(m_1\le m_2\), then we have \(c((m_1,m_2),(d-1,1))=\frac{(2d-3)^{m_1}-1}{2d-4}\).

If \(m_1=m_2+1\), then we have \(c((m_1,m_2),(d-1,1))=\frac{(2d-3)^{m_1}-1}{2d-4}-(d-1)^{m_1-1}\).
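The closed forms in Remark 13 and Lemma 14 are geometric sums, since \(\frac{q^{m}-1}{q-1}=1+q+\cdots +q^{m-1}\) with \(q=d-1\) or \(q=2d-3\). The minimal sketch below evaluates them exactly in integer arithmetic under that reading. The function names `cartwright_sturmfels` and `lemma14_count` are hypothetical, and the second function only encodes the two cases stated in Lemma 14.

```python
def cartwright_sturmfels(m: int, d: int) -> int:
    """c(m, (d)) = ((d-1)^m - 1)/(d-2), written as 1 + (d-1) + ... + (d-1)^(m-1).

    The sum form also makes sense for d = 2, where it returns m.
    """
    return sum((d - 1) ** k for k in range(m))


def lemma14_count(m1: int, m2: int, d: int) -> int:
    """c((m1, m2), (d-1, 1)) in the two cases covered by Lemma 14."""
    # ((2d-3)^m1 - 1)/(2d-4) = 1 + (2d-3) + ... + (2d-3)^(m1-1)
    base = sum((2 * d - 3) ** k for k in range(m1))
    if m1 <= m2:
        return base
    if m1 == m2 + 1:
        return base - (d - 1) ** (m1 - 1)
    raise ValueError("Lemma 14 only covers m1 <= m2 and m1 == m2 + 1")


print(cartwright_sturmfels(3, 3))  # 7: generic symmetric 3x3x3 tensor
print(lemma14_count(3, 3, 3))      # 13: generic (2,1) partially symmetric 3x3x3 tensor
```

As a sanity check, for \(d=2\) both cases of `lemma14_count` return \(\min (m_1,m_2)\), the number of singular value pairs of a generic \(m_1\times m_2\) matrix.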

We now compare our formulas for \(3\times 3\times 3\) partially symmetric tensors. First, for a generic symmetric \(3\times 3\times 3\) tensor the Cartwright–Sturmfels formula gives \(c((3),(3))= \frac{2^3-1}{3-2}=7\); that is, such a tensor has \(7\) singular vector triples of the form \(([\mathbf {x}],[\mathbf {x}],[\mathbf {x}])\). Second, for a generic \((2,1)\) partially symmetric tensor, Lemma 14 gives \(c((3,3),(2,1))=13\); that is, such a tensor has \(13\) singular vector triples of the form \(([\mathbf {x}],[\mathbf {x}],[\mathbf {y}])\). Third, for a generic \(3\times 3\times 3\) tensor our formula gives \(c(3,3,3)=c((3,3,3), (1,1,1))=37\) singular vector triples of the form \(([\mathbf {x}],[\mathbf {y}],[\mathbf {z}])\).

Now let us consider a generic symmetric \(3 \times 3 \times 3\) tensor and estimate its total number of singular vector triples, assuming that it behaves both like a generic partially symmetric tensor and like a generic nonsymmetric one. First, it has \(7\) singular vector triples of the form \(([\mathbf {x}],[\mathbf {x}],[\mathbf {x}])\). Second, each of the \(13-7=6\) triples of the form \(([\mathbf {x}],[\mathbf {x}],[\mathbf {y}])\) with \([\mathbf {x}]\ne [\mathbf {y}]\) can be placed in \(3\) ways, which gives \(3\cdot 6=18\) singular vector triples in which exactly two of the three classes coincide. Third, the remaining \(37-7-18=12\) singular vector triples \(([\mathbf {x}],[\mathbf {y}],[\mathbf {z}])\) have three distinct classes. Note also that the number \(37\) was computed, in a similar setting, in [18].
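The counts \(7\), \(13\) and \(37\), as well as the value \(240\) for \(4\times 4\times 4\) tensors used below, can be reproduced by coefficient extraction. The sketch assumes the generating-function form of Theorems 1 and 12 as we read them: \(c(\mathbf {m}',\varvec{\omega })\) is the coefficient of \(\prod _i t_i^{m_i-1}\) in \(\prod _i \frac{\hat{t}_i^{m_i}-t_i^{m_i}}{\hat{t}_i-t_i}\) with \(\hat{t}_i=\sum _j\omega _jt_j-t_i\), and \(\varvec{\omega }=(1,\ldots ,1)\) for Theorem 1. Polynomials are stored as dictionaries from exponent tuples to integers; all names are hypothetical.

```python
from collections import defaultdict


def _mul(p, q, cap):
    """Multiply polynomials stored as {exponent tuple: int}.

    Monomials whose exponent exceeds `cap` in some variable are dropped: exponents
    only grow under multiplication, so they can never reach the target monomial.
    """
    out = defaultdict(int)
    for e1, c1 in p.items():
        for e2, c2 in q.items():
            e = tuple(a + b for a, b in zip(e1, e2))
            if all(a <= k for a, k in zip(e, cap)):
                out[e] += c1 * c2
    return {e: c for e, c in out.items() if c}


def _pow(p, n, cap):
    result = {tuple(0 for _ in cap): 1}
    for _ in range(n):
        result = _mul(result, p, cap)
    return result


def count_singular_tuples(m, omega=None):
    """Coefficient of prod_i t_i^(m_i - 1) in prod_i (h_i^m_i - t_i^m_i)/(h_i - t_i),
    where h_i = sum_j omega_j t_j - t_i; omega defaults to (1, ..., 1)."""
    p = len(m)
    omega = tuple(omega) if omega is not None else (1,) * p
    cap = tuple(mi - 1 for mi in m)

    def unit(i):
        return tuple(1 if j == i else 0 for j in range(p))

    total = {tuple(0 for _ in cap): 1}
    for i, mi in enumerate(m):
        t_i = {unit(i): 1}
        h_i = {unit(j): omega[j] - (j == i) for j in range(p) if omega[j] - (j == i)}
        factor = defaultdict(int)
        # (h^n - t^n)/(h - t) = sum_{k=0}^{n-1} h^k t^(n-1-k), a genuine polynomial
        for k in range(mi):
            for e, c in _mul(_pow(h_i, k, cap), _pow(t_i, mi - 1 - k, cap), cap).items():
                factor[e] += c
        total = _mul(total, dict(factor), cap)
    return total.get(cap, 0)


print(count_singular_tuples((3,), (3,)))      # 7
print(count_singular_tuples((3, 3), (2, 1)))  # 13
print(count_singular_tuples((3, 3, 3)))       # 37
```

With these values the bookkeeping for a symmetric tensor reads \(7+3\cdot (13-7)+12=37\). Truncating monomials early keeps the dictionaries small, which is enough for the small formats discussed here.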

The previously discussed situation indeed occurs for the diagonal tensor \(\mathcal {T}=[\delta _{i_1i_2}\delta _{i_2i_3}]\in \mathbb {C}^{3\times 3\times 3}\).

We list in Table 2 the singular vector triples of this tensor \(\mathcal {T}\). The first 7 singular vector triples have equal entries, and they are the ones counted by the formula in [3]. The first \(7+6=13\) singular vector triples have the form \(([\mathbf {x}],[\mathbf {x}],[\mathbf {y}])\). Each of the \(6\) triples of this form with \([\mathbf {x}]\ne [\mathbf {y}]\) gives \(3\) singular vector triples \(([\mathbf {x}],[\mathbf {x}],[\mathbf {y}])\), \(([\mathbf {x}],[\mathbf {y}],[\mathbf {x}])\), \(([\mathbf {y}],[\mathbf {x}],[\mathbf {x}])\). Note that six singular vector triples have zero singular value; this does not happen in the generic case, since for a generic tensor all \(37\) singular vector triples correspond to nonzero singular values.
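Three of the counts quoted for this diagonal tensor can be confirmed by brute force. For \(\mathcal {T}=[\delta _{i_1i_2}\delta _{i_2i_3}]\), contracting in two modes gives the entrywise product, and we use the condition \(\mathcal {T}\times \otimes _{j\ne i}\mathbf {x}_j\in \mathrm {span}(\mathbf {x}_i)\) for each mode. The comments explain why, for these three families, representatives with entries in \(\{-1,0,1\}\) suffice. This is a minimal sketch; all helper names are hypothetical.

```python
from itertools import product


def representatives(m):
    """Projective representatives in {-1, 0, 1}^m: nonzero, first nonzero entry 1."""
    reps = []
    for v in product((-1, 0, 1), repeat=m):
        nonzero = [a for a in v if a]
        if nonzero and nonzero[0] == 1:
            reps.append(v)
    return reps


def hadamard(u, v):
    # Contracting the diagonal tensor with u and v in two modes gives u o v.
    return tuple(a * b for a, b in zip(u, v))


def in_span(u, v):
    """True if u is a multiple of the nonzero vector v (u = 0 allowed)."""
    n = len(v)
    return all(u[i] * v[j] == u[j] * v[i] for i in range(n) for j in range(i + 1, n))


def is_singular_triple(x, y, z):
    return (in_span(hadamard(y, z), x) and in_span(hadamard(x, z), y)
            and in_span(hadamard(x, y), z))


R = representatives(3)
# Equal classes: x o x in span(x) forces x_i in {0, c}, i.e. a 0/1 vector.
equal = [x for x in R if is_singular_triple(x, x, x)]
# Exactly two equal classes: y ~ x o x and x_i^3 ~ x_i force x_i in {0, c, -c}.
two_equal = [t for t in product(R, repeat=3)
             if len(set(t)) == 2 and is_singular_triple(*t)]
# All contractions vanish: pairwise disjoint supports, i.e. distinct e_i.
zero = [t for t in product(R, repeat=3)
        if not any(hadamard(t[1], t[2])) and not any(hadamard(t[0], t[2]))
        and not any(hadamard(t[0], t[1]))]
print(len(equal), len(two_equal), len(zero))  # 7 18 6
```

The search does not attempt to find all \(37\) triples; it only checks the three families whose entries are forced into \(\{-1,0,1\}\) up to scaling.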

In the case of \(4\times 4\times 4\) tensors, the diagonal tensor has \(156\) singular vector triples corresponding to a nonzero singular value and infinitely many singular vector triples corresponding to zero singular values. These infinitely many singular vector triples fill exactly \(36\) projective lines in the Segre product \(\mathbb {P}(\mathbb {C}^4)\times \mathbb {P}(\mathbb {C}^4)\times \mathbb {P}(\mathbb {C}^4)\), which “count” in this case for the remaining \(240-156=84\) singular vector triples.

5 A Homogeneous Pencil Eigenvalue Problem

For \(\mathbf {x}=(x_1,\ldots ,x_m)^\top \in \mathbb {C}^m\) denote \(\mathbf {x}^{\circ (d-1)}:=(x_1^{d-1},\ldots ,x_m^{d-1})^\top \). Let \(\mathcal {T}\in \mathbb {C}^{m^{\times d}}\). The eigenvalues of \(\mathcal {T}\) satisfying (4.1) are called \(E\)-eigenvalues in [22]. The homogeneous eigenvalue problem introduced in [16, 17, 21], whose eigenvalues are sometimes referred to as \(N\)-eigenvalues, is
\[\mathcal {T}\times \otimes ^{d-1}\mathbf {x}=\lambda \mathbf {x}^{\circ (d-1)}.\tag{5.1}\]

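Let \(\mathcal {S}\in \mathbb {C}^{m^{\times d}}\). Then a generalized homogeneous pencil eigenvalue problem of degree \(d-1\) is
\[\mathcal {T}\times \otimes ^{d-1}\mathbf {x}=\lambda \mathcal {S}\times \otimes ^{d-1}\mathbf {x}.\tag{5.2}\]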

For \(d=2\) the preceding homogeneous system is the standard eigenvalue problem for a pencil of matrices \(\mathcal {T}-\lambda \mathcal {S}\).
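For \(d=2\) the resultant of \(m\) linear forms in \(m\) variables is the determinant, so \(\mu (m,2)=m\), and the two-step procedure described below (roots of the resultant, then kernels) becomes the usual generalized eigenvalue problem. The sketch assumes NumPy and a nonsingular \(\mathcal {S}\), in which case \(\mathcal {T}\mathbf {x}=\lambda \mathcal {S}\mathbf {x}\) reduces to the ordinary eigenproblem for \(\mathcal {S}^{-1}\mathcal {T}\). The helper name and the random test matrices are illustrative only.

```python
import numpy as np


def matrix_pencil_eigenpairs(T, S):
    """d = 2: solve T x = lam S x for nonsingular S via the eigenproblem of S^{-1} T.

    Step 1 of the general procedure: the eigenvalues are the m roots of
    det(lam S - T), whose leading coefficient det(S) is nonzero.
    Step 2: each column of X spans the kernel of lam S - T.
    """
    lam, X = np.linalg.eig(np.linalg.solve(S, T))
    return lam, X


rng = np.random.default_rng(0)
m = 4
T = rng.standard_normal((m, m)) + 1j * rng.standard_normal((m, m))
S = rng.standard_normal((m, m)) + 1j * rng.standard_normal((m, m))
lam, X = matrix_pencil_eigenpairs(T, S)
residuals = [np.linalg.norm((l * S - T) @ X[:, k]) for k, l in enumerate(lam)]
print(len(lam), max(residuals))
```

For generic complex \(\mathcal {S},\mathcal {T}\) the \(m\) eigenvalues are distinct, each with a one-dimensional kernel, which is the \(d=2\) instance of Theorem 15.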

A tensor \(\mathcal {S}\) is called singular if the system
\[\mathcal {S}\times \otimes ^{d-1}\mathbf {x}=\mathbf {0}\tag{5.3}\]

has a nontrivial solution. Otherwise, \(\mathcal {S}\) is called nonsingular. It is easy to give an example of a symmetric nonsingular \(\mathcal {S}\) [7]. Let \(\mathbf {w}_1,\ldots ,\mathbf {w}_m\) be linearly independent in \(\mathbb {C}^m\). Then \(\mathcal {S}=\sum _{i=1}^m \otimes ^d\mathbf {w}_i\) is nonsingular. Indeed, \(\mathcal {S}\times \otimes ^{d-1}\mathbf {x}=\sum _{i=1}^m(\mathbf {w}_i^\top \mathbf {x})^{d-1}\mathbf {w}_i\), and by the linear independence of \(\mathbf {w}_1,\ldots ,\mathbf {w}_m\) this vector vanishes only if \(\mathbf {w}_i^\top \mathbf {x}=0\) for all \(i\in [m]\), i.e., only if \(\mathbf {x}=\mathbf {0}\). The set of singular tensors in \(\mathbb {C}^{m^{\times d}}\) is the zero set of a multidimensional resultant [9, Chapter 13], which can be obtained by elimination of variables. Denote by \(\mathrm {res}_{m,d}\in \mathbb {C}[\mathbb {C}^{m^{\times d}}]\) the multidimensional resultant corresponding to system (5.3); it is a homogeneous polynomial in the entries of \(\mathcal {S}\) of degree \(\mu (m,d)=m(d-1)^{m-1}\); see formula (2.12) of [9, Chapter 9]. Denote by \(Z(\mathrm {res}_{m,d})\) the zero set of \(\mathrm {res}_{m,d}\). Then \(\mathrm {res}_{m,d}\) is an irreducible polynomial, and system (5.3) has a nonzero solution if and only if \(\mathrm {res}_{m,d}(\mathcal {S})=0\). Furthermore, for a generic point \(\mathcal {S}\in Z(\mathrm {res}_{m,d})\), system (5.3) has exactly one simple solution in \(\mathbb {P}(\mathbb {C}^m)\).

The eigenvalue problem (5.2) is solved in two steps. First, find all \(\lambda \) satisfying \(\mathrm {res}_{m,d}(\lambda \mathcal {S}-\mathcal {T})=0\). Clearly, \(\mathrm {res}_{m,d}(\lambda \mathcal {S}-\mathcal {T})\) is a polynomial in \(\lambda \) of degree at most \(\mu (m,d)\). (This polynomial may vanish identically; this is the case when the system \(\mathcal {S}\times \otimes ^{d-1}\mathbf {x}=\mathcal {T}\times \otimes ^{d-1}\mathbf {x}=\mathbf {0}\) has a nontrivial solution.) Second, find the nonzero solutions of the system \((\lambda \mathcal {S}-\mathcal {T})\times \otimes ^{d-1}\mathbf {x}=\mathbf {0}\), which are viewed as eigenvectors in \(\mathbb {P}(\mathbb {C}^m)\). Assume that \(\mathcal {S}\) is nonsingular.
Then \(\mathrm {res}_{m,d}(\lambda \mathcal {S}-\mathcal {T})=\mathrm {res}_{m,d}(\mathcal {S})\lambda ^{\mu (m,d)}+\) a polynomial in \(\lambda \) of degree at most \(\mu (m,d)-1\). The following theorem, known to experts, states that for generic \(\mathcal {S},\mathcal {T}\) each eigenvalue \(\lambda \) of the system \((\lambda \mathcal {S}-\mathcal {T})\times \otimes ^{d-1}\mathbf {x}=\mathbf {0}\) has exactly one corresponding eigenvector in \(\mathbb {P}(\mathbb {C}^m)\). We outline a short proof, which essentially uses only the existence of the resultant for system (5.3). For the identity tensor \(\mathcal {S}\), i.e., problem (5.1), see [21].
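The identity \(\mathcal {S}\times \otimes ^{d-1}\mathbf {x}=\sum _i(\mathbf {w}_i^\top \mathbf {x})^{d-1}\mathbf {w}_i\) behind the nonsingular example \(\mathcal {S}=\sum _{i=1}^m\otimes ^d\mathbf {w}_i\) can be checked numerically. The sketch assumes NumPy; `contract` (contraction of modes \(2,\ldots ,d\) with \(\mathbf {x}\), bilinear, no conjugation) and `rank_one_sum` are hypothetical helper names.

```python
import numpy as np


def contract(S, x):
    """S x (x)^{d-1}: contract modes 2, ..., d of S with x (bilinear, no conjugation)."""
    v = S
    while v.ndim > 1:
        v = np.tensordot(v, x, axes=([v.ndim - 1], [0]))
    return v


def rank_one_sum(W, d):
    """S = sum_i w_i (x) ... (x) w_i (d factors), w_i being the columns of W."""
    m, r = W.shape
    S = np.zeros((m,) * d, dtype=W.dtype)
    for i in range(r):
        term = W[:, i]
        for _ in range(d - 1):
            term = np.multiply.outer(term, W[:, i])
        S = S + term
    return S


rng = np.random.default_rng(1)
m, d = 3, 4
W = rng.standard_normal((m, m)) + 1j * rng.standard_normal((m, m))  # invertible a.s.
x = rng.standard_normal(m) + 1j * rng.standard_normal(m)
S = rank_one_sum(W, d)
lhs = contract(S, x)
rhs = W @ ((W.T @ x) ** (d - 1))  # sum_i (w_i^T x)^{d-1} w_i
print(np.allclose(lhs, rhs))
```

Since \(W\) is invertible, `rhs` vanishes only when `W.T @ x` does, i.e. only for \(\mathbf {x}=\mathbf {0}\).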

Theorem 15

Let \(\mathcal {S},\mathcal {T}\in \mathbb {C}^{m^{\times d}}\), and assume that \(\mathcal {S}\) is nonsingular. Then \(\mathrm {res}_{m,d}(\lambda \mathcal {S}-\mathcal {T})\) is a polynomial in \(\lambda \) of degree \(m(d-1)^{m-1}\). For generic \(\mathcal {S}\) and \(\mathcal {T}\), each eigenvalue \(\lambda \) of the pencil (5.2) has exactly one corresponding eigenvector in \(\mathbb {P}(\mathbb {C}^m)\).

Proof

Consider the space \(\mathbb {P}(\mathbb {C}^2)\times \mathbb {P}(\mathbb {C}^{m^{\times d}}\times \mathbb {C}^{m^{\times d}})\times \mathbb {P}(\mathbb {C}^m)\) with the local coordinates \(((u,v),(\mathcal {S},\mathcal {T}),\mathbf {x})\). Consider the system of \(m\) equations, homogeneous in each of \((u,v)\), \((\mathcal {S},\mathcal {T})\), and \(\mathbf {x}\), given by
\[(u\mathcal {S}-v\mathcal {T})\times \otimes ^{d-1}\mathbf {x}=\mathbf {0},\tag{5.4}\]
and denote by \(V(m,d)\) the variety of its solutions.

The existence of the multidimensional resultant is equivalent to the assumption that the preceding variety \(V(m,d)\) is an irreducible variety of dimension \(2m^d-1\) in \(\mathbb {P}(\mathbb {C}^2)\times \mathbb {P}(\mathbb {C}^{m^{\times d}}\times \mathbb {C}^{m^{\times d}})\times \mathbb {P}(\mathbb {C}^m)\). Thus, it is enough to find a good point \((\mathcal {S}_0,\mathcal {T}_0)\) whose fiber in \(V(m,d)\) consists of exactly \(\mu (m,d)=m(d-1)^{m-1}\) smooth points \(((u_i,v_i), (\mathcal {S}_0,\mathcal {T}_0), \mathbf {x}_i)\).

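We call \(\mathcal {T}=[t_{i_1,\ldots ,i_d}]\in \mathbb {C}^{m^{\times d}}\) an almost diagonal tensor if \(t_{i_1,\ldots ,i_d}=0\) whenever \(i_p\ne i_q\) for some \(1<p<q\le d\). An almost diagonal tensor \(\mathcal {T}\) is represented by the matrix \(B=[b_{ij}]\in \mathbb {C}^{m\times m}\), where \(t_{i,j,\ldots ,j}=b_{ij}\); then \(\mathcal {T}\times \otimes ^{d-1}\mathbf {x}=B\mathbf {x}^{\circ (d-1)}\). Assume now that \(\mathcal {S}_0,\mathcal {T}_0\) are almost diagonal tensors represented by the matrices \(A,B\), respectively. Then system (5.4) with \((\mathcal {S},\mathcal {T})=(\mathcal {S}_0,\mathcal {T}_0)\) becomes
\[(uA-vB)\mathbf {x}^{\circ (d-1)}=\mathbf {0}.\tag{5.5}\]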

Assume furthermore that \(A=I\) and that \(B\) is the cyclic permutation matrix, i.e., \(B(x_1,\ldots ,x_m)^\top =(x_2,\ldots ,x_m,x_1)^\top \). Then \(B\) has \(m\) distinct eigenvalues, the \(m\)th roots of unity, and \(\mathbf {x}\) is an eigenvector of (5.5) if and only if \(\mathbf {x}^{\circ (d-1)}\) is an eigenvector of \(B\). Fix an eigenvalue \(\lambda \) of \(B\). Since every eigenvector of \(B\) has nonzero entries, we can set \(x_1=1\); then \(x_2^{d-1},\ldots ,x_m^{d-1}\) are uniquely determined and nonzero, so each of \(x_2,\ldots ,x_m\) can be chosen in \(d-1\) ways. Hence we have exactly \((d-1)^{m-1}\) eigenvectors in \(\mathbb {P}(\mathbb {C}^m)\) corresponding to each eigenvalue \(\lambda \) of \(B\), and altogether \(m(d-1)^{m-1}\) distinct eigenvectors. It remains to show that each point \(((u_i,v_i),(\mathcal {S}_0,\mathcal {T}_0),\mathbf {x}_i)\) is a simple point of \(V(m,d)\), i.e., that the Jacobian of system (5.4) has rank \(m\), the maximal possible rank, at each such point. Setting \(u_i=\lambda _i\), \(v_i=1\), \(x_1=1\), this follows easily from the fact that each eigenvalue of \(B\) is simple. Hence, the projection of \(V(m,d)\) on \(\mathbb {P}(\mathbb {C}^{m^{\times d}}\times \mathbb {C}^{m^{\times d}})\) is \(m(d-1)^{m-1}\)-valued.

Note that in this example each eigenvalue \(\lambda \) of (5.5) is of multiplicity \((d-1)^{m-1}\). It remains to show that when we consider the pair \(\mathcal {S}_0,\mathcal {T}\), where \(\mathcal {T}\) varies in a neighborhood of \(\mathcal {T}_0\), we obtain \(m(d-1)^{m-1}\) distinct eigenvalues. Since the Jacobian of system (5.5) has rank \(m\) at each eigenvalue \(\lambda _i=\frac{u_i}{v_i}\) and the corresponding eigenvector \(\mathbf {x}_i\), the implicit function theorem yields a simple variation formula for each \(\delta \lambda _i\). Set \(x_1=1\) and denote \(\mathbf {F}(\mathbf {x},\lambda ,\mathcal {T})=(F_1,\ldots ,F_m):=(\lambda \mathcal {S}_0-\mathcal {T})\times \otimes ^{d-1}\mathbf {x}\). Thus, for fixed \(\mathcal {T}\) we have the system of \(m\) equations \(\mathbf {F}(\mathbf {x},\lambda ,\mathcal {T})=\mathbf {0}\) in the \(m\) variables \(x_2,\ldots ,x_m, \lambda \). We let \(\mathcal {T}=\mathcal {T}_0+t\mathcal {T}_1\), and we want to find the first-order coefficient \(\alpha _i\) in \(\lambda _i(t)=\lambda _i+\alpha _i t +O(t^2)\). Correspondingly, we write \(\mathbf {x}_i(t)=\mathbf {x}_i+t\mathbf {y}_i+O(t^2)\), where \(\mathbf {y}_i=(0,y_{2,i},\ldots ,y_{m,i})^\top \). Let

The first-order computation yields the equation

Let \(\mathbf {w}=(w_1,\ldots ,w_m)^\top \) be the left eigenvector of \(B\) corresponding to \(\lambda _i\), i.e., \(\mathbf {w}^\top B=\lambda _i\mathbf {w}^\top \) normalized by the condition \(\mathbf {w}^\top (\mathcal {S}_0\times \otimes ^{d-1}\mathbf {x}_i)=(\mathcal {S}_0\times \otimes ^{d-1}\mathbf {x}_i)\times \mathbf {w}=1\). Contracting both sides of (5.6) using the vector \(\mathbf {w}\) we obtain

It is straightforward to show that \(\alpha _1,\ldots ,\alpha _{m(d-1)^{m-1}}\) are pairwise distinct for a generic \(\mathcal {T}_1\). \(\square \)
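For the reader's convenience, the first-order step of the preceding proof can be made explicit. This is a sketch under the assumptions made there (\(A=I\), \(\mathcal {T}_0\) almost diagonal with the cyclic matrix \(B\), \(\mathcal {T}=\mathcal {T}_0+t\mathcal {T}_1\), \(x_1=1\)). Since \((\lambda \mathcal {S}_0-\mathcal {T}_0)\times \otimes ^{d-1}\mathbf {x}=(\lambda I-B)\mathbf {x}^{\circ (d-1)}\), differentiating \(\mathbf {F}(\mathbf {x}_i(t),\lambda _i(t),\mathcal {T}_0+t\mathcal {T}_1)=\mathbf {0}\) at \(t=0\) gives
\[\alpha _i\,\mathbf {x}_i^{\circ (d-1)}-\mathcal {T}_1\times \otimes ^{d-1}\mathbf {x}_i+(d-1)(\lambda _iI-B)\big (\mathbf {x}_i^{\circ (d-2)}\circ \mathbf {y}_i\big )=\mathbf {0}.\]
Multiplying on the left by \(\mathbf {w}^\top \) removes the last term, because \(\mathbf {w}^\top (\lambda _iI-B)=\mathbf {0}^\top \), and the normalization \(\mathbf {w}^\top \mathbf {x}_i^{\circ (d-1)}=\mathbf {w}^\top (\mathcal {S}_0\times \otimes ^{d-1}\mathbf {x}_i)=1\) leaves
\[\alpha _i=\mathbf {w}^\top \big (\mathcal {T}_1\times \otimes ^{d-1}\mathbf {x}_i\big ).\]
This normalization is possible because \(\lambda _i\) is a simple eigenvalue of \(B\), so its left eigenvector \(\mathbf {w}\) and right eigenvector \(\mathbf {x}_i^{\circ (d-1)}\) satisfy \(\mathbf {w}^\top \mathbf {x}_i^{\circ (d-1)}\ne 0\).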

The proof of Theorem 15 yields the following corollary.

Corollary 16

Let \(\mathcal {T}\in \mathbb {C}^{m^{\times d}}\) be a generic tensor. Then the homogeneous eigenvalue problem (5.1) has exactly \(m (d-1)^{m-1}\) distinct eigenvectors in \(\mathbb {P}(\mathbb {C}^m)\), which correspond to distinct eigenvalues.
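The example in the proof of Theorem 15 can be replayed numerically. With \(A=I\) the tensor \(\mathcal {S}_0\) is the diagonal identity tensor, so (5.5) is problem (5.1) for \(\mathcal {T}_0\). The sketch below builds \(\mathcal {S}_0,\mathcal {T}_0\) as almost diagonal tensors, lists the eigenvectors described in the proof (normalized by \(x_1=1\)), and checks that they solve the system and that there are \(m(d-1)^{m-1}\) of them. Since each eigenvalue here has multiplicity \((d-1)^{m-1}\), this checks the eigenvector count only, not the distinctness of eigenvalues for a generic \(\mathcal {T}\). NumPy is assumed and all helper names are hypothetical.

```python
import cmath
import itertools

import numpy as np


def almost_diagonal(M, d):
    """Tensor with entries t[i, j, ..., j] = M[i, j] and zeros elsewhere."""
    m = M.shape[0]
    T = np.zeros((m,) * d, dtype=complex)
    for i in range(m):
        for j in range(m):
            T[(i,) + (j,) * (d - 1)] = M[i, j]
    return T


def contract(T, x):
    """T x (x)^{d-1}: contract modes 2, ..., d of T with x."""
    v = T
    while v.ndim > 1:
        v = np.tensordot(v, x, axes=([v.ndim - 1], [0]))
    return v


def cyclic_example(m, d):
    """Eigenpairs (lam, x) of (lam S0 - T0) x (x)^{d-1} = 0, A = I, B the cyclic shift."""
    S0 = almost_diagonal(np.eye(m), d)
    B = np.roll(np.eye(m), 1, axis=1)  # (B x)[i] = x[(i + 1) % m]
    T0 = almost_diagonal(B, d)
    pairs = []
    for k in range(m):
        lam = cmath.exp(2j * cmath.pi * k / m)  # an m-th root of unity
        # x^{o(d-1)} must equal (1, lam, ..., lam^(m-1)); with x[0] = 1, each x[j]
        # (0-based j >= 1) is any of the d-1 roots of lam**j.
        choices = []
        for j in range(1, m):
            r0 = (lam ** j) ** (1.0 / (d - 1))
            choices.append([r0 * cmath.exp(2j * cmath.pi * s / (d - 1)) for s in range(d - 1)])
        for tail in itertools.product(*choices):
            pairs.append((lam, np.array((1.0,) + tail, dtype=complex)))
    return S0, T0, pairs


m, d = 3, 3
S0, T0, pairs = cyclic_example(m, d)
residual = max(np.linalg.norm(contract(lam * S0 - T0, x)) for lam, x in pairs)
distinct = {tuple(np.round(x, 9)) for _, x in pairs}
print(len(pairs), len(distinct), residual)
```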

We close this section with a heuristic argument showing that a generic pencil \((\mathcal {S},\mathcal {T})\in \mathbb {P}(\mathbb {C}^{m^{\times d}}\times \mathbb {C}^{m^{\times d}})\) has \(\mu (m,d)=m(d-1)^{m-1}\) distinct eigenvalues. Let \(\mathcal {S}\in \mathbb {C}^{m^{\times d}}\) be nonsingular. Then \(\mathcal {S}\) induces a linear map \(\hat{\mathcal {S}}\) from the line bundle \(\otimes ^{d-1} T(m)\) to the trivial bundle \(\mathbb {C}^m\) over \(\mathbb {P}(\mathbb {C}^m)\) by \(\otimes ^{d-1}\mathbf {x}\mapsto \mathcal {S}\times \otimes ^{d-1}\mathbf {x}\); since \(\mathcal {S}\) is nonsingular, \(\hat{\mathcal {S}}\) is injective on each fiber. Hence we have an exact sequence of vector bundles
\[0\rightarrow \otimes ^{d-1} T(m)\xrightarrow {\hat{\mathcal {S}}}\mathbb {C}^m\rightarrow Q_{m,d}\rightarrow 0,\]

where \(Q_{m,d}=\mathbb {C}^m/(\hat{\mathcal {S}}( \otimes ^{d-1} T(m)))\). The Chern polynomial of \(Q_{m,d}\) is \(1+\sum _{i=1}^{m-1} (d-1)^i t^i \alpha ^i\). A computation similar to the one for the number of eigenvectors of (4.1) shows that the number of eigenvalues of (5.2) is the coefficient of \(t_1^{m-1}\) in the polynomial \(\frac{\hat{t}_1^{m} -\tilde{t}_1^{m}}{\hat{t}_1-\tilde{t}_1}=\sum _{k=0}^{m-1}\hat{t}_1^{k}\tilde{t}_1^{m-1-k}\), where \(\hat{t}_1=\tilde{t}_1=(d-1)t_1\). Formally, the coefficient of \(t_1^{m-1}\) is the indeterminate expression \(\frac{(d-1)^m-(d-1)^m}{(d-1)-(d-1)}\); the sum on the right, however, is well defined and equals \(m(d-1)^{m-1}\). In calculus terms, this value is the derivative of \(t^m\) at \(t=d-1\).
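The indeterminate quotient is resolved by evaluating it as the polynomial \(\sum _{k=0}^{m-1}\hat{t}_1^{k}\tilde{t}_1^{m-1-k}\) before substituting \(\hat{t}_1=\tilde{t}_1=(d-1)t_1\). A minimal sketch of that evaluation, compared with \(\mu (m,d)\) for a few small cases (the function name `heuristic_count` is hypothetical):

```python
def heuristic_count(m, d):
    """Coefficient of t^(m-1) in (a^m - b^m)/(a - b) with a = b = (d-1) t.

    The quotient equals sum_{k=0}^{m-1} a^k b^(m-1-k), so setting a = b = d - 1
    is legitimate even though the fraction itself reads 0/0.
    """
    a = b = d - 1
    return sum(a ** k * b ** (m - 1 - k) for k in range(m))


for m, d in [(2, 3), (3, 3), (4, 3), (3, 4)]:
    print(m, d, heuristic_count(m, d), m * (d - 1) ** (m - 1))
```

For \(d=2\) the value is \(m\), the number of eigenvalues of a generic \(m\times m\) matrix pencil.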

6 Uniqueness of a Best Approximation

Let \(\langle \cdot ,\cdot \rangle , \Vert \cdot \Vert \) be the standard inner product and the corresponding Euclidean norm on \(\mathbb {R}^n\). For a subspace \(\mathbf {U}\subset \mathbb {R}^n\) we denote by \(\mathbf {U}^\perp \) the subspace of all vectors orthogonal to \(\mathbf {U}\) in \(\mathbb {R}^n\). Let \(C\subsetneq \mathbb {R}^n\) be a given nonempty closed set (in Euclidean topology, see §1). For each \(\mathbf {x}\in \mathbb {R}^n\) we consider the function