Abstract

This paper addresses the application of generalized polynomials for solving nonlinear systems of fractional-order partial differential equations with initial conditions. First, the solutions are expanded by means of generalized polynomials through an operational matrix. The unknown free coefficients and control parameters of the expansion are determined by an optimization process that couples the nonlinear system of fractional-order partial differential equations with the initial conditions. Then, the method of Lagrange multipliers is adopted to convert the problem into a system of algebraic equations. The convergence of the proposed method is analyzed. Several prototype problems show the applicability of the algorithm. Comparison with approximations obtained by other techniques confirms the high accuracy and computational efficiency of the proposed approach.

1 Introduction

Fractional calculus generalizes differentiation and integration to non-integer orders (Podlubny 1999). In recent years, fractional differential equations have attracted the attention of researchers in many branches of science such as mathematics, physics, engineering, and biosciences (Miller and Ross 1993; Hilfer 2000; Zhou et al. 2015; Hassani et al. 2019, 2020; Riewe 1997; Shekari et al. 2019; Rahimkhani and Ordokhani 2020; Zhao et al. 2019). The modeling of systems with differential equations has been an important research topic. Owolabi et al. (2018) studied the analytical and numerical solutions of a three-species dynamical model using the fractional Fourier transform. Alliera and Amster (2018) used topological degree theory to prove the existence of positive periodic solutions for a system of delay differential equations. Ablinger et al. (2019) developed an algorithm to solve analytically linear systems of differential equations that factorize to first order. Sabermahani et al. (2018) proposed a set of fractional functions based on the Lagrange polynomials to solve a class of fractional differential equations. Sabermahani et al. (2020a) introduced a formulation for fractional-order general Lagrange scaling functions and employed these functions for solving fractional differential equations. Sabermahani et al. (2020b) used hybrid functions of block-pulse and fractional-order Fibonacci polynomials for obtaining the approximate solution of fractional delay differential equations. Sabermahani et al. (2020c) presented a numerical technique based on two-dimensional Müntz–Legendre hybrid functions to solve fractional-order partial differential equations in the sense of the Caputo derivative. Several other studies on systems of differential equations can be found in Schittkowski (1997), Zhou and Casas (2014), Owolabi (2018), Feng et al. (2019), and Jaros and Kusano (2014).

Partial differential equations (PDE) are used to describe different types of phenomena in biosciences (Gupta et al. 2013; Shangerganesh et al. 2019). Recently, Kumar et al. (2018) addressed initial-boundary value problems for hyperbolic PDE in the cryosurgery of lung cancer. The bio-heat transfer during cryosurgery of lung cancer was studied using the Legendre wavelet Galerkin method to convert the problem into generalized systems of Sylvester equations. Gupta et al. (2010) proposed a mathematical model for describing the process of heat transfer in biological tissues for different coordinate systems during thermal therapy. Roohi et al. (2018) found the general form of the space–time-fractional heat conduction equation with locally variable initial condition and time-dependent boundary conditions. Ganesan and Lingeshwaran (2017) proposed a finite-element scheme using the Galerkin finite-element method for computations of the cancer invasion model. Avazzadeh and Hassani (2019) applied transcendental Bernstein series as base functions to solve reaction–diffusion equations with nonlocal boundary conditions. Ravi Kanth and Garg (2020) presented a combination of the Crank–Nicolson and exponential B-spline methods for solving a class of time-fractional reaction–diffusion equations. Yang et al. (2020) considered the Crank–Nicolson orthogonal spline collocation method for the approximate solution of the variable coefficient fractional mobile–immobile equation. Liu and Wang (2019) proposed a unified size-structured PDE model for the growth of metastatic tumors, which extends a well-known coupled PDE dynamical model. Farayola et al. (2020) presented a mathematical model of a radiotherapy cancer treatment process. The model was used to simulate the fractionated treatment process of six patients. Mohammadi and Dehghan (2020) used the meshless method to numerically solve models of cancer cell invasion of tissue with and without the effect of cell–cell and cell–matrix adhesion.

In this study, we apply the generalized polynomials (GP) for solving the following nonlinear systems of fractional-order partial differential equations (NSF-PDE) (Zhao et al. 2017):

with the initial conditions:

Here, \(^C_0{D_{t}^{\alpha }}\) and \(^C_0{D_{t}^{\beta }}\) denote the Caputo fractional derivatives of orders \(\alpha \) and \(\beta \), respectively, with \(1<\alpha ,\,\beta \le 2\).

We propose an optimization process based on the GP and operational matrices of derivatives, together with the Lagrange multipliers method, to approximate the solution of the NSF-PDE (1). The algorithm transforms the problem into a system of nonlinear algebraic equations with unknown coefficients, parameters, and Lagrange multipliers. The method is illustrated by means of three examples, and the numerical approximations are compared with the analytical solutions.

The paper is organized as follows. Section 2 presents the fundamental aspects of the fractional calculus. Section 3 introduces the GP and their properties, including function approximation, convergence analysis, and the operational matrix of derivative. Section 4 develops an algorithm for the solution of Eq. (1). Section 5 analyzes three problems that illustrate the applicability and accuracy of the proposed method. Finally, Sect. 6 summarizes the conclusions.

2 Fundamental aspects

In this section, we give the definitions and mathematical concepts that are used in this paper.

Definition 1

(Dahaghin and Hassani 2017a, b; Farooq et al. 2019) A real function \(u(t),~t>0\) is said to be in the space \(C_{\rho },~\rho \in \mathbb {R}\) if there exists a real number \(p>\rho \), such that \(u(t)=t^{p}u_{1}(t)\), where \(u_{1}(t)\in C[0,\infty )\), and it is said to be in the space \(C_{\rho }^{n}\) if \(u^{(n)}\in C_{\rho },~n\in \mathbb {N}\).

Definition 2

(Dahaghin and Hassani 2017a, b; Farooq et al. 2019) The fractional derivative of order \(\alpha >0 \), of the Caputo type, is defined as:

where n is a natural number, \(t>0\), and \(u\in C_{1}^{n}\).

From the definitions of the fractional derivatives of the Caputo type, it is straightforward to conclude that:

where \(n-1<\alpha \le n\).
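As an illustration of this property, the following Python sketch evaluates the Caputo derivative of a power function \(t^{p}\) using the standard rule from the fractional calculus literature (Podlubny 1999): the derivative vanishes for integer powers \(p<n\) and equals \(\frac{\varGamma (p+1)}{\varGamma (p+1-\alpha )}t^{p-\alpha }\) for \(p>n-1\). The function name `caputo_power` is hypothetical and is introduced only for this example.

```python
import math


def caputo_power(p, alpha, t):
    """Caputo derivative of order alpha of t**p, evaluated at t > 0.

    Uses the standard power rule with n = ceil(alpha):
      * 0                                          if p is an integer with 0 <= p < n
      * Gamma(p+1) / Gamma(p+1-alpha) * t**(p-alpha)  if p > n - 1
    Other powers are outside the scope of this sketch.
    """
    n = math.ceil(alpha)
    if float(p).is_integer() and 0 <= p < n:
        return 0.0
    if p <= n - 1:
        raise ValueError("power rule requires p > n - 1 or integer p < n")
    return math.gamma(p + 1) / math.gamma(p + 1 - alpha) * t ** (p - alpha)


# For alpha = 2 the rule reduces to the ordinary second derivative: (t^3)'' = 6 t.
print(caputo_power(3, 2.0, 2.0))
# A genuinely fractional order, 1 < alpha <= 2, applied to t^2.
print(caputo_power(2, 1.5, 1.0))
```

For integer \(\alpha \) the result coincides with the classical derivative, which gives a quick sanity check of the formula.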

3 Operational matrices and analysis of the proposed method

This section introduces the GP from the perspective of their application as a class of basis functions. Furthermore, the operational matrices of fractional derivatives of the GP in the Caputo sense, function approximation, and the convergence analysis are also presented.

3.1 GP and operational matrices of GP

To solve the NSF-PDE, we introduce the GP class of basis functions. Let us define the GP of degree m as follows:

where the symbol \(k_{i}\) denotes control parameters. The expansions of given functions u(x, t) and v(x, t) in terms of GP can be represented with the following matrices:

where \(\mathscr {A}=[a_{ij}]\) and \(\mathscr {B}=[b_{ij}]\) are \((m_{1}+1)\times (m_{2}+1)\) and \((n_{1}+1)\times (n_{2}+1)\) unknown matrices of free coefficients, respectively, which must be computed, and \(\mathscr {D}_{m_{1}}(x)\), \(\mathscr {F}_{m_{2}}(t)\), \(\mathscr {H}_{n_{1}}(x)\), and \(\mathscr {Z}_{n_{2}}(t)\) are vectors defined as:

where \(k_{i}\), \(s_{j}\), \(r_{i}\), and \(l_{j}\) are control parameters.
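The matrix form of the expansion can be sketched in a few lines of Python. The sketch assumes that the \(i\)-th GP basis function has the form \(x^{i+k_{i}}\) (and \(t^{j+s_{j}}\) in time), which is consistent with the generalized polynomial used in the proof of Theorem 1; the function names `gp_vector` and `gp_expand` are hypothetical.

```python
def gp_vector(x, shifts):
    """Return the basis vector [x**(i + shifts[i]) for i = 0..m].

    Assumption: the i-th generalized polynomial is x**(i + k_i), where
    shifts[i] plays the role of the control parameter k_i.
    """
    return [x ** (i + k) for i, k in enumerate(shifts)]


def gp_expand(coeffs, x, t, k, s):
    """Evaluate the bilinear form D(x)^T * A * F(t) with A = coeffs."""
    dx = gp_vector(x, k)
    ft = gp_vector(t, s)
    return sum(coeffs[i][j] * dx[i] * ft[j]
               for i in range(len(dx))
               for j in range(len(ft)))


# With all control parameters equal to zero the GP reduce to ordinary
# monomials, so this is the polynomial 1 + 2t + 3x + 4xt at (2, 3).
A = [[1.0, 2.0], [3.0, 4.0]]
print(gp_expand(A, 2.0, 3.0, [0.0, 0.0], [0.0, 0.0]))
```

Setting the control parameters to zero recovers a standard polynomial expansion, so the control parameters can be read as extra degrees of freedom in the exponents.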

The fractional derivatives of orders \(\,1<\alpha ,\,\beta \le 2\,\) of \(\,\mathscr {D}_{m_{1}}(x)\,\) and \(\,\mathscr {Z}_{n_{2}}(t)\,\) can be written as:

where \(\,\mathcal {D}^{(\alpha )}_{x}\,\) and \(\,\mathcal {D}^{(\beta )}_{t}\,\) denote the \(\,(m_{1}+1)\times (m_{1}+1)\,\) and \(\,(n_{2}+1)\times (n_{2}+1)\,\) operational matrices of fractional derivatives, respectively, which are defined by:

The first-order derivatives of \(\,\mathscr {D}_{m_{1}}(x)\,\) and \(\,\mathscr {Z}_{n_{2}}(t)\,\) are given by:

where \(\mathcal {D}^{(1)}_{x}\) and \(\mathcal {D}^{(1)}_{t}\) denote the following \(\,(m_{1}+1)\times (m_{1}+1)\,\) and \(\,(n_{2}+1)\times (n_{2}+1)\,\) operational matrices of derivatives:
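To make the action of such operational matrices concrete, the Python sketch below builds a diagonal matrix of Caputo coefficients for a basis of the assumed form \(x^{i+k_{i}}\) and applies it as \(x^{-\alpha }\) times the matrix times the basis vector. This is an illustration under that assumption, built on the standard power rule for the Caputo derivative, and is not a transcription of the matrices in this section; the names `caputo_coeff`, `operational_matrix`, and `apply_derivative` are hypothetical.

```python
import math


def caputo_coeff(q, alpha):
    """Coefficient c such that the Caputo derivative of x**q is c * x**(q - alpha)."""
    n = math.ceil(alpha)
    if float(q).is_integer() and 0 <= q < n:
        return 0.0  # low-order integer powers are annihilated
    if q <= n - 1:
        raise ValueError("exponent outside the scope of this sketch")
    return math.gamma(q + 1) / math.gamma(q + 1 - alpha)


def operational_matrix(shifts, alpha):
    """Diagonal matrix of Caputo coefficients for the basis x**(i + shifts[i])."""
    size = len(shifts)
    return [[caputo_coeff(i + shifts[i], alpha) if i == j else 0.0
             for j in range(size)]
            for i in range(size)]


def apply_derivative(x, shifts, alpha):
    """Derivative of the basis vector at x > 0: x**(-alpha) * M * basis(x)."""
    basis = [x ** (i + k) for i, k in enumerate(shifts)]
    matrix = operational_matrix(shifts, alpha)
    scale = x ** (-alpha)
    return [scale * sum(matrix[i][j] * basis[j] for j in range(len(basis)))
            for i in range(len(basis))]


# alpha = 2 with zero control parameters: second derivatives of 1, x, x^2, x^3 at x = 2.
print(apply_derivative(2.0, [0.0, 0.0, 0.0, 0.0], 2.0))
```

Because each basis function is a single power, the matrix is diagonal in this sketch; the derivative of the whole expansion is then obtained by inserting the matrix between the coefficient matrix and the basis vector.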

3.2 Function approximation

Let \(X=L^{2}([0,1]\times [0,1])\) and \(Y=\left\langle x^{\beta _{i}}t^{\gamma _{j}};\,\ 0\le i\le m_{1},\,\ 0\le j\le m_2\right\rangle \). Then, Y is a finite-dimensional vector subspace of \(X\,\left( \mathrm{{dim}} Y \le (m_1+1)(m_2+1)<\infty \right) \) and each \(\tilde{v}=\tilde{v}(x,t)\in X\) has a unique best approximation \(v_0=v_0(x,t)\in Y\), so that:

For more details, see Theorem 6.1-1 of Kreyszig (1978). Since \(v_0\in Y\) and Y is a finite-dimensional vector subspace of X, by an elementary argument in linear algebra, there exist unique coefficients \(b_{ij} \in \mathbb {R}\), such that the dependent variable \(v_0(x,t)\) may be expanded in terms of the GP as:

where \(\mathscr {H}_{n_{1}}(x)\) and \(\mathscr {Z}_{n_{2}}(t)\) are defined in Eq. (8).

3.3 Convergence analysis

In the following, we present a theorem that ensures the existence of a GP approximating an arbitrary continuous function.

Theorem 1

Let \(f:[0,1]\times [0,1]\rightarrow \mathbb {R}\) be a continuous function. Then, for every \(x,t\in [0,1]\) and \(\epsilon >0\), there exists a generalized polynomial \(\mathcal{{Q}}_{m_1,m_2}(x,t)\), such that:

Proof

Let \(\epsilon >0\) be arbitrarily chosen. In view of the Weierstrass approximation theorem (Kreyszig 1978), there exists a polynomial \(P_{m_1,m_2}(x,t)=\sum ^{m_1}_{i=0}\sum ^{m_2}_{j=0}a_{i,j}x^it^j\), \(x,t\in [0,1]\) and \(a_{i,j}\in \mathbb {R}\), such that:

We construct a GP, \(\mathcal{{Q}}_{m_1,m_2}(x,t)\), as follows:

We first notice that for the case of \(x=0\) and \(t=0\), by setting \(k_0=0\), \(s_0=0\) and \(\mathcal{{Q}}_{m_1,m_2}(x,t)=a_{0,0}\), the conclusion follows immediately. Now, we proceed to the case of \(x,t\in (0,1]\). We consider two sequences \(\{k_{i,n}\}_{n\in \mathbb {N}}\) and \(\{s_{j,n}\}_{n\in \mathbb {N}}\) of real numbers, such that \(k_{i,n}\rightarrow 0\) as \(n\rightarrow \infty \), and \(s_{j,n}\rightarrow 0\) as \(n\rightarrow \infty \), for all \(i=0,1,2,\ldots ,m_1\) and \(j=0,1,2,\ldots ,m_2\). This ensures that \(x^{k_{i,n}}\rightarrow 1\) as \(n\rightarrow \infty \), and that \(t^{s_{j,n}}\rightarrow 1\) as \(n\rightarrow \infty \), for \(i=0,1,2,\ldots ,m_1\) and \(j=0,1,2,\ldots ,m_2\). Hence, for every \(\epsilon >0\), there exist the values \(N_0,N_1,\ldots ,N_{m_1}\) and \(Z_0,Z_1,\ldots ,Z_{m_2}\) in \(\mathbb {N}\), such that:

for all \(i=0,1,2,\ldots ,m_1\) and \(j=0,1,2,\ldots ,m_2\). Setting \(N=\max \{N_0,N_1,\ldots ,N_{m_1},Z_0,Z_1,\ldots ,Z_{m_2}\}\) and:

we conclude that:

This implies that:

This completes the proof. \(\square \)
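The key step of the proof, namely that a generalized polynomial approaches the ordinary polynomial with the same coefficients as the control parameters tend to zero, can be checked numerically at a fixed point. The Python sketch below assumes the exponent form \(x^{i+k_{i}}t^{j+s_{j}}\) and takes \(k_{i,n}=s_{j,n}=1/n\); the coefficient matrix and the function names are hypothetical choices made for illustration.

```python
def poly(a, x, t):
    """Ordinary polynomial sum_ij a[i][j] * x**i * t**j."""
    return sum(a[i][j] * x ** i * t ** j
               for i in range(len(a)) for j in range(len(a[0])))


def gen_poly(a, x, t, k, s):
    """Generalized polynomial sum_ij a[i][j] * x**(i + k[i]) * t**(j + s[j])."""
    return sum(a[i][j] * x ** (i + k[i]) * t ** (j + s[j])
               for i in range(len(a)) for j in range(len(a[0])))


def gaps(a, x, t, ns):
    """|Q - P| at the point (x, t) for control parameters k_i = s_j = 1/n."""
    out = []
    for n in ns:
        k = [1.0 / n] * len(a)
        s = [1.0 / n] * len(a[0])
        out.append(abs(gen_poly(a, x, t, k, s) - poly(a, x, t)))
    return out


coeffs = [[1.0, -2.0], [0.5, 3.0]]
for n, gap in zip([1, 10, 100, 1000], gaps(coeffs, 0.5, 0.5, [1, 10, 100, 1000])):
    print(n, gap)
```

The printed gaps shrink as \(n\) grows, in line with the pointwise argument for \(x,t\in (0,1]\) used in the proof.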

3.4 Error analysis

We now analyze the error of the method for the system of fractional PDEs. Let \(C(\varOmega ,\Vert \cdot \Vert )\) denote the Banach space of all continuous functions defined on \(\varOmega =[0,1]\times [0,1]\) with the norm:

We first assume that u(x, t) and v(x, t) are the exact solutions of the system and that \(u^*(x,t)\) and \(v^*(x,t)\) are the corresponding approximate solutions. Let the nonlinear terms \(f_1\) and \(f_2\) satisfy Lipschitz conditions, such that:

We assume that \(f_1\) and \(f_2\) are continuous functions with variables u, v and \(u^*\), \(v^*\), respectively.

Let \(e_1(x,t)=\Vert u(x,t)-u^*(x,t)\Vert \) and \(e_2(x,t)=\Vert v(x,t)-v^*(x,t)\Vert \) be the error functions of the system. Then, we consider:

and

where

Rearranging Eq. (19) and using \(0<\frac{M_2}{\varGamma (1+\beta )}<1\), we obtain:

Substituting Eq. (20) into Eq. (18), we are led to:

Setting \(\eta _1=\frac{L_1}{\varGamma (1+\alpha )}+\frac{L_2}{\varGamma (1+\alpha )}\left[ \frac{M_1}{\varGamma (1+\beta )}\left( 1-\frac{M_2}{\varGamma (1+\beta )}\right) ^{-1} \right] \), from (21), we conclude that:

Similarly, from Eq. (18) and \(0<\frac{L_1}{\varGamma (1+\alpha )}<1\), we obtain:

Substituting Eq. (23) into Eq. (19), we obtain:

Setting \(\eta _2=\frac{M_1}{\varGamma (1+\beta )}\left[ \frac{L_2}{\varGamma (1+\alpha )}\left( 1-\frac{L_1}{\varGamma (1+\alpha )}\right) ^{-1} \right] +\frac{M_2}{\varGamma (1+\beta )}\), from (24), we conclude that:

Finally, letting \(m_1\rightarrow \infty \) and \(m_2\rightarrow \infty \) in relation (6), and taking into account (22) and (25), we deduce that \(e_1(x,t)\rightarrow 0\) and \(e_2(x,t)\rightarrow 0\). This completes the proof.

4 Description of the method

In what follows, using the results obtained in the previous sections, we describe the procedure of the new method for solving Eq. (1) with:

To reduce the problem to a simpler one, we approximate the solutions using the GP as:

where \(\mathscr {A}=[a_{ij}]\) and \(\mathscr {B}=[b_{ij}]\) are undetermined matrices, and \(\mathscr {D}_{m_{1}}(x)\), \(\mathscr {F}_{m_{2}}(t)\), \(\mathscr {H}_{n_{1}}(x)\), and \(\mathscr {Z}_{n_{2}}(t)\) are in accordance with Eq. (8). From Eqs. (11) and (14), we can write:

Substituting Eqs. (27) and (28) into Eq. (26) yields:

Substituting Eqs. (27)–(29) into Eq. (1), we get:

and the 2-norm of the residual functions can be expressed as:

Here, \(\mathscr {K},\mathscr {S},\mathscr {R}\), and \(\mathscr {L}\) represent unknown control vectors for \(\mathscr {D}_{m_{1}}(x)\), \(\mathscr {F}_{m_{2}}(t)\), \(\mathscr {H}_{n_{1}}(x)\), and \(\mathscr {Z}_{n_{2}}(t)\), respectively, and may be defined as follows:

Since we must evaluate the unknown matrices \(\mathscr {A}\) and \(\mathscr {B}\) and the control parameters \(\mathscr {K},\mathscr {S},\mathscr {R}\) and \(\mathscr {L}\), for finding the approximate solution, we consider an optimization problem:

subject to:

We use the Lagrange multipliers method to solve the minimization problem:

where the vector \(\lambda \) represents the unknown Lagrange multipliers and \(\varLambda \) is a known column vector whose entries are equality constraints expressed in Eq. (35). The necessary conditions for the extremum are given by the following system of nonlinear algebraic equations:

We can solve the above system of nonlinear algebraic equations using software packages such as MAPLE or MATLAB. Finally, using the computed free coefficients and control parameters, we obtain the approximate solutions of the problem from Eqs. (27) and (28), respectively. The algorithm is described briefly in the table.
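The Lagrange multipliers step can be illustrated on a deliberately small toy problem. The Python sketch below minimizes a quadratic objective \(\sum _i (a_i-c_i)^2\) subject to a single linear equality constraint \(\sum _i g_i a_i=d\); in this simplified setting the stationarity conditions of the Lagrangian form a linear system, whereas the system arising from the NSF-PDE is nonlinear. The objective, the constraint, and all function names are assumptions made for illustration and do not reproduce the paper's residual or its MAPLE implementation.

```python
def solve_linear(matrix, rhs):
    """Solve matrix * x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [b] for row, b in zip(matrix, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(aug[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (aug[r][n] - tail) / aug[r][r]
    return x


def constrained_minimum(c, g, d):
    """Minimize sum((a_i - c_i)^2) subject to sum(g_i * a_i) = d (g not all zero).

    Lagrangian: J*(a, lam) = sum((a_i - c_i)^2) + lam * (sum(g_i * a_i) - d).
    Stationarity gives 2*a_i + lam*g_i = 2*c_i for each i, plus the constraint.
    """
    n = len(c)
    matrix = [[2.0 if i == j else 0.0 for j in range(n)] + [g[i]]
              for i in range(n)]
    matrix.append([float(gi) for gi in g] + [0.0])
    rhs = [2.0 * ci for ci in c] + [float(d)]
    solution = solve_linear(matrix, rhs)
    return solution[:n], solution[n]


a_opt, lam = constrained_minimum([1.0, 2.0], [1.0, 1.0], 1.0)
print(a_opt, lam)
```

In the method described above, the unknowns additionally include the control parameters, so the analogous stationarity system is nonlinear and would typically be handed to a nonlinear solver.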

5 Illustrative examples

In this section, we include three examples for different values of \(m_{1}\), \(m_{2}\), \(n_{1}\), and \(n_{2}\) to illustrate the applicability and computational efficiency of the proposed method. All numerical calculations are performed in MAPLE 17 with 15 decimal digits of precision. The absolute error (AE) functions are obtained by applying the following formulae:
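In code, the absolute error, understood here in the usual sense as the pointwise magnitude of the difference between the exact and approximate solutions, can be tabulated as in the following Python sketch. The exact solution is \(u(x,t)=xt(x-1)\) from Example 2, while the approximate solution is a hypothetical stand-in with an artificial offset, not an output of the GP method.

```python
def absolute_error(exact, approx, points):
    """Return |exact(x, t) - approx(x, t)| at each (x, t) in points."""
    return [abs(exact(x, t) - approx(x, t)) for x, t in points]


def u_exact(x, t):
    """Analytical solution u of Example 2."""
    return x * t * (x - 1.0)


def u_approx(x, t):
    """Hypothetical approximation: the exact solution plus a 1e-6 offset."""
    return u_exact(x, t) + 1e-6


grid = [(0.2, 0.2), (0.4, 0.4), (0.6, 0.6), (0.8, 0.8)]
for (x, t), err in zip(grid, absolute_error(u_exact, u_approx, grid)):
    print(x, t, err)
```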

Example 1

Consider the following NSF-PDE:

with the following initial conditions:

The required data can be obtained from the analytical solutions \(u(x,t)=x^{4.6}\,t^{3.5}\) and \(v(x,t)=x^{3.9}\,t^{5.1}\). We implement the proposed scheme for finding the approximate solution when \(m_{1}=m_{2}=n_{1}=n_{2}=2\). As described in the previous sections, the solution can be expanded as follows:

where

and \(k_{2},\,s_{2},\,r_{2}\) and \(l_{2}\) are control parameters. Moreover, the unknown coefficient matrices \(\mathscr {A}\) and \(\mathscr {B}\) are given by:

The control parameters and free coefficients with \(m_{1}=m_{2}=n_{1}=n_{2}=2\) are obtained as follows:

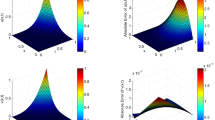

This problem is also solved by the GP method with \(m_{1}=3,\,m_{2}=2,\,n_{1}=3\) and \(n_{2}=2\). The AE values of the GP method at various points are listed in Table 1. The runtimes of the proposed method for different choices of \(m_{1}\), \(m_{2}\), \(n_{1}\), and \(n_{2}\) are reported in Table 2. The GP solutions and AE functions are represented in Fig. 1 for \(m_{1}=m_{2}=n_{1}=n_{2}=2\). The approximate and exact solutions for different values of x and t are shown in Figs. 2 and 3. Table 1 and Figs. 1, 2, and 3 show that the numerical results are accurate. As can be seen, using more terms of the GP provides higher accuracy. Also, from Table 2, we conclude that choosing larger values of \(m_{1}\), \(m_{2}\), \(n_{1}\), and \(n_{2}\) leads to a higher computational load.

Fig. 1 Approximate solution (left) and absolute error function (right) for Example 1 with \(m_{1}=m_{2}=n_{1}=n_{2}=2\)

Fig. 2 The approximate and exact solutions for u(x, 0.4) (left side) and v(x, 0.4) (right side) with \(m_{1}=m_{2}=n_{1}=n_{2}=2\) for Example 1

Fig. 3 The approximate and exact solutions for u(0.4, t) (left side) and v(0.4, t) (right side) with \(m_{1}=m_{2}=n_{1}=n_{2}=2\) for Example 1

Example 2

Consider the following system of fractional PDE (Zhao et al. 2017):

with the following initial conditions:

The analytical solutions of this system are \(u(x,t)=xt(x-1)\) and \(v(x,t)=xt(t-1)\). This problem is solved by means of the GP method with \(m_{1}=3,\,m_{2}=2,\,n_{1}=2,\,n_{2}=3\) and \(m_{1}=m_{2}=n_{1}=n_{2}=3\). The AE obtained at different points \((x, t)\in [0,1]\times [0,1]\) are listed in Table 3. The runtime of the proposed method for different choices of \(m_{1}\), \(m_{2}\), \(n_{1}\), and \(n_{2}\) is reported in Table 4. The approximate solutions and AE functions are depicted in Fig. 4 for \(m_{1}=n_{2}=3,\,m_{2}=n_{1}=2\). The approximate and exact solutions for different values of x and t are depicted in Figs. 5 and 6. We verify that the new method is accurate in all cases and that using more terms of the GP improves the accuracy. Also, from Table 4, we conclude that choosing larger values of \(m_{1}\), \(m_{2}\), \(n_{1}\), and \(n_{2}\) leads to an increased computational load.

Zhao et al. (2017) introduced an efficient numerical technique based on the shifted Chebyshev orthogonal polynomials as basis functions for solving the system of fractional PDE (39). Comparing Table 3 and Fig. 4 with the results obtained in Zhao et al. (2017), we verify that the GP method achieves better accuracy.

Fig. 4 Approximate solution (left) and absolute error function (right) for Example 2 with \(m_{1}=n_{2}=3,\,m_{2}=n_{1}=2\)

Fig. 5 The approximate and exact solutions for u(x, 0.6) (left side) and v(x, 0.6) (right side) with \(m_{1}=n_{2}=3,\,m_{2}=n_{1}=2\) for Example 2

Fig. 6 The approximate and exact solutions for u(0.6, t) (left side) and v(0.6, t) (right side) with \(m_{1}=n_{2}=3,\,m_{2}=n_{1}=2\) for Example 2

Example 3

Consider the following system of fractional PDE (Zhao et al. 2017):

with the following initial conditions:

The analytical solutions of the system are \(u(x,t)=x^{3}\sinh (t)\) and \(v(x,t)=t^{3}\sinh (x)\). This problem is also solved by the GP method with different values for \(m_{1},\,m_{2},\,n_{1}\) and \(n_{2}\). In Zhao et al. (2017), the shifted Chebyshev orthogonal polynomials, as basis functions, are applied to solve the system of fractional PDE (40). The AE of u(x, t) and v(x, t) obtained by the GP method at different points \((x,t)\in [0,1]\times [0,1]\) are collected in Tables 5 and 6, respectively. We verify that they compare well with those reported previously in Zhao et al. (2017). The runtimes of the proposed method for different choices of \(m_{1}\), \(m_{2}\), \(n_{1}\), and \(n_{2}\) are reported in Table 7. The approximate solutions and AE functions are shown in Fig. 7 for \(m_{1}=m_{2}=n_{1}=n_{2}=5\). The approximate and exact solutions for different x and t are depicted in Figs. 8 and 9. From Tables 5 and 6, we conclude that the GP method is suitable for this problem and that increasing the number of GP terms improves the accuracy. Also, from Table 7, we verify that choosing larger values of \(m_{1}\), \(m_{2}\), \(n_{1}\), and \(n_{2}\) results in an increased computational load.

Fig. 7 Approximate solution (left) and absolute error function (right) for Example 3 with \(m_{1}=m_{2}=n_{1}=n_{2}=5\)

Fig. 8 The approximate and exact solutions for u(x, 0.8) (left side) and v(x, 0.8) (right side) with \(m_{1}=m_{2}=n_{1}=n_{2}=5\) for Example 3

Fig. 9 The approximate and exact solutions for u(0.8, t) (left side) and v(0.8, t) (right side) with \(m_{1}=m_{2}=n_{1}=n_{2}=5\) for Example 3

6 Conclusion

In this work, the GP and their operational matrices were applied to find a solution to the NSF-PDE. The generated operational matrices and the Lagrange multipliers method were employed to convert the NSF-PDE into a system of nonlinear algebraic equations to obtain an optimal selection of the free coefficients and control parameters. The convergence of the new method was discussed. Also, the method was illustrated by means of three numerical examples. Through the convergence analysis and numerical examples, it was verified that the algorithm is precise and efficient and that a small number of basis functions is sufficient to obtain a favorable approximate solution. A comparison between the results obtained by the GP and by other schemes confirms the accuracy of the proposed algorithm.

References

Ablinger J, Blümlein J, Marquard P, Rana N, Schneider C (2019) Automated solution of first order factorizable systems of differential equations in one variable. Nucl Phys B 939:253–291

Alliera CHD, Amster P (2018) Systems of delay differential equations: analysis of a model with feedback. Commun Nonlinear Sci Numer Simul 65:299–308

Avazzadeh Z, Hassani H (2019) Transcendental Bernstein series for solving reaction–diffusion equations with nonlocal boundary conditions through the optimization technique. Numer Methods Partial Differ Equ 35(6):2258–2274

Dahaghin MS, Hassani H (2017a) A new optimization method for a class of time fractional convection–diffusion-wave equations with variable coefficients. Eur Phys J Plus 132:130

Dahaghin MS, Hassani H (2017b) An optimization method based on the generalized polynomials for nonlinear variable-order time fractional diffusion-wave equation. Nonlinear Dyn 88(3):1587–1598

Farayola MF, Shafie S, Siam FM, Khan I (2020) Mathematical modeling of radiotherapy cancer treatment using Caputo fractional derivative. Comput Methods Prog Bio. https://doi.org/10.1016/j.cmpb.2019.105306

Farooq U, Khan H, Baleanu D, Arif M (2019) Numerical solutions of fractional delay differential equations using Chebyshev wavelet method. Comput Appl Math. https://doi.org/10.1007/s40314-019-0953-y

Feng TF, Chang CH, Chen JB, Zhang HB (2019) The system of partial differential equations for the \(c_0\) function. Nucl Phys B 940:130–189

Ganesan S, Lingeshwaran S (2017) Galerkin finite element method for cancer invasion mathematical model. Comput Math Appl 73(12):2603–2617

Gupta PK, Singh J, Rai KN (2010) Numerical simulation for heat transfer in tissues during thermal therapy. J Therm Biol 35:295–301

Gupta PK, Singh J, Rai KN, Rai SK (2013) Solution of the heat transfer problem in tissues during hyperthermia by finite difference-decomposition method. Appl Math Comput 219:6882–6892

Hassani H, Avazzadeh Z, Tenreiro Machado JA (2019) Solving two-dimensional variable-order fractional optimal control problems with transcendental Bernstein series. J Comput Nonlinear Dyn 14(6):11

Hassani H, Avazzadeh Z, Tenreiro Machado JA (2020) Numerical approach for solving variable-order space-time fractional telegraph equation using transcendental Bernstein series. Eng Comput 36:867–878

Hilfer R (2000) Applications of fractional calculus in physics. World Scientific, Singapore

Jaros J, Kusano T (2014) On strongly monotone solutions of a class of cyclic systems of nonlinear differential equations. J Math Anal Appl 417:996–1017

Kreyszig E (1978) Introductory functional analysis with applications. Wiley, Oxford

Kumar M, Upadhyay S, Rai KN (2018) A study of lung cancer using modified Legendre wavelet Galerkin method. J Therm Biol 78:356–366

Liu J, Wang XS (2019) Numerical optimal control of a size-structured PDE model for metastatic cancer treatment. Math Biosci 314:28–42

Miller KS, Ross B (1993) An introduction to the fractional calculus and differential equations. Wiley, New York

Mohammadi V, Dehghan M (2020) Generalized moving least squares approximation for the solution of local and non-local models of cancer cell invasion of tissue under the effect of adhesion in one- and two-dimensional spaces. Comput Biol Med. https://doi.org/10.1016/j.compbiomed.2020.103803

Owolabi KM (2018) Mathematical analysis and numerical simulation of chaotic noninteger order differential systems with Riemann-Liouville derivative. Numer Methods Partial Differ Equ 34(1):274–295

Owolabi KM, Pindza E, Davison M (2018) Dynamical study of two predators and one prey system with fractional Fourier transform method. Numer Methods Partial Differ Equ 35(5):1614–1636

Podlubny I (1999) Fractional differential equations. Academic Press, New York

Rahimkhani P, Ordokhani Y (2020) The bivariate Müntz wavelets composite collocation method for solving space-time-fractional partial differential equations. Comput Appl Math. https://doi.org/10.1007/s40314-020-01141-7

Ravi Kanth ASV, Garg N (2020) A numerical approach for a class of time-fractional reaction–diffusion equation through exponential B-spline method. Comput Appl Math. https://doi.org/10.1007/s40314-019-1009-z

Riewe F (1997) Mechanics with fractional derivatives. Phys Rev E 55(3):3582–3592

Roohi R, Heydari MH, Aslami M, Mahmoudi MR (2018) A comprehensive numerical study of space-time fractional bioheat equation using fractional-order Legendre functions. Eur Phys J Plus 133:412

Sabermahani S, Ordokhani Y, Yousefi SA (2018) Numerical approach based on fractional-order Lagrange polynomials for solving a class of fractional differential equations. Comput Appl Math 37:3846–3868

Sabermahani S, Ordokhani Y, Yousefi SA (2020a) Fractional-order general Lagrange scaling functions and their applications. BIT Numer Math 60:101–128

Sabermahani S, Ordokhani Y, Yousefi SA (2020b) Fractional-order Fibonacci-hybrid functions approach for solving fractional delay differential equations. Eng Comput 36:795–806

Sabermahani S, Ordokhani Y, Yousefi SA (2020c) Two-dimensional Müntz–Legendre hybrid functions: theory and applications for solving fractional-order partial differential equations. Comput Appl Math. https://doi.org/10.1007/s40314-020-1137-5

Schittkowski K (1997) Parameter estimation in one-dimensional time-dependent partial differential equations. Optim Method Softw 7:165–210

Shangerganesh L, Nyamoradi N, Sathishkumar G, Karthikeyan S (2019) Finite-time blow-up of solutions to a cancer invasion mathematical model with haptotaxis effects. Comput Math Appl 77(8):2242–2254

Shekari Y, Tayebi A, Heydari MH (2019) A meshfree approach for solving 2D variable-order fractional nonlinear diffusion-wave equation. Comput Methods Appl Mech Eng 350:154–168

Yang X, Zhang H, Tang Q (2020) A spline collocation method for a fractional mobile-immobile equation with variable coefficients. Comput Appl Math. https://doi.org/10.1007/s40314-019-1013-3

Zhao F, Huang Q, Xie J, Li Y, Ma L, Wang J (2017) Chebyshev polynomials approach for numerically solving system of two-dimensional fractional PDEs and convergence analysis. Appl Math Comput 313:321–330

Zhao T, Mao Z, Karniadakis GE (2019) Multi-domain spectral collocation method for variable-order nonlinear fractional differential equations. Comput Methods Appl Mech Eng 348:377–395

Zhou Y, Casas E (2014) Fractional systems and optimization. Optimization 63(8):1153–1156

Zhou Y, Ionescu C, Tenreiro Machado JA (2015) Fractional dynamics and its applications. Nonlinear Dyn 80(4):1661–1664

Additional information

Communicated by Agnieszka Malinowska.

Cite this article

Hassani, H., Machado, J.A.T., Naraghirad, E. et al. Solving nonlinear systems of fractional-order partial differential equations using an optimization technique based on generalized polynomials. Comp. Appl. Math. 39, 300 (2020). https://doi.org/10.1007/s40314-020-01362-w

DOI: https://doi.org/10.1007/s40314-020-01362-w

Keywords

- Nonlinear system of fractional-order partial differential equations

- Generalized polynomials

- Operational matrix

- Optimization problem

- Control parameters