Abstract

In this study, we propose a new set of fractional functions based on the Lagrange polynomials to solve a class of fractional differential equations. Fractional differential equations are effective tools for modelling natural phenomena described by fractional calculus, so accurate and efficient techniques for solving them are needed. The main purpose of this article is to generalize Lagrange polynomials to fractional calculus. First, we present a new representation of Lagrange polynomials. Then, we propose a new set of fractional-order functions, called fractional-order Lagrange polynomials (FLPs). In addition, we derive a general formulation of the operational matrices of fractional integration and fractional derivative of FLPs for arbitrary nodal points; these matrices are obtained using the Laplace transform. Using the operational matrix of fractional integration and the collocation method, the initial value problems are reduced to systems of algebraic equations. We also find an upper bound for the error vector of the fractional integration operational matrix and show the convergence of the FLP approximations. Illustrative examples are included to demonstrate the validity and applicability of the proposed technique.



1 Introduction

Fractional differential equations (FDEs) are generalizations of ordinary differential equations to arbitrary (non-integer) order. A history of the development of fractional differential operators is given in Oldham and Spanier (1974) and Miller and Ross (1993). During the last few decades, fractional calculus has been widely used to describe many phenomena in fields such as hydrology (Benson et al. 2013), dynamic viscoelasticity modeling (Larsson et al. 2015), economics (Baillie 1996), temperature and motor control (Bohannan 2008), continuum and statistical mechanics (Mainardi 1997), solid mechanics (Rossikhin and Shitikova 1997), bioengineering (Magin 2004), medicine (Hall and Barrick 2008), earthquake modeling (He 1988) and electromagnetism (Engheta 1996). Consequently, developing new analytical and numerical methods for solving various fractional differential equations has become an important topic. Many authors have investigated the existence and uniqueness of solutions to fractional differential equations, for example Mainardi (1997) and Podlubny (1999).

In recent studies, several methods have been employed to solve fractional differential equations. These include the Adomian decomposition method (Daftardar-Gejji and Jafari 2007), the homotopy analysis method (Dehghan et al. 2010), the variational iteration method (Odibat and Momani 2006) and the finite difference method (Meerschaert and Tadjeran 2006). Others are the Legendre wavelets method (Jafari et al. 2011), the Haar wavelet method (Rehman and Khan 2012), the Bernoulli wavelets method (Keshavarz et al. 2014) and fifth-kind orthonormal Chebyshev polynomials (Abd-Elhameed and Youssri 2017).

On the other hand, Lagrange interpolation is a valuable analytical tool. The Lagrange form is, in most cases, the method of choice for polynomial interpolation (Burden and Faires 2010). Lagrange interpolation has been used to solve integral equations (Rashed 2004; Mustafa and Ghanim 2014; Shahsavaran 2011). Also, Shamsi and Razzaghi (2004) and Foroozandeh and Shamsi (2012) solved classes of optimal control problems using interpolating scaling functions.

Recently, Kazem et al. (2013) proposed new orthogonal functions based on the Legendre polynomials to obtain a new method for solving FDEs. Bhrawy et al. (2014) defined the fractional-order generalized Laguerre functions based on the generalized Laguerre polynomials. Yuzbasi (2013) introduced fractional Bernstein polynomials for solving fractional Riccati type differential equations. Moreover, Rahimkhani et al. (2016) proposed new functions based on Bernoulli wavelets, and Krishnasamy and Razzaghi (2016) solved the Bagley–Torvik equation with a fractional Taylor basis.

In the present paper, we introduce the fractional-order Lagrange polynomials. Using the Laplace transform, we construct their operational matrices of fractional derivative and integration for general nodes. These matrices are then employed to solve linear and nonlinear FDEs with initial conditions numerically.

This paper is organized as follows. In the next section, we describe some necessary definitions and the mathematical preliminaries required for our subsequent development. In Sect. 3, we introduce a new representation of Lagrange polynomials and the fractional-order Lagrange polynomials. In Sect. 4, we derive the FLP operational matrices of fractional-order integration and derivative using the Laplace transform, without restricting the choice of interpolation points. Section 5 is devoted to the numerical method for solving a class of initial value problems for fractional differential equations and systems of fractional differential equations. In Sect. 6, we prove the convergence of fractional-order Lagrange polynomial approximations and find an upper bound for the error vector of the operational matrix of fractional integration. In Sect. 7, we demonstrate the accuracy and effectiveness of the present method through several numerical examples.

2 Preliminaries

In this section, we recall some basic definitions and properties of fractional calculus theory.

Definition 1

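Let \(f: [a, b] \rightarrow R\) be a function, \(\nu > 0\) a real number and \(n= \lceil \nu \rceil \). The Riemann–Liouville integral of fractional order \(\nu \) is defined as (Mashayekhi and Razzaghi 2016)

$$\begin{aligned} I^{\nu }f(x) = \frac{1}{\Gamma (\nu )} \int _{0}^{x} (x-t)^{\nu -1} f(t)\, dt = \frac{1}{\Gamma (\nu )}\, x^{\nu -1} * f(x), \quad x > 0, \end{aligned}$$(1)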

where \(x^{ \nu -1} * f(x)\) is the convolution product of \(x^{\nu -1}\) and f(x), and \(\lceil \nu \rceil \) denotes the smallest integer greater than or equal to \(\nu \).
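The convolution form can be checked numerically. The following Python sketch is our own illustration and is not part of the method. It evaluates \(I^{\nu }f(x)\) after the substitution \(u=(x-t)^{\nu }\), which removes the weak singularity of the kernel. It then compares the result with the standard closed form \(I^{\nu }x^{\beta } = \frac{\Gamma (\beta +1)}{\Gamma (\beta +\nu +1)}x^{\beta +\nu }\) for \(\beta > -1\). The function names `rl_integral` and `rl_power` are hypothetical.

```python
import math


def rl_integral(f, x, nu, m=2000):
    """Riemann-Liouville integral I^nu f(x) = (1/Gamma(nu)) int_0^x (x - t)**(nu - 1) f(t) dt.

    The substitution u = (x - t)**nu turns it into
    (1/Gamma(nu + 1)) int_0^(x**nu) f(x - u**(1/nu)) du, which has no kernel
    singularity; the composite Simpson rule (m even) is then applied.
    """
    b = x ** nu
    h = b / m
    total = 0.0
    for k in range(m + 1):
        t = max(0.0, x - (k * h) ** (1.0 / nu))  # clamp round-off just below t = 0
        weight = 1 if k in (0, m) else (4 if k % 2 else 2)
        total += weight * f(t)
    return total * h / (3.0 * math.gamma(nu + 1.0))


def rl_power(beta, nu, x):
    """Closed form I^nu x**beta = Gamma(beta+1)/Gamma(beta+nu+1) * x**(beta+nu), beta > -1."""
    return math.gamma(beta + 1.0) / math.gamma(beta + nu + 1.0) * x ** (beta + nu)


if __name__ == "__main__":
    for beta, nu in [(2.0, 0.5), (1.0, 1.0), (0.5, 0.5)]:
        numeric = rl_integral(lambda t: t ** beta, 0.7, nu)
        print(f"beta={beta}, nu={nu}: quadrature {numeric:.8f}, closed form {rl_power(beta, nu, 0.7):.8f}")
```

For smooth integrands after the substitution, Simpson's rule is very accurate. For \(f(t)=\sqrt{t}\) the transformed integrand has a square-root endpoint behaviour, so convergence is slower.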

Moreover, for the Riemann–Liouville fractional integrals, the following relationships are established (Mashayekhi and Razzaghi 2016)

and

Definition 2

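The fractional derivative of order \(\nu \) in the Caputo sense is defined as (Mashayekhi and Razzaghi 2016)

$$\begin{aligned} D^{\nu }f(x) = I^{m-\nu } f^{(m)}(x) = \frac{1}{\Gamma (m-\nu )} \int _{0}^{x} (x-t)^{m-\nu -1} f^{(m)}(t)\, dt, \end{aligned}$$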

for \(m-1 < \nu \le m,\;m \in N\), \(x > 0\). For the Caputo derivative, we have (Rahimkhani et al. 2016):

where \(\lambda \) is a constant.

and

where \(\lambda \) and \(\mu \) are constants.
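These rules, together with the Riemann–Liouville power rule, can be exercised on "fractional power sums" \(f(x)=\sum c_{\beta }x^{\beta }\), the kind of function an FLP expansion produces. The sketch below is a minimal illustration under stated assumptions. It takes \(0<\nu \le 1\) and exponents \(\beta \ge 0\). It uses the standard Caputo power rule \(D^{\nu }x^{\beta } = \frac{\Gamma (\beta +1)}{\Gamma (\beta -\nu +1)}x^{\beta -\nu }\) for \(\beta > 0\), a standard result that is not displayed in the text. The dictionary representation and the function names are our own.

```python
import math


def rl_integral(f, nu):
    """I^nu of {beta: c}: x**beta -> Gamma(beta+1)/Gamma(beta+nu+1) * x**(beta+nu)."""
    out = {}
    for beta, c in f.items():
        key = beta + nu
        out[key] = out.get(key, 0.0) + c * math.gamma(beta + 1) / math.gamma(beta + nu + 1)
    return out


def caputo(f, nu):
    """Caputo D^nu of {beta: c} for 0 < nu <= 1 and beta >= 0.

    Constants are annihilated; for beta > 0,
    x**beta -> Gamma(beta+1)/Gamma(beta-nu+1) * x**(beta-nu).
    """
    if not 0 < nu <= 1:
        raise ValueError("this sketch only handles 0 < nu <= 1")
    out = {}
    for beta, c in f.items():
        if beta == 0:
            continue  # D^nu(lambda) = 0 for a constant lambda
        key = beta - nu
        out[key] = out.get(key, 0.0) + c * math.gamma(beta + 1) / math.gamma(beta - nu + 1)
    return out


def evaluate(f, x):
    """Value of sum_beta c * x**beta at x > 0."""
    return sum(c * x ** beta for beta, c in f.items())


def combine(lam, f, mu, g):
    """Coefficients of lam*f + mu*g."""
    out = {b: lam * c for b, c in f.items()}
    for b, c in g.items():
        out[b] = out.get(b, 0.0) + mu * c
    return out


if __name__ == "__main__":
    f = {0: 2.0, 0.5: -1.0, 1.0: 3.0}  # 2 - sqrt(x) + 3x
    nu = 0.6
    # D^nu I^nu f should reproduce f
    print(evaluate(caputo(rl_integral(f, nu), nu), 0.4), evaluate(f, 0.4))
```

Exponents are compared only through evaluation, not as dictionary keys, because sums such as `1.1 - 0.6` are not exact in floating point.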

Definition 3

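(Generalized Taylor's formula) Let \(0 < \alpha \le 1\) and \(D^{k \alpha }f(x) \in C (0,1]\) for \(k=0,1,\ldots ,n+1\), where \(D^{k\alpha } = D^{\alpha } D^{\alpha } \cdots D^{\alpha }\) (k times). Then, we have (Odibat and Shawagfeh 2007)

$$\begin{aligned} f(x) = \sum _{i=0}^{n} \frac{x^{i\alpha }}{\Gamma (i\alpha +1)} D^{i\alpha }f(0^{+}) + \frac{x^{(n+1)\alpha }}{\Gamma ((n+1)\alpha +1)} D^{(n+1)\alpha }f(\xi ), \end{aligned}$$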

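with \(0 < \xi \le x,\;\forall x \in (0, \;1]\). Moreover, one has (Kazem et al. 2013):

$$\begin{aligned} \Big \vert f(x) - \sum _{i=0}^{n} \frac{x^{i\alpha }}{\Gamma (i\alpha +1)} D^{i\alpha }f(0^{+}) \Big \vert \le M_{\alpha } \frac{x^{(n+1)\alpha }}{\Gamma ((n+1)\alpha +1)}, \end{aligned}$$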

where \(M_{\alpha } \ge \sup _{\xi \in (0,\;1]} \vert D^{(n+1) \alpha }f (\xi ) \vert \).

Definition 4

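The Laplace transform of a function \(u(x,\;t),\;t \ge 0\), is defined by (Javidi and Ahmad 2013)

$$\begin{aligned} \mathcal {L}\{u(x,t)\} = U(x,\tau ) = \int _{0}^{\infty } e^{-\tau t}\, u(x,t)\, dt, \end{aligned}$$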

where \(\tau \) is the transform parameter and is assumed to be real and positive.

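Also, for \(m-1 < \nu \le m\), the Laplace transform of the Caputo derivative \(D^{\nu }f (t)\) is given by

$$\begin{aligned} \mathcal {L}\{D^{\nu }f(t)\} = \tau ^{\nu } F(\tau ) - \sum _{k=0}^{m-1} \tau ^{\nu -k-1} f^{(k)}(0), \quad F(\tau ) = \mathcal {L}\{f(t)\}. \end{aligned}$$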

3 Fractional-order Lagrange polynomials

In this section, we first recall the definition of Lagrange polynomials and present a new representation of them. Then we propose the fractional-order Lagrange polynomials and discuss their properties.

3.1 Lagrange polynomials

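Let the set of distinct nodes be given by \(x_{i} \in [0, 1],\;i=0, 1, \ldots , n\). The Lagrange polynomials based on these nodes are defined as follows (Stoer and Bulirsch 1996):

$$\begin{aligned} L_{i}(x) = \prod _{j=0,\, j \ne i}^{n} \frac{x-x_{j}}{x_{i}-x_{j}}, \quad i=0, 1, \ldots , n. \end{aligned}$$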

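The Lagrange polynomials also satisfy the interpolation condition

$$\begin{aligned} L_{i}(x_{j}) = \delta _{ij} = \begin{cases} 1, & i=j,\\ 0, & i \ne j. \end{cases} \end{aligned}$$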
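The product formula \(L_{i}(x) = \prod _{j \ne i}(x-x_{j})/(x_{i}-x_{j})\) and the interpolation condition \(L_{i}(x_{j})=\delta _{ij}\) are easy to check directly. The following is a minimal Python sketch; the function name `lagrange` is ours. It also checks the standard partition-of-unity property \(\sum _{i}L_{i}(x)=1\).

```python
def lagrange(i, nodes, x):
    """Evaluate L_i(x) = prod_{j != i} (x - x_j) / (x_i - x_j) for distinct nodes."""
    value = 1.0
    for j, xj in enumerate(nodes):
        if j != i:
            value *= (x - xj) / (nodes[i] - xj)
    return value


if __name__ == "__main__":
    nodes = [0.0, 0.25, 0.5, 0.75, 1.0]  # x_i = i/n with n = 4
    for i in range(len(nodes)):
        print([lagrange(i, nodes, xk) for xk in nodes])  # row i of the identity matrix
    print(sum(lagrange(i, nodes, 0.3) for i in range(len(nodes))))  # partition of unity
```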

3.1.1 A new representation of Lagrange polynomials

Lemma 1

Let \(L_i(x),\;i=0, 1, \ldots , n\), be the Lagrange polynomials on the set of nodes \(x_i \in [0, 1]\). Then these Lagrange polynomials can be described by

Remark 1

Using Eq. (13), we can rewrite each \(L_{i}(x)\) as follows

where

and \(s=1,\;2,\; \ldots ,\;n,\;\;\;i \ne k_{1} \ne \cdots \ne k_{s}\), that is, \(k_{1}, \ldots , k_{s}\) are pairwise distinct indices different from i.
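One way to read Remark 1 is that the numerator \(\prod _{k \ne i}(x-x_{k})\) is expanded with elementary symmetric sums of the nodes other than \(x_{i}\). The coefficient of \(x^{n-s}\) is then \((-1)^{s}\) times the sum of all products \(x_{k_{1}}\cdots x_{k_{s}}\) over s distinct indices different from i. Under that reading, which is our interpretation, the sketch below builds the power coefficients of \(L_{i}(x)\) this way and compares them with the product form. The function names are hypothetical.

```python
import math
from itertools import combinations


def expanded_lagrange(i, nodes):
    """Power coefficients c[p] of L_i(x) = sum_p c[p] * x**p.

    The coefficient of x**(n-s) is (-1)**s times the sum of all products
    x_{k_1} ... x_{k_s} over s distinct indices k_1 < ... < k_s, each different from i,
    divided by prod_{k != i} (x_i - x_k).
    """
    others = [xk for k, xk in enumerate(nodes) if k != i]
    n = len(others)
    denom = math.prod(nodes[i] - xk for xk in others)
    coeffs = [0.0] * (n + 1)
    for s in range(n + 1):
        e_s = sum(math.prod(combo) for combo in combinations(others, s))
        coeffs[n - s] = (-1) ** s * e_s / denom
    return coeffs


def product_form(i, nodes, x):
    """Direct evaluation of L_i(x) from the product definition, for comparison."""
    return math.prod((x - xj) / (nodes[i] - xj) for j, xj in enumerate(nodes) if j != i)


if __name__ == "__main__":
    print(expanded_lagrange(0, [0.0, 0.5, 1.0]))  # [1.0, -3.0, 2.0], i.e. L_0(x) = 1 - 3x + 2x^2
```

Replacing x by \(x^{\alpha }\) in these coefficients gives the corresponding FLP directly, because \(L_{i}^{\alpha }(x)=\sum _{p}c_{p}x^{p\alpha }\).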

3.2 Fractional-order Lagrange polynomials

We define a new set of fractional functions called fractional-order Lagrange polynomials (FLPs). These functions, denoted by \(L_{i}^{\alpha }(x)\), are constructed from the Lagrange polynomials by the change of variable \(x \rightarrow x^{\alpha }\), \((0<\alpha \le 1)\), that is, \(L_{i}^{\alpha }(x) = L_{i}(x^{\alpha })\).

Using Eq. (14), the analytic form of \(L_{i}^{\alpha }(x)\) is given by:

where

and \(s=1, 2, \ldots , n, i \ne k_{1} \ne \cdots \ne k_{s}\).

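These fractional functions are defined for arbitrary nodal points, so different choices of nodes give different families of Lagrange polynomials. For example, suppose the zeros of the shifted Legendre polynomial of degree n + 1 are taken as the nodes. Then, for \(\alpha = 1\), we obtain a set of orthogonal polynomials on [0, 1]. The reason is that the (n + 1)-point Gauss–Legendre rule integrates the degree-2n products \(L_{i}L_{j}\) exactly, so \(\int _{0}^{1} L_{i}(x)L_{j}(x)\,dx = w_{i}\delta _{ij}\), where \(w_{i}\) are the quadrature weights.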
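This orthogonality is easy to confirm numerically with our own check, in which the helper names are hypothetical. The sketch expands each \(L_{i}\) in powers of \(t=x^{\alpha }\) and forms the FLP Gram matrix from \(\int _{0}^{1}x^{(k+q)\alpha }dx = 1/((k+q)\alpha +1)\). It then evaluates that matrix for n = 2 at the shifted Legendre zeros \(\frac{1}{2}\pm \frac{\sqrt{15}}{10}\) and \(\frac{1}{2}\). For \(\alpha =1\), the off-diagonal entries vanish and the diagonal reproduces the Gauss weights 5/18, 4/9 and 5/18. The same routine accepts other values of \(\alpha \), but orthogonality is not guaranteed in general.

```python
import math


def lagrange_coeffs(nodes):
    """Row i holds a_i0, ..., a_in with L_i(t) = sum_k a_ik * t**k."""
    rows = []
    for i, xi in enumerate(nodes):
        poly, denom = [1.0], 1.0
        for j, xj in enumerate(nodes):
            if j != i:
                # multiply the coefficient list (increasing powers) by (t - x_j)
                poly = [(poly[k - 1] if k > 0 else 0.0) - xj * (poly[k] if k < len(poly) else 0.0)
                        for k in range(len(poly) + 1)]
                denom *= xi - xj
        rows.append([c / denom for c in poly])
    return rows


def flp_gram(nodes, alpha):
    """D_ij = int_0^1 L_i(x**alpha) L_j(x**alpha) dx via int_0^1 x**((k+q)*alpha) dx = 1/((k+q)*alpha + 1)."""
    a, m = lagrange_coeffs(nodes), len(nodes)
    return [[sum(a[i][k] * a[j][q] / ((k + q) * alpha + 1) for k in range(m) for q in range(m))
             for j in range(m)] for i in range(m)]


if __name__ == "__main__":
    r = math.sqrt(15) / 10
    gauss = [0.5 - r, 0.5, 0.5 + r]  # zeros of the shifted Legendre polynomial of degree 3
    for row in flp_gram(gauss, 1.0):
        print([round(v, 12) for v in row])  # diagonal close to 5/18, 4/9, 5/18
```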

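For \(n=2\) and \(x_{i} = \frac{i}{n}\), that is, \(x_{0}=0,\;x_{1}=\frac{1}{2},\;x_{2}=1\), the fractional-order Lagrange polynomials are:

$$\begin{aligned} L_{0}^{\alpha }(x)&= 2x^{2\alpha } - 3x^{\alpha } + 1,\\ L_{1}^{\alpha }(x)&= -4x^{2\alpha } + 4x^{\alpha },\\ L_{2}^{\alpha }(x)&= 2x^{2\alpha } - x^{\alpha }. \end{aligned}$$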

Figure 1 shows the graphs of the FLPs for \(n=2\) and various values of \(\alpha \).

3.3 Function approximation

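A function f defined over \([0,\;1]\) can be expanded in terms of fractional-order Lagrange polynomials as

$$\begin{aligned} f(x) \simeq \sum _{i=0}^{n} c_{i} L_{i}^{\alpha }(x) = C^{T} L^{\alpha }(x), \end{aligned}$$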

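where C and \(L^{\alpha }(x)\) are \((n+1) \times 1\) vectors given by:

$$\begin{aligned} C = [c_{0}, c_{1}, \ldots , c_{n}]^{T}, \quad L^{\alpha }(x) = [L_{0}^{\alpha }(x), L_{1}^{\alpha }(x), \ldots , L_{n}^{\alpha }(x)]^{T}, \end{aligned}$$(21)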

and T indicates transposition. We suppose that

where \(\langle \cdot , \cdot \rangle \) denotes the inner product in \(L^{2}[0,\;1]\), so we have:

that

Now, we suppose that

we get

then

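where D is the matrix of order \((n+1) \times (n+1)\) given by

$$\begin{aligned} D = \langle L^{\alpha }(x), L^{\alpha }(x) \rangle = \int _{0}^{1} L^{\alpha }(x)\, (L^{\alpha }(x))^{T}\, dx. \end{aligned}$$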
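The following is a minimal sketch of this projection. It assumes that the inner product is that of \(L^{2}[0,1]\) and writes \(L_{i}^{\alpha }(x)=\sum _{k}a_{ik}x^{k\alpha }\), which is our notation. The Gram matrix is then \(D_{ij}=\sum _{k,q}a_{ik}a_{jq}/((k+q)\alpha +1)\), and \(C = D^{-1}\langle f, L^{\alpha }\rangle \). The caller supplies the moments \(\int _{0}^{1}f(x)x^{p\alpha }dx\). The function names are hypothetical, and the linear solver is a plain textbook Gaussian elimination.

```python
def lagrange_coeffs(nodes):
    """Row i holds a_i0, ..., a_in with L_i(t) = sum_k a_ik * t**k."""
    rows = []
    for i, xi in enumerate(nodes):
        poly, denom = [1.0], 1.0
        for j, xj in enumerate(nodes):
            if j != i:
                # multiply the coefficient list (increasing powers) by (t - x_j)
                poly = [(poly[k - 1] if k > 0 else 0.0) - xj * (poly[k] if k < len(poly) else 0.0)
                        for k in range(len(poly) + 1)]
                denom *= xi - xj
        rows.append([c / denom for c in poly])
    return rows


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    m = len(A)
    M = [list(row) + [rhs] for row, rhs in zip(A, b)]
    for c in range(m):
        p = max(range(c, m), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, m):
            factor = M[r][c] / M[c][c]
            for k in range(c, m + 1):
                M[r][k] -= factor * M[c][k]
    x = [0.0] * m
    for r in reversed(range(m)):
        x[r] = (M[r][m] - sum(M[r][k] * x[k] for k in range(r + 1, m))) / M[r][r]
    return x


def flp_expand(moment, nodes, alpha):
    """Coefficients C = D^{-1} <f, L^alpha> of f ~ C^T L^alpha(x) on [0, 1].

    moment(p) must return int_0^1 f(x) * x**(p*alpha) dx for p = 0, ..., n.
    """
    a, m = lagrange_coeffs(nodes), len(nodes)
    gram = [[sum(a[i][k] * a[j][q] / ((k + q) * alpha + 1) for k in range(m) for q in range(m))
             for j in range(m)] for i in range(m)]
    rhs = [sum(a[j][q] * moment(q) for q in range(m)) for j in range(m)]
    return solve(gram, rhs)


if __name__ == "__main__":
    nodes, alpha = [0.0, 1 / 3, 2 / 3, 1.0], 0.5
    # f(x) = x**alpha has moments 1/((p + 1)*alpha + 1)
    print(flp_expand(lambda p: 1.0 / ((p + 1) * alpha + 1), nodes, alpha))
```

Because \(x^{\alpha } = \sum _{i}x_{i}L_{i}^{\alpha }(x)\), expanding \(f(x)=x^{\alpha }\) should return the nodes themselves. This makes it a convenient consistency check.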

4 Operational matrices of fractional integration and derivative

In this section, we derive the operational matrices of fractional integration and fractional derivative of FLPs. These matrices are obtained in general form, for arbitrary nodes \(x_{i},\;i=0, 1, \ldots , n\).

4.1 Fractional integration operational matrix of FLPs

Let \(L^{\alpha }(x)\) be the FLP vector defined in Eq. (21). Then we have

where \(F^{(\nu ,\;\alpha )}\) is the \((n+1) \times (n+1)\) operational matrix of fractional integration of order \(\nu \). Using Eq. (1), we obtain

To obtain \(I^{\nu }L^{\alpha }(x)\), we take the Laplace transform of Eq. (29). Then, we have

Taking the inverse Laplace transform of Eq. (30) yields
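To illustrate this step, suppose each FLP is written in powers of \(x^{\alpha }\) as \(L_{i}^{\alpha }(x)=\sum _{k=0}^{n} a_{ik}x^{k\alpha }\), with the \(a_{ik}\) read off from the expanded representation; this coefficient notation is ours. Using \(\mathcal {L}\{x^{\beta }\} = \Gamma (\beta +1)/\tau ^{\beta +1}\) and \(\mathcal {L}\{x^{\nu -1}/\Gamma (\nu )\} = \tau ^{-\nu }\), the convolution in Eq. (1) becomes a product. The inverse transform then gives the power rule term by term:

$$\begin{aligned} \mathcal {L}\{I^{\nu }x^{k\alpha }\} &= \tau ^{-\nu }\,\frac{\Gamma (k\alpha +1)}{\tau ^{k\alpha +1}} = \frac{\Gamma (k\alpha +1)}{\tau ^{k\alpha +\nu +1}}, \\ I^{\nu }x^{k\alpha } &= \frac{\Gamma (k\alpha +1)}{\Gamma (k\alpha +\nu +1)}\, x^{k\alpha +\nu }, \\ I^{\nu }L_{i}^{\alpha }(x) &= \sum _{k=0}^{n} a_{ik}\, \frac{\Gamma (k\alpha +1)}{\Gamma (k\alpha +\nu +1)}\, x^{k\alpha +\nu }, \quad i=0, 1, \ldots , n. \end{aligned}$$

Projecting each \(x^{k\alpha +\nu }\) onto the FLPs then yields the rows of \(F^{(\nu ,\alpha )}\). The symbols in this display are our own and need not coincide with those of the original displays.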

Now, we can expand \(\tilde{F}_{i}(s,x,\alpha )\) in terms of FLPs as

with

Therefore, we have

As examples, we consider the following two cases and present the corresponding fractional integration operational matrix of FLPs for each.

Case 1 For \(n=2\), the nodes \(x_{i},\;(i=0, 1, \ldots , n)\), are the zeros of the shifted Legendre polynomial of degree 3.

Case 2 For \(n=2,\;x_{i} = \frac{i}{2}, (i=0, 1, \ldots , n)\)
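Following the recipe of this section, each row of \(F^{(\nu ,\alpha )}\), defined by \(I^{\nu }L^{\alpha }(x) \simeq F^{(\nu ,\alpha )}L^{\alpha }(x)\), can be computed in two steps. First, the power rule \(I^{\nu }x^{k\alpha } = \frac{\Gamma (k\alpha +1)}{\Gamma (k\alpha +\nu +1)}x^{k\alpha +\nu }\) is applied to \(L_{i}^{\alpha }\). Second, the result is projected onto the FLPs in \(L^{2}[0,1]\). The sketch below does this for arbitrary nodes; it is our own implementation, and the names are hypothetical. The parameter values \(\alpha =\nu =\frac{1}{2}\) used for Case 2 in the usage lines are our choice, since the text does not fix them here.

```python
import math


def lagrange_coeffs(nodes):
    """Row i holds a_i0, ..., a_in with L_i(t) = sum_k a_ik * t**k."""
    rows = []
    for i, xi in enumerate(nodes):
        poly, denom = [1.0], 1.0
        for j, xj in enumerate(nodes):
            if j != i:
                # multiply the coefficient list (increasing powers) by (t - x_j)
                poly = [(poly[k - 1] if k > 0 else 0.0) - xj * (poly[k] if k < len(poly) else 0.0)
                        for k in range(len(poly) + 1)]
                denom *= xi - xj
        rows.append([c / denom for c in poly])
    return rows


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    m = len(A)
    M = [list(row) + [rhs] for row, rhs in zip(A, b)]
    for c in range(m):
        p = max(range(c, m), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, m):
            factor = M[r][c] / M[c][c]
            for k in range(c, m + 1):
                M[r][k] -= factor * M[c][k]
    x = [0.0] * m
    for r in reversed(range(m)):
        x[r] = (M[r][m] - sum(M[r][k] * x[k] for k in range(r + 1, m))) / M[r][r]
    return x


def integration_matrix(nodes, alpha, nu):
    """F^(nu, alpha): row i is the L^2[0, 1] projection of I^nu L_i^alpha onto the FLPs."""
    a, m = lagrange_coeffs(nodes), len(nodes)
    gram = [[sum(a[i][k] * a[j][q] / ((k + q) * alpha + 1) for k in range(m) for q in range(m))
             for j in range(m)] for i in range(m)]
    # power rule: I^nu x^(k alpha) = Gamma(k alpha + 1)/Gamma(k alpha + nu + 1) x^(k alpha + nu)
    ratio = [math.gamma(k * alpha + 1) / math.gamma(k * alpha + nu + 1) for k in range(m)]
    rows = []
    for i in range(m):
        # rhs_j = <I^nu L_i^alpha, L_j^alpha>, using <x^p, x^s> = 1/(p + s + 1)
        rhs = [sum(a[i][k] * ratio[k] * a[j][q] / ((k + q) * alpha + nu + 1)
                   for k in range(m) for q in range(m)) for j in range(m)]
        rows.append(solve(gram, rhs))
    return rows


if __name__ == "__main__":
    for row in integration_matrix([0.0, 0.5, 1.0], 0.5, 0.5):  # Case 2 with alpha = nu = 1/2
        print([round(v, 6) for v in row])
```

A quick check follows from two facts: \(\sum _{i}L_{i}^{\alpha }=1\), and \(I^{\alpha }1 = x^{\alpha }/\Gamma (\alpha +1)\) lies in the FLP space. Together they imply that the column sums of \(F^{(\alpha ,\alpha )}\) must equal \(x_{j}/\Gamma (\alpha +1)\).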

4.2 The FLPs operational matrix of the fractional derivative

The Caputo fractional derivative of order \(\nu \) of the function vector \(L^{\alpha }(x)\) can be approximated as follows

where \(D^{(\nu , \alpha )}\) is called the FLP operational matrix of fractional derivative in the Caputo sense.

Using Eq. (17), we obtain:

Taking the Laplace transform of Eq. (36), we get

where

Taking the inverse Laplace transform of \(F_{i}(r)\) yields

where

Now, we can expand \(\tilde{F}_{is}(x)\) in terms of FLPs as

with

Then, we have

and

Hence, we have
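An analogous sketch computes \(D^{(\nu ,\alpha )}\), defined by \(D^{\nu }L^{\alpha }(x) \simeq D^{(\nu ,\alpha )}L^{\alpha }(x)\), under the restriction \(0<\nu \le 1\). The Caputo derivative annihilates the constant term of each \(L_{i}^{\alpha }\). It maps \(x^{k\alpha }\) (k ≥ 1) to \(\frac{\Gamma (k\alpha +1)}{\Gamma (k\alpha -\nu +1)}x^{k\alpha -\nu }\), and the result is then projected onto the FLPs in \(L^{2}[0,1]\). This is our own implementation of the projection idea, not the authors' code, and the function names are hypothetical.

```python
import math


def lagrange_coeffs(nodes):
    """Row i holds a_i0, ..., a_in with L_i(t) = sum_k a_ik * t**k."""
    rows = []
    for i, xi in enumerate(nodes):
        poly, denom = [1.0], 1.0
        for j, xj in enumerate(nodes):
            if j != i:
                # multiply the coefficient list (increasing powers) by (t - x_j)
                poly = [(poly[k - 1] if k > 0 else 0.0) - xj * (poly[k] if k < len(poly) else 0.0)
                        for k in range(len(poly) + 1)]
                denom *= xi - xj
        rows.append([c / denom for c in poly])
    return rows


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    m = len(A)
    M = [list(row) + [rhs] for row, rhs in zip(A, b)]
    for c in range(m):
        p = max(range(c, m), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, m):
            factor = M[r][c] / M[c][c]
            for k in range(c, m + 1):
                M[r][k] -= factor * M[c][k]
    x = [0.0] * m
    for r in reversed(range(m)):
        x[r] = (M[r][m] - sum(M[r][k] * x[k] for k in range(r + 1, m))) / M[r][r]
    return x


def derivative_matrix(nodes, alpha, nu):
    """D^(nu, alpha) for 0 < nu <= 1: row i projects D^nu L_i^alpha onto the FLPs."""
    if not 0 < nu <= 1:
        raise ValueError("this sketch assumes 0 < nu <= 1")
    a, m = lagrange_coeffs(nodes), len(nodes)
    gram = [[sum(a[i][k] * a[j][q] / ((k + q) * alpha + 1) for k in range(m) for q in range(m))
             for j in range(m)] for i in range(m)]
    # D^nu x^(k alpha) = Gamma(k alpha + 1)/Gamma(k alpha - nu + 1) x^(k alpha - nu) for k >= 1;
    # the constant term (k = 0) is annihilated, so the sums below start at k = 1.
    ratio = [math.gamma(k * alpha + 1) / math.gamma(k * alpha - nu + 1) for k in range(1, m)]
    rows = []
    for i in range(m):
        rhs = [sum(a[i][k] * ratio[k - 1] * a[j][q] / ((k + q) * alpha - nu + 1)
                   for k in range(1, m) for q in range(m)) for j in range(m)]
        rows.append(solve(gram, rhs))
    return rows


if __name__ == "__main__":
    for row in derivative_matrix([0.0, 1 / 3, 2 / 3, 1.0], 0.5, 0.5):
        print([round(v, 6) for v in row])
```

For \(\nu =\alpha \), the derivative of every FLP stays in the FLP space, so the matrix is exact. Its column sums vanish because \(D^{\alpha }1=0\), and \(\sum _{i}x_{i}D_{ij}=\Gamma (\alpha +1)\) because \(D^{\alpha }x^{\alpha }=\Gamma (\alpha +1)\).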

5 Numerical method

The matrices presented in the previous section are valid for arbitrary nodes, so different choices of Lagrange polynomials are possible. In this paper, we choose the nodes \(x_{i}=\frac{i}{n}\).

We consider the following problems:

(a) The multi-order fractional differential equation

$$\begin{aligned} \tilde{G}(x, u(x), D^{\nu _{j}}u(x))=0, \quad 0 \le x \le 1, \end{aligned}$$(46)

subject to

$$\begin{aligned} u^{(k)}(0)=u_{0k}, \quad k=0, 1, \ldots , l-1, \end{aligned}$$(47)

with \(l= \lceil \nu _{r} \rceil \), where \(\nu _{j}\;(\nu _{1}< \nu _{2}< \cdots < \nu _{r})\) are positive real numbers and \(\lceil \cdot \rceil \) denotes the ceiling function.

(b) System of fractional differential equations

$$\begin{aligned} D^{\nu _{j}}u_{j}(x) = \tilde{G}_{j} (x, u_{1}(x), \ldots , u_{n}(x)), \quad 0< \nu _{j} \le 1, \; j=1, 2, \ldots , n, \; 0\le x \le 1, \end{aligned}$$(48)

with the initial conditions

$$\begin{aligned} u_{j}(0)=u_{j0}, \quad j=1, 2, \ldots , n. \end{aligned}$$

For problem (a), we expand \(D^{\nu _{r}}u(x)\) in terms of FLPs as

so, we get

where

where

Substituting the above relations into Eq. (46), we obtain an algebraic equation in the unknown vector C. Collocating this equation at \(x_{i},\;i=0, 1, 2, \ldots , n\), yields a system of n + 1 algebraic equations, which can be solved for C using Newton’s iterative method.
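The following is a minimal sketch of this collocation-plus-Newton procedure for a single equation with \(0<\nu \le 1\). It uses a hypothetical test problem chosen by us, \(D^{\nu }u = 1-u^{2}\) with \(u(0)=0\), whose solution for \(\nu =1\) is \(\tanh x\). The sketch writes \(D^{\nu }u \simeq C^{T}L^{\alpha }(x)\) and \(u \simeq u(0) + C^{T}F^{(\nu ,\alpha )}L^{\alpha }(x)\), with F built by \(L^{2}\) projection. It collocates at \(x_{i}=i/n\) and applies Newton's method to the residual. All names are ours.

```python
import math


def lagrange_coeffs(nodes):
    """Row i holds a_i0, ..., a_in with L_i(t) = sum_k a_ik * t**k."""
    rows = []
    for i, xi in enumerate(nodes):
        poly, denom = [1.0], 1.0
        for j, xj in enumerate(nodes):
            if j != i:
                poly = [(poly[k - 1] if k > 0 else 0.0) - xj * (poly[k] if k < len(poly) else 0.0)
                        for k in range(len(poly) + 1)]
                denom *= xi - xj
        rows.append([c / denom for c in poly])
    return rows


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    m = len(A)
    M = [list(row) + [rhs] for row, rhs in zip(A, b)]
    for c in range(m):
        p = max(range(c, m), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, m):
            factor = M[r][c] / M[c][c]
            for k in range(c, m + 1):
                M[r][k] -= factor * M[c][k]
    x = [0.0] * m
    for r in reversed(range(m)):
        x[r] = (M[r][m] - sum(M[r][k] * x[k] for k in range(r + 1, m))) / M[r][r]
    return x


def integration_matrix(nodes, alpha, nu):
    """F^(nu, alpha): row i is the L^2[0, 1] projection of I^nu L_i^alpha onto the FLPs."""
    a, m = lagrange_coeffs(nodes), len(nodes)
    gram = [[sum(a[i][k] * a[j][q] / ((k + q) * alpha + 1) for k in range(m) for q in range(m))
             for j in range(m)] for i in range(m)]
    ratio = [math.gamma(k * alpha + 1) / math.gamma(k * alpha + nu + 1) for k in range(m)]
    return [solve(gram, [sum(a[i][k] * ratio[k] * a[j][q] / ((k + q) * alpha + nu + 1)
                             for k in range(m) for q in range(m)) for j in range(m)])
            for i in range(m)]


def flp_values(nodes, alpha, x):
    """[L_0^alpha(x), ..., L_n^alpha(x)] from the product form at t = x**alpha."""
    t = x ** alpha
    return [math.prod((t - xj) / (xi - xj) for j, xj in enumerate(nodes) if j != i)
            for i, xi in enumerate(nodes)]


def solve_riccati(n, alpha, nu, u0=0.0, tol=1e-12, max_iter=50):
    """Collocation sketch for D^nu u = 1 - u**2, u(0) = u0, with 0 < nu <= 1."""
    nodes = [i / n for i in range(n + 1)]
    m = n + 1
    F = integration_matrix(nodes, alpha, nu)
    Phi = [flp_values(nodes, alpha, x) for x in nodes]  # Phi[r][j] = L_j^alpha(x_r)
    # G = Phi F^T: G[r][j] is the value at x_r of the projected I^nu L_j^alpha
    G = [[sum(Phi[r][k] * F[j][k] for k in range(m)) for j in range(m)] for r in range(m)]
    C = [0.0] * m
    for _ in range(max_iter):
        u = [u0 + sum(G[r][j] * C[j] for j in range(m)) for r in range(m)]
        R = [sum(Phi[r][j] * C[j] for j in range(m)) - 1.0 + u[r] ** 2 for r in range(m)]
        J = [[Phi[r][j] + 2.0 * u[r] * G[r][j] for j in range(m)] for r in range(m)]
        step = solve(J, R)  # Newton step: J * step = R
        C = [c - s for c, s in zip(C, step)]
        if max(abs(s) for s in step) < tol:
            break

    def approx(x):
        L = flp_values(nodes, alpha, x)
        return u0 + sum(C[i] * F[i][j] * L[j] for i in range(m) for j in range(m))

    return approx


if __name__ == "__main__":
    u = solve_riccati(6, 1.0, 1.0)
    for x in (0.25, 0.5, 1.0):
        print(x, u(x), math.tanh(x))  # for nu = 1 the exact solution is tanh(x)
```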

Similarly to problem (a), we expand \(D^{\nu _{j}}u_{j}(x), \, j=1, 2, \ldots , n\), in terms of FLPs as

From Eq. (28), we have

Substituting the above equations into Eq. (48) and collocating the result at the points \(x_{i}\), we obtain a system of algebraic equations, which can be solved using Newton’s iterative method.

6 Error analysis

A function \(f \in L^{2}[0,\;1]\) can be expanded as

We define the error function \(\widehat{E}(x)\) as follows:

Theorem 1

Let \(D^{k \alpha }f \in C (0, 1],\; k=0,\;1, \ldots ,\;n+1\), and \(Y_{n}^{\alpha } = \operatorname{span}\{L_{0}^{\alpha }(x),\;L_{1}^{\alpha }(x),\;\ldots ,\;L_{n}^{\alpha }(x) \}\). If \(f_{n}(x)\) is the best approximation to f(x) out of \(Y_{n}^{\alpha }\), then the error of the FLP approximation \(f_{n}(x)\) is bounded as follows:

$$\begin{aligned} \Vert f - f_{n} \Vert _{2} \le \frac{M_{\alpha }}{\Gamma ((n+1)\alpha +1)\sqrt{2(n+1)\alpha +1}}, \end{aligned}$$

where \(M_{\alpha }=\sup _{x \in [0,1]} \vert D^{(n+1) \alpha }f(x) \vert \).

Proof

We define

From the generalized Taylor's formula in Definition 3, we have

Since \(\tilde{f}(x) \in Y_{n}^{\alpha }\) and \(f_{n}(x)\) is the best approximation of f out of \(Y_{n}^{\alpha }\), using Eq. (56) we get

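The theorem is proved by taking square roots.

Therefore, the FLP approximations of f(x) converge whenever the right-hand side of the bound tends to zero as n increases, for example when the fractional derivatives \(D^{k\alpha }f\) are uniformly bounded on (0, 1]. \(\square \)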
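The chain of inequalities in the proof can be written out under two assumptions. First, \(\Vert \cdot \Vert _{2}\) is the \(L^{2}[0,1]\) norm. Second, \(\tilde{f}\) is the generalized Taylor polynomial \(\sum _{i=0}^{n} x^{i\alpha } D^{i\alpha }f(0^{+})/\Gamma (i\alpha +1)\), which belongs to \(Y_{n}^{\alpha }\) because each \(x^{i\alpha }\), \(i \le n\), is a combination of FLPs. The chain then reads (our transcription):

$$\begin{aligned} \Vert f-f_{n}\Vert _{2}^{2} \le \Vert f-\tilde{f}\Vert _{2}^{2} \le \int _{0}^{1} \Big (\frac{M_{\alpha }\, x^{(n+1)\alpha }}{\Gamma ((n+1)\alpha +1)}\Big )^{2} dx = \frac{M_{\alpha }^{2}}{\Gamma ((n+1)\alpha +1)^{2}\,(2(n+1)\alpha +1)}. \end{aligned}$$

Taking square roots gives \(\Vert f-f_{n}\Vert _{2} \le M_{\alpha }/\big (\Gamma ((n+1)\alpha +1)\sqrt{2(n+1)\alpha +1}\big )\).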

6.1 Upper bound of error vector for the fractional integration operational matrix

Now, we derive an upper bound for the error vector of \(F^{(\nu ,\alpha )}\) and show that this error tends to zero as the number of FLPs increases.

Theorem 2

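Let H be a Hilbert space and Y a closed subspace of H with \(\dim Y < \infty \), and let \(y_{1}, y_{2}, \ldots , y_{n}\) be any basis for Y. Let z be an arbitrary element in H and \(y^{*}\) be the unique best approximation to z out of Y. Then (Kreyszig 1978)

$$\begin{aligned} \Vert z - y^{*} \Vert ^{2} = \frac{G(z, y_{1}, y_{2}, \ldots , y_{n})}{G(y_{1}, y_{2}, \ldots , y_{n})}, \end{aligned}$$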

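where

$$\begin{aligned} G(z, y_{1}, y_{2}, \ldots , y_{n}) = \begin{vmatrix} \langle z, z\rangle & \langle z, y_{1}\rangle & \cdots & \langle z, y_{n}\rangle \\ \langle y_{1}, z\rangle & \langle y_{1}, y_{1}\rangle & \cdots & \langle y_{1}, y_{n}\rangle \\ \vdots & \vdots & \ddots & \vdots \\ \langle y_{n}, z\rangle & \langle y_{n}, y_{1}\rangle & \cdots & \langle y_{n}, y_{n}\rangle \end{vmatrix}. \end{aligned}$$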
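The Gram-determinant formula \(\Vert z-y^{*}\Vert ^{2} = G(z,y_{1},\ldots ,y_{n})/G(y_{1},\ldots ,y_{n})\) can be evaluated exactly when all functions are powers \(x^{p}\) on [0, 1]. In that case \(\langle x^{p},x^{q}\rangle = 1/(p+q+1)\). The sketch below is our own illustration and uses Python's `fractions.Fraction` for exact arithmetic. It reproduces the classical value 1/2800 for the distance of \(x^{3}\) from the polynomials of degree at most 2. It also evaluates the distance of \(x^{3/2}\) from the FLP space for \(\alpha = 1/2\), n = 2; this space is spanned by \(1, x^{1/2}, x\), and \(x^{3/2}\) is the top term of \(I^{1/2}L_{2}^{1/2}\). The function names are hypothetical.

```python
from fractions import Fraction


def det(M):
    """Exact determinant of a square matrix of Fractions (Gaussian elimination)."""
    M = [row[:] for row in M]
    n = len(M)
    result = Fraction(1)
    for c in range(n):
        p = next((r for r in range(c, n) if M[r][c] != 0), None)
        if p is None:
            return Fraction(0)
        if p != c:
            M[c], M[p] = M[p], M[c]
            result = -result
        result *= M[c][c]
        for r in range(c + 1, n):
            factor = M[r][c] / M[c][c]
            for k in range(c, n):
                M[r][k] -= factor * M[c][k]
    return result


def monomial_inner(p, q):
    """<x**p, x**q> in L^2[0, 1] = 1/(p + q + 1) for rational exponents p, q > -1/2."""
    return 1 / (Fraction(p) + Fraction(q) + 1)


def gram_det(exponents):
    """Gram determinant G(x**e_1, ..., x**e_k) with respect to monomial_inner."""
    return det([[monomial_inner(p, q) for q in exponents] for p in exponents])


def best_approx_error_sq(z, basis):
    """||x**z - y*||^2 = G(x**z, basis) / G(basis) for Y = span{x**e : e in basis} (Theorem 2)."""
    return gram_det([z] + list(basis)) / gram_det(basis)


if __name__ == "__main__":
    print(best_approx_error_sq(3, [0, 1, 2]))  # prints 1/2800
    print(best_approx_error_sq(Fraction(3, 2), [0, Fraction(1, 2), 1]))
```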

The error vector \(\tilde{E}^{(\nu )}\) of the operational matrix \(F^{(\nu ,\alpha )}\) is given by

We approximate \(\tilde{F}_{i}(s,x,\alpha )\) as follows

From Theorem 2, we have:

Then, using Eqs. (32) and (62), we get

From the above discussion and Eq. (54), we conclude that the error vector \(\tilde{E}^{(\nu )}\) tends to zero as the number of fractional-order Lagrange polynomials increases.
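For a single row, the quantities involved can be written explicitly. The following is our restatement rather than the original display, and the notation is ours. Let \(e_{i}\) denote the i-th component of \(\tilde{E}^{(\nu )}\) and \(F_{ij}\) the entries of \(F^{(\nu ,\alpha )}\), and use the coefficients \(a_{ik}\) with \(L_{i}^{\alpha }(x)=\sum _{k}a_{ik}x^{k\alpha }\). Because row i of \(F^{(\nu ,\alpha )}\) is the best approximation of \(I^{\nu }L_{i}^{\alpha }\) out of the FLP space, Theorem 2 and the triangle inequality give

$$\begin{aligned} e_{i}(x) &= I^{\nu }L_{i}^{\alpha }(x) - \sum _{j=0}^{n} F_{ij} L_{j}^{\alpha }(x), \\ \Vert e_{i}\Vert _{2} &= \left( \frac{G(I^{\nu }L_{i}^{\alpha }, L_{0}^{\alpha }, \ldots , L_{n}^{\alpha })}{G(L_{0}^{\alpha }, \ldots , L_{n}^{\alpha })}\right) ^{1/2} \le \sum _{k=0}^{n} \vert a_{ik}\vert \frac{\Gamma (k\alpha +1)}{\Gamma (k\alpha +\nu +1)} \left( \frac{G(x^{k\alpha +\nu }, L_{0}^{\alpha }, \ldots , L_{n}^{\alpha })}{G(L_{0}^{\alpha }, \ldots , L_{n}^{\alpha })}\right) ^{1/2}. \end{aligned}$$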

7 Illustrative test problems

In this section, we apply our method to solve the following examples.

Example 1

Consider the following fractional differential equation (Lakestani et al. 2012):

subject to

The exact solution of this problem is \(u(x)=\sqrt{x}\).

Applying the present method for \(n=1\) and \(\alpha = \nu =\frac{1}{2}\), the problem reduces to:

where \(\sqrt{x}+\frac{\sqrt{\pi }}{2} \simeq E^{T}L^{\alpha }(x)\).

Using Eqs. (26) and (32) with \( \nu =\alpha = \frac{1}{2}\), we have

By substituting these matrices into Eq. (65) and collocating at the points \(x_{i}\), we obtain the exact solution, whereas the errors of the method based on B-spline functions (Lakestani et al. 2012) are presented in Table 1.
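The claim that the exact solution is recovered can be reproduced with a short sketch. The equation itself is not displayed in the text, so we assume the problem is \(D^{1/2}u + u = \sqrt{x}+\frac{\sqrt{\pi }}{2}\) with \(u(0)=0\). This is consistent with the exact solution \(u=\sqrt{x}\) and with the right-hand side approximation \(E^{T}L^{\alpha }(x)\) quoted above. For n = 1 and nodes 0, 1, the FLPs are \(L_{0}^{1/2}=1-\sqrt{x}\) and \(L_{1}^{1/2}=\sqrt{x}\), so the right-hand side is represented exactly. The F matrix below is built by \(L^{2}\) projection, and all names are ours.

```python
import math


def lagrange_coeffs(nodes):
    """Row i holds a_i0, ..., a_in with L_i(t) = sum_k a_ik * t**k."""
    rows = []
    for i, xi in enumerate(nodes):
        poly, denom = [1.0], 1.0
        for j, xj in enumerate(nodes):
            if j != i:
                poly = [(poly[k - 1] if k > 0 else 0.0) - xj * (poly[k] if k < len(poly) else 0.0)
                        for k in range(len(poly) + 1)]
                denom *= xi - xj
        rows.append([c / denom for c in poly])
    return rows


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    m = len(A)
    M = [list(row) + [rhs] for row, rhs in zip(A, b)]
    for c in range(m):
        p = max(range(c, m), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, m):
            factor = M[r][c] / M[c][c]
            for k in range(c, m + 1):
                M[r][k] -= factor * M[c][k]
    x = [0.0] * m
    for r in reversed(range(m)):
        x[r] = (M[r][m] - sum(M[r][k] * x[k] for k in range(r + 1, m))) / M[r][r]
    return x


def integration_matrix(nodes, alpha, nu):
    """F^(nu, alpha): row i is the L^2[0, 1] projection of I^nu L_i^alpha onto the FLPs."""
    a, m = lagrange_coeffs(nodes), len(nodes)
    gram = [[sum(a[i][k] * a[j][q] / ((k + q) * alpha + 1) for k in range(m) for q in range(m))
             for j in range(m)] for i in range(m)]
    ratio = [math.gamma(k * alpha + 1) / math.gamma(k * alpha + nu + 1) for k in range(m)]
    return [solve(gram, [sum(a[i][k] * ratio[k] * a[j][q] / ((k + q) * alpha + nu + 1)
                             for k in range(m) for q in range(m)) for j in range(m)])
            for i in range(m)]


# Assumed form of Example 1: D^(1/2) u + u = sqrt(x) + sqrt(pi)/2, u(0) = 0.
nodes, alpha, nu = [0.0, 1.0], 0.5, 0.5  # n = 1, x_i = i/n
F = integration_matrix(nodes, alpha, nu)
g = math.sqrt(math.pi) / 2
E = [g, 1.0 + g]  # sqrt(x) + g = g*L_0 + (1 + g)*L_1 exactly
# L^alpha(x_i) is the unit vector e_i at x_0 = 0 and x_1 = 1, so collocation gives (I + F^T) C = E.
A = [[(1.0 if r == j else 0.0) + F[j][r] for j in range(2)] for r in range(2)]
C = solve(A, E)


def u(x):
    """u(x) ~ C^T F L^alpha(x); the initial value u(0) = 0 contributes nothing."""
    L = [1.0 - math.sqrt(x), math.sqrt(x)]
    return sum(C[i] * F[i][j] * L[j] for i in range(2) for j in range(2))


print(C, u(0.25))  # C is close to [sqrt(pi)/2, sqrt(pi)/2] and u(0.25) to 0.5
```

The recovery is exact for a structural reason. \(D^{1/2}\sqrt{x}=\sqrt{\pi }/2\) is a constant, and \(I^{1/2}\) of a constant is a multiple of \(\sqrt{x}\). Both therefore lie in the two-dimensional FLP space, where the projection introduces no error.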

Example 2

Consider the following linear initial value problem (Bhrawy et al. 2014; Hashim et al. 2009; Saadatmandi and Dehghan 2010; Diethelm et al. 2002)

subject to

The second initial condition applies only for \(\nu > 1\). The exact solution of this problem is as follows (Stoer and Bulirsch 1996):

For \(\nu = 1\), the exact solution is \(u(x) = e^{-x}\), and for \(\nu = 2\), it is \(u(x) = \cos (x)\).
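The following sketch assumes that the problem is \(D^{\nu }u + u = 0\) with \(u(0)=1\) (and \(u'(0)=0\) when \(\nu > 1\)). This is consistent with the stated exact solutions \(e^{-x}\) and \(\cos x\). Its exact solution is then the Mittag-Leffler function \(E_{\nu }(-x^{\nu })=\sum _{k\ge 0}(-x^{\nu })^{k}/\Gamma (\nu k+1)\). The sketch treats only \(0<\nu \le 1\), takes \(\alpha =\nu \), and collocates at \(x_{i}=i/n\) as in Sect. 5. It compares the FLP solution with a truncated Mittag-Leffler series. The names are ours, and the projection-based F matrix is our implementation.

```python
import math


def lagrange_coeffs(nodes):
    """Row i holds a_i0, ..., a_in with L_i(t) = sum_k a_ik * t**k."""
    rows = []
    for i, xi in enumerate(nodes):
        poly, denom = [1.0], 1.0
        for j, xj in enumerate(nodes):
            if j != i:
                poly = [(poly[k - 1] if k > 0 else 0.0) - xj * (poly[k] if k < len(poly) else 0.0)
                        for k in range(len(poly) + 1)]
                denom *= xi - xj
        rows.append([c / denom for c in poly])
    return rows


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    m = len(A)
    M = [list(row) + [rhs] for row, rhs in zip(A, b)]
    for c in range(m):
        p = max(range(c, m), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, m):
            factor = M[r][c] / M[c][c]
            for k in range(c, m + 1):
                M[r][k] -= factor * M[c][k]
    x = [0.0] * m
    for r in reversed(range(m)):
        x[r] = (M[r][m] - sum(M[r][k] * x[k] for k in range(r + 1, m))) / M[r][r]
    return x


def integration_matrix(nodes, alpha, nu):
    """F^(nu, alpha): row i is the L^2[0, 1] projection of I^nu L_i^alpha onto the FLPs."""
    a, m = lagrange_coeffs(nodes), len(nodes)
    gram = [[sum(a[i][k] * a[j][q] / ((k + q) * alpha + 1) for k in range(m) for q in range(m))
             for j in range(m)] for i in range(m)]
    ratio = [math.gamma(k * alpha + 1) / math.gamma(k * alpha + nu + 1) for k in range(m)]
    return [solve(gram, [sum(a[i][k] * ratio[k] * a[j][q] / ((k + q) * alpha + nu + 1)
                             for k in range(m) for q in range(m)) for j in range(m)])
            for i in range(m)]


def flp_values(nodes, alpha, x):
    """[L_0^alpha(x), ..., L_n^alpha(x)] from the product form at t = x**alpha."""
    t = x ** alpha
    return [math.prod((t - xj) / (xi - xj) for j, xj in enumerate(nodes) if j != i)
            for i, xi in enumerate(nodes)]


def mittag_leffler(z, a, terms=60):
    """Truncated series E_a(z) = sum_k z**k / Gamma(a*k + 1); adequate for |z| <= 1, 0 < a <= 2."""
    return sum(z ** k / math.gamma(a * k + 1) for k in range(terms))


def solve_linear(n, alpha, nu, u0=1.0):
    """Collocation sketch for D^nu u + u = 0, u(0) = u0, with 0 < nu <= 1.

    With D^nu u ~ C^T L^alpha and u ~ u0 + C^T F L^alpha, collocation at x_i = i/n
    gives the linear system Phi (I + F^T) C = -u0 * [1, ..., 1].
    """
    nodes = [i / n for i in range(n + 1)]
    m = n + 1
    F = integration_matrix(nodes, alpha, nu)
    Phi = [flp_values(nodes, alpha, x) for x in nodes]  # Phi[r][j] = L_j^alpha(x_r)
    A = [[Phi[r][j] + sum(Phi[r][k] * F[j][k] for k in range(m)) for j in range(m)]
         for r in range(m)]
    C = solve(A, [-u0] * m)

    def approx(x):
        L = flp_values(nodes, alpha, x)
        return u0 + sum(C[i] * F[i][j] * L[j] for i in range(m) for j in range(m))

    return approx


if __name__ == "__main__":
    for nu in (0.85, 1.0):
        u = solve_linear(6, nu, nu)  # alpha = nu
        for x in (0.25, 0.5, 1.0):
            print(nu, x, u(x), mittag_leffler(-x ** nu, nu))
```

Choosing \(\alpha =\nu \) makes the exact solution a power series in \(x^{\alpha }\). This is why a small number of FLPs already gives close agreement in this sketch.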

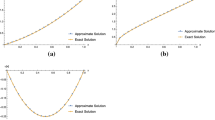

The numerical results for u(x) with \(n=4,\;\alpha =1\) and \(\nu = 0.65, 0.75, 0.85, 0.95\) and 1 are plotted in Fig. 2a. We also present results for \(\nu > 1\): Fig. 2b shows the approximate solutions obtained for \(n=4,\;\alpha =1\) and \(\nu = 1.65, 1.75, 1.85, 1.95\) and 2.

Curves of exact and numerical values of u(x) for various values of \(\nu \), in Example 2

These figures show that our approximate solutions are in good agreement with the exact solutions.

In addition, we solve this problem for \(\nu =2,\;\alpha =1\) with \(n=4\). The absolute error between the approximate solution and the exact solution for this case is plotted in Fig. 3.

For further investigation, we apply our method with \(\alpha =\nu =0.85\). Table 2 lists the absolute errors between our numerical results and the exact solution for various values of x, together with the results of the method in Yuzbasi (2013). Figure 2 and Table 2 demonstrate the validity and effectiveness of our method for this problem.

Absolute error between the exact and approximate solutions, for \(\alpha =1,\;\nu =2\), in Example 2

Example 3

Consider the following system of fractional differential equations (Rahimkhani et al. 2016)

subject to

The exact solution of this system, when \(\alpha =\nu _{1}= \nu _{2} =1\), is \(u_{1}(x)=e^{x}\sin (x),\;u_{2}(x)=e^{x}\cos (x)\). Applying the proposed method, this system reduces to

where \(1 \simeq E^{T} L^{\alpha }(x)\).

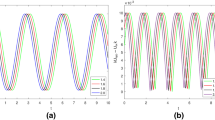

Plots of the solutions of the system in Example 3, when \(\alpha =\nu _{1} = \nu _{2} = 1\)

Absolute error between the approximate and exact solutions with \(n=8\) and \(\alpha =\nu _{1} = \nu _{2} = 1\) for (a) \(u_{1}(x)\) and (b) \(u_{2}(x)\) in Example 3

Figure 4 shows the numerical solutions of this system obtained by our method with \(n=8\). Also, Fig. 5 displays the absolute errors between the approximate and exact solutions at \(\alpha =\nu _{1} = \nu _{2} = 1\) and \(n=8\) for \(u_{1}(x)\) and \(u_{2}(x)\).

Moreover, since exact solutions for \(\nu _{1} \ne 1,\;\nu _{2} \ne 1\) are not available in closed form, we measure the reliability of the method using the squared norm of the error, \(\Vert R_{N}(x) \Vert ^{2}\).

Table 3 shows \(\Vert R_{N} \Vert ^{2}\) for \(\alpha =\nu _{1}=\nu _{2}\) and \(n=8\). This table demonstrates that the FLPs are effective in solving systems of fractional differential equations.

Example 4

We consider the following nonlinear system of fractional differential equations (Rahimkhani et al. 2016)

subject to the initial conditions

The exact solution of this system, for \(\nu _{1}=\nu _{2}=\alpha =1\), is \(u_{1}(x)= e^{\frac{x}{2}},\;u_{2}(x)=xe^{x}\).

We solve this system using the present method with \(n=8\). Figure 6 shows the approximate solutions for \(\alpha =\nu _{1}=\nu _{2}\) with various values of \(\alpha \), together with the exact solution. Also, Fig. 7 displays the absolute errors between the approximate and exact solutions at \(\alpha = \nu _{1} = \nu _{2} = 1\) and \(n=8\) for this problem.

The comparison of approximate solutions for \(n=8, \alpha =\nu _{1}=\nu _{2}\) and the exact solution for Example 4

Absolute error obtained between the approximate solutions and the exact solution with \(n=8\) and \(\alpha =\nu _{1} = \nu _{2} = 1\) for (a) \(u_{1}(x)\) and (b) \(u_{2}(x)\) in Example 4

Example 5

In this example, we consider the following nonlinear initial value problem (Kazem et al. 2013)

The exact solution of this problem is \(u(x)=x^2\). Applying the technique described in Sect. 5 with \(n=4\) and \(\alpha =1\), we obtain the exact solution.

Example 6

Consider the following fractional Riccati equation (Jafari et al. 2011; Keshavarz et al. 2014)

subject to initial condition

The exact solution, when \(\nu = 1\), is

By applying the technique described in Sect. 5, the problem becomes

where

We apply the FLP approach to this problem with \(n=5\) and various values of \(\nu \) and \(\alpha \). Jafari et al. (2011) plot the approximate solution obtained by the Legendre wavelet method with \(k = 1,\; M = 25,\;\nu =1\). Keshavarz et al. (2014) plot the absolute difference between the exact solution and the approximate solution obtained by the Bernoulli wavelet method with \(k = 1,\; M = 5,\; \nu =1\). Comparing these figures with Fig. 8a, we see that our method achieves a reasonable approximation of the exact solution. Moreover, Fig. 8b shows the approximate solutions obtained by the FLP scheme for \(\alpha =1,\;n=5\) and different values of \(\nu \). These results show that, as \(\nu \) approaches 1, the approximate solutions approach the exact solution.

Exact solutions for \(\nu \ne 1\) are not available in closed form. Therefore, to demonstrate the efficiency of the proposed method for this problem, we define the norm of the residual error as follows

Table 4 displays \(\Vert \mathrm{Res}_{n} \Vert ^{2}\) for several values of n and various values of \(\nu = \alpha \). Table 4 and the figures show the advantage of the present technique for solving this nonlinear problem.

Absolute error between the exact and approximate solutions, and comparison of u(x) for various values of \(\nu \) with the exact solution, with \(n= 5\) and \(\alpha =1\), for Example 6

a Comparing the exact and approximate solutions, b comparison of u(x) with various values of \(\nu \) and the exact solution, with \(n= 5\) and \(\alpha =1\) for Example 7

Example 7

Consider the following fractional Riccati equation (Jafari et al. 2011)

subject to initial condition \(u(0)=0\). The exact solution, when \(\nu = 1\), is

By setting \(n=5\) and \(\alpha = 1\), we obtain the fractional-order Lagrange polynomial solution for various values of \(\nu \). Figure 9b shows the FLP solutions for \(\alpha =1\) and various values of \(\nu \), and indicates that, as \(\nu \) approaches 1, the FLP solution approaches the exact solution. Also, the approximate solution of this problem obtained by the Legendre wavelet method with \(k = 1, M = 25\) is plotted in Jafari et al. (2011). From Fig. 9, we see that a good approximation of the exact solution is achieved using a small number of basis functions.

Exact solutions for \(\nu \ne 1\) are not available in closed form. Therefore, to show the efficiency of the present method for this problem, we define the norm of the residual error as follows

Table 5 displays \(\Vert \mathrm{Res}_{4} \Vert ^{2}\) with various values of \(\nu = \alpha \). From Table 5 and Fig. 9, we can see the advantage of the proposed method for this example.

8 Conclusion

In this paper, new functions named fractional-order Lagrange polynomials (FLPs), based on Lagrange polynomials, have been constructed to solve fractional differential equations. In addition, we have applied the fractional-order Lagrange polynomials to systems of FDEs.

First, we presented a new representation of Lagrange polynomials. Next, we introduced a new set of functions called fractional-order Lagrange polynomials (FLPs). We also obtained operational matrices of the Caputo fractional derivative and the Riemann–Liouville fractional integration in general form, without restricting the nodes of the Lagrange polynomials. These matrices were obtained using the Laplace transform. The operational matrix of fractional integration, together with the collocation method, was used to approximate the numerical solutions of the FDEs. We compared our numerical results with exact solutions and with the solutions obtained by some other numerical methods. These comparisons show that, in the examples considered, the present method is more accurate than those methods.

References

Abd-Elhameed WM, Youssri YH (2017) Fifth-kind orthonormal Chebyshev polynomial solutions for fractional differential equations. Comput Appl Math 1–25. https://doi.org/10.1007/s40314-017-0488-z

Baillie RT (1996) Long memory processes and fractional integration in econometrics. J Econ 73:5–59

Benson DA, Meerschaert MM, Revielle J (2013) Fractional calculus in hydrologic modeling: a numerical perspective. Adv Water Resour 51:479–497

Bhrawy AH, Alhamed YA, Baleanu D (2014) New spectral techniques for systems of fractional differential equations using fractional-order generalized Laguerre orthogonal functions. Fract Calc Appl Anal 17:1138–1157

Bohannan GW (2008) Analog fractional order controller in temperature and motor control applications. J Vib Control 14:1487–1498

Burden RL, Faires JD (2010) Numerical analysis, 9th edn. Brooks/Cole Cengage Learning, Boston

Daftardar-Gejji V, Jafari H (2007) Solving a multi-order fractional differential equation using Adomian decomposition. Appl Math Comput 189:541–548

Dehghan M, Manafian J, Saadatmandi A (2010) Solving nonlinear fractional partial differential equations using the homotopy analysis method. Numer Methods Partial Differ Equ 26:448–479

Diethelm K, Ford NJ, Freed AD (2002) A predictor–corrector approach for the numerical solution of fractional differential equations. Nonlinear Dyn 29:3–22

Engheta N (1996) On fractional calculus and fractional multipoles in electromagnetism. IEEE Trans Antennas Propag 44:554–566

Foroozandeh Z, Shamsi M (2012) Solution of nonlinear optimal control problems by the interpolating scaling functions. Acta Astronaut 72:21–26

Hall MG, Barrick TR (2008) From diffusion-weighted MRI to anomalous diffusion imaging. Magn Reson Med 59:447–455

Hashim I, Abdulaziz O, Momani S (2009) Homotopy analysis method for fractional IVPs. Commun Nonlinear Sci Numer Simul 14:674–684

He JH (1988) Nonlinear oscillation with fractional derivative and its applications. In: Proceedings of the international conference on vibrating engineering. Dalian, China

Jafari H, Yousefi SA, Firoozjaee MA, Momani S, Khalique CM (2011) Application of Legendre wavelets for solving fractional differential equations. Comput Math Appl 62:1038–1045

Javidi M, Ahmad B (2013) Numerical solution of fractional partial differential equations by numerical Laplace inversion technique. Adv Differ Equ 1:375. https://doi.org/10.1186/1687-1847-2013-375

Kazem S, Abbasbandy S, Kumar S (2013) Fractional-order Legendre functions for solving fractional-order differential equations. Appl Math Model 37:5498–551

Keshavarz E, Ordokhani Y, Razzaghi M (2014) Bernoulli wavelet operational matrix of fractional order integration and its applications in solving the fractional order differential equations. Appl Math Model 38:6038–6051

Kreyszig E (1978) Introductory functional analysis with applications. Wiley, New York

Krishnasamy VS, Razzaghi M (2016) The numerical solution of the Bagley–Torvik equation with fractional Taylor method. J Comput Nonlinear Dyn. https://doi.org/10.1115/1.4032390

Lakestani M, Dehghan M, Irandoust-pakchin S (2012) The construction of operational matrix of fractional derivatives using B-spline functions. Commun Nonlinear Sci Numer Simul 17:1149–1162

Larsson S, Racheva M, Saedpanah F (2015) Discontinuous Galerkin method for an integro-differential equation modeling dynamic fractional order viscoelasticity. Comput Methods Appl Mech Eng 283:196–209

Magin RL (2004) Fractional calculus in bioengineering. Crit Rev Biomed Eng 32:1–104

Mainardi F (1997) Fractional calculus: some basic problems in continuum and statistical mechanics. Springer, New York

Mashayekhi S, Razzaghi M (2016) Numerical solution of distributed order fractional differential equations by hybrid functions. J Comput Phys 315:169–181

Meerschaert MM, Tadjeran C (2006) Finite difference approximations for two-sided space-fractional partial differential equations. Appl Numer Math 56:80–90

Miller KS, Ross B (1993) An introduction to the fractional calculus and fractional differential equations. Wiley, New York

Mustafa MM, Ghanim IN (2014) Numerical solution of linear Volterra–Fredholm Integral equations using Lagrange polynomials. Math Theory Model 5:137–146

Odibat Z, Momani S (2006) Application of variational iteration method to nonlinear differential equations of fractional order. Int J Nonlinear Sci Numer Simul 1:15–27

Odibat Z, Shawagfeh NT (2007) Generalized Taylor's formula. Appl Math Comput 186:286–293

Oldham KB, Spanier J (1974) The fractional calculus. Academic Press, New York

Podlubny I (1999) Fractional differential equations. Academic Press, San Diego

Rahimkhani P, Ordokhani Y, Babolian E (2016) Fractional-order Bernoulli wavelets and their applications. Appl Math Model 40:8087–8107

Rashed MT (2004) Lagrange interpolation to compute the numerical solutions of differential, integral and integro-differential equations. Appl Math Comput 151:869–878

Rehman MU, Khan RA (2012) A numerical method for solving boundary value problems for fractional differential equations. Appl Math Model 36:894–907

Rossikhin YA, Shitikova MV (1997) Applications of fractional calculus to dynamic problems of linear and nonlinear hereditary mechanics of solids. Appl Mech Rev 50:15–67

Saadatmandi A, Dehghan M (2010) A new operational matrix for solving fractional-order differential equations. Comput Math Appl 59:1326–1336

Shahsavaran A (2011) Lagrange functions method for solving nonlinear Hammerstein Fredholm–Volterra integral equations. Appl Math Sci 49:2443–2450

Shamsi M, Razzaghi M (2004) Numerical solution of the controlled duffing oscillator by the interpolating scaling functions. J Electromagn Waves Appl 18:691–705

Stoer J, Bulirsch R (2002) Introduction to numerical analysis, 3rd edn. Springer, New York

Yuzbasi S (2013) Numerical solutions of fractional Riccati type differential equations by means of the Bernstein polynomials. Appl Math Comput 219:6328–6343


Additional information

Communicated by José Tenreiro Machado.


About this article

Cite this article

Sabermahani, S., Ordokhani, Y. & Yousefi, S.A. Numerical approach based on fractional-order Lagrange polynomials for solving a class of fractional differential equations. Comp. Appl. Math. 37, 3846–3868 (2018). https://doi.org/10.1007/s40314-017-0547-5


DOI: https://doi.org/10.1007/s40314-017-0547-5

Keywords

- Fractional-order Lagrange polynomial

- Fractional differential equations

- Operational matrix

- Collocation method

- Laplace transform

- Numerical solution