Abstract

Support vector machine (SVM) is a widely used method for classification. Proximal support vector machine (PSVM) is an extension of SVM that leads to a fast and simple algorithm for generating a classifier. Motivated by the low computational cost of PSVM and the sparsity of solutions induced by the \(\ell _{1}\)-norm, in this paper we first propose a PSVM with a cardinality constraint, which is then relaxed by the \(\ell _{1}\)-norm and leads to a trade-off \(\ell _{1}-\ell _{2}\) regularized sparse PSVM. Next, we convert this \(\ell _{1}-\ell _{2}\) regularized sparse PSVM into an equivalent form of \(\ell _{1}\) regularized least squares (LS) and solve it by a specialized interior-point method proposed by Kim et al. (IEEE J Sel Top Signal Process 1(4):606–617, 2007). Finally, the \(\ell _{1}-\ell _{2}\) regularized sparse PSVM is illustrated on real-world datasets taken from the University of California, Irvine Machine Learning Repository (UCI Repository). Moreover, we compare the numerical results with existing models such as generalized eigenvalue proximal SVM (GEPSVM), PSVM, and SVM-Light. The numerical results show that the \(\ell _{1}-\ell _{2}\) regularized sparse PSVM achieves not only higher classification accuracy than GEPSVM, PSVM, and SVM-Light, but also a sparser classifier than the \(\ell _{1}\)-PSVM.


1 Introduction

In classification problems, we are given a set of training data \(\{(x_{1}, y_{1}), \cdots ,(x_{l}, y_{l})\}\), where \(x_{i} \in {\mathbb {R}}^{n}\) is an input vector and \(y_{i}\in \{+1,-1\}\) is its binary label. We wish to find a classifier that separates the training data into two sets, one with the label “\(+1\)” and the other with the label “\(-1\)”, so that, given a new input \(x\), the classifier can assign it a label \(y\in \{+1,-1\}\).

SVM has proved to be a powerful tool for classification, pattern recognition, and regression problems in a wide range of areas, and it has drawn much attention in recent years. Both hard- and soft-margin SVM were proposed by Vapnik [19, 20] based on the structural risk minimization (SRM) principle, which is realized by maximizing the margin between two parallel separating hyperplanes. To reduce the computational cost, several variants of SVM have been developed. For example, Suykens et al. [16, 17] proposed the least squares support vector machine (LS-SVM), in which the objective function is modified by a least squares error term and the inequality constraints are replaced by equality constraints. Recent studies indicate that LS-SVM is also effective for feature selection and linear regression. Fung and Mangasarian [11] developed the proximal SVM (PSVM) for classification. PSVM leads to a fast and simple algorithm that generates a classifier by solving a single system of linear equations; this formulation greatly simplifies the problem and requires considerably less computational time than SVM. Chen and Tian [7] replaced the \(\ell _{1}\)-norm with the \(\ell _{p}\)-norm, presented an \(\ell _{p}\)-norm proximal SVM, and studied its applications. Deng et al. [9] presented a detailed study of SVM, including algorithms and extensions. Another recent direction extends supervised SVM to semi-supervised SVM, in which the data consist of two parts: a labeled training set and an unlabeled set. For instance, Bai et al. [1, 2] developed semidefinite programming (conic optimization) formulations for semi-supervised SVM.

Furthermore, \(\ell _{1}\) regularization continues to receive considerable interest. In signal processing, it arises in several contexts, including basis pursuit denoising [8] and signal recovery from incomplete measurements [4, 6]. In statistics, \(\ell _{1}\) regularization underlies the well-known Lasso [18] for feature selection.

We are motivated by the low computational cost of PSVM and the sparsity of solutions induced by the \(\ell _{1}\)-norm. In this paper, we first propose a PSVM with a cardinality constraint, which is then relaxed by the \(\ell _{1}\)-norm and leads to a trade-off \(\ell _{1}-\ell _{2}\) regularized sparse PSVM. Next, we convert this \(\ell _{1}-\ell _{2}\) regularized sparse PSVM into an equivalent form of \(\ell _{1}\) regularized least squares (LS) and solve it by a specialized interior-point method proposed by Kim et al. [12]. Finally, the \(\ell _{1}-\ell _{2}\) regularized sparse PSVM is illustrated on real-world datasets taken from the UCI Repository. We compare the numerical results with existing models such as GEPSVM, PSVM, and SVM-Light. The results show that the \(\ell _{1}-\ell _{2}\) regularized sparse PSVM achieves not only higher classification accuracy than GEPSVM, PSVM, and SVM-Light, but also a sparser classifier than the \(\ell _{1}\)-PSVM.

The paper is organized as follows. In Sect. 2, we briefly recall the basic concepts of SVM and PSVM. In Sect. 3, we add a cardinality constraint to the PSVM model and then reformulate it as the \(\ell _{1}-\ell _{2}\) regularized sparse PSVM. In Sect. 4, we describe a specialized interior-point method that uses a preconditioned conjugate gradient algorithm to compute the search direction. Section 5 gives experimental results on datasets taken from the UCI repository. Finally, concluding remarks are given in Sect. 6.

2 Preliminaries

In this section, we briefly review the concepts of SVM and PSVM.

2.1 SVM for Binary Classification

We start by recalling SVM, a learning system that uses a hypothesis space of linear functions in a high-dimensional feature space and is trained with an optimization algorithm that implements a learning bias derived from statistical learning theory [20]. Here, we consider the simplest case, linear binary classification, to show how an SVM works.

Given a training set \(\{(x_{1},y_{1}),\cdots ,(x_{l},y_{l})\}\subseteq {\mathbb {R}}^{n}\times \{-1,+1\}\), it is linearly separable if there exists a hyperplane \(w^\mathrm{T}x+b=0\) such that

\[ y_{i}(w^\mathrm{T}x_{i}+b)\geqslant 1,\quad i=1,\cdots ,l. \tag{2.1} \]

As shown in Fig. 1, the margin, i.e., the distance between the two supporting hyperplanes \(w^\mathrm{T}x+b=\pm 1\), is \(\frac{2}{\Vert w\Vert _{2}}\). The solid and hollow dots represent the points of the “\(+1\)” and “\(-1\)” classes, respectively, and the solid line shows the separating hyperplane \(w^\mathrm{T}x+b=0\) between them.

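The larger the margin, the better the separation. SVM therefore seeks the separating hyperplane that maximizes the margin \(\frac{2}{\Vert w\Vert _{2}}\), or equivalently minimizes \(\frac{1}{2}\Vert w\Vert _{2}^{2}\). This leads to the following quadratic programming problem:

\[ \min _{w,b}\ \frac{1}{2}\Vert w\Vert _{2}^{2}\quad \mathrm{s.t.}\quad y_{i}(w^\mathrm{T}x_{i}+b)\geqslant 1,\quad i=1,\cdots ,l. \]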

When the points are not linearly separable, we generalize the method by relaxing the separability constraints Eq. (2.1). This is done by introducing nonnegative slack variables \(\xi _{i}\), \(i=1,\cdots ,l\), so that the constraints become

\[ y_{i}(w^\mathrm{T}x_{i}+b)\geqslant 1-\xi _{i},\quad \xi _{i}\geqslant 0,\quad i=1,\cdots ,l. \]

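As shown in Fig. 2, this means that points may lie in the region between the two dashed lines. However, each violating point is penalized by a misclassification cost, i.e., an increase in the objective function of Eq. (2.4). Thus we obtain

\[ \min _{w,b,\xi }\ \frac{1}{2}\Vert w\Vert _{2}^{2}+C\sum _{i=1}^{l}\xi _{i}\quad \mathrm{s.t.}\quad y_{i}(w^\mathrm{T}x_{i}+b)\geqslant 1-\xi _{i},\ \xi _{i}\geqslant 0,\ i=1,\cdots ,l, \tag{2.4} \]

where \(C>0\) is a penalty parameter.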
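For concreteness, the soft-margin objective can be evaluated directly. This sketch assumes the standard form \(\frac{1}{2}\Vert w\Vert _{2}^{2}+C\sum _{i}\xi _{i}\) for Eq. (2.4) and uses the fact that, for fixed \((w,b)\), the smallest feasible slack is \(\xi _{i}=\max \{0,1-y_{i}(w^\mathrm{T}x_{i}+b)\}\). The helper names `soft_margin_objective` and `margin_width` are hypothetical.

```python
import numpy as np


def soft_margin_objective(w, b, X, y, C):
    """Evaluate 0.5*||w||^2 + C*sum(xi) using the smallest feasible slacks.

    For fixed (w, b), the smallest xi_i satisfying xi_i >= 0 and
    y_i (w^T x_i + b) >= 1 - xi_i is max(0, 1 - y_i (w^T x_i + b)).
    """
    margins = y * (X @ w + b)              # y_i (w^T x_i + b)
    xi = np.maximum(0.0, 1.0 - margins)    # slack of each point
    return 0.5 * (w @ w) + C * xi.sum(), xi


def margin_width(w):
    """Distance 2 / ||w||_2 between the hyperplanes w^T x + b = +1 and -1."""
    return 2.0 / np.linalg.norm(w)


# Three points on the first axis; the middle one lies inside the margin.
X = np.array([[2.0, 0.0], [0.5, 0.0], [-2.0, 0.0]])
y = np.array([1.0, 1.0, -1.0])
obj, xi = soft_margin_objective(np.array([1.0, 0.0]), 0.0, X, y, C=1.0)
print(obj, xi, margin_width(np.array([1.0, 0.0])))
```

Only the point inside the margin receives a positive slack, which is exactly what the penalty term \(C\sum _{i}\xi _{i}\) charges for.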

2.2 PSVM for Binary Classification

Consider the two-class problem of classifying \(l\) points in the \(n\)-dimensional real space \({\mathbb {R}}^{n}\). The standard PSVM is expressed as follows:

where \(C>0\), \( x_{i}\in {\mathbb {R}}^{n}\), \(y_{i}\in \{-1,1\}\), \(\xi _{i}\in {\mathbb {R}}\), \(w\in {\mathbb {R}}^{n}\), \(b\in {\mathbb {R}}\).

The geometric interpretation of Eq. (2.5) is shown in Fig. 3. Compared with Eq. (2.4), this model not only adds advantages such as strong convexity of the objective function, but also significantly changes the nature of the optimization problem. The planes \(w^\mathrm{T}x+b=\pm 1\) are no longer bounding planes; instead, they can be thought of as “proximal” planes, around which the points of the corresponding class are clustered and which are pushed as far apart as possible.
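The main computational advantage of PSVM is that training reduces to a single linear system. As a sketch, assume the form used by Fung and Mangasarian [11], \(\min \frac{1}{2}(\Vert w\Vert _{2}^{2}+b^{2})+\frac{C}{2}\sum _{i}\xi _{i}^{2}\) subject to \(\xi _{i}=1-y_{i}(w^\mathrm{T}x_{i}+b)\) (the exact scaling in Eq. (2.5) may differ, which does not change the structure). Setting the gradient to zero and using \(y_{i}^{2}=1\) gives \((I/C+H^\mathrm{T}H)(w;b)=H^\mathrm{T}y\) with \(H=[X,\mathbf{1}]\). The function name `psvm_train` is hypothetical.

```python
import numpy as np


def psvm_train(X, y, C):
    """Closed-form linear PSVM (sketch), assuming the objective
    0.5*(||w||^2 + b^2) + 0.5*C*sum(xi_i^2), xi_i = 1 - y_i (w^T x_i + b).

    Stationarity gives the (n+1) x (n+1) system (I/C + H^T H) [w; b] = H^T y,
    where row i of H is (x_i^T, 1).
    """
    l, n = X.shape
    H = np.hstack([X, np.ones((l, 1))])
    wb = np.linalg.solve(np.eye(n + 1) / C + H.T @ H, H.T @ y)
    return wb[:n], wb[n]


X = np.array([[2.0, 2.0], [3.0, 1.0], [-2.0, -1.0], [-3.0, -2.0]])
y = np.array([1.0, 1.0, -1.0, -1.0])
w, b = psvm_train(X, y, C=10.0)
print(w, b, np.sign(X @ w + b))
```

The system size depends on \(n\) rather than \(l\), which is why PSVM training is fast when there are many points but few features.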

3 \(\ell _{1}-\ell _{2}\) Regularized Sparse PSVM Model

To obtain a sparse classifier, we add the cardinality constraint \(\Vert w\Vert _{0}\leqslant N\) to the original PSVM model Eq. (2.5), where \(\Vert \cdot \Vert _{0}\) denotes the number of non-zero components, \(N\in \mathbb {N}^{+}\). Then a new model is established as follows:

where \(C>0\), \(x_{i}\in {\mathbb {R}}^{n}\), \(y_{i}\in \{-1,1\}\), \(\xi _{i}\in {\mathbb {R}}\), \(w\in {\mathbb {R}}^{n}\), \(b\in {\mathbb {R}}\). After adding this cardinality constraint, Eq. (3.1) becomes a combinatorial problem which is difficult to solve.

In the following, we first move the cardinality constraint into the objective function as a penalty term weighted by a parameter \(\lambda \). Then we have:

where \(\lambda \geqslant 0\), \(C>0\), \(x_{i}\in {\mathbb {R}}^{n}\), \(y_{i}\in \{-1,1\}\), \(\xi _{i}\in {\mathbb {R}}\), \(w\in {\mathbb {R}}^{n}\), \(b\in {\mathbb {R}}\). The \(\ell _{0}\)-norm term in the objective function is nonconvex and discontinuous, so problem Eq. (3.2) is computationally difficult; in general, problems of this form are known to be NP-hard.

Since \(\Vert \cdot \Vert _{1}\) is, in a certain natural sense, a convexification of \(\Vert \cdot \Vert _{0}\), the following model can be viewed as a convexification of Eq. (3.2). In this paper, we call it the \(\ell _{1}-\ell _{2}\) regularized sparse PSVM:

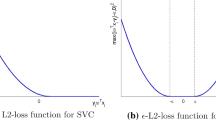

where \(\lambda \geqslant 0\), \(C>0\), \(x_{i}\in {\mathbb {R}}^{n}\), \(y_{i}\in \{-1,1\}\), \(\xi _{i}\in {\mathbb {R}}\), \(w\in {\mathbb {R}}^{n}\), \(b\in {\mathbb {R}}\). Readers can refer to [5, 10] for more details about the relation between the \(\ell _{1}\)-norm and the \(\ell _{0}\)-norm. The objective function of Eq. (3.3) is a trade-off between the \(\ell _{1}\)-norm term and the \(\ell _{2}\)-norm term: the \(\ell _{2}\)-norm term is responsible for good classification performance, while the \(\ell _{1}\)-norm term promotes sparse solutions. When the \(i\)th component of \(w\) is zero, the \(i\)th component of the input \(x\) is irrelevant to the class assigned by the linear decision function \(f(x)={\mathrm{sgn}}(w^\mathrm{T}x+b)\). The solution of Eq. (3.3) depends on the choice of \(\lambda \).
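Why the \(\ell _{1}\)-norm term produces exact zeros, while a squared \(\ell _{2}\)-norm term only shrinks, can be seen from the proximal operator of \(\kappa \Vert \cdot \Vert _{1}\), i.e., soft thresholding. The sketch below illustrates this mechanism together with the decision function \(f(x)=\mathrm{sgn}(w^\mathrm{T}x+b)\); it is not the interior-point solver used in this paper, and `soft_threshold`, `decide`, and `kappa` are hypothetical names.

```python
import numpy as np


def soft_threshold(v, kappa):
    """Proximal operator of kappa*||.||_1: entries with |v_i| <= kappa become exactly 0."""
    return np.sign(v) * np.maximum(np.abs(v) - kappa, 0.0)


def decide(w, b, x):
    """Linear decision function f(x) = sgn(w^T x + b)."""
    return np.sign(w @ x + b)


v = np.array([3.0, -0.5, 0.2, -2.0])
w = soft_threshold(v, 1.0)          # the two small entries are set exactly to zero
x = np.array([1.0, 5.0, -7.0, 0.5])
x_changed = x.copy()
x_changed[1:3] = [100.0, -100.0]    # alter only the features whose weight is zero
print(w, decide(w, 0.0, x), decide(w, 0.0, x_changed))
```

Changing the features with zero weight leaves the predicted label unchanged, which is the sense in which a sparse \(w\) performs feature selection.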

Several methods can be used to solve the \(\ell _{1}-\ell _{2}\) regularized sparse PSVM Eq. (3.3), for example, the alternating direction method of multipliers (ADMM); Bai et al. [3] recently applied ADMM to this model. In this paper, we use a different well-established approach, an interior-point method. We convert the \(\ell _{1}-\ell _{2}\) regularized sparse PSVM into an equivalent \(\ell _{1}\) regularized LS problem of the form studied in [12], which is then solved by a specialized interior-point method.

Using the definition of the \(\ell _{2}\)-norm and the constraints \(\xi _{i}=1-y_{i}(w^\mathrm{T}x_{i}+b)\) for \(i=1,\cdots ,l\), we can rewrite the objective function as follows:

where

\[ A=\left( \begin{array}{c} \sqrt{C}y_{1}x_{1}^\mathrm{T} \\ \vdots \\ \sqrt{C}y_{l}x_{l}^\mathrm{T} \\ e_{1}^\mathrm{T} \\ \vdots \\ e_{n}^\mathrm{T} \\ 0^\mathrm{T} \end{array} \right) \in {\mathbb {R}}^{(l+n+1)\times n},\quad A_{1}=\left( \begin{array}{c} \sqrt{C}y_{1} \\ \vdots \\ \sqrt{C}y_{l} \\ 0 \\ \vdots \\ 0 \\ 1 \end{array} \right) \in {\mathbb {R}}^{l+n+1},\quad d=\left( \begin{array}{c} \sqrt{C} \\ \vdots \\ \sqrt{C} \\ 0 \\ \vdots \\ 0 \\ 0 \end{array} \right) \in {\mathbb {R}}^{l+n+1}, \]

and \(e_{i}\in {\mathbb {R}}^{n}\) is the \(i\)th unit vector (1 in the \(i\)th position and 0 elsewhere).
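The stacking can be checked row by row: the first \(l\) entries of \(Aw+A_{1}b-d\) equal \(\sqrt{C}(y_{i}(w^\mathrm{T}x_{i}+b)-1)=-\sqrt{C}\xi _{i}\), the next \(n\) entries equal \(w\), and the last entry equals \(b\), so \(\Vert Aw+A_{1}b-d\Vert _{2}^{2}=C\sum _{i}\xi _{i}^{2}+\Vert w\Vert _{2}^{2}+b^{2}\). The sketch below (hypothetical helper `build_ls_data`) builds the three arrays and verifies this identity numerically on random data.

```python
import numpy as np


def build_ls_data(X, y, C):
    """Stack A, A_1 and d as defined above (X is l x n, y has entries in {-1, +1})."""
    l, n = X.shape
    sc = np.sqrt(C)
    A = np.vstack([sc * y[:, None] * X,    # rows sqrt(C) y_i x_i^T
                   np.eye(n),              # rows e_1^T, ..., e_n^T
                   np.zeros((1, n))])      # final zero row
    A1 = np.concatenate([sc * y, np.zeros(n), [1.0]])
    d = np.concatenate([sc * np.ones(l), np.zeros(n + 1)])
    return A, A1, d


rng = np.random.default_rng(0)
l, n, C = 8, 3, 0.1
X = rng.normal(size=(l, n))
y = np.where(rng.normal(size=l) > 0, 1.0, -1.0)
w, b = rng.normal(size=n), 0.3

A, A1, d = build_ls_data(X, y, C)
xi = 1.0 - y * (X @ w + b)                   # PSVM slacks
lhs = np.sum((A @ w + A1 * b - d) ** 2)
rhs = C * np.sum(xi ** 2) + w @ w + b ** 2
print(A.shape, lhs, rhs)
```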

Therefore, we can convert Eq. (3.3) into an equivalent unconstrained optimization problem with a simple form:

Problem Eq. (3.4) is thus an equivalent formulation of the \(\ell _{1}-\ell _{2}\) regularized sparse PSVM, and we solve it with a specialized interior-point method. The details of the solution process are described in the next section.

4 A Specialized Interior-Point Method

4.1 A Specialized Interior-Point Method and PCG Algorithm

In this section, we use a specialized interior-point method to solve Eq. (3.4), the equivalent model of the \(\ell _{1}-\ell _{2}\) regularized sparse PSVM. Following the approach in [12], we use the preconditioned conjugate gradients (PCG) algorithm to compute the search direction. Since the objective function of Eq. (3.4) is convex but not differentiable, we first reformulate it as a convex quadratic problem with linear inequality constraints:

where \(w=(w_{1},\cdots ,w_{n})^\mathrm{T}\in {\mathbb {R}}^{n}\), \(b\in {\mathbb {R}}\), \(u\in {\mathbb {R}}^{n}\). We define the logarithmic barrier for the bound constraints \(-u_{i}\leqslant w_{i}\leqslant u_{i}\), \(i=1,\cdots ,n\),

with domain

We augment the weighted objective function by the logarithmic barrier and obtain the following function:

where the parameter \(t>0\). This function is smooth, strictly convex, and bounded below. We minimize \(\varPsi _{t}\) by Newton's method, in which the search direction is defined by the Newton system

where \(H\) is the Hessian and \(g\) is the gradient of \(\varPsi _{t}\) at the current iterate \((b,w,u)\). The Hessian can be written as

where

\(H\) is symmetric and positive definite. The gradient can be written as

where

Solving the Newton system Eq. (4.2) exactly may not be computationally practical for large problems, so we apply the PCG algorithm to the Newton system to compute the search direction approximately (see [12] or Sect. 5 of [15] for more details). In this paper, we choose the preconditioner \(P\) as follows:

which is symmetric and positive definite.
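A minimal Python/NumPy sketch of one search-direction computation follows. It assumes, in the spirit of [12], that \(\varPsi _{t}(b,w,u)=t\Vert Aw+A_{1}b-d\Vert _{2}^{2}+t\lambda \sum _{i}u_{i}-\sum _{i}\log (u_{i}+w_{i})-\sum _{i}\log (u_{i}-w_{i})\), with the variables ordered as \((b,w,u)\). The helper names `newton_system`, `make_preconditioner`, and `pcg` are hypothetical, and the preconditioner (which keeps only the diagonal of \(2tA^\mathrm{T}A\) and drops the coupling between \(b\) and \(w\)) is an illustrative choice, not necessarily the \(P\) used in this paper.

```python
import numpy as np


def newton_system(A, A1, d, b, w, u, t, lam):
    """Gradient g and Hessian H of the assumed Psi_t at (b, w, u)."""
    n = w.size
    r = A @ w + A1 * b - d
    q1, q2 = 1.0 / (u + w), 1.0 / (u - w)
    g = np.concatenate([[2 * t * A1 @ r], 2 * t * A.T @ r - q1 + q2, t * lam - q1 - q2])
    H = np.zeros((2 * n + 1, 2 * n + 1))
    H[0, 0] = 2 * t * A1 @ A1
    H[0, 1:n + 1] = H[1:n + 1, 0] = 2 * t * A1 @ A
    H[1:n + 1, 1:n + 1] = 2 * t * A.T @ A + np.diag(q1**2 + q2**2)   # barrier D1 term
    H[1:n + 1, n + 1:] = H[n + 1:, 1:n + 1] = np.diag(q1**2 - q2**2)  # barrier D2 coupling
    H[n + 1:, n + 1:] = np.diag(q1**2 + q2**2)
    return g, H


def make_preconditioner(H, n):
    """Hypothetical preconditioner: keep H[0, 0], diag of the (w, w) block and the
    diagonal (w, u) coupling, giving independent 2x2 blocks [[a_i, c_i], [c_i, e_i]]."""
    p_b = H[0, 0]
    a = np.diag(H)[1:n + 1]
    c = np.diag(H[1:n + 1, n + 1:])
    e = np.diag(H)[n + 1:]
    det = a * e - c**2

    def solve(r):
        rw, ru = r[1:n + 1], r[n + 1:]
        return np.concatenate([[r[0] / p_b], (e * rw - c * ru) / det, (a * ru - c * rw) / det])
    return solve


def pcg(matvec, rhs, precond_solve, tol=1e-10, max_iter=500):
    """Preconditioned conjugate gradients for a symmetric positive definite system."""
    x = np.zeros_like(rhs)
    r = rhs - matvec(x)
    z = precond_solve(r)
    p, rz = z.copy(), r @ z
    for _ in range(max_iter):
        if np.linalg.norm(r) <= tol * np.linalg.norm(rhs):
            break
        Ap = matvec(p)
        step = rz / (p @ Ap)
        x += step * p
        r -= step * Ap
        z = precond_solve(r)
        rz_new = r @ z
        p = z + (rz_new / rz) * p
        rz = rz_new
    return x


rng = np.random.default_rng(1)
l, n, C = 30, 5, 0.1
X = rng.normal(size=(l, n))
y = np.where(rng.normal(size=l) > 0, 1.0, -1.0)
A = np.vstack([np.sqrt(C) * y[:, None] * X, np.eye(n), np.zeros((1, n))])
A1 = np.concatenate([np.sqrt(C) * y, np.zeros(n), [1.0]])
d = np.concatenate([np.sqrt(C) * np.ones(l), np.zeros(n + 1)])
b, w = 0.2, 0.1 * rng.normal(size=n)
u = np.abs(w) + 0.5                      # strictly inside the domain |w_i| < u_i
g, H = newton_system(A, A1, d, b, w, u, t=1.0, lam=0.5)
dz = pcg(lambda v: H @ v, -g, make_preconditioner(H, n))
print(np.linalg.norm(H @ dz + g))        # residual of the Newton system
```

PCG only needs products with \(H\), so in a large-scale implementation \(A^\mathrm{T}A\) would never be formed explicitly; the dense \(H\) here is for readability only.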

4.2 Dual Gap and Stopping Criteria

To derive a Lagrange dual of the model Eq. (3.4), we first introduce a new variable \(z\in {\mathbb {R}}^{l+n+1}\), as well as new equality constraints \(z=Aw+A_{1}b-d\), to obtain the equivalent problem:

Associating dual variables \(\nu \in {\mathbb {R}}^{l+n+1}\) with the equality constraints \(z=Aw+A_{1}b-d\), the Lagrangian is

The objective function of Eq. (4.3) is convex but not differentiable, so we use a first-order optimality condition based on subdifferential calculus, and obtain the dual function:

The Lagrange dual of Eq. (4.3) is therefore

where the dual objective \(G(\nu )\) is

First, we define \(\bar{b}=\arg \min _{b}\left\| Aw+A_{1}b-d\right\| _{2}^{2}\). By the optimality of \(\bar{b}\), \(A_{1}^\mathrm{T}(Aw+A_{1}\bar{b}-d)=0\) holds for an arbitrary \(w\). Setting \(\bar{z}=Aw+A_{1}\bar{b}-d\), the point \((w,\bar{b},\bar{z})\) is primal feasible. Next, we define

By construction, \(\bar{\nu }\) is dual feasible, so \(G(\bar{\nu })\) is a lower bound on \(p^{*}\), the optimal value of the model Eq. (3.4).

Here \((w,\bar{b},\bar{z})\) is primal feasible and \(\bar{\nu }\) is the corresponding dual feasible point. Given an absolute accuracy \(\varepsilon _\mathrm{abs}>0\), we have

This guarantees that \((w,\bar{b},\bar{z})\) is \(\varepsilon _\mathrm{abs}\)-suboptimal whenever the stopping criterion \(f(w,\bar{b},\bar{z})-G(\bar{\nu })\leqslant \varepsilon _\mathrm{abs}\) holds. The difference between the primal objective value at \((w,\bar{b},\bar{z})\) and the associated lower bound \(G(\bar{\nu })\) is called the duality gap, which we denote by \(\eta \):

We always have \(\eta \geqslant 0\), and by weak duality, the point \((w,\bar{b},\bar{z})\) is no more than \(\eta \)-suboptimal. At the optimal point, \(\eta =0\).
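The gap computation can be sketched as follows, assuming Eq. (3.4) has the form \(\min _{w,b}\Vert Aw+A_{1}b-d\Vert _{2}^{2}+\lambda \Vert w\Vert _{1}\) and the Lagrangian of Eq. (4.3) is \(\Vert z\Vert _{2}^{2}+\lambda \Vert w\Vert _{1}+\nu ^\mathrm{T}(Aw+A_{1}b-d-z)\). Minimizing over \(z\), \(b\), and \(w\) then gives \(G(\nu )=-\frac{1}{4}\nu ^\mathrm{T}\nu -d^\mathrm{T}\nu \) on \(\{\nu :\Vert A^\mathrm{T}\nu \Vert _{\infty }\leqslant \lambda ,\ A_{1}^\mathrm{T}\nu =0\}\), where \(A_{1}^\mathrm{T}\nu =0\) comes from the free variable \(b\); the normal equation \(A_{1}^\mathrm{T}\bar{z}=0\) is what lets any multiple of \(\bar{z}\) satisfy it. The scaling \(\nu =2s\bar{z}\) with \(s=\min \{1,\lambda /\Vert 2A^\mathrm{T}\bar{z}\Vert _{\infty }\}\) is one possible choice in the spirit of [12]; the \(\bar{\nu }\) defined in this paper may differ. `duality_gap` is a hypothetical helper name.

```python
import numpy as np


def duality_gap(A, A1, d, w, lam):
    """Duality gap of min_{w,b} ||A w + A1 b - d||^2 + lam*||w||_1 at a given w.

    Returns (eta, b_bar, nu), with the dual function
    G(nu) = -nu^T nu / 4 - d^T nu on {||A^T nu||_inf <= lam, A1^T nu = 0}.
    """
    b_bar = A1 @ (d - A @ w) / (A1 @ A1)    # argmin_b ||A w + A1 b - d||^2
    z_bar = A @ w + A1 * b_bar - d            # primal feasible by construction
    grad_inf = np.abs(2 * A.T @ z_bar).max()
    s = 1.0 if grad_inf <= lam else lam / grad_inf
    nu = 2 * s * z_bar                        # A1^T nu = 0 because A1^T z_bar = 0
    primal = z_bar @ z_bar + lam * np.abs(w).sum()
    dual = -0.25 * (nu @ nu) - d @ nu
    return primal - dual, b_bar, nu


rng = np.random.default_rng(2)
l, n, C, lam = 20, 4, 0.1, 0.5
X = rng.normal(size=(l, n))
y = np.where(rng.normal(size=l) > 0, 1.0, -1.0)
A = np.vstack([np.sqrt(C) * y[:, None] * X, np.eye(n), np.zeros((1, n))])
A1 = np.concatenate([np.sqrt(C) * y, np.zeros(n), [1.0]])
d = np.concatenate([np.sqrt(C) * np.ones(l), np.zeros(n + 1)])
eta, b_bar, nu = duality_gap(A, A1, d, rng.normal(size=n), lam)
print(eta)
```

Because \(\eta \) is computed from a feasible pair, the test \(\eta \leqslant \varepsilon _\mathrm{abs}\) is a certificate of \(\varepsilon _\mathrm{abs}\)-suboptimality regardless of how the iterate was produced.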

4.3 Algorithm

Remark. Typical values of the line search parameters are \(\alpha =0.01\) and \(\beta =0.5\). The update rule for \(t\) performs well with \(\mu =2\) and \(s_\mathrm{min}=0.5\).
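The overall loop (Newton direction, backtracking line search with \(\alpha ,\beta \), duality-gap stopping test, and the update of \(t\) with \(\mu ,s_\mathrm{min}\)) can be sketched as below. This is a simplified illustration under stated assumptions, not the authors' implementation: it solves the Newton system exactly with `numpy.linalg.solve` instead of PCG, which is only reasonable for small \(n\); it starts from \(b=0\), \(w=0\), \(u=\mathbf{1}\), \(t=1/\lambda \); and it uses the rule \(t:=\max \{\mu \min \{2n/\eta ,t\},t\}\) whenever the step size satisfies \(s\geqslant s_\mathrm{min}\). The initialization and update rule are modeled on [12], and the function name `sparse_psvm_ipm` is hypothetical.

```python
import numpy as np


def sparse_psvm_ipm(X, y, C=0.1, lam=1.0, eps_abs=1e-4, alpha=0.01, beta=0.5,
                    mu=2.0, s_min=0.5, max_iter=500):
    """Barrier-method sketch for min_{w,b} ||A w + A1 b - d||^2 + lam*||w||_1.

    Uses an exact (dense) Newton step instead of PCG; returns (w, b_bar, eta).
    """
    l, n = X.shape
    sc = np.sqrt(C)
    A = np.vstack([sc * y[:, None] * X, np.eye(n), np.zeros((1, n))])
    A1 = np.concatenate([sc * y, np.zeros(n), [1.0]])
    d = np.concatenate([sc * np.ones(l), np.zeros(n + 1)])
    z = np.concatenate([[0.0], np.zeros(n), np.ones(n)])     # z = (b, w, u)
    t = 1.0 / lam                                             # assumed initial t

    def psi(z):
        b, w, u = z[0], z[1:n + 1], z[n + 1:]
        if np.any(u - np.abs(w) <= 0):                        # outside the domain
            return np.inf
        r = A @ w + A1 * b - d
        return t * (r @ r + lam * u.sum()) - np.log(u + w).sum() - np.log(u - w).sum()

    for _ in range(max_iter):
        # 1. Newton direction from the gradient and Hessian of Psi_t.
        b, w, u = z[0], z[1:n + 1], z[n + 1:]
        r = A @ w + A1 * b - d
        q1, q2 = 1.0 / (u + w), 1.0 / (u - w)
        g = np.concatenate([[2 * t * A1 @ r], 2 * t * A.T @ r - q1 + q2, t * lam - q1 - q2])
        H = np.zeros((2 * n + 1, 2 * n + 1))
        H[0, 0] = 2 * t * A1 @ A1
        H[0, 1:n + 1] = H[1:n + 1, 0] = 2 * t * A1 @ A
        H[1:n + 1, 1:n + 1] = 2 * t * A.T @ A + np.diag(q1**2 + q2**2)
        H[1:n + 1, n + 1:] = H[n + 1:, 1:n + 1] = np.diag(q1**2 - q2**2)
        H[n + 1:, n + 1:] = np.diag(q1**2 + q2**2)
        dz = np.linalg.solve(H, -g)
        # 2. Backtracking line search with parameters alpha and beta.
        psi0, slope, s = psi(z), g @ dz, 1.0
        while s > 1e-12 and psi(z + s * dz) > psi0 + alpha * s * slope:
            s *= beta
        z = z + s * dz
        # 3. Dual feasible point and duality gap eta at (w, b_bar).
        w = z[1:n + 1]
        b_bar = A1 @ (d - A @ w) / (A1 @ A1)
        r = A @ w + A1 * b_bar - d
        grad_inf = np.abs(2 * A.T @ r).max()
        nu = 2 * min(1.0, lam / grad_inf) * r if grad_inf > 0 else 2 * r
        eta = r @ r + lam * np.abs(w).sum() + 0.25 * (nu @ nu) + d @ nu
        # 4. Stopping criterion, then the update rule for t.
        if eta <= eps_abs:
            break
        if s >= s_min:
            t = max(mu * min(2 * n / eta, t), t)
    return z[1:n + 1], b_bar, eta


rng = np.random.default_rng(0)
X = np.vstack([rng.normal(2.0, 1.0, (20, 3)), rng.normal(-2.0, 1.0, (20, 3))])
y = np.concatenate([np.ones(20), -np.ones(20)])
w, b, eta = sparse_psvm_ipm(X, y, C=0.1, lam=0.1)
print(w, b, eta, np.mean(np.sign(X @ w + b) == y))
```

With a large \(\lambda \) the same routine drives \(w\) toward zero, which is the sparsity effect discussed in Sect. 3.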

5 Numerical Tests

In this section, we take six datasets (Pima Indians, Heart, Australian, Mushroom, Spambase, and Sonar) from the UCI repository [14] and one synthetic dataset; Table 1 gives their characteristics. We first use the synthetic dataset, generated by a Gaussian distribution, to test the classification effectiveness of our model. Then we compare the results of the \(\ell _{1}-\ell _{2}\) regularized sparse PSVM for various values of \(\lambda \) to demonstrate the trade-off between the \(\ell _{1}\)-norm and \(\ell _{2}\)-norm terms. We also compare the numerical results of the \(\ell _{1}-\ell _{2}\) regularized sparse PSVM with those of GEPSVM, PSVM, and SVM-Light reported in [13]. Finally, we evaluate the effectiveness of our model in finding sparse solutions and compare it with \(\ell _{1}\)-PSVM [7]. The \(\ell _{1}-\ell _{2}\) regularized sparse PSVM is implemented on a PC with an Intel Core i5 \(2.50\) GHz CPU and \(2.00\) GB RAM.

First, we apply our model to the synthetic data to demonstrate its classification effectiveness. The results, shown in Fig. 4, indicate that the model and algorithm work as intended.

From the optimization point of view, \(C\) is a penalty parameter that weights the slack term \(\xi \) in the objective function. From the classification point of view, \(C\) is also a weight that balances the maximum margin against the minimum classification error. We compared the numerical results of the \(\ell _{1}-\ell _{2}\) regularized sparse PSVM for \(C\in \{10, 1, 0.1, 0.01\}\); the model consistently obtained the highest classification accuracy with \(C=0.1\), so we fix \(C=0.1\) in this section.

For each dataset from UCI repository, to compare the effectiveness of the trade-off between \(\ell _{1}\)-norm and \(\ell _{2}\)-norm in \(\ell _{1}-\ell _{2}\) regularized sparse PSVM, we choose the parameter \(\lambda \in \{0, 1, 10\}\) and \(C=0.1\). The classification accuracy and the execution time are summarized in Tables 2 and 3, respectively.

From Table 2, we observe that, for a given \(C\), the classification accuracy of the \(\ell _{1}-\ell _{2}\) regularized sparse PSVM is higher when \(\lambda =0\) (the model without the \(\ell _{1}\)-norm term), while Table 3 shows that the execution time decreases when \(\lambda >0\) (the \(\ell _{1}\)-norm term is added). These results indicate that the \(\ell _{2}\)-norm term is responsible for the good classification performance, while the \(\ell _{1}\)-norm term plays an important role in decreasing the execution time.

To demonstrate the performance of our model, we compare the classification accuracy of the \(\ell _{1}-\ell _{2}\) regularized sparse PSVM with that of GEPSVM, PSVM and SVM-Light [13].

The testing accuracy of \(\ell _{1}-\ell _{2}\) regularized sparse PSVM is evaluated with a 10-fold cross-validation and the performance is the average misclassification error over 10 folds. In 10-fold cross-validation, the total dataset is divided into ten parts. Each part is chosen once as the test set while the other nine parts form the training set.
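The 10-fold procedure above can be sketched as follows. The paper does not specify how the ten parts are formed, so this sketch assumes a random permutation controlled by a hypothetical `seed` argument; `fit` stands for any trainer returning \((w,b)\), and `kfold_indices` and `cv_error` are hypothetical names.

```python
import numpy as np


def kfold_indices(l, k=10, seed=0):
    """Split range(l) into k parts; each part serves once as the test set."""
    parts = np.array_split(np.random.default_rng(seed).permutation(l), k)
    for i in range(k):
        train = np.concatenate([parts[j] for j in range(k) if j != i])
        yield train, parts[i]


def cv_error(X, y, fit, k=10, seed=0):
    """Average misclassification rate over k folds; fit(X, y) -> (w, b)."""
    errors = []
    for train, test in kfold_indices(len(y), k, seed):
        w, b = fit(X[train], y[train])
        errors.append(np.mean(np.sign(X[test] @ w + b) != y[test]))
    return float(np.mean(errors))


# Toy usage with a fixed (untrained) classifier that predicts sgn(x_1).
X = np.array([[v, 0.0] for v in np.linspace(-1.0, 1.0, 20)])
y = np.sign(X[:, 0])
fixed_fit = lambda X_train, y_train: (np.array([1.0, 0.0]), 0.0)
print(cv_error(X, y, fixed_fit))
```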

Table 4 shows the comparison of classification accuracy; the numbers in bold indicate the best accuracy for each dataset. We conclude that the \(\ell _{1}-\ell _{2}\) regularized sparse PSVM is more accurate than the other three SVMs.

Finally, we test the effectiveness of the \(\ell _{1}-\ell _{2}\) regularized sparse PSVM in finding sparse solutions and compare it with \(\ell _{1}\)-PSVM, whose results are reported in [7]. We tune the parameter \(\lambda \) so that the classification accuracy of the \(\ell _{1}-\ell _{2}\) regularized sparse PSVM is approximately equal to that of \(\ell _{1}\)-PSVM in [7], and then compare the number of zero components of \(w\). Table 5 shows the numerical results, where \(\sharp \) denotes the number of zero components of \(w\).

From Table 5, we observe that the \(\ell _{1}-\ell _{2}\) regularized sparse PSVM finds sparser solutions than \(\ell _{1}\)-PSVM, with higher or comparable classification accuracy.
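Because an interior-point method keeps its iterates strictly inside the domain \(|w_{i}|<u_{i}\), components that should vanish are typically tiny but not exactly zero, so counting \(\sharp \) in practice requires a threshold. The paper does not state one; the sketch below assumes a hypothetical tolerance `tol` and a hypothetical helper `count_zeros`.

```python
import numpy as np


def count_zeros(w, tol=1e-6):
    """Number of components of w treated as zero (|w_i| <= tol); tol is a hypothetical choice."""
    return int(np.sum(np.abs(w) <= tol))


w = np.array([0.8, 3e-9, -1.2e-7, 0.0, -0.05])
print(count_zeros(w), count_zeros(w, tol=0.1))
```

The reported \(\sharp \) can depend on this threshold, so it is worth fixing it before comparing sparsity across methods.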

6 Conclusions

In this paper, we have proposed a PSVM with a cardinality constraint and converted it into an \(\ell _{1}-\ell _{2}\) regularized sparse PSVM. We have then solved an equivalent model of the \(\ell _{1}-\ell _{2}\) regularized sparse PSVM by a specialized interior-point method that uses the PCG algorithm to compute the search direction. We have tested the \(\ell _{1}-\ell _{2}\) regularized sparse PSVM on real-world datasets taken from the UCI repository, measuring classification accuracy and execution time for different values of the parameter \(\lambda \). The numerical results show that the \(\ell _{1}-\ell _{2}\) regularized sparse PSVM achieves higher classification accuracy than the other methods compared. Moreover, it finds sparse solutions with accuracy higher than or almost the same as that of \(\ell _{1}\)-PSVM.

We have only considered binary linear classification problems in this paper. Our future research will extend the approach to multi-class and nonlinear classification. Moreover, our execution times are longer than those reported in [3], where ADMM was used; we plan to modify the PCG step to speed up the solution method.

References

[1] Bai, Y.Q., Chen, Y., Niu, B.L.: SDP relaxation for semi-supervised support vector machine. Pac. J. Optim. 8(1), 3 (2012)

[2] Bai, Y.Q., Niu, B.L., Chen, Y.: New SDP models for protein homology detection with semi-supervised SVM. Optimization 62(4), 561 (2013)

[3] Bai, Y.Q., Shen, Y.J., Shen, K.J.: Consensus proximal support vector machine for classification problems with sparse solutions. J. Oper. Res. Soc. China 2, 57–74 (2014)

[4] Candès, E.J.: Compressive sampling. In: Proceedings of the International Congress of Mathematicians, vol. 3, pp. 1433–1452 (2006)

[5] Candès, E.J., Romberg, J., Tao, T.: Robust uncertainty principles: exact signal reconstruction from highly incomplete frequency information. IEEE Trans. Inf. Theory 52(2), 489–509 (2006a)

[6] Candès, E.J., Romberg, J.K., Tao, T.: Stable signal recovery from incomplete and inaccurate measurements. Commun. Pure Appl. Math. 59(8), 1207–1223 (2006b)

[7] Chen, W.J., Tian, Y.J.: \(L_{p}\)-norm proximal support vector machine and its applications. Procedia Comput. Sci. 1, 2417–2423 (2012)

[8] Chen, S.S., Donoho, D.L., Saunders, M.A.: Atomic decomposition by basis pursuit. SIAM J. Sci. Comput. 20(1), 33–61 (1998)

[9] Deng, N.Y., Tian, Y.J., Zhang, C.H.: Support Vector Machines. CRC Press, Taylor and Francis Group, Boca Raton (2013)

[10] Donoho, D.L.: Compressed sensing. IEEE Trans. Inf. Theory 52(4), 1289–1306 (2006)

[11] Fung, G., Mangasarian, O.L.: Proximal support vector machine classifiers. In: Proceedings of the Seventh ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp. 77–86. ACM (2001)

[12] Kim, S.J., Koh, K., Lustig, M., Boyd, S., Gorinevsky, D.: An interior-point method for large-scale \(\ell _{1}\)-regularized least squares. IEEE J. Sel. Topics Signal Process. 1(4), 606–617 (2007)

[13] Mangasarian, O.L., Wild, E.W.: Multisurface proximal support vector machine classification via generalized eigenvalues. IEEE Trans. Pattern Anal. Mach. Intell. 28(1), 69–74 (2006)

[14] Murphy, P.M., Aha, D.W.: UCI machine learning repository. www.ics.uci.edu/mlearn/MLRepository.html (1992)

[15] Nocedal, J., Wright, S.: Numerical Optimization. Springer Series in Operations Research. Springer, New York (2006)

[16] Suykens, J.A.K., Vandewalle, J.: Least squares support vector machine classifiers. Neural Process. Lett. 9, 293–300 (1999)

[17] Suykens, J.A.K., et al.: Least Squares Support Vector Machines. World Scientific, Singapore (2002)

[18] Tibshirani, R.: Regression shrinkage and selection via the lasso. J. R. Stat. Soc. Ser. B 58(1), 267–288 (1996)

[19] Vapnik, V.N.: The Nature of Statistical Learning Theory. Springer, New York (1995)

[20] Vapnik, V.N.: Statistical Learning Theory. Wiley, New York (1998)


Additional information

This research was supported by the National Natural Science Foundation of China (No. 11371242).


About this article

Cite this article

Bai, YQ., Zhu, ZY. & Yan, WL. Sparse Proximal Support Vector Machine with a Specialized Interior-Point Method. J. Oper. Res. Soc. China 3, 1–15 (2015). https://doi.org/10.1007/s40305-014-0068-5


DOI: https://doi.org/10.1007/s40305-014-0068-5

Keywords

- Proximal support vector machine

- Classification accuracy

- Interior-point methods

- Preconditioned conjugate gradients algorithm