Abstract

We propose and study new projection-type algorithms for solving pseudomonotone variational inequality problems in real Hilbert spaces without assuming Lipschitz continuity of the cost operators. We prove weak and strong convergence theorems for the sequences generated by these new methods. The numerical behavior of the proposed algorithms when applied to several test problems is compared with that of several previously known algorithms.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

We consider the variational inequality problem (VI) [10, 11] of finding a point x∗∈ C such that

where C is a nonempty, closed, and convex subset of a real Hilbert space H, F : H → H is a single-valued mapping, and 〈⋅,⋅〉 and ∥⋅∥ are the inner product and the induced norm on H, respectively. We denote by Sol(C,F) the solution set of problem (1). Variational inequality problems are fundamental in a broad range of mathematical and applied sciences; the theoretical and algorithmic foundations, as well as the applications of variational inequalities, have been extensively studied in the literature and continue to attract intensive research. For a detailed exposition of the field in the finite-dimensional setting, see, for instance, [9] and the extensive list of references therein.

Many authors have proposed and analyzed several iterative methods for solving the variational inequality (1). The simplest one is the following projection method, which can be considered an extension of the projected gradient method for optimization problems:

for each n ≥ 1, where PC denotes the metric projection from H onto C. Convergence results for this method require some monotonicity properties of F. This method converges under quite strong hypotheses. If F is Lipschitz continuous with Lipschitz constant L and α-strongly monotone, then the sequence generated by (2) converges to an element of Sol(C,F) for \( \lambda \in \left (0, \frac {2\alpha }{L^{2}}\right )\).

In order to find an element of Sol(C,F) under weaker hypotheses, Korpelevich [21] (and, independently, Antipin [1]) proposed to replace method (2) by the extragradient method in the finite-dimensional Euclidean space \(\mathbb {R}^{m}\) for a monotone and L-Lipschitz continuous operator \(F: \mathbb {R}^{m}\to \mathbb {R}^{m}\). Her algorithm is of the form

where \(\lambda \in \left (0,\frac {1}{L}\right )\). The sequence {xn} generated by (3) converges to an element of Sol(C,F) provided that Sol(C,F) is nonempty.

In recent years, the extragradient method has been extended to infinite-dimensional spaces in various ways; see, for example, [3,4,5,6, 22, 25, 26, 30,31,32] and the references cited therein.

We may observe that, when F is not Lipschitz continuous or the constant L is very difficult to compute, Korpelevich’s method is not so practical because we cannot determine the step size λ. To overcome this difficulty, Iusem [16] proposed in the Euclidean space \(\mathbb {R}^{m}\) the following iterative algorithm for solving Sol(C,F):

where γn > 0 is computed through an Armijo-type search and \(\lambda _{n}=\frac {\langle Fy_{n},x_{n}-y_{n}\rangle }{\|Fy_{n}\|^{2}}\). This modification has allowed the author to establish convergence without assuming Lipschitz continuity of the operator F.

In order to determine the step size γn in (4), we need to use a line search procedure which contains one projection. So at iteration n, if this procedure requires mn steps to arrive at the appropriate γn, then we need to evaluate mn projections.

To overcome this difficulty, Iusem and Svaiter [19] proposed a modified extragradient method for solving monotone variational inequalities which only requires two projections onto C at each iteration. A few years later, this method was improved by Solodov and Svaiter [30]. They introduced an algorithm for solving (1) in finite-dimensional spaces. As a matter of fact, their method applies to a more general case, where F is merely continuous and satisfies the following condition:

Property (5) holds if F is monotone or, more generally, pseudomonotone on C in the sense of Karamardian [20]. More precisely, Solodov and Svaiter proposed the following algorithm:

Vuong and Shehu [36] have recently modified the result of Solodov and Svaiter in the spirit of Halpern [14], and obtained strong convergence in infinite-dimensional real Hilbert spaces. Their algorithm is of the following form:

Vuong and Shehu proved that if F : H → H is pseudomonotone, uniformly continuous, and weakly sequentially continuous on bounded subsets of C, and the sequence {αn} satisfies the conditions \(\lim _{n\to \infty }\alpha _{n}=0\) and \({\sum }_{n=1}^{\infty } \alpha _{n}=\infty \), then the sequence {xn} generated by Algorithm 2 converges strongly to p ∈ Sol(C,F), where p = PCx1.

Motivated and inspired by [30, 36], and by the ongoing research in these directions, in the present paper, we introduce new algorithms for solving variational inequalities with uniformly continuous pseudomonotone operators. In particular, we use a different Armijo-type line search in order to obtain a hyperplane which strictly separates the current iterate from the solutions of the variational inequality under consideration.

Our paper is organized as follows. We first recall in Section 2 some basic definitions and results. Our algorithms are presented and analyzed in Section 3. In Section 4, we present several numerical experiments which illustrate the performance of the algorithms. They also provide a preliminary computational overview by comparing it with the performance of several related algorithms.

2 Preliminaries

Let H be a real Hilbert space and C be a nonempty, closed, and convex subset of H. The weak convergence of a sequence \(\{x_{n}\}_{n=1}^{\infty }\) to x as \(n \to \infty \) is denoted by \(x_{n}\rightharpoonup x\) while the strong convergence of \(\{x_{n}\}_{n=1}^{\infty }\) to x as \(n \to \infty \) is denoted by xn → x. For each x,y ∈ H, we have

Definition 2.1

Let F : H → H be an operator. Then,

-

1.

The operator F is called L-Lipschitz continuous with Lipschitz constant L > 0 if

$$ \|Fx-Fy\|\le L \|x-y\| \ \ \ \forall x,y \in H. $$If L = 1, then the operator F is called nonexpansive and if L ∈ (0,1), then F is called a strict contraction.

-

2.

F is called monotone if

$$ \langle Fx-Fy,x-y \rangle \geq 0 \ \ \ \forall x,y \in H. $$ -

3.

F is called pseudomonotone if

$$ \langle Fx,y-x \rangle \geq 0 \Longrightarrow \langle Fy,y-x \rangle \geq 0 \ \ \ \forall x,y \in H. $$ -

4.

F is called α-strongly monotone if there exists a constant α > 0 such that

$$ \langle Fx-Fy,x-y\rangle\geq \alpha \|x-y\|^{2} \ \ \forall x,y\in H. $$ -

5.

The operator F is called sequentially weakly continuous if the weak convergence of a sequence {xn} to x implies that the sequence {Fxn} converges weakly to Fx.

It is easy to see that every monotone operator is pseudomonotone, but the converse is not true.

For each point x ∈ H, there exists a unique nearest point in C, denoted by PCx, which satisfies ∥x − PCx∥≤∥x − y∥ ∀y ∈ C. The mapping PC is called the metric projection of H onto C. It is known that PC is nonexpansive.

Lemma 2.1

([13]) Let C be a nonempty, closed, and convex subset of a real Hilbert space H. If x ∈ H and z ∈ C, then z = PCx⇔〈x − z,z − y〉≥ 0 ∀y ∈ C.

Lemma 2.2

([13]) Let C be a closed and convex subset of a real Hilbert space H and let x ∈ H. Then,

i) ∥PCx − PCy∥2 ≤〈PCx − PCy,x − y〉 ∀y ∈ H;

ii) ∥PCx − y∥2 ≤∥x − y∥2 −∥x − PCx∥2 ∀y ∈ C.

More properties of the metric projection can be found in Section 3 in [13].

The following lemmas are useful in the convergence analysis of our proposed methods.

Lemma 2.3

([17, 18]) Let H1 and H2 be two real Hilbert spaces. Suppose F : H1 → H2 is uniformly continuous on bounded subsets of H1 and M is a bounded subset of H1. Then, F(M) is bounded.

Lemma 2.4

([7], Lemma 2.1) Let C be a nonempty, closed, and convex subset of a real Hilbert space H, and let F : C → H be pseudomonotone and continuous. Then, x∗ belongs to Sol(C,F) if and only if

The following lemma can be found in [15].

Lemma 2.5

Let C be a nonempty, closed, and convex subset of a real Hilbert space H. Let h be a real-valued function on H and define K := {x ∈ C : h(x) ≤ 0}. If K is nonempty and h is Lipschitz continuous on C with modulus 𝜃 > 0, then

where dist(x,K) denotes the distance of x to K.

Lemma 2.6

([28]) Let C be a nonempty subset of H and let {xn} be a sequence in H such that the following two conditions hold:

-

i)

For every x ∈ C, \(\lim _{n\to \infty }\|x_{n}-x\|\) exists;

-

ii)

Every sequential weak cluster point of {xn} is in C.

Then, {xn} converges weakly to a point in C.

The next technical lemma is very useful and has been used by many authors; see, for example, Liu [23] and Xu [37]. A variant of this lemma has already been used by Reich in [29].

Lemma 2.7

Let {an} be sequence of non-negative real numbers such that:

where {αn}⊂ (0,1) and {bn} is a real sequence such that

-

a)

\({\sum }_{n=0}^{\infty } \alpha _{n}=\infty \);

-

b)

\(\limsup _{n\to \infty }b_{n}\le 0\).

Then, \(\lim _{n\to \infty }a_{n}=0\).

3 Main results

In this section, we introduce two new methods for solving (1). In the convergence analysis of these algorithms, the following three conditions are assumed.

Condition 3.1

The feasible set C is a nonempty, closed, and convex subset of the real Hilbert space H.

Condition 3.2

The operator F : C → H associated with the VI (1) is pseudomonotone and uniformly continuous on C.

Condition 3.3

The mapping F : H → H satisfies the following property:

Condition 3.4

The solution set of the VI (1) is nonempty, that is, Sol(C,F)≠∅.

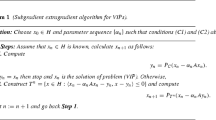

3.1 Weak convergence

We begin by introducing a new projection-type algorithm.

Lemma 3.1

Assume that Conditions 3.1–3.4 hold. Then, the Armijo-type search rule (3.1) is well defined.

Proof

Since l ∈ (0,1) and the operator F is continuous on C, the sequence {〈Fxn − F(xn − ljrλ(xn)),rλ(xn)〉} converges to zero as j tends to infinity. On the other hand, as a consequence of Step 1, ∥rλ(xn)∥ > 0 (otherwise, the procedure would have stopped). Therefore, there exists a non-negative integer jn satisfying (3.1). □

Lemma 3.2

Assume that the sequence {xn} is generated by Algorithm 3. Then, we have

Proof

Since PC is the metric projection, we know that ∥x − PCy∥2 ≤〈x − y,x − PCy〉 for all x ∈ C and y ∈ H. Let y = xn − λFxn,x = xn. Then,

and so

□

Lemma 3.3

Assume that Conditions 3.1–3.4 hold. Let x∗ be a solution of problem (1) and let the function hn be defined by (3.1). Then, \( h_{n} (x_{n}) =\frac {\tau _{n}}{2\lambda }\|r_{\lambda } (x_{n})\|^{2}\) and hn(x∗) ≤ 0. In particular, if rλ(xn)≠ 0, then hn(xn) > 0.

Proof

The first claim of Lemma 3.3 is obvious. In order to prove the second claim, Let x∗ be a solution of problem (1) Then by Lemma 2.4, we have hn(x∗) = 〈Fyn,yn − x∗〉≥ 0. We also have

On the other hand, by (3.1) we have

Thus,

Using Lemma 3.2, we get

Combining (10) and (11), we now see that

Thus, hn(x∗) ≤ 0, as asserted. □

We adapt the technique developed in [35] to obtain the following result.

Lemma 3.4

Assume that Conditions 3.1–3.4 hold. Let {xn} be a sequence generated by Algorithm 3. If there exists a subsequence \(\{x_{n_{k}}\}\) of {xn} such that \(\{x_{n_{k}}\}\) converges weakly to z ∈ C and \(\lim _{k\to \infty }\|x_{n_{k}}-z_{n_{k}}\|=0\), then z ∈ Sol(C,F).

Proof

Since \(z_{n_{k}}=P_{C}(x_{n_{k}}-\lambda F_{n_{k}})\), we have

or equivalently,

This implies that

Since \(\|x_{n_{k}}-z_{n_{k}}\|\to 0\) as \(k \to \infty \) and since the sequence \(\{Fx_{n_{k}}\}\) is bounded, taking \(k \to \infty \) in (12), we get

Next, to show that z ∈ Sol(C,F), we first choose a decreasing sequence {𝜖k} of positive numbers which tends to 0. For each k, we denote by Nk the smallest positive integer such that

where the existence of Nk follows from (13). Since the sequence {𝜖k} is decreasing, it is easy to see that the sequence {Nk} is increasing. Furthermore, for each k, since \(\{x_{N_{k}}\}\subset C\), we have \(Fx_{N_{k}}\ne 0\) and setting

we have \(\langle Fx_{N_{k}}, x_{N_{k}}\rangle = 1\) for each k. Now, we can deduce from (14) that for each k,

Since the operator F is pseudomonotone, it follows that

This implies that

Next, we show that \(\lim _{k\to \infty }\epsilon _{k} v_{N_{k}}=0\). Indeed, we have \(x_{n_{k}}\rightharpoonup z\in C \text { as } k \to \infty \). Since F satisfies Condition 3.3, we have

Since \(\{x_{N_{k}}\}\subset \{x_{n_{k}}\}\) and 𝜖k → 0 as \(k \to \infty \), we obtain

which implies that \(\lim _{k\to \infty } \epsilon _{k} v_{N_{k}} = 0\).

Now, letting \(k\to \infty \), we see the right-hand side of (15) tends to zero because F is uniformly continuous, the sequences \(\{x_{n_{k}}\}\) and \(\{v_{N_{k}}\}\) are bounded, and \(\lim _{k\to \infty }\epsilon _{k} v_{N_{k}}=0\). Thus, we get

Hence, for all x ∈ C, we have

Appealing to Lemma 2.4, we obtain that z ∈ Sol(C,F) and the proof is complete. □

Lemma 3.5

Assume that Conditions 3.1–3.3 hold. Let {xn} be a sequence generated by Algorithm 3. If \( \lim _{n\to \infty }\tau _{n}\|r_{\lambda } (x_{n})\|^{2}=0\), then \( \lim _{n\to \infty }\|x_{n}-z_{n}\|=0\).

Proof

First we consider the case where \(\liminf _{n\to \infty }\tau _{n}>0\). In this case, there is a constant τ > 0 such that τn ≥ τ > 0 for all \(n\in \mathbb {N}\). We then have

Combining the assumption and (16), we see that

Second, we consider the case where \(\liminf _{n\to }\tau _{n}=0 \). In this case, we take a subsequence {nk} of {n} such that

and

Let \(y_{n_{k}}=\frac {1}{l}\tau _{n_{k}} z_{n_{k}}+(1-\frac {1}{l}\tau _{n_{k}})x_{n_{k}}\). Since \(\lim _{n\to \infty }\tau _{n}\|r_{\lambda } (x_{n})\|^{2}=0\), we have

From the step size rule (3.1) and the definition of yk, it follows that

Since F is uniformly continuous on bounded subsets of C, (18) implies that

Combining now (19) and (20), we obtain

This, however, is a contradiction to (17). It follows that \(\lim _{n \to \infty } \|x_{n} - z_{n}\| = 0\) and this completes the proof of the lemma. □

Theorem 3.1

Assume that Conditions 3.1–3.4 hold. Then, any sequence {xn} generated by Algorithm 3 converges weakly to an element of Sol(C,F).

Proof

Claim 1. We first prove that {xn} is a bounded sequence. Indeed, for p ∈ Sol(C,F), we have

This implies that

and so \(\lim _{n\to \infty }\|x_{n}-p\|\) exists. Thus, the sequence {xn} is bounded, and it also follows that the sequences {yn} and {Fyn} are bounded too.

Claim 2. We claim that

for some L > 0. Indeed, since the sequence {Fyn} is bounded, there exists L > 0 such that ∥Fyn∥≤ L for all n. Using this fact, we see that for all u,v ∈ Cn,

This implies that hn(⋅) is L-Lipschitz continuous on Cn. By Lemma 2.5, we obtain

which, when combined with Lemma 3.3, yields the inequality

Combining the proof of Claim 1 with (23), we obtain

which implies, in its turn, Claim 2.

Claim 3. We claim that {xn} converges weakly to an element of Sol(C,F). Indeed, since {xn} is a bounded sequence, there exists a subsequence \(\{x_{n_{k}}\}\) of {xn} such that \(\{x_{n_{k}}\}\) converges weakly to z ∈ C.

Appealing to Claim 2, we find that

Thanks to Lemma 3.5 we also get

Using Lemma 3.4 and (24), we may infer that z ∈ Sol(C,F).

Thus, we have proved that

-

i)

For every p ∈ Sol(C,F), the limit \(\lim _{n\to \infty }\|x_{n}-p\|\) exists;

-

ii)

Every sequential weak cluster point of the sequence {xn} is in Sol(C,F).

Lemma 2.6 now implies that the sequence {xn} converges weakly to an element of Sol(C,F).

□

Remark 3.1

1. When the operator F is monotone, it is not necessary to assume Condition 3.3 (see, [8, 35]).

2. Note that in our work we use Condition 3.3, which is strictly weaker than the sequential weak continuity of the operator F, an assumption which has frequently been used in recent articles on pseudomonotone variaional inequality problems [12, 33,34,35,36]. Indeed, if F is sequentially weakly continuous, then Condition 3.3 is fulfilled because the norm is weakly lower semicontinuous. On the other hand, it is not difficult to see that the operator F : H → H, defined by F(x) := ∥x∥x,x ∈ H, satisfies condition 3.3, but is not sequentially weakly continuous.

3.2 Strong convergence

In this section, we introduce an algorithm for solving variational inequalities which is based on the viscosity method [27] and on Algorithm 3. We assume that f : C → C is a contractive mapping with a coefficient ρ ∈ [0,1) and that the following condition is satisfied:

Condition 3.5

The real sequence {αn} is contained in (0,1) and satisfies

Theorem 3.2

Assume that Conditions 3.1–3.4 and 3.5 hold. Then, any sequence {xn} generated by Algorithm 4 converges strongly to p ∈ Sol(C,F), where p = PSol(C,F) ∘ f(p).

Proof

Claim 1. We first prove that the sequence {xn} is bounded. To this end, let \(w_{n}=P_{C_{n}}x_{n}\). By (21) we have

This implies that

Using (25), we have

Thus, the sequence {xn} is indeed bounded. Consequently, the sequences {yn}, {f(xn)}, and {Fyn} are bounded too.

Claim 2. We claim that

To prove this, we first note that

On the other hand, we have

Substituting (27) into (26), we get

This implies that

Claim 3. We claim that

Indeed, according to (22), we get

It follows from the definition of the sequence {xn} and (28) that

This implies that

Claim 4. We prove that

Indeed, we have

Claim 5. Now we intend to show that the sequence {∥xn − p∥2} converges to zero by considering two possible cases.

Case 1: There exists an \(N\in {\mathbb N}\) such that ∥xn+ 1 − p∥2 ≤∥xn − p∥2 for all n ≥ N. This implies that \(\lim _{n\to \infty }\| x_{n}-p\|^{2}\) exists. It now follows from Claim 2 that

Since the sequence {xn} is bounded, there exists a subsequence \(\{x_{n_{k}}\}\) of {xn} that weakly converges to some point z ∈ C such that

Now, according to Claim 3, we see that

It follows that

Thanks to Lemma 3.5, we infer that

Using the fact that \(x_{n_{k}}\rightharpoonup z\), (29), and Lemma 3.4, we now conclude that z ∈ Sol(C,F).

On the other hand,

Thus,

Since p = PSol(C,F)f(p) and \(x_{n_{k}} \rightharpoonup z\in Sol(C,F)\), we get

This implies that

which, when combined with Claim 4 and Lemma 2.7, implies that

Case 2: Assume that there is no \(n_{0} \in \mathbb {N}\) such that \(\{\|x_{n}-p\|\}_{n=n_{0}}^{\infty }\) is monotonically decreasing. In this case, we adapt a technique of proof used in [24]. Set Γn = ∥xn − p∥2 for all n ≥ 1 and let \(\eta : \mathbb {N} \to \mathbb {N}\) be a mapping defined for all n ≥ n0 (for some n0 large enough) by

that is, η(n) is the largest number k in {1,...,n} such that Γk increases at k = η(n); note that, in view of Case 2, this η(n) is well defined for all sufficiently large n. Clearly, η is an increasing sequence such that \(\eta (n)\to \infty \) as \(n \to \infty \) and

According to Claim 2, we have

From Claim 3, it follows that

Using the same arguments as in the proof of Case 1, we obtain

and

Thanks to Claim 4 we get

Thus,

which, when combined with (30), implies that \(\limsup _{n\to \infty }\|x_{\eta (n)+1}-p\|^{2}\le 0\), that is, \(\lim _{n\to \infty }\|x_{\eta (n)+1}-p\|=0\).

Next, we show that for all sufficiently large n, we have

Indeed, for n ≥ n0, it is not difficult to observe that η(n) ≤ n for n ≥ n0. Now consider the following three cases: η(n) = n,η(n) = n − 1, and η(n) < n − 1. In the first and second cases, it is obvious that Γn ≤Γη(n)+ 1 for n ≥ n0. In the third case, η(n) ≤ n − 2, we infer from the definition of η(n) that for any integer n ≥ n0, Γj ≥Γj+ 1 for η(n) + 1 ≤ j ≤ n − 1. Thus, \({\varGamma }_{\eta (n)+1} \geq {\varGamma }_{\eta (n)+2} \geq {\dots } \geq {\varGamma }_{n-1} \geq {\varGamma }_{n}\). As a consequence, we obtain inequality (31). Now, using (31) and \(\lim _{n\to \infty }\|x_{\eta (n)+1}-p\|=0\), we conclude that xn → p as \(n\to \infty \). □

Applying Algorithm 3 with f(x) := x1 for all x ∈ C, we obtain the following corollary.

Corollary 3.1

Given μ > 0,l ∈ (0,1), and \(\lambda \in (0,\frac {1}{\mu })\), let x1 ∈ C be arbitrary. Compute

and rλ(xn) := xn − zn. If rλ(xn) = 0, then stop; xn is a solution of Sol(C,F). Otherwise,

Compute

where \(\tau _{n}:=l^{j_{n}}\) and jn is the smallest non-negative integer j satisfying

Compute

where

Assume that Conditions 3.1–3.4 hold. Then, the sequence {xn} converges strongly to a point p ∈ Sol(C,F), where p = PSol(C,F)x1.

4 Numerical illustrations

In this section, we provide several numerical examples regarding our proposed algorithms. We compare Algorithm 3 (also called Proposed Alg. 3.3 or TD Agl) with Algorithm 1 (Solodov and Svaiter, Alg. 1.1) and Algorithm 2 (Vuong and Shehu, Alg. 1.2) in Examples 1 and 2. In Example 3, we compare Algorithm 4 (also called Algorithm 3.4) with Algorithm 2 (also called Algorithm 1.2). All the numerical experiments were performed on an HP laptop with Intel(R) Core(TM)i5-6200U CPU 2.3GHz with 4 GB RAM. All the programs were written in Matlab2015a.

Example 1

We first consider a classical example for which the usual gradient method does not converge to a solution of the variational inequality. The feasible set is \(C := \mathbb {R}^{m}\) (for some positive even integer m) and F := (aij)1≤i,j≤m is the m × m square matrix the terms of which are given by

It is clear that the zero vector x⋆ = (0,...,0) is the solution of this test example. We take \(\alpha _{n}=\frac {1}{n}\) and the starting point is \(x_{1} = (1, 1, . . . , 1)^{T} \in \mathbb {R}^{m}\). We terminate the iterations if ∥xn − x⋆∥≤ 𝜖 with 𝜖 = 10− 4 or if the number of iterations ≥ 1000. The results are presented in Table 1 and in Figs. 1 and 2 below.

Example 2

Assume that \(F:\mathbb {R}^{m} \to \mathbb {R}^{m}\) is defined by F(x) := Mx + q with M = NNT + S + D, N is an m × m matrix, S is an m × m skew-symmetric matrix, D is an m × m diagonal matrix, whose diagonal entries are positive (so M is positive definite), q is a vector in \(\mathbb {R}^{m}\), and

It is clear that F is monotone and Lipschitz continuous with a Lipschitz constant L = ∥M∥. Thus, F is a uniformly continuous pseudomonotone operator. For q = 0, the unique solution of the corresponding variational inequality is {0}.

In our experiments, all the entries of N, S, and D are generated randomly in the interval (− 2,2) and those of D are in the interval (0,1). The starting point is \(x_{1} = (1, 1, . . . , 1)^{T} \in \mathbb {R}^{m}\) and \(\alpha _{n}=\frac {1}{\sqrt {n}}\). We use the stopping rule ∥xn − x⋆∥≤ 10− 4 and we also stop if the number of iteration ≥ 1000 for all the algorithms. The numerical results are presented in Table 2 and in Figs. 3 and 4.

Example 3

Consider C := {x ∈ H : ∥x∥≤ 2}. Let \(g: C\rightarrow \mathbb {R}\) be defined by \(g(u):=\frac {1}{1+\|u\|^{2}}\). Observe that g is Lg-Lipchitz continuous with \(L_{g}=\frac {16}{25}\) and \(\frac {1}{5}\leq g(u)\leq 1,~~\forall u \in C\). Define the Volterra integral operator \(A :L^{2}([0,1]) \rightarrow L^{2}([0,1])\) by

The operator A is bounded and linear monotone (see Exercise 20.12 of [2]) and \(\|A\|=\frac {2}{\pi }\). Next, define \(F:C\rightarrow L^{2}([0,1])\) by F(u)(t) := g(u)A(u)(t), ∀u ∈ C,t ∈ [0,1]. Then, F is pseudomonotone and LF-Lipschitz-continuous with \(L_{F} = \frac {82}{\pi }\).

Take μ = 0.3, l = 0.9, and \(\alpha _{n}=\frac {1}{n}\) in Algorithm 4 and Algorithm 2. Choose \(\lambda =\frac {0.9}{\mu }\) and f(x) := x1 in Algorithm 4. Let the initial point be \(x_{0}=\sin \limits (2\pi t^{2})\).

We compared Algorithm 4 with Algorithm 2. The numerical results are presented in Fig. 5. It shows that the performance of Algorithm 4 is better than that of Algorithm 2.

5 Conclusions

In this paper, we have proposed new projection-type algorithms for solving variational inequalities in real Hilbert spaces. We have established weak and strong convergence theorems for these algorithms under a pseudomonotonicity assumption imposed on the cost operator, which is not assumed to be Lipschitz continuous. Moreover, our algorithms require the calculation of only two projections onto the feasible set per each iteration. These two properties bring out the advantages of our proposed algorithms over several existing algorithms which have recently been proposed in the literature. Numerical experiments in both finite- and infinite-dimensional spaces illustrate the good performance of our new schemes.

References

Antipin, A.S.: On a method for convex programs using a symmetrical modification of the Lagrange function. Ekonomika i Mat. Metody 12, 1164–1173 (1976)

Bauschke, H.H., Combettes, P.L.: Convex Analysis and Monotone Operator Theory in Hilbert Spaces. Springer, New York (2011). (CMS Books in Mathematics)

Censor, Y., Gibali, A, Reich, S.: The subgradient extragradient method for solving variational inequalities in Hilbert space. J. Optim. Theory Appl. 148, 318–335 (2011)

Censor, Y., Gibali, A., Reich, S.: Strong convergence of subgradient extragradient methods for the variational inequality problem in Hilbert space. Optim. Meth. Softw. 26, 827–845 (2011)

Censor, Y., Gibali, A., Reich, S.: Extensions of Korpelevich’s extragradient method for the variational inequality problem in Euclidean space. Optimization 61, 1119–1132 (2011)

Censor, Y., Gibali, A., Reich, S.: Algorithms for the split variational inequality problem. Numer. Algorithms 56, 301–323 (2012)

Cottle, R.W., Yao, J.C.: Pseudo-monotone complementarity problems in Hilbert space. J. Optim. Theory Appl. 75, 281–295 (1992)

Denisov, S.V., Semenov, V.V., Chabak, L.M.: Convergence of the modified extragradient method for variational inequalities with non-Lipschitz operators. Cybern. Syst. Anal. 51, 757–765 (2015)

Facchinei, F., Pang, J.S.: Finite-Dimensional Variational Inequalities and Complementarity Problems. Springer Series in Operations Research, vols. I and II. Springer, New York (2003)

Fichera, G.: Sul problema elastostatico di Signorini con ambigue condizioni al contorno. Atti Accad. Naz. Lincei, VIII. Ser., Rend., Cl. Sci. Fis. Mat. Nat. 34, 138–142 (1963)

Fichera, G.: Problemi elastostatici con vincoli unilaterali: il problema di Signorini con ambigue condizioni al contorno. Atti Accad. Naz. Lincei, Mem., Cl. Sci. Fis. Mat. Nat., Sez. I, VIII. Ser. 7, 91–140 (1964)

Gibali, A., Thong, D.V.: A new low-cost double projection method for solving variational inequalities. Optim. Eng. https://doi.org/10.1007/s11081-020-09490-2(2020)

Goebel, K., Reich, S.: Uniform Convexity, Hyperbolic Geometry, and Nonexpansive Mappings. Marcel Dekker, New York (1984)

Halpern, B.: Fixed points of nonexpanding maps. Bull. Am. Math. Soc. 73, 957–961 (1967)

He, Y.R.: A new double projection algorithm for variational inequalities. J. Comput. Appl. Math. 185, 166–173 (2006)

Iusem, A.N.: An iterative algorithm for the variational inequality problem. Comput. Appl. Math. 13, 103–114 (1994)

Iusem, A.N., Gárciga, O.R.: Inexact versions of proximal point and augmented Lagrangian algorithms in Banach spaces. Numer. Funct. Anal. Optim. 22, 609–640 (2001)

Iusem, A.N., Nasri, M.: Korpelevich’s method for variational inequality problems in Banach spaces. J. Global Optim. 50, 59–76 (2011)

Iusem, A.N., Svaiter, B.F.: A variant of Korpelevich’s method for variational inequalities with a new search strategy. Optimization 42, 309–321 (1997)

Karamardian, S.: Complementarity problems over cones with monotone and pseudomonotone maps. J. Optim. Theory Appl. 18, 445–454 (1976)

Korpelevich, G.M.: The extragradient method for finding saddle points and other problems. Ekonomika i Mat. Metody 12, 747–756 (1976)

Kraikaew, R., Saejung, S.: Strong convergence of the Halpern subgradient extragradient method for solving variational inequalities in Hilbert spaces. J. Optim. Theory Appl. 163, 399–412 (2014)

Liu, L.S.: Ishikawa and Mann iteration process with errors for nonlinear strongly accretive mappings in Banach space. J. Math. Anal. Appl. 194, 114–125 (1995)

Maingé, P.E.: A hybrid extragradient-viscosity method for monotone operators and fixed point problems. SIAM J. Control Optim. 47, 1499–1515 (2008)

Malitsky, Y.V.: Projected reflected gradient methods for monotone variational inequalities. SIAM J. Optim. 25, 502–520 (2015)

Malitsky, Y.V., Semenov, V.V.: A hybrid method without extrapolation step for solving variational inequality problems. J. Glob. Optim. 61, 193–202 (2015)

Moudafi, A.: Viscosity approximating methods for fixed point problems. J. Math. Anal. Appl. 241, 46–55 (2000)

Opial, Z.: Weak convergence of the sequence of successive approximations for nonexpansive mappings. Bull. Am. Math. Soc. 73, 591–597 (1967)

Reich, S.: Constructive Techniques for Accretive and Monotone Operators. Applied Nonlinear Analysis, pp 335–345. Academic Press, New York (1979)

Solodov, M.V., Svaiter, B.F.: A new projection method for variational inequality problems. SIAM J. Control Optim. 37, 765–776 (1999)

Thong, D.V., Hieu, D.V.: Modified subgradient extragradient method for variational inequality problems. Numer. Algorithms. 79, 597–610 (2018)

Thong, D.V., Hieu, D.V.: Inertial extragradient algorithms for strongly pseudomonotone variational inequalities. J. Comput. Appl. Math. 341, 80–98 (2018)

Thong, D.V., Shehu, Y., Iyiola, O.S.: A new iterative method for solving pseudomonotone variational inequalities with non-Lipschitz operators. Comp. Appl. Math. 39, 108 (2020). https://doi.org/10.1007/s40314-020-1136-6

Thong, D.V., Shehu, Y., Iyiola, O.S.: New hybrid projection methods for variational inequalities involving pseudomonotone mappings. Optim. Eng. https://doi.org/10.1007/s11081-020-09518-7 (2020)

Vuong, P.T.: On the weak convergence of the extragradient method for solving pseudo-monotone variational inequalities. J. Optim. Theory Appl. 176, 399–409 (2018)

Vuong, P.T., Shehu, Y.: Convergence of an extragradient-type method for variational inequality with applications to optimal control problems. Numer. Algorithms 81, 269–291 (2019)

Xu, H.K.: Iterative algorithms for nonlinear operators. J. Lond. Math. Soc. 66, 240–256 (2002)

Acknowledgments

All the authors are grateful to an anonymous referee for several helpful comments and useful suggestions.

Funding

Simeon Reich was partially supported by the Israel Science Foundation (Grant 820/17), the Fund for the Promotion of Research at the Technion and by the Technion General Research Fund. Vu Tien Dung was partially supported by the National Foundation for Science and Technology Development under Grant 101.01-2019.320.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Reich, S., Thong, D.V., Dong, QL. et al. New algorithms and convergence theorems for solving variational inequalities with non-Lipschitz mappings. Numer Algor 87, 527–549 (2021). https://doi.org/10.1007/s11075-020-00977-8

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11075-020-00977-8

Keywords

- Projection-type method

- Pseudomonotone operator

- Strong convergence

- Variational inequality

- Viscosity method

- Weak convergence