Abstract

The purpose of this paper is to investigate pseudomonotone variational inequalities in real Hilbert spaces. To solve this problem, we introduce a new method that combines the advantages of the subgradient extragradient method and the projection and contraction method. We establish the strong convergence of the proposed algorithm under pseudomonotonicity and Lipschitz continuity assumptions. Moreover, under the additional assumption of strong pseudomonotonicity (together with Lipschitz continuity), we obtain the linear convergence of the sequence generated by the proposed algorithm. Numerical examples are provided to illustrate the potential of our algorithms and to compare their performance with several related methods.


1 Introduction

Let H be a real Hilbert space with inner product \(\langle \cdot ,\cdot \rangle\) and norm \(\Vert \cdot \Vert\). Let C be a nonempty, closed and convex subset of H, and let \(F: H\rightarrow H\) be a single-valued continuous mapping. We consider the classical variational inequality (VI) in the sense of Fichera [14] and Stampacchia [30] (see also Kinderlehrer and Stampacchia [21]), which is formulated as follows: find a point \(x^{*}\in C\) such that

$$\begin{aligned} \langle Fx^{*},x-x^{*} \rangle \ge 0 \ \ \ \forall x \in C. \end{aligned}$$(1)

We denote by Sol(C, F) the solution set of the VI (1), which is assumed to be nonempty.

In this work, we assume that the following conditions hold:

Condition 1

The solution set \(\text {Sol}(C,F)\) is nonempty.

Condition 2

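The mapping \(F:H\rightarrow H\) is pseudomonotone on H, that is,

$$\begin{aligned} \langle Fx,y-x \rangle \ge 0 \Longrightarrow \langle Fy,y-x \rangle \ge 0 \ \ \ \forall x,y \in H. \end{aligned}$$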

Condition 3

The mapping \(F:H\rightarrow H\) is Lipschitz continuous with constant \(L>0\), that is, there exists a number \(L>0\) such that

$$\begin{aligned} \Vert Fx-Fy\Vert \le L \Vert x-y\Vert \ \ \ \forall x,y \in H. \end{aligned}$$

The variational inequality (VI) is a very general mathematical model with numerous applications in economics, engineering mechanics, transportation and many other fields; see, for example, [2, 13, 21, 23]. During the last decades, many algorithms for solving VIs have been proposed in the literature; see, e.g., [3, 10, 13, 15, 16, 21, 34, 37].

The best-known of these is the extragradient method proposed by Korpelevich [22] (and independently by Antipin [1]). However, the extragradient method requires the evaluation of two orthogonal projections onto C per iteration, which can be expensive when C has a complicated structure. The first method that overcomes this obstacle is the projection and contraction (PC) method of He [18] and Sun [33]. Their algorithm is of the form:

and then the next iterate \(x_{n+1}\) is generated via the following

where \(\gamma \in (0,2)\),

and

where \(F: C\rightarrow H\) is a monotone and L-Lipschitz continuous operator, and either \(\tau _{n}\in (0,1/L)\) or \(\tau _{n}\) is updated by an adaptive rule as follows:

Recently, projection and contraction type methods for solving VIs have received great attention from many authors; see, e.g., [4, 11, 12, 23, 27, 36].
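For concreteness, here is a minimal Python/NumPy sketch of the PC iteration. Since the displayed formulas above are missing from this text, it assumes the standard form with a fixed stepsize \(\tau \in (0,1/L)\): \(y_{n}=P_{C}(x_{n}-\tau Fx_{n})\), \(d_{n}=x_{n}-y_{n}-\tau (Fx_{n}-Fy_{n})\), \(\eta _{n}=\langle x_{n}-y_{n},d_{n}\rangle /\Vert d_{n}\Vert ^{2}\) and \(x_{n+1}=x_{n}-\gamma \eta _{n}d_{n}\) with \(\gamma \in (0,2)\). The box-shaped C, the affine test mapping and the function names are illustrative choices, not taken from [18] or [33].

```python
import numpy as np

def projection_contraction(F, proj_C, x0, tau, gamma=1.9, tol=1e-10, max_iter=10_000):
    """Projection and contraction (PC) iteration with a fixed stepsize tau in (0, 1/L)."""
    x = np.asarray(x0, dtype=float)
    for _ in range(max_iter):
        Fx = F(x)
        y = proj_C(x - tau * Fx)              # the only projection onto C per iteration
        r = x - y
        if np.linalg.norm(r) <= tol:          # x is (numerically) a solution
            break
        d = r - tau * (Fx - F(y))             # PC direction d(x, y)
        eta = np.dot(r, d) / np.dot(d, d)     # <x - y, d> / ||d||^2; d != 0 when tau * L < 1
        x = x - gamma * eta * d               # contraction step with gamma in (0, 2)
    return x

# Illustrative monotone test problem: F(x) = M (x - x_sol) with x_sol inside C = [-1, 1]^2.
M = np.array([[2.0, 1.0], [-1.0, 2.0]])       # ||M|| = sqrt(5), so tau must be < 1/sqrt(5)
x_sol = np.array([0.5, -0.3])
F = lambda x: M @ (x - x_sol)
proj_C = lambda x: np.clip(x, -1.0, 1.0)
x = projection_contraction(F, proj_C, x0=[1.0, 1.0], tau=0.4)
print(x)
```

The only projection onto C happens in the first line of the loop; the second step is a plain vector update along \(d_{n}\), which is what makes this scheme cheaper per iteration than the extragradient method.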

The second extension of the extragradient method is the subgradient extragradient method proposed by Censor et al. [6,7,8]. In this algorithm, the second projection onto the feasible set C is replaced by a projection onto an easily constructed half-space that contains C. For each \(n\in \mathbb {N}\), the following sequences are generated:

where \(\tau \in (0,1/L)\).
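The subgradient extragradient iteration can be sketched in the same way, assuming its usual form \(y_{n}=P_{C}(x_{n}-\tau Fx_{n})\), \(T_{n}=\{w\in H:\langle x_{n}-\tau Fx_{n}-y_{n},w-y_{n}\rangle \le 0\}\) and \(x_{n+1}=P_{T_{n}}(x_{n}-\tau Fy_{n})\). By the characterization of \(P_{C}\) in Lemma 2.1, C is contained in \(T_{n}\), and the projection onto a half-space \(\{w:\langle a,w-p\rangle \le 0\}\) with \(a\ne 0\) has the closed form \(z-\frac{\max \{0,\langle a,z-p\rangle \}}{\Vert a\Vert ^{2}}a\). Function names and the test problem are illustrative assumptions.

```python
import numpy as np

def project_halfspace(z, a, p):
    """Explicit projection of z onto {w : <a, w - p> <= 0}; the whole space if a == 0."""
    aa = np.dot(a, a)
    if aa == 0.0:
        return z
    return z - (max(np.dot(a, z - p), 0.0) / aa) * a

def subgradient_extragradient(F, proj_C, x0, tau, tol=1e-10, max_iter=10_000):
    """Subgradient extragradient iteration with a fixed stepsize tau in (0, 1/L)."""
    x = np.asarray(x0, dtype=float)
    for _ in range(max_iter):
        z = x - tau * F(x)
        y = proj_C(z)                                  # the only projection onto C
        if np.linalg.norm(x - y) <= tol:
            break
        a = z - y                                      # T_n = {w : <a, w - y> <= 0} contains C
        x = project_halfspace(x - tau * F(y), a, y)    # second step: explicit projection onto T_n
    return x

M = np.array([[2.0, 1.0], [-1.0, 2.0]])
x_sol = np.array([0.5, -0.3])
F = lambda x: M @ (x - x_sol)
proj_C = lambda x: np.clip(x, -1.0, 1.0)
x = subgradient_extragradient(F, proj_C, x0=[1.0, 1.0], tau=0.4)
print(x)
```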

Since the projection and contraction method and the subgradient extragradient method require only one projection onto C per iteration, they are computationally cheaper than many other existing methods in the literature. Recently, Dong et al. [12] introduced a modification of the subgradient extragradient method that uses the search direction of the projection and contraction method and a stepsize rule \(\tau _{n}\) satisfying (2).

This paper is motivated and inspired by the works of Censor et al. [6], He [18] and Sun [33]. First, we establish the strong convergence of a new algorithm for solving the problem (VI) in Hilbert spaces, which combines the subgradient extragradient method with the projection and contraction method. An advantage of the proposed algorithm is that each iteration requires only two evaluations of the variational inequality mapping and one projection onto the feasible set, which distinguishes our method from most other projection-type methods for variational inequality problems with pseudomonotone mappings. Second, we establish the convergence rate of the algorithm under strong pseudomonotonicity and Lipschitz continuity of the cost operator. Specifically, the proposed algorithm improves on results in the literature in the following ways:

- only a single projection onto C is required at each iteration;

- an inertial term is used to speed up convergence;

- the step sizes are not necessarily monotonically decreasing;

- no knowledge of the Lipschitz constant of the underlying operator is required;

- the sequential weak continuity of the underlying operator is not assumed;

- strong convergence is proved and, moreover, a convergence rate estimate is established.

This paper is organized as follows. Section 2 collects the notation and basic definitions used throughout the paper. In Section 3, we propose our algorithm and prove the strong convergence of the iterative sequence to a solution of the variational inequality (1). The convergence rate of the proposed algorithm is presented in Section 4. In Section 5, numerical results for optimal control problems and other test problems are reported to demonstrate the performance of the proposed method. Final conclusions are given in Section 6.

2 Preliminaries

Let H be a real Hilbert space with inner product \(\left\langle \cdot , \cdot \right\rangle\) and norm \(\Vert \cdot \Vert\). The weak convergence of \(\{x_{n}\}\) to x is denoted by \(x_{n}\rightharpoonup x\) as \(n\rightarrow \infty\), while the strong convergence of \(\{x_{n}\}\) to x is written as \(x_{n}\rightarrow x\) as \(n\rightarrow \infty .\) For all \(x,y\in H\) we have

Definition 2.1

Let \(T:H\rightarrow H\) be an operator. Then

1. T is called L-Lipschitz continuous with constant \(L>0\) if

$$\begin{aligned} \Vert Tx-Ty\Vert \le L \Vert x-y\Vert \ \ \ \forall x,y \in H; \end{aligned}$$

if \(L=1\), then T is called nonexpansive, and if \(L\in (0,1)\), T is called a contraction.

2. T is called monotone if

$$\begin{aligned} \langle Tx-Ty,x-y \rangle \ge 0 \ \ \ \forall x,y \in H; \end{aligned}$$

3. T is called pseudomonotone in the sense of Karamardian [19] if

$$\begin{aligned} \langle Tx,y-x \rangle \ge 0 \Longrightarrow \langle Ty,y-x \rangle \ge 0 \ \ \ \forall x,y \in H; \end{aligned}$$(3)

4. T is called \(\alpha\)-strongly monotone if there exists a constant \(\alpha >0\) such that

$$\begin{aligned} \langle Tx-Ty,x-y\rangle \ge \alpha \Vert x-y\Vert ^{2} \ \ \forall x,y\in H; \end{aligned}$$

5. T is called \(\alpha\)-strongly pseudomonotone if there exists a constant \(\alpha >0\) such that

$$\begin{aligned} \langle Tx,y-x \rangle \ge 0 \Longrightarrow \langle Ty,y-x \rangle \ge \alpha \Vert x-y\Vert ^{2} \ \ \ \forall x,y \in H; \end{aligned}$$

6. T is called sequentially weakly continuous if, for each sequence \(\{x_{n}\}\), \(x_{n}\rightharpoonup x\) implies \(Tx_{n}\rightharpoonup Tx\).

We note that (3) is only one of the definitions of pseudomonotonicity found in the literature. For every point \(x\in H\), there exists a unique nearest point in C, denoted by \(P_{C}x\), such that \(\Vert x-P_{C}x\Vert \le \Vert x-y\Vert \ \forall y\in C\). The mapping \(P_{C}\) is called the metric projection of H onto C, and it is known to be nonexpansive. For further properties of the metric projection, we refer the interested reader to Section 3 in [16].
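For a box \(C=[lo,hi]^{m}\) (an illustrative assumption matching the box-shaped feasible sets used in Section 5), \(P_{C}\) is componentwise clipping. The sketch below checks, on random samples, the variational characterization stated in Lemma 2.1 below and the nonexpansiveness of \(P_{C}\) mentioned above; the helper name is hypothetical.

```python
import numpy as np

def project_box(x, lo, hi):
    """Metric projection onto C = {x : lo <= x_i <= hi for all i} (componentwise clipping)."""
    return np.clip(x, lo, hi)

rng = np.random.default_rng(1)
lo, hi = -1.0, 1.0
x = rng.normal(scale=3.0, size=5)
z = project_box(x, lo, hi)

# Variational characterization (Lemma 2.1): <x - z, z - y> >= 0 for every y in C.
ys = rng.uniform(lo, hi, size=(1000, 5))
char_ok = bool(np.all((z - ys) @ (x - z) >= -1e-12))

# Nonexpansiveness: ||P_C x - P_C x'|| <= ||x - x'||.
x2 = rng.normal(scale=3.0, size=5)
nonexp_ok = bool(np.linalg.norm(z - project_box(x2, lo, hi)) <= np.linalg.norm(x - x2) + 1e-12)
print(char_ok, nonexp_ok)
```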

Lemma 2.1

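([16]) Let C be a nonempty closed convex subset of a real Hilbert space H, and let \(x\in H\) and \(z\in C\). Then \(z=P_{C}x\Longleftrightarrow \langle x-z,z-y\rangle \ge 0 \ \ \forall y\in C.\) Moreover,

$$\begin{aligned} \Vert P_{C}x-y\Vert ^{2}\le \Vert x-y\Vert ^{2}-\Vert x-P_{C}x\Vert ^{2} \ \ \ \forall x\in H,\ y\in C. \end{aligned}$$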

Lemma 2.2

Let H be a real Hilbert space. Then the following results hold:

i) \(\Vert x+y\Vert ^{2}=\Vert x\Vert ^{2}+2\langle x,y\rangle +\Vert y\Vert ^{2} \ \forall x,y\in H;\)

ii) \(\Vert x+y\Vert ^{2}\le \Vert x\Vert ^{2}+2\langle y,x+y\rangle \ \forall x,y\in H.\)

Lemma 2.3

([9]) Consider the VI (1), where C is a nonempty, closed, convex subset of a real Hilbert space H and \(F: C \rightarrow H\) is pseudomonotone and continuous. Then \(x^{*}\in Sol(C,F)\) if and only if

$$\begin{aligned} \langle Fx,x-x^{*} \rangle \ge 0 \ \ \ \forall x \in C. \end{aligned}$$

Lemma 2.4

([28]) Let \(\{a_{n}\}\) be a sequence of nonnegative real numbers, \(\{\alpha _{n}\}\) a sequence of real numbers in (0, 1) with \(\sum _{n=1}^{\infty }\alpha _{n}=\infty\), and \(\{b_{n}\}\) a sequence of real numbers. Assume that

$$\begin{aligned} a_{n+1}\le (1-\alpha _{n})a_{n}+\alpha _{n}b_{n} \ \ \ \forall n\ge 1. \end{aligned}$$

If \(\limsup _{k\rightarrow \infty } b_{n_{k}} \le 0\) for every subsequence \(\{a_{n_{k}}\}\) of \(\{a_{n}\}\) satisfying \(\liminf _{k\rightarrow \infty }(a_{n_{k}+1}-a_{n_{k}})\ge 0\), then \(\lim _{n\rightarrow \infty }{a_{n}} = 0\).

Definition 2.2

([26]) Let \(\{x_{n}\}\) be a sequence in H.

i) \(\{x_{n}\}\) is said to converge R-linearly to \(x^{*}\) with rate \(\rho \in [0, 1)\) if there is a constant \(c>0\) such that

$$\begin{aligned} \Vert x_{n}-x^{*}\Vert \le c\rho ^{n} \ \ \forall n\in \mathbb {N}. \end{aligned}$$

ii) \(\{x_{n}\}\) is said to converge Q-linearly to \(x^{*}\) with rate \(\rho \in [0, 1)\) if

$$\begin{aligned} \Vert x_{n+1}-x^{*}\Vert \le \rho \Vert x_{n}-x^{*}\Vert \ \ \forall n\in \mathbb {N}. \end{aligned}$$
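The two notions in Definition 2.2 are related by a standard argument, added here only for orientation. If ii) holds with \(\rho \in (0,1)\) and \(x_{1}\ne x^{*}\), then iterating it gives, for every \(n\ge 1\),

$$\begin{aligned} \Vert x_{n}-x^{*}\Vert \le \rho \Vert x_{n-1}-x^{*}\Vert \le \cdots \le \rho ^{n-1}\Vert x_{1}-x^{*}\Vert =c\rho ^{n}, \quad c:=\frac{\Vert x_{1}-x^{*}\Vert }{\rho }, \end{aligned}$$

so Q-linear convergence implies R-linear convergence with the same rate. The converse fails: an error sequence equal to \(\rho ^{n}\) for even n and to 0 for odd n satisfies i) with \(c=1\), but violates ii) at every odd n, since a zero error would have to remain zero.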

3 Strong convergence analysis

First, we introduce the proposed algorithm:

Observe that the projection onto the half-space \(T_{n}\) in Step 2 is given by an explicit formula [5, Section 4.1.3, p. 133]; therefore, Algorithm 1 requires only one projection onto C, namely the one in Step 1. Moreover, the stepsize \(\tau _{n}\) is updated adaptively in Step 3 without requiring knowledge of the Lipschitz constant L. We start the convergence analysis by proving the following lemmas.
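The adaptive update of Step 3 is referred to below as rule (5), which is not displayed in this text. Since Lemma 3.5 is quoted from Liu and Yang [24], a natural reading is their non-monotone rule \(\tau _{n+1}=\min \{\mu \Vert w_{n}-v_{n}\Vert /\Vert Fw_{n}-Fv_{n}\Vert ,\tau _{n}+\alpha _{n}\}\) (and \(\tau _{n+1}=\tau _{n}+\alpha _{n}\) when \(Fw_{n}=Fv_{n}\)) with summable \(\alpha _{n}\). The Python sketch below assumes exactly this form (the function name and test map are hypothetical) and checks numerically the bounds one expects from Lemma 3.5, namely \(\min \{\tau _{1},\mu /L\}\le \tau _{n}\le \tau _{1}+\sum _{n}\alpha _{n}\).

```python
import numpy as np

def next_stepsize(tau, mu, alpha_n, w, v, Fw, Fv):
    """ASSUMED form of rule (5): non-monotone update in the style of Liu and Yang [24]."""
    diff = np.linalg.norm(Fw - Fv)
    if diff > 0.0:
        return min(mu * np.linalg.norm(w - v) / diff, tau + alpha_n)
    return tau + alpha_n          # no local Lipschitz information: allow a small increase

# Illustration: F(x) = L * sin(x) componentwise is L-Lipschitz; (w, v) are random pairs.
L, tau1, mu = 3.0, 0.5, 0.7
F = lambda x: L * np.sin(x)
rng = np.random.default_rng(2)
alphas = [1.0 / n**2 for n in range(1, 201)]   # summable increments alpha_n
taus = [tau1]
for alpha_n in alphas:
    w, v = rng.normal(size=3), rng.normal(size=3)
    taus.append(next_stepsize(taus[-1], mu, alpha_n, w, v, F(w), F(v)))

lower, upper = min(tau1, mu / L), tau1 + sum(alphas)
print(min(taus), max(taus), lower, upper)
```

Because the branch \(\tau _{n}+\alpha _{n}\) allows increases, the generated step sizes need not be monotone, while the summability of \(\alpha _{n}\) keeps them bounded above; no value of L is used inside the update itself.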

Lemma 3.5

([24]) Assume that F is L-Lipschitz continuous on H. Let \(\left\{ \tau _{n}\right\}\) be the sequence generated by (5). Then

where \(\alpha =\sum _{n=1}^{\infty } \alpha _{n}\). Moreover

Lemma 3.6

Assume that F is Lipschitz continuous on H and pseudomonotone on C. Then for every \(x^{*} \in Sol(C,F)\), there exists \(n_{0} \in \mathbb {N}\) such that

Proof

Using (6), we have

Since \(\tau _{n}\rightarrow \tau >0\) by Lemma 3.5, we have \(\lim _{n\rightarrow \infty }\left( 1- \dfrac{\mu \tau _{n}}{\tau _{n+1}}\right) =1-\mu >0\); hence there exists \(n_{0}\in \mathbb {N}\) such that

Therefore, it follows from (7) that for all \(n\ge n_{0}\) we get

Since \(x^{*}\in Sol(C,F) \subset C\subset T_{n}\), using Lemma 2.1 we have

This implies that

Since \(v_{n}\in C\) and \(x^{*}\in Sol(C,F)\), we get \(\langle Fx^{*},v_{n}-x^{*}\rangle \ge 0\). By the pseudomonotonicity of F, we have \(\langle Fv_{n},v_{n}-x^{*}\rangle \ge 0\), which implies

Thus, we obtain

On the other hand, from \(u_{n+1}\in T_{n}\) we have

This implies that

thus

Hence

On the other hand, we have

From (8), we have \(d_{n}\ne 0 \ \ \forall n\ge n_{0}\), thus \(\eta _{n}=\dfrac{\langle w_{n}-v_{n},d_{n}\rangle }{\Vert d_{n}\Vert ^{2}}\), which means

Moreover

Substituting (13) and (14) into (12) we get for all \(n \ge n_{0}\) that

Combining (11) and (15), we obtain

Again, combining (10) and (16), we get

Substituting (17) into (9) we get

Theorem 3.1

Assume that Conditions 1–3 hold. In addition, we assume that the mapping \(F:H\rightarrow H\) satisfies the following condition

Then the sequence \(\{u_{n}\}\) generated by Algorithm 1 converges strongly to an element \(z\in Sol(C,F)\), where \(z=P_{Sol(C,F)}(0)\).

Proof

Claim 1. The sequence \(\{u_{n}\}\) is bounded. Indeed, we have

On the other hand, by (4) we have

This implies that \(\lim _{n\rightarrow \infty }\bigg [ (1-\gamma _{n})\dfrac{\theta _{n}}{\gamma _{n}}\Vert u_{n}-u_{n-1}\Vert +\Vert z\Vert \bigg ]=\Vert z\Vert\), and thus there exists \(M>0\) such that

Combining (20) and (21) we obtain

Moreover, since \(\lim _{n\rightarrow \infty }(1-\mu \dfrac{\tau _{n}}{\tau _{n+1}})=1-\mu >\dfrac{1-\mu }{2}\), there exists \(n_{0}\in \mathbb {N}\) such that \(1-\mu \dfrac{\tau _{n}}{\tau _{n+1}}>0\) for all \(n\ge n_{0}\). By Lemma 3.6, we obtain

Thus

Therefore, the sequence \(\{u_{n}\}\) is bounded.

Claim 2.

Indeed, we have \(\Vert w_{n}-z\Vert \le (1-\gamma _{n})\Vert u_{n}-z\Vert +\gamma _{n} M\), which implies that

where \(M_{1}:=\sup \{2(1-\gamma _{n})M \Vert u_{n}-z\Vert +\gamma _{n} M^{2}:\ n\in \mathbb {N}\}<\infty\), since \(\{u_{n}\}\) is bounded by Claim 1. Substituting (23) into (18) we get

Or equivalently

Claim 3.

We have

Hence

or equivalently

Again, we find

Hence for all \(n\ge n_{0}\)

and

Combining (24) and (25), we get

Claim 4.

\(\forall n\ge n_{0}\). Indeed, using Lemma 2.2 ii) and (22) we get

Claim 5. \(\{\Vert u_{n}-z\Vert ^{2}\}\) converges to zero. Indeed, by Lemma 2.4 it suffices to show that \(\limsup _{k\rightarrow \infty }\langle -z, u_{n_{k}+1}-z\rangle \le 0\) and \(\limsup _{k\rightarrow \infty }\Vert u_{n_{k}}-u_{n_{k}+1}\Vert \le 0\) for every subsequence \(\{\Vert u_{n_{k}}-z\Vert \}\) of \(\{\Vert u_{n}-z\Vert \}\) satisfying

For this, suppose that \(\{\Vert u_{n_{k}}-z\Vert \}\) is a subsequence of \(\{\Vert u_{n}-z\Vert \}\) such that \(\liminf _{k\rightarrow \infty }(\Vert u_{n_{k}+1}-z\Vert -\Vert u_{n_{k}}-z\Vert )\ge 0.\) Then

By Claim 2 we obtain

This implies that

We have

Combining (25) and (28) we get

This implies that

From Claim 3 we also have

Moreover, we have

From (29), (30) and (31), we get

Since the sequence \(\{u_{n_{k}}\}\) is bounded, it follows that there exists a subsequence \(\{u_{n_{k_{j}}}\}\) of \(\{u_{n_{k}}\}\), which converges weakly to some \(z^{*}\in H\), such that

Using (31), we get

Using (30), we obtain

Now, we show that \(z^{*}\in Sol(C,F)\). Indeed, since \(v_{n_{k}}=P_{C}(w_{n_{k}}-\tau _{n_{k}}Fw_{n_{k}})\), we have

or equivalently

Consequently

Being weakly convergent, \(\{w_{n_{k}}\}\) is bounded. Then, by the Lipschitz continuity of F, \(\{Fw_{n_{k}}\}\) is bounded. As \(\Vert w_{n_{k}}-v_{n_{k}}\Vert \rightarrow 0\), \(\{v_{n_{k}}\}\) is also bounded, and \(\tau _{n_{k}} \ge \min \{\tau _{1},\dfrac{\mu }{L}\}\). Passing to the limit in (34) as \(k\rightarrow \infty\), we get

Moreover, we have

Since \(\lim _{k\rightarrow \infty }\Vert w_{n_{k}}-v_{n_{k}}\Vert =0\) and F is L-Lipschitz continuous on H, we get

which, together with (35) and (36) implies that

Next, we choose a decreasing sequence \(\{\epsilon _{k}\}\) of positive numbers tending to 0. For each k, we denote by \(N_{k}\) the smallest positive integer such that

Since \(\{ \epsilon _{k}\}\) is decreasing, it is easy to see that the sequence \(\{N_{k}\}\) is increasing. Furthermore, for each k, since \(\{v_{N_{k}}\}\subset C\) we can suppose \(Fv_{N_{k}}\ne 0\) (otherwise, \(v_{N_{k}}\) is a solution) and, setting

we have \(\langle Fv_{N_{k}}, t_{N_{k}}\rangle = 1\) for each k. Now, we can deduce from (37) that for each k

Since F is pseudomonotone on H, we get

This implies that

Now, we show that \(\lim _{k\rightarrow \infty }\epsilon _{k} t_{N_{k}}=0\). Indeed, since \(w_{n_{k}}\rightharpoonup z^{*}\) and \(\lim _{k\rightarrow \infty }\Vert w_{n_{k}}-v_{n_{k}}\Vert =0,\) we obtain \(v_{N_{k}}\rightharpoonup z^{*} \text { as } k \rightarrow \infty\). Since \(\{v_{n}\}\subset C\) and C is weakly closed, we obtain \(z^{*}\in C\). Since F satisfies condition (19), we have

Since \(\{v_{N_{k}}\}\subset \{v_{n_{k}}\}\) and \(\epsilon _{k} \rightarrow 0\) as \(k \rightarrow \infty\), we obtain

which implies that \(\lim _{k\rightarrow \infty } \epsilon _{k} t_{N_{k}} = 0.\)

Now, letting \(k\rightarrow \infty\), the right-hand side of (38) tends to zero, because F is uniformly continuous (being Lipschitz continuous), \(\{w_{N_{k}}\}\) and \(\{t_{N_{k}}\}\) are bounded, and \(\lim _{k\rightarrow \infty }\epsilon _{k} t_{N_{k}}=0\). Thus, we get

Hence, for all \(x\in C\) we have

By Lemma 2.3, we get

By (33) and the definition of \(z=P_{Sol(C,F)}(0)\), we have

Combining (32) and (39), we have

Hence, by (40), \(\lim _{n\rightarrow \infty }\dfrac{\theta _{n}}{\gamma _{n}}\Vert u_{n}-u_{n-1}\Vert =0\), \(\lim _{k\rightarrow \infty }\Vert u_{n_{k}+1}-u_{n_{k}}\Vert =0\), Claim 4 and Lemma 2.4, we obtain \(\lim _{n\rightarrow \infty }\Vert u_{n}-z\Vert =0\), which is the desired result.
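To see the ingredients of this section working together, the sketch below assembles an inertial subgradient-extragradient/projection-contraction loop from quantities that appear in the proofs: \(w_{n}\) built from an inertial term and the factor \(1-\gamma _{n}\) (consistent with the bound \(\Vert w_{n}-z\Vert \le (1-\gamma _{n})\Vert u_{n}-z\Vert +\gamma _{n}M\) in Claim 2), \(v_{n}=P_{C}(w_{n}-\tau _{n}Fw_{n})\), \(\eta _{n}\) as in the proof of Lemma 3.6, a step onto \(T_{n}\), and a Liu-Yang-type stepsize. Because the algorithm box itself is not reproduced in this text, every formula not displayed above (in particular the rule for \(\theta _{n}\), the direction \(d_{n}\), the form of \(T_{n}\) and the update of \(u_{n+1}\)) is our assumption; this is not a verbatim implementation of Algorithm 1. The test problem has a whole segment of solutions, so convergence to its minimum-norm element (0.5, 0.5) illustrates the conclusion \(z=P_{Sol(C,F)}(0)\) of Theorem 3.1.

```python
import numpy as np

def inertial_segm_pc(F, proj_C, u0, u1, n_iter=5000, tau1=0.5, mu=0.7, gamma=1.9, theta=0.5):
    """HYPOTHETICAL inertial subgradient-extragradient / projection-contraction loop,
    assembled from quantities named in the text; NOT a verbatim copy of Algorithm 1."""
    u_prev, u = np.asarray(u0, dtype=float), np.asarray(u1, dtype=float)
    tau = tau1
    for n in range(1, n_iter + 1):
        gam_n = 1.0 / (n + 1)                 # assumed: gamma_n -> 0 and sum gamma_n = infinity
        eps_n = 1.0 / (n + 1) ** 1.1          # assumed: eps_n / gamma_n -> 0
        alpha_n = 1.0 / n ** 2                # summable stepsize increments
        step = np.linalg.norm(u - u_prev)
        theta_n = min(theta, eps_n / step) if step > 0 else theta
        w = (1 - gam_n) * (u + theta_n * (u - u_prev))   # inertia plus shrinkage toward 0
        Fw = F(w)
        v = proj_C(w - tau * Fw)                          # the only projection onto C
        Fv = F(v)
        d = w - v - tau * (Fw - Fv)                       # projection-contraction direction
        dd = np.dot(d, d)
        eta = np.dot(w - v, d) / dd if dd > 0 else 0.0
        a = w - tau * Fw - v                              # normal vector of the half-space T_n
        z = w - gamma * eta * tau * Fv
        aa = np.dot(a, a)
        if aa > 0:                                        # explicit projection onto T_n
            z = z - max(np.dot(a, z - v), 0.0) / aa * a
        diff = np.linalg.norm(Fw - Fv)                    # adaptive stepsize (Liu-Yang style)
        tau = min(mu * np.linalg.norm(w - v) / diff, tau + alpha_n) if diff > 0 else tau + alpha_n
        u_prev, u = u, z
    return u

# Monotone test problem: F = gradient of 0.5 * (x1 + x2 - 1)^2 on C = [-1, 1]^2.
# Sol(C, F) is the segment {x in C : x1 + x2 = 1}; its minimum-norm element is (0.5, 0.5).
F = lambda x: (x[0] + x[1] - 1.0) * np.ones(2)
proj_C = lambda x: np.clip(x, -1.0, 1.0)
u = inertial_segm_pc(F, proj_C, u0=[0.9, -0.6], u1=[0.9, -0.6], tau1=0.4)
print(u)
```

We use \(\gamma _{n}=1/(n+1)\) rather than the much smaller values of Section 5 so that the pull toward the minimum-norm solution is visible within a few thousand iterations; with \(\gamma _{n}\) of order \(10^{-3}/n\) the component along the solution segment decays far more slowly.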

4 Convergence rate

In this section, we establish the convergence rate of the iterative sequence generated by Algorithm 1 with \(\gamma _{n}=0\) when the mapping F is L-Lipschitz continuous on H and \(\kappa\)-strongly pseudomonotone on C. The algorithm is of the form:

Using the technique in [35] we obtain the following result.

Theorem 4.2

Assume that F is L-Lipschitz continuous on H and \(\kappa\)-strongly pseudomonotone on C. Let \(\delta \in (0,1)\) be arbitrary and \(\theta\) be such that

where \(\xi :=\dfrac{1-\beta }{\beta }.\) Then the sequence \(\{u_{n}\}\) generated by Algorithm 2 converges strongly to the unique solution \(x^{*}\) of (1) with an R-linear rate.

Proof

First, we show that there exist \(\rho ,\xi \in (0,1)\) such that

Indeed, under these assumptions, (1) has a unique solution [20]. Since \(v_{n}\in C\), we have \(\langle Fx^{*},v_{n}-x^{*}\rangle \ge 0\), so the \(\kappa\)-strong pseudomonotonicity of F gives \(\langle Fv_{n},v_{n}-x^{*}\rangle \ge \kappa \Vert v_{n}-x^{*}\Vert ^{2}\). This implies that

Therefore

Substituting (42) into (9), we get

Again, substituting (16) into (43), we obtain

Combining (26) and (44), we get

It follows from (45) that

On the other hand, we have

By the definition of \(\{u_{n}\}\), we have \(w_{n}-z_{n}=\dfrac{1}{\beta }(u_{n+1}-w_{n})\). Thus,

Substituting (46) into (47), we get

Substituting (25) into (48), we deduce

Setting

we have

and

Using (49), we see that there exists \(n_{1}>n_{0}\) such that for all \(n \ge n_{1}\)

where \(\rho := 1 - \beta ^{*} \in (0,1)\) and \(\xi :=\dfrac{1-\beta }{\beta }\).

Next, we prove that the sequence \(\{u_{n}\}\) converges strongly to \(x^{*}\) with an R-linear rate. Indeed, we have

and

Combining these inequalities with (50) we obtain for all \(n \ge n_{1}\) that

or equivalently

Setting

since \(\rho \in (0,1)\) we can write

Note that from (41) we have

which implies

Since

it holds

or equivalently

Hence

Moreover, since \(\theta \le \frac{\xi }{2+\xi }\), that is, \(2\theta \le \xi -\theta \xi =\xi (1-\theta )\), we have \(\theta \le \frac{\xi (1-\theta )}{2}\), which implies

Hence for all \(n \ge n_{1}\) it holds

It follows that

which implies that \(\left\{ u_{n} \right\}\) converges R-linearly to \(x^{*}\).
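Theorem 4.2 gives an R-linear (rather than Q-linear) rate. When checking such a rate in experiments, one simple diagnostic (our suggestion, not part of the paper) is a least-squares fit of \(\log \Vert u_{n}-x^{*}\Vert\) against n, which estimates \(\rho\) in a bound of the form \(\Vert u_{n}-x^{*}\Vert \le c\rho ^{n}\). The synthetic error sequence below is R-linear with \(\rho =0.8\) but not Q-linear, in line with Definition 2.2; the function name is hypothetical.

```python
import numpy as np

def estimate_r_linear_rate(errors):
    """Least-squares fit log(e_n) ~ log(c) + n * log(rho); returns (rho_hat, c_hat)."""
    e = np.asarray(errors, dtype=float)
    n = np.arange(e.size)
    slope, intercept = np.polyfit(n, np.log(e), 1)
    return float(np.exp(slope)), float(np.exp(intercept))

# Synthetic errors e_n = 3 * 0.8**n * (1 + 0.5 * (-1)**n): R-linear with rate 0.8 (c = 4.5),
# but not Q-linear, since e_{n+1} / e_n = 2.4 > 1 for every odd n.
n = np.arange(200)
errors = 3.0 * 0.8**n * (1.0 + 0.5 * (-1.0) ** n)
rho_hat, c_hat = estimate_r_linear_rate(errors)
q_ratios = errors[1:] / errors[:-1]
print(rho_hat, c_hat, q_ratios.max())
```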

5 Numerical experiments

In this section, we first present computational experiments illustrating the proposed algorithm for solving variational inequalities arising in optimal control problems.

Let T be a positive number. Denote by \(L_{2}([0,T],\mathbb {R}^{m})\) the Hilbert space of square integrable, measurable vector functions \(u:[0,T]\rightarrow \mathbb {R}^{m}\) with inner product \(\langle u,v\rangle ={\int _{0}^{T}}\langle u(t),v(t)\rangle dt,\) and norm \(\Vert u\Vert _{2}=\sqrt{\langle u,u\rangle }.\) We consider the following optimal control problem:

on the interval [0, T], and we assume that such a control exists. Here U is the set of admissible controls, which has the form of an m-dimensional box and consists of piecewise continuous functions:

In particular, the control can be bang-bang (a piecewise constant function). The terminal objective has the form

where \(\phi\) is a convex and differentiable function, defined on the attainability set.

Suppose that the trajectory \(x(t) \in L_{2}([0,T])\) satisfies constraints in the form of a system of linear differential equations:

where \(A(t)\in \mathbb {R}^{n\times n}\), \(B(t)\in \mathbb {R}^{n\times m}\) are given continuous matrices for every \(t\in [0,T]\). By the Pontryagin Maximum Principle there exists a function \(p^{*}\in L_{2}([0,T])\) such that the triple \((x^{*},p^{*},u^{*})\) solves for a.e. \(t\in [0,T]\) the system

where \(N_{U}(u)\) is the normal cone to U at u. Denoting \(Gu(t):=B(t)^{\top } p(t)\), it is known that Gu is the gradient of the objective cost function z (see [38] and the references contained therein). We can write the inclusion problem (54) as a variational inequality problem: find \(u\in U\) such that

Discretizing the continuous functions and choosing a natural number N with the mesh size \(h:=T/N\), we identify any discretized control \(u^{N}:=(u_{0}, u_{1},\ldots ,u_{N-1})\) with its piecewise constant extension:

Moreover, we identify any discretized state \(x^{N}:=(x_{0},x_{1},\ldots ,x_{N})\) with its piecewise linear interpolation

Similarly, we obtain the discretized co-state variable \(p^{N}:=(p_{0},p_{1},\ldots ,p_{N})\) (see [38] for more details).
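The identification of \(u^{N}\) with its piecewise constant extension and of \(x^{N}\) with its piecewise linear interpolation can be sketched as follows, on the grid \(t_{i}=ih\), \(h=T/N\), from the text; the function names are our own. For piecewise constant controls, the \(L_{2}\) inner product reduces exactly to \(h\sum _{i}\langle u_{i},v_{i}\rangle\).

```python
import numpy as np

def control_extension(u, T, t):
    """Piecewise constant extension: u^N(t) = u_i for t in [i*h, (i+1)*h), with h = T/N."""
    u = np.asarray(u, dtype=float)
    N = u.shape[0]
    h = T / N
    i = np.clip(np.floor(np.asarray(t, dtype=float) / h).astype(int), 0, N - 1)
    return u[i]

def state_interpolation(x, T, t):
    """Piecewise linear interpolation of x^N = (x_0, ..., x_N) on the grid t_i = i*h."""
    x = np.asarray(x, dtype=float)
    grid = np.linspace(0.0, T, x.shape[0])
    if x.ndim == 1:
        return np.interp(t, grid, x)
    return np.stack([np.interp(t, grid, x[:, j]) for j in range(x.shape[1])], axis=-1)

def l2_inner(u, v, T):
    """L2([0, T], R^m) inner product of two piecewise constant controls (exact)."""
    u, v = np.asarray(u, dtype=float), np.asarray(v, dtype=float)
    return (T / u.shape[0]) * float(np.sum(u * v))

u = [1.0, 2.0, 3.0, 4.0]                      # N = 4 pieces on [0, 1]
print(control_extension(u, 1.0, 0.6), state_interpolation([0.0, 1.0, 4.0, 9.0, 16.0], 1.0, 0.375), l2_inner(u, u, 1.0))
```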

Next, we consider three examples in which the terminal function is not linear.

In the numerical results listed in the following tables, ‘Iter.’ denotes the number of iterations and ‘CPU in s’ denotes the execution time in seconds. In addition, ‘–’ means that the algorithm fails to reach the stopping criterion because its inner loop does not terminate after some steps.

In the following three examples, we take \(N=100\). The initial control \(u_{0}(t)\) is chosen randomly in \([-1,1]\), and the termination criterion is based on the relative solution error (RSE), defined by (Table 1)

at the current \(u_{n}\).

Example 1

(See [39, Rocket Car])

The corresponding exact optimal control is

where \(\tau =3.5174292\).

In Fig. 1 and Fig. 2, we take \(\text {RSE} \le 0.3\). Figure 1 displays the approximate optimal control and the corresponding trajectories. Other parameters are selected as follows:

Algorithm 1: \(\tau _{1}=0.5, \mu =0.7, \gamma =1.9, \theta =0.5, \gamma _{n}=\frac{1}{3000(n+1)}, \epsilon _{n}=\frac{1}{3000(n+1)^{1.1}}\) and \(\alpha _{n}=\frac{1}{n^{2}}\);

Algorithm 3.3 in [31]: \(\sigma =0.1, \gamma =0.6\) and \(\alpha _{n}=\frac{1}{3000(n+1)}\);

Algorithm 2 in [32]: \(\lambda _{0}=0.6, \mu =0.9\) and \(\alpha _{n}=\frac{1}{3000(n+1)}\).

The results reported in Fig. 2 and Table 1 show that Algorithm 1 performs better than Algorithm 3.3 in [31] and Algorithm 2 in [32] in terms of CPU time and number of iterations.

Example 2

(See [40, Example 6.3])

The corresponding exact optimal control is

In Fig. 3 and Fig. 4, we take \(\text {RSE} \le 10^{-5}\). Figure 3 displays the approximate optimal control and the corresponding trajectories. Other parameters are selected as follows:

Algorithm 1: \(\tau _{1}=0.2, \mu =0.8, \gamma =1.9, \theta =0.5, \gamma _{n}=\frac{1}{10000(n+1)}, \epsilon _{n}=\frac{1}{10000(n+1)^{1.1}}\) and \(\alpha _{n}=\frac{1}{n^{2}}\);

Algorithm 3.3 in [31]: \(\sigma =0.1, \gamma =0.3\) and \(\alpha _{n}=\frac{1}{10000(n+1)}\);

Algorithm 2 in [32]: \(\lambda _{0}=0.3, \mu =0.9\) and \(\alpha _{n}=\frac{1}{10000(n+1)}\).

Fig. 4 and Table 2 show that Algorithm 1 outperforms Algorithm 2 in [32] and Algorithm 3.3 in [31] in terms of CPU time and number of iterations.

Example 3

(control of a harmonic oscillator)

The exact optimal control in this problem is known:

In Fig. 5 and Fig. 6, we take \(\text {RSE} \le 0.1\). Figure 5 displays the approximate optimal control and the corresponding trajectories. Other parameters are selected as follows:

Algorithm 1: \(\tau _{1}=1.9, \mu =0.8, \gamma =1.2, \theta =0.5, \gamma _{n}=\frac{1}{100(n+1)}, \epsilon _{n}=\frac{1}{100(n+1)^{1.1}}\) and \(\alpha _{n}=\frac{1}{n^{2}}\);

Algorithm 3.3 in [31]: \(\sigma =0.0001, \gamma =0.1\) and \(\alpha _{n}=\frac{1}{100(n+1)}\);

Algorithm 2 in [32]: \(\lambda _{0}=1.9, \mu =0.9\) and \(\alpha _{n}=\frac{1}{100(n+1)}\).

As shown in Fig. 6 and Table 3, Algorithm 1 performs better than Algorithm 3.3 in [31] and Algorithm 2 in [32].

Next we provide several numerical examples to demonstrate the efficiency of the proposed algorithm compared to some known algorithms.

Example 4

In this example, we study an important Nash-Cournot oligopolistic market equilibrium model, which was originally proposed by Murphy et al. [25] as a convex optimization problem. Later, Harker reformulated it as a monotone variational inequality in [17]. We provide only a short description of the problem; for more details, we refer to [13, 17, 25]. There are N firms, each supplying a homogeneous product in a non-cooperative fashion. Let \(q_{i} \ge 0\) be the ith firm’s supply at cost \(f_{i}(q_{i})\) and p(Q) be the inverse demand curve, where \(Q \ge 0\) is the total supply in the market, i.e., \(Q =\sum _{i=1}^{N} q_{i}\). A Nash equilibrium solution for the market defined above is a set of nonnegative output levels \((q_{1}^{*},q_{2}^{*},\ldots ,q_{N}^{*})\) such that \(q_{i}^{*}\) is an optimal solution to the following problem for all \(i= 1,2,\ldots ,N\):

where

A variational inequality equivalent to (53) is (see [17])

where \(F(q^{*}) = (F_{1}(q^{*}), F_{2} (q^{*}), ..., F_{N}(q^{*}))\) and

As in the classical Nash-Cournot equilibrium example [17, 25], we consider an oligopoly with \(N=5\) firms, where the inverse demand function p and the cost functions \(f_{i}\) take the form

and the parameters \(c_{i}, L_{i}, \beta _{i}\) as in [25], see Table 4.
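The mapping of the variational inequality above has components \(F_{i}(q)=f_{i}'(q_{i})-p(Q)-q_{i}p'(Q)\), the derivative of firm i's negated profit with respect to \(q_{i}\). Since the displayed formulas for p and \(f_{i}\) and the entries of Table 4 are not reproduced here, the Python sketch below assumes the classical forms \(p(Q)=5000^{1/1.1}Q^{-1/1.1}\) and \(f_{i}'(q_{i})=c_{i}+(q_{i}/L_{i})^{1/\beta _{i}}\) together with the parameter values commonly quoted from [25]; all of these are assumptions to be checked against Table 4. The helper fd_check compares F with a central-difference derivative of each firm's negated profit, which tests the formula for F independently of the parameter values.

```python
import numpy as np

# ASSUMED data: classical five-firm values commonly attributed to Murphy et al. [25];
# Table 4 is not reproduced here, so check these numbers against it before reuse.
c = np.array([10.0, 8.0, 6.0, 4.0, 2.0])
L = np.array([5.0, 5.0, 5.0, 5.0, 5.0])
beta = np.array([1.2, 1.1, 1.0, 0.9, 0.8])
demand_exp = 1.1   # ASSUMED inverse demand p(Q) = 5000**(1/1.1) * Q**(-1/1.1)

def price(Q):
    return 5000.0 ** (1.0 / demand_exp) * Q ** (-1.0 / demand_exp)

def dprice(Q):
    return -price(Q) / (demand_exp * Q)

def cost(q):
    """ASSUMED cost f_i(q_i) = c_i q_i + beta_i/(beta_i+1) * L_i**(-1/beta_i) * q_i**((beta_i+1)/beta_i)."""
    return c * q + beta / (beta + 1.0) * L ** (-1.0 / beta) * q ** ((beta + 1.0) / beta)

def F(q):
    """VI mapping F_i(q) = f_i'(q_i) - p(Q) - q_i p'(Q)."""
    q = np.asarray(q, dtype=float)
    Q = q.sum()
    return c + (q / L) ** (1.0 / beta) - price(Q) - q * dprice(Q)

def fd_check(q, h=1e-6):
    """Largest gap between F and a central difference of each firm's negated profit."""
    q = np.asarray(q, dtype=float)
    gaps = []
    for i in range(q.size):
        e = np.zeros_like(q)
        e[i] = h
        neg_profit = lambda x: cost(x)[i] - x[i] * price(x.sum())
        gaps.append(abs((neg_profit(q + e) - neg_profit(q - e)) / (2 * h) - F(q)[i]))
    return max(gaps)

q0 = np.full(5, 10.0)
print(F(q0), fd_check(q0))
```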

The process is started with the initial points \(x_{0}=(10,10,10, 10)^{T}\) and \(x_{1} =0.9x_{0}\), and the stopping condition is \(\text {Residual} := \Vert w_{n} - v_{n}\Vert \le 10^{-9}\) or the number of iterations \(\ge\) 200 for all algorithms. Other parameters are selected as follows:

Algorithm 1: \(\tau _{1}=1.8, \mu =0.7, \gamma =1.99, \theta =0.5, \gamma _{n}=\frac{1}{10^{7}(n+1)}\) and \(\alpha _{n}=\frac{1}{n^{2}}\);

Algorithm 1 in [29]: \(\nu _{0} =1, \theta = 0.9\) and \(\rho _{n} = \rho = 0.4\).

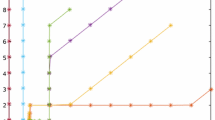

Algorithm 2 in [32]: \(\lambda _{0}=1, \mu =0.7\) and \(\alpha _{n}=\frac{1}{10^{7}(n+1)}\). The numerical results are described in Fig. 7.

Example 5

Consider the following fractional programming problem:

subject to \(x \in X:=\{x \in \mathbb {R}^{m}: b^{T}x+b_{0}>0\}\),

where Q is an \(m \times m\) symmetric matrix, \(a, b \in \mathbb {R}^{m}\), and \(a_{0}, b_{0} \in \mathbb {R}\). It is well known that f is pseudo-convex on X when Q is positive-semidefinite. We consider the following cases:

Case 1:

We minimize f over \(C := \{x \in \mathbb {R}^{4} : 1 \le x_{i} \le 10, i = 1,..., 4\} \subset X\). It is easy to verify that Q is symmetric and positive definite in \(\mathbb {R}^{4}\) and consequently f is pseudo-convex on X.
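For this problem, the VI mapping is \(F=\nabla f\). Assuming the standard ratio form \(f(x)=(x^{T}Qx+a^{T}x+a_{0})/(b^{T}x+b_{0})\) (the displayed objective is missing from this text), the quotient rule gives \(\nabla f(x)=[(2Qx+a)(b^{T}x+b_{0})-(x^{T}Qx+a^{T}x+a_{0})b]/(b^{T}x+b_{0})^{2}\) for symmetric Q. The sketch below implements this and checks it against central differences; the data are hypothetical and are not the matrix Q used in Case 1.

```python
import numpy as np

def fractional_objective(Q, a, a0, b, b0):
    """Return f(x) = (x^T Q x + a^T x + a0) / (b^T x + b0) and its gradient F = grad f.
    The ratio form of f is ASSUMED, since the displayed objective is not reproduced here."""
    def f(x):
        return (x @ Q @ x + a @ x + a0) / (b @ x + b0)

    def grad_f(x):
        num = x @ Q @ x + a @ x + a0
        den = b @ x + b0
        return ((2.0 * Q @ x + a) * den - num * b) / den**2   # quotient rule, Q symmetric

    return f, grad_f

# Hypothetical data: symmetric positive definite Q and b^T x + b0 > 0 on C = [1, 10]^4.
Q = np.array([[2.0, 0.5, 0.0, 0.0],
              [0.5, 2.0, 0.0, 0.0],
              [0.0, 0.0, 1.0, 0.0],
              [0.0, 0.0, 0.0, 3.0]])
a, a0 = np.array([1.0, -1.0, 0.5, 0.0]), 1.0
b, b0 = np.ones(4), 1.0
f, F = fractional_objective(Q, a, a0, b, b0)

x = np.array([1.0, 2.0, 3.0, 4.0])
h = 1e-6
fd_grad = np.array([(f(x + h * e) - f(x - h * e)) / (2 * h) for e in np.eye(4)])
print(F(x), fd_grad)
```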

The process is started with the initial points \(x_{0}=(20,-20, 20,-20)^{T}\) and \(x_{1} =0.9x_{0}\), and the stopping condition is \(\text {Residual} := \Vert u_{n} - q_{n}\Vert \le 10^{-9}\) or the number of iterations \(\ge\) 200 for all algorithms. Other parameters are selected as follows:

Algorithm 1: \(\tau _{1}=0.6, \mu =0.6, \gamma =1.5, \theta =0.5, \alpha _{n}=\frac{1}{n^{2}}\) and \(\gamma _{n}=\frac{1}{C*(n+1)}\) with \(C=10^{7}\).

Algorithm 1 in [29]: \(\nu _{0} =1, \theta = 0.9, \mu =0.6\) and \(\rho _{n} = \rho = 0.4\).

Algorithm 2 in [32]: \(\lambda _{0}=0.6, \mu =0.6\) and \(\alpha _{n}=\frac{1}{C*(n+1)}\). The numerical results are described in Fig. 8.

Case 2: In the second experiment, we make the problem more challenging. The matrix \(A\in \mathbb {R}^{m \times m}\), the vectors \(c, d,y_{0} \in \mathbb {R}^{m}\) and the scalars \(c_{0},d_{0}\) are generated from a normal distribution with mean zero and unit variance. We set \(e=(1,1,\ldots ,1)^{T} \in \mathbb {R}^{m}, Q=A^{T}A+I, a:=e+c, b:=e+d, a_{0}=1+c_{0}, b_{0}=1+d_{0}\). We minimize f over \(C := \{x \in \mathbb {R}^{m} : 1 \le x_{i} \le 10, i = 1,\ldots , m\} \subset X\). Since the matrix Q is symmetric and positive definite in \(\mathbb {R}^{m}\), f is pseudo-convex on X. The process is started with the initial points \(x_{0}:=m y_{0}\) and \(x_{1} =0.9x_{0}\), with the same stopping condition and parameters as in Case 1. The numerical results are described in Fig. 9.
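The random data of Case 2 can be generated as follows. The seed and function names are our own, and m = 10 is only an example, since the text does not fix m at this point. Because \(b=e+d\) is random, whether \(C\subset X\) actually holds depends on the draw; the helper box_inside_domain checks this by minimizing the affine function \(b^{T}x+b_{0}\) over the box, where the minimum is attained at a vertex.

```python
import numpy as np

def make_case2_data(m, seed=0):
    """Random data for Case 2 as described in the text; the seed is our own choice."""
    rng = np.random.default_rng(seed)
    A = rng.standard_normal((m, m))
    c, d, y0 = rng.standard_normal(m), rng.standard_normal(m), rng.standard_normal(m)
    c0, d0 = rng.standard_normal(), rng.standard_normal()
    e = np.ones(m)
    Q = A.T @ A + np.eye(m)            # symmetric positive definite by construction
    a, b = e + c, e + d
    a0, b0 = 1.0 + c0, 1.0 + d0
    x0 = m * y0
    x1 = 0.9 * x0
    return Q, a, a0, b, b0, x0, x1

def box_inside_domain(b, b0, lo=1.0, hi=10.0):
    """Check that C = [lo, hi]^m lies in X = {x : b^T x + b0 > 0} via the minimum over the box."""
    return float(np.sum(np.minimum(lo * b, hi * b)) + b0) > 0.0

Q, a, a0, b, b0, x0, x1 = make_case2_data(m=10)
print(np.linalg.eigvalsh(Q).min(), box_inside_domain(b, b0))
```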

The results reported in Figs. 7, 8 and 9 show that Algorithm 1 performs better than Algorithm 1 in [29] and Algorithm 2 in [32].

6 Conclusions

In this paper, we have introduced a modified subgradient extragradient method for solving pseudomonotone variational inequalities in real Hilbert spaces. The algorithm needs only one projection onto the feasible set C per iteration and does not require prior knowledge of the Lipschitz constant of the cost mapping. First, we proved the strong convergence of the proposed algorithm under relaxed assumptions. Second, we showed that the algorithm converges strongly with an R-linear rate under Lipschitz continuity and strong pseudomonotonicity assumptions. Finally, several numerical results were presented to illustrate the efficiency and advantages of the proposed method.

Data availability

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

Antipin, A.S.: On a method for convex programs using a symmetrical modification of the Lagrange function. Ekonomika i Mat. Metody. 12, 1164–1173 (1976)

Baiocchi, C., Capelo, A.: Variational and Quasivariational Inequalities. Applications to Free Boundary Problems. Wiley, New York (1984)

Boţ, R.I., Csetnek, E.R., Vuong, P.T.: The forward-backward-forward method from continuous and discrete perspective for pseudo-monotone variational inequalities in Hilbert spaces. Eur. J. Oper. Res. 287, 49–60 (2020)

Cai, X., Gu, G., He, B.: On the \(O(1/t)\) convergence rate of the projection and contraction methods for variational inequalities with Lipschitz continuous monotone operators. Comput. Optim. Appl. 57, 339–363 (2014)

Cegielski, A.: Iterative Methods for Fixed Point Problems in Hilbert Spaces. Lecture Notes in Mathematics, vol. 2057. Springer, Berlin (2012)

Censor, Y., Gibali, A., Reich, S.: The subgradient extragradient method for solving variational inequalities in Hilbert space. J. Optim. Theory Appl. 148, 318–335 (2011)

Censor, Y., Gibali, A., Reich, S.: Strong convergence of subgradient extragradient methods for the variational inequality problem in Hilbert space. Optim. Meth. Softw. 26, 827–845 (2011)

Censor, Y., Gibali, A., Reich, S.: Extensions of Korpelevich’s extragradient method for the variational inequality problem in Euclidean space. Optimization 61, 1119–1132 (2011)

Cottle, R.W., Yao, J.C.: Pseudo-monotone complementarity problems in Hilbert space. J. Optim. Theory Appl. 75, 281–295 (1992)

Denisov, S.V., Semenov, V.V., Chabak, L.M.: Convergence of the modified extragradient method for variational inequalities with non-Lipschitz operators. Cybern. Syst. Anal. 51, 757–765 (2015)

Dong, Q.L., Cho, Y.J., Zhong, L.L., Rassias, Th.M.: Inertial projection and contraction algorithms for variational inequalities. J. Glob. Optim. 70, 687–704 (2018)

Dong, Q.L., Gibali, A., Jiang, D.: A modified subgradient extragradient method for solving the variational inequality problem. Numer. Algor. 79, 927–940 (2018)

Facchinei, F., Pang, J.S.: Finite-Dimensional Variational Inequalities and Complementarity Problems. Springer Series in Operations Research, vols. I and II. Springer, New York (2003)

Fichera, G.: Sul problema elastostatico di Signorini con ambigue condizioni al contorno. Atti Accad. Naz. Lincei, VIII. Ser., Rend., Cl. Sci. Fis. Mat. Nat. 34, 138–142 (1963)

Gibali, A., Reich, S., Zalas, R.: Iterative methods for solving variational inequalities in Euclidean space. J. Fixed Point Theory Appl. 17, 775–811 (2015)

Goebel, K., Reich, S.: Uniform Convexity, Hyperbolic Geometry, and Nonexpansive Mappings. Marcel Dekker, New York (1984)

Harker, P.T.: A variational inequality approach for the determination of oligopolistic market equilibrium. Mathematical Programming 30, 105–111 (1984)

He, B.S.: A class of projection and contraction methods for monotone variational inequalities. Appl. Math. Optim. 35, 69–76 (1997)

Karamardian, S., Schaible, S.: Seven kinds of monotone maps. J. Optim. Theory Appl. 66, 37–46 (1990)

Kim, D.S., Vuong, P.T., Khanh, P.D.: Qualitative properties of strongly pseudomonotone variational inequalities. Optim. Lett. 10, 1669–1679 (2016)

Kinderlehrer, D., Stampacchia, G.: An Introduction to Variational Inequalities and Their Applications. Academic Press, New York (1980)

Korpelevich, G.M.: The extragradient method for finding saddle points and other problems. Ekonomika i Mat. Metody 12, 747–756 (1976)

Linh, H.M., Reich, S., Thong, D.V., et al.: Analysis of two variants of an inertial projection algorithm for finding the minimum-norm solutions of variational inequality and fixed point problems. Numer. Algor. 89, 1695–1721 (2022)

Liu, H., Yang, J.: Weak convergence of iterative methods for solving quasimonotone variational inequalities. Comput. Optim. Appl. 77, 491–508 (2020)

Murphy, F.H., Sherali, H.D., Soyster, A.L.: A mathematical programming approach for determining oligopolistic market equilibrium. Math. Program. 24, 92–106 (1982)

Ortega, J.M., Rheinboldt, W.C.: Iterative Solution of Nonlinear Equations in Several Variables. Academic Press, New York (1970)

Reich, S., Thong, D.V., Cholamjiak, P., et al.: Inertial projection-type methods for solving pseudomonotone variational inequality problems in Hilbert space. Numer. Algor. 88, 813–835 (2021)

Saejung, S., Yotkaew, P.: Approximation of zeros of inverse strongly monotone operators in Banach spaces. Nonlinear Anal. 75, 742–750 (2012)

Shehu, Y., Iyiola, O.S., Yao, J.C.: New projection methods with inertial steps for variational inequalities. Optimization (2021). https://doi.org/10.1080/02331934.2021.1964079

Stampacchia, G.: Formes bilinéaires coercitives sur les ensembles convexes. C. R. Acad. Sci. 258, 4413–4416 (1964)

Vuong, P.T., Shehu, Y.: Convergence of an extragradient-type method for variational inequality with applications to optimal control problems. Numer. Algor. 81, 269–291 (2019)

Yang, J., Liu, H., Liu, Z.: Modified subgradient extragradient algorithms for solving monotone variational inequalities. Optimization 67, 2247–2258 (2018)

Sun, D.F.: A class of iterative methods for solving nonlinear projection equations. J. Optim. Theory Appl. 91, 123–140 (1996)

Thong, D.V., Shehu, Y., Iyiola, O.S.: Weak and strong convergence theorems for solving pseudo-monotone variational inequalities with non-Lipschitz mappings. Numer. Algor. 84, 795–823 (2020)

Thong, D.V., Vuong, P.T.: R-linear convergence analysis of inertial extragradient algorithms for strongly pseudo-monotone variational inequalities. J. Comput. Appl. Math. 406, 114003 (2022)

Thong, D.V., Dong, Q.L., Liu, L.L., Triet, N.A., Lan, P.N.: Two fast converging inertial subgradient extragradient algorithms with variable stepsizes for solving pseudo-monotone VIPs in Hilbert spaces. J. Comput. Appl. Math. 410, 114260 (2022)

Vuong, P.T.: On the weak convergence of the extragradient method for solving pseudo-monotone variational inequalities. J. Optim. Theory Appl. 176, 399–409 (2018)

Vuong, P.T., Shehu, Y.: Convergence of an extragradient-type method for variational inequality with applications to optimal control problems. Numer. Algor. 81(1), 269–291 (2019)

Alt, W., Baier, R., Gerdts, M., Lempio, F.: Error bounds for Euler approximation of linear-quadratic control problems with bang-bang solutions. Numer. Algebra Control Optim. 2, 547–570 (2012)

Bressan, A., Piccoli, B.: Introduction to the Mathematical Theory of Control. AIMS Series on Applied Mathematics, vol. 2. American Institute of Mathematical Sciences (AIMS), Springfield, MO (2007)

Acknowledgements

The authors are thankful to the handling editor and two anonymous reviewers for comments and remarks that substantially improved the quality of the paper. The authors would like to thank Professor Pham Ky Anh for drawing our attention to the subject and for many useful discussions. This research was funded by National Economics University, Hanoi, Vietnam.

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Thong, D.V., Li, X., Dong, QL. et al. Strong and linear convergence of projection-type method with an inertial term for finding minimum-norm solutions of pseudomonotone variational inequalities in Hilbert spaces. Numer Algor 92, 2243–2274 (2023). https://doi.org/10.1007/s11075-022-01386-9

DOI: https://doi.org/10.1007/s11075-022-01386-9

Keywords

- Subgradient extragradient method

- Projection and contraction method

- Variational inequality problem

- Pseudomonotone mapping

- Convergence rate