Abstract

In this paper, two novel classes of implicit exponential Runge–Kutta (ERK) methods are studied for solving highly oscillatory systems. First, symplectic conditions for two kinds of exponential integrators are derived, and a first-order symplectic method is presented. Highly accurate implicit ERK methods (up to order four) are then formulated by comparing their Taylor expansions with that of the exact solution; it is shown that the order conditions of the two new kinds of exponential methods are identical to those of classical Runge–Kutta (RK) methods. Moreover, we investigate the linear stability properties of these exponential methods. Numerical examples demonstrate the long-time energy preservation of the first-order symplectic method and illustrate the accuracy and efficiency of the formulated methods in comparison with standard ERK methods.

1 Introduction

It is well known that classical Runge–Kutta methods have a wide range of applications in scientific computing. In particular, symplectic methods preserve the symplecticity of the original system, and symplectic or symmetric methods provide long-time energy preservation when applied to a Hamiltonian system. Symplectic algorithms for Hamiltonian systems appeared in the 1980s, and the earliest significant contributions to this field were due to Feng Kang (see [1, 2]). It is also worth noting the important early work on symplectic integration by Sanz-Serna, who first found and analyzed symplectic Runge–Kutta schemes for Hamiltonian systems (see [3]). Symplectic exponential Runge–Kutta methods for solving Hamiltonian systems were proposed by Mei and Wu [4] and were shown to perform better than classical symplectic Runge–Kutta schemes. However, the coefficients of these exponential integrators depend strongly on computing or approximating the product of a matrix exponential function with a vector. We therefore aim to design two novel classes of implicit exponential Runge–Kutta methods with lower computational cost.

In this work, we consider the first-order initial value problem

\[ y^{\prime }(t)+My(t)=f(y(t)),\qquad y(t_0)=y_0, \tag{1} \]

where the matrix \(M\in {\mathbb {R}}^{m\times m}\) is symmetric positive definite or skew-Hermitian with eigenvalues of large modulus. Problems of the form (1) arise frequently in a variety of applied sciences, such as quantum mechanics, flexible mechanics, and electrodynamics. Some highly oscillatory problems (see, e.g. [5, 6]), Schrödinger equations (see, e.g. [7,8,9]) and KdV equations (see, e.g. [10]) can be converted into (1) by appropriate spatial discretizations. Using the Volterra integral formula

Hochbruck and Ostermann [11] formulated exponential Runge–Kutta methods of collocation type. Exponential Runge–Kutta (ERK) methods are typically based on the stiff order conditions (which comprise the classical order conditions) [5, 11,12,13]. In formula (2), \(e^{-hM}\) is the matrix exponential function, and exponential integrators integrate the linear equation \(y^{\prime }(t)+My(t)=0\) exactly, which gives them a distinct advantage over non-exponential integrators for solving highly oscillatory problems. Exponential integrators have therefore received increasing attention [5, 14,15,16,17,18,19,20,21,22]. It is also worth mentioning that extended Runge–Kutta–Nyström (ERKN) methods [23,24,25,26,27,28,29,30], which are also exponential integrators, were formulated for effectively solving second-order oscillatory systems.
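The exactness claim for the linear equation can be illustrated with a minimal sketch. It assumes a scalar stand-in \(M=i\omega \) for a skew-Hermitian matrix; the values of `omega`, `h` and `n_steps` are illustrative choices, not taken from the paper. The exponential update reproduces the exact solution up to rounding, whereas explicit Euler is unstable for this stepsize.

```python
import cmath


def exponential_step(y, h, m):
    """Advance y' + m*y = 0 by one step with the exact flow exp(-h*m)."""
    return cmath.exp(-h * m) * y


def explicit_euler_step(y, h, m):
    """Advance y' = -m*y by one explicit Euler step (non-exponential)."""
    return y - h * m * y


omega = 50.0             # illustrative frequency (assumption)
m = 1j * omega           # scalar stand-in for a skew-Hermitian M
h, n_steps = 0.01, 100   # integrate up to t = 1

y_exp = 1.0 + 0.0j
y_eul = 1.0 + 0.0j
for _ in range(n_steps):
    y_exp = exponential_step(y_exp, h, m)
    y_eul = explicit_euler_step(y_eul, h, m)

exact = cmath.exp(-m * h * n_steps)
err_exp = abs(y_exp - exact)   # rounding error only
err_eul = abs(y_eul - exact)   # grows, since |1 - i*h*omega| > 1
print(err_exp, err_eul)
```

The same idea carries over to the matrix case, where the scalar exponential is replaced by the action of \(e^{-hM}\) on a vector.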

On the other hand, (1) frequently possesses some important geometrical or physical properties. When \(f(y)=J^{-1}\nabla U(y)\) and \(-M=J^{-1}Q\), with the skew-symmetric matrix

where U(y) is a smooth potential function, Q is a symmetric matrix and I is the identity matrix, the problem (1) can be converted into a Hamiltonian system. Owing to this, our study starts by deriving symplectic conditions for these methods. In this paper, the coefficients of the implicit exponential methods are real constants; therefore, our study concerns the classical order, and the methods are not required to satisfy the stiff order conditions. We have shown in [31, 32] that two new kinds of explicit ERK methods up to order four reduce to classical Runge–Kutta (RK) methods once \(M\rightarrow {\textbf{0}}\). In what follows, we study the implicit ERK methods.

The paper is organized as follows. In Sect. 2, we investigate symplectic conditions for the simplified version of ERK (SVERK) and the modified version of ERK (MVERK) methods, and present a first-order symplectic SVERK method. The order conditions of implicit SVERK and MVERK methods, which are identical to the order conditions of RK methods, are derived in Sect. 3. Section 4 is devoted to the linear stability regions of implicit SVERK and MVERK methods. In Sect. 5, numerical experiments are carried out to show the structure-preserving property of the symplectic method and to demonstrate the comparable accuracy and efficiency of these implicit ERK methods. The last section contains concluding remarks.

2 Symplectic conditions for two new classes of ERK methods

In [31], we formulated the modified and simplified versions of explicit ERK methods for stiff or highly oscillatory problems, and analyzed the convergence of these explicit exponential methods. It was also pointed out that the internal stages and update of SVERK methods preserve some properties of matrix-valued functions, whereas MVERK methods inherit the internal stages and modify the update of classical RK methods; in both cases, the coefficients are independent of the matrix functions \(\varphi _k(-hM)\) defined by (30).

Definition 2.1

([31]) An s-stage SVERK method for the numerical integration of (1) is defined as

where \(a_{ij} \), \(b_i\) are real constants for \(i,j=1,\ldots , s\), \(Y_i\approx y(t_0+c_ih)\) for \(i=1,\ldots ,s\), \(w_s(z)\) depends on h, M, and \(w_s(z)\rightarrow 0\) when \(M\rightarrow {\textbf{0}}\).

Definition 2.2

([31]) An s-stage MVERK method for the numerical integration of (1) is defined as

where \({\bar{a}}_{ij}\), \({\bar{b}}_i\) are real constants for \({i,j=1,\ldots , s}\), \({\bar{Y}}_i\approx y(t_0+{\bar{c}}_ih) \) for \(i=1,\ldots ,s\), \({\bar{w}}_s(z)\) is related to h and M, and \({\bar{w}}_s(z)\rightarrow 0\) once \(M\rightarrow {\textbf{0}}\).

The terms \(w_s(z)\) and \({\bar{w}}_s(z)\) also depend on \(f(\cdot )\) and the initial value \(y_0\) when the order p of the SVERK and MVERK methods satisfies \(p\ge 2\). For first-order methods, \(w_s(z)=0\) and \({\bar{w}}_s(z)=0\). It should be noted that SVERK (respectively MVERK) methods of the same order share the same \(w_s(z)\) (respectively \({\bar{w}}_s(z)\)), and that \(w_s(z)\) differs from \({\bar{w}}_s(z)\) when \(p\ge 3\) [31, 32]. It is clear that SVERK and MVERK methods reduce to classical RK methods when \(M\rightarrow {\textbf{0}}\), and that these methods exactly integrate the first-order homogeneous linear system

with the exact solution

The SVERK method (3) can be displayed by the following Butcher Tableau

where \(c_i=\sum _{j=1}^sa_{ij}\) for \(i=1,\ldots ,s\). Similarly, the MVERK method (4) also can be expressed in the Butcher tableau

with \({\bar{c}}_i=\sum _{j=1}^s {\bar{a}}_{ij}\) for \(i=1,\ldots ,s\).

It is true that (1) becomes a Hamiltonian system when \(f(y)=J^{-1}\nabla U(y)\) and \(M=-J^{-1}Q\), where U(y) is a smooth potential function and Q is a symmetric matrix. Thus, we consider the following Hamiltonian system

Under the assumptions \(w_s(z)=0\) and \({\bar{w}}_s(z)=0\), we will analyze symplectic conditions for SVERK and MVERK methods. In fact, \(w_s(z)=0\) and \({\bar{w}}_s(z)=0\) mean that the order of the SVERK and MVERK methods satisfies \(p\le 1\). When \(w_s(z)\ne 0\) and \({\bar{w}}_s(z)\ne 0\), these terms break the formal conservation of the symplectic invariant; hence the following analysis does not cover high-order methods.

Theorem 2.3

Suppose that the coefficients of an s-stage SVERK method with \(w_s(z)=0\) satisfy the following conditions:

where \(G^{-1}_k=\nabla ^2U(y_n+h\sum _{l=1}^s a_{kl}\xi _l)\) and \(\xi _k =f(y_n+h\sum _{l=1}^s a_{kl}\xi _l)\), then the SVERK method is symplectic.

Proof

A numerical method is said to be symplectic if the numerical solution \(y_{n+1}\) satisfies \((\frac{\partial y_{n+1}}{\partial y_0})^{\intercal }J(\frac{\partial y_{n+1}}{\partial y_0})\) \(=(\frac{\partial y_{n}}{\partial y_0})^{\intercal }J(\frac{\partial y_{n}}{\partial y_0})\). Under the assumption \(w_s(z)=0\), we rewrite the SVERK method as

Let \(\Xi _k=\frac{\partial \xi _k}{\partial y_0}\), \(\Psi _{n+1}=\frac{\partial y_{n+1}}{\partial y_0}\), and \(G_k=\nabla ^2U\big (e^{-c_khM}y_n+h\sum _{l=1}^sa_{kl}\xi _l\big )\) for \( k=1, \ldots , s\). Assuming moreover that the symmetric matrices \(G_1, \ldots , G_s\) are nonsingular, we apply (10) to the Hamiltonian system (8); the derivative of \(y_{n+1}\) with respect to \(y_0\) is

It is easy to obtain

It follows from (10) that

thus

In view of (14), we have

and

Inserting (15) and (16) into (12) yields

As the coefficients of an SVERK method satisfy the conditions (9), a direct calculation leads to

Therefore the SVERK method is symplectic. The proof is complete. \(\square \)

Remark 2.4

It can be observed that the symplectic conditions of SVERK methods with \(w_s(z)=0\) reduce to the symplectic conditions of classical RK methods once \(M\rightarrow {\textbf{0}}\). Choosing \(b_1=1\), \(c_1=1\) and \(a_{11}=1/2\), we obtain the first-order symplectic SVERK method

For (1), the method (17) reduces to the implicit midpoint method when \(M\rightarrow {\textbf{0}}\). Unfortunately, no symplectic SVERK method of order \(p\ge 2\) exists, since \(w_s(z)\ne 0\) for such methods.
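A minimal sketch of such a first-order symplectic step is given below. Since formula (17) is not reproduced here, the sketch assumes, from the SVERK form with \(w_s(z)=0\) and the coefficients \(b_1=1\), \(c_1=1\), \(a_{11}=1/2\), that one step reads \(Y_1=e^{-hM}y_n+\frac{h}{2}f(Y_1)\), \(y_{n+1}=e^{-hM}y_n+hf(Y_1)\). The toy Hamiltonian \(H=\frac{1}{2}p^2+\frac{1}{2}\omega ^2q^2+\frac{\varepsilon }{4}(q^2+p^2)^2\) and the names `OMEGA`, `EPS`, `sverk1_step` and `jacobian_det` are hypothetical and serve only as illustration.

```python
import math

OMEGA = 5.0  # hypothetical frequency of the linear part
EPS = 0.1    # hypothetical strength of the nonlinear part


def linear_flow(y, h):
    """Apply exp(-h*M) to y = (q, p) for the linear part q' = p, p' = -OMEGA^2 q."""
    q, p = y
    c, s = math.cos(OMEGA * h), math.sin(OMEGA * h)
    return (c * q + s * p / OMEGA, -OMEGA * s * q + c * p)


def f(y):
    """Nonlinear Hamiltonian vector field of U = EPS*(q^2 + p^2)^2 / 4."""
    q, p = y
    r2 = q * q + p * p
    return (EPS * r2 * p, -EPS * r2 * q)


def energy(y):
    q, p = y
    return 0.5 * p * p + 0.5 * OMEGA ** 2 * q * q + 0.25 * EPS * (q * q + p * p) ** 2


def sverk1_step(y, h, tol=1e-14, max_iter=200):
    """Assumed step: Y = exp(-hM) y + (h/2) f(Y), y_new = exp(-hM) y + h f(Y)."""
    z = linear_flow(y, h)
    Y = z
    for _ in range(max_iter):  # fixed-point iteration for the implicit stage
        fq, fp = f(Y)
        Y_new = (z[0] + 0.5 * h * fq, z[1] + 0.5 * h * fp)
        diff = math.hypot(Y_new[0] - Y[0], Y_new[1] - Y[1])
        Y = Y_new
        if diff < tol:
            break
    fq, fp = f(Y)
    return (z[0] + h * fq, z[1] + h * fp)


def jacobian_det(y, h, d=1e-6):
    """Central-difference determinant of the one-step map (equals 1 if symplectic in 2D)."""
    q, p = y
    a, b = sverk1_step((q + d, p), h), sverk1_step((q - d, p), h)
    c, e = sverk1_step((q, p + d), h), sverk1_step((q, p - d), h)
    dq = ((a[0] - b[0]) / (2 * d), (a[1] - b[1]) / (2 * d))
    dp = ((c[0] - e[0]) / (2 * d), (c[1] - e[1]) / (2 * d))
    return dq[0] * dp[1] - dq[1] * dp[0]


h, y = 0.002, (0.5, 0.0)
h0 = energy(y)
max_rel_err = 0.0
for _ in range(5000):  # integrate up to t = 10
    y = sverk1_step(y, h)
    max_rel_err = max(max_rel_err, abs(energy(y) - h0) / abs(h0))
det = jacobian_det((0.5, 0.3), h)
print(max_rel_err, det)
```

Under these assumptions the step is the exact linear flow followed by an implicit midpoint step for the nonlinear part, which is why the energy error stays bounded and the Jacobian determinant stays at one up to finite-difference error.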

The next theorem will present symplectic conditions of MVERK methods with \({\bar{w}}_s(z)=0\).

Theorem 2.5

Suppose that the coefficients of an s-stage MVERK method with \({\bar{w}}_s(z)=0\) satisfy the following conditions:

with \(D_i=\frac{\partial f({\bar{Y}}_i)}{\partial y}\), then the MVERK method is symplectic.

Proof

Set \(D_i=\frac{\partial f({\bar{Y}}_i)}{\partial y}\), \(X_i=\frac{\partial {\bar{Y}}_i}{\partial y_0}\), and \(\Psi _{n+1}=\frac{\partial {\bar{y}}_{n+1}}{\partial y_0}\). As before, we apply the MVERK method (4) with \({\bar{w}}_s(z)=0\) to the Hamiltonian system (8); the derivative of this scheme with respect to \(y_0\) is

Then, we have

Using the first formula of (19), we obtain

thus

Inserting (22) into (20) leads to

As the coefficients of the MVERK method satisfy conditions (18), it can be verified that

whence the MVERK method is symplectic. The proof is complete. \(\square \)

Remark 2.6

Theorem 2.5 presents symplectic conditions for MVERK methods with \({\bar{w}}_s(z)=0\). However, when \({\bar{b}}_i\) (\(i=1,\ldots ,s\)) are constants, the second formula of (18) can never be satisfied. Symplectic conditions for MVERK methods with \({\bar{w}}_s(z)\ne 0\) can be analyzed in the same way; unfortunately, such methods cannot preserve the symplectic invariant.

Corollary 2.7

There does not exist any symplectic MVERK method.

3 Highly accurate implicit ERK methods

Section 2 is concerned with symplectic conditions for SVERK and MVERK methods. However, the symplectic SVERK method has only order one, and no symplectic MVERK method exists. In practice, highly accurate and efficient numerical methods are needed; hence high-order implicit SVERK (IMSVERK) and implicit MVERK (IMMVERK) methods are studied in this section.

We now consider a one-stage IMSVERK method with \(w_2(z)=-\frac{h^2Mf(y_0)}{2!}\)

and one-stage IMMVERK method with \({\bar{w}}_2(z)=-\frac{h^2Mf(y_0)}{2!}\)

A numerical method is said to be of order p if the Taylor expansions of the numerical solution \(y_1\) or \({\bar{y}}_1\) and of the exact solution \(y(t_0+h)\) about \(y_0\) coincide up to \(h^p\). For convenience, we denote \(g(t_0)=-My(t_0)+f(y(t_0))\); the Taylor expansion of the exact solution \(y(t_0+h)\) is

Under the assumption \(y_0=y(t_0)\), the Taylor expansions for numerical solutions \(y_1\) and \({\bar{y}}_1\) are

and

If we consider the implicit second-order ERK methods with one stage, then \(b_1={\bar{b}}_1=1\) and \(a_{11}={\bar{a}}_{11}=\frac{1}{2}\). Therefore, the second-order IMSVERK method with one stage is given by

which can be denoted by the Butcher tableau

The second-order IMMVERK method with one stage is shown as

which also can be indicated by the Butcher tableau

ERK methods of collocation type were formulated by Hochbruck and Ostermann, and their convergence properties were also analyzed in [11]. Using the collocation node \(c_1=1/2\), the implicit second-order (stiff) ERK method with one stage is

with

We notice that when \(M\rightarrow {\textbf{0}}\), the methods (26), (28), and (29) reduce to the implicit midpoint rule.
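The second-order behaviour of the one-stage methods can be checked numerically with a small sketch. Because formulas (26) and (28) are not reproduced here, the code assumes the following forms with \(a_{11}={\bar{a}}_{11}=1/2\), \(b_1={\bar{b}}_1=1\), \(c_1=1/2\) and \(w_2(z)={\bar{w}}_2(z)=-\frac{h^2Mf(y_n)}{2}\): for IMSVERK, \(Y_1=e^{-hM/2}y_n+\frac{h}{2}f(Y_1)\); for IMMVERK, \({\bar{Y}}_1=y_n+\frac{h}{2}(-M{\bar{Y}}_1+f({\bar{Y}}_1))\); and in both cases \(y_{n+1}=e^{-hM}y_n+hf(Y_1)+w_2(z)\). The scalar test problem \(y^{\prime }=-y+y^2\), \(y(0)=1/2\), with exact solution \(y(t)=1/(1+e^{t})\), is a hypothetical choice for illustration only.

```python
import math

M = 1.0  # scalar stand-in for the matrix M (assumption)


def f(y):
    return y * y


def exact(t, y0=0.5):
    """Exact solution of y' = -y + y^2 (Bernoulli equation)."""
    return 1.0 / (1.0 + (1.0 / y0 - 1.0) * math.exp(t))


def fixed_point(g, y, tol=1e-14, max_iter=200):
    """Iterate y <- g(y) until two successive iterates differ by less than tol."""
    for _ in range(max_iter):
        y_new = g(y)
        if abs(y_new - y) < tol:
            return y_new
        y = y_new
    return y


def imsverk12_step(y0, h):
    # Assumed stage: Y = exp(-h*M/2) * y0 + (h/2) * f(Y)
    Y = fixed_point(lambda Y: math.exp(-0.5 * h * M) * y0 + 0.5 * h * f(Y), y0)
    return math.exp(-h * M) * y0 + h * f(Y) - 0.5 * h * h * M * f(y0)


def immverk12_step(y0, h):
    # Assumed stage: Y = y0 + (h/2) * (-M*Y + f(Y))
    Y = fixed_point(lambda Y: y0 + 0.5 * h * (-M * Y + f(Y)), y0)
    return math.exp(-h * M) * y0 + h * f(Y) - 0.5 * h * h * M * f(y0)


def global_error(step, h, t_end=1.0):
    y = 0.5
    for _ in range(round(t_end / h)):
        y = step(y, h)
    return abs(y - exact(t_end))


def observed_order(step, h=0.05):
    """Estimate the order from the errors at stepsizes h and h/2."""
    return math.log2(global_error(step, h) / global_error(step, h / 2))


order_s = observed_order(imsverk12_step)
order_m = observed_order(immverk12_step)
print(order_s, order_m)
```

Halving the stepsize should reduce the global error by roughly a factor of four for both variants if the assumed forms are second-order accurate.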

Now, we consider fourth-order IMSVERK and IMMVERK methods with two stages. The order conditions for the implicit fourth-order RK method with two stages are

Under the assumptions \(c_1=a_{11}+a_{12}\) and \(c_2=a_{21}+a_{22}\), it has a unique solution [33, 34]. The following theorem verifies that the order conditions of fourth-order IMSVERK methods with two stages are identical to (31).
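As a quick sanity check, the sketch below evaluates the eight classical order conditions up to order four for the two-stage Hammer–Hollingsworth (Gauss) coefficients. It assumes that (31) consists of these standard conditions, which are not reproduced here, and it uses the well-known coefficients \(a_{11}=a_{22}=\frac{1}{4}\), \(a_{12}=\frac{1}{4}-\frac{\sqrt{3}}{6}\), \(a_{21}=\frac{1}{4}+\frac{\sqrt{3}}{6}\), \(b_1=b_2=\frac{1}{2}\). The function name `order_condition_residuals` is hypothetical.

```python
import math

s3 = math.sqrt(3.0) / 6.0
A = [[0.25, 0.25 - s3],
     [0.25 + s3, 0.25]]
b = [0.5, 0.5]
c = [sum(row) for row in A]  # c_i = sum_j a_ij


def order_condition_residuals(A, b, c):
    """Return the residuals of the classical RK order conditions up to order 4."""
    n = range(len(b))
    Ac = [sum(A[i][j] * c[j] for j in n) for i in n]
    Ac2 = [sum(A[i][j] * c[j] ** 2 for j in n) for i in n]
    AAc = [sum(A[i][j] * Ac[j] for j in n) for i in n]
    return {
        "sum b_i = 1": sum(b) - 1.0,
        "sum b_i c_i = 1/2": sum(b[i] * c[i] for i in n) - 1.0 / 2.0,
        "sum b_i c_i^2 = 1/3": sum(b[i] * c[i] ** 2 for i in n) - 1.0 / 3.0,
        "sum b_i a_ij c_j = 1/6": sum(b[i] * Ac[i] for i in n) - 1.0 / 6.0,
        "sum b_i c_i^3 = 1/4": sum(b[i] * c[i] ** 3 for i in n) - 1.0 / 4.0,
        "sum b_i c_i a_ij c_j = 1/8": sum(b[i] * c[i] * Ac[i] for i in n) - 1.0 / 8.0,
        "sum b_i a_ij c_j^2 = 1/12": sum(b[i] * Ac2[i] for i in n) - 1.0 / 12.0,
        "sum b_i a_ij a_jk c_k = 1/24": sum(b[i] * AAc[i] for i in n) - 1.0 / 24.0,
    }


res = order_condition_residuals(A, b, c)
for name, value in res.items():
    print(f"{name}: residual {value:.2e}")
```

All residuals vanish up to rounding, consistent with the fourth order of the two-stage Gauss method.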

Theorem 3.1

Suppose that the coefficients of a two-stage IMSVERK method with \(w_4(z)\)

where

satisfy (31). Then the IMSVERK method has order four.

Proof

Ignoring the \({\mathcal {O}}(h^5)\) terms, the Taylor expansion of the numerical solution \(y_1\) is given by

Under the appropriate assumptions \(c_i=\sum _{j=1}^s a_{ij}\) for \(i=1,\ldots ,s\), we have

Inserting \(Y_1\) and \(Y_2\) of (32) into \(f(Y_1)\) and \(f(Y_2)\) yields

and

Hence, we obtain

and

Likewise, a direct calculation leads to

and

To sum up, the Taylor expansion for the numerical solution \(y_1\) is

By comparing with the Taylor expansion of the exact solution \(y(t_0+h)\), it can be verified that the IMSVERK method with coefficients satisfying the order conditions (31) has order four. The proof of the theorem is complete. \(\square \)

Theorem 3.1 indicates that there exists a unique fourth-order IMSVERK method with two stages:

The method (34) can be displayed by the Butcher tableau

The following theorem shows that the order conditions of the fourth-order IMMVERK method with two stages are identical to (31) as well.

Theorem 3.2

Suppose that the coefficients of a two-stage IMMVERK method with \({\bar{w}}_4(z)\)

where

satisfy (31). Then the IMMVERK method has order four.

Proof

Under the assumptions \({\bar{c}}_1={\bar{a}}_{11}+{\bar{a}}_{12}\) and \({\bar{c}}_2={\bar{a}}_{21}+{\bar{a}}_{22}\), the result can be proved in the same way as Theorem 3.1 by comparing the Taylor expansion of the numerical solution \({\bar{y}}_1\) with that of the exact solution \(y(t_0+h)\). Therefore, we omit the details. \(\square \)

Then, we present the unique fourth-order IMMVERK method with two stages

with \(g(y_0)=-My_0+f(y_0)\), which can be denoted by the Butcher tableau

In view of (34) and (37), the two methods reduce to the classical implicit fourth-order RK method with two stages by Hammer and Hollingsworth (see, e.g. [33]) once \(M\rightarrow {\textbf{0}}\). It should be noted that (34) and (37) use the Jacobian matrix and Hessian matrix of f(y) with respect to y at each step; however, to our knowledge, this idea is by no means new for stiff problems (see, e.g. [35,36,37]). Using the Gaussian points \(c_1=\frac{1}{2}-\frac{\sqrt{3}}{6}\) and \(c_2=\frac{1}{2}+\frac{\sqrt{3}}{6}\), the ERK method of collocation type with two stages was formulated by Hochbruck and Ostermann [11]; it can be represented by the Butcher tableau

It is clear that the coefficients of (39) are matrix functions \(\varphi _k(-hM)\) \((k>0)\), so their implementation depends on computing or approximating the product of a matrix exponential function with a vector. When a variable stepsize technique is used, the coefficients of these exponential integrators must be recalculated at each step. In contrast, the coefficients of SVERK and MVERK methods are real constants, which reduces the computation of matrix exponentials to some extent. On the other hand, compared with standard RK methods, these ERK methods retain some properties of matrix-valued functions and exactly integrate the homogeneous linear equation (5).

4 Linear stability

In what follows we investigate the linear stability properties of IMMVERK and IMSVERK methods. For classical RK methods, the linear stability analysis is related to the Dahlquist test equation [34]

When we consider exponential integrators, the stability properties of an exponential method are analyzed by applying the method to the partitioned Dahlquist equation [38]

that is, we solve (40) with a partitioned exponential integrator, treating the \(i\lambda _1\) term exponentially and the \(i\lambda _2\) term explicitly, which gives the explicit scalar form

Definition 4.1

For an exponential method with \(R(ik_1,ik_2)\) given by (41), the set S of the stability function \(R(ik_1,ik_2)\)

is called the stability region of the exponential method.

Applying the IMMVERK method (27) and the IMSVERK method (25) to (40), we depict the stability regions of the implicit second-order ERK methods in Fig. 1b and c, respectively, and we also plot the stability region of the explicit second-order MVERK method with two stages (see, e.g. [31]) in Fig. 1a. For fourth-order ERK methods, we select the IMMVERK method (37), the IMSVERK method (34) and the explicit SVERK method with four stages (see, e.g. [32]) for comparison; the stability regions of these methods are shown in Fig. 2. It can be observed that the implicit exponential methods possess stability regions comparable to those of the explicit exponential methods.
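How such a stability function arises can be sketched for the one-stage second-order IMSVERK method. The sketch rests on several assumptions that are not stated explicitly above: the partitioned test equation is taken as \(y^{\prime }=i\lambda _1y+i\lambda _2y\) with \(-M=i\lambda _1\) and \(f(y)=i\lambda _2y\), we set \(k_1=h\lambda _1\), \(k_2=h\lambda _2\), the stability region is taken as \(\{(k_1,k_2):|R(ik_1,ik_2)|\le 1\}\), and the method is assumed to have the form \(Y_1=e^{-hM/2}y_0+\frac{h}{2}f(Y_1)\), \(y_1=e^{-hM}y_0+hf(Y_1)-\frac{h^2Mf(y_0)}{2}\). The function names are hypothetical.

```python
import cmath


def stability_imsverk12(k1, k2):
    """R(i*k1, i*k2) for the assumed one-stage second-order IMSVERK form."""
    # Stage: Y = exp(i*k1/2) * y0 + (i*k2/2) * Y  =>  Y = exp(i*k1/2) * y0 / (1 - i*k2/2)
    stage = cmath.exp(0.5j * k1) / (1.0 - 0.5j * k2)
    # Update: exp(i*k1) + i*k2*Y + w2, with w2 = -h^2 * M * f(y0) / 2 = -k1*k2/2
    return cmath.exp(1j * k1) + 1j * k2 * stage - 0.5 * k1 * k2


def in_stability_region(k1, k2, tol=1e-12):
    return abs(stability_imsverk12(k1, k2)) <= 1.0 + tol


def stable_fraction(k_max=3.0, n=61):
    """Fraction of an n-by-n grid on [-k_max, k_max]^2 lying in the stability region."""
    pts = [-k_max + 2.0 * k_max * i / (n - 1) for i in range(n)]
    inside = sum(in_stability_region(k1, k2) for k1 in pts for k2 in pts)
    return inside / (n * n)


frac = stable_fraction()
print(frac)
```

Two limiting cases serve as a consistency check: for \(k_2=0\) the function reduces to \(e^{ik_1}\), and for \(k_1=0\) it reduces to the stability function of the implicit midpoint rule, both of modulus one.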

5 Numerical Experiments

In this section, we apply the symplectic method (17) and the highly accurate IMSVERK and IMMVERK methods to highly oscillatory problems. To illustrate the accuracy and efficiency of these exponential methods, we compare them with implicit ERK methods. These methods are implicit, so we use fixed-point iteration, which is stopped once the norm of the difference between two successive approximations is smaller than \(10^{-14}\). Matrix functions \(\varphi _k(-hM)\) \((k>0)\) are evaluated by the Krylov subspace method [39], which converges fast. In all numerical experiments, the explicit fourth-order (stiff) ERK method with five stages in [13], run with a smaller time stepsize, provides the reference solution. We use the Euclidean norm for the global errors (GE) and denote by GEH the global error of the Hamiltonian. The following numerical methods are chosen for comparison:

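- First-order methods: IMSVERK1s1 (the symplectic SVERK method (17)), EEuler and IMEEuler (the first-order exponential Euler methods).

- Second-order methods: IMSVERK12 (the IMSVERK method (26)), IMMVERK12 (the IMMVERK method (28)) and IMERK12 (the implicit ERK method (29)).

- Fourth-order methods: IMSVERK24 (the IMSVERK method (34)), IMMVERK24 (the IMMVERK method (37)) and IMERK24 (the ERK method of collocation type (39)).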
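The fixed-point iteration with the \(10^{-14}\) stopping rule described above can be sketched as follows for a single implicit stage of the generic form \(Y=z+haf(Y)\), where z collects the known part of the stage. The function name `fixed_point_stage`, the toy right-hand side and the numerical values are hypothetical; the actual implementation used in the experiments is not specified here.

```python
import math


def fixed_point_stage(z, h, a, f, tol=1e-14, max_iter=500):
    """Solve Y = z + h*a*f(Y) for a list of floats Y by fixed-point iteration.

    The iteration stops once the Euclidean norm of the difference of two
    successive approximations is smaller than tol.
    """
    Y = list(z)
    for iteration in range(1, max_iter + 1):
        fY = f(Y)
        Y_new = [zi + h * a * fi for zi, fi in zip(z, fY)]
        diff = math.sqrt(sum((u - v) ** 2 for u, v in zip(Y_new, Y)))
        Y = Y_new
        if diff < tol:
            return Y, iteration
    raise RuntimeError("fixed-point iteration did not converge")


def toy_rhs(Y):
    """Pendulum-type right-hand side, used only as an illustration."""
    return [-math.sin(Y[1]), Y[0]]


z, h, a = [0.0, 1.0], 0.1, 0.5
Y, n_iter = fixed_point_stage(z, h, a, toy_rhs)
residual = math.sqrt(sum((yi - zi - h * a * fi) ** 2
                         for yi, zi, fi in zip(Y, z, toy_rhs(Y))))
print(n_iter, residual)
```

The iteration is a contraction whenever \(h|a|\) times the Lipschitz constant of f is below one, so stiff nonlinearities or large stepsizes may call for a Newton-type solver instead.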

Problem 1

The Hénon–Heiles model is used to describe stellar motion (see, e.g. [34]), and it has the following form

The Hamiltonian of this system is given by

We select the initial values as

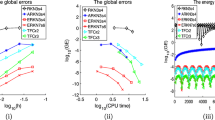

First, the problem is solved on the interval [0, 10] with stepsizes \(h=1/2^{k},\ k=2,\ldots ,6\) by IMSVERK1s1, EEuler and IMEEuler; Fig. 3a shows that the IMSVERK1s1 method is more accurate than the first-order exponential Euler methods. We then integrate this problem with stepsize \(h=1/30\) on the interval [0, 100]; the relative errors \(RGEH=\frac{GEH}{H_0}\) of the Hamiltonian energy for IMSVERK1s1, EEuler and IMEEuler are presented in Figs. 3b and 4, which reveal the structure-preserving property of the symplectic method. Finally, we integrate this system over the interval [0, 10] with stepsizes \(h=1/2^{k}\) for \(k=2,\ldots ,6\); Figs. 5 and 6 display the global errors against the stepsizes and the CPU time (seconds) for IMMVERK12, IMSVERK12, IMERK12, IMMVERK24, IMSVERK24 and IMERK24.

Fig. 3 Results for Problem 1. (a) The \(\log \)–\(\log \) plots of global errors (GE) against h. (b) The energy preservation for method IMSVERK1s1

Fig. 4 Results for Problem 1: the energy preservation for methods EEuler (a) and IMEEuler (b)

Fig. 5 Results for Problem 1. (a) The \(\log \)–\(\log \) plots of global errors (GE) against h. (b) The \(\log \)–\(\log \) plots of global errors against the CPU time

Fig. 6 Results for Problem 1. (a) The \(\log \)–\(\log \) plots of global errors (GE) against h. (b) The \(\log \)–\(\log \) plots of global errors against the CPU time

Problem 2

Consider the Duffing equation [4]

where \(0\le k <\omega \).

Set \(p={\dot{q}}\), \(z=(p,q)^{\intercal }\). We rewrite the Duffing equation as

It is a Hamiltonian system with the Hamiltonian

Let \(\omega =30\), \(k=0.01\). This problem is solved on the interval [0, 100] with the stepsize \(h=1/30\); Figs. 7b and 8 show the energy preservation behaviour for IMSVERK1s1, EEuler and IMEEuler. We also integrate the system over the interval [0, 10] with stepsizes \(h=1/2^k,\ k=4,\ldots ,8\) for IMSVERK1s1, EEuler, IMEEuler, IMMVERK12, IMSVERK12, IMERK12, IMMVERK24, IMSVERK24 and IMERK24; the results are shown in Figs. 7a, 9 and 10.

Fig. 7 Results for Problem 2. (a) The \(\log \)–\(\log \) plots of global errors (GE) against h. (b) The energy preservation for method IMSVERK1s1

Fig. 8 Results for Problem 2: the energy preservation for methods EEuler (a) and IMEEuler (b)

Fig. 9 Results for Problem 2. (a) The \(\log \)–\(\log \) plots of global errors (GE) against h. (b) The \(\log \)–\(\log \) plots of global errors against the CPU time

Fig. 10 Results for Problem 2. (a) The \(\log \)–\(\log \) plots of global errors (GE) against h. (b) The \(\log \)–\(\log \) plots of global errors against the CPU time

Problem 3

Consider the sine-Gordon equation with periodic boundary conditions [4]

Discretising the spatial derivative \(\partial _{xx}\) by second-order symmetric differences leads to the following Hamiltonian system

whose Hamiltonian is shown by

Here, \(U(t)=(u_1(t),\ldots ,u_N(t))^T\) with \(u_i(t)\approx u(x_i,t)\) for \(i=1,\ldots ,N\), with \(\Delta x=2/N\) and \(x_i=-1+i\Delta x\), \(F(t,U)=-\sin (u)=-(\sin (u_1),\ldots ,\sin (u_N))^T\), and

In this test, we choose the initial value conditions

with \(N=48\), and solve the problem on the interval [0, 1] with stepsizes \(h=1/2^k,\ k=4,\ldots ,8\). The global errors GE against the stepsizes and the CPU time (seconds) for IMSVERK1s1, EEuler, IMEEuler, IMMVERK12, IMSVERK12, IMERK12, IMMVERK24, IMSVERK24 and IMERK24 are presented in Figs. 11a, 12 and 13, respectively. Then we integrate this problem on the interval [0, 100] with stepsize \(h=1/40\); the energy preservation behaviour for IMSVERK1s1, EEuler and IMEEuler is shown in Figs. 11b and 14.
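The spatial discretisation used for Problem 3 (second-order symmetric differences on a periodic grid with \(\Delta x=2/N\) and \(x_i=-1+i\Delta x\)) can be sketched as follows. The code applies the standard periodic three-point stencil, which we assume corresponds to the differentiation matrix of the semi-discrete system, and checks it on the hypothetical test function \(\sin (\pi x)\), whose second derivative is \(-\pi ^2\sin (\pi x)\).

```python
import math


def periodic_second_difference(u, dx):
    """Apply the stencil (u[i+1] - 2*u[i] + u[i-1]) / dx^2 with periodic wrap-around."""
    n = len(u)
    return [(u[(i + 1) % n] - 2.0 * u[i] + u[i - 1]) / dx ** 2 for i in range(n)]


N = 48
dx = 2.0 / N
x = [-1.0 + (i + 1) * dx for i in range(N)]   # x_i = -1 + i*dx for i = 1, ..., N
u = [math.sin(math.pi * xi) for xi in x]      # 2-periodic test function (assumption)

d2u = periodic_second_difference(u, dx)
max_err = max(abs(d + math.pi ** 2 * ui) for d, ui in zip(d2u, u))
print(max_err)
```

The error of this stencil decreases like \(\Delta x^2\), so doubling N should reduce `max_err` by about a factor of four.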

Fig. 11 Results for Problem 3. (a) The \(\log \)–\(\log \) plots of global errors (GE) against h. (b) The energy preservation for method IMSVERK1s1

Fig. 12 Results for Problem 3. (a) The \(\log \)–\(\log \) plots of global errors (GE) against h. (b) The \(\log \)–\(\log \) plots of global errors against the CPU time

Fig. 13 Results for Problem 3. (a) The \(\log \)–\(\log \) plots of global errors (GE) against h. (b) The \(\log \)–\(\log \) plots of global errors against the CPU time

Fig. 14 Results for Problem 3: the energy preservation for methods EEuler (a) and IMEEuler (b)

6 Conclusion

Exponential Runge–Kutta methods have a distinct advantage for solving highly oscillatory problems; however, the implementation of ERK methods generally requires the evaluation of products of matrix functions with vectors. To reduce the computational cost, two new classes of explicit ERK integrators were formulated in [31, 32], and we studied the corresponding implicit ERK methods in this paper. First, we analyzed symplectic conditions and verified the existence of a symplectic method; however, this symplectic method has only order one. Then we designed some practical and effective numerical methods (up to order four). Furthermore, we plotted linear stability regions for the implicit ERK methods. Numerical results demonstrated the energy preservation behaviour of IMSVERK1s1 and the comparable accuracy and efficiency of IMSVERK1s1, IMMVERK12, IMMVERK24, IMSVERK12 and IMSVERK24 when applied to the Hénon–Heiles model, the Duffing equation and the sine-Gordon equation.

Exponential integrators show better performance than non-exponential integrators for highly oscillatory problems. High accuracy and structure preservation for exponential integrators deserve further investigation.

Data availability

No datasets were generated or analysed during the current study.

References

K. Feng, On difference schemes and symplectic geometry. In: Proceedings of the 1984 Beijing Symposium on Differential Geometry and Differential Equations, pp. 42–58. Science Press, Beijing (1985)

K. Feng, Difference schemes for Hamiltonian formalism and symplectic geometry. J. Comput. Math. 4(3), 279–289 (1986)

J.M. Sanz-Serna, Runge–Kutta schemes for Hamiltonian systems. BIT 28(4), 877–883 (1988). https://doi.org/10.1007/BF01954907

L. Mei, X. Wu, Symplectic exponential Runge–Kutta methods for solving nonlinear Hamiltonian systems. J. Comput. Phys. 338, 567–584 (2017). https://doi.org/10.1016/j.jcp.2017.03.018

M. Hochbruck, A. Ostermann, Exponential integrators. Acta Numer. 19, 209–286 (2010). https://doi.org/10.1017/S0962492910000048

B. Wang, X. Wu, F. Meng, Y. Fang, Exponential Fourier collocation methods for solving first-order differential equations. J. Comput. Math. 35(6), 711–736 (2017). https://doi.org/10.4208/jcm.1611-m2016-0596

L. Brugnano, C. Zhang, D. Li, A class of energy-conserving Hamiltonian boundary value methods for nonlinear Schrödinger equation with wave operator. Commun. Nonlinear Sci. Numer. Simul. 60, 33–49 (2018). https://doi.org/10.1016/j.cnsns.2017.12.018

E. Celledoni, D. Cohen, B. Owren, Symmetric exponential integrators with an application to the cubic Schrödinger equation. Found. Comput. Math. 8(3), 303–317 (2008). https://doi.org/10.1007/s10208-007-9016-7

J.-B. Chen, M.-Z. Qin, Multi-symplectic Fourier pseudospectral method for the nonlinear Schrödinger equation. Electron. Trans. Numer. Anal. 12, 193–204 (2001)

M. Wang, D. Li, C. Zhang, Y. Tang, Long time behavior of solutions of gKdV equations. J. Math. Anal. Appl. 390(1), 136–150 (2012). https://doi.org/10.1016/j.jmaa.2012.01.031

M. Hochbruck, A. Ostermann, Exponential Runge–Kutta methods for parabolic problems. Appl. Numer. Math. 53(2–4), 323–339 (2005). https://doi.org/10.1016/j.apnum.2004.08.005

M. Hochbruck, C. Lubich, H. Selhofer, Exponential integrators for large systems of differential equations. SIAM J. Sci. Comput. 19(5), 1552–1574 (1998). https://doi.org/10.1137/S1064827595295337

M. Hochbruck, A. Ostermann, Explicit exponential Runge-Kutta methods for semilinear parabolic problems. SIAM J. Numer. Anal. 43(3), 1069–1090 (2005). https://doi.org/10.1137/040611434

Hv. Berland, B. Owren, Br. Skaflestad, \(B\)-series and order conditions for exponential integrators. SIAM J. Numer. Anal. 43(4), 1715–1727 (2005). https://doi.org/10.1137/040612683

Y. Fang, X. Hu, J. Li, Explicit pseudo two-step exponential Runge-Kutta methods for the numerical integration of first-order differential equations. Numer. Algorithms 86(3), 1143–1163 (2021). https://doi.org/10.1007/s11075-020-00927-4

M. Hochbruck, C. Lubich, On Krylov subspace approximations to the matrix exponential operator. SIAM J. Numer. Anal. 34(5), 1911–1925 (1997). https://doi.org/10.1137/S0036142995280572

S. Krogstad, Generalized integrating factor methods for stiff PDEs. J. Comput. Phys. 203(1), 72–88 (2005). https://doi.org/10.1016/j.jcp.2004.08.006

J.D. Lawson, Generalized Runge–Kutta processes for stable systems with large Lipschitz constants. SIAM J. Numer. Anal. 4, 372–380 (1967). https://doi.org/10.1137/0704033

J.D. Lambert, S.T. Sigurdsson, Multistep methods with variable matrix coefficients. SIAM J. Numer. Anal. 9, 715–733 (1972). https://doi.org/10.1137/0709060

L. Mei, L. Huang, X. Wu, Energy-preserving continuous-stage exponential Runge–Kutta integrators for efficiently solving Hamiltonian systems. SIAM J. Sci. Comput. 44(3), 1092–1115 (2022). https://doi.org/10.1137/21M1412475

Y.-W. Li, X. Wu, Exponential integrators preserving first integrals or Lyapunov functions for conservative or dissipative systems. SIAM J. Sci. Comput. 38(3), 1876–1895 (2016). https://doi.org/10.1137/15M1023257

B. Wang, X. Wu, Exponential collocation methods for conservative or dissipative systems. J. Comput. Appl. Math. 360, 99–116 (2019). https://doi.org/10.1016/j.cam.2019.04.015

Y. Fang, Q. Ming, Embedded pair of extended Runge-Kutta-Nyström type methods for perturbed oscillators. Appl. Math. Model. 34(9), 2665–2675 (2010). https://doi.org/10.1016/j.apm.2009.12.004

Y. Fang, Q. Ming, X. Wu, Extended RKN-type methods with minimal dispersion error for perturbed oscillators. Comput. Phys. Comm. 181(3), 639–650 (2010). https://doi.org/10.1016/j.cpc.2009.11.013

B. Wang, X. Wu, Explicit multi-frequency symmetric extended RKN integrators for solving multi-frequency and multidimensional oscillatory reversible systems. Calcolo 52(2), 207–231 (2015). https://doi.org/10.1007/s10092-014-0114-z

X. Wu, X. You, W. Shi, B. Wang, ERKN integrators for systems of oscillatory second-order differential equations. Comput. Phys. Commun. 181(11), 1873–1887 (2010). https://doi.org/10.1016/j.cpc.2010.07.046

X. Wu, X. You, B. Wang, Structure-preserving Algorithms for Oscillatory Differential Equations (Springer, Beijing, 2013), p.236. https://doi.org/10.1007/978-3-642-35338-3

X. Wu, K. Liu, W. Shi, Structure-preserving Algorithms for Oscillatory Differential Equations. II (Springer, Beijing, 2015), p.298. https://doi.org/10.1007/978-3-662-48156-1

X. Wu, B. Wang, Recent Developments in Structure-Preserving Algorithms for Oscillatory Differential Equations (Springer, Beijing, 2018), p.345. https://doi.org/10.1007/978-981-10-9004-2

X. Wu, B. Wang, Geometric Integrators for Differential Equations with Highly Oscillatory Solutions (Springer, Beijing, 2021), p.499. https://doi.org/10.1007/978-981-16-0147-7

B. Wang, X. Hu, X. Wu, Two new classes of exponential Runge–Kutta integrators for efficiently solving stiff systems or highly oscillatory problems. Int. J. Comput. Math. (2022). https://doi.org/10.1080/00207160.2023.2294432

X. Hu, Y. Fang, B. Wang, Two new families of fourth-order explicit exponential Runge–Kutta methods with four stages for stiff or highly oscillatory systems. arXiv Preprint. arXiv:2210.12407 (2022)

P.C. Hammer, J.W. Hollingsworth, Trapezoidal methods of approximating solutions of differential equations. Math. Tables Aids Comput. 9, 92–96 (1955)

E. Hairer, C. Lubich, G. Wanner, Geometric Numerical Integration, 2nd edn. Springer Series in Computational Mathematics, vol. 31 (Springer, Berlin, 2006), p. 644

C.E. Abhulimen, Exponentially fitted third derivative three-step methods for numerical integration of stiff initial value problems. Appl. Math. Comput. 243, 446–453 (2014). https://doi.org/10.1016/j.amc.2014.05.096

J.R. Cash, Second derivative extended backward differentiation formulas for the numerical integration of stiff systems. SIAM J. Numer. Anal. 18(1), 21–36 (1981). https://doi.org/10.1137/0718003

W.H. Enright, Second derivative multistep methods for stiff ordinary differential equations. SIAM J. Numer. Anal. 11, 321–331 (1974). https://doi.org/10.1137/0711029

T. Buvoli, M.L. Minion, On the stability of exponential integrators for non-diffusive equations. J. Comput. Appl. Math. 409, 114126–17 (2022). https://doi.org/10.1016/j.cam.2022.114126

H. Berland, B. Skaflestad, W.M. Wright, Expint—a Matlab package for exponential integrators. ACM Trans. Math. Softw. (2007). https://doi.org/10.1145/1206040.1206044

Funding

This research is partially supported by the National Natural Science Foundation of China (No. 12071419).

Contributions

X. Hu wrote the main manuscript text. W. Wang, B. Wang and Y. Fang made a revision to the manuscript. All authors reviewed the manuscript.

Ethics declarations

Conflict of interest

The authors declare there is no conflict of interest regarding the publication of this paper.

Ethical approval

Not applicable.

About this article

Cite this article

Hu, X., Wang, W., Wang, B. et al. Cost-reduction implicit exponential Runge–Kutta methods for highly oscillatory systems. J Math Chem 62, 2191–2221 (2024). https://doi.org/10.1007/s10910-024-01646-0

DOI: https://doi.org/10.1007/s10910-024-01646-0