Abstract

This paper is devoted to the explicit pseudo two-step exponential Runge–Kutta (EPTSERK) methods for the numerical integration of first-order ordinary differential equations. These methods inherit the structure of explicit pseudo two-step Runge–Kutta methods and explicit exponential Runge–Kutta methods. We analyze the order conditions and the global errors of the new methods. The new methods are of order s + 1 with s-stages for some suitable nodes. Numerical experiments are reported to show the convergence and the efficiency of the new methods.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In this paper, we are concerned with the numerical integration of the system of the form

where \(g: R^{m}\rightarrow R^{m}\) is an analytic function and A ∈ Rm×m is a symmetric positive definite or skew-Hermitian with eigenvalues of large modulus. Accordingly, in this case, e−tA is exactly the matrix exponential function and ∥e−tA∥≤ C,t ≥ 0. Such problems frequently arise in different fields of applied science such as fluid mechanics, quantum mechanics, and spatial semi-discretization of semilinear parabolic problems. These problems can be solved with the adapted methods by considering the special structure of the problems (see, e.g., [7, 23, 27]). The idea of exponential integrators is not a new one and has been discussed by many authors [14, 20, 24, 25]. However, it has been widely perceived that exponential Runge–Kutta (ERK) methods possess more advantages over the classical Runge–Kutta methods for the numerical integration of (1.1).

Generally, the problem (1.1) may have the following equivalent form

with f(y) = −Ay + g(y) and many well-known numerical methods have been presented for (1.2) in the scientific literatures. Compared with multistep methods whose implementation requires a series of starting values, Runge–Kutta (RK) methods are favorable because the initial values are available for them to run [17,18,19]. Runge–Kutta methods were initially proposed for (1.2) by Runge [21] and Kutta [15]. Since then, many Runge–Kutta methods with special structure, such as implicit methods with strong stability, exponential methods for stiff problems, trigonometrically fitted methods for oscillatory problems, and smyplectic/energy-preserving methods for Hamiltonian systems, were investigated [8, 9].

With the development of parallel computers, several kinds of parallel RK-type methods were designed and studied in [3,4,5,6]. In fact, the parallel methods consume less time than the sequential methods with the same order accuracy. Cong et al. presented a general class of explicit pseudo two-step Runge–Kutta (EPTSRK) methods as well as explicit pseudo two-step Runge–Kutta–Nyström (EPTSRKN) methods for the numerical integration of first-order differential equations and second-order differential equations, respectively. In [16], Li et al. constructed extended explicit pseudo two-step RKN methods for second-order oscillatory system and derived the error bounds of the new methods.

In this paper, we focus on the construction of explicit pseudo two-step exponential RK methods (EPTSERK) adapted to the special structure of (1.1). This paper is organized as follows: Section 2 summarizes some basic results for pseudo two-step Runge–Kutta methods and the explicit pseudo two-step exponential Runge–Kutta methods. The local truncation error and order conditions are analyzed in Section 3. In Section 4, we present the global error analysis of the new method. Several practical numerical methods are constructed in Section 5. In Section 6, the numerical experiments are reported to show the efficiency of the new methods. The concluding remarks are included in the last section.

2 Explicit pseudo two-step exponential Runge–Kutta methods

As is known, the solution of the semilinear problem (1.1) satisfies the variation-of-constants formula

which motivates the following numerical method

with z = −hA. We call this an exponential quadrature rule with weights bi(z) and nodes ci [14, 20]. The order conditions of order p are given by

where φj(z) is determined by

which have the following property:

We approximate the integral (2.1) with some quadrature formulas and obtain the following explicit pseudo two-step exponential Runge–Kutta methods.

Definition 1

An s-stage explicit pseudo two-step exponential Runge–Kutta method for the numerical integration (1.1) is defined as

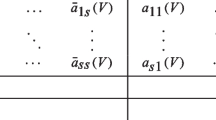

where aij(z),bi(z) with i,j = 1,…n are matrix-valued function of z = −hA and \(Y_{i}^{[n]}\approx y(t_{n}+c_{i}h)\) for i = 1,⋯ ,s. The method (2.2) can be displayed by the following Butcher Tableau

It follows from g(y) = f(y) − Ay that the classical explicit pseudo two-step Runge–Kutta methods considered in [3] are recovered, once \(A\rightarrow 0\). It should be noted that EPTSERK is a new kind of ERK method which only needs to compute s function evaluations for each step. These s function evaluations can be computed in parallel on s processors. Therefore, the s-stage EPTSERK only requires one sequential function evaluation per step evaluated on an s-processor computer.

3 Order conditions of the EPTSERK methods

Definition 2

Suppose that the n −th step numerical solution of (2.2) satisfies yn = y(tn) and \(Y_{i}^{[n-1]}=y(t_{n-1}+c_{i}h)+\mathcal {O}(h^{p_{1}+1})\), then the methods are said to be of step order p = p2 and stage order \(q=\min \limits \{p_{1},p_{2}\}\), if

The main task in this section is to derive the order conditions of the method (2.2), combining the exact solution with (2.2) yields

in which \(\hat g(t)=g(y(t))\) and the expression of Δni can be given by

Substituting \(\hat g(t_{n}+c_{i}h\tau )\) and \(\hat g(t_{n}+(c_{i}-1)h)\) with their Taylor series gives

Likewise, we have

It can be observed that for arbitrary numbers p1 and p2, the error \({\Delta }_{ni}=\mathcal {O}(h^{p_{1}+1})\) and \(\delta _{n+1}=\mathcal {O}(h^{p_{2}+1})\) if

We will discuss the relation between (3.4) and the order of the EPTSERK method (2.2) in the next theorem.

Theorem 1

Suppose that the function g(y) is Lipschitz continuous on y and the coefficients of EPTSERK (2.2)) satisfies (3.4), then the method EPTSERK (2.2) is of step order \(p=\min \limits \{p_{1}+1,p_{2}\}\) and stage order \(q=\min \limits \{p_{1},p_{2}\}\).

Proof

Given the numerical solution of methods EPTSERK (2.2) satisfying yn = y(tn) and \(Y_{i}^{[n-1]}=y(t_{n-1}+c_{i}h)+\mathcal {O}(h^{p_{1}+1})\). We derive the following error for order estimation

Using a similar way, we have

In light of (3.5), (3.6), and Definition 2, we prove the theorem. □

4 Global error analysis

In this section, we will conduct the global error analysis of the new methods which satisfy the conditions (3.4). In what follows, we will use the Euclidean norm and its induced matrix norm which will be denoted by ∥⋅∥. For the error analysis, the Gronwall inequality (see, e.g., [13]) is useful.

Lemma 1

Let α,ϕ,φ, and χ be nonnegative functions defined for t = nΔt,n = 0,1,…,N and assume that χ is nondecreasing. If

and if there is a positive constant \(\hat c\) such that \({\Delta }_{t}\sum \limits _{n=1}^{k-1}\alpha _{n}\leq \hat c,\) then

where the subscript indices k and n denote the values of function at tk = kΔt and tn = nΔt, respectively.

To begin with the global error, we introduce the notations

which have following error recursions

and

Theorem 2

Let \(\|E_{n}\|=\max \limits _{1\leq i\leq s}\|E_{ni}\|\) and \(\|{\Delta }_{n}\|=\max \limits _{1\leq i\leq s}\|{\Delta }_{ni}\|\), and suppose that the function g(y) is Lipschitz continuous on y and A is a symmetric positive definite or skew-Hermitian in the initial value problems (1.1). Furthermore, we assume that \(\|g^{\prime }(y)\|\) is uniformly bounded, and then for the EPTSERK method (2.2), we have

where C1 depends on \(g^{\prime }(y)\) but is independent of ∥A∥.

Proof Applying the mean value theorem yields

where Cf depends on \(g^{\prime }(y)\) but is independent of ∥A∥. It follows from (4.1) that

Therefor, we obtain

Theorem 3

Under the assumption of Theorem 2, we consider the s-stages EPTSERK method (2.2) with the coefficients given by (3.4) and the stepsize is sufficient small. If

with \(p=\min \limits \{p_{1}, p_{2}\}\), then we have

where the constant C depends on \(s, t_{end}, \|g^{\prime }(y)\|\) but is independent of ∥A∥ and n.

Proof

It follows from (4.2) that

in which C2 depends on \(\|g^{\prime }(y)\|\) but is independent of ∥A∥. For the sufficiently small h > 0, we have

Substituting (4.5) into (4.4) yields

According to the computation of (4.4), (4.5), and (4.6), it is clear that C3 and C4 depend on s, \(\|g^{\prime }(y)\|\), and tend but are independent of n and ∥A∥. In Lemma (1), we set

We then have

Applying the Gronwall Lemma to (4.6) obtains

where C depends on \(\|g^{\prime }(y)\|\) but is independent of ∥A∥. □

5 Construction of the methods

Next, we will construct some EPTSERK methods (2.2) based on the condition (3.4). Suppose that the nodes ci for i = 1,…,s are distinct and p1 = p2 = s in (3.4), we have

The coefficients aij(z) and bi(z) can be derived from (5.1) with the errors

Therefore, the method is of step order and stage order s.

In order to express the parameters vector b(z) and matrix A(z) simplified in terms of the collocation vector c, we give the following notes

with e = (1,⋯ ,1)T. Then, the order conditions (5.1) have the following equivalent form

where I is the identity matrix with the same order as z. Since the nodes ci, i = 1…,s are distinct, the matrices Q1 and Q2 are nonsingular. It follows from (5.2) that

Although (5.3) provides a way to determine the coefficients of method (2.2), it is very complicated to solve the equation (5.3) for large s and d. The following theorem provides an alternative way to obtain the coefficients of the EPTSERK method (2.2) which satisfy the order conditions (5.1).

Theorem 4

The following coefficients

with the Lagrange interpolation function \(l_{i}(\tau )=\prod \limits _{j=1,j\neq i}^{s}\frac {\tau -c_{j}}{c_{i}-c_{j}}\), satisfy order conditions (5.1).

Proof

Since for distinct nodes ci, the coefficients can be obtained uniquely from (5.1), we only need to verify the correction of (5.1) for the coefficients determined in (5.4). For m = 0,…,s − 1, we obtain

Let τ = 1 + uci, and we have

This implies that the (5.1) holds for aij(z). Similarly, we can prove that bi(z) satisfies (5.1). It should be noted that the condition (5.1) defines a method of order at least s for distinct ci. It is possible to obtain order p beyond s by some orthogonality assumptions. Thus, we give the following theorem. □

Theorem 5

For any distinct collocation points ci, the EPTSERK method (2.2) with s −stage is of order p = s and stage order q = s. It has order p = s + 1 provided the following conditions

are satisfied.

Proof

The first conclusion follows from Theorem 3.2. We next show the second one. Using (3.1), we have

Inserting the Taylor series of \(\hat g(t_{n}+h\tau )\) and \(\hat g(t_{n}+c_{i}h)\) at tn into (5.5) yields

For s ≤ m ≤ s + l − 1, we can write

where rm−s is a polynomial of degree m − s. Substituting (5.7) into (5.6) yields

where n satisfies l − 1 ≤ n + m − s ≤ l. Hence, we obtain

where s ≤ m ≤ s + l − 1. Combining (5.7) and (5.9), we have

□

It can be concluded from Theorem 3.2 that the step order \(p=\min \limits \{s+1,s+l\}=s+1\).

On the basis of the Theorem 5, we can construct EPTSERK methods with arbitrarily high algebraic order. In this paper, we will construct EPTSERK methods and compare the new methods with other exponential Runge–Kutta methods for first-order differential equations. Therefore, we consider EPTSERK methods expressed by the tableau (2) with the coefficients aij(z),bi(z) given by Theorem 4. We take an example of a family of 2-stage EPTSERK methods with the collocation vectors c :

with orders 2 and 3 respectively. The resulting methods are denoted as EPTSERK2 and EPTSERK3. For more precise requirements, we present a 3-stage EPTSERK method of order four with the collocation vectors c :

and we denote this method as EPTSERK4.

6 Numerical experiments

In this section, we will show the numerical performance of the new methods compared with some existing codes proposed in the scientific literature. The criterion used in the numerical comparisons is the decimal logarithm of the maximum global error (LOG10 (ERROR)) versus the computational effort measured by the number of function evaluations (NUMBER OF FUNCTION EVALUATIONS) required by each method. The methods for comparison are denoted as:

-

EERK2: the explicit exponential Runge–Kutta method of order two given in [25].

-

EERK3: the explicit exponential Runge–Kutta method of order three given in [25].

-

EPTSRK2: the explicit pseudo two-step Runge–Kutta methods of order two derived in [3].

-

EPTSRK3: the explicit pseudo two-step Runge–Kutta methods of order three derived in [3].

-

EPTSERK2: the explicit pseudo two-step exponential Runge–Kutta methods of order two presented in this paper.

-

EPTSERK3: the explicit pseudo two-step exponential Runge–Kutta methods of order three presented in this paper.

-

EPTSERK4: the explicit pseudo two-step exponential Runge–Kutta methods of order four presented in this paper.

Problem 1

We study the Hénon-Heiles problem

which can be used to depicting stellar motion [2, 12]. We select the initial values

The problem is solved on the interval [0,50] and the numerical results are given in Fig. 1 with the stepsize h = 1/2i for EERK3, h = 1/(3 ⋅ 2i− 1) for EERK2, and h = 1/(3 ⋅ 2i) for EPTSRK2, EPTSRK2, EPTSERK2, EPTSERK3, and EPTSERK4, where i = 1,…,5.

Problem 2

We consider the Fermi-Pasta-Ulam problem in [12, 22]. The Hamiltonian function is given by

which leads to a nonlinear oscillatory system

where

and

In this problem, we choose

and set the remaining initial values to zeros. The problem is solved on the interval [0,10] and the numerical results are plotted in Fig. 2 with the stepsize h = 1/2i+ 5 for EERK3, h = 1/(3 ⋅ 2i+ 4) for EERK2, and h = 1/(3 ⋅ 2i+ 5) for EPTSRK2, EPTSRK2, EPTSERK2, EPTSERK3, and EPTSERK4, where i = 1,…,5.

Problem 3

We consider the Allen-Cahn equation [1, 10]

with the periodic boundary condition u(− 1,t) = u(1,t). The Allen-Cahn equation was presented by Allen and Cahn in [1] to describe the motion of anti-phase boundaries in crystalline solids. We approximate the spatial derivatives with the classical second-order central difference operator and obtain

where U(t) = (u1(t),⋯ ,uN(t))T with ui(t) ≈ u(xi,t), xi = − 1 + iΔx, i = 1,…,N and Δx = 2/N. The matrix M has the form

and \(F(t,U)=u-u^{3}=(u_{1}-{u_{1}^{3}},\cdots ,u_{N}-{u_{N}^{3}})^{T}.\) We select the parameters 𝜖 = 0.01,N = 64 and solve this problem on interval [0,20]. The numerical results are stated in Fig. 3 with the stepsize h = 1/2i+ 4 for EERK3, h = 1/(3 ⋅ 2i+ 3) for EERK2, and h = 1/(3 ⋅ 2i+ 4) for EPTSRK2, EPTSRK2, EPTSERK2, EPTSERK3, and EPTSERK4, where i = 1,…,4.

Problem 4

We study the sine-Gorden equation with periodic boundary conditions [11, 26]

By using the semi-discretization on the spatial variable with second-order symmetric differences, we have the following first-order differential system

where U(t) = (u1(t),⋯ ,uN(t))T with ui(t) ≈ u(xi,t) for i = 1,…,N,

with Δx = 2/N, xi = − 1 + iΔx and \(F(t,U)=-\sin \limits (u)=-(\sin \limits (u_{1}),\cdots ,\sin \limits (u_{N}))^{T}.\) The initial value conditions for this test are

with N = 64, and the problem is solved on the interval [0,10]. The numerical results are stated in Fig. 4 with the stepsize h = 1/(10 ⋅ (4 ∗ i)) for EERK3, h = 1/(10 ⋅ 3 ⋅ (2 ∗ i)) for EERK2, and h = 1/(3 ⋅ 10 ⋅ (2 ∗ i)) for EPTSRK2, EPTSRK3, EPTSERK2, EPTSERK3, and EPTSERK4, where i = 1,…,4.

From Figs. 1, 2, 3, and 4, we can conclude the explicit pseudo two-step exponential Runge–Kutta methods show more advantages over the explicit pseudo two-step Runge–Kutta methods as well as the explicit exponential Runge–Kutta methods in the literature.

In order to show the convergence order of the constructed methods, in Figs. 5, 6, 7, and 8, we plot the global errors against the stepsize for methods EPTSERK2, EPTSERK3, and EPTSERK4 when solving problems (1–4). The slopes of dotted lines indicate theoretical order two (red), order three (blue), and order four (green), respectively. From Figs. 5, 6, 7, and 8, we find that the numerical solution of EPTSERK2 method has second order of convergence as expected. Surprisingly, the numerical solutions of EPTSERK3 and EPTSERK4 methods exceed their theoretical order three and order four, respectively. The analysis of the convergence of explicit pseudo two-step exponential Runge–Kutta methods is an interesting and challenging topic for future work.

7 Conclusion

In this paper, we investigate and study the construction of explicit pseudo two-step exponential Runge–Kutta methods for the first-order differential equations. The global error analysis is carried out and the error bounds are given. The order conditions are discussed and the two practical numerical methods of order two and three with two-stage are obtained. Numerical experiments are accompanied to verify the efficiency and convergence of the new methods. From numerical results, we can see that, for first-order differential equations of form (1.1), our new methods work better than the classical exponential Runge–Kutta methods as well as the explicit pseudo two-step Runge–Kutta methods in the literature. The constructions and discussions of the higher order methods and the variable stepsize methods of this type are in process.

Data availability

All data, models, or code generated or used during the study are available from the corresponding author by request.

References

Allen, S.M., Cahn, J.W.: A microscopic theory for antiphase boundary motion and its application to antiphase domain coarsening. Acta Metall. 27, 1085–1095 (1979)

Brugnano, L., Iavernaro, F., Trigiante, D.: Energy and quadratic invariants preserving integrators based upon Gauss collocation formulae. SIAM J. Numer. Anal. 50, 2897–2916 (2012)

Cong, N.H.: Explicit pseudo two-step Runge-Kutta methods for parallel computers. Int. J. Comput. Math. 73, 77–91 (1999)

Cong, N.H.: Explicit pseudo two-step RKN methods with stepsize control. Appl. Numer. Math. 38, 135–144 (2001)

Cong, N.H., Strehmel, K., Weiner, R.: Runge-kutta-nyström-type parallel block predictor-corrector methods. Adv. Comput Math. 10, 115–133 (1999)

Cong, N.H., Strehmel, K., Weiner, R.: A general class of explicit pseudo two-step RKN methods on parallel computers. Comput. Math. Appl. 38, 17–39 (1999)

Cox, S., Matthews, P.: Exponential time differencing for stiff systems. J. Comput. Phys. 176, 430–455 (2002)

Fang, Y.L., Liu, C.Y., Wang, B.: Efficient energy-preserving methods for general nonlinear oscillatory Hamiltonian systems. Act. Math. Sin. 34, 1863–1878 (2018)

Fang, Y.L., Yang, Y.P., You, X.: A new family of A stable Runge Kutta methods with equation dependent coefficients for stiff problems. Numer. Algo. 81, 1235–1251 (2019)

Feng, X.L., Song, H.L., Tang, T., Yang, J.: Nonlinear stability of the implicit-explicit methods for the Allen-Cahn equation. Inve. Prob. Imag. 7, 679–695 (2013)

Franco, J.M.: New methods for oscillatory systems based on ARKN methods. Appl. Numer. Math. 56, 1040–1053 (2006)

Hairer E., Lubich C., Wanner G.: Geometric Numerical Integration: Structure-Preserving Algorithms for Ordinary Differential Equations, 2nd edn. Springer, Berlin (2006)

Hayes, L.J.: Gelerkin alternating direction methods for nonrectangular regions using patch approximations. SIAM J. Numer. Anal. 18, 727–643 (1987)

Hochbruck, M., Lubich, C., Selhofer, H.: Exponential integrators for large systems of differential equations. SIAM J. Sci. Comput. 19, 1552–1574 (1998)

Kutta, W.: Beritrag zur näherungsweisen integration totaler differentialgleichungen. Zeitschr. für Math. u. Phys. 46, 435–453 (1901)

Li, J.Y., Deng, S., Wang, X.F.: Extended explicit pseudo two-step RKN methods for oscillatory systems \(y^{\prime \prime }+my=f(y)\). Numer. Algo. 78, 673–700 (2018)

Liu, C., Iserles, A., Wu, X.: Symmetric and arbitrarily high-order Birkhoff-Hermite time integrators and their long-time behaviour for solving nonlinear Klein-Gordon equations. J. Comput. Phys. 356, 1–30 (2018)

Liu, C., Wu, X.: Nonlinear stability and convergence of ERKN integrators for solving nonlinear multi-frequency highly oscillatory second-order ODEs with applications to semi-linear wave equations. Appl. Numer. Math. 153, 352–380 (2020)

Liu, C., Wu, X., Shi, W.: New energy-preserving algorithms for nonlinear Hamiltonian wave equation equipped with Neumann boundary conditions. Appl. Math. Comput. 339, 588–606 (2018)

Mei, L., Wu, X.: Symplectic exponential Runge-Kutta methods for solving nonlinear Hamiltonian systems. J. Comput. Phys. 338, 567–584 (2017)

Runge, C.: Ueber die numerische auflösung von differentialgleichungen. Math. Ann. 46, 167–178 (1895)

Wang, B., Wu, X.: A new high precision energy-preserving integrator for system of oscillatory second-order differential equations. Phys. Lett. A. 376, 1185–1190 (2012)

Wang, B., Wu, X., Meng, F., Fang, Y.: Exponential Fourier collocation methods for solving first-order differential equations. J. Comput. Math. 35, 711–736 (2017)

Weiner, R., El-Azab, T.: Exponential peer methods. Appl. Numer. Math. 62, 1335–1348 (2012)

Hochbruch, M., Ostermann, A.: Explicit exponential Runge-Kutta methods for semilinear parabolic problems. SIAM J. Numer. Anal. 43, 1069–1090 (2005)

Wu, X.Y., Wang, B.: Multidimensional adapted Runge-Kutta-nyström methods for oscillatory systems. Comput. Phys. Commun. 181, 1955–1962 (2010)

Wu, Y.J., Wang, B.: Symmetric and symplectic exponential integrators for nonlinear Hamiltonian systems. Appl. Math. Lett. 90, 215–222 (2019)

Acknowledgments

The authors are very grateful to the editor and anonymous referees for their invaluable comments and suggestions which helped to improve the manuscript.

Funding

This research is partially supported by the National Natural Science Foundation of China (No. 11571302), the Natural Science Foundation of Shandong Province, China (No. ZR2018MA024), the project of Shandong Province higher Educational Science and Technology Program (Nos. J18KA247, J17KA190), and the foundation of innovative science and technology for youth in universities of Shandong Province (2019KJI001).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that there is no conflict of interest.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Fang, Y., Hu, X. & Li, J. Explicit pseudo two-step exponential Runge–Kutta methods for the numerical integration of first-order differential equations. Numer Algor 86, 1143–1163 (2021). https://doi.org/10.1007/s11075-020-00927-4

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11075-020-00927-4