Abstract

The aim of the present analysis is to implement a relatively recent computational algorithm, the reproducing kernel Hilbert space method, for obtaining the solutions of systems of first-order, two-point boundary value problems for ordinary differential equations. The reproducing kernel Hilbert space is constructed so that the initial–final conditions of the systems are satisfied, and three smooth kernel functions are used throughout the evolution of the algorithm in order to obtain the required grid points. An efficient construction is given to obtain the numerical solutions for the systems, together with an existence proof of the exact solutions based upon the reproducing kernel theory. Computational results of some numerical examples are presented to illustrate the viability, simplicity, and applicability of the algorithm developed. Finally, the results show that the present algorithm and simulated annealing provide a good scheduling methodology for such systems compared with other numerical methods.

1 Introduction

Systems of first-order ordinary differential equations (ODEs) subject to given separated two-point boundary conditions (BCs) are an important branch of applied mathematics. They arise directly from mathematical models, or indirectly from converting partial differential equations and optimal control problems into ODEs [1,2,3,4,5]. Henceforth, unless stated otherwise, we use the abbreviation “BVPs” for such systems. BVPs model a range of physical phenomena in many areas of engineering and science, from simple beam bending problems in mechanics to the chemical engineering areas of absorption phenomena, chemical reactions, radiation effects, and problems connected with heat transfer, fluid flow, dissipation of energy, and control theory. Generally, it is difficult to obtain closed-form solutions of BVPs in terms of elementary functions, especially in nonlinear and nonhomogeneous cases. In most cases, only approximate or numerical solutions can be expected; therefore, the topic has attracted much attention and has been studied by many authors. In this regard, many iterative methods have been proposed as suitable and successful numerical techniques for obtaining the solutions of numerous types of BVPs (see, for instance, [6,7,8,9,10,11,12,13,14,15] and the references therein).

The reproducing kernel algorithm is a numerical, as well as analytical, technique for solving a large variety of ordinary and partial differential equations associated with different kinds of BCs, and it usually provides the solutions in terms of rapidly convergent series with components that can be elegantly computed. In this study, a general technique based on the reproducing kernel theory is proposed for solving BVPs in the appropriate reproducing kernel Hilbert space (RKHS). The main idea is to construct the direct sum of the RKHSs that satisfy the initial–final conditions of the given systems in order to determine their exact and numerical solutions. The exact and the numerical solutions are represented in the form of series through the function values at the right-hand side of the corresponding differential equations.

In this article, BVPs are investigated systematically for the development, analysis, and implementation of an accurate algorithm which allows for the use of some form of concurrent processing technique. More precisely, we consider the following set of ODEs:

subject to the following BCs:

where \( t \in \left[ {t_{0} ,t_{f} } \right] \), \( \left\{ {u_{j} } \right\}_{j = 1}^{p} \subset W_{2}^{2} \left[ {t_{0} ,t_{f} } \right] \), \( \left\{ {u_{j} } \right\}_{j = p + 1}^{n} \subset \tilde{W}_{2}^{2} \left[ {t_{0} ,t_{f} } \right] \) are unknown functions to be determined, \( \left\{ {f_{j} \left( {t,v_{1} ,v_{2} , \ldots ,v_{n} } \right)} \right\}_{j = 1}^{p} \) and \( \left\{ {f_{j} \left( {t,v_{1} ,v_{2} , \ldots ,v_{n} } \right)} \right\}_{j = p + 1}^{n} \) are continuous terms in \( W_{2}^{1} \left[ {t_{0} ,t_{f} } \right] \) as \( \left\{ {v_{j} } \right\}_{j = 1}^{p} \subset W_{2}^{2} \left[ {t_{0} ,t_{f} } \right] \) and \( \left\{ {v_{j} } \right\}_{j = p + 1}^{n} \subset \tilde{W}_{2}^{2} \left[ {t_{0} ,t_{f} } \right] \), \( - \infty < \{v_{j}\}_{j = 1}^{n} < \infty, \) and \( W_{2}^{1} \left[ {t_{0} ,t_{f} } \right],W_{2}^{2} \left[ {t_{0} ,t_{f} } \right],\tilde{W}_{2}^{2} \left[ {t_{0} ,t_{f} } \right] \) are three RKHSs. Here, the functions \( \left\{ {p_{j} \left( t \right)} \right\}_{j = 1}^{n} \) are continuous and real-valued on \( \left[ {t_{0} ,t_{f} } \right] \) and may take the values \( p_{j} \left( {t_{\lambda } } \right) = 0 \) for some \( t_{\lambda } \in \left[ {t_{0} ,t_{f} } \right] \) and some \( j \in \left\{ {1,2, \ldots ,n} \right\} \), which makes Eqs. (1) and (2) singular at \( t = t_{\lambda } \). Throughout this paper, we assume that Eqs. (1) and (2) have a unique analytical solution on \( \left[ {t_{0} ,t_{f} } \right] \).

The theory of reproducing kernels was used for the first time at the beginning of the 20th century as a novel solver for BVPs of harmonic and biharmonic function types. This theory, which is embodied in the RKHS method, has been successfully applied to various important applications in numerical analysis, computational mathematics, image processing, machine learning, finance, and probability and statistics [16,17,18,19]. The RKHS method is a useful framework for constructing numerical solutions of great interest to the applied sciences. In recent years, based on this theory, extensive work has been proposed and discussed for the numerical solutions of several integral and differential operators side by side with their theories. The reader is referred to [20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44] for more details about the RKHS method, including its modifications and scientific applications, its characteristics and symmetric kernel functions, and others.

In fact, the RKHS method can be applied to different categories of differential equations subject to different types of BCs, such as Neumann, Robin, Dirichlet, integral, and periodic, with respect to ordinary, partial, fractional, or fuzzy derivatives. To mention a few, this method has been applied to the solutions of: parabolic partial differential equations subject to integral BCs [21], Bagley–Torvik and Painlevé equations of fractional order subject to Dirichlet BCs [23], mixed-type integrodifferential equations subject to periodic BCs [26, 27], fuzzy differential equations subject to Dirichlet BCs [29], differential algebraic systems subject to integral BCs [31], time-fractional partial differential equations subject to Neumann BCs [32], heat and fluid flow problems subject to Robin BCs [33], time-fractional partial integrodifferential equations subject to Dirichlet BCs [34, 35], diffusion-Gordon type equations of fractional order subject to Dirichlet BCs [36], time-fractional Tricomi and Keldysh equations subject to Dirichlet BCs [37], singularly perturbed differential equations subject to twin layers BCs [39, 41, 44], and anomalous subdiffusion equation of fractional order subject to integral BCs [40].

The organization of the article is as follows. In Sect. 2, two extended RKHSs needed in the analysis are constructed, and two extended reproducing kernel functions are obtained. In Sect. 3, the solutions and the essential theoretical results are presented based upon the reproducing kernel theory. In Sect. 4, an efficient iterative technique for the solutions and a convergence theorem are described. In Sect. 5, error estimations and error bounds are presented in order to capture the behaviour of the solutions. In Sect. 6, the numerical algorithm and numerical analysis are discussed to demonstrate the accuracy and the applicability of the presented method. In Sect. 7, a comparative analysis between the classical numerical method and the RKHS method is presented. Finally, in Sect. 8, some concluding remarks are listed.

2 Constructing appropriate inner product spaces

The reproducing kernel approach builds on a Hilbert space \( H \) and requires that all Dirac evaluation functionals in \( H \) are bounded and continuous. In this section, two extended RKHSs \( H\left[ {t_{0} ,t_{f} } \right] \) and \( W\left[ {t_{0} ,t_{f} } \right] \) are constructed. Then, we utilize the reproducing kernel concept to obtain two extended reproducing kernel functions \( R_{t} \left( s \right) \) and \( r_{t} \left( s \right) \) in order to formulate the solutions in the mentioned spaces.

Before the construction, it is necessary to present some notation, definitions, and preliminary facts from the reproducing kernel theory that will be used in the remainder of the article. Throughout this analysis, \( L^{2} \left[ {t_{0} ,t_{f} } \right] = \{ u|\int_{{t_{0} }}^{{t_{f} }} {u^{2} } \left( t \right)dt < \infty \} \) and \( l^{2} = \{ A|\mathop \sum \nolimits_{i = 1}^{\infty } A_{i}^{2} < \infty \} \).

Definition 1

[19] Let \( H \) be a Hilbert space of functions \( \theta :\varOmega \to {\mathbb{C}} \) on a set \( \varOmega \). A function \( R:\varOmega \times \varOmega \to {\mathbb{C}} \) is a reproducing kernel of \( H \) if the following conditions are met. Firstly, \( R\left( { \cdot ,t} \right) \in H \) for each \( t \in \varOmega \). Secondly, \( \langle\theta \left( \cdot \right),R\left( { \cdot ,t} \right)\rangle = \theta \left( t \right) \) for each \( \theta \in H \) and each \( t \in \varOmega \).

The condition \( \langle\theta \left( \cdot \right),R\left( { \cdot ,t} \right)\rangle = \theta \left( t \right) \) is called the reproducing property, which means that the value of \( \theta \) at the point \( t \) is reproduced by the inner product of \( \theta \) with \( R\left( { \cdot ,t} \right) \). A Hilbert space which possesses a reproducing kernel is called an RKHS.
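As a concrete illustration of the reproducing property, the following sketch checks it numerically on the interval [0, 1]. It assumes the inner product \( \langle u,v\rangle = u\left( 0 \right)v\left( 0 \right) + \int_{0}^{1} u^{\prime} \left( s \right)v^{\prime} \left( s \right)ds \), for which \( R\left( {s,t} \right) = 1 + \min \left( {s,t} \right) \) is a reproducing kernel. The interval, the test function, and the helper names `inner_product` and `reproduce` are illustrative choices and are not taken from the text.

```python
import numpy as np

def inner_product(u0, du, v0, dv, s):
    """Approximate <u, v> = u(0) v(0) + int_0^1 u'(s) v'(s) ds (trapezoidal rule)."""
    f = du * dv
    return u0 * v0 + np.sum(0.5 * (f[1:] + f[:-1]) * np.diff(s))

def reproduce(u, du, t, n=20001):
    """Evaluate <u, R(., t)> for the assumed kernel R(s, t) = 1 + min(s, t)."""
    s = np.linspace(0.0, 1.0, n)
    # R(0, t) = 1, and dR/ds equals 1 for s < t and 0 for s > t.
    dR = (s < t).astype(float)
    return inner_product(u(0.0), du(s), 1.0, dR, s)

# Hypothetical test function and evaluation point.
u = lambda s: np.sin(3.0 * s) + 2.0
du = lambda s: 3.0 * np.cos(3.0 * s)
t = 0.37
print(reproduce(u, du, t), u(t))  # the two values should agree closely
```

The inner product of the test function with the kernel section returns the point value of the function, up to quadrature error, which is exactly what the reproducing property states.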

Definition 2

[20] The space \( W_{2}^{1} \left[ {t_{0} ,t_{f} } \right] \) is defined as \( W_{2}^{1} \left[ {t_{0} ,t_{f} } \right] = \{ z:z \) is an absolutely continuous function on \( \left[ {t_{0} ,t_{f} } \right] \) and \( z^{\prime} \in L^{2} \left[ {t_{0} ,t_{f} } \right]\} \). The inner product and the norm in \( W_{2}^{1} \left[ {t_{0} ,t_{f} } \right] \) are defined, respectively, by

$$ \langle z_{1} \left( t \right),z_{2} \left( t \right)\rangle_{{W_{2}^{1} }} = z_{1} \left( {t_{0} } \right)z_{2} \left( {t_{0} } \right) + \int_{{t_{0} }}^{{t_{f} }} z_{1}^{\prime} \left( t \right)z_{2}^{\prime} \left( t \right)dt $$(3)

and \( \|z_{1}\|_{{W_{2}^{1} }} = \sqrt {\langle z_{1} \left( t \right),z_{1} \left( t \right)\rangle_{{W_{2}^{1} }} } \), where \( z_{1} ,z_{2} \in W_{2}^{1} \left[ {t_{0} ,t_{f} } \right] \).

Definition 3

[21] The space \( W_{2}^{2} \left[ {t_{0} ,t_{f} } \right] \) is defined as \( W_{2}^{2} \left[ {t_{0} ,t_{f} } \right] = \{ z:z,z^{\prime} \) are absolutely continuous functions on \( \left[ {t_{0} ,t_{f} } \right] \), \( z^{\prime\prime} \in L^{2} \left[ {t_{0} ,t_{f} } \right] \), and \( z\left( {t_{0} } \right) = 0\} \). The inner product and the norm in \( W_{2}^{2} \left[ {t_{0} ,t_{f} } \right] \) are defined, respectively, by

and \( \|z_1\|_{{W_{2}^{2} }} = \sqrt {\langle z_{1} \left( t \right),z_{1} \left( t \right)\rangle_{{W_{2}^{2} }} } \), where \( z_{1} ,z_{2} \in W_{2}^{2} \left[ {t_{0} ,t_{f} } \right] \).

Definition 4

The space \( \tilde{W}_{2}^{2} \left[ {t_{0} ,t_{f} } \right] \) is defined as \( \tilde{W}_{2}^{2} \left[ {t_{0} ,t_{f} } \right] = \{ z:z,z^{\prime} \) are absolutely continuous functions on \( \left[ {t_{0} ,t_{f} } \right] \), \( z^{\prime\prime} \in L^{2} \left[ {t_{0} ,t_{f} } \right] \), and \( z\left( {t_{f} } \right) = 0\} \). The inner product and the norm in \( \tilde{W}_{2}^{2} \left[ {t_{0} ,t_{f} } \right] \) are defined, respectively, by

$$ \langle z_{1} \left( t \right),z_{2} \left( t \right)\rangle_{{\tilde{W}_{2}^{2} }} = z_{1} \left( {t_{f} } \right)z_{2} \left( {t_{f} } \right) + z_{1}^{\prime} \left( {t_{f} } \right)z_{2}^{\prime} \left( {t_{f} } \right) + \int_{{t_{0} }}^{{t_{f} }} z_{1}^{\prime\prime} \left( t \right)z_{2}^{\prime\prime} \left( t \right)dt $$(5)

and \( \|z_1\|_{{\tilde{W}_{2}^{2} }} = \sqrt {\langle z_{1} \left( t \right),z_{1} \left( t \right)\rangle_{{\tilde{W}_{2}^{2} }} } \), where \( z_{1} ,z_{2} \in \tilde{W}_{2}^{2} \left[ {t_{0} ,t_{f} } \right] \).

It is easy to see that \( \langle z_{1} \left( t \right),z_{2} \left( t \right)\rangle_{{\tilde{W}_{2}^{2} }} \) satisfies all the requirements of an inner product: firstly, \( \langle z_{1} \left( t \right),z_{1} \left( t \right)\rangle_{{\tilde{W}_{2}^{2} }} \ge 0 \); secondly, \( \langle z_{1} \left( t \right),z_{2} \left( t \right)\rangle_{{\tilde{W}_{2}^{2} }} = \langle z_{2} \left( t \right),z_{1} \left( t \right)\rangle_{{\tilde{W}_{2}^{2} }} \); thirdly, \( \langle \gamma z_{1} \left( t \right),z_{2} \left( t \right)\rangle_{{\tilde{W}_{2}^{2} }} = \gamma \langle z_{1} \left( t \right),z_{2} \left( t \right)\rangle_{{\tilde{W}_{2}^{2} }} \); fourthly, \( \langle z_{1} \left( t \right) + z_{2} \left( t \right),z_{3} \left( t \right)\rangle_{{\tilde{W}_{2}^{2} }} = \langle z_{1} \left( t \right),z_{3} \left( t \right)\rangle_{{\tilde{W}_{2}^{2} }} + \langle z_{2} \left( t \right),z_{3} \left( t \right)\rangle_{{\tilde{W}_{2}^{2} }} \). Moreover, \( \langle z_{1} \left( t \right),z_{1} \left( t \right)\rangle_{{\tilde{W}_{2}^{2} }} = 0 \) if and only if \( z_{1} \left( t \right) = 0 \). To see this, note that if \( z_{1} \left( t \right) = 0 \), then \( \langle z_{1} \left( t \right),z_{1} \left( t \right)\rangle_{{\tilde{W}_{2}^{2} }} = 0 \). Conversely, if \( \langle z_{1} \left( t \right),z_{1} \left( t \right)\rangle_{{\tilde{W}_{2}^{2} }} = 0 \), then \( \left( {z_{1} \left( {t_{f} } \right)} \right)^{2} + \left( {z_{1}^{\prime} \left( {t_{f} } \right)} \right)^{2} + \int_{{t_{0} }}^{{t_{f} }} \left( {z_{1}^{\prime\prime} \left( t \right)} \right)^{2} dt = 0 \); therefore \( z_{1} \left( {t_{f} } \right) = z_{1}^{\prime} \left( {t_{f} } \right) = z_{1}^{\prime\prime} \left( t \right) = 0 \), and hence \( z_{1} \left( t \right) = 0 \).

Theorem 1

[20] The Hilbert space \( W_{2}^{1} \left[ {t_{0} ,t_{f} } \right] \) is a complete reproducing kernel space with the reproducing kernel function

Theorem 2

[21] The Hilbert space \( W_{2}^{2} \left[ {t_{0} ,t_{f} } \right] \) is a complete reproducing kernel space with the reproducing kernel function

Theorem 3

The Hilbert space \( \tilde{W}_{2}^{2} \left[ {t_{0} ,t_{f} } \right] \) is a complete reproducing kernel space with the reproducing kernel function

Proof

The proof of the completeness and the reproducing property of \( \tilde{W}_{2}^{2} \left[ {t_{0} ,t_{f} } \right] \) is similar to the proof in [22]. We now derive the explicit form of \( \tilde{R}_{t}^{{\left\{ 2 \right\}}} \left( s \right) \) in \( \tilde{W}_{2}^{2} \left[ {t_{0} ,t_{f} } \right] \). By applying the tabular integration formula to \( z^{\prime\prime}\left( s \right)\partial_{s}^{2} \tilde{R}_{t}^{{\left\{ 2 \right\}}} \left( s \right) \), we get \( \int_{{t_{0} }}^{{t_{f} }} z^{\prime\prime}\left( s \right)\partial_{s}^{2} \tilde{R}_{t}^{{\left\{ 2 \right\}}} \left( s \right)ds = \mathop \sum \limits_{i = 0}^{1} \left( { - 1} \right)^{1 - i} z^{\left( i \right)} \left( s \right)\partial_{s}^{3 - i} \tilde{R}_{t}^{{\left\{ 2 \right\}}} \left( s \right)|_{{s = t_{0} }}^{{s = t_{f} }} + \int_{{t_{0} }}^{{t_{f} }} z\left( s \right)\partial_{s}^{4} \tilde{R}_{t}^{{\left\{ 2 \right\}}} \left( s \right)ds \).

According to Eq. (5), one can write

Since \( \tilde{R}_{t}^{{\left\{ 2 \right\}}} \left( s \right) \in \tilde{W}_{2}^{2} \left[ {t_{0} ,t_{f} } \right] \), it follows that \( \tilde{R}_{t}^{{\left\{ 2 \right\}}} \left( {t_{f} } \right) = 0 \); likewise, since \( z\left( t \right) \in \tilde{W}_{2}^{2} \left[ {t_{0} ,t_{f} } \right] \), it follows that \( z\left( {t_{f} } \right) = 0 \). Thus, if \( \partial_{s} \tilde{R}_{t}^{{\left\{ 2 \right\}}} \left( {t_{f} } \right) + \partial_{s}^{2} \tilde{R}_{t}^{{\left\{ 2 \right\}}} \left( {t_{f} } \right) = 0 \), \( \partial_{s}^{3} \tilde{R}_{t}^{{\left\{ 2 \right\}}} \left( {t_{0} } \right) = 0 \), and \( \partial_{s}^{2} \tilde{R}_{t}^{{\left\{ 2 \right\}}} \left( {t_{0} } \right) = 0 \), then Eq. (9) implies that \( \langle z\left( s \right),\tilde{R}_{t}^{{\left\{ 2 \right\}}} \left( s \right)\rangle_{{\tilde{W}_{2}^{2} }} = \int_{{t_{0} }}^{{t_{f} }} z \left( s \right)\partial_{s}^{4} \tilde{R}_{t}^{{\left\{ 2 \right\}}} \left( s \right)ds \). Now, for each \( t \in \left[ {t_{0} ,t_{f} } \right] \), if \( \tilde{R}_{t}^{{\left\{ 2 \right\}}} \left( s \right) \) satisfies

then \( \langle z\left( s \right),\tilde{R}_{t}^{{\left\{ 2 \right\}}} \left( s \right)\rangle_{{\tilde{W}_{2}^{2} }} = z\left( t \right) \). Hence, \( \tilde{R}_{t}^{{\left\{ 2 \right\}}} \left( s \right) \) is the reproducing kernel function of \( \tilde{W}_{2}^{2} \left[ {t_{0} ,t_{f} } \right] \). To complete the proof, we need the explicit form of \( \tilde{R}_{t}^{{\left\{ 2 \right\}}} \left( s \right) \). The auxiliary (characteristic) equation of Eq. (10) is \( \lambda^{4} = 0 \), and its root is \( \lambda = 0 \) with multiplicity 4. So, let the form of \( \tilde{R}_{t}^{{\left\{ 2 \right\}}} \left( s \right) \) be defined as

On the other hand, for Eq. (10), let \( \tilde{R}_{t}^{{\left\{ 2 \right\}}} \left( s \right) \) satisfy the continuity conditions \( \partial_{s}^{m} \tilde{R}_{t}^{{\left\{ 2 \right\}}} \left( {t + 0} \right) = \partial_{s}^{m} \tilde{R}_{t}^{{\left\{ 2 \right\}}} \left( {t - 0} \right) \), \( m = 0,1,2 \). Integrating Eq. (10) from \( t - \varepsilon \) to \( t + \varepsilon \) with respect to \( s \) and letting \( \varepsilon \to 0 \), we obtain the jump of \( \partial_{s}^{3} \tilde{R}_{t}^{{\left\{ 2 \right\}}} \left( s \right) \) at \( s = t \), given by \( \partial_{s}^{3} \tilde{R}_{t}^{{\left\{ 2 \right\}}} \left( {t + 0} \right) - \partial_{s}^{3} \tilde{R}_{t}^{{\left\{ 2 \right\}}} \left( {t - 0} \right) = 1 \), as required for \( \int_{{t_{0} }}^{{t_{f} }} z\left( s \right)\partial_{s}^{4} \tilde{R}_{t}^{{\left\{ 2 \right\}}} \left( s \right)ds = z\left( t \right) \). From these conditions, and by using the Maple 13 software package, the unknown coefficients of \( \tilde{R}_{t}^{{\left\{ 2 \right\}}} \left( s \right) \) in Eq. (11) can be obtained as given in the representation form of Eq. (8). This completes the proof. □
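The construction in the proof can be checked numerically. The sketch below works on the assumed interval [0, 1], writes the kernel as two cubic pieces in \( s \) (left and right of \( s = t \)), and solves eight linear conditions for their coefficients: \( \tilde{R}\left( {t_{f} } \right) = 0 \), \( \partial_{s} \tilde{R}\left( {t_{f} } \right) + \partial_{s}^{2} \tilde{R}\left( {t_{f} } \right) = 0 \), \( \partial_{s}^{2} \tilde{R}\left( {t_{0} } \right) = \partial_{s}^{3} \tilde{R}\left( {t_{0} } \right) = 0 \), continuity of the derivatives of order 0, 1, 2 at \( s = t \), and a unit jump of the third derivative. These are the conditions obtained by integrating by parts against an inner product whose endpoint terms are taken at \( t_{f} \). The helper names and the test function \( \sin \left( {\pi s} \right) \) are illustrative assumptions, and the code does not reproduce the closed form of Eq. (8).

```python
import numpy as np

def deriv_row(s, d):
    """d-th derivative of the monomials (1, s, s^2, s^3) evaluated at s."""
    row = np.zeros(4)
    for k in range(d, 4):
        factor = np.prod(np.arange(k - d + 1, k + 1)) if d > 0 else 1.0
        row[k] = factor * s ** (k - d)
    return row

def kernel_pieces(t, t0=0.0, tf=1.0):
    """Cubic coefficients of the kernel: left piece (s <= t), right piece (s > t)."""
    z = np.zeros(4)
    rows = [
        np.concatenate([z, deriv_row(tf, 0)]),                     # R(tf) = 0
        np.concatenate([z, deriv_row(tf, 1) + deriv_row(tf, 2)]),  # R'(tf) + R''(tf) = 0
        np.concatenate([deriv_row(t0, 2), z]),                     # R''(t0) = 0
        np.concatenate([deriv_row(t0, 3), z]),                     # R'''(t0) = 0
    ]
    for d in range(4):  # continuity of R, R', R'' at s = t; unit jump of R'''
        rows.append(np.concatenate([-deriv_row(t, d), deriv_row(t, d)]))
    rhs = np.zeros(8)
    rhs[7] = 1.0
    coeffs = np.linalg.solve(np.array(rows), rhs)
    return coeffs[:4], coeffs[4:]

def reproduce(z, dz, d2z, t, t0=0.0, tf=1.0, n=4001):
    """Evaluate z(tf) R(tf) + z'(tf) R'(tf) + int z''(s) R''(s) ds."""
    left, right = kernel_pieces(t, t0, tf)
    s = np.linspace(t0, tf, n)
    d2R = np.where(s <= t, 2 * left[2] + 6 * left[3] * s, 2 * right[2] + 6 * right[3] * s)
    f = d2z(s) * d2R
    integral = np.sum(0.5 * (f[1:] + f[:-1]) * np.diff(s))
    R_tf = right @ deriv_row(tf, 0)
    dR_tf = right @ deriv_row(tf, 1)
    return z(tf) * R_tf + dz(tf) * dR_tf + integral

# Hypothetical test function with z(tf) = 0 on [0, 1].
z = lambda s: np.sin(np.pi * s)
dz = lambda s: np.pi * np.cos(np.pi * s)
d2z = lambda s: -np.pi ** 2 * np.sin(np.pi * s)
print(reproduce(z, dz, d2z, 0.4), z(0.4))  # the two values should agree closely
```

Solving the conditions numerically, rather than symbolically, keeps the sketch short; a computer algebra system would return the same coefficients as functions of \( t \).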

Remark 1

Henceforth, unless stated otherwise, we denote

Definition 5

The inner product Hilbert space \( H\left[ {t_{0} ,t_{f} } \right] \) can be defined as \( H\left[ {t_{0} ,t_{f} } \right] = \left\{ {\left( {z_{1} ,z_{2} , \ldots ,z_{n} } \right)^{T} :\left\{ {z_{j} } \right\}_{j = 1}^{n} \subset W_{2}^{1} \left[ {t_{0} ,t_{f} } \right]} \right\} \). The inner product and the norm in \( H\left[ {t_{0} ,t_{f} } \right] \) are defined as \( \langle z\left( t \right),w\left( t \right)\rangle_{H} = \mathop \sum \nolimits_{j = 1}^{n} \langle z_{j} \left( t \right),w_{j} \left( t \right)\rangle_{{W_{2}^{1} }} \) and \( \|z\|_{H} = \sqrt {\mathop \sum \nolimits_{j = 1}^{n} \|z_{j}\|_{{W_{2}^{1} }}^{2} } \), respectively, where \( z,w \in H\left[ {t_{0} ,t_{f} } \right] \).

Definition 6

The inner product Hilbert space \( W\left[ {t_{0} ,t_{f} } \right] \) can be defined as

The inner product and the norm in \( W\left[ {t_{0} ,t_{f} } \right] \) are defined as

and \( \|z\|_{W} = \sqrt {\mathop \sum \nolimits_{j = 1}^{p} \|z_{j}\|_{{W_{2}^{2} }}^{2} + \mathop \sum \nolimits_{j = p + 1}^{n} \|z_{j}\|_{{\tilde{W}_{2}^{2} }}^{2} } \), respectively, where \( z,w \in W\left[ {t_{0} ,t_{f} } \right] \).

The spaces \( H\left[ {t_{0} ,t_{f} } \right] \) and \( W\left[ {t_{0} ,t_{f} } \right] \) are complete Hilbert spaces, so all the properties of a Hilbert space hold. Further, these spaces possess some special properties which could make some systems easier to solve.

3 Representation of exact and numerical solutions

In this section, we show in detail how to solve the BVPs by using the RKHS method, and we examine the influence of the choice of the continuous linear operators. The formulation and the implementation of the solutions are given in the extended RKHSs \( W\left[ {t_{0} ,t_{f} } \right] \) and \( H\left[ {t_{0} ,t_{f} } \right] \). Meanwhile, we construct an orthogonal function system of the space \( W\left[ {t_{0} ,t_{f} } \right] \) based on the Gram–Schmidt orthogonalization process.

Now, to apply the RKHS method on the extended spaces \( H\left[ {t_{0} ,t_{f} } \right] \) and \( W\left[ {t_{0} ,t_{f} } \right] \), we will define the following differential linear operators:

To proceed with the construction of the algorithm, put

Thus, based on this, the BVPs to be solved can be converted into the following equivalent form:

subject to the following BCs:

Lemma 1

The operators \( \left\{ {L_{j} } \right\}_{j = 1}^{p} :W_{2}^{2} \left[ {t_{0} ,t_{f} } \right] \to W_{2}^{1} \left[ {t_{0} ,t_{f} } \right] \) and \( \left\{ {L_{j} } \right\}_{j = p + 1}^{n} :\tilde{W}_{2}^{2} \left[ {t_{0} ,t_{f} } \right] \to W_{2}^{1} \left[ {t_{0} ,t_{f} } \right] \) are bounded and linear.

Proof

In this proof, we focus on \( \left\{ {L_{j} } \right\}_{j = 1}^{p} :W_{2}^{2} \left[ {t_{0} ,t_{f} } \right] \to W_{2}^{1} \left[ {t_{0} ,t_{f} } \right] \). The linearity part is obvious. For the boundedness part, we need to prove that \( \|L_{j} u_{j}\|_{{W_{2}^{1} }} \le M\|u_{j}\|_{{W_{2}^{2} }} \), where \( M > 0 \). From the definition of the inner product and the norm of \( W_{2}^{1} \left[ {t_{0} ,t_{f} } \right] \), we have \( \|L_{j} u_j\|_{{W_{2}^{1} }}^{2} = \langle L_{j} u_{j} \left( t \right),L_{j} u_{j} \left( t \right)\rangle_{{W_{2}^{1} }} = \left[ {L_{j} u_{j} \left( {t_{0} } \right)} \right]^{2} + \int_{{t_{0} }}^{{t_{f} }} \left[ {\left( {L_{j} u_{j} } \right)^{\prime} \left( t \right)} \right]^{2} dt \). By the reproducing property of \( R_{t}^{{\left\{ 2 \right\}}} \left( s \right) \), we have \( u_{j} \left( t \right) = \langle u_{j} \left( s \right),R_{t}^{{\left\{ 2 \right\}}} \left( s \right)\rangle_{{W_{2}^{2} }} \), \( \left( {L_{j} u_{j} } \right)\left( t \right) = \langle u_{j} \left( s \right),\left( {L_{j} R_{t}^{{\left\{ 2 \right\}}} } \right)\left( s \right)\rangle_{{W_{2}^{2} }} \), and \( \left( {L_{j} u_{j} } \right)^{\prime} \left( t \right) = \langle u_{j} \left( s \right),(L_{j} R_{t}^{{\left\{ 2 \right\}}} )^{\prime} \left( s \right)\rangle_{{W_{2}^{2} }} \). Then, by the Schwarz inequality, one can write

Thus, \( \|L_{j} u_{j}\|_{{W_{2}^{1} }}^{2} \le \left( {\left( {M_{j}^{{\left\{ 1 \right\}}} } \right)^{2} + \left( {M_{j}^{{\left\{ 2 \right\}}} } \right)^{2} \left( {t_{f} - t_{0} } \right)} \right)\|u_j\|_{{W_{2}^{2} }}^{2} \) or \( \|L_{j} u_{j}\|_{{W_{2}^{1} }} \le M\|u_{j}\|_{{W_{2}^{2} }} \), where \( M^{2} = \left( {M_{j}^{{\left\{ 1 \right\}}} } \right)^{2} + \left( {M_{j}^{{\left\{ 2 \right\}}} } \right)^{2} \left( {t_{f} - t_{0} } \right) \). The same argument applies to the remaining operators \( \left\{ {L_{j} } \right\}_{j = p + 1}^{n} :\tilde{W}_{2}^{2} \left[ {t_{0} ,t_{f} } \right] \to W_{2}^{1} \left[ {t_{0} ,t_{f} } \right] \). □
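The Schwarz-inequality step of this proof can be written out as follows. This is a sketch under the assumption that \( M_{j}^{{\left\{ 1 \right\}}} \) and \( M_{j}^{{\left\{ 2 \right\}}} \) denote bounds, uniform in \( t \in \left[ {t_{0} ,t_{f} } \right] \), on \( \|L_{j} R_{t}^{{\left\{ 2 \right\}}} \|_{{W_{2}^{2} }} \) and \( \|(L_{j} R_{t}^{{\left\{ 2 \right\}}} )^{\prime} \|_{{W_{2}^{2} }} \), respectively; the proof names these constants, but this reading of them is an assumption.

$$ \left| {\left( {L_{j} u_{j} } \right)\left( t \right)} \right| = \left| {\langle u_{j} \left( s \right),\left( {L_{j} R_{t}^{{\left\{ 2 \right\}}} } \right)\left( s \right)\rangle_{{W_{2}^{2} }} } \right| \le \|L_{j} R_{t}^{{\left\{ 2 \right\}}} \|_{{W_{2}^{2} }} \|u_{j} \|_{{W_{2}^{2} }} \le M_{j}^{{\left\{ 1 \right\}}} \|u_{j} \|_{{W_{2}^{2} }} , $$

$$ \left| {\left( {L_{j} u_{j} } \right)^{\prime} \left( t \right)} \right| = \left| {\langle u_{j} \left( s \right),(L_{j} R_{t}^{{\left\{ 2 \right\}}} )^{\prime} \left( s \right)\rangle_{{W_{2}^{2} }} } \right| \le \|(L_{j} R_{t}^{{\left\{ 2 \right\}}} )^{\prime} \|_{{W_{2}^{2} }} \|u_{j} \|_{{W_{2}^{2} }} \le M_{j}^{{\left\{ 2 \right\}}} \|u_{j} \|_{{W_{2}^{2} }} . $$

Squaring the first bound at \( t = t_{0} \) and integrating the square of the second bound over \( \left[ {t_{0} ,t_{f} } \right] \) gives

$$ \left[ {L_{j} u_{j} \left( {t_{0} } \right)} \right]^{2} + \int_{{t_{0} }}^{{t_{f} }} \left[ {\left( {L_{j} u_{j} } \right)^{\prime} \left( t \right)} \right]^{2} dt \le \left( {\left( {M_{j}^{{\left\{ 1 \right\}}} } \right)^{2} + \left( {M_{j}^{{\left\{ 2 \right\}}} } \right)^{2} \left( {t_{f} - t_{0} } \right)} \right)\|u_{j} \|_{{W_{2}^{2} }}^{2} . $$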

Theorem 4

The operator \( L:W\left[ {t_{0} ,t_{f} } \right] \to H\left[ {t_{0} ,t_{f} } \right] \) is bounded and linear.

Proof

Clearly, \( L \) is a linear operator from \( W\left[ {t_{0} ,t_{f} } \right] \) into \( H\left[ {t_{0} ,t_{f} } \right] \). The boundedness part is shown as follows: for each \( u \in W\left[ {t_{0} ,t_{f} } \right] \), we have

By Lemma 1, the boundedness of \( \left\{ {L_{j} } \right\}_{j = 1}^{p} \) and \( \left\{ {L_{j} } \right\}_{j = p + 1}^{n} \) implies that \( L \) is bounded. So, the proof of the theorem is complete. □

Next, we construct an orthogonal function system of the space \( W\left[ {t_{0} ,t_{f} } \right] \) as follows: put

and \( \psi_{ij} \left( t \right) = L^{ *} \varphi_{ij} \left( t \right) \), \( i = 1,2,\ldots \), \( j = 1,2, \ldots ,n \), where \( L^{ *} = \left[ {L_{ji}^{ *} } \right]_{n \times n} \) is the adjoint operator of \( L = \left[ {L_{ij} } \right]_{n \times n} \), \( R_{t}^{{\left\{ 1 \right\}}} \left( s \right) \) is the reproducing kernel function of \( W_{2}^{1} \left[ {t_{0} ,t_{f} } \right] \), and \( \left\{ {t_{i} } \right\}_{i = 1}^{\infty } \) is dense on \( \left[ {t_{0} ,t_{f} } \right] \).

Algorithm 1

The orthonormal function systems \( \left\{ {\bar{\psi }_{ij} \left( t \right)} \right\}_{{\left( {i,j} \right) = \left( {1,1} \right)}}^{{\left( {\infty ,n} \right)}} \) of the space \( W\left[ {t_{0} ,t_{f} } \right] \) can be derived from the Gram–Schmidt orthogonalization process of \( \left\{ {\psi_{ij} \left( t \right)} \right\}_{{\left( {i,j} \right) = \left( {1,1} \right)}}^{{\left( {\infty ,n} \right)}} \) as follows.

Step 1: For \( l = 1,2,\ldots \) and \( k = 1,2,\ldots,l \) do the following:

If \( l = k = 1 \), then set \( \mu_{lk}^{ij} = \frac{1}{{\|\psi_{11}\|_{W} }} \);

If \( l = k \ne 1 \), then set \( \mu_{lk}^{ij} = \frac{1}{{\sqrt {\|\psi_{lk}\|_{W}^{2} - \mathop \sum \nolimits_{p = 1}^{l - 1} \langle\psi_{lk} \left( t \right),\bar{\psi }_{lp} \left( t \right)\rangle_{W}^{2} } }} \);

If \( l > k \), then set \( \mu_{lk}^{ij} = - \frac{1}{{\sqrt {\|\psi_{lk}\|_{W}^{2} - \mathop \sum \nolimits_{p = 1}^{l - 1} \langle \psi_{lk} \left( t \right),\bar{\psi }_{lp} \left( t \right)\rangle_{W}^{2} } }}\mathop \sum \limits_{p = k}^{l - 1} \langle\psi_{lk} \left( t \right),\bar{\psi }_{lp} \left( t \right)\rangle_{W} \mu_{pk}^{ij} \);

Output: The orthogonalization coefficients \( \mu_{lk}^{ij} \) of the orthonormal systems \( \bar{\psi }_{ij} \left( t \right) \).

Step 2: For \( i = 1,2,\ldots \) and \( j = 1,2, \ldots ,n \) set

$$ \bar{\psi }_{ij} \left( t \right) = \mathop \sum \limits_{l = 1}^{i} \mathop \sum \limits_{k = 1}^{j} \mu_{lk}^{ij} \psi_{lk} \left( t \right); $$(24)

Output: The orthonormal function systems \( \left\{ {\bar{\psi }_{ij} \left( t \right)} \right\}_{{\left( {i,j} \right) = \left( {1,1} \right)}}^{{\left( {\infty ,n} \right)}} \).
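The recursion of Algorithm 1 can be sketched in code for a finite family of functions. The sketch below is a simplification under stated assumptions: the double index \( \left( {i,j} \right) \) is flattened into a single index, the functions \( \psi \) enter only through their Gram matrix `G` of mutual inner products, and the name `gram_schmidt_coeffs` is hypothetical. Row `i` of the returned matrix holds the coefficients that express the i-th orthonormal function in terms of the original ones.

```python
import numpy as np

def gram_schmidt_coeffs(G):
    """Lower-triangular beta with psibar_i = sum_k beta[i, k] psi_k orthonormal.

    G[i, k] is the inner product <psi_i, psi_k> (symmetric positive definite).
    """
    m = G.shape[0]
    beta = np.zeros((m, m))
    for i in range(m):
        # c[p] = <psi_i, psibar_p> for p < i, from the rows already computed.
        c = beta[:i, :i] @ G[i, :i]
        # Norm of psi_i after removing its projections on psibar_0..psibar_{i-1}.
        d = np.sqrt(G[i, i] - np.sum(c ** 2))
        beta[i, i] = 1.0 / d
        for k in range(i):
            beta[i, k] = -np.sum(c[k:i] * beta[k:i, k]) / d
    return beta

# Hypothetical data: rows of V play the role of psi_1, ..., psi_4.
rng = np.random.default_rng(0)
V = rng.standard_normal((4, 6))
G = V @ V.T
beta = gram_schmidt_coeffs(G)
print(np.allclose(beta @ G @ beta.T, np.eye(4)))  # orthonormality check
```

Working with the Gram matrix alone is a design choice: the inner products of the \( \psi \) functions are all the recursion needs, so the same routine applies whatever space the functions live in.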

Here, it is easy to see that, \( \psi_{ij} \left( t \right) = L^{ *} \varphi_{ij} \left( t \right) = \langle L^{ *} \varphi_{ij} \left( s \right),R_{t} \left( s \right)\rangle_{W} = \langle\varphi_{ij} \left( s \right),L_{s} R_{t} \left( s \right)\rangle_{H} = \left. {L_{s} R_{t} \left( s \right)} \right|_{{s = t_{i} }} \in W\left[ {t_{0} ,t_{f} } \right] \). Thus, \( \psi_{ij} \left( t \right) \) can be expressed in the form of \( \psi_{ij} \left( t \right) = \left. {L_{s} R_{t} \left( s \right)} \right|_{{s = t_{i} }} \).

Theorem 5

For Eqs. (19) and (20), if \( \left\{ {t_{i} } \right\}_{i = 1}^{\infty } \) is dense on \( \left[ {t_{0} ,t_{f} } \right] \), then \( \left\{ {\psi_{ij} \left( t \right)} \right\}_{{\left( {i,j} \right) = \left( {1,1} \right)}}^{{\left( {\infty ,n} \right)}} \) is a complete function system of the space \( W\left[ {t_{0} ,t_{f} } \right] \).

Proof

For each fixed \( u \in W\left[ {t_{0} ,t_{f} } \right] \), let \( \langle u\left( t \right),\psi_{ij} \left( t \right)\rangle_{W} = 0 \). Then, \( \langle u\left( t \right),\psi_{ij} \left( t \right)\rangle_{W} = \langle u\left( t \right),L^{ *} \varphi_{ij} \left( t \right)\rangle_{W} = \langle Lu\left( t \right),\varphi_{ij} \left( t \right)\rangle_{H} = Lu\left( {t_{i} } \right) = 0 \). Moreover, \( u\left( t \right) = \mathop \sum \nolimits_{j = 1}^{n} u_{j} \left( t \right)e_{j} = \mathop \sum \nolimits_{j = 1}^{n} \langle u\left( \cdot \right),\left( {R_{t} \left( \cdot \right)} \right)_{j} e_{j}\rangle_{W} e_{j} \), where

Hence, \( Lu\left( {t_{i} } \right) = \mathop \sum \nolimits_{j = 1}^{n} \langle Lu\left( t \right),\varphi_{ij} \left( t \right)\rangle_{H} e_{j} = 0 \). But since \( \left\{ {t_{i} } \right\}_{i = 1}^{\infty } \) is dense on \( \left[ {t_{0} ,t_{f} } \right] \), we must have \( Lu\left( t \right) = 0 \). It follows that \( u\left( t \right) = 0 \) from the existence of \( L^{ - 1} \). So, the proof of the theorem is complete. □

Theorem 6

If \( \left\{ {t_{i} } \right\}_{i = 1}^{\infty } \) is dense on \( \left[ {t_{0} ,t_{f} } \right] \) and the solution of Eqs. (19) and (20) is unique, then their exact solution satisfies the infinite expansion form

where \( \mu_{lk}^{ij} \) are the orthogonalization coefficients of the orthonormal systems \( \bar{\psi }_{ij} \left( t \right) \) obtained from the Gram–Schmidt process.

Proof

Applying Theorem 5, it is easy to see that \( \left\{ {\bar{\psi }_{ij} \left( t \right)} \right\}_{{\left( {i,j} \right) = \left( {1,1} \right)}}^{{\left( {\infty ,n} \right)}} \) is the complete orthonormal basis of \( W\left[ {t_{0} ,t_{f} } \right] \). Since \( \langle u\left( t \right),\varphi_{ij} \left( t \right)\rangle = u_{j} \left( {t_{i} } \right) \) for each \( u \in W\left[ {t_{0} ,t_{f} } \right] \), and since \( \mathop \sum \nolimits_{i = 1}^{\infty } \mathop \sum \nolimits_{j = 1}^{n} \langle u\left( t \right),\bar{\psi }_{ij} \left( t \right)\rangle_{W} \bar{\psi }_{ij} \left( t \right) \) is the Fourier series expansion about \( \left\{ {\bar{\psi }_{ij} \left( t \right)} \right\}_{{\left( {i,j} \right) = \left( {1,1} \right)}}^{{\left( {\infty ,n} \right)}} \), the series \( \mathop \sum \nolimits_{i = 1}^{\infty } \mathop \sum \nolimits_{j = 1}^{n} \langle u\left( t \right),\bar{\psi }_{ij} \left( t \right)\rangle_{W} \bar{\psi }_{ij} \left( t \right) \) is convergent in the sense of \( \| \cdot \|_{W} \). Thus, using Eq. (24), we have

Therefore, the form of Eq. (26) is the exact solution of Eqs. (19) and (20). The proof is complete. □
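For a linear problem, the expansion of Theorem 6, truncated to finitely many terms, can be sketched on a scalar toy problem \( u^{\prime} \left( t \right) = f\left( t \right) \), \( u\left( 0 \right) = 0 \) on [0, 1], so that \( L = d/dt \). The sketch assumes the kernel \( K\left( {t,s} \right) = ts + \int_{0}^{{\min \left( {s,t} \right)}} \left( {t - x} \right)\left( {s - x} \right)dx \) of the space of functions vanishing at 0 under \( \langle u,v\rangle = u^{\prime} \left( 0 \right)v^{\prime} \left( 0 \right) + \int_{0}^{1} u^{\prime\prime} v^{\prime\prime} \); this kernel stands in for the paper's kernel and is an assumption, as are the names `psi` and `rkhs_solve_linear`. The Gram–Schmidt coefficients are obtained from a Cholesky factor of the Gram matrix, which yields the same lower-triangular coefficients as the recursion.

```python
import numpy as np

def psi(t, nodes):
    """psi_k(t) = d/ds K(t, s) at s = t_k for the assumed kernel K."""
    m = np.minimum(t, nodes)
    return t + t * m - 0.5 * m ** 2

def rkhs_solve_linear(f, nodes):
    """Return u_eta(t) = sum_i A_i psibar_i(t) for u' = f(t), u(0) = 0."""
    # Gram matrix <psi_i, psi_k> = psi_k'(t_i) = 1 + min(t_i, t_k).
    G = 1.0 + np.minimum.outer(nodes, nodes)
    # Rows of beta are Gram-Schmidt coefficients: psibar_i = sum_k beta[i, k] psi_k.
    beta = np.linalg.inv(np.linalg.cholesky(G))
    # A_i = sum_k beta[i, k] f(t_k), the coefficients of the truncated series.
    A = beta @ f(nodes)
    return lambda t: A @ (beta @ psi(t, nodes))

# Hypothetical example: u' = cos(t), u(0) = 0, exact solution sin(t).
nodes = np.linspace(0.0, 1.0, 21)
u_eta = rkhs_solve_linear(np.cos, nodes)
print(u_eta(0.5), np.sin(0.5))  # the two values should agree closely
```

Because the problem is linear, the right-hand side values at the nodes are known in advance and no iteration is needed; the coefficients follow in a single pass.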

The numerical solution \( u_{\eta } \left( t \right) \) of \( u\left( t \right) \) for Eqs. (19) and (20) can be obtained directly by taking finitely many terms in the series representation form of \( u\left( t \right) \) and is given as

4 Construct and implement the iterative technique

In this section, we consider the given BVP and construct an iterative technique to find its solutions in the space \( W\left[ {t_{0} ,t_{f} } \right] \) for the linear and the nonlinear cases simultaneously. Also, the numerical solutions of the same system, obtained using the proposed method with the existing BCs, are proved to converge to the exact solutions, with decreasing absolute difference between the exact values and the values obtained using the RKHS method.

Here, we shall make use of the following facts about the linear and the nonlinear case depending on the internal structure of the function \( F \). Firstly, if Eq. (19) is linear, then the exact and the numerical solutions can be obtained directly from Eqs. (26) and (28), respectively. Secondly, if Eq. (19) is nonlinear, then the exact and the numerical solutions can be obtained by using the following iterative process: According to Eq. (26), the representation form of the exact solution of Eqs. (19) and (20) can be written as

where \( A_{ij} = \mathop \sum \nolimits_{l = 1}^{i} \mathop \sum \nolimits_{k = 1}^{j} \mu_{lk}^{ij} f_{k} \left( {t_{l} ,u\left( {t_{l} } \right)} \right) \). To proceed, put \( t_{1} = t_{0} \); it follows that \( u\left( {t_{1} } \right) \) is known from the constraint conditions of Eq. (20). Thus, the exact value of \( F\left( {t_{1} ,u\left( {t_{1} } \right)} \right) \) is known. For numerical computations, we put the initial function \( u_{0} \left( {t_{1} } \right) = u\left( {t_{1} } \right) \) and define the \( \eta \)-term numerical solution of \( u\left( t \right) \) by

where the coefficients \( B_{ij} \) and the successive approximations \( u_{i} \left( t \right) \), \( i = 1,2,\ldots,\eta \) are given as follows:

In the iterative process of Eqs. (30) and (31), we can guarantee that the numerical solution \( u_{\eta } \left( t \right) \) satisfies the BCs of Eq. (20). Next, we will prove that \( u_{\eta } \left( t \right) \) converges to the exact solution \( u\left( t \right) \).
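The successive-approximation idea can be sketched on a scalar toy problem \( u^{\prime} \left( t \right) = f\left( {t,u\left( t \right)} \right) \), \( u\left( 0 \right) = 0 \) on [0, 1]. This is a minimal sketch under stated assumptions, not the scheme of the paper in full generality: it uses a single equation, the stand-in kernel \( K\left( {t,s} \right) = ts + \int_{0}^{{\min \left( {s,t} \right)}} \left( {t - x} \right)\left( {s - x} \right)dx \), increasing nodes with the first node at 0, and hypothetical names `psi` and `rkhs_solve_iterative`. At step \( i \), the right-hand side is evaluated at the node \( t_{i} \) using the previous approximation \( u_{i - 1} \left( {t_{i} } \right) \), and one more coefficient is added.

```python
import numpy as np

def psi(t, nodes):
    """psi_k(t) = d/ds K(t, s) at s = t_k for the assumed kernel K."""
    m = np.minimum(t, nodes)
    return t + t * m - 0.5 * m ** 2

def rkhs_solve_iterative(f, nodes):
    """Successive approximation for u' = f(t, u), u(0) = 0, with nodes[0] = 0."""
    G = 1.0 + np.minimum.outer(nodes, nodes)     # Gram matrix of the psi_k
    beta = np.linalg.inv(np.linalg.cholesky(G))  # Gram-Schmidt coefficients
    eta = len(nodes)
    fvals = np.zeros(eta)
    B = np.zeros(eta)

    def u_partial(t, i):
        # u_i(t) = sum_{j < i} B_j psibar_j(t); the empty sum gives u_0 = 0.
        return B[:i] @ (beta[:i, :i] @ psi(t, nodes[:i]))

    for i in range(eta):
        # Right-hand side at the new node, using the previous approximation.
        fvals[i] = f(nodes[i], u_partial(nodes[i], i))
        B[i] = beta[i, : i + 1] @ fvals[: i + 1]
    return lambda t: u_partial(t, eta)

# Hypothetical example: u' = 1 - u^2, u(0) = 0, exact solution tanh(t).
nodes = np.linspace(0.0, 1.0, 41)
u_eta = rkhs_solve_iterative(lambda t, u: 1.0 - u ** 2, nodes)
print(u_eta(1.0), np.tanh(1.0))  # the two values should agree closely
```

Each new coefficient depends only on right-hand side values at earlier nodes and the current one, so the approximation is built in one forward sweep over the nodes.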

Lemma 2

If \( u \in W\left[ {t_{0} ,t_{f} } \right] \), then the numerical solution \( u_{\eta } \left( t \right) \) and its derivative \( u_{\eta }^{\prime} \left( t \right) \) converge uniformly to the exact solution \( u\left( t \right) \) and its derivative \( u^{\prime}\left( t \right) \), respectively, as \( \eta \to \infty \).

Proof

For each \( t \in \left[ {t_{0} ,t_{f} } \right] \), one can write

Hence, if \( \|u_{\eta } - u\|_{W} \to 0 \) as \( \eta \to \infty \), then \( u_{\eta } \left( t \right) \) and \( u_{\eta }^{\prime} \left( t \right) \) converge uniformly to \( u\left( t \right) \) and \( u^{\prime}\left( t \right) \), respectively. The proof is complete. □
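The estimate behind Lemma 2 can be sketched as follows for a single scalar component. This is the standard reproducing-kernel argument written under simplifying assumptions, not a transcription of the displayed equation of the proof: \( R_{t} \) denotes the kernel of the component space, \( M_{1} \) bounds \( \|R_{t}\|_{W} \), and \( M_{2} \) (a symbol introduced here for illustration) bounds \( \|\partial_{t} R_{t}\|_{W} \) uniformly in \( t \).

```latex
\begin{aligned}
\left| u_{\eta}(t) - u(t) \right|
  &= \left| \langle u_{\eta} - u,\, R_{t} \rangle_{W} \right|
   \le \| R_{t} \|_{W}\, \| u_{\eta} - u \|_{W}
   \le M_{1}\, \| u_{\eta} - u \|_{W}, \\
\left| u_{\eta}^{\prime}(t) - u^{\prime}(t) \right|
  &= \left| \langle u_{\eta} - u,\, \partial_{t} R_{t} \rangle_{W} \right|
   \le \| \partial_{t} R_{t} \|_{W}\, \| u_{\eta} - u \|_{W}
   \le M_{2}\, \| u_{\eta} - u \|_{W}.
\end{aligned}
```

Since neither right-hand side depends on \( t \), convergence in the norm of \( W \) yields uniform convergence of both the function and its derivative.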

Theorem 7

If \( \|u_{\eta - 1} - u\|_{W} \to 0 \), \( t_{\eta } \to s \) as \( \eta \to \infty \), \( \|u_{\eta - 1}\|_{W} \) is bounded, and \( F\left( {t,u\left( t \right)} \right) \) is continuous, then \( F\left( {t_{\eta } ,u_{\eta - 1} \left( {t_{\eta } } \right)} \right) \to F\left( {s,u\left( s \right)} \right) \) as \( \eta \to \infty \).

Proof

Firstly, we will prove that \( u_{\eta - 1} \left( {t_{\eta } } \right) \to u\left( s \right) \). Since,

By the reproducing property of the kernel function \( R_{t} \left( s \right) \), we have \( u_{\eta - 1} \left( {t_{\eta } } \right) = \mathop \sum \nolimits_{j = 1}^{n} \langle u_{\eta - 1} \left( t \right),\left( {R_{{t_{\eta } }} \left( t \right)} \right)_{j} e_{j}\rangle_{W} e_{j} \) and \( u_{\eta - 1} \left( s \right) = \mathop \sum \nolimits_{j = 1}^{n} \langle u_{\eta - 1} \left( t \right),\left( {R_{s} \left( t \right)} \right)_{j} e_{j}\rangle_{W} e_{j} \). Thus,

From the symmetry of \( R_{t} \left( s \right) \), it follows that \( \|\left( {R_{{t_{\eta } }} \left( t \right) - R_{s} \left( t \right)} \right)_{j} e_{j}\|_{W} \to 0 \) as \( t_{\eta } \to s \) and \( \eta \to \infty \). Since \( \|u_{\eta - 1}\|_{W} \) is bounded, one obtains that \( \left| {u_{\eta - 1} \left( {t_{\eta } } \right) - u_{\eta - 1} \left( s \right)} \right| \to 0 \) as \( t_{\eta } \to s \) and \( \eta \to \infty \). Again, by Lemma 2, for each \( s \in \left[ {t_{0} ,t_{f} } \right] \), it holds that \( \left| {u_{\eta - 1} \left( s \right) - u\left( s \right)} \right| \le M_{1} \|u_{\eta - 1} - u\|_{W} \to 0 \). Therefore, \( u_{\eta - 1} \left( {t_{\eta } } \right) \to u\left( s \right) \) in the sense of the norm of \( W\left[ {t_{0} ,t_{f} } \right] \) as \( t_{\eta } \to s \) and \( \eta \to \infty \). As a result, by the continuity of \( F \), it follows that \( F\left( {t_{\eta } ,u_{\eta - 1} \left( {t_{\eta } } \right)} \right) \to F\left( {s,u\left( s \right)} \right) \) as \( \eta \to \infty \). So, the proof of the theorem is complete. □

Theorem 8

Suppose that \( \|u_{\eta }\|_{W} \) is bounded in Eqs. (30) and (31), \( \left\{ {t_{i} } \right\}_{i = 1}^{\infty } \) is dense on \( \left[ {t_{0} ,t_{f} } \right] \), and Eqs. (19) and (20) have a unique solution on \( \left[ {t_{0} ,t_{f} } \right] \). Then the \( \eta \)-term numerical solution \( u_{\eta } \left( t \right) \) converges to the exact solution \( u\left( t \right) \) with \( u\left( t \right) = \mathop \sum \limits_{i = 1}^{\infty } \mathop \sum \limits_{j = 1}^{n} B_{ij} \bar{\psi }_{ij} \left( t \right) \).

Proof

Similar to the proof of Theorem 7 in [23]. □

5 Error estimations and error bounds

In this section, we derive error bounds for the present algorithm. Herein, we suppose that \( {\mathcal{M}} = \left\{ {t_{1} ,t_{2} , \ldots ,t_{\eta } } \right\} \subset \left( {t_{0} ,t_{f} } \right) \), with \( t_{0} < t_{1} \le t_{2} \le \cdots \le t_{\eta } < t_{f} = t_{\eta + 1} \), is the set of selected points for generating the basis functions \( \left\{ {\bar{\psi }_{ij} \left( t \right)} \right\}_{{\left( {i,j} \right) = \left( {1,1} \right)}}^{{\left( {\infty ,n} \right)}} \), that \( h = t_{i + 1} - t_{i} \) with \( 0 \le i \le \eta \) is the fill distance for the uniform partition of \( \left[ {t_{0} ,t_{f} } \right] \), and that \( \|L^{ - 1}\| = { \sup }_{{0 \ne u \in W\left[ {t_{0} ,t_{f} } \right]}} \|u\|_{H}^{ - 1} \|L^{ - 1}u\|_{W} \).

Lemma 3

[23] Suppose that \( u_{j} \in C^{m} \left[ {t_{0} ,t_{f} } \right] \) and \( u_{j}^{{\left( {m + 1} \right)}} \in L^{2} \left[ {t_{0} ,t_{f} } \right] \) for some \( m \ge 1 \). If \( u_{j} \) vanishes at \( {\mathcal{M}} \) with \( \eta \ge m + 1 \), then \( u_{j} \in W_{2}^{1} \left[ {t_{0} ,t_{f} } \right] \) and there is a constant \( A_{j} \) such that

We mention here that, for the next results, the hypotheses of Lemma 3 are assumed to hold.

Theorem 9

If \( u\left( t \right) = \left( {u_{1} \left( t \right),u_{2} \left( t \right),\ldots,u_{n} \left( t \right)} \right) \), then there is a constant \( A = \left( {A_{1} ,A_{2} ,\ldots,A_{n} } \right) \) such that

Proof

Considering Definition 5, one can write

Herein, \( { \hbox{max} }_{{t \in \left[ {t_{0} ,t_{f} } \right]}} \left| {u^{{\left( {m + 1} \right)}} \left( t \right)} \right| = { \hbox{max} }_{{t \in \left[ {t_{0} ,t_{f} } \right]}} \left( u_{1}^{{\left( {m + 1} \right)}} \left( t \right),u_{2}^{{\left( {m + 1} \right)}} \left( t \right),\ldots,u_{n}^{{\left( {m + 1} \right)}} \left( t \right) \right) \).□

In the case of a vector-valued function, we may use a norm that takes the maximum over the component functions. That is, if \( u\left( t \right) = \left( {u_{1} \left( t \right),u_{2} \left( t \right),\ldots,u_{n} \left( t \right)} \right) \), then

Lemma 4

If \( u \in W\left[ {t_{0} ,t_{f} } \right] \), then there exists a positive number \( K \) such that \( \|u^{\left( i \right)}\|_{\infty } \le K\|u\|_{W} \).

Proof

For each \( \left\{ {u_{j} } \right\}_{j = 1}^{p} \subset W_{2}^{2} \left[ {t_{0} ,t_{f} } \right] \), one has \( \left| {u_{j}^{\left( i \right)} \left( t \right)} \right| \le \|\partial_{t}^{i} R_{t}^{{\left\{ 2 \right\}}} \left( t \right)\|_{{W_{2}^{2} }} \|u_{j}\|_{{W_{2}^{2} }} \le M_{i} \|u_{j}\|_{{W_{2}^{2} }} \). Similarly, for each \( \left\{ {u_{j} } \right\}_{j = p + 1}^{n} \subset \tilde{W}_{2}^{2} \left[ {t_{0} ,t_{f} } \right] \), one has \( \left| {u_{j}^{\left( i \right)} \left( t \right)} \right| \le \|\partial_{t}^{i} \tilde{R}_{t}^{{\left\{ 2 \right\}}} \left( t \right)\|_{{\tilde{W}_{2}^{2} }} \|u_{j}\|_{{\tilde{W}_{2}^{2} }} \le N_{i} \|u_{j}\|_{{\tilde{W}_{2}^{2} }} \). Moreover, if \( u \in W\left[ {t_{0} ,t_{f} } \right] \), then \( u = \left( {u_{1} ,u_{2} , \ldots ,u_{p} ,u_{p + 1} , \ldots ,u_{n} } \right)^{T} \) with \( \left\{ {u_{j} } \right\}_{j = 1}^{p} \subset W_{2}^{2} \left[ {t_{0} ,t_{f} } \right] \) and \( \left\{ {u_{j} } \right\}_{j = p + 1}^{n} \subset \tilde{W}_{2}^{2} \left[ {t_{0} ,t_{f} } \right] \). Thus, \( \mathop \sum \nolimits_{j = 1}^{p} \left| {u_{j}^{\left( i \right)} \left( t \right)} \right|^{2} \le M_{i}^{2} \mathop \sum \nolimits_{j = 1}^{p} \|u_{j}\|_{{W_{2}^{2} }}^{2} \) and \( \mathop \sum \nolimits_{j = p + 1}^{n} \left| {u_{j}^{\left( i \right)} \left( t \right)} \right|^{2} \le N_{i}^{2} \mathop \sum \nolimits_{j = p + 1}^{n} \|u_{j}\|_{{\tilde{W}_{2}^{2} }}^{2} \). Consequently, one can write

where \( K^{2} = n{ \hbox{max} }_{i} \left\{ {M_{i}^{2} ,N_{i}^{2} } \right\} \). Since \( { \sup }_{{t \in \left[ {t_{0} ,t_{f} } \right]}} \left| {u_{j}^{\left( i \right)} \left( t \right)} \right| \le { \sup }_{{t \in \left[ {t_{0} ,t_{f} } \right]}} \mathop \sum \limits_{j = 1}^{n} \left| {u_{j}^{\left( i \right)} \left( t \right)} \right| \le K\|u\|_{W} \), we get \( \max_{j} \left( {\sup_{{t \in \left[ {t_{0} ,t_{f} } \right]}} \left| {u_{j}^{\left( i \right)} \left( t \right)} \right|} \right) \le K\|u\|_{W} \), that is, \( \|u^{\left( i \right)}\|_{\infty } \le K\|u\|_{W} \). □

Theorem 10

Let \( u\left( t \right) \) and \( u_{\eta } \left( t \right) \) be given by Eqs. (26) and (28), respectively. Then there is a constant \( B \) such that

Proof

The proof will be obtained by mathematical induction as follows: from Eq. (30) for \( j \le \eta \), we see that

Using the orthogonality of \( \left\{ {\psi_{ij} \left( t \right)} \right\}_{{\left( {i,j} \right) = \left( {1,1} \right)}}^{{\left( {\infty ,n} \right)}} \) yields

Consequently, if \( l = 1 \), then \( \left( {Lu} \right)_{j} \left( {t_{1} } \right) = f_{j} \left( {t_{1} ,u_{0} \left( {t_{1} } \right)} \right) \) or \( Lu\left( {t_{1} } \right) = F\left( {t_{1} ,u_{0} \left( {t_{1} } \right)} \right) \). Again, if \( l = 2 \), then \( \left( {Lu} \right)_{j} \left( {t_{2} } \right) = f_{j} \left( {t_{2} ,u_{1} \left( {t_{2} } \right)} \right) \) or \( Lu\left( {t_{2} } \right) = F\left( {t_{2} ,u_{1} \left( {t_{2} } \right)} \right) \). In the same manner, we obtain the general pattern \( Lu\left( {t_{j} } \right) = F\left( {t_{j} ,u_{j - 1} \left( {t_{j} } \right)} \right) \), \( j = 1,2, \ldots ,\eta \). Clearly, \( R_{\eta j} \in C^{m} \left[ {t_{0} ,t_{f} } \right] \) and \( R_{\eta j}^{{\left( {m + 1} \right)}} \in L^{2} \left[ {t_{0} ,t_{f} } \right] \). Thus, from Theorem 9 there is a constant \( D = \left( {D_{1} ,D_{2} ,\ldots,D_{n} } \right) \) such that

Recall that the error function is \( R_{\eta } \left( t \right) = Lu_{\eta } \left( t \right) - F\left( {t,u\left( t \right)} \right) = Lu_{\eta } \left( t \right) - Lu\left( t \right) = L\left( {u_{\eta } \left( t \right) - u\left( t \right)} \right) \). Hence, \( u_{\eta } - u = L^{ - 1} R_{\eta } \), and there exists a constant \( E \) such that

Finally, in view of Lemma 4, one can find that

where \( B = KE\|D\|_{2} \|\mathop { \hbox{max} }\limits_{{t \in \left[ {t_{0} ,t_{f} } \right]}} \left| {R_{\eta }^{{\left( {m + 1} \right)}} \left( t \right)} \right|\|_{2} \) is a positive real number. □

Corollary 1

Suppose that \( h = \frac{{t_{f} - t_{0} }}{\eta } \) is the fill distance for the uniform partition of \( \left[ {t_{0} ,t_{f} } \right] \). Let \( u\left( t \right) \) and \( u_{\eta } \left( t \right) \) be given by Eqs. (26) and (28), respectively. Then

Proof

The proof follows directly from Theorem 10. □

Theorem 11

Let \( \varepsilon_{\eta } = \|u - u_{\eta }\|_{W} \), where \( u\left( t \right) \) and \( u_{\eta } \left( t \right) \) are the exact and the numerical solutions, respectively. Then, the sequence of numbers \( \left\{ {\varepsilon_{\eta } } \right\} \) is decreasing in the sense of the norm of \( W\left[ {t_{0} ,t_{f} } \right] \) and \( \varepsilon_{\eta } \to 0 \) as \( \eta \to \infty \).

Proof

Using the expansion forms of \( u\left( t \right) \) and \( u_{\eta } \left( t \right) \) in Eqs. (29), (30), and (31), one can write \( \varepsilon_{\eta }^{2} = \|\mathop \sum \nolimits_{i = \eta + 1}^{\infty } \mathop \sum \nolimits_{j = 1}^{n} B_{ij} \bar{\psi }_{ij} \left( t \right)\|^{2} = \mathop \sum \nolimits_{i = \eta + 1}^{\infty } \left( {\mathop \sum \nolimits_{j = 1}^{n} B_{ij} } \right)^{2} \) and \( \varepsilon_{\eta - 1}^{2} = \|\mathop \sum \nolimits_{i = \eta }^{\infty } \mathop \sum \nolimits_{j = 1}^{n} B_{ij} \bar{\psi }_{ij} \left( t \right)\|^{2} = \mathop \sum \nolimits_{i = \eta }^{\infty } \left( {\mathop \sum \nolimits_{j = 1}^{n} B_{ij} } \right)^{2} \). Clearly, \( \varepsilon_{\eta - 1} \ge \varepsilon_{\eta } \), and consequently \( \left\{ {\varepsilon_{\eta } } \right\}_{\eta = 1}^{\infty } \) is decreasing in the sense of \( \| \cdot \|_{W} \). By Theorem 6, we know that \( \mathop \sum \nolimits_{i = 1}^{\infty } \mathop \sum \nolimits_{j = 1}^{n} B_{ij} \bar{\psi }_{ij} \left( t \right) \) is convergent. Thus, \( \varepsilon_{\eta }^{2} \to 0 \) or \( \varepsilon_{\eta } \to 0 \). So, the proof of the theorem is complete. □
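The monotonicity argument of Theorem 11 can be illustrated numerically. The following is a minimal sketch for the scalar case, assuming an orthonormal expansion whose coefficients are the hypothetical values \( c_{i} = 1/i^{2} \), truncated at 50 terms; neither the coefficients nor the function name `tail_errors` come from the source. It computes the tail norms \( \varepsilon_{\eta} \), which are the square roots of the sums of squares of the discarded coefficients.

```python
def tail_errors(coeffs):
    """Return [eps_0, ..., eps_N], where eps_eta^2 is the sum of the squares
    of all coefficients after the first eta ones (orthonormal basis assumed)."""
    squared_tails = [0.0]
    for c in reversed(coeffs):
        # Extend the tail by one more coefficient, walking from the end.
        squared_tails.append(squared_tails[-1] + c * c)
    squared_tails.reverse()
    return [s ** 0.5 for s in squared_tails]


# Hypothetical expansion coefficients (illustration only, not from the paper).
coeffs = [1.0 / (i * i) for i in range(1, 51)]
errors = tail_errors(coeffs)
```

Each step removes one non-negative term from the tail, so the list `errors` is non-increasing and ends at zero, which mirrors \( \varepsilon_{\eta - 1} \ge \varepsilon_{\eta} \) and \( \varepsilon_{\eta} \to 0 \).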

6 Numerical algorithm and numerical analysis

In numerical analysis problems there are some basic unknowns. If they are found, the behavior of the entire structure can be predicted. The basic unknowns, or field variables, encountered in applied mathematics and engineering problems are displacements. This section presents the numerical solutions for three BVPs of different types using the RKHS method. Results obtained by the proposed method are compared systematically with those of some other well-known methods and are found to outperform them in terms of accuracy and generality.

By forming the finite direct sum of the spaces \( W_{2}^{1} \left[ {t_{0} ,t_{f} } \right],W_{2}^{2} \left[ {t_{0} ,t_{f} } \right],\tilde{W}_{2}^{2} \left[ {t_{0} ,t_{f} } \right] \) and merging the kernel functions \( R_{t}^{{\left\{ 1 \right\}}} \left( s \right),R_{t}^{{\left\{ 2 \right\}}} \left( s \right),\tilde{R}_{t}^{{\left\{ 2 \right\}}} \left( s \right) \) into one vector space that satisfies the corresponding BCs, we can directly obtain the exact and the numerical solutions by applying the following algorithm.

Algorithm 2

To approximate the solution of Eqs. (19) and (20), we do the following steps:

- Input: The interval \( \left[ {t_{0} ,t_{f} } \right] \), the integer \( \eta \), the kernel functions \( R_{t} \left( s \right),r_{t} \left( s \right) \), the differential operator \( L \), and the function \( F \).

- Output: The numerical solution \( u_{\eta } \left( t \right) \) of \( u\left( t \right) \) at each grid point in the interval \( \left[ {t_{0} ,t_{f} } \right] \).

- Step 1: Fix \( t \) in \( \left[ {t_{0} ,t_{f} } \right] \) and set \( s \in \left[ {t_{0} ,t_{f} } \right] \);

  If \( s \le t, \) set \( R_{t} \left( s \right) = \Big( R_{t,1}^{{\left\{ 2 \right\}}} \left( s \right),R_{t,1}^{{\left\{ 2 \right\}}} \left( s \right), \ldots ,R_{t,1}^{{\left\{ 2 \right\}}} \left( s \right)_{{p{\text{th}}}} ,\tilde{R}_{t,1}^{{\left\{ 2 \right\}}} \left( s \right)_{{\left( {p + 1} \right){\text{th}}}} ,\tilde{R}_{t,1}^{{\left\{ 2 \right\}}} \left( s \right), \ldots ,\tilde{R}_{t,1}^{{\left\{ 2 \right\}}} \left( s \right)_{{n{\text{th}}}} \Big)^{T} \);

  Else set \( R_{t} \left( s \right) = \Big( R_{t,2}^{{\left\{ 2 \right\}}} \left( s \right),R_{t,2}^{{\left\{ 2 \right\}}} \left( s \right), \ldots ,R_{t,2}^{{\left\{ 2 \right\}}} \left( s \right)_{{p{\text{th}}}} ,\tilde{R}_{t,2}^{{\left\{ 2 \right\}}} \left( s \right)_{{\left( {p + 1} \right){\text{th}}}} ,\tilde{R}_{t,2}^{{\left\{ 2 \right\}}} \left( s \right), \ldots ,\tilde{R}_{t,2}^{{\left\{ 2 \right\}}} \left( s \right)_{{n{\text{th}}}} \Big)^{T} \).

  For \( i = 1,2,\ldots,\eta \) and \( j = 1,2, \ldots ,n \), do the following:

  Set \( t_{i} = \frac{i - 1}{\eta - 1} \);

  Set \( \psi_{i,j} \left( {t_{i} } \right) = L_{s} \left[ {R_{{t_{i} }} \left( s \right)} \right]_{{s = t_{i} }} \);

- Output: The orthogonal function systems \( \psi_{i,j} \left( {t_{i} } \right) \).

- Step 2: For \( l = 2,3,\ldots,\eta \) and \( k = 1,2,\ldots,l, \) do Algorithm 1 for \( l \) and \( k \);

- Output: The orthogonalization coefficients \( \mu_{lk}^{ij} \).

- Step 3: For \( l = 2,3,\ldots,\eta - 1 \) and \( k = 1,2,\ldots,l - 1, \) do the following:

  Set \( \bar{\psi }_{ij} \left( {t_{i} } \right) = \mathop \sum \nolimits_{l = 1}^{i} \mathop \sum \nolimits_{k = 1}^{j} \mu_{lk}^{ij} \psi_{lk} \left( {t_{i} } \right) \);

- Output: The orthonormal function system \( \bar{\psi }_{ij} \left( {t_{i} } \right) \).

- Step 4: Set \( u_{0} \left( {t_{1} } \right) = u\left( {t_{1} } \right) = 0 \);

  Set \( B_{ij} = \mathop \sum \nolimits_{l = 1}^{i} \mathop \sum \nolimits_{k = 1}^{j} \mu_{lk}^{ij} f_{k} \left( {t_{l} ,u_{i - 1} \left( {t_{l} } \right)} \right) \);

  Set \( u_{i} \left( {t_{i} } \right) = \mathop \sum \nolimits_{l = 1}^{i} \mathop \sum \nolimits_{j = 1}^{n} B_{ij} \bar{\psi }_{ij} \left( {t_{i} } \right) \);

- Output: The numerical solution \( u_{\eta } \left( {t_{i} } \right) \) of \( u\left( {t_{i} } \right) \).

Using the RKHS algorithm, we take \( t_{i} = \frac{i - 1}{\eta - 1} \), \( i = 1,2,\ldots,\eta \) in \( u_{\eta } \left( {t_{i} } \right) \) of Eq. (28), generate the reproducing kernel functions \( r_{t} \left( s \right),R_{t} \left( s \right) \) on \( \left[ {t_{0} ,t_{f} } \right] \), and apply Algorithms 1 and 2 throughout the numerical computations. Some graphical results, tabulated data, and numerical comparisons are presented and discussed quantitatively at selected grid points on \( \left[ {t_{0} ,t_{f} } \right] \) to illustrate the numerical solutions of the following BVPs. All symbolic and numerical computations are performed using the MAPLE 13 software package.
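The orthonormalization and coefficient steps of the algorithm can be sketched in a heavily simplified scalar setting. The sketch below is written in Python rather than Maple and is not the authors' implementation. It makes three assumptions that are not in the source: the operator \( L \) is replaced by the identity (so the scheme reduces to kernel interpolation), the space is \( W_{2}^{1}\left[0,1\right] \) with inner product \( u(0)v(0) + \int_{0}^{1} u^{\prime}v^{\prime}\,dt \), whose reproducing kernel is \( 1 + \min(s,t) \), and all function names are hypothetical.

```python
import math


def kernel(s, t):
    # Reproducing kernel of W_2^1[0,1] for <u,v> = u(0)v(0) + int_0^1 u'v' dt
    # (an illustrative choice, not the kernels used in the paper).
    return 1.0 + min(s, t)


def gram_matrix(nodes):
    # <psi_a, psi_b> = kernel(t_a, t_b) when psi_k = kernel(., t_k).
    return [[kernel(a, b) for b in nodes] for a in nodes]


def gram_schmidt_coeffs(nodes):
    """Lower-triangular beta with psi_bar_i = sum_k beta[i][k] * psi_k."""
    n = len(nodes)
    gram = gram_matrix(nodes)
    beta = [[0.0] * n for _ in range(n)]
    for i in range(n):
        row = [0.0] * n
        row[i] = 1.0  # start from psi_i itself
        for m in range(i):
            # Projection of psi_i onto the already orthonormalized psi_bar_m.
            proj = sum(beta[m][k] * gram[i][k] for k in range(m + 1))
            for k in range(m + 1):
                row[k] -= proj * beta[m][k]
        norm_sq = sum(row[a] * gram[a][b] * row[b]
                      for a in range(i + 1) for b in range(i + 1))
        beta[i] = [c / math.sqrt(norm_sq) for c in row]
    return beta


def approximate(u, nodes, beta):
    """Return u_eta = sum_i B_i psi_bar_i with B_i = sum_k beta[i][k] * u(t_k)."""
    n = len(nodes)
    coeffs = [sum(beta[i][k] * u(nodes[k]) for k in range(i + 1)) for i in range(n)]

    def u_eta(t):
        return sum(coeffs[i] * sum(beta[i][k] * kernel(t, nodes[k])
                                   for k in range(i + 1)) for i in range(n))
    return u_eta


eta = 6
nodes = [(i - 1) / (eta - 1) for i in range(1, eta + 1)]  # t_i = (i-1)/(eta-1)
beta = gram_schmidt_coeffs(nodes)
u_eta = approximate(math.exp, nodes, beta)
```

With the identity operator, the coefficients use the sampled values \( u(t_{k}) \) in place of \( f_{k}(t_{l}, u_{i-1}(t_{l})) \), and the resulting `u_eta` agrees with \( e^{t} \) at every node. The triangular array `beta` plays the role of the orthogonalization coefficients \( \mu_{lk}^{ij} \) for this one-component case.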

Example 1

Consider the following linear BVP of three variables:

subject to the following BCs:

where \( t \in \left[ {0,1} \right] \). Here, the exact solutions are \( u_{1} \left( t \right) = - 0.05t^{5} + 0.25t^{4} + t + 2 - e^{ - t} \), \( u_{2} \left( t \right) = t^{3} + 1 \), and \( u_{3} \left( t \right) = 0.25t^{4} + t - e^{ - t} \).

Example 2

Consider the following nonlinear BVP of three variables:

subject to the following BCs:

where \( t \in \left[ {0,1} \right] \). Here, the exact solutions are \( u_{1} \left( t \right) = 0.5{ \sin }\left( {2t} \right) - \frac{1}{3}{ \cos }\left( {3t} \right) + \frac{4}{3} \), \( u_{2} \left( t \right) = e^{t} + 1 \), and \( u_{3} \left( t \right) = e^{2t} + t \).

Example 3

Consider the following nonlinear singular BVP of four variables:

subject to the following BCs:

where \( t \in \left( {0,1} \right) \). Here, the exact solutions are \( u_{1} \left( t \right) = { \ln }\left( {t + 1} \right) \), \( u_{2} \left( t \right) = e^{{t^{2} }} - 2t \), \( u_{3} \left( t \right) = { \sin }\left( {1 - t} \right){ \cos }\left( t \right) \), and \( u_{4} \left( t \right) = 2^{ - t} \).

Our next goal is to present numerical results for the RKHS solutions of the aforementioned BVPs. Results from numerical analysis are, in general, approximations that can be made as accurate as desired, subject to the finite word length of a computer: only a fixed number of digits is stored and used during computations. Next, the agreement between the exact and the numerical solutions is investigated for Examples 1 and 2 at various \( t \) in [0,1] by computing numerical approximations of their exact solutions, as shown in Tables 1 and 2, respectively.

Numerical comparisons for Examples 1 and 2 at various t in [0,1] are studied next. The numerical methods used for comparison with the RKHS method are the continuous genetic algorithm (CGA) [15], the finite difference (FD) method [5, 15], the orthogonal collocation (OC) method [5, 15], and the quasilinearization (QL) method [5, 15]. It is clear from the tables that, for Example 1, the FD method can be applied only with great difficulty and the QL method fails, whilst, for Example 2, all the aforementioned methods except the CGA fail to approximate the required solutions. As a result, the RKHS method is found to be the best in terms of accuracy and applicability, followed directly by the CGA. Tables 3, 4, and 5 show comparisons between the absolute errors of our method and those of the other aforementioned methods for Example 1, while Tables 6, 7, and 8 show the corresponding comparisons for Example 2.

For a more comprehensive assessment of accuracy, the average absolute errors across all grid points and across all dependent variables for Examples 1 and 2 in all the aforementioned methods are shown in Table 9. From a quick statistical analysis, the following comments and results are clearly observed:

- The best method for the solutions is the RKHS method, followed closely by the CGA.

- The average absolute errors for the RKHS method are the lowest among all the aforementioned numerical methods.

- The average absolute errors of the RKHS method are of the same order, \( 10^{-8} \), for the linear and the nonlinear cases.

- For Example 1, the RKHS method is three orders of magnitude better than the CGA, six orders of magnitude better than the FD method, and four orders of magnitude better than the OC method.

- The FD method, the OC method, and the QL method fail to find the solutions for Example 2, whilst the QL method fails to find the solutions for Example 1.
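The error measure used in these comments, the average absolute error across all grid points and all dependent variables, can be sketched as follows. The function name `average_absolute_error` and the grid are assumptions made for illustration. Because the tabulated numerical solutions are not reproduced here, the "approximation" is a stand-in: the degree-4 Taylor polynomial of the exact solution \( u_{2}(t) = e^{t} + 1 \) of Example 2, so the resulting number says nothing about the RKHS method itself.

```python
import math


def average_absolute_error(exact_fns, approx_fns, grid):
    """Mean of |exact - approx| over all grid points and all components."""
    total = 0.0
    for exact, approx in zip(exact_fns, approx_fns):
        total += sum(abs(exact(t) - approx(t)) for t in grid)
    return total / (len(exact_fns) * len(grid))


# Uniform grid on [0, 1] (illustrative choice).
grid = [k / 10 for k in range(11)]

# Exact u_2 of Example 2 and a Taylor-polynomial stand-in for a numerical solution.
exact_fns = [lambda t: math.exp(t) + 1.0]
approx_fns = [lambda t: 2.0 + t + t ** 2 / 2 + t ** 3 / 6 + t ** 4 / 24]

avg_err = average_absolute_error(exact_fns, approx_fns, grid)
```

For a system with several dependent variables, one exact and one approximate function per component would be passed in the two lists, and the same grid is shared by all components.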

In fact, we can notice that all the methods used in the comparison are directly suitable only for some kinds of systems, often require the system to have a special structure, and are not good enough to solve systems of BVPs in general. The results obtained in this article make it clear that the RKHS method outperforms all the other methods considered in terms of accuracy and applicability.

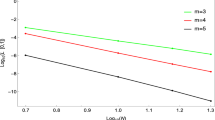

Finally, the computational values of the numerical solutions and their first derivatives for Example 3 have been plotted in Fig. 1a, b for various t in [0,1]. As the plots show, as the value of t moves away from the boundary of [0,1], the values of the numerical solutions vary smoothly along the horizontal axis while satisfying the initial conditions \( u_{1} \left( 0 \right) = 0,u_{2} \left( 0 \right) = 1 \) for the first two dependent variables and the final conditions \( u_{3} \left( 1 \right) = 0,u_{4} \left( 1 \right) = 0.5 \) for the last two dependent variables of the corresponding BVP. Similar results are obtained for the first derivatives of the numerical solutions. We recall that the accuracy and duration of a simulation depend directly on the size of the steps taken by the solver. Generally, decreasing the step size increases the accuracy of the results, while increasing the time required to simulate the problem.

Fig. 1 Graphical results for the computational values of Example 3: blue: \( u_{\eta 1} \left( t \right) \) \( \left( {u_{\eta 1}^{\prime} \left( t \right)} \right) \); red: \( u_{\eta 2} \left( t \right) \) \( \left( {u_{\eta 2}^{\prime} \left( t \right)} \right) \); green: \( u_{\eta 3} \left( t \right) \) \( \left( {u_{\eta 3}^{\prime} \left( t \right)} \right) \); black: \( u_{\eta 4} \left( t \right) \) \( \left( {u_{\eta 4}^{\prime} \left( t \right)} \right) \): a the numerical solutions and b the first derivatives of the numerical solutions (colour figure online)
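The boundary values quoted for Example 3 can be checked against the exact solutions stated for that example. The short sketch below only evaluates those closed-form expressions at the relevant endpoints; the dictionary and variable names are hypothetical, and the differential equations themselves are not checked.

```python
import math

# Exact solutions of Example 3 as stated in the text.
exact = {
    "u1": lambda t: math.log(t + 1.0),
    "u2": lambda t: math.exp(t * t) - 2.0 * t,
    "u3": lambda t: math.sin(1.0 - t) * math.cos(t),
    "u4": lambda t: 2.0 ** (-t),
}

# (component, endpoint, prescribed value) as quoted with Fig. 1.
boundary_conditions = [
    ("u1", 0.0, 0.0),
    ("u2", 0.0, 1.0),
    ("u3", 1.0, 0.0),
    ("u4", 1.0, 0.5),
]

# Absolute mismatch between each exact solution and its prescribed value.
residuals = {
    (name, t): abs(exact[name](t) - value)
    for name, t, value in boundary_conditions
}
```

All four residuals vanish up to rounding, which confirms that two conditions are imposed at \( t = 0 \) and two at \( t = 1 \), consistent with the separated two-point structure of the problem.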

7 Relative comparative analysis

Comparative analysis is a study that compares and contrasts different things and is widely used in various sciences. This section summarizes several key features of the classical numerical methods that are usually used for solving such systems, in comparison with the RKHS method.

Five classical numerical techniques are widely used for the solution of BVPs: the shooting method, the OC method, the FD method, the QL method, and the CGA. The solution accuracy of some of these methods is poor for linear BVPs, and all of these methods except the CGA provide either unsatisfactory or divergent solutions for nonlinear BVPs. In fact, when solving nonlinear BVPs, most of these methods require major modifications that include the use of a root-finding technique or another numerical method such as the Runge–Kutta method. Furthermore, the error in the approximated nodal values obtained using these methods in general increases as the node of concern moves away from the given initial–final conditions.

The shooting method for approximating the solutions of nonlinear BVPs involves solving a sequence of initial value problems, so it is important to use a root-finding technique that converges rapidly, like the Newton–Raphson method. It should be noted that Newton’s method used with the shooting technique requires the solution of an additional initial value problem. The nonlinear shooting method is confronted with the following difficulties: first, it is required to solve an initial value problem over the period \( \left[ {t_{0} ,t_{f} } \right] \) in each iteration of the root-finding technique used; second, it requires good initial guesses that lie in the domain of convergence. The linear shooting method, in turn, suffers from rounding errors, which may cause cancellation of significant digits.
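The structure of nonlinear shooting can be sketched on a small test problem. The example below is an illustration under stated assumptions, not a method or problem from the source: the test BVP \( u_{1}^{\prime} = u_{2} \), \( u_{2}^{\prime} = 1.5u_{1}^{2} \), \( u_{1}(0) = 4 \), \( u_{1}(1) = 1 \) has the known solution \( u_{1}(t) = 4/(1+t)^{2} \) with initial slope \( -8 \); the initial value problems are integrated with the classical fourth-order Runge–Kutta scheme; and the secant method is used in place of Newton–Raphson so that no additional variational initial value problem is needed. All function names are hypothetical.

```python
def rk4(f, y0, t0, t1, steps):
    """Integrate y' = f(t, y) from t0 to t1 with classical fourth-order Runge-Kutta."""
    h = (t1 - t0) / steps
    t, y = t0, list(y0)
    for _ in range(steps):
        k1 = f(t, y)
        k2 = f(t + h / 2, [yi + h / 2 * ki for yi, ki in zip(y, k1)])
        k3 = f(t + h / 2, [yi + h / 2 * ki for yi, ki in zip(y, k2)])
        k4 = f(t + h, [yi + h * ki for yi, ki in zip(y, k3)])
        y = [yi + h / 6 * (a + 2 * b + 2 * c + d)
             for yi, a, b, c, d in zip(y, k1, k2, k3, k4)]
        t += h
    return y


def rhs(t, y):
    # First-order system for the test problem: y = (u1, u2).
    return [y[1], 1.5 * y[0] ** 2]


def shoot(f, left, right, s0, s1, steps=200, tol=1e-10, max_iter=50):
    """Secant iteration on the unknown initial slope s so that u1(1; s) = right."""
    def miss(s):
        # One initial value problem is solved for every trial slope.
        return rk4(f, [left, s], 0.0, 1.0, steps)[0] - right

    g0, g1 = miss(s0), miss(s1)
    for _ in range(max_iter):
        if abs(g1) < tol:
            return s1
        s0, s1, g0 = s1, s1 - g1 * (s1 - s0) / (g1 - g0), g1
        g1 = miss(s1)
    raise RuntimeError("secant iteration did not converge")


# Two starting guesses chosen near the expected slope; poor guesses may diverge
# or converge to a different solution of the same BVP.
slope = shoot(rhs, 4.0, 1.0, -7.0, -9.0)
```

The sketch makes both difficulties named above visible: every secant step costs one full integration over the interval, and the outcome depends on the two starting slopes.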

The FD method for numerically approximating the solutions to the BVPs consists of replacing each derivative in the differential equations by a difference quotient that approximates that derivative. If \( N \) stands for the number of mesh points, then the analytic problem of solving the BVPs is converted to an algebraic problem of solving \( nN \) equations with \( nN \) unknowns. For linear BVPs, this process results in a system of linear equations, which involves an \( nN \times nN \) matrix that can be easily solved, while for nonlinear BVPs, the system of equations that are derived will be nonlinear. The \( nN \times nN \) nonlinear system obtained from this method is then solved using Newton’s method. A sequence of iterates is generated which converges to the solution of the problem provided that the initial approximation is sufficiently close to the solution and the Jacobian matrix for the system is nonsingular. The FD method for the solution of the nonlinear BVPs is by no means without drawbacks; first, Newton’s method for the solution of nonlinear systems requires an \( nN \times nN \) linear system to be solved at each iteration. Second, since a good initial approximation is required, an upper bound for the number of iterations should be specified and, if exceeded, a new initial approximation should be considered.
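For the linear case, the conversion of a BVP into an algebraic system can be sketched as follows. The test problem \( u^{\prime\prime} = u \), \( u(0) = 0 \), \( u(1) = 1 \), with exact solution \( \sinh(t)/\sinh(1) \), is an assumption chosen for illustration and is written in second-order form for brevity; it is not one of the examples of this paper. The tridiagonal system is solved with the Thomas algorithm, and the function names are hypothetical.

```python
import math


def solve_tridiagonal(lower, diag, upper, rhs):
    """Thomas algorithm; lower[0] and upper[-1] are not used."""
    n = len(diag)
    c = [0.0] * n
    d = [0.0] * n
    c[0] = upper[0] / diag[0]
    d[0] = rhs[0] / diag[0]
    for i in range(1, n):
        denom = diag[i] - lower[i] * c[i - 1]
        c[i] = upper[i] / denom
        d[i] = (rhs[i] - lower[i] * d[i - 1]) / denom
    x = [0.0] * n
    x[-1] = d[-1]
    for i in range(n - 2, -1, -1):
        x[i] = d[i] - c[i] * x[i + 1]
    return x


def fd_solve(n):
    """Central-difference solution of u'' = u, u(0) = 0, u(1) = 1 on n subintervals."""
    h = 1.0 / n
    m = n - 1  # number of interior unknowns
    lower = [1.0] * m
    diag = [-(2.0 + h * h)] * m  # from (u[i-1] - 2u[i] + u[i+1]) / h^2 = u[i]
    upper = [1.0] * m
    rhs = [0.0] * m
    rhs[-1] -= 1.0  # move the known boundary value u(1) = 1 to the right-hand side
    interior = solve_tridiagonal(lower, diag, upper, rhs)
    return [0.0] + interior + [1.0]


n = 100
u_fd = fd_solve(n)
max_err = max(abs(u_fd[i] - math.sinh(i / n) / math.sinh(1.0)) for i in range(n + 1))
```

For a nonlinear right-hand side the same discretization would produce a nonlinear algebraic system, and a linear solve of this kind would be repeated inside each Newton iteration.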

The QL method depends on dividing the solutions into two parts: the homogeneous solutions and the particular solutions. The homogeneous solutions are generated by using the given initial conditions, and the particular solutions are generated by using the remaining final conditions. In the meantime, another numerical method such as Runge–Kutta must be used. Note that divergence in this method may result from a poor initial guess. Also, if the column matrix of the homogeneous solutions is singular, then the method cannot solve the problem. Moreover, this method cannot be applied unless the number of equations is even and the number of equations with initial conditions equals the number of equations with final conditions.

The OC method is based on the concept of interpolation at \( N \) collocation points, that is, choosing a function, usually built from orthogonal polynomials, that approximates the solutions of the given BVPs in the range of integration, \( \left[ {t_{0} ,t_{f} } \right] \), and determining the coefficients of this function from a set of base points. This method chooses the trial functions to be linear combinations of a series of orthogonal polynomials. The drawback of this method is the need to solve \( n\left( {N + 1} \right) \) simultaneous nonlinear algebraic equations, whose solution can be obtained using Newton’s method for nonlinear algebraic equations. Generally, the OC method is more accurate than either the FD method or the QL method.

The CGA might be considered as a variation of the FD method in the sense that each of the derivatives is replaced by an appropriate difference-quotient approximation. The CGA depends on generating several random smooth solution curves throughout the evolution of the algorithm in order to obtain the required nodal values. In this technique the BVP is converted into an optimization problem based on the residual of the nodal values, where the optimal solution is obtained when the fitness approaches unity. This technique has three main drawbacks: firstly, the complexity of formulating the optimization problem, especially for nonhomogeneous initial–final conditions; secondly, the randomness in the algorithm could make the residual of the nodal values fail to reflect the accuracy of the solutions; thirdly, the algorithm normally suffers from a computational burden when applied on sequential machines, which means that the time required for solving a given problem will be relatively large.

The RKHS method originated as a solver for boundary value problems of harmonic and biharmonic function types. It started as an extension of the Green function theory of structural analysis. In this technique all the complexities of the problems, like varying shape, BCs, and loads, are maintained as they are, but the solutions obtained are approximate. Because of its diversity and flexibility as an analysis tool, it is receiving much attention in applied mathematics, physics, and engineering. The fast improvements in computer hardware technology and the falling cost of computers have boosted this technique, since the computer is the basic requirement for the application of this algorithm.

The advantages of the utilized RKHS method lie in the following points: firstly, it can produce good, globally smooth numerical solutions and is able to solve many differential systems with complex constraint conditions that are otherwise difficult to solve; secondly, the numerical solutions and their derivatives converge uniformly to the exact solutions and their derivatives, respectively; thirdly, the method is mesh-free, easily implemented, and capable of treating various differential systems and various BCs; fourthly, the method does not require discretization of the variables, and one is not faced with the necessity of large computer memory and time; fifthly, in the proposed method, it is possible to pick any point in the interval of integration, and the approximate solutions and their derivatives will be available there as well; sixthly, it is accurate, needs little effort to achieve the results, and is developed especially for the nonlinear case; seventhly, in the RKHS method the error in the approximated nodal values is not affected by the distance from the given initial–final conditions.

8 Concluding remarks

The reproducing kernel algorithm is a powerful method for solving various linear and nonlinear differential systems of different types and orders. In this article, we introduce the reproducing kernel approach to enlarge its application range. The analysis shows that the proposed method is well suited for use in BVPs for ODEs of various orders, and that its strength resides in its simplicity in dealing with initial–final conditions. Moreover, the RKHS method does not require discretization of the variables, provides the best solution in fewer iterations, and reduces the computational work. Numerical experiments are carried out to illustrate that the present method is an accurate and reliable analytical technique for treating BVPs of regular and singular types. It is worth pointing out that the RKHS method is still suitable and can be employed for solving other strongly linear and nonlinear systems of differential equations.

References

Strang, G., Fix, G.: An Analysis of the Finite Element Method. Wellesley-Cambridge, Cambridge (2008)

Pytlak, R.: Numerical Methods for Optimal Control Problems with State Constraints. Springer, Berlin (1999)

Kubicek, M., Hlavacek, V.: Numerical Solution of Nonlinear Boundary Value Problems with Applications. Dover Publications, Mineola (2008)

Keller, H.B.: Numerical Methods for Two-Point Boundary-Value Problems. Dover Publications, Mineola (1993)

Ascher, U.M., Mattheij, R.M.M., Russell, R.D.: Numerical Solution of Boundary Value Problems for Ordinary Differential Equations (Classics in Applied Mathematics). SIAM, Philadelphia (1995)

Trent, A., Venkataraman, R., Doman, D.: Trajectory generation using a modified simple shooting method. In: Aerospace Conference, Proceedings: 2004 IEEE, vol. 4, pp. 2723–2729 (2004)

Holsapple, R.W., Venkataraman, R., Doman, D.: New, fast numerical method for solving two-point boundary-value problems. J. Guid. Control Dyn. 27, 301–304 (2004)

Zhao, J.: Highly accurate compact mixed methods for two point boundary value problems. Appl. Math. Comput. 188, 1402–1418 (2007)

Cash, J.R., Wright, M.H.: A deferred correction method for nonlinear two-point boundary value problems: implementation and numerical evaluation. SIAM J. Sci. Stat. Comput. 12, 971–989 (1991)

Cash, J.R., Moore, D.R., Sumarti, N., Daele, M.V.: A highly stable deferred correction scheme with interpolant for systems of nonlinear two-point boundary value problems. J. Comput. Appl. Math. 155, 339–358 (2003)

Lentini, M., Pereyra, V.: An adaptive finite difference solver for nonlinear two-point boundary value problems with mild boundary layers. SIAM J. Numer. Anal. 14, 91–111 (1977)

Cash, J.R., Moore, G., Wright, R.W.: An automatic continuation strategy for the solution of singularly perturbed nonlinear boundary value problems. ACM Trans. Math. Softw. 27, 245–266 (2001)

Badakhshan, K.P., Kamyad, A.V.: Numerical solution of nonlinear optimal control problems using nonlinear programming. Appl. Math. Comput. 187, 1511–1519 (2007)

Abo-Hammour, Z.S., Asasfeh, A.G., Al-Smadi, A.M., Alsmadi, O.M.K.: A novel continuous genetic algorithm for the solution of optimal control problems. Optim. Control Appl. Methods 32, 414–432 (2011)

Alsayyed, O.: Numerical Solution of Temporal Two-Point Boundary Value Problems Using Continuous Genetic Algorithms, Ph.D. Thesis, University of Jordan, Jordan (2006)

Cui, M., Lin, Y.: Nonlinear Numerical Analysis in the Reproducing Kernel Space. Nova Science, New York (2009)

Berlinet, A., Agnan, C.T.: Reproducing Kernel Hilbert Space in Probability and Statistics. Kluwer Academic Publishers, Boston (2004)

Daniel, A.: Reproducing Kernel Spaces and Applications. Springer, Basel (2003)

Weinert, H.L.: Reproducing Kernel Hilbert Spaces: Applications in Statistical Signal Processing. Hutchinson Ross, London (1982)

Lin, Y., Cui, M., Yang, L.: Representation of the exact solution for a kind of nonlinear partial differential equations. Appl. Math. Lett. 19, 808–813 (2006)

Zhou, Y., Cui, M., Lin, Y.: Numerical algorithm for parabolic problems with non-classical conditions. J. Comput. Appl. Math. 230, 770–780 (2009)

Yang, L.H., Lin, Y.: Reproducing kernel methods for solving linear initial-boundary-value problems. Electron. J. Differ. Equ. 2008, 1–11 (2008)

Abu Arqub, O., Maayah, B.: Solutions of Bagley–Torvik and Painlevé equations of fractional order using iterative reproducing kernel algorithm. Neural Comput. Appl. 29, 1465–1479 (2018)

Abu Arqub, O.: The reproducing kernel algorithm for handling differential algebraic systems of ordinary differential equations. Math. Methods Appl. Sci. 39, 4549–4562 (2016)

Abu Arqub, O., Al-Smadi, M., Shawagfeh, N.: Solving Fredholm integro-differential equations using reproducing kernel Hilbert space method. Appl. Math. Comput. 219, 8938–8948 (2013)

Abu Arqub, O., Al-Smadi, M.: Numerical algorithm for solving two-point, second-order periodic boundary value problems for mixed integro-differential equations. Appl. Math. Comput. 243, 911–922 (2014)

Momani, S., Abu Arqub, O., Hayat, T., Al-Sulami, H.: A computational method for solving periodic boundary value problems for integro-differential equations of Fredholm-Volterra type. Appl. Math. Comput. 240, 229–239 (2014)

Abu Arqub, O., Al-Smadi, M., Momani, S., Hayat, T.: Numerical solutions of fuzzy differential equations using reproducing kernel Hilbert space method. Soft. Comput. 20, 3283–3302 (2016)

Abu Arqub, O., Al-Smadi, M., Momani, S., Hayat, T.: Application of reproducing kernel algorithm for solving second-order, two-point fuzzy boundary value problems. Soft. Comput. 21, 7191–7206 (2017)

Abu Arqub, O.: Adaptation of reproducing kernel algorithm for solving fuzzy Fredholm-Volterra integrodifferential equations. Neural Comput. Appl. 28, 1591–1610 (2017)

Abu Arqub, O.: Approximate solutions of DASs with nonclassical boundary conditions using novel reproducing kernel algorithm. Fundam. Inf. 146, 231–254 (2016)

Abu Arqub, O.: Fitted reproducing kernel Hilbert space method for the solutions of some certain classes of time-fractional partial differential equations subject to initial and Neumann boundary conditions. Comput. Math Appl. 73, 1243–1261 (2017)

Abu Arqub, O.: Numerical solutions for the Robin time-fractional partial differential equations of heat and fluid flows based on the reproducing kernel algorithm. Int. J. Numer. Methods Heat Fluid Flow 28, 828–856 (2018)

Abu Arqub, O., Al-Smadi, M.: Numerical algorithm for solving time-fractional partial integrodifferential equations subject to initial and Dirichlet boundary conditions. Numer. Methods Partial Differ. Equ. (2017). https://doi.org/10.1002/num.22209

Abu Arqub, O.: Computational algorithm for solving singular Fredholm time-fractional partial integrodifferential equations with error estimates. J. Appl. Math. Comput. (2018). https://doi.org/10.1007/s12190-018-1176-x

Abu Arqub, O., Shawagfeh, N.: Application of reproducing kernel algorithm for solving Dirichlet time-fractional diffusion-Gordon types equations in porous media. J. Porous Med. (2017). In Press

Abu Arqub, O.: Solutions of time-fractional Tricomi and Keldysh equations of Dirichlet functions types in Hilbert space. Numer. Methods Partial Differ. Equ. (2017). https://doi.org/10.1002/num.22236

Abu Arqub, O., Rashaideh, H.: The RKHS method for numerical treatment for integrodifferential algebraic systems of temporal two-point BVPs. Neural Comput. Appl. (2017). https://doi.org/10.1007/s00521-017-2845-7

Geng, F.Z., Qian, S.P.: Reproducing kernel method for singularly perturbed turning point problems having twin boundary layers. Appl. Math. Lett. 26, 998–1004 (2013)

Jiang, W., Chen, Z.: A collocation method based on reproducing kernel for a modified anomalous subdiffusion equation. Numer. Methods Partial Differ. Equ. 30, 289–300 (2014)

Geng, F.Z., Qian, S.P., Li, S.: A numerical method for singularly perturbed turning point problems with an interior layer. J. Comput. Appl. Math. 255, 97–105 (2014)

Geng, F.Z., Cui, M.: A reproducing kernel method for solving nonlocal fractional boundary value problems. Appl. Math. Lett. 25, 818–823 (2012)

Jiang, W., Chen, Z.: Solving a system of linear Volterra integral equations using the new reproducing kernel method. Appl. Math. Comput. 219, 10225–10230 (2013)

Geng, F.Z., Qian, S.P.: Modified reproducing kernel method for singularly perturbed boundary value problems with a delay. Appl. Math. Model. 39, 5592–5597 (2015)

Acknowledgements

The author would like to express his gratitude to the anonymous referees for their careful reading of the paper and their helpful comments.

Ethics declarations

Conflict of interest

The author declares that he has no conflict of interest.

Cite this article

Abu Arqub, O. Numerical solutions of systems of first-order, two-point BVPs based on the reproducing kernel algorithm. Calcolo 55, 31 (2018). https://doi.org/10.1007/s10092-018-0274-3

DOI: https://doi.org/10.1007/s10092-018-0274-3