Abstract

This paper presents a computational method for solving nonlinear boundary value problems with multi-point boundary conditions. These problems have important applications in theoretical physics and engineering. The method is based on operational matrices constructed in reproducing kernel Hilbert spaces, combined with an iterative technique that overcomes the nonlinearity of the problem. Furthermore, a rigorous convergence analysis is provided, and numerical tests reveal the high efficiency and versatility of the proposed method. The results of the numerical experiments are compared with analytical solutions and with the best results reported in the literature, which confirms the good accuracy of the presented method.

1 Introduction

The development of numerical methods for multi-point boundary value problems is important, since such problems arise in many branches of science as mathematical models of real-world processes. Examples from engineering, applied mathematics and physics include the modeling of large-size bridges (Geng and Cui 2010), problems in the theory of elastic stability (Timoshenko 1961), and the flow of fluids such as water, oil and gas through ground layers and through multi-layer porous media (Hajji 2009). Bitsadze and Samarskii (1969) studied a new problem in which the multi-point boundary conditions depend on the values of the solution both in the interior and on the boundary of the domain. The Bitsadze–Samarskii multi-point boundary value problems (Bitsadze and Samarskii 1969) arise in the mathematical modeling of plasma physics processes. The well-posedness, existence, uniqueness and multiplicity of solutions of Bitsadze–Samarskii-type multi-point boundary value problems have been investigated by many authors; see Hajji (2009), Kapanadze (1987), Ma (2004), Ashyralyev and Ozturk (2014) and the references therein. However, research on numerical solutions of Bitsadze–Samarskii-type boundary value problems has proceeded slowly. In recent years, approximate solutions of multi-point boundary value problems have been obtained by the shooting method (Zou et al. 2007), the Sinc-collocation method (Saadatmandi and Dehghan 2012), the shooting reproducing kernel Hilbert space method (Abbasbandy et al. 2015), a difference scheme (Ashyralyev and Ozturk 2014) and the method of successive iteration (Yao 2005). Numerical methods for Bitsadze–Samarskii multi-point boundary value problems have also been considered by several researchers (Geng and Cui 2010; Zou et al. 2007; Saadatmandi and Dehghan 2012; Ali et al. 2010; Tatari and Dehghan 2006; Reutskiy 2014; Azarnavid and Parand 2018; Ascher et al. 1994).
Here, we use an iterative reproducing kernel Hilbert space pseudospectral (RKHS–PS) method to solve nonlinear Bitsadze–Samarskii boundary value problems with multi-point boundary conditions. In this article, we consider nonlinear boundary value problems of the form

\[ u''(x)=g\big (x,u(x),u'(x)\big ),\quad a\le x\le b, \tag{1.1} \]

with the nonhomogeneous Bitsadze–Samarskii-type multi-point boundary conditions

\[ u(a)-\sum _{j=1}^{J}\alpha _{j}u(\xi _{j})=\psi _{1},\qquad u(b)-\sum _{j=1}^{J}\beta _{j}u(\xi _{j})=\psi _{2}, \tag{1.2} \]

where \(\psi _{1},\psi _{2}\) are constants and \(\xi _{1},\xi _{2},\ldots ,\xi _{J}\) are points in the interior of the domain, and also

Recently, techniques based on reproducing kernel Hilbert spaces have attracted great attention and have been used extensively to solve various types of ordinary and partial differential equations (Abbasbandy and Azarnavid 2016; Azarnavid et al. 2015, 2018a, b; Emamjome et al. 2017; Arqub 2016a, b, 2017a, b; Arqub et al. 2013, 2016, 2017; Al-Smadi et al. 2016; Niu et al. 2012a, b, 2018; Lin et al. 2012; Akgül and Baleanu 2017; Akgül and Karatas 2015; Akgül et al. 2015, 2017; Inc et al. 2012, 2013a, b; Sakar et al. 2017; Inc and Akgül 2014; Akgül 2015). This paper presents an iterative approach based on the reproducing kernel Hilbert space pseudospectral method for the numerical solution of nonlinear boundary value problems with multi-point boundary conditions. Pseudospectral methods handle boundary conditions in one of two main ways. The first restricts attention to basis functions that satisfy the boundary conditions exactly. The second uses unrestricted basis functions and enforces the boundary conditions through additional equations. Basis functions that satisfy the boundary conditions exactly are attractive, but they are often very difficult to construct. Here, the reproducing kernels are constructed so that they satisfy the multi-point boundary conditions exactly, and hence the approximate solution satisfies them exactly as well. The operational matrices are then constructed in the reproducing kernel Hilbert space, and an iterative technique is used to overcome the nonlinearity of the problem. We prove the convergence of the iterative technique for nonlinear boundary value problems with multi-point boundary conditions. Test examples are presented to demonstrate the accuracy and versatility of the proposed method.

The proposed reproducing kernel pseudospectral method has the following advantages. First, it needs no separate treatment of boundary conditions, because the reproducing kernels satisfy them exactly. Second, it produces globally smooth numerical solutions and can handle problems with complex conditions, such as multi-point boundary conditions. Third, the numerical solutions and their derivatives converge uniformly to the exact solution and its derivatives, respectively. Fourth, the numerical solution and its derivatives can be evaluated at any point of the given domain.

2 Reproducing kernel Hilbert space pseudospectral method

In this section, we briefly review the reproducing kernel Hilbert space pseudospectral (RKHS–PS) method, in which the operational matrices are constructed using reproducing kernel Hilbert spaces. In pseudospectral methods, we usually seek an approximate solution of the differential equation in the form

\[ u_{N}(x)=\sum _{j=1}^{N}\lambda _{j}\phi _{j}(x), \tag{2.1} \]

where \(\{\lambda _{j}\}_{j=1}^N\) are unknown coefficients and \(\{\phi _{j}\}_{j=1}^N\) are the basis functions. An important feature of pseudospectral methods is that the solution is approximated on a discrete set of grid points. Here, for the grid points \(x_{i},i=1,\ldots ,N,\) we use the basis functions \(\phi _{i}(x)= K(x,x_{i}),\) where \(K(\cdot ,\cdot )\) is the reproducing kernel of a Hilbert space. Evaluating \(u_{N}(x)\) at the grid points \(x_{i},i=1,\ldots ,N,\) gives

\[ u_{N}(x_{i})=\sum _{j=1}^{N}\lambda _{j}\phi _{j}(x_{i}),\quad i=1,\ldots ,N, \tag{2.2} \]

or, in matrix notation,

\[ \varvec{u}=A\varvec{\lambda }, \tag{2.3} \]

where \(\varvec{\lambda }=[\lambda _{1},\ldots ,\lambda _{N}]^\mathrm{T}\) is the coefficient vector, the evaluation matrix A has the entries \(A_{i,j}=\phi _{j}(x_{i})\) and \(\varvec{u}=[u_{N}(x_{1}),\ldots ,u_{N}(x_{N})]^\mathrm{T}\). Let L be a linear operator. Using the expansion (2.1), we can compute \(Lu_{N}\) by applying L to the basis functions,

\[ Lu_{N}(x)=\sum _{j=1}^{N}\lambda _{j}L\phi _{j}(x). \tag{2.4} \]

Evaluating again at the grid points \(x_{i},i=1,\ldots ,N,\) we obtain, in matrix notation,

\[ [Lu_{N}(x_{1}),\ldots ,Lu_{N}(x_{N})]^\mathrm{T}=A_{L}\varvec{\lambda }, \tag{2.5} \]

where \(\varvec{\lambda }\) is as above and the matrix \(A_{L}\) has entries \((A_{L})_{i,j}=L\phi _{j}(x_{i})\). Since (2.3) gives \(\varvec{\lambda }=A^{-1}\varvec{u},\) (2.5) yields

\[ [Lu_{N}(x_{1}),\ldots ,Lu_{N}(x_{N})]^\mathrm{T}=A_{L}A^{-1}\varvec{u}, \tag{2.6} \]

so that the operational matrix \(\varvec{L}\) corresponding to the linear operator L is

\[ \varvec{L}=A_{L}A^{-1}. \tag{2.7} \]

To obtain the operational matrix \(\varvec{L}\), the evaluation matrix A must be invertible. In general, this depends both on the basis functions and on the locations of the grid points \(x_{i},i=1,\ldots ,N\). When the reproducing kernel is positive definite, A is symmetric positive definite and hence invertible for any set of distinct grid points. Suppose that, ignoring boundary conditions, we have a linear differential equation of the form

\[ Lu(x)=f(x). \tag{2.8} \]

An approximate solution at the grid points can be obtained by solving the discrete linear system

\[ \varvec{L}\varvec{u}=\varvec{f}, \tag{2.9} \]

where \(\varvec{u}=[u_{N}(x_{1}),\ldots ,u_{N}(x_{N})]^\mathrm{T}\) and \(\varvec{f}=[f(x_{1}),\ldots ,f(x_{N})]^\mathrm{T}\) contain the values of \(u_{N}\) and f at the grid points, and \(\varvec{L}\) is the operational matrix corresponding to the linear differential operator L.
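The construction of the operational matrix \(\varvec{L}=A_{L}A^{-1}\) can be sketched in a few lines of Python/NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation. A Gaussian kernel \(K(x,y)=e^{-\varepsilon ^{2}(x-y)^{2}}\) stands in for the \(W_{2}^{s}\) reproducing kernel, and L is taken to be \(d^{2}/dx^{2}\). The shape parameter `EPS = 10` and the 11 equally spaced nodes on \([0,1]\) are hypothetical choices. With this spacing A is diagonally dominant and therefore well conditioned.

```python
import numpy as np

EPS = 10.0  # hypothetical shape parameter of the stand-in Gaussian kernel


def kernel(x, y, eps=EPS):
    """Gaussian kernel K(x, y), used here in place of the W_2^s kernel."""
    return np.exp(-(eps * (x - y)) ** 2)


def kernel_xx(x, y, eps=EPS):
    """Second derivative of K(x, y) with respect to x."""
    r = x - y
    return (4.0 * eps**4 * r**2 - 2.0 * eps**2) * np.exp(-(eps * r) ** 2)


def operational_matrix(nodes):
    """Return A, A_L and the operational matrix A_L A^{-1} for L = d^2/dx^2."""
    X, Y = np.meshgrid(nodes, nodes, indexing="ij")  # X[i, j] = x_i, Y[i, j] = x_j
    A = kernel(X, Y)        # A[i, j] = phi_j(x_i) = K(x_i, x_j)
    A_L = kernel_xx(X, Y)   # A_L[i, j] = (L phi_j)(x_i)
    # Solve L A = A_L rather than forming A^{-1}: equivalently A^T L^T = A_L^T.
    L = np.linalg.solve(A.T, A_L.T).T
    return A, A_L, L


nodes = np.linspace(0.0, 1.0, 11)
A, A_L, L = operational_matrix(nodes)
```

For any coefficient vector, applying `L` to the samples \(A\varvec{\lambda }\) reproduces \(A_{L}\varvec{\lambda }\), which is exactly what (2.6) states. For functions outside the span of the basis, `L` only approximates the second derivative. With \(\varepsilon h=1\) the Gaussian basis is well conditioned but not very accurate, so in practice the kernel and node count would be chosen as in the paper.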

3 Multi-point boundary condition

Multi-point boundary value problems have received considerable attention because of their applications in different areas of science and engineering. In this section, we consider the nonlinear boundary value problem (1.1) with the multi-point boundary conditions (1.2). Let

then the boundary conditions (1.2) can be homogenized using

and if

then u satisfies the multi-point boundary conditions (1.2). After homogenization of the boundary conditions, problem (1.1)–(1.2) can be converted into the following form

where \(G(x,v,v')=g(x,v+h_{1}+h_{2},v'+h'_{1}+h'_{2})-h''_{1}(x)-h''_{2}(x)\). To solve problem (3.4), we define the reproducing kernel spaces \(W_{2}^{s}[a,b]\), \(s=1,2,3,\ldots \), below; for more details and proofs, we refer to Cui and Lin (2009).

Definition 3.1

The inner product space \(W_{2}^{s}[a,b]\) is defined as \(W_{2}^{s}[a,b]=\{ u(x)\mid u^{(s-1)}\) is an absolutely continuous real-valued function and \(u^{(s)}\in L^{2}[a,b]\}\). The inner product in \(W_{2}^{s}[a,b]\) is given by

where \(u,v\in W_{2}^{s}[a,b]\), and the norm is \(\Vert u\Vert _{W_{2}^{s}}=\sqrt{(u,u)_{W_{2}^{s}}}\).

Theorem 3.1

(Cui and Lin 2009) The space \(W_{2}^{s}[a,b]\) is a reproducing kernel space. That is, for each fixed \(x\in [a,b]\), there exists \(K(x,\cdot )\in W_{2}^{s}[a,b]\) such that \((u(\cdot ),K(x,\cdot ))_{W_{2}^{s}}=u(x)\) for every \(u(\cdot )\in W_{2}^{s}[a,b]\). The reproducing kernel \(K(x,\cdot )\) can be written as

where \(c_{i}\) and \(d_{i}\) are the coefficients of the reproducing kernel. They can be determined by solving a uniquely solvable linear system of algebraic equations; for details on obtaining the kernel K(x, y), see Cui and Lin (2009), Geng and Cui (2007), and Li and Cui (2003). The space \(W_{2,0}^{s}[a,b]\) is defined as \(W_{2,0}^{s}[a,b]=\{u\in W_{2}^{s}[a,b]:u(a)-\sum _{j=1}^{J}\alpha _{j}u(\xi _{j})=0, u(b)-\sum _{j=1}^{J}\beta _{j}u(\xi _{j})=0\}\). Since point evaluations are continuous on \(W_{2}^{s}[a,b]\), the space \(W_{2,0}^{s}[a,b]\) is a closed subspace of \(W_{2}^{s}[a,b]\) and is therefore also a reproducing kernel space. The reproducing kernel of \(W_{2,0}^{s}[a,b]\) is given in the following theorem (Geng and Cui 2012).

Theorem 3.2

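Let \(L_{a}u=u(a)-\sum _{j=1}^{J}\alpha _{j}u(\xi _{j})\) and \(L_{b}u=u(b)-\sum _{j=1}^{J}\beta _{j}u(\xi _{j})\), and let K(x, y) be the reproducing kernel of \(W_{2}^{s}[a,b]\). Define

\[ K_{1}(x,y)=K(x,y)-\frac{L_{a,y}K(x,y)\,L_{a,x}K(x,y)}{L_{a,x}L_{a,y}K(x,y)} \tag{3.7} \]

and

\[ K_{2}(x,y)=K_{1}(x,y)-\frac{L_{b,y}K_{1}(x,y)\,L_{b,x}K_{1}(x,y)}{L_{b,x}L_{b,y}K_{1}(x,y)}, \tag{3.8} \]

where the subscripts x and y on the operators indicate that the operators are applied to the kernel as a function of x and of y, respectively. If \(L_{a,x}L_{a,y} K(x,y)\ne 0\) and \(L_{b,x}L_{b,y} K_{1}(x,y)\ne 0\), then \(K_{2}(x,y)\) is the reproducing kernel of \(W_{2,0}^{s}[a,b]\).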

Azarnavid and Parand (2016) showed that the newly constructed kernel satisfies the required conditions and that it is positive definite whenever the reference kernel is positive definite. In the proposed method, the nonhomogeneous problem is first reduced to a homogeneous one, and the reproducing kernel of \(W_{2}^{s}[a,b]\) is determined for some \(s>2\). Then \(K_2(x,\cdot )\), the reproducing kernel of \(W_{2,0}^{s}[a,b]\), is constructed using (3.7) and (3.8). The functions \(\phi _{j}(x)=K_{2}(x,x_{j}),j=1,\ldots ,N\), are used as the basis functions in (2.1) to approximate the solution of the homogenized problem. Hence, the approximate solution satisfies the boundary conditions (3.3) exactly.
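The kernel construction of Theorem 3.2 needs only point evaluations of a symmetric reference kernel, so it can be sketched generically. This is a minimal sketch under the following assumptions. A Gaussian kernel again replaces the \(W_{2}^{s}\) kernel. The interval \([0,1]\), the points \(\xi _{1}=1/3\), \(\xi _{2}=2/3\) and the weights \(\alpha _{j}\), \(\beta _{j}\) are hypothetical values. The helper `constrain` is a name introduced here. It performs one step of the form (3.7)/(3.8), and applying it twice yields \(K_{2}\). The final lines check that \(K_{2}(x,\cdot )\) satisfies both homogeneous multi-point conditions.

```python
import numpy as np


def gaussian(x, y, eps=3.0):
    """Symmetric positive definite reference kernel (stand-in for the W_2^s kernel)."""
    return np.exp(-(eps * (x - y)) ** 2)


def constrain(kernel, functional):
    """Return the kernel of the subspace {u : sum_k w_k * u(p_k) = 0}.

    `functional` is a list of (point, weight) pairs; `kernel` must be symmetric.
    Implements K_new(x, y) = K(x, y) - (L_y K)(x) * (L_x K)(y) / (L_x L_y K).
    """
    pts = np.array([p for p, _ in functional], dtype=float)
    wts = np.array([w for _, w in functional], dtype=float)

    def rep(z):
        # Functional applied to one argument of the kernel; by symmetry this
        # equals both L_y K(z, y) and L_x K(x, z).
        return sum(w * kernel(z, p) for p, w in functional)

    denom = wts @ kernel(pts[:, None], pts[None, :]) @ wts  # L_x L_y K
    if abs(denom) < 1e-14:
        raise ValueError("L_x L_y K vanishes: Theorem 3.2 does not apply")

    def new_kernel(x, y):
        return kernel(x, y) - rep(x) * rep(y) / denom

    return new_kernel


# Hypothetical multi-point data on [a, b] = [0, 1].
a, b = 0.0, 1.0
xi = [1 / 3, 2 / 3]
alpha = [0.5, 0.25]
beta = [0.25, 0.5]
L_a = [(a, 1.0)] + [(p, -w) for p, w in zip(xi, alpha)]  # u(a) - sum alpha_j u(xi_j)
L_b = [(b, 1.0)] + [(p, -w) for p, w in zip(xi, beta)]   # u(b) - sum beta_j u(xi_j)

K1 = constrain(gaussian, L_a)  # cf. (3.7)
K2 = constrain(K1, L_b)        # cf. (3.8)

x = np.linspace(0.0, 1.0, 9)
res_a = sum(w * K2(x, p) for p, w in L_a)  # L_a applied to K2(x, .)
res_b = sum(w * K2(x, p) for p, w in L_b)  # L_b applied to K2(x, .)
print(np.max(np.abs(res_a)), np.max(np.abs(res_b)))
```

The basis functions \(\phi _{j}(x)=K_{2}(x,x_{j})\) inherit these conditions, so every combination (2.1) satisfies the homogeneous conditions exactly. The grid must not contain a together with all of \(\xi _{1},\ldots ,\xi _{J}\) (and similarly for b). Otherwise the row of the evaluation matrix at a is a linear combination of the rows at the \(\xi _{j}\), and A becomes singular.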

Theorem 3.3

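Suppose that the boundary value problem (3.4) has a unique solution and that \(G(x,v,v')\) satisfies a Lipschitz condition, i.e., there exist constants \(\mathfrak {l}_{1}\) and \(\mathfrak {l}_{2}\) such that

\[ |G(x,u,u')-G(x,v,v')|\le \mathfrak {l}_{1}|u-v|+\mathfrak {l}_{2}|u'-v'|. \tag{3.9} \]

If \((\frac{(b-a)^{2}}{8}\mathfrak {l}_{1}+\frac{b-a}{2}\mathfrak {l}_{2})<1\), then the sequence \(v_{n}\) of solutions of the iterative scheme

\[ v_{n+1}''(x)=G\big (x,v_{n}(x),v_{n}'(x)\big ),\quad a\le x\le b,\quad v_{n+1}\ \text{satisfies (3.3)}, \tag{3.10} \]

converges to the unique solution of (3.4).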

Proof

Let \(C^{1}[a,b]\) be the Banach space equipped with the norm defined by

Suppose that v is the unique solution of problem (3.4), and let \(v(\xi _{j})=\mathfrak {v}_{j},j=0,\ldots ,J+1\), where \(\xi _{0}=a\) and \(\xi _{J+1}=b\). Now, we divide problem (3.4) into \(J+1\) subproblems as follows:

for \(j=1,\ldots ,J+1\). Let \(\mathfrak {h}_{j}(x)=\frac{\xi _{j-1}\mathfrak {v}_{j}-\xi _{j}\mathfrak {v}_{j-1}+(\mathfrak {v}_{j-1}-\mathfrak {v}_{j})x}{\xi _{j-1}-\xi _{j}}\) be the linear function with \(\mathfrak {h}_{j}(\xi _{j-1})=\mathfrak {v}_{j-1}\) and \(\mathfrak {h}_{j}(\xi _{j})=\mathfrak {v}_{j}\). Then the solution of the two-point boundary value problem \(P_{j}\), \(j=1,\ldots ,J+1\), has the form

where

is the Green’s function of problem \(P_{j}\). For \(j=1,\ldots ,J+1\), we define \(\mathcal {T}_{j}:C^{1}[a,b]\rightarrow C^{1}[a,b]\) as

For any \(u,v \in C^{1}[a,b]\) we have

and also

it is easy to see that

and

Combining (3.16) and (3.17), we have

If \((\frac{(b-a)^{2}}{8}\mathfrak {l}_{1}+\frac{b-a}{2}\mathfrak {l}_{2})< 1\), then \(\mathcal {T}_{j}:C^{1}[a,b]\rightarrow C^{1}[a,b]\) is a contraction mapping, and the Banach fixed-point theorem implies that \(\mathcal {T}_{j}\) has a unique fixed point \(v_{j}=\mathcal {T}_{j}v_{j}\). If we let \(v(x)=v_{j}(x)\) for \(x\in [\xi _{j-1},\xi _{j}]\), then v is the unique solution of problem (3.4). Let \(v_{j,n}\) denote the nth iterate of \(\mathcal {T}_{j}\), and set \(v_{n}(x)=v_{j,n}(x)\) for \(x\in [\xi _{j-1},\xi _{j}]\). It is then easy to see that \(v_{n}\) satisfies the boundary conditions (3.3) for each n and is the solution of problem (3.10). Hence, the sequence \(v_{n}\) of solutions of the iterative scheme (3.10) converges to the unique solution of (3.4). \(\square \)
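The constants \((b-a)^{2}/8\) and \((b-a)/2\) in the contraction condition can be traced to standard bounds on the Green's function of the two-point subproblems. As a sketch, consider \(w''=r\) on \([c,d]\) with \(w(c)=w(d)=0\), where \(c=\xi _{j-1}\) and \(d=\xi _{j}\). The notation \(\mathcal {G}_{j}\), c and d is introduced here and need not match the paper's own symbols. The Green's function and its bounds are:

```latex
\begin{aligned}
\mathcal{G}_{j}(x,t) &=
\begin{cases}
-\dfrac{(t-c)(d-x)}{d-c}, & c\le t\le x,\\
-\dfrac{(x-c)(d-t)}{d-c}, & x\le t\le d,
\end{cases}
\qquad w(x)=\int_{c}^{d}\mathcal{G}_{j}(x,t)\,r(t)\,dt,\\
\int_{c}^{d}\lvert\mathcal{G}_{j}(x,t)\rvert\,dt &= \frac{(x-c)(d-x)}{2}\le\frac{(d-c)^{2}}{8},\\
\int_{c}^{d}\Big\lvert\frac{\partial\mathcal{G}_{j}}{\partial x}(x,t)\Big\rvert\,dt &= \frac{(x-c)^{2}+(d-x)^{2}}{2(d-c)}\le\frac{d-c}{2}.
\end{aligned}
```

Since \(d-c\le b-a\), applying these bounds to \(\mathcal {T}_{j}u-\mathcal {T}_{j}v\) and its derivative, together with the Lipschitz condition (3.9), gives the factors \(\frac{(b-a)^{2}}{8}\mathfrak {l}_{1}\) and \(\frac{b-a}{2}\mathfrak {l}_{2}\). How exactly they combine depends on the \(C^{1}\) norm used in the proof.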

4 Iterative RKHS-PS method

In this section, we consider the general form of the differential equation

where \(\mathcal {L}\) is a linear differential operator, \(\mathcal {N}\) is a nonlinear operator involving spatial derivatives, and f is the nonhomogeneous term. Approximate solutions at the grid points can be obtained iteratively by solving the discrete linear system

where \(\varvec{u}_{n}\) and \(\varvec{f}\) contain the values of the nth approximate solution \(u_{n}\) and of f at the grid points, and \(\varvec{L}\) is the operational matrix corresponding to the linear differential operator \(\mathcal {L}\), as defined in Sect. 2. Then, the \((n+1)\)th approximate solution at the grid points is given by

The condition number and the spectral radius of the matrix \(\varvec{L}\) depend on the basis functions and on the number of collocation points.
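The fixed-point iteration of this section does not depend on how \(\varvec{L}\) was built, so it can be illustrated with any invertible matrix. The sketch below assumes that (4.1) has the form \(\mathcal {L}u+\mathcal {N}(u)=f\), so each step solves \(\varvec{L}\varvec{u}_{n+1}=\varvec{f}-\mathcal {N}(\varvec{u}_{n})\). To keep the sketch short, a standard three-point finite-difference matrix for \(d^{2}/dx^{2}\) with zero boundary values stands in for the RKHS operational matrix. The nonlinearity \(\mathcal {N}(u)=0.5\sin u\) and the right-hand side \(f(x)=e^{x}\) are hypothetical. The helper names `fd_second_derivative` and `picard_solve` are introduced here. The sketch also evaluates the quantity \(q=\mathfrak {L}\,\rho (L^{-1})\) from Theorem 4.1.

```python
import numpy as np


def fd_second_derivative(n, a=0.0, b=1.0):
    """Stand-in for the operational matrix of d^2/dx^2 (NOT the RKHS matrix):
    3-point finite differences on n interior nodes with zero boundary values."""
    h = (b - a) / (n + 1)
    x = a + h * np.arange(1, n + 1)
    off = np.ones(n - 1)
    L = (np.diag(-2.0 * np.ones(n)) + np.diag(off, 1) + np.diag(off, -1)) / h**2
    return x, L


def picard_solve(L, f, nonlinear, u0, tol=1e-12, max_iter=100):
    """Iterate u_{n+1} = L^{-1} (f - N(u_n)), assuming L u + N(u) = f."""
    u = np.asarray(u0, dtype=float)
    for n_iter in range(1, max_iter + 1):
        u_next = np.linalg.solve(L, f - nonlinear(u))
        if np.max(np.abs(u_next - u)) < tol:
            return u_next, n_iter
        u = u_next
    raise RuntimeError("iteration did not converge")


lip = 0.5  # Lipschitz constant of the hypothetical nonlinearity below


def nonlinear(u):
    return lip * np.sin(u)  # |N(u) - N(v)| <= lip * |u - v|


x, L = fd_second_derivative(49)
f = np.exp(x)
q = lip / np.min(np.abs(np.linalg.eigvalsh(L)))  # q = lip * rho(L^{-1})
u, n_iter = picard_solve(L, f, nonlinear, np.zeros_like(x))
print(f"q = {q:.3f}, iterations = {n_iter}")
```

This stand-in matrix is symmetric, so \(\rho (L^{-1})\) equals the spectral norm of \(L^{-1}\). Here q is roughly 0.05, well below 1, and the iteration converges. At termination, the discrete equation \(\varvec{L}\varvec{u}+\mathcal {N}(\varvec{u})=\varvec{f}\) holds up to the stopping tolerance.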

Theorem 4.1

Suppose that \(\mathcal {N}(u)\) satisfies the Lipschitz condition with respect to u

where \(\mathfrak {L}\) is the Lipschitz constant. The proposed scheme (4.3) for the operator problem (4.1) is convergent if \(\rho (L^{-1})<\frac{1}{\mathfrak {L}}\), where \(\rho (L^{-1})\) is the spectral radius of the iteration matrix \(L^{-1}\).

Proof

Let \(\Vert \varvec{u}\Vert _{\infty }=\max _{1\le i\le N}|u(x_{i})|\) for any \(\varvec{u}\in \mathbb {R}^{N}\). Using the Lipschitz condition, it is easy to see that

Then, from (4.3) we have

Let \(n\in \mathbb {N}\) and \(q:=\mathfrak {L}\,\rho (L^{-1})\); then we have

Let \(m,n\in \mathbb {N}\) with \(m>n\); then

Let \(\epsilon >0\) be arbitrary. Since \(q\in [0,1)\), there exists a sufficiently large \(p\in \mathbb {N}\) such that

therefore, for \(m>n>p\) we have

This proves that \(\{\varvec{u}_{n}\}\) is a Cauchy sequence in \(\mathbb {R}^{N}\) and is therefore convergent. \(\square \)

As shown in Sect. 3, the approximate solution satisfies the boundary conditions exactly, because every basis function \(K_{2}(\cdot ,x_{j})\) does.

5 Numerical experiments

In this section, we demonstrate the efficiency of the proposed method on two examples. To assess both the applicability and the accuracy of the method, we apply the algorithm to the following multi-point boundary value problems. Unless otherwise specified, the reproducing kernel of \(W^{10}_{2,0}[a,b]\) is used in all examples. To compare the proposed method with other methods in the literature and with the exact solution, we report the maximum absolute error of the approximate solution, defined by

where N is the number of collocation points, and \(u_{i}\) and \(\hat{u}_{i}\) are the exact and computed values of the solution u at the ith collocation point. The reported results show high accuracy even with a relatively small number of data points and iterations.

Example 5.1

Here, we consider the following three-point second-order nonlinear differential equation

with the boundary conditions

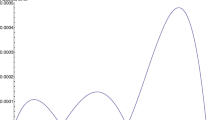

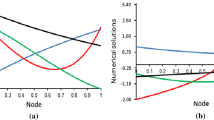

Since the exact solution of this problem is unknown, the approximate solutions are compared with those given by Yao (2005). Figure 1 compares the approximate solutions obtained by the presented method and by the successive iteration method (Yao 2005). Table 1 reports the values of the approximate solutions obtained by different methods in the literature. The results in Fig. 1 and Table 1 show good agreement between the approximate solutions obtained by the proposed method and those of other established methods.

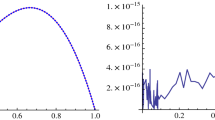

Fig. 2 Absolute error for Example 5.2 with \(N=50\) data points and \(n=5,10,15\) iterations

Example 5.2

In this example, we consider the four-point second-order nonlinear differential equation

with the boundary conditions

where

The exact solution is \(y(x)=\frac{1}{3}\sin (x-x^{2})\). The proposed method is applied to Example 5.2 with various n and N, and the results are as follows. The absolute errors of the approximate solutions with \(N=50\) data points and \(n=5,10,15\) iterations are given in Fig. 2.

Fig. 3 Absolute error for Example 5.2 with \(N=20\) data points and \(n=15\) iterations in the \(W^{8}_{2,0}\), \(W^{10}_{2,0}\) and \(W^{12}_{2,0}\) reproducing kernel Hilbert spaces

The absolute errors for Example 5.2 with \(N=20\) data points and \(n=15\) iterations in the \(W^{8}_{2,0}\), \(W^{10}_{2,0}\) and \(W^{12}_{2,0}\) reproducing kernel Hilbert spaces are presented in Fig. 3. Table 2 lists the maximum absolute errors for Example 5.2 with \(N=20,30,40\) data points and \(n=15\) iterations. It compares them with the best results reported in Geng and Cui (2010), Saadatmandi and Dehghan (2012), Reutskiy (2014), and Azarnavid and Parand (2018). Table 2 shows the good accuracy of the presented method even with a relatively small number of data points and iterations. The results also show that more accurate approximations can be obtained using more data points, more iterations, and smoother reproducing kernel spaces.

6 Conclusions

In this paper, an iterative technique based on reproducing kernel Hilbert space operational matrices and the pseudospectral method is used to solve nonlinear Bitsadze–Samarskii boundary value problems with multi-point boundary conditions. The convergence of the presented method is proved, and numerical tests demonstrate its efficiency and versatility. To assess its accuracy, the numerical results are compared with analytical solutions and with the best results reported in the literature. The comparison confirms the good accuracy of the proposed technique.

References

Abbasbandy S, Azarnavid B (2016) Some error estimates for the reproducing kernel Hilbert spaces method. J Comput Appl Math 296:789–797

Abbasbandy S, Azarnavid B, Alhuthali MS (2015) A shooting reproducing kernel Hilbert space method for multiple solutions of nonlinear boundary value problems. J Comput Appl Math 279:293–305

Akgül A (2015) New reproducing kernel functions. Math Probl Eng 2015:10. https://doi.org/10.1155/2015/158134

Akgül A, Baleanu D (2017) On solutions of variable-order fractional differential equations. Int J Optim Control Theor Appl (IJOCTA) 7(1):112–116

Akgül A, Karatas E (2015) Reproducing kernel functions for difference equations. Discret Contin Dyn Syst Ser S 8(6):1055–1064

Akgül A, Karatas E, Baleanu D (2015) Numerical solutions of fractional differential equations of Lane–Emden type by an accurate technique. Adv Differ Equ 2015(1):220

Akgül A, Khan Y, Akgül EK, Baleanu D, Al Qurashi MM (2017) Solutions of nonlinear systems by reproducing kernel method. J Nonlinear Sci Appl 10:4408–4417

Ali J, Islam S, Zaman G (2010) The solution of multi-point boundary value problems by the optimal homotopy asymptotic method. Comput Math Appl 59(6):2000–2006

Al-Smadi M, Arqub OA, Shawagfeh N, Momani S (2016) Numerical investigations for systems of second-order periodic boundary value problems using reproducing kernel method. Appl Math Comput 291:137–148

Arqub OA (2016a) Approximate solutions of DASs with nonclassical boundary conditions using novel reproducing kernel algorithm. Fund Inform 146(3):231–254

Arqub OA (2016b) The reproducing kernel algorithm for handling differential algebraic systems of ordinary differential equations. Math Methods Appl Sci 39(15):4549–4562

Arqub OA (2017a) Fitted reproducing kernel Hilbert space method for the solutions of some certain classes of time-fractional partial differential equations subject to initial and Neumann boundary conditions. Comput Math Appl 73(6):1243–1261

Arqub OA (2017b) Adaptation of reproducing kernel algorithm for solving fuzzy Fredholm–Volterra integrodifferential equations. Neural Comput Appl 28(7):1591–1610

Arqub OA, Al-Smadi M, Shawagfeh N (2013) Solving Fredholm integro-differential equations using reproducing kernel Hilbert space method. Appl Math Comput 219(17):8938–8948

Arqub OA, Mohammed AS, Momani S, Hayat T (2016) Numerical solutions of fuzzy differential equations using reproducing kernel Hilbert space method. Soft Comput 20(8):3283–3302

Arqub OA, Al-Smadi M, Momani S, Hayat T (2017) Application of reproducing kernel algorithm for solving second-order, two-point fuzzy boundary value problems. Soft Comput 21(23):7191–7206

Ascher UM, Mattheij RMM, Russell RD (1994) Numerical solution of boundary value problems for ordinary differential equations, vol 13. SIAM, Philadelphia

Ashyralyev A, Ozturk E (2014) On a difference scheme of second order of accuracy for the Bitsadze–Samarskii type nonlocal boundary-value problem. Bound Value Probl 2014(1):1–19

Azarnavid B, Parand K (2016) Imposing various boundary conditions on radial basis functions. arXiv preprint arXiv:1611.07292

Azarnavid B, Parand K (2018) An iterative reproducing kernel method in Hilbert space for the multi-point boundary value problems. J Comput Appl Math 328:151–163

Azarnavid B, Parvaneh F, Abbasbandy S (2015) Picard-reproducing kernel Hilbert space method for solving generalized singular nonlinear Lane–Emden type equations. Math Model Anal 20(6):754–767

Azarnavid B, Shivanian E, Parand K, Nikmanesh S (2018a) Multiplicity results by shooting reproducing kernel Hilbert space method for the catalytic reaction in a flat particle. J Theor Comput Chem 17(02):1850020

Azarnavid B, Parand K, Abbasbandy S (2018b) An iterative kernel based method for fourth order nonlinear equation with nonlinear boundary condition. Commun Nonlinear Sci Numer Simul 59:544–552

Bitsadze AV, Samarskii AA (1969) On some simplest generalizations of linear elliptic problems. Doklady Akademii Nauk SSSR 185:69–74

Cui MG, Lin Y (2009) Nonlinear numerical analysis in the reproducing kernel space. Nova Science, New York

Emamjome M, Azarnavid B, Ghehsareh HR (2017) A reproducing kernel Hilbert space pseudospectral method for numerical investigation of a two-dimensional capillary formation model in tumor angiogenesis problem. Neural Comput Appl. https://doi.org/10.1007/s00521-017-3184-4

Geng FZ, Cui MG (2007) Solving singular nonlinear second-order periodic boundary value problems in the reproducing kernel space. Appl Math Comput 192:389–398

Geng F, Cui M (2010) Multi-point boundary value problem for optimal bridge design. Int J Comput Math 87(5):1051–1056

Geng FZ, Cui MG (2012) A reproducing kernel method for solving nonlocal fractional boundary value problems. Appl Math Lett 25(5):818–823

Hajji MA (2009) Multi-point special boundary-value problems and applications to fluid flow through porous media. In: Proceedings of international multi-conference of engineers and computer scientists (IMECS 2009), vol 31, Hong Kong

Inc M, Akgül A (2014) Numerical solution of seventh-order boundary value problems by a novel method. Abstr Appl Anal 2014:9. https://doi.org/10.1155/2014/745287

Inc M, Akgül A, Kiliçman A (2012) Explicit solution of telegraph equation based on reproducing kernel method. J Funct Spaces Appl 2012:23. https://doi.org/10.1155/2012/984682

Inc M, Akgül A, Kiliçman A (2013a) A new application of the reproducing kernel Hilbert space method to solve MHD Jeffery–Hamel flows problem in nonparallel walls. Abstr Appl Anal 2013:12. https://doi.org/10.1155/2013/239454

Inc M, Akgül A, Kiliçman A (2013b) Numerical solutions of the second-order one-dimensional telegraph equation based on reproducing kernel Hilbert space method. Abstr Appl Anal 2013:13. https://doi.org/10.1155/2013/768963

Kapanadze DV (1987) On the Bitsadze–Samarskii nonlocal boundary value problem. J Differ Equ 23(3):543–545

Li CL, Cui MG (2003) The exact solution for solving a class of nonlinear operator equation in the reproducing kernel space. Appl Math Comput 143:393–399

Lin YZ, Niu J, Cui MG (2012) A numerical solution to nonlinear second order three-point boundary value problems in the reproducing kernel space. Appl Math Comput 218(14):7362–7368

Ma R (2004) Multiple positive solutions for nonlinear \(m\)-point boundary value problems. Appl Math Comput 148(1):249–262

Niu J, Lin YZ, Zhang CP (2012a) Numerical solution of nonlinear three-point boundary value problem on the positive half-line. Math Methods Appl Sci 35(13):1601–1610

Niu J, Lin YZ, Zhang CP (2012b) Approximate solution of nonlinear multi-point boundary value problem on the half-line. Math Model Anal 17(2):190–202

Niu J, Xu M, Lin Y, Xue Q (2018) Numerical solution of nonlinear singular boundary value problems. J Comput Appl Math 331:42–51

Reutskiy SY (2014) A method of particular solutions for multi-point boundary value problems. Appl Math Comput 243:559–569

Saadatmandi A, Dehghan M (2012) The use of Sinc-collocation method for solving multi-point boundary value problems. Commun Nonlinear Sci Numer Simul 17(2):593–601

Sakar MG, Akgül A, Baleanu D (2017) On solutions of fractional Riccati differential equations. Adv Differ Equ 2017(1):39

Tatari M, Dehghan M (2006) The use of the Adomian decomposition method for solving multi-point boundary value problems. Phys Scr 73(6):672–676

Timoshenko S (1961) Theory of elastic stability. McGraw-Hill, New York

Yao Q (2005) Successive iteration and positive solution for nonlinear second-order three-point boundary value problems. Comput Math Appl 50(3–4):433–444

Zou YK, Hu QW, Zhang R (2007) On the numerical studies of multi-point boundary value problem and its fold bifurcation. Appl Math Comput 185(1):527–537

Additional information

Communicated by Antonio José Silva Neto.

About this article

Cite this article

Nabati, M., Emamjome, M. & Jalalvand, M. Reproducing kernel pseudospectral method for the numerical investigation of nonlinear multi-point boundary value problems. Comp. Appl. Math. 37, 6530–6543 (2018). https://doi.org/10.1007/s40314-018-0704-5

DOI: https://doi.org/10.1007/s40314-018-0704-5

Keywords

- Multi-point boundary condition

- Reproducing kernel Hilbert spaces

- Nonlinear Bitsadze–Samarskii boundary value problem

- Iterative method

- Convergence