Abstract

Probabilistic methods are effective means of accounting for the different types of uncertainty in the estimated rock properties required for the stability analysis of rock slopes and tunnels. These methods require estimates of the parameters of probability distributions, such as the mean, standard deviation (SD) and distribution type of the rock properties, which in turn require a large amount of data from laboratory and field investigations. In rock mechanics, however, the data available on rock properties for a project are often limited, since projects usually cover large extents while testing is kept to a minimum because of cost constraints. Owing to the lack of adequate test data, the parameters (mean and SD) of the probability distributions of rock properties are themselves uncertain. Since traditional reliability analysis uses these uncertain parameters, it may give incorrect estimates of the reliability of rock slopes. This paper presents a method to overcome this limitation of traditional reliability analysis and outlines a new approach to rock mass characterization for cases with limited data. The approach couples Sobol’s global sensitivity analysis and the bootstrap method with an augmented radial basis function (RBF)-based response surface. It handles the uncertainties in the parameters (mean and SD) of the probability distributions of rock properties and includes their effect in the stability estimates of rock slopes. The proposed method is more practical and efficient, since it considers uncertainty in the statistical parameters of the most commonly and easily available rock properties, i.e. uniaxial compressive strength and Geological Strength Index. Further, the computational effort involved in the reliability analysis of large rock slopes is comparatively small with this method.
The present study also demonstrates the method through the reliability analysis of a large rock slope of an open pit gold mine in the Karnataka region of India. The results are compared with those of traditional reliability analysis to highlight the advantages of the proposed method. It is observed that uncertainties in the probability distribution type and its parameters (mean and SD) of rock properties have a considerable effect on the estimated reliability index of the rock slope; hence, traditional reliability methods based on distribution parameters estimated from limited data can give an incorrect estimate of rock slope stability. Further, the stability of the rock slope determined by the proposed bootstrap-based approach is represented by a confidence interval of the reliability index instead of the single fixed value given by traditional methods, which provides a more realistic estimate of rock slope stability.


1 Introduction

Rock mass characterization is a challenging task due to the high uncertainties involved in the various geological and mechanical properties of rock masses. These uncertainties can be classified as inherent, statistical or systematic (Duzgun et al. 2002). They make the stability estimation of rock slopes difficult, because no single deterministic value of a rock property is available for use in the stability analysis. In practice, deterministic analyses generally use the average of the sample properties (Pain et al. 2014; Tiwari and Latha 2016), which can result in overestimation of the stability of rock slopes. Probabilistic approaches are a better alternative to deterministic approaches for the stability analysis of rock structures, since they can include the effect of uncertainties in rock properties (Duzgun et al. 1995; Mauldon and Ureta 1996; Duzgun and Bhasin 2009; Li et al. 2015a; Tiwari and Latha 2017). Researchers have developed various probabilistic methods over the years, such as the first-order reliability method (FORM), the second-order reliability method (SORM) and Monte Carlo (MC) simulation. In these approaches, the stability of rock structures is expressed in terms of a reliability index or probability of failure, which requires accurate values of the statistical parameters, i.e. the mean, SD and distribution type, of the rock properties treated as random variables. To estimate these parameters, rock samples are generally collected from a few locations at the site for laboratory testing, or in situ tests are carried out in excavated drifts. Samples collected for laboratory testing are often limited because of the high costs of sample extraction and testing. Likewise, only a limited number of in situ tests are generally carried out inside excavated drifts, owing to high costs and practical difficulties (Ramamurthy 2013).
Considering the variable nature of rock masses, it is impossible to represent the properties of the entire rock mass over its spatial extent through properties determined from this limited number of tests. The reliability of rock slope stability estimated from the uncertain statistical parameters and distributions of rock properties derived from limited test data therefore becomes questionable, and slope designs based on these estimates may be in error.

The type of epistemic uncertainty associated with the limited availability of samples is called estimation uncertainty (de Rocquigny 2012). It arises from limited sample sizes, observation errors, discrepancies in expert opinion, etc. This type of epistemic uncertainty leads to uncertainty in the plausible values of the statistical parameters of the input variables and in the selection of an appropriate model type (probability distribution). When the number of samples available to characterize the input parameters is small, it is important to incorporate estimation uncertainty along with inherent variability in the analysis.

Resampling techniques such as the bootstrap (Efron 1979; Luo et al. 2013; Most and Knabe 2010; Li et al. 2015b) can provide an efficient way to include the above-mentioned uncertainties associated with a limited number of samples. Resampling techniques provide a complementary cumulative distribution function (CCDF) representation of the combination of aleatory and epistemic uncertainty (Helton 1993). These techniques could be particularly useful in rock mechanics, since projects are large in scale and data are often limited. A limited number of past studies have estimated the effect of uncertainty in the statistical parameters and distributions of input soil properties on the reliability of small-scale soil slopes (Most and Knabe 2010; Luo et al. 2013). However, no study in the literature has so far provided a method that overcomes the difficulty posed by limited data on rock properties in the stability analysis of rock slopes and that can be applied to large slopes with small computational effort.

In this study, a practical probabilistic rock mass characterization approach is proposed, which considers the effect of uncertainties in the probability distributions, i.e. the statistical parameters and distribution types of different rock properties, on the reliability estimation of rock slopes. The method is based on a resampling technique coupled with Sobol’s global sensitivity analysis and response surface analysis. It uses numerical tools such as the finite element method (FEM) or finite difference method (FDM) for calculation and hence can be used for rock slopes of any shape and for rock masses exhibiting different constitutive behaviour. The method considers uncertainty in the statistical parameters and distribution types of the most commonly available rock properties, i.e. uniaxial compressive strength (UCS) and Geological Strength Index (GSI), since sensitivity analysis shows that, among all input parameters, they contribute the most to the variability of the factor of safety (FOS) of the slope. The proposed method is demonstrated through an example of a large rock slope. The reliability index of the slope is estimated for two cases: first, by the traditional approach, characterizing the rock mass strength parameters from the few available data; second, by accounting for the estimation uncertainty associated with the small sample along with inherent variability, using the bootstrap method. The two cases are then compared, and the importance of the proposed method is outlined.

2 Details of the Proposed Method

The proposed method is based on four components: construction of a response surface, global sensitivity analysis, application of a resampling technique (the bootstrap method) and estimation of the reliability index of a rock structure with uncertain distributions and statistical parameters of the input random variables. This section briefly describes these components.

2.1 Details of the Response Surface

In reliability analysis, the most important step is to define the performance function in terms of the input random variables. In slope stability problems, the performance function is usually the FOS of the slope, and the rock properties are the input random variables. In sampling-based reliability methods, random realizations of the input random variables (rock properties) are generated, and the output (performance function) is evaluated for each realization using an appropriate calculation method such as FEM/FDM. This process is repeated a large number of times, which requires many evaluations of the numerical model and a huge computational effort. To overcome this limitation, a simple surrogate relation between the input variables and the output, called a response surface, is constructed by solving the numerical model for a limited number of realizations of the input variables. Once the response surface is constructed, MC simulation can be carried out on it: a large number of random realizations of the input variables are generated and the output is obtained for each, from which the distribution of the output is estimated without repeated evaluation of the numerical model using FEM/FDM.

For the current study, we adopted an augmented RBF-based response surface (Krishnamurthy 2003; Pandit and Babu 2017), owing to its higher accuracy compared with a global polynomial-based response surface (Krishnamurthy 2003; Fang and Horstemeyer 2006). The response surface is constructed in two steps. The first step involves careful selection of samples from the input space and evaluation of the numerical model at those samples. The second step involves computation of the unknown coefficients in the RBF equation, so that the response surface reproduces the relation between input and output. Several space-filling designs, such as Latin hypercube (LH) sampling and uniform design, are employed to generate samples distributed throughout the input space. Several RBFs can also be adopted to construct the response surface (Krishnamurthy 2003). An augmented RBF response surface can be mathematically represented as:
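
\[
g({\varvec{X}})=\sum_{i=1}^{n}\lambda_i\,\phi\left(\parallel {\varvec{X}}-{{\varvec{X}}_i}\parallel\right)+\sum_{j=1}^{m}b_j\,P_j({\varvec{X}}) \tag{1}
\]
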
where \(g({\varvec{X}})\) is a function representing the numerical model with input vector \({\varvec{X}}\), \({P_j}({\varvec{X}})\) are the m monomial terms of the polynomial \(P({\varvec{X}})\), \(b_j\) are unknown coefficients, \({{\varvec{X}}_i}\) is the input vector at the ith of the n sampling points, ϕ is the RBF, \(\parallel {\varvec{X}} - {{\varvec{X}}_{\varvec{i}}}\parallel\) is the Euclidean norm (distance) of vector \({\varvec{X}}\) from \({{\varvec{X}}_i}\) and \(\lambda_i\) are the constants associated with the ith RBF. In this study, LH sampling was adopted, and the compactly supported RBF type-II developed by Wu (1995) was used in the response surface. It is represented as:

where \(t = r/r_0\), r is the Euclidean distance and \(r_0\) is the radius of compact support.

The RBF approximation can be visualized as a weighted contribution from the sampling points in the input space: the contribution of a sampling point decreases as the evaluation point moves away from it. The constant \(r_0\) is a free parameter that decides the region of influence of a sampling point. Although \(r_0\) can differ between sampling points, for simplicity it is assumed equal for all of them. The value of \(r_0\) is chosen by minimizing the leave-one-out cross-validation (LOOCV) error. LOOCV involves constructing the response surface with n − 1 points and checking the approximation error at the left-out point. This procedure is repeated by leaving out each of the n points in turn, and the squared errors are summed. The value of \(r_0\) that minimizes the LOOCV error is adopted for the RBF response surface:

\[
\text{LOOCV}(r_0)=\sum_{i=1}^{n}\left[y_i-g_i({{\varvec{X}}_i})\right]^2 \tag{3}
\]

where \({g_i}({{\varvec{X}}_{\varvec{i}}})\) is the output approximated at the left-out point by the response surface constructed from the remaining n − 1 points, and \(y_i\) is the actual output obtained from the numerical model at that point. MC simulation can then be performed by generating random vectors from the input space and substituting them in the response surface, from which the reliability index of the structure is estimated.
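The two steps described above (fitting the augmented RBF and choosing \(r_0\) by LOOCV) can be sketched in Python with NumPy. This is a minimal sketch under stated assumptions, not the authors' implementation: the polynomial \(P({\varvec{X}})\) is assumed to be linear, the usual orthogonality condition \(\sum_i \lambda_i P_j({\varvec{X}}_i)=0\) is assumed to close the linear system, and Wendland's compactly supported function \((1-t)^5_+(5t+1)\) is swapped in as a stand-in for Wu's type-II function, which is not reproduced here. The names `fit_augmented_rbf`, `loocv_error` and `select_r0` and the test function are hypothetical.

```python
import numpy as np


def cs_rbf(t):
    """Compactly supported RBF: Wendland's (1 - t)^5_+ (5t + 1), a stand-in for
    Wu's type-II function (positive definite for up to 5 inputs)."""
    return np.where(t < 1.0, (1.0 - t) ** 5 * (5.0 * t + 1.0), 0.0)


def fit_augmented_rbf(X, y, r0):
    """Fit g(X) = sum_i lam_i phi(||X - X_i|| / r0) + sum_j b_j P_j(X),
    with an assumed linear tail P = [1, x_1, ..., x_d]."""
    n, d = X.shape
    dist = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=2)
    A = cs_rbf(dist / r0)                       # n x n RBF matrix
    P = np.hstack([np.ones((n, 1)), X])         # n x m monomial matrix, m = d + 1
    m = P.shape[1]
    # Interpolation rows plus orthogonality rows sum_i lam_i P_j(X_i) = 0
    K = np.block([[A, P], [P.T, np.zeros((m, m))]])
    coef = np.linalg.solve(K, np.concatenate([y, np.zeros(m)]))
    lam, b = coef[:n], coef[n:]

    def g(Xq):
        Xq = np.atleast_2d(Xq)
        dq = np.linalg.norm(Xq[:, None, :] - X[None, :, :], axis=2)
        Pq = np.hstack([np.ones((Xq.shape[0], 1)), Xq])
        return cs_rbf(dq / r0) @ lam + Pq @ b

    return g


def loocv_error(X, y, r0):
    """Sum of squared errors at each left-out point."""
    err = 0.0
    for i in range(len(y)):
        keep = np.arange(len(y)) != i
        g_i = fit_augmented_rbf(X[keep], y[keep], r0)
        err += (y[i] - g_i(X[i])[0]) ** 2
    return err


def select_r0(X, y, candidates):
    """Return the candidate support radius with the smallest LOOCV error."""
    errors = [loocv_error(X, y, r0) for r0 in candidates]
    return candidates[int(np.argmin(errors))]


# Usage on a hypothetical 2-input test function (not the slope model)
rng = np.random.default_rng(0)
X = rng.uniform(0.0, 1.0, size=(30, 2))
y = 1.2 + 0.5 * X[:, 0] - 0.3 * X[:, 1] ** 2
r0 = select_r0(X, y, [0.3, 0.6, 1.0, 1.5])
g = fit_augmented_rbf(X, y, r0)
print(r0, g([0.5, 0.5]))
```

Because the polynomial tail is part of the fit, the surface reproduces a linear response exactly and interpolates the training outputs; a grid search over \(r_0\) is used here only for simplicity.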

2.2 Global Sensitivity Analysis

Global sensitivity analysis (GSA) is conducted to identify the input parameters (rock properties) that contribute significantly to the variability in the output (FOS). This fulfils two objectives: (1) resources can be allocated efficiently towards estimating the distribution type and parameters of the input variables; (2) the computational burden can be reduced by dropping input variables that do not influence the output. GSA is advantageous because it considers the whole range of variation of each parameter in the input space while the other parameters also vary, in contrast to local sensitivity analysis, which deals with the impact of small perturbations of the input parameters around a nominal point. In this study, GSA was used to identify the input parameters for which bootstrap resampling must be conducted. The other input variables, which do not influence the FOS of the slope significantly, were treated as random variables whose distributions and parameters were derived from the limited available data or from the literature, and no bootstrap resampling was conducted for them. GSA was conducted via the Sobol/Saltelli method (Saltelli et al. 2008), which computes variance-based sensitivity indices that quantify the relative contribution of each input parameter to the output variability. Sobol variance decomposition represents the performance function as a high-dimensional model representation (HDMR), from which the first-order, interaction and total effects of the input parameters are determined. A detailed explanation of this method is given in Saltelli et al. (2008). In this study, only the first-order and total effects of the parameters are determined.

The first-order index gives the main contribution of each input parameter, excluding any contribution of that parameter from the interaction terms. For the ith input parameter, it is computed using the following equation:

\[
S_i=\frac{V\left[E\left(Y\mid X_i\right)\right]}{V(Y)} \tag{4}
\]

where V is the variance, E is the expectation, \(Y=g({\varvec{X}})\) is the output as a function of the input vector X and \(X_i\) is the ith component of X. The total effect of an input parameter includes its main (first-order) contribution as well as the contributions from all higher-order interaction effects. It is computed as:
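
\[
S_{Ti}=\frac{E\left[V\left(Y\mid {{\varvec{X}}_{\sim i}}\right)\right]}{V(Y)}=1-\frac{V\left[E\left(Y\mid {{\varvec{X}}_{\sim i}}\right)\right]}{V(Y)} \tag{5}
\]
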
where \({{\varvec{X}}_{\sim i}}\) is a vector having all components except the ith component of X.

Saltelli (2002) provides an MC-based numerical procedure to compute the first and total order Sobol indices. The number of quasi-random samples must be gradually increased until the computed Sobol indices become almost constant. This procedure is described in more detail in the “Appendix”.
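The first-order and total indices defined above can be estimated by MC sampling. The sketch below is a hedged illustration, not the paper's Appendix procedure: it uses the widely used "pick-freeze" estimators (a Saltelli-type first-order estimator and the Jansen total-effect estimator) with plain pseudo-random samples instead of quasi-random ones. The function name `sobol_indices` and the linear test model, whose indices are known analytically (0.9, 0.1 and 0), are hypothetical.

```python
import numpy as np


def sobol_indices(model, sample_inputs, n, rng):
    """Estimate first-order (S1) and total-effect (ST) Sobol indices.

    model(X) maps an (n, d) array to n outputs; sample_inputs(n, rng) draws
    n independent input vectors from their joint distribution.
    """
    A = sample_inputs(n, rng)
    B = sample_inputs(n, rng)
    fA, fB = model(A), model(B)
    var_y = np.var(np.concatenate([fA, fB]), ddof=1)
    d = A.shape[1]
    S1, ST = np.empty(d), np.empty(d)
    for i in range(d):
        ABi = A.copy()
        ABi[:, i] = B[:, i]          # A with its ith column taken from B
        fABi = model(ABi)
        S1[i] = np.mean(fB * (fABi - fA)) / var_y          # first order
        ST[i] = 0.5 * np.mean((fA - fABi) ** 2) / var_y    # total effect (Jansen)
    return S1, ST


# Hypothetical additive test model: Y = 3 X1 + X2 + 0 X3 with X ~ U(0, 1)
def toy_model(X):
    return 3.0 * X[:, 0] + 1.0 * X[:, 1] + 0.0 * X[:, 2]


def uniform_inputs(n, rng):
    return rng.uniform(0.0, 1.0, size=(n, 3))


rng = np.random.default_rng(1)
S1, ST = sobol_indices(toy_model, uniform_inputs, 100_000, rng)
print(np.round(S1, 2), np.round(ST, 2))
```

As in the procedure described above, n would be increased until the indices stop changing; for the slope, `model` would be the fitted response surface rather than the numerical model.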

2.3 Bootstrap Method for Characterizing Uncertainty in Distribution Type and Its Parameters

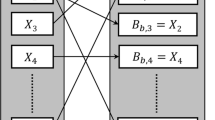

Traditional reliability analyses are often carried out in the stability analysis of different types of rock structures to account for the variability in rock mass properties (Wang et al. 2016; Dadashzadeh et al. 2017). These methods treat the input parameters as random variables, define a performance function to obtain the output from the input variables and aim to identify the failure region in the input domain. The probability of failure of rock structures evaluated by traditional reliability analysis is accurate only if the distributions of the input parameters are accurately characterized. Characterizing these distributions requires testing a large number of samples, which is often not possible in rock engineering because of the budget, effort and time involved. Sample statistics and distribution types are therefore determined from limited observations, and these parameters themselves contain estimation uncertainty. Resampling techniques such as the bootstrap (Efron 1979; Luo et al. 2013; Most and Knabe 2010; Li et al. 2015b) provide an alternative way to estimate the reliability of rock structures when data on rock properties are limited. The basic principle of the bootstrap method is to obtain bootstrap samples by resampling from the original data (measured rock properties) with replacement, i.e. sampling directly from the empirical distribution. Consider an input random variable (rock property) X having N observations \({X_1},~{X_2},~{X_3}, \ldots ,{X_N}\) with mean \({\bar {X}_N}\) and SD \(S_N\). The bootstrap sample set \({{\varvec{B}}_{\varvec{i}}}=\left\{ {{B_{i,1}},{B_{i,2}}, \ldots ,{B_{i,N}}} \right\}\) is obtained by random sampling with replacement from the original data set \({X_1},~{X_2},~{X_3}, \ldots ,{X_N}\). For each bootstrap sample, the statistical parameters (mean, SD and distribution type) of the random variable can be estimated.
This is called the non-parametric bootstrap, which requires no assumption about the underlying distribution when quantifying estimation uncertainty (de Rocquigny 2012). The procedure is repeated Ns times to obtain Ns bootstrap sample sets, which give Ns values of each statistical parameter of the random variable; from these values, the variability in the statistical parameters (mean, SD and distribution type) of the random variables, i.e. rock properties, can be estimated. Uncertainty is present in both the distribution type and the statistical parameters (mean and SD), and it is important to characterize both, as explained below.
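The non-parametric bootstrap described above amounts to drawing observation indices with replacement. A minimal NumPy sketch, assuming the observations of one rock property are stored in a 1-D array; `bootstrap_sets` is a hypothetical name and the UCS values are illustrative placeholders, not the site data:

```python
import numpy as np


def bootstrap_sets(data, n_sets, rng):
    """Return an (n_sets, N) array; row i is bootstrap set B_i drawn with
    replacement from the N original observations."""
    data = np.asarray(data, dtype=float)
    idx = rng.integers(0, data.size, size=(n_sets, data.size))
    return data[idx]


# Illustrative UCS values in MPa (placeholders, not site data)
ucs = np.array([48.0, 63.5, 55.2, 71.9, 40.3, 66.1, 58.7, 81.4, 52.6, 60.0])
rng = np.random.default_rng(2)
B = bootstrap_sets(ucs, n_sets=1000, rng=rng)

# Statistics of each bootstrap set (its mean and sample SD)
boot_means = B.mean(axis=1)
boot_sds = B.std(axis=1, ddof=1)
```

Each row has the same size N as the original data, so the spread of `boot_means` and `boot_sds` reflects how much these statistics could change with a different sample of the same size.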

2.3.1 Characterizing Probabilistic Distribution Type of Input Variables

A single best-fit probability distribution cannot be identified reliably for a random variable with a small sample size. The Akaike Information Criterion (AIC), developed by Akaike (1973), is generally used to rank candidate probability distributions and indicate which is best suited to a data set. To characterize the uncertainty in distribution type, a large number of bootstrap sample sets are generated, and each is evaluated for its AIC values to identify the best-fit distribution among the candidate distributions. The AIC of a data set associated with a probability distribution D, denoted \(\text{AIC}_D\), is defined as:

\[
\text{AIC}_D=-2\ln L_D+2K_D \tag{6}
\]

where \(L_D\) is the maximized likelihood of the data set under distribution D and \(K_D\) is the number of parameters required to fully characterize distribution D. Among all distributions, the best is the one with the lowest \(\text{AIC}_D\). Moreover, after obtaining the Ns values of \(\text{AIC}_D\) from bootstrap sampling, the AIC of the original data set can be evaluated statistically (Li et al. 2015b), with the mean AIC \(({\mu _{{\text{AI}}{{\text{C}}_D}}})\) and SD of AIC \(({\sigma _{{\text{AI}}{{\text{C}}_D}}})\) calculated as follows:
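
\[
\mu_{\text{AIC}_D}=\frac{1}{N_{\text{s}}}\sum_{i=1}^{N_{\text{s}}}\text{AIC}_{i_D} \tag{7}
\]

\[
\sigma_{\text{AIC}_D}=\sqrt{\frac{1}{N_{\text{s}}-1}\sum_{i=1}^{N_{\text{s}}}\left(\text{AIC}_{i_D}-\mu_{\text{AIC}_D}\right)^2} \tag{8}
\]
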
where \({\text{AI}}{{\text{C}}_{{i_D}}}\) is the AIC value of ith bootstrap sample associated with the distribution D.
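The AIC of a candidate distribution and its bootstrap mean and SD can be sketched for the normal and lognormal candidates, whose maximum-likelihood fits have closed forms. This is an illustrative sketch only: the Weibull candidate used for UCS needs an iterative fit and is omitted, and the helper names and placeholder data are hypothetical.

```python
import numpy as np


def aic_normal(x):
    """AIC = -2 ln L + 2K for a normal fit (K = 2) at the MLE."""
    x = np.asarray(x, dtype=float)
    var = np.mean((x - x.mean()) ** 2)          # MLE variance (divisor N)
    loglik = -0.5 * x.size * (np.log(2 * np.pi * var) + 1.0)
    return -2.0 * loglik + 2 * 2


def aic_lognormal(x):
    """AIC for a lognormal fit (K = 2): normal fit to ln x plus the Jacobian term."""
    lx = np.log(np.asarray(x, dtype=float))
    var = np.mean((lx - lx.mean()) ** 2)
    loglik = -0.5 * lx.size * (np.log(2 * np.pi * var) + 1.0) - lx.sum()
    return -2.0 * loglik + 2 * 2


def bootstrap_aic(data, n_sets, rng):
    """Mean and SD of AIC over bootstrap sets for each candidate, plus the
    share of sets in which each candidate ranks best (lowest AIC)."""
    data = np.asarray(data, dtype=float)
    idx = rng.integers(0, data.size, size=(n_sets, data.size))
    aics = np.array([[aic_normal(b), aic_lognormal(b)] for b in data[idx]])
    best_share = np.bincount(aics.argmin(axis=1), minlength=2) / n_sets
    return aics.mean(axis=0), aics.std(axis=0, ddof=1), best_share


ucs = np.array([48.0, 63.5, 55.2, 71.9, 40.3, 66.1, 58.7, 81.4, 52.6, 60.0])  # placeholder data
mu_aic, sd_aic, share = bootstrap_aic(ucs, 1000, np.random.default_rng(3))
print(dict(zip(["normal", "lognormal"], zip(mu_aic, sd_aic, share))))
```

The share of bootstrap sets in which each candidate wins is a compact way to see the distribution-type uncertainty that a single AIC ranking on the original data would hide.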

2.3.2 Characterizing Uncertainty in Parameters (Mean and SD) of Input Variables

To estimate the distribution parameters, sample statistics are evaluated for each bootstrap sample. The mean (\({\bar {B}_i}\)) and SD (\({S_i}\)) of the ith bootstrap sample are calculated as:
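
\[
\bar{B}_i=\frac{1}{N}\sum_{j=1}^{N}B_{i,j} \tag{9}
\]

\[
S_i=\sqrt{\frac{1}{N-1}\sum_{j=1}^{N}\left(B_{i,j}-\bar{B}_i\right)^2} \tag{10}
\]
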
Through the bootstrap samples, the statistics of the original data set can themselves be evaluated statistically, i.e. each statistic (mean, SD) of the original data set can be described by its own mean and SD (Most and Knabe 2010; Luo et al. 2013; Li et al. 2015b). Thus, the bootstrap mean and SD of the original data set mean are estimated as:
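
\[
\left[\bar{X}_{N_{\text{s}}}\right]_{\text{mean}}=\frac{1}{N_{\text{s}}}\sum_{i=1}^{N_{\text{s}}}\bar{B}_i \tag{11}
\]

\[
\sigma_{\bar{X}_{N_{\text{s}}}}=\sqrt{\frac{1}{N_{\text{s}}-1}\sum_{i=1}^{N_{\text{s}}}\left(\bar{B}_i-\left[\bar{X}_{N_{\text{s}}}\right]_{\text{mean}}\right)^2} \tag{12}
\]
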
Similarly, the bootstrap mean and SD of the original data set SD are estimated as:
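
\[
\left[S_{N_{\text{s}}}\right]_{\text{mean}}=\frac{1}{N_{\text{s}}}\sum_{i=1}^{N_{\text{s}}}S_i \tag{13}
\]

\[
\sigma_{S_{N_{\text{s}}}}=\sqrt{\frac{1}{N_{\text{s}}-1}\sum_{i=1}^{N_{\text{s}}}\left(S_i-\left[S_{N_{\text{s}}}\right]_{\text{mean}}\right)^2} \tag{14}
\]
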
A sufficiently large value of Ns leads to converged values of the bootstrap mean and SD of the sample mean and SD (Most and Knabe 2010).
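The bootstrap mean and SD of the sample mean and sample SD, together with the convergence check on Ns, can be sketched as follows. The function name `bootstrap_statistics`, the placeholder data and the sequence of Ns values are hypothetical choices for illustration.

```python
import numpy as np


def bootstrap_statistics(data, n_sets, rng):
    """Bootstrap mean and SD of the sample mean and of the sample SD."""
    data = np.asarray(data, dtype=float)
    idx = rng.integers(0, data.size, size=(n_sets, data.size))
    B = data[idx]
    means = B.mean(axis=1)            # mean of each bootstrap set
    sds = B.std(axis=1, ddof=1)       # sample SD of each bootstrap set
    return {
        "mean_of_mean": means.mean(), "sd_of_mean": means.std(ddof=1),
        "mean_of_sd": sds.mean(), "sd_of_sd": sds.std(ddof=1),
    }


ucs = np.array([48.0, 63.5, 55.2, 71.9, 40.3, 66.1, 58.7, 81.4, 52.6, 60.0])  # placeholder data
rng = np.random.default_rng(4)
# Increase Ns until the four statistics stop changing appreciably
for n_sets in (100, 500, 1000, 5000, 20000):
    stats = bootstrap_statistics(ucs, n_sets, rng)
    print(n_sets, {k: round(v, 3) for k, v in stats.items()})
```

For the sample mean, the converged bootstrap SD should approach the plug-in standard error \(\sqrt{\sum_j (X_j-\bar{X}_N)^2/N}/\sqrt{N}\), which gives a quick sanity check on the chosen Ns.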

2.4 Proposed Methodology to Estimate Reliability of Rock Structure with Uncertain Input Parameters Distributions

This section describes the methodology adopted to estimate the reliability index of rock slopes from limited available data. The basic principle of the proposed methodology is to estimate the range of the reliability index using the bootstrap method coupled with response surface analysis. Figure 1 provides a flowchart of the proposed methodology, whose steps are as follows.

Step 1: construct a numerical model that takes rock mass properties as input and outputs the system response. Define a failure condition of the rock structure based on exceedance of a threshold value of the output. Then, evaluate the numerical model at a set of sample vectors from the input space and construct the response surface as described in Sect. 2.1. To increase the accuracy of the response surface, increase the number of sample vectors and reconstruct the response surface until acceptable accuracy is reached. Any type of response surface can be used, provided its accuracy is sufficient throughout the input space. In this paper, an augmented RBF-based response surface is adopted because it is accurate for both linear and highly nonlinear performance functions.

Step 2: conduct a global sensitivity analysis to identify the parameters that strongly influence the variability of the output. The first-order and total-effect Sobol indices quantify the relative contribution of each input parameter to the variability in the output. The methodology of sensitivity analysis is given in Sect. 2.2. A set of candidate probability distributions is also defined for each sensitive parameter, based on the literature.

Step 3: determine the number of bootstrap samples to be drawn for each sensitive parameter. The number of bootstrap samples Ns must be large enough that, for each parameter X, the bootstrap mean and SD of the original data set mean \(\left( {{{\left[ {{{\bar {X}}_{{N_{\text{s}}}}}} \right]}_{{\text{mean}}}},{\sigma _{{{\bar {X}}_{{N_{\text{s}}}}}}}} \right)\) and the bootstrap mean and SD of the original data set SD \(\left( {{{\left[ {{S_{{N_{\text{s}}}}}} \right]}_{{\text{mean}}}},{\sigma _{{S_{{N_{\text{s}}}}}}}} \right)\) become nearly constant.

Step 4: for each bootstrap sample, determine its mean and SD and evaluate \(\text{AIC}_D\) for each candidate probability distribution. Select the best distribution among the candidates, and compute its parameters from the bootstrap mean and SD. Then, conduct MC simulation on the response surface with this best distribution and its parameters, and evaluate the reliability index. Repeat this for all Ns bootstrap samples. This gives Ns reliability indices, one per bootstrap sample, which are then interpreted, e.g. as a confidence interval of the reliability index.
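Steps 3 and 4 can be combined in a short sketch. Everything here is hypothetical and for illustration only: the placeholder data, the linear `surrogate` standing in for the fitted RBF response surface, the restriction to normal and lognormal candidates and the omission of truncation. Lognormal parameters are obtained from the bootstrap mean and SD by moment matching, and the reliability index uses the lognormal formula applied in Sect. 3.2 (failure at FOS < 1).

```python
import numpy as np


def _aic(x, log=False):
    """AIC (K = 2) of a normal fit to x, or of a lognormal fit if log=True."""
    z = np.log(x) if log else x
    var = np.mean((z - z.mean()) ** 2)
    loglik = -0.5 * z.size * (np.log(2 * np.pi * var) + 1.0)
    if log:
        loglik -= z.sum()                        # Jacobian of the log transform
    return 2 * 2 - 2 * loglik


def sample_best_fit(x, size, rng):
    """Select normal or lognormal by AIC, parameterize it from the sample
    mean and SD, and draw `size` values from it."""
    m, s = x.mean(), x.std(ddof=1)
    if _aic(x, log=True) < _aic(x):
        s_ln = np.sqrt(np.log(1.0 + (s / m) ** 2))      # moment matching
        return rng.lognormal(np.log(m) - 0.5 * s_ln**2, s_ln, size)
    return rng.normal(m, s, size)


def reliability_index(fos):
    """Reliability index for a lognormally distributed FOS (failure at FOS < 1)."""
    mu = fos.mean()
    v = fos.std(ddof=1) / mu
    return np.log(mu / np.sqrt(1.0 + v**2)) / np.sqrt(np.log(1.0 + v**2))


def bootstrap_reliability(ucs, gsi, surrogate, n_sets, n_mc, rng):
    """Return one reliability index per bootstrap set (Step 4)."""
    betas = np.empty(n_sets)
    for k in range(n_sets):
        b_ucs = rng.choice(ucs, size=ucs.size, replace=True)
        b_gsi = rng.choice(gsi, size=gsi.size, replace=True)
        fos = surrogate(sample_best_fit(b_ucs, n_mc, rng),
                        sample_best_fit(b_gsi, n_mc, rng))
        betas[k] = reliability_index(fos)
    return betas


# Placeholder data and a hypothetical linear surrogate FOS(UCS, GSI)
ucs = np.array([48.0, 63.5, 55.2, 71.9, 40.3, 66.1, 58.7, 81.4, 52.6, 60.0])
gsi = np.array([42.0, 50.0, 38.0, 55.0, 47.0, 44.0, 52.0, 40.0, 49.0, 46.0])


def surrogate(u, g):
    return 0.2 + 0.01 * u + 0.01 * g


rng = np.random.default_rng(5)
betas = bootstrap_reliability(ucs, gsi, surrogate, n_sets=200, n_mc=20_000, rng=rng)
ci_low, ci_high = np.percentile(betas, [5, 95])      # 90% interval of R
print(f"R in [{ci_low:.2f}, {ci_high:.2f}]")
```

The result is a set of reliability indices rather than one value, which is what allows the stability to be reported as an interval.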

3 Case Study

3.1 General Details

The slope selected for the case study is part of an important mining project, the Ganajur Gold Mining Project in the Karnataka region of India. Open pit mining was adopted to extract the gold deposits at the site, which requires stability analysis of the open pit slopes. One of these large slopes is selected for the current study to demonstrate the proposed methodology. The slope is situated near Ganajur village, at 14°49′54.08″–14°50′16.84″N latitude and 75°24′16.57″–75°24′48.39″E longitude. Figure 2 shows the location map of the site.

The major rock types at the site are greywacke and inter-bedded banded auriferous ferruginous chert (the banded iron formation), which are part of the Shimoga greenstone belt. Figure 3 shows a photograph of the site. As observed during exploratory drilling at the site, the geological features of the rock joints and rock mass varied with location. Four major joint sets were observed along with random joint sets. Joint parameters were characterized using ISRM-suggested methods (ISRM 1981). Undulating joints with smooth to rough texture were most common. Water conditions along the joints varied from wet to dry at different locations at the site. Joint spacing varied from extremely close to wide at greater depth. The rock mass was classified according to several well-accepted rock mass classification systems. Because rock mass quality varied across the site, a range of ratings is provided along with their average values. Table 1 shows the average value and range of the rock mass classification ratings for the rock mass at the site. For more details on the geology of this site, refer to Pandit et al. (2018).

Samples of intact rock were collected during drilling to determine various intact rock properties. The statistics of the intact rock properties, i.e. UCS, Young’s modulus (\({E_i}\)), Poisson’s ratio (ν), tensile strength (σt) and unit weight (γ), estimated using the laboratory testing methods suggested by ISRM (1981), are given in Table 2.

3.2 Stability Analysis of the Slope by Characterizing the Rock Mass Strength Parameters Using Fewer Data

Traditional reliability analysis was carried out by MC simulation on the response surface, with the distribution types and parameters of the input random variables determined from the limited samples. To construct the response surface, the FOS of the slope is evaluated at n vectors of the input parameters, i.e. UCS, the Hoek–Brown constant for intact rock (mi), Ei and GSI, obtained by LH sampling (Montgomery 2001) of the input parameter space. Since the FOS of the slope is governed by rock mass parameters, these n vectors are converted into rock mass properties using the relations provided by Hoek et al. (2002) and Hoek and Diederichs (2006), which serve as input to the numerical model.
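The LH design and the conversion from intact rock properties to rock mass properties can be sketched as below. This is a hedged illustration: the bounds are placeholders (the actual ranges follow from Table 2), the disturbance factor D is an assumed input because its value for this slope is not stated in this section, and the helper names are hypothetical. The conversion uses the generalized Hoek–Brown relations (Hoek et al. 2002) and the Hoek–Diederichs (2006) rock mass modulus equation as they are commonly quoted.

```python
import numpy as np


def latin_hypercube(n, bounds, rng):
    """n LH samples; bounds is a (d, 2) array of [low, high] per input."""
    bounds = np.asarray(bounds, dtype=float)
    d = bounds.shape[0]
    # Random permutation of the n strata per column, jittered within each stratum
    strata = np.argsort(rng.random((n, d)), axis=0)
    u = (strata + rng.random((n, d))) / n
    return bounds[:, 0] + u * (bounds[:, 1] - bounds[:, 0])


def rock_mass_properties(ucs, mi, ei, gsi, D=0.0):
    """Generalized Hoek-Brown parameters and rock mass modulus from intact
    properties and GSI; D (disturbance factor) is an assumed input."""
    mb = mi * np.exp((gsi - 100.0) / (28.0 - 14.0 * D))
    s = np.exp((gsi - 100.0) / (9.0 - 3.0 * D))
    a = 0.5 + (np.exp(-gsi / 15.0) - np.exp(-20.0 / 3.0)) / 6.0
    erm = ei * (0.02 + (1.0 - D / 2.0) / (1.0 + np.exp((60.0 + 15.0 * D - gsi) / 11.0)))
    return {"sigci": ucs, "mb": mb, "s": s, "a": a, "Erm": erm}


# Placeholder bounds for UCS (MPa), mi, Ei (GPa) and GSI; D = 0.7 is a placeholder too
bounds = [[20.0, 150.0], [10.0, 25.0], [20.0, 80.0], [25.0, 70.0]]
rng = np.random.default_rng(6)
design = latin_hypercube(75, bounds, rng)
props = rock_mass_properties(*design.T, D=0.7)
```

Each column of the design has exactly one point in each of the n equal-probability strata, which is what spreads the 75 model runs over the whole input space.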

Numerical calculations were carried out using the finite difference program FLAC2D (Itasca 2011) in plane strain mode. Figure 4 shows the numerical model prepared in FLAC2D. The benches of the slope are 10 m high and inclined at 68°. The bottom boundary of the model is fixed, with no displacement allowed in any direction. Along the lateral boundaries, displacement is allowed in the vertical direction and restrained in the horizontal direction. The slope face and the upper boundary were kept free, allowing displacement in both directions. The factor of safety was estimated using the strength reduction technique. The slope was discretized into 12,349 zones; this number was decided using the convergence technique suggested by Pain et al. (2014), in which the number of zones is increased until the factor of safety becomes constant, i.e. the results become independent of the number of zones. The Hoek–Brown model was adopted as the yield criterion to estimate the yielded zone in the slope. Statistical parameters of the input intact rock properties are shown in Table 2.

A total of 75 samples from the input parameter space are used to construct the response surface, which takes UCS, mi, Ei and GSI as input and gives the FOS of the slope as output. The LOOCV optimization is carried out in Matlab (2016). The accuracy of the response surface is checked with three quantitative indices, namely the Nash–Sutcliffe efficiency (NSE), percent bias (PBIAS) and the ratio of the root-mean-square error to the SD of the observed data (RSR) (Moriasi et al. 2007; Pandit and Babu 2017), using 25 out-of-sample points generated by LH sampling. The response surface performance was rated very good on all three indices, indicating a highly accurate approximation of the FOS of the slope.
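The three accuracy indices can be written directly from their definitions. A minimal sketch with hypothetical function names; `obs` would be the FOS values from FLAC2D at the 25 check points and `sim` the response-surface predictions, here replaced by made-up numbers. The thresholds in the comment are the "very good" ratings as commonly cited from Moriasi et al. (2007), which were derived for streamflow.

```python
import numpy as np

# Commonly cited "very good" ratings: NSE > 0.75, RSR <= 0.50, |PBIAS| < 10 %


def nse(obs, sim):
    """Nash-Sutcliffe efficiency: 1 - SSE / total sum of squares of obs."""
    obs, sim = np.asarray(obs, float), np.asarray(sim, float)
    return 1.0 - np.sum((obs - sim) ** 2) / np.sum((obs - obs.mean()) ** 2)


def pbias(obs, sim):
    """Percent bias: 100 * sum(obs - sim) / sum(obs)."""
    obs, sim = np.asarray(obs, float), np.asarray(sim, float)
    return 100.0 * np.sum(obs - sim) / np.sum(obs)


def rsr(obs, sim):
    """RMSE divided by the SD of the observations."""
    obs, sim = np.asarray(obs, float), np.asarray(sim, float)
    return np.sqrt(np.sum((obs - sim) ** 2)) / np.sqrt(np.sum((obs - obs.mean()) ** 2))


# Made-up check values (not results from the paper)
obs = np.array([1.82, 2.10, 1.65, 2.40, 1.95])
sim = np.array([1.80, 2.13, 1.66, 2.36, 1.97])
print(nse(obs, sim), pbias(obs, sim), rsr(obs, sim))
```

Note that RSR and NSE are not independent: by construction \(\text{RSR}^2 = 1-\text{NSE}\), so PBIAS is the index that adds information about systematic over- or under-prediction.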

After the response surface is constructed, \(10^6\) MC simulations are performed with the input parameters characterized from the original data set of rock properties, and the mean and SD of the FOS of the slope are computed. Failure of the slope is defined as FOS < 1 in an MC realization. A lognormal distribution was observed to fit the FOS data from the MC simulations best, as shown in Fig. 5. The reliability index (R) is calculated as:
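
\[
R=\frac{\ln\left(\mu_{\text{FOS}}/\sqrt{1+V_{\text{FOS}}^2}\right)}{\sqrt{\ln\left(1+V_{\text{FOS}}^2\right)}} \tag{15}
\]
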
where \(\mu_{\text{FOS}}\) is the mean FOS and \(V_{\text{FOS}}\) is the coefficient of variation of FOS. The results of the reliability analysis are given in Table 3, which includes the mean and SD of FOS, the reliability index, the probability of failure and the expected performance level. According to the classification of the U.S. Army Corps of Engineers (1999), the expected performance level of the slope determined from the reliability index falls only in the Above Average category despite the high mean FOS, because the large variation in rock properties led to a high coefficient of variation of FOS.
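For a lognormal FOS with failure defined as FOS < 1, the reliability index follows from \(\mu_{\text{FOS}}\) and \(V_{\text{FOS}}\), and the probability of failure is \(\Phi(-R)\). The sketch below checks this against a direct MC count on a synthetic lognormal sample and maps R to a performance level. The threshold values are the target reliability indices commonly quoted from U.S. Army Corps of Engineers (1999); assigning the level whose threshold R meets or exceeds is an assumption of this sketch, and the function names are hypothetical.

```python
import math

import numpy as np


def lognormal_reliability_index(mu_fos, v_fos):
    """R = ln(mu / sqrt(1 + V^2)) / sqrt(ln(1 + V^2)) for lognormal FOS, failure at FOS < 1."""
    zeta2 = math.log(1.0 + v_fos**2)
    return math.log(mu_fos / math.sqrt(1.0 + v_fos**2)) / math.sqrt(zeta2)


def probability_of_failure(r):
    """P(FOS < 1) = Phi(-R) for lognormal FOS."""
    return 0.5 * math.erfc(r / math.sqrt(2.0))


def performance_level(r):
    """Expected performance level from R (commonly quoted USACE 1999 thresholds)."""
    levels = [(5.0, "High"), (4.0, "Good"), (3.0, "Above average"),
              (2.5, "Below average"), (2.0, "Poor"), (1.5, "Unsatisfactory")]
    for threshold, name in levels:
        if r >= threshold:
            return name
    return "Hazardous"


# Synthetic check: ln(FOS) ~ N(0.5, 0.25^2), so R = 0.5 / 0.25 = 2 exactly
rng = np.random.default_rng(7)
fos = rng.lognormal(0.5, 0.25, 1_000_000)
r = lognormal_reliability_index(fos.mean(), fos.std(ddof=1) / fos.mean())
pf_mc = np.mean(fos < 1.0)
print(r, probability_of_failure(r), pf_mc, performance_level(r))
```

The check illustrates why a slope with a high mean FOS can still rate modestly: R depends on \(V_{\text{FOS}}\) as much as on \(\mu_{\text{FOS}}\).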

4 Stability Analysis of the Slope Using Proposed Method

As mentioned in the previous sections, stability analysis of the slope by the proposed methodology involves four major steps. The first step, construction of the response surface, has already been explained in Sect. 3.2. The following sections describe steps two to four in detail for the case study to show the applicability and efficiency of the proposed method.

4.1 Global Sensitivity Analysis

In this section, global sensitivity analysis (GSA) is conducted to identify the input parameters (rock mass properties) that contribute significantly to the variability in the output (FOS), as in step 2 of Fig. 1. The sensitivity indices of the four input parameters, namely UCS, mi, Ei and GSI, are computed as described in Sect. 2.2 and given in Table 4. The number of quasi-random samples k was taken as \(10^5\). UCS and GSI contribute almost equally to the variability of FOS in terms of both first-order and total effects. Therefore, only UCS and GSI are treated as sensitive parameters and considered for bootstrap analysis.

4.2 Estimation of Uncertainty in Distributions and Statistical Parameters of Rock Mass Properties

After identifying the most sensitive rock mass properties (UCS and GSI), the uncertainty in the distributions and statistical parameters of these properties is estimated in this section (step 3 in Fig. 1). To estimate the probability distribution of GSI, the following relation between GSI, RQD and the joint condition rating of RMR (Bieniawski 1989), JCond89, provided by Hoek et al. (2013), was used:

\[
\text{GSI}=1.5\,\text{JCond}_{89}+\text{RQD}/2 \tag{16}
\]

The original data sets of UCS, RQD and JCond89 obtained from laboratory and field investigations are given in Table 5. All three parameters were assumed independent. Since UCS and GSI (through RQD and JCond89) are the most sensitive parameters governing the stability of the rock slope, bootstrap analysis is performed only on these two parameters. For each bootstrap sample of UCS, the bootstrap mean, SD and AIC values corresponding to three well-accepted distributions are computed. Similarly, for the bootstrap samples of RQD and JCond89, the bootstrap mean, SD and AIC values associated with their candidate distributions are evaluated. Furthermore, the mean, SD and COV of the sample mean, SD and AIC values of the original data set are estimated. The empirical distributions of the bootstrap mean and SD estimates are smoothed using kernel density estimation (Bowman and Azzalini 1997) and plotted. A value of Ns = 1000 was deemed sufficient for the analysis in this paper, as demonstrated in Fig. 6.
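Kernel density smoothing of the bootstrap estimates can be sketched with a Gaussian kernel. The bandwidth rule below, the normal-reference choice \(h=(4/(3n))^{1/5}\,s\), is an assumption because the bandwidth used in the paper is not stated; the function name and placeholder data are hypothetical.

```python
import numpy as np


def gaussian_kde(samples, grid, bandwidth=None):
    """Gaussian kernel density estimate of `samples` evaluated on `grid`."""
    x = np.asarray(samples, dtype=float)
    n = x.size
    if bandwidth is None:
        # Normal-reference bandwidth (assumed choice)
        bandwidth = (4.0 / (3.0 * n)) ** 0.2 * x.std(ddof=1)
    z = (np.asarray(grid, dtype=float)[:, None] - x[None, :]) / bandwidth
    return np.exp(-0.5 * z**2).sum(axis=1) / (n * bandwidth * np.sqrt(2.0 * np.pi))


# Usage: smooth 1000 bootstrap means of placeholder UCS data
ucs = np.array([48.0, 63.5, 55.2, 71.9, 40.3, 66.1, 58.7, 81.4, 52.6, 60.0])
rng = np.random.default_rng(8)
boot_means = ucs[rng.integers(0, ucs.size, size=(1000, ucs.size))].mean(axis=1)
grid = np.linspace(boot_means.min() - 10, boot_means.max() + 10, 2001)
pdf = gaussian_kde(boot_means, grid)
```

The smoothed curve is only a display aid; the bootstrap statistics themselves are computed from the raw bootstrap values.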

The candidate distributions of RQD were the normal and lognormal distributions (Basarir et al. 2016; Madani et al. 2018), while JCond89 was assumed to be normally distributed (Basarir et al. 2016). First, the bootstrap mean, SD and AIC values were computed for RQD and JCond89. Subsequently, for each bootstrap sample of RQD and JCond89, the bootstrap mean, SD and AIC values of GSI were estimated by conducting \(10^5\) MC simulations of Eq. (16) after assigning each parameter its best-fit distribution and parameters. The resulting MC samples of GSI were evaluated for the mean, SD and AIC values associated with the candidate distributions of GSI.
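The MC propagation through Eq. (16) for a single bootstrap sample might look as follows. This sketch assumes that the best-fit distributions are a lognormal RQD and a normal JCond89, that the two are independent, and that no truncation is applied; the function names and the parameter values are hypothetical and only illustrate the mechanics.

```python
import math
import random
import statistics


def lognormal_params(mean, sd):
    """Convert an arithmetic mean and SD into the (mu, sigma) of ln X."""
    s2 = math.log(1.0 + (sd / mean) ** 2)
    return math.log(mean) - 0.5 * s2, math.sqrt(s2)


def simulate_gsi(rqd_mean, rqd_sd, jc_mean, jc_sd, n_mc=100_000, seed=0):
    """MC samples of GSI = 1.5 JCond89 + RQD/2 (Eq. 16) for one bootstrap sample,
    assuming lognormal RQD and normal JCond89 (independent, untruncated)."""
    rng = random.Random(seed)
    mu_ln, sigma_ln = lognormal_params(rqd_mean, rqd_sd)
    return [1.5 * rng.gauss(jc_mean, jc_sd) + 0.5 * rng.lognormvariate(mu_ln, sigma_ln)
            for _ in range(n_mc)]


# Hypothetical best-fit parameters of one bootstrap sample (not from the paper).
gsi = simulate_gsi(rqd_mean=60.0, rqd_sd=15.0, jc_mean=15.0, jc_sd=3.0)
print(statistics.fmean(gsi), statistics.stdev(gsi))
```

For these inputs the exact mean of GSI is 1.5 × 15 + 60/2 = 52.5 and the exact SD is √(4.5² + 7.5²) = √76.5 ≈ 8.75, so the printed MC estimates should lie close to these values. Repeating the call for each of the Ns bootstrap parameter sets gives the bootstrap distribution of the GSI statistics.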

For UCS, the candidate distributions are the normal, lognormal and Weibull distributions, truncated at 0 MPa and 152 MPa. For GSI, the normal and lognormal distributions were considered, with truncation limits of 0 and 70. These distributions have been commonly observed and adopted for UCS and GSI in previous studies (Jiang et al. 2016; Morelli 2015). Figure 7 shows the PDFs of the AIC values associated with the respective distributions after kernel density smoothing. Bootstrap estimates of the mean and SD of AIC are provided in Table 6. The SD of AIC is high, indicating that the AIC values estimated from the small original data set are subject to large variation. For UCS, the SDs of AIC associated with the normal and Weibull distributions are comparable, whereas the SD is slightly higher for the lognormal distribution. For GSI, the mean AIC value is higher for the normal distribution than for the lognormal distribution; however, the SD of AIC is lower for the former. These variations in AIC values indicate uncertainty in the type of best-fit PDF for a given input parameter.
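To make the AIC comparison concrete, the sketch below computes \(\text{AIC}_D = 2K_D - 2\ln L_D\) for normal, lognormal and Weibull fits obtained by maximum likelihood. It is a simplification under stated assumptions: the distributions are fitted untruncated (the paper truncates UCS at 0 and 152 MPa), the Weibull shape is found by bisection within an assumed bracket of 0.001 to 100, and the function names and the synthetic data are hypothetical.

```python
import math
import random
import statistics


def loglik_normal(x, mu, sigma):
    """Normal log-likelihood of the data x."""
    return sum(-0.5 * math.log(2 * math.pi * sigma ** 2) - (v - mu) ** 2 / (2 * sigma ** 2)
               for v in x)


def fit_weibull(x, iters=100):
    """Weibull MLE: shape k by bisection on its score equation, then scale lam."""
    xmax = max(x)
    z = [v / xmax for v in x]              # rescaling leaves the shape equation unchanged
    logz = [math.log(v) for v in z]
    mean_logz = statistics.fmean(logz)

    def score(k):                          # increasing in k, zero at the MLE
        zk = [v ** k for v in z]
        return sum(a * b for a, b in zip(zk, logz)) / sum(zk) - 1.0 / k - mean_logz

    lo, hi = 1e-3, 100.0                   # assumed bracket for the shape parameter
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if score(mid) > 0:
            hi = mid
        else:
            lo = mid
    k = 0.5 * (lo + hi)
    lam = xmax * statistics.fmean([v ** k for v in z]) ** (1.0 / k)
    return k, lam


def aic_candidates(x):
    """AIC_D = 2 K_D - 2 ln L_D for untruncated normal, lognormal and Weibull fits (K_D = 2)."""
    logs = [math.log(v) for v in x]
    k, lam = fit_weibull(x)
    ln_l = {
        # The normal MLE uses the population (1/N) SD.
        "normal": loglik_normal(x, statistics.fmean(x), statistics.pstdev(x)),
        # ln X is normal; subtract sum(ln x) for the change of variable y = ln x.
        "lognormal": loglik_normal(logs, statistics.fmean(logs), statistics.pstdev(logs)) - sum(logs),
        "weibull": sum(math.log(k / lam) + (k - 1) * math.log(v / lam) - (v / lam) ** k for v in x),
    }
    return {name: 2 * 2 - 2 * value for name, value in ln_l.items()}


# Synthetic Weibull data (shape 2, scale 100); illustration only.
rng = random.Random(1)
sample = [rng.weibullvariate(100.0, 2.0) for _ in range(2000)]
print(fit_weibull(sample))
print(aic_candidates(sample))
```

The candidate with the lowest AIC is preferred; applying `aic_candidates` to every bootstrap sample yields the spread of AIC values that Fig. 7 summarizes.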

Figure 8 shows the probability density functions (PDFs) of the bootstrap mean and SD of UCS, and their statistical estimates are provided in Table 6. As evident from Tables 2 and 6, bootstrap sampling reveals the variability in the estimates of the mean and SD of the original UCS data. The means of the bootstrap estimates match the statistics estimated from the original (small) data set; however, the SD of these estimates is quite high. Similar observations can be made from Fig. 9, which shows the PDFs of the mean and SD estimates of GSI. These variations demonstrate the uncertainty in the mean and SD estimated from the original data sets and, consequently, in the parameters of the probability distributions assigned to them.
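The smoothed PDFs of the bootstrap estimates can be approximated with a Gaussian kernel density estimate. The sketch below assumes the normal-reference bandwidth \(h = \sigma\,(4/(3n))^{1/5}\); the exact kernel and bandwidth used for the figures are not stated here, and the function name and bootstrap means are hypothetical.

```python
import math
import statistics


def gaussian_kde(samples, grid):
    """Gaussian kernel density estimate of `samples`, evaluated at each point of `grid`."""
    n = len(samples)
    h = statistics.stdev(samples) * (4.0 / (3.0 * n)) ** 0.2   # normal-reference bandwidth
    norm = 1.0 / (n * h * math.sqrt(2.0 * math.pi))
    return [norm * sum(math.exp(-0.5 * ((g - s) / h) ** 2) for s in samples) for g in grid]


# Hypothetical bootstrap means of UCS (MPa), for illustration only.
boot_means = [76.1, 78.4, 79.0, 80.2, 80.9, 81.5, 82.3, 83.8, 85.0, 87.6]
grid = [60.0 + 0.1 * i for i in range(401)]        # 60 to 100 MPa in steps of 0.1
density = gaussian_kde(boot_means, grid)
```

In practice the input would be the Ns = 1000 bootstrap means or SDs; the smoothed curve only changes their presentation, not the underlying bootstrap statistics.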

The best-fit distribution among the candidates can be identified as the PDF with the highest probability of yielding the lowest AIC value. Each of the 1000 bootstrap samples is associated with one best-fit distribution chosen from the candidate PDFs. Because a single best-fit PDF cannot be identified reliably from a small sample, different candidate PDFs were found to be the best fit with different probabilities. The number of times each candidate PDF was chosen as the best fit for UCS and GSI is given in Table 7. For UCS, the lognormal and Weibull PDFs are the best fits with probabilities of 67% and 33%, respectively. For GSI, the lognormal PDF is the best fit with a probability of 96%. The AIC values associated with the normal and lognormal distributions for UCS are very close, which makes it difficult to judge which of them is the better fit.
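Counting how often each candidate wins can be sketched as below. For brevity this illustration compares only the normal and lognormal candidates, whose maximized log-likelihoods have closed forms, and skips degenerate bootstrap samples with zero variance; the function names and the skewed example data are hypothetical.

```python
import math
import random
import statistics
from collections import Counter


def aic_normal_lognormal(x):
    """AIC = 2K - 2 ln L at the MLE for normal and lognormal fits (K = 2 each)."""
    n = len(x)
    logs = [math.log(v) for v in x]
    # Maximized log-likelihoods in closed form (the MLE variance uses 1/N).
    ll_norm = -0.5 * n * (math.log(2 * math.pi * statistics.pvariance(x)) + 1)
    ll_logn = -0.5 * n * (math.log(2 * math.pi * statistics.pvariance(logs)) + 1) - sum(logs)
    return {"normal": 4 - 2 * ll_norm, "lognormal": 4 - 2 * ll_logn}


def best_fit_frequencies(data, n_boot=1000, seed=0):
    """Fraction of bootstrap samples for which each candidate PDF has the lowest AIC."""
    rng = random.Random(seed)
    counts = Counter()
    for _ in range(n_boot):
        sample = rng.choices(data, k=len(data))
        if len(set(sample)) < 2:            # skip degenerate samples with zero variance
            continue
        aic = aic_normal_lognormal(sample)
        counts[min(aic, key=aic.get)] += 1
    total = sum(counts.values())
    return {name: counts[name] / total for name in ("normal", "lognormal")}


# Hypothetical right-skewed data set of 30 values, for illustration only.
skewed = [math.exp(statistics.NormalDist().inv_cdf((i + 0.5) / 30)) for i in range(30)]
freq = best_fit_frequencies(skewed)
print(freq)
```

The returned fractions play the role of the selection probabilities in Table 7; a result split between candidates is exactly the ambiguity in PDF type discussed above.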

4.3 Step 4: Estimation of Reliability Index of the Rock Slope

In this section, the variability in the reliability index of the slope is estimated using the estimated uncertainty in the statistical parameters and distribution types of the sensitive rock mass properties (step 4 in Fig. 1). This accounts for the effect of uncertainty in both the statistics of the input parameters and the type of their best-fit PDFs on the reliability index of the rock slope. The augmented RBF-based response surface is used to conduct the MC simulations for the statistics obtained from each bootstrap sample.

As mentioned in Sect. 4.1, only GSI and UCS are subjected to the bootstrap analysis, owing to their high sensitivity towards the FOS of the rock slope. For every bootstrap sample, the best-fit distribution of UCS and of GSI is chosen among the candidate distributions, and its parameters are computed. These distributions of UCS and GSI, along with the PDFs of the other input parameters, are input to the augmented RBF-based response surface. A total of \(10^6\) MC simulations are conducted to obtain the output, i.e. the FOS of the slope, and its statistics are determined. Thus, for 1000 bootstrap samples, 1000 sets of FOS statistics are obtained (Fig. 10), from which 1000 reliability indices of the slope are evaluated. They are plotted in Fig. 11 after kernel density smoothing of their empirical distribution. The 1000 reliability indices are best fitted by a lognormal distribution.
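The step from FOS statistics to a reliability index can be illustrated as follows. The definition used in the paper is not restated in this section, so this sketch assumes the lognormal reliability index commonly used in USACE guidance, \(\beta = \ln\left(\mu_{\text{FOS}}/\sqrt{1+V_{\text{FOS}}^2}\right)/\sqrt{\ln(1+V_{\text{FOS}}^2)}\) with the limit state FOS = 1. The FOS samples below are synthetic and stand in for the response-surface output of one bootstrap sample; the function names are hypothetical.

```python
import math
import random
import statistics


def lognormal_beta(mu_fos, v_fos):
    """Reliability index for a lognormally distributed FOS and the limit state FOS = 1."""
    s2 = math.log(1.0 + v_fos ** 2)
    return math.log(mu_fos / math.sqrt(1.0 + v_fos ** 2)) / math.sqrt(s2)


def beta_from_fos(fos_samples):
    """mu_FOS and V_FOS from one set of MC FOS values, then the lognormal beta."""
    mu = statistics.fmean(fos_samples)
    v = statistics.stdev(fos_samples) / mu
    return lognormal_beta(mu, v)


# Synthetic FOS samples standing in for the response-surface output of one bootstrap sample.
rng = random.Random(0)
fos = [rng.lognormvariate(1.0, 0.25) for _ in range(100_000)]
print(beta_from_fos(fos))   # close to 1.0 / 0.25 = 4 for this synthetic lognormal FOS
```

Calling `beta_from_fos` once per bootstrap sample produces the set of reliability indices whose distribution is then smoothed and summarized.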

The results indicate that the reliability index of the slope varies from 3.12 to 5.26, a range that spans several orders of magnitude when viewed in terms of probability of failure. The mean and SD of the reliability index are 3.83 and 0.29, respectively. The mean of the bootstrap estimates of the reliability index matches well with the value obtained from the traditional reliability method. Thus, including the uncertainties associated with the distribution parameters and distribution types of GSI and UCS allows the reliability index to be interpreted in terms of confidence intervals instead of a fixed value. Table 8 shows the reliability index intervals for the 90%, 95% and 99% confidence levels. The expected performance level ranges between “Above average” and “High” according to the classification given by U.S. Army Corps of Engineers (1999).
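Confidence intervals of the kind reported in Table 8 can be obtained from the bootstrap reliability indices with an equal-tailed percentile interval, and a reliability index can be mapped to a probability of failure as \(P_f = \Phi(-\beta)\). Both choices are assumptions of this sketch (the paper may have derived its intervals from the fitted lognormal distribution instead), and the function names are hypothetical.

```python
import math
from statistics import NormalDist


def percentile(sorted_vals, p):
    """Linear-interpolation percentile (0 <= p <= 1) of an already sorted list."""
    pos = p * (len(sorted_vals) - 1)
    lo = math.floor(pos)
    hi = min(lo + 1, len(sorted_vals) - 1)
    return sorted_vals[lo] + (pos - lo) * (sorted_vals[hi] - sorted_vals[lo])


def confidence_interval(betas, level):
    """Equal-tailed percentile interval of bootstrap reliability indices."""
    vals = sorted(betas)
    tail = (1.0 - level) / 2.0
    return percentile(vals, tail), percentile(vals, 1.0 - tail)


def failure_probability(beta):
    """P_f = Phi(-beta), assuming beta is defined in standard normal space."""
    return NormalDist().cdf(-beta)


for beta in (3.12, 3.83, 5.26):   # range and mean of beta reported above
    print(beta, failure_probability(beta))
```

With the 1000 bootstrap indices as input, `confidence_interval(betas, 0.99)` returns the 0.5% and 99.5% percentiles, which is the form of interval quoted in the conclusions.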

5 Discussion

The stability of a large rock slope is analysed using both approaches, i.e. the traditional reliability method and the proposed method, and the results are compared. Although both approaches indicate that the slope is stable, they express stability or performance in different terms, and hence the implications of their results differ. The mean FOS evaluated using both methods is close to 3.5. However, when the stability of the slope is expressed in terms of the reliability index computed using the first (traditional) approach, in which the rock mass strength parameters are characterized from the few available data, the expected performance level of the slope is “Above average”, which shows the effect of considering uncertainty in rock properties in the stability analysis. Although the mean FOS is very high, the performance level is only above average because of the high SD of the estimated FOS. When the slope stability is analysed using the proposed methodology, which uses the bootstrap method to account for the estimation uncertainty arising from the small sample size along with the inherent variability, the expected performance level of the slope ranges between “Above average” and “High”. The proposed approach thus provides a better estimate of the performance of the slope, which can help in determining stabilization measures more accurately if they are required. The technique is particularly important for rock slopes because their large scale leaves engineers more uncertain about rock property data obtained from limited laboratory or in situ investigations. Although the slope in this case study is stable according to both methods, in general a slope could be assessed as stable by one method and unstable by the other.

From a practical point of view, it is well known in rock mechanics that determining even a single rock mass property in the field requires different types of tests to be conducted at the site, because the results of one type of test cannot be relied upon alone. One example is the deformation modulus of the rock mass, which needs to be estimated using different types of field tests, e.g. the plate load test, dilatometer test and radial jack test, to obtain a better idea of rock mass deformability. Conducting different types of tests at the site, and in large numbers, is almost impossible because of the high costs involved. Further, these tests are mostly conducted inside drifts, so they capture the variability of rock mass properties only within a small area compared with the large slope dimensions. These practical issues make a reliable estimate of the rock mass properties at the site difficult, and the difficulty increases for small projects where budget constraints are severe. Statistical parameters (mean, SD) estimated from these limited tests, conducted within the small area of a drift, will not give reliable estimates of the distribution type and parameters of rock mass properties, which may ultimately lead to an incorrect estimate of the reliability of the rock slope. To overcome these problems, the proposed method uses the two most easily and commonly available rock mass parameters, i.e. UCS and GSI. It gives a more reliable estimate of slope stability by considering the estimation uncertainty along with the inherent variability in the parameters of the rock mass property distributions, and it is computationally efficient and practical.

The methodology proposed in this article is mostly applicable to rock slopes that are expected to show circular failure. Circular failure is expected in rock slopes that are heavily jointed or weathered and in which structurally controlled failures, i.e. planar, wedge or toppling failures, are not possible. However, this study can be extended to rock slopes susceptible to structurally controlled failures by carrying out the bootstrap analysis on the strength and orientation parameters of discontinuities in a similar manner. Moreover, the method accounts for inherent variability and for some types of epistemic uncertainty, such as estimation uncertainty and the propagation uncertainty arising from the limited number of MC simulations. Epistemic uncertainty arising from numerical errors in the deterministic solution method (FEM/FDM) is not considered in the proposed method and can be taken up in further studies.

6 Summary and Conclusion

Because of the natural formation of rocks, their properties are variable, which makes the determination of precise values of rock properties impossible. This problem becomes worse when the available data on rock properties are insufficient for traditional deterministic or probabilistic stability analysis methods, as is common in small projects with budget constraints or in the initial stages of large projects. This paper presents an approach for efficiently evaluating the stability of large rock slopes by addressing three major issues: (a) variability in rock properties; (b) limited availability of rock property data, as explained in the previous section; and (c) the computational effort required in the stability analysis of large slopes. The approach is based on four steps: (a) response surface construction; (b) global sensitivity analysis; (c) the bootstrap technique; and (d) estimation of the reliability index of the rock structure. A major advantage of this approach is that it requires estimation of GSI along with UCS, and GSI is now widely used to derive engineering design parameters such as the Hoek–Brown strength parameters and the deformation moduli of jointed rock masses. The approach is demonstrated for a real large rock slope to be excavated at a gold mine site. The analysis shows that uncertainty in the input parameter distribution type and its parameters can have a significant influence on the reliability index and hence must be included in the analysis. The expected performance level of the slope varies from “above average” to “high”, with the reliability index ranging from 3.19 to 4.87 for the 0.5–99.5% confidence interval. The lower bound of this interval, 3.19, falls in the “above average” performance level; however, this can be improved using stabilization techniques such as bench flattening, which is commonly used for mining slopes.
Other stabilization measures, such as rock bolting, can also be used for rock slopes in civil engineering practice. For now, however, the possibility of slope failure appears to be very small, and this small uncertainty can be dealt with using the observational construction method.

Abbreviations

- SD: Standard deviation
- UCS: Uniaxial compressive strength
- GSI: Geological Strength Index
- RBF: Radial basis function
- FOS: Factor of safety
- CCDF: Complementary cumulative distribution function
- \(X_1, X_2, X_3, \ldots, X_N\): Original data set with N observations
- \(\bar{X}_N\): Mean of original data set
- \(S_N\): SD of original data set
- \(\mathbf{B}_j\): jth bootstrap sample set of input parameter X
- \(N_{\text{s}}\): Total number of bootstraps
- \(k\): Number of quasi-random samples for estimation of Sobol indices
- AIC: Akaike Information Criterion
- \(\text{AIC}_D\): Akaike Information Criterion value associated with distribution D
- \(L_D\): Maximum value of the likelihood function of the data set for distribution D
- \(K_D\): Number of parameters required to fully characterize distribution D
- \(\mu_{\text{AIC}_D}\): Mean AIC value of distribution D
- \(\sigma_{\text{AIC}_D}\): SD of AIC value of distribution D
- \(\bar{B}_i\): Mean of ith bootstrap sample
- \(S_i\): SD of ith bootstrap sample
- \(\left[\bar{X}_{N_{\text{s}}}\right]_{\text{mean}}\): Mean of Ns bootstrap sample means
- \(\sigma_{\bar{X}_{N_{\text{s}}}}\): SD of Ns bootstrap sample means
- \(\left[S_{N_{\text{s}}}\right]_{\text{mean}}\): Mean of Ns bootstrap sample SDs
- \(\sigma_{S_{N_{\text{s}}}}\): SD of Ns bootstrap sample SDs
- MC: Monte Carlo
- PDF: Probability density function
- FEM: Finite element method
- FDM: Finite difference method
- JCond89: Joint condition factor of RMR89
- LH: Latin hypercube
- \(g(\mathbf{X})\): Performance function with vector X as input
- \(\phi(r)\): Radial basis function
- \(P_j(\mathbf{X})\): Monomial terms of augmented polynomial P(X)
- \(\lambda_i\): Unknown constants associated with ith RBF
- \(b_j\): Unknown coefficients
- \(r\): Euclidean norm (distance) of vector X from \(\mathbf{X}_i\)
- \(r_0\): Radius of compact support of RBF
- \(t\): \(r/r_0\)
- LOOCV: Leave-one-out cross-validation error
- \(y_i\): Output obtained from \(g(\mathbf{X}_i)\)
- n: Number of LH samples drawn from input space
- HDMR: High dimensional model representation
- \(S_i\): First-order Sobol index for ith input parameter
- \(S_{T_i}\): Total-effect Sobol index for ith input parameter
- \(\mathbf{X}_{\sim i}\): Input vector having all components except the ith component
- RQD: Rock quality designation
- RMR: Rock mass rating
- \(E_i\): Young’s modulus
- \(\nu\): Poisson’s ratio
- \(\sigma_t\): Tensile strength
- \(\gamma\): Unit weight of rock mass
- SSR: Shear strength reduction
- \(m_i\): Hoek–Brown constant for intact rock
- NSE: Nash–Sutcliffe efficiency
- PBIAS: Percent bias
- RSR: Ratio of root-mean-square error to SD of observed data
- R: Reliability index
- \(\mu_{\text{FOS}}\): Mean FOS obtained using a single bootstrap sample as input
- \(V_{\text{FOS}}\): Coefficient of variation of FOS obtained using a single bootstrap sample as input

References

Akaike H (1973) Information theory and an extension of the maximum likelihood principle. In: Petrov BN, Csáki F (eds) 2nd International symposium on information theory, Tsahkadsor, Armenia, USSR, September 2–8, 1971, Akadémiai Kiadó, Budapest, pp 267–281

Basarir H, Akdağ S, Karrech A, Özyurt M (2016) The estimation of rock mass strength properties using probabilistic approaches and quantified GSI chart. In: ISRM Eurock 2016

Bieniawski ZT (1989) Engineering rock mass classifications. Wiley, New York

Bowman AW, Azzalini A (1997) Applied smoothing techniques for data analysis: the kernel approach with S-Plus illustrations. Oxford University Press, New York

Dadashzadeh N, Duzgun HSB, Yesiloglu-Gultekin N (2017) Reliability-based stability analysis of rock slopes using numerical analysis and response surface method. Rock Mech Rock Eng 50(8):2119–2133

De Rocquigny E (2012) Modelling under risk and uncertainty: an introduction to statistical, phenomenological and computational methods. Wiley, New York

Duzgun HSB, Bhasin RK (2009) Probabilistic stability evaluation of Oppstadhornet rock slope Norway. Rock Mech Rock Eng 42(5):729–749

Duzgun HSB, Pasamehmetoglu AG, Yucemen MS (1995) Plane failure analysis of rock slopes: a reliability approach. Int J Surf Min Reclam Environ 9(1):1–6

Duzgun HSB, Yucemen MS, Karpuz CA (2002) A probabilistic model for the assessment of uncertainties in the shear strength of rock discontinuities. Int J Rock Mech Min Sci 39:743–754

Efron B (1979) Bootstrap methods: another look at the jackknife. Ann Stat 7(1):1–26

Fang H, Horstemeyer MF (2006) Global response approximation with radial basis functions. Eng Optim 38(4):407–424

Helton JC (1993) Uncertainty and sensitivity analysis techniques for use in performance assessment for radioactive waste disposal. Reliab Eng Syst Saf 42:327–367

Hoek E, Diederichs MS (2006) Empirical estimation of rock mass modulus. Int J Rock Mech Min Sci 43(2):203–215

Hoek E, Carranza-Torres C, Corkum B (2002) Hoek–Brown failure criterion—2002 edition. In: Proceedings of the 5th North American rock mechanics symposium, Toronto, Canada, pp 267–273

Hoek E, Carter TG, Diederichs MS (2013) Quantification of the Geological Strength Index chart. In: 47th US rock mechanics/geomechanics symposium, San Francisco

ISRM (1981) Rock characterization, testing and monitoring. ISRM suggested methods. Pergamon Press, New York

Itasca (2011) FLAC—Fast Lagrangian analysis and continua, version 7.0. Itasca Consulting Group, Inc., Minneapolis

Jiang Q, Zhong S, Cui J, Feng XT, Song L (2016) Statistical characterization of the mechanical parameters of intact rock under triaxial compression: an experimental proof of the Jinping marble. Rock Mech Rock Eng 49(12):4631–4646

Krishnamurthy T (2003) Response surface approximation with augmented and compactly supported radial basis functions. In: Proceedings of the 44th AIAA/ASME/ASCE/AHS/ASC structures, structural dynamics, and materials conference, Virginia

Li DQ, Jiang SH, Cao ZJ, Zhou CB, Li XY, Zhang LM (2015a) Efficient 3-D reliability analysis of the 530 m high abutment slope at Jinping I hydropower station during construction. Eng Geol 195:269–281

Li DQ, Tang XS, Phoon KK (2015b) Bootstrap method for characterizing the effect of uncertainty in shear strength parameters on slope reliability. Reliab Eng Syst Saf 140:99–106

Luo Z, Atamturktur S, Juang CH (2013) Bootstrapping for characterizing the effect of uncertainty in sample statistics for braced excavations. J Geotech Geoenviron Eng 139(1):13–23

Madani N, Yagiz S, Adoko AC (2018) Spatial mapping of the rock quality designation using multi-Gaussian Kriging method. Minerals 8(11):530

Matlab 9.0 (2016) The MathWorks, Inc., Natick, Massachusetts

Mauldon M, Ureta J (1996) Stability analysis of rock wedges with multiple sliding surfaces. Int J Rock Mech Min Sci Geomech Abstr 33:51–66

Montgomery DC (2001) Design and analysis of experiments. Wiley, New York

Morelli GL (2015) Variability of the GSI index estimated from different quantitative methods. Geotech Geol Eng 33:983–995

Moriasi DN, Arnold JG, Van Liew MW, Bingner RL, Harmel RD, Veith TL (2007) Model evaluation guidelines for systematic quantification of accuracy in watershed simulations. Trans ASABE 50(3):885–900

Most T, Knabe T (2010) Reliability analysis of the bearing failure problem considering uncertain stochastic parameters. Comput Geotech 37(3):299–310

Pain A, Kanungo DP, Sarkar S (2014) Rock slope stability assessment using finite element based modelling—examples from the Indian Himalayas. Geomech Geoeng 00:1–16

Pandit B, Babu GLS (2017) Reliability-based robust design for reinforcement of jointed rock slope. Georisk Assess Manag Risk Eng Syst Geohazards 12(2):152–168

Pandit B, Tiwari G, Latha GM, Babu GLS (2018) Stability analysis of a large gold mine open pit slope using advanced probabilistic method. Rock Mech Rock Eng 51(7):2153–2174

Ramamurthy T (2013) Engineering in rocks for slopes, foundations and tunnels. Prentice Hall of India (Pubs.), New Delhi

Saltelli A (2002) Making best use of model evaluations to compute sensitivity indices. Comput Phys Commun 145:280–297

Saltelli A, Ratto M, Andres T, Campolongo F, Cariboni J, Gatelli D, Saisana M, Tarantola S (2008) Global sensitivity analysis. The primer. Wiley, New York

Tiwari G, Latha GM (2016) Design of rock slope reinforcement: an Himalayan case study. Rock Mech Rock Eng 49(6):2075–2097

Tiwari G, Latha GM (2017) Reliability analysis of jointed rock slope considering uncertainty in peak and residual strength parameters. Bull Eng Geol Environ 78(2):913–930

U.S. Army Corps of Engineers (1999) Risk-based analysis in geotechnical engineering for support of planning studies, engineering and design. Department of Army, Washington

Wang Q, Horstemeyer H, Shen L (2016) Reliability analysis of tunnels using a metamodeling technique based on augmented radial basis functions. Tunn Undergr Space Technol 56:45–53

Wu Z (1995) Compactly supported positive definite radial function. Adv Comput Math 4:283–292


Appendix


Monte Carlo method to determine first-order and total-effect Sobol indices (Saltelli 2002):

1. A quasi-random sample is generated in the form of a matrix of size (k, 2d), where k is the base sample size and d is the dimension of the input vector. In this study, k was taken as \(10^5\), and the quasi-random numbers were generated in Matlab (2016). Two matrices A and B are then defined, each containing half of the sample:

$$A=\begin{bmatrix} X_{1}^{(1)} & X_{2}^{(1)} & \cdots & X_{i}^{(1)} & \cdots & X_{d}^{(1)} \\ \vdots & \vdots & \ddots & \vdots & \ddots & \vdots \\ X_{1}^{(k)} & X_{2}^{(k)} & \cdots & X_{i}^{(k)} & \cdots & X_{d}^{(k)} \end{bmatrix} \tag{17}$$

$$B=\begin{bmatrix} X_{d+1}^{(1)} & X_{d+2}^{(1)} & \cdots & X_{d+i}^{(1)} & \cdots & X_{2d}^{(1)} \\ \vdots & \vdots & \ddots & \vdots & \ddots & \vdots \\ X_{d+1}^{(k)} & X_{d+2}^{(k)} & \cdots & X_{d+i}^{(k)} & \cdots & X_{2d}^{(k)} \end{bmatrix} \tag{18}$$

2. A matrix \(C_i\) is defined which contains all columns of B except the ith column, which is taken from A:

$$C_i=\begin{bmatrix} X_{d+1}^{(1)} & X_{d+2}^{(1)} & \cdots & X_{i}^{(1)} & \cdots & X_{2d}^{(1)} \\ \vdots & \vdots & \ddots & \vdots & \ddots & \vdots \\ X_{d+1}^{(k)} & X_{d+2}^{(k)} & \cdots & X_{i}^{(k)} & \cdots & X_{2d}^{(k)} \end{bmatrix} \tag{19}$$

3. The model is evaluated for every row of A, B and \(C_i\) to obtain the output column vectors \(Y_A\), \(Y_B\) and \(Y_{C_i}\).

4. The first-order sensitivity index \(S_i\) and the total-effect index \(S_{T_i}\) are calculated via Eqs. (20) and (21):

$$S_i=\frac{\frac{1}{k}\sum_{j=1}^{k} y_{A}^{(j)}\,y_{C_i}^{(j)} - f_{0}^{2}}{\frac{1}{k}\sum_{j=1}^{k}\left(y_{A}^{(j)}\right)^{2} - f_{0}^{2}} \tag{20}$$

$$S_{T_i}=1-\frac{\frac{1}{k}\sum_{j=1}^{k} y_{B}^{(j)}\,y_{C_i}^{(j)} - f_{0}^{2}}{\frac{1}{k}\sum_{j=1}^{k}\left(y_{A}^{(j)}\right)^{2} - f_{0}^{2}} \tag{21}$$

where \(y_{A}^{(j)}\), \(y_{B}^{(j)}\) and \(y_{C_i}^{(j)}\) are the jth elements of the column vectors \(Y_A\), \(Y_B\) and \(Y_{C_i}\), and

$$f_{0}^{2}=\left(\frac{1}{k}\sum_{j=1}^{k} y_{A}^{(j)}\right)^{2}. \tag{22}$$
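The four steps of this procedure can be sketched in Python as follows. This is a minimal illustration under stated assumptions: pseudo-random numbers from Python's `random` module replace the quasi-random (Sobol sequence) sample used in the study, all inputs are assumed independent and identically distributed via a user-supplied `sampler`, and the function name `sobol_indices` and the linear test model are hypothetical.

```python
import random


def sobol_indices(model, d, k, sampler, seed=0):
    """First-order (Eq. 20) and total-effect (Eq. 21) Sobol indices.

    model   -- callable mapping a list of d inputs to a scalar output
    sampler -- callable(rng) returning one draw of an input; all d inputs are
               assumed independent and identically distributed for brevity
    """
    rng = random.Random(seed)
    # Step 1: two independent k x d matrices A and B (the halves of a k x 2d sample).
    A = [[sampler(rng) for _ in range(d)] for _ in range(k)]
    B = [[sampler(rng) for _ in range(d)] for _ in range(k)]
    # Step 3 for A and B: output vectors Y_A and Y_B.
    yA = [model(row) for row in A]
    yB = [model(row) for row in B]
    f0_sq = (sum(yA) / k) ** 2                       # Eq. (22)
    denom = sum(y * y for y in yA) / k - f0_sq       # shared denominator of Eqs. (20)-(21)
    first, total = [], []
    for i in range(d):
        # Step 2: C_i is B with its i-th column taken from A; Step 3: Y_Ci.
        yC = [model(b[:i] + [a[i]] + b[i + 1:]) for a, b in zip(A, B)]
        first.append((sum(ya * yc for ya, yc in zip(yA, yC)) / k - f0_sq) / denom)
        total.append(1.0 - (sum(yb * yc for yb, yc in zip(yB, yC)) / k - f0_sq) / denom)
    return first, total


# Hypothetical check: Y = X1 + 2 X2 (X3 is inert), X ~ U(-1, 1).
# Analytically S = S_T = (0.2, 0.8, 0.0).
S, ST = sobol_indices(lambda x: x[0] + 2.0 * x[1], d=3, k=40_000,
                      sampler=lambda rng: rng.uniform(-1.0, 1.0))
print(S, ST)
```

For an additive model without interactions, the first-order and total-effect indices coincide, which makes this linear example a convenient sanity check; in the study, `model` would be the augmented RBF response surface returning FOS.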


Cite this article

Pandit, B., Tiwari, G., Latha, G.M. et al. Probabilistic Characterization of Rock Mass from Limited Laboratory Tests and Field Data: Associated Reliability Analysis and Its Interpretation. Rock Mech Rock Eng 52, 2985–3001 (2019). https://doi.org/10.1007/s00603-019-01780-1
