Abstract

This paper investigates the synchronization of memristor-based hybrid neural networks via nonlinear coupling. First, a new condition is established to determine whether a quadratic function is negative on a closed interval, regardless of its concavity or convexity. Then, by utilizing Legendre orthogonal polynomials, a recently extended integral inequality with free matrices is generalized to obtain a tighter lower bound for certain integral terms. Next, a novel Lyapunov functional is combined with the new integral inequality with free matrices, the linear convex combination method and the new criterion. This yields a new delay-dependent condition that guarantees the global synchronization of the considered neural networks. Finally, an example is presented to demonstrate the validity of the results.


1 Introduction

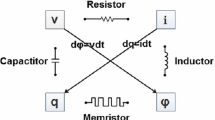

Motivated by the logical symmetry among the resistor, the capacitor and the inductor, Chua [5] postulated that there must exist a fourth elementary circuit element relating flux and charge through a nonlinear relation. Chua named it the memristor, a contraction of memory and resistor (cf. Fig. 1). In 2008, a team at Hewlett–Packard Labs corroborated Chua's conjecture by building a physical model of the memristor [34]. The resistance of a memristor depends on the charge that has previously passed through the device. For this reason, the memristor is regarded as a promising candidate for emulating biological synapses in circuit implementations of neural networks. Replacing the resistors that realize the connection weights with memristors yields memristor-based neural networks (MNNs). MNNs have many applications in image processing, brain emulation and pattern recognition, and have therefore attracted wide attention from researchers (see [1, 3, 7, 16, 28, 38, 40, 43, 44, 51]).

Fig. 1 Relationship between the four fundamental circuit components [8]

Since the pioneering paper of Pecora and Carroll [27], many researchers have devoted themselves to exploring various synchronization problems of chaotic systems. A great variety of synchronization results have been presented owing to their potential applications in cryptography, biological systems, secure communication, information processing and chemical reactions [15, 19, 39, 49, 50].

- By introducing a linear diffusive term and a sign function term, Guo et al. [7] derived several global exponential synchronization criteria for coupled MNNs (CMNNs) based on suitable Lyapunov–Krasovskii functionals (LKFs).
- Using Lyapunov stability theory, Yang et al. [43] proposed a set of global robust synchronization conditions and studied a pinning adaptive coupling problem for a class of CMNNs with nonidentical uncertain parameters and a discontinuous diffusive term.
- Using the Halanay inequality and the matrix measure method, Rakkiyappan et al. [28] established a sufficient condition that ensures the exponential synchronization of coupled inertial MNNs under a state feedback controller.
- Based on a Lyapunov functional and the matrix inequality method, Zhang et al. [51] designed a periodically intermittent controller to ensure the exponential synchronization of CMNNs with time-varying delays.
- By means of simple feedback controllers and adaptive feedback controllers, the authors of [3, 44] put forward sufficient conditions that assure the exponential synchronization of CMNNs with impulsive and stochastic perturbations.
- Based on Lyapunov functions, matrix inequalities and the Halanay inequality, Bao et al. [1] obtained sufficient conditions for the exponential synchronization of stochastic CMNNs with probabilistic delay coupling and impulsive delay.
- By utilizing differential inclusions and the Halanay inequality, Li et al. [16] proposed new sufficient conditions for the synchronization of inertial CMNNs with linear coupling.

To broaden these applications, it is important to derive less conservative synchronization criteria for MNNs.

Over the past years, the Jensen integral inequality [6] has been used extensively for time-delay systems. It efficiently yields easy-to-verify stability criteria expressed as linear matrix inequalities (LMIs). To obtain less conservative results, the authors of [13, 22, 30] presented Wirtinger-based integral inequalities of single, double and multiple integral forms. These include the Jensen inequality as a special case and give tighter lower bounds for integral terms. By means of auxiliary functions, Park et al. [26] presented integral inequalities that include those in [6, 13, 22, 30]. To further reduce conservativeness, Chen et al. [4] established two general integral inequalities that include those in [6, 13, 22, 26, 30] as special cases and are tighter than the existing ones. Nevertheless, there is still room for improvement with respect to integral inequalities.

Motivated by the above discussion, this paper studies the global synchronization of a class of CMNNs with linear diffusive and discontinuous sign terms. The main contributions of this paper are summarized as follows:

(1) A new condition (see Lemma 5) is established to determine whether a quadratic function is negative on a general closed interval, regardless of its concavity or convexity. It includes Lemma 2 of [12] and Lemma 4 of [45] as special cases. It also provides an alternative criterion, namely conditions (i), (ii) and (iii)\('\).

(2) Building on Lemma 2 of [24], Lemma 4 establishes a further developed integral inequality with free matrices by means of Legendre polynomials. It encompasses Lemma 2 of [24] and Lemma 5 of [4] as special cases. In fact, Lemma 5 of [4] can be recovered from Lemma 4 by fixing some of its slack matrices.

(3) Inspired by [31] and [14], a new Lyapunov functional is constructed based on the sector condition of the activation function. With this functional, less conservative delay-dependent synchronization criteria are obtained in the form of linear matrix inequalities.

(4) Properly combining Chen et al.'s integral inequality (cf. Lemma 3) with Lemmas 4 and 5 leads to less conservative synchronization conditions than existing ones. It is proved in [4] that Lemma 3 includes the Jensen inequality, the Wirtinger-based inequality and the auxiliary function-based inequalities as special cases, and that it is tighter than all existing ones.

The developed results thus provide insight into memristor-based hybrid neural networks with nonlinear coupling, which may help in understanding biological evolution and neural learning.

Notation Throughout this paper, solutions of a system are understood in Filippov's sense. \(Q^{-1},Q^T\) denote the inverse and the transpose of a matrix, respectively. \(Q<0(>0)\) means that Q is a negative (positive) definite symmetric matrix. \(0_{n},\ I_{n}\) denote the \(n\times n\) zero matrix and identity matrix, respectively, and \(0_{m\times n}\) denotes the \(m\times n\) zero matrix. The symbols \(\alpha Q(*)^T\) and \(\alpha ^T Q(*)\) mean \(\alpha Q\alpha ^T\) and \(\alpha ^T Q\alpha \), respectively. The expression \({\mathrm{col}}\{Q_1,Q_2,\ldots ,Q_k\}\) denotes the block column matrix formed by \(Q_1,Q_2,\ldots ,Q_k\). \({\mathrm{sym}}(Z)\) means \(Z+Z^T\), and \({\mathrm{diag}}\{\cdot \}\) denotes a diagonal or block-diagonal matrix. For \(\chi >0\), \({\mathcal {C}}\big ([-\chi ,0];{\mathbb {R}}^n\big )\) denotes the set of all continuous functions \(\phi \) from \([-\chi ,0]\) to \({\mathbb {R}}^n\) with norm \(||\phi ||=\sup _{-\chi \le s\le 0}|\phi (s)|\). Unless stated otherwise, matrices are assumed to have appropriate dimensions. \(\bigg [\begin{array}{cc} A&{} B\\ *&{}C\end{array}\bigg ]\) means \(\bigg [\begin{array}{cc} A&{} B\\ B^T &{}C\end{array}\bigg ].\)

2 Problem description

As shown in [39], a single memristor-based recurrent network can be expressed in the following simple form:

where \(y(t)=(y_{1}(t),y_{2}(t),\ldots ,y_{n}(t))^T\in {\mathbb {R}}^n\) denotes the state vector of the network at time \(t\), and \(n\) is the number of neurons. A is a positive diagonal matrix representing the self-inhibition of the neurons. \(B(y)=(b_{jq}(y(t)))_{n\times n}\) and \(C(y)=(c_{jq}(y(t)))_{n\times n}\) are the feedback connection matrix and the delayed feedback connection matrix, respectively. \(k(y(\cdot ))=\big (k_1(y_{1}(\cdot )),k_2(y_{2}(\cdot )),\ldots ,k_n(y_{n}(\cdot ))\big )^T\in {\mathbb {R}}^n\) is the neuron activation function. The bounded function \(\omega (t)\) is an unknown time-varying delay satisfying \(0\le \omega (t)\le {\bar{\omega }}\) and \(\omega _1\le \dot{\omega }(t)\le \omega _2\), where \({\bar{\omega }}>0\), \(\omega _1\) and \(\omega _2\) are scalars, and v(t) is an external input vector. Based on the features of memristors and their current-voltage characteristics, we define

and

for \(j,q\in {\mathcal {N}}=\{1,2,\ldots ,n\},\) where \(b'_{jq},b''_{jq},c'_{jq},c''_{jq}\) are known constants. Throughout this paper, we denote \({\bar{B}}=({\bar{b}}_{jq})_{n\times n},{\bar{C}}=({\bar{c}}_{jq})_{n\times n}\) with \({\bar{b}}_{jq}=\max \{b'_{jq},b''_{jq}\},{\bar{c}}_{jq}=\max \{c'_{jq},c''_{jq}\},\) and \({\hat{b}}_{jq}=|b'_{jq}-b''_{jq}|,{\hat{c}}_{jq}=|c'_{jq}-c''_{jq}|.\)

As is well known, environmental noise or modeling errors often introduce uncertainties into the network parameters. Therefore, (1) is revised into the following more practical form:

where matrices \(\varDelta B(t)\) and \(\varDelta C(t)\) indicate the parameter uncertainties.

Now, we consider a system consisting of m identical MNNs with nonlinear coupling:

where \(y_p(t)=(y_{p1}(t),y_{p2}(t),\ldots ,y_{pn}(t))^T\in {\mathbb {R}}^n\) denotes the state vector of the pth MNN. \(D_\imath =(d^\imath _{p\jmath })_{m\times m},\imath =1,2\), and \(\varXi =(\varsigma _{p\jmath })_{m\times m}\) are outer coupling matrices satisfying \(d^\imath _{p\jmath }\ge 0,\varsigma _{p\jmath }>0\ (p\ne \jmath ),\ d^\imath _{pp}=-\sum ^m_{\jmath =1,\jmath \ne p}d^\imath _{p\jmath },\varsigma _{pp}=0,\ p,\jmath \in {\mathcal {M}}.\) The matrices \(\varLambda _1,\varLambda _2\) and \(\varTheta ={\mathrm{diag}}\{\theta _1,\theta _2,\ldots ,\theta _n\}>0\) describe the inner coupling between the states. \(\mathrm{sgn}(x)=({\mathrm{sign}}(x_1),{\mathrm{sign}}(x_2),\ldots ,{\mathrm{sign}}(x_n))^T\) for \(x=(x_1,x_2,\ldots ,x_n)^T\in {\mathbb {R}}^n\) with

Remark 1

Many existing results assume that the coupling matrices are symmetric; see, for instance, [17, 20, 21, 32, 36, 39, 48]. This requirement is removed in this paper, so our conditions apply to a wider class of coupled networks than those results.

As in [43], the uncertain matrices \(\varDelta B_p(t)\) and \(\varDelta C_p(t)\) are assumed to have the following form:

where E and \(L_1,L_2\) are known real matrices, and \(N_{ip}(t)\) is an unknown matrix satisfying

and \(||\cdot ||_1\) is the 1-norm of a matrix.

The initial conditions of (3) are \(y_p(s)=\varphi _p(s)\in {\mathcal {C}}\big ([-{\bar{\omega }},0];{\mathbb {R}}^n\big ),p\in {\mathcal {M}}.\)

In general, MNN (1) or (2) has different trajectories for different initial conditions. In the coupled system (3), however, the states of all MNNs may eventually synchronize even though their initial conditions differ.

The following assumption is needed for our result.

Assumption 1

The activation functions are bounded, i.e., there exist constants \({\bar{k}}_j>0\) such that \(\big |{k}_j(\cdot )\big |\le {\bar{k}}_j,\ j\in {\mathcal {N}}\). Furthermore, there exist real constants \(k_j^-,k_j^+\) such that

Denote \({K _1} = {\mathrm{diag}}\left\{ {k_1^-k_1^+, k_2^-k_2^+, \cdots ,k_n^-k_n^+} \right\} ,\) \({K _2} =\frac{1}{2} {\mathrm{diag}}\left\{ k_1^-+k_1^+, k_2^-+k_2^+, \cdots ,k_n^-+k_n^+ \right\} ,\) \({K} = {\mathrm{diag}}\left\{ {k_1^2,\ k_2^2,\ \cdots ,k_n^2} \right\} \) with \(k_j=\max \{|k_j^-|,|k_j^+|\},\ j\in {\mathcal {N}}\), and \({\bar{k}}=\sum _{j=1}^{n}{\bar{k}}_j.\)
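As a small illustration of this notation, the sketch below computes the diagonals of \(K_1\), \(K_2\), \(K\) and the scalar \({\bar{k}}\) from given bounds. It also checks a sector condition on sample points. The displayed sector inequality is missing from this text, so the check assumes the standard form \(k_j^-\le \frac{k_j(\varsigma )-k_j(\zeta )}{\varsigma -\zeta }\le k_j^+\) for \(\varsigma \ne \zeta \). That form is suggested by Appendix 3 and [18], but it is an assumption here. The helper names and the use of \(\tanh \) as a test activation are hypothetical.

```python
import math


def sector_matrices(k_minus, k_plus, k_bar):
    """Diagonals of K1, K2, K and the scalar k_bar = sum of k_bar_j (Assumption 1)."""
    if not len(k_minus) == len(k_plus) == len(k_bar):
        raise ValueError("all bound lists must have the same length n")
    K1 = [lo * hi for lo, hi in zip(k_minus, k_plus)]                    # k_j^- * k_j^+
    K2 = [(lo + hi) / 2 for lo, hi in zip(k_minus, k_plus)]              # (k_j^- + k_j^+) / 2
    K = [max(abs(lo), abs(hi)) ** 2 for lo, hi in zip(k_minus, k_plus)]  # k_j^2
    return K1, K2, K, sum(k_bar)


def sector_bounds_hold(k, k_minus, k_plus, points, tol=1e-12):
    """Check the assumed sector condition on every pair of distinct sample points."""
    for a in points:
        for b in points:
            if a != b:
                slope = (k(a) - k(b)) / (a - b)
                if slope < k_minus - tol or slope > k_plus + tol:
                    return False
    return True


if __name__ == "__main__":
    print(sector_matrices([0, 0], [1, 1], [1, 1]))
    grid = [i / 4 for i in range(-12, 13)]
    print(sector_bounds_hold(math.tanh, 0.0, 1.0, grid))
```

Suppose \(k_j^-=0\), \(k_j^+=1\) and \({\bar{k}}_j=1\) for \(n=2\). Then the first function returns \(K_1=0\), \(K_2=0.5I_2\), \(K=I_2\) and \({\bar{k}}=2\), the values used in Example 1.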

Remark 2

In Assumption 1, \(k_j^-,k_j^+(j\in {\mathcal {N}})\) can be negative, zero or positive. This description was first proposed in [18]. It includes monotonically nondecreasing activation functions and the Lipschitz condition as special cases, so activation functions satisfying Assumption 1 are more general than the usual sigmoid functions. Moreover, the assumption is particularly suitable for Lyapunov-based stability analysis. It quantifies the activation functions in a way that makes it possible to reduce conservativeness.

The following definitions and lemmas are required.

Definition 1

The coupled networks (3) are said to be globally robustly synchronized if \(\lim _{t\rightarrow \infty }||y_p(t)-y_l(t)||_1 = 0\) for all \(p, l\in {\mathcal {M}}\). This must hold for any initial values and any parameter uncertainties \(\varDelta B_p(t)\) and \(\varDelta C_p(t)\) satisfying (4) and (5).
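In simulation, Definition 1 can be monitored through the largest pairwise 1-norm distance between the subsystem states at each time instant. The minimal sketch below assumes that the states of all MNNs at one instant are stored as plain Python lists. The function name is ours.

```python
def sync_error(states):
    """Largest pairwise 1-norm distance max_{p,l} ||y_p - y_l||_1 at one time instant.

    states: list of m state vectors, each a list of n floats.
    """
    worst = 0.0
    for p in range(len(states)):
        for l in range(p + 1, len(states)):
            dist = sum(abs(a - b) for a, b in zip(states[p], states[l]))
            worst = max(worst, dist)
    return worst
```

Along a simulated trajectory, Definition 1 asks that this quantity tend to zero for every initial condition and every admissible uncertainty.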

Definition 2

(Wu et al. [42]). Given a ring \({\hat{R}},\) denote by \({\mathcal {T}}({\hat{R}},\epsilon )\) the set of matrices with entries in \({\hat{R}}\) such that the sum of the entries in each row equals \(\epsilon \) for some \(\epsilon \in {\hat{R}}.\)

Lemma 1

(Horn and Johnson [9]). Let \(\otimes \) denote the Kronecker product, and let X, Y, Z and W be matrices of appropriate dimensions. Then the following properties hold:

(1) \((cX)\otimes Y = X\otimes (cY),\) where c is a constant;

(2) \((X+Y)\otimes Z = X\otimes Z+Y\otimes Z;\)

(3) \((X\otimes Y)(Z\otimes W) = (XZ)\otimes (YW).\)
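The three Kronecker product properties can be checked on small integer matrices. The sketch below uses hand-written helpers on nested lists, so it runs without third-party packages. These are our own names, not a library API. Exact integer arithmetic keeps the equality checks reliable.

```python
def kron(X, Y):
    """Kronecker product of matrices stored as lists of rows."""
    return [[x * y for x in xrow for y in yrow] for xrow in X for yrow in Y]


def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def madd(A, B):
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def scale(c, A):
    return [[c * a for a in row] for row in A]


def check_lemma1(X, Y, Z, W, c):
    """Truth values of properties (1)-(3) of Lemma 1 for the given matrices."""
    p1 = kron(scale(c, X), Y) == kron(X, scale(c, Y))
    p2 = kron(madd(X, Y), Z) == madd(kron(X, Z), kron(Y, Z))                 # X, Y same size
    p3 = matmul(kron(X, Y), kron(Z, W)) == kron(matmul(X, Z), matmul(Y, W))  # XZ, YW defined
    return p1, p2, p3
```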

Lemma 2

(Wu et al. [42]). Let G be an \(m\times m\) matrix in the set \({\mathcal {T}}({\hat{R}},\epsilon )\). Then the \((m-1)\times (m-1)\) matrix Q defined by \(Q= JGP\) satisfies \(JG=QJ\), where

where \({\mathbf {1}}\) is the multiplicative identity of \({\hat{R}}\).
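The displayed definitions of J and P are missing from this text. The sketch below therefore assumes a common choice:

- J is the \((m-1)\times m\) difference matrix whose rows have the form \((\ldots ,1,-1,\ldots )\).
- P is the \(m\times (m-1)\) upper-triangular matrix of ones with a zero last row, so that \(JP=I_{m-1}\).

Under this assumption, the sketch checks \(JG=(JGP)J\) for matrices with constant row sums and shows that the identity can fail otherwise. The helper names are hypothetical.

```python
def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def make_J(m):
    """Assumed (m-1) x m difference matrix: row r has 1 in column r and -1 in column r+1."""
    return [[1 if c == r else (-1 if c == r + 1 else 0) for c in range(m)]
            for r in range(m - 1)]


def make_P(m):
    """Assumed m x (m-1) matrix with P[r][c] = 1 for c >= r (the last row is zero)."""
    return [[1 if c >= r else 0 for c in range(m - 1)] for r in range(m)]


def lemma2_holds(G):
    """Check J G == Q J with Q = J G P for a square matrix G given as lists of rows."""
    m = len(G)
    J, P = make_J(m), make_P(m)
    JG = matmul(J, G)
    Q = matmul(JG, P)
    return JG == matmul(Q, J)
```

A matrix such as \({\mathrm{diag}}\{1,2\}\) has unequal row sums and fails the check. This is why Lemma 2 requires \(G\in {\mathcal {T}}({\hat{R}},\epsilon )\).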

Lemma 3

(Chen et al. [4]). Let \(Q>0\) and let the function \(\mu :[\alpha ,\beta ]\rightarrow {\mathbb {R}}^n\) be continuous. Then the following inequalities hold:

(i)

$$\begin{aligned}&(\beta -\alpha )\int ^\beta _\alpha \mu (\upsilon )^TQ\mu (\upsilon ){\mathrm{d}}\upsilon \ge \\&\quad \pi _1^TQ\pi _1+3(*)^TQ(\pi _1-\pi _2)+5{\bar{\pi }}_1^TQ{\bar{\pi }}_1+7{\bar{\pi }}_2^TQ{\bar{\pi }}_2,\\ \end{aligned}$$

(ii)

$$\begin{aligned}&2\int ^{\beta }_{\alpha }(\upsilon -\alpha )\mu (\upsilon )^TQ\mu (\upsilon ){\mathrm{d}}\upsilon \ge \\&\quad \pi _2^TQ\pi _2+8(*)^TQ(\pi _2-\pi _3) +3{\bar{\pi }}_3^TQ{\bar{\pi }}_3,\\ \end{aligned}$$

(iii)

$$\begin{aligned}&\frac{1}{\beta -\alpha }\int ^{\beta }_{\alpha }(\upsilon -\alpha )^2\mu (\upsilon )^TQ\mu (\upsilon ){\mathrm{d}}\upsilon \\&\quad \ge \frac{1}{3}\pi _3^TQ\pi _3+5(*)^TQ(\pi _3-\pi _4), \end{aligned}$$

where \(\pi _1=\int ^\beta _\alpha {\mu }(\upsilon ){\mathrm{d}}\upsilon ,\ \pi _2=\frac{2}{\beta -\alpha }\int _\alpha ^\beta (\upsilon -\alpha ){\mu }(\upsilon ){\mathrm{d}}\upsilon ,\) \({\bar{\pi }}_1=\pi _1-3\pi _2+2\pi _3,\ {\bar{\pi }}_2=\pi _1-6\pi _2+10\pi _3-5\pi _4,\ {\bar{\pi }}_3=3\pi _2-8\pi _3+5\pi _4\) with \(\pi _3=\frac{3}{(\beta -\alpha )^2}\int ^\beta _\alpha (\upsilon -\alpha )^2{\mu }(\upsilon ){\mathrm{d}}\upsilon ,\ \pi _4=\frac{4}{(\beta -\alpha )^3}\int ^\beta _\alpha (\upsilon -\alpha )^3{\mu }(\upsilon ){\mathrm{d}}\upsilon .\)
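The sketch below shows numerically how the successive terms of Lemma 3 (i) tighten the Jensen bound. It evaluates the scalar case \(Q=1\) with composite Simpson quadrature.

- The first term alone is Jensen's bound.
- The first two terms give the Wirtinger-based bound.
- All four terms give Lemma 3 (i).

The quantities \(\pi _1\), \(\pi _2-\pi _1\), \({\bar{\pi }}_1\) and \(-{\bar{\pi }}_2\) are the integrals of \(\mu \) against \(l_0,\ldots ,l_3\) from Appendix 1. For a cubic \(\mu \), the four-term bound should therefore be attained up to quadrature error. The test functions and helper names are illustrative choices.

```python
import math


def simpson(fun, a, b, n=2000):
    """Composite Simpson rule on [a, b]; n is rounded up to an even number."""
    n += n % 2
    h = (b - a) / n
    total = fun(a) + fun(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * fun(a + i * h)
    return total * h / 3


def lemma3_bounds(mu, alpha, beta):
    """Scalar case Q = 1 of Lemma 3 (i).

    Returns (lhs, jensen, wirtinger, full): lhs = (beta - alpha) * int mu^2, and the
    bounds keep the first 1, 2 and 4 terms of the right-hand side, respectively.
    """
    d = beta - alpha
    lhs = d * simpson(lambda v: mu(v) ** 2, alpha, beta)
    pi1 = simpson(mu, alpha, beta)
    pi2 = 2 / d * simpson(lambda v: (v - alpha) * mu(v), alpha, beta)
    pi3 = 3 / d ** 2 * simpson(lambda v: (v - alpha) ** 2 * mu(v), alpha, beta)
    pi4 = 4 / d ** 3 * simpson(lambda v: (v - alpha) ** 3 * mu(v), alpha, beta)
    pbar1 = pi1 - 3 * pi2 + 2 * pi3
    pbar2 = pi1 - 6 * pi2 + 10 * pi3 - 5 * pi4
    jensen = pi1 ** 2
    wirtinger = jensen + 3 * (pi1 - pi2) ** 2
    full = wirtinger + 5 * pbar1 ** 2 + 7 * pbar2 ** 2
    return lhs, jensen, wirtinger, full


if __name__ == "__main__":
    print(lemma3_bounds(math.exp, 0.0, 1.0))
```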

Inspired by [24], we establish the following lemma.

Lemma 4

(The proof is given in Appendix 1.) Let \(U>0\), let the function \(\mu :[\alpha ,\beta ]\rightarrow {\mathbb {R}}^n\) be continuous, and let the vector \(\chi \) and the matrices \(T_\zeta (\zeta =1,2,3,4)\) have appropriate dimensions. Then the following inequality holds:

where \(\pi _1,\pi _2,{\bar{\pi }}_1,{\bar{\pi }}_2\) are defined in Lemma 3.

Remark 3

Letting \(\chi ^TT_1=-\frac{1}{\beta -\alpha }\pi _1^TU,\chi ^TT_2=-\frac{3}{\beta -\alpha }(\pi _2-\pi _1)^TU,\chi ^TT_3=-\frac{5}{\beta -\alpha }{\bar{\pi }}_1^TU\) and \(\chi ^TT_4=\frac{7}{\beta -\alpha }{\bar{\pi }}_2^TU\) yields

With this choice, Lemma 4 reduces to Lemma 3 (i); that is, Lemma 3 (i) is a particular case of Lemma 4 obtained by fixing some of its slack matrices. Hence Lemma 4 is no more conservative than Lemma 3 (i). The additional freedom provided by the slack matrices can further reduce conservativeness.

Lemma 5

(The proof is given in Appendix 2.) Let \(f(x) =a_2x^2 +a_1x+a_0\) be a quadratic function, where \(a_0, a_1,a_2\in {\mathbb {R}}.\) If

\({\mathrm{(i)}}\ f(\alpha )<0,\ \mathrm{(ii)} \ f(\beta )<0\), and either \(\ \mathrm{(iii)} \ -(\beta -\alpha )^2a_2+f(\alpha )<0\) or \(\ \mathrm{(iii)}' \ -(\beta -\alpha )^2a_2+f(\beta )<0\) holds, then \(f(x)<0, \forall x\in [\alpha ,\beta ]\).
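Lemma 5 translates directly into a finite test on the coefficients. The minimal sketch below implements it, with a brute-force grid evaluation for comparison (function names are ours). The lemma is only sufficient, so a False result does not prove that f takes a nonnegative value.

```python
def lemma5_certifies(a2, a1, a0, alpha, beta):
    """Sufficient test of Lemma 5 for f(x) = a2*x**2 + a1*x + a0 on [alpha, beta].

    True means (i), (ii) and one of (iii), (iii)' hold, hence f < 0 on the interval.
    False is inconclusive.
    """
    def f(x):
        return a2 * x * x + a1 * x + a0

    fa, fb = f(alpha), f(beta)
    shift = -(beta - alpha) ** 2 * a2  # the term -(beta - alpha)^2 * a2
    cond_iii = shift + fa < 0
    cond_iii_prime = shift + fb < 0
    return fa < 0 and fb < 0 and (cond_iii or cond_iii_prime)


def max_on_grid(a2, a1, a0, alpha, beta, n=1000):
    """Brute-force maximum of f on n + 1 equally spaced points (for comparison only)."""
    return max(a2 * x * x + a1 * x + a0
               for x in (alpha + i * (beta - alpha) / n for i in range(n + 1)))
```

For instance, \(f(x)=-10x^2+10x-3\) is negative on \([0,1]\) but fails both (iii) and (iii)\('\), which illustrates the conservatism of the test. In contrast, \(f(x)=-x^2+0.1x-1\) on \([0,1]\) fails (iii) but is certified by (iii)\('\).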

Remark 4

Lemma 5 presents a sufficient condition for determining whether a quadratic function is negative on a closed interval \([\alpha ,\beta ]\), regardless of its concavity or convexity. It includes Lemma 2 of [12] and Lemma 4 of [45] as special cases. This lemma plays an important role in establishing our main result.

3 Main result

For clarity of presentation, we define

By means of the Kronecker product, the coupled neural networks (3) can be rewritten in the following compact form:

where \({\mathbf {A}}=I_m\otimes A,\ {\mathbf {D}}_\imath =D_\imath \otimes \varLambda _\imath ,\imath =1,2,\) and

For simplicity, denote \({\mathbf {J}} = J\otimes I_n,{\mathbf {y}}_{t}={\mathbf {y}}(t),{\mathbf {y}}_{\omega }={\mathbf {y}}(t-\omega (t)),{\mathbf {y}}_{\bar{\omega }}={\mathbf {y}}(t-{\bar{\omega }}),\dot{{\mathbf {y}}}_t=\dot{{\mathbf {y}}}(t),\dot{{\mathbf {y}}}_\omega =\dot{{\mathbf {y}}}(t-\omega (t)),\dot{{\mathbf {y}}}_{{\bar{\omega }}}=\dot{{\mathbf {y}}}(t-{\bar{\omega }}),\) where J is defined in Lemma 2 with \({\hat{R}}={\mathbb {R}}.\) Define

where

From the integral mean-value theorem, we have that

Therefore \(\tau _1,\ldots ,\tau _{6}\) are well defined if we set

Denote \(n'=(m-1)n\) and

Next, we derive the following synchronization result for system (7).

Theorem 1

(The proof is given in Appendix 3.) Suppose that Assumption 1 holds, and let scalars \({\bar{\omega }}>0,\omega _1,\omega _2\) be given. Then system (7) is globally robustly synchronized for \(0\le \omega (t)\le {\bar{\omega }}\) and \(\omega _1\le \dot{\omega }(t)\le \omega _2\) if there exist the following matrices of appropriate dimensions:

- positive definite matrices \(\mathcal {X}_\jmath ,{\mathcal {Q}},Q_l\ (l=7,8,9,10)\);
- positive diagonal matrices \(U_\imath ,W_\imath ,F_{\imath \jmath },R_{\imath \jmath }\ (\jmath =1,2,3)\) and \(H={\mathrm{diag}}\{h_1,h_2,\ldots ,h_n\}\);
- symmetric matrices \(G_\imath \ (\imath =1,2)\);
- real matrices \({\mathcal {Q}}_2,M,Y_p\ (p=1,2,\ldots ,8)\);

such that

and one of the following two groups of inequalities holds:

(1) \({\widetilde{\varXi }}_{\rho \imath }<0, \rho =1,2,3; \imath =1,2;\)

(2) \({\widetilde{\varXi }}_{\rho \imath }<0, \rho =1,2,4; \imath =1,2;\)

where

and

with \(\varXi _{1\imath }={\varXi }(0,\omega _\imath ),\ \varXi _{2\imath }={\varXi }({\bar{\omega }},\omega _\imath ),\ \varXi _{3\imath }=\varXi _{1\imath }-{\bar{\omega }}^2\varPi _{12},\ \varXi _{4\imath }=\varXi _{2\imath }-{\bar{\omega }}^2\varPi _{12}.\)

Remark 5

For continuous networks, many techniques are available for obtaining less conservative results. Examples include the delay-partitioning technique and Lyapunov functionals with triple, quadruple or higher-order multiple integral terms. These techniques can also be applied to delayed memristive neural networks to reduce conservatism. To keep the result concise, this paper uses a relatively simple Lyapunov–Krasovskii functional.

Remark 6

Note that stochastic disturbances, impulsive perturbations, and bounded or unbounded distributed delays can also be incorporated into MNNs. To emphasize the new analysis technique, this paper considers networks (3), so that the obtained results do not become too intricate.

4 Illustrative example

This section presents an example to demonstrate the effectiveness of Theorem 1.

Example 1

Consider system (3) with the following parameters:

where \(k_{jq}(t)=k_q(y_q(t))-y_j(t),k_{jq}(t-\omega (t))=k_q(y_q(t-\omega (t)))-y_j(t),\ j,q=1,2.\) The parameter uncertainties are assumed to be \(\varDelta B_p(t)=0.1\sin (t)I_2,\) \(\varDelta C_p(t)=0.1\cos (t)I_2,p=1,2,\ldots ,9.\) Setting \(E=I_2,L_\imath =0.1I_2,N_{1p}=\sin (t),N_{2p}=\cos (t)\), we can express \(\varDelta B_p(t)\) and \(\varDelta C_p(t)\) in the form (4). Then \(||E||_1=1,||L_\imath ||_1= 0.1\) and \(||N_{\imath p}||_1\le 1,\imath =1,2;p=1,2,\ldots ,9\), so condition (5) is satisfied. The inner coupling gains are given by \(\varLambda _\imath =I_2,\imath =1,2;\varTheta =9I_2.\)

Calculation yields that \({\bar{\omega }}=0.8,\omega _1=-0.8,\omega _2=0.8,\varrho _1=1.72,\varrho _2=1.94,\vartheta _1=1.9,\vartheta _2=1.56,\)

and Assumption 1 is satisfied with \(k^-_\imath =0,k^+_\imath =1,{\bar{k}}_\imath =1,\imath =1,2.\) Thus, \(K_1=0,K_2=0.5I_2,K=I_2,{\bar{k}}=2.\)

Furthermore, outer coupling matrices are taken as

Computation gives \(\mu _i=0.5,\sigma _{i1}=-0.08,\sigma _{i2}=-0.20,\ i=1,2,\ldots ,8\), so condition (9) holds. Solving the inequalities in Theorem 1 with the MATLAB LMI Toolbox yields a feasible solution. Some of the decision matrices are listed as follows:

For the numerical simulation, nine initial states are selected at random in \((-0.2,0.2)^T\) and \((-0.5,0.2)^T\), respectively. The state trajectories y(t) are shown in Fig. 2. The synchronization errors \(\varepsilon _1(t)\) and \(\varepsilon _2(t)\) are shown in Figs. 3 and 4, respectively, where \(\varepsilon _j(t)=y_{pj}(t)-y_{1j}(t),\ p=2,\ldots ,9,\ j=1,2\).

It has been verified that none of the conditions in [1, 3, 7, 43, 51] can determine whether system (3) is synchronized for this example.

Moreover, the conditions of [2, 10, 11, 33, 35, 37, 39,40,41, 46, 47] were all established under the following representative hypotheses:

It is easy to verify that this model does not satisfy the above hypotheses, so none of these conditions can be applied to verify the synchronization of this example.

Therefore, for this example, the result of this paper is less conservative than the conditions in [1,2,3, 7, 10, 11, 33, 35, 37, 39,40,41, 43, 46, 47, 51].

5 Conclusion

This paper investigates the synchronization of a class of CMNNs with linear diffusive and discontinuous sign terms. The proposed conditions are expressed as linear matrix inequalities (LMIs). These can be checked numerically and efficiently by interior-point algorithms, for example with the MATLAB LMI Control Toolbox. Several issues deserve further investigation:

(1) adaptive synchronization control of MNNs, because adaptive control can effectively avoid high control gains;

(2) synchronization of MNNs with mismatched features, since nonidentical characteristics often exist between the drive and response systems;

(3) other control schemes, such as pinning control, event-triggered control, sampled-data control, intermittent control, quantized control and event-based control.

6 Appendix 1: Proof of Lemma 4

Consider the first four Legendre orthogonal polynomials on \([\alpha ,\beta ]\) [29]: \(l_0(\upsilon )=1,l_1(\upsilon )=\frac{1}{\beta -\alpha }(2\upsilon -\alpha -\beta ),l_2(\upsilon )=\frac{1}{(\beta -\alpha )^2}[6\upsilon ^2-6(\alpha +\beta )\upsilon +(\alpha ^2+4\alpha \beta +\beta ^2)],l_3(\upsilon )=\frac{1}{(\beta -\alpha )^3}[20\upsilon ^3-30(\alpha +\beta )\upsilon ^2+12(\alpha ^2+3\alpha \beta +\beta ^2)\upsilon -(\alpha ^3+9\alpha ^2\beta +9\alpha \beta ^2+\beta ^3)].\) A simple calculation gives

For a continuous function \(x(\upsilon )\) and a continuously differentiable function \(f(\upsilon ),\) integration by parts gives

and

Then the following equalities are obtained

Denoting \({\hat{l}}(\upsilon )={\mathrm{col}}\{l_0(\upsilon ),l_1(\upsilon ),l_2(\upsilon ),l_3(\upsilon )\}\) and \(\mathcal T={\mathrm{col}}\{T_1,T_2,T_3,T_4\},\) we obtain the following equality

Since \(U>0,\) the Schur complement yields the following inequality

thus

which completes the proof.
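The orthogonality relations used in this proof can be checked numerically. On \([\alpha ,\beta ]\), \(\int _\alpha ^\beta l_i(\upsilon )l_j(\upsilon ){\mathrm{d}}\upsilon =0\) for \(i\ne j\), and \(\int _\alpha ^\beta l_k^2(\upsilon ){\mathrm{d}}\upsilon =\frac{\beta -\alpha }{2k+1}\). This is the standard normalization of shifted Legendre polynomials, and it is the source of the weights 1, 3, 5, 7 in Lemma 3 (i). The sketch below evaluates the Gram matrix of \(l_0,\ldots ,l_3\) by Simpson's rule. The interval and helper names are illustrative.

```python
def simpson(fun, a, b, n=2000):
    """Composite Simpson rule on [a, b]; n is rounded up to an even number."""
    n += n % 2
    h = (b - a) / n
    total = fun(a) + fun(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * fun(a + i * h)
    return total * h / 3


def legendre_on_interval(alpha, beta):
    """l_0, ..., l_3 on [alpha, beta], written as in Appendix 1."""
    d, s = beta - alpha, alpha + beta
    return [
        lambda v: 1.0,
        lambda v: (2 * v - s) / d,
        lambda v: (6 * v ** 2 - 6 * s * v + (alpha ** 2 + 4 * alpha * beta + beta ** 2)) / d ** 2,
        lambda v: (20 * v ** 3 - 30 * s * v ** 2
                   + 12 * (alpha ** 2 + 3 * alpha * beta + beta ** 2) * v
                   - (alpha ** 3 + 9 * alpha ** 2 * beta + 9 * alpha * beta ** 2 + beta ** 3))
                  / d ** 3,
    ]


def gram(alpha, beta, n=2000):
    """Matrix of the integrals of l_i(v) * l_j(v) over [alpha, beta]."""
    ls = legendre_on_interval(alpha, beta)
    return [[simpson(lambda v: li(v) * lj(v), alpha, beta, n) for lj in ls] for li in ls]
```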

7 Appendix 2: Proof of Lemma 5

Conditions (i), (ii) and (iii) together constitute Lemma 4 of [45]. We now prove the case of conditions (i), (ii) and (iii)\('.\) If \(a_2\ge 0,\) then \(f(x)\) is convex, so (i) and (ii) ensure \(f(x)<0, \forall x\in [\alpha ,\beta ].\) Otherwise, \(a_2<0\) and \(f(x)\) is concave, with \(\dot{f}(x)=2a_2x+a_1,\ \dot{f}(\alpha )=2a_2\alpha +a_1,\ \ddot{f}(x)=2a_2<0.\) By Taylor's formula, there exists a real scalar \(\theta \) between x and \(\alpha \) such that

Notice that g(x) is convex in x. Then \(g(\alpha )=f(\alpha )<0\) follows from (i), and \(g(\beta )=-(\beta -\alpha )^2a_2+f(\beta )<0\) follows from (iii)\('\). Hence \(g(x)<0, \forall x\in [\alpha ,\beta ].\) Since \(f(x)\le g(x),\) this completes the proof of Lemma 5.
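The expansion referred to above is missing from this text. The following reconstruction is our reading of the surrounding argument, consistent with the stated values of \(g(\alpha )\) and \(g(\beta )\); the original display may be written differently:

$$\begin{aligned} f(x)&=f(\alpha )+\dot{f}(\alpha )(x-\alpha )+\frac{1}{2}\ddot{f}(\theta )(x-\alpha )^2=f(\alpha )+\dot{f}(\alpha )(x-\alpha )+a_2(x-\alpha )^2\\&\le f(\alpha )+\dot{f}(\alpha )(x-\alpha )=:g(x),\\ g(\beta )&=f(\alpha )+\dot{f}(\alpha )(\beta -\alpha )=f(\beta )-a_2(\beta -\alpha )^2. \end{aligned}$$

The inequality uses \(a_2<0\). With this choice, g is affine in x, which is a special case of the convexity invoked in the proof.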

8 Appendix 3: Proof of Theorem 1

Based on Assumption 1, the following inequality holds for any \(j\in {\mathcal {N}}\) and \(\varsigma ,\zeta \in {\mathbb {R}}\) with \(\varsigma \ne \zeta \)

Thus, for any positive scalars \( u^{\imath }_{pj} ({\imath }=1,2;p=1,2,\ldots ,m-1;\ j\in {\mathcal {N}})\), the following inequalities hold

Denoting \(U^{\imath }_{p}={\mathrm{diag}}\{u^{\imath }_{p1},u^{\imath }_{p2},\ldots ,u^{\imath }_{pn}\}\ ({\imath }=1,2;\ p=1,2,\ldots ,m-1),\) we derive the following inequalities

Summing both sides of the above inequalities from \(p=1\) to \(m-1\) gives

where \(U_{{\imath }}={\mathrm{diag}}\{U^{\imath }_{1},U^{\imath }_{2},\ldots ,U^{\imath }_{m-1}\}\ ({\imath }=1,2).\) Similarly, the following inequality holds

with \(W_{{\imath }}={\mathrm{diag}}\{W^{\imath }_{1},W^{\imath }_{2},\ldots ,W^{\imath }_{m-1}\},\ W^{\imath }_{p}={\mathrm{diag}}\{w^{\imath }_{p1},w^{\imath }_{p2},\ldots ,w^{\imath }_{pn}\}>0\ ({\imath }=1,2;\ p=1,2,\ldots ,m-1).\)

Motivated by [14] and [31], we consider the following Lyapunov functional

where

with

It follows from the assumptions and inequalities (10)–(11) that \(V(t,{\mathbf {y}}_{t})\ge 0\) for any \(t\ge 0\) with \(0\le {\omega }(t)\le {\bar{\omega }}.\)

The time derivative of \(V(t,{\mathbf {y}}_{t})\) along the system (7) can be calculated as

where

Based on the fact that \(\int _{a}^{b}\dot{f}(s){\mathrm{d}}s = f (b)-f (a) ,\) the equality

holds for any symmetric matrix G, where \(\rho (s)\) is a vector function (see [23]). Thus, the following equality is also true

where

For \(0<{\omega }(t)<{\bar{\omega }},\) applying the Wirtinger-based integral inequality [30] to the \({\mathcal {Q}}\)- and \(\mathcal {G}_1\)-dependent integral terms gives

Similarly, for \(0<{\omega }(t)<{\bar{\omega }},\) we get

Applying Lemma 4 and the Newton–Leibniz formula to the \(Q_7\)-dependent integral term gives

For \(0<{\omega }(t)<{\bar{\omega }},\) applying Lemma 3 to the \(Q_8\)-dependent integral term yields

It is easy to verify the following equations

For \(0<{\omega }(t)<{\bar{\omega }},\) applying Lemma 3 to the \(Q_9\)- and \(Q_{10}\)-dependent integral terms gives

Denote \(\epsilon =\frac{\omega (t)}{{{\bar{\omega }}}}.\) For \(0<{\omega }(t)<{\bar{\omega }},\) the following inequality holds based on condition (8) and [25]

Therefore, for \(0<{\omega }(t)<{\bar{\omega }},\) we get

Also from

for any matrix M with proper dimension we get

Similar to (10)–(11), we propose

with \({F}_g(t)=\omega (t)F_{1g}+[{\bar{\omega }}-\omega (t)]F_{2g},{R}_g(t)=\omega (t)R_{1g}+[{\bar{\omega }}-\omega (t)]R_{2g},g=1,2,3.\)

Thus the following inequalities are acquired

Noticing networks (5), the following zero equality holds for any positive matrix H

By Lemmas 1 and 2, we have: \({\mathbf {J}}{\mathbf {A}}={\mathbf {A}}'{\mathbf {J}}=J\otimes A,\ {\mathbf {J}}{\mathbf {v}}(t)=0.\)

As \(D_1,D_2\in {\mathcal {T}}({\mathbb {R}},0),\) applying Lemma 2 yields that \(JD_{\imath }=(J{D_\imath }P)J,\imath =1,2.\) On the basis of Lemmas 1 and 2, the following equalities are correct: \({\mathbf {J}}{\mathbf {D}}_\imath =(J\otimes I_n)({D}_\imath \otimes {\varLambda _\imath })=(J{D_\imath })\otimes {\varLambda _\imath }=[(J{D_\imath }P)J]\otimes ({\varLambda _\imath }I_n)=[(J{D_\imath }P)\otimes {\varLambda _\imath }](J\otimes I_n)={{\mathbf {D}}'_\imath }{\mathbf {J}}.\)
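The identity \({\mathbf {J}}{\mathbf {D}}_\imath ={{\mathbf {D}}'_\imath }{\mathbf {J}}\) can be checked on a small instance. The displayed definitions of J and P are missing here, so the sketch assumes their standard forms: J has rows \((\ldots ,1,-1,\ldots )\), and P is the upper-triangular matrix of ones with a zero last row. It uses a hypothetical nonsymmetric outer coupling matrix with zero row sums and a hypothetical inner coupling matrix \(\varLambda \).

```python
def kron(X, Y):
    """Kronecker product of matrices stored as lists of rows."""
    return [[x * y for x in xrow for y in yrow] for xrow in X for yrow in Y]


def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def identity(n):
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]


def make_J(m):
    # assumed: row r has +1 in column r and -1 in column r + 1
    return [[1 if c == r else (-1 if c == r + 1 else 0) for c in range(m)]
            for r in range(m - 1)]


def make_P(m):
    # assumed: P[r][c] = 1 for c >= r and the last row is zero, so that J P = I_{m-1}
    return [[1 if c >= r else 0 for c in range(m - 1)] for r in range(m)]


def lifted_identity_holds(D, Lam):
    """Check kron(J, I_n) kron(D, Lam) == kron(J D P, Lam) kron(J, I_n)."""
    m, n = len(D), len(Lam)
    J, P = make_J(m), make_P(m)
    bold_J = kron(J, identity(n))
    lhs = matmul(bold_J, kron(D, Lam))
    D_prime = kron(matmul(matmul(J, D), P), Lam)
    return lhs == matmul(D_prime, bold_J)
```

The identity holds for zero-row-sum couplings such as \(D=\begin{pmatrix}-2&1&1\\0&-1&1\\3&0&-3\end{pmatrix}\). It generally fails when the row sums differ, mirroring the requirement \(D_\imath \in {\mathcal {T}}({\mathbb {R}},0)\).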

Note that

and

Similarly we obtain

In addition

Applying inequalities (9) and (37)-(42) to equality (36) yields

Combining (12)–(35) and (43) gives

where \(\varGamma (r)=({{\bar{\omega }}}-r)\varGamma _1+r\varGamma _2\) with

From Lemma 3, it is easy to verify that inequality (44) still holds for \({\omega }(t)=0\) or \({\omega }(t)={\bar{\omega }}.\)

Since \({\varXi }(\omega (t),\dot{\omega }(t))+\varGamma (\omega (t))\) is affine in \(\dot{\omega }(t),\) the condition \({\varXi }(\omega (t),\dot{\omega }(t))+\varGamma (\omega (t))<0\) is equivalent to the two boundary conditions \({\varXi }(\omega (t),\omega _\imath )+\varGamma (\omega (t))<0\ (\imath =1,2),\) one for \(\dot{\omega }(t)=\omega _1\) and the other for \(\dot{\omega }(t)=\omega _2.\) For each fixed \(\imath =1,2,\) \({\varXi }(\omega (t),\omega _\imath )+\varGamma (\omega (t))\) is a quadratic matrix function of \(\omega (t)\). Hence, by Lemma 5, the condition \({\varXi }(\omega (t),\omega _\imath )+\varGamma (\omega (t))<0\) is guaranteed by either of two groups of conditions: \(\varXi _{\rho \imath }+\varGamma _\rho <0\) with \(\rho =1,2,3,\) or the same inequalities with \(\rho =1,2,4.\)

By the Schur complement lemma, for each \(\imath =1,2,\) \(\varXi _{\rho \imath }+\varGamma _\rho <0\) is equivalent to \({\widetilde{\varXi }}_{\rho \imath }<0,\ \rho =1,2,3,4.\) Thus, the conditions of Theorem 1 imply \(\dot{V}(t,{\mathbf {y}}_{t})<0\) for all \(t\ge 0.\) Therefore, system (7) is globally robustly synchronized. This completes the proof of Theorem 1.
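This last step combines two reductions:

- Affinity in \(\dot{\omega }(t)\) reduces the condition to the vertices \(\dot{\omega }(t)=\omega _1,\omega _2\).
- Lemma 5 reduces each quadratic dependence on \(\omega (t)\in [0,{\bar{\omega }}]\) to the values at \(0\) and \({\bar{\omega }}\), plus the corrected terms that play the role of \(\varXi _{3\imath }\) and \(\varXi _{4\imath }\).

The sketch below is only a scalar analogue: a hypothetical scalar function phi stands in for the matrix \({\varXi }+\varGamma \). In the matrix case, the same reasoning applies to \(x^T(\cdot )x\) for every fixed vector x. The leading coefficient in \(\omega \) is recovered from three samples. As in Theorem 1, one group (\(\rho =1,2,3\) or \(\rho =1,2,4\)) must hold at both vertices. The bounds \({\bar{\omega }}=0.8,\omega _1=-0.8,\omega _2=0.8\) are borrowed from Example 1 purely for illustration.

```python
def certify_box(phi, w_bar, wd_min, wd_max):
    """Scalar analogue of the final step in the proof of Theorem 1.

    phi(w, wd) is assumed affine in wd and quadratic in w. Returns True if one of the
    two vertex groups certifies phi < 0 on [0, w_bar] x [wd_min, wd_max].
    """
    values = []
    for wd in (wd_min, wd_max):                     # vertices of the derivative bound
        v1 = phi(0.0, wd)                           # analogue of Xi_1 (w = 0)
        v2 = phi(w_bar, wd)                         # analogue of Xi_2 (w = w_bar)
        mid = phi(w_bar / 2, wd)
        a2 = 2 * (v1 - 2 * mid + v2) / w_bar ** 2   # coefficient of w^2
        v3 = v1 - w_bar ** 2 * a2                   # analogue of Xi_3
        v4 = v2 - w_bar ** 2 * a2                   # analogue of Xi_4
        values.append((v1, v2, v3, v4))
    group1 = all(v1 < 0 and v2 < 0 and v3 < 0 for v1, v2, v3, _ in values)
    group2 = all(v1 < 0 and v2 < 0 and v4 < 0 for v1, v2, _, v4 in values)
    return group1 or group2
```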

References

Bao H, Park JH, Cao J (2016) Exponential synchronization of coupled stochastic memristor-based neural networks with time-varying probabilistic delay coupling and impulsive delay. IEEE Trans Neural Netw Learn Syst 27(1):190–201

Bao H, Park JH, Cao J (2015) Matrix measure strategies for exponential synchronization and anti-synchronization of memristor-based neural networks with time-varying delays. Appl Math Comput 270:543–556

Chen C, Li L, Peng H, Yang Y, Li T (2018) Synchronization control of coupled memristor-based neural networks with mixed delays and stochastic perturbations. Neural Process Lett 47(2):679–696

Chen J, Xu S, Chen W, Zhang B, Ma Q, Zou Y (2016) Two general integral inequalities and their applications to stability analysis for systems with time-varying delay. Int J Robust Nonlinear Control 26:4088–4103

Chua LO (1971) Memristor-the missing circuit element. IEEE Trans Circuit Theory 18:507–519

Gu K (2000) An integral inequality in the stability problem of time-delay systems. In: Proceedings of 39th IEEE conference on decision and control, pp 2805–2810

Guo Z, Yang S, Wang J (2015) Global exponential synchronization of multiple memristive neural networks with time delay via nonlinear coupling. IEEE Trans Neural Netw Learn Syst 26(6):1300–1311

Ho Y, Huang GM, Li P (2011) Dynamical properties and design analysis for nonvolatile memristor memories. IEEE Trans Circuits Syst I Reg Pap 58(4):724–736

Horn RA, Johnson CR (1990) Matrix analysis. Cambridge University Press, Cambridge

Jiang M, Mei J, Hu J (2015) New results on exponential synchronization of memristor-based chaotic neural networks. Neurocomputing 156:60–67

Jiang M, Wang S, Mei J, Shen Y (2015) Finite-time synchronization control of a class of memristor-based recurrent neural networks. Neural Netw 63:133–140

Kim JH (2016) Further improvement of Jensen inequality and application to stability of time-delayed systems. Automatica 64:121–125

Lee TH, Park JH, Park M-J, Kwon O-M, Jung H-Y (2015) On stability criteria for neural networks with time-varying delay using Wirtinger-based multiple integral inequality. J Frankl Inst 352(12):5627–5645

Lee TH, Trinh HM, Park JH (2018) Stability analysis of neural networks with time-varying delay by constructing novel Lyapunov functionals. IEEE Trans Neural Netw Learn Syst 29(9):4238–4247

Li C, Zhang Y, Xie EY (2019) When an attacker meets a cipher-image in 2018: a year in review. J Inf Secur Appl 48:102361

Li N, Cao J (2018) Synchronization criteria for multiple memristor-based neural networks with time delay and inertial term. Sci China Technol Sci 61(4):612–622

Liu Y, Wang Z, Liang J, Liu X (2013) Synchronization of coupled neutral-type neural networks with jumping-mode-dependent discrete and unbounded distributed delays. IEEE Trans Cybern 43(1):102–114

Liu Y, Wang Z, Liu X (2006) Global exponential stability of generalized recurrent neural networks with discrete and distributed delays. Neural Netw 19(5):667–675

Liu Z, Zhang H, Zhang Q (2010) Novel stability analysis for recurrent neural networks with multiple delays via line integral-type L-K functional. IEEE Trans Neural Netw 21(11):1710–1718

Lu R, Yu W, Lu J, Xue A (2014) Synchronization on complex networks of networks. IEEE Trans Neural Netw Learn Syst 25(11):2110–2118

Ma Q, Xu S, Zou Y (2011) Stability and synchronization for Markovian jump neural networks with partly unknown transition probabilities. Neurocomputing 74(17):3404–3411

Park MJ, Kwon OM, Park JH, Lee SM, Cha EJ (2015) Stability of time-delay systems via Wirtinger-based double integral inequality. Automatica 55(1):204–208

Park MJ, Kwon OM, Ryu JH (2018) Advanced stability criteria for linear systems with time-varying delays. J Frankl Inst 355:520–543

Park MJ, Kwon OM, Ryu JH (2018) Passivity and stability analysis of neural networks with time-varying delays via extended free-weighting matrices integral inequality. Neural Netw 106:67–78

Park P, Ko JW, Jeong C (2011) Reciprocally convex approach to stability of systems with time-varying delays. Automatica 47(1):235–238

Park P, Lee W-I, Lee S-Y (2015) Auxiliary function-based integral inequalities for quadratic functions and their applications to time-delay systems. J Frankl Inst 352(4):1378–1396

Pecora L, Carroll T (1990) Synchronization in chaotic systems. Phys Rev Lett 64:821–824

Rakkiyappan R, Udhaya Kumari E, Chandrasekar A, Krishnasamy R (2016) Synchronization and periodicity of coupled inertial memristive neural networks with supremums. Neurocomputing 214:739–749

Seuret A, Gouaisbaut F (2015) Hierarchy of LMI conditions for the stability analysis of time delay systems. Syst Control Lett 81:1–7

Seuret A, Gouaisbaut F (2013) Wirtinger-based integral inequality: application to time-delay systems. Automatica 49:2860–2866

Shao H, Li H, Zhu C (2017) New stability results for delayed neural networks. Appl Math Comput 311:324–334

Song B, Park JH, Wu Z-G, Zhang Y (2012) Global synchronization of stochastic delayed complex networks. Nonlinear Dyn 70(4):2389–2399

Song Y, Wen S (2015) Synchronization control of stochastic memristor-based neural networks with mixed delays. Neurocomputing 156:121–128

Strukov DB, Snider GS, Stewart DR, Williams RS (2008) The missing memristor found. Nature 453:80–83

Wang G, Shen Y (2014) Exponential synchronization of coupled memristive neural networks with time delays. Neural Comput Appl 24(6):1421–1430

Wang W, Li L, Peng H, Xiao J, Yang Y (2014) Stochastic synchronization of complex network via a novel adaptive nonlinear controller. Nonlinear Dyn 76(1):591–598

Wang X, Li C, Huang T, Chen L (2015) Dual-stage impulsive control for synchronization of memristive chaotic neural networks with discrete and continuously distributed delays. Neurocomputing 149(B):621–628

Wang Y, Zhang H, Wang X, Yang D (2010) Networked synchronization control of coupled dynamic networks with time-varying delay. IEEE Trans Syst Man Cybern B Cybern 40(6):1468–1479

Wu A, Wen S, Zeng Z (2012) Synchronization control of a class of memristor-based recurrent neural networks. Inf Sci 183(1):106–116

Wu A, Wen S, Zeng Z, Zhu X, Zhang J (2011) Exponential synchronization of memristor-based recurrent neural networks with time delays. Neurocomputing 74(17):3043–3050

Wu A, Zeng Z (2013) Anti-synchronization control of a class of memristive recurrent neural networks. Commun Nonlinear Sci Numer Simul 18(2):373–385

Wu CW, Chua LO (1995) Synchronization in an array of linearly coupled dynamical systems. IEEE Trans Circuits Syst I Fundam Theory Appl 42(8):430–447

Yang S, Guo Z, Wang J (2015) Synchronization of multiple memristive neural networks with uncertain parameters via nonlinear coupling. IEEE Trans Syst Man Cybern Syst 45(7):1077–1086

Yuan M, Luo X, Wang W, Li L, Peng H (2019) Pinning synchronization of coupled memristive recurrent neural networks with mixed time-varying delays and perturbations. Neural Process Lett 49(1):239–262

Zhang F, Li Z (2018) Auxiliary function-based integral inequality approach to robust passivity analysis of neural networks with interval time-varying delay. Neurocomputing 306:189–199

Zhang G, Shen Y (2013) New algebraic criteria for synchronization stability of chaotic memristive neural networks with time-varying delays. IEEE Trans Neural Netw Learn Syst 24(10):1701–1707

Zhang G, Shen Y, Yin Q, Sun J (2013) Global exponential periodicity and stability of a class of memristor-based recurrent neural networks with multiple delays. Inf Sci 232:386–396

Zhang H, Gong D, Chen B, Liu Z (2013) Synchronization for coupled neural networks with interval delay: a novel augmented Lyapunov–Krasovskii functional method. IEEE Trans Neural Netw Learn Syst 24(1):58–70

Zhang H, Liu Z, Huang G-B, Wang Z (2010) Novel weighting-delay-based stability criteria for recurrent neural networks with time-varying delay. IEEE Trans Neural Netw 21(1):91–106

Zhang H, Yang F, Liu X, Zhang Q (2013) Stability analysis for neural networks with time-varying delay based on quadratic convex combination. IEEE Trans Neural Netw Learn Syst 24(4):513–521

Zhang W, Li C, Huang T, Huang J (2016) Stability and synchronization of memristor-based coupling neural networks with time-varying delays via intermittent control. Neurocomputing 173(3):1066–1072

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Grant Nos. 61273022, 61433004, 61627809) and the Research Foundation of Department of Education of Liaoning Province (No. JDL2017031).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest with respect to this work.

About this article

Cite this article

Zheng, CD., Zhang, L. & Zhang, H. Global synchronization of memristive hybrid neural networks via nonlinear coupling. Neural Comput & Applic 33, 2873–2887 (2021). https://doi.org/10.1007/s00521-020-05166-1

DOI: https://doi.org/10.1007/s00521-020-05166-1