Abstract

This paper addresses synchronization control for stochastic memristive systems. Within the framework of Lyapunov functionals, stability theory, and the free-weighting matrix technique, new solvability criteria are established that ensure exponential synchronization of the target model. The introduction of free-weighting matrices makes the resulting synchronization criteria more widely applicable. Finally, a numerical example is included to show the effectiveness of the presented methodology.

1 Introduction

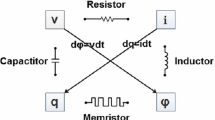

The memristor was first proposed in 1971 [1], based on a nonlinear relationship between charge q and magnetic flux \(\varphi \). Shortly afterward, this new device was employed in a system, which drew a strong response worldwide [2]. However, owing to the particular nature of this circuit element, a long time passed between its proposal and its physical implementation: a device with memristive characteristics did not become available until 2008, and this milestone drew increasing attention to memristors [3].

Recent research has attracted unprecedented worldwide interest in memristors. As shown by S. Williams and coworkers, the solid-state memristor can be used to realize crossbar latches, which may replace transistors in future computers. This application rests on the nonvolatile nature of the memristor, i.e., the amount of charge that has passed through the device determines its resistance. This discovery promises applications in next-generation memory technology, especially in next-generation computers, which could start up instantly with no need for a “booting time”.

Neural networks are a powerful tool for dealing with practical problems [4,5,6,7,8,9,10], and the related results have attracted growing attention from researchers [11,12,13,14,15,16,17,18,19,20,21,22,23,24,25]. Among these, fixed-time synchronization of memristive systems was considered in [9], finite-time synchronization of coupled models was explored in [11], and control criteria based on passivity theory were addressed in [25].

However, in practice, the effects of external interference are inevitable: small fluctuations in the environment (such as temperature, humidity, air pressure, air flow, electric field, or magnetic field) may destroy the stability of a system. In addition, friction, lubrication, force, elastic deformation, and other fluctuations within the system may also be important sources of instability for the control system. This constitutes another motivation of this paper.

It is worth mentioning that very few results address the dynamic analysis of memristive systems [26,27,28,29,30,31,32]. Among these, dissipativity results for a stochastic memristive model were addressed in [26], and [27] and [32] were concerned with memristive models with Markovian jumps. Most of the existing results employ differential inclusion theory and Filippov solutions. However, the special characteristics of the memristive model may lead to parameters that are not compatible for different initial values. To overcome this shortcoming, a new robust algorithm is proposed, under which the target model can be treated as a class of systems with uncertain parameters; this constitutes another main element of this paper.

Based on the above analysis, this paper investigates the exponential synchronization (ES) of a stochastic memristive model. The contributions of this paper can be summarized as follows: (i) the effect of the stochastic disturbance is considered; (ii) considering the specific nature of the memristor, the target network is transformed into an uncertain-parameter model, so the derived results can also be applied to this kind of problem.

Notations: The symbol \(*\) denotes a term induced by symmetry in a symmetric matrix. \((\varvec{\Omega }, \mathcal {F}, \mathcal {P})\) is the probability space, where \( \varvec{\Omega }\) is the sample space, \(\mathcal {F}\) is the \(\sigma \)-algebra of subsets of the sample space, and \(\mathcal {P} \) is the probability measure on \(\mathcal {F}\); \(\mathbb {E}\) refers to the expectation operator with respect to the probability measure \(\mathcal {P} \). Set \(\overline{a}_{ij}=\max \{ a_{ij}^{\star } , a_{ij}^{\star \star } \}\), \(\underline{a}_{ij}=\min \{ a_{ij}^{\star } , a_{ij}^{\star \star } \}\), \(a^+_{ij}=\frac{1}{2}(\overline{a}_{ij}+\underline{a}_{ij})\), \(a^-_{ij}=\frac{1}{2}(\overline{a}_{ij}-\underline{a}_{ij})\), \(\overline{b}_{ij}=\max \{ b_{ij}^{\star } , b_{ij}^{\star \star } \}\), \(\underline{b}_{ij}=\min \{ b_{ij}^{\star }, b_{ij}^{\star \star } \}\), \(b^+_{ij}=\frac{1}{2}(\overline{b}_{ij}+\underline{b}_{ij})\), \(b^-_{ij}=\frac{1}{2}(\overline{b}_{ij}-\underline{b}_{ij})\).

2 Model Description and Preliminaries

The stochastic memristive model under consideration is given in the following form:

where x(t) signifies the neuron states, \(H=\text {diag}(h_{ 1},h_{ 2},\cdots ,h_{ n})>0\), \(H_1\), \(H_2\), \(H_3\) and \(H_4\) are connection matrices, \(A(t )=(a_{ij}(t ))_{n \times n}\) and \(B(t )=(b_{ij}(t ))_{n \times n}\) are the connection weight matrices, f(x(t)) denotes the neuron activation function at time t, and \(\omega (t)\) is a one-dimensional Brownian motion on \((\varvec{\Omega }, \mathcal {F}, \mathcal {P})\) satisfying \(\mathbb {E}\{d\omega (t)\}=0\), \(\mathbb {E}\{d\omega (t)^2\}=dt\). The time-varying delay \(\delta (t)\) is restricted by

where \(\mu >0\) is a scalar, and

\(a_{ij}^\star , a_{ij}^{\star \star }, b_{ij}^\star , b_{ij}^{\star \star }\) are scalars.
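The two moment conditions on \(\omega (t)\) are what a discretized simulation of such a model relies on: over a step of length dt, the increment is drawn as a zero-mean Gaussian with variance dt. The following Python sketch checks this empirically. The step size, the sample count, and the helper name `brownian_increments` are illustrative assumptions and are not taken from the paper.

```python
import math
import random


def brownian_increments(n, dt, seed=0):
    """Draw n independent increments d_omega ~ N(0, dt) of a scalar Brownian motion."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    sigma = math.sqrt(dt)      # standard deviation of one increment
    return [rng.gauss(0.0, sigma) for _ in range(n)]


dt = 0.01                      # illustrative step size (assumption)
incs = brownian_increments(100_000, dt)

sample_mean = sum(incs) / len(incs)                   # estimates E{d_omega}
sample_second = sum(d * d for d in incs) / len(incs)  # estimates E{d_omega^2}

print(f"mean of increments         ~ {sample_mean:.5f} (expected 0)")
print(f"mean of squared increments ~ {sample_second:.5f} (expected dt = {dt})")
```

With enough samples, both estimates should settle near 0 and dt respectively, which is the discrete counterpart of the conditions stated above.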

Lemma 2.1

For real matrices \(\underline{A }\in \mathbb {R}^{n \times n}\), \(\bar{A} \in \mathbb {R}^{n \times n}\), and \(A(t)\in [\underline{A }, \bar{A}]\), there exist matrices G, H and F(t) satisfying:

and

Proof

Let \(\underline{A }=(\underline{a}_{ij})_{n \times n}\), \(\bar{A}=(\bar{a}_{ij})_{n \times n}\), and \(A(t)=(a_{ij}(t))_{n \times n}\). Since \(A(t)\in [\underline{A }, \bar{A }]\), one has

Let \(\psi (x)=\frac{\bar{a}_{ij} +\underline{a}_{ij}}{2}+\frac{\bar{a}_{ij} - \underline{a}_{ij} }{2}x\), which implies that \(\psi (-1)=\underline{a}_{ij} \) and \(\psi ( 1)=\bar{a}_{ij} \). Since \(\psi \) is continuous and \(a_{ij}(t)\in [\underline{a}_{ij}, \bar{a}_{ij}]\), the Intermediate Value Theorem shows that there exists \(F_{ij}(t)\in [-1,1]\) such that:

i.e.,

Let \(G=[G_1, G_2, \cdots , G_n]\), \(H=[H_1, H_2, \cdots , H_n]\), \(F(t)=\text {diag}\{F_{11}(t), \cdots , F_{1n}(t), \cdots , F_{ n1}(t), \cdots , F_{nn}(t)\}\), where

and \(\lambda \in [0,1]\). Obviously, \(F^T(t)F(t)\le I\), and \(A(t)=\frac{1}{2}(\underline{A} +\bar{A} )+G F(t) H\).
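The entrywise idea behind this decomposition can be sketched in Python: each weight \(a_{ij}(t)\) is written as the midpoint \(a^+_{ij}\) plus the radius \(a^-_{ij}\) times a factor \(F_{ij}(t)\in [-1,1]\), using the quantities defined in the Notations. This is a minimal sketch of the entrywise form only; it does not construct the factor matrices G and H, and the numerical values and function names below are hypothetical.

```python
def decompose_entry(a, a_star, a_2star):
    """Return (midpoint, radius, F) with a = midpoint + radius * F and |F| <= 1."""
    lo, hi = min(a_star, a_2star), max(a_star, a_2star)
    if not lo <= a <= hi:
        raise ValueError("entry lies outside its interval")
    mid = 0.5 * (hi + lo)   # plays the role of a^+_{ij}
    rad = 0.5 * (hi - lo)   # plays the role of a^-_{ij}
    # A degenerate interval has no uncertainty, so any F works; choose 0.
    F = 0.0 if rad == 0.0 else (a - mid) / rad
    F = max(-1.0, min(1.0, F))  # guard against floating-point overshoot
    return mid, rad, F


def decompose_matrix(A, A_star, A_2star):
    """Apply decompose_entry to every entry; returns three matrices (lists of lists)."""
    n, m = len(A), len(A[0])
    parts = [[decompose_entry(A[i][j], A_star[i][j], A_2star[i][j])
              for j in range(m)] for i in range(n)]
    mid = [[p[0] for p in row] for row in parts]
    rad = [[p[1] for p in row] for row in parts]
    F = [[p[2] for p in row] for row in parts]
    return mid, rad, F


# Hypothetical switching values a*_{ij}, a**_{ij} and a current weight matrix A(t).
A_star = [[1.0, -2.0], [0.5, 3.0]]
A_2star = [[2.0, -1.0], [0.5, 1.0]]
A_t = [[1.0, -1.0], [0.5, 2.5]]

mid, rad, F = decompose_matrix(A_t, A_star, A_2star)
rebuilt = [[mid[i][j] + rad[i][j] * F[i][j] for j in range(2)] for i in range(2)]
print("F(t) entries:", F)
print("rebuilt A(t):", rebuilt)
```

Every entry of `F` stays in [-1, 1], which is the entrywise counterpart of the bound \(F^T(t)F(t)\le I\) on the diagonal matrix F(t).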

Thus, system (1) can be rewritten as:

where

where \(\zeta _i\in \mathbb {R}^n\) is the column vector whose ith element is 1 and whose other elements are 0; besides, \(\Theta _i^T(t)\Theta _i(t)\le I\), \(i=1,2\).

To reach the synchronization goal, the response system is designed as:

where u(t) is the controller used to reach the synchronization goal. Then, repeating the above analysis, one can easily see that the response system (4) can be further rewritten as:

where \(\Theta _k^T(t )\Theta _k(t )\le I\), \(k=3,4\).

Denote the error expression as:

then, the detailed description of the error system can be illustrated as:

where \(g(\theta (\cdot ))=f(y( \cdot ))-f(x( \cdot ))\), the initial condition of (6) is given by:

Before moving on, the following two new state variables for the error memristive neural network (6) are presented:

then, the error model (6) can be modified as:

\((A_1)\): For \(x_1, x_2 \in \mathbb {R}\), \(x_1\ne x_2\), the neuron activation function \(f_i(\cdot )\) satisfies:

where \(\beta _i^-\), \(\beta _i^+\), \(F_i>0\) are scalars. \(\square \)

Definition 2.1

The trivial solution of (6) is exponentially stable in the mean square if there exist constants \(\gamma > 0\), \( \varrho >0\), such that

is true.
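To make the notion of mean-square exponential stability concrete, the following Python sketch simulates a scalar toy equation, \(d\theta =-a\theta \,dt+\sigma \theta \,d\omega \), which is not the error system (6) but has the known second moment \(\mathbb {E}\{\theta ^2(t)\}=\theta _0^2 e^{-(2a-\sigma ^2)t}\). The parameters a, \(\sigma \), \(\theta _0\), the step size, and the Euler–Maruyama discretization are all assumptions chosen for illustration.

```python
import math
import random


def mean_square_path(a, sigma, theta0, dt, steps, paths, seed=1):
    """Euler-Maruyama estimate of E[theta(t)^2] for d(theta) = -a*theta*dt + sigma*theta*d(omega)."""
    rng = random.Random(seed)
    sq_dt = math.sqrt(dt)
    sums = [0.0] * (steps + 1)  # running sums of theta^2 at each time step
    for _ in range(paths):
        theta = theta0
        sums[0] += theta * theta
        for k in range(1, steps + 1):
            d_omega = rng.gauss(0.0, sq_dt)  # Brownian increment ~ N(0, dt)
            theta += -a * theta * dt + sigma * theta * d_omega
            sums[k] += theta * theta
    return [s / paths for s in sums]


a, sigma, theta0 = 2.0, 0.5, 1.0  # toy parameters (assumptions)
dt, steps = 0.01, 100             # time horizon T = steps * dt = 1
ms = mean_square_path(a, sigma, theta0, dt, steps, paths=2000)

rate = 2 * a - sigma ** 2         # exact decay rate of E[theta^2] for this toy SDE
exact_T = theta0 ** 2 * math.exp(-rate * dt * steps)
print(f"simulated E[theta(T)^2] ~ {ms[-1]:.4f}, closed form {exact_T:.4f}")
```

For this toy equation the decay rate is positive whenever \(2a>\sigma ^2\), so the simulated second moment should shrink roughly like the closed-form exponential, up to discretization and sampling error.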

Lemma 2.2

([33]) For matrices \(\mathfrak {U}\), \(\mathfrak {H}\) , and symmetric matrix \(\mathfrak {R}\),

is true if and only if

holds, where \(\epsilon >0\), \(\mathfrak {F}^T\mathfrak {F}<I\).

Lemma 2.3

([33]) Given matrices \(\Delta _1,\Delta _2,\Delta _3\) where \(\Delta _1=\Delta _1^T\), \(0<\Delta _2=\Delta _2^T\), then

if and only if

3 Main Conclusions

The following results concern the ES control of the memristive model with stochastic terms. To reach this goal, the following control strategy is adopted:

where \(\Gamma =\text {diag}\{r_1, r_2, \cdots , r_n\}\).

Theorem 3.1

Under \((A_1)\), the trivial solution of (6) is exponentially stable in mean square if there exist positive definite matrices P, Q, diagonal matrices \(M_1>0\), \(M_2>0\), a matrix \(N= (N_1, N_2, N_3, N_4, N_5)^T\), and constants \(\varsigma _1>0\), \(\varsigma _2>0\), such that:

where

The parameters in (9) are subject to the following restriction:

and the control gain can be computed as \(k=P^{-1}R\).
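Numerically, the gain \(k=P^{-1}R\) is usually obtained by solving the linear system \(Pk=R\) rather than by forming the inverse explicitly. The Python sketch below does this with a small Gauss-Jordan routine; the matrices `P` and `R` are hypothetical placeholders and are not a solution of the conditions in Theorem 3.1.

```python
def solve(P, R):
    """Solve P K = R by Gauss-Jordan elimination with partial pivoting."""
    n = len(P)
    # Augmented matrix [P | R], copied so the inputs stay untouched.
    aug = [list(map(float, P[i])) + list(map(float, R[i])) for i in range(n)]
    for col in range(n):
        # Pick the row with the largest pivot candidate for numerical stability.
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("P is singular to working precision")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]  # normalize the pivot row
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [vr - factor * vc for vr, vc in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]           # right block now holds K


# Hypothetical data: P is symmetric positive definite, R is arbitrary.
P = [[2.0, 1.0], [1.0, 3.0]]
R = [[4.0, 1.0], [7.0, 3.0]]

K = solve(P, R)
print("k = P^{-1} R =", K)
```

Multiplying `P` by the returned `K` should reproduce `R`, which gives a quick sanity check on any gain computed this way.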

Proof

Consider the following Lyapunov functional:

Then, by means of Itô’s differential formula, one has:

where

considering that:

thus, a more compact estimation of (14) can be described as:

Moreover, for the matrix N, the following relation holds:

From \((A_1)\) and the diagonal matrices \(M_1>0\), \(M_2>0\), one has:

Then, adding (16)–(18) to (13), yields:

where

with

then, taking \(\tilde{\Omega }_{14}\) and \(\tilde{\Omega }_{15}\) into consideration, \(\tilde{\Omega }\) can be further rewritten as:

by Lemmas 2.2 and 2.3, one can see that for any constants \(\varsigma _1>0\), \(\varsigma _2>0\), \( \Omega \) in (10) ensures

thus, the result derived in (19) yields:

Moreover, based on the expression of V(t), one can conclude that there exist two scalars \(\rho _1>0\), \(\rho _2>0\), such that:

where \(\rho _1=\lambda _{\max }(P)\), \(\rho _2=\lambda _{\max }(Q)\).

One knows that there must be a constant \(\beta >0\), such that:

therefore,

Thus, integrating both sides of (24) from 0 to \(T>0\) and taking the mathematical expectation gives:

while,

thus, considering the restriction expressed in (26), (25) can be further developed as:

as a result, one can see that:

i.e.,

Thus, based on Definition 2.1, the error system is exponentially stable in mean square. The proof is thus completed. \(\square \)

If the stochastic terms are removed from (1), then it reduces to a memristive model of the form:

then, repeating the above analysis gives:

To reach the ES goal, the response model can be described by:

equivalently to:

then, following the above procedure, the error system can be written as:

where u(t) has the same expression as defined in (9).

Corollary 3.1

Under \((A_1)\), if there exist positive definite matrices P, Q, diagonal matrices \(M_1>0\), \(M_2>0\), and constants \(\varsigma _1>0\), \(\varsigma _2>0\), such that:

where

then the trivial solution of (34) is exponentially stable. Besides, the parameters in (9) are subject to:

and \(k=P^{-1}R\).

Proof

By taking \(\phi (t)=0\), \(N=0\) in Theorem 3.1, the conclusion can be easily derived. \(\square \)

Remark 3.1

The work in [34] addresses the anti-synchronization control of stochastic memristive neural networks; however, from its main conclusions, one can see that the control algorithm depends on the dimension n of the target model, which is unreasonable considering that a memristive model is a very large-scale system. In [31], a stochastic memristive system is considered, but the model discussed there does not include delayed terms, so the derived conclusions ignore the effect of delays. Besides, [26, 35] concentrated on discrete-time stochastic memristive neural networks. It should be pointed out that the above results are derived based on differential inclusion theory, whereas in this paper, by means of Lemma 2.1, the memristive system is transformed into a model with uncertain terms.

4 Numerical Examples

One example and some simulation diagrams are furnished to illustrate the validity of the proposed findings.

Example 1

Consider a stochastic memristive model whose parameters are given by:

where

Thus, the above lines imply:

Now we evaluate the synchronization performance of the target model. The delay is given by \(\delta (t)=0.2+0.1\sin (t)\), and a straightforward calculation gives \(\tau =0.3\) and \(\mu =0.1\). Let \(f(s)= \tanh (s)+3\); then, according to assumption (\(A_1\)), one has \(\beta _j^-=2, \beta _j^+=4\) and \(F_j=4\). With the above parameters, a simple calculation gives the control parameter in (9) as:

For the numerical simulations, choose \(r_1= 4.5\), \(r_2= 3.5\), \(r_3=4.5\).
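The bounds on the delay used in this example can be checked numerically. Assuming that \(\tau \) and \(\mu \) denote upper bounds on \(\delta (t)\) and on its derivative (the restricting inequality itself is not reproduced in this text), the Python sketch below samples \(\delta (t)=0.2+0.1\sin (t)\) over one period and reports the tightest such bounds. The grid size and variable names are arbitrary choices made for illustration.

```python
import math


def delta(t):
    """Time-varying delay from Example 1."""
    return 0.2 + 0.1 * math.sin(t)


def delta_dot(t):
    """Analytic derivative of the delay."""
    return 0.1 * math.cos(t)


# Sample one full period [0, 2*pi] on a fine grid (grid size is an arbitrary choice).
n = 100_000
grid = [2.0 * math.pi * k / n for k in range(n + 1)]

delay_max = max(delta(t) for t in grid)     # tightest upper bound on delta(t)
delay_min = min(delta(t) for t in grid)     # confirms the delay stays positive
rate_max = max(delta_dot(t) for t in grid)  # tightest upper bound on the derivative

print(f"{delay_min:.3f} <= delta(t) <= {delay_max:.3f}, derivative <= {rate_max:.3f}")
```

Any value at least as large as the computed maximum of \(\delta (t)\) is a valid delay bound, and the maximum of the derivative agrees with \(\mu =0.1\).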

Moreover, by solving (10), part of the feasible solution is obtained as:

thus, k can be calculated as:

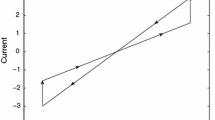

With the derived control gains, the simulation results are shown in Figs. 1, 2, 3, 4, 5 and 6. The dynamic behavior of the drive-response systems is given in Figs. 3, 4 and 5, and Fig. 6 describes the time responses of the synchronization error \(\theta _i(t)\), which tends to zero as t increases. Thus, the obtained control gains ensure that the error system converges to zero exponentially.

5 Conclusion

This paper introduced an exponential synchronization (ES) control methodology for a class of stochastic memristive neural networks. Within the framework of Lyapunov–Krasovskii functionals, stochastic stability theory, and the free-weighting matrix method, new solvability criteria are established to achieve ES of the target memristive systems. Considering the special characteristics of memristive systems, a new robust control algorithm was proposed, under which the target model can be treated as a system with uncertain parameters. Finally, the derived results are confirmed by a simulation example.

References

Chua LO (1971) Memristor-the missing circuit element. IEEE Trans Circuit Theory 18:507–519

Chua LO, Kang SM (1976) Memristive devices and systems. Proc IEEE 64:209–223

Strukov DB, Snider GS, Stewart DR, Williams RS (2008) The missing memristor found. Nature 453:80–83

Yang X, Feng Z, Feng J, Cao J (2017) Synchronization of discrete-time neural networks with delays and Markov jump topologies based on tracker information. Neural Netw 85:157–164

Cohen MA, Grossberg S (1987) Absolute stability of global pattern formation and parallel memory storage by competitive neural networks. IEEE Trans Systems Man Cybern 13:815–826

Chen S, Cao J (2012) Projective synchronization of neural networks with mixed time-varying delays and parameter mismatch. Nonlinear Dyn 67:1397–1406

Haykin S (1998) Neural networks: a comprehensive foundation. Prentice-Hall, Englewood Cliffs

Yang X, Cao J (2014) Hybrid adaptive and impulsive synchronization of uncertain complex networks with delays and general uncertain perturbations. Appl Math Comput 227:480–493

Zhang X, Lv X, Li X (2017) Sampled-data-based lag synchronization of chaotic delayed neural networks with impulsive control. Nonlinear Dyn 90:2199–2207

Song Q, Cao J (2008) Dynamical behaviors of discrete-time fuzzy cellular neural networks with variable delays and impulses. J Franklin Inst 345:39–59

Yang X, Lu J (2016) Finite-time synchronization of coupled networks with Markovian topology and impulsive effects. IEEE Trans Autom Control 61:2256–2261

Lu J, Ho DWC (2011) Stabilization of complex dynamical networks with noise disturbance under performance constraint. Nonlinear Anal Ser B Real World Appl 12:1974–1984

Wang Z, Ding S, Huang Z, Zhang H (2015) Exponential stability and stabilization of delayed memristive neural networks based on quadratic convex combination method. IEEE Trans Neural Netw Learn Syst 129:2029–2035

Lu J, Ding C, Lou J, Cao J (2015) Outer synchronization of partially coupled dynamical networks via pinning impulsive controllers. J Franklin Inst 352:5024–5041

Li X, Zhu Q, O’Regan D (2014) pth Moment exponential stability of impulsive stochastic functional differential equations and application to control problems of NNs. J Franklin Inst 351:4435–4456

Lu J, Ho DWC (2010) Globally exponential synchronization and synchronizability for general dynamical networks. IEEE Trans Syst Man Cybern 40:350–361

Li Y, Li B, Liu Y, Lu J, Wang Z, Alsaadi F (2018) Set stability and set stabilization of switched Boolean networks with state-based switching. IEEE Access 6:35624–35630

Zhang G, Shen Y (2013) New algebraic criteria for synchronization stability of chaotic memristive neural networks with time-varying delays. IEEE Trans Neural Netw Learn Syst 24:1701–1707

Li Y, Lou J, Wang Z, Alsaadi FE (2018) Synchronization of nonlinearly coupled dynamical networks under hybrid pinning impulsive controllers. J Franklin Inst 355:6520–6530

Li Y, Zhong J, Lu J, Wang Z (2018) On robust synchronization of drive-response boolean control networks with disturbances. Math Probl Eng. https://doi.org/10.1155/2018/1737685

Lu J, Wang Z, Cao J, Ho DWC, Kurths J (2012) Pinning impulsive stabilization of nonlinear dynamical networks with time-varying delay. Int J Bifurc Chaos 22:1250176

Yan M, Qiu J, Chen X, Chen X, Yang C, Zhang A, Alsaadi F (2018) The global exponential stability of the delayed complex-valued neural networks with almost periodic coefficients and discontinuous activations. Neural Process Lett 48:577–601

Yang X, Cao J, Liang J (2017) Exponential synchronization of memristive neural networks with delays: interval matrix method. IEEE Trans Neural Netw Learn Syst 28:1878–1888

Li R, Cao J, Alsaedi A, Ahmad B (2017) Passivity analysis of delayed reaction-diffusion Cohen–Grossberg neural networks via Hardy-Poincarè inequality. J Franklin Inst 354:3021–3038

Wang J, Wu H, Huang T (2015) Passivity-based synchronization of a class of complex dynamical networks with time-varying delay. Automatica 56:105–112

Ding S, Wang Z, Zhang H (2018) Dissipativity analysis for stochastic memristive neural networks with time-varying delays: a discrete-time case. IEEE Trans Neural Netw Learn Syst 29:618–630

Li R, Wei H (2016) Synchronization of delayed Markovian jump memristive neural networks with reaction-diffusion terms via sampled data control. Int J Mach Learn Cybern 7:157–169

Zhang L, Yang Y (2018) Different impulsive effects on synchronization of fractional-order memristive BAM neural networks. Nonlinear Dyn. https://doi.org/10.1007/s11071-018-4188-z

Zhang L, Yang Y, Wang F (2017) Lag synchronization for fractional-order memristive neural networks via period intermittent control. Nonlinear Dyn 89:367–381

Li R, Wu H, Zhang X, Yao R (2015) Adaptive projective synchronization of memristive neural networks with time-varying delays and stochastic perturbation. Math Control Relat Fields 5:827–844

Wang W, Li L, Peng H, Kurths J, Xiao J, Yang Y (2016) Finite-time anti-synchronization control of memristive neural networks with stochastic perturbations. Neural Process Lett 43:49–63

Li R, Cao J (2016) Finite-time stability analysis for markovian jump memristive neural networks with partly unknown transition probabilities. IEEE Trans Neural Netw Learn Syst 28:2924–2935

Boyd S, Ghaoui LE, Feron E, Balakrishnan V (1994) Linear matrix inequalities in system and control theory. SIAM, Philadelphia

Yu M, Wang W, Luo X, Liu L, Yuan M (2017) Exponential antisynchronization control of stochastic memristive neural networks with mixed time-varying delays based on novel delay-dependent or delay-independent adaptive controller. Math Probl Eng. https://doi.org/10.1155/2017/8314757

Liu H, Wang Z, Shen B, Liu X (2017) Event-triggered \(H_\infty \) state estimation for delayed stochastic memristive neural networks with missing measurements: the discrete time case. IEEE Trans Neural Netw Learn Syst 29:3726–3737

Ethics declarations

Conflict of Interest

The authors declare that they have no conflict of interest.

Additional information

This work was supported by National Natural Science Foundation of China (Grant Nos. 61803247, 61802243, 61273311 and 61173094), Project Funded by China Postdoctoral Science Foundation 2018M640948, the Fundamental Research Funds for the Central Universities under Grant No. GK201903003, the Jiangsu Provincial Key Laboratory of Networked Collective Intelligence under Grant No. BM2017002.

About this article

Cite this article

Li, R., Gao, X. & Cao, J. Exponential Synchronization of Stochastic Memristive Neural Networks with Time-Varying Delays. Neural Process Lett 50, 459–475 (2019). https://doi.org/10.1007/s11063-019-09989-5
