Abstract

This paper investigates the synchronization control problem of coupled memristor-based neural networks (CMNNs) with mixed delays and stochastic perturbations. By utilizing simple feedback controllers, some novel sufficient conditions are derived to ensure the exponential synchronization of CMNNs with mixed delays and stochastic perturbations in mean square. In addition, by means of adaptive feedback controllers, the asymptotic synchronization of CMNNs with mixed delays and stochastic perturbations in mean square can also be achieved via stochastic LaSalle invariance principle. Numerical simulations are presented to illustrate the effectiveness of the theoretical results.


1 Introduction

In coupled systems, synchronization is an important collective dynamical behavior [1]. In recent years, the synchronization problem of coupled neural networks has attracted great attention due to its broad application prospects in secure communications [2], pattern recognition [3], associative memory [4] and optimization [5]. To date, many results on the synchronization of coupled neural networks have been obtained [6,7,8,9,10,11,12]. In particular, the pinning synchronization of coupled neural networks was investigated in [11] via impulsive control, and the authors of [12] studied the finite-time synchronization of switched coupled neural networks.

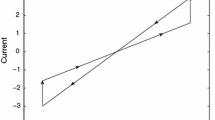

As is well known, the resistor, the capacitor and the inductor are the three basic circuit elements, which reflect the relations between four fundamental electrical quantities: voltage, current, charge and flux. Specifically, the resistor relates voltage and current, the capacitor relates charge and voltage, and the inductor relates flux and current. In 1971, Chua [13] proposed the memristor as the fourth basic circuit element, and it has attracted considerable attention since it was prototyped in 2008 [14]. The memristor, whose name is a contraction of memory resistor, relates flux and charge (see Fig. 1). Its memristance varies with the quantity of charge that has passed through it [15], so the memristor has a memory function. In circuit implementations of neural networks, synapses are usually simulated by resistors. However, synapses play an important part in the formation of memory, whereas common resistors have no memory function. If the resistors in such a circuit are replaced by memristors, the usual artificial neural network becomes a memristor-based neural network, which is a suitable candidate for simulating the human brain [16]. So far, considerable achievements have been made in the field of memristive neurodynamics, such as stability and synchronization [17,18,19,20,21,22].

Recently, research on the synchronization problem has been extended to CMNNs [23,24,25]. In [23], sufficient conditions guaranteeing the exponential synchronization of CMNNs with delays and nonlinear coupling were derived, and the synchronization of CMNNs with delays was investigated in [24, 25]. Additionally, stochastic effects inevitably exist in nervous systems, and signal transmission between synapses is in fact a noisy process. Stochastic perturbations make it more difficult for a network to achieve synchronization, so it is necessary to study networks with stochastic perturbations [26,27,28]. As far as we know, there have been some results on the drive-response synchronization of memristor-based neural networks with stochastic perturbations [29, 30]. Moreover, the synchronization of CMNNs with delays and stochastic perturbations has been investigated in [31, 32]. In [31], the global synchronization of CMNNs with delays and stochastic perturbations was studied via pinning impulsive control. In [32], the pth moment exponential synchronization of CMNNs with mixed delays and stochastic perturbations was investigated via delayed impulsive controllers. However, the controllers used in both [31] and [32] are complex and difficult to implement.

Motivated by the above analysis, in this paper feedback control is used for the first time to study the synchronization of CMNNs with mixed delays and stochastic perturbations. By designing simple feedback controllers and utilizing a lemma given in [33], some novel sufficient conditions ensuring the exponential synchronization of CMNNs with mixed delays and stochastic perturbations in mean square are derived. Adaptive control can be used even when there is no perfect knowledge of the coupled systems, and it can reduce the control gains effectively. By designing suitable adaptive feedback controllers and utilizing the stochastic LaSalle invariance principle, the asymptotic synchronization of CMNNs with mixed delays and stochastic perturbations in mean square can be achieved. We believe that the methods used in this paper can also be applied to analyze the synchronization control of other stochastic systems and coupled systems.

The rest of this paper is organized as follows. In Sect. 2, some necessary preliminaries are introduced. We derive the main results of the paper in Sect. 3. In Sect. 4, numerical simulations are presented to verify the effectiveness of the theoretical results. Conclusions are given in Sect. 5.

2 Preliminaries

A memristor-based neural network with mixed delays can be described as follows:

where \(z(t)=(z_{1}(t),z_{2}(t),\ldots ,z_{n}(t))^{T}\) is the state vector; \(D(z(t))=diag\left( d_{1}(z_{1}(t)),\right. \left. d_{2}(z_{2}(t)),\ldots , d_{n}(z_{n}(t))\right) \), where \(d_{i}(\cdot )\,{>}\,0, i=1,2,\ldots ,n,\) denote the neuron self-inhibitions; \(A(z(t))=(a_{rj}(z_{r}(t)))_{n\times n}\), \(B(z(t))=(b_{rj}(z_{r}(t)))_{n\times n}\) and \(C(z(t))=(c_{rj}(z_{r}(t)))_{n\times n}\) are the memristive connection weight matrices; \(f(z(\cdot ))=\left( f_{1}(z_{1}(\cdot )),\right. \left. f_{2}(z_{2}(\cdot )),\ldots ,f_{n}(z_{n}(\cdot ))\right) ^{T}\), where \(f_{i}(\cdot ), i=1,2,\ldots ,n\), are the activation functions; \(J=(J_{1},J_{2},\ldots ,J_{n})^{T}\), where \(J_{i}, i=1,2,\ldots ,n\), are external inputs; \(\tau _{1}(t)\) is the time-varying discrete delay; \(K\,{:}[0,+\infty )\rightarrow [0,+\infty )\) is the delay kernel of the unbounded distributed delay; \(d_{r}(z_{r}(t))\), \(a_{rj}(z_{r}(t))\), \(b_{rj}(z_{r}(t))\) and \(c_{rj}(z_{r}(t))\) are defined as

for \(r,j=1,2,\ldots ,n\), where \(T_{r}>0\), \(d_{r}^{*},d_{r}^{**}, a_{rj}^{*},a_{rj}^{**},b_{rj}^{*},b_{rj}^{**},c_{rj}^{*},c_{rj}^{**}\) are known constants. The interested readers can refer to some published works [34, 35], which gave detailed explanations about how to build memristor-based neural networks.
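The state-dependent switching of each memristive parameter can be sketched in Python. The sketch assumes the two-valued rule that the proof of Lemma 1 relies on: the starred value when \(|z_{r}(t)|<T_{r}\) and the double-starred value when \(|z_{r}(t)|>T_{r}\). The function name `memristive_weight` and the choice made at \(|z_{r}(t)|=T_{r}\) are assumptions for illustration only.

```python
def memristive_weight(z_r, T_r, w_star, w_dstar):
    """Two-valued, state-dependent memristive parameter.

    Assumed rule: w_star when |z_r| < T_r, w_dstar otherwise.
    The value returned exactly at |z_r| == T_r is an arbitrary choice;
    it only affects a set of states of measure zero.
    """
    return w_star if abs(z_r) < T_r else w_dstar


# Illustration with d_1^* = 0.9, d_1^** = 1.1 and T_1 = 1 (values from Sect. 4)
inside = memristive_weight(0.5, 1.0, 0.9, 1.1)    # state below the threshold
outside = memristive_weight(-1.7, 1.0, 0.9, 1.1)  # state above the threshold
```

The same function applies to \(d_{r}\), \(a_{rj}\), \(b_{rj}\) and \(c_{rj}\), since each of them switches on the state \(z_{r}(t)\) of the receiving neuron.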

A memristor-based neural network with mixed delays and stochastic perturbations can be written in the following form:

where \(\tau _{2}(t)\) is a time-varying delay satisfying \(0\le \tau _{2}(t)\le \tau _{2}\); \(\beta :R^{+}\times R^{n}\times R^{n}\rightarrow R^{n\times n}\) represents the noise intensity function matrix; \(\omega (t)=(\omega _{1}(t),\omega _{2}(t),\ldots ,\omega _{n}(t))^{T}\) is an n-dimensional Brownian motion. The initial value of system (3) is \(z(s)=\varphi (s)\in C((-\infty ,0],R^{n})\), where \(C((-\infty ,0],R^{n})\) is the Banach space of all continuous functions that map \((-\infty ,0]\) into \(R^{n}\).

CMNNs with mixed delays and stochastic perturbations can be described by the following differential equations:

where \(x_{i}(t)=(x_{i1}(t),x_{i2}(t),\ldots ,x_{in}(t))^{T}\); \(D(x_{i}(t))=diag\left( d_{1}(x_{i1}(t)),d_{2}(x_{i2}(t)),\ldots ,d_{n}(x_{in}(t))\right) \); \(A(x_{i}(t))=(a_{rj}(x_{ir}(t)))_{n\times n}\), \(B(x_{i}(t))=(b_{rj}(x_{ir}(t)))_{n\times n}\), \(C(x_{i}(t)) =(c_{rj}(x_{ir}(t)))_{n\times n}\); \(h_{i}:R^{nN}\rightarrow R^{n}\) is the coupling function, which satisfies \(h_{i}(z(t),z(t),\ldots ,z(t))=0\); \(d_{r}(x_{ir}(t))\), \(a_{rj}(x_{ir}(t))\), \(b_{rj}(x_{ir}(t))\) and \(c_{rj}(x_{ir}(t))\) are defined as

for \(r,j=1,2,\ldots ,n\). The initial value of CMNNs (4) is \(x_{i}(s)=\phi _{i}(s)\in C((-\infty ,0],R^{n})\).

In order to synchronize all the states of CMNNs (4) onto z(t) of system (3), suitable controllers will be needed. The controlled CMNNs are presented as

where \(R_{i}(t),~i=1,2,\ldots ,N,\) are the controllers that will be designed.

It is noticed that systems (3) and (6) are discontinuous systems since they switch in view of states. Considering that their solutions in the conventional sense do not exist, we can discuss their solutions in the sense of Filippov. Next, we will give the definition of Filippov solution.

Consider the following differential equation:

where \(x(t)\in R^{n}\), \(f{:}\,R^{n}\rightarrow R^{n}\) is discontinuous and locally measurable.

Definition 1

[36]. The set-valued map of f(x) at \(x\in R^{n}\) is defined by

where \(\overline{co}[E]\) is the convex closure of set E, \(\mu (N)\) denotes the Lebesgue measure of set N, and \(B(x,\delta )=\{y{:}\,\Vert y-x\Vert \le \delta \}\).

Definition 2

[37]. A vector function x(t) defined on interval [0, T) is said to be a Filippov solution of system (7) if it is absolutely continuous on any compact subinterval of [0, T) and satisfies differential inclusion \(\dot{x}(t)\in K[f](x(t))\) for almost all \(t\in [0,T)\).
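For a scalar two-valued memristive parameter, the set-valued map of Definition 1 reduces to something very simple, which the following Python sketch makes concrete. It assumes a weight that equals `w_star` for \(|z_{r}|<T_{r}\) and `w_dstar` for \(|z_{r}|>T_{r}\); the function name `filippov_weight_set` is hypothetical.

```python
def filippov_weight_set(z_r, T_r, w_star, w_dstar):
    """Closed interval (lo, hi) representing K[w](z_r) for a two-valued weight w.

    Away from the switching surface the set is a single point. At
    |z_r| == T_r it is the convex closure of the two one-sided values,
    i.e. the interval between them.
    """
    if abs(z_r) < T_r:
        return (w_star, w_star)
    if abs(z_r) > T_r:
        return (w_dstar, w_dstar)
    return (min(w_star, w_dstar), max(w_star, w_dstar))


# At the switching surface the weight may take any value in [0.9, 1.1]
at_switch = filippov_weight_set(1.0, 1.0, 0.9, 1.1)
```

This is why bounds such as \(\underline{d}_{r}\) and \(a_{rj}^{+}\), which hold for every value in the interval, are the natural quantities to use in the analysis.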

Throughout this paper, set \(\underline{d}_{r}=\min \{d_{r}^{*},d_{r}^{**}\}\), \({a}_{rj}^{+}=\max \{|a_{rj}^{*}|,|a_{rj}^{**}|\}\), \({b}_{rj}^{+}=\max \{|b_{rj}^{*}|,|b_{rj}^{**}|\}\), \({c}_{rj}^{+}=\max \{|c_{rj}^{*}|,|c_{rj}^{**}|\}\), for \(r,j=1,2,\ldots ,n\).

The synchronization errors are defined as \(e_{i}(t)=x_{i}(t)-z(t),i=1,2,\ldots ,N\). From (3) and (6), it follows that:

where \(\varrho (t,e_{i}(t),e_{i}(t-\tau _{2}(t)))=\beta (t,x_{i}(t),x_{i}(t-\tau _{2}(t)))-\beta (t,z(t),z(t-\tau _{2}(t))),\) \(H_{i}(e_{1}(t),e_{2}(t),\ldots ,e_{N}(t))=h_{i}(x_{1}(t),x_{2}(t),\ldots ,x_{N}(t))-h_{i}(z(t),z(t),\ldots ,z(t)),\)

The initial value of system (8) is \(e_{i}(s)=\phi _{i}(s)-\varphi (s)\in C((-\infty ,0],R^{n})\).

The following assumptions will be used in this paper.

- \((A_{1})\): \(\dot{\tau }_{2}(t)\le \sigma _{2}<1\), where \(\sigma _{2}\) is a positive constant.

- \((A_{2})\): Activation functions are bounded, that is, there exist constants \(M_{j}>0,~j=1,2,\ldots ,n\), such that \(\left| f_{j}(\cdot )\right| \le M_{j}\).

- \((A_{3})\): There are some constants \(\gamma _{ij}\ge 0, i,j=1,2,\ldots ,N,\) such that

$$\begin{aligned} \Vert h_{i}(x_{1}(t),x_{2}(t),\ldots ,x_{N}(t))-h_{i}(z(t),z(t),\ldots ,z(t))\Vert \le \sum \limits _{j=1}^{N}\gamma _{ij}\Vert x_{j}(t)-z(t)\Vert . \end{aligned}$$

- \((A_{4})\): For \(x_{1},y_{1},x_{2},y_{2}\in R^{n}\), there exist constants \(\rho _{1}\ge 0\) and \(\rho _{2}\ge 0\) such that

$$\begin{aligned}&\mathrm{trace}\left\{ \left[ \beta (t,x_{1},y_{1})-\beta (t,x_{2},y_{2})\right] ^{T}\left[ \beta (t,x_{1},y_{1})-\beta (t,x_{2},y_{2})\right] \right\} \\&\quad \le \rho _{1}\Vert x_{1}-x_{2}\Vert ^{2}+\rho _{2}\Vert y_{1}-y_{2}\Vert ^{2}. \end{aligned} \tag{10}$$

- \((A_{5})\): There is a constant \(K>0\) such that \(\int _{0}^{+\infty }K(s)ds\le K\).
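Assumption \(A_{4}\) can be spot-checked numerically for a concrete noise intensity. The Python sketch below uses the diagonal function \(\beta =0.6\,diag(x_{1},y_{2})\) from the example in Sect. 4 and samples random argument pairs; the helper names, the sampling range and the number of trials are assumptions, and a passing check is evidence rather than a proof.

```python
import random


def beta(x, y):
    """Noise intensity 0.6*diag(x_1, y_2); x is the current state, y the delayed one."""
    return [[0.6 * x[0], 0.0], [0.0, 0.6 * y[1]]]


def trace_gram_diff(x1, y1, x2, y2):
    """trace{[beta(x1,y1)-beta(x2,y2)]^T [beta(x1,y1)-beta(x2,y2)]},
    which equals the sum of squared entries of the difference."""
    b1, b2 = beta(x1, y1), beta(x2, y2)
    return sum((b1[i][j] - b2[i][j]) ** 2 for i in range(2) for j in range(2))


def sq_dist(u, v):
    """Squared Euclidean distance between two vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def a4_holds(rho1, rho2, trials=10_000, seed=0):
    """Return True if the A4 inequality holds on every random sample."""
    rng = random.Random(seed)
    for _ in range(trials):
        x1, y1, x2, y2 = ([rng.uniform(-5, 5) for _ in range(2)] for _ in range(4))
        lhs = trace_gram_diff(x1, y1, x2, y2)
        rhs = rho1 * sq_dist(x1, x2) + rho2 * sq_dist(y1, y2)
        if lhs > rhs + 1e-12:
            return False
    return True
```

With \(\rho _{1}=\rho _{2}=0.36\) the inequality holds on all samples, while smaller constants such as \(0.1\) are violated, which agrees with the values used in Sect. 4.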

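Lemma 1

For \(i=1,2,\ldots ,N\) and \(j=1,2,\ldots ,n\),

$$\begin{aligned} sign(e_{ij}(t))\left( -d_{j}(x_{ij}(t))x_{ij}(t)+d_{j}(z_{j}(t))z_{j}(t)\right) \le -\underline{d}_{j}\left| e_{ij}(t)\right| +T_{j}\left| d_{j}^{*}-d_{j}^{**}\right| . \end{aligned}$$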

Proof

We consider the following four cases:

- (1) When \(\left| x_{ij}(t)\right| <T_{j}\) and \(\left| z_{j}(t)\right| <T_{j}\),

$$\begin{aligned}&sign(e_{ij}(t))(-d_{j}(x_{ij}(t))x_{ij}(t)+d_{j}(z_{j}(t))z_{j}(t))\\&\quad =-sign(e_{ij}(t))(d_{j}^{*}x_{ij}(t)-d_{j}^{*}z_{j}(t))\\&\quad =-d_{j}^{*}\left| e_{ij}(t)\right| \le -\underline{d}_{j}\left| e_{ij}(t)\right| . \end{aligned} \tag{11}$$

- (2) When \(\left| x_{ij}(t)\right| >T_{j}\) and \(\left| z_{j}(t)\right| >T_{j}\),

$$\begin{aligned}&sign(e_{ij}(t))(-d_{j}(x_{ij}(t))x_{ij}(t)+d_{j}(z_{j}(t))z_{j}(t))\\&\quad =-d_{j}^{**}\left| e_{ij}(t)\right| \le -\underline{d}_{j}\left| e_{ij}(t)\right| . \end{aligned} \tag{12}$$

- (3) When \(\left| x_{ij}(t)\right| \ge T_{j}\) and \(\left| z_{j}(t)\right| \le T_{j}\),

$$\begin{aligned}&sign(e_{ij}(t))(-d_{j}(x_{ij}(t))x_{ij}(t)+d_{j}(z_{j}(t))z_{j}(t))\\&\quad =-sign(e_{ij}(t))[d_{j}(x_{ij}(t))e_{ij}(t)+(d_{j}(x_{ij}(t))-d_{j}(z_{j}(t)))z_{j}(t)]\\&\quad \le -\underline{d}_{j}\left| e_{ij}(t)\right| +T_{j}\left| d_{j}^{*}-d_{j}^{**}\right| . \end{aligned} \tag{13}$$

- (4) When \(\left| x_{ij}(t)\right| \le T_{j}\) and \(\left| z_{j}(t)\right| \ge T_{j}\),

$$\begin{aligned}&sign(e_{ij}(t))(-d_{j}(x_{ij}(t))x_{ij}(t)+d_{j}(z_{j}(t))z_{j}(t))\\&\quad =-sign(e_{ij}(t))[(d_{j}(x_{ij}(t))-d_{j}(z_{j}(t)))x_{ij}(t)+d_{j}(z_{j}(t))e_{ij}(t)]\\&\quad \le -\underline{d}_{j}\left| e_{ij}(t)\right| +T_{j}\left| d_{j}^{*}-d_{j}^{**}\right| . \end{aligned} \tag{14}$$

The proof is completed. \(\square \)
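The bound obtained in cases (1)–(4) can also be checked numerically. The following Python sketch samples random scalar pairs \((x_{ij},z_{j})\) and records the smallest gap between the right-hand side \(-\underline{d}_{j}|e_{ij}|+T_{j}|d_{j}^{*}-d_{j}^{**}|\) and the left-hand side. It assumes \(d_{j}(v)=d_{j}^{*}\) for \(|v|<T_{j}\) and \(d_{j}^{**}\) otherwise; the helper names and the sampling range are illustrative choices.

```python
import random


def d(v, T, d_star, d_dstar):
    """Assumed two-valued self-inhibition: d_star inside the threshold, d_dstar outside."""
    return d_star if abs(v) < T else d_dstar


def sign(v):
    """Sign function with sign(0) = 0."""
    return (v > 0) - (v < 0)


def lemma1_gap(x, z, T, d_star, d_dstar):
    """Right-hand side minus left-hand side of the bound (nonnegative if it holds)."""
    e = x - z
    lhs = sign(e) * (-d(x, T, d_star, d_dstar) * x + d(z, T, d_star, d_dstar) * z)
    rhs = -min(d_star, d_dstar) * abs(e) + T * abs(d_star - d_dstar)
    return rhs - lhs


rng = random.Random(1)
worst_gap = min(
    lemma1_gap(rng.uniform(-3, 3), rng.uniform(-3, 3), 1.0, 0.9, 1.1)
    for _ in range(100_000)
)
```

A nonnegative `worst_gap` (up to rounding) is consistent with the four cases above; the mixed cases (3) and (4) are the ones where the extra term \(T_{j}|d_{j}^{*}-d_{j}^{**}|\) is actually needed.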

Lemma 2

Let \(F_{i}(t)=(F_{i1}(t),F_{i2}(t),\ldots ,F_{in}(t))^{T}\), then \(\left| F_{ij}(t)\right| \le \Lambda _{j},\) where \(\Lambda _{j}=\sum \nolimits _{l=1}^{n}2(a_{jl}^{+}+b_{jl}^{+}+c_{jl}^{+}K)M_{l}\), for \(i=1,2,\ldots ,N\), \(j=1,2,\ldots ,n\).

Proof

In view of Assumptions \(A_{2}\) and \(A_{5}\), the proof is obvious. \(\square \)

Lemma 3

[33]. Suppose that the continuous function V(t) satisfies \(V(t)\ge 0,~ \forall t\in (a-\theta ,+\infty )\) and

where \(k_{1}>k_{2}>0\). Then V(t) satisfies

where \(\gamma \) is the unique positive solution of the equation \(\gamma -k_{1}+k_{2}e^{\gamma \theta }=0\).
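The decay rate in Lemma 3 is defined only implicitly, so in practice it has to be computed numerically. A minimal Python sketch using bisection is given below; the function name `decay_rate` and the tolerance are assumptions. Bisection applies because \(g(\gamma )=\gamma -k_{1}+k_{2}e^{\gamma \theta }\) is strictly increasing, \(g(0)=k_{2}-k_{1}<0\) and \(g(k_{1})=k_{2}e^{k_{1}\theta }>0\).

```python
import math


def decay_rate(k1, k2, theta, tol=1e-12):
    """Unique positive root of gamma - k1 + k2*exp(gamma*theta) = 0.

    The root lies in (0, k1) whenever k1 > k2 > 0 and theta >= 0.
    """
    if not (k1 > k2 > 0) or theta < 0:
        raise ValueError("requires k1 > k2 > 0 and theta >= 0")

    def g(gamma):
        return gamma - k1 + k2 * math.exp(gamma * theta)

    lo, hi = 0.0, k1
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if g(mid) < 0:
            lo = mid  # root is to the right of mid
        else:
            hi = mid  # root is at or to the left of mid
    return 0.5 * (lo + hi)
```

For \(\theta =0\) the equation reduces to \(\gamma =k_{1}-k_{2}\), and a larger delay bound \(\theta \) gives a smaller rate, which matches the intuition that delays slow down convergence.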

Definition 3

CMNNs (6) are said to be exponentially synchronized onto the system (3) in mean square, if there exist constants \(\varpi >0\) and \(\alpha >0\) such that

Definition 4

CMNNs (6) are said to be asymptotically synchronized onto the system (3) in mean square, if we can derive

3 Main Results

In order to synchronize all the solutions of CMNNs (6) onto z(t) of system (3), we design the following feedback controllers:

where \(\xi \) is a constant and \(\eta =diag(\eta _{1},\eta _{2},\ldots ,\eta _{n})\) is a matrix.
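The display (15) is not reproduced in this text. As a rough illustration only, the Python sketch below assumes the form \(R_{i}(t)=-\xi e_{i}(t)-\eta \,sign(e_{i}(t))\), which is consistent with a scalar gain \(\xi \), a diagonal gain matrix \(\eta \) and the bounds used in the proof of Theorem 1, but it should not be read as the authors' exact definition. The function name `feedback_control` is hypothetical.

```python
import numpy as np


def feedback_control(e_i, xi, eta):
    """Assumed static controller R_i = -xi*e_i - eta*sign(e_i).

    e_i : synchronization error x_i - z, shape (n,)
    xi  : scalar linear gain
    eta : diagonal entries (eta_1, ..., eta_n) of the switching gain matrix
    """
    e_i = np.asarray(e_i, dtype=float)
    eta = np.asarray(eta, dtype=float)
    # Linear term damps the error; sign term dominates bounded mismatch terms
    return -xi * e_i - eta * np.sign(e_i)


R = feedback_control([0.5, -2.0], xi=1.0, eta=[0.1, 0.2])
```

Under this assumed form the sign term contributes \(-\eta _{j}|e_{ij}(t)|\) to the Lyapunov derivative, which is what allows a condition of the type \(\eta _{j}\ge T_{j}|d_{j}^{*}-d_{j}^{**}|+\Lambda _{j}\) to cancel the bounded terms.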

Theorem 1

Suppose Assumptions \(A_{2}\)–\(A_{5}\) hold. For given constant \(\lambda \ge 0\), if \(\xi >\frac{1}{2}(\lambda +\rho _{1}+\rho _{2}e^{\lambda \tau _{2}})-\min \limits _{j}\left\{ \underline{d}_{j}\right\} +\Vert \Gamma \Vert \) and \(\eta _{j}\ge T_{j}\left| d_{j}^{*}-d_{j}^{**}\right| +\Lambda _{j},~j=1,2,\ldots ,n\), CMNNs (6) can be exponentially synchronized onto the system (3) in mean square under the controllers (15).

Proof

We design the following Lyapunov function:

Differentiating V(t) along system (8), we have

where

According to Lemma 1,

According to Lemma 2,

Let \(\Gamma =(\gamma _{ij})_{N\times N}\) and \(\zeta =(\Vert e_{1}(t)\Vert ,\Vert e_{2}(t)\Vert ,\ldots ,\Vert e_{N}(t)\Vert )^{T}\), we have

where Assumption \(A_{3}\) has been used.

It is obvious that

According to Assumption \(A_{4}\),

Then we have

Since \(\eta _{j}\ge T_{j}\left| d_{j}^{*}-d_{j}^{**}\right| +\Lambda _{j},j=1,2,\ldots ,n\), it follows that

Taking mathematical expectation yields

That means

Since \(\xi >\frac{1}{2}(\lambda +\rho _{1}+\rho _{2}e^{\lambda \tau _{2}})-\min \limits _{j}\left\{ \underline{d}_{j}\right\} +\Vert \Gamma \Vert \), we can derive from Lemma 3 that

where \(\mu \) is the unique positive solution of the equation

Then we have

Therefore,

This completes the proof. \(\square \)
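The two gain conditions of Theorem 1 are straightforward to evaluate once the constants are known. The Python sketch below computes the strict lower bound on \(\xi \) and the lower bounds on \(\eta _{j}\). It assumes that \(\Vert \Gamma \Vert \) denotes the spectral norm, which the text does not state explicitly; the function name and the coupling matrix in the usage lines are hypothetical.

```python
import math

import numpy as np


def theorem1_thresholds(lam, rho1, rho2, tau2, d_lower, Gamma, T, d_star, d_dstar, Lambda):
    """Return (xi_min, eta_min): xi must exceed xi_min, eta_j must be >= eta_min[j].

    Gamma is the N x N matrix of coupling constants; its spectral norm is
    used for ||Gamma|| (an assumption about the intended norm).
    """
    gamma_norm = np.linalg.norm(np.asarray(Gamma, dtype=float), 2)
    xi_min = 0.5 * (lam + rho1 + rho2 * math.exp(lam * tau2)) - min(d_lower) + gamma_norm
    eta_min = (
        np.asarray(T, dtype=float)
        * np.abs(np.asarray(d_star, dtype=float) - np.asarray(d_dstar, dtype=float))
        + np.asarray(Lambda, dtype=float)
    )
    return xi_min, eta_min


# Illustrative call; the coupling matrix here is a made-up 2 x 2 example
xi_min, eta_min = theorem1_thresholds(
    lam=0.5, rho1=0.36, rho2=0.36, tau2=1.3,
    d_lower=[0.9, 0.9], Gamma=[[0.1, 0.1], [0.1, 0.1]],
    T=[1.0, 1.0], d_star=[0.9, 1.1], d_dstar=[1.1, 0.9], Lambda=[16.48, 44.2],
)
```

Because \(\lambda \) enters through \(e^{\lambda \tau _{2}}\), asking for a faster guaranteed rate raises the required \(\xi \), and more so when the delay bound \(\tau _{2}\) is large.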

In Theorem 1, the control gains of the feedback controllers (15) may be much larger than those actually needed because of the conservativeness of the theoretical analysis. Since adaptive feedback controllers can avoid such unnecessarily high control gains, we consider adaptive feedback controllers in Theorem 2.

Theorem 2

If Assumptions \(A_{1}\)–\(A_{5}\) hold, CMNNs (6) will be asymptotically synchronized onto the system (3) in mean square under the adaptive feedback controllers:

where \(\eta _{i}(t)=diag(\eta _{i1}(t),\eta _{i2}(t),\ldots ,\eta _{in}(t))\), \(\xi _{i}(t)\) and \(\eta _{ij}(t)\) satisfy

for \(i=1,2,\ldots ,N,j=1,2,\ldots ,n,\) where \(p_{i}>0\) and \(q_{ij}>0\) are constants.

Proof

We design the following Lyapunov function:

where

Differentiating V(t) along system (8), we get that

where \(LV(t)=LV_{1}(t)+LV_{2}(t)+LV_{3}(t)\).

Similarly to the proof of Theorem 1, it can be derived that

Based on Assumption \(A_{1}\),

It is obvious that

It follows that

Choose \(r\ge -\min \nolimits _{j}\left\{ \underline{d}_{j}\right\} +\Vert \Gamma \Vert +\frac{\rho _{1}}{2}+\frac{\rho _{2}}{2(1-\sigma _{2})}+\varepsilon \) and \(s_{j}\ge T_{j}\left| d_{j}^{*}-d_{j}^{**}\right| +\Lambda _{j},j=1,2,\ldots ,n,\) where \(\varepsilon >0\).

Then we have

According to the LaSalle invariance principle for stochastic delayed differential equations [38,39,40], we have \(\lim \nolimits _{t\rightarrow +\infty }e_{i}(t)=0\), \(i=1,2,\ldots ,N\), which means that \(\lim \nolimits _{t\rightarrow +\infty }\sum \nolimits _{i=1}^{N}E\left[ \Vert e_{i}(t)\Vert ^{2}\right] =0\). Based on Definition 4, CMNNs (6) are asymptotically synchronized onto the system (3) in mean square.

This completes the proof. \(\square \)
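The adaptation laws of Theorem 2 are not reproduced in this text. Purely as an illustration, the Python sketch below assumes the laws \(\dot{\xi }_{i}(t)=p_{i}\Vert e_{i}(t)\Vert ^{2}\) and \(\dot{\eta }_{ij}(t)=q_{ij}|e_{ij}(t)|\), a common choice in adaptive synchronization, and advances them by one explicit Euler step. Both the laws and the function name `adaptive_gain_step` are assumptions, not the authors' stated design.

```python
import numpy as np


def adaptive_gain_step(xi_i, eta_i, e_i, p_i, q_i, dt):
    """One explicit Euler step of the assumed adaptation laws.

    d(xi_i)/dt   = p_i * ||e_i||^2
    d(eta_ij)/dt = q_ij * |e_ij|
    """
    e_i = np.asarray(e_i, dtype=float)
    xi_next = xi_i + dt * p_i * float(e_i @ e_i)
    eta_next = (
        np.asarray(eta_i, dtype=float)
        + dt * np.asarray(q_i, dtype=float) * np.abs(e_i)
    )
    return xi_next, eta_next


xi1, eta1 = adaptive_gain_step(0.0, [0.0, 0.0], [3.0, -4.0], p_i=1.0, q_i=[1.0, 1.0], dt=0.01)
```

Under laws of this type the gains are nondecreasing and stop growing once the error vanishes, so they settle at whatever level the trajectory actually required instead of at a conservative worst-case bound.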

Remark 1

The CMNNs model studied in this paper is the same as that of [32]. In [32], the upper bounds of the solutions of the isolated node system are assumed to be known in advance, i.e., there exist some known positive constants \(M_{j}^{z}\) such that \(\left| z_{j}(t)\right| \le M_{j}^{z}\), \(j=1,2,\ldots ,n\). In our paper, this assumption is not needed. Moreover, the activation functions \(f_{j}(\cdot ),~j=1,2,\ldots ,n\), in our paper are only required to be bounded, while in [32] they are assumed to be bounded and to satisfy the Lipschitz condition.

Remark 2

It should be pointed out that only the asymptotic synchronization of CMNNs (6) is proved in Theorem 2, while Theorem 1 gives exponential synchronization criteria for CMNNs (6). Furthermore, the proof of Theorem 2 requires the extra assumption \(\dot{\tau }_{2}(t)\le \sigma _{2}<1\), which the proof of Theorem 1 does not need.

Remark 3

In this paper, feedback controllers are used in Theorem 1. Because of the conservativeness of theoretical analysis, the control gains of feedback controllers (15) may be much larger than those needed actually. To overcome this drawback, the adaptive control is a good choice. In Theorem 2, we utilize adaptive feedback controllers, which can reduce the control gains effectively.

Remark 4

There have been many results about the synchronization control of memristor-based neural networks, such as [21, 22]. For CMNNs (6) and system (3), if we set \(\beta (t,\cdot ,\cdot )=0\) and \(N=1\), then the results of this paper can be generalized to the drive-response synchronization of common memristor-based neural networks. In this sense, compared with the memristor-based neural networks model considered in [21, 22], the model of our paper is more general. However, the finite time synchronization is also investigated in [21], while only the asymptotic synchronization and exponential synchronization are studied in this paper. Hence, the finite time synchronization of CMNNs will be our future research direction.

4 Numerical Simulations

In this section, an example is given to illustrate the effectiveness of the theoretical results in this paper.

Consider the following 2-dimensional memristor-based stochastic neural network, which is a special case of system (3).

where \(z(t)=(z_{1}(t),z_{2}(t))^{T}\), \(f_{1}(v)=f_{2}(v)=\frac{\left| v+1\right| -\left| v-1\right| }{2}\), \(\tau _{1}(t)=2+\sin t\), \(\tau _{2}(t)=1+0.3\cos t\), \(J=(0,0)^{T}\), \(K(t)=e^{-0.5t}\), \(T_{1}=T_{2}=1\), \(d_{1}^{*}=0.9\), \(d_{1}^{**}=1.1\), \(d_{2}^{*}=1.1\), \(d_{2}^{**}=0.9\), \(a_{11}^{*}=3.4\), \(a_{11}^{**}=2.9\), \(a_{12}^{*}=-0.4\), \(a_{12}^{**}=-0.22\), \(a_{21}^{*}=4.2\), \(a_{21}^{**}=3.9\), \(a_{22}^{*}=5.2\), \(a_{22}^{**}=5\), \(b_{11}^{*}=-1.4\), \(b_{11}^{**}=-1.2\), \(b_{12}^{*}=0.2\), \(b_{12}^{**}=-0.1\), \(b_{21}^{*}=0.5\), \(b_{21}^{**}=-0.2\), \(b_{22}^{*}=-9.2\), \(b_{22}^{**}=-6\), \(c_{11}^{*}=-1.3\), \(c_{11}^{**}=-1.18\), \(c_{12}^{*}=0.12\), \(c_{12}^{**}=0.05\), \(c_{21}^{*}=-0.3\), \(c_{21}^{**}=-0.2\), \(c_{22}^{*}=-1.2\), \(c_{22}^{**}=-0.6\), \(\beta (t,z(t),z(t-\tau _{2}(t)))=0.6\,diag(z_{1}(t),z_{2}(t-\tau _{2}(t)))\). Then we have \(M_{1}=M_{2}=1\), \(\sigma _{2}=0.3\), \(K=2\), \(\rho _{1}=\rho _{2}=0.36\).
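The derived constants \(\underline{d}_{j}\), \(a_{jl}^{+}\), \(b_{jl}^{+}\), \(c_{jl}^{+}\) and \(\Lambda _{j}\) follow mechanically from the parameters listed above. The Python sketch below evaluates them, together with the quantity \(T_{j}|d_{j}^{*}-d_{j}^{**}|+\Lambda _{j}\) that appears as the bound on \(\eta _{j}\) in Theorem 1. Variable names are illustrative, and applying the Theorem 1 bound to this example is our illustration; the simulations in this section use the adaptive controllers instead.

```python
K_BAR = 2.0      # integral of K(s) = exp(-0.5 s) over [0, +inf)
M = [1.0, 1.0]   # bounds on |f_1| and |f_2|
T = [1.0, 1.0]   # switching thresholds T_1, T_2

d_star, d_dstar = [0.9, 1.1], [1.1, 0.9]
a_star, a_dstar = [[3.4, -0.4], [4.2, 5.2]], [[2.9, -0.22], [3.9, 5.0]]
b_star, b_dstar = [[-1.4, 0.2], [0.5, -9.2]], [[-1.2, -0.1], [-0.2, -6.0]]
c_star, c_dstar = [[-1.3, 0.12], [-0.3, -1.2]], [[-1.18, 0.05], [-0.2, -0.6]]


def plus(w_star, w_dstar):
    """Entrywise max{|w*|, |w**|}, i.e. the matrices a^+, b^+ and c^+."""
    return [
        [max(abs(s), abs(t)) for s, t in zip(row_s, row_t)]
        for row_s, row_t in zip(w_star, w_dstar)
    ]


a_plus, b_plus, c_plus = plus(a_star, a_dstar), plus(b_star, b_dstar), plus(c_star, c_dstar)

# d_lower[j] = min{d_j^*, d_j^**}
d_lower = [min(s, t) for s, t in zip(d_star, d_dstar)]

# Lambda_j = sum_l 2 (a_jl^+ + b_jl^+ + c_jl^+ K) M_l  (Lemma 2)
Lambda = [
    sum(2 * (a_plus[j][l] + b_plus[j][l] + c_plus[j][l] * K_BAR) * M[l] for l in range(2))
    for j in range(2)
]

# Lower bounds on eta_j in the static-gain condition of Theorem 1
eta_min = [T[j] * abs(d_star[j] - d_dstar[j]) + Lambda[j] for j in range(2)]
```

This gives \(\Lambda _{1}=16.48\) and \(\Lambda _{2}=44.2\), so static switching gains would have to be at least \(16.68\) and \(44.4\), which illustrates how conservative the worst-case bounds can be.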

Fig. 4 Evolutions of \(\Vert e_{i}(t)\Vert \) with controllers (30), \(i=1,2,3,4\)

Fig. 5 Evolutions of control gains \(\xi _{1}(t), \eta _{11}(t)\) and \(\eta _{12}(t)\) of controllers (30)

Fig. 6 Evolutions of control gains \(\xi _{2}(t), \eta _{21}(t)\) and \(\eta _{22}(t)\) of controllers (30)

Fig. 7 Evolutions of control gains \(\xi _{3}(t), \eta _{31}(t)\) and \(\eta _{32}(t)\) of controllers (30)

Fig. 8 Evolutions of control gains \(\xi _{4}(t), \eta _{41}(t)\) and \(\eta _{42}(t)\) of controllers (30)

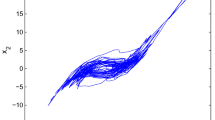

The initial value of system (39) is \(\varphi (t)=(0.5,0.2)^{T}\) for \(t\in [-5,0]\) and \(\varphi (t)=(0,0)^{T}\) for \(t\in (-\infty ,-5).\) The trajectories of \(z_{1}(t)\) and \(z_{2}(t)\) are presented in Fig. 2.

The following controlled CMNNs with mixed delays and stochastic perturbations are a special case of CMNNs (6):

where \(x_{i}(t)=(x_{i1}(t),x_{i2}(t))^{T}\). The initial values of system (40) are \(\phi _{1}(t)=(0.2,0.6)^{T}\), \(\phi _{2}(t)=(-0.3,1.2)^{T}\), \(\phi _{3}(t)=(1,-0.5)^{T}\), \(\phi _{4}(t)=(1.3,-0.6)^{T}\) for \(t\in [-5,0]\), and \(\phi _{i}(t)=(0,0)^{T}\) for \(t\in (-\infty ,-5), i=1,2,3,4.\)

Suppose \(h_{i}(x_{1}(t),x_{2}(t),x_{3}(t),x_{4}(t))\) satisfies \(h_{i}(x_{1}(t),x_{2}(t),x_{3}(t),x_{4}(t))=0.1diag(x_{i1}(t)-x_{i+1,1}(t),x_{i2}(t)-x_{i+1,2}(t)),\) \(i=1,2,3,4,\) where \(x_{5}(t)=x_{1}(t)\). Figure 3 presents the evolutions of \(\Vert e_{i}(t)\Vert \) , \(i=1,2,3,4\), without control inputs.

Since \(\Vert h_{i}(x_{1}(t),x_{2}(t),x_{3}(t),x_{4}(t))-h_{i}(z(t),z(t),z(t),z(t))\Vert ^{2} \le 0.02(\Vert e_{i}(t)\Vert +\Vert e_{i+1}(t)\Vert )^{2},\) we obtain that \(\Vert h_{i}(x_{1}(t),x_{2}(t),x_{3}(t),x_{4}(t))-h_{i}(z(t),z(t),z(t),z(t))\Vert \le 0.1\sqrt{2}(\Vert e_{i}(t)\Vert +\Vert e_{i+1}(t)\Vert )\), \(i=1,2,3,4\). So Assumption \(A_{3}\) holds.
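The bound above fixes the nonzero coupling constants as \(\gamma _{ii}=\gamma _{i,i+1}=0.1\sqrt{2}\) with the index \(5\) identified with \(1\). The Python sketch below assembles the resulting matrix \(\Gamma =(\gamma _{ij})_{4\times 4}\) and evaluates its norm, assuming that \(\Vert \Gamma \Vert \) means the spectral norm (the text does not specify the norm).

```python
import numpy as np

N = 4
g = 0.1 * np.sqrt(2.0)

# Ring structure: node i is coupled to itself and to node i+1 (cyclically)
Gamma = np.zeros((N, N))
for i in range(N):
    Gamma[i, i] = g
    Gamma[i, (i + 1) % N] = g  # x_5 is identified with x_1

# Spectral norm (largest singular value) of Gamma
gamma_norm = np.linalg.norm(Gamma, 2)
```

Since \(\Gamma =0.1\sqrt{2}\,(I+P)\) with \(P\) a cyclic permutation matrix, the spectral norm equals \(0.2\sqrt{2}\approx 0.283\), which is the value that would enter the lower bound on \(r\) in the proof of Theorem 2.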

Choose \(p_{i}=q_{ij}=1\), \(i=1,2,3,4,j=1,2\). Figure 4 shows the evolutions of \(\Vert e_{i}(t)\Vert \), \(i=1,2,3,4\), with controllers (30). It can be seen that systems (39) and (40) achieve asymptotic synchronization. The evolutions of the control gains \(\xi _{i}(t), \eta _{i1}(t)\) and \(\eta _{i2}(t)\), \(i=1,2,3,4\), of controllers (30) are given in Figs. 5, 6, 7 and 8, respectively. Note that all of the control gains remain small.

5 Conclusions

This paper is concerned with the synchronization control problem of CMNNs with mixed delays and stochastic perturbations. Some novel sufficient conditions guaranteeing the exponential synchronization of CMNNs with mixed delays and stochastic perturbations in mean square are derived via feedback controllers. Additionally, by using adaptive feedback controllers and stochastic LaSalle invariance principle, the asymptotic synchronization of CMNNs with mixed delays and stochastic perturbations in mean square can also be achieved. Numerical simulations are given to illustrate the validity and the effectiveness of our theoretical results.

References

Yang X, Wu Z, Cao J (2013) Finite-time synchronization of complex networks with nonidentical discontinuous nodes. Nonlinear Dyn 73(4):2313–2327

Zaghloul ME, Milanović V (1996) Synchronization of chaotic neural networks and applications to communications. Int J Bifurcat Chaos 6:2571–2585

Lu W, Chen T (2004) Synchronization of coupled connected neural networks with delays. IEEE Trans Circuits Syst I Regular Pap 51:2491–2503

Tan Z, Ali MK (2011) Associative memory using synchronization in a chaotic neural network. Int J Mod Phys C 12(12):19–29

Liu Q, Yang S, Wang J (2016) A collective neurodynamic approach to distributed constrained optimization. IEEE Trans Neural Netw Learn Syst. doi:10.1109/TNNLS.2016.2549566

Chen G, Zhou J, Liu Z (2004) Global synchronization of coupled delayed neural networks and applications to chaotic CNN models. Int J Bifurcat Chaos 14:2229–2240

Wu W, Chen T (2008) Global synchronization criteria of linearly coupled neural network systems with time-varying coupling. IEEE Trans Neural Netw 19:319–332

Yang S, Guo Z, Wang J (2016) Global synchronization of multiple recurrent neural networks with time delays via impulsive interactions. IEEE Trans Neural Netw Learn Syst 28(7):1657–1667

Cao J, Chen G, Li P (2008) Global synchronization in an array of delayed neural networks with hybrid coupling. IEEE Trans Syst Man Cybern B Cybern 38:488–498

Yang X, Cao J, Yang Z (2013) Synchronization of coupled reaction-diffusion neural networks with time-varying delays via pinning-impulsive controller. SIAM J Control Optim 51:3486–3510

He W, Qian F, Cao J (2017) Pinning-controlled synchronization of delayed neural networks with distributed-delay coupling via impulsive control. Neural Netw 85:1–9

Liu X, Cao J, Yu W, Song Q (2015) Nonsmooth finite-time synchronization of switched coupled neural networks. IEEE Trans Cybern 46(10):2360–2371

Chua LO (1971) Memristor-the missing circuit element. IEEE Trans Circuit Theory 18:507–519

Strukov DB, Snider GS, Stewart DR, Williams RS (2008) The missing memristor found. Nature 453:80–83

Sharifiy M, Banadaki Y (2010) General SPICE models for memristor and application to circuit simulation of memristor-based synapses and memory cells. J Circuits Syst Comput 19:407–424

Jo SH, Chang T, Ebong I, Bhadviya BB, Mazumder P, Lu W (2010) Nanoscale memristor device as synapse in neuromorphic systems. Nano Lett 10:1297–1301

Wu H, Li R, Zhang X, Yao R (2015) Adaptive finite-time complete periodic synchronization of memristive neural networks with time delays. Neural Process Lett 42(3):563–583

Wang W, Li L, Peng H, Kurths J, Xiao J, Yang Y (2016) Finite-time anti-synchronization control of memristive neural networks with stochastic perturbations. Neural Process Lett 43(1):49–63

Han X, Wu H, Fang B (2016) Adaptive exponential synchronization of memristive neural networks with mixed time-varying delays. Neurocomputing 201:40–50

Yang X, Cao J, Yu W (2014) Exponential synchronization of memristive Cohen–Grossberg neural networks with mixed delays. Cogn Neurodyn 8(3):239–249

Yang X, Ho DW (2015) Synchronization of delayed memristive neural networks: robust analysis approach. IEEE Trans Cybern 46(12):3377–3387

Yang X, Cao J, Liang J (2016) Exponential synchronization of memristive neural networks with delays: interval matrix method. IEEE Trans Neural Netw Learn Syst. doi:10.1109/TNNLS.2016.2561298

Guo Z, Yang S, Wang J (2015) Global exponential synchronization of multiple memristive neural networks with time delay via nonlinear coupling. IEEE Trans Neural Netw Learn Syst 26:1300–1311

Zhang W, Li C, Huang T, He X (2015) Synchronization of memristor-based coupling recurrent neural networks with time-varying delays and impulses. IEEE Trans Neural Netw Learn Syst 26(12):3308–3313

Zhang W, Li C, Huang T, Huang J (2016) Stability and synchronization of memristor-based coupling neural networks with time-varying delays via intermittent control. Neurocomputing 173(P3):1066–1072

Yang X, Cao J (2014) Hybrid adaptive and impulsive synchronization of uncertain complex networks with delays and general uncertain perturbations. Appl Math Comput 227(15):480–493

He W, Zhang B, Han Q, Qian F, Kurths J, Cao J (2017) Leader-following consensus of nonlinear multi-agent systems with stochastic sampling. IEEE Trans Cybern 47(2):327–338

Wang J, Feng J, Xu C, Zhao Y (2013) Exponential synchronization of stochastic perturbed complex networks with time-varying delays via periodically intermittent pinning. Commun Nonlinear Sci Numer Simul 18(11):3146–3157

Song Y, Wen S (2015) Synchronization control of stochastic memristor-based neural networks with mixed delays. Neurocomputing 156(C):121–128

Guo Z, Yang S, Wang J (2016) Global synchronization of stochastically disturbed memristive neurodynamics via discontinuous control laws. IEEE/CAA J Autom Sin 3:121–131

Guo Z, Yang S, Wang J (2016) Global synchronization of memristive neural networks subject to random disturbances via distributed pinning control. Neural Netw 84:67–79

Yang X, Cao J, Qiu J (2015) \(p\)th moment exponential stochastic synchronization of coupled memristor-based neural networks with mixed delays via delayed impulsive control. Neural Netw 65(C):80–91

Cao J, Wang J (2004) Absolute exponential stability of recurrent neural networks with Lipschitz-continuous activation functions and time delays. Neural Netw 17:379–390

Wu A, Zeng Z, Zhu X, Zhang J (2011) Exponential synchronization of memristor-based recurrent neural networks with time delays. Neurocomputing 74(17):3043–3050

Wu A, Wen S, Zeng Z (2012) Synchronization control of a class of memristor-based recurrent neural networks. Inf Sci 183(1):106–116

Filippov AF (1988) Differential equations with discontinuous righthand sides. Kluwer Academic Publishers, Dordrecht

Forti M, Nistri P (2003) Global convergence of neural networks with discontinuous neuron activations. IEEE Trans Circuits Syst I Regular Pap 50(11):1421–1435

Mao X (1999) A note on the LaSalle-type theorems for stochastic differential delay equations. J Math Anal Appl 236(2):350–369

Cao J, Wang Z, Sun Y (2007) Synchronization in an array of linearly stochastically coupled networks with time delays. Physica A 385(2):718–728

Li X, Cao J (2008) Adaptive synchronization for delayed neural networks with stochastic perturbation. J Franklin Inst 345(7):779–791

Acknowledgements

The work is supported by the National Key Research and Development Program (Grant Nos. 2016YFB0800602 and 2016YFB0800604), and the National Natural Science Foundation of China (Grant Nos. 61573067 and 61472045).


Cite this article

Chen, C., Li, L., Peng, H. et al. Synchronization Control of Coupled Memristor-Based Neural Networks with Mixed Delays and Stochastic Perturbations. Neural Process Lett 47, 679–696 (2018). https://doi.org/10.1007/s11063-017-9675-6


DOI: https://doi.org/10.1007/s11063-017-9675-6