Abstract

Evolutionary game theory is a mathematical approach to studying how social behaviors evolve. In many recent works, evolutionary competition between strategies is modeled as a stochastic process in a finite population. In this context, two limits are both mathematically convenient and biologically relevant: weak selection and large population size. These limits can be combined in different ways, leading to potentially different results. We consider two orderings: the \(wN\) limit, in which weak selection is applied before the large population limit, and the \(Nw\) limit, in which the order is reversed. Formal mathematical definitions of the \(Nw\) and \(wN\) limits are provided. Applying these definitions to the Moran process of evolutionary game theory, we obtain asymptotic expressions for fixation probability and conditions for success in these limits. We find that the asymptotic expressions for fixation probability, and the conditions for a strategy to be favored over a neutral mutation, are different in the \(Nw\) and \(wN\) limits. However, the ordering of limits does not affect the conditions for one strategy to be favored over another.


1 Introduction

Evolutionary game theory (Maynard Smith 1982; Maynard Smith and Price 1973; Hofbauer and Sigmund 1998; Weibull 1997; Broom and Rychtář 2013) is a framework for modeling the evolution of behaviors that affect others. Interactions are represented as a game, and game payoffs are linked to reproductive success. Originally formulated for infinitely large, well-mixed populations, the theory has been extended to populations of finite size (Taylor et al. 2004; Nowak et al. 2004; Imhof and Nowak 2006; Lessard and Ladret 2007) and a wide variety of structures (Nowak and May 1992; Blume 1993; Ohtsuki et al. 2006; Tarnita et al. 2009; Nowak et al. 2010; Allen and Nowak 2014).

Calculating evolutionary dynamics in finite and/or structured populations can be difficult, and in some cases provably so (Ibsen-Jensen et al. 2015). To obtain closed-form results, one often must pass to a limit. Two limits in particular have emerged as both mathematically convenient and biologically relevant: large population size and weak selection. The weak selection limit means that the game has only a small effect on reproductive success (Nowak et al. 2004). In these limits, many quantities that are otherwise intractable can be expressed in closed form.

Often one is interested in combining these limits. A central theme in mathematical analysis, however, is that limits can be combined in (infinitely) many ways. It is therefore important, when applying the large-population and weak-selection limits, to be clear about how they are being combined. As a first step, Jeong et al. (2014) introduced the terms \(Nw\) limit and \(wN\) limit. In the \(Nw\) limit, the large population limit is taken before the weak selection limit, while in the \(wN\) limit the order is reversed. Informally, in the \(Nw\) limit the population becomes large “much faster” than selection becomes weak, while the reverse is true in the \(wN\) limit. Although there are infinitely many ways of combining the large-population and weak-selection limits, the \(Nw\) and \(wN\) limits represent two extremes in which one limit is taken entirely before the other.

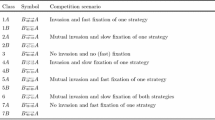

Here we provide formal mathematical definitions of the \(wN\) and \(Nw\) limits, which were lacking in the work of Jeong et al. (2014). We then apply these limits to the Moran process in evolutionary game theory (Moran 1958; Taylor et al. 2004; Nowak et al. 2004). We obtain asymptotic expressions for fixation probability under these limits, and show how these expressions differ depending on the order in which limits are taken. We also analyze criteria for evolutionary success under these limits. Our results are summarized in Table 1 and Fig. 1. We show how these limits shed new light on familiar game-theoretic concepts such as evolutionary stability, risk dominance, and the one-third rule. We also formalize and strengthen some previous results in the literature (Nowak et al. 2004; Antal and Scheuring 2006; Bomze and Pawlowitsch 2008).

Our paper is organized as follows. First we describe the model and define the \(wN\) and \(Nw\) limits. We then consider the case of constant fitness as a motivating example. Finally, we present the results of our analysis, first for the \(wN\) limit and then for the \(Nw\) limit. For each limit, we derive the fixation probability of a strategy and determine two conditions that measure the success of that strategy. The first condition compares the strategy’s fixation probability to that of a neutral mutation. The second compares the fixation probability of one strategy to that of the other.

Fig. 1 Summary of our results. (a) Asymptotic expressions for \(\rho _A\) under the \(Nw\) limit in different parameter regions; the dashed line indicates the border case \(a+b=c+d\). (b) In both the \(wN\) limit and the \(Nw\) limit, \(\rho _A > \rho _B\) if \(a+b>c+d\). (c) The order of limits matters when comparing the fixation probability of A (\(\rho _A\)) with that of a neutral mutation (1 / N). In the \(Nw\) limit, \(\rho _A>1/N\) if \(b>d\) and \(a+b \ge c+d\). (d) In the \(wN\) limit, \(\rho _A>1/N\) if \(a+2b > c+2d\).

2 Model

In the Moran process (Moran 1958; Taylor et al. 2004; Nowak et al. 2004), a population of size N consists of A and B individuals. Interactions are described by a game

$$\begin{array}{c|cc} & A & B \\ \hline A & a & b \\ B & c & d \end{array} \tag{1}$$

The fitnesses of A and B individuals are defined, respectively, as expected payoffs:

$$f_A(i) = \frac{a(i-1)+b(N-i)}{N-1}, \qquad f_B(i) = \frac{ci+d(N-i-1)}{N-1}, \tag{2}$$

where i indicates the number of A individuals. At each time step, an individual is chosen to reproduce with probability proportional to its fitness, and an individual is chosen with uniform probability to be replaced by the offspring.
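The update rule just described can be simulated directly. The following is a minimal Python sketch of our own, not code from the paper: it assumes positive payoffs, uses the expected payoffs \(f_A(i) = [a(i-1)+b(N-i)]/(N-1)\) and \(f_B(i) = [ci+d(N-i-1)]/(N-1)\) (no self-interaction), lets the offspring replace any of the N individuals, and estimates \(\rho_A\) by Monte Carlo. The function names `payoffs` and `estimate_rho_A` are hypothetical.

```python
import random

def payoffs(a, b, c, d, N, i):
    """Expected payoffs of A and B when there are i A-individuals (no self-interaction)."""
    f_A = (a * (i - 1) + b * (N - i)) / (N - 1)
    f_B = (c * i + d * (N - i - 1)) / (N - 1)
    return f_A, f_B

def estimate_rho_A(a, b, c, d, N, runs=20000, seed=1):
    """Monte Carlo estimate of the probability that a single A mutant takes over."""
    rng = random.Random(seed)
    fixations = 0
    for _ in range(runs):
        i = 1  # one A mutant among N - 1 B individuals
        while 0 < i < N:
            f_A, f_B = payoffs(a, b, c, d, N, i)
            # Reproduction: the parent is chosen with probability proportional to fitness.
            parent_is_A = rng.random() < i * f_A / (i * f_A + (N - i) * f_B)
            # Death: the individual replaced by the offspring is chosen uniformly.
            dead_is_A = rng.random() < i / N
            i += int(parent_is_A) - int(dead_is_A)
        fixations += (i == N)
    return fixations / runs

print(estimate_rho_A(1, 1, 1, 1, 5))  # neutral game: should be close to 1/N = 0.2
```

Because the ratio of the probabilities of stepping down and stepping up from state i is \(f_B(i)/f_A(i)\) whether or not the parent can be replaced, this choice does not affect the fixation probability.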

This process has two absorbing states: \(i=N\), where type A has become fixed, and \(i=0\), where type B has become fixed. The fixation probability of A, denoted \(\rho _A\), is the probability that type A will become fixed when starting from a state with a single A individual (\(i=1\)). Similarly, the fixation probability of B is denoted \(\rho _B\) and defined as the probability that type B will become fixed when starting from a state with a single B individual (\(i=N-1\)). The fixation probability of A can be calculated as (Taylor et al. 2004)

$$\rho_A = \frac{1}{1+\sum_{k=1}^{N-1}\prod_{i=1}^{k}\frac{f_B(i)}{f_A(i)}}. \tag{3}$$

The ratio of fixation probabilities is given by

$$\frac{\rho_A}{\rho_B} = \prod_{i=1}^{N-1}\frac{f_A(i)}{f_B(i)}. \tag{4}$$

For weak selection, we use the rescaled payoffs \(F_A(i) = 1 + w f_A(i)\) and \(F_B(i) = 1 + w f_B(i)\) in place of the original payoffs \(f_A(i)\) and \(f_B(i)\), respectively. This is equivalent to replacing the original game matrix (1) with the transformed matrix

$$\begin{array}{c|cc} & A & B \\ \hline A & 1+wa & 1+wb \\ B & 1+wc & 1+wd \end{array} \tag{5}$$

Above, the parameter \(w>0\) quantifies the strength of selection. A result is said to hold under weak selection if it holds to first order in w as \(w \rightarrow 0\) (Nowak et al. 2004).

The success of strategy A is quantified in two ways (Nowak et al. 2004). The first, \(\rho _A > 1/N\), is the condition that selection will favor strategy A over a neutral mutation (a type for which all payoff matrix entries are equal to 1). The second condition compares the two fixation probabilities. If \(\rho _A > \rho _B\), we say that strategy A is favored over strategy B.
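Both success criteria can be evaluated exactly for any finite N and w. The sketch below is an illustrative helper of ours (the name `fixation_probabilities` is hypothetical): it evaluates Eqs. (3) and (4) for the rescaled game (5) with Python's `fractions.Fraction`, so no rounding occurs. It assumes integer payoffs, the expected payoffs of Eq. (2), and a rational w small enough that all fitnesses are positive.

```python
from fractions import Fraction

def fixation_probabilities(a, b, c, d, N, w):
    """Exact (rho_A, rho_B) for the Moran process with fitnesses 1 + w*f_A(i), 1 + w*f_B(i)."""
    total, prod = Fraction(0), Fraction(1)  # total = sum_k prod_{i<=k} F_B(i)/F_A(i)
    for i in range(1, N):
        f_A = Fraction(a * (i - 1) + b * (N - i), N - 1)
        f_B = Fraction(c * i + d * (N - i - 1), N - 1)
        prod *= (1 + w * f_B) / (1 + w * f_A)
        total += prod
    rho_A = 1 / (1 + total)   # Eq. (3)
    rho_B = prod * rho_A      # Eq. (4): rho_B / rho_A = prod_i F_B(i)/F_A(i)
    return rho_A, rho_B

N, w = 10, Fraction(1, 10)
rho_A, rho_B = fixation_probabilities(3, 0, 5, 1, N, w)
# In this prisoner's-dilemma-like game f_B(i) > f_A(i) for every i, so both criteria fail.
print(rho_A > Fraction(1, N), rho_A > rho_B)
```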

3 Limit definitions

We provide here formal mathematical definitions of the \(wN\) limit, in which weak selection is applied before the large population limit is taken, and the \(Nw\) limit, in which the order is reversed. For each limit, we define what it means for a statement to hold and what it means for a function to have a particular asymptotic expansion.

First, we define a statement to be true in the \(wN\) limit if it holds for all sufficiently large N and all sufficiently small w, where N must be fixed first and w may depend on N. The formal statement is as follows:

Definition 1

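Statement S(N, w) is true in the \(wN\) limit if

$$\exists\, N_0\ \ \forall N > N_0\ \ \exists\, w_0(N) > 0\ \ \forall w \in \left(0, w_0(N)\right):\quad S(N,w) \text{ holds}.$$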

Second, we define what it means for two functions to be asymptotically equivalent to first order in w in the \(wN\) limit:

Definition 2

For functions f(N, w) and g(N, w), we say that \(f(N,w)\sim g(N,w)+o(w)\) in the \(wN\) limit if and only if

$$f(N,w) = g(N,w) + w\,R(N,w),$$

where \( \lim _{N\rightarrow \infty } \lim _{w\rightarrow 0} R(N,w) = 0\).

In words, f and g must differ by w times a remainder term that disappears as first \(w \rightarrow 0\) and then \(N \rightarrow \infty \).

Third, we formalize what it means for a statement to be true in the \(Nw\) limit. As in the \(wN\) limit, it must hold for all sufficiently large N and all sufficiently small w, but here w must be fixed first and N may depend on w.

Definition 3

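Statement S(N, w) is true in the \(Nw\) limit if

$$\exists\, w_0 > 0\ \ \forall w \in (0, w_0)\ \ \exists\, N_0(w)\ \ \forall N > N_0(w):\quad S(N,w) \text{ holds}.$$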

Finally, we define what it means for two functions to be asymptotically equivalent to first order in w in the \(Nw\) limit. The only difference from Definition 2 is that the order of limits is reversed:

Definition 4

For functions f(N, w) and g(N, w), we say that \(f(N,w)\sim g(N,w)+o(w)\) in the \(Nw\) limit if and only if

$$f(N,w) = g(N,w) + w\,R(N,w),$$

where \(\lim _{w\rightarrow 0} \lim _{N\rightarrow \infty } R(N,w) = 0\).
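To see why the order of quantifiers in Definitions 1 and 3 matters, consider the toy function \(h(N,w) = 1/(1+Nw)\), which is our own example and not from the paper. For fixed N, \(h \rightarrow 1\) as \(w \rightarrow 0\); for fixed \(w>0\), \(h \rightarrow 0\) as \(N \rightarrow \infty \). The statement \(h > 1/2\) is therefore true in the \(wN\) limit (take \(w_0(N) = 1/N\)) but false in the \(Nw\) limit (for every w it fails once \(N \ge 1/w\)). The Python spot-check below samples a few (N, w) pairs in each order. It illustrates the definitions and is not a proof; the helper names are hypothetical.

```python
def h(N, w):
    """Toy function whose iterated limits differ: 1 if w -> 0 first, 0 if N -> oo first."""
    return 1.0 / (1.0 + N * w)

def spot_check_wN(statement, Ns):
    # wN order (Definition 1): N is fixed first; w is then taken below a
    # threshold that may depend on N (here w < 1/N).
    return all(statement(N, w) for N in Ns for w in (0.5 / N, 0.1 / N, 0.01 / N))

def spot_check_Nw(statement, ws):
    # Nw order (Definition 3): w is fixed first; N is then taken above a
    # threshold that may depend on w (here N of order 1/w or larger).
    return all(statement(N, w) for w in ws
               for N in (int(2 / w), int(10 / w), int(100 / w)))

def above_half(N, w):
    return h(N, w) > 0.5

print(spot_check_wN(above_half, [10, 100, 1000]))    # True
print(spot_check_Nw(above_half, [0.1, 0.01, 0.001]))  # False
```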

4 Example: constant fitness

We illustrate the difference between the \(Nw\) and \(wN\) limits using the special case of constant fitness. In this case, the payoffs to A and B are set to constant values \(f_A = 1+s\) and \(f_B = 1\), independent of the population state i, where \(s>-1\) is the selection coefficient of A. The fixation probability of A is (Moran 1958)

$$\rho_A = \frac{1-(1+s)^{-1}}{1-(1+s)^{-N}} \tag{6}$$

for \(s \ne 0\), with \(\rho_A = 1/N\) when \(s = 0\).

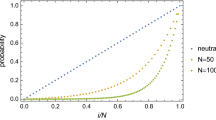

In the limits of large population size (\(N\rightarrow \infty \)) and weak selection (\(s\rightarrow 0\)), the asymptotic expansion of \(\rho _A\) depends on the order in which the limits are taken (Fig. 2). (Note that in the constant-fitness case, selection strength can be quantified by |s| rather than w.) In the \(wN\) limit, we have

$$\rho_A \sim \frac{1}{N} + \frac{s}{2} + o(s), \tag{7}$$

whereas in the \(Nw\) limit,

$$\rho_A \sim \begin{cases} 0 + o(s), & s \le 0,\\ s + o(s), & s > 0. \end{cases} \tag{8}$$

The asymptotic expressions (7) and (8) hold in the sense specified by Definitions 2 and 4, respectively. Note that the leading-order expression for \(\rho _A\) is linear in s in the \(wN\) limit but piecewise-linear in the \(Nw\) limit. Moreover, the slope of \(\rho _A\) with respect to s in the \(wN\) limit (1/2) is the average of the two one-sided slopes in the \(Nw\) limit (0 for \(s<0\) and 1 for \(s>0\); see also Fig. 2d, e).

Although the asymptotic expressions (7) and (8) differ under the two limit orderings, the conditions for the success of type A are the same. This is because, for any \(s>-1\) and \(N\ge 2\), type A is favored over a neutral mutation (\(\rho _A > 1/N\)), according to Eq. (6), if and only if \(s > 0\). Likewise, A is favored over B (\(\rho _A > \rho _B\)) if and only if \(s > 0\). Since these conditions apply to arbitrary s and N, they remain valid under any limits of these parameters.
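A short numerical sketch of ours, not from the paper, makes the contrast between Eqs. (7) and (8) concrete. It evaluates Eq. (6) in a numerically stable form using `math.log1p` and `math.expm1`, then takes the two orders of limits numerically. With N fixed and s tiny, the difference quotient \((\rho_A - 1/N)/s\) is close to \((N-1)/(2N)\), which tends to 1/2. With s fixed and N huge, \(\rho_A\) is close to \(s/(1+s)\) for \(s>0\) and to 0 for \(s<0\). The function names are hypothetical.

```python
import math

def rho_const(s, N):
    """Eq. (6): fixation probability of A with fitness 1 + s against fitness 1."""
    if s == 0:
        return 1.0 / N                      # neutral case (limit of Eq. (6))
    log_r = math.log1p(s)                   # ln(1 + s)
    if s > 0:
        # 1 - (1+s)^(-N) = -expm1(-N ln(1+s)), accurate even for tiny s
        return (s / (1.0 + s)) / -math.expm1(-N * log_r)
    # s < 0: multiply numerator and denominator by (1+s)^N to avoid overflow
    q = math.exp(N * log_r)                 # (1+s)^N, which is < 1
    return (-s / (1.0 + s)) * q / -math.expm1(N * log_r)

def slope_wN(N, s=1e-6):
    """wN order: N fixed, s small. Returns (rho_A - 1/N) / s."""
    return (rho_const(s, N) - 1.0 / N) / s

def value_Nw(s, N=10**6):
    """Nw order: s fixed, N large."""
    return rho_const(s, N)

for N in (10, 100, 1000):
    print(N, slope_wN(N), (N - 1) / (2 * N))
print(value_Nw(0.01), 0.01 / 1.01, value_Nw(-0.01))
```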

Fig. 2 Fixation probability versus selection coefficient for constant selection. (a) Fixation probability \(\rho _A\), given by Eq. (6), is an increasing function of the selection coefficient s. (b) When selection is weak (\(|s|\ll 1\)), fixation probability is approximately linear in s. (c) For large population size (\(N \rightarrow \infty \)), fixation probability goes to zero for \(s \le 0\), and there is a corner in the graph at \(s=0\). (d) In the \(wN\) limit, weak selection is applied first, followed by large population size, resulting in \(\rho _A \sim 1/N+s/2+o(s)\). (e) In the \(Nw\) limit, the limit \(N\rightarrow \infty \) is applied first, followed by weak selection. The result is a piecewise-linear function that is zero for \(s \le 0\) and has slope 1 for \(s>0\). Population size is \(N=5, 10, 10^3, 10^3,\) and \(10^4\) in panels (a)–(e), respectively.

5 Results

Having motivated our investigation using the case of constant selection, we now consider an arbitrary payoff matrix (1). We analyze the \(wN\) limit first, followed by the \(Nw\) limit.

5.1 \(wN\) Limit

In the \(wN\) limit we first apply weak selection and then consider large population size. Results for \(\rho _A\) are presented first, followed by conditions for success.

Theorem 1

In the \(wN\) limit,

$$\rho_A \sim \frac{1}{N}+\frac{w}{6}(a+2b-c-2d)+o(w). \tag{9}$$

This theorem formalizes a result of Nowak et al. (2004), and can also be considered a special case of Eq. (92) of Lessard and Ladret (2007).

Proof

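We apply weak selection to the fitnesses in Eq. (2):

$$F_A(i) = 1 + w\,\frac{a(i-1)+b(N-i)}{N-1}, \qquad F_B(i) = 1 + w\,\frac{ci+d(N-i-1)}{N-1}. \tag{10}$$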

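Substituting Eq. (10) for \(f_A(i)\) and \(f_B(i)\) in (3) and taking a Taylor expansion about \(w=0\) gives

$$\rho_A = \frac{1}{N}+\frac{w}{6N}\left[N(a+2b-c-2d)-(2a+b+c-4d)\right]+w\,Q(N,w), \tag{11}$$

where \(\lim _{w\rightarrow 0} Q(N,w) = 0\). We regroup,

$$\rho_A = \frac{1}{N}+\frac{w}{6}(a+2b-c-2d)+w\,R(N,w), \tag{12}$$

defining the remainder term as \(R(N,w) = Q(N,w)-\frac{1}{6N}(2a+b+c-4d)\). By taking the limit of R(N, w) as first \(w\rightarrow 0\) and then \(N\rightarrow \infty \), we find that

$$\lim_{N\rightarrow\infty}\lim_{w\rightarrow 0}R(N,w) = \lim_{N\rightarrow\infty}\left(-\frac{2a+b+c-4d}{6N}\right) = 0.$$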

By Definition 2, \(\rho _A \sim \frac{1}{N}+\frac{w}{6}(a+2b-c-2d)+o(w)\) in the \(wN\) limit. \(\square \)
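The first-order coefficient in Eq. (11), \((\alpha N - \beta)/(6N)\) with \(\alpha = a+2b-c-2d\) and \(\beta = 2a+b+c-4d\), can be checked against the exact formula (3) using rational arithmetic. In the minimal Python sketch below (hypothetical helper names; integer payoffs assumed), the difference quotient \((\rho_A - 1/N)/w\) at a tiny rational w should match that coefficient up to a correction of order w. As N grows, the coefficient tends to \(\alpha/6\), the slope in Theorem 1.

```python
from fractions import Fraction

def rho_A(a, b, c, d, N, w):
    """Exact rho_A from Eq. (3) with the weak-selection fitnesses of Eq. (10)."""
    total, prod = Fraction(0), Fraction(1)
    for i in range(1, N):
        f_A = Fraction(a * (i - 1) + b * (N - i), N - 1)
        f_B = Fraction(c * i + d * (N - i - 1), N - 1)
        prod *= (1 + w * f_B) / (1 + w * f_A)
        total += prod
    return 1 / (1 + total)

def first_order_coefficient(a, b, c, d, N):
    """Coefficient of w in Eq. (11): (alpha*N - beta) / (6N)."""
    alpha = a + 2 * b - c - 2 * d
    beta = 2 * a + b + c - 4 * d
    return Fraction(alpha * N - beta, 6 * N)

game, N, w = (3, 0, 5, 1), 20, Fraction(1, 10**9)
quotient = (rho_A(*game, N, w) - Fraction(1, N)) / w
print(float(quotient), float(first_order_coefficient(*game, N)))
```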

5.1.1 Conditions for success

Theorem 2

In the \(wN\) limit, \(\rho _A > \frac{1}{N}\) if and only if one of the following holds:

(i) \(a+2b>c+2d\);

(ii) \(a+2b=c+2d\) and \(b > c\).

An equivalent result was obtained by Bomze and Pawlowitsch (2008).

Proof

Under weak selection, it is apparent from Eq. (11) that \(\rho _A > 1/N\) if \(N(a+2b-c-2d)-(2a+b+c-4d)>0\) and \(\rho _A < 1/N\) if \(N(a+2b-c-2d)-(2a+b+c-4d)<0\). Thus \(\rho _A > 1/N\) for sufficiently large N if \(a+2b>c+2d\) or if \(a+2b=c+2d\) and \(2a+b+c-4d<0\). Since \(a+2b=c+2d\) implies \(2a+b+c-4d = 3(c-b)\), the second condition is equivalent to \(a+2b=c+2d\) and \(b > c\).

For the border case, \(a+2b=c+2d\) and \(b = c\), we take a second-order expansion of \(\rho _A\):

For \(N>2\) and \(a\ne b\), the second order term is always negative, which implies that \(\rho _A < 1/N\). Lastly, if \(a = b = c = d\) then \(\rho _A = 1/N\). \(\square \)
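Theorem 2 reduces to a simple test on the payoff entries. The Python sketch below (hypothetical function names) encodes that test and compares it with the sign of \(\rho_A - 1/N\) computed from Eq. (3) at a large N and a w much smaller than 1/N, which mimics the \(wN\) ordering. It is a spot-check for games with \(a+2b \ne c+2d\) only; it does not probe the border case, which the proof handles with a second-order expansion.

```python
def favored_over_neutral_wN(a, b, c, d):
    """Theorem 2: whether rho_A > 1/N in the wN limit."""
    lhs, rhs = a + 2 * b, c + 2 * d
    return lhs > rhs or (lhs == rhs and b > c)

def rho_A(a, b, c, d, N, w):
    """rho_A from Eq. (3) with fitnesses 1 + w*f_A(i), 1 + w*f_B(i), in floating point."""
    total, prod = 0.0, 1.0
    for i in range(1, N):
        f_A = (a * (i - 1) + b * (N - i)) / (N - 1)
        f_B = (c * i + d * (N - i - 1)) / (N - 1)
        prod *= (1 + w * f_B) / (1 + w * f_A)
        total += prod
    return 1.0 / (1.0 + total)

N, w = 100, 1e-5  # N * w = 1e-3, so selection is weak relative to drift
for game in [(3, 0, 5, 1), (1, 4, 0, 2)]:
    print(game, favored_over_neutral_wN(*game), rho_A(*game, N, w) > 1 / N)
```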

Theorem 3

In the \(wN\) limit, \(\rho _A > \rho _B\) if and only if one of the following holds:

(i) \(a+b>c+d\);

(ii) \(a+b=c+d\) and \(b > c\).

Case (i) of this result was stated informally by Nowak et al. (2004).

Proof

Substituting Eq. (10) for \(f_A(i)\) and \(f_B(i)\) into Eq. (4) and taking a Taylor expansion about \(w=0\), we get

$$\frac{\rho_A}{\rho_B} = 1+\frac{w}{2}\left[N(a+b-c-d)-2a+2d\right]+w\,Q(N,w),$$

where \(\lim _{w\rightarrow 0} Q(N,w) = 0\). Clearly, \(\rho _A\) is greater than (less than) \(\rho _B\) under weak selection if \(N(a+b-c-d)-2a+2d\) is positive (negative). The expression is positive for sufficiently large N if \(a+b>c+d\) or if \(a+b=c+d\) and \(a<d\). Since \(a+b=c+d\) implies \(d-a = b-c\), the second condition is equivalent to \(a+b=c+d\) and \(b > c\). Lastly, if \(b=c\) and \(a=d\), then from Eq. (4), \(\rho _A = \rho _B\). \(\square \)
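The bracket in this expansion comes from \(\ln (\rho_A/\rho_B) = \sum_i \ln [F_A(i)/F_B(i)] \approx w\sum_{i=1}^{N-1}[f_A(i)-f_B(i)]\), and that sum equals \([N(a+b-c-d)-2a+2d]/2\). The Python sketch below (hypothetical names; integer payoffs assumed) verifies this identity exactly with rational arithmetic and encodes the condition of Theorem 3.

```python
from fractions import Fraction

def payoff_gap_sum(a, b, c, d, N):
    """Exact value of sum_{i=1}^{N-1} [f_A(i) - f_B(i)] for the payoffs of Eq. (2)."""
    return sum(Fraction(a * (i - 1) + b * (N - i), N - 1)
               - Fraction(c * i + d * (N - i - 1), N - 1) for i in range(1, N))

def favored_over_B_wN(a, b, c, d):
    """Theorem 3: whether rho_A > rho_B in the wN limit."""
    return a + b > c + d or (a + b == c + d and b > c)

a, b, c, d, N = 3, 0, 5, 1, 50
print(payoff_gap_sum(a, b, c, d, N) == Fraction(N * (a + b - c - d) - 2 * a + 2 * d, 2))
print(favored_over_B_wN(a, b, c, d))
```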

5.2 \(Nw\) Limit

In this section, we first determine the limit of \(\rho _A\) as \(N\rightarrow \infty \) (Theorem 4) and then find an asymptotic expression for \(\rho _A\) in the \(Nw\) limit. We then turn to conditions for success, first in the \(N \rightarrow \infty \) limit (Theorems 5 and 6) and then the \(Nw\) limit.

Theorem 4

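The fixation probability \(\rho _A\) has the following large-population limit:

$$\lim_{N\rightarrow\infty}\rho_A = \begin{cases} 0, & \text{if } b \le d,\\ \dfrac{b-d}{b}, & \text{if } b>d \text{ and either } a \ge c \text{ or } I<0,\\ 0, & \text{if } b>d,\ a<c, \text{ and } I>0,\\ \dfrac{(b-d)(c-a)}{b(c-a)+c(b-d)\sqrt{\frac{ac}{bd}}}, & \text{if } b>d,\ a<c, \text{ and } I=0, \end{cases}$$

where

$$I = \int_0^1 \ln \tilde{f}(x)\,dx \tag{13}$$

and

$$\tilde{f}(x) = \frac{d+x(c-d)}{b+x(a-b)}. \tag{14}$$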

Some aspects of this result were obtained by Antal and Scheuring (2006), using a mixture of exact and approximate methods. Our proof confirms the results of Antal and Scheuring (2006) except in the case \(b>d\), \(a< c\), and \(I=0\), as we detail in the Discussion.

Proof

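We first establish some basic definitions and results before considering various cases. From Eq. (2), define the function \(f\left( \frac{i}{N},N\right) \) as

$$f\left(\frac{i}{N},N\right) = \frac{f_B(i)}{f_A(i)} = \frac{d+\frac{i}{N}(c-d)-\frac{d}{N}}{b+\frac{i}{N}(a-b)-\frac{a}{N}}.$$

The function \(\tilde{f}\left( \frac{i}{N}\right) \) of Eq. (14) serves as an approximation to \(f\left( \frac{i}{N},N\right) \) with error

$$\epsilon_N(i) = f\left(\frac{i}{N},N\right)-\tilde{f}\left(\frac{i}{N}\right). \tag{15}$$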

Importantly, \(\epsilon _N(i)\) is uniformly bounded in the sense that, for N sufficiently large, there exists a positive constant L such that \(|\epsilon _N(i)|\le \frac{L}{N}\) for all \(i=1,\ldots ,N\). Specifically, for \(N\ge \frac{2a}{\min \left\{ a,b\right\} }\), we can set

Therefore, \(\lim _{N\rightarrow \infty } f(x,N) = \tilde{f}(x) \text { uniformly in } x\).

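In our proof, we will make use of some properties of the function \(\tilde{f}(x)\). The derivative

$$\tilde{f}'(x) = \frac{bc-ad}{\left(b+x(a-b)\right)^2}$$

implies that \(\tilde{f}(x)\) is monotonic: it is constant (\(bc= ad\)), strictly increasing (\(bc>ad\)), or strictly decreasing (\(bc<ad\)). Since its extrema must occur at the endpoints (\(\tilde{f}(0)=d/b\) and \(\tilde{f}(1)=c/a\)), set

$$\tilde{m} = \min\left\{\frac{d}{b},\frac{c}{a}\right\}, \qquad \tilde{M} = \max\left\{\frac{d}{b},\frac{c}{a}\right\}. \tag{17}$$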

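Our proof also makes frequent use of the integral I of Eq. (13), which evaluates to

$$I = \frac{c\ln c-d\ln d}{c-d}-\frac{a\ln a-b\ln b}{a-b} = \ln\left(\frac{b^{\frac{b}{a-b}}\,c^{\frac{c}{c-d}}}{a^{\frac{a}{a-b}}\,d^{\frac{d}{c-d}}}\right) \tag{18}$$

for \(a\ne b\) and \(c\ne d\); the remaining cases follow by continuity.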

An illustration of this integral is given in Fig. 3.

Fig. 3 Plot of \(\ln \tilde{f}(x)\) versus x, where \(\tilde{f}(x)\) is defined as in Eq. (14). This figure illustrates the case \(b>d\), \(c>a\) and \(I>0\) (the net area under the curve is positive). The point \(x^*\) satisfies \(\int _0^{x^*}\ln \tilde{f}(x)\; dx = 0\).
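The closed form (18) can be checked against direct quadrature of Eq. (13). The Python sketch below (our own check; function names hypothetical; positive payoffs with \(a \ne b\), \(c \ne d\) assumed) compares the two for two games with \(b>d\) and \(a<c\). For \((a,b,c,d)=(1,3,2,1)\) the integral is negative, \(I = 2\ln 2 - \tfrac{3}{2}\ln 3\), and for \((1,2,4,1)\) it is positive, so these games fall in subcases 3(b)i and 3(b)ii of the proof, respectively.

```python
import math

def I_closed_form(a, b, c, d):
    """Eq. (18), valid for a != b and c != d."""
    return ((c * math.log(c) - d * math.log(d)) / (c - d)
            - (a * math.log(a) - b * math.log(b)) / (a - b))

def I_midpoint(a, b, c, d, n=100000):
    """Midpoint-rule approximation of Eq. (13), with f~ from Eq. (14)."""
    h = 1.0 / n
    f_tilde = lambda x: (d + x * (c - d)) / (b + x * (a - b))
    return h * math.fsum(math.log(f_tilde((k + 0.5) * h)) for k in range(n))

for game in [(1, 3, 2, 1), (1, 2, 4, 1)]:
    print(game, I_closed_form(*game), I_midpoint(*game))
```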

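Our overall objective is to investigate the fixation probability of Eq. (3), which can be written

$$\rho_A = \frac{1}{1+S}, \tag{19}$$

where S is the sum defined as

$$S = \sum_{k=1}^{N-1}\prod_{i=1}^{k} f\left(\frac{i}{N},N\right). \tag{20}$$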

Since \(\tilde{f}\) is a simpler function than f, we rewrite the product in Eq. (20) as \(\prod _{i=1}^k \left[ \tilde{f}\left( \frac{i}{N}\right) +\epsilon _N(i)\right] \). The bound on \(\epsilon _N(i)\) implies that for sufficiently large N,

Using \(\tilde{m}\), the minimum of \(\tilde{f}\) given in Eq. (17), we obtain

These inequalities allow for the comparison between f and \(\tilde{f}\).

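The main idea of the proof going forward is to determine under which conditions the sum S diverges and under which conditions it converges (and to what value) as N becomes arbitrarily large. To accomplish this, we first split the sum of Eq. (20) as \(S = S_1+S_2\), where \(S_1\) and \(S_2\) are non-negative sums defined as

$$S_1 = \sum_{k=1}^{\lfloor \ln N\rfloor}\prod_{i=1}^{k} f\left(\frac{i}{N},N\right), \qquad S_2 = \sum_{k=\lfloor \ln N\rfloor+1}^{N-1}\prod_{i=1}^{k} f\left(\frac{i}{N},N\right). \tag{23}$$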

Let

Since f converges uniformly to the monotonic function \(\tilde{f}\),

Useful inequalities obtained from Eqs. (22) and (24) are

The geometric series gives

as long as \(m_1\ne 1\) and \(M_1\ne 1\), respectively.

Now that we have some basic definitions and results, we pursue \(\lim _{N\rightarrow \infty }\rho _A\) by considering cases. We first compare b and d. If necessary, we then compare a and c, and, if further required, consider the sign of I.

1. Case \(b<d\). In this case, \(\lim _{N\rightarrow \infty } m_1 = d/b >1\) and

$$\begin{aligned} \lim _{N\rightarrow \infty }\frac{m_1-m_1^{\lfloor \ln {N}\rfloor +1}}{1-m_1}=\infty . \end{aligned}$$

It follows from Eq. (27) that \(\lim _{N\rightarrow \infty }S_1=\infty \) and consequently, \(\lim _{N\rightarrow \infty }S = \infty \). Eq. (19) gives \(\lim _{N\rightarrow \infty }\rho _A = 0\).

2. Case \(b=d\). In this case, \(\lim _{N\rightarrow \infty } m_1 = d/b =1\). We will show that the sum \(\sum _{k=1}^{\lfloor \ln {N}\rfloor } m_1^k\) diverges. Fix an arbitrary positive integer B. Then

$$\begin{aligned} \lim _{N\rightarrow \infty }\sum _{k=1}^{B+1}m_1^k =B+1. \end{aligned}$$

This implies that for all sufficiently large N,

$$\begin{aligned} \sum _{k=1}^{B+1}m_1^k > B. \end{aligned}$$

In particular, for \(\lfloor \ln {N} \rfloor > B+1\),

$$\begin{aligned} \sum _{k=1}^{\lfloor \ln {N}\rfloor } m_1^k>\sum _{k=1}^{B+1} m_1^k >B. \end{aligned}$$

Since B was arbitrary, \(\sum _{k=1}^{\lfloor \ln {N}\rfloor } m_1^k\) becomes larger than any positive integer as \(N\rightarrow \infty \). This proves that

$$\begin{aligned} \lim _{N\rightarrow \infty }\sum _{k=1}^{\lfloor \ln {N} \rfloor }m_1^k=\infty . \end{aligned}$$

From Eq. (26) we conclude that \(\lim _{N\rightarrow \infty }S_1 = \infty \) and consequently \(\lim _{N\rightarrow \infty }\rho _A = 0\).

3. Case \(b>d\). In this case, \(\lim _{N\rightarrow \infty } m_1 = \lim _{N\rightarrow \infty } M_1 = d/b <1\). From Eq. (27), \(S_1\) is bounded, and it follows from taking the limit as \(N\rightarrow \infty \) of Eq. (27) and applying the Squeeze Theorem (Thomson et al. 2001) that

$$\begin{aligned} \lim _{N\rightarrow \infty }S_1 = \frac{d}{b-d}. \end{aligned}\tag{28}$$

We now turn our attention to \(S_2\), which requires the consideration of subcases.

(a) Subcase \(a > c\). Eq. (25) implies \(\lim _{N\rightarrow \infty }m_2<1\) and \(\lim _{N\rightarrow \infty }M_2<1\). Furthermore, \(S_2\) of Eq. (23) is bounded:

$$\begin{aligned} \sum _{k=\lfloor \ln {N}\rfloor +1}^{N-1}m_2^k&\le S_2\le \sum _{k=\lfloor \ln {N}\rfloor +1}^{N-1}M_2^k \nonumber \\ \frac{{m_2}^{\lfloor \ln {N}\rfloor +1}-{m_2}^{N}}{1-{m_2}}&\le S_2\le \frac{{M_2}^{\lfloor \ln {N}\rfloor +1}-{M_2}^{N}}{1-{M_2}}. \end{aligned}$$

Applying the Squeeze Theorem (Thomson et al. 2001),

$$\begin{aligned} \lim _{N\rightarrow \infty }S_2 = 0. \end{aligned}\tag{29}$$

Eqs. (28) and (29) together give \(\lim _{N\rightarrow \infty } S = d/(b-d)\) and, by Eq. (19), \(\lim _{N\rightarrow \infty } \rho _A = (b-d)/b\).

(b) Subcase \(a < c\). In this case, \(\tilde{f}\) is an increasing function with minimum value \(\tilde{f}(0) = d/b<1\) and maximum value \(\tilde{f}(1) = c/a>1\). The behavior of \(\rho _A\) depends on the sign of the integral I, so we must consider further subcases. An illustration is given in Fig. 3 for the subcase \(I>0\).

i. Subcase \(I<0\). We will show that \(S_2\rightarrow 0\) as \(N\rightarrow \infty \). Set

$$\begin{aligned} \tilde{A}_k = \sum _{i=1}^{k} \ln \tilde{f}\left( \frac{i}{N}\right) , \end{aligned}\tag{30}$$

and

$$\begin{aligned} \tilde{S}_2&= \sum _{k=\lfloor \ln {N}\rfloor +1}^{N-1} \prod _{i=1}^k \tilde{f}\left( \frac{i}{N}\right) =\sum _{k=\lfloor \ln {N}\rfloor +1}^{N-1} \exp \tilde{A}_k. \end{aligned}\tag{31}$$

We will prove \(\tilde{S}_2\rightarrow 0\) as \(N\rightarrow \infty \) by first showing that \(\exp \tilde{A}_k\) is less than or equal to some constant multiple of \(e^{kI}\), where I is defined in Eq. (13). Consider the integral \(\int _0^{k/N} \ln \tilde{f}\left( x\right) dx\). Since \(\ln \tilde{f}\left( x\right) \) is a monotonically increasing function, the left Riemann sum is a lower bound:

$$\begin{aligned} \int _0^{k/N} \ln \tilde{f}\left( x\right) dx&>\frac{1}{N}\sum _{i=0}^{k-1} \ln \tilde{f}\left( \frac{i}{N}\right) \\&=\frac{1}{N}\left( \tilde{A}_k+\ln \tilde{f}\left( 0\right) -\ln \tilde{f}\left( \frac{k}{N}\right) \right) . \end{aligned}\tag{32}$$

Furthermore, the maximum value of \(\ln \tilde{f}(x)\) is \(\ln \tilde{f}(1)\). Substituting this bound into (32) and rearranging, we have that for all \(k=1,\ldots ,N\),

$$\begin{aligned} \tilde{A}_k&< N\int _0^{k/N} \ln \tilde{f}\left( x\right) dx+\ln \frac{\tilde{f}\left( 1\right) }{\tilde{f}\left( 0\right) }. \end{aligned}\tag{33}$$

Since \(\ln \tilde{f}\) is increasing, the average value of \(\ln \tilde{f}(x)\) over intervals [0, y] must be increasing in y. Hence for \(y\in (0,1]\),

$$\begin{aligned} \frac{1}{y}\int _0^{y} \ln \tilde{f}\left( x\right) dx \le \int _0^{1} \ln \tilde{f}\left( x\right) dx = I. \end{aligned}$$

Let \(y = k/N\) to obtain

$$\begin{aligned} N\int _0^{k/N} \ln \tilde{f}\left( x\right) dx \le kI. \end{aligned}$$

Combining with Eq. (33),

$$\begin{aligned} \tilde{A}_k< kI +\ln \frac{\tilde{f}\left( 1\right) }{\tilde{f}\left( 0\right) }. \end{aligned}\tag{34}$$

Substitute Eq. (34) into Eq. (31) to obtain

$$\begin{aligned} \tilde{S}_2&< \sum _{k=\lfloor \ln {N}\rfloor +1}^{N-1} \frac{\tilde{f}\left( 1\right) }{\tilde{f}\left( 0\right) } e^{kI}=\frac{\tilde{f}\left( 1\right) }{\tilde{f}\left( 0\right) }\cdot \frac{e^{I(\lfloor \ln {N}\rfloor +1)}-e^{IN}}{1-e^{I}}. \end{aligned}$$

Therefore, since \(I<0\),

$$\begin{aligned} \lim _{N\rightarrow \infty }\tilde{S}_2&= 0. \end{aligned}\tag{35}$$

We must now show how \(\tilde{S}_2\) relates to \(S_2\). Substitute \(\tilde{m} = \tilde{f}(0)=d/b\) into Eq. (21) and sum over k to obtain an upper bound for \(S_2\):

$$\begin{aligned} \sum _{k=\lfloor \ln {N}\rfloor +1}^{N-1}\prod _{i=1}^k f\left( \frac{i}{N},N\right)&\le \sum _{k=\lfloor \ln {N}\rfloor +1}^{N-1}\left( 1+\frac{bL}{dN}\right) ^k\prod _{i=1}^k \tilde{f}\left( \frac{i}{N}\right) \\&\le \left( 1+\frac{bL}{dN}\right) ^N\sum _{k=\lfloor \ln {N}\rfloor +1}^{N-1}\prod _{i=1}^k \tilde{f}\left( \frac{i}{N}\right) . \end{aligned}$$

Thus,

$$\begin{aligned} S_2&\le \left( 1+\frac{bL}{dN}\right) ^N \tilde{S}_2. \end{aligned}$$

The limit

$$\begin{aligned} \lim _{N\rightarrow \infty }\left( 1+\frac{bL}{dN}\right) ^N = e^{bL/d}, \end{aligned}\tag{36}$$

together with Eq. (35) gives

$$\begin{aligned} \lim _{N\rightarrow \infty }S_2&= 0. \end{aligned}\tag{37}$$

Adding Eqs. (28) and (37), we find \(\lim _{N\rightarrow \infty } S = d/(b-d)\) and consequently \(\lim _{N\rightarrow \infty } \rho _A = (b-d)/b\).

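ii. Subcase \(I>0\). We will show that \(S_2\rightarrow \infty \) as \(N\rightarrow \infty \). Break up \(S_2\) of Eq. (23) so that \(S_2 = S_3+S_4\), where

$$\begin{aligned} S_3&=\sum _{k=\lfloor \ln {N}\rfloor +1}^{\lfloor Nx^* \rfloor -1} \prod _{i=1}^k f\left( \frac{i}{N},N\right) ,\nonumber \\ S_4&=\sum _{k=\lfloor Nx^* \rfloor }^{N-1} \prod _{i=1}^k f\left( \frac{i}{N},N\right) , \end{aligned}\tag{38}$$

and \(x^*\) is defined as the point where \(\int _0^{x^*} \ln \tilde{f}\left( x\right) dx=0\) (see Fig. 3). Because \(\ln \tilde{f}\) is increasing, negative at 0, and has positive integral I over [0, 1], this point satisfies \(0<x^*<1\) and \(\ln \tilde{f}(x^*)>0\). Define

$$\begin{aligned} m_4&= \min _{\lfloor Nx^* \rfloor \le i \le N-1}\left\{ f\left( \frac{i}{N},N\right) \right\} . \end{aligned}\tag{39}$$

Write \(K=\lfloor Nx^* \rfloor \). Every term of \(S_4\) contains the common factor \(P_N = \prod _{i=1}^{K-1} f\left( \frac{i}{N},N\right) \), and each of the remaining factors is at least \(m_4\). This implies the inequality

$$\begin{aligned} S_4&\ge P_N\sum _{k=K}^{N-1} m_4^{\,k-K+1} = P_N\,\frac{m_4^{\,N-K+1}-m_4}{m_4-1}. \end{aligned}\tag{40}$$

The factor \(P_N\) is bounded away from zero. Since \(\ln \tilde{f}\) is increasing, a right Riemann sum overestimates its integral, and \(\ln \tilde{f}\le \ln \tilde{f}(x^*)\) on the interval \([(K-1)/N, x^*]\), whose length is less than 2/N; hence

$$\begin{aligned} \sum _{i=1}^{K-1}\ln \tilde{f}\left( \frac{i}{N}\right) \ge N\int _0^{(K-1)/N}\ln \tilde{f}(x)\,dx \ge N\int _0^{x^*}\ln \tilde{f}(x)\,dx-2\ln \tilde{f}(x^*) = -2\ln \tilde{f}(x^*). \end{aligned}$$

Moreover, \(|\epsilon _N(i)|\le L/N\) and \(\tilde{f}\ge \tilde{m}=d/b\) give \(f\left( \frac{i}{N},N\right) \ge \left( 1-\frac{bL}{dN}\right) \tilde{f}\left( \frac{i}{N}\right) \), so \(P_N\ge \left( 1-\frac{bL}{dN}\right) ^N\tilde{f}(x^*)^{-2}\), which tends to \(e^{-bL/d}\,\tilde{f}(x^*)^{-2}>0\). Since \(\tilde{f}\) is increasing, \(m_4\) has the limit \(\lim _{N\rightarrow \infty } m_4 = \tilde{f}\left( x^*\right) >1\), and \(N-K\rightarrow \infty \) because \(x^*<1\). Therefore, \(\lim _{N\rightarrow \infty }S_4 =\infty \), which implies that \(\lim _{N\rightarrow \infty }S = \infty \) and \(\lim _{N\rightarrow \infty } \rho _A = 0\).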

-

iii

Subcase \(I = 0\) We will show that limit of \(S_2\) as \(N\rightarrow \infty \) is positive and finite. Let \(\hat{x} = (b-d)/(b-d+c-a)\) be the point for which \(\tilde{f}\left( \hat{x}\right) = 1\) (see Fig. 3). Consider a sequence \(\beta _N\) that satisfies

$$\begin{aligned} \hat{x}< \lim _{N\rightarrow \infty } \frac{\beta _N}{N} < 1, \end{aligned}$$and converges to a limit \(\beta =\lim _{N\rightarrow \infty } \beta _N/N\). Split \(S_2\) of Eq. (23) at \(k = \beta _N\), such that \(S_2 = S_5+S_6\), where \(S_6\) is the right tail-end of the sum. We will show that \(S_5\rightarrow 0\) and \(S_6\) approaches a positive constant as \(N\rightarrow \infty \). Set

$$\begin{aligned} S_5&=\sum _{k=\lfloor \ln {N}\rfloor +1}^{\beta _N-1} \prod _{i=1}^k f\left( \frac{i}{N},N\right) \nonumber \\ S_6&=\sum _{k=\beta _N}^{N-1} \prod _{i=1}^k f\left( \frac{i}{N},N\right) . \end{aligned}$$(41)To obtain the limit of \(S_5\) we define

$$\begin{aligned} \tilde{S}_5 = \sum _{k=\lfloor \ln {N}\rfloor +1}^{\beta _N-1}\prod _{i=1}^k \tilde{f}\left( \frac{i}{N}\right) = \sum _{k=\lfloor \ln {N}\rfloor +1}^{\beta _N-1} \exp \tilde{A}_k, \end{aligned}$$where \(\tilde{A}_k\) is given in Eq. (30). Set \(C = \int _0^{\beta }\ln \tilde{f}(x) \; dx\). Importantly, \(C<0\) since \(\beta <1\), \(I=0\) and \(\ln \tilde{f}(x)\) is monotonic. Similar arguments as in case 3(b)i show that

$$\begin{aligned} \tilde{S}_5< \sum _{k=\lfloor \ln {N}\rfloor +1}^{\beta _N-1} \frac{\tilde{f}\left( 1\right) }{\tilde{f}\left( 0\right) } \, e^{kC} =\frac{\tilde{f}\left( 1\right) }{\tilde{f}\left( 0\right) }\cdot \frac{e^{C(\lfloor \ln {N}\rfloor +1)}-e^{C\beta _N}}{1-e^{C}}. \end{aligned}$$Since \(C<0\), it follows that

$$\begin{aligned} \lim _{N\rightarrow \infty }\tilde{S}_5&= 0. \end{aligned}$$(42)To relate \(\tilde{S}_5\) to \(S_5\), we substitute \(\tilde{m} = d/b\) into Eq. (21) to obtain an upper bound for \(S_5\),

$$\begin{aligned} S_5&\le \left( 1+\frac{bL}{dN}\right) ^N \tilde{S}_5. \end{aligned}$$Consequently, from Eqs. (36) and (42),

$$\begin{aligned} \lim _{N\rightarrow \infty }S_5 = 0. \end{aligned}$$(43)We now turn our attention to \(S_6\) of Eq. (41). Define

$$\begin{aligned} \begin{aligned} m_6&= \min _{\beta _N \le i \le N-1}\left\{ f\left( \frac{i}{N},N\right) \right\} ,\\ M_6&= \max _{\beta _N \le i \le N-1}\left\{ f\left( \frac{i}{N},N\right) \right\} , \end{aligned} \end{aligned}$$(44)which have the limits \(\lim _{N\rightarrow \infty } m_6 = \tilde{f}(\beta ) > 1\) and \(\lim _{N\rightarrow \infty } M_6= \tilde{f}\left( 1\right) =c/a>1\). Rewrite \(S_6\) as

$$\begin{aligned} S_6&=\left[ \prod _{i=1}^{N-1} f\left( \frac{i}{N},N\right) \right] \left[ 1+\sum _{k=\beta _N}^{N-2} \prod _{j=k+1}^{N-1} \left( f\left( \frac{j}{N},N\right) \right) ^{-1}\right] \nonumber \\&=\left[ \prod _{i=1}^{N-1} f\left( \frac{i}{N},N\right) \right] \left[ 1+\sum _{\ell =1}^{N-\beta _N-1} \prod _{h=1}^{\ell } \left( f\left( \frac{N-h}{N},N\right) \right) ^{-1}\right] . \end{aligned}$$(45)Denote the second factor on the right-hand side of Eq. (45) by \(\hat{S}_6\). From Eq. (44), we have the bounds

$$\begin{aligned} 1+\sum _{\ell =1}^{N-\beta _N-1} M_6^{-\ell }&\le \hat{S}_6\le 1+\sum _{\ell =1}^{N-\beta _N-1}m_6^{-\ell }\\ 1+\frac{M_6^{-N+\beta _N}-M_6^{-1}}{M_6^{-1}-1}&\le \hat{S}_6 \le 1+\frac{m_6^{-N+\beta _N}-m_6^{-1}}{m_6^{-1}-1}. \end{aligned}$$Now taking \(N\rightarrow \infty \),

$$\begin{aligned} \lim _{N\rightarrow \infty }\frac{M_6}{M_6-1}&\le \lim _{N\rightarrow \infty } \hat{S}_6 \le \lim _{N\rightarrow \infty }\frac{m_6}{m_6-1}\nonumber \\ \frac{\tilde{f}(1)}{\tilde{f}(1)-1}&\le \lim _{N\rightarrow \infty } \hat{S}_6 \le \frac{\tilde{f}(\beta )}{\tilde{f}(\beta )-1}. \end{aligned}$$(46)Since Eq. (46) is true for all \(\beta \) with \(\hat{x}<\beta <1\), then

$$\begin{aligned} \lim _{N\rightarrow \infty } \hat{S}_6 = \frac{\tilde{f}(1)}{\tilde{f}(1)-1}=\frac{c}{c-a}. \end{aligned}$$(47)We now analyze the first factor of Eq. (45) by first investigating the integral I. Apply the Extended Trapezoidal Rule (Abramowitz and Stegun 1964) to I:

$$\begin{aligned} I&= \int _0^1\ln \tilde{f}(x)dx \\ {}&= \frac{1}{N}\left[ \frac{\ln \tilde{f}(0)+\ln \tilde{f}(1)}{2}+\sum _{i=1}^{N-1}\ln \tilde{f}\left( \frac{i}{N}\right) \right] +\mathcal {O}\left( N^{-2}\right) . \end{aligned}$$Recalling that \(I=0\), \(\tilde{f}(0)=d/b\) and \(\tilde{f}(1)=c/a\), we obtain the asymptotic expansion:

$$\begin{aligned} \sum _{i=1}^{N-1}\ln \tilde{f}\left( \frac{i}{N}\right)&=\ln \sqrt{\frac{ab}{cd}}+\mathcal {O}(N^{-1}). \end{aligned}$$(48)Next we compare the sum in Eq. (48) with \(\sum _{i=1}^{N-1}\ln f\left( \frac{i}{N},N\right) \) by looking at their difference:

$$\begin{aligned} \sum _{i=1}^{N-1}&\ln f\left( \frac{i}{N},N\right) - \sum _{i=1}^{N-1} \ln \tilde{f}\left( \frac{i}{N}\right) \\&=\sum _{i=1}^{N-1} \ln \frac{f\left( \frac{i}{N},N\right) }{\tilde{f}\left( \frac{i}{N}\right) }\\&= \sum _{i=1}^{N-1} \ln \left[ 1+\frac{1}{N}\left( \frac{a}{b+\frac{i}{N}(a-b)-\frac{a}{N}}-\frac{d}{d+\frac{i}{N}(c-d)}\right) \right. \\&\left. -\frac{1}{N^2}\frac{ad}{\left( d+\frac{i}{N}(c-d)\right) \left( b+\frac{i}{N}(a-b)-\frac{a}{N}\right) }\right] . \end{aligned}$$As \(N \rightarrow \infty \), we have the asymptotic expression

$$\begin{aligned} \sum _{i=1}^{N-1}&\ln f\left( \frac{i}{N},N\right) - \sum _{i=1}^{N-1} \ln \tilde{f}\left( \frac{i}{N}\right) \nonumber \\&=\frac{1}{N}\sum _{i=1}^{N-1}\left( \frac{a}{b+\frac{i}{N}(a-b)}-\frac{d}{d+\frac{i}{N}(c-d)}\right) +\mathcal {O}(N^{-1}). \end{aligned}$$If we add and subtract \((a-b)/(bN)\) to the right-hand side, we obtain a left Riemann sum, which can be replaced as \(N \rightarrow \infty \) by an integral:

$$\begin{aligned} \sum _{i=1}^{N-1}&\ln f\left( \frac{i}{N},N\right) - \sum _{i=1}^{N-1} \ln \tilde{f}\left( \frac{i}{N}\right) \nonumber \\&= \frac{1}{N}\sum _{i=0}^{N-1} \left( \frac{a}{b+\frac{i}{N}(a-b)}-\frac{d}{d+\frac{i}{N}(c-d)}\right) -\frac{a-b}{bN} +\mathcal {O}(N^{-1}) \nonumber \\&=\int _0^1 \left( \frac{a}{b+x(a-b)}-\frac{d}{d+x(c-d)}\right) dx +\mathcal {O}(N^{-1})\nonumber \end{aligned}$$Evaluate the integral to obtain:

$$\begin{aligned} \sum _{i=1}^{N-1}&\ln f\left( \frac{i}{N},N\right) - \sum _{i=1}^{N-1} \ln \tilde{f}\left( \frac{i}{N}\right)&=\ln \left( \frac{a^{\frac{a}{a-b}}d^{\frac{d}{c-d}}}{b^{\frac{a}{a-b}}c^{\frac{d}{c-d}}}\right) +\mathcal {O}(N^{-1}). \end{aligned}$$(49)The logarithm can be simplified using the condition \(I=0\). Eq. (18) gives \(a^{\frac{a}{a-b}}d^{\frac{d}{c-d}}=b^{\frac{b}{a-b}}c^{\frac{c}{c-d}}\), therefore

$$\begin{aligned} \ln \left( \frac{a^{\frac{a}{a-b}}d^{\frac{d}{c-d}}}{b^{\frac{a}{a-b}}c^{\frac{d}{c-d}}}\right)&=\ln \left( \frac{b^{\frac{b}{a-b}}c^{\frac{c}{c-d}}}{b^{\frac{a}{a-b}}c^{\frac{d}{c-d}}}\right) =\ln \left( \frac{c}{b}\right) . \end{aligned}$$Eq. (49) then simplifies to

$$\begin{aligned} \sum _{i=1}^{N-1} \ln f\left( \frac{i}{N},N\right) - \sum _{i=1}^{N-1} \ln \tilde{f}\left( \frac{i}{N}\right) =\ln \left( \frac{c}{b}\right) +\mathcal {O}(N^{-1}). \end{aligned}\tag{50}$$
Combining Eqs. (48) and (50) yields

$$\begin{aligned} \sum _{i=1}^{N-1} \ln f\left( \frac{i}{N},N\right)&= \ln \sqrt{\frac{ab}{cd}}+\ln \left( \frac{c}{b}\right) +\mathcal {O}(N^{-1})\nonumber \\&=\ln \sqrt{\frac{ac}{bd}}+\mathcal {O}(N^{-1}). \end{aligned}$$Thus, \(\prod _{i=1}^{N-1}f\left( \frac{i}{N},N\right) =\sqrt{ac/(bd)}+\mathcal {O}(N^{-1})\) and

$$\begin{aligned} \lim _{N\rightarrow \infty }\prod _{i=1}^{N-1}f\left( \frac{i}{N},N\right) =\sqrt{\frac{ac}{bd}}. \end{aligned}\tag{51}$$
Combining Eqs. (47) and (51) with Eq. (45), we obtain

$$\begin{aligned} \lim _{N\rightarrow \infty } S_6 =\frac{c}{c-a}\sqrt{\frac{ac}{bd}}. \end{aligned}\tag{52}$$
Altogether, Eqs. (28), (43) and (52) give

$$\begin{aligned} \lim _{N\rightarrow \infty } S = \lim _{N\rightarrow \infty } (S_1 + S_5 + S_6) = \frac{d}{b-d} +\frac{c}{c-a}\sqrt{\frac{ac}{bd}}, \end{aligned}$$and from Eq. (19),

$$\begin{aligned} \lim _{N\rightarrow \infty } \rho _A = \frac{(b-d)(c-a)}{b(c-a)+c(b-d)\sqrt{\frac{ac}{bd}}}. \end{aligned}\tag{53}$$

-

(c)

Subcase \(a = c\). In this case, \(\tilde{f}\) is a strictly increasing function with minimum value \(\tilde{f}(0) = d/b <1\) and maximum value \(\tilde{f}(1) = c/a =1\). Thus, \(\ln \tilde{f}(x) < 0\) for all \(x\in [0,1)\), implying that \(I<0\). The same argument used in case 3(b)i applies here, and we obtain \(\lim _{N\rightarrow \infty }\rho _A = (b-d)/b\). \(\square \)
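As a numerical illustration of Eqs. (51) and (53), the following Python sketch evaluates the exact finite-N quantities for one game with \(I=0\). It is not the authors' code, and it rests on assumptions: it takes \(f(x,N)=\left( d+x(c-d)-\frac{d}{N}\right) /\left( b+x(a-b)-\frac{a}{N}\right) \), a form consistent with the expansion of \(\ln (f/\tilde{f})\) used above and with the expressions for m and M in the proof of Theorem 5, and it takes \(\rho _A=1/(1+S)\) with \(S=\sum _{k=1}^{N-1}\prod _{i=1}^{k}f\left( \frac{i}{N},N\right) \), the relation used with Eq. (19). The closed form of I in the helper `integral_I` is our own evaluation of \(\int _0^1\ln \tilde{f}(x)\,dx\) with \(\tilde{f}(x)=(d+x(c-d))/(b+x(a-b))\). The payoffs b = 3, c = 4, d = 1 are illustrative, and a is found by bisection so that \(I=0\); the root satisfies \(a<c\), so the game falls in the case \(b>d\), \(a<c\), \(I=0\).

```python
import math


def f(x, N, a, b, c, d):
    """Assumed ratio f(x, N) = (d + x(c-d) - d/N) / (b + x(a-b) - a/N)."""
    return (d + x * (c - d) - d / N) / (b + x * (a - b) - a / N)


def integral_I(a, b, c, d):
    """Closed form of I = int_0^1 ln f~(x) dx, valid for a != b and c != d."""
    return ((c * math.log(c) - d * math.log(d)) / (c - d)
            - (a * math.log(a) - b * math.log(b)) / (a - b))


def solve_a_for_zero_I(b, c, d, lo, hi, iters=200):
    """Bisection for I(a) = 0; I decreases in a, so I > 0 means the root lies to the right."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if integral_I(mid, b, c, d) > 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def fixation_and_product(N, a, b, c, d):
    """Return (rho_A, prod_{i=1}^{N-1} f(i/N, N)), accumulating partial products in log space."""
    log_prod, S = 0.0, 0.0
    for i in range(1, N):
        log_prod += math.log(f(i / N, N, a, b, c, d))
        S += math.exp(log_prod)      # k-th partial product; tiny values underflow harmlessly to 0.0
    return 1.0 / (1.0 + S), math.exp(log_prod)


b, c, d = 3.0, 4.0, 1.0
a = solve_a_for_zero_I(b, c, d, 0.5, 2.9)
N = 100_000
rho, full_product = fixation_and_product(N, a, b, c, d)

eq51 = math.sqrt(a * c / (b * d))
eq53 = (b - d) * (c - a) / (b * (c - a) + c * (b - d) * eq51)
print(f"a = {a:.6f}, I = {integral_I(a, b, c, d):.1e}")
print(f"product of f = {full_product:.5f}, Eq. (51) gives {eq51:.5f}")
print(f"rho_A = {rho:.5f}, Eq. (53) gives {eq53:.5f}")
```

For N = 100,000 both comparisons should agree up to an error of order \(N^{-1}\), in line with the \(\mathcal {O}(N^{-1})\) terms in the derivation.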

Theorem 4 gives the large-population limit of \(\rho _A\). We now introduce weak selection to obtain asymptotic expressions for \(\rho _A\) in the \(Nw\) limit.

Corollary 1

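In the \(Nw\) limit,

$$\begin{aligned} \rho _A \sim {\left\{ \begin{array}{ll} (b-d)w+o(w) & \text {if } b>d \text { and } a+b>c+d,\\ \frac{b-d}{2}w+o(w) & \text {if } b>d \text { and } a+b=c+d,\\ o(w) & \text {if } b\le d \text { or } a+b<c+d. \end{array}\right. } \end{aligned}\tag{54}$$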

Proof

We introduce weak selection according to Eq. (5). The integral I, given in closed form in Eq. (18), has the following expansion as \(w \rightarrow 0\):

$$\begin{aligned} I=\frac{w}{2}\left( c+d-a-b\right) +\mathcal {O}(w^2). \end{aligned}\tag{55}$$
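Eq. (55) follows from expanding the logarithm to first order in w. Assuming Eq. (5) replaces each payoff p by \(1+wp\) (the form implied by the expression \(w(b-d)/(1+wb)\) in Case 2 below), \(\ln \tilde{f}(x)=w\left[ d+x(c-d)-b-x(a-b)\right] +\mathcal {O}(w^2)\), and integrating over \([0,1]\) gives the coefficient \((c+d-a-b)/2\). The Python sketch below, with illustrative payoffs, evaluates I by the midpoint rule for decreasing w and compares \(I/w\) with that coefficient.

```python
import math


def integral_I_weak(w, a, b, c, d, n=20_000):
    """Midpoint-rule estimate of I = int_0^1 ln f~(x) dx with each payoff p replaced by 1 + w*p.

    Assumes f~(x) = (d_w + x(c_w - d_w)) / (b_w + x(a_w - b_w)).
    """
    aw, bw, cw, dw = (1 + w * p for p in (a, b, c, d))
    h = 1.0 / n
    total = 0.0
    for j in range(n):
        x = (j + 0.5) * h
        total += math.log((dw + x * (cw - dw)) / (bw + x * (aw - bw)))
    return total * h


a, b, c, d = 3.0, 2.0, 5.0, 1.0          # illustrative payoffs
print("first-order coefficient (c + d - a - b)/2 =", (c + d - a - b) / 2)
for w in (1e-2, 1e-3, 1e-4):
    print(f"w = {w:g}: I/w = {integral_I_weak(w, a, b, c, d) / w:.6f}")
```

The sign of I, which drives the case split that follows, is therefore governed to leading order by the sign of \(c+d-a-b\).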

We now separate into the cases of Theorem 4.

-

1.

Case \((b\le d) \text { or } \left( b>d, \, a<c \text { and } I>0\right) \). First note that, given the expansion of Eq. (55), the condition \((b>d) \,\wedge (a<c) \,\wedge \, (I>0)\) is equivalent to \((b>d) \wedge (a+b<c+d)\), since \(b>d\) and \(a+b<c+d\) already force \(a<c\). Since \(\lim _{N\rightarrow \infty }\rho _A=0\) in this case, \(\rho _A \sim o(w)\) by Definition 4.

-

2.

Case \(\left( b>d \text { and } a\ge c\right) \text { or }\left( b>d, \, a< c \text { and } I<0 \right) \). Using Eq. (55), under weak selection these two conditions combine into the single condition \((b>d) \wedge (a+b>c+d)\); note that \(b>d\) and \(a\ge c\) already force \(a+b>c+d\). Substituting the weak-selection payoffs into the large-population limit \((b-d)/b\) gives

$$\begin{aligned} \lim _{N\rightarrow \infty }\rho _A=\frac{w(b-d)}{1+wb}= w(b-d) + wR(w), \end{aligned}$$where \(\lim _{w\rightarrow 0} R(w) = 0\). By Definition 4, \(\rho _A \sim (b-d)w +o(w)\).

-

3.

Case \(b>d, a< c \text { and } I=0 \). Given Eq. (55), this case under weak selection is equivalent to \((b>d)\wedge (a+b=c+d)\). In particular, \(b-d=c-a\), so once the weak-selection payoffs are substituted into Eq. (53), a common factor of \(w(b-d)\) cancels from its numerator and denominator. This yields

$$\begin{aligned} \lim _{N\rightarrow \infty }\rho _A =\frac{b-d}{2}w+wR(w), \end{aligned}$$where \(\lim _{w\rightarrow 0} R(w) = 0\). By Definition 4, \(\rho _A \sim \frac{b-d}{2}w +o(w)\). \(\square \)
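The three cases of Corollary 1 can be collected into a small lookup. In the Python sketch below, `nw_slope` is a hypothetical helper returning the coefficient s in \(\rho _A\sim sw+o(w)\), with s = 0 standing for \(\rho _A\sim o(w)\); integer payoffs keep the borderline test \(a+b=c+d\) exact. The second helper evaluates the Case 2 limit \(w(b-d)/(1+wb)\) divided by w, assuming the weak-selection payoffs \(1+wb\) and \(1+wd\), and shows it tending to \(b-d\).

```python
def nw_slope(a, b, c, d):
    """Coefficient s in rho_A ~ s*w + o(w) in the Nw limit (Corollary 1).

    s = 0 encodes rho_A ~ o(w). Payoffs should be exact (ints or Fractions)
    so that the borderline comparison a + b == c + d is meaningful.
    """
    if b > d and a + b > c + d:
        return b - d                 # Case 2
    if b > d and a + b == c + d:
        return (b - d) / 2           # Case 3: half the Case 2 value
    return 0                         # Case 1: b <= d, or a + b < c + d


def case2_limit_over_w(w, b, d):
    """lim_{N->inf} rho_A / w = (b_w - d_w) / (w * b_w), with p_w = 1 + w*p."""
    bw, dw = 1 + w * b, 1 + w * d
    return (bw - dw) / (w * bw)


examples = {
    (3, 2, 1, 1): "b > d, a + b > c + d",
    (1, 3, 2, 2): "b > d, a + b = c + d",
    (1, 3, 4, 1): "b > d, a + b < c + d",
    (2, 1, 1, 3): "b < d",
}
for payoffs, label in examples.items():
    print(payoffs, label, "-> s =", nw_slope(*payoffs))

for w in (1e-1, 1e-2, 1e-3):
    print(f"w = {w:g}: rho_A/w -> {case2_limit_over_w(w, 2, 1):.4f} (b - d = 1)")
```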

5.2.1 Conditions for success

To determine conditions for success (\(\rho _A>1/N\) and \(\rho _A>\rho _B\)) in the \(Nw\) limit, we must first determine such conditions in the limit of large population size. To do so, we note that

Theorem 5

\(\rho _A > 1/N\) for sufficiently large N if and only if one of the following holds:

-

(i)

\(b>d\) and \(a\ge c\)

-

(ii)

\(b>d\), \(a<c\) and \(I \le 0\)

-

(iii)

\(b=d\) and \(a>c\)

-

(iv)

\(b=d\), \(a=c\) and \(b>c\)

Proof

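\(\rho _A > 1/N\) for sufficiently large N if \(\lim _{N\rightarrow \infty }N\rho _A > 1\). From Eq. (19), we have the relation

$$\begin{aligned} N\rho _A=\frac{N}{1+S}=\frac{1}{\frac{1}{N}+\frac{S}{N}}. \end{aligned}\tag{56}$$

In particular, \(\lim _{N\rightarrow \infty }N\rho _A=\infty \) if \(S/N\rightarrow 0\), and \(\lim _{N\rightarrow \infty }N\rho _A=0\) if \(S/N\rightarrow \infty \).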

Consider the following cases.

-

1.

Case (\(b >d\) and \(a\ge c\)) or (\(b>d\), \(a<c\) and \(I \le 0\)). From Eq. (12), \(\lim _{N\rightarrow \infty }\rho _A\) is positive and finite. Thus, \(\lim _{N\rightarrow \infty }N\rho _A =\infty \).

-

2.

Case \( b > d\), \(a<c\) and \(I>0\). Given \(S \ge S_4\) and \(\lim _{N\rightarrow \infty }m_4 =\tilde{f}(x^*)> 1\), where \(S_4\) and \(m_4\) are defined in Eqs. (38) and (39), respectively, we use the inequality of Eq. (40) to obtain

$$\begin{aligned} \lim _{N\rightarrow \infty }\frac{S}{N}&\ge \lim _{N\rightarrow \infty } \frac{m_4^{\lfloor Nx^* \rfloor }-m_4^{N}}{N(1-m_4)}=\infty . \end{aligned}$$Therefore from Eq. (56), \(\lim _{N\rightarrow \infty }N\rho _A =0\).

-

3.

Case \(b<d\). Given Eq. (27),

$$\begin{aligned} S&\ge S_1\ge \frac{m_1^{\lfloor \ln N\rfloor +1}-m_1}{m_1-1}. \end{aligned}$$Since \(\lim _{N\rightarrow \infty } m_1 = \frac{d}{b} >1\),

$$\begin{aligned} \lim _{N\rightarrow \infty }\frac{S}{N}&\ge \lim _{N\rightarrow \infty }\frac{m_1^{\lfloor \ln N\rfloor +1}-m_1}{N(m_1-1)}=\infty . \end{aligned}$$Therefore, \(\lim _{N\rightarrow \infty }N\rho _A =0\).

-

4.

Case \(b=d\)

-

(a)

Subcase \(a = b=c=d\). Here \(f\left( \frac{i}{N},N\right) =1\) for all i, so \(\rho _A = \frac{1}{N}\). Therefore, \(\lim _{N\rightarrow \infty }N\rho _A = 1\).

-

(b)

Subcase \(a = c\) and \(a\ne b \). Here

$$\begin{aligned} \frac{\partial f}{\partial x}&=-\frac{(a-b)^2}{N\left( a\left( \frac{1}{N}-x\right) +b(x-1)\right) ^2}. \end{aligned}$$Therefore, f is a decreasing function. Let

$$\begin{aligned} \begin{aligned} m&= \min _{1\le i \le N-1}\left\{ f\left( \frac{i}{N},N\right) \right\} = f\left( \frac{N-1}{N},N\right) =\frac{a-\frac{a}{N}}{a+\frac{b-2a}{N}},\\ M&= \max _{1\le i \le N-1}\left\{ f\left( \frac{i}{N},N\right) \right\} = f\left( \frac{1}{N},N\right) = \frac{b+\frac{a-2b}{N}}{b-\frac{b}{N}}. \end{aligned} \end{aligned}$$Then

$$\begin{aligned} \sum _{k=1}^{N-1}m^k&\le S\le \sum _{k=1}^{N-1}M^k, \\ \frac{m^N-m}{N(m-1)}&\le \frac{S}{N}\le \frac{M^N-M}{N(M-1)}. \end{aligned}\tag{57}$$
Note that \(\lim _{N\rightarrow \infty } m =\lim _{N\rightarrow \infty } M = 1\). Determining the limit of \(S/N\) as \(N\rightarrow \infty \) therefore requires the derivatives

$$\begin{aligned} \frac{dm}{dN}&= \frac{a(b-a)}{(Na+b-2a)^2}, \end{aligned}\tag{58}$$
$$\begin{aligned} \frac{dM}{dN}&= \frac{b-a}{b(N-1)^2}. \end{aligned}\tag{59}$$
Applying L’Hôpital’s Rule (Abramowitz and Stegun 1964) and using Eqs. (58) and (59), we obtain the following limits:

$$\begin{aligned} \lim _{N\rightarrow \infty } N(m-1)&= \lim _{N\rightarrow \infty } \frac{\frac{dm}{dN}}{-N^{-2}}= \frac{a-b}{a},\\ \lim _{N\rightarrow \infty } N(M-1)&=\lim _{N\rightarrow \infty } \frac{\frac{dM}{dN}}{-N^{-2}}=\frac{a-b}{b},\\ \lim _{N\rightarrow \infty } N\ln m&= \lim _{N\rightarrow \infty } \frac{\frac{1}{m}\frac{dm}{dN}}{-N^{-2}}= \frac{a-b}{a}\\ \lim _{N\rightarrow \infty } N\ln M&= \lim _{N\rightarrow \infty } \frac{\frac{1}{M}\frac{dM}{dN}}{-N^{-2}}= \frac{a-b}{b} \end{aligned}$$Therefore,

$$\begin{aligned} \begin{aligned} \lim _{N\rightarrow \infty } m^N&= \lim _{N\rightarrow \infty } e^{N\ln m }=\exp \left( \frac{a-b}{a}\right) ,\\ \lim _{N\rightarrow \infty } M^N&= \lim _{N\rightarrow \infty } e^{N\ln M }=\exp \left( \frac{a-b}{b}\right) . \end{aligned} \end{aligned}$$Take the limit of Eq. (57) to obtain

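$$\begin{aligned} \frac{\exp \left( \frac{a-b}{a}\right) -1}{\frac{a-b}{a}}&\le \lim _{N\rightarrow \infty }\frac{S}{N}\le \frac{\exp \left( \frac{a-b}{b}\right) -1}{\frac{a-b}{b}}. \end{aligned}$$
Since \((e^t-1)/t\) is greater than 1 for \(t>0\) and less than 1 for \(t<0\), both bounds exceed 1 if \(a>b\) (equivalently \(b<c\)), so that \(\lim _{N\rightarrow \infty }S/N>1\), while both bounds are less than 1 if \(a<b\) (equivalently \(b>c\)), so that \(\lim _{N\rightarrow \infty }S/N<1\). Thus, by Eq. (56), \(\lim _{N\rightarrow \infty }N\rho _A>1\) if \(b=d\), \(a=c\) and \(b>c\), whereas \(\lim _{N\rightarrow \infty }N\rho _A<1\) if \(b=d\), \(a=c\) and \(b<c\).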

-

(c)

Subcase \(a < c\). Set

$$\begin{aligned} m_7&= \min _{N-\lfloor \ln N\rfloor \le i\le N-1}\left\{ f\left( \frac{i}{N},N\right) \right\} . \end{aligned}$$Note that \(\lim _{N\rightarrow \infty }m_7 = \tilde{f}(1)= c/a>1\) given that f converges uniformly to \(\tilde{f}\). Then

$$\begin{aligned} \lim _{N\rightarrow \infty } \frac{S}{N}&\ge \lim _{N\rightarrow \infty } \frac{1}{N}\sum _{k=N-\lfloor \ln N \rfloor }^{N-1}m_7^k\\&=\lim _{N\rightarrow \infty } \frac{m_7^N-m_7^{N-\lfloor \ln N \rfloor }}{N(m_7-1)}=\infty . \end{aligned}$$
By Eq. (56), \(\lim _{N\rightarrow \infty }N\rho _A =0\).

-

(d)

Subcase \(a > c\). Here

$$\begin{aligned} \frac{\partial f}{\partial x}&=\frac{b(c-a)+\frac{2ab-b^2-ac}{N}}{\left( b+x(a-b)-\frac{a}{N}\right) ^2}. \end{aligned}$$
Therefore, for \(N > \frac{2ab-b^2-ac}{b(a-c)}\), \(f\) is strictly decreasing. Break up the sum S as \(S=S_8+S_9\), where

$$\begin{aligned} S_8&= \sum _{k=1}^{\lfloor \sqrt{N}\rfloor -1} \prod _{i=1}^k f\left( \frac{i}{N},N\right) \\ S_9&=\sum _{k=\lfloor \sqrt{N}\rfloor }^{N-1} \prod _{i=1}^k f\left( \frac{i}{N},N\right) . \end{aligned}$$Given \(N > \frac{2ab-b^2-ac}{b(a-c)}\), define

$$\begin{aligned} M_8&= \max _{1\le i \le \lfloor \sqrt{N}\rfloor -1} f\left( \frac{i}{N},N\right) = f\left( \frac{1}{N},N\right) = \frac{b+\frac{c-2b}{N}}{b-\frac{b}{N}},\\ M_9&=\max _{\lfloor \sqrt{N}\rfloor \le i \le N-1} f\left( \frac{i}{N},N\right) = f\left( \frac{\lfloor \sqrt{N}\rfloor }{N},N\right) = \frac{b+\frac{\lfloor \sqrt{N}\rfloor }{N}(c-b)-\frac{b}{N}}{b+\frac{\lfloor \sqrt{N}\rfloor }{N}(a-b)-\frac{a}{N}}. \end{aligned}$$
If \(c = b\) then \(M_8 = 1\) and we have the bound

$$\begin{aligned} S_8 \le \sum _{k=1}^{\lfloor \sqrt{N}\rfloor -1} M_8^k = \lfloor \sqrt{N}\rfloor -1. \end{aligned}$$
Dividing by N and letting \(N\rightarrow \infty \), we obtain

$$\begin{aligned} \lim _{N\rightarrow \infty }\frac{S_8}{N} \le \lim _{N\rightarrow \infty }\frac{\lfloor \sqrt{N}\rfloor -1}{N} = 0. \end{aligned}$$
If \(c \ne b\), we have the bound

$$\begin{aligned} S_8 \le \sum _{k=1}^{\lfloor \sqrt{N}\rfloor -1} M_8^k = \frac{M_8^{\lfloor \sqrt{N}\rfloor }-M_8}{M_8-1}. \end{aligned}\tag{60}$$
Note that \(\lim _{N\rightarrow \infty } M_8 = 1\). We use L’Hôpital’s Rule (Abramowitz and Stegun 1964) to determine the limit of \(S_8/N\) as \(N\rightarrow \infty \), which requires the derivative \(\frac{dM_8}{dN} = \frac{b-c}{b(N-1)^2}\). It follows that

$$\begin{aligned} \lim _{N\rightarrow \infty } N(M_8-1)&=\lim _{N\rightarrow \infty } \frac{\frac{dM_8}{dN}}{-N^{-2}}= \frac{c-b}{b},\\ \lim _{N\rightarrow \infty } \lfloor \sqrt{N}\rfloor \ln M_8&=\lim _{N\rightarrow \infty } \frac{\frac{1}{M_8}\frac{dM_8}{dN}}{-\frac{1}{2}N^{-3/2}} = 0. \end{aligned}$$
Therefore, \(\lim _{N\rightarrow \infty }M_8^{\lfloor \sqrt{N}\rfloor }=1\), and consequently from Eq. (60),

$$\begin{aligned} \lim _{N\rightarrow \infty }\frac{S_8}{N} \le \lim _{N\rightarrow \infty } \frac{M_8^{\lfloor \sqrt{N}\rfloor }-M_8}{N(M_8-1)}=0. \end{aligned}\tag{61}$$
In both cases \(c=b\) and \(c\ne b\), \(\lim _{N\rightarrow \infty }M_8^{\lfloor \sqrt{N}\rfloor -1}=1\). Since f is decreasing, each factor of \(\prod _{i=1}^k f\left( \frac{i}{N},N\right) \) with \(i<\lfloor \sqrt{N}\rfloor \) is at most \(M_8\), and each remaining factor is at most \(M_9\). Moreover, since \(a>c\), \(M_9<1\) for sufficiently large N. We therefore have an upper bound for \(S_9\):

$$\begin{aligned} S_9&\le M_8^{\lfloor \sqrt{N}\rfloor -1}\sum _{k=\lfloor \sqrt{N}\rfloor }^{N-1} M_9^{k-\lfloor \sqrt{N}\rfloor +1} \le \frac{M_8^{\lfloor \sqrt{N}\rfloor -1}}{1-M_9}=M_8^{\lfloor \sqrt{N}\rfloor -1}\,\frac{b+\frac{\lfloor \sqrt{N}\rfloor }{N}(a-b)-\frac{a}{N}}{\frac{\lfloor \sqrt{N}\rfloor }{N}(a-c)+\frac{b-a}{N}}. \end{aligned}$$
Dividing by N and letting \(N\rightarrow \infty \), we obtain

$$\begin{aligned} \lim _{N\rightarrow \infty }\frac{S_9}{N}&\le \lim _{N\rightarrow \infty } M_8^{\lfloor \sqrt{N}\rfloor -1}\,\frac{b+\frac{\lfloor \sqrt{N}\rfloor }{N}(a-b)-\frac{a}{N}}{\lfloor \sqrt{N}\rfloor (a-c)+b-a}=0. \end{aligned}\tag{62}$$
Equations (61) and (62) imply \(\lim _{N\rightarrow \infty } S/N = 0\), and consequently \(\lim _{N\rightarrow \infty }N\rho _A =\infty \) by Eq. (56). \(\square \)
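Subcases (b) and (d) of Case 4 can be illustrated numerically. The Python sketch below is not the authors' code; it assumes \(f(x,N)=\left( d+x(c-d)-\frac{d}{N}\right) /\left( b+x(a-b)-\frac{a}{N}\right) \), the form that reproduces the expressions for m and M above, with \(S=\sum _{k=1}^{N-1}\prod _{i=1}^{k}f\left( \frac{i}{N},N\right) \) and \(N\rho _A=N/(1+S)\), which follows from Eq. (19). For subcase (b) it checks that S/N lies between the finite-N bounds of Eq. (57) and on the side of 1 predicted by the sign of \(a-b\); for subcase (d) it shows \(N\rho _A\) increasing with N, consistent with \(S/N\rightarrow 0\). All payoff values are illustrative.

```python
import math


def f(x, N, a, b, c, d):
    """Assumed ratio f(x, N) = (d + x(c-d) - d/N) / (b + x(a-b) - a/N)."""
    return (d + x * (c - d) - d / N) / (b + x * (a - b) - a / N)


def S_value(N, a, b, c, d):
    """S = sum_{k=1}^{N-1} prod_{i=1}^{k} f(i/N, N), partial products kept in log space."""
    log_prod, S = 0.0, 0.0
    for i in range(1, N):
        log_prod += math.log(f(i / N, N, a, b, c, d))
        S += math.exp(log_prod)
    return S


def eq57_bounds(N, a, b):
    """Bounds of Eq. (57) on S/N for b = d, a = c, with m = f((N-1)/N, N) and M = f(1/N, N)."""
    m = (a - a / N) / (a + (b - 2 * a) / N)
    M = (b + (a - 2 * b) / N) / (b - b / N)
    return (m**N - m) / (N * (m - 1)), (M**N - M) / (N * (M - 1))


# Subcase (b): b = d, a = c, a != b.
N = 20_000
for a, b in ((2.0, 1.0), (1.0, 2.0)):
    lo, hi = eq57_bounds(N, a, b)
    ratio = S_value(N, a, b, a, b) / N
    print(f"a = c = {a}, b = d = {b}: {lo:.4f} <= S/N = {ratio:.4f} <= {hi:.4f}")

# Subcase (d): b = d, a > c; N * rho_A = N / (1 + S) should keep growing.
for a, b, c in ((2.0, 1.0, 1.0), (3.0, 2.0, 1.0)):
    growth = [n / (1.0 + S_value(n, a, b, c, b)) for n in (100, 1_000, 10_000)]
    print(f"a = {a}, b = d = {b}, c = {c}: N*rho_A =", [round(g, 2) for g in growth])
```

The second game has \(c\ne b\), so it exercises the branch of the argument that relies on Eq. (60).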

We now apply weak selection to find conditions for which \(\rho _A>1/N\) in the \(Nw\) limit.

Corollary 2

Given the game matrix (1), \(\rho _A > 1/N\) in the \(Nw\) limit if and only if one of the following holds:

-

(i)

\(b>d\) and \(a+b \ge c+d\)

-

(ii)

\(b=d\) and \(a>c\)

-

(iii)

\(b=d, a=c\) and \(b>c\)

Proof

In Theorem 5, we found conditions for which \(\rho _A>1/N\) for sufficiently large populations. We introduce weak selection according to Eq. (5). Given the weak selection expansion of I in Eq. (55), Condition (ii) of Theorem 5 becomes \((b>d)\wedge (a<c)\wedge (a+b\ge c+d)\). Note that Condition (i) of Theorem 5 is equivalent to \((b>d)\wedge (a\ge c)\wedge (a+b\ge c+d)\). Therefore, Conditions (i) and (ii) of Theorem 5 together give the one condition \((b>d)\wedge (a+b\ge c+d)\). Conditions (iii) and (iv) of Theorem 5 remain the same under weak selection. \(\square \)
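The combination step in this proof can be checked exhaustively on a small grid of integer payoffs. The Python sketch below replaces the condition \(I\le 0\) by \(a+b\ge c+d\), which is only the leading-order reading of Eq. (55), and confirms that Conditions (i) and (ii) of Theorem 5 then coincide with \((b>d)\wedge (a+b\ge c+d)\). The function `corollary2` is a hypothetical helper that encodes the three conditions of Corollary 2.

```python
from itertools import product


def thm5_i_or_ii_weak(a, b, c, d):
    """Conditions (i) or (ii) of Theorem 5, with I <= 0 read as a + b >= c + d (leading order of Eq. (55))."""
    return (b > d and a >= c) or (b > d and a < c and a + b >= c + d)


def corollary2(a, b, c, d):
    """rho_A > 1/N in the Nw limit, following Corollary 2."""
    return ((b > d and a + b >= c + d)
            or (b == d and a > c)
            or (b == d and a == c and b > c))


# Exhaustive check of the combination step; each g is a payoff tuple (a, b, c, d).
mismatches = [g for g in product(range(5), repeat=4)
              if thm5_i_or_ii_weak(*g) != (g[1] > g[3] and g[0] + g[1] >= g[2] + g[3])]
print("mismatches:", mismatches)
for game in ((3, 2, 2, 1), (5, 1, 1, 2), (2, 1, 1, 1), (1, 2, 1, 2), (2, 1, 2, 1)):
    print(game, corollary2(*game))
```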

Finally, we determine conditions under which \(\rho _A > \rho _B\) in the \(Nw\) limit, again by first investigating the large-N limit.

Theorem 6

Given the game matrix (1), \(\rho _A > \rho _B\) for sufficiently large N if and only if one of the following conditions holds:

-

(i)

\(I<0\)

-

(ii)

\(I=0\) and \(ac<bd\)

Proof

Given that \(\rho _A > \rho _B\) for sufficiently large N if and only if \(\lim _{N\rightarrow \infty }\rho _B/\rho _A < 1\), we will find this limit and compare it to 1 for various cases.

We will first look at the product of \(\tilde{f}\)-terms and then compare it to the product of f-terms. Since \(\tilde{f}\) is monotonic, the left and right Riemann sums, \(\frac{1}{N}\left( \tilde{A}_{N-1} + \ln \tilde{f}(0)\right) \) and \(\frac{1}{N}\left( \tilde{A}_{N-1} + \ln \tilde{f}(1)\right) \), respectively, serve as bounds for the definite integral I (where \(\tilde{A}_{N-1}\) is defined in Eq. (30)). This implies

where the minimum, \(\tilde{m}\), and maximum, \(\tilde{M}\), of \(\tilde{f}\) are defined in Eq. (17). Keeping in mind that \(\prod _{i=1}^{N-1}\tilde{f}\left( \frac{i}{N}\right) = \exp \left( \tilde{A}_{N-1}\right) \), exponentiate Eq. (64) to obtain

Combining this with the inequality of Eq. (21), which compares f to \(\tilde{f}\), and using Eq. (63), we obtain

Thus, if \(I>0\) then \(\lim _{N\rightarrow \infty }\rho _B/\rho _A = \infty \). If \(I<0\) then \(\lim _{N\rightarrow \infty }\rho _B/\rho _A = 0\). The only case left to consider is \(I=0\). In this case, Eq. (51) implies that \(\lim _{N\rightarrow \infty }\rho _B/\rho _A=\sqrt{ac/(bd)}\). If \(ac>bd\) then the limit is greater than 1, if \(ac<bd\) then the limit is less than 1, and if \(ac=bd\) then the limit equals 1. \(\square \)
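The dichotomy on the sign of I reflects the fact that the product of f-terms grows or decays roughly like \(e^{NI}\). The Python sketch below illustrates this, assuming, as the use of Eq. (51) in this proof indicates, that \(\rho _B/\rho _A=\prod _{i=1}^{N-1}f\left( \frac{i}{N},N\right) \), and taking \(f(x,N)=\left( d+x(c-d)-\frac{d}{N}\right) /\left( b+x(a-b)-\frac{a}{N}\right) \), a form consistent with the expansion of \(\ln (f/\tilde{f})\) in the proof of Theorem 4. It compares \(\frac{1}{N}\ln \prod f\) with a closed form of I (our own evaluation of the integral) for two illustrative games, one with \(I>0\) and one with \(I<0\).

```python
import math


def f(x, N, a, b, c, d):
    """Assumed ratio f(x, N) = (d + x(c-d) - d/N) / (b + x(a-b) - a/N)."""
    return (d + x * (c - d) - d / N) / (b + x * (a - b) - a / N)


def integral_I(a, b, c, d):
    """Closed form of I = int_0^1 ln f~(x) dx, valid for a != b and c != d."""
    return ((c * math.log(c) - d * math.log(d)) / (c - d)
            - (a * math.log(a) - b * math.log(b)) / (a - b))


def growth_rate(N, a, b, c, d):
    """(1/N) ln prod_{i=1}^{N-1} f(i/N, N), computed as a compensated sum of logs."""
    return math.fsum(math.log(f(i / N, N, a, b, c, d)) for i in range(1, N)) / N


b, c, d = 3.0, 4.0, 1.0
for a in (1.0, 2.5):                      # I > 0 for a = 1, I < 0 for a = 2.5
    print(f"a = {a}: I = {integral_I(a, b, c, d):+.5f}, "
          f"(1/N) ln prod f at N = 10^5: {growth_rate(100_000, a, b, c, d):+.5f}")
```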

Corollary 3

Given the game matrix (1), \(\rho _A > \rho _B\) in the \(Nw\) limit if and only if one of the following holds:

-

(i)

\(a+b>c+d\)

-

(ii)

\(a+b=c+d\) and \(b>c\)

Proof

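We introduce weak selection according to Eq. (5). Given the weak selection expansion of the integral I in Eq. (55), \(I<0\) implies \(a+b>c+d\) and \(I=0\) implies \(a+b=c+d\). Furthermore, under weak selection the inequality \(ac<bd\) is

$$\begin{aligned} (1+wa)(1+wc)<(1+wb)(1+wd), \end{aligned}$$

which reduces as \(w\rightarrow 0\) to

$$\begin{aligned} a+c<b+d. \end{aligned}$$

When \(a+b=c+d\), subtracting this equality from \(a+c<b+d\) gives \(c-b<b-c\), that is, \(b>c\). Thus, Condition (i) of Theorem 6 becomes \(a+b>c+d\) and Condition (ii) becomes \(a+b=c+d\) and \(b>c\) (equivalently \(a+b=c+d\) and \(a<d\)) in the \(Nw\) limit. \(\square \)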
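The reduction in this proof can be verified by brute force. The Python sketch below checks, over a small grid of integer payoffs with \(a+b=c+d\), that the reduced inequality \(a+c<b+d\), the condition \(b>c\), and the condition \(a<d\) always agree, and it encodes Corollary 3 in a hypothetical helper `corollary3`.

```python
from itertools import product


def corollary3(a, b, c, d):
    """rho_A > rho_B in the Nw limit, following Corollary 3."""
    return a + b > c + d or (a + b == c + d and b > c)


# On a + b = c + d, the reduced form of ac < bd (a + c < b + d), b > c and a < d coincide.
for a, b, c, d in product(range(6), repeat=4):
    if a + b == c + d:
        assert (a + c < b + d) == (b > c) == (a < d), (a, b, c, d)

for game in ((3, 1, 1, 2), (2, 2, 1, 3), (2, 1, 2, 1), (1, 1, 2, 2)):
    print(game, corollary3(*game))
```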

6 Discussion

In the analysis of evolutionary models, the limits of large population size and weak selection are biologically relevant and mathematically convenient. We have analyzed the effect of combining these limits, in different orders, on the fixation of strategies in the Moran process with frequency dependence. Our results (summarized in Table 1) show that the \(Nw\) and \(wN\) limits yield different asymptotic expressions for fixation probability, as well as different conditions for a strategy to have larger fixation probability than a neutral mutation. Interestingly, however, the conditions are the same for \(\rho _A > \rho _B\).

To understand the relationship between the \(Nw\) and \(wN\) results, it is helpful to rewrite them in terms of two payoff differences:

$$\begin{aligned} \alpha =a+b-c-d,\qquad \beta =b-d. \end{aligned}$$

In words, \(\alpha \) is twice the payoff difference between A and B when both types are equally abundant, while \(\beta \) is the payoff difference when A is rare, with both differences taken in the large-population limit. These quantities relate to familiar concepts in evolutionary game theory: if \(\beta <0\) then B is an evolutionarily stable strategy (ESS; Maynard Smith and Price 1973), whereas the sign of \(\alpha \) determines which of the two types is risk dominant (Harsanyi and Selten 1988; Nowak et al. 2004).

Excluding borderline cases, an A-mutant is favored in the sense \(\rho _A>1/N\) in the \(Nw\) limit if and only if both \(\alpha \) and \(\beta \) are positive. This means that A must have a payoff advantage both when rare and when 50% abundant. A deficiency in either of these two situations prevents A from being favored in this sense when the large-population limit is taken first. In contrast, for the \(wN\) limit, it is only necessary that the sum \(\alpha + \beta =a+2b-c-2d\) be positive, effectively averaging over the two situations. Thus, when the weak-selection limit is taken first, a selective disadvantage in one situation is not prohibitive, and can be compensated for by an advantage in the other.

The condition \(a+2b>c+2d\) for \(\rho _A > 1/N\) in the \(wN\) limit is an instance of the one-third law of evolutionary game theory (Nowak et al. 2004; Ohtsuki et al. 2007; Bomze and Pawlowitsch 2008; Lessard and Ladret 2007; Lessard 2011; Zheng et al. 2011). This rule can be understood as stating that type A is favored to invade (in the sense \(\rho _A > 1/N\) in the \(wN\) limit, excluding borderline cases) if and only if A has a payoff advantage when comprising one-third of the population. Previous works (Traulsen et al. 2006b; Wu et al. 2010) have shown that the one-third law breaks down away from the regime \(Nw \ll 1\); correspondingly, we do not find any one-third law in the \(Nw\) limit. In light of our results, the one-third condition \(a+2b>c+2d\) can be interpreted as a superposition of the separate conditions \(\alpha >0\) and \(\beta >0\), which are jointly necessary in the \(Nw\) limit but which only need be satisfied in sum (\(\alpha +\beta >0\)) in the \(wN\) limit.
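The contrast between the two orderings can be made concrete with the payoff differences \(\alpha \) and \(\beta \). The Python sketch below assumes \(\alpha =a+b-c-d\) and \(\beta =b-d\), the choice consistent with the verbal descriptions above and with \(\alpha +\beta =a+2b-c-2d\). It uses exact fractions to confirm that \(\alpha \) is twice the payoff advantage of A at frequency 1/2 and that \(\alpha +\beta \) is three times the advantage at frequency 1/3, and it exhibits a game (illustrative values) that is favored in the \(wN\) limit but not, generically, in the \(Nw\) limit.

```python
from fractions import Fraction


def payoff_advantage(x, a, b, c, d):
    """Payoff of A minus payoff of B when A has frequency x (large, well-mixed population)."""
    return (a * x + b * (1 - x)) - (c * x + d * (1 - x))


def alpha_beta(a, b, c, d):
    """Assumed definitions: alpha = a + b - c - d, beta = b - d."""
    return a + b - c - d, b - d


def favored_Nw(a, b, c, d):
    alpha, beta = alpha_beta(a, b, c, d)
    return alpha > 0 and beta > 0          # generic case only; borderline cases excluded


def favored_wN(a, b, c, d):
    alpha, beta = alpha_beta(a, b, c, d)
    return alpha + beta > 0                # a + 2b > c + 2d, the one-third condition


a, b, c, d = 5, 1, 1, 2                    # disadvantaged when rare, but risk dominant
alpha, beta = alpha_beta(a, b, c, d)
print("alpha =", alpha, "beta =", beta)
print("2 x advantage at x = 1/2:", 2 * payoff_advantage(Fraction(1, 2), a, b, c, d))
print("3 x advantage at x = 1/3:", 3 * payoff_advantage(Fraction(1, 3), a, b, c, d))
print("favored (Nw):", favored_Nw(a, b, c, d), " favored (wN):", favored_wN(a, b, c, d))
```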

Our other results can also be expressed in terms of the payoff differences \(\alpha \) and \(\beta \). The asymptotic expressions for fixation probability, Eqs. (9) and (54), can be written as

Above, \(\theta (x)\) is the Heaviside step function:

The success condition \(\rho _A>\rho _B\) reduces to \(\alpha >0\) in both limit orderings, and is therefore equivalent (up to borderline cases) to the statement that type A is risk-dominant (Harsanyi and Selten 1988; Nowak et al. 2004).

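Our analysis of the \(Nw\) limit required us to first examine the large-population limit of \(\rho _A\). Our results in Theorem 4 confirm the earlier results of Antal and Scheuring (2006), except in the borderline case \(b>d, a<c\) and \(I=0\), for which Eq. (53) gives

$$\begin{aligned} \lim _{N\rightarrow \infty } \rho _A = \frac{(b-d)(c-a)}{b(c-a)+c(b-d)\sqrt{\frac{ac}{bd}}}. \end{aligned}$$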

Antal and Scheuring obtained \(\lim _{N\rightarrow \infty }\rho _A=(b-d)/(2b)\). These results differ, for example, for the payoff matrix

which satisfies \(b>d\), \(a<c\), and \(I=0\). The difference arises from Antal and Scheuring’s replacement of the sum \(\tilde{A}_k\), defined in our Eq. (30), by its integral approximation.

Here we have focused on the Moran model of a well-mixed population with overlapping generations. The \(Nw\) and \(wN\) limits can also be applied to other models, where they may lead to novel questions or shed new light on existing results. Instead of Moran updating, one can consider Wright–Fisher updating (Fisher 1930; Wright 1931; Imhof and Nowak 2006), in which generations are non-overlapping. In the case of a constant selection coefficient \(s>0\), Haldane (1927) obtained the well-known approximation \(\rho \approx 2s\). We expect that this approximation will be asymptotically exact in the \(Nw\) limit. For the \(wN\) limit, results of Imhof and Nowak (2006) imply

for constant selection, and more generally,

for an arbitrary \(2 \times 2\) matrix game (1). The pairwise-comparison process is another model of evolutionary game dynamics for which some limit results have been derived (Traulsen et al. 2005, 2006a, 2007; Wu et al. 2010, 2013, 2015). The Moran, Wright–Fisher, and pairwise comparison models all fall into a class of exchangeable selection models considered by Lessard and Ladret (2007), who derived general results that we can now recognize as pertaining to the \(wN\) limit. Finally, one can consider structured populations in which individuals occupy vertices of a graph (Ohtsuki et al. 2006; Szabó and Fáth 2007; Allen and Nowak 2014). For the case of the cycle (Ohtsuki and Nowak 2006), the \(wN\) and \(Nw\) limits were studied by Jeong et al. (2014), although without formal definitions and without considering borderline cases. For regular graphs of degree greater than two, there are results that appear to pertain to the \(wN\) limit (Ohtsuki et al. 2006; Chen 2013; Allen and Nowak 2014), but the \(Nw\) limit remains open.

Our results were obtained via exact computation of fixation probabilities according to Eq. (3). Alternatively, one can use the diffusion approximation (Kimura 1964; Helbing 1996; Traulsen et al. 2006a, b; Bladon et al. 2010), in which a finite-population process is approximated by a stochastic differential equation of Langevin form,

where x represents the frequency of type A, \(\xi \) is uncorrelated Gaussian white noise with variance 1, and both a(x) and b(x) vanish at the endpoints \(x=0,1\). The first term of Eq. (66) represents directional selection, while the second represents random genetic drift. The Moran, Wright–Fisher, and pairwise comparison models can all be approximated this way. In the diffusion context, the product Nw appears to determine how the dynamics behave under the large-population and weak-selection limits. If \(Nw \rightarrow \infty \) as \(N \rightarrow \infty \) and \(w \rightarrow 0\), the second term in Eq. (66) vanishes and the dynamics become deterministic (Traulsen et al. 2005). If instead \(Nw \rightarrow 0\), stochasticity is preserved (Traulsen et al. 2006b). An important question, still under active investigation (Lessard and Ladret 2007; Saakian and Hu 2016), is to determine conditions under which the diffusion approximation is asymptotically exact.

The \(Nw\) and \(wN\) limits represent two extremes out of the infinitely many ways to combine the large-population and weak-selection limits. In the most general case, one considers an arbitrary sequence of pairs \(\{(w_j, N_j)\}_{j=1}^\infty \) such that \(w_j \rightarrow 0\) and \(N_j \rightarrow \infty \) as \(j \rightarrow \infty \). It may be supposed that results for other limiting schemes will lie between the \(Nw\) and \(wN\) extremes in some sense. Based on results from the diffusion approximation (Traulsen et al. 2006b) and other approaches (Lessard and Ladret 2007), it appears plausible that the \(Nw\) results extend to all limits with \(Nw \rightarrow \infty \) and the \(wN\) results extend to all limits with \(Nw \rightarrow 0\), but we have not shown this formally.

Finally, we caution that results obtained in the weak selection limit—either alone or combined with other limits—do not always extend to stronger selection (Wu et al. 2010, 2013). Indeed, when there are more than two strategies, it is possible to find one ranking of strategies for both the weak selection and strong selection limits, but a different ranking for intermediate selection strengths (Wu et al. 2013). Such results underscore the need to assess selection strength when applying evolutionary game-theoretic results to biological populations.

References

Abramowitz M, Stegun IA (1964) Handbook of mathematical functions: with formulas, graphs, and mathematical tables, vol 55. Courier Corporation, New York

Allen B, Nowak MA (2014) Games on graphs. EMS Surv Math Sci 1(1):113–151

Antal T, Scheuring I (2006) Fixation of strategies for an evolutionary game in finite populations. Bull Math Biol 68(8):1923–1944

Bladon AJ, Galla T, McKane AJ (2010) Evolutionary dynamics, intrinsic noise, and cycles of cooperation. Phys Rev E 81(6):066122

Blume LE (1993) The statistical mechanics of strategic interaction. Games Econ Behav 5(3):387–424

Bomze I, Pawlowitsch C (2008) One-third rules with equality: second-order evolutionary stability conditions in finite populations. J Theor Biol 254(3):616–620

Broom M, Rychtár J (2013) Game-theoretical models in biology. Chapman & Hall/CRC, Boca Raton

Chen YT (2013) Sharp benefit-to-cost rules for the evolution of cooperation on regular graphs. Ann Appl Probab 23(2):637–664

Fisher RA (1930) The genetical theory of natural selection. Oxford University Press, Oxford

Haldane JBS (1927) A mathematical theory of natural and artificial selection, part V: selection and mutation. Math Proc Camb Philos Soc 23:838–844

Harsanyi JC, Selten R (1988) A general theory of equilibrium selection in games, vol 1. MIT Press, Cambridge

Helbing D (1996) A stochastic behavioral model and a ‘microscopic’ foundation of evolutionary game theory. Theor Decis 20(2):149–179

Hofbauer J, Sigmund K (1998) Evolutionary games and replicator dynamics. Cambridge University Press, Cambridge

Ibsen-Jensen R, Chatterjee K, Nowak MA (2015) Computational complexity of ecological and evolutionary spatial dynamics. Proc Natl Acad Sci 112(51):15636–15641

Imhof LA, Nowak MA (2006) Evolutionary game dynamics in a Wright–Fisher process. J Math Biol 52(5):667–681

Jeong HC, Oh SY, Allen B, Nowak MA (2014) Optional games on cycles and complete graphs. J Theor Biol 356:98–112

Kimura M (1964) Diffusion models in population genetics. J Appl Probab 1(2):177–232

Ladret V, Lessard S (2008) Evolutionary game dynamics in a finite asymmetric two-deme population and emergence of cooperation. J Theor Biol 255(1):137–151

Lessard S, Ladret V (2007) The probability of fixation of a single mutant in an exchangeable selection model. J Math Biol 54(5):721–744

Lessard S (2011) On the robustness of the extension of the one-third law of evolution to the multi-player game. Dyn Games Appl 1(3):408–418

Moran PAP (1958) Random processes in genetics. Math Proc Camb Philos Soc 54(1):60–71

Nowak MA, May RM (1992) Evolutionary games and spatial chaos. Nature 359(6398):826–829

Nowak MA, Sasaki A, Taylor C, Fudenberg D (2004) Emergence of cooperation and evolutionary stability in finite populations. Nature 428(6983):646–650

Nowak MA, Tarnita CE, Antal T (2010) Evolutionary dynamics in structured populations. Philos Trans R Soc B Biol Sci 365(1537):19–30

Ohtsuki H, Nowak MA (2006) Evolutionary games on cycles. Proc R Soc B Biol Sci 273(1598):2249–2256. doi:10.1098/rspb.2006.3576

Ohtsuki H, Hauert C, Lieberman E, Nowak MA (2006) A simple rule for the evolution of cooperation on graphs and social networks. Nature 441:502–505

Ohtsuki H, Bordalo P, Nowak MA (2007) The one-third law of evolutionary dynamics. J Theor Biol 249(2):289–295

Saakian DB, Hu C-K (2016) Solution of classical evolutionary models in the limit when the diffusion approximation breaks down. Phys Rev E 94(4):042422

Maynard Smith J (1982) Evolution and the theory of games. Cambridge University Press, Cambridge

Maynard Smith J, Price GR (1973) The logic of animal conflict. Nature 246(5427):15–18

Szabó G, Fáth G (2007) Evolutionary games on graphs. Phys Rep 446(4–6):97–216

Tarnita CE, Ohtsuki H, Antal T, Fu F, Nowak MA (2009) Strategy selection in structured populations. J Theor Biol 259(3):570–581. doi:10.1016/j.jtbi.2009.03.035

Taylor C, Fudenberg D, Sasaki A, Nowak M (2004) Evolutionary game dynamics in finite populations. Bull Math Biol 66:1621–1644

Taylor PD, Day T, Wild G (2007) Evolution of cooperation in a finite homogeneous graph. Nature 447(7143):469–472

Thomson BS, Bruckner JB, Bruckner AM (2001) Elementary real analysis. Prentice Hall Inc, Upper Saddle River

Traulsen A, Claussen JC, Hauert C (2005) Coevolutionary dynamics: from finite to infinite populations. Phys Rev Lett 95(23):238701

Traulsen A, Nowak MA, Pacheco JM (2006a) Stochastic dynamics of invasion and fixation. Phys Rev E 74(1):011909

Traulsen A, Pacheco JM, Imhof LA (2006b) Stochasticity and evolutionary stability. Phys Rev E 74(2):021905

Traulsen A, Pacheco JM, Nowak MA (2007) Pairwise comparison and selection temperature in evolutionary game dynamics. J Theor Biol 246(3):522–529

Weibull JW (1997) Evolutionary game theory. MIT Press, Cambridge

Wright S (1931) Evolution in mendelian populations. Genetics 16(2):97–159

Wu B, Altrock PM, Wang L, Traulsen A (2010) Universality of weak selection. Phys Rev E 82(4):046106

Wu B, García J, Hauert C, Traulsen A (2013) Extrapolating weak selection in evolutionary games. PLoS Comput Biol 9(12):e1003381

Wu B, Bauer B, Galla T, Traulsen A (2015) Fitness-based models and pairwise comparison models of evolutionary games are typically different–even in unstructured populations. New J Phys 17(2):023043

Zheng X, Cressman R, Tao Y (2011) The diffusion approximation of stochastic evolutionary game dynamics: mean effective fixation time and the significance of the one-third law. Dyn Games Appl 1(3):462–477

Cite this article

Sample, C., Allen, B. The limits of weak selection and large population size in evolutionary game theory. J. Math. Biol. 75, 1285–1317 (2017). https://doi.org/10.1007/s00285-017-1119-4
