Abstract

This paper presents a reformulation of the “Gappy Proper Orthogonal Decomposition” (Gappy-POD) multi-fidelity modeling approach and proposes an enrichment criterion associated with an adaptive infill algorithm. The latter is here applied to the study of the flight domain of the RAE-2822 transonic airfoil at two different levels of accuracy to demonstrate its ability to detect areas in a two-dimensional design space where the surrogate model needs improvement to better drive the optimization process.

1 Introduction

Multiple levels of simulation are usually available to describe the behaviour of industrial systems. These levels are associated with models ranging from an immediate analytical solution to full-field finite element computations requiring high-performance computing resources. Generally, the more computationally expensive the model, the more accurate the solution. The aim of multi-fidelity modeling is to leverage low-fidelity information to predict a high-fidelity representation of the physical phenomena under study.

The design process being intrinsically multi-scale, multi-fidelity and multi-disciplinary (Tromme et al. 2013), the earlier the scales, fidelities and disciplines are all integrated in the process, the better the technical solution can be (March and Willcox 2012a; Keane 2003). “Data fusion” is a practical way to take advantage of multi-fidelity computations to build better surrogates (Forrester et al. 2008) and is often referred to as a corrective approach, as in scaling methods (Keane and Prasanth 2005, Section 6.1). The so-called “co-Kriging” multi-fidelity surrogates are widely applied to Multidisciplinary Design Optimization (MDO) (Kennedy and O’Hagan 2000; Forrester et al. 2008; Forrester and Keane 2009; Kuya et al. 2011; Toal and Keane 2011; Huang et al. 2013; March and Willcox 2012a; Han et al. 2010). They allow sparse high-fidelity information to be enhanced with cheaper low-fidelity data or with the gradient of the modeled function (Han et al. 2010, 2013). The coupling of local scaling models with trust-region methods (Conn et al. 2000, Chapters 6-7) ensures the local convergence of both unconstrained (March and Willcox 2012b) and constrained (March and Willcox 2012a) optimizations.

The main drawback of scalar multi-fidelity surrogates is the possible loss of correlation between high- and low-fidelity models through the integration of scalar objectives and/or constraints of the optimization problem. To circumvent this problem, the Shape-Preserving Response Prediction (SPRP) (Koziel and Leifsson 2012; Leifsson and Koziel 2015) applies the vectorial modifications observed on a low-fidelity response to an equivalent high-fidelity reference over a reference neighbourhood. Another solution, proposed in Toal (2014), introduces the “Proper Orthogonal Decomposition” (POD) of the entire aerodynamic field modeled at two different levels of fidelity. The “Gappy-POD” technique (Everson and Sirovich 1995) is then used to predict the missing high-fidelity field from the low-fidelity one. The predictive ability and the insight available in POD-based models are stressed in Coelho et al. (2008), where a comparison with scalar models is provided.

This paper further investigates the use of Gappy-POD for multi-fidelity modeling. In order to integrate this type of model in an online optimization scheme, an enrichment criterion is proposed, based on the error of the available low-fidelity Gappy-POD projection on the POD space. Its ability to highlight poorly predicted areas in the design space is compared to another criterion from the literature (Guénot et al. 2013).

The paper is organized as follows. Section 2 introduces the POD and Gappy-POD methods with a short review of previous applications. In Section 3, our infill criterion is presented in detail and is associated with an enrichment algorithm. Section 4 presents the 2D airfoil application to illustrate the Gappy-POD multi-fidelity approach and the associated results. Finally, conclusions and perspectives are drawn in Section 5.

2 Multi-fidelity and gappy proper orthogonal decomposition

POD, also referred to as the “Karhunen-Loève expansion”, was introduced in the context of turbulence by Lumley (1967) and is widely used to predict information extracted from numerical models within a lower-dimensional space (Gogu et al. 2009). In contrast to “Intrusive POD” (which aims at projecting a system of “Partial Differential Equations”, or PDE, onto a reduced space), the “Non-Intrusive POD” (Coelho et al. 2008, 2009; Raghavan and Breitkopf 2013; Guénot et al. 2013), associated with the “snapshots” method (Sirovich 1987), is based on a Singular Value Decomposition (SVD) of a set of M snapshots of the physical field computed for a set of configurations Θ in the design space \(\mathcal {D}\subset \mathcal {K}^{p}\), a p-dimensional metric space. The coefficients of the POD decomposition (Eq. 5) are approximated or interpolated by surrogate models over the whole design space \(\mathcal {D}\), so that no intervention on the PDE solver is necessary. The prediction for a new point \(\boldsymbol {\theta }\in \mathcal {D}\) is obtained as a linear combination of the previously computed POD basis vectors. The infill sampling criterion issue has been addressed for this kind of surrogate model by Guénot et al. (2011) and Braconnier et al. (2011). In an optimization context, both the coefficients and the POD basis vectors may be further enhanced to account for the conservation of the objectives and constraints (Xiao et al. 2010, 2013, 2014). Multi-fidelity surrogate modeling using Gappy-POD was introduced by Toal (2014) and is presented in Section 2.3.
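The non-intrusive workflow described above (SVD of the snapshots, surrogate on the POD coefficients, prediction as a linear combination of modes) can be sketched as follows. This is only an illustration under stated assumptions: the Gaussian radial basis interpolant, the `length` parameter and all function names are hypothetical choices, not the surrogates used in the cited works.

```python
import numpy as np

def gaussian_kernel(A, B, length):
    """Gaussian RBF kernel between the rows of A (N x p) and B (K x p)."""
    d2 = ((A[:, None, :] - B[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-d2 / (2.0 * length ** 2))

def fit_coefficient_surrogate(Theta, S, m, length=0.3):
    """Build a POD basis and interpolate its coefficients over the design space.

    Theta: (M x p) design points; S: (n x M) snapshots, one per column.
    The RBF interpolant is an assumed, illustrative surrogate.
    """
    s_mean = S.mean(axis=1)
    S_c = S - s_mean[:, None]                      # centered snapshots
    U, _, _ = np.linalg.svd(S_c, full_matrices=False)
    Phi = U[:, :m]                                 # POD basis (n x m)
    alphas = S_c.T @ Phi                           # (M x m) training coefficients
    weights = np.linalg.solve(gaussian_kernel(Theta, Theta, length), alphas)
    return s_mean, Phi, weights

def predict_snapshot(theta, Theta, s_mean, Phi, weights, length=0.3):
    """Predict the field at a new point as a linear combination of POD modes."""
    k = gaussian_kernel(np.atleast_2d(theta), Theta, length)   # (1 x M)
    alpha = (k @ weights)[0]                       # interpolated coefficients
    return s_mean + Phi @ alpha
```

Because the interpolant is exact at the training points, calling `predict_snapshot` at a design point of the DoE returns the corresponding snapshot up to the POD truncation error.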

2.1 Multi-fidelity modeling

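We consider a multi-fidelity snapshot \(\mathbf {s}\) obtained for any experiment \(\boldsymbol {\theta }\) in the design space \(\mathcal {D}\),

\(\mathbf {s}(\boldsymbol {\theta })=\left (\begin {array}{c}\mathbf {s}^{L}(\boldsymbol {\theta })\\\mathbf {s}^{H}(\boldsymbol {\theta })\end {array}\right )\in \mathcal {R}^{n_{L}+n_{H}},\)

where \(n_{L}\) and \(n_{H}\) are the sizes of the discretized physical low (L)- and high (H)-fidelity solutions obtained from a Design of Experiments (DoE) Θ of M points \(\boldsymbol {\theta }^{(i)}\) in the design space \(\mathcal {D}, \forall i\in [\![1,M]\!]\). Without any loss of generality, we consider centered data satisfying \( \sum \limits _{i=1}^{M}\mathbf {s}^{(i)}=\mathbf {0}\), where the set of snapshots is denoted \(\mathbf {S}=[\mathbf {s}^{(1)},\cdots ,\mathbf {s}^{(M)}]\) and \(\mathbf {s}^{(i)} = \mathbf {s}(\boldsymbol {\theta }^{(i)}), \forall i\in [\![1,M]\!]\).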

2.2 POD with multi-fidelity data

Following the formulation introduced in Raghavan and Breitkopf (2013), the POD procedure is presented as the best orthogonal projector of vectors contained in the set of snapshots S.

Each snapshot \(\mathbf {s}=\left (\begin {array}{c}\mathbf {s}^{L}\\\mathbf {s}^{H}\end {array}\right )\) is considered as a point in a Euclidean affine space \(\mathcal {E}\) on the vector space \(E = \mathcal {R}^{n}\) (where \(n = n_{L} + n_{H}\)) associated with:

1. the coordinate system \((O,\mathcal {B})\) where \(\mathcal {B}\) is the canonical basis of E and O is a point chosen as origin (usually the mean snapshot \(\bar {\mathbf {s}}\));

2. the usual inner product \(\langle \mathbf {u},\mathbf {v}\rangle \);

3. the usual norm \(\|\mathbf {u}\|^{2}=\langle \mathbf {u},\mathbf {u}\rangle \).

We consider a projector \(\mathcal {P}(\mathbf {s})\) of a snapshot s on a basis Φ

The Reduced-Order Model (ROM) lies in the m-dimensional Euclidean affine subspace \(\mathcal {F}\), which is at most M-dimensional and far smaller than the output vector space dimension n (\(0<m\leq M\ll n\)). Let \(\boldsymbol {\Phi }=[\boldsymbol {\phi }_{1},\ldots ,\boldsymbol {\phi }_{m}]\) be an orthonormal basis generating \(\mathcal {F}\). We characterize the POD orthogonal projector \(\mathcal {P} : \mathcal {E} \rightarrow \mathcal {F}\) with the optimality and orthogonality conditions

where \(\|A\|^{2}_{F} = \mathrm {tr}(A^{\top }A)\) is the squared Frobenius norm, which yields \(\boldsymbol {\Phi }\) as the eigenmodes of the covariance matrix \(\mathbf {S}\mathbf {S}^{\top }\).

The ROM is usually obtained by limiting the basis to the m most “energetic” modes and is associated with the error

where λ is the vector of monotonically decreasing eigenvalues associated with the basis modes Φ.

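Let \(\boldsymbol {\alpha } = \boldsymbol {\Phi }^{\top }\mathbf {s}\) be the projection of \(\mathbf {s}\) onto the POD space. \(\boldsymbol {\alpha }\) can be seen as the vector of coefficients minimizing the distance between \(\mathbf {s}\) and \(\mathcal {P}(\mathbf {s})\)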
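The construction of this section can be sketched numerically as follows. The sketch assumes that the snapshots are stored column-wise, that the basis is obtained from a thin SVD of the centered snapshot matrix, and that the truncation error is measured by the usual ratio of discarded to total eigenvalues; the function names are hypothetical.

```python
import numpy as np

def pod_basis(S, m=None):
    """Return the mean snapshot, the POD basis Phi (n x m) and the eigenvalues.

    S is the (n x M) snapshot matrix, one snapshot per column.
    """
    s_mean = S.mean(axis=1)
    S_c = S - s_mean[:, None]                      # centered data, sum of columns = 0
    U, sigma, _ = np.linalg.svd(S_c, full_matrices=False)
    lam = sigma ** 2                               # eigenvalues of S_c S_c^T, decreasing
    if m is None:
        m = S.shape[1]                             # no truncation (m = M)
    return s_mean, U[:, :m], lam

def pod_project(Phi, s_mean, s):
    """Orthogonal projection of s on the POD space, with alpha = Phi^T (s - mean)."""
    alpha = Phi.T @ (s - s_mean)
    return s_mean + Phi @ alpha, alpha

def truncation_error(lam, m):
    """Assumed energy-based error: share of the eigenvalues left out of the basis."""
    return lam[m:].sum() / lam.sum()
```

With `m = M`, every training snapshot is reproduced exactly by `pod_project`, which is the situation used later for the initial DoE of 10 snapshots.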

2.3 Gappy-POD as a predictor of high-fidelity data

Initially introduced by Everson and Sirovich (1995) in the context of image processing, the so-called “Gappy-POD” has been used in aerodynamics by Bui-Thanh et al. (2004), especially for inverse design problems. The “gappy” denomination of this method comes from its ability to reconstruct missing information of a given vector, that is, to fill the “gaps” in corrupted data. In image processing, missing pixels are a problem engineers frequently face. In this setting, the Gappy-POD procedure builds a basis from complete images and reconstructs the missing pixels of corrupted material. The Gappy-POD can be seen as the filtered projection of a vector onto the subspace spanned by the POD basis Φ.

Once the basis Φ is built from a set of snapshots \(\left (\begin {array}{c}\mathbf {s}^{L}\\\mathbf {s}^{H}\end {array}\right )\), we use the Gappy-POD to predict high-fidelity data from low-fidelity data (Toal 2014). Following Bui-Thanh et al. (2004), a mask vector is built associating 1 with low-fidelity and 0 with high-fidelity data. We start revisiting this formulation by introducing a projector \(\mathcal {G}(\mathbf {s}) : \mathcal {E} \rightarrow \mathcal {E}\) allowing the same association

We now seek to find the best projection \(\mathcal {P}_{g}=\boldsymbol {\Phi }\boldsymbol {\beta }\) of a filtered snapshot \( \boldsymbol {\Gamma }\mathbf {s}=\left (\begin {array}{c}\mathbf {s}^{L}\\\mathbf {0}\\\end {array}\right )\) by minimizing the functional \(\mathcal {J}(\boldsymbol {\beta })\)

Using the idempotence property of \(\mathcal {G} \Leftrightarrow \boldsymbol {\Gamma }\boldsymbol {\Gamma } = \boldsymbol {\Gamma }\), the diagonal property of Γ and the orthonormality of Φ, the minimization of \(\mathcal {J}(\boldsymbol {\beta })\) yields

Let \(\mathcal {H}\) be the projector associated with the diagonal matrix \(\mathbf {I}-\boldsymbol {\Gamma }\), extracting the high-fidelity part \(\left (\begin {array}{c}\mathbf {0}\\\mathbf {s}^{H}\end {array}\right )\) of a snapshot. The Gappy-POD operator (Toal 2014) is referred to as \(\mathcal {P}_{t}\) hereafter and is obtained by replacing \(\boldsymbol {\Gamma }\boldsymbol {\Phi }\boldsymbol {\beta }\) with \(\boldsymbol {\Gamma }\mathbf {s}\) in the reconstructed snapshot. The notations in Table 1 are used in the following sections.

Note that \((\mathbf {I}-\boldsymbol {\Gamma })\mathcal {P}_{t}(\mathbf {s}) = (\mathbf {I}-\boldsymbol {\Gamma })\mathcal {P}_{g}(\mathbf {s})\) and \(\boldsymbol {\Gamma }\mathcal {P}_{t}(\mathbf {s}) = \boldsymbol {\Gamma }\mathbf {s}\) by construction.
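A minimal numerical sketch of the prediction step is given here. It assumes the standard gappy least-squares functional \(\mathcal {J}(\boldsymbol {\beta })=\|\boldsymbol {\Gamma }\boldsymbol {\Phi }\boldsymbol {\beta }-\boldsymbol {\Gamma }\mathbf {s}\|^{2}\) of Everson and Sirovich (1995), whose normal equations read \(\boldsymbol {\Phi }^{\top }\boldsymbol {\Gamma }\boldsymbol {\Phi }\boldsymbol {\beta }=\boldsymbol {\Phi }^{\top }\boldsymbol {\Gamma }\mathbf {s}\), and it assumes that the low-fidelity entries occupy the first \(n_{L}\) rows of each centered snapshot. The function name is hypothetical.

```python
import numpy as np

def gappy_pod(Phi, s_low):
    """Predict a full snapshot from its low-fidelity part.

    Phi:   (n x m) POD basis built from complete multi-fidelity snapshots.
    s_low: centered low-fidelity data, i.e. the non-zero rows of Gamma s.
    """
    n_low = s_low.shape[0]
    Phi_L = Phi[:n_low, :]                         # non-zero rows of Gamma Phi
    # Least-squares solution of min ||Gamma Phi beta - Gamma s||^2
    # (minimum-norm solution if Phi_L is rank deficient).
    beta, *_ = np.linalg.lstsq(Phi_L, s_low, rcond=None)
    p_g = Phi @ beta                               # Gappy-POD projection P_g(s)
    p_t = p_g.copy()
    p_t[:n_low] = s_low                            # P_t: Gamma Phi beta replaced by Gamma s
    return beta, p_g, p_t
```

The high-fidelity prediction is then read from the last \(n_{H}\) entries of `p_t`, while its first \(n_{L}\) entries are the low-fidelity data themselves.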

3 Gappy-POD enrichment criterion

As long as the low- and high-fidelity models are well correlated and the amount of available data is sufficient, it seems natural that the Gappy-POD and POD projections should be well correlated too. Conversely, a large Gappy-POD projection error indicates a lack of precision of the POD approximation in the considered area. The proposed error estimator δ (Eq. 9) is therefore based on the following hypothesis: \(\textnormal {if}\; \rho _{(\mathbf {s}^{L},\mathbf {s}^{H})}\;\textnormal {high}\;\forall \boldsymbol {\theta }\in \mathcal {D}, \textnormal {then}\;\rho _{(\delta ,\|{\mathcal {P}(\mathbf {s})-\mathbf {s}}\|^{2})} \;\textnormal {high~too}\), where \(\rho _{(a,b)}\) stands for the correlation between two general variables a and b, so that \(\rho _{(\mathbf {s}^{L},\mathbf {s}^{H})}\) denotes the correlation between the low- and high-fidelity solutions. Setting an arbitrary, case-dependent threshold \(\epsilon _{app}\) on δ allows the detection of areas of potential enrichment and results in an adaptive infill criterion associated with Algorithm 1 and used in Section 4.4.

It is important to keep in mind that the high-fidelity computations, and therefore the POD projection \(\mathcal {P}(\mathbf {s})\), are usually available at a limited number of locations in the design space \(\mathcal {D}\). By contrast, the computational cost of the low-fidelity model enables a more exhaustive simulation campaign over the design space. Therefore, we consider here a current snapshot \(\mathbf {s}(\boldsymbol {\theta })\) only simulated with the low-fidelity model, and propose as criterion the maximization of the error estimator δ hereafter for the DoE enrichment procedure dedicated to multi-fidelity Gappy-POD based surrogates

The numerator \(\| \boldsymbol {\Gamma }\mathcal {P}_{g}(\mathbf {s})-\boldsymbol {\Gamma }\mathbf {s}\|^{2}\) can be seen as the norm of the low-fidelity difference between the Gappy-POD projection \(\mathcal {P}_{g}(\mathbf {s})\) and the Gappy-POD prediction \(\mathcal {P}_{t}(\mathbf {s})\) given the equality \(\boldsymbol {\Gamma }\mathcal {P}_{t}(\mathbf {s}) = \boldsymbol {\Gamma }\mathbf {s}\).

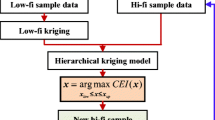

3.1 Algorithm

Maximizing the error estimator δ (Eq. 9) indicates the areas where the low-fidelity part of the Gappy-POD projection shows a high relative error with respect to the computed low-fidelity data itself. Algorithm 1 is proposed as a simple implementation of this error estimate, associated with a stopping criterion, within an adaptive sampling strategy involving multi-fidelity surrogate models; it can potentially improve the exploitation/exploration balance of an online Surrogate-Based Optimization (SBO). In the coming sections, the error estimator δ (Eq. 9) is referred to as the enrichment criterion and is linked to the stopping condition \(\epsilon _{app}\). In the context of multi-fidelity optimization, the low-fidelity solution is usually considered far cheaper than the high-fidelity simulation, allowing the designer to compute \(\mathbf {s}^{L}\) at a large number of points in the design space \(\mathcal {D}\).
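One enrichment step of such a strategy could be sketched as below. This is a hedged illustration, not a transcription of Algorithm 1: it assumes that δ is the ratio of squared norms \(\|\boldsymbol {\Gamma }\mathcal {P}_{g}(\mathbf {s})-\boldsymbol {\Gamma }\mathbf {s}\|^{2}/\|\boldsymbol {\Gamma }\mathbf {s}\|^{2}\), that the candidate low-fidelity snapshots are centered and stored column-wise, and that the criterion is evaluated directly on the candidates without a metamodel. The function names are hypothetical.

```python
import numpy as np

def delta_criterion(Phi, s_low):
    """Relative low-fidelity error of the Gappy-POD projection (assumed form of delta)."""
    n_low = s_low.shape[0]
    Phi_L = Phi[:n_low, :]                         # low-fidelity rows of the basis
    beta, *_ = np.linalg.lstsq(Phi_L, s_low, rcond=None)
    residual = Phi_L @ beta - s_low                # Gamma P_g(s) - Gamma s
    return float(residual @ residual / (s_low @ s_low))

def select_infill(Phi, S_low_candidates, eps_app):
    """Return the index of the candidate maximizing delta, or None once
    the largest delta falls below the threshold eps_app (stopping condition)."""
    deltas = np.array([delta_criterion(Phi, s) for s in S_low_candidates.T])
    best = int(np.argmax(deltas))
    if deltas[best] < eps_app:
        return None, deltas
    return best, deltas
```

In an adaptive loop, the selected candidate would be simulated at the high-fidelity level, appended to the snapshot matrix, and the POD basis rebuilt before the next call to `select_infill`.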

4 RAE2822 Application case

A usual application illustrating multi-fidelity approaches deals with 2D airfoil optimization (Peña Lopez et al. 2012; Leifsson and Koziel 2010; Luliano and Quagliarella 2013; Coelho et al. 2008). We propose to use panel theory as the low-fidelity model and a more expensive “Reynolds Averaged Navier-Stokes” (RANS) computation as the high-fidelity model. The addressed application is the air flow around the RAE-2822 airfoil (Cook et al. 1979) illustrated in Fig. 1 under the free stream conditions given in Table 2. The low-fidelity simulations are performed under the potential flow hypothesis using the well-documented panel code Xfoil (Drela 1989), which enables viscous and compressibility corrections. The high-fidelity computations are performed using the 2D RANS solver elsA (Cambier et al. 2013).

Fig. 1 Mach number distribution around the RAE-2822 airfoil, study case 9 (Cook et al. 1979): \(\alpha _{\infty } = 2.79^{\circ }\) and \(M_{\infty } = 0.73\)

The variations in \(M_{\infty }\) are expected to induce the appearance of shock waves for some points in the design space. These changes in the flow regime constitute the major difficulty in creating a global surrogate model over the entire design space.

The low-fidelity experiments are performed on a mesh of 352 panels along the airfoil. Each simulation takes approximately one second to run on an Intel Xeon 4x1.6GHz workstation with 48 GB of memory and gives access to the pressure distribution along the shape and to the lift and drag coefficients (respectively \(C_{L}\) and \(C_{D}\)). The high-fidelity computation is run on a C-H type structured mesh extending over 40 chord lengths and containing 47104 cells, over 10000 iterations, with a Spalart-Allmaras turbulence model (Spalart and Allmaras 1992) and a 3-level multigrid acceleration. We extract the static pressure distribution and the friction vector on the shape, the lift and drag coefficients, as well as the temperature, pressure and velocity over the whole domain. Each simulation takes approximately 15 minutes on 8 cores of Ivy Bridge Intel Xeon E5-2697(v2) processors with 1.8 GB of memory allocated.

We can expect the low-fidelity data to fit the high-fidelity data for the pressure distribution, but also to show a reduced precision on the drag coefficient for increased \(M_{\infty }\) and \(\alpha _{\infty }\) because of missing friction information. This is due to the increased share of pressure- and friction-induced drag in the area where the low-fidelity model lacks precision (development of shock waves).

4.1 Multi-fidelity snapshots

To ensure a good agreement between low- and high-fidelity extractions, the low-fidelity mesh χ L generated by Xfoil v6.1 is projected on the high-fidelity mesh χ H generated by Autogrid v8r10.3. The multi-fidelity snapshots are then built by concatenating the linear interpolation of the low-fidelity pressure \(\widetilde {P}_{s}^{L}\in \mathcal {R}^{352}\) on χ H, the skin distribution of high-fidelity pressure \({P_{s}^{H}}\in \mathcal {R}^{352}\) and the wall friction components \(\tau _{p_{x}}\in \mathcal {R}^{352}\) and \(\tau _{p_{y}}\in \mathcal {R}^{352}\),

In our case, each design point is taken in the \((M_{\infty }-\alpha _{\infty })\)-space \(\mathcal {D}\subset \mathcal {R}^{2}\) (p = 2). Therefore, the low- and high-fidelity meshes remain unchanged for all experiments. In the case of shape optimization, an intermediate mapping of each solution onto a fixed reference grid would be required to perform the “snapshot” POD (Quarteroni and Rozza 2014, Chapter 4). In complex industrial cases involving moving meshes, specific attention has to be paid to the interpolation scheme (Fang et al. 2009).
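The assembly of one multi-fidelity snapshot can be sketched as follows. The sketch assumes that both surface meshes are parametrized by a common, strictly increasing curvilinear abscissa along the airfoil (a hypothetical choice, since the projection used by the authors is not detailed here) and that the snapshot stacks the interpolated low-fidelity pressure, the high-fidelity pressure and the two wall friction components in that order.

```python
import numpy as np

def build_snapshot(arc_low, p_low, arc_high, p_high, tau_x, tau_y):
    """Concatenate low- and high-fidelity skin data into one snapshot vector.

    arc_low, arc_high: curvilinear abscissae of the low- and high-fidelity
    surface nodes (assumed parametrization); p_low is linearly interpolated
    on the high-fidelity nodes before concatenation.
    """
    arc_low = np.asarray(arc_low, dtype=float)
    if np.any(np.diff(arc_low) <= 0.0):
        raise ValueError("arc_low must be strictly increasing for np.interp")
    p_low_on_high = np.interp(arc_high, arc_low, p_low)   # linear interpolation
    return np.concatenate([p_low_on_high, p_high, tau_x, tau_y])
```

With 352 surface nodes, the resulting vector has 4 x 352 = 1408 entries, the first 352 being the low-fidelity part.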

4.2 Design of Experiments and POD initialization

The design space \(\mathcal {D}\) being 2D, we choose to compute an initial DoE composed of 10 snapshots. The a priori sampling method is a “Latinized Centroidal Voronoi Tessellation” (LCVT) (Saka et al. 2007; Romero et al. 2006).

As shown in Fig. 2, only two points populate the transonic region confined between the red line and the top-right corner of the figure. This leads to a poor definition of the transonic behaviour in the snapshot matrix and therefore to reduced prediction capabilities of the model. In addition, the top-left corner corresponds to incidences that produce abrupt accelerations of the fluid along its path around the leading edge. This phenomenon is partially captured by the low-fidelity model, whereas the real shocks appearing for \(M_{\infty }>0.63\) are visible only in the high-fidelity solution. Given the low density of samples (Fig. 2), no reduction is made on the POD basis (m = M = 10), which is built from the snapshot matrix with the mean subtracted.

4.3 Enrichment criterion

Figure 3 shows the correlation between three relative characteristic errors:

a. the high-fidelity relative error made by the POD projection of a complete snapshot;

b. the high-fidelity relative error of the Gappy-POD projection of a low-fidelity snapshot;

c. the low-fidelity relative error of the Gappy-POD projection of a low-fidelity snapshot, \(\sqrt {\frac {\|\boldsymbol {\Gamma }\mathcal {P}_{g}(\mathbf {s})-\boldsymbol {\Gamma }\mathbf {s}\|^{2}}{\|\boldsymbol {\Gamma }\mathbf {s}\|^{2}}}\).

We propose to use this information to identify the areas where the POD basis is poorly representative. The results illustrated in Fig. 3c represent the distribution of the δ criterion proposed in Section 3 over the design space \(\mathcal {D}\). The multi-fidelity training points are shown as white squares, whereas the validation points, assumed to be simulated only at the low-fidelity level, are represented by circles colored according to the error value.

One can notice that the areas of high relative errors seem correlated to each other regardless of the chosen criterion: the high-fidelity POD projection error (Fig. 3a), the high-fidelity Gappy-POD projection error (Fig. 3b), or δ (Fig. 3c). Our previous hypothesis thus seems confirmed on this study case, even though the order of magnitude of the criterion δ is far lower than that of the two other errors.
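The comparison between the three error maps can be quantified with a simple correlation matrix over a set of validation snapshots. The sketch below assumes centered snapshots stored column-wise with the low-fidelity entries first, relative errors defined as ratios of Euclidean norms on the relevant part of the snapshot, and the Pearson coefficient as the correlation measure; these are illustrative assumptions and the function names are hypothetical.

```python
import numpy as np

def relative_errors(Phi, s, n_low):
    """Return the errors (a), (b), (c) for one complete validation snapshot s."""
    s_low, s_high = s[:n_low], s[n_low:]
    p = Phi @ (Phi.T @ s)                          # POD projection of the full snapshot
    beta, *_ = np.linalg.lstsq(Phi[:n_low], s_low, rcond=None)
    p_g = Phi @ beta                               # Gappy-POD projection from s_low only
    err_pod = np.linalg.norm(p[n_low:] - s_high) / np.linalg.norm(s_high)
    err_gappy = np.linalg.norm(p_g[n_low:] - s_high) / np.linalg.norm(s_high)
    err_low = np.linalg.norm(p_g[:n_low] - s_low) / np.linalg.norm(s_low)
    return err_pod, err_gappy, err_low

def error_correlation(Phi, S_val, n_low):
    """3 x 3 Pearson correlation matrix of the three errors over validation points."""
    errs = np.array([relative_errors(Phi, s, n_low) for s in S_val.T])
    return np.corrcoef(errs, rowvar=False)
```

Large off-diagonal entries in the last row of the returned matrix would support the hypothesis that the low-fidelity error tracks the two high-fidelity errors.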

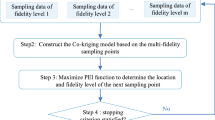

4.4 Adaptive DoE procedure

The results presented are obtained by implementing Algorithm 1 except for its 4th step (metamodeling of \(\bar {\delta }\)). Indeed, in our case, Θ v is rich enough (100 points over a 2-D design space), to only use the additional DoE as potential enrichment locations such that the criterion δ is directly computed all over the sample set Θ v .

In order to assess the efficiency of the enrichment criterion defined in Section 3, we propose to compare our approach with a cross-validation based method (Guénot et al. 2011, 2013; Braconnier et al. 2011). This method is based on an adapted leave-one-out (LOO) algorithm giving an estimation of the influence of a training snapshot on the POD basis (see Fig. 4). In Fig. 4a, one can see the evolution of the POD basis improvement coefficient (Braconnier et al. 2011) over the design space and notice the increase of its value for high \(M_{\infty }\) and \(\alpha _{\infty }\) conditions. In Guénot et al. (2013), this coefficient is scaled by the distribution of distance to training points, as shown in Fig. 4b.

As explained previously, the enrichment criterion in Guénot et al. (2013) considers a weighted distance between a new point \(\boldsymbol {\theta } \in \mathcal {D}\) and the training database \(\boldsymbol {\Theta } \in \mathcal {D}^{M}\). On the other hand, our criterion δ is based on the scaled Gappy-POD projection error over the low-fidelity data as defined in Eq. 9.

The two enrichment strategies based on the criterion of Guénot et al. (2013) and on δ are illustrated in Fig. 5, where the squares are used for training the initial POD basis and 15 points are added (one at a time) according to the considered criterion. The selected points are highlighted by a color map associated with the current iteration number. One can notice that both criteria lead to the infill of the transonic regime (\(M_{\infty }>0.7\)) first. After the first iterations (5 in this case), the PBI + criterion (Guénot et al. 2013) leads to the high \(\alpha _{\infty }\) and low \(M_{\infty }\) area, whereas the δ-criterion heads to the low \(\alpha _{\infty }\) and high \(M_{\infty }\) region. These two regimes are expected to show poor correlations between the low- and high-fidelity pressure distributions because of the appearance of shocks.

Figure 6 shows the low- (left) and high-fidelity (right) pressure distributions of the snapshots selected at iterations 1 to 3 for POD basis enrichment by the proposed δ-criterion. The illustrated points belong to the area where the low-fidelity code has poor precision. The computed pressure distribution is depicted by the blue solid curve, while the POD and Gappy-POD projections are respectively the red and green dotted curves. It is important to keep in mind the hypothesis of good agreement between low- and high-fidelity simulations. In our case, the development of shocks tends to deteriorate the low-fidelity pressure distribution, as shown in Fig. 6a, b, and c (left column).

Indeed, the shocks are not predicted by the low-fidelity code, but a non-negligible noise appears in these regions increasing the Gappy-POD projection error on the low-fidelity data. This increases the error δ and leads the proposed algorithm towards these areas.

At the same time, the PBI + criterion (Fig. 7) leads the algorithm towards the same region according to the space filling and the influence of each training snapshot on the POD basis (see Fig. 4).

Fig. 7 Low-fidelity (left) and high-fidelity (right) pressure distributions of 3 enrichment points according to the PBI + criterion (Guénot et al. 2013)

Once again, the emergence of new patterns associated with shocked configurations incites the algorithm to add new points in the high \(M_{\infty }\) and \(\alpha _{\infty }\) area. The former criterion (Guénot et al. 2013) is attracted to this region by the lack of shocked configurations in the initial training set, while the proposed criterion δ is drawn there by the inaccuracy of the low-fidelity code in regions for which it is not suited.

The mean and maximum projection errors over the design space can be analyzed along the enrichment iterations for both criteria in Fig. 8. The magenta and green curves give the evolution of the error obtained with an enrichment driven by the maximum uncertainty of a Kriging metamodel built over the two aerodynamic scalars \(C_{D}\) and \(C_{L}\), which are usually of interest in the study of the flight domain. The global projection errors \(\|{\mathcal {P}(\mathbf {s})-\mathbf {s}}\|\) present the same trends (see Fig. 8b) regardless of the chosen criterion. On the contrary, the high-fidelity Gappy-POD projection errors (see Fig. 8a) reveal a real impact of the criterion at hand.

After the first iterations, the POD-based strategies diverge due to the lack of precision of the low-fidelity simulation. At the same time, the maximum uncertainty strategy remains stable, but with a reduced efficiency compared to the proposed criterion and, as a major drawback, a dependence on the variable the Kriging model is built on. After several experiments in the high \(\alpha _{\infty }\) and low \(M_{\infty }\) region are added to the training set by the PBI + strategy (iteration 13 in Fig. 8a), the high-fidelity error of the Gappy-POD projection is greatly increased (see Fig. 9). These experiments correspond to high angles of attack and are typically associated with a poor prediction by the low-fidelity model, as shown in Fig. 10.

Figure 10a illustrates the low- and high-fidelity pressure distributions computed for a design point with high \(M_{\infty }\) and high \(\alpha _{\infty }\). One can see that the shock around 50% of the chord is observed only in the high-fidelity simulation, while the pressure is increased just after the leading edge in the low-fidelity solution.

As shown in Fig. 10b, the low \(M_{\infty }\) and high \(\alpha _{\infty }\) area also presents an important lack of accuracy in the low-fidelity solution. The high-fidelity static pressure (2nd row in Fig. 10b) suddenly increases around the leading edge of the airfoil, whereas the low-fidelity solution presents a smoother evolution (1st row in Fig. 10b). One can notice the similarity between the low-fidelity pressure distributions in Fig. 10a and b, although they come from different regions of the design space. This confusion makes the Gappy-POD projection unable to correctly predict the high-fidelity data from a low-fidelity simulation.

To assess the impact of randomness in the DoE initialization, we built 10 different DoEs with the LCVT sampling method and observed their impact on the enrichment locations chosen by each strategy. Figure 11 shows that the previous comments hold for the 10 initial DoEs. The shaded areas correspond to the gap between the lowest and highest high-fidelity Gappy-POD prediction errors observed at each enrichment iteration. One can see that the shaded area associated with the δ strategy is very small compared to the blue shaded area presenting the PBI + results. In addition, the mean and maximum levels of the Gappy-POD prediction error along the enrichment iterations are much higher for the PBI + enrichment strategy. A converged statistical study of the impact of the initial DoE on the enrichment performance being unaffordable, we can argue from this last comparison that, on this test case, the proposed enrichment method outperforms the compared strategies from the literature and is robust with respect to the stochastic initial sampling.

5 Conclusions and perspectives

In the context of surrogate-assisted design, non-intrusive POD models could be considered as potentially better integrators of physics compared to classical regression surrogates. Based on this assumption, the current paper has presented a reformulation of the Gappy-POD method in the framework of multi-fidelity modeling. The infill sampling strategy being a key aspect of surrogate-assisted optimization, and more particularly of multi-fidelity modeling, an enrichment criterion has been introduced and integrated in an adaptive infill strategy. This implementation has been tested on the flight domain study of a transonic airfoil, extending the use of the Gappy-POD technique with respect to Bui-Thanh (2003), Bui-Thanh et al. (2004) and Toal (2014).

The efficiency of the proposed enrichment criterion has been compared to a POD-based infill strategy from the literature and to the maximum uncertainty of a Kriging metamodel built on scalar variables of interest. The main lesson learned from this study is the ability of the Gappy-POD to predict the projection coefficients on the POD basis, thus removing the need for regression surrogate models on the POD coefficients. The proposed infill strategy showed a comparable potential for improving the POD basis along the enrichment iterations with respect to the reference criterion (Guénot et al. 2013). On the other hand, for an example in a two-dimensional design space, it has been found that maximizing the defined error estimator δ reduced the exploration rate of non-predictive low-fidelity regions. This produces an important decrease in the mean relative high-fidelity error of the Gappy-POD projection over the complete design space, which outperforms the reference criterion. The addition of new points in the regions where the low-fidelity model is non-predictive is indeed shown to significantly alter the Gappy-POD projection capabilities. The use of a maximum uncertainty criterion showed on our example the expected weaknesses related to the correlation between the scalar variable the Kriging model is built on and the prediction error of the whole high-fidelity data made by the Gappy-POD.

Some issues remain to be addressed concerning the infill methodology itself and, from a more general point of view, multi-fidelity modeling. The presented strategy strongly relies on the hypothesis given in Section 3, so that further investigation of the robustness of the proposed approach with respect to the level of correlation between low- and high-fidelity data needs to be performed. In the presented test case, the non-predictive area is restricted to a very thin region near the maximum boundary edges of the design space hypercube, where POD models are known to be less accurate. In addition, the a priori sampling algorithm used in this study only produced one point in this area, also reducing the POD prediction capabilities. To tackle this problem, another application test case revealing a distributed weak low- to high-fidelity correlation could be targeted. Furthermore, increasing the number of levels of fidelity is theoretically feasible with the Gappy-POD modeling method and could be investigated. This would naturally raise the question of the level of fidelity selected for each iteration of optimization or sampling enrichment.

In terms of general perspectives, the current paper applied the proposed enrichment strategy on a 2D design space, whereas most recent industrial optimization problems deal with several tens of parameters. The switch to 3D cases would also be of primary interest from an industrial perspective and should be considered in future work. The multi-fidelity POD basis construction method dedicated to nested sampling strategies, often used within the multi-fidelity optimization framework, is still to be investigated. Last but not least, different parametrizations across the levels of fidelity would be of definite interest from an industrial point of view.

References

Braconnier T, Ferrier M, Jouhaud JC, Montagnac M, Sagaut P (2011) Towards an adaptive POD/SVD surrogate model for aeronautic design. Comput Fluids 40(1):195–209. doi:10.1016/j.compfluid.2010.09.002

Brand M (2006) Fast low-rank modifications of the thin singular value decomposition. Linear Algebra Appl 415(1):20–30. doi:10.1016/j.laa.2005.07.021

Bui-Thanh T (2003) Proper orthogonal decomposition extensions and their applications in steady aerodynamics. Master?s thesis, Ho Chi Minh City University of Technology

Bui-Thanh T, Damodaran M, Willcox KE (2004) Aerodynamic data reconstruction and inverse design using proper orthogonal decomposition. AIAA J 42(8):1505–1516. doi:10.2514/1.2159

Cambier L, Heib S, Plot S (2013) The Onera elsA CFD software: input from research and feedback from industry. Mech Ind 14(3):159–174. doi:10.1051/meca/2013056

Conn AR, Gould NIM, Toint PL (2000) Trust region methods. MOS-SIAM Series on Optimization

Cook PH, McDonald MA, Firmin MCP (1979) Aerofoil RAE 2822 - pressure distributions, and boundary layer and wake measurements. In: AGARD report AR, vol 138

Drela M (1989) XFOIL: an analysis and design system for low Reynolds number airfoils. In: Mueller TJ (ed) Low Reynolds number aerodynamics. Springer, pp 1–12

Everson R, Sirovich L (1995) Karhunen-Loève procedure for gappy data. J Opt Soc Am A 12(8):1657–1664. doi:10.1364/JOSAA.12.001657

Fang F, Pain CC, Navon IM, Gorman GJ, Piggott MD, Allison PA, Farrell PE, Goddard AJH (2009) A POD reduced order unstructured mesh ocean modelling method for moderate Reynolds number flows. Ocean Model 28(1):127–136. doi:10.1016/j.ocemod.2008.12.006

Filomeno Coelho R, Breitkopf P, Knopf-Lenoir C (2008) Model reduction for multidisciplinary optimization - application to a 2D wing. Struct Multidiscip Optim 37(1):29–48. doi:10.1007/s00158-007-0212-5

Filomeno Coelho R, Breitkopf P, Knopf-Lenoir C, Villon P (2009) Bi-level model reduction for coupled problems. Struct Multidiscip Optim 39(4):401–418. doi:10.1007/s00158-008-0335-3

Forrester AIJ, Keane AJ (2009) Recent advances in surrogate-based optimization. Prog Aerosp Sci 45(1–3):50–79. doi:10.1016/j.paerosci.2008.11.001

Forrester AIJ, Sóbester A, Keane AJ (2008) Engineering design via surrogate modelling: A practical guide. Wiley

Gogu C, Haftka R, Le Riche R, Molimard J, Vautrin A (2009) Dimensionality reduction of full fields by the principal components analysis. In: Proceedings 17th international conference on composite materials (iccm17), pp 14–7

Guénot M, Lepot I, Sainvitu C, Goblet J, Coelho RF (2011) Adaptive sampling strategies for non-intrusive POD-based surrogates. In: Proceedings EUROGEN 2011 evolutionary and deterministic methods for design, optimization and control. CIRA, Capua

Guénot M, Lepot I, Sainvitu C, Goblet J, Coelho RF (2013) Adaptive sampling strategies for non-intrusive POD-based surrogates. Eng Comput 30(4):521–547. doi:10.1108/02644401311329352

Han Z-H, Görtz S, Hain R (2010) A variable-fidelity modeling method for aero-loads prediction. In: Dillmann A, Heller G, Klaas M, Kreplin H-P, Nitsche W, Schröder W (eds) New results in numerical and experimental fluid mechanics VII. Springer, pp 17–25. doi:10.1007/978-3-642-14243-7_3

Han Z-H, Görtz S, Zimmermann R (2013) Improving variable-fidelity surrogate modeling via gradient-enhanced kriging and a generalized hybrid bridge function. Aerosp Sci Technol 25(1):177–189. doi:10.1016/j.ast.2012.01.006

Han Z-H, Zimmermann R, Görtz S (2010) A new cokriging method for variable-fidelity surrogate modeling of aerodynamic data. In: Proceedings 48th AIAA aerospace sciences meeting including the new horizons forum and aerospace exposition. AIAA. doi:10.2514/6.2010-1225

Huang L, Gao Z, Zhang D (2013) Research on multi-fidelity aerodynamic optimization methods. Chin J Aeronaut 26(2):279–286. doi:10.1016/j.cja.2013.02.004

Keane AJ (2003) Wing optimization using design of experiment, response surface, and data fusion methods. J Aircr 40:741–750. doi:10.2514/2.3153

Keane AJ, Nair PB (2005) Computational approaches for aerospace design: the pursuit of excellence. Wiley

Kennedy MC, O’Hagan A (2000) Predicting the output from a complex computer code when fast approximations are available. Biometrika 87(1):1–13. doi:10.1093/biomet/87.1.1

Koziel S, Leifsson L (2012) Surrogate-based aerodynamic shape optimization by variable-resolution models. AIAA J 51(1):94–106. doi:10.2514/1.J051583

Kuya Y, Takeda K, Zhang X, Forrester AIJ (2011) Multifidelity surrogate modeling of experimental and computational aerodynamic data sets. AIAA J 49(2):289–298. doi:10.2514/1.J050384

Leifsson L, Koziel S (2010) Multi-fidelity design optimization of transonic airfoils using physics-based surrogate modeling and shape-preserving response prediction. J Comput Sci 1(2):98–106. doi:10.1016/j.jocs.2010.03.007

Leifsson L, Koziel S (2015) Surrogate modelling and optimization using shape-preserving response prediction: a review

López Peña F, Díaz Casás V, Gosset A, Duro RJ (2012) A surrogate method based on the enhancement of low fidelity computational fluid dynamics approximations by artificial neural networks. Comput Fluids 58:112–119. doi:10.1016/j.compfluid.2012.01.008

Iuliano E, Quagliarella D (2013) Proper orthogonal decomposition, surrogate modelling and evolutionary optimization in aerodynamic design. Comput Fluids 84:327–350. doi:10.1016/j.compfluid.2013.06.007

Lumley JL (1967) The structure of inhomogeneous turbulent flows. In: Yaglom AM, Tatarski VI (eds) Atmospheric turbulence and radio wave propagation. Nauka, pp 166–178

March A, Willcox K (2012a) Constrained multifidelity optimization using model calibration. Struct Multidiscip Optim 46(1):93–109. doi:10.1007/s00158-011-0749-1

March A, Willcox K (2012b) Provably convergent multifidelity optimization algorithm not requiring high-fidelity derivatives. AIAA J 50(5):1079–1089. doi:10.2514/1.J051125

Quarteroni A, Rozza G (2014) Reduced order methods for modeling and computational reduction. Vol. 9 of MS&A - modeling, simulation and applications. Springer

Raghavan B, Breitkopf P (2013) Asynchronous evolutionary shape optimization based on high-quality surrogates: application to an air-conditioning duct. Eng Comput 29(4):467–476. doi:10.1007/s00366-012-0263-0

Romero VJ, Burkardt JV, Gunzburger MD, Peterson JS (2006) Comparison of pure and “latinized” centroidal Voronoi tessellation against various other statistical sampling methods. Reliab Eng Syst Saf 91(10–11):1266–1280. The Fourth International Conference on Sensitivity Analysis of Model Output (SAMO 2004). doi:10.1016/j.ress.2005.11.023

Saka Y, Gunzburger M, Burkardt J (2007) Latinized, improved LHS, and CVT point sets in hypercubes. Int J Numer Anal Model 4(3–4):729–743

Sirovich L (1987) Turbulence and the dynamics of coherent structures, part I: coherent structures. Q Appl Math 45(3):561–571

Spalart PR, Allmaras SR (1992) A one-equation turbulence model for aerodynamic flows. In: Proceedings 30th aerospace sciences meeting & exhibit. AIAA. doi:10.2514/6.1992-439

Toal DJ (2014) On the potential of a multi-fidelity G-POD based approach for optimization & uncertainty quantification. In: ASME Turbo Expo 2014: turbine technical conference and exposition, vol 2B. doi:10.1115/GT2014-25184

Toal DJ, Keane AJ (2011) Efficient multipoint aerodynamic design optimization via cokriging. J Aircr 48(5):1685–1695. doi:10.2514/1.C031342

Tromme E, Brüls O, Emonds-Alt J, Bruyneel M, Virlez G, Duysinx P (2013) Discussion on the optimization problem formulation of flexible components in multibody systems. Struct Multidiscip Optim 48(6):1189–1206. doi:10.1007/s00158-013-0952-3

Xiao M, Breitkopf P, Coelho RF, Knopf-Lenoir C, Sidorkiewicz M, Villon P (2010) Model reduction by CPOD and kriging. Struct Multidiscip Optim 41(4):555–574. doi:10.1007/s00158-009-0434-9

Xiao M, Breitkopf P, Coelho RF, Knopf-Lenoir C, Villon P, Zhang W (2013) Constrained proper orthogonal decomposition based on QR-factorization for aerodynamical shape optimization. Appl Math Comput 223(0):254–263. doi:10.1016/j.amc.2013.07.086

Xiao M, Breitkopf P, Coelho RF, Villon P, Zhang W (2014) Proper orthogonal decomposition with high number of linear constraints for aerodynamical shape optimization. Appl Math Comput 247(0):1096–1112. doi:10.1016/j.amc.2014.09.068

Acknowledgments

The present work was partly funded by the Association Nationale de la Recherche et Technologie. The authors would like to thank Snecma from the SAFRAN Group for their support and permission to publish this study and especially Dr. Mickaël Meunier and Ir. Jean Coussirou for their technical support in this research project. Last but not least, the authors would like to acknowledge the three anonymous reviewers whose comments helped improve and clarify this manuscript.

Cite this article

Benamara, T., Breitkopf, P., Lepot, I. et al. Adaptive infill sampling criterion for multi-fidelity optimization based on Gappy-POD. Struct Multidisc Optim 54, 843–855 (2016). https://doi.org/10.1007/s00158-016-1440-3

DOI: https://doi.org/10.1007/s00158-016-1440-3