Abstract

Sine signals are widely used in signal processing, communication technology, system performance analysis and system identification. By means of the Fourier expansion, many periodic signals can be transformed into a sum of harmonic sine signals. This paper studies the parameter estimation problem for sine combination signals and periodic signals. For online parameter estimation, a stochastic gradient algorithm is derived according to the gradient optimization principle. On this basis, a multi-innovation stochastic gradient parameter estimation method is presented to improve the estimation accuracy. It works by expanding the scalar innovation into an innovation vector. Moreover, to enhance the stability of the parameter estimation, a recursive least squares algorithm is derived by means of the trigonometric function expansion. Finally, simulation examples are provided to illustrate and compare the performance of the proposed approaches.


1 Introduction

A sine signal is a single-frequency wave. It is widely used in communication technology, signal processing and filtering [25] and system identification [2, 37]. Under certain conditions, many periodic signals can be decomposed into combinations of sine waves with different frequencies, amplitudes and phases. Signal modeling is the estimation of the characteristic parameters of a signal from measured data. Sine-wave parameter estimation has received much attention. For example:

- Belega et al. [3] studied the accuracy of sine-wave parameter estimation by means of the windowed three-parameter sine-fitting algorithm.
- Chen et al. [5] studied a multi-harmonic fitting algorithm based on four-parameter sine fitting to improve global convergence.
- Li et al. [19] derived a gradient-based iterative identification algorithm for estimating the parameters of signal models with known and unknown frequencies.

Some of these methods are based on statistical analysis. This paper considers optimization algorithms for estimating the signal parameters.

A mathematical model is the basis of controller design [36, 39]. Many identification algorithms can estimate system parameters and system models [15, 26]. In general, an identification algorithm is derived by defining and minimizing a cost function [27–32, 35]. The parameters to be estimated are collected in a parameter vector, and the parameter estimates are then obtained by means of an optimization algorithm. Identification algorithms have been widely used in industrial robotics, signal processing and network communication [8, 41]. For example, Guo et al. [14] investigated a recursive identification method for finite impulse response systems with binary-valued outputs and communication channels. Janot et al. [16] proposed a revised Durbin–Wu–Hausman test for industrial robot identification. Zhao et al. [43] studied a multi-frequency identification algorithm to identify the amplitude and phase of a multi-frequency signal. This paper applies identification algorithms to signal modeling.

In system identification, some algorithms focus on reducing the computational load and enhancing the accuracy [40]. The gradient optimization method requires only first-order derivatives, so gradient identification algorithms have a low computational load [10]. However, their estimation accuracy is relatively low, and many improved gradient algorithms have been proposed to raise it:

- Andrei [1] presented an adaptive conjugate gradient algorithm for large-scale unconstrained optimization. It minimizes the quadratic approximation of the objective function at the current point.
- Deng et al. [7] developed a three-term conjugate gradient algorithm for large-scale unconstrained optimization problems. It rectifies the steepest descent direction using the difference between the current iterative points and the gradients.
- Necoara et al. [24] devised a fully distributed dual gradient method based on a weighted step size and analyzed its convergence rate.

Although these improved algorithms can increase the convergence rate and the estimation accuracy, their computational load is heavy.

The innovation is useful information that can improve the parameter estimation accuracy and promote the convergence of an algorithm during the recursive process. Multi-innovation theory, which enhances the estimation accuracy by using more innovations, is widely used in system identification. Mao et al. [23] studied a data filtering-based multi-innovation stochastic gradient algorithm for Hammerstein nonlinear systems. Zhang et al. [42] considered a multi-innovation auto-constructed least squares identification method for 4-degree-of-freedom (4-DOF) ship maneuvering modeling. In this paper, the multi-innovation method is extended to the modeling of sine-wave and periodic signals.

In general, identification methods are divided into online and off-line methods. Iterative identification methods are used for off-line identification [12, 38]. For online identification:

- Li et al. [22] studied a parallel adaptive self-tuning recursive least squares algorithm for time-varying systems.
- Ding et al. [11] developed a recursive least squares parameter identification algorithm for a class of Wiener nonlinear systems.
- Ding et al. [13] studied a recursive least squares parameter estimation method for a class of output nonlinear systems based on model decomposition.
- Ding et al. [9] presented a least squares algorithm for a dual-rate state space system with time delay.

In view of the advantages of the least squares algorithm, a recursive least squares parameter estimation algorithm is derived here to estimate the parameters of periodic signals.

The major contributions of this paper are as follows.

-

This paper studies the parameter estimation problem for signal models. The proposed methods apply not only to sine combination signals but also to other periodic signals, and they can be used in signal processing and signal modeling.

-

On the basis of gradient search, a stochastic gradient (SG) parameter estimation algorithm is presented. To improve the estimation accuracy and the convergence rate, a multi-innovation stochastic gradient (MISG) parameter estimation algorithm is then presented by expanding the scalar innovation into an innovation vector.

-

To enhance the algorithm stability and the parameter estimation accuracy, a recursive least squares (RLS) algorithm is derived using the trigonometric function expansion. This technique transforms the nonlinear optimization problem into a linear one, which significantly improves the stability of the algorithm.

The rest of this paper is organized as follows. Section 2 derives the SG method. Section 3 deduces the MISG algorithm. Section 4 gives the RLS parameter estimation algorithm. Section 5 provides examples to illustrate and compare the effectiveness of the proposed methods. Section 6 offers some concluding remarks.

2 Stochastic Gradient Method

Let us introduce some notation.

| Symbol | Meaning |
|---|---|
| \({\varvec{X}}^{\tiny \text{ T }}\) | The transpose of the vector or matrix \({\varvec{X}}\) |
| \(\hat{{\varvec{\theta }}}(k)\) | The estimate of \({\varvec{\theta }}\) at recursion k |
| \(A=:X\) | I.e., \(X:=A\); X is defined by A |
| \(\text {tr}[{\varvec{X}}]\) | The trace of the square matrix \({\varvec{X}}\) |
| \(\Vert {\varvec{X}}\Vert \) | The norm defined by \(\Vert {\varvec{X}}\Vert ^2:=\text {tr}[{\varvec{X}}{\varvec{X}}^{\tiny \text{ T }}]\) |
| e(k) | The scalar innovation at recursion k |
| \({\varvec{E}}(p,k)\) | The innovation vector, i.e., the multi-innovation at recursion k |
| \({\varvec{\varphi }}(k)\) | The information vector at recursion k |
| \({\varvec{\varPhi }}(p,k)\) | The information matrix at recursion k |

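Consider the combination of sine signals with different frequencies, phases and amplitudes:

\[ y(t)=a_1\sin (\omega _1t+\phi _1)+a_2\sin (\omega _2t+\phi _2)+\cdots +a_n\sin (\omega _nt+\phi _n)=\sum _{i=1}^{n}a_i\sin (\omega _it+\phi _i), \tag{1} \]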

where \(a_1\), \(a_2\), \(\ldots \), \(a_n\) are the amplitudes, \(\omega _1\), \(\omega _2\), \(\ldots \), \(\omega _n\) are the frequencies and \(\phi _1\), \(\phi _2\), \(\ldots \), \(\phi _n\) are the phases. These are the characteristic parameters of the combination sine signal. The goal is to estimate them from measured data by means of new identification methods.
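To make the signal model (1) concrete, here is a minimal Python sketch that evaluates it and generates sampled, noisy data \(\{t_k,y(k)\}\). The function names `sine_combination` and `sample_signal` are hypothetical, and the use of Gaussian white noise from Python's `random` module is an assumption for illustration. The parameter values are those of Example 1 in Section 5.

```python
import math
import random


def sine_combination(t, amps, omegas, phases):
    """Evaluate y(t) = sum_i a_i * sin(omega_i * t + phi_i) at time t."""
    return sum(a * math.sin(w * t + p) for a, w, p in zip(amps, omegas, phases))


def sample_signal(amps, omegas, phases, h, n_samples, noise_std=0.0, seed=0):
    """Return sampling times t_k = k*h (k = 0..n_samples-1) and noisy samples y(k)."""
    rng = random.Random(seed)  # seeded so that runs are reproducible
    ts = [k * h for k in range(n_samples)]
    ys = [sine_combination(t, amps, omegas, phases) + rng.gauss(0.0, noise_std)
          for t in ts]
    return ts, ys


# Example 1 settings: true amplitudes/phases, known frequencies, noise std 0.20, h = 0.2 s
amps = [1.8, 2.9, 4.0, 2.5]
phases = [0.95, 0.8, 0.76, 1.1]
omegas = [0.07, 0.5, 2.0, 1.6]
ts, ys = sample_signal(amps, omegas, phases, h=0.2, n_samples=2000, noise_std=0.20)
```

With `noise_std=0.0` the samples are exact values of (1), which is convenient for checking an estimator before adding noise.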

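Suppose that the frequencies of the combination sine signal are known, so that only the amplitudes and phases are to be identified. Define the parameter vector

\[ {\varvec{\theta }}:=[a_1,a_2,\ldots ,a_n,\phi _1,\phi _2,\ldots ,\phi _n]^{\tiny \text{ T }}\in {\mathbb R}^{2n}. \]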

In the identification test, assume that the sampling period is h and the sampling time is \(t_k:=kh\). The measured data are \(\{t_k,y(t_k)\}\); let \(y(k):=y(t_k)\) for simplicity. Define the difference between the observed output and the model output:

\[ e(k):=y(k)-\sum _{i=1}^{n}a_i\sin (\omega _it_k+\phi _i). \]

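Then, define the cost function

\[ J({\varvec{\theta }}):=\frac{1}{2}e^2(k)=\frac{1}{2}\Big [y(k)-\sum _{i=1}^{n}a_i\sin (\omega _it_k+\phi _i)\Big ]^2. \]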

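Taking the first-order derivative of \(J({\varvec{\theta }})\) with respect to \({\varvec{\theta }}\) gives

\[ \frac{\partial J({\varvec{\theta }})}{\partial {\varvec{\theta }}}=-\big [\sin (\omega _1t_k+\phi _1),\ldots ,\sin (\omega _nt_k+\phi _n),\,a_1\cos (\omega _1t_k+\phi _1),\ldots ,a_n\cos (\omega _nt_k+\phi _n)\big ]^{\tiny \text{ T }}e(k). \]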

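Define the information vector

\[ {\varvec{\varphi }}(k):=\big [\sin (\omega _1t_k+\phi _1),\ldots ,\sin (\omega _nt_k+\phi _n),\,a_1\cos (\omega _1t_k+\phi _1),\ldots ,a_n\cos (\omega _nt_k+\phi _n)\big ]^{\tiny \text{ T }}\in {\mathbb R}^{2n}, \]

so that \(\partial J({\varvec{\theta }})/\partial {\varvec{\theta }}=-{\varvec{\varphi }}(k)e(k)\).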

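Let k be the recursive variable and let \(\hat{{\varvec{\theta }}}(k)\) be the estimate of \({\varvec{\theta }}\) at recursion k. Since \({\varvec{\varphi }}(k)\) contains the unknown parameters \(a_i\) and \(\phi _i\), they are replaced by their estimates \(\hat{a}_i(k)\) and \(\hat{\phi }_i(k)\). Applying gradient search to minimize the cost function \(J({\varvec{\theta }})\) gives the SG parameter estimation algorithm:

\[ \hat{{\varvec{\theta }}}(k+1)=\hat{{\varvec{\theta }}}(k)+\frac{\hat{{\varvec{\varphi }}}(k)}{r(k+1)}e(k), \tag{2} \]

\[ e(k)=y(k)-\sum _{i=1}^{n}\hat{a}_i(k)\sin (\omega _it_k+\hat{\phi }_i(k)), \tag{3} \]

\[ \hat{{\varvec{\varphi }}}(k)=\big [\sin (\omega _1t_k+\hat{\phi }_1(k)),\ldots ,\sin (\omega _nt_k+\hat{\phi }_n(k)),\,\hat{a}_1(k)\cos (\omega _1t_k+\hat{\phi }_1(k)),\ldots ,\hat{a}_n(k)\cos (\omega _nt_k+\hat{\phi }_n(k))\big ]^{\tiny \text{ T }}, \tag{4} \]

\[ r(k+1)=r(k)+\Vert \hat{{\varvec{\varphi }}}(k)\Vert ^2,\quad r(0)=1, \tag{5} \]

\[ \hat{{\varvec{\theta }}}(k)=[\hat{a}_1(k),\ldots ,\hat{a}_n(k),\hat{\phi }_1(k),\ldots ,\hat{\phi }_n(k)]^{\tiny \text{ T }}. \]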

The steps of computing the parameter estimate \(\hat{{\varvec{\theta }}}(k+1)\) using the SG method are as follows.

-

1.

To initialize: let \(k=0\), preset the recursive length L and let \(\hat{{\varvec{\theta }}}(0)\) be an arbitrary small real vector.

-

2.

Collect the measured data y(k).

-

3.

Compute \(\hat{{\varvec{\varphi }}}(k)\) using (4) and compute \(r(k+1)\) using (5).

-

4.

Compute e(k) using (3).

-

5.

Update \(\hat{{\varvec{\theta }}}(k+1)\) using (2). If \(k=L\), terminate the recursive procedure; otherwise, set \(k:=k+1\) and go to Step 2.
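The five steps above can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation. The function name `sg_estimate` is hypothetical, and the assumptions r(0) = 1 and a step size of 1/r(k+1) are ours. At each sample the sketch builds the estimated information vector from the current estimates, computes the scalar innovation e(k), and takes one gradient step.

```python
import math


def sg_estimate(ts, ys, omegas, theta0):
    """SG estimate of theta = [a_1..a_n, phi_1..phi_n] with known frequencies.

    Sketch of Steps 1-5; r(0) = 1 is an assumed initial value.
    """
    n = len(omegas)
    theta = list(theta0)
    r = 1.0
    for t, y in zip(ts, ys):
        amps, phases = theta[:n], theta[n:]
        args = [w * t + p for w, p in zip(omegas, phases)]
        # estimated information vector: gradient of the model output w.r.t. theta
        phi_hat = [math.sin(x) for x in args] + [a * math.cos(x) for a, x in zip(amps, args)]
        # scalar innovation e(k), computed with the current estimates
        e = y - sum(a * math.sin(x) for a, x in zip(amps, args))
        r += sum(v * v for v in phi_hat)  # r(k+1) = r(k) + ||phi_hat(k)||^2
        theta = [th + v * e / r for th, v in zip(theta, phi_hat)]  # gradient step
    return theta
```

If the initial vector already equals the true parameters and the data are noise-free, every innovation is zero and the estimate stays put. This makes a quick sanity check for an implementation.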

3 The Multi-innovation Stochastic Gradient Algorithm

The SG algorithm has relatively low estimation accuracy. To improve it, more measured data are used at each recursion. The MISG algorithm is derived using a dynamic data window, a batch of data of length p. In the SG algorithm, e(k) is called the scalar innovation. Expanding it into an innovation vector lets each recursion use more innovations, which can improve the estimation accuracy.

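Consider the dynamic window data of length p, \(y(k), y(k-1), \ldots , y(k-p+1)\). Expand the scalar innovation e(k) into the innovation vector

\[ {\varvec{E}}(p,k):=[e(k),e(k-1),\ldots ,e(k-p+1)]^{\tiny \text{ T }}\in {\mathbb R}^{p},\qquad e(k-i):=y(k-i)-\sum _{j=1}^{n}\hat{a}_j(k)\sin (\omega _jt_{k-i}+\hat{\phi }_j(k)),\quad i=0,1,\ldots ,p-1, \]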

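where \(\hat{a}_j(k)\) and \(\hat{\phi }_j(k)\) denote the estimates of \(a_j\) and \(\phi _j\) at recursion k, i.e., at time \(t=kh\). Expand the information vector into the information matrix

\[ {\varvec{\varPhi }}(p,k):=[\hat{{\varvec{\varphi }}}(k),\hat{{\varvec{\varphi }}}(k-1),\ldots ,\hat{{\varvec{\varphi }}}(k-p+1)]\in {\mathbb R}^{2n\times p}. \]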

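Applying gradient search, the MISG parameter estimation algorithm is as follows:

\[ \hat{{\varvec{\theta }}}(k+1)=\hat{{\varvec{\theta }}}(k)+\frac{{\varvec{\varPhi }}(p,k)}{r(k+1)}{\varvec{E}}(p,k), \tag{6} \]

\[ {\varvec{E}}(p,k)=[e(k),e(k-1),\ldots ,e(k-p+1)]^{\tiny \text{ T }}, \tag{7} \]

\[ e(k-i)=y(k-i)-\sum _{j=1}^{n}\hat{a}_j(k)\sin (\omega _jt_{k-i}+\hat{\phi }_j(k)),\quad i=0,1,\ldots ,p-1, \tag{8} \]

\[ {\varvec{\varPhi }}(p,k)=[\hat{{\varvec{\varphi }}}(k),\hat{{\varvec{\varphi }}}(k-1),\ldots ,\hat{{\varvec{\varphi }}}(k-p+1)], \tag{9} \]

\[ \hat{{\varvec{\varphi }}}(k-i)=\big [\sin (\omega _1t_{k-i}+\hat{\phi }_1(k)),\ldots ,\sin (\omega _nt_{k-i}+\hat{\phi }_n(k)),\,\hat{a}_1(k)\cos (\omega _1t_{k-i}+\hat{\phi }_1(k)),\ldots ,\hat{a}_n(k)\cos (\omega _nt_{k-i}+\hat{\phi }_n(k))\big ]^{\tiny \text{ T }}, \tag{10} \]

\[ r(k+1)=r(k)+\Vert \hat{{\varvec{\varphi }}}(k)\Vert ^2,\quad r(0)=1. \tag{11} \]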

The steps of computing the parameter estimate \(\hat{{\varvec{\theta }}}(k+1)\) using the MISG method are as follows.

-

1.

To initialize: let \(k=0\), preset the recursive length L and the innovation length p, and let \(\hat{{\varvec{\theta }}}(0)\) be an arbitrary small real vector.

-

2.

Collect measured data y(k); compute \(\hat{{\varvec{\varphi }}}(k-i)\) using (10); form \({\varvec{\varPhi }}(p,k)\) using (9).

-

3.

Compute \(e(k-i)\) using (8) and form \({\varvec{E}}(p,k)\) using (7).

-

4.

Compute \(r(k+1)\) using (11).

-

5.

Update the parameter estimate \(\hat{{\varvec{\theta }}}(k+1)\) using (6). If \(k=L\), terminate the recursive procedure; otherwise, set \(k:=k+1\) and go to Step 2.
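A minimal Python sketch of the MISG steps follows. It evaluates the p most recent samples at the current estimate \(\hat{{\varvec{\theta }}}(k)\), stacks their innovations and information columns, and takes one step along \({\varvec{\varPhi }}(p,k){\varvec{E}}(p,k)/r(k+1)\). Several choices here are our own assumptions, not the source's:

- The function name `misg_estimate` is hypothetical.
- r(0) = 1, and r is increased by the squared norm of the newest column only.
- For the first p-1 recursions the window is simply shortened.

With p = 1 the sketch reduces to the SG recursion.

```python
import math


def misg_estimate(ts, ys, omegas, theta0, p):
    """MISG estimate of theta = [a_1..a_n, phi_1..phi_n] with innovation length p.

    Sketch only: r(0) = 1 and r(k+1) = r(k) + ||phi_hat(k)||^2 are assumed, and
    the data window is shortened for the first p-1 recursions.
    """
    n = len(omegas)
    theta = list(theta0)
    r = 1.0
    for k in range(len(ts)):
        amps, phases = theta[:n], theta[n:]
        columns, innovations = [], []
        # dynamic window y(k), y(k-1), ..., y(k-p+1), all evaluated at theta_hat(k)
        for i in range(min(p, k + 1)):
            t, y = ts[k - i], ys[k - i]
            args = [w * t + ph for w, ph in zip(omegas, phases)]
            columns.append([math.sin(x) for x in args]
                           + [a * math.cos(x) for a, x in zip(amps, args)])
            innovations.append(y - sum(a * math.sin(x) for a, x in zip(amps, args)))
        r += sum(v * v for v in columns[0])  # newest column phi_hat(k)
        # Phi(p,k) E(p,k): information columns weighted by their innovations
        step = [sum(col[j] * e for col, e in zip(columns, innovations)) for j in range(2 * n)]
        theta = [th + s / r for th, s in zip(theta, step)]
    return theta
```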

4 The Recursive Least Squares Algorithm

The least squares optimization method is widely used in system identification, and this paper extends it to signal modeling. The sine combination signal in (1) is a nonlinear function of the parameters to be estimated. To derive a recursive least squares algorithm for estimating its parameters, we first expand each term of (1):

\[ y(t)=\sum _{i=1}^{n}a_i\sin (\omega _it+\phi _i)=\sum _{i=1}^{n}\left[ a_i\cos \phi _i\sin \omega _it+a_i\sin \phi _i\cos \omega _it\right] . \]

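Let \(c_i:=a_i\cos \phi _i\) and \(d_i:=a_i\sin \phi _i\). Then y(t) can be expressed as

\[ y(t)=\sum _{i=1}^{n}\left[ c_i\sin \omega _it+d_i\cos \omega _it\right] , \]

which is linear in the new parameters \(c_i\) and \(d_i\).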

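Define the parameter vector

\[ {\varvec{\theta }}:=[c_1,c_2,\ldots ,c_n,d_1,d_2,\ldots ,d_n]^{\tiny \text{ T }}\in {\mathbb R}^{2n}. \]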

In the identification test, the sampling time is \(t_k:=kh\) and the measured data length is L. The observation output data are \(y(k):=y(kh)\), \(k=1,2,\ldots \).
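The substitution \(c_i:=a_i\cos \phi _i\), \(d_i:=a_i\sin \phi _i\) rests on the identity \(\sin (\omega t+\phi )=\sin \omega t\cos \phi +\cos \omega t\sin \phi \). The short Python check below compares the linear form with (1) at a few time instants, using the parameter values of Example 1. The helper names `to_linear_params` and `linear_form` are hypothetical.

```python
import math


def to_linear_params(amps, phases):
    """Map (a_i, phi_i) to c_i = a_i*cos(phi_i) and d_i = a_i*sin(phi_i)."""
    c = [a * math.cos(p) for a, p in zip(amps, phases)]
    d = [a * math.sin(p) for a, p in zip(amps, phases)]
    return c, d


def linear_form(t, c, d, omegas):
    """Evaluate sum_i [c_i sin(omega_i t) + d_i cos(omega_i t)], which is linear in c and d."""
    return sum(ci * math.sin(w * t) + di * math.cos(w * t) for ci, di, w in zip(c, d, omegas))


amps = [1.8, 2.9, 4.0, 2.5]
phases = [0.95, 0.8, 0.76, 1.1]
omegas = [0.07, 0.5, 2.0, 1.6]
c, d = to_linear_params(amps, phases)
```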

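Define the information vector

\[ {\varvec{\varphi }}(k):=[\sin \omega _1t_k,\ldots ,\sin \omega _nt_k,\cos \omega _1t_k,\ldots ,\cos \omega _nt_k]^{\tiny \text{ T }}\in {\mathbb R}^{2n}, \]

so that \(y(k)={\varvec{\varphi }}^{\tiny \text{ T }}(k){\varvec{\theta }}\) for a noise-free signal.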

Define the criterion function

\[ J({\varvec{\theta }}):=\sum _{j=1}^{k}e^2(j), \]

where \(e(j):=y(j)-{\varvec{\varphi }}^{\tiny \text{ T }}(j){\varvec{\theta }}\).
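Minimizing this criterion is a linear least squares problem. Its minimizer satisfies the normal equations \(\big [\sum _{j=1}^{k}{\varvec{\varphi }}(j){\varvec{\varphi }}^{\tiny \text{ T }}(j)\big ]{\varvec{\theta }}=\sum _{j=1}^{k}{\varvec{\varphi }}(j)y(j)\). The Python sketch below forms and solves these equations in batch, which is the computation the recursive algorithm is designed to avoid. It assumes the sum matrix is nonsingular, i.e., that the frequencies are distinct and sufficiently excited. The names `info_vector`, `solve` and `batch_ls` are hypothetical, and the solver is plain Gaussian elimination with partial pivoting.

```python
import math


def info_vector(t, omegas):
    """phi(t) = [sin(w_1 t), ..., sin(w_n t), cos(w_1 t), ..., cos(w_n t)]."""
    return [math.sin(w * t) for w in omegas] + [math.cos(w * t) for w in omegas]


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    m = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]  # augmented matrix [A | b]
    for col in range(m):
        piv = max(range(col, m), key=lambda row: abs(M[row][col]))
        M[col], M[piv] = M[piv], M[col]
        for row in range(col + 1, m):
            f = M[row][col] / M[col][col]
            for c in range(col, m + 1):
                M[row][c] -= f * M[col][c]
    x = [0.0] * m
    for row in range(m - 1, -1, -1):  # back substitution
        x[row] = (M[row][m] - sum(M[row][c] * x[c] for c in range(row + 1, m))) / M[row][row]
    return x


def batch_ls(ts, ys, omegas):
    """Least squares estimate of theta = [c_1..c_n, d_1..d_n] from all samples at once."""
    q = 2 * len(omegas)
    A = [[0.0] * q for _ in range(q)]  # sum_j phi(j) phi(j)^T
    b = [0.0] * q                      # sum_j phi(j) y(j)
    for t, y in zip(ts, ys):
        v = info_vector(t, omegas)
        for i in range(q):
            b[i] += v[i] * y
            for j in range(q):
                A[i][j] += v[i] * v[j]
    return solve(A, b)
```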

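Define the stacked output vector \({\varvec{Y}}_k\) and the stacked information matrix \({\varvec{\varPhi }}_k\), respectively, as

\[ {\varvec{Y}}_k:=[y(1),y(2),\ldots ,y(k)]^{\tiny \text{ T }}\in {\mathbb R}^{k},\qquad {\varvec{\varPhi }}_k:=[{\varvec{\varphi }}(1),{\varvec{\varphi }}(2),\ldots ,{\varvec{\varphi }}(k)]^{\tiny \text{ T }}\in {\mathbb R}^{k\times 2n}. \]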

Then, the criterion function can be rewritten as

\[ J({\varvec{\theta }})=\Vert {\varvec{Y}}_k-{\varvec{\varPhi }}_k{\varvec{\theta }}\Vert ^2. \]

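Minimizing the criterion function \(J({\varvec{\theta }})\) gives the least squares parameter estimate

\[ \hat{{\varvec{\theta }}}(k)=({\varvec{\varPhi }}_k^{\tiny \text{ T }}{\varvec{\varPhi }}_k)^{-1}{\varvec{\varPhi }}_k^{\tiny \text{ T }}{\varvec{Y}}_k. \tag{13} \]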

The least squares estimate \(\hat{\varvec{\theta }}(k)\) requires the inverse matrix \(({\varvec{\varPhi }}_k^{\tiny \text{ T }}{\varvec{\varPhi }}_k)^{-1}\). To avoid computing it, this paper develops an RLS algorithm that estimates the parameters of the combination sine signal online. Define the matrix

\[ {\varvec{P}}^{-1}(k):={\varvec{\varPhi }}_k^{\tiny \text{ T }}{\varvec{\varPhi }}_k\in {\mathbb R}^{2n\times 2n}. \tag{14} \]

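Rewriting (14), we have

\[ {\varvec{P}}^{-1}(k)=\sum _{j=1}^{k}{\varvec{\varphi }}(j){\varvec{\varphi }}^{\tiny \text{ T }}(j). \tag{15} \]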

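Furthermore, Eq. (15) can be written recursively as

\[ {\varvec{P}}^{-1}(k)={\varvec{P}}^{-1}(k-1)+{\varvec{\varphi }}(k){\varvec{\varphi }}^{\tiny \text{ T }}(k), \]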

where the recursion is initialized with \({\varvec{P}}(0)=p_0{\varvec{I}}>0\), \(p_0=10^6\). In other words, \({\varvec{P}}^{-1}(0)={\varvec{I}}/p_0\) is small.

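Because \({\varvec{Y}}_k:=\left[ \begin{array}{l} {\varvec{Y}}_{k-1} \\ y(k) \end{array} \right] \in {\mathbb R}^{k}\), \({\varvec{\varPhi }}_k:=\left[ \begin{array}{l} {\varvec{\varPhi }}_{k-1} \\ {\varvec{\varphi }}^{\tiny \text{ T }}(k) \end{array} \right] \in {\mathbb R}^{k\times {2n}}\), and \({\varvec{\varPhi }}_{k-1}^{\tiny \text{ T }}{\varvec{Y}}_{k-1}={\varvec{P}}^{-1}(k-1)\hat{{\varvec{\theta }}}(k-1)\) by (13) and (14), Eq. (13) can be written as

\[ \begin{aligned} \hat{{\varvec{\theta }}}(k)&={\varvec{P}}(k){\varvec{\varPhi }}_k^{\tiny \text{ T }}{\varvec{Y}}_k={\varvec{P}}(k)\big [{\varvec{\varPhi }}_{k-1}^{\tiny \text{ T }}{\varvec{Y}}_{k-1}+{\varvec{\varphi }}(k)y(k)\big ]\\ &={\varvec{P}}(k)\big [{\varvec{P}}^{-1}(k)-{\varvec{\varphi }}(k){\varvec{\varphi }}^{\tiny \text{ T }}(k)\big ]\hat{{\varvec{\theta }}}(k-1)+{\varvec{P}}(k){\varvec{\varphi }}(k)y(k)\\ &=\hat{{\varvec{\theta }}}(k-1)+{\varvec{P}}(k){\varvec{\varphi }}(k)\big [y(k)-{\varvec{\varphi }}^{\tiny \text{ T }}(k)\hat{{\varvec{\theta }}}(k-1)\big ]. \end{aligned} \]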

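To avoid inverting \({\varvec{P}}^{-1}(k)\), we apply the matrix inversion lemma \(({\varvec{A}}+{\varvec{B}}{\varvec{C}})^{-1}={\varvec{A}}^{-1}-{\varvec{A}}^{-1}{\varvec{B}}({\varvec{I}}+{\varvec{C}}{\varvec{A}}^{-1}{\varvec{B}})^{-1}{\varvec{C}}{\varvec{A}}^{-1}\) to \({\varvec{P}}^{-1}(k)={\varvec{P}}^{-1}(k-1)+{\varvec{\varphi }}(k){\varvec{\varphi }}^{\tiny \text{ T }}(k)\). Taking \({\varvec{A}}={\varvec{P}}^{-1}(k-1)\), \({\varvec{B}}={\varvec{\varphi }}(k)\) and \({\varvec{C}}={\varvec{\varphi }}^{\tiny \text{ T }}(k)\), we obtain

\[ {\varvec{P}}(k)={\varvec{P}}(k-1)-\frac{{\varvec{P}}(k-1){\varvec{\varphi }}(k){\varvec{\varphi }}^{\tiny \text{ T }}(k){\varvec{P}}(k-1)}{1+{\varvec{\varphi }}^{\tiny \text{ T }}(k){\varvec{P}}(k-1){\varvec{\varphi }}(k)}. \tag{16} \]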

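Introducing the gain vector \({\varvec{L}}(k):={\varvec{P}}(k){\varvec{\varphi }}(k)\) and post-multiplying both sides of (16) by \({\varvec{\varphi }}(k)\), we have

\[ {\varvec{L}}(k)={\varvec{P}}(k){\varvec{\varphi }}(k)=\frac{{\varvec{P}}(k-1){\varvec{\varphi }}(k)}{1+{\varvec{\varphi }}^{\tiny \text{ T }}(k){\varvec{P}}(k-1){\varvec{\varphi }}(k)}. \]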

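From the above analysis, we obtain

\[ \hat{{\varvec{\theta }}}(k)=\hat{{\varvec{\theta }}}(k-1)+{\varvec{L}}(k)\big [y(k)-{\varvec{\varphi }}^{\tiny \text{ T }}(k)\hat{{\varvec{\theta }}}(k-1)\big ]. \]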

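However, the entries of the parameter vector \({\varvec{\theta }}\) are not the characteristic parameters of the combination sine signal. From the definitions \(c_i:=a_i\cos \phi _i\) and \(d_i:=a_i\sin \phi _i\), we have \(c_i^2+d_i^2=a_i^2\) and \(d_i/c_i=\tan \phi _i\). The estimates of the characteristic parameters \(a_i\) and \(\phi _i\) are therefore computed by

\[ \hat{a}_i(k)=\sqrt{\hat{c}_i^2(k)+\hat{d}_i^2(k)},\qquad \hat{\phi }_i(k)=\arctan \frac{\hat{d}_i(k)}{\hat{c}_i(k)},\quad i=1,2,\ldots ,n. \]

These formulas assume \(a_i>0\) and \(|\phi _i|<\pi /2\); otherwise the four-quadrant arctangent of \((\hat{d}_i(k),\hat{c}_i(k))\) should be used.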

Let \(\hat{{\varvec{\theta }}}_r(k):=[\hat{a}_1(k),\ldots ,\hat{a}_n(k),\hat{\phi }_1(k),\ldots ,\hat{\phi }_n(k)]^{\tiny \text{ T }}\in {\mathbb R}^{2n}\) denote the estimates of the characteristic parameters of the combination sine signal.
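The matrix-inversion step that yields (16), and the two expressions for the gain \({\varvec{L}}(k)\), can be checked numerically. The Python sketch below performs one covariance update. It assumes \({\varvec{P}}(k-1)\) is symmetric, so that \({\varvec{P}}(k-1){\varvec{\varphi }}{\varvec{\varphi }}^{\tiny \text{ T }}{\varvec{P}}(k-1)=({\varvec{P}}{\varvec{\varphi }})({\varvec{P}}{\varvec{\varphi }})^{\tiny \text{ T }}\). The function name `rls_covariance_update` is hypothetical.

```python
def rls_covariance_update(P_prev, phi):
    """Return P(k) from P(k-1) via the matrix inversion lemma, plus the gain L(k).

    Assumes P(k-1) is symmetric, so P(k-1) phi phi^T P(k-1) = (P phi)(P phi)^T.
    """
    q = len(phi)
    p_phi = [sum(P_prev[i][j] * phi[j] for j in range(q)) for i in range(q)]  # P(k-1) phi
    denom = 1.0 + sum(phi[i] * p_phi[i] for i in range(q))                  # 1 + phi^T P(k-1) phi
    P = [[P_prev[i][j] - p_phi[i] * p_phi[j] / denom for j in range(q)] for i in range(q)]
    gain = [v / denom for v in p_phi]  # L(k) = P(k-1) phi / (1 + phi^T P(k-1) phi)
    return P, gain


P, L = rls_covariance_update([[2.0, 0.5], [0.5, 1.0]], [1.0, 2.0])
```

For example, take \({\varvec{P}}(k-1)=\left[ \begin{array}{cc} 2 & 0.5\\ 0.5 & 1\end{array}\right] \) and \({\varvec{\varphi }}(k)=[1,2]^{\tiny \text{ T }}\). The update returns \({\varvec{P}}(k)=\left[ \begin{array}{cc} 1 & -1/3\\ -1/3 & 11/36\end{array}\right] \), which is exactly the inverse of \({\varvec{P}}^{-1}(k-1)+{\varvec{\varphi }}(k){\varvec{\varphi }}^{\tiny \text{ T }}(k)\). The returned gain \([1/3,\,5/18]^{\tiny \text{ T }}\) equals \({\varvec{P}}(k){\varvec{\varphi }}(k)\).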

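Finally, we obtain the RLS algorithm:

\[ \hat{{\varvec{\theta }}}(k)=\hat{{\varvec{\theta }}}(k-1)+{\varvec{L}}(k)\big [y(k)-{\varvec{\varphi }}^{\tiny \text{ T }}(k)\hat{{\varvec{\theta }}}(k-1)\big ], \tag{17} \]

\[ {\varvec{L}}(k)={\varvec{P}}(k){\varvec{\varphi }}(k), \tag{18} \]

\[ {\varvec{P}}(k)={\varvec{P}}(k-1)-\frac{{\varvec{P}}(k-1){\varvec{\varphi }}(k){\varvec{\varphi }}^{\tiny \text{ T }}(k){\varvec{P}}(k-1)}{1+{\varvec{\varphi }}^{\tiny \text{ T }}(k){\varvec{P}}(k-1){\varvec{\varphi }}(k)},\quad {\varvec{P}}(0)=p_0{\varvec{I}}, \tag{19} \]

\[ {\varvec{\varphi }}(k)=[\sin \omega _1t_k,\ldots ,\sin \omega _nt_k,\cos \omega _1t_k,\ldots ,\cos \omega _nt_k]^{\tiny \text{ T }}, \tag{20} \]

\[ \hat{a}_i(k)=\sqrt{\hat{c}_i^2(k)+\hat{d}_i^2(k)}, \tag{21} \]

\[ \hat{\phi }_i(k)=\arctan \frac{\hat{d}_i(k)}{\hat{c}_i(k)},\quad i=1,2,\ldots ,n, \tag{22} \]

\[ \hat{{\varvec{\theta }}}(k)=[\hat{c}_1(k),\ldots ,\hat{c}_n(k),\hat{d}_1(k),\ldots ,\hat{d}_n(k)]^{\tiny \text{ T }}. \]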

The steps of computing the characteristic parameter estimate are as follows.

-

1.

To initialize: let \(k=1\) and preset the recursive length L; let \(\hat{{\varvec{\theta }}}(0)\) be an arbitrary small vector and \({\varvec{P}}(0)=p_0{\varvec{I}}\) with \(p_0=10^6\).

-

2.

Collect the measured data y(k).

-

3.

Compute \({\varvec{\varphi }}(k)\) using (20); compute \({\varvec{P}}(k)\) using (19).

-

4.

Compute \({\varvec{L}}(k)\) using (18).

-

5.

Update the parameter estimate \(\hat{{\varvec{\theta }}}(k)\) using (17) and obtain the characteristic parameter estimate \(\hat{{\varvec{\theta }}}_r(k)\) using (21)–(22). If \(k=L\), terminate the recursive procedure and output \(\hat{{\varvec{\theta }}}_r(k)\); otherwise, set \(k:=k+1\) and go to Step 2.
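The RLS steps above can be sketched in Python with plain lists as follows. The function name `rls_estimate` is hypothetical, and \(\hat{{\varvec{\theta }}}(0)={\varvec{0}}\) is an assumed choice of "small vector". The sketch computes the gain from \({\varvec{P}}(k-1)\), which is algebraically equal to \({\varvec{P}}(k){\varvec{\varphi }}(k)\) in (18). It also deliberately uses `math.atan2` rather than the plain arctangent in (22), so that phases outside \((-\pi /2,\pi /2)\) are recovered correctly.

```python
import math


def rls_estimate(ts, ys, omegas, p0=1e6):
    """RLS for y(k) = phi^T(k) theta, theta = [c_1..c_n, d_1..d_n]; returns (amps, phases)."""
    n = len(omegas)
    q = 2 * n
    theta = [0.0] * q                                                    # theta_hat(0) = 0
    P = [[p0 if i == j else 0.0 for j in range(q)] for i in range(q)]    # P(0) = p0 * I
    for t, y in zip(ts, ys):
        phi = [math.sin(w * t) for w in omegas] + [math.cos(w * t) for w in omegas]
        p_phi = [sum(P[i][j] * phi[j] for j in range(q)) for i in range(q)]
        denom = 1.0 + sum(phi[i] * p_phi[i] for i in range(q))
        gain = [v / denom for v in p_phi]                                # L(k) = P(k) phi(k)
        P = [[P[i][j] - p_phi[i] * p_phi[j] / denom for j in range(q)] for i in range(q)]
        e = y - sum(f * th for f, th in zip(phi, theta))                # innovation
        theta = [th + g * e for th, g in zip(theta, gain)]
    c, d = theta[:n], theta[n:]
    amps = [math.hypot(ci, di) for ci, di in zip(c, d)]                  # a_i = sqrt(c_i^2 + d_i^2)
    phases = [math.atan2(di, ci) for ci, di in zip(c, d)]                # four-quadrant phase
    return amps, phases
```

With noise-free samples of the Example 1 signal taken at h = 1 s, the returned amplitudes and phases should approach the true values closely. With noise added, the accuracy depends on the noise variance, as discussed in Section 5.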

5 Illustrative Examples

Example 1

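Consider the combination sine signal with four different frequencies,

\[ y(t)=a_1\sin (\omega _1t+\phi _1)+a_2\sin (\omega _2t+\phi _2)+a_3\sin (\omega _3t+\phi _3)+a_4\sin (\omega _4t+\phi _4), \]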

where \(a_1=1.8\), \(a_2=2.9\), \(a_3=4\), \(a_4=2.5\), \(\phi _1=0.95\), \(\phi _2=0.8\), \(\phi _3=0.76\), \(\phi _4=1.1\) are the true values of the parameters to be estimated and \(\omega _1=0.07\) rad/s, \(\omega _2=0.5\) rad/s, \(\omega _3=2\) rad/s, \(\omega _4=1.6\) rad/s are the known angular frequencies.

Case 1

The MISG method simulation.

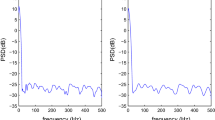

The proposed MISG algorithm is used to estimate the characteristic parameters of the combination sine signal in this example. In the simulation, a white noise sequence with zero mean and variance \(\sigma ^2=0.20^2\) is added to the signal. The sampling period is \(h=0.2\) s, and the data length is \(L=2000\). To test the performance of the MISG algorithm, three innovation lengths are adopted: \(p=1\), \(p=4\) and \(p=6\). The parameter estimates and their estimation errors \(\delta :=\Vert \hat{{\varvec{\theta }}}(k)-{\varvec{\theta }}\Vert /\Vert {\varvec{\theta }}\Vert \) are listed in Table 1, and the estimation errors \(\delta \) versus k are shown in Fig. 1. In addition, the estimated signal is compared with the actual signal to test the estimation accuracy. The comparison results are shown in Fig. 2, where the dotted line denotes the estimated signal and the solid line denotes the actual signal.

Case 2

The RLS method simulation.

Next, the proposed RLS algorithm is used to estimate the characteristic parameters. In the simulation, white noise sequences with zero mean and variances \(\sigma ^2=0.10^2\) and \(\sigma ^2=0.50^2\) are added, respectively, to the combination sine signal. The sampling period is \(h=1\) s and the data length is \(L=2000\). The parameter estimates and their estimation errors \(\delta :=\Vert \hat{{\varvec{\theta }}}(k)-{\varvec{\theta }}\Vert /\Vert {\varvec{\theta }}\Vert \) are shown in Table 2, and the estimation errors versus k are shown in Fig. 3.

To test the performance of the proposed RLS method, the combination sine signal estimated by the RLS method and the actual combination sine signal are shown in Fig. 4.

Example 2

In this example, a periodic signal is used to test the proposed parameter estimation method. Consider a periodic square wave described by

\[ f(t)=\left\{ \begin{array}{ll} A, & 0\le t<T/2,\\ -A, & T/2\le t<T, \end{array}\right. \qquad f(t+T)=f(t), \]

where \(T=\frac{2\pi }{3}\) s and \(A=2\). The periodic square wave is shown in Fig. 5.

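Using the Fourier expansion, this square wave can be expanded into a sum of odd harmonics. Because f(t) is an odd function, the constant term and the cosine coefficients of the Fourier expansion vanish. With \(\omega _0=2\pi /T\), the sine coefficients are

\[ b_i=\frac{2}{T}\int _{0}^{T}f(t)\sin (i\omega _0t)\,\mathrm {d}t=\frac{2A}{\pi i}(1-\cos i\pi ),\quad i=1,2,\ldots \]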

Fig. 3 The RLS estimation error \(\delta \) versus k of Example 1

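As a result, \(b_i=0\) when i is even and \(b_i=\frac{4A}{\pi i}\) when i is odd. Therefore, the Fourier expansion is given by

\[ f(t)=\frac{4A}{\pi }\left[ \sin \omega _0t+\frac{1}{3}\sin 3\omega _0t+\frac{1}{5}\sin 5\omega _0t+\cdots \right] =\frac{4A}{\pi }\sum _{i=1,3,5,\ldots }\frac{1}{i}\sin i\omega _0t, \]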

where \(\omega _0=2\pi /T\) is the fundamental harmonic frequency, which equals the frequency of the square wave. For \(T=2\pi /3\) s, \(\omega _0=3\) rad/s.
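The closed form \(b_i=\frac{2A}{\pi i}(1-\cos i\pi )\) can be checked numerically by integrating the square wave against \(\sin (i\omega _0t)\). The Python sketch below does this with a simple midpoint rule. The names `square_wave` and `fourier_sine_coefficient` and the number of integration steps are illustrative assumptions. An even step count keeps the jump at \(t=T/2\) on a cell boundary.

```python
import math


def square_wave(t, A, T):
    """f(t) = A on [0, T/2), -A on [T/2, T), extended periodically."""
    return A if (t % T) < T / 2 else -A


def fourier_sine_coefficient(i, A, T, n_steps=20000):
    """b_i = (2/T) * integral_0^T f(t) sin(i*w0*t) dt, approximated by the midpoint rule."""
    w0 = 2 * math.pi / T
    dt = T / n_steps
    total = 0.0
    for m in range(n_steps):
        t = (m + 0.5) * dt  # midpoint of the m-th cell
        total += square_wave(t, A, T) * math.sin(i * w0 * t)
    return 2.0 / T * total * dt


A, T = 2.0, 2 * math.pi / 3
b1, b2, b3 = (fourier_sine_coefficient(i, A, T) for i in (1, 2, 3))
```

For A = 2 the odd coefficients should come out close to \(8/\pi \approx 2.546\) and \(8/(3\pi )\approx 0.849\), and the even ones close to zero.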

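Retaining the 1st, 3rd, 5th and 7th harmonics, we have

\[ f(t)\approx \frac{4A}{\pi }\sin \omega _0t+\frac{4A}{3\pi }\sin 3\omega _0t+\frac{4A}{5\pi }\sin 5\omega _0t+\frac{4A}{7\pi }\sin 7\omega _0t. \]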

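Let \(a_1:=\frac{4A}{\pi }\), \(a_2:=\frac{4A}{3\pi }\), \(a_3:=\frac{4A}{5\pi }\) and \(a_4:=\frac{4A}{7\pi }\). Then f(t) becomes

\[ f(t)\approx a_1\sin \omega _0t+a_2\sin 3\omega _0t+a_3\sin 5\omega _0t+a_4\sin 7\omega _0t. \]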

In this equation, the amplitudes are unknown while the phases are zero. The proposed RLS method is used to estimate \(a_1\), \(a_2\), \(a_3\) and \(a_4\). The parameter estimates and their estimation errors \(\delta :=\Vert \hat{{\varvec{\theta }}}(k)-{\varvec{\theta }}\Vert /\Vert {\varvec{\theta }}\Vert \) are displayed in Table 3, and the estimation errors versus k are shown in Fig. 6.
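The true amplitudes targeted by the estimator follow directly from \(a_i=4A/(i\pi )\). The Python sketch below computes them for A = 2 and evaluates the four-harmonic approximation of f(t). The function name `truncated_square_wave` is hypothetical. At \(t=T/4\), the middle of the positive half period, the approximation gives \(\frac{8}{\pi }(1-\frac{1}{3}+\frac{1}{5}-\frac{1}{7})\approx 1.843\) instead of A = 2. This illustrates the truncation error that remains even with perfect estimates.

```python
import math


def truncated_square_wave(t, A, T, harmonics=(1, 3, 5, 7)):
    """Partial Fourier sum: sum over odd i of 4A/(pi*i) * sin(i*w0*t), with w0 = 2*pi/T."""
    w0 = 2 * math.pi / T
    return sum(4 * A / (math.pi * i) * math.sin(i * w0 * t) for i in harmonics)


A, T = 2.0, 2 * math.pi / 3
true_amps = [4 * A / (math.pi * i) for i in (1, 3, 5, 7)]  # a_1, a_2, a_3, a_4
value = truncated_square_wave(T / 4, A, T)                   # middle of the +A half period
```

For A = 2 the true values are \(8/\pi \approx 2.546\), \(8/(3\pi )\approx 0.849\), \(8/(5\pi )\approx 0.509\) and \(8/(7\pi )\approx 0.364\).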

Choosing the estimated parameters with \(k=2000\) and \(\sigma ^2=0.20^2\), we obtain the following function

The estimated square wave and the original square wave are shown in Fig. 7.

Fig. 6 The RLS estimation error \(\delta \) versus k of Example 2

Fig. 7 The RLS square wave fitting curves of Example 2

From the simulation results, we can draw the following conclusions.

-

1.

Table 1 and Fig. 1 show that the parameter estimates obtained by the MISG method become more accurate as the innovation length p increases.

-

2.

When \(p=1\), the MISG method reduces to the SG method. The last column of Table 1 shows that the MISG method is more accurate than the SG method.

-

3.

The parameter estimation errors given by the RLS algorithm become smaller as k increases (see Figs. 3 and 6). The estimation accuracy also depends on the noise variance: the larger the noise variance, the lower the estimation accuracy.

-

4.

The fitting curve given by the RLS method is closer to the actual signal curve than the one given by the MISG method (see Figs. 2 and 4). This indicates that the RLS algorithm is more effective than the MISG method.

6 Conclusion

This paper considers the parameter estimation problem for periodic signals based on combination sine signals. Using gradient search, an SG parameter estimation algorithm for the combination sine signal is derived. On the basis of the SG algorithm, the MISG parameter estimation method is proposed to improve the estimation accuracy. Furthermore, an RLS parameter estimation algorithm is derived by means of the trigonometric function expansion to enhance the estimation accuracy. The simulation results show that both the MISG algorithm and the RLS method can estimate the signal parameters. The RLS algorithm is derived by means of linear optimization, whereas the MISG method is based on nonlinear optimization. As a result, the RLS algorithm achieves higher accuracy and better stability than the MISG method. The methods used in this paper can be extended to analyze the convergence of identification algorithms for linear or nonlinear control systems [6, 20, 21]. They can also be applied to hybrid switching-impulsive dynamical networks [18], uncertain chaotic delayed nonlinear systems [17] and other fields [4, 33, 34].

References

N. Andrei, An adaptive conjugate gradient algorithm for large-scale unconstrained optimization. J. Comput. Appl. Math. 292, 83–91 (2016)

D. Belega, D. Petri, Sine-wave parameter estimation by interpolated DFT method based on new cosine windows with high interference rejection capability. Digit. Signal Process. 33, 60–70 (2014)

D. Belega, D. Petri, Accuracy analysis of the sine-wave parameters estimation by means of the windowed three-parameter sine-fit algorithm. Digit. Signal Process. 50, 12–23 (2016)

X. Cao, D.Q. Zhu, S.X. Yang, Multi-AUV target search based on bioinspired neurodynamics model in 3-D underwater environments. IEEE Trans. Neural Netw. Learn. Syst. (2016). doi:10.1109/TNNLS.2015.2482501

J. Chen, Y. Ren, G. Zeng, An improved multi-harmonic sine fitting algorithm based on Tabu search. Measurement 59, 258–267 (2015)

Z.Z. Chu, D.Q. Zhu, S.X. Yang, Observer-based adaptive neural network trajectory tracking control for remotely operated vehicle. IEEE Trans. Neural Netw. Learn. Syst. (2016). doi:10.1109/TNNLS

S. Deng, Z. Wan, A three-term conjugate gradient algorithm for large-scale unconstrained optimization problems. Appl. Numer. Math. 92, 70–81 (2015)

F. Ding, System Identification-Performances Analysis for Identification Methods (Science Press, Beijing, 2014)

F. Ding, X.M. Liu, Y. Gu, An auxiliary model based least squares algorithm for a dual-rate state space system with time-delay using the data filtering. J. Franklin Inst. 353(2), 398–408 (2016)

F. Ding, P.X. Liu, G.J. Liu, Gradient based and least-squares based iterative identification methods for OE and OEMA systems. Digit. Signal Process. 20(3), 664–677 (2010)

F. Ding, X.M. Liu, M.M. Liu, The recursive least squares identification algorithm for a class of Wiener nonlinear systems. J. Franklin Inst. 353(7), 1518–1526 (2016)

F. Ding, X.M. Liu, X.Y. Ma, Kalman state filtering based least squares iterative parameter estimation for observer canonical state space systems using decomposition. J. Comput. Appl. Math. 301, 135–143 (2016)

F. Ding, X.H. Wang, Q.J. Chen, Y.S. Xiao, Recursive least squares parameter estimation for a class of output nonlinear systems based on the model decomposition. Circuits Syst. Signal Process. 35(9), 3323–3338 (2016)

J. Guo, Y.L. Zhao, C.Y. Sun, Y. Yu, Recursive identification of FIR systems with binary-valued outputs and communication channels. Automatica 60, 165–172 (2015)

M. Jafari, M. Salimifard, M. Dehghani, Identification of multivariable nonlinear systems in the presence of colored noises using iterative hierarchical least squares algorithm. ISA Trans. 53(4), 1243–1252 (2014)

A. Janot, P. Vandanjon, M. Gautier, A revised Durbin–Wu–Hausman test for industrial robot identification. Control Eng. Pract. 48, 52–62 (2016)

Y. Ji, X.M. Liu, F. Ding, New criteria for the robust impulsive synchronization of uncertain chaotic delayed nonlinear systems. Nonlinear Dyn. 79(1), 1–9 (2015)

Y. Ji, X.M. Liu, Unified synchronization criteria for hybrid switching-impulsive dynamical networks. Circuits Syst. Signal Process. 34(5), 1499–1517 (2015)

X. Li, F. Ding, Signal modeling using the gradient search. Appl. Math. Lett. 26(8), 807–813 (2013)

H. Li, Y. Shi, W. Yan, On neighbor information utilization in distributed receding horizon control for consensus-seeking. IEEE Trans. Cybern. (2016). doi:10.1109/TCYB.2015.2459719

H. Li, Y. Shi, W. Yan, Distributed receding horizon control of constrained nonlinear vehicle formations with guaranteed \(\gamma \)-gain stability. Automatica 68, 148–154 (2016)

J. Li, Y.J. Zheng, Z.P. Lin, Recursive identification of time-varying systems: self-tuning and matrix RLS algorithms. Syst. Control Lett. 66, 104–110 (2014)

Y.W. Mao, F. Ding, A novel data filtering based multi-innovation stochastic gradient algorithm for Hammerstein nonlinear systems. Digit. Signal Process. 46, 215–225 (2015)

I. Necoara, V. Nedelcu, On linear convergence of a distributed dual gradient algorithm for linearly constrained separable convex problems. Automatica 55, 209–216 (2015)

J. Pan, X.H. Yang, H.F. Cai, B.X. Mu, Image noise smoothing using a modified Kalman filter. Neurocomputing 173, 1625–1629 (2016)

J. Vörös, Iterative algorithm for parameter identification of Hammerstein systems with two-segment nonlinearities. IEEE Trans. Autom. Control 44(11), 2145–2149 (1999)

D.Q. Wang, Hierarchical parameter estimation for a class of MIMO Hammerstein systems based on the reframed models. Appl. Math. Lett. 57, 13–19 (2016)

D.Q. Wang, F. Ding, Parameter estimation algorithms for multivariable Hammerstein CARMA systems. Inf. Sci. 355–356(10), 237–248 (2016)

Y.J. Wang, F. Ding, Novel data filtering based parameter identification for multiple-input multiple-output systems using the auxiliary model. Automatica 71, 308–313 (2016)

Y.J. Wang, F. Ding, The filtering based iterative identification for multivariable systems. IET Control Theory Appl. 10(8), 894–902 (2016)

Y.J. Wang, F. Ding, The auxiliary model based hierarchical gradient algorithms and convergence analysis using the filtering technique. Signal Process. 128, 212–221 (2016)

Y.J. Wang, F. Ding, Recursive least squares algorithm and gradient algorithm for Hammerstein–Wiener systems using the data filtering. Nonlinear Dyn. 84(2), 1045–1053 (2016)

T.Z. Wang, J. Qi, H. Xu et al., Fault diagnosis method based on FFT-RPCA-SVM for cascaded-multilevel inverter. ISA Trans. 60, 156–163 (2016)

T.Z. Wang, H. Wu, M.Q. Ni et al., An adaptive confidence limit for periodic non-steady conditions fault detection. Mech. Syst. Signal Process. 72–73, 328–345 (2016)

D.Q. Wang, W. Zhang, Improved least squares identification algorithm for multivariable Hammerstein systems. J. Franklin Inst. 352(11), 5292–5307 (2015)

L. Xu, A proportional differential control method for a time-delay system using the Taylor expansion approximation. Appl. Math. Comput. 236, 391–399 (2014)

L. Xu, The damping iterative parameter identification method for dynamical systems based on the sine signal measurement. Signal Process. 120, 660–667 (2016)

L. Xu, Application of the Newton iteration algorithm to the parameter estimation for dynamical systems. J. Comput. Appl. Math. 288, 33–43 (2015)

L. Xu, L. Chen, W.L. Xiong, Parameter estimation and controller design for dynamic systems from the step responses based on the Newton iteration. Nonlinear Dyn. 79(3), 2155–2163 (2015)

X.P. Xu, F. Wang, G.J. Liu, Identification of Hammerstein systems using key-term separation principle, auxiliary model and improved particle swarm optimisation algorithm. IET Signal Process. 7(8), 766–773 (2013)

Y. Zhang, Unbiased identification of a class of multi-input single-output systems with correlated disturbances using bias compensation methods. Math. Comput. Model. 53(9–10), 1810–1819 (2011)

G.Q. Zhang, X.K. Zhang, H.S. Pang, Multi-innovation auto-constructed least squares identification for 4 DOF ship manoeuvring modelling with full-scale trial data. ISA Trans. 58, 186–195 (2015)

S.X. Zhao, F. Wang, H. Xu, J. Zhu, Multi-frequency identification method in signal processing. Digit. Signal Process. 19(4), 555–566 (2009)

Acknowledgments

This work was supported by the National Natural Science Foundation of China (No. 61273194) and Natural Science Fund for Colleges and Universities in Jiangsu Province (No. 12KJB120005).


Cite this article

Xu, L., Ding, F. Recursive Least Squares and Multi-innovation Stochastic Gradient Parameter Estimation Methods for Signal Modeling. Circuits Syst Signal Process 36, 1735–1753 (2017). https://doi.org/10.1007/s00034-016-0378-4


DOI: https://doi.org/10.1007/s00034-016-0378-4