Abstract

In this paper, we study the parameter estimation problem of a class of output nonlinear systems and propose a recursive least squares (RLS) algorithm for estimating the parameters of the nonlinear systems based on the model decomposition. The proposed algorithm has lower computational cost than the existing over-parameterization model-based RLS algorithm. The simulation results indicate that the proposed algorithm can effectively estimate the parameters of the nonlinear systems.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Iterative methods and recursive methods have widely been used in system identification [7, 8, 15], system control [31, 33], signal processing [30] and multivariate pseudo-linear regressive analysis [37] and for solving matrix equations [6]. The parameter estimation methods have received much attention in system identification [28, 38, 39]. For example, Wang [35] gave a least squares-based recursive estimation algorithm and an iterative estimation algorithm for output error moving average systems using the data filtering technique. Dehghan et al. [5] studied the fourth-order variants of the Newton’s method without the second-order derivatives for solving nonlinear equations. Shi et al. [32] studied the output feedback stabilization of networked control systems with random delays modeled by the Markov chains. Li [25] developed a maximum likelihood estimation algorithm for Hammerstein CARARMA systems based on the Newton iteration. Wang and Zhang [43] proposed an improved least squares identification algorithm for multivariable Hammerstein systems.

The typical nonlinear systems include the Wiener systems [14], the Hammerstein systems [18], the Hammerstein–Wiener systems [3] and feedback nonlinear systems [1, 20]. A Wiener nonlinear model is composed of a linear dynamic block followed by a static nonlinear function and a Hammerstein model puts a nonlinear function before a linear dynamic block [2, 44]. Vörös [34] proposed the key term separation technique for identifying Hammerstein systems with multi-segment piecewise-linear characteristics. Recently, a decomposition-based Newton iterative identification method was proposed for a Hammerstein nonlinear FIR system with ARMA noise [12].

This paper considers the parameter identification problem of a special class of output nonlinear systems, whose output is the nonlinear function of the past outputs [7, 8]. For this class of nonlinear systems with colored noise, Wang et al. [36] gave a least squares-based and a gradient-based iterative identification algorithms; Hu et al. [21] proposed a recursive extended least squares parameter estimation algorithm using the over-parameterization model and a multi-innovation generalized extended stochastic gradient algorithm for nonlinear autoregressive moving average systems [22]; Bai presented an optimal two-stage identification algorithm for Hammerstein–Wiener nonlinear systems [4]. The least squares algorithms play a key role in the parameter estimation of linear systems [17, 19, 29]. This paper derives a new recursive least squares algorithm for output nonlinear systems using the hierarchical identification principle. The proposed method has less computational load and can be extended to study parameter estimation of dual-rate/multi-rate sampled systems [9, 10, 26].

This paper is organized as follows: Section 2 gives the representation of a class of nonlinear systems. Sections 3 and 4 derive a least squares algorithm and a model decomposition-based recursive least squares algorithm. Section 5 gives the computational efficiency of the proposed algorithm and the recursive extended least squares algorithm. Section 6 provides a numerical example to show the effectiveness of the proposed algorithm. Finally, some concluding remarks are offered in Sect. 7.

2 The System Description and its Identification Model

Let us define some notation. “\(A=:X\)”or “\(X:=A\)” stands for “A is defined as X”; \(\mathbf{1}_n\) denotes an n-dimensional column vector whose elements are all 1; \({\varvec{I}}\) (\({\varvec{I}}_n\)) represents an identity matrix of appropriate sizes (\(n\times n\)); z denotes a unit forward shift operator with \(zx(t)=x(t+1)\) and \(z^{-1}x(t)=x(t-1)\). Define the polynomials in the unit backward shift operator \(z^{-1}\):

and the parameter vectors:

A Hammerstein system (i.e., an input nonlinear system) can be expressed as [11]

by extending the input nonlinearity to the output nonlinearity, we can obtain a special class of nonlinear systems [21, 36]:

where u(t) and y(t) are the input and output of the system, respectively, and v(t) is white noise with zero mean and variance \(\sigma ^2\).

For simplicity, assume that the nonlinear part is a linear combination of a known basis \({\varvec{f}}:=(f_1, f_2, \ldots , f_{n_c})\) with coefficients \((c_1, c_2, \ldots , c_{n_c})\):

For the parameter identifiability, we must fix one of the coefficients \(c_i\)’s, or \(\Vert {\varvec{c}}\Vert =1\) with \(c_1>0\) [11].

Equation (1) can be rewritten as

Define the information matrix \({\varvec{F}}(t)\), the input information vector \({\varvec{\varphi }}(t)\) and the noise information vector \({\varvec{\psi }}(t)\) as

Then, Eq. (2) can be written as

The objective of identification is to present new methods for estimating the unknown parameter vector \({\varvec{c}}\) for the nonlinear part and the unknown parameter vectors \({\varvec{a}}\), \({\varvec{b}}\) and \({\varvec{d}}\) for the linear subsystems from the measurement data \(\{u(t), y(t):\ t=1, 2, 3,\ldots \}\).

3 The Least Squares Algorithm Based on the Model Decomposition

Let \(\hat{{\varvec{\theta }}}(t):=\left[ \begin{array}{c} \hat{{\varvec{a}}}(t) \\ \hat{{\varvec{d}}}(t) \end{array} \right] \) and \(\hat{{\varvec{\vartheta }}}(t):=\left[ \begin{array}{c} \hat{{\varvec{b}}}(t) \\ \hat{{\varvec{c}}}(t) \end{array} \right] \) denote the estimates of \({\varvec{\theta }}:=\left[ \begin{array}{c} {\varvec{a}} \\ {\varvec{d}} \end{array} \right] \) and \({\varvec{\vartheta }}:=\left[ \begin{array}{c} {\varvec{b}} \\ {\varvec{c}} \end{array} \right] \) at time t, respectively, and \({\varvec{\varTheta }}:=\left[ \begin{array}{c} {\varvec{\theta }} \\ {\varvec{\vartheta }} \end{array} \right] \). For the identification model in (6), using the hierarchical identification principle (the decomposition technique), define the quadratic cost functions:

Define the output vector \({\varvec{Y}}_t\) and the information matrices \({\varvec{\varPhi }}_t\), \({\varvec{\varPsi }}_t\), \({\varvec{\varOmega }}_t\) and \({\varvec{\varXi }}_t\) as

Then, \(J_1({\varvec{\theta }})\) and \(J_2({\varvec{\vartheta }})\) can be equivalently written as

For the two optimization problems, letting the partial derivatives of \(J_1({\varvec{\theta }})\) and \(J_2({\varvec{\vartheta }})\) with respect to \({\varvec{\theta }}\) and \({\varvec{\vartheta }}\) be zero gives

or

In order to ensure that the inverses of the matrices \(\hat{{\varvec{\varOmega }}}^{\tiny \text{ T }}_t\hat{{\varvec{\varOmega }}}_t\) and \(\hat{{\varvec{\varXi }}}_t^{\tiny \text{ T }}\hat{{\varvec{\varXi }}}_t\) exist, we suppose that the information matrices \(\hat{{\varvec{\varOmega }}}_t\) and \(\hat{{\varvec{\varXi }}}_t\) are persistently exciting. Let \(\hat{{\varvec{\varPsi }}}_t\), \(\hat{{\varvec{\psi }}}(t)\) \(\hat{v}(t)\) be the estimates of \({\varvec{\varPsi }}_t\), \({\varvec{\psi }}(t)\) and v(t) at time t.

Replacing the unknown \({\varvec{\varPsi }}_t\) in (12)–(13) with \(\hat{{\varvec{\varPsi }}}_t\), we have the following least squares algorithm for estimating the parameter vectors \({\varvec{\theta }}\) and \({\varvec{\vartheta }}\):

The procedures of computing the parameter estimates \(\hat{{\varvec{\theta }}}(t)\) and \(\hat{{\varvec{\vartheta }}}(t)\) are listed in the following.

-

1.

To initialize: let \(t=p\), collect the input–output data \(\{u(i),y(i): i=0, 1, 2, \ldots , p-1\}\) (\(p\gg n_a+n_b+n_c+n_d\)) and set the initial values \(\hat{{\varvec{b}}}(p-1)=\mathbf{1}_{n_b}/{p_0}\), \(\hat{{\varvec{c}}}(p-1)=\) a random vector with \(\Vert \hat{{\varvec{c}}}(p-1)\Vert =1\), \(\hat{v}(i)=\) a random number. \(p_0\) is normally a large positive number (e.g., \(p_0=10^6\)), give the basis function \(f_j(*)\).

-

2.

Collect the input–output data u(t) and y(t), form \({\varvec{Y}}_t\) using (7), \({\varvec{F}}(t)\) using (3), \({\varvec{\varphi }}(t)\) using (4), \({\varvec{\varPhi }}_t\) using (8) and \(\hat{{\varvec{\psi }}}(t)\) using (17).

-

3.

Compute \(\hat{{\varvec{\varOmega }}}_t\) using (10).

-

4.

Update the parameter estimate \(\hat{{\varvec{\theta }}}(t)\) using (14) and read \(\hat{{\varvec{a}}}(t)\) and \(\hat{{\varvec{d}}}(t)\) from \(\hat{{\varvec{\theta }}}(t)=\left[ \begin{array}{c} \hat{{\varvec{a}}}(t) \\ \hat{{\varvec{d}}}(t) \end{array} \right] \).

-

5.

Compute \(\hat{{\varvec{\varXi }}}_t\) using (11) and form \(\hat{{\varvec{\varPsi }}}_t\) using (16).

-

6.

Update the parameter estimate \(\hat{{\varvec{\vartheta }}}(t)\) using (15), read \(\hat{{\varvec{b}}}(t)\) from \(\hat{{\varvec{\vartheta }}}(t)=\left[ \begin{array}{c} \hat{{\varvec{b}}}(t) \\ \hat{{\varvec{c}}}(t) \end{array} \right] \) and normalize \(\hat{{\varvec{c}}}(t)\) using

$$\begin{aligned} \hat{{\varvec{c}}}(t)=\mathrm{sgn}\{[\hat{{\varvec{\vartheta }}}(t)](n_b+1)\}\frac{[\hat{{\varvec{\vartheta }}}(t)](n_b+1:n_b+n_c)}{\Vert [\hat{{\varvec{\vartheta }}}(t)](n_b+1:n_b+n_c)\Vert }. \end{aligned}$$ -

7.

Compute \(\hat{v}(t)\) using (18).

-

8.

Increase t by 1, go to Step 2 and continue calculation.

4 The Recursive Least Squares Algorithm Based on the Model Decomposition

For the identification model in (6), define the quadratic cost functions:

Define the information matrices \({\varvec{\varphi }}_1(t)\), \({\varvec{\varOmega }}_t\) and \({\varvec{\varXi }}_t\) as

Then, \(J_3({\varvec{\theta }})\) and \(J_4({\varvec{\vartheta }})\) can be equivalently rewritten as

Similarly, minimizing \(J_3({\varvec{\theta }})\) and \(J_4({\varvec{\vartheta }})\), we can obtain the least squares estimates:

Define the covariance matrix,

Hence, Eq. (22) can be rewritten as

Applying the matrix inversion lemma [7, 27]

to (24) gives

Pre-multiplying both sides of (24) by \({\varvec{P}}_1(t)\) gives

Substituting (27) into (25) gives the recursive estimate of the parameter vector \({\varvec{\theta }}\):

Define the gain vector \({\varvec{L}}_1(t):={\varvec{P}}_1(t){\varvec{\varphi }}_1(t)\in {\mathbb R}^{n_a+n_d}\). Using (26), it follows that

Using (29), Eq. (26) can be rewritten as

Here, we can see that the right-hand sides of (19) and (28) contain the unknown parameter vectors \({\varvec{c}}\) and \({\varvec{b}}\), respectively. The solution is to replace the unknown \({\varvec{b}}\) and \({\varvec{\varphi }}_1(t)\) in (28) and (30) with their corresponding estimates \(\hat{{\varvec{b}}}(t-1)\) and \(\hat{{\varvec{\varphi }}}_1(t)=[\hat{{\varvec{c}}}^{\tiny \text{ T }}(t-1){\varvec{F}}^{\tiny \text{ T }}(t), \hat{{\varvec{\psi }}}^{\tiny \text{ T }}(t)]^{\tiny \text{ T }}\), we have

Define the information vector \({\varvec{\varphi }}_2(t):=[{\varvec{\varphi }}^{\tiny \text{ T }}(t), {\varvec{a}}^{\tiny \text{ T }}{\varvec{F}}(t)]^{\tiny \text{ T }}\in {\mathbb R}^{n_b+n_c}\) and the covariance matrix \({\varvec{P}}_2^{-1}(t):={\varvec{\varXi }}_t^{\tiny \text{ T }}{\varvec{\varXi }}_t\in {\mathbb R}^{(n_b+n_c)\times (n_b+n_c)}\) and the gain vector \({\varvec{L}}_2(t):={\varvec{P}}_2(t){\varvec{\varphi }}_2(t)\in {\mathbb R}^{n_b+n_c}\). Similarly, some unknown terms are replaced with their estimates; we can obtain the recursive estimate of the parameter vector \({\varvec{\vartheta }}\):

Thus, we can summarize the recursive least squares algorithm for estimating the parameter vectors \({\varvec{\theta }}\) and \({\varvec{\vartheta }}\) of the nonlinear systems based on the model decomposition (the ON-RLS algorithm for short) as follows:

The procedures of computing the parameter estimation vectors \(\hat{{\varvec{\theta }}}(t)\) and \(\hat{{\varvec{\vartheta }}}(t)\) in (35)–(47) are listed in the following.

-

1.

To initialize: let \(t=1\), and set the initial values \({\varvec{P}}_1(0)=p_0{\varvec{I}}_{n_a+n_d}\), \(\hat{{\varvec{\theta }}}(0)=\mathbf{1}_{n_a+n_d}/p_0\), \(\hat{{\varvec{b}}}(0)=\mathbf{1}_{n_b}/{p_0}\), \({\varvec{P}}_2(0)=p_0{\varvec{I}}_{n_b+n_c}\), \(p_0=10^6\), \(\Vert \hat{{\varvec{c}}}(0)\Vert =1\), give the basis function \(f_j(*)\).

-

2.

Collect the input–output data u(t) and y(t), form \({\varvec{F}}(t)\) using (46), \({\varvec{\varphi }}(t)\) using (43), \(\hat{{\varvec{\psi }}}(t)\) using (44) and compute \(\hat{{\varvec{\varphi }}}_1(t)\) in (41).

-

3.

Compute \({\varvec{L}}_1(t)\) using (36) and \({\varvec{P}}_1(t)\) using (37).

-

4.

Update the parameter estimate \(\hat{{\varvec{\theta }}}(t)\) using (35) and read \(\hat{{\varvec{a}}}(t)\) and \(\hat{{\varvec{d}}}(t)\) from \(\hat{{\varvec{\theta }}}(t)=\left[ \begin{array}{c} \hat{{\varvec{a}}}(t) \\ \hat{{\varvec{d}}}(t) \end{array} \right] \).

-

5.

Form \(\hat{{\varvec{\varphi }}}_2(t)\) in (42) and compute \({\varvec{L}}_2(t)\) using (39) and \({\varvec{P}}_2(t)\) using (40).

-

6.

Update the parameter estimate \(\hat{{\varvec{\vartheta }}}(t)\) using (38) and read \(\hat{{\varvec{b}}}(t)\) from \(\hat{{\varvec{\vartheta }}}(t)=\left[ \begin{array}{c} \hat{{\varvec{b}}}(t) \\ \hat{{\varvec{c}}}(t) \end{array} \right] \) and normalize \(\hat{{\varvec{c}}}(t)\) using

$$\begin{aligned} \hat{{\varvec{c}}}(t)=\mathrm{sgn}\{[\hat{{\varvec{\vartheta }}}(t)\}(n_b+1)\}\frac{[\hat{{\varvec{\vartheta }}}(t)](n_b+1:n_b+n_c)}{\Vert [\hat{{\varvec{\vartheta }}}(t)](n_b+1:n_b+n_c)\Vert }, \end{aligned}$$(48)and let \(\hat{{\varvec{\vartheta }}}(t)=\left[ \begin{array}{c} \hat{{\varvec{b}}}(t) \\ \hat{{\varvec{c}}}(t) \end{array} \right] \).

-

7.

Compute \(\hat{v}(t)\) using (45).

-

8.

Increase t by 1, go to Step 2 and continue the recursive calculation.

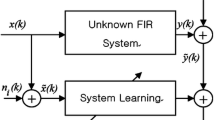

The flowchart for computing the estimates \(\hat{{\varvec{\theta }}}(t)\) and \(\hat{{\varvec{\vartheta }}}(t)\) in (35)–(47) is shown in Fig. 1.

To show the advantages of the proposed ON-RLS algorithm, the following gives the stochastic gradient algorithm with a forgetting factor \(\lambda \) for estimating the parameter vectors \({\varvec{\theta }}\) and \({\varvec{\vartheta }}\) of the nonlinear systems (the ON-SG algorithm for short) [11]:

Remark 1

The ON-RLS algorithm in (35)–(47) has faster convergence rates than the ON-SG algorithm in (49)–(52)—see the last columns in Tables 3 and 4.

5 The Comparison of the Computational Efficiency

In order to show the advantage of the ON-RLS algorithm, the following gives simply recursive extended least squares algorithm in [21] for comparison.

Define the parameter vectors,

Then, we have the following recursive extended least squares (RELS) algorithm [21]:

Remark 2

Compared with the recursive extended least squares algorithm, which involves the covariance matrix \({\varvec{P}}(t)\) of large size \((n_an_c+n_b+n_d)\times (n_an_c+n_b+n_d)\), the ON-RLS algorithm has less computational load because it involves two covariance matrices \({\varvec{P}}_1(t)\) and \({\varvec{P}}_2(t)\) of small sizes \((n_a+n_d)\times (n_a+n_d)\) and \((n_b+n_c)\times (n_b+n_c)\)—see the details in Tables 1 and 2. From the simulation example (omitted in the paper), we can see that the parameter estimation errors given by the ON-RLS algorithm are very close to those given by the RELS algorithm.

It has been just pointed out by Golub and Van Loan [16] that the flop (floating point operation) counting is a necessarily crude approach to the measuring of program efficiency since it ignores subscripting, memory traffic, and the countless other overheads associated with program execution, the flop counting is just a “quick and dirty” accounting method that captures only one of the several dimensions of the efficiency issue although multiplication/division and addition/subtraction with different lengths are different. The flop numbers of the ON-RLS and RELS algorithms at each recursion are given in Tables 1 and 2. Their total flops are, respectively, given by

In order to compare the computational efficiency of these two algorithms, we count the difference between the amount of calculation of these two algorithms. When \(n_a>2\) and \(n_b>2\), \(n_an_b>n_a+n_b\), \(N_2>4(n_a+n_b+n_c+n_d)^2+6(n_a+n_b+n_c+n_d)\). Then, we have

It is clear that the ON-RLS algorithm requires less computational load than the RELS algorithm. For example, when \(n_a=n_b=n_c=n_d=5\), we have \(N_2-N_1=5110-1060=4050\) flops.

6 Example

Consider the following nonlinear system:

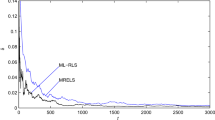

In simulation, the input \(\{u(t)\}\) is taken as a persistent excitation signal sequence with zero mean and unit variance, and \(\{v(t)\}\) as a white noise sequence with zero mean and variance \(\sigma ^2=0.50^2\). Applying the ON-RLS algorithm and the ON-SG algorithm with \(\lambda =0.99\) to estimate the parameters of this system, the parameter estimates and errors are given in Tables 3 and 4, and the parameter estimation errors \(\delta :=\Vert \hat{{\varvec{\varTheta }}}(t)-{\varvec{\varTheta }}\Vert /\Vert {\varvec{\varTheta }}\Vert \) versus t are shown in Figs. 2 and 3.

From Tables 3, 4 and Figs. 2, 3, we can draw the following conclusions.

-

It is clear that the parameter estimation errors given by two algorithms become smaller with the data length increasing.

-

The parameter estimation accuracy of the ON-RLS algorithm is higher than that of the ON-SG algorithm.

-

The parameter estimates given by the ON-RLS algorithm converge faster to their true values compared with the ON-SG algorithm for appropriate forgetting factors.

7 Conclusions

Using the hierarchical identification principle, a recursive least squares algorithm is derived for a special class of output nonlinear systems by transforming a nonlinear system into two identification models. The proposed algorithm can give a satisfactory identification accuracy and has higher computational efficiencies compared with the recursive extended least squares parameter estimation algorithm in [21]. The proposed algorithm can be extended to study identification problems of multivariable systems [13], linear-in-parameters systems [41, 42] and impulsive dynamical systems [23, 24] and applied to other fields [45–47].

References

J.C. Agüero, G.C. Goodwin, P.M.J. Van den Hof, A virtual closed loop method for closed loop identification. Automatica 47(8), 1626–1637 (2011)

M. Ahmadi, H. Mojallali, Identification of multiple-input single-output Hammerstein models using Bezier curves and Bernstein polynomials. Appl. Math. Model. 35(4), 1969–1982 (2011)

E.W. Bai, A blind approach to the Hammerstein–Wiener model identification. Automatica 38(6), 967–979 (2002)

E.W. Bai, An optimal two-stage identification algorithm for Hammerstein–Wiener nonlinear systems. Automatica 34(3), 333–338 (1998)

M. Dehghan, M. Hajarian, Fourth-order variants of Newton’s method without second derivatives for solving non-linear equations. Eng. Comput. 29(4), 356–365 (2012)

M. Dehghan, M. Hajarian, Analysis of an iterative algorithm to solve the generalized coupled Sylvester matrix equations. Appl. Math. Model. 35(7), 3285–3300 (2011)

F. Ding, System Identification-New Theory and Methods (Science Press, Beijing, 2013)

F. Ding, System Identification-Performances Analysis for Identification Methods (Science Press, Beijing, 2014)

J. Ding, C.X. Fan, J.X. Lin, Auxiliary model based parameter estimation for dual-rate output error systems with colored noise. Appl. Math. Model. 37(6), 4051–4058 (2013)

J. Ding, J.X. Lin, Modified subspace identification for periodically non-uniformly sampled systems by using the lifting technique. Circuits Syst. Signal Process. 33(5), 1439–1449 (2014)

F. Ding, X.P. Liu, G. Liu, Identification methods for Hammerstein nonlinear systems. Digit. Signal Process. 21(2), 215–238 (2011)

F. Ding, K.P. Deng, X.M. Liu, Decomposition based Newton iterative identification method for a Hammerstein nonlinear FIR system with ARMA noise. Circuits Syst. Signal Process. 33(9), 2881–2893 (2014)

F. Ding, Y.J. Wang, J. Ding, Recursive least squares parameter estimation algorithms for systems with colored noise using the filtering technique and the auxiliary model. Digit. Signal Process. 37, 100–108 (2015)

D. Fan, K. Lo, Identification for disturbed MIMO Wiener systems. Nonlinear Dyn. 55(1–2), 31–42 (2009)

G.C. Goodwin, K.S. Sin, Adaptive Filtering Prediction and Control (Prentice Hall, Englewood Cliffs, 1984)

G.H. Golub, C.F. Van Loan, Matrix Computations, 3rd edn. (Johns Hopkins University Press, Baltimore, 1996)

Y. Gu, F. Ding, J.H. Li, States based iterative parameter estimation for a state space model with multi-state delays using decomposition. Signal Process. 106, 294–230 (2015)

A. Hagenblad, L. Ljung, A. Wills, Maximum likelihood identification of Wiener models. Automatica 44(11), 2697–2705 (2008)

Y.B. Hu, Iterative and recursive least squares estimation algorithms for moving average systems. Simul. Model. Pract. Theory 34, 12–19 (2013)

P.P. Hu, F. Ding, Multistage least squares based iterative estimation for feedback nonlinear systems with moving average noises using the hierarchical identification principle. Nonlinear Dyn. 73(1–2), 583–592 (2013)

Y.B. Hu, B.L. Liu, Q. Zhou, C. Yang, Recursive extended least squares parameter estimation for Wiener nonlinear systems with moving average noises. Circuits Syst. Signal Process. 33(2), 655–664 (2014)

Y.B. Hu, B.L. Liu, Q. Zhou, A multi-innovation generalized extended stochastic gradient algorithm for output nonlinear autoregressive moving average systems. Appl. Math. Comput. 247, 218–224 (2014)

Y. Ji, X.M. Liu, Unified synchronization criteria for hybrid switching-impulsive dynamical networks. Circuits Syst. Signal Process. 34(5), 1499–1517 (2015)

Y. Ji, X.M. Liu et al., New criteria for the robust impulsive synchronization of uncertain chaotic delayed nonlinear systems. Nonlinear Dyn. 79(1), 1–9 (2015)

J.H. Li, Parameter estimation for Hammerstein CARARMA systems based on the Newton iteration. Appl. Math. Lett. 26(1), 91–96 (2013)

X.G. Liu, J. Lu, Least squares based iterative identification for a class of multirate systems. Automatica 46(3), 549–554 (2010)

L. Ljung, System Identification: Theory for the User, 2nd edn. (Prentice Hall, Englewood Cliffs, 1999)

Y.W. Mao, F. Ding, A novel data filtering based multi-innovation stochastic gradient algorithm for Hammerstein nonlinear systems. Digit. Signal Process. 46, 215–225 (2015)

P. Qin, R. Nishii, Z.J. Yang, Selection of NARX models estimated using weighted least squares method via GIC-based method and l1-norm regularization methods. Nonlinear Dyn. 70(3), 1831–1846 (2012)

Y. Shi, T. Chen, Optimal design of multi-channel transmultiplexers with stopband energy and passband magnitude constraints. IEEE Trans. Circuits Syst. II Analog Digit. Signal Process. 50(9), 659–662 (2003)

Y. Shi, B. Yu, Robust mixed H-2/H-infinity control of networked control systems with random time delays in both forward and backward communication links. Automatica 47(4), 754–760 (2011)

Y. Shi, B. Yu, Output feedback stabilization of networked control systems with random delays modeled by Markov chains. IEEE Trans. Autom. Control 54(7), 1668–1674 (2009)

J. Vörös, Modelling and identification of Wiener systems with two-segment nonlinearities. IEEE Trans. Control Syst. Technol. 11(2), 253–265 (2003)

J. Vörös, Modeling and parameter identification of systems with multi-segment piecewise-linear characteristics. IEEE Trans. Autom. Control 47(1), 184–188 (2002)

D.Q. Wang, Least squares-based recursive and iterative estimation for output error moving average systems using data filtering. IET Control Theory Appl. 5(14), 1648–1657 (2011)

D.Q. Wang, F. Ding, Least squares based and gradient based iterative identification for Wiener nonlinear systems. Signal Process. 91(5), 1182–1189 (2011)

X.H. Wang, F. Ding, Convergence of the recursive identification algorithms for multivariate pseudo-linear regressive systems. Int. J. Adapt. Control Signal Process. 30 (2016). doi:10.1002/acs.2642

X.H. Wang, F. Ding, Recursive parameter and state estimation for an input nonlinear state space system using the hierarchical identification principle. Signal Process. 117, 208–218 (2015)

D.Q. Wang, Y.P. Gao, Recursive maximum likelihood identification method for a multivariable controlled autoregressive moving average system. IMA J. Math. Control Inf. (2015). doi:10.1093/imamci/dnv021

D.Q. Wang, H.B. Liu, F. Ding, Highly efficient identification methods for dual-rate Hammerstein systems. IEEE Trans. Control Syst. Technol. 23(5), 1952–1960 (2015)

C. Wang, T. Tang, Recursive least squares estimation algorithm applied to a class of linear-in-parameters output error moving average systems. Appl. Math. Lett. 29, 36–41 (2014)

C. Wang, T. Tang, Several gradient-based iterative estimation algorithms for a class of nonlinear systems using the filtering technique. Nonlinear Dyn. 77(3), 769–780 (2014)

D.Q. Wang, W. Zhang, Improved least squares identification algorithm for multivariable Hammerstein systems. J. Frankl. Inst. Eng. Appl. Math. 352(11), 5292–5370 (2015)

A. Wills, T.B. Schön, L. Ljung, B. Ninness, Identification of Hammerstein–Wiener models. Automatica 49(1), 70–81 (2013)

D.Q. Zhu, X. Hua, B. Sun, A neurodynamics control strategy for real-time tracking control of autonomous underwater vehicles. J. Navig. 67(1), 113–127 (2014)

D.Q. Zhu, H. Huang, S.X. Yang, Dynamic task assignment and path planning of multi-AUV system based on an improved self-organizing map and velocity synthesis method in 3D underwater workspace. IEEE Trans. Cybern. 43(2), 504–514 (2013)

D.Q. Zhu, Q. Liu, Z. Hu, Fault-tolerant control algorithm of the manned submarine with multi-thruster based on quantum behaved particle swarm optimization. Int. J. Control 84(11), 1817–1829 (2012)

Acknowledgments

This work was supported by the National Natural Science Foundation of China (No. 61203111) and the PAPD of Jiangsu Higher Education Institutions.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Ding, F., Wang, X., Chen, Q. et al. Recursive Least Squares Parameter Estimation for a Class of Output Nonlinear Systems Based on the Model Decomposition. Circuits Syst Signal Process 35, 3323–3338 (2016). https://doi.org/10.1007/s00034-015-0190-6

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00034-015-0190-6