Abstract

The main idea of this work is to present a novel scheme based on Bernstein wavelets for the numerical solution of two classes of fractional optimal control problems (FOCPs) and one class of fractional variational problems (FVPs). First, we present an approximation of the fractional derivative using the Laplace transform. Then, the resulting integer-order problems are converted into equivalent variational problems. Using the Bernstein wavelets, activation functions and the Gauss–Legendre integration scheme, the problems are transformed into algebraic systems of equations. Finally, these systems are solved with Newton’s iterative scheme. An error bound for the best approximation is given. We also propose a scheme for determining the number of basis functions necessary to reach a given precision. Numerical experiments are provided to verify that the scheme is applicable and effective for solving FOCPs and FVPs.

1 Introduction

Recently, numerous phenomena in applied science and engineering have been modeled by fractional differential equations. In particular, these equations have appeared in electromagnetics, viscoelasticity, fluid mechanics, electrochemistry, biological population models, signal processing, continuum mechanics, heat transfer in heterogeneous media, ultracapacitors, pharmacokinetics and statistical mechanics (Chen et al. 2013; Jajarmi and Baleanu 2018; Wang and Zhou 2011; Heydari et al. 2016; Popovic et al. 2015).

Therefore, many schemes have been presented for the numerical solution of these problems, for instance, the Adomian decomposition technique (Babolian et al. 2014), the variational iteration technique (Yang et al. 2010), the bivariate Müntz wavelets technique (Rahimkhani and Ordokhani 2020), the fractional alternative Legendre functions technique (Rahimkhani and Ordokhani 2020), the fractional Lucas optimization technique (Dehestani et al. 2022), the fractional Chelyshkov wavelets technique (Rahimkhani et al. 2019) and the orthonormal Bernoulli wavelets neural network technique (Rahimkhani and Ordokhani 2021).

FOCPs are extensions of classical optimal control problems. In such problems, the dynamical system and/or the objective function may involve fractional operators. The main reason to study them is that the behavior of many dynamical systems can be expressed concisely in terms of fractional operators, for instance, in the analog fractional-order controller in temperature and motor control applications (Bohannan 2008), fractional control of heat diffusion systems (Jesus and Machado 2008), a fractional-order HIV-immune system with memory (Ding et al. 2012), a fractional adaptation scheme for lateral control of an autonomous guided vehicle (Suarez et al. 2008), mechanical systems (Kiryakova 1994), automotive vehicle design (Bell 2004), manufacturing processes (Samko et al. 1993), transportation systems (Jajarmi and Baleanu 2018), an HIV/AIDS epidemic model with random testing and contact tracing (Kheiri and Jafari 2019) and physics (Tripathy et al. 2015). FOCPs can be formulated with various definitions of the fractional derivative, such as the Caputo and the Riemann–Liouville derivatives. These problems have been studied by many authors; for example, Agrawal (2004) introduced a general formulation and a numerical technique for FOCPs. Lotfi et al. (2013) applied an approximate direct technique to a general class of FOCPs. Alipour et al. (2013) investigated multi-dimensional FOCPs by using the Bernstein polynomials. Rabiei et al. (2018a) applied fractional-order Boubaker functions to a class of FOCPs. Rahimkhani et al. (2016) used the Bernoulli wavelet method to solve delay FOCPs. Mashayekhi and Razzaghi (2018) proposed a technique based on a hybrid of block pulse functions and Bernoulli polynomials for the approximate solution of FOCPs. Sabermahani et al. (2019) introduced fractional-order Lagrange polynomials and used them to solve FOCPs. Rabiei and Parand (2020) investigated the Chebyshev collocation approach for the numerical solution of FOCPs.

Different numerical schemes have also been introduced for solving FVPs, for example, the Rayleigh–Ritz scheme (Khader 2015), the polynomial basis functions scheme (Lotfi and Yousefi 2013), the fractional finite element scheme (Agrawal 2008), the Müntz–Legendre polynomials scheme (Ordokhani and Rahimkhani 2018), the fractional Jacobi functions scheme (Zaky et al. 2018), the shifted Chebyshev polynomials scheme (Ezz-Eldien et al. 2018) and the modified wavelet scheme (Dehestani et al. 2020).

Wavelets are a special kind of oscillatory function that has been used in time–frequency analysis, fast algorithms, image processing, signal processing and edge extrapolation (Chui 1997). They offer several advantages, for instance, compact support, orthogonality and the ability to represent functions at various levels of resolution. As a useful class of bases, wavelets have been applied to several problems of dynamical systems. For example, Haar wavelets have been used to solve the Riccati differential equation (Li et al. 2014). Müntz–Legendre wavelets have been introduced for the numerical solution of fractional differential equations (FDEs) with delay (Rahimkhani et al. 2018). Bernoulli wavelets have been used for solving variable-order FDEs (Soltanpour Moghadam et al. 2020). Genocchi wavelets have been used for solving various kinds of FDEs with delay (Dehestani et al. 2019). Fractional-order Bernoulli wavelets have been used for the numerical analysis of pantograph FDEs (Rahimkhani et al. 2017). Fractional Chelyshkov wavelets (Rahimkhani et al. 2019) have been introduced for the approximate solution of distributed-order FDEs.

Bernstein wavelets have many useful properties over the interval [0, 1], which can be deduced from the properties of the Bernstein polynomials (Bhatti and Bracken 2007). Over any interval \([\frac{\hat{n}}{2^{k-1}}, \frac{\hat{n}+1}{2^{k-1}}]\), all Bernstein wavelet basis functions vanish at the endpoints, except the first one at \(t=\frac{\hat{n}}{2^{k-1}}\) and the last one at \(t=\frac{\hat{n}+1}{2^{k-1}}\). Moreover, the sum of all the Bernstein wavelets at any point t is \(2^{k-1}\beta _{i, M}\), and every Bernstein wavelet is positive for all real t in the region \(t\in (\frac{\hat{n}}{2^{k-1}}, \frac{\hat{n}+1}{2^{k-1}})\). A simple code written in Mathematica or Maple can be used to obtain all the non-zero Bernstein wavelets of any order m over the interval \(t\in [\frac{\hat{n}}{2^{k-1}}, \frac{\hat{n}+1}{2^{k-1}}]\). The Bernstein wavelets are advantageous for practical computations on account of their intrinsic numerical stability. They have many applications in the numerical solution of different FDEs, fractional integro-differential equations and fractional optimal control problems. Also, the wavelet method is computer oriented; thus, solving a higher-order equation becomes a matter of increasing the dimension. The solution is convergent even if the size of the increment is large. A wavelet basis has two degrees of freedom, which increases the accuracy of the method. The solution is of multiresolution type. Wavelets also have the following properties (Rahimkhani and Ordokhani 2021):

- The basis set can be improved in a systematic way.
- Different resolutions can be used in different regions of space.
- The coupling between different resolution levels is easy.
- There are few topological constraints for increased resolution regions.
- The Laplace operator is diagonally dominant in an appropriate wavelet basis.
- The matrix elements of the Laplace operator are very easy to calculate.
- The numerical effort scales linearly with respect to system size.

Our aim here is to present a new method based on Bernstein wavelets and activation functions for solving FOCPs and FVPs. First, we present an approximation of the fractional derivative using the Laplace transform. Then, the problems under study are converted into equivalent variational problems. Using the Bernstein wavelets and activation functions together with the Gauss–Legendre integration method, the problems are converted into algebraic systems of equations. Finally, these systems are solved with Newton’s iterative technique. Some of the most important advantages of the proposed scheme are the following:

- Easy computation and simple implementation.
- The numerical solution obtained with this method is continuous and differentiable, and it satisfies the initial and boundary conditions.
- No operational matrix is used, which reduces the calculation error and CPU time.
- A small number of Bernstein wavelets is needed to achieve high accuracy and satisfactory results.
- By applying this scheme, the problems under consideration are transformed into a system of algebraic equations that can be solved via a suitable numerical method.
- The approximation is based on a hybrid of Bernstein wavelets and activation functions instead of a linear combination of wavelets, so the approximate solution is more efficient.

This paper is organized as follows. In Sect. 2, we present some preliminaries about Bernstein wavelets and activation functions. In Sect. 3, we describe the problems under study. In Sect. 4, we present a numerical method for solving the fractional optimal control problems and fractional variational problems. In Sect. 5, we give an error bound for the best approximation. In Sect. 6, a criterion for choosing the number of wavelets is presented. In Sect. 7, we report our numerical findings and demonstrate the accuracy of the new numerical scheme on six test examples. Finally, concluding remarks are given in Sect. 8.

2 Preliminaries and Notations

2.1 Bernstein Wavelets

The Bernstein wavelets are introduced over [0, 1) as:

with

where k is any positive integer and determines the number of subintervals, \(n=1, 2, \ldots , 2^{k-1}\) is the index of the subinterval and indicates its location, \(i=0, 1, \ldots , m\) \((m=M-1)\) is the index of the Bernstein polynomial of degree m and \(t \in [0 , 1)\) denotes the time. Also, \(B_{i,m}(t)\) are the Bernstein polynomials over [0, 1], defined as

\(B_{i,m}(t)=\binom{m}{i}t^{i}(1-t)^{m-i}.\)

Bernstein polynomials satisfy the following property (Nemati 2017):
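The construction in this subsection can be sketched in a few lines of Python. Since the displayed definition of the wavelets is not reproduced here, the sketch assumes the form commonly used in the literature: a Bernstein polynomial dilated to the n-th subinterval, multiplied by \(2^{(k-1)/2}\) and by a normalizing coefficient \(\beta _{i,m}\) chosen so that each wavelet has unit \(L^{2}\) norm. The function names and the exact value of \(\beta _{i,m}\) are assumptions of this sketch.

```python
from math import comb, sqrt

def bernstein(i, m, t):
    """Bernstein polynomial B_{i,m}(t) = C(m,i) t^i (1-t)^(m-i) on [0, 1]."""
    return comb(m, i) * t**i * (1.0 - t)**(m - i)

def bernstein_wavelet(n, i, k, M, t):
    """Assumed wavelet: 2^((k-1)/2) * beta * B_{i,m}(2^(k-1) t - n + 1) on the
    n-th subinterval [(n-1)/2^(k-1), n/2^(k-1)), and zero elsewhere (m = M-1)."""
    m = M - 1
    scale = 2 ** (k - 1)
    left, right = (n - 1) / scale, n / scale
    if not (left <= t < right):
        return 0.0
    # Assumed normalization: makes the L2 norm of B_{i,m} on [0, 1] equal to 1.
    beta = sqrt((2 * m + 1) * comb(2 * m, 2 * i)) / comb(m, i)
    return sqrt(scale) * beta * bernstein(i, m, scale * t - n + 1)

# Example: evaluate all wavelets for k = 2, M = 4 at one point.
values = [bernstein_wavelet(n, i, 2, 4, 0.3) for n in (1, 2) for i in range(4)]
```

Only the wavelets attached to the subinterval containing t are non-zero, which is the compact-support property mentioned in the Introduction.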

2.2 Introduction of Activation Functions

In this section, we introduce a new approximation based on two classes of activation functions for obtaining the numerical solution of FOCPs and FVPs. This approximation is better suited to solving equations than simple approximations based on a linear combination of wavelets.

The output of the hybrid of these activation functions with input t and parameter vector C is

Here \(\varTheta\) is a linear combination of the Bernstein wavelets,

and the vector C is given by:

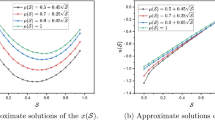

Also, AF(.) is another activation function that acts on the combination of the Bernstein wavelets. Here, we use tanh(t) and arctan(t) as activation functions. Figure 1 shows the structure of the hybrid of these functions. Also, Fig. 2 shows graphs of \(\psi _{n,i, m}(t)\), \(arctan(\psi _{n,i, m}(t))\) and \(tanh(\psi _{n,i, m}(t))\) for \(k=2, M=4\).
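As a rough illustration of this subsection, the sketch below composes an activation function with a linear combination of basis functions. It assumes that the output has the form \(N(t, C)=AF(\varTheta (t, C))\), which is an interpretation of the description above and is not confirmed by a displayed formula. For brevity, plain Bernstein polynomials stand in for the wavelet basis with \(k=1\); the function names are hypothetical.

```python
from math import atan, comb, tanh

def bernstein_basis(m):
    """Callables B_{0,m}, ..., B_{m,m}; a stand-in for the wavelet basis with k = 1."""
    return [lambda t, i=i: comb(m, i) * t**i * (1.0 - t)**(m - i) for i in range(m + 1)]

def theta(t, C, basis):
    """Linear combination sum_j C[j] * basis[j](t)."""
    return sum(c * phi(t) for c, phi in zip(C, basis))

def hybrid_output(t, C, basis, af=tanh):
    """Assumed hybrid output N(t, C) = AF(Theta(t, C)), with AF = tanh or atan."""
    return af(theta(t, C, basis))

basis = bernstein_basis(3)
n_tanh = hybrid_output(0.3, [0.2, -0.1, 0.4, 0.0], basis, tanh)
n_atan = hybrid_output(0.3, [0.2, -0.1, 0.4, 0.0], basis, atan)
```

Both activation functions are smooth and bounded, so N(t, C) stays bounded whatever the size of the coefficients in C.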

3 Problem Statement

In this work, we investigate two classes of FOCPs and one class of FVP.

3.1 Type 1

Consider the following FOCP as

with the following dynamical system

and the initial condition

In this problem, \(b \ne 0\), a(t) and h(t) are continuous functions of t, and \(D^{\nu } y(t)\) is the Caputo fractional derivative of order \(\nu\), defined as (Rahimkhani and Ordokhani 2020)

\(D^{\nu }y(t)=\frac{1}{\Gamma (n- \nu )}\int _{0}^{t}(t-\tau )^{n-\nu -1}y^{(n)}(\tau )d\tau ,\)

\(n-1 < \nu \le n.\)
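The definition above can be checked numerically for \(0< \nu < 1\) (so \(n=1\)). The sketch below is only an illustration: it removes the weak singularity of the kernel with the substitution \(u=(t-\tau )^{1-\nu }\) and then applies a composite midpoint rule. The function name and the choice of quadrature are assumptions of this sketch and are not part of the scheme of this paper. The result is compared with the well-known closed form \(D^{\nu }t^{2}=\frac{\Gamma (3)}{\Gamma (3-\nu )}t^{2-\nu }\).

```python
from math import gamma

def caputo_derivative(dy, t, nu, panels=2000):
    """Caputo derivative of order 0 < nu < 1 at t, given the first derivative dy.

    With u = (t - tau)^(1 - nu) the integral becomes
    p * int_0^{t^(1-nu)} dy(t - u^p) du with p = 1 / (1 - nu),
    which is evaluated here by a composite midpoint rule.
    """
    p = 1.0 / (1.0 - nu)
    upper = t ** (1.0 - nu)
    h = upper / panels
    total = sum(dy(t - ((j + 0.5) * h) ** p) for j in range(panels))
    return p * h * total / gamma(1.0 - nu)

# Example: y(t) = t^2, so dy(tau) = 2 tau; compare with the closed form.
nu, t = 0.5, 0.6
numeric = caputo_derivative(lambda tau: 2.0 * tau, t, nu)
closed_form = gamma(3.0) / gamma(3.0 - nu) * t ** (2.0 - nu)
```

Every evaluation at time t integrates over the whole history [0, t], which is the memory cost that Sect. 4 aims to avoid.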

3.2 Type 2

Consider the following FOCP as

with the following dynamical system

and the boundary conditions

In this problem, \(P, Q, b \ne 0\), and a(t) and h(t) are continuous functions of t.

3.3 Type 3

Consider the following FVP as

with the boundary conditions

4 The Computational Scheme

Because the Caputo fractional derivative is an integral of the solution with respect to time, a numerical method for solving FDEs requires the values at all previous time steps. Storing these data requires a large amount of memory, which may cause memory problems during the computation. Therefore, we first approximate the Caputo fractional derivative by applying the Laplace transform technique, similar to Ren et al. (2016), as follows:

where L denotes the Laplace transform. We linearize the term \(s^{\nu } (0 < \nu \le 1)\) as

Substituting Eq. (16) into Eq. (15), we get

By using the inverse Laplace transform, we conclude
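To make the effect of such a linearization concrete, the sketch below assumes the interpolation \(s^{\nu }\approx \nu s+(1-\nu )\), which after inverting the Laplace transform gives \(D^{\nu }y(t)\approx \nu y'(t)+(1-\nu )(y(t)-y(0))\). This particular form is an assumption of the sketch, since the displayed equations are not reproduced here, and it should be checked against Ren et al. (2016) before use. The comparison uses the known closed form of the Caputo derivative of \(t^{p}\).

```python
from math import gamma

def caputo_linearized(y, dy, t, nu):
    """Assumed integer-order approximation: nu * y'(t) + (1 - nu) * (y(t) - y(0))."""
    return nu * dy(t) + (1.0 - nu) * (y(t) - y(0.0))

def caputo_power_exact(p, t, nu):
    """Closed-form Caputo derivative of t^p (p >= 1): Gamma(p+1)/Gamma(p+1-nu) t^(p-nu)."""
    return gamma(p + 1.0) / gamma(p + 1.0 - nu) * t ** (p - nu)

# Compare both expressions for y(t) = t^2 at t = 0.7 and several orders nu.
y = lambda t: t * t
dy = lambda t: 2.0 * t
gaps = {nu: abs(caputo_linearized(y, dy, 0.7, nu) - caputo_power_exact(2, 0.7, nu))
        for nu in (0.5, 0.9, 0.99, 1.0)}
```

Under this assumption the approximation only needs y and y' at the current time, and it coincides with the classical derivative at \(\nu =1\).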

4.1 Type 1

For solving problem (7)–(9), we approximate function \(D^{\nu }y(t)\) by using Eq. (18) as

Now, we estimate y(t) by hybrid of the activation functions as

According to Eq. (8), we can write

By replacing Eqs. (20) and (21) in Eq. (7), we achieve

By employing the above equation and the Gauss–Legendre integration method, we get

So, the necessary conditions for an extremum of J are

We can solve the previous equations for finding C via Newton’s iterative technique.
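The quadrature step used above can be sketched as follows. The code computes the nodes and weights of the n-point Gauss–Legendre rule by Newton iteration on the Legendre polynomial \(P_{n}\) and maps them to [0, 1]. It is a generic textbook construction in plain Python; the function names are hypothetical and this is not the Mathematica code used for the experiments.

```python
from math import cos, pi

def gauss_legendre(n, a=0.0, b=1.0):
    """Nodes and weights of the n-point Gauss-Legendre rule mapped to [a, b]."""
    nodes, weights = [], []
    for r in range(1, n + 1):
        x = cos(pi * (r - 0.25) / (n + 0.5))        # initial guess for the r-th root
        for _ in range(100):
            p_prev, p = 1.0, x                       # P_0(x), P_1(x)
            for j in range(2, n + 1):                # three-term recurrence up to P_n
                p_prev, p = p, ((2 * j - 1) * x * p - (j - 1) * p_prev) / j
            dp = n * (x * p - p_prev) / (x * x - 1.0)   # derivative P_n'(x)
            dx = p / dp
            x -= dx                                  # Newton update
            if abs(dx) < 1e-15:
                break
        nodes.append(0.5 * (b - a) * x + 0.5 * (a + b))
        weights.append((b - a) / ((1.0 - x * x) * dp * dp))
    return nodes, weights

def integrate(f, n=8, a=0.0, b=1.0):
    """Approximate the integral of f over [a, b] with n Gauss-Legendre points."""
    nodes, weights = gauss_legendre(n, a, b)
    return sum(w * f(t) for t, w in zip(nodes, weights))
```

An n-point rule integrates polynomials up to degree 2n - 1 exactly, so a small number of nodes is usually enough when the integrand of J is smooth.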

4.2 Type 2

For solving problem (10)–(12), we approximate function \(D^{\nu }y(t)\) by using Eq. (18) as

Now, we estimate y(t) by the activation functions as

By making use of Eq. (11), we gain

By inserting Eqs. (26) and (27) in Eq. (10), we have

By using the above equation and the Gauss–Legendre integration method, we have

So, the necessary conditions for an extremum of J are

We can solve the previous equations for finding C via Newton’s iterative technique.

4.3 Type 3

For solving problem (13)–(14), we estimate function \(D^{\nu }y(t)\) by applying Eq. (18) as

Now, we approximate y(t) by activation functions as

By replacing Eqs. (31) and (32) in Eq. (13), we achieve

By employing the Gauss–Legendre integration method and previous equation, we get

So, the necessary conditions for an extremum of J are

We can solve the previous equations for finding C via Newton’s iterative technique.
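The steps of this section can be illustrated end to end on a small toy problem. Everything in the sketch is hypothetical and chosen for brevity: the functional \(J[y]=\int _{0}^{1}(y'^{2}+y^{2})dt\) with \(y(0)=0\), \(y(1)=1\) and \(\nu =1\), the two-parameter trial function \(y(t)=t+t(t-1)\tanh (c_{0}+c_{1}t)\), a fixed 5-point Gauss–Legendre rule, and a Newton iteration on \(\nabla J(C)=0\) with finite-difference derivatives. The exact minimizer of this toy problem is \(\sinh (t)/\sinh (1)\), with minimum \(\cosh (1)/\sinh (1)\).

```python
from math import cosh, sinh, tanh

# Standard 5-point Gauss-Legendre rule on [-1, 1], mapped to [0, 1].
_X = [-0.9061798459386640, -0.5384693101056831, 0.0,
      0.5384693101056831, 0.9061798459386640]
_W = [0.2369268850561891, 0.4786286704993665, 0.5688888888888889,
      0.4786286704993665, 0.2369268850561891]
NODES = [0.5 * (x + 1.0) for x in _X]
WEIGHTS = [0.5 * w for w in _W]

def cost(C):
    """Quadrature approximation of J for y = t + t(t-1) tanh(c0 + c1 t)."""
    c0, c1 = C
    total = 0.0
    for t, w in zip(NODES, WEIGHTS):
        N = tanh(c0 + c1 * t)
        dN = (1.0 - N * N) * c1                      # derivative of N with respect to t
        y = t + t * (t - 1.0) * N
        dy = 1.0 + (2.0 * t - 1.0) * N + t * (t - 1.0) * dN
        total += w * (dy * dy + y * y)
    return total

def gradient(C, h=1e-5):
    """Central-difference gradient of the cost."""
    g = []
    for j in range(len(C)):
        Cp, Cm = list(C), list(C)
        Cp[j] += h
        Cm[j] -= h
        g.append((cost(Cp) - cost(Cm)) / (2.0 * h))
    return g

def newton(C, steps=10, h=1e-4):
    """Newton iteration on grad J(C) = 0 with a finite-difference Hessian (2 unknowns)."""
    C = list(C)
    for _ in range(steps):
        g = gradient(C)
        H = [[0.0, 0.0], [0.0, 0.0]]
        for j in range(2):
            Cp, Cm = list(C), list(C)
            Cp[j] += h
            Cm[j] -= h
            gp, gm = gradient(Cp), gradient(Cm)
            for i in range(2):
                H[i][j] = (gp[i] - gm[i]) / (2.0 * h)
        det = H[0][0] * H[1][1] - H[0][1] * H[1][0]
        C[0] += (-g[0] * H[1][1] + g[1] * H[0][1]) / det   # Cramer's rule for H d = -g
        C[1] += (-g[1] * H[0][0] + g[0] * H[1][0]) / det
    return C

C_opt = newton([0.0, 0.0])
J_exact = cosh(1.0) / sinh(1.0)    # minimum of J attained by sinh(t) / sinh(1)
```

The trial function satisfies both boundary conditions for every C, so the minimization is unconstrained; this is the reason the necessary conditions reduce to a plain algebraic system.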

5 Error Bound for the Best Approximation

The aim of this part is to discuss the error estimate of the current scheme in Sobolev space. The Sobolev norm of integer order \(\tau \ge 0\) over (a, b) is given by (Rahimkhani et al. 2018)

\(\Vert y \Vert _{H^{\tau }(a, b)}=\left( \sum _{j=0}^{\tau }\Vert y^{(j)} \Vert ^{2}_{L^{2}(a, b)}\right) ^{\frac{1}{2}},\)

where \(y^{(j)}\) denotes the distributional derivative of order j of y.

Theorem 1

Consider \(y \in H^{\tau } (0, 1)\) with \(\tau \ge 0\) and \(M \ge \tau ,\) and \({\tilde{y}}\) is the best approximation of y that is obtained by applying the activation functions, then we have the following estimations:

and for \(1 \le s \le \tau\) we obtain

Proof

Consider \(y\in H^{\tau } (0, 1)\) with \(\tau \ge 0\), and let \(P_{M-1}^{2^{k-1}}y\) be the best approximation of y obtained by using the Müntz–Legendre wavelets over (0, 1). Then we get (Rahimkhani et al. 2018)

for \(1 \le s \le \tau\) we have

In the above relations, c depends on \(\tau\).

Since the best approximation is unique (Kreyszig 1978), it yields

Therefore, we conclude the desired results.

5.1 Type 1

Theorem 2

Assume that \(y \in H^{\tau }(0, 1)\) with \(1\le s \le \tau , 0 < \nu \le 1\) and \({\tilde{y}}\) is the best approximation of y given by the activation functions. If

- F satisfies a Lipschitz condition with the Lipschitz constant \(\eta\),
- \(\frac{1}{\vert b \vert }= \kappa\),
- \(\Vert a \Vert _{L^{2}(0, 1)} \le \gamma ,\)

then, for the error bound \(\Vert E \Vert _{L^{2}(0, 1)}\), we obtain

Proof

By using Eqs. (7)–(8) and the aforesaid conditions, we have

Consider the following relation

We obtain

by using Eq. (38), we have

By considering (45)–(46) and Eq. (37), we conclude the required results.

5.2 Type 2

Theorem 3

Let \(y \in H^{\tau }(0, 1)\) with \(1\le s \le \tau , 0 < \nu \le 1\). If the assumptions of Theorem 2 hold and

then we get

Proof

By using Eqs. (10)–(11) and the aforesaid conditions, we have

Due to the definition of Sobolev norm for \(1\le s \le \tau\), we get

Applying Eqs. (38) and (49) yields

By considering (37), (46) and Eq. (48), we conclude the required results.

5.3 Type 3

Theorem 4

Let \(y \in H^{\tau }(0, 1)\) with \(1\le s \le \tau , 0 < \nu \le 1\). If the assumptions of Theorem 2 hold, then we obtain

Proof

By using Eq. (13), we have

From Eqs. (37), (46) and above equation, the desired result is deduced.

6 A Criterion for Choosing the Number of Wavelets

In this part, we introduce an algorithm for choosing the number of basis functions (k, M). To this end, we assume \(y(.) \in C^{2\hat{n}}([0, 1)).\)

6.1 Type 1

By applying the error of \(\hat{n}\)-point Legendre–Gauss quadrature formula given in Morgado et al. (2017), the exact solution of problem (7)–(9) satisfies the following relation as

where

and

Let

and \(Y^{1}_{k, M}(t)=\delta _{0} +tN(t, C)\) be the numerical solution of problem (7)–(9) given via the mentioned scheme in Sect. 4.

Therefore, for a given \(\epsilon > 0\), we can choose k, M such that the following criterion holds:

6.2 Type 2

Similar to type 1, we let

and \(Y^{2}_{k, M}(t)=\delta _{0}+(\delta _{1} -\delta _{0})t +t(t-1)N(t, C)\) is the numerical solution of problem (10)–(12).

So, for a given \(\epsilon > 0\), we can choose k, M such that the following criterion holds:

Remark 1

For Type 3, we can obtain a criterion for choosing k, M similar to type 1 and type 2.
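The two trial forms quoted in this section, \(\delta _{0}+tN(t, C)\) and \(\delta _{0}+(\delta _{1}-\delta _{0})t+t(t-1)N(t, C)\), satisfy the initial and boundary conditions by construction. The short sketch below checks this for an arbitrary callable standing in for N(t, C); the function names and the sample N are hypothetical.

```python
from math import tanh

def trial_initial(N, delta0):
    """Y(t) = delta0 + t N(t): equals delta0 at t = 0 for any N."""
    return lambda t: delta0 + t * N(t)

def trial_boundary(N, delta0, delta1):
    """Y(t) = delta0 + (delta1 - delta0) t + t (t - 1) N(t):
    equals delta0 at t = 0 and delta1 at t = 1 for any N."""
    return lambda t: delta0 + (delta1 - delta0) * t + t * (t - 1.0) * N(t)

# Any callable can play the role of N(t, C) here.
N = lambda t: tanh(3.0 * t - 1.0)
Y1 = trial_initial(N, 2.0)
Y2 = trial_boundary(N, 2.0, 5.0)
```

Because the conditions hold for every parameter vector C, they do not have to be imposed as extra equations in the algebraic system.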

7 Numerical Investigation of the Mentioned Method

In this part, we apply the activation functions scheme to the numerical solution of FOCPs and FVPs in order to demonstrate the applicability and accuracy of the scheme. The computations were performed on a personal computer, and the codes were written in Mathematica 10.

7.1 Type 1

Example 1

Consider the following FOCP as (Alizadeh et al. 2017)

with the dynamical system

The aforesaid problem has the following exact solution for \(\nu =1\):

We solve the aforesaid problem via the mentioned technique in Sect. 4 with activation function arctan(.). The state and control variables are approximated by

Absolute errors of y(t) and z(t) via \(k=1, \nu =1\) and various choices M are expressed in Table 1. From this table, we notice that both the state and the control variables converge as M is increased. Also, the optimal values of cost function and CPU times for \(k=1, M=10\) are compared with Alizadeh et al. (2017) in Table 2. Numerical results of the state and control variables with various cases of \(\nu\) are portrayed in Fig. 3. From this figure, we conclude that by approaching the values of \(\nu\) to 1, the numerical result is convergent to the exact solution.

Example 2

Consider the following FOCP as (Sahu and Saha Ray 2018)

with the dynamical system

The aforesaid problem has the following exact solution:

We solve the aforesaid problem via the mentioned technique in Sect. 4 with activation function tanh(.). The state and control variables are approximated as

In Table 3, we report the optimal values of J and CPU times of the presented scheme with \(k=2, M=3, \nu =1\), together with those of the Legendre wavelet method (LWM), Chebyshev wavelet method (CWM), Laguerre wavelet method (LaWM) and CAS wavelet method (CASWM) (Sahu and Saha Ray 2018). From Table 3, we conclude that the presented method is more accurate than the other methods in Sahu and Saha Ray (2018). Also, numerical results of the state and control variables for different choices of \(\nu\) are plotted in Fig. 4.

7.2 Type 2

Example 3

Consider the following FOCP as (Rabiei et al. 2018b)

with the dynamical system

The aforesaid problem has the following exact solution:

We solve the aforesaid problem via the mentioned technique in Sect. 4 with activation function tanh(.). The state and control variables are approximated by

The optimal values of J and CPU times of the presented scheme with \(k=2, M=8\) and Rabiei et al. (2018b) are illustrated in Table 4. In Table 5, we report the absolute errors of y(t) and z(t) for \(k=1, \nu =1\) and different cases M. From this table, we notice that by increasing the number of basis functions, the absolute error tends to zero. Diagrams of numerical results of the state and control variables for \(k=1, M=10\) and several cases of \(\nu\) are shown in Fig. 5.

Example 4

Consider the following FOCP as (Rabiei et al. 2018b)

with the dynamical system

The aforesaid problem has the following exact solution:

We solve the aforesaid problem via the mentioned technique in Sect. 4 with activation function arctan(.). The state and control variables are approximated by

The optimal values of J and CPU times of the proposed scheme with \(k=1, M=15\) and those of Rabiei et al. (2018b) for several cases of \(\nu\) are summarized in Table 6. In Table 7, we report the absolute errors of the state variable, the optimal values of the cost function and CPU times for \(\nu =1, k=1\) and various choices of M. From this table, it is clear that as the number of basis functions increases, the absolute error tends to zero. Graphs of numerical results of the state and control variables with \(k=1, M=15\) and various cases of \(\nu\) are illustrated in Fig. 6.

7.3 Type 3

Example 5

Consider the following FVP as

with the boundary conditions as

The aforesaid problem has the following exact solution for \(\nu =1\):

We solve the aforesaid problem via the mentioned technique in Sect. 4 with activation function arctan(.). The state and control variables are approximated by

The absolute error behavior for \(k=1, M=2\) is demonstrated in Fig. 7. Also, Fig. 8 demonstrates the behavior of numerical results with \(M=2, k=1\) and different cases of \(\nu\) and the exact solution. This figure demonstrates that the numerical solution is convergent to the exact solution as the value of \(\nu\) approaches 1.

Example 6

Consider the following FVP as (Ordokhani and Rahimkhani 2018; Dehestani et al. 2020; Razzaghi and Yousefi 2000)

with the boundary conditions as

The aforesaid problem has the following exact solution for \(\nu =1\):

We solve the aforesaid problem via the mentioned technique in Sect. 4 with activation function tanh(.). The state and control variables are approximated by

The values of approximate solution of y(t) and the optimal values of cost function of the proposed scheme with \(k=1, M=4\) and LWM (Razzaghi and Yousefi 2000), Müntz–Legendre method (MLM) (Ordokhani and Rahimkhani 2018), modified wavelet method (MWM) (Dehestani et al. 2020) are summarized in Table 8. Also, the approximate solutions of y(t) for different choices of \(\nu\) are demonstrated in Fig. 9. This figure demonstrates that the numerical solution is convergent to the exact solution as the value of \(\nu\) approaches 1.

8 Conclusion and Future Work

In this study, two classes of FOCPs and one class of FVPs have been investigated. A novel method based on Bernstein wavelets and activation functions was used for the numerical solution of such problems. By applying the Laplace transform, fractional-order problems are converted into integer-order problems. Then, we use a hybrid of the Bernstein wavelets and activation functions, the Gauss–Legendre integration method and Newton’s iterative method to obtain the numerical solution. The accuracy of the scheme has been examined on different numerical examples. The results obtained indicate that the technique is effective for solving the intended problems, even when a limited number of Bernstein wavelet basis functions is used. We plan to pursue the following directions in the future:

- This method can be used to solve other problems, such as fractional partial differential equations, two-dimensional FOCPs, fractal-fractional differential equations, fractal-fractional optimal control problems and inverse problems.
- The wavelet basis can be combined with neural networks, least squares support vector regression, etc.
- Stability analysis of the suggested scheme for the numerical approximation of FOCPs is an interesting problem for future work.

References

Agrawal OP (2008) A general finite element formulation for fractional variational problems. J Math Anal Appl 337:1–12

Agrawal OP (2004) A general formulation and solution scheme for fractional optimal control problems. Nonl Dyn 38:323–337

Alipour M, Rostamy D, Baleanu D (2013) Solving multidimensional fractional optimal control problems with inequality constraint by Bernstein polynomials operational matrices. J Vib Control 19:2523–2540

Alizadeh A, Effati S (2016) An iterative approach for solving fractional optimal control problems. J Vib Control 1:1–19

Alizadeh A, Effati S, Heydari A (2017) Numerical schemes for fractional optimal control problems. J Dyn Syst Meas Control 139(8):081002

Babolian E, Vahidi AR, Shoja A (2014) An efficient method for nonlinear fractional differential equations: combination of the Adomian decomposition method and spectral method. Indian J Pure Appl Math 45:1017–1028

Bell WW (2004) Special functions for scientists and engineers. Dover Publications Inc, Mineola, NY

Bhatti MI, Bracken P (2007) Solutions of differential equations in a Bernstein polynomial basis. J Comput Appl Math 205:272–280

Bohannan GW (2008) Analog fractional order controller in temperature and motor control applications. J Vib Control 14(9–10):1487–1498

Chen S, Liu F, Turner I, Anh V (2013) An implicit numerical method for the two-dimensional fractional percolation equation. Appl Math Comput 219:4322–4331

Chui CK (1997) Wavelets. A mathematical tool for signal analysis. SIAM monographs on Mathematical Modeling and Computation. Philadelphia, SIAM. 13

Dehestani H, Ordokhani Y, Razzaghi M (2020) Modified wavelet method for solving fractional variational problems. J Vib Control 27(5–6):582–596

Dehestani H, Ordokhani Y, Razzaghi M (2019) On the applicability of Genocchi wavelets method for different kinds of fractional order differential equations with delay. Numer Linear Algebra Appl 26(5):e2259

Dehestani H, Ordokhani Y, Razzaghi M (2022) Fractional-Lucas optimization method for evaluating the approximate solution of the multi-dimensional fractional differential equations. Eng Comput 38:481–495

Ding Y, Wang Z, Ye H (2012) Optimal control of a fractional-order HIV-immune system with memory. IEEE Trans Contr Syst Tech 30:763–769

Ezz-Eldien SS, Bhrawy AH, ElKalaawy AA (2018) Direct numerical method for isoperimetric fractional variational problems based on operational matrix. J Vib Control 24(14):3063–3076

Freed AD, Diethelm K (2006) Fractional calculus in biomechanics: A 3d viscoelastic model using regularized fractional derivative kernels with application to the human calcaneal fat pad. Biomech Model Mechanobiol 5:203–215

Heydari MH, Hooshmandasl MR, Maalek Ghaini FM, Cattani C (2016) Wavelets method for solving fractional optimal control problems. Appl Math Comput 286:139–154

Jajarmi A, Baleanu D (2018) Suboptimal control of fractional-order dynamic systems with delay argument. J Vib Control 24(12):1

Jesus IS, Machado JAT (2008) Fractional control of heat diffusion systems. Nonlinear Dyn 54(3):263–282

Khader MM (2015) An efficient approximate method for solving fractional variational problems. Appl Math Model 39:1643–1649

Kheiri H, Jafari M (2019) Fractional optimal control of an HIV/AIDS epidemic model with random testing and contact tracing. J Appl Math Comput 60:387–411

Kiryakova VS (1994) Generalized fractional calculus and applications. Longman Sci. Techn., Harlow, John Wiley and Sons, New York

Kreyszig E (1978) Introductory Functional Analysis with Applications. Wiley, New York

Li Y, Sun N, Zheng B, Wang Q, Zhang Y (2014) Wavelet operational matrix method for solving the Riccati differential equation. Commun Nonlinear Sci Numer Simul 19:483–493

Lotfi A, Yousefi SA (2013) A numerical technique for solving a class of fractional variational problems. J Comput Appl Math 237:633–643

Lotfi A, Yousefi SA, Dehghan M (2013) Numerical solution of a class of fractional optimal control problems via the Legendre orthonormal basis combined with the operational matrix and the Gauss quadrature rule. J Comput Appl Math 250:143–160

Mashayekhi S, Razzaghi M (2018) An approximate method for solving fractional optimal control problems by hybrid functions. J Vib Control 24(9):1621–1631

Morgado ML, Rebelo M, Ferras LL, Ford NJ (2017) Numerical solution for diffusion equations with distributed order in time using a Chebyshev collocation method. Appl Numer Math 114:108–123

Nemati A (2017) Numerical solution of 2D fractional optimal control problems by the spectral method combined with Bernstein operational matrix. Int J Control 91(12):2632–2645

Ordokhani Y, Rahimkhani P (2018) A numerical technique for solving fractional variational problems by Müntz-Legendre polynomials. J Appl Math Comput 58:75–94

Popovic JK, Spasic DT, Tosic J et al (2015) Fractional model for pharmacokinetics of high dose methotrexate in children with acute lymphoblastic leukaemia. Commun Nonlinear Sci Numer Simul 22:451–471

Rabiei K, Ordokhani Y, Babolian E (2018a) Fractional-order Boubaker functions and their applications in solving delay fractional optimal control problems. J Vib Control 24(15):3370–3383

Rabiei K, Ordokhani Y, Babolian E (2018b) Numerical solution of 1D and 2D fractional optimal control of system via Bernoulli polynomials. Int J Appl Comput Math 7

Rabiei K, Parand K (2020) Collocation method to solve inequality constrained optimal control problems of arbitrary order. Eng Comput 36:115–125

Rahimkhani P, Ordokhani Y (2020) Approximate solution of nonlinear fractional integro-differential equations using fractional alternative Legendre functions. J Comput Appl Math 365:112365

Rahimkhani P, Ordokhani Y (2021) Orthonormal Bernoulli wavelets neural network method and its application in astrophysics. Comput Appl Math 40(78):1–24

Rahimkhani P, Ordokhani Y (2020) The bivariate Müntz wavelets composite collocation method for solving space-time fractional partial differential equations. Comput Appl Math 39:115

Rahimkhani P, Ordokhani Y, Babolian E (2016) An efficient approximate method for solving delay fractional optimal control problems. Nonl Dyn 86:1649–1661

Rahimkhani P, Ordokhani Y, Babolian E (2018) Müntz-Legendre wavelet operational matrix of fractional-order integration and its applications for solving the fractional pantograph differential equations. Numer Algorithms 77(4):1283–1305

Rahimkhani P, Ordokhani Y, Babolian E (2017) Numerical solution of fractional pantograph differential equations by using generalized fractional-order Bernoulli wavelet. J Comput Appl Math 309:493–510

Rahimkhani P, Ordokhani Y, Lima PM (2019) An improved composite collocation method for distributed-order fractional differential equations based on fractional Chelyshkov wavelets. Appl Numer Math 145:1–27

Razzaghi M, Yousefi S (2000) Legendre wavelets direct method for variational problems. Math Comput Simul 53:185–192

Ren J, Sun Z, Dai W (2016) New approximations for solving the Caputo-type fractional partial differential equations. Appl Math Model 40(4):2625–2636

Sabermahani S, Ordokhani Y, Yousefi SA (2019) Fractional order Lagrange polynomials: an application for solving delay fractional optimal control problems. Trans Inst Meas Control 41(11):2997–3009

Sahu PK, Saha Ray S (2018) Comparison on wavelets techniques for solving fractional optimal control problems. J Vib Control 24(6):1185–1201

Samko S, Kilbas AA, Marichev O (1993) Fractional integrals and derivatives: theory and applications. Gordon and Breach, Yverdon

Soltanpour Moghadam A, Arabameri M, Baleanu D, Barfeie M (2020) Numerical solution of variable fractional order advection-dispersion equation using Bernoulli wavelet method and new operational matrix of fractional order derivative. Math Methods Appl Sci 43:3936–3953

Suarez IJ, Vinagre BM, Chen YQ (2008) A fractional adaptation scheme for lateral control of an AGV. J Vib Control 14(9–10):1499–1511

Tripathy MC, Mondal D, Biswas K, Sen S (2015) Design and performance study of phase-locked loop using fractional-order loop filter. Int J Circuit Theory Appl 43(6):776–792

Wang JR, Zhou Y (2011) A class of fractional evolution equations and optimal controls. Nonlinear Anal Real World Appl 12:262–272

Yang S, Xiao A, Su H (2010) Convergence of the variational iteration method for solving multi-order fractional differential equations. Comput Math Appl 60:2871–2879

Zaky MA, Doha EH, Tenreiro Machado JA (2018) A spectral framework for fractional variational problems based on fractional Jacobi functions. Appl Numer Math 132:51–72

Acknowledgements

The second author is supported by Alzahra University within project 99/1/159. We also express our sincere thanks to the anonymous referees for valuable suggestions that improved the paper.

About this article

Cite this article

Rahimkhani, P., Ordokhani, Y. A Modified Numerical Method Based on Bernstein Wavelets for Numerical Assessment of Fractional Variational and Optimal Control Problems. Iran J Sci Technol Trans Electr Eng 46, 1041–1056 (2022). https://doi.org/10.1007/s40998-022-00522-4
