Abstract

This paper investigates the numerical solution of multi-dimensional fractional differential equations by applying fractional-Lucas functions (FLFs) and an optimization method. First, the FLFs and their properties are introduced. Then, based on the modified operational matrix of the derivative and the pseudo-operational matrix of the fractional derivative, we present the framework of the numerical technique. We also derive an upper bound for the error of the computational technique. Finally, we illustrate the proposed scheme by solving several kinds of problems. Our computational results demonstrate that the proposed method is powerful and applicable to nonlinear multi-order fractional differential equations, time-fractional convection–diffusion equations with variable coefficients, and time-space fractional diffusion equations with variable coefficients.


1 Introduction

In recent years, fractional calculus has attracted considerable interest in many fields of science and engineering. For instance, it has been used in modeling the nonlinear oscillation of earthquakes, fluid-dynamic traffic, continuum and statistical mechanics, signal processing, control theory, heat transfer in heterogeneous media, ultracapacitors, and beam heating [1,2,3,4,5].

Among fractional-order problems, fractional partial differential equations have received considerable attention from many researchers. For most of these problems, the solution cannot easily be obtained by analytical methods, so many researchers have turned to numerical approaches to obtain approximate solutions. A wide variety of numerical methods have been introduced, such as the radial basis functions method [6], the adaptive finite element method [7], and optimization methods [8].

In recent years, fractional-order functions have received considerable attention for solving various fractional problems. Their properties greatly simplify the numerical technique and yield approximate solutions of high precision. These functions have drawn the attention of many mathematicians and have led to the emergence of flexible approaches for solving fractional-order problems, such as those based on fractional-order generalized Laguerre functions [9], fractional-order Genocchi functions [10], fractional-order Legendre functions [11], fractional-order Legendre–Laguerre functions [12], fractional-order Bessel wavelet functions [13], and Genocchi-fractional Laguerre functions [14]. For other papers on this subject, see [15, 16].

This paper presents a numerical optimization technique for solving nonlinear multi-order fractional differential equations and time-space fractional diffusion equations. To this end, we apply FLFs together with an optimization method. One advantage of these functions is that the coefficients of their individual terms are integers, which helps to reduce computational error. The properties of fractional-Lucas functions and their operational matrices make it possible to obtain accurate results.

The rest of the paper is organized as follows. In Sect. 2, we describe the fractional-Lucas functions, their properties, and function approximation. Sections 3 and 4 are devoted to obtaining the modified operational matrix of the derivative and the pseudo-operational matrix of the fractional derivative, respectively. The implementation of the proposed method for two classes of problems is presented in Sect. 5. Convergence analysis and error estimates for the proposed method are discussed in Sect. 6. Section 7 contains numerical experiments demonstrating the accuracy of the proposed algorithms. Conclusions are given in the last section.

In this paper, we consider two classes of fractional differential equations:

- Nonlinear multi-order fractional differential equations [17, 18]:

$$\begin{aligned}&D^{\nu }u(x)+D^{\gamma }u(x)={\mathcal {F}}(x,u,u'),\\&\quad 0\le x \le 1,\quad 1<\nu \le 2,\quad 0<\gamma \le 1, \end{aligned}\tag{1}$$

with the initial conditions

$$u(0)=u_{0}, \quad u'(0)=u_{1},$$

where the parameters \(u_{0}\) and \(u_{1}\) are constants and \({\mathcal {F}}\) is a known linear or nonlinear function.

- Nonlinear time-space fractional diffusion equations [19]:

$$\begin{aligned}&D^{\nu }_{t}u(x,t)+D^{\gamma }_{x}u(x,t) ={\mathcal {G}}\left(x,t,u,\frac{\partial u}{\partial x},\frac{\partial ^{2} u}{\partial x^{2}}\right),\\&\quad 0\le x, t\le 1,\quad 0<\nu \le 1,\quad 0<\gamma \le 2, \end{aligned}\tag{2}$$

with the initial and boundary conditions

$$\begin{aligned}&u(x,0)=f_{0}(x),\quad 0\le x \le 1,\\&u(0,t)=\varphi _{0}(t),\quad u(1,t)=\varphi _{1}(t), \quad 0\le t \le 1, \end{aligned}$$

where \(f_{0}(x)\), \(\varphi _{0}(t)\) and \(\varphi _{1}(t)\) are known functions.

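Here, \(D^{\nu }\) and \(D^{\gamma }\) denote Caputo fractional derivatives. For \(q-1<\nu \le q\), \(q\in {\mathbb {N}}\), this operator is defined as follows [12]:

$$D^{\nu }u(x)=\begin{cases}\dfrac{1}{\Gamma (q-\nu )}\displaystyle \int _{0}^{x}(x-s)^{q-\nu -1}u^{(q)}(s)\,ds, & q-1<\nu <q,\\ u^{(q)}(x), & \nu =q.\end{cases}$$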
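The matrix constructions in Sects. 3 and 4 rely on how the Caputo derivative acts on power functions. The sketch below is a minimal illustration and not code from the paper: the hypothetical helper `caputo_power` uses the standard closed form \(D^{\nu }x^{\beta }=\frac{\Gamma (\beta +1)}{\Gamma (\beta +1-\nu )}x^{\beta -\nu }\) (with \(D^{\nu }x^{\beta }=0\) for integer \(\beta <\lceil \nu \rceil\)), and the hypothetical helper `caputo_quadrature` cross-checks it against the integral definition for \(0<\nu <1\), using Gauss–Legendre quadrature after the substitution \(y=(x-s)^{1-\nu }\), which removes the weak singularity. The check assumes a smooth first derivative `df`.

```python
import math
import numpy as np

def caputo_power(beta, nu, x):
    """Closed-form Caputo derivative of order nu of x**beta (hypothetical helper)."""
    n = math.ceil(nu)
    if float(beta).is_integer() and beta < n:
        return 0.0 * x                      # polynomials of degree < ceil(nu) are annihilated
    if beta <= n - 1:
        raise ValueError("closed form assumes beta > ceil(nu) - 1")
    return math.gamma(beta + 1) / math.gamma(beta + 1 - nu) * x ** (beta - nu)

def caputo_quadrature(df, nu, x, n_quad=40):
    """Caputo derivative for 0 < nu < 1 from the integral definition.

    D^nu u(x) = 1/Gamma(1-nu) * int_0^x (x-s)^(-nu) u'(s) ds.  With
    y = (x-s)^(1-nu) this equals 1/Gamma(2-nu) * int_0^{x^(1-nu)} u'(x - y^(1/(1-nu))) dy,
    whose integrand has no singularity, so Gauss-Legendre quadrature applies.
    """
    t, w = np.polynomial.legendre.leggauss(n_quad)
    y_max = x ** (1.0 - nu)
    y = 0.5 * y_max * (t + 1.0)             # map nodes from [-1, 1] to [0, y_max]
    w = 0.5 * y_max * w
    return np.sum(w * df(x - y ** (1.0 / (1.0 - nu)))) / math.gamma(2.0 - nu)

# u(x) = x**2, u'(x) = 2x, order nu = 0.5, evaluated at x = 0.7
exact = caputo_power(2, 0.5, 0.7)
approx = caputo_quadrature(lambda s: 2.0 * s, 0.5, 0.7)
print(exact, approx)
```

For \(\nu =0.5\) the transformed integrand is a polynomial, so the quadrature agrees with the closed form to rounding error; for other orders it is only an approximation.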

2 Fractional-Lucas functions

Fractional-Lucas functions are constructed explicitly by applying the change of variable \(x \rightarrow x^{\alpha }\,(\alpha >0)\) to the Lucas polynomials [20] on the interval [0, 1] as follows:

These functions are defined by the following second-order linear recursive formulas:
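As an illustration, the sketch below assumes the standard Lucas-polynomial definitions, \(L_{0}=2\), \(L_{1}(s)=s\), \(L_{m}(s)=sL_{m-1}(s)+L_{m-2}(s)\), and \(L_{m}(s)=\sum _{k=0}^{\lfloor m/2\rfloor }\frac{m}{m-k}\binom{m-k}{k}s^{m-2k}\) for \(m\ge 1\), with \(s=x^{\alpha }\). It evaluates the FLFs both ways and checks that they agree; all helper names are hypothetical. The integer coefficients mentioned in the Introduction are visible in `lucas_coefficients`.

```python
import math
import numpy as np

def lucas_coefficients(m):
    """Integer coefficients {power of s: coefficient} of the m-th Lucas polynomial."""
    if m == 0:
        return {0: 2}
    return {m - 2 * k: m * math.comb(m - k, k) // (m - k) for k in range(m // 2 + 1)}

def flf_explicit(m, alpha, x):
    """FL_m(x) from the explicit sum with s = x**alpha (hypothetical helper)."""
    x = np.asarray(x, dtype=float)
    return sum(c * x ** (p * alpha) for p, c in lucas_coefficients(m).items())

def flf_recurrence(M, alpha, x):
    """FL_0..FL_M from FL_0 = 2, FL_1 = s, FL_m = s FL_{m-1} + FL_{m-2}."""
    s = np.asarray(x, dtype=float) ** alpha
    vals = [2.0 * np.ones_like(s), s]
    for m in range(2, M + 1):
        vals.append(s * vals[m - 1] + vals[m - 2])
    return np.array(vals[: M + 1])

x = np.linspace(0.0, 1.0, 5)
explicit = np.array([flf_explicit(m, 0.5, x) for m in range(5)])
print(np.allclose(explicit, flf_recurrence(4, 0.5, x)))
print(lucas_coefficients(4))   # {4: 1, 2: 4, 0: 2}, i.e. FL_4 = s^4 + 4 s^2 + 2
```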

A given function f belonging to \(L^{2}([0,1])\) can be expanded by FLFs as:

By truncating the above series, we have

and the coefficient vector is computed as follows:
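Because FLFs are not orthogonal on [0, 1], a common way to compute the coefficients, assumed here, is the \(L^{2}\) projection \(C^{T}=\langle f,{\mathbf {FL}}^{\alpha }\rangle Q^{-1}\) with the Gram matrix \(Q=\int _{0}^{1}{\mathbf {FL}}^{\alpha }(x){\mathbf {FL}}^{\alpha }(x)^{T}dx\). The sketch evaluates both integrals with Gauss–Legendre quadrature mapped to [0, 1]; the function names are hypothetical.

```python
import numpy as np

def flf_basis(M, alpha, x):
    """Rows FL_0..FL_M evaluated at x, via the Lucas recurrence in s = x**alpha."""
    s = np.asarray(x, dtype=float) ** alpha
    vals = [2.0 * np.ones_like(s), s]
    for m in range(2, M + 1):
        vals.append(s * vals[m - 1] + vals[m - 2])
    return np.array(vals[: M + 1])

def gauss_legendre_01(n):
    """Gauss-Legendre nodes and weights mapped from [-1, 1] to [0, 1]."""
    t, w = np.polynomial.legendre.leggauss(n)
    return 0.5 * (t + 1.0), 0.5 * w

def flf_coefficients(f, M, alpha, n_quad=40):
    """L2-projection coefficients C with f(x) ~ C @ FL(x)."""
    x, w = gauss_legendre_01(n_quad)
    B = flf_basis(M, alpha, x)              # shape (M+1, n_quad)
    Q = (B * w) @ B.T                       # Gram matrix: int FL FL^T dx
    rhs = (B * w) @ f(x)                    # int f FL dx
    return np.linalg.solve(Q, rhs)

C = flf_coefficients(lambda x: x ** 3, M=3, alpha=1.0)
print(np.round(C, 10))                      # x^3 = FL_3 - 3 FL_1 when alpha = 1
```

For \(\alpha <1\) the integrands contain non-integer powers of x, so Gauss–Legendre quadrature is only algebraically accurate near x = 0; more nodes or a graded rule would be needed there.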

3 Modified operational matrix of derivative

In this section, we present the technique for calculating the modified operational matrix of the derivative. The result is stated in the following theorem.

Theorem 1

Let \({\mathbf {FL}}^{\alpha }(x)\) be the fractional-Lucas vector given in Eq. (5). Then the modified operational matrix of the derivative for \(\alpha =1\) is defined as:

where \({\mathbf {\Upsilon }}(\alpha ,x)\) is the \(({\mathfrak {M}}_{1}+1)\times ({\mathfrak {M}}_{1}+1)\) modified operational matrix of the derivative for FLFs.

Proof

The following relation for FLFs holds [21]:

Using the above relation, we have

Therefore, the modified operational matrix is obtained as follows:

\(\square\)
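As an illustration under our own assumptions: for \(\alpha =1\) the FLFs are ordinary Lucas polynomials, so writing \({\mathbf {FL}}(x)=PX(x)\) with monomials \(X(x)=[1,x,\dots ,x^{M}]^{T}\) and \(X'(x)=DX(x)\) gives a constant matrix \(PDP^{-1}\) with \(\frac{d}{dx}{\mathbf {FL}}(x)=PDP^{-1}{\mathbf {FL}}(x)\). This change-of-basis construction is not the relation from [21] used in the proof, and we do not claim it coincides entry for entry with the paper's modified matrix \({\mathbf {\Upsilon }}\); the helper names are hypothetical.

```python
import math
import numpy as np

def lucas_monomial_matrix(M):
    """P[m, p] = coefficient of x**p in the m-th Lucas polynomial (integers)."""
    P = np.zeros((M + 1, M + 1))
    P[0, 0] = 2.0
    for m in range(1, M + 1):
        for k in range(m // 2 + 1):
            P[m, m - 2 * k] = m * math.comb(m - k, k) // (m - k)
    return P

def derivative_matrix(M):
    """Constant matrix Ups with d/dx FL(x) = Ups @ FL(x) when alpha = 1."""
    P = lucas_monomial_matrix(M)
    D = np.diag(np.arange(1.0, M + 1), k=-1)   # D[p, p-1] = p: d/dx x**p = p x**(p-1)
    return P @ D @ np.linalg.inv(P)

def flf(M, x):
    """FL(x) = P @ [1, x, ..., x**M] for a scalar x."""
    return lucas_monomial_matrix(M) @ x ** np.arange(M + 1)

Ups = derivative_matrix(2)
print(Ups)   # for M = 2: FL_1' = FL_0 / 2 and FL_2' = 2 FL_1
```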

4 Pseudo-operational matrix of the fractional derivative

In this section, we present the methodology for obtaining the pseudo-operational matrix of the Caputo fractional derivative of order \(q-1<\nu \le q\). To this end, we define

where

According to the properties of the Caputo fractional derivative, we have

Using the properties of the Caputo fractional derivative for \(m=\lceil \frac{\nu }{\alpha } \rceil ,\dots ,{\mathfrak {M}}_{1}\), each component of the pseudo-operational matrix of the fractional derivative \({\mathbf {\Theta }}^{q}(\alpha ,\nu ,x)\) is computed as follows:

where

Next, by expanding \(x^{(m-2k-q)\alpha }\) with \({\mathfrak {M}}_{1}\)-terms of FLFs, we get

Combining the two relations above, we have

Accordingly, the vector form of the above formula can be written as follows:

As a special case, for \({\mathfrak {M}}_{1}=2,\) \(\alpha =0.5\) and \(\nu =0.5\), the pseudo-operational matrix of the fractional derivative is obtained as follows:
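The sketch below is a simplified stand-in rather than the construction of Sect. 4: it applies the closed-form Caputo rule term by term to each \(FL_{m}\) and then projects the result onto the FLF basis by least squares, whereas Sect. 4 expands \(x^{(m-2k-q)\alpha }\) in FLFs. When every \(D^{\nu }FL_{m}\) already lies in the span of the basis, as for \({\mathfrak {M}}_{1}=2\), \(\alpha =\nu =0.5\) (where \(FL_{0}=2\), \(FL_{1}=x^{0.5}\), \(FL_{2}=x+2\)), the projection is exact; otherwise it is only an approximation. All names are hypothetical.

```python
import math
import numpy as np

def lucas_coefficients(m):
    """{power of s: integer coefficient} for the m-th Lucas polynomial."""
    if m == 0:
        return {0: 2}
    return {m - 2 * k: m * math.comb(m - k, k) // (m - k) for k in range(m // 2 + 1)}

def caputo_power(beta, nu, x):
    """Closed-form Caputo derivative of x**beta (beta integer or beta > ceil(nu) - 1)."""
    if float(beta).is_integer() and beta < math.ceil(nu):
        return 0.0 * x
    return math.gamma(beta + 1) / math.gamma(beta + 1 - nu) * x ** (beta - nu)

def fractional_derivative_matrix(M, alpha, nu, n_quad=60):
    """Theta with D^nu FL(x) ~ Theta @ FL(x); row m is the projection of D^nu FL_m."""
    t, w = np.polynomial.legendre.leggauss(n_quad)
    x, w = 0.5 * (t + 1.0), 0.5 * w                   # nodes inside (0, 1)
    B = np.array([sum(c * x ** (p * alpha) for p, c in lucas_coefficients(m).items())
                  for m in range(M + 1)])
    DB = np.array([sum(c * caputo_power(p * alpha, nu, x) for p, c in lucas_coefficients(m).items())
                   for m in range(M + 1)])
    Q = (B * w) @ B.T                                 # discrete Gram matrix
    return np.linalg.solve(Q, (B * w) @ DB.T).T

Theta = fractional_derivative_matrix(2, 0.5, 0.5)
print(np.round(Theta, 6))
```

In this case \(D^{0.5}FL_{1}=\Gamma (1.5)=\frac{\Gamma (1.5)}{2}FL_{0}\) and \(D^{0.5}FL_{2}=\frac{1}{\Gamma (1.5)}FL_{1}\), which the computed matrix reproduces.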

5 Fractional-Lucas optimization method

In this section, we present the proposed approach for the two classes of fractional differential equations introduced in Sect. 1.

5.1 Implementation of the method for fractional differential equations

In this subsection, we formulate an optimization problem for solving the fractional differential equation (1). To this end, we expand the function u(x) in terms of FLFs as follows:

Using the modified operational matrix of the derivative, for \(\alpha =1\) we have

and for \(0<\alpha <1\), we obtain

On the other hand, using the pseudo-operational matrix of the fractional derivative, we obtain

and

Substituting Eqs. (13)–(17) into Eq. (1) yields the residual function \({\mathbf {R}}(x,U)\):

Thus, using the above relation and the initial conditions, we obtain the following optimization problem for \(\alpha =1\):

and for \(0<\alpha <1\), we deduce

To solve the above problem and obtain the optimal values of the elements of the unknown vector U, for \(\alpha =1\) we consider

Also, for \(0<\alpha <1\), we get

Then, by applying the Lagrange multipliers method, the necessary conditions can be written as:

Solving the system of algebraic equations given by Eq. (23) determines the unknown vector U, and hence the approximate solution.
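The optimization step can be illustrated on a small linear case. The sketch below is our own minimal example, not the paper's code: it takes \(\alpha =1\), \(\nu =2\), \(\gamma =1\) in Eq. (1), so all derivatives follow from a constant derivative matrix, and a hypothetical right-hand side \({\mathcal {F}}(x)=2x+1\), manufactured so that \(u(x)=x^{2}-x\) (the exact solution quoted in Example 2) satisfies \(u''+u'={\mathcal {F}}\), \(u(0)=0\), \(u'(0)=-1\). The objective \(\int _{0}^{1}R^{2}dx\) is minimized subject to the initial conditions by solving the Lagrange-multiplier (KKT) linear system; a nonlinear \({\mathcal {F}}\) would require an iterative solver instead. The function name `solve_linear_fde` is hypothetical.

```python
import math
import numpy as np

def lucas_monomial_matrix(M):
    """P[m, p] = coefficient of x**p in the m-th Lucas polynomial."""
    P = np.zeros((M + 1, M + 1))
    P[0, 0] = 2.0
    for m in range(1, M + 1):
        for k in range(m // 2 + 1):
            P[m, m - 2 * k] = m * math.comb(m - k, k) // (m - k)
    return P

def solve_linear_fde(M, F, u0, u1, n_quad=30):
    """Minimise int_0^1 (u'' + u' - F)^2 dx subject to u(0) = u0, u'(0) = u1."""
    P = lucas_monomial_matrix(M)
    Ups = P @ np.diag(np.arange(1.0, M + 1), k=-1) @ np.linalg.inv(P)  # d/dx FL = Ups @ FL

    def basis(x):
        """Columns FL(x_j) for an array of points x."""
        return P @ np.vander(np.atleast_1d(x), M + 1, increasing=True).T

    t, w = np.polynomial.legendre.leggauss(n_quad)
    x, w = 0.5 * (t + 1.0), 0.5 * w
    phi = (Ups @ Ups + Ups) @ basis(x)          # residual R(x) = C @ phi(x) - F(x)
    H = (phi * w) @ phi.T                       # quadratic part of int R^2
    g = (phi * w) @ F(x)                        # linear part of int R^2
    A = np.vstack([basis(0.0)[:, 0], (Ups @ basis(0.0))[:, 0]])   # rows give u(0), u'(0)
    b = np.array([u0, u1])
    # Stationarity of int R^2 + lam @ (A C - b):  2 H C + A^T lam = 2 g,  A C = b
    K = np.block([[2.0 * H, A.T], [A, np.zeros((2, 2))]])
    sol = np.linalg.solve(K, np.concatenate([2.0 * g, b]))
    C = sol[: M + 1]
    return C, (lambda x: C @ basis(x))

C, u = solve_linear_fde(2, lambda x: 2.0 * x + 1.0, u0=0.0, u1=-1.0)
print(C, u(np.array([0.0, 0.5, 1.0])))
```

With \({\mathfrak {M}}_{1}=2\) the minimizer reproduces \(x^{2}-x\) exactly, because the exact solution lies in the span of \(FL_{0},FL_{1},FL_{2}\) and the residual can vanish.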

5.2 Implementation of the method for fractional diffusion equations

This subsection introduces the numerical optimization technique for the fractional diffusion equation (2). To this end, we assume

Next, we approximate the partial derivatives of u by FLFs with the help of the modified operational matrix of the derivative. Therefore, for \(\alpha =1\), we have

and for \(0<\alpha <1\), we get

We also have the following approximation for the second-order derivative of the function:
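Assuming the usual tensor-product form \(u(x,t)\approx {\mathbf {FL}}(x)^{T}U\,{\mathbf {FL}}(t)\) with \(\alpha =\beta =1\) and a constant derivative matrix \(\Upsilon\) satisfying \({\mathbf {FL}}'=\Upsilon {\mathbf {FL}}\), the partial derivatives reduce to matrix products: \(u_{x}={\mathbf {FL}}(x)^{T}\Upsilon ^{T}U{\mathbf {FL}}(t)\), \(u_{xx}={\mathbf {FL}}(x)^{T}(\Upsilon ^{2})^{T}U{\mathbf {FL}}(t)\) and \(u_{t}={\mathbf {FL}}(x)^{T}U\Upsilon {\mathbf {FL}}(t)\). The sketch checks these identities on \(u=x^{2}t\); all names are hypothetical and \(\Upsilon\) is built by a change of basis from monomials.

```python
import math
import numpy as np

M = 2
# P[m, p] = coefficient of s**p in the m-th Lucas polynomial (alpha = 1)
P = np.zeros((M + 1, M + 1))
P[0, 0] = 2.0
for m in range(1, M + 1):
    for k in range(m // 2 + 1):
        P[m, m - 2 * k] = m * math.comb(m - k, k) // (m - k)
Ups = P @ np.diag(np.arange(1.0, M + 1), k=-1) @ np.linalg.inv(P)   # FL' = Ups @ FL

def FL(s):
    """Vector [FL_0(s), ..., FL_M(s)] for a scalar s."""
    return P @ s ** np.arange(M + 1)

def u(U, x, t):
    return FL(x) @ U @ FL(t)

def u_x(U, x, t):
    return (Ups @ FL(x)) @ U @ FL(t)

def u_xx(U, x, t):
    return (Ups @ Ups @ FL(x)) @ U @ FL(t)

def u_t(U, x, t):
    return FL(x) @ U @ (Ups @ FL(t))

# x^2 t = (FL_2(x) - FL_0(x)) * FL_1(t), i.e. U[2, 1] = 1 and U[0, 1] = -1
U = np.zeros((M + 1, M + 1))
U[2, 1], U[0, 1] = 1.0, -1.0
print(u(U, 0.3, 0.7), u_x(U, 0.3, 0.7), u_xx(U, 0.3, 0.7), u_t(U, 0.3, 0.7))
```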

Also, using Eq. (24) and the pseudo-operational matrix of the fractional derivative, we obtain the following relations:

and

Substituting Eqs. (24)–(29) into Eq. (2), we obtain the following residual functions for \(\alpha =1\) and \(0<\alpha <1\), respectively:

and

From the initial and boundary conditions and Eq. (24), we conclude

As a result, the following optimization problem is obtained:

More precisely, by imposing the conditions at the Newton–Cotes nodal points [12], we deduce

Then, to solve this minimization problem and find the optimal value of the unknown matrix U, we define

where

and

Next, to obtain the unknown matrix U, we use the Lagrange multipliers method and consider the following necessary conditions for the extremum:

Solving this system of algebraic equations determines the unknown matrix U. Substituting the obtained matrix into Eq. (24) then gives the approximate solution.
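To make the procedure concrete, the sketch below applies it to a deliberately simple instance of Eq. (2) of our own choosing: \(\nu =1\), \(\gamma =2\), \(\alpha =\beta =1\), \({\mathfrak {M}}_{1}={\mathfrak {M}}_{2}=2\), and hypothetical data \({\mathcal {G}}(x,t)=x\), \(f_{0}=0\), \(\varphi _{0}=0\), \(\varphi _{1}(t)=t\), manufactured so that \(u=xt\) is the exact solution. It assumes the tensor-product expansion \(u\approx {\mathbf {FL}}(x)^{T}U{\mathbf {FL}}(t)\), minimizes \(\int _{0}^{1}\int _{0}^{1}R^{2}\,dx\,dt\) by Gauss–Legendre quadrature, and imposes the conditions at equally spaced (Newton–Cotes-type) nodes through Lagrange multipliers. The corner conditions appear twice, so the KKT matrix is singular but consistent and is solved with `numpy.linalg.lstsq`; the minimizer U itself is still unique here. Fractional orders would replace the derivative matrix by the pseudo-operational matrix. The name `solve_diffusion` is hypothetical.

```python
import math
import numpy as np

def lucas_monomial_matrix(M):
    """P[m, p] = coefficient of s**p in the m-th Lucas polynomial."""
    P = np.zeros((M + 1, M + 1))
    P[0, 0] = 2.0
    for m in range(1, M + 1):
        for k in range(m // 2 + 1):
            P[m, m - 2 * k] = m * math.comb(m - k, k) // (m - k)
    return P

def solve_diffusion(M, G, f0, phi0, phi1, n_quad=12):
    """Minimise int int (u_t + u_xx - G)^2 subject to u(x,0)=f0, u(0,t)=phi0, u(1,t)=phi1."""
    P = lucas_monomial_matrix(M)
    Ups = P @ np.diag(np.arange(1.0, M + 1), k=-1) @ np.linalg.inv(P)   # FL' = Ups @ FL

    def FL(s):
        return P @ s ** np.arange(M + 1)

    t_gl, w_gl = np.polynomial.legendre.leggauss(n_quad)
    nodes, weights = 0.5 * (t_gl + 1.0), 0.5 * w_gl
    n = (M + 1) ** 2
    H, g = np.zeros((n, n)), np.zeros(n)
    for x, wx in zip(nodes, weights):
        for t, wt in zip(nodes, weights):
            # R(x, t) = vec(U) @ phi - G(x, t), with u_t + u_xx expressed through Ups
            phi = (np.outer(FL(x), Ups @ FL(t)) + np.outer(Ups @ Ups @ FL(x), FL(t))).ravel()
            H += wx * wt * np.outer(phi, phi)
            g += wx * wt * G(x, t) * phi
    rows, vals = [], []
    for s in np.linspace(0.0, 1.0, M + 1):          # equally spaced nodes
        rows += [np.outer(FL(s), FL(0.0)).ravel(),   # u(s, 0) = f0(s)
                 np.outer(FL(0.0), FL(s)).ravel(),   # u(0, s) = phi0(s)
                 np.outer(FL(1.0), FL(s)).ravel()]   # u(1, s) = phi1(s)
        vals += [f0(s), phi0(s), phi1(s)]
    A, b = np.array(rows), np.array(vals)
    K = np.block([[2.0 * H, A.T], [A, np.zeros((len(b), len(b)))]])
    sol = np.linalg.lstsq(K, np.concatenate([2.0 * g, b]), rcond=None)[0]
    U = sol[:n].reshape(M + 1, M + 1)
    return U, (lambda x, t: FL(x) @ U @ FL(t))

U, u = solve_diffusion(2, G=lambda x, t: x, f0=lambda x: 0.0,
                       phi0=lambda t: 0.0, phi1=lambda t: t)
print(u(0.3, 0.7), u(0.9, 0.2))   # exact values x*t are 0.21 and 0.18
```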

6 Convergence analysis and error estimate

This section discusses the convergence analysis and error estimate in Sobolev space. For this purpose, the Sobolev norm of integer order \(\mu \ge 0\) on the domain \(\Delta =(a,b)^{d}\subset {\mathbb {R}}^{d}\), \(d=2,3\), is defined as [22]

where \(D^{j}_{i}\) denotes the jth derivative of u with respect to the ith variable. To simplify the presentation of the results, we take \({\mathfrak {M}}_{1}={\mathfrak {M}}_{2}={\mathfrak {M}}\) and \(\alpha =\beta\).

Theorem 2

Suppose that \(u\in H^{\mu }(\Delta )\), \(\mu \ge 0\) and \(\Delta =(0,1)^{2}.\) If

is the best approximation of u, then the following estimates hold:

and for \(1\le r \le \mu ,\)

where

and c is a constant depending on \(\mu .\) In addition, the symbol on the right-hand side of the above error estimates is defined as follows:

Proof

According to the results presented in [22] and the well-known fact that the best approximation is unique [23], the following estimate holds:

and for \(1\le r \le \mu ,\)

Hence, the desired result is deduced. \(\square\)
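Theorem 2 bounds the error of the best approximation in Sobolev norms on a two-dimensional domain. As a one-dimensional analogue of our own choosing, the sketch below measures the \(L_{2}\) error of the best (least-squares) FLF approximation of \(\tanh x=\frac{\exp (2x)-1}{\exp (2x)+1}\), the exact solution of Example 3 for \(\gamma =1\), as the number of basis functions grows. It only illustrates the decay of the error; it does not verify the constants or rates in Theorem 2. The function names are hypothetical.

```python
import math
import numpy as np

def flf_basis(M, alpha, x):
    """Rows FL_0..FL_M at the points x (Lucas recurrence in s = x**alpha)."""
    s = x ** alpha
    vals = [2.0 * np.ones_like(s), s]
    for m in range(2, M + 1):
        vals.append(s * vals[m - 1] + vals[m - 2])
    return np.array(vals[: M + 1])

def best_l2_error(f, M, alpha=1.0, n_quad=60):
    """Discrete L2(0, 1) error of the least-squares FLF approximation of f."""
    t, w = np.polynomial.legendre.leggauss(n_quad)
    x, w = 0.5 * (t + 1.0), 0.5 * w
    B = flf_basis(M, alpha, x)
    C = np.linalg.solve((B * w) @ B.T, (B * w) @ f(x))
    residual = f(x) - C @ B
    return math.sqrt(np.sum(w * residual ** 2))

errors = [best_l2_error(np.tanh, M) for M in range(1, 7)]
for M, err in zip(range(1, 7), errors):
    print(M, f"{err:.3e}")
```

Because the approximation spaces are nested, the best-approximation error cannot increase with M.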

Lemma 1

Let \(u\in H^{\mu }(\Delta )\), \(\mu \ge 0\) and \(0<\nu \le 1.\) Then we have

Proof

From the above results and the following property of the norm

where \(*\) denotes the convolution product, together with the properties of the Riemann–Liouville fractional integral [12], we conclude

\(\square\)

Lemma 2

Let \(u\in H^{\mu }(\Delta )\), \(\mu \ge 0\) and \(1<\gamma \le 2\). Then we have

Proof

From the above lemma, we have

\(\square\)

Lemma 3

Let \(u\in H^{\mu }(\Delta )\), \(\mu \ge 0\) and \(1<r \le \mu\). Then we get

and

Proof

The results follow directly from Lemma 2. Thus, we have

and also

\(\square\)

Corollary 1

Let \(u\in H^{\mu }(\Delta )\), \(\mu \ge 0\) and \(1<r \le \mu .\) If the assumptions of the above theorem and lemmas hold and \({\mathcal {G}}\) satisfies a Lipschitz condition with Lipschitz constant \(\delta\), then

where

So that,

and

Proof

From the above theorem and lemmas, we obtain the following results:

Accordingly, the proof is completed. \(\square\)

7 Numerical experiments

In this section, we apply the fractional-Lucas optimization method to different classes of fractional differential equations to demonstrate the accuracy, applicability, and efficiency of the proposed method. The computations were performed on a personal computer, and the codes were written in MATLAB 2016.

7.1 Fractional differential equation

Example 1

For the first example, we consider the following initial value problem [24]:

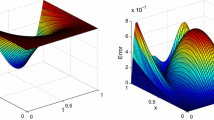

with the initial conditions \(u(0)=0,\ u'(0)=0.\) The exact solution, when \(\nu =\frac{3}{2},\) is \(u(x)=x^{3}.\) Table 1 lists the absolute errors for various values of \(\nu\) with \(\alpha =1\) and \({\mathfrak {M}}_{1}=3\). This table shows that, as \(\nu\) approaches \(\frac{3}{2}\), the approximate solution converges to the exact solution. The numerical solutions obtained for different choices of the parameters introduced in Sect. 5.1 are shown in Figs. 1 and 2.

Approximate solution and exact solution for \(\nu =1.5,1.4,1.3,1.2\) (left) and absolute error for \(\nu =1.5\) (right) with \({\mathfrak {M}}_{1}=3\) and \(\alpha =1\) of Example 1

Approximate solution and exact solution for \(\nu =1.5,1.4,1.3,1.2\) (left) and absolute error for \(\nu =1.5\) (right) with \({\mathfrak {M}}_{1}=6\) and \(\alpha =0.5\) of Example 1

Example 2

Consider the following nonlinear multi-order fractional differential equation [17, 18]:

with the initial conditions \(u(0)=0, u'(0)=-1.\) The exact solution for this problem is \(u(x)=x^{2}-x.\) Applying the presented method, for \(\alpha =1\) we have

and for \(0<\alpha <1\), we get

where

For \(\alpha =\beta =1,\) \(\nu =2,\gamma =1,\) and \({\mathfrak {M}}_{1}=2\), we obtain

Therefore, the approximate solution is

Similarly, for \(\alpha =\beta =1,\) \(\nu =1.5,\gamma =0.5\) and \({\mathfrak {M}}_{1}=2\), we deduce

Then,

Table 2 compares our results with those of the Chebyshev wavelet methods [17, 18]. The comparison shows good agreement between the numerical solutions and the exact solution.

Example 3

Consider the following nonlinear fractional differential equation [25]:

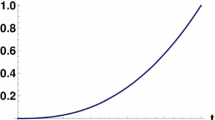

with the initial condition \(u(0)=0.\) The exact solution, when \(\gamma =1,\) is \(u(x)=\frac{\exp (2x)-1}{\exp (2x)+1}.\) Tables 3 and 4 present the absolute errors and \(L_{2}\)-errors for various choices of \(\alpha ,\gamma\) and \({\mathfrak {M}}_{1}\). Table 3 shows that the absolute error tends to zero as the number of basis functions \({\mathfrak {M}}_{1}\) increases. Table 4 reports the \(L_{2}\)-error to illustrate the effect of the parameters \(\alpha\) and \(\gamma\) on the numerical results. In addition, the approximate solution for \(\gamma =1,0.95,0.9,0.85,0.8\) and \(\alpha =1\) with \({\mathfrak {M}}_{1}=5\) is plotted in Fig. 3.

Approximate solution for \(\gamma =1,0.95,0.9,0.85,0.8,\) and \(\alpha =1\) with \({\mathfrak {M}}_{1}=5\) of Example 3

7.2 Fractional diffusion equation

Example 4

Consider the following time-fractional convection–diffusion equation with variable coefficients [26,27,28]

with the initial and boundary conditions

The exact solution for this problem is \(u(x,t)=x^{2}+\frac{2\Gamma (\nu +1)}{\Gamma (2\nu +1)}t^{2\nu }.\) Applying the method of the previous section with \(\alpha =\beta =\nu =1\) and \({\mathfrak {M}}_{1}={\mathfrak {M}}_{2}=2\), we have

Therefore, the approximate solution is obtained as follows:

Table 5 compares the absolute errors obtained by the present method for \(\alpha =\beta =1,\nu =0.5\) and \(t=0.5\) with those of the Haar wavelet method (HWM) [26], the Sinc–Legendre method (SLM) [27], and the Chebyshev wavelets method (CWM) [28]. Note that our method achieves the same results as the Chebyshev wavelets method [28] with fewer basis functions. Figures 4 and 5 show the behavior of the numerical technique for different choices of \(\alpha ,\beta ,\nu\) with \({\mathfrak {M}}_{1}={\mathfrak {M}}_{2}=2\).

Approximate solution (left) and absolute error (right) for \(\nu =\alpha =0.3,\beta =1\) with \({\mathfrak {M}}_{1}={\mathfrak {M}}_{2}=2\) of Example 4

Approximate solution (left) and absolute error (right) for \(\nu =\alpha =0.9,\beta =1\) with \({\mathfrak {M}}_{1}={\mathfrak {M}}_{2}=2\) of Example 4

Example 5

Consider the following nonlinear time-space fractional advection–diffusion equation [29]

with the initial and boundary conditions

The exact solution, when \(\nu =1,\) is \(u(x,t)=xt.\) Applying the method of Sect. 5.2 with \({\mathfrak {M}}_{1}={\mathfrak {M}}_{2}=1\) and \(\alpha =\beta =\nu =1,\) we get

Consequently, the approximate solution is obtained as follows:

Also, the behavior of the approximate solution for different choices of \(\nu\) is illustrated in Fig. 6.

Exact solution and approximate solutions for \(\nu =1,0.9,0.7,0.5\) and \(\alpha =\beta =1\) with \({\mathfrak {M}}_{1}={\mathfrak {M}}_{2}=2\) and \(x=0.5\) of Example 5

Example 6

Consider the following time-space fractional diffusion equation with variable coefficients [30, 31]:

with the initial and boundary conditions

The exact solution for this problem is \(u(x,t)=xt.\) Table 6 compares the absolute errors of the approximate solutions with those of the methods in [30, 31] at various times. Table 7 reports the \(L_{2}\)-error for different values of \(\alpha ,\beta\) and \({\mathfrak {M}}_{1},{\mathfrak {M}}_{2}\) at various times on the interval \(x\in [0,2]\). The absolute errors for different choices of \(\alpha ,\beta\) and \({\mathfrak {M}}_{1},{\mathfrak {M}}_{2}\) are shown in Fig. 7. These results confirm the accuracy and efficiency of the proposed method.

Absolute error for \(\alpha =\beta =0.5\) with \({\mathfrak {M}}_{1}={\mathfrak {M}}_{2}=2\) (left) and \(\alpha =\beta =1\) with \({\mathfrak {M}}_{1}={\mathfrak {M}}_{2}=1\) (right) of Example 6

Example 7

Consider the following space fractional diffusion equation with variable coefficients [32, 33]:

with the initial and boundary conditions

The exact solution for this problem is \(u(x,t)=(x^{2}-x^{3})\exp (-t).\) Table 8 compares the absolute errors at different points \((x,t)\in [0,1]^{2}\) with the results of the methods in [32, 33]. In addition, Fig. 8 displays the approximate solution and absolute error for \(\alpha =\beta =1\) with \({\mathfrak {M}}_{1}=3,{\mathfrak {M}}_{2}=5\). The comparison with other methods in Table 8 and Fig. 8 shows that the proposed scheme yields approximate solutions of high accuracy.

Approximate solution (left) and absolute error (right) for \(\alpha =\beta =1\) with \({\mathfrak {M}}_{1}=3,{\mathfrak {M}}_{2}=5\) of Example 7

Example 8

Consider the following time-space fractional diffusion equation [19]:

with the initial and boundary conditions

The exact solution, when \(\nu =\gamma =1,\) is \(u(x,t)=\sin (x)+\sin (t).\) To show the effect of the number of basis functions on the accuracy of the approximate solution, Table 9 lists the absolute errors for different values of \({\mathfrak {M}}_{1},{\mathfrak {M}}_{2}\). The behavior of the approximate solution for different choices of \(\nu ,\gamma\) is presented in Figs. 9 and 10; these graphs illustrate the accuracy and efficiency of the proposed method.

Approximate solutions for \(\nu =\gamma =1,0.9,0.8,0.7,0.6\) with \({\mathfrak {M}}_{1}={\mathfrak {M}}_{2}=3,\alpha =\beta =1\) and \(t=1\) of Example 8

Approximate solutions for (blue) \(\nu =\gamma =1,\) (orange) \(\nu =\gamma =0.8,\) (green) \(\nu =\gamma =0.6,\) (pink) \(\nu =\gamma =0.4\) with \({\mathfrak {M}}_{1}={\mathfrak {M}}_{2}=3\) and \(\alpha =\beta =1\) of Example 8

8 Conclusion

A novel numerical optimization method is constructed for evaluating the approximate solutions of various classes of fractional ordinary and partial differential equations. We first introduce fractional-Lucas functions and then compute the modified operational matrix of the derivative and the pseudo-operational matrix of the fractional derivative by applying the properties of FLFs and the Caputo fractional derivative. Even with only a few basis functions, the numerical results show the excellent behavior of the optimization approach. The numerical experiments also indicate that the method is effective and accurate.

References

Podlubny I (1998) Fractional differential equations: an introduction to fractional derivatives, fractional differential equations, to methods of their solution and some of their applications. Academic Press, New York

Chow T (2005) Fractional dynamics of interfaces between soft-nanoparticles and rough substrates. Phys Lett A 342:148–155

Heydari MH, Hooshmandasl MR, Maalek Ghaini FM, Cattani C (2016) Wavelets method for solving fractional optimal control problems. Appl Math Comput 286:139–154

Sierociuk D, Dzielinski A, Sarwas G, Petras I, Podlubny I, Skovranek T (2013) Modelling heat transfer in heterogeneous media using fractional calculus. Philos Trans R Soc 371:20130146

Dzielinski A, Sierociuk D, Sarwas G (2010) Some applications of fractional order calculus. Bull Pol Acad Sci Tech Sci 58:583–592

Zafarghandi FS, Mohammadi M, Babolian E, Javadi S (2019) Radial basis functions method for solving the fractional diffusion equations. Appl Math Comput 342:224–246

Zhao X, Hu X, Cai W, Karniadakis GE (2017) Adaptive finite element method for fractional differential equations using hierarchical matrices. Comput Methods Appl Mech Eng 325:56–76

Ma YK, Prakash P, Deiveegan A (2019) Optimization method for determining the source term in fractional diffusion equation. Math Comput Simul 155:168–176

Bhrawy A, Alhamed Y, Baleanu D, Al-Zahrani A (2014) New spectral techniques for systems of fractional differential equations using fractional-order generalized Laguerre orthogonal functions. Fract Calc Appl Anal 17(4):1137–1157

Dehestani H, Ordokhani Y, Razzaghi M (2020) Pseudo-operational matrix method for the solution of variable-order fractional partial integro-differential equations. Eng Comput. https://doi.org/10.1007/s00366-019-00912-z

Kazem S, Abbasbandy S, Kumar S (2013) Fractional-order Legendre functions for solving fractional-order differential equations. Appl Math Model 37(7):5498–5510

Dehestani H, Ordokhani Y, Razzaghi M (2018) Fractional-order Legendre–Laguerre functions and their applications in fractional partial differential equations. Appl Math Comput 336:433–453

Dehestani H, Ordokhani Y, Razzaghi M (2020) Fractional-order Bessel wavelet functions for solving variable order fractional optimal control problems with estimation error. Int J Syst Sci 51(6):1032–1052

Dehestani H, Ordokhani Y, Razzaghi M (2019) Application of the modified operational matrices in multiterm variable-order time-fractional partial differential equations. Math Methods Appl Sci 42:7296–7313

Dehestani H, Ordokhani Y, Razzaghi M (2019) Fractional-order Bessel functions with various applications. Appl Math 64(6):637–662

Wang Y, Zhu L, Wang Z (2018) Fractional-order Euler functions for solving fractional integro-differential equations with weakly singular kernel. Adv Differ Equ 2018:254

Li Y (2010) Solving a nonlinear fractional differential equation using Chebyshev wavelets. Commun Nonlinear Sci Numer Simul 15(9):2284–2292

Heydari MH, Hooshmandasl MR, Cattani C (2018) A new operational matrix of fractional order integration for the Chebyshev wavelets and its application for nonlinear fractional Van der Pol oscillator equation. Proc Math Sci 128(2):26

Wang L, Ma Y, Meng Z (2014) Haar wavelet method for solving fractional partial differential equations numerically. Appl Math Comput 227:66–76

Oruc O (2018) A new numerical treatment based on Lucas polynomials for 1D and 2D sinh-Gordon equation. Commun Nonlinear Sci Numer Simul 57:14–25

Koshy T (2001) Fibonacci and Lucas numbers with applications. Wiley, New York

Canuto C, Hussaini MY, Quarteroni A, Zang TA (2006) Spectral methods: fundamentals in single domains. Springer, Berlin

Kreyszig E (1978) Introductory functional analysis with applications. Wiley, New York

Bhrawy AH, Alofi AS (2013) The operational matrix of fractional integration for shifted Chebyshev polynomials. Appl Math Lett 26:25–31

Mohammadi F, Cattani C (2018) A generalized fractional-order Legendre wavelet Tau method for solving fractional differential equations. J Comput Appl Math 339:306–316

Chen Y, Wu Y, Cui Y, Wang Z, Jin D (2010) Wavelet method for a class of fractional convection-diffusion equation with variable coefficients. J Comput Sci 1:146–149

Saadatmandi A, Dehghan M, Azizi MR (2012) The Sinc-Legendre collocation method for a class of fractional convection-diffusion equations with variable coefficients. Commun Nonlinear Sci Numer Simul 17:4125–4136

Zhou F, Xu X (2016) The third kind Chebyshev wavelets collocation method for solving the time-fractional convection diffusion equations with variable coefficients. Appl Math Comput 280:11–29

Firoozjaee MA, Yousefi SA (2018) A numerical approach for fractional partial differential equations by using Ritz approximation. Appl Math Comput 338:711–721

Chen Y, Sun Y, Liu L (2014) Numerical solution of fractional partial differential equations with variable coefficients using generalized fractional-order Legendre functions. Appl Math Comput 244:847–858

Saadatmandi A, Dehghan M (2011) A tau approach for solution of the space fractional diffusion equation. Comput Math Appl 62:1135–1142

Khader MM (2011) On the numerical solutions for the fractional diffusion equation. Commun Nonlinear Sci Numer Simul 16:2535–2542

Ren RF, Li HB, Jiang W, Song MY (2013) An efficient Chebyshev-tau method for solving the space fractional diffusion equations. Appl Math Comput 224:259–267

Acknowledgements

We express our sincere thanks to the anonymous referees for valuable suggestions that improved the final manuscript.


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.


About this article

Cite this article

Dehestani, H., Ordokhani, Y. & Razzaghi, M. Fractional-Lucas optimization method for evaluating the approximate solution of the multi-dimensional fractional differential equations. Engineering with Computers 38, 481–495 (2022). https://doi.org/10.1007/s00366-020-01048-1


Keywords

- Fractional-Lucas functions

- Fractional differential equations

- Optimization method

- Pseudo-operational matrix of fractional derivative

- Modified operational matrix of derivative