Abstract

The problem of \(H_{\infty }\) filter design for a class of 2D systems with intermittent measurements is addressed. Data dropouts are characterized by a stochastic variable satisfying a Bernoulli random binary distribution. Attention is focused on the design of reduced-order \(H_{\infty }\) filters such that the filtering error 2D stochastic system is robustly mean-square asymptotically stable and fulfills a given \(H_{\infty }\) disturbance attenuation level. A new formulation is used for a class of 2D systems described by Fornasini–Marchesini (FM) models. A sufficient condition is established by means of the linear matrix inequality (LMI) technique. The effectiveness and viability of the proposed approach are demonstrated through a set of numerical examples.

1 Introduction

Design problems concerning multi-dimensional signals are currently being studied extensively in the scientific literature. In particular, two-dimensional (2D) signals and systems (Benzaouia et al. 2016) have been studied in many engineering fields, such as image processing, seismographic data processing, biomedical image processing, thermal processes, process control, and iterative learning control. This paper deals with general 2D signal processing. The investigation of 2D systems in signal processing applications has attracted considerable attention, and many important results have been reported in the literature. Among these results, the problem of \(H_{\infty }\) filtering for 2D linear systems, described by the Roesser and Fornasini–Marchesini (FM) models, has been investigated in Benzaouia et al. (2016), Boukili et al. (2014b, 2016a), De Souza et al. (2010), Hmamed et al. (2013), Du and Xie (2002), El-Kasri et al. (2012, 2013a), Kririm et al. (2015), Wang and Liu (2013), Tuan et al. (2002) and Li and Gao (2013, 2014), and references therein; the \(H_{\infty }\) filtering problem for 2D Takagi–Sugeno systems is addressed in Boukili et al. (2014a); the \(H_{\infty }\) filtering problems for 2D systems with delays are studied in El-Kasri et al. (2013b); and stability and stabilization of 2D systems are studied in Benhayoun et al. (2015), Li and Gao (2012a), Duan et al. (2013) and Duan and Xiang (2014).

For 2D systems with stochastic perturbation, the \(H_{\infty }\) filtering problem is treated in Gao et al. (2004) and Boukili et al. (2016b); the state estimation of 2D stochastic systems is solved in Cui and Hu (2010); Boukili et al. (2015) and Li et al. (2013) investigate \(H_{\infty }\) control for TS fuzzy systems with stochastic perturbation; and the problems of stability and robust \(H_{\infty }\) control for 2D stochastic systems are addressed in Cui et al. (2011), Dai et al. (2013) and Duan et al. (2014).

In this context, we consider the problem of \(H_{\infty }\) filtering for a class of 2D systems with intermittent measurements. This \(H_{\infty }\) filtering problem and the related \(H_{\infty }\) control problems have already been studied in Liu et al. (2009), Bu et al. (2014a, 2014b), Shi et al. (2012) and Gao et al. (2009). Following the literature, the phenomenon of missing measurements is assumed here to satisfy a Bernoulli random binary distribution. Our attention is focused on the design of reduced-order \(H_{\infty }\) filters that make the filtering error system robustly stable and provide a guaranteed \(H_{\infty }\) performance. Some slack variables are included in the design to provide extra degrees of freedom that make it possible to optimize the filter by minimizing the guaranteed \(H_{\infty }\) performance level. A sufficient condition is then established by means of an LMI technique, with formulas for the filter design derived in parallel. Numerical examples are also given to illustrate the effectiveness of the proposed approach.

The remainder of this paper is organized as follows. In Sect. 2, the system description and the design objectives are presented. In Sect. 3, a sufficient condition guaranteeing robust mean-square asymptotic stability with \(H_{\infty }\) performance for such 2D stochastic systems is derived by means of an LMI technique. Using this result, the filter design problem is solved in Sect. 4. Examples are given in Sect. 5, and conclusions are drawn in Sect. 6.

Notations The superscript T stands for matrix transposition; the asterisk \(*\) represents a term that is induced by symmetry; diag{\(\ldots \)} stands for a block-diagonal matrix; \(\mathbb {E}\{.\}\) denotes the mathematical expectation. Matrices, if their dimensions are not explicitly stated, are assumed to be compatible for algebraic operations.

The \(l_{2}\) norm for a 2D signal w(i, j) is given by

where w(i, j) is said to be in the space \(l_{2}\{[0,\infty ),[0,\infty )\}\), or \(l_{2}\) for simplicity, if \(\parallel w\parallel _{2}<\infty \). We define \(\parallel e\parallel _{E}\) as

2 Problem Formulation

Consider the uncertain 2D stochastic system described by the following Fornasini–Marchesini (FM) model (Liu et al. 2009):

where \(x_{i ,j}\in \mathbb {R}^{n}\) is the state vector, \(z_{i,j}\in \mathbb {R}^{p}\) is the signal to be estimated, \(y_{i ,j}\in \mathbb {R}^{m}\) is the measured output, and \(w_{i ,j}\in \mathbb {R}^{q}\) is the disturbance input that belongs to \(l_{2}\{[0,\infty ),[0,\infty )\}\). The system matrices

have partially unknown parameters. \(\varOmega (\alpha )\) is a given convex-bounded polyhedral domain, described by its s vertices as follows:

where

denotes the ith vertex of the polytope.

Throughout the paper, we make the following assumption on the boundary condition:

Assumption 2.1

(Cui et al. 2011) The boundary condition is assumed to satisfy

In this paper, we consider the following \(H_{\infty }\) filter to estimate \(z_{i,j}\):

where \( \hat{x}_{i ,j}\in \mathbb {R}^{n_{f}}\) (\(n_{f}\le n\)) is the filter state vector (with \(n_{f}<n\) in the reduced-order case), \( \tilde{y}_{i ,j}\in \mathbb {R}^{m}\) is the input of the filter, and \(\hat{z}_{i,j}\in \mathbb {R}^{p}\) is the output of the filter, which has the same dimension as the signal \(z_{i,j}\) to be estimated. The matrices \(A_{f1}\), \(A_{f2}\), \(B_{f1}\), \(B_{f2}\) and \(L_{f}\) are the filter matrices to be determined.

It is assumed that measurements are intermittent, that is, data may be lost during transmission. In this case, the input \(\tilde{y}_{i,j}\) of the filter is no longer equivalent to the output \(y_{i,j}\) of the system (that is, \(\tilde{y}_{i,j}\ne y_{i,j}\)). In this paper, the data loss phenomenon is modeled via a stochastic approach:

where the stochastic variable \(\{\theta _{i,j}\}\) is a Bernoulli distributed white sequence taking the values of 0 and 1 with

and \(\theta \) is a known positive scalar.
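The dropout model can be illustrated numerically. The following is a minimal sketch, assuming that the arrival indicators \(\theta _{i,j}\) are independent across grid points and that a lost measurement reaches the filter as zero (\(\tilde{y}_{i,j}=\theta _{i,j}y_{i,j}\)); the function names, grid size and seed are illustrative choices, not part of the original design. For a Bernoulli variable with success probability \(\theta \), the empirical mean should be close to \(\theta \) and the empirical variance close to \(\theta (1-\theta )\).

```python
import random

def bernoulli_field(rows, cols, theta, seed=0):
    """Draw an independent 0/1 arrival indicator theta_ij for every grid point."""
    rng = random.Random(seed)
    return [[1 if rng.random() < theta else 0 for _ in range(cols)]
            for _ in range(rows)]

def apply_dropouts(y, arrivals):
    """Filter input y_tilde[i][j] = theta_ij * y[i][j]; a lost packet reads as 0."""
    return [[a * v for a, v in zip(arow, yrow)]
            for arow, yrow in zip(arrivals, y)]

theta = 0.8  # arrival probability, i.e. 20% of packets are lost on average
arrivals = bernoulli_field(200, 200, theta)
flat = [a for row in arrivals for a in row]
mean = sum(flat) / len(flat)
var = sum((a - mean) ** 2 for a in flat) / len(flat)
print(f"empirical mean {mean:.3f} (theta = {theta})")
print(f"empirical variance {var:.3f} (theta * (1 - theta) = {theta * (1 - theta):.3f})")
```

With a scalar measurement grid `y`, calling `apply_dropouts(y, arrivals)` gives the sequence actually seen by the filter.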

Based on this, we have

From (1) and (8), the filtering error system can be expressed as follows:

where

\(\xi _{i , j}=[x_{i , j}^{T}\;,\;\hat{x}_{i , j}^{T}]^{T}\) and \(e_{i,j}= z_{i,j}-\hat{z}_{i,j}\). It is clear that

Before giving the main results, it is necessary to introduce some lemmas that will be used for our derivations.

Lemma 2.2

(Theorem 1 in Liu et al. (2009)) Consider the system in (1) and suppose that the filter matrices (\(A_{f1}\), \(A_{f2}\), \(B_{f1}\), \(B_{f2}\), \(L_{f}\)) in (6) are given. Then, the filtering error system in (9) is, for any \( \alpha \in \varGamma \), mean-square asymptotically stable with an \(H_{\infty }\) disturbance attenuation level bound \(\gamma \) if there exist matrices \(P(\alpha )>0\) and \(Q(\alpha )>0\) satisfying

where

Lemma 2.3

(Lemma 2.1 in Qiu et al. (2010)) Given matrices \(\mathcal {W}=\mathcal {W}^{T}\in \mathbf {R}^{n\times n}\), \(\mathcal {U}\in \mathbf {R}^{k\times n}\), \(\mathcal {V}\in \mathbf {R}^{m\times n}\), the following LMI problem:

is solvable with respect to the variable \(\mathcal {X}\) if and only if

where \(\mathcal {U}_{\perp }\) and \(\mathcal {V}_{\perp }\) denote the right null spaces of \(\mathcal {U}\) and \(\mathcal {V}\), respectively.
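For reference, the projection lemma is usually stated in the following form, which Lemma 2.3 is assumed to follow. The dimension \(\mathcal {X}\in \mathbf {R}^{k\times m}\) is an assumption, chosen so that the products below are compatible, and the columns of \(\mathcal {U}_{\perp }\) and \(\mathcal {V}_{\perp }\) are taken as bases of the respective null spaces:

\[
\exists \,\mathcal {X}:\;\; \mathcal {W}+\mathcal {U}^{T}\mathcal {X}\mathcal {V}+\mathcal {V}^{T}\mathcal {X}^{T}\mathcal {U}<0
\quad \Longleftrightarrow \quad
\mathcal {U}_{\perp }^{T}\mathcal {W}\,\mathcal {U}_{\perp }<0
\;\;\text{and}\;\;
\mathcal {V}_{\perp }^{T}\mathcal {W}\,\mathcal {V}_{\perp }<0 .
\]

The practical value of this equivalence is that the free variable \(\mathcal {X}\) can be eliminated from, or introduced into, a matrix inequality without changing its feasibility.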

Problem Description The filtering error system (9) is said to be mean-square asymptotically stable with \(H_{\infty }\) performance \(\gamma \) if the following requirements are satisfied:

1. The filtering error system (9) with \(w_{i,j}\equiv 0\) is mean-square asymptotically stable.

2. Under zero boundary condition, \(\parallel \bar{e}_{i,j}\parallel _{E}<\) \(\gamma \parallel \bar{w}_{i,j} \parallel _{2}\) is guaranteed for all non-zero \(w\in l_{2}\) and a prescribed \(\gamma >0\), where \(\bar{e}_{i,j}=[e^{T}_{i,j}\;\; e^{T}_{i,j}]^{T}\) and \(\bar{w}_{i,j}=[w^{T}_{i,j}\;\; w^{T}_{i,j}]^{T}\).
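The second requirement can be checked empirically on simulated data. The sketch below assumes the usual conventions, namely that the \(l_{2}\) norm of a 2D signal is the square root of the sum of squared sample norms over the grid, and that \(\parallel e\parallel _{E}\) is the square root of the expected squared \(l_{2}\) norm, estimated here by averaging over dropout realizations. The grid is truncated to a finite size, and all function names are illustrative. Passing such a check on a few realizations does not prove the bound; it only fails to contradict it.

```python
import math

def l2_norm_2d(signal):
    """Square root of the sum, over the grid, of squared Euclidean sample norms.

    signal[i][j] is a list of floats (the vector sample at grid point (i, j)).
    """
    return math.sqrt(sum(x * x for row in signal for sample in row for x in sample))

def expected_norm(realizations):
    """Monte Carlo estimate of sqrt(E{||e||_2^2}) from several simulated runs."""
    mean_sq = sum(l2_norm_2d(e) ** 2 for e in realizations) / len(realizations)
    return math.sqrt(mean_sq)

def meets_attenuation(error_realizations, w, gamma):
    """True if the estimated ||e||_E is strictly below gamma * ||w||_2."""
    return expected_norm(error_realizations) < gamma * l2_norm_2d(w)

# Toy data: a 2x2 grid with scalar samples and two dropout realizations.
w = [[[1.0], [0.0]], [[0.0], [2.0]]]
e_runs = [
    [[[0.5], [0.0]], [[0.0], [1.0]]],
    [[[1.0], [0.0]], [[0.0], [1.0]]],
]
ratio = expected_norm(e_runs) / l2_norm_2d(w)
print(f"estimated attenuation ratio: {ratio:.3f}")
print("gamma = 1.0 satisfied:", meets_attenuation(e_runs, w, 1.0))
```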

3 \(H_{\infty }\) Filtering Analysis

In this section, the stability and performance of the \(H_{\infty }\) filter are analyzed. To this end, we temporarily assume that the filter matrices are known and study the condition under which the filtering error system is mean-square asymptotically stable with a bounded \(H_{\infty }\) norm. For this purpose, Lemma 2.2 can be rewritten as follows:

Lemma 3.1

Consider the system in (1) and suppose that the filter matrices (\(A_{f1}\), \(A_{f2}\), \(B_{f1}\), \(B_{f2}\), \(L_{f}\)) in (6) are given. Then, the filtering error system in (9) is, for any \( \alpha \in \varGamma \), mean-square asymptotically stable and guarantees an \(H_{\infty }\) disturbance attenuation level \(\gamma \) if there exist matrices \(P(\alpha )>0\) and \(Q(\alpha )>0\) satisfying

where

and

Proof 3.2

By the Schur complement, the relation (16) is equivalent to the relation (18) given in Liu et al. (2009), which is equivalent to the relation (11). Consequently, (16) and (11) are equivalent, completing the proof. \(\square \)
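The Schur complement argument used in this proof relies on the following standard equivalence, stated here for generic blocks (the symbols \(M_{11}\), \(M_{12}\) and \(M_{22}\) are illustrative and do not appear in the conditions of this paper). For symmetric \(M_{11}\) and \(M_{22}\),

\[
\begin{bmatrix} M_{11} & M_{12} \\ M_{12}^{T} & M_{22} \end{bmatrix}<0
\quad \Longleftrightarrow \quad
M_{22}<0 \;\;\text{and}\;\; M_{11}-M_{12}M_{22}^{-1}M_{12}^{T}<0 .
\]

Read from right to left, this turns an inequality that is quadratic in some unknown block into a larger inequality that is linear in it, which is what makes the conditions tractable as LMIs.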

Based on this, we present the following new result.

Theorem 3.3

Consider the system in (1) and suppose that the filter matrices (\(A_{f1}\), \(A_{f2}\), \(B_{f1}\), \(B_{f2}\), \(L_{f}\)) in (6) are given. Then, the filtering error system in (9) is, for any \( \alpha \in \varGamma \), mean-square asymptotically stable and guarantees an \(H_{\infty }\) disturbance attenuation level \(\gamma \) if there exist matrices \(K(\alpha )\), \(E(\alpha )\), \(F(\alpha )\), \(S(\alpha )\) and symmetric positive definite matrices \(\bar{P}(\alpha )\) and \(R(\alpha )\) satisfying

where

In addition, \(\bar{P}(\alpha )\), \(R(\alpha )\), \(A(\alpha )\), \(B(\alpha )\) and \(\tilde{L}(\alpha )\) are given in (17).

Proof 3.4

To show the equivalence between Theorem 3.3 and Lemma 3.1, define the following matrices:

and

Using the projection Lemma 2.3 and the Schur complement, (16) is equivalent to (20). Thus, Theorem 3.3 is equivalent to Lemma 3.1. This completes the proof. \(\square \)

Remark 3.5

In the derivation of Theorem 3.3, four slack variables E, K, S and F are introduced. By setting \(E=diag\{V^{T},V^{T}\}\), \(K=0\), \(S=0\) and \(F=0\), Theorem 3.3 coincides with Proposition 1 in Liu et al. (2009). Since that result is recovered as a special case, Theorem 3.3 is no more conservative and generally yields a smaller upper bound on the \(H_{\infty }\) norm, as will be seen in the examples at the end of the paper.

Remark 3.6

Theorem 3.3 provides a sufficient condition for mean-square asymptotic stability with an \(H_{\infty }\) disturbance attenuation level for 2D systems with intermittent measurements. If the communication link between the plant and the filter is perfect (that is, there is no packet dropout during transmission), then \(\theta =1\) and \(\beta =0\). In this case, the condition in Theorem 3.3 reduces to the condition obtained in the deterministic case.

4 \(H_{\infty }\) Filtering Design

In this section, we propose a sufficient condition for the existence of an \(H_{\infty }\) filter and characterize the filter matrices that provide the required robust stability and disturbance attenuation requirements. Using the previous result and choosing an appropriate linearizing transformation, we obtain a strict LMI condition for the filter design.

Theorem 4.1

Consider the system in (1) and suppose that the filter matrices \(A_{f1}\), \(A_{f2}\), \(B_{f1}\), \(B_{f2}\), \(L_{f}\) in (8) are given. Then, the filtering error system in (9) is, for any \(\alpha \in \varGamma \), mean-square asymptotically stable and guarantees an \(H_{\infty }\) disturbance attenuation level \(\gamma \) if there exist matrices \(K_{1}(\alpha )\), \(K_{2}(\alpha )\), \(E_{1}(\alpha )\), \(E_{2}(\alpha )\), \(F_{1}(\alpha )\), \(S_{1}(\alpha )\), \(\breve{B}_{f}\), \(\breve{A}_{f}\), \(\breve{L}_{f}\), U, \(\tilde{P}_{2}(\alpha )=diag\{P_{2}(\alpha ),P_{2}(\alpha )\}\), \(\tilde{R}_{2}(\alpha )=diag\{P_{2}(\alpha )-Q_{2}(\alpha ),Q_{2}(\alpha )\}\), symmetric matrices \(\tilde{P}_{k}(\alpha )=diag\{P_{k}(\alpha ),P_{k}(\alpha )\}>0\), \(\tilde{R}_{k}(\alpha )=diag\{P_{k}(\alpha )-Q_{k}(\alpha ),Q_{k}(\alpha )\}>0\), \(k=1,3\), and scalars \(\lambda _{i},\; i=1,2,3\), satisfying

The filter parameters are then obtained by

Proof 4.2

For the slack matrices in (20), we first structure them in the following block form (Feng and Han 2015; Li and Gao 2012b):

where

with \(\varUpsilon \) in (18). Moreover, for matrix variables \(\bar{P}\) and R in (20), we introduce the following definitions:

where \(\tilde{P}_{k}(\alpha )\), \(\tilde{R}_{k}(\alpha )\) are from Theorem 4.1.

As \(\varUpsilon ^{T}\varUpsilon =I\), by substituting (9)–(18) into (20) and combining (25)–(28), we have that

where \(\varXi \) is in (20) and

This completes the proof. \(\square \)

One way to facilitate the use of Theorem 4.1 for the construction of a filter is to convert (24) into a finite set of LMI constraints. The following results give a methodology to achieve this.

Theorem 4.3

Consider the system in (1) and suppose that the filter matrices \(A_{f1}\), \(A_{f2}\), \(B_{f1}\), \(B_{f2}\), \(L_{f}\) in (8) are given. Then, the filtering error system in (9) for any \(\alpha \in \varGamma \) is mean-square asymptotically stable and guarantees an \(H_{\infty }\) disturbance attenuation level \(\gamma \) if there exist matrices \(K_{1i}\), \(K_{2i}\), \(E_{1i}\), \(E_{2i}\), \(F_{1i}\), \(S_{1i}\), \(\breve{B}_{f}\), \(\breve{A}_{f}\), \(\breve{L}_{f}\), U, \(\tilde{P}_{2i}=diag\{P_{2i},P_{2i}\}\), \(\tilde{R}_{2i}=diag\{P_{2i}-Q_{2i},Q_{2i}\}\), symmetric matrices \(\tilde{P}_{ki}=diag\{P_{ki},P_{ki}\}>0\), \(\tilde{R}_{ki}=diag\{P_{ki}-Q_{ki},Q_{ki}\}>0\), \(k=1,3\) and scalars \(\lambda _{t},\; t=1,2,3\) satisfying

where

and

and

The filter parameters are then obtained by

Proof 4.4

Suppose that there exist matrices \(K_{1}(\alpha )\), \(K_{2}(\alpha )\), \(E_{1}(\alpha )\), \(E_{2}(\alpha )\), \(F_{1}(\alpha )\), \(S_{1}(\alpha )\), \(\breve{B}_{f}\), \(\breve{A}_{f}\), \(\breve{L}_{f}\), U, \(\tilde{P}_{2}(\alpha )=diag\{P_{2}(\alpha ),P_{2}(\alpha )\}\),\(\tilde{R}_{2}(\alpha )=diag\{P_{2}(\alpha )-Q_{2}(\alpha ),Q_{2}(\alpha )\}\) and symmetric matrices \(\tilde{P}_{k}(\alpha )=diag\{P_{k}(\alpha ),P_{k}(\alpha )\}>0\), \(\tilde{R}_{k}(\alpha )=diag\{P_{k}(\alpha )-Q_{k}(\alpha ),Q_{k}(\alpha )\}>0\), \(k=1,3\) satisfying (24), then a filter in the form of (6) exists. Now, we use these matrices and \(\alpha \) in the unit simplex \(\varGamma \) to fix the matrices as follows:

By (36), it is easy to rewrite \(\varDelta (\alpha )\) in (24) as

where \(\varDelta _{ij}\) takes the form of (32). On the other hand, from (31), we have

Considering \(\sum _{i=1}^{s}\alpha _{i}=1\) and \(\alpha _{i}\ge 0\), it follows from (36)–(37) that \(\varDelta (\alpha )<0\). Based on Theorem 4.1, there exists a filter in the form of (6) such that the filtering error system in (9) is mean-square asymptotically stable with a given \(H_{\infty }\) performance. This completes the proof. \(\square \)

Remark 4.5

When the scalars \(\lambda _{1}\), \(\lambda _{2}\) and \(\lambda _{3}\) of Theorem 4.3 are fixed to constant values, (31) is an LMI, that is, it is linear in the remaining variables. To select values for these scalars, a numerical optimization routine can be used (for instance, fminsearch in MATLAB) to find the scalars that improve some measure of performance (for instance, the value of the bound on the disturbance attenuation level \(\gamma \)).
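The two-level procedure described in this remark can be sketched as follows. This is only an illustration under stated assumptions: `gamma_for` is a hypothetical placeholder with a known minimum, standing in for "solve the LMI (31) with the scalars fixed and return the smallest feasible \(\gamma \)", which in practice would call a semidefinite programming solver. A simple derivative-free compass search is used in place of the Nelder–Mead method behind fminsearch, purely to keep the example self-contained.

```python
def gamma_for(lam):
    """HYPOTHETICAL stand-in for the inner problem.

    A real implementation would fix (lambda1, lambda2, lambda3), solve the
    LMI (31) and return the minimal gamma (or +inf if infeasible). Here a
    smooth toy function with minimum 2.0 at (1.0, -0.5, 0.0) is used instead.
    """
    l1, l2, l3 = lam
    return 2.0 + (l1 - 1.0) ** 2 + (l2 + 0.5) ** 2 + 4.0 * l3 ** 2

def compass_search(f, x0, step=0.5, tol=1e-6, max_iter=10000):
    """Derivative-free search: probe +/- step along each axis, halve on failure."""
    x, fx = list(x0), f(x0)
    for _ in range(max_iter):
        if step < tol:
            break
        improved = False
        for k in range(len(x)):
            for delta in (step, -step):
                trial = x[:]
                trial[k] += delta
                ft = f(trial)
                if ft < fx:  # keep any move that lowers the objective
                    x, fx, improved = trial, ft, True
        if not improved:
            step /= 2.0  # no axis move helped: refine the mesh
    return x, fx

best_lam, best_gamma = compass_search(gamma_for, [0.0, 0.0, 0.0])
print("scalars:", [round(v, 4) for v in best_lam], "gamma:", round(best_gamma, 4))
```

Because the true map from the scalars to \(\gamma \) is generally non-convex, any such search returns a local improvement that depends on the starting point, not a certified global optimum.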

5 Numerical Examples

In this section, simulation examples are provided to illustrate the effectiveness of the proposed filtering design approach.

5.1 Example 1

Consider the 2D static field model presented in Liu et al. (2009), which is described by the following equation:

where \(\eta _{i,j}\) is the state vector at coordinates (i, j) and \(\alpha _{1}\), \(\alpha _{2}\) are the vertical and horizontal correlative coefficients, respectively, satisfying \(\alpha _{1}^{2}<1\) and \(\alpha _{2}^{2}<1\). Defining the augmented state vector \(x_{i,j}=[\eta _{i,j+1}^{T}-\alpha _{2}\eta _{i,j}^{T}\;\;\eta _{i,j}^{T}]^{T}\), and supposing that the measurement equation and the signal to be estimated are, respectively,

It is not difficult to transform these equations into a 2D FM model in the form of (1), with the system matrices given by

The uncertain parameters \(\alpha _{1}\) and \(\alpha _{2}\) are now assumed to be \(0.15\le \alpha _{1}\le 0.45\), and \(0.35\le \alpha _{2}\le 0.85\), so the above system is represented by a four-vertex polytope. It is assumed that measurements transmitted between the plant and the filter are imperfect, that is, data may be lost during their transmission. Based on this, our aim is to design a filter in the form of (6) such that the resulting filtering error system in (9) is mean-square asymptotically stable with a guaranteed \(H_{\infty }\) disturbance attenuation level.
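The four-vertex description can be made concrete with a short sketch. It enumerates the corners of the parameter box and computes, for any admissible pair \((\alpha _{1},\alpha _{2})\), weights on the unit simplex that reproduce it as a convex combination of the corners. The same weights reproduce any quantity that is affine in each of \(\alpha _{1}\) and \(\alpha _{2}\) separately (including the product \(\alpha _{1}\alpha _{2}\)), so they carry over to the system matrices under the assumption that these depend on the parameters in that way. The function names are illustrative.

```python
from itertools import product

ALPHA1 = (0.15, 0.45)  # bounds on alpha_1
ALPHA2 = (0.35, 0.85)  # bounds on alpha_2

def vertices():
    """Corners of the parameter box, ordered (lo,lo), (lo,hi), (hi,lo), (hi,hi)."""
    return list(product(ALPHA1, ALPHA2))

def simplex_weights(a1, a2):
    """Convex weights of (a1, a2) with respect to vertices(), in the same order."""
    t1 = (a1 - ALPHA1[0]) / (ALPHA1[1] - ALPHA1[0])
    t2 = (a2 - ALPHA2[0]) / (ALPHA2[1] - ALPHA2[0])
    return [(1 - t1) * (1 - t2), (1 - t1) * t2, t1 * (1 - t2), t1 * t2]

verts = vertices()
weights = simplex_weights(0.30, 0.60)
rebuilt_a1 = sum(w * v[0] for w, v in zip(weights, verts))
rebuilt_a2 = sum(w * v[1] for w, v in zip(weights, verts))
rebuilt_prod = sum(w * v[0] * v[1] for w, v in zip(weights, verts))
print("vertices:", verts)
print("weights:", [round(w, 4) for w in weights], "sum:", round(sum(weights), 4))
print("rebuilt (alpha1, alpha2):", round(rebuilt_a1, 4), round(rebuilt_a2, 4))
```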

5.1.1 The Measurements Transmitted Between the Plant and Filter are Perfect (\(\theta \)=1)

First, the stochastic variable is assumed to be \(\theta _{i,j}=1\) (\(\theta =1\)), which means that the measurements always reach the filter. Applying the filter design method of Theorem 4.3 to this particular case, the minimum \(H_{\infty }\) performance \(\gamma ^{*}=2.4924\) is obtained, with the associated filter matrices given by equation (41).

It is noticeable that the value of \(\gamma \) obtained in this case (\(\gamma =2.4924\)) is smaller than the one found in Liu et al. (2009) and Gao et al. (2008).

5.1.2 The Measurements Transmitted Between the Plant and Filter are Imperfect (\(\theta \)=0.8)

We assume that data may be lost during transmission: \(\theta =0.8\), so the probability of a data packet going missing is 20%. With this assumption, applying the filter design method of Theorem 4.3, the achieved \(H_{\infty }\) disturbance attenuation level is \(\gamma ^{*}=4.6287\), and the corresponding filter matrices are

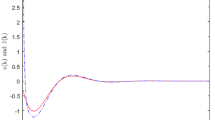

For simulation, the disturbance input w(i, j), depicted in Fig. 1, is

The filtering error signal e(i, j) obtained with the designed filter matrices is shown in Figs. 2, 3, 4 and 5 for the random data packet dropouts presented in Fig. 6: we can confirm that e(i, j) converges to zero despite the data dropouts and the disturbance.

The minimum guaranteed performance levels \(\gamma \) for different values of \(\theta \) are given in Table 1.

5.2 Example 2

Consider systems (1) and (2) with s = 4 and with the following data:

With these data, the optimal values \(\gamma \) of problem (31) are given in Table 2.

5.2.1 The Measurements Transmitted Between the Plant and Filter are Perfect (\(\theta \)=1)

Full Order \((n_{f}=n)\) Case In this case, for \(\lambda _{1}=2.0433\), \(\lambda _{2}=-0.0002\) and \(\lambda _{3}=0.0130\), the minimum \(H_{\infty }\) performance is \(\gamma ^{*}=5.4933\) and the filter gains obtained are:

We can notice that the value of \(\gamma =5.4933\) is smaller than the one found in Liu et al. (2009).

For simulation, the disturbance input w(i, j), is

The filtering error signal e(i, j) obtained with the designed filter matrices is shown in Figs. 7, 8, 9 and 10 for the random data packet dropouts presented in Fig. 6: we can confirm that e(i, j) converges to zero despite the disturbance.

Reduced Order \((n_{f}<n)\) Case In this case, for \(\lambda _{1}=1.9797\), \(\lambda _{2}=-0.6339\) and \(\lambda _{3}=0.0038\), the minimum \(H_{\infty }\) performance is \(\gamma ^{*}=6.1731\) and the filter gains obtained are:

5.2.2 The Measurements Transmitted Between the Plant and Filter are Imperfect (\(\theta \)=0.8)

Full Order \((n_{f}=n)\) Case In this case, for \(\lambda _{1}=2.0302\), \(\lambda _{2}=-0.0014\) and \(\lambda _{3}=0.0131\), the minimum \(H_{\infty }\) performance is \(\gamma ^{*}=6.2240\) and the filter gains obtained are:

It is noticeable that the value of \(\gamma =5.5459\) is better than the one found in Liu et al. (2009).

Reduced Order \((n_{f}<n)\) Case In this case, for \(\lambda _{1}=1.3162\), \(\lambda _{2}=-0.7450\) and \(\lambda _{3}=0.0108\), the minimum \(H_{\infty }\) performance is \(\gamma ^{*}=6.2240\) and the filter gains obtained are:

6 Conclusions

This paper has investigated the \(H_{\infty }\) filtering problem for a class of two-dimensional systems with intermittent measurements. The data dropouts are characterized using a stochastic variable that follows a Bernoulli random binary distribution, which makes it possible to derive a sufficient condition guaranteeing mean-square asymptotic stability and a prescribed level of \(H_{\infty }\) disturbance attenuation by means of an LMI technique. Numerical examples are provided to illustrate the effectiveness of the proposed approach. The methodology presented here can also be used to solve parallel problems, such as \(H_{\infty }\) control and \(H_{\infty }\) filtering for other multi-dimensional systems, possibly with delays.

References

Benhayoun, M., Mesquine, F., & Benzaouia, A. (2015). Delay-dependent stabilizability of 2D delayed continuous systems with saturating control. Circuits, Systems and Signal Processing, 32(6), 2723–2743. doi:10.1007/s00034-013-9585-4.

Benzaouia, A., Hmamed, A., & Tadeo, F. (2016). Two-dimensional systems: From introduction to state of the art. In Studies in systems, decision and control (Vol. 28). Switzerland: Springer International Publishing. doi:10.1007/978-3-319-20116-0.

Boukili, B., Hmamed, A., Benzaouia, A., & El Hajjaji, A. (2014a). \(H_{\infty }\) filtering of two-dimensional T-S fuzzy systems. Circuits, Systems and Signal Processing, 33(6), 1737–1761. doi:10.1007/s00034-013-9720-2.

Boukili, B., Hmamed, A., & Tadeo, F. (2014b). Robust \(H_{\infty }\) filtering of 2D discrete Fornasini–Marchesini systems. International Journal on Sciences and Techniques of Automatic Control & Computer Engineering (IJ-STA), 8(1), 1998–2011.

Boukili, B., Hmamed, A., Benzaouia, A., & El Hajjaji, A. (2015). \(H_{\infty }\) state control for 2D fuzzy FM systems with stochastic perturbation. Circuits, Systems and Signal Processing, 34(3), 779–796. doi:10.1007/s00034-014-9889-z.

Boukili, B., Hmamed, A., & Tadeo, F. (2016a). Robust \(H_{\infty }\) filtering for 2-D discrete Roesser systems. Journal of Control, Automation and Electrical Systems. doi:10.1007/s40313-016-0251-5.

Boukili, B., Hmamed, A., Zoulagh, T., & Tadeo, F. (2016b). Robust \(H_{\infty }\) filtering for 2D systems with perturbation stochastic. In 5th international conference on systems and control (ICSC) (2016). doi:10.1109/ICoSC.2016.7507029.

Bu, X., Wang, H., Hou, Z., & Qian, W. (2014a). \(H_{\infty }\) control for a class of two-dimensional nonlinear systems with intermittent measurements. Applied Mathematics and Computation, 247, 651–662.

Bu, X., Wang, H., Hou, Z., & Qian, W. (2014b). Stabilisation of a class of two-dimensional nonlinear systems with intermittent measurements. IET Control Theory and Applications, 8(15), 1596–1604. doi:10.1049/iet-cta.2014.0170.

Cui, J., & Hu, G. (2010). State estimation of 2-D stochastic systems represented by FM-II model. Acta Automatica Sinica, 36(5), 755–761.

Cui, J. R., Hu, G. D., & Zhu, Q. (2011). Stability and robust stability of 2D discrete stochastic systems. Discrete Dynamics in Nature and Society, 2011(2011). doi:10.1155/2011/545361.

Dai, J., Guo, Z., & Wang, S. (2013). Robust \(H_{\infty }\) control for a class of 2-D nonlinear discrete stochastic systems. Circuits, Systems and Signal Processing, 32(5), 2297–2316. doi:10.1007/s00034-013-9573-8.

De Souza, C., Xie, L., & Coutinho, D. (2010). Robust filtering for 2D discrete-time linear systems with convex-bounded parameter uncertainty. Automatica, 46(4), 673–681.

Duan, Z., & Xiang, Z. (2014). Output feedback \(H_{\infty }\) stabilization of 2D discrete switched systems in FM LSS model. Circuits, Systems and Signal Processing, 33, 1095. doi:10.1007/s00034-013-9680-6.

Duan, Z., Xiang, Z., & Karimi, H. R. (2013). Delay-dependent \(H_{\infty }\) control for 2-D switched delay systems in the second FM model. Journal of the Franklin Institute, 350(7), 1697–1718.

Duan, Z., Xiang, Z., & Karimi, H. R. (2014). Robust stabilisation of 2D state-delayed stochastic systems with randomly occurring uncertainties and nonlinearities. International Journal of Systems Science, 45(7), 1402–1415.

Du, C., & Xie, L. (2002). \(H_{\infty }\) control and filtering of two-dimensional systems. Berlin: Springer.

El-Kasri, C., Hmamed, A., Alvarez, T., & Tadeo, F. (2012). Robust \(H_{\infty }\) filtering of 2D Roesser discrete systems: A polynomial approach. Mathematical Problems in Engineering, 2012, Article ID 521675, p. 15.

El-Kasri, C., Hmamed, A., & Tadeo, F. (2013a). Reduced-Order \(H_{\infty }\) filters for uncertain 2-D continuous systems, via LMIs and polynomial matrices. Circuits, Systems, and Signal Processing, 33(4), 1189–1214. doi:10.1007/s00034-013-9689-x.

El-Kasri, C., Hmamed, A., Tissir, E. H., & Tadeo, F. (2013b). Robust \(H_{\infty }\) filtering for uncertain two-dimensional continuous systems with time-varying delays. Multidimensional Systems and Signal Processing, 24(4), 685–706.

Feng, J., & Han, K. (2015). Robust full- and reduced-order energy-to-peak filtering for discrete-time uncertain linear systems. Signal Processing, 108, 183–194.

Gao, H., Lam, J., Wang, C., & Xu, S. (2004). Robust \(H_{\infty }\) filtering for 2-D stochastic systems. Circuits, Systems and Signal Processing, 23(6), 479–505.

Gao, H., Meng, X., & Chen, T. (2008). New design of Robust \(H_{\infty }\) filters for 2-D systems. IEEE Signal Processing Letters, 15, 217–220.

Gao, H., Zhao, Y., & Lam, J. (2009). \(H_{\infty }\) fuzzy filtering of nonlinear systems with intermittent measurements. IEEE Transactions on Fuzzy Systems, 17(2), 291–299.

Hmamed, A., Kasri, C. E., Tissir, E. H., Alvarez, T., & Tadeo, F. (2013). Robust \(H_{\infty }\) filtering for uncertain 2-D continuous systems with delays. International Journal of Innovative Computing, Information and Control, 9(5), 2167–2183.

Kririm, S., Hmamed, A., & Tadeo, F. (2015). Robust \(H_{\infty }\) filtering for uncertain 2D singular Roesser models. Circuits, Systems and Signal Processing, 34(7), 2213–2235.

Li, X., & Gao, H. (2012a). Generalized Kalman-Yakubovich-Popov lemma for 2-D FM LSS model and its application to finite frequency positive real control. IEEE Transactions on Signal Processing, 57(12), 3090–3103.

Li, X., & Gao, H. (2012b). Robust finite frequency \(H_{\infty }\) filtering for uncertain 2-D Roesser systems. Automatica, 48, 1163–1170.

Li, X., & Gao, H. (2013). Robust finite frequency image filtering for uncertain 2-D systems: The FM model case. Automatica, 29(8), 2446–2452.

Li, X., & Gao, H. (2014). Reduced-order generalized \(H_{\infty }\) filtering for linear discrete-time systems with application to channel equalization. IEEE Transactions on Signal Processing, 62(13), 3393–3402.

Li, X., Wang, W., & Li, L. (2013). \(H_{\infty }\) control for 2-D T-S fuzzy FMII model with stochastic perturbation. International Journal of Systems Science. doi:10.1080/00207721.2013.793780.

Liu, X., Gao, H., & Shi, P. (2009). Robust \(H_{\infty }\) filtering for 2-D systems with intermittent measurements. Circuits, Systems and Signal Processing, 28, 283–303. doi:10.1007/s00034-008-9081-4.

Qiu, J., Feng, G., & Yang, J. (2010). A new design of delay-dependent robust \(H_{\infty }\) filtering for continuous-time polytopic systems with time-varying delay. International Journal of Robust and Nonlinear Control, 20, 346–365. doi:10.1002/rnc.1439.

Shi, P., Luan, X., & Lui, F. (2012). \(H_{\infty }\) filtering for discrete-time systems with stochastic incomplete measurement and mixed delays. IEEE Transactions on Industrial Electronics, 59(6), 2732–2738.

Tuan, H. D., Apkarian, P., Nguyen, T. Q., & Narikiyo, T. (2002). Robust mixed \(H_{2}/H_{\infty }\) filtering for 2-D systems. IEEE Transactions on Signal Processing, 50(7), 1759–1771.

Wang, L., & Liu, X. (2013). \(H_{\infty }\) filtering design for 2-D discrete-Time linear systems with polytopic uncertainty. Circuits, Systems and Signal Processing, 32(1), 333–345. doi:10.1007/s00034-012-9436-8.

Cite this article

Boukili, B., Hmamed, A. & Tadeo, F. Reduced-Order \(H_{\infty }\) Filtering with Intermittent Measurements for a Class of 2D Systems. J Control Autom Electr Syst 27, 597–607 (2016). https://doi.org/10.1007/s40313-016-0271-1

DOI: https://doi.org/10.1007/s40313-016-0271-1