Abstract

This paper deals with the design of reduced-order H∞ filters for two-dimensional (2-D) continuous systems described by Roesser models with uncertain state-space matrices. These filters are characterized in terms of linear matrix inequalities (LMIs) that minimize a bound on the H∞ noise attenuation, using homogeneous polynomially parameter-dependent matrices of arbitrary degree. The methodology is also particularized to full-order and zeroth-order (static) filters, for which simpler LMI conditions are derived. Numerical examples illustrate the proposed methodology.



1 Introduction

H∞ filtering, first presented in [13], aims to minimize the H∞ norm of the filtering error system, so as to ensure that the \(L_{2}\)-induced gain from the noise signals to the estimation error is less than a prescribed level. In contrast with Kalman filtering, H∞ filtering does not require exact knowledge of the statistics of the noise signals, which makes this approach appropriate in some practical applications. A large number of results on H∞ filtering have been proposed in the literature, in both deterministic and stochastic contexts; see, for example, [27, 29, 33] and the references therein. When uncertainties appear in the system model, robust H∞ filtering has also been investigated; see, for example, [6, 23, 36].

Currently, there is an increased interest in the design of reduced-order H ∞ filters, as presented in [4, 16, 30, 35], since reduced-order filters are easier to implement than full-order ones: this is an important issue when fast data processing is needed.

Note that the results discussed so far were obtained for one-dimensional (1-D) systems. However, many practical systems are better modeled as two-dimensional (2-D) systems, such as those in image data processing and transmission, thermal processes, gas absorption, water stream heating, etc. [26]. The study of 2-D systems is of both practical and theoretical importance [20, 25, 38]. Therefore, in recent years, much attention has been devoted to analysis and synthesis problems for 2-D systems: controllability [21, 22]; stability [17, 18]; stability and stabilization in the presence of delays [2, 15, 19, 28]; 2-D dynamic output feedback control [37]; model approximation [8]; etc. For the specific problem of 2-D H∞ filtering, several results have already been obtained: for example, for Roesser models [7]; for the Fornasini–Marchesini second model [31, 34]; and for 2-D systems with delays [7, 10–12, 34].

Motivated by the design of reduced-order H∞ filters and aiming at less conservative results, we present a new approach, the structured polynomially parameter-dependent method, for designing robust H∞ filters for uncertain 2-D continuous systems described by the Roesser model. Given a stable system with parameter uncertainties residing in a polytope, the goal is to design a robust filter such that the filtering error system is robustly asymptotically stable and a bound on its H∞ norm is minimized over the entire uncertainty domain. Not only full-order filters but also reduced-order filters are designed. Furthermore, when the filter is restricted to be of zeroth order, the dimension constraint is removed and a simpler LMI condition is obtained.

In this paper, the reduced-order H∞ filtering problem for uncertain 2-D continuous systems is treated using a new structure for the key slack variable matrix. The class of 2-D systems under consideration corresponds to continuous 2-D systems described by a Roesser state-space model subject to polytopic uncertainties in both the state and output matrices. A sufficient condition for the solvability of the robust H∞ filtering problem is derived in terms of a set of LMIs, based on a homogeneous polynomial dependence of arbitrary degree on the uncertain parameters. As the degree increases, the conditions become less conservative: feasibility at a given degree implies feasibility at every higher degree. It is shown that the H∞ filtering result includes the quadratic framework and the linearly parameter-dependent framework as special cases, for degree zero and degree one, respectively. Two examples illustrate the feasibility of the proposed methodology.

Notation

Throughout this paper, for real symmetric matrices X and Y, the notation X≥Y (respectively, X>Y) means that the matrix X−Y is positive semi-definite (respectively, positive definite). I is the identity matrix of appropriate dimension. The superscript T represents the transpose of a matrix; \(\operatorname{diag}\{\ldots\}\) denotes a block-diagonal matrix; the Euclidean vector norm is denoted by ∥⋅∥; and the symmetric term in a symmetric matrix is denoted by ∗, e.g., \(\begin{bmatrix} X & Y \\ \ast & Z \end{bmatrix}=\begin{bmatrix} X & Y \\ Y^{T} & Z \end{bmatrix}\). Finally, the \(\ell_{2}\) norm of a 2-D signal \(w(t_{1},t_{2})\) is given by \(\|w(t_{1},t_{2})\|=\sqrt{\int_{0}^{\infty}\int_{0}^{\infty}w(t_{1},t_{2})^{T}w(t_{1},t_{2})\,dt_{1}\,dt_{2}}\), and \(w(t_{1},t_{2})\) is said to be in the space \(\ell_{2}\{[0,\infty],[0,\infty]\}\), or \(\ell_{2}\), if \(\|w(t_{1},t_{2})\|<\infty\).


2 Problem Formulation

Consider an uncertain 2-D continuous system described by the following Roesser state-space model:

where \(x^{h}(t_{1},t_{2})\in \Re^{n_{h}}\) and \(x^{v}(t_{1},t_{2})\in \Re^{n_{v}}\) are the horizontal and vertical states, respectively; \(y(t_{1},t_{2})\in\Re^{p}\) is the measured output; \(z(t_{1},t_{2})\in\Re^{r}\) is the signal to be estimated; and \(w(t_{1},t_{2})\in\Re^{m}\) is the exogenous input with bounded energy (i.e., \(w(t_{1},t_{2})\in\ell_{2}\)). The system matrices are assumed to belong to a known polyhedral domain Γ described by N vertices, that is,

where

with \(\mathcal{P}_{m}\triangleq\{A_{m}, B_{m}, C_{1_{m}}, D_{1_{m}}, C_{m}, D_{m}\}\) denoting the mth vertex of the polyhedral domain Γ. It is assumed that the parameter α is unknown (not measured online) and does not depend explicitly on the variables \((t_{1},t_{2})\).
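As a numerical reading of the polytopic description, the sketch below assumes the usual convention that each uncertain matrix is a convex combination of its vertex values, \(M(\alpha)=\sum_{m=1}^{N}\alpha_{m}M_{m}\), with α in the unit simplex (\(\alpha_{m}\geq0\), \(\sum_{m}\alpha_{m}=1\)). The defining equations are not reproduced above, so this form is an assumption; the function name `polytope_point` and the vertex data are hypothetical.

```python
import numpy as np

def polytope_point(vertices, alpha, tol=1e-12):
    """Return sum_m alpha_m * vertices[m] for alpha in the unit simplex (assumed convention).

    vertices: list of N arrays of equal shape (e.g. the A_m of each vertex).
    alpha:    length-N sequence of nonnegative weights summing to 1.
    """
    alpha = np.asarray(alpha, dtype=float)
    if len(vertices) != alpha.size:
        raise ValueError("need one weight per vertex")
    if np.any(alpha < -tol) or abs(alpha.sum() - 1.0) > tol:
        raise ValueError("alpha must lie in the unit simplex")
    # Convex combination of the vertex matrices.
    return sum(a * np.asarray(M, dtype=float) for a, M in zip(alpha, vertices))

# Hypothetical two-vertex example: A(alpha) = alpha_1 A_1 + alpha_2 A_2.
A1 = np.array([[-2.0, 0.5], [0.0, -1.0]])
A2 = np.array([[-3.0, 0.1], [0.4, -1.5]])
A_mid = polytope_point([A1, A2], [0.5, 0.5])
```

The same weights α are used for every matrix of \(\mathcal{P}_{m}\), since all vertices share the single parameter α.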

The boundary conditions are defined by

Inspired by [5], we make the following assumption:

Assumption 1

The boundary conditions satisfy

Similarly to [5], we give the following definition:

Definition 1

The 2-D continuous system (1)–(3) with Assumption 1 is said to be asymptotically stable if

Now, we want to find a 2-D continuous linear time-invariant filter with input \(y(t_{1},t_{2})\) and output \(z_{f}(t_{1},t_{2})\), which is an estimate of \(z(t_{1},t_{2})\). Here, we consider the following state-space description for this filter:

where \(x^{h}_{f}(t_{1},t_{2})\in\Re^{n_{h_{f}}}\) is the vector of the reduced-order filter horizontal states, with \(1\leq n_{h_{f}}<n_{h}\), and \(x^{v}_{f}(t_{1},t_{2})\in\Re^{n_{v_{f}}}\) is the vector of vertical states, with \(1\leq n_{v_{f}}<n_{v}\) (for the full-order filter, \(n_{h_{f}}=n_{h}\) and \(n_{v_{f}}=n_{v}\)); \(A_{f}\), \(B_{f}\), and \(C_{f}\) are constant matrices to be determined, partitioned as follows:

Denote

Augmenting system (1)–(3) to include the states of filter (5)–(6), we obtain the following filtering error system:

where

The matrix transfer function of the error system (9)–(10) is then given by

and the H ∞ norm of the system is, by definition,

where σ(⋅) denotes the maximum singular value.
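The definition above can be approximated numerically. Assuming the error system (9)–(10) is written in Roesser form with matrices \((\tilde{A},\tilde{B},\tilde{C},\tilde{D})\) and \(n_{h}\) horizontal states, its transfer function has the structure \(\tilde{C}[\operatorname{diag}\{s_{1}I,s_{2}I\}-\tilde{A}]^{-1}\tilde{B}+\tilde{D}\) (compare the filter transfer function in the proof of Theorem 3). The sketch evaluates the maximum singular value on a finite grid of \((\omega_{1},\omega_{2})\); the result is only a lower estimate of the supremum and is not part of the LMI method. The function names, the grid, and the toy system are hypothetical.

```python
import numpy as np

def roesser_tf(A, B, C, D, nh, s1, s2):
    """Evaluate C [diag(s1 I_nh, s2 I_nv) - A]^{-1} B + D at (s1, s2)."""
    n = A.shape[0]
    S = np.diag([s1] * nh + [s2] * (n - nh)).astype(complex)
    return C @ np.linalg.solve(S - A, B) + D

def hinf_grid_estimate(A, B, C, D, nh, w_max=10.0, num=101):
    """Lower estimate of sup over (w1, w2) of sigma_max(G(j w1, j w2)) on a square grid."""
    grid = np.linspace(-w_max, w_max, num)
    best = 0.0
    for w1 in grid:
        for w2 in grid:
            G = roesser_tf(A, B, C, D, nh, 1j * w1, 1j * w2)
            # Largest singular value of the frequency-response matrix.
            best = max(best, np.linalg.svd(G, compute_uv=False)[0])
    return best

# Toy example (hypothetical): G(s1, s2) = 1 / (s1 + 1), whose H-infinity norm is 1.
A = np.array([[-1.0, 0.0], [0.0, -1.0]])
B = np.array([[1.0], [0.0]])
C = np.array([[1.0, 0.0]])
D = np.zeros((1, 1))
est = hinf_grid_estimate(A, B, C, D, nh=1)
```

Gridding can miss narrow peaks, which is one reason the paper works with LMI bounds instead.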

Remark 1

By using the 2-D Parseval theorem [25], it can be shown that, under zero boundary conditions and with asymptotic stability of (9)–(10), the condition \(\|\tilde{G}\|_{\infty}<\gamma\) is equivalent to

Our aim is to design reduced-order H∞ filters of the form (5)–(6) such that:

1. The filter error system (9)–(10) is asymptotically stable when \(w(t_{1},t_{2})=0\).
2. The filter error system (9)–(10) guarantees a prescribed H∞ noise attenuation level γ; i.e., under zero boundary conditions, \(\|\tilde{z}(t_{1},t_{2})\|<\gamma \|w(t_{1},t_{2})\|\) is satisfied for any nonzero \(w(t_{1},t_{2})\in\ell_{2}\).

Remark 2

In the reduced-order case, we consider three particular scenarios: First, (\(n_{h_{f}}\neq0\), \(n_{v_{f}}=0\)); then, (\(n_{h_{f}}=0\), \(n_{v_{f}}\neq0\)), and finally the zeroth-order filter: (\(n_{h_{f}}=0\), \(n_{v_{f}}=0\)).

Case 1: \(n_{h_{f}}\neq 0\), \(n_{v_{f}}=0\).

In this case, the reduced-order H ∞ filter in (5)–(6) is given by

Augmenting system (1)–(3) to include the states of the filter (14)–(15) and using (8), we obtain the following filtering error system:

where

Case 2: \(n_{h_{f}}=0\), \(n_{v_{f}}\neq 0\).

The reduced-order H ∞ filter in (5)–(6) is now

Augmenting system (1)–(3) to include the states of the filter (18)–(19) and using (8), we obtain the following filtering error system:

where

Case 3: \(n_{h_{f}}= 0\), \(n_{v_{f}}=0\).

The reduced-order H ∞ filter in (5)–(6) is now the following static filter:

Connecting this filter (22) to system (1)–(3), we obtain the following filtering error system:

with

3 Preliminaries

This section is devoted to some preliminary results used later.

Consider now the following 2-D continuous system:

To test the asymptotic stability of (25), the following condition, based on properties of the characteristic polynomial, could be used:

where

However, this condition is difficult to use for filter design, so an alternative based on Lyapunov matrices is used here. This approach makes it possible to derive conditions in terms of linear matrix inequalities (LMIs).

Theorem 1

[14]

The 2-D system (25) is asymptotically stable if there exists a matrix

(block diagonal positive definite) such that


In this case, a Lyapunov function of system (25) is defined as

where

Definition 2

[19]

The unidirectional derivative of \(V(t_{1},t_{2})\) in (28) is defined to be

Note that this unidirectional derivative can be seen as a particular case of the derivative of the function \(V(t_{1},t_{2})\) in one direction, independently of the other direction.

Lemma 1

[19]

The 2-D system (25) is asymptotically stable if its unidirectional derivative (29) is negative definite.

Proof

We now give an alternative proof based on Definition 2. From (29) and the negative definiteness assumed in Lemma 1, we have that

which implies

Let \(t_{1}\to\infty\) with \(t_{2}\) finite; substituting into (30), we get \(V_{1}(\infty,t_{2})<V_{1}(\infty,t_{2})\) if \(\|x^{h}(\infty,t_{2})\|>0\) or, equivalently,

Since both \(V_{1}(\infty,t_{2})<V_{1}(\infty,t_{2})\) and \(V_{2}(\infty,t_{2})<0\) are false if \(\|x^{h}(\infty,t_{2})\|>0\), it follows that (31) is false. Thus, \(\|x^{h}(\infty,t_{2})\|=0\). Similarly, we obtain \(\|x^{v}(t_{1},\infty)\|=0\), which completes the proof. □

By using a parameter-dependent Lyapunov matrix \(P_{\alpha}\), we obtain the following result.

Lemma 2

[38]

Given γ>0, the estimation error system (9)–(10) is asymptotically stable with \(\|\tilde{G}\|_{\infty}< \gamma \) if there exists a block diagonal positive-definite matrix \(P_{\alpha}=\operatorname{diag}(P_{h_{\alpha}},P_{v_{\alpha}})>0\) satisfying

Lemma 3

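Let \(\xi\in\Re^{n}\), a symmetric matrix \(Q\in\Re^{n\times n}\), and \(\mathcal{B}\in\Re^{m\times n}\) with \(\operatorname{rank}\mathcal{B}<n\) be given, and let \(\mathcal{B}^{\perp}\) be a basis of the null space of \(\mathcal{B}\), so that \(\mathcal{B}\mathcal{B}^{\perp}=0\). Then, the following conditions are equivalent: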

1. \(\xi^{T}Q\xi<0 \ \forall \xi\neq0 : \mathcal{B}\xi=0\).
2. \(\mathcal{B}^{\perp T}Q\mathcal{B}^{\perp}<0\).
3. \(\exists \mu\in\Re: Q-\mu \mathcal{B}^{T}\mathcal{B}<0\).
4. \(\exists \chi \in\Re^{n\times m} : Q+\chi\mathcal{B}+\mathcal{B}^{T}\chi^{T}<0\).
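Lemma 3 is a version of Finsler's lemma. The sketch below checks conditions 2 and 3 on a small example: it takes an orthonormal null-space basis \(\mathcal{B}^{\perp}\) from the singular value decomposition of \(\mathcal{B}\), and for condition 3 it tries μ = 1, 2, 4, … up to a cap, so returning `None` only means that no tested μ worked. Both checks are eigenvalue tests of strict inequalities and are therefore tolerance-dependent in practice; the example matrices and function names are hypothetical.

```python
import numpy as np

def null_basis(Bm, tol=1e-10):
    """Orthonormal basis of ker(Bm), so that Bm @ null_basis(Bm) = 0."""
    _, s, Vt = np.linalg.svd(Bm)
    rank = int((s > tol).sum())
    return Vt[rank:].T

def condition2(Q, Bm):
    """Condition 2: B_perp^T Q B_perp < 0."""
    Bp = null_basis(Bm)
    return bool(np.linalg.eigvalsh(Bp.T @ Q @ Bp).max() < 0)

def condition3_mu(Q, Bm, mu_max=1e6):
    """Condition 3: first tested mu in 1, 2, 4, ... with Q - mu B^T B < 0, else None."""
    mu = 1.0
    while mu <= mu_max:
        if np.linalg.eigvalsh(Q - mu * Bm.T @ Bm).max() < 0:
            return mu
        mu *= 2.0
    return None

Bm = np.array([[1.0, 0.0]])      # kernel spanned by (0, 1)
Q_good = np.diag([1.0, -1.0])    # negative definite on the kernel
Q_bad = np.diag([-1.0, 1.0])     # positive on the kernel
```

For `Q_good` both conditions hold (μ = 2 is the first tested value that works), while for `Q_bad` neither does, as the lemma predicts.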

4 Main Results

In this section, an LMI approach will be developed to solve the robust H ∞ filtering problem formulated in the previous section. First, we propose the following results derived from those in [32] and [38].

Theorem 2

Given γ>0, the filter error system (9)–(10) is asymptotically stable with \(\|\tilde{G}\|_{\infty}< \gamma \) if there exist \(P=\operatorname{diag}(P_{h},P_{v})>0\) with \(P_{h}\in R^{(n_{h}+n_{h_{f}})\times(n_{h}+n_{h_{f}})}\) and \(P_{v}\in R^{(n_{v}+n_{v_{f}})\times(n_{v}+n_{v_{f}})}\) and matrices \(E_{\alpha}\in R^{(n+n_{f})\times (n+n_{f})}\), \(F_{\alpha}\in R^{p\times (n+n_{f})}\), \(K_{\alpha}\in R^{(n+n_{f})\times (n+n_{f})}\), and \(Q_{\alpha}\in R^{r\times (n+n_{f})}\) satisfying

Proof

The equivalence is obtained by considering

under condition (4) of Lemma 3, with

which, using condition (2) of Lemma 3, gives (32). □

The slack matrices \(F_{\alpha}\) and \(Q_{\alpha}\) provide additional degrees of freedom for the robust H∞ filtering problems considered below. Note that when \(F_{\alpha}=0\) and \(Q_{\alpha}=0\), the LMI (33) reduces to the LMI (34). From Theorem 2, we have the following corollary.

Corollary 1

Given γ>0, the filter error system (9)–(10) is asymptotically stable with \(\|\tilde{G}\|_{\infty}< \gamma \) if there exist \(P=\operatorname{diag}(P_{h},P_{v})>0\) with \(P_{h}\in R^{(n_{h}+n_{h_{f}})\times(n_{h}+n_{h_{f}})}\) and \(P_{v}\in R^{(n_{v}+n_{v_{f}})\times(n_{v}+n_{v_{f}})}\) and matrices \(E_{\alpha}\in R^{(n+n_{f})\times (n+n_{f})}\) and \(K_{\alpha}\in R^{(n+n_{f})\times (n+n_{f})}\) satisfying

Proof

The proof follows by extending the one given for 1-D systems in [9]. □

Remark 3

\(E_{\alpha}\), \(F_{\alpha}\), \(K_{\alpha}\), and \(Q_{\alpha}\) act as slack variables that provide extra degrees of freedom in the solution space of the robust H∞ filtering problem. Thanks to these matrices, we obtain an LMI in which the Lyapunov matrix \(P_{\alpha}\) does not appear in any product with the system matrices. Owing to these extra degrees of freedom, the resulting robust H∞ filtering condition can be less conservative than previous results (see the numerical examples at the end of the paper).
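For contrast with Remark 3, the sketch below assumes that Lemma 2 takes the standard 2-D bounded-real form, in which the Lyapunov matrix multiplies the system matrices directly: \(\begin{bmatrix} \tilde{A}^{T}P+P\tilde{A} & P\tilde{B} & \tilde{C}^{T}\\ \ast & -\gamma I & \tilde{D}^{T}\\ \ast & \ast & -\gamma I\end{bmatrix}<0\) with \(P=\operatorname{diag}(P_{h},P_{v})>0\), written at a fixed α. This form is an assumption, since the inequality of Lemma 2 is not reproduced above. The code only checks a given candidate P; searching for P requires an LMI/SDP solver. Function names and data are hypothetical.

```python
import numpy as np

def brl_matrix(A, B, C, D, Ph, Pv, gamma):
    """Assemble the assumed bounded-real matrix for P = diag(Ph, Pv)."""
    nh, nv = Ph.shape[0], Pv.shape[0]
    P = np.zeros((nh + nv, nh + nv))
    P[:nh, :nh] = Ph                     # horizontal block
    P[nh:, nh:] = Pv                     # vertical block
    m, r = B.shape[1], C.shape[0]
    return np.block([
        [A.T @ P + P @ A, P @ B,              C.T],
        [B.T @ P,         -gamma * np.eye(m), D.T],
        [C,               D,                  -gamma * np.eye(r)],
    ])

def certifies(A, B, C, D, Ph, Pv, gamma):
    """True if Ph > 0, Pv > 0 and the assembled matrix is negative definite."""
    if np.linalg.eigvalsh(Ph).min() <= 0 or np.linalg.eigvalsh(Pv).min() <= 0:
        return False
    return bool(np.linalg.eigvalsh(brl_matrix(A, B, C, D, Ph, Pv, gamma)).max() < 0)

# Toy system with G(s1, s2) = 1 / (s1 + 1), whose H-infinity norm is 1 (hypothetical data).
A = -np.eye(2)
B = np.array([[1.0], [0.0]])
C = np.array([[1.0, 0.0]])
D = np.zeros((1, 1))
I1 = np.eye(1)
ok_2 = certifies(A, B, C, D, I1, I1, gamma=2.0)      # P = I works for gamma = 2
ok_half = certifies(A, B, C, D, I1, I1, gamma=0.5)   # no P can work below the norm
```

Because \(\tilde{A}\) and \(\tilde{B}\) appear multiplied by P here, P must be common to all vertices when this test is applied at the polytope vertices; decoupling the two through slack variables is what Remark 3 refers to.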

In the sequel, based on Theorem 2, we will first design full-order parameter-independent H ∞ filters of the form (5)–(6). The results are then extended to reduced-order filters, providing the main results of the paper.

4.1 Full-Order H ∞ filter design

The following result provides sufficient conditions for the existence of a full-order H∞ filter (\(n_{h_{f}}=n_{h}, n_{v_{f}}=n_{v}\)) for system (9)–(10) satisfying (13).

Theorem 3

Consider system (1)–(3) and let γ>0 be a given constant. Then the estimation error system (9)–(10) is asymptotically stable with \(\|\tilde{G}\|_{\infty} < \gamma\) if there exist \(\bar{P}_{\alpha}\triangleq \operatorname{diag}\{\bar{P}_{h\alpha},\bar{P}_{v\alpha}\} >0\) and matrices \(N_{\alpha}\triangleq \operatorname{diag}\{N_{h\alpha},N_{v\alpha}\}\), \(T_{\alpha}\triangleq \operatorname{diag}\{T_{h\alpha},T_{v\alpha}\}\), \(E_{1\alpha}\triangleq \operatorname{diag}\{E_{1_{h\alpha}},E_{1_{v\alpha}}\}\), \(K_{1\alpha}\triangleq \operatorname{diag}\{K_{1_{h\alpha}},K_{1_{v\alpha}}\}\), \(F_{1_{\alpha}}\), \(G_{1_{\alpha}}\), \(Q_{1_{\alpha}}\), \(X\triangleq \operatorname{diag}\{X_{h},X_{v}\}\), \(S_{a}\), \(S_{b}\), \(S_{c}\), and \(S_{d}\) such that

where

In this case, the desired 2-D continuous filter in the form of (5)–(6) can be selected with the following parameters:

Proof

Let \(P_{\alpha}\), \(E_{\alpha}\), \(F_{\alpha}\), \(K_{\alpha}\), and \(Q_{\alpha}\) have the following structures:

Without loss of generality, we suppose that \(K_{3h}\), \(K_{4h}\), \(K_{3v}\), and \(K_{4v}\) are nonsingular. Introducing the transformation matrix

and pre- and post-multiplying (33) by \(\operatorname{diag} \{\varPhi,\varPhi,I,I \}\), we get

Defining

(see the proof of (38)), we note that the transfer function of the filter (5)–(6) from \(y(t_{1},t_{2})\) to \(z_{f}(t_{1},t_{2})\) is \(G_{z_{f}y}(s_{1},s_{2})=C_{f}[\operatorname{diag} \{s_{1}I_{n_{h}},s_{2}I_{n_{v}} \}-A_{f}]^{-1}B_{f}+D_{f}\). Substituting (38) into this transfer function and using \(X_{h}=K_{4h}K_{3h}^{-1}K_{4h}^{T}\) and \(X_{v}=K_{4v}K_{3v}^{-1}K_{4v}^{T}\), we get

Therefore, the filter can be given by (36), and the proof is completed. □

Remark 4

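Observe that, for given \(\lambda_{1}\) and \(\lambda_{2}\), (35) is convex and can be solved using standard LMI tools. Suitable values of \(\lambda_{1}\) and \(\lambda_{2}\) can then be searched for, for example, with the MATLAB function fminsearch, which returns a local (not necessarily global) optimum.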
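Remark 4 describes a two-level procedure: for fixed \((\lambda_{1},\lambda_{2})\) the conditions are LMIs, and the two scalars are tuned by an outer search. The sketch below shows only the outer loop, as a coarse logarithmic grid search in pure Python (a simpler stand-in for the Nelder–Mead simplex search used by MATLAB's fminsearch). The callable `solve_for_gamma` is hypothetical: it is assumed to solve the LMIs of Theorem 3 for fixed \(\lambda_{1}\), \(\lambda_{2}\) and return the smallest feasible γ, or `math.inf` if they are infeasible. Restricting the scalars to positive grid values is also an assumption.

```python
import math
from itertools import product

def tune_lambdas(solve_for_gamma, exponents=range(-3, 4), base=10.0):
    """Coarse grid search over lambda_1, lambda_2 in {base**k : k in exponents}.

    solve_for_gamma(l1, l2) is a hypothetical user-supplied routine that solves the
    LMIs for fixed scalars and returns the smallest feasible gamma (math.inf if none).
    Returns (best_gamma, best_l1, best_l2).
    """
    values = [base ** k for k in exponents]
    best = (math.inf, None, None)
    for l1, l2 in product(values, values):
        gamma = solve_for_gamma(l1, l2)
        if gamma < best[0]:
            best = (gamma, l1, l2)
    return best

# Stand-in objective for illustration only (not an LMI solve): minimum 1.0 at (1, 0.1).
def toy_gamma(l1, l2):
    return 1.0 + math.log10(l1) ** 2 + (math.log10(l2) + 1.0) ** 2

gamma_best, l1_best, l2_best = tune_lambdas(toy_gamma)
```

In practice the grid result can serve as the starting point for a local refinement such as fminsearch.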

Similarly to Theorem 3, Corollary 1 leads to the following result.

Corollary 2

Consider system (1)–(3) and let γ>0 be a given constant. Then the estimation error system (9)–(10) is asymptotically stable with \(\|\tilde{G}\|_{\infty} < \gamma\) if there exist \(\bar{P}_{\alpha}\triangleq \operatorname{diag}\{\bar{P}_{h\alpha},\bar{P}_{v\alpha}\} >0\) and matrices \(N_{\alpha}\triangleq \operatorname{diag}\{N_{h\alpha},N_{v\alpha}\}\), \(T_{\alpha}\triangleq \operatorname{diag}\{T_{h\alpha},T_{v\alpha}\}\), \(E_{1\alpha}\triangleq \operatorname{diag}\{E_{1_{h\alpha}},E_{1_{v\alpha}}\}\), \(K_{1\alpha}\triangleq \operatorname{diag}\{K_{1_{h\alpha}},K_{1_{v\alpha}}\}\), \(G_{1_{\alpha}}\), \(X\triangleq \operatorname{diag}\{X_{h},X_{v}\}\), \(S_{a}\), \(S_{b}\), \(S_{c}\), and \(S_{d}\) such that

where

4.2 Reduced-Order H ∞ filter design

In this subsection, we provide a solution of the H ∞ reduced-order filtering problem in terms of LMIs.

First, it must be pointed out that in the reduced-order case \(1\leq n_{h_{f}}< n_{h}\), \(1\leq n_{v_{f}}<n_{v}\), the LMI (35) is no longer applicable because the matrices \(K_{4h}\) and \(K_{4v}\) are rectangular, of dimensions \(n_{h_{f}}\times n_{h}\) and \(n_{v_{f}}\times n_{v}\), respectively. We overcome this difficulty by imposing a special structure on the matrices:

Then, replacing the matrices \(K_{4h}\) and \(K_{4v}\) by \(V_{h}K_{4h}\) and \(V_{v}K_{4v}\), respectively, makes it possible to derive the corresponding result, which is presented next:

Theorem 4

Define

, and \(V\triangleq \operatorname{diag}\{V_{h},V_{v}\}\). Consider system (1)–(3), and let γ>0 be a given constant. Then there exists a reduced-order H∞ filter in the form of (5)–(6) such that the estimation error system (9)–(10) is asymptotically stable with \(\|\tilde{G}\|_{\infty} < \gamma\) if there exist \(\bar{P}_{\alpha}\triangleq \operatorname{diag}\{\bar{P}_{h\alpha},\bar{P}_{v\alpha}\} >0\) and matrices \(N_{\alpha}\triangleq \operatorname{diag}\{N_{h\alpha},N_{v\alpha}\}\), \(T_{\alpha}\triangleq \operatorname{diag}\{T_{h\alpha},T_{v\alpha}\}\), \(E_{1\alpha}\triangleq \operatorname{diag}\{E_{1_{h\alpha}},E_{1_{v\alpha}}\}\), \(K_{1\alpha}\triangleq \operatorname{diag}\{K_{1_{h\alpha}},K_{1_{v\alpha}}\}\), \(F_{1_{\alpha}}\), \(G_{1_{\alpha}}\), \(Q_{1_{\alpha}}\), \(X\triangleq \operatorname{diag}\{X_{h},X_{v}\}\), \(S_{a}\), \(S_{b}\), \(S_{c}\), and \(S_{d}\) such that


where

In this case, the 2-D filter in the form of (5)–(6) is given by

Proof

The proof is parallel to that of Theorem 3. We obtain (40) when the matrices \(P_{\alpha}\), \(E_{\alpha}\), \(F_{\alpha}\), \(K_{\alpha}\), and \(Q_{\alpha}\) have the following structures:

□
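The structure imposed on \(V_{h}\) and \(V_{v}\) is not reproduced above. A common choice in reduced-order filter design, and one consistent with V reducing to an identity matrix in the full-order case (Remark 5), is \(V_{h}=[I_{n_{h_{f}}}\;\;0]^{T}\in\Re^{n_{h}\times n_{h_{f}}}\), and similarly for \(V_{v}\); the sketch assumes this choice. With \(K_{4h}\) of size \(n_{h_{f}}\times n_{h}\), the product \(V_{h}K_{4h}\) is then square (\(n_{h}\times n_{h}\)), which is what allows the full-order argument of Theorem 3 to be reused. The dimensions and helper names are hypothetical.

```python
import numpy as np

def embedding(n, nf):
    """Assumed V = [I_nf; 0] of size n x nf (reduces to I_n when nf == n)."""
    if not 0 <= nf <= n:
        raise ValueError("need 0 <= nf <= n")
    return np.vstack([np.eye(nf), np.zeros((n - nf, nf))])

def block_diag2(X, Y):
    """diag{X, Y} for two (possibly rectangular) blocks."""
    out = np.zeros((X.shape[0] + Y.shape[0], X.shape[1] + Y.shape[1]))
    out[:X.shape[0], :X.shape[1]] = X
    out[X.shape[0]:, X.shape[1]:] = Y
    return out

nh, nhf, nv, nvf = 3, 1, 2, 1                          # hypothetical dimensions
Vh, Vv = embedding(nh, nhf), embedding(nv, nvf)
V = block_diag2(Vh, Vv)                                # diag{V_h, V_v}: (nh+nv) x (nhf+nvf)
K4h = np.arange(1.0, 1.0 + nhf * nh).reshape(nhf, nh)  # rectangular nhf x nh
VK = Vh @ K4h                                          # square nh x nh, zero below row nhf
```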

Remark 5

In the filter model (5)–(6), when \(n_{h_{f}}=n_{h}\) and \(n_{v_{f}}=n_{v}\), we have \(V=I_{2n}\) and the filter is of full order; therefore, Theorems 3 and 4 are equivalent in this case. Theorem 4 addresses the reduced-order case \(1\leq n_{h_{f}}<n_{h}\), \(1\leq n_{v_{f}}<n_{v}\); when \(n_{h_{f}}=0\) or \(n_{v_{f}}=0\), the following corollaries are obtained directly from Theorem 4.

Case 1: \(n_{h_{f}}\neq0\), \(n_{v_{f}}=0\).

Corollary 3

Define

. Consider system (1)–(3) and let γ>0 be a given constant. Then there exists a reduced-order H∞ filter in the form of (14)–(15) such that the estimation error system (16)–(17) is asymptotically stable with \(\|\tilde{G}\|_{\infty} < \gamma\) if there exist \(\bar{P}_{\alpha}\triangleq \operatorname{diag}\{\bar{P}_{h\alpha},\bar{P}_{v\alpha}\} >0\) and matrices \(N_{\alpha}\triangleq \operatorname{diag}\{N_{h\alpha},N_{v\alpha}\}\), \(T_{\alpha}\triangleq \operatorname{diag}\{T_{h\alpha},T_{v\alpha}\}\), \(E_{1\alpha}\triangleq \operatorname{diag}\{E_{1_{h\alpha}},E_{1_{v\alpha}}\}\), \(K_{1\alpha}\), \(F_{1_{\alpha}}\), \(G_{1_{\alpha}}\), \(Q_{1_{\alpha}}\), \(X\triangleq \operatorname{diag}\{X_{h},X_{v}\}\), \(S_{a}\), \(S_{b}\), \(S_{c}\), and \(S_{d}\) such that

where

Proof

Let matrices \(P_{\alpha}\), \(E_{\alpha}\), \(F_{\alpha}\), \(K_{\alpha}\), and \(Q_{\alpha}\) take the following structures:

Without loss of generality, we assume that \(K_{3h}\) and \(K_{4h}\) are nonsingular. Introduce now the transformation matrix

and define

Following the proof of Theorem 3, it is possible to obtain the LMI (42), and

which completes the proof. □

Similarly to Corollary 3, we can obtain the following result to design the reduced-order filter in the form of (18)–(19).

Case 2: \(n_{h_{f}}=0\), \(n_{v_{f}}\neq0\).

Corollary 4

Define

. Consider system (1)–(3) and let γ>0 be a given constant. Then there exists a reduced-order H∞ filter in the form of (18)–(19) such that the estimation error system (20)–(21) is asymptotically stable with \(\|\tilde{G}\|_{\infty} < \gamma\) if there exist positive definite matrices \(\bar{P}_{\alpha}\triangleq \operatorname{diag}\{\bar{P}_{h\alpha},\bar{P}_{v\alpha}\} >0\) and matrices \(N_{\alpha}\triangleq \operatorname{diag}\{N_{h\alpha},N_{v\alpha}\}\), \(T_{\alpha}\triangleq \operatorname{diag}\{T_{h\alpha},T_{v\alpha}\}\), \(E_{1\alpha}\triangleq \operatorname{diag}\{E_{1_{h\alpha}},E_{1_{v\alpha}}\}\), \(K_{1\alpha}\), \(F_{1_{\alpha}}\), \(G_{1_{\alpha}}\), \(Q_{1_{\alpha}}\), \(X\triangleq \operatorname{diag}\{X_{h},X_{v}\}\), \(S_{a}\), \(S_{b}\), \(S_{c}\), and \(S_{d}\) such that


where

Proof

Let matrices \(P_{\alpha}\), \(E_{\alpha}\), \(F_{\alpha}\), \(K_{\alpha}\), and \(Q_{\alpha}\) have the following structures:

Without loss of generality, we again assume that \(K_{3v}\) and \(K_{4v}\) are nonsingular. We define the transformation matrix

and

Similarly to the proof of Theorem 3, the LMI (44) is obtained with

completing the proof. □

Case 3: \(n_{h_{f}}=0\), \(n_{v_{f}}=0\).

Corollary 5

Given γ>0, there exists a zero-order H∞ filter in the form of (22) such that the estimation error system (23)–(24) is asymptotically stable with \(\|\tilde{G}\|_{\infty}< \gamma \) if there exist a positive-definite matrix \(P=\operatorname{diag}(P_{h},P_{v})>0\) with \(P_{h}\in R^{n_{h}\times n_{h}}\) and \(P_{v}\in R^{n_{v}\times n_{v}}\) and matrices \(E_{\alpha}\in R^{n\times n}\), \(F_{\alpha}\in R^{p\times n}\), \(K_{\alpha}\in R^{n\times n}\), and \(Q_{\alpha}\in R^{r\times n}\) satisfying

4.3 Homogeneous Polynomial Solutions

Before presenting the formulation of Theorem 4 using homogeneous polynomially parameter-dependent matrices, some definitions and preliminaries are needed to represent and handle products and sums of homogeneous polynomials. First, we define the homogeneous polynomially parameter-dependent matrices of degree \(\mathfrak{g}\) by

Similarly, the matrices \(N_{\alpha}\), \(T_{\alpha}\), \(E_{1\alpha}\), \(K_{1\alpha}\), \(F_{1\alpha}\), \(G_{1\alpha}\), and \(Q_{1\alpha}\) take the same form.

The notation above is as follows: \(\alpha_{1}^{k_{1}}\alpha_{2}^{k_{2}}\ldots\alpha_{N}^{k_{N}}\), with \(\alpha\in\varOmega\), \(k_{i}\in \mathbb{N}\), \(i=1,\ldots,N\), are monomials; \(\mathfrak{K}_{j}(\mathfrak{g})\) is the jth N-tuple of \(\mathfrak{K}(\mathfrak{g})\), lexically ordered, \(j=1,\ldots,\mathfrak{J}(\mathfrak{g})\), where \(\mathfrak{K}(\mathfrak{g})\) is the set of all N-tuples \(k_{1}k_{2}\ldots k_{N}\) of nonnegative integers satisfying \(k_{1}+k_{2}+\cdots+k_{N}=\mathfrak{g}\). Since the polytope \(\mathcal{P}\) has N vertices, the number of elements of \(\mathfrak{K}(\mathfrak{g})\) is \(\mathfrak{J}(\mathfrak{g})=(N+\mathfrak{g}-1)!/(\mathfrak{g}!(N-1)!)\).
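The index set \(\mathfrak{K}(\mathfrak{g})\) and its size \(\mathfrak{J}(\mathfrak{g})\) can be generated mechanically. The sketch below lists all N-tuples of nonnegative integers summing to \(\mathfrak{g}\) in decreasing lexicographic order (the exact ordering convention used in the paper is assumed) and checks the count against \(\mathfrak{J}(\mathfrak{g})=(N+\mathfrak{g}-1)!/(\mathfrak{g}!(N-1)!)\). The function names are hypothetical.

```python
from math import comb

def k_tuples(N, g):
    """All N-tuples (k_1, ..., k_N) of nonnegative ints summing to g, decreasing lexicographic order."""
    if N == 1:
        return [(g,)]
    out = []
    for k1 in range(g, -1, -1):            # largest first exponent first
        for rest in k_tuples(N - 1, g - k1):
            out.append((k1,) + rest)
    return out

def J(N, g):
    """Number of monomials of degree g in N variables: (N+g-1)! / (g! (N-1)!)."""
    return comb(N + g - 1, g)

tuples_3_2 = k_tuples(3, 2)
# [(2, 0, 0), (1, 1, 0), (1, 0, 1), (0, 2, 0), (0, 1, 1), (0, 0, 2)]
```

For N = 3 vertices and degree 2, this gives the six coefficient matrices per variable that a degree-2 homogeneous polynomial parameterization requires.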

For each i=1,…,N, we define the N-tuples \(\mathfrak{K}_{j}^{i}(\mathfrak{g})\), which are equal to \(\mathfrak{K}_{j}(\mathfrak{g})\) except that \(k_{i}>0\) is replaced by \(k_{i}-1\). Note that the N-tuples \(\mathfrak{K}_{j}^{i}(\mathfrak{g})\) are defined only when the corresponding \(k_{i}\) is positive. Note also that, when applied to the elements of \(\mathfrak{K}(\mathfrak{g}+1)\), the N-tuples \(\mathfrak{K}_{j}^{i}(\mathfrak{g}+1)\) define the subscripts \(k_{1}k_{2}\ldots k_{N}\) of the matrices \(\bar{P}_{k_{1}k_{2}\ldots k_{N}}\), \(T_{k_{1}k_{2}\ldots k_{N}}\), \(N_{k_{1}k_{2}\ldots k_{N}}\), \(E_{1_{k_{1}k_{2}\ldots k_{N}}}\), \(F_{1_{k_{1}k_{2}\ldots k_{N}}}\), \(G_{1_{k_{1}k_{2}\ldots k_{N}}}\), \(K_{1_{k_{1}k_{2}\ldots k_{N}}}\), and \(Q_{1_{k_{1}k_{2}\ldots k_{N}}}\), associated with homogeneous polynomially parameter-dependent matrices of degree \(\mathfrak{g}\).
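The shifted tuples are what make products of polynomial matrices tractable. If \(A(\alpha)=\sum_{i}\alpha_{i}A_{i}\) is linear in α and \(P(\alpha)=\sum_{k\in\mathfrak{K}(\mathfrak{g})}\alpha^{k}P_{k}\), then \(A(\alpha)P(\alpha)=\sum_{l\in\mathfrak{K}(\mathfrak{g}+1)}\alpha^{l}\sum_{i:\,l_{i}>0}A_{i}P_{l-e_{i}}\), where \(e_{i}\) is the ith unit tuple, so \(l-e_{i}\) is exactly the tuple \(\mathfrak{K}_{l}^{i}(\mathfrak{g}+1)\). The sketch checks this identity with scalar coefficients, chosen for brevity (matrix coefficients work the same way, keeping the order of the factors); the data and names are hypothetical.

```python
import random
from itertools import product

def tuples(N, g):
    """All N-tuples of nonnegative ints summing to g."""
    return [k for k in product(range(g + 1), repeat=N) if sum(k) == g]

def monomial(alpha, k):
    """alpha_1**k_1 * ... * alpha_N**k_N."""
    out = 1.0
    for a, e in zip(alpha, k):
        out *= a ** e
    return out

def product_coeffs(A, P, N, g):
    """Coefficients of A(alpha) P(alpha) on K(g+1): sum over i with l_i > 0 of A_i P_{l - e_i}."""
    coeffs = {}
    for l in tuples(N, g + 1):
        total = 0.0
        for i in range(N):
            if l[i] > 0:  # the shifted tuple l - e_i exists only when l_i > 0
                shifted = l[:i] + (l[i] - 1,) + l[i + 1:]
                total += A[i] * P[shifted]
        coeffs[l] = total
    return coeffs

random.seed(0)
N, g = 3, 2
A = [random.uniform(-1.0, 1.0) for _ in range(N)]          # vertex values A_i (scalars here)
P = {k: random.uniform(-1.0, 1.0) for k in tuples(N, g)}    # coefficients P_k of degree g
alpha = (0.2, 0.3, 0.5)                                     # a point of the unit simplex
lhs = sum(a * Ai for a, Ai in zip(alpha, A)) * sum(monomial(alpha, k) * Pk for k, Pk in P.items())
rhs = sum(monomial(alpha, l) * c for l, c in product_coeffs(A, P, N, g).items())
```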

Finally, we define the scalar constant coefficients \(\beta^{i}_{j}(\mathfrak{g}+1)=\mathfrak{g}!/(k_{1}!k_{2}!\ldots k_{N}!)\), with \(k_{1}k_{2}\ldots k_{N}\in \mathfrak{K}_{j}^{i}(\mathfrak{g}+1)\).

To simplify the presentation of our main results, we denote \(\beta^{i}_{j}(\mathfrak{g}+1)\) by \(\mathfrak{h}\); using this notation, we now present the main result of this section.

Theorem 5

Define

,

,  , and

\(V\triangleq \operatorname{diag}\{V_{h},V_{v}\}\). Suppose that there exist symmetric parameter-dependent positive definite matrices \(\bar{P}_{\mathfrak{K_{j}(g)}}>0 \) and matrices \(T_{\mathfrak{K_{j}(g)}}\), \(N_{\mathfrak{K_{j}(g)}}\), \(E_{1_{\mathfrak{K_{j}(g)}}}\), \(F_{1_{\mathfrak{K_{j}(g)}}}\), \(G_{1_{\mathfrak{K_{j}(g)}}}\), \(K_{1_{\mathfrak{K_{j}(g)}}}\), and \(Q_{1_{\mathfrak{K_{j}(g)}}}\), \(\mathfrak{K_{j}(g)}\in\mathfrak{K(g)}\), \(j=1,\ldots,\mathfrak{J}(\mathfrak{g})\), such that the following LMIs hold for all \(\mathfrak{K_{l}(g+1)}\in\mathfrak{K(g+1)}\), \(l=1,\ldots,\mathfrak{J}(\mathfrak{g+1})\):


where

Then the homogeneous polynomially parameter-dependent matrices given by (47) ensure (40) for all α∈Ω; moreover, if the LMIs (48) are fulfilled for a given degree \(\mathfrak{\hat{g}}\), then the LMIs corresponding to any degree \(\mathfrak{g} > \mathfrak{\hat{g}}\) are also satisfied.

Proof

Note that (40), for \((A(\alpha),B(\alpha),C_{1}(\alpha),D_{1}(\alpha),C(\alpha),D(\alpha))\in \mathcal{P}\) and \(P_{\alpha}\), \(T_{\alpha}\), \(N_{\alpha}\), \(K_{1\alpha}\), \(E_{1\alpha}\), \(F_{1\alpha}\), \(G_{1\alpha}\), \(Q_{1\alpha}\) given by (47), is a homogeneous polynomial matrix inequality of degree \(\mathfrak{g}+1\) that can be written as

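Condition (48), imposed for all \(l=1,\ldots,\mathfrak{J}(\mathfrak{g}+1)\), ensures (40) for all α∈Ω: on Ω every monomial \(\alpha_{1}^{k_{1}}\cdots\alpha_{N}^{k_{N}}\) is nonnegative and they do not all vanish, so a combination of negative definite coefficient matrices with these weights is negative definite. This proves the first part.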

Suppose that the LMIs (48) are fulfilled for a certain degree \(\mathfrak{\hat{g}}\), that is, there exist \(\mathfrak{J}(\mathfrak{\hat{g}})\) matrices \(\bar{P}_{\mathfrak{K}_{j}(\hat{g})}\), \(T_{\mathfrak{K}_{j}(\hat{g})}\), \(N_{\mathfrak{K}_{j}(\hat{g})}\), \(K_{1_{\mathfrak{K}_{j}(\hat{g})}}\), \(E_{1_{\mathfrak{K}_{j}(\hat{g})}}\), \(F_{1_{\mathfrak{K}_{j}(\hat{g})}}\), and \(Q_{1_{\mathfrak{K}_{j}(\hat{g})}}\), \(j=1,\ldots,\mathfrak{J}(\mathfrak{\hat{g}})\), such that \(\bar{P}_{\mathfrak{\hat{g}}_{\alpha}}\), \(T_{\mathfrak{\hat{g}}_{\alpha}}\), \(N_{\mathfrak{\hat{g}}_{\alpha}}\), \(K_{1_{\mathfrak{\hat{g}}_{\alpha}}}\), \(E_{1_{\mathfrak{\hat{g}}_{\alpha}}}\), \(F_{1_{\mathfrak{\hat{g}}_{\alpha}}}\), and \(Q_{1_{\mathfrak{\hat{g}}_{\alpha}}}\) are homogeneous polynomially parameter-dependent matrices ensuring condition (40). Since \(\alpha_{1}+\dots+\alpha_{N}=1\) for all α∈Ω, the polynomial matrices \(\bar{P}_{\alpha(\mathfrak{\hat{g}+1})}=(\alpha_{1}+\dots+\alpha_{N})\bar{P}_{\alpha(\mathfrak{\hat{g}})}\), \(T_{\alpha(\mathfrak{\hat{g}+1})}=(\alpha_{1}+\dots+\alpha_{N})T_{\alpha(\mathfrak{\hat{g}})}\), \(N_{\alpha(\mathfrak{\hat{g}+1})}=(\alpha_{1}+\dots+\alpha_{N})N_{\alpha(\mathfrak{\hat{g}})}\), \(E_{1\alpha(\mathfrak{\hat{g}+1})}=(\alpha_{1}+\dots+\alpha_{N})E_{1\alpha(\mathfrak{\hat{g}})}\), \(K_{1\alpha(\mathfrak{\hat{g}+1})}=(\alpha_{1}+\dots+\alpha_{N})K_{1\alpha(\mathfrak{\hat{g}})}\), \(F_{1\alpha(\mathfrak{\hat{g}+1})}=(\alpha_{1}+\dots+\alpha_{N})F_{1\alpha(\mathfrak{\hat{g}})}\), and \(Q_{1\alpha(\mathfrak{\hat{g}+1})}=(\alpha_{1}+\dots+\alpha_{N})Q_{1\alpha(\mathfrak{\hat{g}})}\) coincide on Ω with their degree-\(\mathfrak{\hat{g}}\) counterparts, and their coefficients satisfy the LMIs (48) corresponding to the degree \(\mathfrak{\hat{g}}+1\), which in this case are obtained as linear combinations of the LMIs (48) for \(\mathfrak{\hat{g}}\). □
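The degree-lifting step of the proof can be checked numerically. Multiplying a homogeneous polynomial p of degree \(\mathfrak{\hat{g}}\) by \((\alpha_{1}+\dots+\alpha_{N})\) gives a homogeneous polynomial of degree \(\mathfrak{\hat{g}}+1\) whose coefficient on \(\alpha^{l}\) is the sum of the coefficients of p at the shifted tuples \(l-e_{i}\), and since \(\alpha_{1}+\dots+\alpha_{N}=1\) on Ω, both polynomials take the same values there. The sketch uses scalar coefficients for brevity (matrix coefficients are handled identically) and hypothetical data.

```python
from itertools import product

def tuples(N, g):
    """All N-tuples of nonnegative ints summing to g."""
    return [k for k in product(range(g + 1), repeat=N) if sum(k) == g]

def evaluate(coeffs, alpha):
    """Evaluate sum_k coeffs[k] * alpha^k."""
    total = 0.0
    for k, c in coeffs.items():
        term = c
        for a, e in zip(alpha, k):
            term *= a ** e
        total += term
    return total

def lift(coeffs, N, g):
    """Coefficients of (alpha_1 + ... + alpha_N) * p(alpha) for p homogeneous of degree g."""
    return {
        l: sum(coeffs[l[:i] + (l[i] - 1,) + l[i + 1:]] for i in range(N) if l[i] > 0)
        for l in tuples(N, g + 1)
    }

N, g = 3, 1
p = {(1, 0, 0): 2.0, (0, 1, 0): -1.0, (0, 0, 1): 0.5}   # p(alpha) = 2 a1 - a2 + 0.5 a3
q = lift(p, N, g)                                       # degree-2 coefficients
alpha = (0.6, 0.3, 0.1)                                 # a point of the unit simplex
```

Off the simplex the two polynomials differ by the factor \(\alpha_{1}+\dots+\alpha_{N}\), which is why the argument relies on α∈Ω.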

When \(n_{h_{f}}=0\) or \(n_{v_{f}}=0\), Theorem 5 yields the following corollaries.

Case 1: \(n_{h_{f}}\neq 0, n_{v_{f}}=0\).

Corollary 6

Define

. Suppose that there exist symmetric parameter-dependent positive definite matrices \(\bar{P}_{\mathfrak{K_{j}(g)}}>0 \) and matrices \(T_{\mathfrak{K_{j}(g)}}\), \(N_{\mathfrak{K_{j}(g)}}\), \(E_{1_{\mathfrak{K_{j}(g)}}}\), \(F_{1_{\mathfrak{K_{j}(g)}}}\), \(G_{1_{\mathfrak{K_{j}(g)}}}\), \(K_{1_{\mathfrak{K_{j}(g)}}}\), and \(Q_{1_{\mathfrak{K_{j}(g)}}}\), \(\mathfrak{K_{j}(g)}\in\mathfrak{K(g)}\), \(j=1,\ldots,\mathfrak{J}(\mathfrak{g})\), such that the following LMIs hold for all \(\mathfrak{K_{l}(g+1)}\in\mathfrak{K(g+1)}\), \(l=1,\ldots,\mathfrak{J}(\mathfrak{g+1})\):


where

Then the homogeneous polynomially parameter-dependent matrices given by (47) ensure (42) for all α∈Ω. Moreover, if the LMIs (50) are fulfilled for a given degree \(\mathfrak{\hat{g}}\), then the LMIs corresponding to any degree \(\mathfrak{g} > \mathfrak{\hat{g}}\) are also satisfied.

Similarly, for \(n_{h_{f}}= 0\) and \(n_{v_{f}}\neq0\), we have the following corollary.

Case 2: \(n_{h_{f}}= 0, n_{v_{f}}\neq0\).

Corollary 7

Define

Suppose that there exist symmetric positive definite matrices \(\bar{P}_{\mathfrak{K_{j}(g)}}>0\) and matrices \(T_{\mathfrak{K_{j}(g)}}\), \(N_{\mathfrak{K_{j}(g)}}\), \(E_{1_{\mathfrak{K_{j}(g)}}}\), \(F_{1_{\mathfrak{K_{j}(g)}}}\), \(G_{1_{\mathfrak{K_{j}(g)}}}\), \(K_{1_{\mathfrak{K_{j}(g)}}}\), and \(Q_{1_{\mathfrak{K_{j}(g)}}}\), \(\mathfrak{K_{j}(g)}\in\mathfrak{K(g)}\), \(j=1,\ldots,\mathfrak{J}(\mathfrak{g})\), such that the following LMIs hold for all \(\mathfrak{K_{l}(g+1)}\in\mathfrak{K(g+1)}\), \(l=1,\ldots,\mathfrak{J}(\mathfrak{g+1})\):

where

Then the homogeneous polynomially parameter-dependent matrices given by (47) ensure (44) for all α∈Ω. Moreover, if the LMIs of (51) are fulfilled for a given degree \(\hat{\mathfrak{g}}\), then the LMIs corresponding to any degree \(\mathfrak{g} > \hat{\mathfrak{g}}\) are also satisfied.

Case 3: \(n_{h_{f}}=0\), \(n_{v_{f}}=0\).

Corollary 8

Suppose that there exist symmetric positive definite matrices \(P_{\mathfrak{K_{j}(g)}}>0\) and matrices \(E_{\mathfrak{K_{j}(g)}}\), \(F_{\mathfrak{K_{j}(g)}}\), \(K_{\mathfrak{K_{j}(g)}}\), and \(Q_{\mathfrak{K_{j}(g)}}\), \(\mathfrak{K_{j}(g)}\in\mathfrak{K(g)}\), \(j=1,\ldots,\mathfrak{J}(\mathfrak{g})\), such that the following LMIs hold for all \(\mathfrak{K_{l}(g+1)}\in\mathfrak{K(g+1)}\), \(l=1,\ldots,\mathfrak{J}(\mathfrak{g+1})\):

where

Then the homogeneous polynomially parameter-dependent matrices given by (47) ensure (46) for all α∈Ω. Moreover, if (52) is fulfilled for a given degree \(\hat{\mathfrak{g}}\), then the LMIs corresponding to any degree \(\mathfrak{g} > \hat{\mathfrak{g}}\) are also satisfied.

Remark 6

Theorem 5 presents a sufficient condition for the solvability of the reduced-order H ∞ filtering problem. A reduced-order H ∞ filter can be selected by solving the following convex optimization problem:

5 Numerical Examples

Example 1

The system under consideration corresponds to the uncertain 2-D continuous system (1)–(3) with matrices given by

By solving the convex optimization problem (53) with the searched parameters \(\lambda_{1}=0.8851\) and \(\lambda_{2}=1.0568\), Theorem 5 and Corollaries 6, 7, and 8 yield the following results for filters of different orders:

Case 1: \(n_{h}=1, n_{v}=1, n_{h_{f}}=1, n_{v_{f}}=1\), γ=0.8272.

Case 2: \(n_{h}=1, n_{v}=1, n_{h_{f}}=0, n_{v_{f}}=1\), γ=0.9984.

Case 3: \(n_{h}=1, n_{v}=1, n_{h_{f}}=1, n_{v_{f}}=0\), γ=1.0030.

Case 4: \(n_{h}=1, n_{v}=1, n_{h_{f}}=0, n_{v_{f}}=0\), γ=1.0041.

For comparison, Theorem 3 with \(\lambda_{1}=-0.0031\) and \(\lambda_{2}=0.0057\) provides a guaranteed H ∞ cost of 0.8272, while the method of [38] yields 0.8936.
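The search over the scalars \(\lambda_{1}\) and \(\lambda_{2}\) used in these examples can be organized as an outer loop around the convex problem (53). The sketch below assumes, as is typical of slack-variable conditions, that the conditions are LMIs only once \(\lambda_{1}\) and \(\lambda_{2}\) are fixed, so each grid point requires one SDP solve that returns the minimal γ or reports infeasibility. The function `toy_gamma` is a hypothetical placeholder for that solve (it does not use the paper's data), and the grid is arbitrary.

```python
from itertools import product
from typing import Callable, Optional, Sequence, Tuple

# Solver type: maps (lambda1, lambda2) to the minimal gamma, or None if infeasible.
Solver = Callable[[float, float], Optional[float]]


def grid_search(solve: Solver,
                lambda1_grid: Sequence[float],
                lambda2_grid: Sequence[float]) -> Optional[Tuple[float, float, float]]:
    """Return (lambda1, lambda2, gamma) with the smallest feasible gamma, or None."""
    best: Optional[Tuple[float, float, float]] = None
    for lam1, lam2 in product(lambda1_grid, lambda2_grid):
        gamma = solve(lam1, lam2)
        if gamma is None:  # LMIs infeasible for this pair of scalars
            continue
        if best is None or gamma < best[2]:
            best = (lam1, lam2, gamma)
    return best


def toy_gamma(lam1: float, lam2: float) -> Optional[float]:
    """Hypothetical stand-in for solving (53) with fixed lambdas (not the paper's data)."""
    if lam1 <= 0.0:
        return None  # pretend the LMIs are infeasible in this region
    return 1.0 + (lam1 - 0.9) ** 2 + (lam2 - 1.0) ** 2


grid = [round(0.1 * i, 10) for i in range(-10, 21)]  # -1.0, -0.9, ..., 2.0
print(grid_search(toy_gamma, grid, grid))
```

Since the optimum over λ is generally not convex, a coarse grid followed by local refinement around the best point is a common practical choice.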

Example 2

Consider an uncertain 2-D continuous system (1)–(3) with the following system matrices [3]:

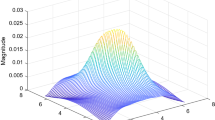

H ∞ upper bounds for the error dynamics have been computed with the conditions of Theorem 5 for \(\hat{\mathfrak{g}}=0,\ldots, 3\), using the searched parameters \(\lambda_{1}=0.2972\) and \(\lambda_{2}=0.2940\). The results, together with the number K of scalar variables and the number L of LMI rows, are shown in Table 1.
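The growth of K and L with the degree follows from how the conditions are indexed: the decision variables consist of \(\mathfrak{J}(\mathfrak{g})\) coefficient sets, while the LMIs are imposed for each of the \(\mathfrak{J}(\mathfrak{g}+1)\) elements of \(\mathfrak{K}(\mathfrak{g}+1)\). The rough counting sketch below assumes \(\mathfrak{J}(\mathfrak{g})=\binom{N+\mathfrak{g}-1}{\mathfrak{g}}\) and uses hypothetical per-coefficient sizes; the actual values in Table 1 depend on the matrix dimensions of the example, which are not reproduced here.

```python
from math import comb
from typing import Tuple


def num_coefficient_sets(n_vertices: int, degree: int) -> int:
    """J(g): number of monomials of degree g in N simplex variables."""
    return comb(n_vertices + degree - 1, degree)


def problem_size(n_vertices: int, degree: int, vars_per_set: int,
                 rows_per_lmi: int, rows_per_positivity: int) -> Tuple[int, int]:
    """Rough (K, L): J(g) variable sets, J(g+1) LMI blocks plus J(g) positivity blocks.

    Scalar variables such as gamma are ignored.
    """
    j_g = num_coefficient_sets(n_vertices, degree)
    j_next = num_coefficient_sets(n_vertices, degree + 1)
    K = j_g * vars_per_set
    L = j_next * rows_per_lmi + j_g * rows_per_positivity
    return K, L


# Hypothetical sizes: N = 2 vertices, 20 scalars per coefficient set,
# 12 rows per LMI block and 4 rows per positivity constraint.
for g in range(4):
    print(g, problem_size(2, g, 20, 12, 4))
```

For a polytope with N vertices, both counts grow polynomially in the degree, which is why only low degrees are usually explored.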

In the full-order case, with \(\mathfrak{g}=1\) (linearly parameter-dependent approach) and \(\lambda_{1}=0.2972\), \(\lambda_{2}=0.2940\), Theorem 5 provides a guaranteed H ∞ cost of 0.6157, whereas the conditions of Corollary 1 in [38] are infeasible and Corollary 1 of this paper yields 0.6202. Among the methods compared, the conditions of Theorem 5 therefore give the smallest guaranteed cost. The H ∞ performance levels achieved with the parameter search for filters of different orders are the following:

Case 1: \(n_{h}=2, n_{v}=2, n_{h_{f}}=2, n_{v_{f}}=2\), γ=0.6157.

Case 2: \(n_{h}=2, n_{v}=2, n_{h_{f}}=2, n_{v_{f}}=1\), γ=0.6353.

Case 3: \(n_{h}=2, n_{v}=2, n_{h_{f}}=1, n_{v_{f}}=2\), γ=0.6604.

Case 4: \(n_{h}=2, n_{v}=2, n_{h_{f}}=1, n_{v_{f}}=1\), γ=0.6653.

Case 5: \(n_{h}=2, n_{v}=2,n_{h_{f}}=0, n_{v_{f}}=0\), γ=0.8866.

This comparison shows that, for this example, the proposed conditions are less conservative than those of Corollary 1 and of [38].
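The reported bounds can be compared quantitatively. The arithmetic below uses only the values of γ quoted in Examples 1 and 2; it computes the relative change of the guaranteed cost with respect to the competing methods, and the relative increase incurred in Example 2 when the full-order filter is replaced by a static one. The helper name `relative_change` is illustrative.

```python
def relative_change(new: float, reference: float) -> float:
    """Relative change (new - reference) / reference; negative means a smaller bound."""
    return (new - reference) / reference


# gamma values quoted in Examples 1 and 2: (new, reference)
comparisons = {
    "Ex. 1: full order vs [38]": (0.8272, 0.8936),
    "Ex. 2: Theorem 5 vs Corollary 1": (0.6157, 0.6202),
    "Ex. 2: static filter vs full order": (0.8866, 0.6157),
}

for label, (new, ref) in comparisons.items():
    print(f"{label}: {relative_change(new, ref):+.2%}")
```

The gain over [38] in Example 1 is about 7.4%, the gain over Corollary 1 in Example 2 is about 0.7%, and moving from the full-order to the static filter in Example 2 raises the bound by about 44%.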

6 Conclusion

A solution to the reduced-order H ∞ filtering problem has been provided for uncertain 2-D continuous systems described by the Roesser state-space model, with uncertain matrices belonging to a given polytope. The proposed methodology, based on homogeneous polynomially parameter-dependent matrices and slack variables, provides less conservative results than those available in the literature, thanks to the extra degrees of freedom it introduces in the solution space. Numerical examples have been provided to demonstrate the feasibility and effectiveness of the proposed methodology.

The proposed approach could be extended to other related problems, such as Fornasini–Marchesini models, or even multidimensional systems with more than two dimensions (see [1] and [24]).

References

[1] P. Agathoklis, The Lyapunov equation for n-dimensional discrete systems. IEEE Trans. Circuits Syst. CAS-35, 448–451 (1988)

[2] M. Benhayoun, A. Benzaouia, F. Mesquine, F. Tadeo, Stabilization of 2D continuous systems with multi-delays and saturated control, in Proceedings of the 18th Mediterranean Conference on Control and Automation, MED’10 (2010), pp. 993–999

[3] P.A. Bliman, R.C.L.F. Oliveira, V.F. Montagner, P.L.D. Peres, Existence of homogeneous polynomial solutions for parameter-dependent linear matrix inequalities with parameters in the simplex, in Proceedings of the 45th IEEE Conference on Decision & Control, San Diego, CA, USA (2006), pp. 13–15

[4] H.C. Choi, D. Chwa, S.K. Hong, LMI approach to robust reduced-order H ∞ filter design for polytopic uncertain systems. Int. J. Control. Autom. Syst. 7, 487–494 (2009)

[5] J.R. Cui, G.D. Hu, Q. Zhu, Stability and robust stability of 2D discrete stochastic systems. Discrete Dyn. Nat. Soc. 2011, 545361 (2011), 11 pp.

[6] C.E. de Souza, L. Xie, Y. Wang, H ∞ filtering for a class of uncertain nonlinear systems. Syst. Control Lett. 20, 419–426 (1993)

[7] C. Du, L. Xie, H ∞ Control and Filtering of Two-Dimensional Systems (Springer, Heidelberg, 2002)

[8] C. Du, L. Xie, Y.C. Soh, H ∞ reduced-order approximation of 2-D digital filters. IEEE Trans. Circuits Syst. I, Fundam. Theory Appl. 48(6), 688–698 (2001)

[9] Z. Duan, J. Zhang, C. Zhang, E. Mosca, Robust H 2 and H ∞ filtering for uncertain linear systems. Automatica 42, 1919–1926 (2006)

[10] C. El-Kasri, A. Hmamed, T. Alvarez, F. Tadeo, Uncertain 2D continuous systems with state delay: filter design using an H ∞ polynomial approach. Int. J. Comput. Appl. 44(18), 13–21 (2012)

[11] C. El-Kasri, A. Hmamed, E.H. Tissir, F. Tadeo, Robust H ∞ filtering for uncertain two-dimensional continuous systems with time-varying delays. Submitted to Multidimens. Syst. Signal Process. (2012)

[12] C. El-Kasri, A. Hmamed, E.H. Tissir, F. Tadeo, Robust H ∞ filtering of 2D Roesser discrete systems: a polynomial approach. Math. Probl. Eng. 2012, 1–15 (2012)

[13] A. Elsayed, M.J. Grimble, An approach to H ∞ design of optimal digital filters. IMA J. Math. Control Inf. 6, 233–251 (1989)

[14] K. Galkowski, LMI based stability analysis for 2D continuous systems. Int. Conf. Electron. Circuits Syst. 3, 923–926 (2002)

[15] M. Ghamgui, N. Yeganefar, O. Bachelier, D. Mehdi, Stability and stabilization of 2D continuous time varying delay systems. Int. J. Sci. Tech. Autom. Control Comput. Eng. 6(1), 1734–1745 (2012)

[16] K.M. Grigoriadis, J.T. Watson, Reduced-order H ∞ and L 2–L ∞ filtering via linear matrix inequalities. IEEE Trans. Aerosp. Electron. Syst. 33, 1326–1338 (1997)

[17] T. Hinamoto, 2-D Lyapunov equation and filter design based on Fornasini–Marchesini second model. IEEE Trans. Circuits Syst. I, Fundam. Theory Appl. 40(2), 102–110 (1993)

[18] A. Hmamed, M. Alfidi, A. Benzaouia, F. Tadeo, LMI conditions for robust stability of 2-D linear discrete-time systems. Math. Probl. Eng. 2008, 356124 (2008), 11 pp.

[19] A. Hmamed, F. Mesquine, F. Tadeo, M. Benhayoun, A. Benzaouia, Stabilization of 2-D saturated systems by state feedback control. Multidimens. Syst. Signal Process. 21(3), 277–292 (2010)

[20] A. Hmamed, C. El-Kasri, E.H. Tissir, T. Alvarez, F. Tadeo, Robust H ∞ filtering for uncertain 2-D continuous systems with delays. Int. J. Innov. Comput. Inf. Control 9(5), 2167–2183 (2013)

[21] J. Klamka, Controllability of nonlinear 2-D systems. Nonlinear Anal. Theory Methods Appl. 30(5), 2963–2968 (1997)

[22] J. Klamka, Controllability of infinite-dimensional 2-D linear systems. Adv. Syst. Sci. Appl. 1(1), 537–543 (1997)

[23] M.J. Lacerda, R.C.L.F. Oliveira, P.L.D. Peres, Robust H 2 and H ∞ filter design for uncertain linear systems via LMIs and polynomial matrices. Signal Process. 91, 1115–1122 (2011)

[24] Z. Lin, Feedback stabilizability of MIMO n-D linear systems. Multidimens. Syst. Signal Process. 9, 149–172 (1998)

[25] W.-S. Lu, A. Antoniou, Two-Dimensional Digital Filters (Marcel Dekker, New York, 1992)

[26] N.E. Mastorakis, M. Swamy, A new method for computing the stability margin of two dimensional continuous systems. IEEE Trans. Circuits Syst. I, Fundam. Theory Appl. 49(6), 869–872 (2002)

[27] P.G. Park, T. Kailath, H ∞ filtering via convex optimization. Int. J. Control 66, 15–22 (1997)

[28] W. Paszke, E. Rogers, K. Galkowski, H 2/H ∞ output information-based disturbance attenuation for differential linear repetitive processes. Int. J. Robust Nonlinear Control 21(17), 1981–1993 (2011)

[29] U. Shaked, H ∞ minimum error state estimation of linear stationary processes. IEEE Trans. Autom. Control 35, 554–558 (1990)

[30] H.D. Tuan, P. Apkarian, T.Q. Nguyen, Robust and reduced-order filtering: new LMI based characterizations and methods. IEEE Trans. Signal Process. 49, 2975–2984 (2001)

[31] H.D. Tuan, P. Apkarian, T.Q. Nguyen, T. Narikiyo, Robust mixed H 2/H ∞ filtering of 2-D systems. IEEE Trans. Signal Process. 50, 1759–1771 (2002)

[32] A.G. Wu, H. Dong, G. Duan, Improved robust H ∞ estimation for uncertain continuous-time systems. J. Syst. Sci. Complex. 20, 362–369 (2007)

[33] L. Wu, J. Lam, W. Paszke, K. Galkowski, E. Rogers, Robust H ∞ filtering for uncertain differential linear repetitive processes. Int. J. Adapt. Control Signal Process. 22, 243–265 (2008)

[34] L. Xie, C. Du, C. Zhang, Y.C. Soh, H 2/H ∞ deconvolution filtering of 2-D digital systems. IEEE Trans. Signal Process. 50, 2319–2332 (2003)

[35] S. Xu, J. Lam, Reduced-order H ∞ filtering for singular systems. Syst. Control Lett. 56, 48–57 (2007)

[36] S. Xu, P. Van Dooren, Robust H ∞ filtering for a class of nonlinear systems with state delay and parameter uncertainty. Int. J. Control 75, 766–774 (2002)

[37] L. Xu, O. Saito, K. Abe, Output feedback stabilizability and stabilization algorithms for 2-D systems. Multidimens. Syst. Signal Process. 5(1), 41–60 (1994)

[38] S. Xu, J. Lam, Y. Zou, Z. Lin, W. Paszke, Robust H ∞ filtering for uncertain 2-D continuous systems. IEEE Trans. Signal Process. 53, 1731–1738 (2005)

Acknowledgements

This work was funded by AECI projects A/030426/10 and AP/034911/11 and by MICINN project DPI2010-21589-C05-05.

Cite this article

El-Kasri, C., Hmamed, A. & Tadeo, F. Reduced-Order H ∞ Filters for Uncertain 2-D Continuous Systems, Via LMIs and Polynomial Matrices. Circuits Syst Signal Process 33, 1189–1214 (2014). https://doi.org/10.1007/s00034-013-9689-x
