Abstract

This paper adopts an optimization-oriented exponential-polynomial-closure (OEPC) approach for the probabilistic analysis of oscillators under correlated multi-power velocity multiplicative excitation and additive excitation. The approach retains the exponential polynomial form of the conventional EPC method and extends that method. While the EPC method uses the projection of the residue of the reduced FPK equation to formulate algebraic equations, the OEPC approach minimizes the squared residue to handle the stochastic problem. The optimization process entails constructing an objective function (OBJ) with a weight function. The unknown coefficients in the exponential polynomial for estimating the probabilistic solution are then determined by minimizing the OBJ using a gradient-based method. The OEPC approach leads to an approximate probabilistic solution to the system response, which allows for statistical evaluations, such as the mean up-crossing rate. Four examples are provided to demonstrate the effectiveness of the OEPC approach in computing the asymmetric probabilistic solution of stochastic oscillators with both odd and even nonlinear terms, subjected to correlated multi-power velocity multiplicative excitation and additive excitation. In particular, the solutions are verified by comparison with both the Gaussian closure method and Monte Carlo simulation to test the accuracy and reliability of the OEPC approach.


1 Introduction

The probabilistic solutions of random systems have numerous applications in the field of engineering, including the study of structural reliability and risk analysis. Unpredictable failure can occur under random loading conditions, which may significantly affect the safety and reliability of structures such as dams, bridges and towers [1, 2]. Random and unpredictable natural phenomena, such as wind, can pose a hazard to buildings and other structures. Well-known wind-induced events include the Tacoma Bridge event (1940) [3], the Lodemann Bridge event (1972) [4] and the Humen Bridge event (2020) [5]. For wind-excited or self-excited towers, dynamic analysis has been applied to explore the impact of turbulence, as evidenced in several studies [6,7,8,9]. However, relatively few studies have been conducted on the probabilistic analysis of such systems considering the randomness of wind turbulence. In this article, a probabilistic analysis is conducted on an established system through the optimization-oriented exponential-polynomial-closure (OEPC) approach. This approach is based on the exponential polynomial assumption of the traditional EPC method [10, 11] and extends it through an optimization solution procedure.

There are numerous methods to acquire the probabilistic solution, namely the probability density function (PDF), for the responses of random systems. Although exact solutions are rarely available for practical problems, they remain the first choice whenever they exist [12, 13]. Numerical methods such as Monte Carlo simulation (MCS) are commonly employed for obtaining numerical solutions. However, the application of MCS is often constrained by its inefficiency and long computation time [14,15,16,17,18].

Semi-analytical methods are frequently employed in handling real-world problems. Moment functions of the first two orders can be obtained using equivalent linearization [19,20,21]. The Gaussian closure method (GCM) can be applied to solve weakly nonlinear problems using lower-order moments [22, 23]. Building upon the equivalent linearization method, the statistical linearization method incorporates the harmonic balance method to solve the system under both periodic and random excitation [24]. Utilizing the path integration (PI) technique, it is feasible to obtain the PDF of a given system without solving the Fokker-Planck-Kolmogorov (FPK) equation [25,26,27,28]. The cell mapping method divides the state space into a limited number of cells and can be more computationally efficient than the path integration method in some cases [29,30,31]. An extrapolation approach was recently developed to improve the computational efficiency of PI, exhibiting high solution accuracy between the current and the extrapolated points [32]. The computational efficiency of PI can also be improved by utilizing a sparse PDF expansion, which can be determined by an \(L_{1/2}\)-norm minimization formulation [33]. The stochastic averaging method is applicable to weakly damped and weakly excited systems [34,35,36]. Based on a high-order finite difference procedure and the inverse Fourier transformation, the PDF solution can be estimated [37]. The random vibration behavior of a nonlinear oscillator can be examined through the application of the nonlinear energy sink method [38, 39]. By developing the pole-residue transfer function, the pole-residue method is able to provide nonstationary response statistics for systems subjected to modulated white noise excitation [40].
The exponential-polynomial-closure (EPC) method can solve strongly nonlinear problems, particularly for estimating the tails of PDF solution, as well as the system under Gaussian or Poisson white noise, parametric excitation and with various nonlinear terms [10, 41,42,43]. As for high-dimensional problems, it is suggested to combine the state-space-split method for applications, such as in the analysis of geometrically nonlinear plates [44], stretched Bernoulli beams [45], and the random characteristics analysis of cables [46]. By the results from detailed balance method, the EPC method is further extended [47, 48]. Recently, the EPC method has been expanded to acquire the transient or non-stationary PDF solutions of nonlinear stochastic oscillators [11, 49,50,51,52].

This paper presents the OEPC approach for random vibration analysis, which introduces an alternative way for determining the values of variables in the traditional EPC expression. Utilizing the expression of exponential polynomial, the objective function (hereafter referred to as OBJ) is devised to be the squared residual error of the FPK equation. The unknown coefficients of OEPC are then determined by minimizing the OBJ via a gradient-based method. With this approach, this paper provides an approximate PDF solution for the system response, enabling statistical evaluations such as the mean up-crossing rate (MCR) analysis. To assess the precision of the outcome and the feasibility of the method, four examples based on the established governing equation are given in this paper. The first example is about a system with independent excitations, the second one is about a system with half-correlated excitations, and the third one is about a system with fully correlated excitations. The last example aims to evaluate the accuracy of the solution for higher values of coefficients in the nonlinear damping part. The effectiveness of the presented approach in solving nonlinear oscillations under correlated additive excitation and multiplicative excitation (on powered velocity) has been verified based on the results obtained from the examples.

2 Formulation of FPK equation

The stochastic oscillator being analyzed can be mathematically expressed using Eq. (1).

where \(\Psi \) and \(\dot{\Psi }\) are the displacement and velocity, respectively, and \([\Psi ,\dot{\Psi }]\in {\mathbb {R}}^2\); \(\xi \) and \(\omega _0\) are the damping ratio and natural frequency, respectively; \(c_1\) and \(c_2\) are the coefficients of \(\dot{\Psi }^2\) and \(\dot{\Psi }^3\), respectively; \(c_3\) denotes a constant. It is noted that the term \(c_1\dot{\Psi }^2\) can cause even nonlinearity and \(c_2\dot{\Psi }^3\) can cause odd nonlinearity in the oscillator. The system is subjected to three unit Gaussian white noise excitations denoted as \(\Omega _i(t)\) \((i=1,2,3)\) with power spectral density (PSD) \(\frac{1}{2\pi }\). \(\Omega _1(t)\) corresponds to the multiplicative excitation of the cubic velocity term (\(\dot{\Psi }^3\)), and \(\Omega _2(t)\) corresponds to the multiplicative excitation of the primary velocity term (\(\dot{\Psi }\)). Together, these two constitute the multi-power velocity multiplicative excitation. \(\Omega _3(t)\) corresponds to the additive excitation. The coefficients of each excitation, \(\gamma _i\) \((i=1,2,3)\), reflect the intensities of their respective parts.

Without loss of generality, the excitation described in Eq. (1) is capable of representing the Gaussian white noise with a power spectral density that is not equal to \(\frac{1}{2\pi }\). For instance, one can set \(\Omega _3^e(t)=\gamma _3 \Omega _3(t)\), where the power spectral density of \(\Omega _3^e(t)\) will be \(\frac{\gamma _3^2}{2\pi }\).

The current scope of this study is limited to solving problems under Gaussian excitations. However, since Poisson white noise (a typical non-Gaussian excitation) is also governed by the FPK equation [43], the OEPC method is still feasible. Therefore, the procedure for solving the problem under Gaussian excitation can be extended to solve the problem under non-Gaussian excitation using different types of FPK equations.

Setting \(\Psi =\Psi _1\), \(\dot{\Psi }=\Psi _2\) and \(h\left( \Psi _1,\Psi _2\right) =2 \xi \omega _0 \Psi _2+\omega _0^2 \Psi _1+c_1 \Psi _2^2 + c_2 \Psi _2^3 + c_3\), the Stratonovich form of Eq. (1) can be written as

\(\Omega _{1,2,3}(t)\) are characterized by

where \(\tau \) denotes the time lag; \(\delta \) denotes the Dirac function; \(\rho _{ij}\) is the correlation coefficient between \(\Omega _i(t)\) and \(\Omega _j(t)\).

The formulation of the following reduced FPK equation for the stationary PDF solution \(p\left( \psi _1,\psi _2\right) \) is a consequence of the Markovian property of the two random processes \(\Psi _1\) and \(\Psi _2\) in Eq. (2).

where

In addition, it is assumed that \(p(\psi _1,\psi _2)\) fulfills the following conditions.

3 Procedure of OEPC approach

3.1 Formulation of residual error

In accordance with the conventional EPC methods, the PDF solution to Eq. (4) is given as follows [10].

where \(\varvec{\psi }\) denotes \([\psi _1,\psi _2]\); \(\varvec{\alpha }\) is the coefficient vector to be determined; \(\tilde{p}_n\) is the approximate PDF with the same order as \(Q_n\); C is a normalization constant; the polynomial, \(Q_n\), is expressed as

where the highest order of \(\psi _i\) in \(Q_n\) is denoted by n; the number of components in \(Q_n\) is \(N=\frac{n}{2}\left( n+3\right) \); \([\alpha _1,\alpha _2,...,\alpha _{N}]^T\) forms the coefficient vector \(\varvec{\alpha }\); further details regarding the terms in \(Q_n\) are exhibited in Table 1.
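The count \(N=\frac{n}{2}(n+3)\) can be checked by enumerating the monomials of \(Q_n\). The following is a minimal sketch under stated assumptions: \(Q_n\) is taken to collect every monomial \(\psi _1^{j-k}\psi _2^{k}\) of total degree \(j=1,\ldots ,n\), indexed by \(i=\frac{j(j+1)}{2}+k\). Which variable carries the power \(k\), and the helper names `epc_terms` and `eval_Q`, are illustrative assumptions; Table 1 remains the authoritative listing.

```python
def epc_terms(n):
    """Return [(i, p, q)], meaning term alpha_i * psi1**p * psi2**q, for degrees 1..n."""
    terms = []
    for j in range(1, n + 1):          # total degree of the monomial
        for k in range(j + 1):         # assumed: k is the power of psi2
            i = j * (j + 1) // 2 + k   # index convention i = j(j+1)/2 + k
            terms.append((i, j - k, k))
    return terms


def eval_Q(alpha, psi1, psi2, n):
    """Evaluate Q_n = sum_i alpha_i * psi1**p * psi2**q (alpha is a 0-based list)."""
    return sum(alpha[i - 1] * psi1**p * psi2**q for i, p, q in epc_terms(n))


terms = epc_terms(6)
print(len(terms))  # number of unknown coefficients for n = 6
```

For \(n=2\) the enumeration yields five terms, which is the Gaussian case with a quadratic exponent.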

The left-hand side of Eq. (4) can be used to obtain the residual error \(\Delta _n\) by substituting Eq. (7) into it.

The expression presented in Eq. (9) can be further simplified as

where

3.2 Construction of OBJ

In Eq. (10), it is observed that \(\Delta _n(\varvec{\psi };\varvec{\alpha })\) consists of two components, namely \(\tilde{p}_n(\varvec{\psi };\varvec{\alpha })\) and \(r_n(\varvec{\psi };\varvec{\alpha })\). The first component, \(\tilde{p}_n(\varvec{\psi };\varvec{\alpha })\), is obtained from Eq. (7), and solely contains exponential terms, ensuring it positive. Hence, to minimize \(\Delta (\varvec{\psi };\varvec{\alpha })\) towards zero, the second component, \(r_n(\varvec{\psi };\varvec{\alpha })\), must approach zero. Therefore, the expression of \(r_n(\varvec{\psi };\varvec{\alpha })\), Eq. (11) is adopted to formulate the OBJ for determining the values of \(\varvec{\alpha }\).

where \(\Theta _n\) is the formulated OBJ; \(\hat{p}_2(\varvec{\psi })\) is the weighting function which is from GCM, allowing the weighted error to be integrated in closed form.

The weighting function \(\hat{p}_2(\varvec{\psi })\) allows the integral in the constructed OBJ to be evaluated over \((-\infty ,+\infty )\) by replacing the integral terms with the corresponding expectation values, so that the integral can be calculated analytically. Compared with numerical integration over a finite range, e.g., \([m-4\sigma ,m+4\sigma ]\), the analytical integration avoids truncation and quadrature errors and therefore improves the accuracy of the result.

By employing a gradient-based method, the values of \(\varvec{\alpha }\) can be determined through Eq. (13).

In the optimization procedure, finding the minimum value of the OBJ requires an initial guess, denoted as \(\varvec{\alpha }^{(0)}\), to determine the values of the unknown variables. According to empirical evidence, using a Gaussian initial guess helps to achieve a converged solution. A good initial estimate assists in yielding a quality solution and reducing computational time. The detailed procedures for determining \(\hat{p}_2(\varvec{\psi })\) and \(\varvec{\alpha }^{(0)}\) are presented in the following subsection.

3.3 Weighting function and initial coefficient

When building the OBJ, introducing the weighting function into the spatial integration Eq. (12) helps to improve the integral accuracy and simplify the calculation. \(\hat{p}_2(\varvec{\psi })\) is Gaussian type PDF, which can be expressed as

where \(C_2\) serves as a constant for achieving normalization in the equation; \(\varvec{\eta }\) is a coefficient vector that can be estimated by GCM as follows.

Linearizing the system from Eq. (2) gives the linearization coefficient equations, which are expressed by

where \(\nu _1\), \(\nu _2\), and \(\nu _3\) are parameters in the linearized system Eq. (16).

The moment equations corresponding to Eq. (16) are listed in Eq. (17).

Equations (15) and (17) contain multiple unknown moments such as \(E[\Psi _1^i]\) and \(E[\Psi _2^i]\) \((i=1,2,3,\ldots )\). After simplification with Eq. (18), only the moments of the first two orders are retained.

After simplification, Eqs. (15) and (17) can be solved by an iterative procedure, leading to the values of \(\nu _1\), \(\nu _2\), \(\nu _3\), \(E[\Psi _1]\), \(E[{\Psi _2}]\), \(E[\Psi _1^2]\) and \(E[{\Psi _2}^2]\). Then the unknowns in Eq. (14) can be given by:

Using the weight function, the integral \(\int _{-\infty }^{+\infty }\int _{-\infty }^{+\infty }*\hat{p}_2d\psi _1d\psi _2\) in Eq. (12) can be directly replaced by the value of \(E[*]\), where \(*\) denotes the polynomial term in the expansion of \(r_n^2\). Additionally, Eq. (18) enables the derivation of higher-order moments, which correspond to higher order terms in \(r_n^2\). Therefore, by utilizing \(\hat{p}_2\), Eq. (12) can be analytically integrated within \((-\infty ,+\infty )\).
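The replacement of weighted integrals by expectations can be illustrated for a single Gaussian variable. The sketch below uses the standard recursion \(E[X^k]=m\,E[X^{k-1}]+(k-1)\sigma ^2E[X^{k-2}]\) for \(X\sim N(m,\sigma ^2)\), which expresses every higher-order moment through the mean and variance. It is an assumption that Eq. (18) takes an equivalent form, and the function names are hypothetical.

```python
def gaussian_raw_moments(m, sigma, k_max):
    """Return [E[X^0], ..., E[X^k_max]] for X ~ N(m, sigma^2)."""
    mom = [1.0, m]
    for k in range(2, k_max + 1):
        # Standard Gaussian moment recursion; only m and sigma are needed.
        mom.append(m * mom[k - 1] + (k - 1) * sigma**2 * mom[k - 2])
    return mom[: k_max + 1]


def expect_polynomial(coeffs, m, sigma):
    """E[sum_k coeffs[k] * X^k] under the Gaussian weight, without quadrature."""
    mom = gaussian_raw_moments(m, sigma, len(coeffs) - 1)
    return sum(c * e for c, e in zip(coeffs, mom))


# Expectation of 1 + X^2 for X ~ N(1, 2^2): 1 + (m^2 + sigma^2)
print(expect_polynomial([1.0, 0.0, 1.0], 1.0, 2.0))
```

Each polynomial term of \(r_n^2\) is handled in the same way, term by term, so no numerical integration grid is required.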

Based on Eq. (19), the initial coefficient required to start the optimization procedure can be obtained. Setting \(\alpha ^{(0)}_i=0\) for \(i\ge 6\), the initial coefficient vector can be expressed as \(\varvec{\alpha }^{(0)}=[\eta _1,\eta _2,\eta _3,\eta _4,\eta _5,0,0,\ldots ,0]\).
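As an illustration of the Gaussian initial guess, the sketch below builds \(\varvec{\alpha }^{(0)}\) from a mean and standard deviation for each state variable. It assumes that the first five terms of \(Q_n\) are ordered as \(\psi _1\), \(\psi _2\), \(\psi _1^2\), \(\psi _1\psi _2\), \(\psi _2^2\) and that displacement and velocity are uncorrelated, so the cross coefficient is zero. Both points are assumptions rather than a restatement of Eq. (19), and the function names and numerical values are hypothetical.

```python
def gaussian_initial_alpha(m1, s1, m2, s2, n):
    """Initial coefficients so that exp(Q_n) is proportional to an uncorrelated Gaussian."""
    N = n * (n + 3) // 2
    alpha = [0.0] * N                # alpha_i = 0 for i >= 6
    alpha[0] = m1 / s1**2            # eta_1, coefficient of psi1
    alpha[1] = m2 / s2**2            # eta_2, coefficient of psi2
    alpha[2] = -0.5 / s1**2          # eta_3, coefficient of psi1^2
    alpha[3] = 0.0                   # eta_4, coefficient of psi1*psi2 (assumed uncorrelated)
    alpha[4] = -0.5 / s2**2          # eta_5, coefficient of psi2^2
    return alpha


def log_weight(alpha, x, v):
    """Quadratic part of Q_n: log of the Gaussian weight up to an additive constant."""
    return alpha[0] * x + alpha[1] * v + alpha[2] * x * x + alpha[3] * x * v + alpha[4] * v * v


alpha0 = gaussian_initial_alpha(0.4, 0.5, 0.0, 0.5, 6)   # illustrative values only
print(len(alpha0), alpha0[:5])
```

Expanding \(-\frac{(\psi _1-m_1)^2}{2\sigma _1^2}-\frac{(\psi _2-m_2)^2}{2\sigma _2^2}\) and matching powers gives the five nonzero entries, while the constant term is absorbed into the normalization constant.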

3.4 Optimization solution procedure

To solve Eq. (13), the Broyden-Fletcher-Goldfarb-Shanno (BFGS) method is adopted [53,54,55,56]. The BFGS method is a type of quasi-Newton method, which is also a gradient-based method [57, 58]. The fundamental theory of BFGS can be represented by the following equation [59].

where j denotes the number of iteration; \(k_j\) is a coefficient to be determined in the procedure of BFGS; \(G_j\) is the estimated inverse Hessian matrix; \(\nabla \Theta _n(\varvec{\alpha ^{(j)}})\) denotes the gradient of \(\Theta _n\).

The BFGS method involves initializing an approximate inverse Hessian and updating it at each iteration. Quasi-Newton methods are so called because they use an approximation of the inverse Hessian instead of the true one [60]. Since computing the inverse of the Hessian in the original Newton method can be prohibitively expensive, the BFGS method improves the computational efficiency by updating the approximate inverse Hessian matrix without having to compute it directly [61]. The detailed procedure of the BFGS algorithm is shown in Table 2.

In Table 2, \(I_N\) is an identity matrix; N is the number of variables in \(\varvec{\alpha }\); \(\varepsilon _0\) is the iteration stopping criterion, which is taken as \(10^{-8}\); \(||*||\) refers to the Euclidean norm. The function \(\text {argmin}_{k\ge 0} \left[ \Theta _n(\varvec{\alpha }^{(j)}+k\varvec{d}^{(j)})\right] \) means that, under the condition \({k\ge 0}\), the value of k minimizes \(\Theta _n(\varvec{\alpha }^{(j)}+k\varvec{d}^{(j)})\), which can be regarded as a one-dimensional optimization problem. The problem can be solved by the golden section search method [60]. The value of k can also be approximated by \(-\frac{(g^{(j)})^Td^{(j)}}{(d^{(j)})^T Hd^{(j)}}\), which is adopted in the following analysis [62].

As shown in Table 2, BFGS maintains a positive-definite matrix approximation that is updated at every iteration, improving the estimate of the second-order derivative along the search direction. It is noted that the original Newton method cannot guarantee the positive definiteness of the Hessian matrix [63, 64]. Losing positive definiteness can cause the optimization procedure to miss the minimum point. Therefore, by approximating the inverse Hessian with a positive-definite matrix, the BFGS method enhances the solution procedure by providing an accurate estimate of the Hessian’s inverse at significantly lower computational cost [62, 65].
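The inverse-Hessian form of the BFGS iteration can be sketched as follows. This is a plain-Python illustration and not the implementation used for the reported results: the step length is chosen by a backtracking (Armijo) line search instead of the approximation adopted in the paper, and the test objective `f` is a hypothetical convex function standing in for \(\Theta _n\).

```python
def bfgs_minimize(f, grad, x0, tol=1e-8, max_iter=200):
    """Minimize f with BFGS, updating an approximate inverse Hessian G."""
    n = len(x0)
    x = list(x0)
    G = [[1.0 if r == c else 0.0 for c in range(n)] for r in range(n)]  # G_0 = I_N
    g = grad(x)
    for _ in range(max_iter):
        if sum(gi * gi for gi in g) ** 0.5 < tol:       # stop on small gradient norm
            break
        d = [-sum(G[r][c] * g[c] for c in range(n)) for r in range(n)]  # d = -G g
        slope = sum(gi * di for gi, di in zip(g, d))
        k, fx = 1.0, f(x)
        # Backtracking line search (assumption: replaces the paper's step formula).
        while f([xi + k * di for xi, di in zip(x, d)]) > fx + 1e-4 * k * slope and k > 1e-12:
            k *= 0.5
        x_new = [xi + k * di for xi, di in zip(x, d)]
        g_new = grad(x_new)
        s = [a - b for a, b in zip(x_new, x)]
        y = [a - b for a, b in zip(g_new, g)]
        sy = sum(si * yi for si, yi in zip(s, y))
        norm_s = sum(si * si for si in s) ** 0.5
        norm_y = sum(yi * yi for yi in y) ** 0.5
        if sy > 1e-10 * norm_s * norm_y:                # curvature condition keeps G positive definite
            Gy = [sum(G[r][c] * y[c] for c in range(n)) for r in range(n)]
            yGy = sum(yi * gyi for yi, gyi in zip(y, Gy))
            for r in range(n):
                for c in range(n):
                    G[r][c] += ((1.0 + yGy / sy) * s[r] * s[c]
                                - Gy[r] * s[c] - s[r] * Gy[c]) / sy
        x, g = x_new, g_new
    return x


def f(x):
    return (x[0] - 1.0) ** 2 + 10.0 * (x[1] + 2.0) ** 2 + (x[0] - 1.0) ** 4


def grad_f(x):
    return [2.0 * (x[0] - 1.0) + 4.0 * (x[0] - 1.0) ** 3, 20.0 * (x[1] + 2.0)]


x_min = bfgs_minimize(f, grad_f, [0.0, 0.0])
print(x_min)  # should be close to the minimizer (1, -2)
```

The update is skipped whenever the curvature condition \(s^Ty>0\) fails, which is what preserves the positive definiteness of the approximate inverse Hessian.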

4 Example of probability analysis

In this paper, the performance of the OEPC approach is evaluated through the analyses of four different oscillators. The first one is excited by three independent noise sources with \(\rho _{i,j}=0\) \((i\ne j)\). The second one is subjected to three correlated noise sources, where \(\rho _{i,j}=0.5\) \((i\ne j)\). The third one is excited by fully correlated noise sources, where \(\rho _{i,j}=1\) for all \(i\ne j\). The fourth oscillator extends the second one with increased nonlinearity. The accuracy of the OEPC solutions is assessed by comparison with MCS. Additionally, the results are compared with those from GCM in each example. All calculations are conducted on a computer equipped with an Intel Core i9-12900H processor (24 MB cache, up to 5.00 GHz), 16 GB of DDR5 memory (4800 MHz) and a 512 GB SSD.

4.1 Example 1

The values of system parameters in Eq. (1) are specified by \(\xi =0.1\), \(\omega _0=1\), \(c_1=0.2\), \(c_2=0.4\), \(c_3=-0.5\), \(\gamma _1=0.05\), \(\gamma _2=0.5\), \(\gamma _3=0.01\). Then the governing equation is given as Eq. (21).

The correlation coefficient matrix of the three independent noises is expressed as

The OBJ for Eq. (1) is specified as

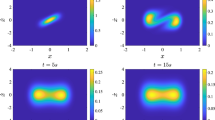

The minimum of \(\Theta _n(\varvec{\alpha })\) is located through the optimization procedure. The obtained PDF solution \(p(\psi _1,\psi _2)\) is plotted within \([m-4\sigma ,m+4\sigma ]\) in Fig. 1. m and \(\sigma \) denote the mean and standard deviation from GCM in all examples. \(m_i\) and \(\sigma _i\) correspond to the state variable \(\psi _i\) (\(i=1,2\)).

The estimated \(p(\psi _1,\psi _2)\) is shown in Fig. 1a. Figure 1b presents the solution of MCS with the sample size \(3\times 10^8\). The PDF solution obtained through the OEPC method closely resembles the PDF solution derived from MCS. The marginal PDFs \(p(\psi _1)\) and \(p(\psi _2)\) can be obtained by integrating \(p(\psi _1,\psi _2)\). The logarithmic marginal PDFs from MCS, GCM and OEPC (\(n=6\)) are plotted in Fig. 2.

In Fig. 2, the curve of GCM represents the solution from GCM, which is also used as the initial guess for the OEPC method. As depicted in Fig. 2, the PDFs of OEPC exhibit excellent agreement with the solutions of MCS, and significantly improve in comparison to the solutions obtained via GCM.

In application, the MCR is used to estimate the reliability of extreme events such as wind loads or fatigue crack growth in structures [66, 67]. The MCR can also be used in benchmark studies to evaluate the accuracy and reliability of civil engineering structures [68, 69]. It is known that the MCR (\(\nu _G^+\)) from GCM can be obtained directly from Eq. (24), whereas the non-Gaussian MCR (\(\nu ^+\)) needs to be obtained by integration using Eq. (25) [70].
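The relation between the two MCR expressions can be checked numerically. The sketch below assumes that the integral form is the Rice formula \(\nu ^+(x)=\int _0^{+\infty }\psi _2\,p(x,\psi _2)\,d\psi _2\) and that the Gaussian form is its closed-form value \(\frac{\sigma _2}{2\pi \sigma _1}\exp \left[ -\frac{(x-m_1)^2}{2\sigma _1^2}\right] \) for a zero-mean velocity uncorrelated with the displacement. Whether Eqs. (24) and (25) are written exactly this way is an assumption, and the function names and parameter values are hypothetical.

```python
import math


def mcr_rice(pdf, x, v_max, steps=2000):
    """Up-crossing rate at level x: trapezoidal rule for the integral of v * p(x, v) over [0, v_max]."""
    h = v_max / steps
    total = 0.0
    for i in range(steps + 1):
        v = i * h
        w = 0.5 if i in (0, steps) else 1.0
        total += w * v * pdf(x, v)
    return total * h


def mcr_gaussian(x, m1, s1, s2):
    """Closed-form up-crossing rate for an uncorrelated Gaussian with zero-mean velocity."""
    return s2 / (2.0 * math.pi * s1) * math.exp(-((x - m1) ** 2) / (2.0 * s1**2))


def gaussian_joint(m1, s1, s2):
    """Joint PDF of displacement N(m1, s1^2) and velocity N(0, s2^2), uncorrelated."""
    def pdf(x, v):
        e = -((x - m1) ** 2) / (2.0 * s1**2) - v**2 / (2.0 * s2**2)
        return math.exp(e) / (2.0 * math.pi * s1 * s2)
    return pdf


m1, s1, s2 = 0.4, 0.5, 0.5                      # illustrative values only
p = gaussian_joint(m1, s1, s2)
numeric = mcr_rice(p, 1.0, v_max=10.0 * s2)
closed = mcr_gaussian(1.0, m1, s1, s2)
print(numeric, closed)
```

For a non-Gaussian joint PDF such as the OEPC solution, only the numerical integral applies, with `pdf` replaced by the estimated \(p(\psi _1,\psi _2)\).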

The MCR and \(\log _{10}\text {MCR}\) are illustrated in Fig. 3. It demonstrates that the MCR obtained by the OEPC approach almost coincides with that of MCS and significantly surpasses the GCM result. These findings indicate the advantage of the OEPC technique. In addition, the total time of OEPC (45 s) is the sum of the time spent by GCM (1.95s) and the time spent on formulation and optimization procedures (42.75s). The time required for computation of MCS (\(3\times 10^8\)) is 3257 s, while the OEPC approach with \(n=6\) only requires 45 s, which highlights the efficiency of the OEPC approach.

4.2 Example 2

Equation (21) can also be used to express the system in Example 2. However, the correlation coefficient matrix for the three noises differs from that in Example 1. The matrix is given as follows:

The PDF solutions of the OEPC and MCS within \([m-4\sigma ,m+4\sigma ]\) are presented in Fig. 4. Notably, the \(p(\psi _1,\psi _2)\) obtained from the OEPC method remains in excellent agreement with that determined by the MCS.

In Fig. 5, the logarithmic marginal PDF solutions are plotted with MCS, GCM and OEPC (\(n=6\)). The corresponding figures reveal that the PDFs yielded by the OEPC method are almost identical to those of MCS and outperform those by the GCM approach.

The MCR comparisons among OEPC, MCS and GCM are presented in Fig. 6. The OEPC’s MCR aligns favorably with MCS’s, surpassing GCM’s significantly. The total time of OEPC (53 s) is the sum of the time spent by GCM (1.88s) and the time spent on formulation and optimization procedures (50.66s). Remarkably, the OEPC technique completes the calculations in just 53 s, whereas the MCS (\(3\times 10^8\)) requires 3223 s. This indicates that the OEPC method offers a speed advantage of approximately 60 times compared to MCS in this case. These results suggest that the OEPC method has the potential to enhance the efficiency and accuracy for the probabilistic analysis.

4.3 Example 3

The system parameters in Example 3 are the same as those in Eq. (21), except for the correlation coefficient matrix of the noises. The matrix is expressed as follows:

The matrix in Eq. (27) is not positive definite, so its Cholesky decomposition does not exist and the usual MCS procedure for generating correlated noises cannot be applied. Thus, an alternative way to address this issue is required. Since the PSDs of \(\Omega _i(t)\) \((i=1,2,3)\) are the same in this case, it is possible to describe the analyzed oscillator with a single noise term, which is denoted as \(\Omega (t)=\Omega _{i}(t)\). Hence, Eq. (21) can be further expressed as
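The breakdown of the Cholesky factorization in the fully correlated case can be reproduced with a few lines. The sketch below is illustrative only: it assumes that the correlation matrices have unit diagonal with off-diagonal entries 0.5 (Example 2) or 1 (Example 3), and the routine `cholesky` is a hypothetical plain-Python helper, not the code used for the reported MCS.

```python
import math


def cholesky(a):
    """Lower-triangular L with L L^T = a; raises ValueError if a is not positive definite."""
    n = len(a)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                pivot = a[i][i] - s
                if pivot <= 0.0:
                    raise ValueError("matrix is not positive definite")
                L[i][j] = math.sqrt(pivot)
            else:
                L[i][j] = (a[i][j] - s) / L[j][j]
    return L


rho_half = [[1.0, 0.5, 0.5], [0.5, 1.0, 0.5], [0.5, 0.5, 1.0]]
rho_full = [[1.0, 1.0, 1.0], [1.0, 1.0, 1.0], [1.0, 1.0, 1.0]]

L_half = cholesky(rho_half)          # succeeds: the matrix is positive definite
try:
    cholesky(rho_full)               # fails: the matrix is singular (rank one)
    full_ok = True
except ValueError:
    full_ok = False
print(full_ok)
```

With full correlation the three noise samples are copies of a single draw, which is why one noise term suffices in the single-noise form of the equation.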

In real application, such as the towers or bridges that are subjected to wind from different directions, the external and parametric excitations are typically considered from one wind source, resulting in full correlation. Consequently, as demonstrated in several studies [6,7,8,9], the system subjected to a single wind source can be used to analyze the impact of wind turbulence on frame towers, which provides a realistic background for Eq. (28).

The PDF solutions to Eq. (28) are presented in Fig. 7. In this case, the bivariate PDF of OEPC (with \(n=6\)) exhibits a remarkable similarity to the solution from MCS within \([m-4\sigma ,m+4\sigma ]\).

Furthermore, in Fig. 8, the logarithmic marginal PDF solutions of OEPC are almost identical to those of MCS and superior to those of GCM.

The MCRs from MCS, GCM and OEPC (\(n=6\)), are presented in Fig. 9, where it can be observed that the MCR from OEPC is consistent with MCS and surpasses that of GCM. Furthermore, the total time of OEPC (53 s) is the sum of the time spent by GCM (1.20s) and the time spent on formulation and optimization procedures (51.72s). The computational time of MCS is 2929 s, while OEPC only takes 53 s, resulting in a significant time reduction.

4.4 Impact of correlation coefficients

Examples 1, 2 and 3 correspond to the systems under independent excitations, half-correlated excitations, and fully correlated excitations, respectively. Comparing the logarithmic PDF solutions of the three examples in Fig. 10, it can be observed that the length of their left and right arms is different. This indicates that the correlation coefficients have an influence on the tail behavior of the PDF solution, which is similar to the phenomenon described in [71].

The difference in logarithmic PDFs is attributed to the variation in the PDF solutions from Figs. 1, 4 and 7. The contour lines in the bottom right quadrant of Fig. 1 are slightly denser than those in Figs. 4 and 7, which indicates a steeper joint PDF and faster changes of the values in that region. In addition, the peak in Fig. 7 moves slightly to the upper left compared to those in Figs. 4 and 1. Consequently, the density and peak position of the contour lines exhibit slight variations corresponding to the changes in correlation coefficients.

Furthermore, the comparison of Figs. 1, 4 and 7 is performed in Figs. 11, 12 and 13 within the union domain, where \(\Psi \in [-1.56,2.48]\) and \(\dot{\Psi }\in [-2.02,2.02]\).

The PDF solutions in Example 1, 2 and 3 (denoted as \(p_1\), \(p_2\) and \(p_3\), respectively) are compared pairwise in Figs. 11, 12 and 13. Despite the presence of random factors in the comparison conducted by MCS, both MCS and OEPC yield similar magnitudes of solution differences. The shapes of the contour lines of OEPC and MCS are nearly identical, providing additional evidence for the credibility of this comparison. Furthermore, Figs. 11, 12 and 13 directly illustrate the influence of correlation coefficients on the system.

In summary, the correlation between noise sources has an impact on the pattern of PDF solutions. In a 2D PDF image, the variation of the correlation coefficient can change the density and peak position of the contour lines. In the logarithmic marginal PDF plot, changing the correlation coefficient induces variations in the length of the curve’s left and right arms, thereby influencing the tail behavior of PDF solutions.

4.5 Example 4 (system of stronger nonlinearity)

In order to evaluate the accuracy of the solution for a system with higher nonlinearity, the values of \(c_1\) and \(c_2\) from Example 2 are doubled in this example. Consequently, \(c_1\) is set to 0.4 and \(c_2\) to 0.8 for the ensuing analysis. To facilitate a comparison between the results obtained from the OEPC method and the standard EPC method, Table 3 illustrates the values of \(\varvec{\alpha }\) (\(n=6\)) from the two methods. In the subsequent discussion, the standard EPC method, which utilizes the projection solution procedure, is denoted as PEPC.

As listed in Table 3, the values of \(\alpha _i\) obtained from OEPC and PEPC are not identical, indicating that these two methods are not equivalent.

The difference between the two methods can also be demonstrated by substituting the two different \(\varvec{\alpha }\) back into their respective solution procedures to measure the resulting errors. The error measurement function of OEPC is adopted as the OBJ \(\Theta (\varvec{\alpha })\). Meanwhile, the error from the PEPC method is measured by

where \(\Lambda \) denotes the error measurement function of PEPC; \(||*||\) refers to Euclidean norm; \(\varvec{{\mathcal {L}}}\) is a vector, and \({\mathcal {L}}_i\) (\(i=1,2,\ldots ,N\)) denotes the algebraic equation formulated by PEPC, which can be expressed as

where \(i=\frac{j(j+1)}{2}+k\); \(j=1,2,\ldots ,n\); \(k=0,1,\ldots ,j\).

The errors are measured and listed in Table 4. It is observed that both OEPC and PEPC successfully minimize the errors in their respective solution procedures. This observation further confirms the distinction between the two methods.

In Figs. 14, 15 and 16, it can be observed that the solutions of PEPC and OEPC exhibit remarkable similarity to those of MCS, indicating the effectiveness of both methods. The bivariate PDFs are plotted within the range of \(m\pm 5\sigma \), where \(m_1=0.4297\), \(m_2=0\), \(\sigma _1=0.4376\) and \(\sigma _2=0.4376\) are the values provided by GCM.

In Fig. 17, both PEPC and OEPC perform well in the range of \([m-4\sigma ,m+4\sigma ]\). However, the tail of the PEPC solution lifts slightly near \(m_1+5\sigma _1\). The trend of the curve suggests that the OEPC method has a slight advantage over the PEPC method. The sample size of MCS is increased to \(10^9\) in this example to show more of the PDF tails in \([m-5\sigma ,m+5\sigma ]\), resulting in a time consumption of 8882 s. However, even with a sample size of \(10^9\), the tails from MCS still cannot cover the entire range of \([m_2-5\sigma _2,m_2+5\sigma _2]\). In contrast, the PEPC only takes 12 s and the OEPC takes 72 s. Therefore, both OEPC and PEPC exhibit higher efficiency compared to MCS. The total time of OEPC (72 s) is the sum of the time spent by GCM (2.33 s) and the time spent on formulation and optimization procedures (69.91 s).

Regarding the MCR depicted in Fig. 18a, the solutions from PEPC and OEPC align well with MCS. However, in Fig. 18b, for the logarithmic MCR, the tail trend of OEPC demonstrates enhanced alignment with MCS. This suggests that the OEPC method effectively captures the tail behavior in PDF and MCR analysis.

5 Conclusions

The current study presents a new optimization-oriented EPC approach to solve the reduced FPK equation, aiming to explore the probabilistic solutions of the stochastic systems that incorporate both even and odd nonlinear terms in velocity, under the correlated multiplicative excitation on powered velocity and additive excitation being Gaussian white noises. In contrast to the conventional EPC solution procedure, the OEPC method introduces a substitution for the projection solution procedure by implementing an optimization-oriented procedure. This change results in the formulation of the OBJ as the integration of squared residual error. By introducing the weight function, the spatial integration in the OBJ can be calculated analytically, which simplifies the computation and improves the accuracy of the integral procedure. In addition, the initial values of the unknown parameters are also provided to ensure the convergence of the solution in optimization. To comprehensively evaluate the OEPC approach, four examples with different correlated excitations are provided. In the analysis, we investigate the joint PDFs of displacement and velocity, as well as the marginal PDF solutions and MCRs. Our findings suggest that the OEPC method not only provides improved solution accuracy compared to GCM but also demonstrates superior efficiency compared to MCS. Furthermore, the impact of correlation coefficients is discussed by collectively analyzing the first three examples. It can be concluded that the variation of correlation coefficients can impact both the contour lines and tail behavior of the PDF solutions. In Example 4, the OEPC method also exhibits improved PDF and MCR solutions in their remote tails in the case of strong system nonlinearity as compared to the traditional EPC method. Consequently, the OEPC method provides an option for analyzing stochastic nonlinear oscillators under correlated multi-power velocity multiplicative excitation and additive excitation.

Data availability

The datasets analyzed in the study are available on request.

Funding

The results presented in this paper were obtained with the support of the Research Committee of University of Macau (Grant No. MYGR2022-00169-FST).

Ethics declarations

Conflict of interest

The authors declare that there is no conflict of interest.

Cite this article

Bai, GP., Er, GK. & Iu, V.P. Probabilistic analysis of nonlinear oscillators under correlated multi-power velocity multiplicative excitation and additive excitation. Nonlinear Dyn (2024). https://doi.org/10.1007/s11071-024-09823-1

DOI: https://doi.org/10.1007/s11071-024-09823-1