Abstract

Secure data transmission over public channels has a high impact and is increasingly important because of content theft and manipulation. Public and private organizations need efficient schemes to secure their contents. In this article, we develop a digital content encryption scheme based on Arnold scrambling and the Lucas series, which is very simple to implement but almost impossible to breach. We perform encryption on standard images by applying the Lucas series to images scrambled with different numbers of Arnold transform iterations. Numerical simulations are performed to analyze the efficiency and effectiveness of the proposed scheme.


1 Introduction

The digital world has become a global village due to the rapid expansion of the internet, and digital contents can easily be accessed from any part of the digitally advanced world. However, there are many circumstances in daily life in which it is important to hide the actual contents of secret information transmitted over an insecure communication line. Many traditional cryptosystems are used for this task in order to secure confidential information [47]. Digital contents such as documents, images, audio and video files are transmitted over insecure communication media. This convenience has certainly added comfort to our daily lives, but it also creates a massive threat to our secret information. The trust of citizens depends heavily on their secret information being hidden from eavesdroppers. The confidentiality of information can be achieved through encryption. Modern block ciphers use the idea of the substitution-permutation network (SP-network), which is one of the fundamental aspects of designing a strong encryption algorithm. The SP-network fundamentally relies on the notions of confusion and diffusion: confusion can be achieved through substitution, whereas diffusion can be achieved through permutation [9, 14, 15, 33, 38, 41, 47, 55, 56].

The terms diffusion and confusion were introduced by Claude Shannon to capture the two fundamental building blocks of any cryptographic system. Shannon's concern was to prevent cryptanalysis based on statistical analysis. Every block cipher transforms a block of plaintext into a block of ciphertext, where the transformation depends on the key. Diffusion seeks to make the statistical relationship between the plaintext and the ciphertext as complex as possible in order to thwart attempts to deduce the key. Confusion seeks to make the relationship between the statistics of the ciphertext and the value of the encryption key as complex as possible, again to thwart attempts to discover the key.

Diffusion and confusion capture the essence of the desired properties of a block cipher so well that they have become the cornerstone of modern block cipher design, and an encryption method is structured to use both principles. Confusion means that the process drastically changes data from the input to the output, for example, by translating the data through a nonlinear table derived from the key. There are many ways to invert linear computations, so the more nonlinear the process is, the more analysis tools it defeats. Diffusion means that changing a single character of the input changes many characters of the output [3,4,5,6, 26, 29, 42, 43, 57, 60, 61].

To achieve good confusion, substitution boxes (S-boxes) have been used in the literature [26, 29]. Constructing S-boxes with optimal characteristics is one of the most interesting problems in cryptography. Different mathematical structures, for instance Galois fields, Galois rings, chaos theory and optimization techniques, have been used to construct the confusion component of modern block ciphers [23,24,25, 28, 58, 59]. Diffusion can also be achieved through various chaotic systems.

A good diffusion process spreads any patterns in the input widely through the output, and if several patterns make it through, they scramble one another. This makes patterns much harder to spot and vastly increases the amount of data that must be analyzed to break the cipher. Recent research on chaos, especially on systems with complex (chaotic) behavior, has shown potential applications in various fields including healthcare, medicine and defence. Special characteristics such as sensitivity to initial conditions, randomness, probability and ergodicity make chaotic maps potential candidates for addressing security issues. Chaotic maps and cryptographic algorithms share some properties: both are sensitive to tiny changes in initial conditions and parameters; both have random-like behavior; and cryptographic algorithms shuffle and diffuse data through rounds of encryption, while chaotic maps spread a small region of data over the entire phase space through iterations. The main difference is that encryption operations are defined on finite sets of integers, while chaos is defined on the real numbers. Integrating chaotic systems into a block cipher exploits these chaotic properties to scramble and diffuse data rapidly. The two general principles that guide the design of block ciphers, diffusion and confusion, are closely related to the mixing and ergodicity properties of chaotic maps [34, 51,52,53,54]. Chaotic systems can be classified into two broad branches, namely discrete dynamical systems, which use iterative maps, and continuous chaotic systems, which use differential equations. In the literature, researchers have used one-, two- and three-dimensional chaotic iterative maps and their combinations to add confusion and diffusion capabilities to modern block ciphers.
The development of new chaotic systems for modern block ciphers is therefore an unavoidable area of research [17,18,19,20,21,22,23,24,25,26,27,28,29, 32, 35, 36, 38,39,40,41,42,43,44,45,46,47,48,49].

In this regard, the Russian mathematician Vladimir Igorevich Arnold proposed a two-dimensional map based on a continuous automorphism of the torus (CAT) in the 1960s. A completely chaotic system can be constructed using the Arnold cat transformation. Randomization of a digital image can be achieved by repeatedly applying the Arnold cat map, which randomizes the order of the pixels. Iterating the CAT map a sufficient number of times restores the original image, which reveals its periodic nature and the occurrence of fixed points [8]. The periodicity of the CAT transformation attracted researchers' interest. Keating discussed the periodicity and approximate solution of the Arnold transformation using number theory and a probabilistic approach at infinity [17]. Dyson and Falk solved the period problem of the Arnold map and determined an upper bound on its period [13]. Several image encryption schemes based on the Arnold map were subsequently developed. W. Chen et al. proposed an encryption scheme based on an interference technique and the Arnold transform [12]. Zhengjun Liu et al. combined the Arnold transform with discrete cosine transforms to propose a technique for color image encryption [36]. Ali Soleymani et al. devised an encryption scheme by combining the Arnold transform with the Hénon chaotic map [44]. Elshamy et al. proposed an optical image cryptosystem based on double random phase encoding and Arnold's cat map [16]. Recently, Majid Khan used Fourier series to encrypt an image [22]. Different types of series have been developed and used in diverse image encryption techniques; among them, the Lucas and Fibonacci series are the most popular for image encryption. Minati M. et al. proposed an encryption scheme based on the Lucas and Fibonacci series with quite good security features [37].

An efficient image encryption scheme based on the Arnold transform and the Lucas series is proposed here, which resists statistical analyses as well as known- and chosen-plaintext attacks. In this article, we scramble different images from the database [50] with varying numbers of iterations and perform encryption on the scrambled images using the Lucas series. The number of iterations is selected by a key shared between the sender and receiver via the Diffie-Hellman algorithm. Section 2 presents preliminaries on the Diffie-Hellman algorithm, the Arnold transform and the Lucas series. The designed algorithm and its implementation are presented in section 3. Performance and security analyses are discussed in detail in section 4, and concluding remarks are given in section 5.

2 Preliminaries

This section presents the basic concepts of the Diffie-Hellman algorithm, the Arnold transform and the Lucas series, which are used in later sections of this article. In section 2.2, we analyze images scrambled with different numbers of iterations determined by the Diffie-Hellman algorithm.

2.1 Exchange of keys

The Diffie-Hellman algorithm allows two parties to exchange secrets securely over a public channel, i.e., to establish a common secret over an insecure communication line. Here, the common key determines the number of iterations in the scrambling process and the starting position of the Lucas series used for encryption. The idea behind the secret exchange is illustrated with colors in Fig. 1 [49].

Let the two parties, Alice and Bob, agree on public numbers n and g, where n is a prime modulus and g is a base (both primes in the example below); they produce a common key from their own secrets as follows:

| Alice | Bob |
|---|---|
| Secret = a | Secret = b |
| \( A = g^a \bmod n \) | \( B = g^b \bmod n \) |
| Common key \( K_1 = B^a \bmod n \) | Common key \( K_2 = A^b \bmod n \) |
| \( K_1 = K = K_2 \) | |

For example, suppose both parties agree on n = 11 and g = 5; they may vary their secrets for each communication.

a. When a = 4 and b = 5, their shared secret key is K = 1.

b. When a = 2 and b = 3, their shared secret key is K = 5.

c. When a = 4 and b = 6, their shared secret key is K = 9.
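The three cases above can be reproduced with a few lines of code. The sketch below is a minimal illustration that relies on Python's built-in three-argument `pow` for modular exponentiation; the function names `public_value`, `shared_key` and `exchange` are hypothetical and not part of the original scheme.

```python
def public_value(g: int, secret: int, n: int) -> int:
    """Value a party publishes over the open channel: g^secret mod n."""
    return pow(g, secret, n)


def shared_key(other_public: int, secret: int, n: int) -> int:
    """Key derived from the other party's public value: other^secret mod n."""
    return pow(other_public, secret, n)


def exchange(a: int, b: int, n: int = 11, g: int = 5) -> int:
    """Run one exchange between Alice (secret a) and Bob (secret b)."""
    big_a = public_value(g, a, n)   # Alice sends A to Bob
    big_b = public_value(g, b, n)   # Bob sends B to Alice
    k1 = shared_key(big_b, a, n)    # Alice computes K1 = B^a mod n
    k2 = shared_key(big_a, b, n)    # Bob computes K2 = A^b mod n
    if k1 != k2:
        raise ValueError("parties derived different keys")
    return k1


for a, b in [(4, 5), (2, 3), (4, 6)]:
    print(f"a = {a}, b = {b} -> K = {exchange(a, b)}")
```

The loop prints K = 1, 5 and 9, matching cases a to c. Note that with g = 5 and n = 11 the powers of g cycle through only 1, 3, 4, 5 and 9, so this toy setting admits just five distinct keys; practical deployments use a much larger prime modulus.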

2.2 Arnold transform

The cat-face scrambling process transforms the content into something resembling white noise, distributing the energy of the image uniformly. If the image is regarded as an information source, scrambling increases the bandwidth of the channel, which provides enough space for data embedding [18]. Among scrambling techniques, the cat face transform, introduced by Arnold in his research on ergodic theory, is used extensively. This process rearranges the pixel positions of a digital image by clipping and splicing [7]. The two-dimensional cat face transform of (x, y) is expressed as:

where x and y denote the pixel position in an image, x' and y' denote the new pixel position after the transform, and N is the size of the image. We perform the scrambling analysis for T iterations, where T is provided by the Diffie-Hellman process; the resulting images T[x', y'] for T = 1, 5 and 9 are shown in Fig. 2. The scrambled images of Fig. 2 are then processed with the Lucas series, as described in section 3.1, to enhance the security of the contents. To raise the level of security further, the number of cat face iterations can be increased so that the image pixels are scrambled more efficiently and uniformly. Encryption is performed after 9 iterations of scrambling to obtain the results given in the sections below.
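A minimal sketch of the scrambling step follows. It assumes the standard form of Arnold's cat map, \( x' = (x + y) \bmod N \), \( y' = (x + 2y) \bmod N \), i.e. the matrix [[1, 1], [1, 2]]; parameterized variants of the map also exist. Images are plain lists of rows, and the helper names are hypothetical. The `period` helper illustrates the periodicity mentioned in the introduction: it finds the smallest T for which the matrix power equals the identity modulo N, so that T iterations restore the original image.

```python
from typing import List

Image = List[List[int]]  # square N x N image, indexed img[x][y]


def arnold(img: Image, iterations: int) -> Image:
    """Apply (x, y) -> ((x + y) mod N, (x + 2y) mod N) `iterations` times."""
    n = len(img)
    out = [row[:] for row in img]
    for _ in range(iterations):
        nxt = [[0] * n for _ in range(n)]
        for x in range(n):
            for y in range(n):
                nxt[(x + y) % n][(x + 2 * y) % n] = out[x][y]
        out = nxt
    return out


def arnold_inverse(img: Image, iterations: int) -> Image:
    """Undo the map using the inverse matrix [[2, -1], [-1, 1]] (determinant 1)."""
    n = len(img)
    out = [row[:] for row in img]
    for _ in range(iterations):
        nxt = [[0] * n for _ in range(n)]
        for x in range(n):
            for y in range(n):
                nxt[(2 * x - y) % n][(y - x) % n] = out[x][y]
        out = nxt
    return out


def period(n: int) -> int:
    """Smallest T > 0 such that [[1, 1], [1, 2]]^T is the identity modulo n."""
    a, b, c, d = 1 % n, 1 % n, 1 % n, 2 % n  # current power M^t, starting at t = 1
    t = 1
    while (a, b, c, d) != (1 % n, 0, 0, 1 % n):
        # Multiply the current power by M = [[1, 1], [1, 2]] on the right.
        a, b, c, d = (a + b) % n, (a + 2 * b) % n, (c + d) % n, (c + 2 * d) % n
        t += 1
    return t
```

Because the map is periodic, T and T + period(N) iterations produce the same scrambled image, so the number of distinct scramblings available for a given image size is bounded by the period.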

2.3 Lucas sequence

In the nineteenth century, the French mathematician E. Lucas introduced this sequence in his research on recurrent sequences. It is similar to the Fibonacci sequence, which arises from Fibonacci's rabbit problem [31] presented in Fig. 3, except for the initial conditions. The Fibonacci sequence is defined as:

while the Lucas sequence is defined as:

The first two Lucas numbers are 1 and 3, and each succeeding number is the sum of the previous two, so the sequence becomes 1, 3, 4, 7, 11, 18, 29, 47, …. Lucas numbers can also be generated from the Fibonacci sequence as follows:
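Both definitions can be checked with a short sketch. It assumes the indexing used above (first Lucas term 1, second term 3) and the standard identity \( L_n = F_{n-1} + F_{n+1} \) with \( F_0 = 0 \), \( F_1 = 1 \); this identity is a well-known property of the two sequences and is shown as one way of generating Lucas numbers from Fibonacci numbers. The function names are hypothetical.

```python
from typing import List


def fibonacci(count: int) -> List[int]:
    """F_0, F_1, ..., F_{count-1} with F_0 = 0 and F_1 = 1."""
    seq = [0, 1]
    while len(seq) < count:
        seq.append(seq[-1] + seq[-2])
    return seq[:count]


def lucas(count: int) -> List[int]:
    """First `count` Lucas numbers in the indexing above: 1, 3, 4, 7, 11, ..."""
    seq = [1, 3]
    while len(seq) < count:
        seq.append(seq[-1] + seq[-2])
    return seq[:count]


def lucas_from_fibonacci(count: int) -> List[int]:
    """The same numbers via L_n = F_{n-1} + F_{n+1} for n = 1, ..., count."""
    f = fibonacci(count + 2)
    return [f[n - 1] + f[n + 1] for n in range(1, count + 1)]


print(lucas(8))  # [1, 3, 4, 7, 11, 18, 29, 47]
```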

3 Proposed algorithm

We scrambled the Lena and Pepper images of size 512 × 512 in section 2.2 and explained the Lucas series used for encryption in section 2.3 (Fig. 4).

The most important factor in this algorithm is the key. Because the key is established over a public channel, the sender and receiver use the Diffie-Hellman algorithm to agree on it secretly. This key determines the number of iterations of the Arnold transform and the starting position in the Lucas series. The steps to encrypt a digital image are as follows:

1. Distribute the key between the two parties using the Diffie-Hellman algorithm.

2. Apply the Arnold transform, with the number of iterations given by the key, to scramble the image.

3. Select the starting point in the Lucas series using the key, and take as many terms as the image size and dimensions require.

4. Perform an XOR operation between the scrambled image and the selected Lucas terms to obtain the encrypted result.
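The four steps can be sketched end to end as follows. This is a hedged, single-channel illustration rather than the authors' implementation: it assumes the standard cat-map matrix [[1, 1], [1, 2]], a keystream made of consecutive Lucas numbers (1, 3, 4, 7, ...) reduced modulo 256 and starting at the K-th term, and a row-major layout of the keystream over the image. For a color image, the same routine would be applied to each of the red, green and blue layers. All function names are hypothetical.

```python
from typing import List

Image = List[List[int]]  # square N x N grid of 8-bit values, indexed img[x][y]


def arnold(img: Image, iterations: int, inverse: bool = False) -> Image:
    """Cat map (x, y) -> (x + y, x + 2y) mod N, or its inverse (2x - y, y - x) mod N."""
    n = len(img)
    out = [row[:] for row in img]
    for _ in range(iterations):
        nxt = [[0] * n for _ in range(n)]
        for x in range(n):
            for y in range(n):
                if inverse:
                    nxt[(2 * x - y) % n][(y - x) % n] = out[x][y]
                else:
                    nxt[(x + y) % n][(x + 2 * y) % n] = out[x][y]
        out = nxt
    return out


def lucas_keystream(start: int, length: int) -> List[int]:
    """Lucas numbers 1, 3, 4, 7, ... mod 256, beginning at the start-th term (1-based)."""
    a, b = 1, 3  # terms 1 and 2
    for _ in range(start - 1):
        a, b = b, (a + b) % 256
    stream = []
    for _ in range(length):
        stream.append(a)
        a, b = b, (a + b) % 256
    return stream


def xor_layer(img: Image, stream: List[int]) -> Image:
    """XOR each pixel with the keystream laid out row by row."""
    n = len(img)
    return [[img[x][y] ^ stream[x * n + y] for y in range(n)] for x in range(n)]


def encrypt(img: Image, key: int) -> Image:
    """Steps 2-4: scramble with `key` iterations, then XOR with the key-selected stream."""
    n = len(img)
    return xor_layer(arnold(img, key), lucas_keystream(key, n * n))


def decrypt(cipher: Image, key: int) -> Image:
    """XOR is its own inverse, so undo step 4 first and then the scrambling."""
    n = len(cipher)
    return arnold(xor_layer(cipher, lucas_keystream(key, n * n)), key, inverse=True)
```

With K = 9 the keystream begins 76, 123, 199, 66, 9, 75, ..., i.e. it starts at the Lucas number 76 (the ninth term of 1, 3, 4, 7, ...), and later terms wrap modulo 256 (for example, 322 mod 256 = 66).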

3.1 Experimentation with the proposed scheme

Here we take the iteration key K = 9 discussed in section 2.1 and process the images of Fig. 2 scrambled with T = 9 iterations, using the Lucas sequence reduced modulo 256, starting from the term 76 and extending over 512 × 512 positions. The operation is performed layer-wise on the images to evaluate the results of the algorithm.

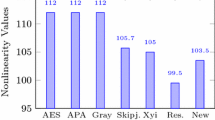

4 Performance analyses of the proposed scheme

In this section, standard performance analyses are carried out on standard digital contents to assess the performance and security of the proposed scheme. These assessments include sensitivity analysis, statistical and randomness analyses, and vulnerability tests on the encrypted data. The subsections of section 4 discuss these analyses in detail to examine the sensitivity of the proposed algorithm.

4.1 Randomness analyses

To verify the requirements of a long period, uniform distribution, high complexity and efficiency of the proposed cryptosystem, we apply the NIST SP 800-22 test suite to assess the randomness of the digital contents. The encrypted Lena image is used for the NIST tests, and the results are presented in Table 1. The outcomes show that the proposed encryption scheme passes all the NIST tests, indicating that the ciphertexts it produces are random.
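As an illustration of what one of these tests computes, the sketch below implements only the frequency (monobit) test from NIST SP 800-22, in which a sequence passes at the default significance level when \( p \geq 0.01 \). The full suite contains many more tests, and this is not the tool used to produce Table 1; the helper names are hypothetical, and the pixel-to-bit expansion (most significant bit first) is an assumption.

```python
import math
from typing import Iterable, List


def bits_from_bytes(data: Iterable[int]) -> List[int]:
    """Expand 8-bit pixel values into a bit sequence, most significant bit first."""
    return [(byte >> shift) & 1 for byte in data for shift in range(7, -1, -1)]


def monobit_p_value(bits: List[int]) -> float:
    """Frequency (monobit) test: p = erfc(|S_n| / sqrt(2n))."""
    n = len(bits)
    s_n = sum(2 * b - 1 for b in bits)  # +1 for each one, -1 for each zero
    s_obs = abs(s_n) / math.sqrt(n)
    return math.erfc(s_obs / math.sqrt(2))
```

For the short example sequence 1011010101 given in SP 800-22, this returns p ≈ 0.527089; for encrypted images the bit sequence would be `bits_from_bytes` applied to all pixel values.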

4.2 Histogram uniformity analyses

The uniformity of the histograms of encrypted images is a measure of the security of an encryption framework [22]. We computed the histograms of 256-gray-level original, scrambled and encrypted images of size 512 × 512 with different contents. The histograms of the plain and scrambled images contain sharp rises followed by sharp declines, whereas the histograms of the encrypted images are uniform and differ considerably from those of the original and scrambled images, as shown in Figs. 5 and 6; this makes statistical attacks difficult [25]. Consequently, the encrypted images do not provide any information that could be exploited in a statistical attack (see Figs. 7 and 8).

Plain, scrambled and encoded layer wise histograms of Lena image. (a-d) Plain Lena image and its corresponding red, green and blue layer histograms, (e-h) Scrambled Lena image and its corresponding red, green and blue layer histograms, (i-l) Encrypted Lena image and its corresponding red, green and blue layer histograms

Plain, scrambled and encoded layer wise histograms of Pepper image. (a-d) Plain Pepper image and its corresponding red, green and blue layer histograms, (e-h) Scrambled Pepper image and its corresponding red, green and blue layer histograms, (i-l) Encrypted Pepper image and its corresponding red, green and blue layer histograms
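Histogram uniformity is assessed visually in this section; a common quantitative companion, not stated in this article, is the chi-square statistic of the histogram against a flat one. The sketch below computes both for a single layer given as a flat list of pixel values; the helper names are hypothetical. With 256 gray levels there are 255 degrees of freedom, for which the commonly quoted critical value at the 0.05 significance level is about 293.25.

```python
from typing import List


def histogram(pixels: List[int], levels: int = 256) -> List[int]:
    """Count how often each gray level occurs."""
    counts = [0] * levels
    for p in pixels:
        counts[p] += 1
    return counts


def chi_square(pixels: List[int], levels: int = 256) -> float:
    """Chi-square statistic of the histogram against a perfectly flat histogram."""
    expected = len(pixels) / levels
    return sum((c - expected) ** 2 / expected for c in histogram(pixels, levels))
```

A perfectly flat histogram gives 0, while a constant image gives a very large value; smaller values indicate a more uniform histogram.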

4.3 Entropy analyses

Entropy is the leading measure of randomness. Given an independent source of random trials drawn from a set of possible distinct events {x1, x2, x3, ..., xn}, each with an associated probability, the average information output by the source is called its entropy (Figs. 9, 10, 11 and 12).

where xn denotes the symbols of the source image and \( 2^N \) is the total number of symbols. The Shannon entropy of perfectly random 8-bit digital content is 8. Table 2 shows the entropies of different standard plain images and of their scrambled and encrypted versions.

The entropy values of the encrypted images are very close to the ideal Shannon value, which implies that information leakage in the proposed encryption algorithm is negligible and that the mechanism is secure against entropy attacks [59]. The information entropies of the encrypted images under the proposed scheme are superior to those of existing approaches. Table 3 compares the proposed technique with existing approaches for the Lena image, while Table 13 gives the comparison for different standard images.
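A minimal sketch of the entropy computation, assuming the usual definition \( H = -\sum_i p(x_i) \log_2 p(x_i) \) with probabilities estimated from the observed gray-level frequencies of one layer; the function name is hypothetical. The maximum of 8 bits is reached only when all 256 gray levels occur equally often.

```python
import math
from collections import Counter
from typing import Iterable


def shannon_entropy(pixels: Iterable[int]) -> float:
    """H = -sum p(x_i) log2 p(x_i) over the gray levels that actually occur."""
    counts = Counter(pixels)
    total = sum(counts.values())
    return -sum((c / total) * math.log2(c / total) for c in counts.values())
```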

4.4 Pixels’ correlation analyses

Adjacent pixels of an image are highly correlated in the horizontal, vertical and diagonal directions. Encryption must break this correlation to improve resistance to statistical analysis. To test the correlation between neighboring pixels in the plain, scrambled and encrypted images, 10,000 pairs of adjacent pixels are first selected from each digital content [23].

where x and y are the gray-scale values of two adjacent pixels, \( \sigma_{x,y} \) is their covariance, and \( \sigma_x^2 \) and \( \sigma_y^2 \) are the variances of the random variables x and y.

The correlation coefficients of the plain, scrambled and encrypted images with different contents are reported in Tables 4 and 5. The relationship between pairs of original, scrambled and encrypted images is evaluated by computing the two-dimensional correlation coefficients between the original and scrambled images and between the original and encrypted images [24, 58]. The following formula is used to compute these correlation coefficients.

where P and C denote the plain and cipher images, \( \overline{P} \) and \( \overline{C} \) are the mean values of P and C, and M and N are the height and width of the original and cipher images. The estimated correlation coefficients of the plain, scrambled and encrypted images in the horizontal, vertical and diagonal directions are given in Tables 4 and 5.
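Both correlation measures can be sketched as follows. The sketch assumes that the adjacent pixel pairs are sampled at random positions (the text states only that 10,000 pairs are selected) and that the two-dimensional coefficient is the Pearson coefficient taken over all pixels of P and C, which is algebraically the same as the double-sum form with the means \( \overline{P} \) and \( \overline{C} \). Function names are hypothetical, and a constant image (zero variance) would cause a division by zero.

```python
import math
import random
from typing import List, Sequence

Image = List[List[int]]


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """r = cov(x, y) / sqrt(var(x) * var(y))."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / n
    vx = sum((x - mx) ** 2 for x in xs) / n
    vy = sum((y - my) ** 2 for y in ys) / n
    return cov / math.sqrt(vx * vy)


def adjacent_correlation(img: Image, direction: str, pairs: int = 10_000, seed: int = 0) -> float:
    """Correlation of randomly sampled adjacent pixel pairs in one direction."""
    di, dj = {"horizontal": (0, 1), "vertical": (1, 0), "diagonal": (1, 1)}[direction]
    rows, cols = len(img), len(img[0])
    rng = random.Random(seed)
    xs, ys = [], []
    for _ in range(pairs):
        i, j = rng.randrange(rows - di), rng.randrange(cols - dj)
        xs.append(img[i][j])
        ys.append(img[i + di][j + dj])
    return pearson(xs, ys)


def correlation_2d(p: Image, c: Image) -> float:
    """Two-dimensional correlation coefficient between plain image P and cipher image C."""
    return pearson([v for row in p for v in row], [v for row in c for v in row])
```

For a well-encrypted image, all three directional coefficients and the plain-cipher coefficient should be close to zero.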

The quantitative analysis of the correlation coefficients in each direction is given in Table 5 for the plain, scrambled and encrypted RGB images.

The plain and encrypted contents are substantially different from each other, and the correlation coefficients of the encrypted images are very close to zero. A comparison of the correlation coefficients of the proposed scheme with existing approaches on standard images is given in Tables 6 and 7.

The proposed scheme yields lower correlation coefficients, which meets the requirements of an effective technique for real-time encryption applications.

4.5 Pixels’ resemblance analyses

Pixel resemblance reveals the similarity between different digital contents, and a variety of similarity measures exist to approximate the structural detail of digital contents. We analyze the structural similarity index metric (SSIM), normalized cross-correlation (NCC) and structural content (SC) between the plain (Pi, j) and cipher (Ci, j) images to evaluate the structural similarity of each image to its reference. SSIM compares the luminance, contrast and structure of the plain and encrypted contents. Consider two images Pi, j and Ci, j with mean values μp and μc and standard deviations σp and σc. If the plain and encrypted contents resemble each other, SSIM is close to 1, and it approaches 0 when the contents are dissimilar (Table 8).

NCC measures the structural similarity between two images; a value close to unity indicates strong structural similarity. We perform this experiment on the original-scrambled and original-encrypted image pairs to analyze the similarity between them. NCC is determined as follows:

SC assesses the structural detail of an image and its quality in terms of noise level and sharpness. For structurally similar digital contents, SC is close to unity, whereas values far from unity indicate dissimilarity. A higher SC value indicates lower picture quality. SC can be determined as:
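The three similarity measures can be sketched as below, assuming the forms commonly used in the image encryption literature: \( \mathrm{NCC} = \sum P\,C / \sum P^2 \), \( \mathrm{SC} = \sum P^2 / \sum C^2 \), and SSIM with the usual constants \( C_1 = (0.01 L)^2 \), \( C_2 = (0.03 L)^2 \). The SSIM here is a simplified single-window (global) version; the standard SSIM averages the same expression over local windows, so values will differ somewhat. Function names are hypothetical.

```python
from typing import List

Image = List[List[int]]


def _flat(img: Image) -> List[float]:
    return [float(v) for row in img for v in row]


def ncc(p: Image, c: Image) -> float:
    """NCC = sum(P * C) / sum(P^2)."""
    fp, fc = _flat(p), _flat(c)
    return sum(a * b for a, b in zip(fp, fc)) / sum(a * a for a in fp)


def structural_content(p: Image, c: Image) -> float:
    """SC = sum(P^2) / sum(C^2)."""
    fp, fc = _flat(p), _flat(c)
    return sum(a * a for a in fp) / sum(b * b for b in fc)


def global_ssim(p: Image, c: Image, peak: float = 255.0) -> float:
    """Single-window SSIM computed from global means, variances and covariance."""
    fp, fc = _flat(p), _flat(c)
    n = len(fp)
    mp, mc = sum(fp) / n, sum(fc) / n
    vp = sum((a - mp) ** 2 for a in fp) / n
    vc = sum((b - mc) ** 2 for b in fc) / n
    cov = sum((a - mp) * (b - mc) for a, b in zip(fp, fc)) / n
    c1, c2 = (0.01 * peak) ** 2, (0.03 * peak) ** 2
    return ((2 * mp * mc + c1) * (2 * cov + c2)) / ((mp ** 2 + mc ** 2 + c1) * (vp + vc + c2))
```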

4.6 Pixels’ disparity analyses

Here the quality of the digital contents, in terms of pixel disparity, is analyzed by evaluating the mean absolute error (MAE), mean square error (MSE) and peak signal-to-noise ratio (PSNR).

MAE is one of the most widely used measures of accuracy for continuous variables. It gives the average absolute difference between the plain and scrambled images and between the plain and encrypted images, and its value should be high to improve the security level. It can be calculated as follows:

Scrambled and encrypted digital contents differ from the plain image. Encryption quality can be assessed by MSE and PSNR: MSE measures the cumulative squared error, and PSNR measures the peak error between the original and encrypted images. For better encryption quality, the MSE value should be high and the PSNR value low, since the two are inversely related. To calculate PSNR, the MSE is first computed using the following expression:

where Pij and Cij denote the pixel values at the ith row and jth column of the plain and cipher images, respectively. A higher MSE value indicates a better encryption strategy [30]. PSNR measures the quality of the ciphered image relative to the original and is given by the following expression.

where IMAX is the maximum pixel value of the image (255 for 8-bit images). Because of the large difference between the plain and encrypted images, PSNR should be low. The MSE and PSNR values of the proposed approach for standard digital images are collected in Table 9.
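A minimal sketch of the three disparity measures, assuming the standard definitions \( \mathrm{MAE} = \frac{1}{MN}\sum_{i,j} |P_{ij} - C_{ij}| \), \( \mathrm{MSE} = \frac{1}{MN}\sum_{i,j} (P_{ij} - C_{ij})^2 \) and \( \mathrm{PSNR} = 10 \log_{10}(I_{MAX}^2 / \mathrm{MSE}) \). Function names are hypothetical; identical images give an infinite PSNR.

```python
import math
from typing import List

Image = List[List[int]]


def mae(p: Image, c: Image) -> float:
    """Mean absolute error between two equally sized images."""
    diffs = [abs(a - b) for rp, rc in zip(p, c) for a, b in zip(rp, rc)]
    return sum(diffs) / len(diffs)


def mse(p: Image, c: Image) -> float:
    """Mean squared error between two equally sized images."""
    diffs = [(a - b) ** 2 for rp, rc in zip(p, c) for a, b in zip(rp, rc)]
    return sum(diffs) / len(diffs)


def psnr(p: Image, c: Image, i_max: int = 255) -> float:
    """Peak signal-to-noise ratio in dB; infinite when the images are identical."""
    error = mse(p, c)
    return math.inf if error == 0 else 10 * math.log10(i_max ** 2 / error)
```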

4.7 Texture and visual strength analyses

To analyze the texture and visual quality of the proposed scheme, three gray-level co-occurrence matrix (GLCM) measures, namely homogeneity, contrast and energy, are computed [20]. The homogeneity of an image is defined as:

where i and j index the rows and columns of the GLCM. Homogeneity measures the closeness of the distribution of the GLCM elements to the GLCM diagonal, and its range is between 0 and 1.

Contrast analysis allows an observer to distinguish objects within the texture of an image and is defined as:

The contrast ranges from 0 to \( (L-1)^2 \), where L is the number of gray levels (the size of the GLCM). A larger contrast value indicates more variation between the pixels of an image, whereas a constant image has a contrast of 0.

The energy of an image is the sum of the squared elements of the GLCM and is defined as:

The energy ranges from 0 to 1, and a constant image has an energy of 1. The GLCM analyses of the scrambled and encrypted images are given in Table 10.
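The three GLCM measures can be sketched as follows, using the same formulas as MATLAB's `graycoprops`: contrast \( \sum_{i,j} |i-j|^2 p(i,j) \), energy \( \sum_{i,j} p(i,j)^2 \) and homogeneity \( \sum_{i,j} p(i,j) / (1 + |i-j|) \). The sketch assumes a single non-negative pixel offset (default: the right-hand neighbor), a non-symmetric matrix and no gray-level quantization; the settings behind Table 10 may differ, and the function names are hypothetical.

```python
from typing import Dict, List, Tuple

Image = List[List[int]]
Matrix = List[List[float]]


def glcm(img: Image, levels: int = 256, offset: Tuple[int, int] = (0, 1)) -> Matrix:
    """Normalized co-occurrence matrix p(i, j) for a non-negative (row, col) offset."""
    di, dj = offset
    counts = [[0] * levels for _ in range(levels)]
    total = 0
    for r in range(len(img) - di):
        for c in range(len(img[0]) - dj):
            counts[img[r][c]][img[r + di][c + dj]] += 1
            total += 1
    return [[v / total for v in row] for row in counts]


def glcm_features(p: Matrix) -> Dict[str, float]:
    """Contrast, energy and homogeneity of a normalized GLCM."""
    size = len(p)
    cells = [(i, j, p[i][j]) for i in range(size) for j in range(size)]
    return {
        "contrast": sum(v * (i - j) ** 2 for i, j, v in cells),
        "energy": sum(v * v for _, _, v in cells),
        "homogeneity": sum(v / (1 + abs(i - j)) for i, j, v in cells),
    }
```

A constant image gives contrast 0 and energy and homogeneity 1, the extreme values quoted above.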

4.8 Differential attacks analyses

To test the robustness of the proposed scheme against differential attacks, one pixel of the plain image is modified and the resulting encrypted image is compared with the original one; ideally, each cipher bit should change with a probability of one half. A change in the ith block of the permuted image directly affects the ith block of the ciphered image. We examine the effect that modifying a single pixel of the plain image has on the entire encrypted image. To measure the impact of a minor change in the plain image on its encrypted version, the number of pixels change rate (NPCR) and the unified average changing intensity (UACI) are computed [30]. The NPCR and UACI of two encrypted images C1(i, j) and C2(i, j), whose plain images differ by only one pixel, are evaluated using the following expressions.

where \( D(i,j) = \begin{cases} 0, & C_1(i,j) = C_2(i,j) \\ 1, & C_1(i,j) \neq C_2(i,j) \end{cases} \)

To evaluate plaintext sensitivity, we encrypt the plain image, then randomly choose and alter one pixel of the plain image and encrypt it again. A higher UACI value indicates better encryption; Tables 11, 12 and 13 give the experimental results for the scrambled and encrypted images and a comparison with recent techniques.
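A minimal sketch of both measures, assuming the usual definitions \( \mathrm{NPCR} = \frac{100}{MN}\sum_{i,j} D(i,j) \) and \( \mathrm{UACI} = \frac{100}{MN}\sum_{i,j} \frac{|C_1(i,j) - C_2(i,j)|}{255} \) for 8-bit images; the function name is hypothetical. For two independent, uniformly random 8-bit images the expected values are about 99.61% for NPCR, i.e. \( (1 - 1/256) \times 100 \), and about 33.46% for UACI, which are the reference values commonly used when interpreting such results.

```python
from typing import List, Tuple

Image = List[List[int]]


def npcr_uaci(c1: Image, c2: Image, max_value: int = 255) -> Tuple[float, float]:
    """Return (NPCR, UACI) in percent for two equally sized cipher images."""
    pairs = [(a, b) for r1, r2 in zip(c1, c2) for a, b in zip(r1, r2)]
    total = len(pairs)
    changed = sum(1 for a, b in pairs if a != b)  # sum of D(i, j)
    intensity = sum(abs(a - b) for a, b in pairs) / max_value
    return 100.0 * changed / total, 100.0 * intensity / total
```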

A comparison of the entropy and differential analyses of the proposed technique with contemporary approaches on standard images is presented in Table 14. The proposed scheme achieves superior entropy and differential results, which makes it suitable for real-time image encryption applications.

Table 12 shows that the NPCR values of the proposed scheme are close to the ideal value of 1, and Table 13 shows that its UACI values are also better than those of existing approaches. These results show that the proposed scheme is highly sensitive to minor changes in the plain image, even when the two plain images differ by a single bit [30]. Compared with alternative approaches, the proposed scheme has a superior ability to resist differential attacks (see Table 14).

4.9 Time complexity analyses

Algorithms are usually analyzed in terms of time and space complexity. Time complexity describes how the running time of an algorithm grows as a function of the input length, while space complexity describes the memory it uses. Actual running time also depends on many other factors, such as the hardware and the operating system. Here we are interested only in the execution time of the proposed scheme, so these factors were not considered in the analysis. The execution times of the proposed scheme for standard images, and a comparison with existing approaches, are collected in Table 15.

The proposed scheme uses minimal resources and has low computational complexity and cost, which supports its efficiency compared with existing methods.
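Execution times of the kind reported in Table 15 can be measured with a small harness such as the one below, which uses Python's standard `timeit` module. It is a sketch only: the function name `best_time` is hypothetical, and taking the minimum over several single runs is a common way to reduce noise from other processes, not necessarily how the reported times were obtained. As noted above, absolute numbers still depend on hardware and operating system.

```python
import timeit
from typing import Callable


def best_time(fn: Callable[[], object], repeats: int = 5) -> float:
    """Best wall-clock time in seconds over `repeats` single runs of fn()."""
    return min(timeit.repeat(fn, repeat=repeats, number=1))


# Example: time a stand-in workload; an encryption routine would be wrapped the same way,
# e.g. best_time(lambda: encrypt(image, 9)) with a hypothetical encrypt function.
elapsed = best_time(lambda: sum(range(100_000)))
print(f"{elapsed:.6f} s")
```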

5 Conclusion

The proposed algorithm relies on public-key cryptography: the key, an important factor, is determined by the Diffie-Hellman algorithm. The key decides the number of iterations performed on the images for scrambling and also the starting position in the Lucas or Fibonacci sequence. These choices produce unique results that secure the data. The algorithm has low computational complexity and resists brute-force attacks. The security level can be enhanced simply by increasing the number of iterations in the scrambling process before encryption. The proposed scheme is suitable for real-time applications because of its short processing time, superior performance and greater capacity to resist attacks than other encryption schemes. As a future direction, we propose using quantum iterative maps instead of the Lucas series on the scrambled data to enhance the security level further.

References

Ahmad J, Hwang SO (2016) A secure image encryption scheme based on chaotic maps and affine transformation. Multimed Tools Appl 75(21):13951–13976

Ahmed F, Anees A, Abbas VU, Siyal MY (2014) A noisy channel tolerant image encryption scheme. Wirel Pers Commun 77(4):2771–2791

Aljawarneh S, Aldwairi M, Yassein MB (2018) Anomaly-based intrusion detection system through feature selection analysis and building hybrid efficient model. J Comput Sci 25:152–160

Aljawarneh S, Radhakrishna V, Kumar PV, Janaki V (2016) A similarity measure for temporal pattern discovery in time series data generated by IoT. In: 2016 International conference on engineering & MIS (ICEMIS) (pp. 1–4). IEEE

Aljawarneh SA, Vangipuram R (2018) GARUDA: Gaussian dissimilarity measure for feature representation and anomaly detection in Internet of things. J Supercomput:1–38

Aljawarneh SA, Vangipuram R, Puligadda VK, Vinjamuri J (2017) G-SPAMINE: An approach to discover temporal association patterns and trends in internet of things. Futur Gener Comput Syst 74:430–443

Arnold VI (1968) The stability problem and ergodic properties for classical dynamical systems. In: Vladimir I. Arnold-Collected Works (pp. 107–113). Springer, Berlin, Heidelberg

Arnold VI, Avez A (1968) Ergodic problems of classical mechanics. Benjamin; Franks J, Anosov diffeomorphisms. In: Global Analysis, Proc Sympos Pure Math, American Mathematical Society 14:61–93

Belazi A, El-Latif AAA, Diaconu AV, Rhouma R, Belghith S (2017) Chaos-based partial image encryption scheme based on linear fractional and lifting wavelet transforms. Opt Lasers Eng 88:37–50

Belazi A, Khan M, El-Latif AAA, Belghith S (2017) Efficient cryptosystem approaches: S-boxes and permutation–substitution-based encryption. Nonlinear Dynamics 87(1):337–361

Boriga RE, Dăscălescu AC, Diaconu AV (2014) A new fast image encryption scheme based on 2D chaotic maps. IAENG Int J Comput Sci 41(4):249–258

Chen W, Quan C, Tay CJ (2009) Optical color image encryption based on Arnold transform and interference method. Opt Commun 282:3680–3685

Dyson FJ, Falk H (1992) Period of a discrete cat mapping. Am Math Mon 99:603–614

El-Latif AAA, Abd-El-Atty B, Talha M (2018) Robust encryption of quantum medical images. IEEE Access 6:1073–1081

El-Latif AAA, Li L, Niu X (2014) A new image encryption scheme based on cyclic elliptic curve and chaotic system. Multimed Tools Appl 70(3):1559–1584

Elshamy AM, El-Samie FEA, Faragallah OS, Elshamy EM, El-sayed HS, El-zoghdy SF, Rashed ANZ, Mohamed AE-NA, Alhamad AQ (2016) Optical image cryptosystem using double random phase encoding and Arnold’s Cat map. Opt Quant Electron 48:212

Fang L, YuKai W (2010) Restoring of the watermarking image in Arnold scrambling. In: Signal Processing Systems (ICSPS), 2010 2nd International Conference on (Vol. 1, pp. V1–771). IEEE

Guo Q, Liu Z, Liu S (2010) Color image encryption by using Arnold and discrete fractional random transforms in IHS space. Opt Lasers Eng 48(12):1174–1181

Huang HF (2010) An Image Scrambling Encryption Algorithm Combined Arnold and Chaotic Transform. In: Int Conf China Communication (pp. 208–210)

Hussain I, Anees A, Aslam M, Ahmed R, Siddiqui N (2018) A noise resistant symmetric key cryptosystem based on S8 S-boxes and chaotic maps. The European Physical Journal Plus 133:1–23

Keating JP (1991) Asymptotic properties of the periodic orbits of the cat. Nonlinearity 4:277–307

Khan M (2015) An image encryption by using Fourier series. J Vib Control 21:3450–3455

Khan M (2015) A novel image encryption scheme based on multiple chaotic S-boxes. Nonlinear Dynamics 82(1–2):527–533

Khan M, Asghar Z (2018) A novel construction of substitution box for image encryption applications with Gingerbreadman chaotic map and S 8 permutation. Neural Comput & Applic 29(4):993–999

Khan M, Munir N (2019) A novel image encryption technique based on generalized advanced encryption standard based on field of any characteristic. Wirel Pers Commun. https://doi.org/10.1007/s11277-019-06594-6

Khan M, Shah T (2014) A construction of novel chaos base nonlinear component of block cipher. Nonlinear Dynamics 76(1):377–382

Khan M, Shah T (2014) A novel image encryption technique based on Hénon chaotic map and S 8 symmetric group. Neural Comput & Applic 25(7–8):1717–1722

Khan M, Shah T (2015) An efficient chaotic image encryption scheme. Neural Comput & Applic 26:1137–1148

Khan M, Shah T, Batool SI (2016) A new implementation of chaotic S-boxes in CAPTCHA. SIViP 10(2):293–300

Khan M, Waseem HM (2018) A novel image encryption scheme based on quantum dynamical spinning and rotations. PLoS One 13(11):e0206460

Khan M, Waseem HM (2019) A novel digital contents privacy scheme based on Kramer's arbitrary spin. Int J Theor Phys. https://doi.org/10.1007/s10773-019-04162-z

Khawaja MA, Khan M (2019) A new construction of confusion component of block ciphers. Multimed Tools Appl. https://doi.org/10.1007/s11042-019-07866-w

Khawaja MA, Khan M (2019) Application based construction and optimization of substitution boxes over 2D mixed chaotic maps. Int J Theor Phys. https://doi.org/10.1007/s10773-019-04188-3

Li L, Abd-El-Atty B, El-Latif AAA, Ghoneim A (2017) Quantum color image encryption based on multiple discrete chaotic systems. In 2017 Federated Conference on Computer Science and Information Systems (FedCSIS) (pp. 555–559). IEEE

Liu Z, Chen H, Liu T, Li P, Xu L, Dai J, Liu S (2011) Image encryption by using gyrator transform and Arnold transform. Journal of Electronic Imaging 20(1):013020

Liu Z, Xu L, Liu T, Chen H, Li P, Lin C, Liu S (2011) Color image encryption by using Arnold transform and color-blend operation in discrete cosine transform domains. Opt Commun 284:123–128

Mishra M, Mishra P, Adhikary MC, Kumar S (2012) Image encryption using Fibonacci-Lucas transformation. International Journal on Cryptography and Information Security (IJCIS) 2(3)

Munir N, Khan M (2018) A generalization of algebraic expression for nonlinear component of symmetric key algorithms of any characteristic p. In 2018 International Conference on Applied and Engineering Mathematics (ICAEM) (pp. 48–52). IEEE

Norouzi B, Mirzakuchaki S, Seyedzadeh SM, Mosavi MR (2014) A simple, sensitive and secure image encryption algorithm based on hyper-chaotic system with only one round diffusion process. Multimed Tools Appl 71(3):1469–1497

Norouzi B, Seyedzadeh SM, Mirzakuchaki S, Mosavi MR (2015) A novel image encryption based on row-column, masking and main diffusion processes with hyper chaos. Multimed Tools Appl 74(3):781–811

Premaratne P, Premaratne M (2012) Key-based scrambling for secure image communication. In International Conference on Intelligent Computing (pp. 259–263). Springer, Berlin, Heidelberg

Radhakrishna V, Aljawarneh SA, Kumar PV, Choo KKR (2018) A novel fuzzy gaussian-based dissimilarity measure for discovering similarity temporal association patterns. Soft Comput 22(6):1903–1919

Radhakrishna V, Kumar GR, Aljawarneh S (2017) Optimising business intelligence results through strategic application of software process model. International Journal of Intelligent Enterprise 4(1–2):128–142

Soleymani A, Nordin MJ, Sundararajan E (2014) A chaotic cryptosystem for images based on Henon and Arnold cat map. The Scientific World Journal (Hindawi), pp. 1–21

Stoyanov B, Kordov K (2015) Image encryption using Chebyshev map and rotation equation. Entropy 17(4):2117–2139

Wang XY, Zhang YQ, Bao XM (2015) A colour image encryption scheme using permutation-substitution based on chaos. Entropy 17(6):3877–3897

Waseem HM, Khan M (2018) Information Confidentiality Using Quantum Spinning, Rotation and Finite State Machine. Int J Theor Phys 57(11):3584–3594

Waseem HM, Khan M (2019) A new approach to digital content privacy using quantum spin and finite-state machine. Appl Phys B Lasers Opt 125(2):27

Waseem HM, Khan M, Shah T (2018) Image privacy scheme using quantum spinning and rotation. Journal of Electronic Imaging 27(6):063022

Weber AG (1997) The USC-SIPI image database version 5. USC-SIPI Report 315:1–24

Wei Z, Moroz I, Sprott JC, Wang Z, Zhang W (2017) Detecting hidden chaotic regions and complex dynamics in the self-exciting homopolar disc dynamo. International Journal of Bifurcation and Chaos 27(02):1730008

Wei Z, Pham VT, Kapitaniak T, Wang Z (2016) Bifurcation analysis and circuit realization for multiple-delayed Wang–Chen system with hidden chaotic attractors. Nonlinear Dynamics 85(3):1635–1650

Wei Z, Yu P, Zhang W, Yao M (2015) Study of hidden attractors, multiple limit cycles from Hopf bifurcation and boundedness of motion in the generalized hyperchaotic Rabinovich system. Nonlinear Dynamics 82(1–2):131–141

Wei Z, Zhu B, Yang J, Perc M, Slavinec M (2019) Bifurcation analysis of two disc dynamos with viscous friction and multiple time delays. Appl Math Comput 347:265–281

Yan X, Wang S, El-Latif AAA, Niu X (2015) New approaches for efficient information hiding-based secret image sharing schemes. SIViP 9(3):499–510

Yassein MB, Aljawarneh S, Al-huthaifi RK (2017) Enhancements of LEACH protocol: Security and open issues. In 2017 International Conference on Engineering and Technology (ICET) (pp. 1–8). IEEE.

Yassein MB, Aljawarneh S, Qawasmeh E, Mardini W, Khamayseh Y (2017) Comprehensive study of symmetric key and asymmetric key encryption algorithms. In 2017 International Conference on Engineering and Technology (ICET) (pp. 1–7). IEEE

Younas I, Khan M (2018) A New Efficient Digital Image Encryption Based on Inverse Left Almost Semi Group and Lorenz Chaotic System. Entropy 20(12):913

Zhang G, Liu Q (2011) A novel image encryption method based on total shuffling scheme. Opt Commun 284:2775–2780

Zhou Y, Agaian S, Joyner VM, Panetta K (2008) Two Fibonacci p-code based image scrambling algorithms. In: Image Processing: Algorithms and Systems VI (Vol. 6812, p. 681215). International Society for Optics and Photonics

Zhou NR, Hua TX, Gong LH, Pei DJ, Liao QH (2015) Quantum image encryption based on generalized Arnold transform and double random-phase encoding. Quantum Inf Process 14(4):1193–1213

Acknowledgements

Both authors, Hafiz Muhammad Waseem and Dr. Syeda Iram Batool, are grateful to Vice Chancellor Dr. Syed Wilayat Husain, Dean Iqbal Rasool Memon, and Director of Cyber & Information Security Dr. Muhammad Amin, Institute of Space Technology, Islamabad, Pakistan, for providing a supportive environment for research and development.

Ethics declarations

Conflict of interest

We have no conflict of interest to declare concerning the publication of this article.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Batool, S.I., Waseem, H.M. A novel image encryption scheme based on Arnold scrambling and Lucas series. Multimed Tools Appl 78, 27611–27637 (2019). https://doi.org/10.1007/s11042-019-07881-x

DOI: https://doi.org/10.1007/s11042-019-07881-x